Abstract

Recent advances in artificial intelligence (AI) have led to its widespread industrial adoption, with machine learning systems demonstrating superhuman performance in a significant number of tasks. However, this surge in performance has often been achieved through increased model complexity, turning such systems into “black box” approaches and causing uncertainty regarding the way they operate and, ultimately, the way that they come to decisions. This ambiguity has made it problematic for machine learning systems to be adopted in sensitive yet critical domains, where their value could be immense, such as healthcare. As a result, scientific interest in the field of Explainable Artificial Intelligence (XAI), a field that is concerned with the development of new methods that explain and interpret machine learning models, has been tremendously reignited over recent years. This study focuses on machine learning interpretability methods; more specifically, a literature review and taxonomy of these methods are presented, as well as links to their programming implementations, in the hope that this survey will serve as a reference point for both theorists and practitioners.

1. Introduction

Artificial intelligence (AI) had for many years mostly been a field focused heavily on theory, without many applications of real-world impact. This has radically changed over the past decade as a combination of more powerful machines, improved learning algorithms, and easier access to vast amounts of data enabled advances in Machine Learning (ML) and led to its widespread industrial adoption [1]. Around 2012, Deep Learning methods [2] started to dominate accuracy benchmarks, achieving superhuman results and further improving in the subsequent years. As a result, today, many real-world problems in different domains, stretching from retail and banking [3,4] to medicine and healthcare [5,6,7], are tackled using machine learning models.

However, this improved predictive accuracy has often been achieved through increased model complexity. A prime example is the deep learning paradigm, which is at the heart of most state-of-the-art machine learning systems. It allows machines to automatically discover, learn, and extract the hierarchical data representations that are needed for detection or classification tasks. This hierarchy of increasing complexity, combined with the fact that vast amounts of data are used to train and develop such complex systems, in most cases boosts the systems’ predictive power, but inherently reduces their ability to explain their inner workings and mechanisms. As a consequence, the rationale behind their decisions becomes quite hard to understand and, therefore, their predictions hard to interpret.

There is a clear trade-off between the performance of a machine learning model and its ability to produce explainable and interpretable predictions. On the one hand, there are the so-called black-box models, which include deep learning [2] and ensembles [8,9,10]. On the other hand, there are the so-called white-box or glass-box models, which easily produce explainable results, with common examples including linear [11] and decision-tree based [12] models. Although more explainable and interpretable, the latter models are not as powerful and they fail to achieve state-of-the-art performance when compared to the former. Both their poor performance and the ability to be well-interpreted and easily-explained come down to the same reason: their frugal design.

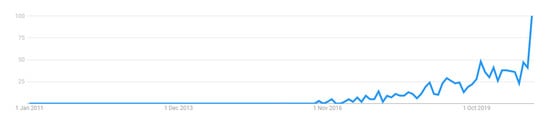

Systems whose decisions cannot be well-interpreted are difficult to trust, especially in sectors such as healthcare or self-driving cars, where moral and fairness issues have also naturally arisen. This need for trustworthy, fair, robust, high performing models for real-world applications led to the revival of the field of eXplainable Artificial Intelligence (XAI) [13], a field focused on the understanding and interpretation of the behaviour of AI systems, which, in the years prior to its revival, had lost the attention of the scientific community, as most research focused on the predictive power of algorithms rather than the understanding behind these predictions. The popularity of the search term “Explainable AI” throughout the years, as measured by Google Trends, is illustrated in Figure 1. The noticeable spike in recent years, indicating the rejuvenation of the field, is also reflected in the increased research output of the same period.

Figure 1.

Google Trends Popularity Index (Max value is 100) of the term “Explainable AI” over the last ten years (2011–2020).

The Contribution of this Survey

As the demand for more explainable machine learning models with interpretable predictions rises, so does the need for methods that can help to achieve these goals. This survey will focus on providing an extensive and in-depth identification, analysis, and comparison of machine learning interpretability methods. The end goal of the survey is to serve as a reference point for both theorists and practitioners, not only by providing a taxonomy of the existing methods, but also by scoping the best use cases for each of the methods and by providing links to their programming implementations, the latter being found in Appendix A.

2. Fundamental Concepts and Background

2.1. Explainability and Interpretability

The terms interpretability and explainability are usually used by researchers interchangeably; however, while these terms are very closely related, some works identify their differences and distinguish these two concepts. There is not a concrete mathematical definition for interpretability or explainability, nor have they been measured by some metric; however, a number of attempts have been made [14,15,16] in order to clarify not only these two terms, but also related concepts such as comprehensibility. One of the most popular definitions of interpretability is that of Doshi-Velez and Kim, who, in their work [15], define it as “the ability to explain or to present in understandable terms to a human”. Another popular definition came from Miller in his work [18], where he defines interpretability as “the degree to which a human can understand the cause of a decision”. Although intuitive, these definitions lack mathematical formality and rigorousness [17].

Based on the above, interpretability is mostly connected with the intuition behind the outputs of a model [17], with the idea being that the more interpretable a machine learning system is, the easier it is to identify cause-and-effect relationships within the system’s inputs and outputs. For example, in image recognition tasks, part of the reason that led a system to decide that a specific object is part of an image (output) could be certain dominant patterns in the image (input). Explainability, on the other hand, is associated with the internal logic and mechanics that are inside a machine learning system. The more explainable a model, the deeper the understanding that humans achieve in terms of the internal procedures that take place while the model is training or making decisions. An interpretable model does not necessarily translate to one whose internal logic or underlying processes humans are able to understand. Therefore, regarding machine learning systems, interpretability does not axiomatically entail explainability, or vice versa. As a result, Gilpin et al. [16] argued that interpretability alone is insufficient and that the presence of explainability is also of fundamental importance. Mostly aligned with the work of Doshi-Velez and Kim [15], this study considers interpretability to be a broader term than explainability.

2.2. Evaluation of Machine Learning Interpretability

Doshi-Velez and Kim [15] proposed the following classification of evaluation methods for interpretability: application-grounded, human-grounded, and functionally-grounded, subsequently discussing the potential trade-offs among them. Application-grounded evaluation concerns itself with how the results of the interpretation process affect the human, domain-expert end-user in terms of a specific and well-defined task or application. Concrete examples under this type of evaluation include whether an interpretability method results in better identification of errors or less discrimination. Human-grounded evaluation is similar to application-grounded evaluation; however, there are two main differences: first, the tester in this case does not have to be a domain expert, but can be any human end-user, and second, the end goal is not to evaluate a produced interpretation with respect to its fitness for a specific application, but rather to test the quality of the produced interpretation in a more general setting and measure how well the general notions are captured. An example of measuring how well an interpretation captures the abstract notion of an input would be for humans to be presented with different interpretations of the input and to select the one that they believe best encapsulates the essence of it. Functionally-grounded evaluation does not require any experiments that involve humans, but instead uses formal, well-defined mathematical definitions of interpretability to evaluate the quality of an interpretability method. This type of evaluation usually follows the other two types of evaluation: once a class of models has already passed some interpretability criteria via human-grounded or application-grounded experiments, then mathematical definitions can be used to further rank the quality of the interpretability models.
Functionally-grounded evaluation is also appropriate when experiments that involve humans cannot be applied for some reason (e.g., ethical considerations) or when the proposed method has not reached a mature enough stage to be evaluated by human users. That said, determining the right measurement criteria and metric for each case is challenging and remains an open problem.

2.3. Related Work

The concepts of interpretability and explainability are hard to rigorously define; however, multiple attempts have been made towards that goal, the most emblematic works being [14,15].

The work of Gilpin et al. [16] constitutes another attempt to define the key concepts around interpretability in machine learning. The authors, while focusing mostly on deep learning, also proposed a taxonomy, by which the interpretability methods for neural networks could be classified into three different categories. The first one encompasses methods that emulate the processing of data in order to create insights for the connections between inputs and outputs of the model. The second category contains approaches that try to explain the representation of data inside a network, while the last category consists of transparent networks that explain themselves. Lastly, the authors recognise the promising nature of the progress achieved in the field of explaining deep neural networks, but also highlight the lack of combinatorial approaches, which would attempt to merge different techniques of explanation, claiming that such types of methods would result in better explanations.

Adadi and Berrada [17] conducted an extensive literature review, collecting and analysing 381 different scientific papers between 2004 and 2018. They arranged all of the scientific work in the field of explainable AI along four main axes and stressed the need for more formalism to be introduced in the field of XAI and for more interaction between humans and machines. After highlighting the trend of the community to explore explainability only in terms of modelling, they proposed embracing explainability in other aspects of machine learning. Finally, they suggested a potential research direction that would be towards the composition of existing explainability methods.

Another survey that attempted to categorise the existing explainability methods is that of Guidotti et al. [19]. Firstly, the authors identified four categories for each method based on the type of problem that they were created to tackle: one category for explaining black-box models, one for inspecting them, one for explaining their outcomes, and, finally, one for creating transparent black box models. Subsequently, they proposed a taxonomy that takes into account the type of underlying explanation model (explanator), the type of data used as input, the problem the method encounters, as well as the black box model that was “opened”. As with works previously discussed, the lack of formality and need for a definition of metrics for evaluating the performance of interpretability methods was highlighted once again, while the incapacity of most black-box explainability methods to interpret models that make decisions based on unknown or latent features was also raised. Lastly, the lack of interpretability techniques in the field of recommender systems was identified and an approach according to which models could be learned directly from explanations was proposed.

Upon identifying the lack of formality and ways to measure the performance of interpretability methods, Murdoch et al. [20] published a survey in 2019, in which they created an interpretability framework in the hope that it would help to bridge the aforementioned gap in the field. The Predictive, Descriptive, Relevant (PDR) framework introduced three types of metrics for rating the interpretability methods: predictive accuracy, descriptive accuracy, and relevancy. To conclude, they dealt with transparent models and post-hoc interpretation, as they believed that post-hoc interpretability could be used to elevate the predictive accuracy of a model and that transparent models could increase their use cases by increasing predictive accuracy, making clear that, in some cases, the combination of the two methods is ideal.

A more recent study carried out by Arrieta et al. [21] introduced a different type of arrangement that initially distinguishes transparent and post-hoc methods and subsequently creates sub-categories. An alternative taxonomy specifically for the deep learning interpretability methods, due to their high volume, was developed. Under this taxonomy, four categories were proposed: one for providing explanations regarding deep network processing, one in relation to the explanation of deep network representation, one concerned with explanation-producing systems, and one encompassing hybrids of transparent and black-box methods. Finally, the authors delved into the concept of Responsible Artificial Intelligence, a methodology introducing a series of criteria for implementing AI in organizations.

3. Different Scopes of Machine Learning Interpretability: A Taxonomy of Methods

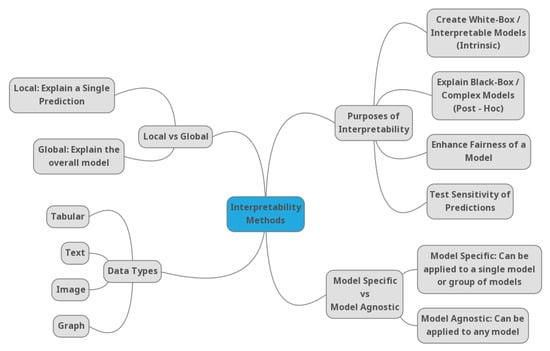

Different viewpoints exist when it comes to looking at the emerging landscape of interpretability methods, such as the type of data these methods deal with or whether they refer to global or local properties. The classification of machine learning interpretability techniques should not be one-sided. There exist different points of view, which distinguish and could further divide these methods. Hence, in order for a practitioner to identify the ideal method for the specific criteria of each problem encountered, all aspects of each method should be taken into consideration.

An especially important separation of interpretability methods could happen based on the type of algorithms to which they could be applied. If their application is restricted to a specific family of algorithms, then these methods are called model-specific. In contrast, the methods that could be applied to every possible algorithm are called model-agnostic. Additionally, one crucial aspect of dividing the interpretability methods is based on the scale of interpretation. If the method provides an explanation only for a specific instance, then it is a local one and, if the method explains the whole model, then it is global. Lastly, one crucial factor that should be taken into consideration is the type of data on which these methods could be applied. The most common types of data are tabular and images, but there are also some methods for text data. Figure 2 presents a summarized mind-map, which visualizes the different aspects by which an interpretability method could be classified. These aspects should always be taken into consideration by practitioners, in order for the ideal method with respect to their needs to be identified.

Figure 2.

Taxonomy mind-map of Machine Learning Interpretability Techniques.

This taxonomy focuses on the purpose that these methods were created to serve and the ways through which they accomplish this purpose. As a result, according to the presented taxonomy, four major categories for interpretability methods are identified: methods for explaining complex black-box models, methods for creating white-box models, methods that promote fairness and restrict the existence of discrimination, and, lastly, methods for analysing the sensitivity of model predictions.

3.1. Interpretability Methods to Explain Black-Box Models

This first category encompasses methods that are concerned with black-box pre-trained machine learning models. More specifically, such methods do not try to create interpretable models, but, instead, try to interpret already trained, often complex models, such as deep neural networks. That is also why they sometimes are referred to as post-hoc interpretability methods in the related scientific literature.

Under this taxonomy, this category, due to the volume of scientific work around deep learning related interpretability methodologies, is split into two sub-categories, one specifically for deep learning methods and one concerning all other black-box models. For each of these sub-categories, a summary of the included methods is shown in Table 1 and Table 2 respectively.

Table 1.

Interpretability Methods to Explain Deep Learning Models.

Table 2.

Interpretability Methods to Explain any Black-Box Model.

3.1.1. Interpretability Methods to Explain Deep Learning Models

The widespread adoption of deep learning methods, combined with the fact that it is in their very nature to produce black-box machine learning systems, has led to a considerable amount of experiments and scientific work around them and, therefore, tools regarding their interpretability. A substantial portion of attention regarding Python tools is focused on deep learning for images and, more specifically, on the concept of saliency in images, as initially proposed in [22]. Saliency refers to unique features, such as pixels or resolution of the image in the context of visual processing. These unique features depict the visually alluring locations in an image and a saliency map is a topographical representation of them.

Gradients: first proposed in [23], the gradients explanation technique, as its name suggests, is a gradient-based attribution method, according to which each gradient quantifies how much a change in each input dimension would change the predictions in a small neighborhood around the input. Consequently, the method computes an image-specific class saliency map corresponding to the gradient of an output neuron with respect to the input, highlighting the areas of the given image that are discriminative with respect to the given class. An improvement over the initial method was proposed in [24], where the well-known Krizhevsky network [25] was utilised in order to outperform state-of-the-art saliency models by a large margin, increasing the amount of explained information by 67% when compared to the state of the art. Furthermore, in [26], a task-specific pre-training scheme was designed in order to make the multi-context modeling suited for saliency detection.
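
As a rough illustration of the idea, the following minimal sketch computes a saliency vector as the absolute value of the gradient of a class score with respect to each input dimension. The `class_score` function and its `WEIGHTS` are hypothetical stand-ins for a trained network, and the gradient is estimated with central finite differences purely to keep the example dependency-free; practical implementations would normally rely on automatic differentiation.

```python
import math


def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of df/dx_i for every input dimension."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def saliency(f, x):
    """Saliency of each input dimension: magnitude of the score gradient."""
    return [abs(g) for g in numerical_gradient(f, x)]


# Hypothetical class-score function standing in for a trained network.
WEIGHTS = [2.0, -0.5, 0.0]


def class_score(x):
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


pixels = [0.1, 0.4, 0.9]
sal = saliency(class_score, pixels)
print(sal)  # the first "pixel" dominates, the third has no influence
```

In this toy setting the saliency ranking simply mirrors the magnitude of the weights, which is the behaviour one would expect from a locally linear view of the model.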

Integrated Gradients [27] is a gradient-based attribution method that attempts to explain predictions that are made by deep neural networks by attributing them to the network’s input features. It is essentially a variation on calculating the gradient of the prediction output with respect to the features of the input, as implemented by the simpler Gradients method. Under this variation, a much desired property, which is known as completeness or Efficiency [28] or Summation to Delta [29], is satisfied: the attributions sum up to the target output minus the target output that was evaluated at the baseline. Moreover, two fundamental axioms that attribution methods ought to satisfy are identified: sensitivity and implementation invariance. Upon highlighting that most known attribution methods do not satisfy these axioms, the authors propose the integrated gradients method as a simple way to obtain great interpretability results. Another work, closely related to the integrated gradients method, was proposed in [30], where attributions are used in order to help identify weaknesses of three question-answer models better than the conventional models, while also providing workflow superiority.
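
The completeness property can be checked numerically. The sketch below approximates the path integral with a midpoint Riemann sum along the straight line from a baseline to the input; the toy sigmoid `model`, its `WEIGHTS`, the all-zero baseline, and the number of steps are assumptions made for illustration only and are not taken from [27].

```python
import math

WEIGHTS = [2.0, -1.0, 0.5]  # hypothetical toy model, for illustration only


def model(x):
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def model_gradient(x):
    """Analytic gradient of the sigmoid model with respect to its input."""
    p = model(x)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, steps=200):
    """Average the gradient along the straight path from baseline to x,
    then scale each dimension by (x_i - baseline_i)."""
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint rule
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = model_gradient(point)
        avg_grad = [a + g / steps for a, g in zip(avg_grad, grad)]
    return [(xi - b) * a for xi, b, a in zip(x, baseline, avg_grad)]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline)

# Completeness: attributions should sum to model(x) - model(baseline).
completeness_gap = abs(sum(attributions) - (model(x) - model(baseline)))
print(attributions, completeness_gap)
```

The remaining gap is only the discretisation error of the Riemann sum, so it shrinks as `steps` grows.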

DeepLIFT [29] is a popular algorithm that was designed to be applied on top of deep neural network predictions. The method, as described in [29], is an improvement over its first form [29], also known as the “Gradient * Input” method, where it was observed that saliency maps that were obtained using the gradient method can be greatly enhanced by multiplying the gradient with the input signal—an operation that is essentially a first-order Taylor approximation of how the output would change if the input were set to zero. The method’s superiority was demonstrated by showing considerable benefits over gradient-based methods when applied to models that were trained on natural images and genomics data. By observing the activation of each neuron, it assigns them contribution scores, calculated by comparing the difference of the output from some reference output to the differences of the inputs from their reference inputs. By optionally giving separate consideration to positive and negative contributions, DeepLIFT can also reveal dependencies that are missed by other approaches, such as the Integrated Gradients approach [27].
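
To make the difference-from-reference idea concrete, the sketch below contrasts Gradient * Input with a single-neuron version of the Rescale rule described in [29], in which the multiplier of a nonlinearity is the ratio of the output difference to the pre-activation difference. The ReLU neuron, its weights, the input, and the all-zero reference are hypothetical values chosen so that the neuron is saturated at the input; a complete implementation would propagate such multipliers through every layer of the network.

```python
def relu(v):
    return max(0.0, v)


def neuron(x, weights, bias):
    """Return (pre-activation, ReLU output) of a single neuron."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return z, relu(z)


def gradient_times_input(x, weights, bias):
    z, _ = neuron(x, weights, bias)
    local_grad = 1.0 if z > 0 else 0.0  # derivative of ReLU at z
    return [local_grad * w * xi for w, xi in zip(weights, x)]


def deeplift_rescale(x, reference, weights, bias):
    """Contribution of each input to (output - reference output)."""
    z, out = neuron(x, weights, bias)
    z_ref, out_ref = neuron(reference, weights, bias)
    delta_z = z - z_ref
    if abs(delta_z) < 1e-12:
        multiplier = 1.0 if z > 0 else 0.0  # fall back to the gradient
    else:
        multiplier = (out - out_ref) / delta_z
    return [multiplier * w * (xi - ri)
            for w, xi, ri in zip(weights, x, reference)]


# Hypothetical neuron that is inactive at x but active at the reference.
weights, bias = [-1.0, -1.0], 1.0
x, reference = [1.0, 1.0], [0.0, 0.0]

grad_input = gradient_times_input(x, weights, bias)
contributions = deeplift_rescale(x, reference, weights, bias)
print(grad_input)     # zero everywhere: the local gradient vanishes
print(contributions)  # non-zero scores that sum to the output difference
```

Here the output drops from 1 at the reference to 0 at the input, yet the local gradient is zero; the reference-based scores still account for the whole change, which is the Summation to Delta property.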

Guided BackPropagation [31], which is also known as guided saliency, is a variant of the deconvolution approach [32] for visualizing features learned by CNNs, which can also be applied to a broad range of network structures. Under this approach, the use of max-pooling in convolutional neural networks for small images is questioned and the replacement of max-pooling layers by a convolutional layer with increased stride is proposed, resulting in no loss of accuracy on several image recognition benchmarks.

Deconvolution, as proposed in [32], is a technique for visualizing Convolutional Neural Networks (CNNs or ConvNets) by utilising De-convolutional Networks (DeconvNets or DCNNs), as initially proposed in [33]. DeconvNets use the same components, such as filtering and pooling, but in reverse fashion: instead of mapping pixels to features, they apply the opposite. Originally, in [33], DeconvNets were proposed as a way of performing unsupervised learning; however, in [32] they are not used in any learning capacity, but rather as a tool to provide insight into the function of intermediate feature layers and pieces of information of an already trained CNN. More specifically, a novel way of mapping feature activity in intermediate layers back to the input feature space (pixels in the case of images) was proposed, showing what input pattern originally caused a given activation in the feature maps. This is done through a DeconvNet being attached to each of the CNN layers, providing a continuous path back to image pixels.

Class Activation Maps, or CAMs, first introduced in [34], is another deep learning interpretability method used for CNNs. More specifically, it is used to indicate the discriminative regions of an image used by a CNN to identify the category of the image. A feature vector is created by computing and concatenating the averages of the activations of convolutional feature maps that are located just before the final output layer. Subsequently, a weighted sum of this vector is fed to the final softmax loss layer. Using this simple architecture, the importance of the image regions, pertaining to their classification, can, therefore, be identified by projecting back the weights of the output layer on to the convolutional feature maps. CAM has two distinct drawbacks: first, in order to be applied, it requires that neural networks have a very specific structure in their final layers and, for all other networks, the structure needs to be changed and the network needs to be re-trained under the new architecture. Second, the method, being constrained to only visualising the final convolutional layers of a CNN, is only useful when it comes to interpreting the very last stages of the network’s image classification and it is unable to provide any insight into the previous stages.
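
The projection step can be sketched in a few lines. In the example below, the two 2x2 feature maps and the output-layer weights for the class of interest are made-up numbers; the code builds the class activation map as the weighted sum of the feature maps and also confirms that the class score obtained through global average pooling equals the spatial mean of that map.

```python
def global_average_pool(feature_maps):
    """One average per feature map (the feature vector fed to the output layer)."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def class_activation_map(feature_maps, class_weights):
    """Weighted sum of the feature maps using the class's output weights."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(w * fmap[i][j] for w, fmap in zip(class_weights, feature_maps))
             for j in range(width)]
            for i in range(height)]


# Hypothetical last-layer feature maps (2 channels, 2x2 each) and class weights.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [2.0, 0.5]

cam = class_activation_map(feature_maps, class_weights)
pooled = global_average_pool(feature_maps)
class_score = sum(w * p for w, p in zip(class_weights, pooled))
cam_mean = sum(sum(row) for row in cam) / 4

print(cam)                    # [[2.0, 0.0], [0.0, 1.0]]
print(class_score, cam_mean)  # both 0.75
```

In practice the resulting low-resolution map is upsampled to the input image size before being overlaid as a heatmap.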

Grad-CAM [35] is a strict generalization of CAM that can produce visual explanations for any CNN, regardless of its architecture, thus overcoming one of the limitations of CAM. As a gradient-based method, Grad-CAM uses the class-specific gradient information flowing into the final convolutional layer of a CNN in order to produce a coarse localization map of the important regions in the image when it comes to classification, making CNN-based models more transparent. The authors of Grad-CAM also demonstrated how the technique can be combined with existing pixel-space visualizations to create a high-resolution class-discriminative visualization, Guided Grad-CAM. By generating visual explanations in order to better understand image classification of popular networks while using both Grad-CAM and Guided Grad-CAM, it was shown that the proposed techniques outperform pixel-space gradient visualizations (Guided Backpropagation and Deconvolution) when evaluated in terms of localisation (the ability to localise objects in images using holistic image class labels only) and faithfulness (the ability to accurately explain the function learned by a model). While an improvement over CAM, Grad-CAM has its own limitations, the most notable including its inability to localize multiple occurrences of an object in an image, due to its partial derivative assumptions, its inability to accurately determine class-regions coverage in an image, and the possible loss in signal due to the continual upsampling and downsampling processes.
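
A minimal sketch of the core computation follows, assuming that the activations of the final convolutional layer and the gradients of the class score with respect to them have already been obtained (for example, through an autodiff framework). Each channel weight is the spatial average of its gradient map, and the localization map is the ReLU of the weighted sum of the activation maps. All numbers are made up for illustration.

```python
def grad_cam(activations, gradients):
    """Coarse localization map from last-conv activations and their gradients."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel importance: global average of the gradient map of each channel.
    alphas = [sum(sum(row) for row in grad) / (height * width)
              for grad in gradients]
    # Weighted combination of activation maps, followed by ReLU.
    return [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, activations)))
             for j in range(width)]
            for i in range(height)]


# Hypothetical activations and gradients for 2 channels of size 2x2.
activations = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[4.0, 3.0], [2.0, 1.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]

heatmap = grad_cam(activations, gradients)
print(heatmap)  # [[0.0, 0.0], [0.0, 1.0]]
```

The ReLU keeps only the regions with a positive influence on the class of interest, which is why three of the four cells are zeroed out here.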

Grad-CAM++ [36] is an extension of the Grad-CAM method that provides better visual explanations of CNN model predictions. More specifically, object localization is extended to multiple object instances in a single image while using a weighted combination of the positive partial derivatives of the last convolutional layer feature maps with respect to a specific class score as weights to generate a visual explanation for the corresponding class label. This is especially helpful in multi-label classification problems, while the different weight assigned to each pixel makes it possible to capture the importance of each pixel separately in the gradient feature map.

Layer-wise Relevance Propagation (LRP) [37] is a “decomposition of nonlinear classifiers” technique that brings interpretability to highly complex deep neural networks by propagating their predictions backwards. The proposed propagation procedure satisfies a conservation property, whereby the magnitude of any output remains intact as it is backpropagated through the lower-level layers of the network: starting from the output neurons and going all the way back to the input-layer neurons, each neuron redistributes to the lower layer the same amount of information as it received from the higher layer. The method can be applied to various data types, such as images, text, and more, as well as various neural network architectures.
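
The conservation property can be illustrated with one common propagation rule, the epsilon rule, applied to a tiny bias-free ReLU network. The network weights and the input below are hypothetical, and the epsilon rule is only one of several LRP variants, so this is a sketch of the principle rather than of the specific procedure in [37].

```python
def forward(x, layers):
    """Activations of every layer; ReLU on hidden layers, linear output."""
    activations = [x]
    for idx, W in enumerate(layers):
        a = activations[-1]
        z = [sum(a[i] * W[i][j] for i in range(len(a)))
             for j in range(len(W[0]))]
        is_last = idx == len(layers) - 1
        activations.append(z if is_last else [max(0.0, v) for v in z])
    return activations


def lrp_epsilon(activations, layers, target, eps=1e-9):
    """Redistribute the target output backwards, layer by layer."""
    relevance = [0.0] * len(activations[-1])
    relevance[target] = activations[-1][target]
    for W, a in zip(reversed(layers), reversed(activations[:-1])):
        z = [sum(a[i] * W[i][j] for i in range(len(a)))
             for j in range(len(W[0]))]
        # Small stabiliser that avoids division by zero.
        stab = [zj + (eps if zj >= 0 else -eps) for zj in z]
        relevance = [sum(a[i] * W[i][j] * relevance[j] / stab[j]
                         for j in range(len(z)))
                     for i in range(len(a))]
    return relevance


# Hypothetical 2-2-1 network without biases; W[i][j] connects unit i to unit j.
layers = [
    [[1.0, -1.0], [0.5, 1.0]],
    [[1.0], [2.0]],
]
x = [1.0, 2.0]

activations = forward(x, layers)
output = activations[-1][0]
input_relevance = lrp_epsilon(activations, layers, target=0)
print(output, input_relevance)  # relevances sum (approximately) to the output
```

Individual relevances may be negative, as for the first input here, but their total matches the explained output up to the stabiliser.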

By pointing out and exploiting the fact that the gradient of the loss function with respect to the input can be interpreted as a sensitivity map, Smilkov et al. [38] created SmoothGrad, a method that can be applied in order to reduce noise and visually sharpen such sensitivity maps. SmoothGrad can be combined with other sensitivity map algorithms, such as the Integrated Gradients [27] and Guided BackPropagation [31], in order to produce enhanced sensitivity maps. More specifically, two smoothing approaches were explored and experimented with: the first one, which had an excellent smoothing impact, calculates the average of maps made from many small perturbations of a given instance, while the second perturbs the data with random noise and then performs the training step. The experiments showed that these two techniques can have an additive effect, and combining them provides superior results to applying them separately. Upon performing a series of experiments, the authors conclude that the estimated smoothed gradient leads to sharper visualisations and more coherent sensitivity maps when compared to the non-smoothed gradient.
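
The first smoothing approach, averaging sensitivity maps over noisy copies of the input, can be sketched as follows. The gradient function, the noise level `sigma`, the sample count, and the seed are all illustrative assumptions; the toy gradient is a step function so that the effect of smoothing near a discontinuity is easy to see.

```python
import random


def smoothgrad(grad_fn, x, sigma=0.5, n_samples=500, seed=0):
    """Average the gradient over Gaussian-perturbed copies of the input."""
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        total = [t + g for t, g in zip(total, grad_fn(noisy))]
    return [t / n_samples for t in total]


def relu_sum_gradient(x):
    """Gradient of f(x) = sum(relu(x_i)): a step function, discontinuous at 0."""
    return [1.0 if xi > 0 else 0.0 for xi in x]


x = [0.01, -0.01, 3.0]
raw = relu_sum_gradient(x)                 # jumps between 0 and 1 around zero
smooth = smoothgrad(relu_sum_gradient, x)  # varies gradually around zero
print(raw, smooth)
```

The raw gradient assigns opposite values to the first two, nearly identical, inputs, whereas the smoothed estimate treats them almost equally and leaves the clearly active third input close to 1.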

In order to interpret the predictions of deep neural networks for images, the RISE algorithm [39] creates a saliency map for any black-box model, indicating how important each pixel of the image is with respect to the network’s prediction. The method follows a simple yet powerful approach: each input image is multiplied element-wise with random masks and the resulting image is subsequently fed to the model for classification. The model produces a probability-like score for the masked images with respect to each of the available classes and a saliency map for the original image is created as a linear combination of the masks. The coefficients of this linear combination are calculated using the score that was produced by the model for the corresponding masked inputs with respect to the target class.
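
The masking procedure can be sketched on a one-dimensional "image". The simplifications here are assumptions made for brevity: masks are independent binary values per pixel rather than the smooth upsampled masks typically used on real images, and `toy_model` is a hypothetical black box whose score depends on a single pixel.

```python
import random


def rise_saliency(model, image, n_masks=1000, keep_prob=0.5, seed=0):
    """Saliency as a score-weighted combination of random binary masks."""
    rng = random.Random(seed)
    saliency = [0.0] * len(image)
    for _ in range(n_masks):
        mask = [1.0 if rng.random() < keep_prob else 0.0 for _ in image]
        masked = [p * m for p, m in zip(image, mask)]
        score = model(masked)  # only the model's output is needed
        saliency = [s + score * m for s, m in zip(saliency, mask)]
    # Normalise by the expected number of times each pixel is kept.
    return [s / (n_masks * keep_prob) for s in saliency]


def toy_model(pixels):
    """Hypothetical black box whose score depends only on pixel index 2."""
    return pixels[2]


image = [0.9, 0.8, 1.0, 0.7]
saliency = rise_saliency(toy_model, image)
print(saliency)  # index 2 receives the highest importance
```

Because the procedure only queries the model's output for masked inputs, it requires no access to gradients or internal activations.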

In [42], the idea of Concept Activation Vectors (CAVs) was introduced, providing a human-friendly interpretation of a neural network’s internal state: an intuition of how sensitive a prediction is to a user-defined concept and how important the concept is to the classification itself. One of the issues with saliency maps is that concepts in an image, such as the “human” concept or the “animal” concept, cannot be expressed as pixels and are not in the input features either and therefore cannot be captured by saliency maps. To address this, CAVs try to provide a translation between the input vector space and the high-level concept space; a CAV corresponding to a concept is essentially a vector in the direction of the values (the result of activation functions in a network’s neurons) of that concept’s set of examples. By utilising CAVs, the TCAV method provides a quantitative measure of importance of a concept if and only if the network has learned about it. Furthermore, TCAV can reveal any concept learnt, even if it was not explicitly tagged within the training set or even if it was not part of the input feature set.

Yosinski et al. [40] proposed applying regularisation as an additional processing step in the saliency map creating process. More specifically, by introducing four primary regularization techniques, they enforced stronger prior distributions in order to promote bias towards more recognisable and interpretable visualisations. They showed that the best results were obtained when the different regularisers were combined, while each of these regularisation methods can also individually enhance interpretability.

In [43], an interpretability technique for neural networks operating in the natural language processing (NLP) domain was proposed. Under this approach, smaller, tailored pieces of the original input text are extracted and then used as input in order to try and produce the same output prediction as the original full-text input. These small pieces, called rationales, provide the necessary explanation and justification for the output in terms of the input. The architecture consists of two components, a generator and an encoder, which are trained to function well as a whole. The generator produces candidate rationales, and the encoder uses them to produce predicted probability scores. The generator and the encoder are trained jointly, and, through the minimization of the cost function, it is decided which candidates will be characterised as rationales. Essentially, the two components work together in order to find subsets of text that are highly associated with the predicted score.

Deep Taylor decomposition [41] is a method that decomposes a neural network’s output, for a given input instance, into contributions of this instance by backpropagating the explanations from the output layer to the input. Its usefulness was demonstrated within the computer vision paradigm, in order to measure the importance of single pixels in image classification tasks; however, the method can also be applied to different types of data, both as a visualization tool and as a tool for more complex analysis. The proposed approach has strong links to relevance propagation; the theoretical connections between the Taylor decomposition of a function and rule-based relevance propagation techniques are thoroughly discussed, demonstrating a close relationship between the two approaches for a particular class of neural networks. Deep Taylor decomposition produces heatmaps that enable the user to deeply understand the impact of each single input pixel when classifying a previously unseen image. It does not require hyperparameter tuning, is robust under different architectures and datasets, and works with both custom deep network models and existing pre-trained ones.

Kindermans et al. [44] showed that, while the Deconvolution [32], Guided BackPropagation [31], and LRP [37] methods help in interpreting deep neural networks, they do not produce the theoretically correct interpretation, even in the simplest neural network setting: a linear model developed while using data that were produced by a linear generative model. Using this simplified setup, the authors showed that the direction of the network’s gradient does not necessarily provide an estimate for the signal in the data, but instead corresponds to the relationship between the signal and noise; the array of parameters that are learnt by the network is in the noise-cancelling direction rather than the direction of the signal. In order to address this issue, after introducing a quality criterion for neuron-wise signal estimators in order to evaluate existing methods and ultimately obtain estimators that optimize towards this criterion, the authors proposed two interpretation methods that are theoretically sound for linear models, PatternNet and PatternAttribution. The former is used to estimate the correct direction, improving upon the DeConvNet [32] and Guided BackPropagation [31] visualizations, while the latter is used to identify how much the different signal dimensions contribute to the output through the network layers. As both of the methods treat neurons independently, the produced interpretation is a superposition of the interpretations of the individual neurons.

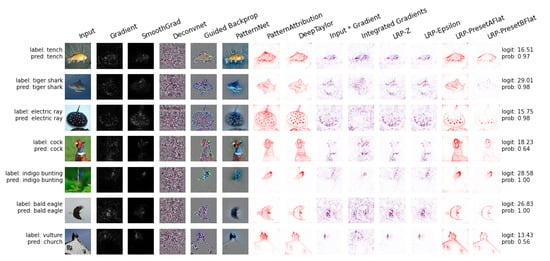

In Figure 3, a comparison of several interpretability methods for explaining deep learning models on ImageNet sample images, while using the innvestigate package, is presented.

Figure 3.

Comparison of Interpretability Methods to Explain Deep Learning Models on ImageNet sample images, using the innvestigate package.

3.1.2. Interpretability Methods to Explain any Black-Box Model

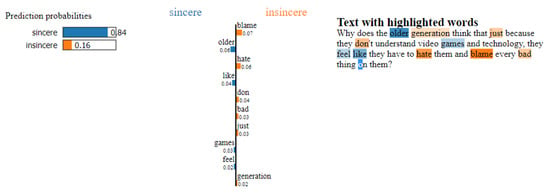

This section focuses on interpretability techniques, which can be applied to any black-box model. First introduced in [45], the local interpretable model-agnostic explanations (LIME) method is one of the most popular interpretability methods for black-box models. Following a simple yet powerful approach, LIME can generate interpretations for single prediction scores produced by any classifier. For any given instance and its corresponding prediction, simulated randomly-sampled data around the neighbourhood of the input instance, for which the prediction was produced, are generated. Subsequently, while using the model in question, new predictions are made for the generated instances and weighted by their proximity to the input instance. Lastly, a simple, interpretable model, such as a decision tree, is trained on this newly-created dataset of perturbed instances. By interpreting this local model, the initial black box model is consequently interpreted. Although LIME is powerful and straightforward, it has its drawbacks. In 2020, the first theoretical analysis of LIME [46] was published, validating the significance and meaningfulness of LIME, but also proving that poor choices in terms of parameters could lead LIME to miss important features. Figure 4 illustrates the application of the LIME method, in order to explain the rationale behind the classification of an instance of the Quora Insincere Questions Dataset.

Figure 4.

Local interpretable model-agnostic explanations (LIME) is used to explain the rationale behind the classification of an instance of the Quora Insincere Questions Dataset.
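The LIME procedure (perturb, predict, weight by proximity, fit a local surrogate) can be sketched in a few lines of Python. The following is a minimal illustration under stated assumptions and not the reference implementation of [45]: the helper names (`solve_linear_system`, `lime_like_explanation`), the Gaussian perturbation scheme, the exponential proximity kernel, and the parameters `scale` and `kernel_width` are hypothetical choices made here, and a weighted linear model is used as the interpretable surrogate in place of a decision tree.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def lime_like_explanation(predict, instance, n_samples=500, scale=0.5,
                          kernel_width=1.0, seed=0):
    """Return local linear coefficients of `predict` around `instance`."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # 1. Sample a perturbed point in the neighbourhood of the instance.
        z = [v + rng.gauss(0.0, scale) for v in instance]
        # 2. Query the black box on the perturbed point.
        targets.append(predict(z))
        # 3. Weight the sample by its proximity to the original instance.
        dist2 = sum((zi - vi) ** 2 for zi, vi in zip(z, instance))
        weights.append(math.exp(-dist2 / kernel_width ** 2))
        rows.append([1.0] + z)  # leading 1.0 is the intercept column
    # 4. Fit the surrogate via weighted normal equations (X^T W X) b = X^T W y.
    k = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(k)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[1:]  # drop the intercept; one coefficient per feature


def black_box(z):
    # Hypothetical nonlinear model standing in for any classifier or regressor.
    return z[0] ** 2 + 3.0 * z[1]


print(lime_like_explanation(black_box, [1.0, 2.0]))
```

For this toy black box, the returned coefficients should lie roughly near the local slopes of the function at the explained point (about 2 for the first feature and 3 for the second), although the exact values depend on the sampling and kernel settings, which is the sensitivity to parameter choices noted in [46].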

Zafar and Khan [47] argued that the random perturbation and feature selection methods that LIME utilises result in unstable generated interpretations. This is because, for the same prediction, different interpretations can be generated, which can be problematic for deployment. In order to address this uncertainty, a deterministic version of LIME, DLIME, is proposed. In this version, random perturbation is replaced with hierarchical clustering to group the data and k-nearest neighbours (KNN) to select the cluster to which the instance in question is believed to belong. Using three medical datasets, they demonstrate the superiority of DLIME over LIME in terms of the Jaccard similarity among multiple generated explanations.

SHAP: SHapley Additive exPlanations (SHAP) [48] is a game-theory inspired method that attempts to enhance interpretability by computing the importance values of each feature for individual predictions. Firstly, the authors define the class of additive feature attribution methods, which unifies six current methods, including LIME [45], DeepLIFT [29], and Layer-Wise Relevance Propagation [49], which all use the same explanation model. Subsequently, they propose SHAP values as a unified measure of feature importance that maintains three desirable properties: local accuracy, missingness, and consistency. Finally, they present several different methods for SHAP value estimation and provide experiments demonstrating not only the superiority of these values in terms of differentiating among the different output classes, but also in terms of better aligning with human intuition when compared to many other existing methods.
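To make the game-theoretic idea concrete, the sketch below computes exact Shapley values by enumerating all feature coalitions, which is only feasible for a handful of features. It is an illustration under simplifying assumptions rather than one of the estimation methods of [48]: the function names are hypothetical, and features outside a coalition are simply replaced by a fixed `baseline` value instead of being averaged over a background data distribution.

```python
from itertools import combinations
from math import factorial


def make_value_function(predict, instance, baseline):
    """Value of a coalition: prediction with absent features set to baseline."""
    def value(coalition):
        mixed = [instance[j] if j in coalition else baseline[j]
                 for j in range(len(instance))]
        return predict(mixed)
    return value


def shapley_values(value, n_features):
    """Exact Shapley values by enumeration of all coalitions (O(2^n))."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Classic Shapley weight |S|! (n - |S| - 1)! / n!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(x):
    # Hypothetical model with one additive term and one interaction term.
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(make_value_function(model, instance, baseline), 3)
print(phi)  # approximately [2.0, 3.0, 3.0]
```

The attributions sum to `model(instance) - model(baseline)`, which is the local accuracy property, and the interaction term `x[1] * x[2]` is split equally between the two features involved.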

Anchors: in [50], another model-agnostic interpretability approach that works for any black-box model with a high probability guarantee was proposed. Under this approach, high-precision, if-then rules, called anchors, are created and utilised in order to represent local, sufficient conditions for prediction. More specifically, given a prediction for an instance, an anchor is defined as a rule that sufficiently decides the prediction locally, which means that any changes to other feature values of the instance do not essentially affect the prediction value. The anchors are constructed incrementally while using a bottom-up approach. Each anchor is initialized with an empty rule, one that applies to every instance. Subsequently, in an iterative fashion, new candidate rules are generated and the candidate rule with the highest estimated precision replaces the previous one for that specific anchor. If, at any point, the current candidate rule meets the definition of an anchor, the desired anchor has been identified and the iterative process terminates. The authors note that this approach, attempting to discover the shortest anchor, does not directly compute and optimise towards the highest coverage. However, they highlight that such short anchors are likely to have a high coverage. By conducting a user study, the authors demonstrated that anchors not only lead to higher human precision when compared to linear explanations, but they also require less effort by the user in both their application and understanding/interpretation.

Originally proposed in [51], the contrastive explanations method (CEM) is capable of generating what the authors call contrastive explanations for any black box model. More specifically, given any input and its corresponding prediction, the method can identify not only which features should be minimally and sufficiently present for that specific prediction to be produced, but also which features should be minimally and necessarily absent. Many interpretation methods focus on the former part and ignore the features that are minimally, but critically, absent when trying to form an interpretation. However, according to the authors, these absent parts play an important role when it comes to forming interpretations and such interpretations are natural to humans, as demonstrated in domains such as healthcare and criminology. Luss et al. [52] extended the CEM framework to images with much richer structure. This was achieved by defining monotonic functions that correspond to, and enable, the introduction of more concepts into an image without the deletion of any existing ones.

Wachter et al. [53] proposed a lightweight model agnostic interpretability method providing counterfactual explanations, called counterfactuals. A counterfactual explanation of a prediction describes the smallest possible change that can be applied to the feature values, so that the output prediction can be changed to a desired predefined output. The goal of this approach was not to shed light on the inner workings of a black-box system or provide insight on its decision-making, but, instead, to identify and reveal which external factors would require changing in order for the desired output to be produced. The authors highlight that counterfactual explanations, being a minimal interpretability form, are not appropriate in all scenarios and pinpoint that, in cases where the goal is the understanding of a black-box system’s functionality or the rationalisation of automated decisions, using counterfactual explanations alone may even be insufficient. Despite the downsides that are described above, counterfactual explanations can serve as an easy first step that balances between the desired properties of transparency, explainability, and accountability, as well as regulatory business interests.

Van Looveren et al. [54] underlined some problems with the previous counterfactual approach [53], most notably that it does not take local, class-specific interpretability into account, as well as that the counterfactual searching process, growing proportionally to the dimensionality of the feature space, can be computationally expensive. In order to address these issues, they proposed an improved faster, model agnostic technique for finding explainable counterfactual explanations of classifier predictions. This novel method incorporates class prototypes, constructed using either an encoder or class specific k-d trees, in the cost function to enable the perturbations to converge much faster to an interpretable counterfactual, hence removing the computational bottleneck and making the method more suitable for practical applications. In order to illustrate the effectiveness of their approach and the quality of the produced counterfactuals, the authors introduced two new metrics focusing on local interpretability at the instance level. By conducting experiments on both image data (MNIST dataset) and tabular data (Wisconsin Breast Cancer dataset), they showed that prototypes help to produce counterfactuals of superior quality. Finally, they pointed out that the perturbation of an input variable implies some notion of distance or rank among the different values of the variable; a notion that is not naturally present in categorical variables. Therefore, producing meaningful perturbations and subsequent counterfactuals for categorical features is not as straightforward. To this end, the authors proposed the use of embeddings, based on pairwise distances between the different values of a categorical variable, and empirically demonstrated the effectiveness of the proposed embeddings when combined with their method on census data.

ProtoDash: the work that was detailed in [55] regarding prototypes was extended in [56] by associating non-negative weightings to prototypes corresponding to their contribution, consequently creating a unifying coherent framework for both prototypes and criticisms/outliers. Moreover, under the proposed framework, any symmetric positive definite kernel can be used, resulting in objective functions with nice properties. Subsequently, the authors propose ProtoDash, a fast, mathematically sound approximation algorithm for prototype selection that operates under the proposed framework to optimally select prototypes and learn their non-negative weights.

Permutation importance (PIMP) [57] is a heuristic approach that attempts to correct the feature importance bias through the normalisation of feature importance measures. The method, following the assumption that the random importance of a feature follows some probability distribution, attempts to estimate its parameters. This is achieved by repeatedly permuting the output array and measuring, for each variable, the distribution of importance under these permutations; the importance observed on the non-permuted output is then compared against this distribution. The derived p-value serves as a proxy to the corrected measure of feature importance. The usefulness of the method was demonstrated while using both simulated and real-world data to improve interpretability. As a result, an improved Random Forest (RF) model, called PIMP-RF, was proposed, which was trained on the most important features, as determined by the PIMP algorithm. PIMP can be used to complement and improve any feature-importance ranking algorithm by assigning p-values to each variable according to their permuted importance, thus improving model performance as well as model interpretability.
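The permutation scheme can be illustrated with a short sketch. It rests on assumptions that are not part of [57]: the absolute Pearson correlation is used as a stand-in importance measure (any feature-importance score could be plugged in through the hypothetical `importance` argument), and an empirical p-value is computed directly from the permuted importances instead of fitting a parametric distribution to them.

```python
import random


def abs_correlation(xs, ys):
    """Stand-in importance measure: absolute Pearson correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return abs(cov) / (vx * vy) ** 0.5


def pimp_p_values(columns, response, importance=abs_correlation,
                  n_permutations=200, seed=0):
    """Empirical p-value of each feature's importance under permuted outputs."""
    rng = random.Random(seed)
    # Importance measured on the original, non-permuted response.
    observed = [importance(col, response) for col in columns]
    exceed = [0] * len(columns)
    shuffled = list(response)
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break any feature-response association
        for k, col in enumerate(columns):
            if importance(col, shuffled) >= observed[k]:
                exceed[k] += 1
    # Add-one smoothing keeps the p-value strictly positive.
    return [(1 + e) / (1 + n_permutations) for e in exceed]


# Toy data: feature 0 determines the response, feature 1 is unrelated.
x0 = [float(i) for i in range(50)]
x1 = [float((i * 7) % 11) for i in range(50)]
y = list(x0)
print(pimp_p_values([x0, x1], y))
```

A small p-value indicates that the observed importance is unlikely to arise by chance, so the informative feature receives a much smaller p-value than the unrelated one.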

L2X [58] is a real-time instance-wise feature selection method that can also be used for model interpretation. More specifically, given a single training example, it tries to find the subset of its input features that are most informative in terms of the corresponding prediction for that instance. The subset is decided by a feature selector, through variational approximation, which is solely optimised towards maximising the mutual information between input features and the respective label. In the same study, a new measure called post-hoc accuracy was proposed in order to evaluate the performance of the L2X method in a quantitative way. Experiments using both real and synthetic data sets illustrate the effectiveness of the method not only in terms of post-hoc accuracy, but also in terms of human-judgment evaluation, especially when it comes to nonlinear additive and feature-switching data sets.

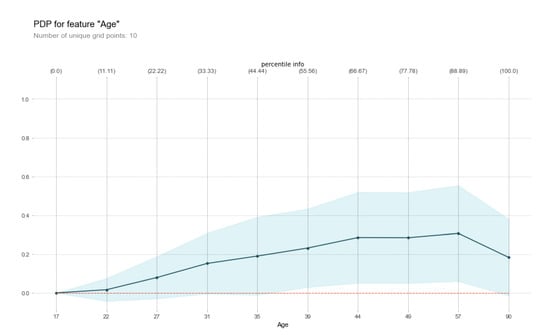

Friedman [59] proposed partial dependence plots (PDPs), a visualisation tool that helps to interpret any black box predictive model by plotting the impact of specific features or subsets of features on the model’s predictions. More specifically, PDPs show how a certain set of features affects the average predicted value by marginalizing the rest of the features (its complement feature set) out. PDPs are usually very simplistic and they do not take all the different feature interactions into account. As a result, most of the time they cannot provide a very accurate approximation of the true functional relationships between the dependent and independent variables. However, they can often reveal useful information, thus greatly assisting in interpreting black box models, especially in cases where most of these interactions are of low order. Although primarily used to identify the partial relationship between a set of given features and the corresponding predicted value, PDPs can also provide visualisations for both single and multi-class problems, as well as for the interactions between features. In Figure 5, the PDP of a Random Forest model is presented, illustrating the relationship between age (feature) and income percentile (label) while using the Census Income dataset (UCI Machine Learning Repository).

Figure 5.

PDP of a Random Forest model, illustrating the relationship between age (feature) and income percentile (label) using the Census Income dataset (UCI Machine Learning Repository).
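The marginalisation behind a PDP can be written down directly. The following is a minimal sketch, assuming a black box exposed as a plain Python function `predict` and a dataset given as a list of rows; the function name `partial_dependence` and the toy model are hypothetical and only serve to illustrate the averaging step.

```python
def partial_dependence(predict, data, feature, grid):
    """Average prediction over the data with `feature` fixed to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature] = value  # fix the feature of interest
            total += predict(modified)  # other features keep observed values
        curve.append(total / len(data))
    return curve


def model(x):
    # Hypothetical black box: purely additive in both features.
    return 2.0 * x[0] + x[1]


data = [[0.0, 1.0], [5.0, 3.0]]
print(partial_dependence(model, data, feature=0, grid=[0.0, 1.0, 2.0]))
# [2.0, 4.0, 6.0]
```

Because the toy model is additive, the curve recovers the slope of the first feature exactly; with strong interactions the averaged curve can hide instance-level behaviour.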

Originally proposed in [60], Individual Conditional Expectation (ICE) plots are a model-agnostic interpretability method, which builds on the concept of PDPs and improves it. After highlighting the limitations of PDPs in capturing the complexity of the modeled relationship in cases where substantial interaction effects are present, the authors present a refinement of the original concept. Under this refinement, each plot illustrates the functional relationship between the predicted value and the feature for individual instances. As a result, given a feature, the entire distribution of individual conditional expectation functions becomes available, which enables the identification of heterogeneities and their extent.
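The difference between the averaged view and the per-instance view can be seen in a small sketch. It assumes a black box given as a Python function and a dataset given as a list of rows; the name `ice_curves` and the toy interaction model are hypothetical.

```python
def ice_curves(predict, data, feature, grid):
    """One curve per instance: predictions as `feature` sweeps over the grid."""
    curves = []
    for row in data:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def model(x):
    # Hypothetical black box with a pure interaction between the two features.
    return x[0] * x[1]


data = [[0.0, -1.0], [0.0, 1.0]]
grid = [0.0, 1.0, 2.0]
curves = ice_curves(model, data, feature=0, grid=grid)
print(curves)

# Averaging the individual curves pointwise gives the partial dependence curve.
average = [sum(c[k] for c in curves) / len(curves) for k in range(len(grid))]
print(average)
```

In this toy case the two individual curves have opposite slopes, so their average is flat: the averaged curve would suggest that the feature has no effect, while the individual curves reveal the heterogeneity.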

Another method that is closely related to PDPs is the Accumulated Local Effects (ALE) plot [61]. ALE plots, trying to address the most significant shortcoming of PDPs, namely their assumption of independence among features, compute the conditional instead of the marginal distribution. More specifically, in order to average over other features, instead of averaging the predictions, ALE plots calculate the average differences in predictions, thus blocking the effect of correlated features.
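The idea of averaging prediction differences within local intervals, and then accumulating them, can be sketched as follows. This is a simplified illustration under stated assumptions, not the estimator of [61]: the bin `edges` are supplied by hand rather than derived from quantiles, the resulting curve is left uncentred, and the function name is hypothetical.

```python
def accumulated_local_effects(predict, data, feature, edges):
    """Uncentred first-order ALE values at each bin edge (starting from 0)."""
    accumulated = [0.0]
    for k in range(len(edges) - 1):
        lower, upper = edges[k], edges[k + 1]
        differences = []
        for row in data:
            value = row[feature]
            # Bins are (lower, upper], except the first, which includes lower.
            in_bin = lower < value <= upper or (k == 0 and value == lower)
            if in_bin:
                high = list(row)
                high[feature] = upper
                low = list(row)
                low[feature] = lower
                # Only instances that actually fall in the bin are used, so
                # unrealistic feature combinations are not evaluated.
                differences.append(predict(high) - predict(low))
        local = sum(differences) / len(differences) if differences else 0.0
        accumulated.append(accumulated[-1] + local)
    return accumulated


def model(x):
    # Hypothetical black box; the second feature does not distort the result.
    return 2.0 * x[0] + 5.0 * x[1]


data = [[0.5, 10.0], [1.5, -3.0], [2.5, 7.0]]
print(accumulated_local_effects(model, data, feature=0,
                                edges=[0.0, 1.0, 2.0, 3.0]))
# [0.0, 2.0, 4.0, 6.0]
```

Since each difference is taken with all other features held at the instance's own values, the contribution of the second feature cancels out and only the effect of the feature of interest accumulates.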

LIVE [62] is a method that is similar to LIME [45], as they both utilise surrogate models to approximate local properties of the black box models and produce coefficients of these surrogate models that are subsequently used as interpretations. However, LIVE differentiates itself from LIME in terms of local exploration as well as in terms of handling of interpretable inputs. LIVE does not create an interpretable input space by transforming the input features, but, instead, makes use of the original feature space; artificial datapoints for local exploration are generated by perturbing the datapoint in question, one feature at a time. Because the perturbed datapoints very closely match the original ones, the identity kernel is employed to measure the similarities among them, while the original features are used as interpretable inputs.

The breakDown method, as proposed in [62], is similar to SHAP [48], as both, based on the conditioned responses of a black-box model, attempt to attribute them proportionally to the input features. However, unlike SHAP, in which the contribution of a feature is averaged over all possible conditionings, the breakDown method deals with conditionings in a greedy way, only considering a single series of nested conditionings. This approach, although not as theoretically sound as SHAP, is faster to compute and more natural in terms of interpretation.

ProfWeight: Dhurandhar et al. [63] proposed transferring knowledge from high-performing pre-trained deep neural networks to a low performing, but interpretable non-complex model to improve its performance. This was achieved by using confidence scores that are indicative of the higher level data representations that were learnt by the intermediate layers of the deep neural network, as weights during the training process of the interpretable, non-complex model.

This concludes the examination of machine learning interpretability methods that explain black-box models. A diverse pool of methods, exploiting different kinds of information, has been developed, offering explanations for the different types of models as well as the different types of data, with the majority of the literature focussing on image and text data. That said, no best-in-class method has been developed to address every need, as most methods focus on either a specific type of model or a specific type of data, or their scope is either local or global, but not both. Of the methods presented, SHAP is the most complete, providing explanations for any model and any type of data, doing so at both a global and local scope. However, SHAP is not without shortcomings: the kernel version of SHAP, KernelSHAP, like most permutation-based methods, does not take feature dependence into account, thus often over-weighting unlikely data points. Moreover, while TreeSHAP, the tree version of SHAP, solves this problem, its reliance on conditional expected predictions is known to produce non-intuitive feature importance values, as features with no impact on predictions can be assigned an importance value that is different to zero.

3.2. Interpretability Methods to Create White-Box Models

This category encompasses methods that create models that are interpretable and easily understandable by humans. The models in this category are often called intrinsic, transparent, or white-box models. Such models include the linear, decision tree, and rule-based models and some other more complex and sophisticated models that are equally transparent and, therefore, promising for the interpretability field. This work will focus on more complex models, as the linear, decision tree and elementary rule-based models have been extensively discussed in many other scientific studies. A summary of the discussed interpretability methods to create white-box models can be found in Table 3.

Table 3.

Interpretability Methods to Create White-Box Models.

Ustun and Rudin [64] proposed Supersparse Linear Integer Models (SLIM), a type of predictive system that only allows for additions, subtractions, and multiplications of input features to generate predictions, thus being highly interpretable.

In [65], Microsoft presented two case studies on real medical data, where naturally interpretable generalized additive models with pairwise interactions (GA2Ms), as originally proposed in [66], achieved state-of-the-art accuracy, showing that GA2Ms are the first step towards deploying interpretable high-accuracy models in applications like healthcare, where interpretability is of utmost importance. GA2Ms are generalized additive models (GAM) [67], but with a few tweaks that set them apart, in terms of predictive power, from traditional GAMs. More specifically, GA2Ms are trained while using modern machine learning techniques such as bagging and boosting, while their boosting procedure uses a round-robin approach through features in order to reduce the undesirable effects of co-linearity. Furthermore, any pairwise interaction terms are automatically identified and, therefore, included, which further increases their predictive power. In terms of interpretability, as additive models, GA2Ms are naturally interpretable, being able to calculate the contributions of each feature towards the final prediction in a modular way, thus making it easy for humans to understand the degree of impact of each feature and gain useful insight into the model’s predictions.

Boolean Rule Column Generation [68] is a technique that utilises Boolean rules, either in their disjunctive normal form (DNF) or in their conjunctive normal form (CNF), in order to create predictive models. In this case, interpretability is achieved through rule simplicity: a low number of Boolean rules with few clauses and conditions in each clause can more easily be understood and interpreted by humans. The authors highlighted that most column generation algorithms, although efficient, can lead to computational issues when it comes to learning rules for large datasets, due to the exponential size of the rule-space, which corresponds to all possible conjunctions or disjunctions of the input features. As a solution, they introduced an approximate column-generation algorithm that employs randomization in order to efficiently search the rule-space and learn interpretable DNF or CNF classification rules while optimally balancing the tradeoff between classification accuracy and rule simplicity.

Generalized Linear Rule Models [69], which are often referred to as rule ensembles, are Generalized Linear Models (GLMs) [70] that are linear combinations of rule-based features. The benefit of such models is that they are naturally interpretable, while also being relatively complex and flexible, since rules are able to capture nonlinear relationships and dependencies. Under the proposed approach, a GLM is re-fit as rules are created, thus allowing for existing rules to be re-weighted, ultimately producing a weighted combination of rules.

Hind et al. [71] introduced TED, a framework for producing local explanations that satisfy the complexity mental model of a domain. The goal of TED is not to dig into the reasoning process of a model, but, instead, to mirror the reasoning process of a human expert in a specific domain, who effectively creates a domain-specific explanation system.

In summary, not a lot of progress has been made in recent years towards developing white-box models. This is most likely the result of the immense complexity modern applications require, in combination with the inherent limitations of such models in terms of predictive power, especially in computer vision and natural language processing, where the difference in performance when compared to deep learning models is unbridgeable. Furthermore, because models are increasingly expected to perform well on more than one task and transfer of knowledge from one domain to another is becoming a common theme, white-box models, currently being able to perform well only in a single task, are losing traction within the literature and are dropping further behind in terms of interest.

3.3. Interpretability Methods to Restrict Discrimination and Enhance Fairness in Machine Learning Models

Because machine learning systems are increasingly adopted in real life applications, any inequities or discrimination that are promoted by those systems have the potential to directly affect human lives. Machine Learning Fairness is a sub-domain of machine learning interpretability that focuses solely on the social and ethical impact of machine learning algorithms by evaluating them in terms of impartiality and discrimination. The study of fairness in machine learning is becoming more broad and diverse, and it is progressing rapidly. Traditionally, the fairness of a machine learning system has been evaluated by checking the models’ predictions and errors across certain demographic segments, for example, groups of a specific ethnicity or gender. In terms of dealing with a lack of fairness, a number of techniques have been developed both to remove bias from training data and from model predictions and to train models that learn to make fair predictions in the first place. In this section, the most widely-used machine learning fairness methods are presented, discussed and finally summarised in Table 4.

Table 4.

Interpretability Methods to Restrict Discrimination and Enhance Fairness in Machine Learning Models.

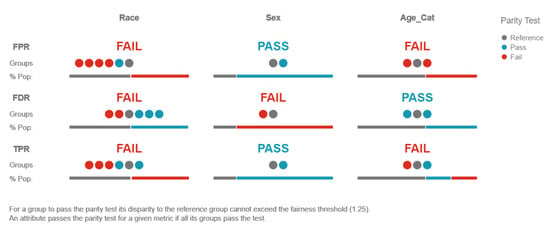

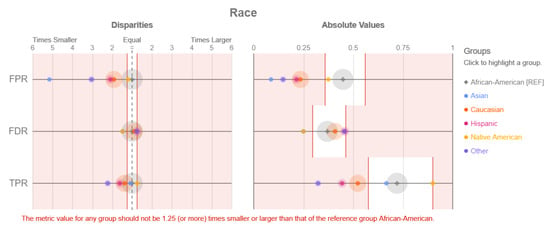

Disparate impact testing [72] is a model agnostic method that is able to assess the fairness of a model, but is not able to provide any insight or detail regarding the causes of any discovered bias. The method conducts a series of simple experiments that highlight any differences in terms of model predictions and errors across different demographic groups. More specifically, it can detect biases regarding ethnicity, gender, disability status, marital status, or any other demographic. While straightforward and efficient when it comes to selecting the most fair model, the method, due to the simplicity of its tests, might fail to pick up on local occurrences of discrimination, especially in complex models.
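As a rough illustration of such a test, the sketch below compares the rate of favourable predictions across two demographic groups. The function names, the toy data, and the encoding of the favourable outcome as `1` are assumptions made here; the ratio of selection rates is one common summary statistic and is not claimed to be the exact procedure of [72].

```python
def selection_rates(predictions, groups):
    """Fraction of favourable (== 1) predictions within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by the privileged one.

    Assumes the privileged group receives at least one favourable prediction.
    """
    rates = selection_rates(predictions, groups)
    return rates[unprivileged] / rates[privileged]


# Hypothetical model outputs for eight individuals in two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(predictions, groups))  # {'a': 0.75, 'b': 0.25}
print(disparate_impact_ratio(predictions, groups, "b", "a"))
```

A ratio far below 1 signals that the unprivileged group receives favourable predictions much less often; in practice a threshold such as 0.8 is often used as a convention for flagging a model. The same grouping can be applied to error rates instead of predictions.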

A way to ensure fairness in machine learning models is to alter the model construction process. In [73], three different data preprocessing techniques to ensure fairness in classification tasks are analysed. The first presented technique, which is called suppression, detects the features that correlate the most, according to some threshold, with any sensitive features, such as gender or age. In order to diminish the impact of the sensitive features in the model’s decisions, the sensitive features along with their most correlated features are removed before training. This forces the model to learn from and, therefore, base its decisions on other attributes, thus not being biased against certain demographic segments. The second technique is called “massaging the dataset” and it was originally proposed in [74]. In order to remove the discrimination from the input data, according to this technique, some instances in the dataset are relabelled. First, using a ranker, the instances most likely to be victims (discriminated ones) or most likely to be profiters (favoured ones) are detected, according to their probability of belonging to the corresponding class without taking the sensitive attributes into account. Subsequently, their labels are changed and a classifier is trained on this modified data that is free of bias. Finally, the idea behind the third preprocessing technique, called reweighing, as initially presented in [75], is to apply different weights to the instances of the dataset based on frequency counts with respect to the sensitive column. The weight of an instance is calculated as the expected probability that its sensitive feature value and class appear together, while assuming that they are independent, divided by the respective observed probability. Reweighing has a similar effect to the “massaging” approach, but its major advantage is that it does not alter the labels of the dataset.
Similarly to disparate impact testing, the described data preprocessing methods might fail to pick up on local occurrences of discrimination, especially in complex models.
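The weight of the third technique, expected probability under independence divided by observed probability, translates into only a few lines. The sketch below is an illustration with hypothetical names and toy data; it assumes a single categorical sensitive attribute and a single class label per instance.

```python
from collections import Counter


def reweighing_weights(sensitive, labels):
    """Weight = P(s) * P(c) / P(s, c) for each instance's (s, c) pair."""
    n = len(labels)
    group_counts = Counter(sensitive)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(sensitive, labels))
    weights = []
    for s, c in zip(sensitive, labels):
        expected = (group_counts[s] / n) * (label_counts[c] / n)  # independence
        observed = joint_counts[(s, c)] / n
        weights.append(expected / observed)
    return weights


# Toy data: group "m" is mostly labelled 1, group "f" is mostly labelled 0.
sensitive = ["m", "m", "m", "m", "f", "f", "f", "f"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(sensitive, labels)
print(weights)
```

In this example the over-represented combinations (`m` with label 1, `f` with label 0) receive weights of about 0.67, while the under-represented ones receive a weight of 2, so that the weighted positive rate becomes 0.5 in both groups without changing any label.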

Another data preprocessing technique for removing the bias from machine learning models was proposed in [76]. More specifically, having the following three goals in mind: controlling discrimination, limiting distortion in individual instances, and preserving utility, the authors derived a convex optimization for learning a data representation that captures these goals.

Adversarial debiasing [77] is a framework for mitigating biases concerning demographic segments in machine learning systems by selecting a feature regarding the segment of interest and, subsequently, training a main model and an adversarial model simultaneously. The main model is trained in order to predict the label, whereas the adversarial model, based on the main model’s prediction for each instance, tries to predict the demographic segment of the instance; the objective is to maximize the main model’s ability to correctly predict the label, while, at the same time, minimizing the adversarial model’s ability to predict the demographic segment in question. Adversarial debiasing can be applied to both regression and classification tasks, regardless of the complexity of the chosen model. With regards to the sensitive features of interest, both continuous and discrete values can be handled and any imposed constraints can be enforced across multiple definitions of fairness.

Kamiran et al. [78] pointed out that many of the methods that make classifiers aware of discriminatory biases require data modifications or algorithm tweaks and they are not very flexible with respect to multiple sensitive feature handling and control over the performance vs. discrimination trade-off. As a solution to these problems, two new methods that utilise decision theory in order to create discrimination-aware classifiers were proposed, namely Reject Option based Classification (ROC) and Discrimination-Aware Ensemble (DAE), neither of which requires any data preprocessing or classifier adjustments. ROC can be viewed as a cost-based classification method, in which misclassifying an instance of a non-favoured group as negative results in a much higher penalty than wrongly predicting a member of a favoured group as negative. DAE employs an ensemble of classifiers. Ensembles, by nature, can be very useful in reducing bias. According to the authors, this is because the greater the number of classifiers and the more diverse these classifiers are, the higher the probability that some of them will be fair. Under this assumption, the discrimination awareness of such an ensemble can be controlled by adjusting the diversity of its voting classifiers, while the trade-off between accuracy and discrimination in DAEs depends on the disagreements between the voting classifiers and the number of instances that are incorrectly classified.
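A reject-option style decision rule can be sketched as a simple post-processing step on predicted probabilities. The formulation below, in which low-confidence predictions inside a critical region are resolved in favour of the non-favoured group, is an assumption made here for illustration and is not taken from the description above; the function name, the group labels, and the threshold `theta` are hypothetical.

```python
def reject_option_classify(prob_positive, group, deprived_group, theta=0.6):
    """Post-process one prediction; `theta` should lie in (0.5, 1)."""
    confidence = max(prob_positive, 1.0 - prob_positive)
    if confidence <= theta:
        # Critical region: the classifier is uncertain, so the favourable
        # label goes to the deprived group and is withheld from the others.
        return 1 if group == deprived_group else 0
    # Outside the critical region the usual decision rule applies.
    return 1 if prob_positive >= 0.5 else 0


# Hypothetical (probability of the favourable class, group) pairs.
examples = [(0.55, "f"), (0.55, "m"), (0.45, "f"), (0.90, "m"), (0.10, "f")]
for prob, group in examples:
    print(prob, group, reject_option_classify(prob, group, deprived_group="f"))
```

Widening the critical region (a larger `theta`) reduces discrimination further at the cost of accuracy, which gives direct control over the trade-off without retraining the underlying classifier.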

Liu et al. [79] highlighted that most work in machine learning fairness had studied the notion of fairness within static environments and had not been concerned with how decisions change the underlying population over time. They argued that seemingly fair decision rules have the potential to cause harm to disadvantaged groups and presented the case of loan decisions as an example where the introduction of seemingly fair rules can actually decrease the credit score of the affected population over time. After emphasising the importance of temporal modelling and continuous measurement in evaluating what is considered fair, they concluded that, in order for fairness rules to be set, an approach is needed that dynamically takes the long-term effects of such rules on the population into consideration, rather than one that only considers what seems to be fair at a stationary point.

The problem of algorithmically allocating resources when in shortage was studied in [80] and, more specifically, the notion of fairness within this procedure in terms of groups and the potential consequences. An efficient learning algorithm is proposed that converges to an optimal fair allocation, even without any prior knowledge of the frequency of instances in each group; only the number of instances that received the resource in a given allocation is known, rather than the total number of instances. In the case of loan decisions, this corresponds to the fact that the creditworthiness of individuals who were not given loans is not known; in the case of policing, it corresponds to the fact that some crimes committed in areas of low policing presence are not known either. As an application of their framework, the authors considered the predictive policing problem, and experimentally evaluated their proposed algorithm on the Philadelphia Crime Incidents dataset. The effectiveness of the proposed method was demonstrated, as, although trained on arrest data that were produced by its own predictions for the previous days, potentially resulting in feedback loops, the algorithm managed to overcome them.

Feedback loops in the context of predictive policing and the allocation of policing resources were also studied in [81]. More specifically, the authors first highlighted that feedback loops are a known issue in predictive policing systems, where a common scenario includes police resources being spent repeatedly on the same areas, regardless of the true crime rate. Subsequently, they developed a mathematical model of predictive policing which revealed the reasons behind the occurrence of feedback loops and showed that a relationship exists between the severity of problems that are caused by a runaway feedback loop and the variance in crime rates among areas. Finally, upon acknowledging that incidents reported by citizens can alleviate the impact of runaway feedback, the authors demonstrated ways of altering the model inputs, through which predictive policing systems that are able to overcome the runaway feedback loop and are, therefore, capable of learning the true crime rate, can be produced.
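The runaway dynamic can be illustrated with a toy urn-style simulation. This is a minimal sketch under our own assumptions, not the mathematical model of [81]: each day a single patrol is sent to an area with probability proportional to the incidents recorded there so far, and an incident is recorded only in the patrolled area. The function and parameter names are hypothetical.

```python
import random


def simulate_patrols(true_rates, initial_counts, days, seed=0):
    """Simulate recorded incident counts per area under a feedback loop.

    true_rates     -- per-area probability that a patrol records an incident
    initial_counts -- positive starting counts of recorded incidents
    days           -- number of patrol days to simulate
    """
    rng = random.Random(seed)
    counts = list(initial_counts)
    areas = range(len(counts))
    for _ in range(days):
        # Patrols follow the recorded data, not the true crime rates.
        area = rng.choices(areas, weights=counts)[0]
        # Incidents are observed only where the patrol was sent.
        if rng.random() < true_rates[area]:
            counts[area] += 1
    return counts
```

Because unpatrolled areas never contribute new records, an early imbalance in the counts tends to reinforce itself even when the true rates are identical, which is the behaviour the paragraph above describes.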

Models of strategic manipulation are a category of models that attempt to capture the dynamics between a classifier and agents in an environment, where all of the agents are capable, to the same degree, of manipulating their features in order to deceive the classifier into making a decision in their favour. In real world social environments, however, an individual’s ability to adapt to an algorithm does not merely relate to their personal benefit of getting a favourable decision; instead, it heavily depends on a number of complex social interactions and factors within the environment. In [82], strategic manipulation models were studied and adapted in an environment of social inequality, in which different social groups have to pay different costs of manipulation. It was proven that, in such a setting, a decision making model exhibited a behaviour where some members of the advantaged group incorrectly received a favourable decision, while some members of the disadvantaged group incorrectly received a non-favourable one. The results also demonstrated that any tools attempting to evaluate an individual’s fitness or eligibility can potentially have harmful social consequences when the individuals’ capacities to adaptively respond differ. Finally, the authors concluded that the increasing use of decision-making machine learning tools in our imperfect social world will require the design of suitable models and the development of a sound theoretical background that would explicitly address critical issues, such as social stratification and unequal access, in order for true fairness to be achieved.

Milli et al. [83] also studied how individuals adjust their behaviour strategically to manipulate decision rules in order to gain favourable treatment from decision-making models. They reiterated that the design of more conservative decision boundaries in an effort to enhance robustness of decision making systems against such forms of distributional shift is significantly needed in order for fairness to be achieved. However, the authors showed, through experimentation, that although stricter decision boundaries add benefit to the decision maker, this is done at the expense of the individuals being classified. There is, therefore, some trade-off between the accuracy of the decision maker and the impact on the individuals in question. More specifically, a notion of “social burden” was introduced in order to quantify the cost of strategic decision making as the expected cost that a positive individual needs to meet to be correctly classified as positive, and it was proven that any increase in terms of the accuracy of the decision maker necessarily corresponds to an increase in the social burden for the individuals. Lastly, they empirically demonstrated that any extra costs occurring for individuals have the potential to be disproportionately harmful towards the already disadvantaged groups of individuals, highlighting that any strategy towards more accurate decision making must also weigh in social welfare and fairness factors.
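The verbal definition of social burden given above can be written compactly as follows. The notation is assumed here for illustration and may differ from that of [83]: f is the decision rule, c(x, x') is the cost for an individual with features x to present features x', and y = 1 marks a truly positive individual.

```latex
% Expected minimum cost that a truly positive individual must pay
% in order to be accepted by the decision rule f.
B_{+}(f) \;=\; \mathbb{E}\!\left[\; \min_{x' \,:\, f(x') = 1} c(x, x') \;\middle|\; y = 1 \right]
```

A stricter decision boundary shrinks the set of accepted feature vectors, so the inner minimum can only grow or stay the same, which matches the trade-off described in the text.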

Counterfactual fairness, which is defined strictly in [84], attempts to capture the intuition that a decision affecting an individual is fair if it would affect the same individual in the same way both in the actual world and in a counterfactual world, where the individual would be a member of a different demographic group. In the same study, it was argued that it was crucial for causality in fairness to be addressed and, subsequently, a framework for modeling fairness using tools from causal inference was proposed. According to the authors, measures of causality in fairness should not consist only of quantities free of counterfactuals; it is also essential that counterfactual causal guarantees are pursued. The proposed framework, which is based on the idea of counterfactual fairness, allows users to produce models that, instead of merely ignoring sensitive attributes that potentially reflect social biases towards individuals, are able to take such features into consideration and compensate for them accordingly.
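The intuition above is usually formalised along the following lines. The notation is assumed here for illustration: A is the sensitive attribute, X the remaining observed features, U the latent background variables of the causal model, and the subscript denotes the predictor's value had A been set to the indicated value.

```latex
% A predictor \hat{Y} is counterfactually fair if, for every outcome y,
% every context (X = x, A = a), and every alternative value a' of A:
P\bigl(\hat{Y}_{A \leftarrow a}(U) = y \,\bigm|\, X = x,\, A = a\bigr)
\;=\;
P\bigl(\hat{Y}_{A \leftarrow a'}(U) = y \,\bigm|\, X = x,\, A = a\bigr)
```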

The fairness of word embeddings, a vectorised representation of text data used in many real world machine learning applications, was studied in [85] and it was revealed that word embeddings, even those that were trained on Google News articles, carry strong gender bias. More specifically, two properties that are very useful for embedding debiasing were shown. Firstly, it was shown that there exists a direction in the embedding space along which gender stereotypes can be captured. Secondly, it was shown that gender neutral words can be linearly separated from gender definition words in the embedding space. Subsequently, the authors created metrics for quantifying both the direct and indirect gender stereotypes present in the word embeddings and proposed an algorithm that utilises the previous two properties and tweaks the embedding vectors in order for gender bias to be removed.
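One way to exploit the first property is to remove, from each gender neutral word vector, its component along the gender direction. The sketch below illustrates only this projection step under our own assumptions (plain Python lists as vectors, a gender direction that has already been estimated); it is not the full algorithm of [85], and the function names are hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def neutralize(word_vec, gender_direction):
    """Remove the component of word_vec along gender_direction.

    The result is re-normalised to unit length when it is non-zero.
    """
    g_norm = math.sqrt(dot(gender_direction, gender_direction))
    g = [x / g_norm for x in gender_direction]

    # Subtract the projection of the word vector onto the gender direction.
    projection = dot(word_vec, g)
    debiased = [w - projection * gi for w, gi in zip(word_vec, g)]

    norm = math.sqrt(dot(debiased, debiased))
    return [x / norm for x in debiased] if norm > 0 else debiased
```

After this step the vector is orthogonal to the gender direction, so a gender neutral word no longer leans towards either end of that axis.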

According to [86], fairness should be realised not only segment-wise, but also at an individual level. In order to achieve this, fairness was formulated into a data representation problem, where any representations learnt would need to be optimised towards two competing objectives: similar individuals should have similar encodings; however, such encodings should be ignorant of any sensitive information regarding the individual.

In [87], three approaches for making the naive Bayes classifier discrimination-free were proposed. The first approach was to regulate the conditional probability distribution of the sensitive feature values given that the label is positive, by simply boosting the probability of the disadvantaged sensitive feature values given the positive label, while, at the same time, decreasing the probability of the favoured sensitive feature values given the positive label. While easy to follow and implement, this approach brings the downside of either reducing or boosting the number of positive labels that are produced by the model, depending on the difference between the frequency of the favoured sensitive values and the frequency of the discriminated sensitive values in the input data. The second approach involves training a different model for every sensitive attribute value. The case where a sensitive feature has two values and, therefore, two models are trained, was illustrated: one model was developed using only the rows that had a favoured sensitive value, while another model only utilised the rows that had a discriminated sensitive value. The different models are part of a meta-model, where discrimination is mitigated by adjusting the conditional probability, as described in the first approach. In the third approach, a latent variable that corresponds to the non-biased label is introduced to the modelling procedure, and the model parameters are optimized towards likelihood-maximisation using the expectation-maximization (EM) algorithm.

In [88], a framework for fair classification, which consisted of two parts, was presented. More specifically, the first part involves the development of a task-specific metric in order to evaluate the degree of similarity among individuals with respect to the classification task, whereas the second part consists of an algorithmic procedure that is capable of maximizing the objective function, subject to the fairness constraint, according to which, similar decisions should be made for similar individuals. Furthermore, the framework was adjusted towards the much related goal of guaranteeing statistical parity, while, as previously, ensuring that similar individuals are provided with analogous decisions. Finally, the close relationship between privacy and fairness was discussed and, more specifically, how fairness can be further promoted using tools and approaches developed within the framework of differential privacy.
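The fairness constraint mentioned above, that similar individuals should receive similar decisions, is typically expressed as a Lipschitz condition. The notation is assumed here for illustration: M maps an individual to a distribution over outcomes, d is the task-specific similarity metric between individuals, and D is a distance between outcome distributions.

```latex
% Individual fairness as a Lipschitz condition on the mapping M:
D\bigl(M(x),\, M(y)\bigr) \;\le\; d(x, y)
\qquad \text{for all individuals } x,\, y .
```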

The difference between the fairness of the decision making process, also known as procedural fairness, and the fairness of the decision outcomes, also known as distributive fairness, was brought up by the authors of [89], who also emphasised that the majority of the scientific work on machine learning fairness revolved around the latter. For this gap to be bridged, procedural fairness metrics were introduced in order for the impact of input features used in the decision to be taken into consideration and for the moral judgments of humans regarding the use of these features to be quantified.

Building on [90], where the concept of meritocratic fairness was introduced, Kearns et al. [91] performed a more comprehensive analysis on the broader issue of realising superior guarantees in terms of performance, while relaxing the model assumptions. Furthermore, the issue of fairness in infinite linear bandit problems was studied and a scheme for meritocratic fairness regarding online linear problems was produced, which was significantly more generic and robust than the existing methods. Under this scheme, fairness is satisfied by ensuring optimality in terms of reward: no actions that lead to preferential treatment are taken, unless the algorithm is certain that the reward of such an action would be higher. In practice, this is achieved by calculating confidence intervals around the expected rewards for the different individuals and, based on this process, two individuals are said to be linked if their corresponding confidence intervals are overlapping, and chained if they can reach each other through a chain of intermediate linked individuals.
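The linked and chained relations can be computed directly from the confidence intervals. The sketch below is illustrative only: it assumes the intervals are already available as (lower, upper) pairs keyed by a hypothetical individual identifier, and it groups individuals into chains with a simple graph traversal.

```python
def linked(a, b):
    """Two closed intervals (lo, hi) are linked if they overlap."""
    return a[0] <= b[1] and b[0] <= a[1]


def chained_groups(intervals):
    """Group individuals that are connected through linked intervals.

    intervals -- dict mapping an individual's name to a (lo, hi) pair
    Returns a list of sorted name lists, one per chain.
    """
    seen = set()
    groups = []
    for start in intervals:
        if start in seen:
            continue
        seen.add(start)
        stack, group = [start], []
        # Depth-first traversal over the "linked" relation.
        while stack:
            current = stack.pop()
            group.append(current)
            for other in intervals:
                if other not in seen and linked(intervals[current], intervals[other]):
                    seen.add(other)
                    stack.append(other)
        groups.append(sorted(group))
    return groups
```

In the example `{"a": (0.1, 0.4), "b": (0.3, 0.6), "c": (0.55, 0.8)}`, individuals a and c are not linked, but they are chained through b, so none of the three could be confidently preferred over the others.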

The fact that the majority of notions or definitions of machine learning fairness merely focus on predefined social segments was criticised in [96]. More specifically, it was highlighted that such simplistic constraints, while forcing classifiers to achieve fairness at segment-level, can potentially bring discrimination upon sub-segments that consist of certain combinations of the sensitive feature values. As a first step towards addressing this, the authors proposed defining fairness across an exponential or infinite number of sub-segments, which were determined over the space of sensitive feature values. To this end, an algorithm that produces the most fair, in terms of sub-segments, distribution over classifiers was proposed. This is achieved by the algorithm through viewing the sub-segment fairness as a zero-sum game between a Learner and an Auditor, as well as through a series of heuristics.

Following up on other studies demonstrating that the exclusion of sensitive features cannot fully eradicate discrimination from model decisions, Kamishima et al. [99] presented and analysed three major causes of unfairness in machine learning: prejudice, underestimation, and negative legacy. In order to address the issue of indirect prejudice, a regulariser that was capable of restricting the dependence of any probabilistic discriminative model on sensitive input features was developed. By incorporating the proposed regulariser into logistic regression classifiers, the authors demonstrated its effectiveness in purging prejudice.
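One natural way to quantify the dependence of a model's predictions on a sensitive feature is the empirical mutual information between the two. The sketch below computes that quantity from discrete predictions; it is offered as an assumed illustration of the kind of dependence such a regulariser penalises, not as the regulariser of [99] itself, and the function name is hypothetical.

```python
import math
from collections import Counter


def prejudice_index(predictions, sensitive):
    """Empirical mutual information (in nats) between two discrete sequences.

    predictions -- predicted labels, one per instance
    sensitive   -- sensitive feature values, aligned with predictions
    """
    n = len(predictions)
    joint = Counter(zip(predictions, sensitive))
    count_y = Counter(predictions)
    count_s = Counter(sensitive)

    mi = 0.0
    for (y, s), c in joint.items():
        p_ys = c / n
        p_y = count_y[y] / n
        p_s = count_s[s] / n
        # Each observed cell contributes p(y, s) * log(p(y, s) / (p(y) p(s))).
        mi += p_ys * math.log(p_ys / (p_y * p_s))
    return mi
```

A value of zero indicates that the predictions are statistically independent of the sensitive feature in the sample, while larger values indicate stronger dependence.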