Abstract

Sentiment polarity classification in social media is an important task, as it enables gathering trends on particular subjects from a set of opinions. Great advances have recently been made with deep learning techniques, such as word embeddings, recurrent neural networks, and encoders such as BERT. Unfortunately, these techniques require large amounts of data, which in some cases are not available. To model this situation, challenges such as the Spanish TASS, organized by the Spanish Society for Natural Language Processing (SEPLN), have been proposed. They pose particular difficulties. First, there is an unusual split between the training and test sets, the latter being more than eight times the size of the former. Second, the class distribution is markedly unbalanced and also differs between both sets. Finally, there are four different labels, which creates the need to adapt current classification methods for multiclass handling. Traditional machine learning methods, such as Naïve Bayes, Logistic Regression, and Support Vector Machines, achieve modest performance under these conditions, but when used as an ensemble they can attain competitive performance. Several strategies to build classifier ensembles have been proposed; this paper proposes estimating an optimal weighting scheme using a Differential Evolution algorithm focused on dealing with the particular issues that multiclass classification and unbalanced corpora pose. The ensemble with the proposed optimized weighting scheme improves the classification results on the full test set of the TASS challenge (General corpus), achieving state-of-the-art performance when compared with other works on this task that make no use of NLP techniques.

1. Introduction

Sentiment polarity refers to the opinion people have about an entity (e.g., film, service, news, etc.). Several machine learning methods have been used to automatically determine the polarity of text published on the Internet [1,2,3,4]. In general, polarity is automatically determined in various domains using different approaches, for example, in health prediction [5,6,7,8] or transportation [9].

Sentiment polarity classification can be tackled as a supervised classification problem, where classes correspond to the polarity expressed in opinions (e.g., positive or negative); classifiers are trained on tagged examples and generate a model that relates features to the corresponding tag. Some classifiers learn particular features better, while classifiers that fail on particular cases perform well on others. Ensemble learning uses a set of classifiers and combines their predictions in different ways. It was shown in [10] that an ensemble of classifiers is more accurate than its individual members if each member has an error rate below 0.5 and the members make different errors when classifying new instances, i.e., members are accurate and diverse. One way to combine their predictions is to apply a voting strategy that can give the same weight to each classifier (hard voting) or different weights (soft voting). Two determining factors of a voting ensemble have been studied: the set of classifiers to be combined [11] and the weight assigned to each classifier [12]. This work focuses on the second problem.
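The condition stated in [10] can be illustrated numerically. The following is a minimal sketch under strong simplifying assumptions that are not part of the source: a binary task, an odd number of members, and statistically independent errors. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_classifiers: int, member_accuracy: float) -> float:
    """Probability that a strict majority of the members is correct.

    Assumes a binary task and independent errors (an idealization).
    """
    if n_classifiers % 2 == 0:
        raise ValueError("use an odd number of classifiers to avoid ties")
    majority = n_classifiers // 2 + 1
    # Sum the binomial probabilities of having k correct members,
    # for every k that constitutes a majority.
    return sum(
        comb(n_classifiers, k)
        * member_accuracy ** k
        * (1.0 - member_accuracy) ** (n_classifiers - k)
        for k in range(majority, n_classifiers + 1)
    )


if __name__ == "__main__":
    for accuracy in (0.4, 0.6):
        print(accuracy, majority_vote_accuracy(3, accuracy))
```

Under these assumptions, three members with accuracy 0.6 vote their way to an ensemble that beats each member, whereas three members with accuracy 0.4 (error rate above 0.5) produce an ensemble that is worse than each member.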

Our main goal is to find the best weights assigned to each classifier in ensemble learning while using a soft voting scheme to improve sentiment polarity classification in a multiclass, unbalanced corpus. Particularly, we focus on the Spanish TASS task [13] organized by the Spanish Society for Natural Language Processing (SEPLN) (www.sepln.org). There are several challenges to tackle in this task:

- The fact that polarities are specified in four labels: positive, negative, neutral, and none. Thus, this task has to be modeled as a multiclass problem.

- The corpus we experiment with poses several difficulties: it is designed to have a small training subset (approximately 10%), while the test set is around 90%.

- Classes are not uniformly represented, and their distribution varies in the two subsets.

Hence, in this work, the weighting scheme for Twitter sentiment polarity in an unbalanced corpus with four possible polarity values (positive, negative, neutral, and none) is addressed through an optimization approach. This approach involves the formulation of an optimization problem whose solution is based on the differential evolution algorithm.

Although several works [14,15] have proposed different strategies for calculating the weighting scheme of an ensemble, the corpora used in their experiments are balanced; to our knowledge, the effects of applying a weighting scheme on an unbalanced corpus have not been explored. In addition, the classifiers of previous works are designed to learn only two possible outputs (positive or negative). Adjusting the weighting scheme for multiclass classification, unlike binary classification, could be more challenging when considering the number of possible combinations in the solutions. Therefore, this paper proposes a solution to optimize the weighting scheme of an ensemble to tackle a multiclassification problem with unbalanced classes.

The rest of this paper is structured as follows: Section 2 gives details of current methods dealing with this problem; Section 3 describes some preliminaries, such as details on the selected task, classifiers, and a formal definition of ensemble learning; Section 4 presents the main proposal of this work, the evolutionary optimization of the weighted ensemble classification; Section 5 presents the experiments and results; and, finally, Section 6 draws our conclusions.

2. Related Work

The problem of learning from unbalanced datasets has been addressed in early works, such as [16]. With the aim of improving the performance of SVMs in the imbalanced dataset context, the authors integrate over-sampling and under-sampling to balance the data and propose the ensemble of SVM (EnSVM) model in order to integrate the classification results of weak classifiers constructed individually on the processed data, and develop a genetic algorithm-based model called EnSVM+ to improve the performance of classification through classifier selection. Inspired by this work, we aimed to propose an ensemble, but focused both on linguistic features and multiclass problems.

Regarding linguistic features, ref. [17] describes a linguistic analysis framework, in which a number of similarity or dissimilarity features are extracted for each entailment pair in a data set and various classifier methods are evaluated based on the instance data that were derived from the extracted features. They compare and contrast the performance of single and ensemble based learning algorithms of datasets from the RTE1 to RTE5 challenges. They show that only one heterogeneous ensemble approach demonstrated a slight improvement over the technique of Naïve Bayes and none of the homogeneous methods were more accurate than Naïve Bayes. Nevertheless, finding an optimal combination of classifiers is still an important issue.

Over the past few years, the use of evolutionary computing techniques in classification tasks has increased because these techniques help find an approximate solution closer to the global solution, while remaining independent of particular characteristics of the optimization problem, such as discontinuities, nonlinearities, the need for discrete design variables, etc. Additionally, evolutionary computing techniques are flexible in the sense that they allow merging diverse strategies in order to improve the exploration and exploitation capabilities of the algorithm in the evolutionary process. In [18], the multi-objective version of the Binary Bat Algorithm, with local search strategies employing social learning concepts in designing random walks, is used on three widely used microarray cancer datasets to explore significant bio-markers. A bio-inspired hierarchical model for analyzing musical timbre is presented in [19]; the model extracts three profiles for timbre: time-averaged spectrum, global temporal envelope, and instantaneous roughness. Different weight assignments for each feature in ensemble learning-based classification have been applied in [20].

Related to text classification, the Arabic Text Classification system (ATC-FA) is proposed in [21]; this system combines the algorithm of Support Vector Machines (SVM) with an intelligent Feature Selection method (FS) based on the Firefly Algorithm (FA). Genetic programming has been used in [22] to generate alternative term-weighting schemes (TWSs) in text classification, improving the performance of current schemes by combining TWSs, terms (TRs), and term-document (TDRs) with a predefined set of operators.

In [23], a hybrid ensemble pruning scheme that is based on clustering and randomized search for text sentiment classification is proposed. A consensus clustering scheme is presented to deal with the instability of clustering results that consists of self-organizing map algorithm (SOM), expectation maximization (EM), and K-means++ (KM++). The classifiers of the ensemble are initially clustered into groups according to their predictive characteristics. Subsequently, two classifiers from each cluster are selected as candidate classifiers based on their pairwise diversity. The search space of candidate classifiers is explored by the elitist Pareto-based multi-objective evolutionary algorithm for diversity reinforcement (ENORA).

In [24], a model is introduced to predict whether a tweet contains a location or not, and it is shown that location prediction is a useful pre-processing step for location extraction. To evaluate the model, the Ritter and MSM2013 datasets were used. To train the model, different machine learning algorithms were tried: Naive Bayes (NB), Support Vector Machine (SMO), and Random Forest (RF), using 10-fold cross-validation. To optimize accuracy and true positives, the thresholds were varied (0.05, 0.20, 0.50, 0.75) for NB and RF, and epsilon was varied (0.05, 0.20, 0.50, 0.75) for SMO. The conclusion was that RF and NB are the best machine learning solutions for this problem, as they perform better than SMO.

Usually, ensembles of classifiers are more accurate than the individual classifiers that compose them when each individual member has an error rate of less than 0.5 and the members make different errors when classifying new examples; that is, when they are accurate and diverse [10]. In recent years, deep learning methods have achieved high performance for several tasks; however, there are several problems for which a traditional machine learning approach is able to obtain state-of-the-art results, given that an appropriate ensemble is constructed [15,25,26,27,28].

In this sense, different schemes have been tried to combine the predictions of the base classifiers that form the ensemble classification. Particularly, for the soft weighting scheme, there have been two main approaches: the use of meta-heuristic algorithms proposed by [14] and the estimation of a weighting scheme based on the probabilities of classifiers and their accuracy, as described by [15].

In [14], the use of meta-heuristic algorithms in the weighting of ensemble learning improves classification performance. Onan et al. proposed a weighted ensemble learning scheme based on differential evolution for the analysis of opinion polarity (positive and negative). The ensemble incorporates the following classifiers: Bayesian Logistic Regression (BLR), NB, Linear Discriminant Analysis (LDA), LR, and SVM. The allocation of the appropriate weighting values to classifier outputs is established as an optimization problem where precision and recall are the objective functions. Their proposal improves the accuracy of the base classifiers and other classic methods of ensemble learning.

In [15], the polarity of opinion is determined in two classes (positive and negative) for tweets of the Stanford Sentiment140 English corpus, proposing a scheme that combines weights for the base classifiers NB, Random Forest (RF), SVM, and LR. The proposal weights the accuracy of each base classifier along with its probabilities of predicting a negative or positive class to calculate prediction scores. According to these scores, the authors determine the polarity of the training data. If the negative and positive scores are equal, the cosine similarity is calculated with other tweets in the test data and the prediction of the most similar tweet is chosen. With ensemble learning, the accuracy of the base classifier with better precision (SVM) is improved by 0.2%.

A multiobjective optimization-based weighted voting scheme was presented in [29]. Zhang et al. [30] propose adjusting the weight values of each base classifier by using the DE algorithm. Onan et al. [14] present a static classifier selection involving majority voting error and forward search, and Ankit and Seleena [15] weight the accuracy of each base classifier along with its probabilities of predicting a negative or positive class to calculate prediction scores. It is important to recall that the corpora used for all these experiments are balanced (except for the First GOP Debate Twitter Sentiment dataset used in [15]). Additionally, the classifiers of previous works are designed to learn only two possible outputs (positive or negative). This is why this paper proposes estimating an optimal weighting scheme using a Differential Evolution algorithm focused on dealing with the particular issues that multiclass classification and unbalanced corpora pose.

3. Preliminaries

This section outlines the selected task (Section 3.1); briefly describes it as a multiclassification task (Section 3.2); gives details on selected classifiers (Section 3.3); and, formally defines the problem of ensemble classification that will be used throughout the rest of this work (Section 3.4).

3.1. The Twitter Sentiment Polarity Task

In this research, the Spanish TASS corpus, organized by the Spanish Society for Natural Language Processing (SEPLN), was used. This corpus contains 68,017 tweets divided into two sets: a training set E with 7219 tweets and a test set Z with 60,798 tweets, with the polarity frequencies indicated in Table 1. As can be seen, this is a strongly unbalanced corpus; in addition, the test set Z is more than eight times larger than the training set E.

Table 1.

Opinion polarity distribution of tweets in TASS corpus.

This work aims to train with the E set to automatically classify the Z set of TASS into the polarities of opinion: Positive (P), Negative (N), None (NONE), or Neutral (NEU), hence the need for multiclass classification. This is detailed in the next section.

3.2. Multiclass Classification

Classification problems can be categorized according to the number of different values that classes can have. In binary classification, there are only two mutually exclusive classes; for instance, the spam detection task has two possible outputs: spam or valid email [31]. When the classification problem has more than two possible class values, it is considered to be a multiclass problem. An example of multiclass classification is to determine whether an opinion is positive, negative, or neutral [32]. Well-known classifiers, like Decision Trees and Neural Networks, can handle multiclass problems natively, but binary classifiers can be adapted to support multiclass classification. One of the most used strategies to transform a multiclass problem to a binary problem is One vs One (OVO) [33]. This strategy divides the original data set into two-class subsets, learning a different model for each new subset. Consider the following dataset to better explain this strategy:

The OVO strategy creates a data subset for each possible pair of classes. For an m-class problem, OVO creates $m(m-1)/2$ data sets, and each data set is used to train a binary classifier that distinguishes between one pair of classes [34]. For the dataset above, OVO creates the following data sets:

New instances can be predicted based on majority voting.
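As an illustration of the OVO strategy, the sketch below enumerates the class pairs for the four TASS labels and combines pairwise decisions by majority voting. The helper names `ovo_pairs` and `ovo_predict` and the listed pairwise winners are hypothetical; the tie-breaking rule (first label encountered wins) is an assumption, since the text does not specify one.

```python
from collections import Counter
from itertools import combinations


def ovo_pairs(classes):
    """Return every unordered pair of classes: m * (m - 1) / 2 pairs."""
    return list(combinations(classes, 2))


def ovo_predict(pairwise_winners):
    """Majority vote over the winners reported by the pairwise classifiers.

    Ties are resolved in favor of the label seen first (an assumption).
    """
    return Counter(pairwise_winners).most_common(1)[0][0]


labels = ["P", "N", "NEU", "NONE"]
pairs = ovo_pairs(labels)  # one binary classifier would be trained per pair

# Hypothetical winners of the six pairwise classifiers for one tweet,
# listed in the same order as `pairs`.
winners = ["P", "P", "P", "N", "NONE", "NEU"]
prediction = ovo_predict(winners)
```

With four labels there are six pairwise classifiers, so a class can collect at most three votes.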

3.3. Classifiers

Three classifiers were selected to create the ensemble, relying on previous results that have shown good performance [32]. Because Logistic Regression and Support Vector Machines are binary classifiers, it was necessary to use the multiclass transformation strategy explained in Section 3.2. The classifying methods (referred to as classifiers from now on) and their parameters are described next:

- Multinomial Naïve Bayes (NB). This is a native multiclass classification algorithm based on Bayes' theorem. It is called naïve because of the assumption of class conditional independence; in spite of this, it obtains good results, comparable to those of more complex techniques such as neural networks [35]. This classifier has an additive smoothing parameter, called alpha, whose value was set to 0.5.

- Logistic Regression (LR). Models the probability of an event's occurrence as a linear function of a set of predictor variables and can be used to predict the value of dependent variables. Because this algorithm builds a prediction model instead of a point estimate of the dependent variables, it is used as an effective classifier [36]. Parameter C, corresponding to the inverse of the regularization strength, was set to 1.0.

- Support Vector Machine (SVM). This algorithm uses a nonlinear mapping to transform the original dataset into a higher dimension, where it searches for a hyperplane that acts as the optimal decision boundary for partitioning data into classes [37]. It is important to mention that SVM does not generate class probabilities; they were calculated using the algorithm proposed by [38]. A radial basis function (RBF) kernel was used, with the kernel coefficient (gamma) set to 0.00001 and the penalty parameter of the error term (C) set to 3500.

3.4. Ensemble Classification

Ensemble classification considers the output of several classifiers, whose individual decisions are combined in some way, typically by weighted or unweighted voting, in order to classify new examples [12]. In this work, ensemble classification is used to classify new tweets with an ensemble of n classifiers. For the q-th tweet, each classifier i generates m probabilities, one per class: the probability that the q-th tweet belongs to the first class, the probability that it belongs to the second class, and so on for the m classes, as shown in Table 2.

Table 2.

Probabilities generated by classifier i that the q-th tweet belongs to each of the m classes.

The proposed weighting is a soft weighting scheme: the m probabilities generated by classifier i are all multiplied by the weight $w_i$ assigned to that classifier (see Table 3).

Table 3.

Weighting scheme of ensemble classification.

3.5. Accuracy of the Ensemble Classifier

There are different metrics to evaluate an automatic learning model: accuracy, model error, completeness, precision, recall, and F1 measure are some of them. In this work, accuracy will be used to evaluate the ensemble classifier.

Once the probabilities generated by the n classifiers are weighted, the probability weighted by class can be obtained for the q-th tweet that is described in (1).

The prediction of the ensemble learning for the q-th tweet is the maximum probability weighted by class described in (2).

Given the set of predictions of the ensemble learning and the set of real classes of the tweets, it is possible to know the number of matches between these two sets, as described in (3).

Finally, the accuracy of the ensemble learning with a given set of weights is described in (4).
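The computation referenced in (1) to (4) can be sketched as follows. This is an illustrative reading of the text, not the authors' implementation: classes are represented by integer indices, the function names are hypothetical, and the toy probabilities and weights are invented for the example.

```python
def weighted_class_probabilities(probs, weights):
    """Weighted probability per class for one tweet, as in (1).

    probs[i][j] is the probability given by classifier i to class j.
    """
    n_classes = len(probs[0])
    return [
        sum(w * p[j] for w, p in zip(weights, probs))
        for j in range(n_classes)
    ]


def ensemble_predict(probs, weights):
    """Index of the class with the maximum weighted probability, as in (2)."""
    scores = weighted_class_probabilities(probs, weights)
    return max(range(len(scores)), key=scores.__getitem__)


def ensemble_accuracy(all_probs, true_classes, weights):
    """Matches between predictions and real classes, as in (3),
    divided by the number of tweets, as in (4)."""
    matches = sum(
        ensemble_predict(probs, weights) == real
        for probs, real in zip(all_probs, true_classes)
    )
    return matches / len(true_classes)


# Toy data: two tweets, three classifiers, four classes (invented values).
toy_probs = [
    [[0.5, 0.2, 0.2, 0.1], [0.4, 0.3, 0.2, 0.1], [0.2, 0.5, 0.2, 0.1]],
    [[0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.7, 0.1]],
]
toy_truth = [1, 2]

equal = ensemble_accuracy(toy_probs, toy_truth, [1 / 3, 1 / 3, 1 / 3])
tuned = ensemble_accuracy(toy_probs, toy_truth, [0.2, 0.1, 0.7])
```

In this toy case, equal weights misclassify the first tweet, while a weight vector that favors the third classifier classifies both tweets correctly.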

3.6. Maximum Theoretical Accuracy

The intention of this work is to maximize the accuracy of the ensemble classifier. However, it is good to have a reference to know how far it is possible to maximize this accuracy. For this, the maximum accuracy of n classifiers was calculated. The prediction of classifier i for the q-th tweet is described in (5).

The predictions of n classifiers for the q-th tweet can be calculated, as shown in (6).

If the cardinality of the intersection of the set of predictions in (6) with the real class of the q-th tweet is greater than zero, at least one of the predictions of the n classifiers coincides with the real class of the q-th tweet, as described in (7).

As an example, we calculate the maximum accuracy of three classifiers for five tweets, given their real classes and the predictions of the three classifiers, as shown in Table 4. For this example, the maximum accuracy described in (9) is obtained.

Table 4.

Predictions of three classifiers for five tweets.
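The maximum theoretical accuracy can be sketched in a few lines. The function name `max_theoretical_accuracy` is hypothetical, and the five tweets below are invented for illustration; they are not the values of Table 4.

```python
def max_theoretical_accuracy(member_predictions, true_classes):
    """Fraction of tweets whose real class was predicted by at least one member.

    member_predictions[q] holds the predictions of the n classifiers
    for the q-th tweet.
    """
    hits = 0
    for predictions, real in zip(member_predictions, true_classes):
        # A non-empty intersection between the set of predictions and
        # the real class counts as one coincidence.
        if real in set(predictions):
            hits += 1
    return hits / len(true_classes)


# Invented example: five tweets, three classifiers.
real_classes = ["P", "N", "NEU", "NONE", "P"]
predictions = [
    ["P", "N", "P"],           # one member is right
    ["P", "P", "NONE"],        # no member is right
    ["NEU", "N", "N"],         # one member is right
    ["NONE", "NONE", "NONE"],  # all members are right
    ["N", "N", "NEU"],         # no member is right
]
upper_bound = max_theoretical_accuracy(predictions, real_classes)
```

No weighting scheme can exceed this value, because a weighted vote can only select a class that some member ranks highly; it serves as a reference ceiling rather than an attainable target.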

4. Evolutionary Optimization of the Weighted Ensemble Classification

A mono-objective optimization problem is considered in order to maximize the accuracy of the ensemble classifier. This can be described in a general way as maximizing the accuracy J subject to (10) and (11).

The design goal considers the e matches between the set of predictions of the ensemble learning and the set of real classes of the test tweets; the classifier weights are the quantities that must be adjusted to maximize J, as defined in (4).

The design variables are the weights assigned to the n classifiers. These design variables are grouped in a weight vector $w = (w_1, \dots, w_n)$.

It is necessary to narrow the search space, establishing boundaries for the design variables, in order to find optimal solutions to real-world problems. In the case of this problem, these limits are established as the inequality constraints (10).

Another restriction that must be met is that the sum of the weights assigned to classifiers must be equal to 1. This constraint is described in (11).
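One way to write the problem described above is given below. The notation is assumed for illustration: $w_i$ denotes the weight of classifier $i$, $e(w)$ the number of matches obtained with weights $w$, and $T$ the number of tweets being classified. The bounds 0 and 1 are also an assumption, chosen because nonnegative weights that sum to 1 cannot exceed 1; the text only states that boundaries are established.

```latex
\begin{aligned}
\max_{w}\quad & J(w) = \frac{e(w)}{T} \\
\text{subject to}\quad & 0 \le w_i \le 1, \qquad i = 1, \dots, n, \\
& \sum_{i=1}^{n} w_i = 1 .
\end{aligned}
```

Because $e(w)$ is a count, $J$ is piecewise constant in $w$, which is one reason a gradient-free method such as DE is a natural fit.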

4.1. Differential Evolution Algorithm

Differential Evolution (DE) is an evolutionary algorithm proposed by Rainer Storn and Kenneth Price to solve global optimization problems in continuous search spaces [39]. DE has characteristics of robustness, precision, and speed of convergence that have made it attractive, not only to solve problems with continuous search spaces [40,41], but also discrete spaces [42,43]. DE begins with a set of solutions, called the parent population, to which processes of crossover, mutation, and selection are applied to create child populations that approach optimum solutions in an iterative process. The parameters of DE are: population size NP, maximum number of generations $G_{max}$, crossover factor CR, and scale factor F.

There are different variants of DE, the most popular being DE/rand/1/bin, which is the one used in this work. The word rand indicates that the three individuals selected to calculate the mutation value are selected randomly, 1 is the number of pairs of solutions chosen, and bin indicates that a binomial recombination is used [44].

In this work, DE creates an initial population matrix with NP individuals, called the population of parents. Each individual of this population contains the n design variables, generated randomly as described in (12) and (13), respecting the inequality constraints (10) and the equality constraint (11). In the mutation process, a mutant individual is created from three parent individuals different from the current parent, together with the scale factor F. In the crossover process, the crossover factor CR determines whether each gene inherited by the child individual is taken from the mutant individual or from the current parent. Subsequently, it is verified whether the child individual complies with constraints (10) and (11). If not, the child is randomly regenerated with (12) and (13). Finally, in the selection process, the parent of the next generation is whichever of the child and the current parent has the greater accuracy. These processes continue iteratively until the maximum number of generations $G_{max}$ is reached. The population of the final generation contains the individuals with the best accuracy found over the $G_{max}$ generations. Algorithm 1 shows the complete pseudo-code of the implementation of the algorithm in order to optimize the weights of the ensemble classifier.

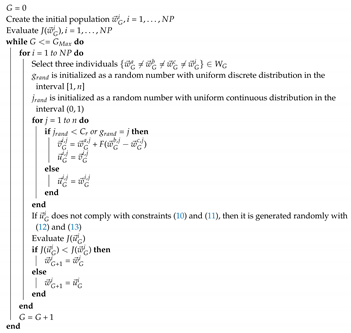

Algorithm 1: Pseudocode of the DE algorithm for the evolutionary optimization of the ensemble classifier.
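The DE/rand/1/bin loop described above can be sketched as follows. This is a minimal illustration and not a transcription of Algorithm 1. Several details are assumptions: the default values of `scale` (F) and `crossover` (CR) are placeholders, random individuals are drawn by normalizing uniform draws, and an infeasible child is renormalized when all its weights are nonnegative and regenerated randomly otherwise, whereas the text regenerates every infeasible child. All function names are hypothetical.

```python
import random


def random_weights(n, rng):
    """Random vector with entries in (0, 1] rescaled so that it sums to 1."""
    raw = [1.0 - rng.random() for _ in range(n)]  # avoids an all-zero draw
    total = sum(raw)
    return [x / total for x in raw]


def repair(candidate, rng):
    """Assumed repair: renormalize, or regenerate if any weight is negative."""
    total = sum(candidate)
    if min(candidate) < 0.0 or total <= 0.0:
        return random_weights(len(candidate), rng)
    return [x / total for x in candidate]


def de_rand_1_bin(fitness, n, pop_size=20, generations=300,
                  scale=0.5, crossover=0.9, seed=0):
    """Maximize fitness(weights) over weight vectors that sum to 1."""
    rng = random.Random(seed)
    parents = [random_weights(n, rng) for _ in range(pop_size)]
    scores = [fitness(p) for p in parents]
    for _ in range(generations):
        next_parents, next_scores = list(parents), list(scores)
        for i in range(pop_size):
            # Mutation: three distinct parents, all different from parent i.
            r1, r2, r3 = rng.sample([k for k in range(pop_size) if k != i], 3)
            mutant = [parents[r1][j] + scale * (parents[r2][j] - parents[r3][j])
                      for j in range(n)]
            # Binomial crossover: at least one gene comes from the mutant.
            forced = rng.randrange(n)
            child = [mutant[j] if (j == forced or rng.random() < crossover)
                     else parents[i][j] for j in range(n)]
            child = repair(child, rng)
            # Selection: keep the child only if it is at least as good.
            child_score = fitness(child)
            if child_score >= scores[i]:
                next_parents[i], next_scores[i] = child, child_score
        parents, scores = next_parents, next_scores
    best = max(range(pop_size), key=scores.__getitem__)
    return parents[best], scores[best]
```

In the setting of this work, `fitness` would be the ensemble accuracy for a given weight vector and `n` would be 3; any function that maps a weight vector to a score can be plugged in for experimentation.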

4.2. K-Fold Cross-Validation and Stratified K-Fold Cross-Validation

It is important to estimate the performance of classifiers in order to select the most appropriate scheme. A common strategy for this purpose is to use k-fold cross-validation, in which a dataset S is split into k mutually exclusive subsets, called folds, $S_1, S_2, \dots, S_k$, of approximately the same size [45]. Subsequently, classifiers are trained and tested k times; each time $t \in \{1, \dots, k\}$, the classifier is trained on the training subset $S \setminus S_t$ and tested on $S_t$ (the testing subset).

A variation of this strategy, called stratified k-fold cross-validation, considers the distribution of classes to create the folds [45]. The folds in this strategy are evenly distributed, so that they contain approximately the same proportions of labels as the original dataset. In our proposal, k is equal to 10 for both strategies; that is, the training set is divided into 10 folds.

Both strategies show the robustness of classifiers, and the average accuracy over the folds is a good estimator of the expected performance on the test set. Therefore, we apply the evolutionary optimization method described in Algorithm 1 on each fold to calculate the best weighting scheme. The selection of the best weighting scheme is described in the following subsection.
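A stratified split can be sketched with the standard library alone. The round-robin assignment below is one simple way to keep class proportions similar across folds and is an assumption, not necessarily the procedure used in the experiments; the function names are hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Split indices into k folds that roughly preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal the examples of this class to the folds in turn, continuing
        # where the previous class stopped so fold sizes stay balanced.
        for position, index in enumerate(indices):
            folds[(offset + position) % k].append(index)
        offset += len(indices)
    return folds


def train_test_splits(folds):
    """Yield (train_indices, test_indices), using each fold once for testing."""
    for t, test_fold in enumerate(folds):
        train = [i for s, fold in enumerate(folds) if s != t for i in fold]
        yield train, test_fold
```

For plain (random) k-fold cross-validation, the same `train_test_splits` helper can be applied to folds obtained by shuffling all indices and slicing them into k parts.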

4.3. Best Weights Selection Strategy

Evolutionary optimization algorithms provide a set of good solutions. From these solutions, the one that maximizes (or minimizes, depending on the problem) the objective function must be selected. A simple solution could just select the weighting scheme that maximizes accuracy, but this weighting scheme would have been calculated on a single fold of a test set, and there is no certainty that it could obtain the same good results in the test subsets from other folds. To avoid this bias in selecting the best solution, the following steps are followed:

- Train the classifiers described in Section 3.3 with each of the 10 training sets.

- Use Algorithm 1 to determine the weighting scheme that maximizes accuracy on each of the 10 testing sets. In this step, 10 candidates for the best weighting scheme are obtained, one for each testing fold.

- Use the weighting scheme obtained for each test set on the ensemble to classify the tweets of the remaining nine test sets.

- Calculate the average accuracy obtained by each weighting scheme of the ensemble on the test sets.

- Select the weighting scheme with the best average accuracy.

Just as cross-validation ensures the robustness of the classifiers, we consider that the selection strategy described above takes advantage of the diversity of samples in the folds to provide a global solution. The apparently straightforward selection strategy of averaging the best weighting vectors from each fold was also tested, without satisfactory results.
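The selection steps listed above can be sketched as a small function. It assumes that each candidate is averaged over the folds other than the one it was optimized on, which is one reading of the steps; the function name `select_best_weights` and the callback `accuracy_on_fold` are hypothetical.

```python
def select_best_weights(candidates, accuracy_on_fold):
    """Pick the candidate with the best average accuracy on the other folds.

    candidates[t] is the weight vector optimized on test fold t.
    accuracy_on_fold(weights, s) returns the ensemble accuracy on test fold s.
    """
    k = len(candidates)
    best_weights, best_average = None, float("-inf")
    for t, weights in enumerate(candidates):
        # Evaluate the candidate only on folds it was not tuned on.
        others = [s for s in range(k) if s != t]
        average = sum(accuracy_on_fold(weights, s) for s in others) / len(others)
        if average > best_average:
            best_weights, best_average = weights, average
    return best_weights, best_average
```

A candidate that scores very well on its own fold but poorly elsewhere is therefore not selected, which is the bias the strategy is meant to avoid.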

The complexity of Algorithm 1 is calculated as , where is the number of generations for crossover and mutation of individuals of the population, and n is the number of design variables that corresponds to the number of weights to be assigned to the classifiers (in this case, 3).

5. Experiments And Results

Our goal is to be able to correctly classify the polarity of tweets of the test set Z of the TASS corpus, as described in Section 3.1. In order to do so, first the classifiers are trained and adjusted on the training corpus E. Experiments and results on this set are described in Section 5.1; afterwards, the experiments on the test set Z are described in Section 5.2.

5.1. Experiments on the E Set

Several strategies can be explored for training and adjusting the ensemble learning scheme with differential evolution weight selection. The number of individuals and generations can be changed (see Section 5.1.1), as well as the way of creating folds (see Section 5.1.2). These experiments seek the optimal weighting scheme, which is subsequently applied to classify the TASS test set Z, as described in Section 5.2.

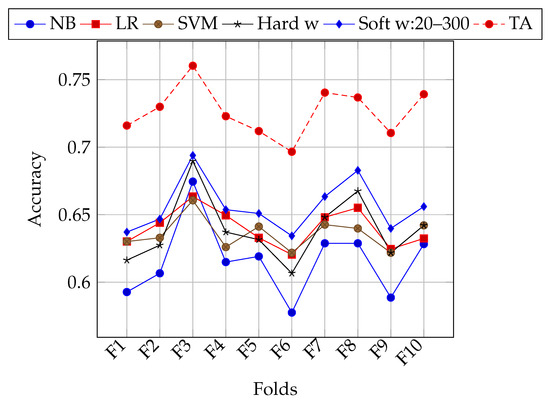

5.1.1. Random Folds

Table 5 shows the results of the first experiment with the training set E of the TASS corpus and 10 folds. Rows 1 to 3 show the accuracy obtained independently by the NB, LR, and SVM classifiers. Row 4 (Hard w) shows the accuracy obtained by ensemble classification with the same classifiers using a hard weighting (same weights for all classifiers). Row 5 (Soft w:20–300) shows the accuracy obtained by the ensemble classification using the weighting scheme of the best individual after the process of evolutionary optimization. For this selection, a random initial population of 20 individuals over 300 generations was used, and the experiment was run 30 times in order to ensure robustness in the results. The total execution time was about 16 h on a 20-core, dual-processor Intel Xeon E5-2690V2 server (ten cores at 3.0 GHz). The average of these 30 runs is reported. For the results shown in Row 6 (Soft w:200–1000), the population was increased from 20 individuals to 200, and the generations were increased from 300 to 1000, with the intention of achieving greater diversity in the population. As in Row 5 (Soft w:20–300), the experiment was run 30 times. The execution time was similar to that of the previous experiment. Because these changes did not have a significant effect on the results, no further increases in generations or individuals were tested. Row 7 (TA) shows the maximum theoretical accuracy, obtained by ideally selecting the correct result whenever it is provided by any classifier (see Section 3.6). The last column shows the average accuracy of the classifiers and the ensemble learning. The highest accuracy for each independent classifier on each fold is highlighted in italics, while the best overall accuracy (without considering TA) is shown in bold.

Table 5.

Results on random folds of the TASS training set E.

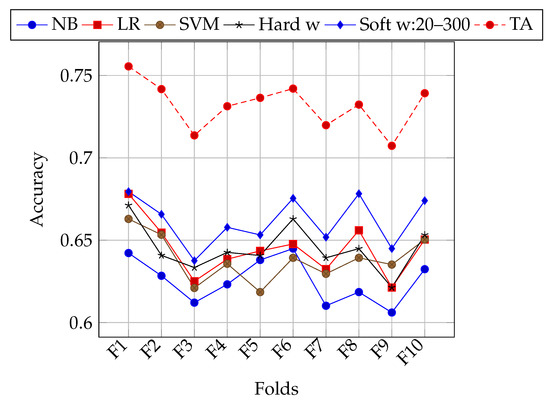

5.1.2. Stratified Folds

The folding strategy in the previous experiments consisted of randomly selecting tweets from the E set of the TASS corpus. As can be seen in Figure 1, for some folds all classifiers generally achieve better accuracy. This might be due to the class bias of tweet polarities (unbalanced number of tweets per class). Experiments with stratified k-folding were performed in order to lessen the impact of this bias on classification accuracy. Table 6 shows the results of using stratified k-folding, and Figure 2 shows the performance for each fold using stratification. Both configurations were used: 20 individuals with 300 generations, and 200 individuals with 1000 generations. In general, soft weighting improves the classification accuracy on stratified folds as well. Nevertheless, performance is still heterogeneous across folds.

Figure 1.

Accuracy of each classifier, different weighting schemes, and maximum theoretical accuracy on each fold of the E set of the TASS corpus.

Table 6.

Results on stratified folds of the training set of the TASS corpus E.

Figure 2.

Accuracy of each classifier, different weighting schemes, and maximum theoretical accuracy on each stratified fold of the E set of the TASS corpus.

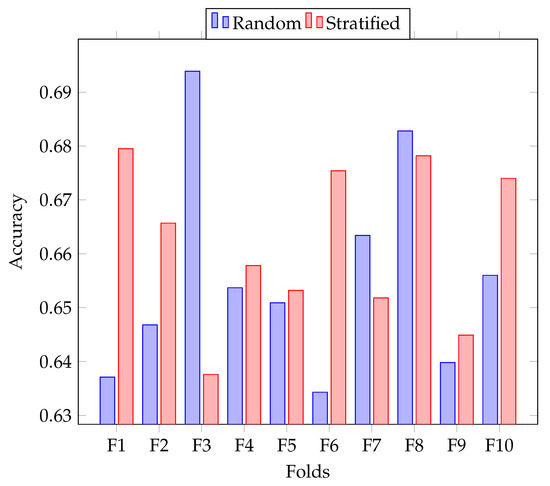

Figure 3 shows a comparison of the accuracy obtained by Soft w:20–300 on both folding strategies, random and stratified. Stratified folding improved the performance on most of the folds, but decreased it on others. On average, the accuracy on random folds was 0.6558, while the average accuracy on stratified folds was 0.6618.

Figure 3.

Accuracy of Soft w:20–300 on both random and stratified folding strategy for each created fold of the E set of the TASS corpus.

Different soft weights were found for each fold. The next section explains how the best weights for each folding strategy are selected in order to classify the tweets of the final test on the Z set.

5.2. Experiments on the Z Set

For each experiment described in the previous sections, a vector of optimal weights was obtained for each fold. The strategy detailed in Section 4.3 was applied to each experiment in order to select the best set of weights. The weighting vector selected on the random folds (from the Soft w:20–300 experiment) was [0.1713, 0.0380, 0.7905], while the vector selected on the stratified folds (using 20 individuals and 300 generations) was [0.1345, 0.0340, 0.8313]. The values in each vector are the weights of the NB, LR, and SVM classifiers, in that order.

It can be seen that, in both weight vectors, SVM is given a predominant weight (0.7905 and 0.8313, respectively). This is interesting because this classifier obtained a better average accuracy than NB, but a lower one than LR.

Once the weights were determined, the tweets in the test set were classified with each classifier, and the outputs were then combined in a voting scheme with the random-fold and stratified-fold weights, respectively. The results are shown in Table 7. As can be seen, the weights based on stratified folds (which obtained better results on the training set E) also yielded the best result on the test set Z.
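To make the effect of the predominant SVM weight concrete, the weighted vote for a tweet t and class c can be written out with the stratified-fold weights reported above. The notation s(c | t) and P_k(c | t), as well as the probabilities in the worked example, are illustrative assumptions; only the three weights come from the experiments.

```latex
\begin{align*}
s(c \mid t) &= 0.1345\,P_{\mathrm{NB}}(c \mid t) + 0.0340\,P_{\mathrm{LR}}(c \mid t) + 0.8313\,P_{\mathrm{SVM}}(c \mid t) \\
% Hypothetical tweet: NB and LR favour P (0.90 vs. 0.05), SVM favours N (0.60 vs. 0.30).
s(\mathrm{P} \mid t) &= 0.1345(0.90) + 0.0340(0.90) + 0.8313(0.30) \approx 0.4010 \\
s(\mathrm{N} \mid t) &= 0.1345(0.05) + 0.0340(0.05) + 0.8313(0.60) \approx 0.5072
\end{align*}
```

In this hypothetical case the ensemble follows SVM, even though NB and LR agree with high confidence on a different label.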

Table 7.

Results of the experiment with the Z set of the TASS corpus. Two Soft variants are reported: one with soft weights calculated from random folds and one with soft weights calculated from stratified folds.

5.3. Comparison with Other Works

Table 8 presents the best results reported by other systems on the same task. To our knowledge, the best accuracy reported so far is 0.726, by the LIF system. However, to compare these systems fairly, it is necessary to consider the external resources they use to improve classification. For example, the LIF system uses external affective lexicons, such as ElhPolar [46], SSL [47], LYSA [48], MPQA [49], and HGI [50]. The same holds for the four systems with the highest accuracies. Isolation from the effect of other resources is desirable because, in principle, we aim to improve classification accuracy by adjusting the weights of a classification ensemble. In that sense, our method is comparable to the LYS, SINAI-DW2Vec, and INGEOTEC systems. Our proposed classification method with soft weights on stratified k-folds exceeds the accuracy of these systems.

Table 8.

External resources used and Accuracy for TASS Task 1, 4 classes, Z corpus. Best run reported for each system.

Additionally, Table 9 gives a brief description of the tools used by the best methods for classifying tweet polarity on the TASS task. The first column after accuracy shows the maximum n-gram size used as features. In our work, we used only a bag of words, which is equivalent to using unigrams. We do not use a Named-Entity-Recognizer module or NLP techniques (such as lemmatization or part-of-speech tagging), and we do not handle negation with any particular method. Other works use feature augmentation methods based on deep learning (Word2Vec [55], Doc2Vec [56], GloVe [57]), distributional methods (LDA [58], LSI [59]), or other feature weighting methods (TF·IDF [60]). None of these was used in this work.

Table 9.

Classifiers used for systems in Table 8. (LR = Logistic Regression, SVM = Support Vector Machines, ME = MaxEnt, SG = SkipGrams).

The last column of Table 9 shows a very compact survey of the classifiers used by each system. Most works use Support Vector Machines (SVM). The first system (LIF) uses an ensemble of SVM and convolutional networks with skipgrams, bag of words, and vectors obtained from GloVe [57]. These results are fed to an SVM classifier. ELiRF, the second-best system, combines the output of several SVM classifiers with different parameters, and this information is then classified in cascade with another SVM classifier.

5.4. Discussion

The Differential Evolution strategy for optimizing the weights of a soft-voting ensemble was able to outperform the individual classifiers. As expected, on the E set the soft voting scheme performed better than hard weighting. Specifically, hard weighting achieves 66.57% accuracy, while the best weights obtained by Differential Evolution reach 67.71%.
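The optimization loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: it uses the classic DE/rand/1/bin variant with fitness equal to ensemble accuracy, and the mutation factor `f`, crossover rate `cr`, the clip-and-normalize step, and all function names are assumptions. The defaults `pop_size=20` and `generations=300` mirror the Soft w:20–300 configuration.

```python
import random
from typing import List, Sequence, Tuple


def ensemble_accuracy(weights: Sequence[float],
                      probas: Sequence[Sequence[Sequence[float]]],
                      gold: Sequence[int]) -> float:
    """Accuracy of the weighted vote; probas[k][i][c] is classifier k,
    tweet i, class c."""
    n_classes = len(probas[0][0])
    correct = 0
    for i, label in enumerate(gold):
        scores = [sum(w * clf[i][c] for w, clf in zip(weights, probas))
                  for c in range(n_classes)]
        if max(range(n_classes), key=scores.__getitem__) == label:
            correct += 1
    return correct / len(gold)


def normalize(vector: Sequence[float]) -> List[float]:
    """Clip to [0, 1] and rescale so that the weights sum to 1."""
    clipped = [min(max(x, 0.0), 1.0) for x in vector]
    total = sum(clipped)
    if total == 0.0:
        return [1.0 / len(clipped)] * len(clipped)
    return [x / total for x in clipped]


def optimize_weights(probas, gold, pop_size: int = 20, generations: int = 300,
                     f: float = 0.5, cr: float = 0.9,
                     seed: int = 0) -> Tuple[List[float], float]:
    """Search for ensemble weights with DE/rand/1/bin (pop_size must be >= 4)."""
    rng = random.Random(seed)
    dim = len(probas)
    pop = [normalize([rng.random() for _ in range(dim)]) for _ in range(pop_size)]
    fitness = [ensemble_accuracy(ind, probas, gold) for ind in pop]

    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            forced = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = [
                pop[a][d] + f * (pop[b][d] - pop[c][d])
                if (rng.random() < cr or d == forced) else pop[i][d]
                for d in range(dim)
            ]
            trial = normalize(trial)
            trial_fit = ensemble_accuracy(trial, probas, gold)
            # Greedy selection: keep the trial if it is at least as accurate.
            if trial_fit >= fitness[i]:
                pop[i], fitness[i] = trial, trial_fit

    best = max(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]
```

Because accuracy is piecewise constant in the weights, many weight vectors share the same fitness; accepting trials with equal fitness lets the population keep moving across such plateaus.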

Additionally, two different ways of partitioning the data for finding the best weights were explored: one based on random k-folds and the other on stratified k-folds. Stratified k-folds tend to improve the final classification. This strategy performed better on the E set (66.19% vs. 65.61%), and the Soft weight vector calculated on these folds contributed to a slightly better classification on the Z corpus (67.71% vs. 67.68%). In both folding strategies, soft weighting always outperformed the hard weighting scheme.

We experimented with the InterTASS corpus of 2018 (Spanish) in order to test our solution with a different corpus [61]. We applied the DE:Soft method without recalculating weights. The results are shown in Table 10. From this table, it can be seen that, although the full weight-adjustment process was not carried out, our method outperformed some of the neural-network-based methods (retuyt-cnn).

Table 10.

Results of our proposed method with the InterTASS ES corpus, as compared with top results [61].

We calculated the statistical significance of our experiments using the STAC platform [62], considering separately the results obtained with each classifier, with hard weights, and with soft weights. With the Shapiro–Wilk test [63], the null hypothesis is rejected at a significance level of 0.093, while for the Kolmogorov–Smirnov test [64], it is rejected with .

6. Conclusions

In this paper, we presented a method to optimize the weights of a classification ensemble. When compared with other methods that use no external resources, DE:Soft obtains state-of-the-art accuracy. As future work, it would be interesting to assess the effect of using our proposed method along with external resources in order to further improve scores for this task.

In general, this proposal could be used for problems where the training data are small relative to the amounts required by other state-of-the-art methods, such as deep learning. Automatic optimization of the weights for different classifiers makes it easy to adapt this method to other problems, including those with multiclass labels.

In both weight vectors, SVM is notably given a predominant weight, although it is interesting to see that this is not the best overall classifier if used alone. In this case, LR would be a better choice (see Table 5 and Table 6). Additionally, one of the best reported systems (GTI-GRAD) uses as its main classifier. This suggests that a deeper case-by-case analysis may enable classifiers to specialize in particular cases, along with a meta-classifier that dynamically adjusts the weights for each case. Another option is to create separate classifiers per class; this is left as future work. Other improvements to the Differential Evolution algorithm, such as different ways of partitioning the data, are also considered for further exploration.

Author Contributions

Conceptualization, O.J.G. and M.G.V.-C.; formal analysis, C.V.G.-M.; funding acquisition, H.G.; investigation, O.J.G.; methodology, C.V.G.-M., O.J.G., M.G.V.-C. and H.C.; software, C.V.G.-M.; supervision, O.J.G., M.G.V.-C. and H.C.; validation, O.J.G., M.G.V.-C. and H.C.; writing—original draft, C.V.G.-M., O.J.G. and H.C.; writing—review & editing, H.C. All authors have read and agreed to the published version of the manuscript.

Funding

The authors wish to thank the Government of Mexico for its support via CONACYT, SNI, and Instituto Politécnico Nacional (IPN) grants SIP 2083, SIP 20200811, SIP 20201252, and SIP 20201362, IPN-COFAA and IPN-EDI; AMPLN and SMIA.

Acknowledgments

The authors are grateful to Sotiris Kotsiantis, as well as to the anonymous reviewers, for their useful comments and discussion.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.D.; Stede, M. Lexicon-Based Methods for Sentiment Analysis. Comput. Linguist. 2011, 37, 267–307.

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment Classification Using Machine Learning Techniques. In Proceedings of the EMNLP2002, Philadelphia, PA, USA, 6–7 July 2002; pp. 79–86.

- Vilares, D.; Alonso, M.Á.; Gómez-Rodríguez, C. Supervised polarity classification of Spanish tweets based on linguistic knowledge. In Proceedings of the 2013 ACM Symposium on Document Engineering, Florence, Italy, 10–13 September 2013; pp. 169–172.

- Sidorov, G.; Miranda-Jiménez, S.; Viveros-Jiménez, F.; Gelbukh, A.; Castro-Sánchez, N.; Velásquez, F.; Díaz-Rangel, I.; Suárez-Guerra, S.; Treviño, A.; Gordon, J. Advances in Artificial Intelligence. In Proceedings of the 11th Mexican International Conference on Artificial Intelligence, MICAI 2012, San Luis Potosí, Mexico, 27 October–4 November 2012; Chapter Empirical Study of Machine Learning Based Approach for Opinion Mining in Tweets. Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–14.

- Ali, F.; El-Sappagh, S.; Islam, S.R.; Ali, A.; Attique, M.; Imran, M.; Kwak, K.S. An intelligent healthcare monitoring framework using wearable sensors and social networking data. Future Gener. Comput. Syst. 2020.

- Godínez, I.R.; López-Yáñez, I.; Yáñez-Márquez, C. Classifying patterns in bioinformatics databases by using Alpha-Beta associative memories. In Biomedical Data and Applications; Springer: Berlin/Heidelberg, Germany, 2009; pp. 187–210.

- Uriarte-Arcia, A.V.; López-Yáñez, I.; Yáñez-Márquez, C. One-hot vector hybrid associative classifier for medical data classification. PLoS ONE 2014, 9, e95715.

- García-Floriano, A.; Ferreira-Santiago, Á.; Camacho-Nieto, O.; Yáñez-Márquez, C. A machine learning approach to medical image classification: Detecting age-related macular degeneration in fundus images. Comput. Electr. Eng. 2019, 75, 218–229.

- Ali, F.; Kwak, D.; Khan, P.; El-Sappagh, S.; Ali, A.; Ullah, S.; Kim, K.H.; Kwak, K.S. Transportation sentiment analysis using word embedding and ontology-based topic modeling. Knowl.-Based Syst. 2019, 174, 27–42.

- Hansen, L.K.; Salamon, P. Neural network ensembles. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 993–1001.

- Dos Santos, E.M. Emotion classification of online news articles from the reader’s perspective. In Proceedings of the 2008 IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology, Sydney, NSW, Australia, 9–12 December 2008; pp. 419–430.

- Dietterich, T.G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

- Villena-Román, J.; García-Morera, J.; Cumbreras, M.Á.G.; Martínez-Cámara, E.; Martín-Valdivia, M.T.; López, L.A.U. Overview of TASS 2015. In Proceedings of the TASS 2015: Workshop on Semantic Analysis at SEPLN (TASS 2015), Alicante, Spain, 15 September 2015; pp. 13–21.

- Onan, A.; Korukoğlu, S.; Bulut, H. A multiobjective weighted voting ensemble classifier based on differential evolution algorithm for text sentiment classification. Expert Syst. Appl. 2016, 62, 1–16.

- Saleena, N. An Ensemble Classification System for Twitter Sentiment Analysis. Procedia Comput. Sci. 2018, 132, 937–946.

- Liu, Y.; Yu, X.; Huang, J.X.; An, A. Combining integrated sampling with SVM ensembles for learning from imbalanced datasets. Inf. Process. Manag. 2011, 47, 617–631.

- Rooney, N.; Wang, H.; Taylor, P.S. An investigation into the application of ensemble learning for entailment classification. Inf. Process. Manag. 2014, 50, 87–103.

- Dashtban, M.; Balafar, M.; Suravajhala, P. Gene selection for tumor classification using a novel bio-inspired multi-objective approach. Genomics 2018, 110, 10–17.

- Adeli, M.; Rouat, J.; Wood, S.; Molotchnikoff, S.; Plourde, E. A Flexible Bio-Inspired Hierarchical Model for Analyzing Musical Timbre. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 875–889.

- Ali, F.; El-Sappagh, S.; Islam, S.R.; Kwak, D.; Ali, A.; Imran, M.; Kwak, K.S. A smart healthcare monitoring system for heart disease prediction based on ensemble deep learning and feature fusion. Inf. Fusion 2020, 63, 208–222.

- Marie-Sainte, S.L.; Alalyani, N. Firefly Algorithm based Feature Selection for Arabic Text Classification. J. King Saud Univ. Comput. Inf. Sci. 2018.

- Escalante, H.J.; García-Limón, M.A.; Morales-Reyes, A.; Graff, M.; y Gómez, M.M.; Morales, E.F.; Martínez-Carranza, J. Term-weighting learning via genetic programming for text classification. Knowl.-Based Syst. 2015, 83, 176–189.

- Onan, A.; Korukoğlu, S.; Bulut, H. A hybrid ensemble pruning approach based on consensus clustering and multi-objective evolutionary algorithm for sentiment classification. Inf. Process. Manag. 2017, 53, 814–833.

- Hoang, T.B.N.; Mothe, J. Location extraction from tweets. Inf. Process. Manag. 2018, 54, 129–144.

- Jan, M.Z.; Verma, B. A novel diversity measure and classifier selection approach for generating ensemble classifiers. IEEE Access 2019, 7, 156360–156373.

- López, M.; Valdivia, A.; Martínez-Cámara, E.; Luzón, M.V.; Herrera, F. E2SAM: Evolutionary ensemble of sentiment analysis methods for domain adaptation. Inf. Sci. 2019, 480, 273–286.

- Tama, B.A.; Nkenyereye, L.; Islam, S.R.; Kwak, K.S. An Enhanced Anomaly Detection in Web Traffic Using a Stack of Classifier Ensemble. IEEE Access 2020, 8, 24120–24134.

- Rim, K.; Tu, J.; Lynch, K.; Pustejovsky, J. Reproducing Neural Ensemble Classifier for Semantic Relation Extraction in Scientific Papers. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 5569–5578.

- Ekbal, A.; Saha, S. A multiobjective simulated annealing approach for classifier ensemble: Named entity recognition in Indian languages as case studies. Expert Syst. Appl. 2011, 38, 14760–14772.

- Zhang, Y.; Zhang, H.; Cai, J.; Yang, B. A weighted voting classifier based on differential evolution. In Abstract and Applied Analysis; Hindawi: London, UK, 2014; Volume 2014.

- Sahami, M.; Dumais, S.; Heckerman, D.; Horvitz, E. A Bayesian approach to filtering junk e-mail. In AAAI-98 Workshop on Learning for Text Categorization; AAAI Press: Palo Alto, CA, USA, 1998; pp. 55–62.

- Gambino, O.J.; Calvo, H. A Comparison Between Two Spanish Sentiment Lexicons in the Twitter Sentiment Analysis Task. In Ibero-American Conference on Artificial Intelligence; Springer: Cham, Switzerland, 2016; pp. 127–138.

- Knerr, S.; Personnaz, L.; Dreyfus, G. Single-layer learning revisited: A stepwise procedure for building and training a neural network. In Neurocomputing; Springer: Berlin/Heidelberg, Germany, 1990; pp. 41–50.

- Galar, M.; Fernández, A.; Barrenechea, E.; Bustince, H.; Herrera, F. An overview of ensemble methods for binary classifiers in multi-class problems: Experimental study on one-vs-one and one-vs-all schemes. Pattern Recognit. 2011, 44, 1761–1776.

- John, G.H.; Langley, P. Estimating continuous distributions in Bayesian classifiers. In Proceedings of the Eleventh conference on Uncertainty in artificial intelligence, Montreal, QC, Canada, 18–20 August 1995; Morgan Kaufmann Publishers Inc.: Burlington, MA, USA, 1995; pp. 338–345.

- Friedman, J.; Hastie, T.; Tibshirani, R. The Elements of Statistical Learning; Springer Series in Statistics: New York, NY, USA, 2001; Volume 10.

- Joachims, T. Text categorization with support vector machines: Learning with many relevant features. In Proceedings of the European Conference on Machine Learning, Chemnitz, Germany, 21–23 April 1998; Springer: Berlin/Heidelberg, Germany, 1998; pp. 137–142.

- Wu, T.F.; Lin, C.J.; Weng, R.C. Probability estimates for multi-class classification by pairwise coupling. J. Mach. Learn. Res. 2004, 5, 975–1005.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Li, H.; Li, N.; Liu, K. Two-Way Differential Evolution Algorithm: A Global Optimization Algorithm in Continuous Space. In Proceedings of the 2010 Second WRI Global Congress on Intelligent Systems, Wuhan, China, 16–17 December 2010; Volume 1, pp. 55–58.

- Iwai, R.; Kato, S. Optimization in multi-modal continuous space with little globally convex using differential evolution on scattered parents. In Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Seoul, Korea, 14–17 October 2012; pp. 2002–2007.

- Li, J. Resource Planning and Scheduling of Payload for Satellite with a Discrete Binary Version of Differential Evolution. In Proceedings of the 2009 IITA International Conference on Control, Automation and Systems Engineering (Case 2009), Zhangjiajie, China, 11–12 July 2009; pp. 62–65.

- Sauer, J.G.; dos Santos Coelho, L. Discrete Differential Evolution with local search to solve the Traveling Salesman Problem: Fundamentals and case studies. In Proceedings of the 2008 7th IEEE International Conference on Cybernetic Intelligent Systems, London, UK, 9–10 September 2008; pp. 1–6.

- Mezura-Montes, E.; Velázquez-Reyes, J.; Coello Coello, C.A. A Comparative Study of Differential Evolution Variants for Global Optimization. In Proceedings of the 8th Annual Conference on Genetic and Evolutionary Computation, Seattle, WA, USA, 8–12 July 2006; ACM: New York, NY, USA, 2006; pp. 485–492.

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the IJCAI, Montreal, QC, Canada, 20–25 August 1995; Volume 14, pp. 1137–1145.

- Saralegi, X.; San Vicente, I. Elhuyar at TASS 2013. In Proceedings of the XXIX Congreso de la Sociedad Española de Procesamiento de Lenguaje Natural, Workshop on Sentiment Analysis at SEPLN (TASS 2013), Madrid, Spain, 20 September 2013; pp. 143–150.

- Perez-Rosas, V.; Banea, C.; Mihalcea, R. Learning Sentiment Lexicons in Spanish. In Proceedings of the LREC, Istanbul, Turkey, 23–25 May 2012; Volume 12, p. 73.

- Vilares, D.; Doval, Y.; Alonso, M.A.; Gómez-Rodríguez, C. LyS at TASS 2014: A prototype for extracting and analysing aspects from Spanish tweets. In Proceedings of the TASS workshop at SEPLN, Girona, Spain, 16–19 September 2014.

- Deng, L.; Wiebe, J. MPQA 3.0: An entity/event-level sentiment corpus. In Proceedings of the Conference of the North American Chapter of the Association of Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015.

- Stone, P.J.; Dunphy, D.C.; Smith, M.S. The General Inquirer: A Computer Approach to Content Analysis; American Psychological Association: Washington, DC, USA, 1966.

- Molina-González, M.D.; Martínez-Cámara, E.; Martín-Valdivia, M.T.; Perea-Ortega, J.M. Semantic orientation for polarity classification in Spanish reviews. Expert Syst. Appl. 2013, 40, 7250–7257.

- Ríos, M.G.D.; Gravano, A. Spanish DAL: A Spanish Dictionary of Affect in Language. In Proceedings of the WASSA 2013, Zhangjiajie, China, 7–10 August 2013; p. 21.

- Cruz, F.L.; Troyano, J.A.; Pontes, B.; Ortega, F.J. ML-SentiCon: Un lexicón multilingüe de polaridades semánticas a nivel de lemas. Proces. Leng. Nat. 2014, 53, 113–120.

- Manandhar, S.; Yuret, D. Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop On Semantic Evaluation (Semeval 2013); Omnipress, Inc.: Madison, WI, USA, 2013; Volume 2.

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Advances in Neural Information Processing Systems; NeurIPS: Lake Tahoe, NV, USA, 2013; pp. 3111–3119.

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global Vectors for Word Representation. In Proceedings of the EMNLP, Doha, Qatar, 25–29 October 2014; Volume 14, pp. 1532–1543.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Landauer, T.K.; Foltz, P.W.; Laham, D. An introduction to latent semantic analysis. Discourse Process. 1998, 25, 259–284.

- Sparck Jones, K. A statistical interpretation of term specificity and its application in retrieval. J. Doc. 1972, 28, 11–21.

- Martínez Cámara, E.; Almeida Cruz, Y.; Díaz Galiano, M.C.; Estévez-Velarde, S.; García Cumbreras, M.Á.; García Vega, M.; Gutiérrez, Y.; Montejo Ráez, A.; Montoyo, A.; Munoz, R.; et al. Overview of TASS 2018: Opinions, Health and Emotions; Sun SITE Central Europe: Sevilla, Spain, 2018.

- Rodríguez-Fdez, I.; Canosa, A.; Mucientes, M.; Bugarín, A. STAC: A web platform for the comparison of algorithms using statistical tests. In Proceedings of the 2015 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Istanbul, Turkey, 2–5 August 2015.

- Shapiro, S.S.; Wilk, M.B. An analysis of variance test for normality (complete samples). Biometrika 1965, 52, 591–611.

- Stephens, M. Introduction to Kolmogorov (1933) on the empirical determination of a distribution. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 93–105.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).