Abstract

Forecasting market risk lies at the core of modern empirical finance. We propose a new self-exciting probability peaks-over-threshold (SEP-POT) model for forecasting the extreme loss probability and the value at risk. The model draws from the point-process approach to the POT methodology but is built under a discrete-time framework. Thus, time is treated as an integer value and the days of extreme loss could occur upon a sequence of indivisible time units. The SEP-POT model can capture the self-exciting nature of extreme event arrival, and hence, the strong clustering of large drops in financial prices. The triggering effect of recent events on the probability of extreme losses is specified using a discrete weighting function based on the at-zero-truncated Negative Binomial (NegBin) distribution. The serial correlation in the magnitudes of extreme losses is also taken into consideration using the generalized Pareto distribution enriched with the time-varying scale parameter. In this way, recent events affect the size of extreme losses more than distant events. The accuracy of SEP-POT value at risk (VaR) forecasts is backtested on seven stock indexes and three currency pairs and is compared with existing well-recognized methods. The results remain in favor of our model, showing that it constitutes a real alternative for forecasting extreme quantiles of financial returns.

1. Introduction

Forecasting extreme losses is at the forefront of quantitative management of market risk. More and more statistical methods are being released with the objective of adequately monitoring and predicting large downturns in financial markets, which is a safeguard against severe price swings and helps to manage regulatory capital requirements. We aim to contribute to this strand of research by proposing a new self-exciting probability peaks-over-threshold (SEP-POT) model designed to be adequately tailored to the dynamics of real-world extreme events in financial markets. Our model can capture the strong clustering phenomenon and the discreteness of times between the days of extreme events.

Market risk models that account for catastrophic movements in security prices are the focal point in the practice of risk management, as is clearly demonstrated by repeated downturns in financial markets. This statement could hardly be more convincing than it is today, as global equity markets have very recently reacted to the COVID-19 pandemic with a plunge in prices and extreme volatility. The coronavirus fear resulted in panic sell-offs of equities, and the U.S. S&P 500 index plummeted 9.5% on 12 March 2020, experiencing its worst loss since the famous Black Monday crash in 1987. Then, 2, 4, 6, and 7 business days later, the S&P 500 index registered additional huge price drops of 12%, 5.2%, 4.3%, and 2.9%, respectively. At the same time, the toll that the COVID-19 pandemic took on European markets was also unprecedented. For example, the German blue-chip index DAX 30 plunged 12.2% on 12 March 2020, which was followed by further losses of 5.3%, 5.6%, and 2.1%, respectively, 2, 4, and 7 business days later. The COVID-19 aftermath is a real example that highlights the strong clustering property of extreme losses.

One of the most well-recognized and widely used measures of exposure to market risk is the value at risk (VaR). VaR summarizes the quantile of the gains and losses distribution and can be intuitively understood as the worst expected loss over a given investment horizon at a given level of confidence [1]. VaR can be derived as a quantile of an unconditional distribution of financial returns, but it is much more advisable to model VaR as the conditional quantile, so that it can capture the strongly time-varying nature of volatility inherent to financial markets. The volatility clustering phenomenon provides the reason for using the generalized autoregressive conditional heteroskedasticity (GARCH) models to derive the conditional VaR measure [2]. However, over the last decade, the conventional VaR models have been subject to massive criticism, as they failed to predict huge repetitive losses that devastated financial institutions during the global crisis of 2007–2008. Therefore, special focus and emphasis is now placed on adequate modeling of extreme quantiles for the conditional distribution of financial returns rather than the distribution itself.

One of the relatively recent and intensively explored approaches to modeling extreme price movements is a dynamic version of the POT model which relies on the concept of the marked self-exciting point process. Unlike the GARCH-family models, POT-family models do not act on the entire conditional distribution of financial returns. Instead, their focus moves to the distribution tails where—in order to account for their heaviness—the probability mass is usually approximated with the generalized Pareto distribution. Early POT models described the occurrence of extreme returns as realizations of an independent and identically distributed (i.i.d.) variable, which led to VaR estimates in the form of unconditional quantiles. One of the first dynamic specifications of POT models that took into account the volatility clustering phenomenon and allowed economists to perceive VaR as a conditional quantile was a two-stage method developed in [3]. This method required estimating an appropriately specified GARCH-family model in the first stage and fitting the POT model to GARCH residuals. A new avenue for forecasting VaR was opened up when the point-process approach to POT models was released in [4]. This methodology was later extended in several publications [5,6,7,8,9,10,11,12,13,14]. The benefit of this model is that it does not require prefiltering returns using GARCH-family estimates while at the same time it can capture the clustering effects of extreme losses and maintain the merits of the extreme value theory. The point-process POT model approximates the time-varying conditional probability of an extreme loss over a given day with the help of a conditional intensity function that characterizes the arrival rate of such extreme events. 
The intensity function can either be formulated in the spirit of the self-exciting Hawkes process [4,5,10,11,12] (which is extensively used in geophysics and seismology), in the form of the observation-driven autoregressive conditional intensity (ACI) model [13], or using the autoregressive conditional duration (ACD) models [6,7,8] (the last two methodologies were very popular in the area of market microstructure and the modeling of financial ultra-high-frequency data [15,16,17]). In all cases, the timing of extreme losses depends on the timing of extreme losses observed in the past.

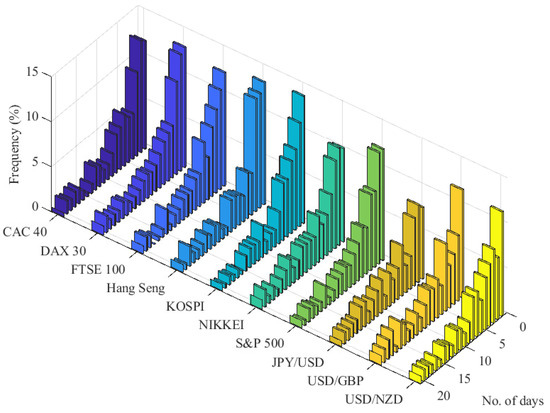

This study does not strictly rely on the above-mentioned point-process approach to POT models. The discrete-time framework of our SEP-POT model is motivated by observation of real-world financial data measured daily, which is the most common frequency used in POT models of risk. The empirical analysis put forward in this paper is based on the daily log returns of seven international stock indexes (i.e., CAC 40 (France), DAX 30 (Germany), FTSE 100 (United Kingdom), Hang Seng (Hong Kong), KOSPI (Korea), NIKKEI (Japan), and S&P 500 (U.S.)) as well as the daily log returns of three currency pairs (JPY/USD, USD/GBP, USD/NZD). The daily log returns for the equity market were calculated from the adjusted daily closing prices downloaded from the Refinitiv Datastream database. The foreign exchange (FX) rates were obtained from the Federal Reserve Economic Data repository and are measured in the following units: Japanese Yen to one U.S. Dollar (JPY/USD), U.S. Dollars to one British Pound (USD/GBP), U.S. Dollars to one New Zealand Dollar (USD/NZD). Extreme losses are defined as the daily negated log returns (log returns pre-multiplied by $-1$) whose magnitudes (in absolute terms) are larger than a sufficiently large threshold, $u$. Figure 1 shows that for $u$ corresponding to the 0.95-quantile of the unconditional distribution of negated log returns, the daily measurement frequency, and the broad set of financial instruments, the relative frequency mass of the time interval between subsequent extreme losses is concentrated on small integer values. Indeed, about 45% of all such durations are distributed on distinct discrete values of 1–5 days, and the most frequent time span between subsequent extreme losses is one day (about 12–13% of cases).

Figure 1.

Frequency histogram for the time intervals (in number of days) between subsequent extreme losses for seven equity indexes and three FX rates between January 1981 and March 2020.

The SEP-POT model relates to the published work on the point-process approach to POT models but is consistent with the observed discreteness of threshold exceedance durations. Thus, in our model, the values of the time variable are treated as indivisible time units upon which extreme losses can be observed. Because the extreme losses are clustered, the model incorporates a self-exciting component. Accordingly, the extreme loss probability is affected by the series of time spans (in number of days) that have elapsed since all past extreme loss events. We apply the weighting function in the form of the at-zero-truncated Negative Binomial (NegBin) distribution, which allows the influence of previous extreme losses to decay over time. The functional form of the extreme loss probability in our SEP-POT model is drawn from [18], where a very similar specification was proposed to depict the self-exciting nature of terrorist attacks in Indonesia and to forecast the probability of future terrorist attacks as a function of attacks observed in the past. Inspired by this work, we check the adequacy of such a discrete-time approach in the framework of POT models of risk. To this end, we perform an extensive validation of the SEP-POT model both in and out of sample and compare it with three widely recognized VaR measures: one based on the self-exciting intensity (Hawkes) POT model, one derived from the exponential GARCH model with skewed Student's t distribution (skewed-t-EGARCH), and one delivered by the Gaussian GARCH model. The results for VaR at high confidence levels (>99%) remain in favor of the SEP-POT model, and hence, the model constitutes a real alternative for measuring the risk of large losses.

Section 2 outlines the point process approach to POT models, introduces the SEP-POT model, and outlines the backtesting methods used for model validation. Section 3 presents the empirical findings and discusses the extensive backtesting results. Finally, Section 4 concludes the paper and proposes areas for future research.

2. Methods

2.1. Self-Exciting Intensity POT Model

Consider $\{X_t\}$ ($t = 1, 2, \ldots$) denoting the stochastic process that characterizes the evolution of negated daily log returns on a financial asset, that is, the daily log returns pre-multiplied by $-1$. The convention of using negated log returns allows us to treat extreme losses as observations that fall into the right tail of the distribution. More precisely, the extreme losses are defined as those positive realizations of $X_t$ that are larger than a sufficiently large threshold $u$. The magnitudes of extreme losses over the threshold $u$ (i.e., $X_t - u$) will be referred to as the threshold exceedances. The time intervals between subsequent threshold exceedances will be referred to as threshold exceedance durations.

Let $\{(t_i, y_i)\}_{i=1}^{n}$ denote an observed sample path of (1) the times when extreme losses are observed (i.e., $t_1 < t_2 < \cdots < t_n$) and (2) the corresponding magnitudes of such losses (i.e., $y_i = X_{t_i} - u$). If one pursued a continuous-time approach (i.e., assuming $t \in \mathbb{R}_{+}$), the realized sequence of extreme returns with their locations in time can be treated as an observed trajectory of the marked point process. Treating these instances of threshold exceedance as realizations of a random variable allows us to model the occurrence rate of extreme losses at different time points $t$, for example, days. An excellent introduction to the theory and statistical properties of point processes can be found in [19].

The crucial concept in the point process theory is the conditional intensity function that characterizes the time structure of event locations, and hence, the evolution of the point process. The conditional intensity function is defined as follows:

$$\lambda(t \mid \mathcal{H}_t) = \lim_{\Delta \downarrow 0} \frac{\mathrm{E}\left[N(t + \Delta) - N(t) \mid \mathcal{H}_t\right]}{\Delta}, \tag{1}$$

where $N(t)$ denotes the number of events in $(0, t]$. Note that the conditional intensity function can intuitively be treated as the instantaneous conditional probability of an event (per unit of time) immediately after time $t$. To account for the clustering of extreme losses, $\lambda(t \mid \mathcal{H}_t)$ depends on $\mathcal{H}_t$, an information set available at $t$ that consists of the complete history of event time locations and their marks (i.e., $\mathcal{H}_t = \{(t_i, y_i): t_i < t\}$). If $\lambda(t \mid \mathcal{H}_t)$ were constant over time (i.e., $\lambda(t \mid \mathcal{H}_t) = \lambda$ for all $t$), then the point process would correspond to a homogeneous Poisson point process with an arrival rate $\lambda$.

The notion of the conditional intensity facilitates the derivation of the conditional VaR measure. The VaR at a confidence level $1 - q$ (i.e., $q$ denotes a VaR coverage level) represents a $q$th quantile in the conditional distribution of financial returns. After taking advantage of working with the negated log returns and based on the notation introduced so far, the VaR (for a coverage level $q$) estimated for a day $t + 1$ can be derived from the following equation:

$$P\left(X_{t+1} > \mathrm{VaR}_{t+1}^{q} \mid \mathcal{H}_t\right) = q. \tag{2}$$

Hence, the VaR for a coverage rate $q$ is equal to $\mathrm{VaR}_{t+1}^{q}$, because the probability that a (negated) return exceeds the threshold value $\mathrm{VaR}_{t+1}^{q}$ over day $t + 1$ is equal to $q$. This probability can be further rewritten as a product of: (1) the probability of an extreme loss arrival (i.e., a threshold exceedance) over day $t + 1$ (given $\mathcal{H}_t$), and (2) the conditional probability that the size of this extreme loss is larger than $\mathrm{VaR}_{t+1}^{q}$ (given that an extreme loss was observed over day $t + 1$):

$$P\left(X_{t+1} > u \mid \mathcal{H}_t\right) \cdot P\left(X_{t+1} > \mathrm{VaR}_{t+1}^{q} \mid X_{t+1} > u, \mathcal{H}_t\right) = q. \tag{3}$$

The early, classical POT model of the extreme value theory (EVT) assumes that the financial return data are i.i.d. (The EVT offers two major classes of models for extreme events in finance: (1) the block maxima method, which uses the largest observations from samples of i.i.d. data, and (2) the POT method, which is more efficient for practical application because it uses all large realizations of variables, provided that they exceed a sufficiently high threshold. A detailed exposition of these methods can be found in [20].) Hence, threshold exceedances are also i.i.d., and they arrive in time according to a homogeneous Poisson process. Accordingly, the probability of observing a threshold exceedance over a given day $t$ is constant and can be estimated as the proportion of threshold exceedances in the sample (i.e., $n/T$, where $n$ is the number of threshold exceedances and $T$ denotes the length of the time series of financial returns). By this logic, the standard POT model neglects repeated episodes of increased volatility and therefore also ignores the clustering property of extreme losses. As noted in [20], the standard POT model is not directly applicable to financial return data.

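The more recent dynamic versions of the classical POT model found in several studies (i.e., [4,5,6,7,8,9,10,11,12,13,14]) are directly motivated by the behavior of the non-homogeneous Poisson point process, where the intensity rate of threshold exceedances, $\lambda(t \mid \mathcal{H}_t)$, can vary over time due to temporal bursts in volatility. According to such a point process approach to POT models, the first factor on the left-hand side of Equation (3) (i.e., the conditional probability of a threshold exceedance over day $t + 1$) can be derived based on the (time-varying) conditional intensity function as follows:

$$P\left(X_{t+1} > u \mid \mathcal{H}_t\right) = 1 - P\left(N(t+1) - N(t) = 0 \mid \mathcal{H}_t\right) = 1 - \exp\left(-\int_{t}^{t+1} \lambda(v \mid \mathcal{H}_v)\, dv\right), \tag{4}$$

because the probability of no events in $(t, t+1]$ (i.e., $P(N(t+1) - N(t) = 0 \mid \mathcal{H}_t)$) can be given as $\exp\left(-\int_{t}^{t+1} \lambda(v \mid \mathcal{H}_v)\, dv\right)$ [21].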

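The POT models use the Pickands–Balkema–de Haan theorem of EVT, which allows us to approximate the second factor on the left-hand side of Equation (3) (i.e., the conditional probability that $X_{t+1}$ exceeds $\mathrm{VaR}_{t+1}^{q}$, given that it surpassed the threshold $u$) using the generalized Pareto distribution, as follows:

$$P\left(X_{t+1} > \mathrm{VaR}_{t+1}^{q} \mid X_{t+1} > u, \mathcal{H}_t\right) = 1 - G\left(\mathrm{VaR}_{t+1}^{q} - u\right) = \left(1 + \xi\, \frac{\mathrm{VaR}_{t+1}^{q} - u}{\sigma_{t+1}}\right)^{-1/\xi}, \tag{5}$$

where $G(\cdot)$ denotes the cumulative distribution function of the generalized Pareto (GP) distribution with the scale parameter $\sigma_{t+1} > 0$ and the shape parameter $\xi$. If $\xi \to 0$, $G(\cdot)$ tends to the cumulative distribution function of an exponential distribution. Substituting Equations (4) and (5) into Equation (3) and solving for the VaR gives:

$$\mathrm{VaR}_{t+1}^{q} = u + \frac{\sigma_{t+1}}{\xi} \left[\left(\frac{1 - \exp\left(-\int_{t}^{t+1} \lambda(v \mid \mathcal{H}_v)\, dv\right)}{q}\right)^{\xi} - 1\right]. \tag{6}$$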
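The tail decomposition above can be checked numerically. The following is a minimal sketch, assuming the standard GP survival function and a given exceedance probability; the function names `gpd_survival` and `pot_var` and all parameter values are hypothetical and are not taken from the paper.

```python
import math

def gpd_survival(y, sigma, xi):
    """Survival function 1 - G(y) of the generalized Pareto distribution."""
    if y < 0:
        return 1.0
    if abs(xi) < 1e-12:               # exponential limit as xi -> 0
        return math.exp(-y / sigma)
    base = 1.0 + xi * y / sigma
    if base <= 0.0:                   # beyond the upper endpoint (only when xi < 0)
        return 0.0
    return base ** (-1.0 / xi)

def pot_var(u, p_exceed, sigma, xi, q):
    """VaR solving p_exceed * (1 - G(VaR - u)) = q; requires q <= p_exceed."""
    if not 0.0 < q <= p_exceed <= 1.0:
        raise ValueError("need 0 < q <= p_exceed <= 1")
    ratio = p_exceed / q
    if abs(xi) < 1e-12:
        return u + sigma * math.log(ratio)
    return u + sigma / xi * (ratio ** xi - 1.0)

# Illustrative (made-up) inputs: threshold, exceedance probability, GP scale and shape
u, p, sigma, xi, q = 0.02, 0.05, 0.008, 0.2, 0.01
var_q = pot_var(u, p, sigma, xi, q)
print(round(var_q, 4))
```

Plugging the result back into the product of the two probabilities recovers the coverage level $q$, which is a convenient sanity check when implementing any POT-based VaR.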

The dynamic versions of the POT models benefit from both (1) the point process theory, which allows for the time-varying intensity rate of threshold exceedances, and hence, the clustering of extreme losses, and (2) the EVT, which allows us to account for the tail risk of financial instruments. Thus, the forecasts of daily VaR can be time-varying and react to new information. (The early, classical POT models of EVT assume a constant intensity, $\lambda(t \mid \mathcal{H}_t) = \lambda$, and a constant scale parameter of the GP distribution for threshold exceedances, $\sigma_{t+1} = \sigma$. Accordingly, the VaR level is constant over time: $\mathrm{VaR}_{t+1}^{q} = \mathrm{VaR}^{q}$.) In empirical applications, appropriate dynamic specifications of selected components in Equation (6) are needed. One possible way of specifying the time-varying conditional intensity function is provided by the Hawkes process [19]. The Hawkes process belongs to the class of so-called self-exciting processes where past events can accelerate the occurrence of future events. Accordingly, the conditional intensity function is defined as follows:

$$\lambda(t \mid \mathcal{H}_t) = \mu + \sum_{i:\, t_i < t} w(t - t_i), \tag{7}$$

where $\mu$ denotes a constant and $w(\cdot)$ refers to a non-negative weighting function that captures the impact of past events, $t_i < t$ (i.e., extreme-loss days). Accordingly, each threshold exceedance at $t_i$ contributes an amount $w(t - t_i)$ to the risk of an extreme loss at $t$. It is necessary to provide a convenient parametric functional form for $w(\cdot)$. The well-recognized weighting function that we apply in the empirical portion of this paper is an exponential kernel function, given as follows:

$$w(t - t_i) = \alpha \exp\left(-\beta (t - t_i)\right), \tag{8}$$

where $\alpha$, $\beta$ are the parameters to be estimated. Accordingly, $\lambda(t \mid \mathcal{H}_t)$ is based on the summation of exponential kernel functions evaluated at the time intervals that start at the times of previous extreme losses (i.e., $t_i < t$) and last up to time $t$. The parameters $\alpha$ and $\beta$ capture, correspondingly, the scale (i.e., the amplitude) and the rate of decay characterizing the influence of past events on the current intensity. The point process features the self-excitation property because the conditional intensity function rises instantaneously after an extreme loss is registered, which, in the aftermath, triggers the arrival of subsequent events. This mechanism results in the clustering effect, characterizing the location of extreme losses in time. The time-varying nature of the conditional intensity function results in the fluctuations of VaR (see Equation (6)). On top of the clustering feature, the self-exciting intensity POT (i.e., SEI-POT) model for VaR (cf. Equation (6)) can be further extended to account for the serial correlation in the magnitudes of the threshold exceedances. This can be achieved by providing an appropriate dynamic model for the scale parameter of the GP distribution in Equation (5). In the empirical portion of this paper we use the following specification:

where , , denote the parameters to be estimated. Accordingly, the threshold exceedance magnitude is affected by the sizes and times of past threshold exceedances.

Unlike standard POT models, where the times of threshold exceedances are assumed to follow a homogeneous Poisson process and the magnitudes of threshold exceedances are assumed to be i.i.d. GP distributed, the dynamic point-process-based variants of the POT models allow for a time-varying intensity rate of threshold exceedances and a time-varying expected magnitude of these threshold exceedances. Accordingly, the VaR is also time-varying. The interplay of fluctuations in the conditional intensity and in the scale parameter of the GP distribution for the threshold exceedances elevates VaR in turbulent periods of financial turmoil and decreases its level during calm periods. Hence, the VaR adjusts to observed market conditions.
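The self-exciting mechanism of this subsection can be illustrated with a minimal sketch. It assumes a Hawkes-type intensity with a constant baseline and an exponential kernel, and it converts the intensity into a one-day exceedance probability by integrating over the next day under the assumption that no further event occurs inside that day. The names `mu`, `alpha`, `beta` and all numerical values are illustrative assumptions, not estimates from the paper.

```python
import math

def hawkes_intensity(t, event_times, mu, alpha, beta):
    """Baseline plus exponentially decaying contributions of events strictly before t."""
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in event_times if ti < t)

def exceedance_probability(t, event_times, mu, alpha, beta):
    """1 - exp(-integral of the intensity over (t, t+1]), given events up to and including t."""
    excitation = sum(alpha * math.exp(-beta * (t - ti)) for ti in event_times if ti <= t)
    # Closed-form integral of the exponential kernel over one day
    integral = mu + excitation * (1.0 - math.exp(-beta)) / beta
    return 1.0 - math.exp(-integral)

events = [10, 12, 13]                  # hypothetical extreme-loss days
mu, alpha, beta = 0.03, 0.3, 0.5       # made-up parameter values

p_calm = exceedance_probability(5, events, mu, alpha, beta)      # before any event
p_cluster = exceedance_probability(13, events, mu, alpha, beta)  # right after a cluster
p_later = exceedance_probability(30, events, mu, alpha, beta)    # excitation has decayed
print(round(p_calm, 4), round(p_cluster, 4), round(p_later, 4))
```

The probability jumps after a cluster of events and then decays back toward the baseline level, which is the behavior that makes the resulting VaR forecasts react to market turbulence.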

2.2. Self-Exciting Probability POT Model

In this section we introduce the self-exciting probability POT model that obeys the natural distinction between the processes defined in discrete and continuous time. The structure of our model still draws from Equation (3), but we treat time as if it were composed of indivisible distinct units (days). Therefore, we refrain from approximating the conditional extreme loss probability using the conditional intensity function that characterizes the evolution of a point process in continuous time. Because we formulate our model in discrete time, we directly describe the conditional probability of an extreme loss over day t, as follows:

where denotes a link function. One possible choice of specifying (cf., [18]) is:

where if .

Based on [18], the conditional probability of an extreme loss arrival over day t can be described in a dynamic fashion that exposes the self-exciting nature of the SEP-POT model as follows:

The specification comprises a constant that determines a baseline probability, a parameter that determines the scale (amplitude) of the impact that the time location of the ith past extreme-loss event exerts on the extreme-loss probability, and a weighting function (i.e., a discrete kernel function) that makes distant extreme-loss events less impactful than more recent events. We specify the weighting function as the probability function of the at-zero-truncated negative binomial (NegBin) distribution.

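A probability function of a NegBin distribution is:

$$f(k; \omega, \kappa) = \frac{\Gamma(\kappa + k)}{\Gamma(\kappa)\, \Gamma(k + 1)} \left(\frac{\kappa}{\kappa + \omega}\right)^{\kappa} \left(\frac{\omega}{\omega + \kappa}\right)^{k}, \qquad k = 0, 1, 2, \ldots,$$

where $\omega$ and $\kappa$ are the parameters of the distribution and $\omega > 0$ and $\kappa > 0$. For $\kappa \to \infty$, the NegBin distribution converges to a Poisson distribution. For $\kappa = 1$, the geometric distribution is obtained.

The at-zero-truncated NegBin distribution was formerly used in high-frequency finance for modeling the non-zero price changes of financial instruments [22,23]. The probability function of the at-zero-truncated NegBin distribution is given as (for $k = 1, 2, \ldots$), where $f(0; \omega, \kappa) = [\kappa / (\kappa + \omega)]^{\kappa}$:

$$h(k; \omega, \kappa) = \frac{f(k; \omega, \kappa)}{1 - f(0; \omega, \kappa)}.$$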

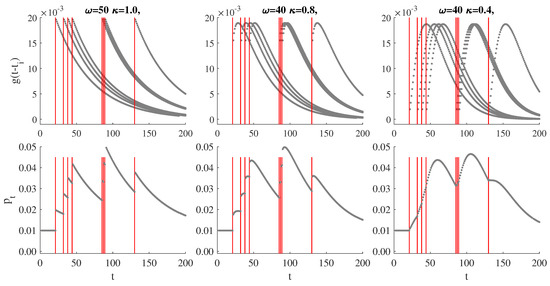

Figure 2 illustrates the self-exciting property of the SEP-POT model. The plots shown in the upper row depict the at-zero-truncated NegBin kernel functions evaluated at the time distances to previously observed events (i.e., ). The impact of past events on diminishes with time and the shape of decay is determined by parameters and . The scale of this impact is determined by . The resulting conditional probability function of an extreme loss arrival is therefore based on the summation of the weighted kernel functions based on all the backward recurrence times. The choice of an at-zero-truncated NegBin distribution guarantees flexibility in feasible shapes of the weighting function to properly reflect the dynamic properties of the data.

Figure 2.

Illustration of the self-exciting probability model for eight events. Upper rows: Feasible shapes of the weighting functions , , at , (red lines indicate times of events). Lower row: The resulting probability .
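The mechanism illustrated in Figure 2 can be sketched in a few lines. The code below is only an illustration under explicit assumptions: the NegBin distribution is written in a mean-dispersion parameterization (`omega`, `kappa`), and the extreme-loss probability is assumed to take the form $1 - \exp(-(\mu + \alpha \sum_i h(t - t_i)))$ with a single scale parameter `alpha`. These symbols, the functional form, and the parameter values are assumptions made for the example and may differ from the exact specification used in the paper.

```python
import math

def negbin_pmf(k, omega, kappa):
    """NegBin probability function in an assumed mean-dispersion parameterization."""
    log_p = (math.lgamma(kappa + k) - math.lgamma(kappa) - math.lgamma(k + 1)
             + kappa * math.log(kappa / (kappa + omega))
             + k * math.log(omega / (omega + kappa)))
    return math.exp(log_p)

def truncated_negbin_pmf(k, omega, kappa):
    """At-zero-truncated NegBin: mass on k = 1, 2, ... rescaled to sum to one."""
    if k < 1:
        return 0.0
    return negbin_pmf(k, omega, kappa) / (1.0 - negbin_pmf(0, omega, kappa))

def extreme_loss_probability(t, event_days, mu, alpha, omega, kappa):
    """Assumed form: p_t = 1 - exp(-(mu + alpha * sum of kernel weights at t - t_i))."""
    excitation = sum(truncated_negbin_pmf(t - ti, omega, kappa)
                     for ti in event_days if ti < t)
    return 1.0 - math.exp(-(mu + alpha * excitation))

event_days = [10, 12, 13]                          # hypothetical extreme-loss days
mu, alpha, omega, kappa = 0.03, 0.8, 3.0, 2.0      # made-up parameter values

p_before = extreme_loss_probability(5, event_days, mu, alpha, omega, kappa)
p_after = extreme_loss_probability(14, event_days, mu, alpha, omega, kappa)
print(round(p_before, 4), round(p_after, 4))
```

Because the kernel is a probability function on the positive integers, each past event distributes a total weight of `alpha` over the days that follow it, and the shape parameters control how quickly that weight is spent.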

As in existing dynamic extensions of the POT methodology, the threshold exceedance magnitudes in the SEP-POT model are described using the generalized Pareto distribution with the time-varying scale parameter. We specify this parameter as follows:

The specification comprises a constant, a scale parameter, and a nonnegative discrete weighting (kernel) function. For this purpose, we use the probability mass function of a geometric distribution, because it constitutes a natural discrete counterpart to the exponential kernel used in the continuous-time framework of the SEI-POT model (see Equation (9)). Hence, the magnitude of the threshold exceedance expected at t is affected by the times and sizes of all previously observed threshold exceedances. The monotonically decaying weighting function allows distant events to affect the magnitudes of losses less than recent events do.

The SEP-POT model assumes that the density function , depicting the right tail of the distribution of the negated financial returns, has the following form:

which means that a negated return either surpasses the threshold u, that is, belongs to the right tail of the distribution, and hence is drawn from the generalized Pareto distribution (which happens with the conditional extreme-loss probability), or does not belong to the distribution tail (which happens with the complementary probability).

This reasoning allows us to formulate the log-likelihood function of the SEP-POT model as the sum of two log-likelihoods as follows:

where:

and

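The VaR for a coverage rate $q$ forecasted for day $t$ (based on the information up to and including day $t - 1$) can be derived from the SEP-POT model as follows:

$$p_t \left(1 + \xi\, \frac{\mathrm{VaR}_{t}^{q} - u}{\sigma_t}\right)^{-1/\xi} = q,$$

where $p_t$ denotes the conditional probability of an extreme loss over day $t$, and $\sigma_t$ and $\xi$ denote the time-varying scale parameter and the shape parameter of the GP distribution, respectively. Hence:

$$\mathrm{VaR}_{t}^{q} = u + \frac{\sigma_t}{\xi} \left[\left(\frac{p_t}{q}\right)^{\xi} - 1\right].$$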

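The SEP-POT model provides the grounds not only to derive the VaR, but also the expected shortfall (ES). Unlike the VaR, the ES is a coherent risk measure. It represents the conditional expectation of loss given that the loss lies beyond the VaR [24]. Accordingly, the ES corresponding to a coverage rate $q$, forecasted for a day $t$ based on the information set up to and including day $t - 1$, is defined as follows:

$$\mathrm{ES}_{t}^{q} = \mathrm{E}\left[X_t \mid X_t > \mathrm{VaR}_{t}^{q}, \mathcal{H}_{t-1}\right]. \tag{22}$$

Equation (22) can also be rewritten as follows:

$$\mathrm{ES}_{t}^{q} = \mathrm{VaR}_{t}^{q} + \mathrm{E}\left[X_t - \mathrm{VaR}_{t}^{q} \mid X_t > \mathrm{VaR}_{t}^{q}, \mathcal{H}_{t-1}\right]. \tag{23}$$

The ES can be derived based on the standard definition of the mean excess function for the GP distribution. For $\xi < 1$, the mean excess function of a GP-distributed variable $Y$ with scale $\sigma$ and shape $\xi$ (where $v \ge 0$, $\sigma + \xi v > 0$) is defined as:

$$e(v) = \mathrm{E}\left[Y - v \mid Y > v\right] = \frac{\sigma + \xi v}{1 - \xi}.$$

Hence, the expected size of losses exceeding the threshold is a linear function of $v$. The ES forecasts from the SEP-POT model can be derived by applying the definition of $e(v)$ to Equation (23) and by specifying the scale parameter of the GP distribution, $\sigma_t$, according to Equation (15). This leads to the formula for ES as follows:

$$\mathrm{ES}_{t}^{q} = \mathrm{VaR}_{t}^{q} + \frac{\sigma_t + \xi \left(\mathrm{VaR}_{t}^{q} - u\right)}{1 - \xi}.$$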
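As a numerical illustration, the sketch below computes a VaR and the corresponding ES from given values of the extreme-loss probability and the GP parameters, assuming the standard GP mean excess function with a nonzero shape parameter below one. The function names and the input values are hypothetical; in an application, `p_t` and `sigma_t` would come from the fitted model.

```python
def gpd_mean_excess(v, sigma, xi):
    """Mean excess E[Y - v | Y > v] of a GP variable; finite only for xi < 1."""
    if xi >= 1.0:
        raise ValueError("mean excess is finite only for xi < 1")
    return (sigma + xi * v) / (1.0 - xi)

def pot_var(u, p_t, sigma_t, xi, q):
    """VaR solving p_t * (1 + xi * (VaR - u) / sigma_t) ** (-1 / xi) = q (xi != 0)."""
    return u + sigma_t / xi * ((p_t / q) ** xi - 1.0)

def pot_es(u, p_t, sigma_t, xi, q):
    """ES as VaR plus the GP mean excess evaluated at VaR - u."""
    var_q = pot_var(u, p_t, sigma_t, xi, q)
    return var_q + gpd_mean_excess(var_q - u, sigma_t, xi)

# Made-up inputs: threshold, extreme-loss probability, GP scale and shape, coverage
u, p_t, sigma_t, xi, q = 0.02, 0.08, 0.01, 0.25, 0.01
var_q = pot_var(u, p_t, sigma_t, xi, q)
es_q = pot_es(u, p_t, sigma_t, xi, q)
print(round(var_q, 4), round(es_q, 4))
```

Since the mean excess is linear in its argument, the ES is an affine function of the VaR, so the two risk measures move together as the extreme-loss probability and the scale parameter change.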

2.3. Backtesting Methods

We use four backtesting procedures to assess the accuracy of the VaR delivered by the SEP-POT model. Each of these methods refers to the notion of a VaR exceedance or a VaR violation, being a binary indicator function, $I_t$, defined as follows:

$$I_t = \begin{cases} 1 & \text{if } X_t > \mathrm{VaR}_{t}^{q}, \\ 0 & \text{otherwise}, \end{cases}$$

where $X_t$ denotes the negated log return on day $t$ and $\mathrm{VaR}_{t}^{q}$ denotes the VaR forecasted for that day. The backtesting is based on the comparison of forecasted daily VaR numbers with observed daily returns over a given period. A VaR exceedance occurs when an actual loss is larger than the VaR predicted for that day. If the SEP-POT model were the true data generating process, then $P(I_t = 1 \mid \mathcal{H}_{t-1}) = q$, which implies that the VaR violations would be i.i.d.

2.3.1. Unconditional Coverage Test

The first test that we consider is a widely used unconditional coverage (UC) test [25] where the null hypothesis states that the proportion of VaR exceedances according to a risk model (i.e., $\pi$) matches the assumed coverage level for VaR (i.e., $q$): $H_0\!: \pi = q$. The UC test is formulated as a likelihood ratio test which compares two Bernoulli likelihood functions. Asymptotically, as the number of observations $T$ goes to infinity, the test statistic is distributed as $\chi^2$ with one degree of freedom:

$$LR_{UC} = -2 \ln\left[\frac{(1 - q)^{T - T_1}\, q^{T_1}}{(1 - \hat{\pi})^{T - T_1}\, \hat{\pi}^{T_1}}\right] \sim \chi^2(1),$$

where $T_1$ denotes the number of VaR violations in the sample of $T$ returns and $\hat{\pi} = T_1 / T$.
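The UC test is straightforward to compute from a 0/1 series of VaR violations. The following is a minimal sketch of the standard likelihood ratio form; the function name `kupiec_uc_test` and the example series are illustrative. The p-value uses the fact that a $\chi^2(1)$ survival function can be written with the complementary error function, so no external library is needed.

```python
import math

def kupiec_uc_test(violations, q):
    """Likelihood ratio test of unconditional coverage; returns (LR, p-value)."""
    T = len(violations)
    T1 = sum(violations)
    pi_hat = T1 / T

    def bernoulli_loglik(prob):
        # Skip terms with zero counts to avoid evaluating log(0)
        ll = 0.0
        if T1 > 0:
            ll += T1 * math.log(prob)
        if T - T1 > 0:
            ll += (T - T1) * math.log(1.0 - prob)
        return ll

    lr = -2.0 * (bernoulli_loglik(q) - bernoulli_loglik(pi_hat))
    lr = max(lr, 0.0)
    p_value = math.erfc(math.sqrt(lr / 2.0))   # chi-square(1) survival function
    return lr, p_value

# Hypothetical backtests of a 1% VaR over 1000 days
exact = [1] * 10 + [0] * 990        # 1.0% violations
too_many = [1] * 30 + [0] * 970     # 3.0% violations
lr_exact, p_exact = kupiec_uc_test(exact, 0.01)
lr_many, p_many = kupiec_uc_test(too_many, 0.01)
print(round(lr_exact, 3), round(p_exact, 3), round(lr_many, 2), p_many < 0.01)
```

Note that the test only looks at the number of violations and not at their ordering, which is why the conditional tests described next are needed to detect clustering.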

2.3.2. Conditional Coverage Test

The second test is the conditional coverage (CC) test, which not only verifies the correct coverage but also sheds light on the independence of VaR violations over time [26]. This test is designed to reject a risk model that produces either an incorrect proportion of exceedances or clusters of exceedances. To this end, the process of VaR violations is described by a first-order Markov model and the CC test is based on the estimated transition matrix, as follows:

$$\hat{\Pi} = \begin{bmatrix} \hat{\pi}_{00} & \hat{\pi}_{01} \\ \hat{\pi}_{10} & \hat{\pi}_{11} \end{bmatrix} = \begin{bmatrix} \dfrac{T_{00}}{T_{00} + T_{01}} & \dfrac{T_{01}}{T_{00} + T_{01}} \\[2ex] \dfrac{T_{10}}{T_{10} + T_{11}} & \dfrac{T_{11}}{T_{10} + T_{11}} \end{bmatrix}, \tag{27}$$

where $\pi_{00}$ and $\pi_{01}$ denote, correspondingly, the conditional probability of no VaR violation and a VaR violation (today), given that yesterday there was no VaR violation. Analogously, $\pi_{11}$ and $\pi_{10}$ denote, correspondingly, the conditional probability of a VaR violation and no VaR violation (today) directly after a VaR violation yesterday. As given in Equation (27), the elements of the transition matrix are estimated with the actual proportions of VaR violations, where $T_{ij}$, for $i, j \in \{0, 1\}$, is the number of (negated) returns with the indicator function equal to $j$ directly following an indicator's value $i$. The CC null hypothesis states that the conditional probability of a VaR violation directly after another VaR violation is the same as the conditional probability of a VaR violation after no violation and, at the same time, it is equal to the assumed coverage level for VaR (i.e., $\pi_{01} = \pi_{11} = q$). Asymptotically, as the number of observations $T$ goes to infinity, the test statistic is distributed as a $\chi^2$ with two degrees of freedom:

$$LR_{CC} = -2 \ln\left[\frac{(1 - q)^{T_{00} + T_{10}}\, q^{T_{01} + T_{11}}}{\hat{\pi}_{00}^{T_{00}}\, \hat{\pi}_{01}^{T_{01}}\, \hat{\pi}_{10}^{T_{10}}\, \hat{\pi}_{11}^{T_{11}}}\right] \sim \chi^2(2).$$

However, because the CC test is established on the Markov property of the violation process, it is sensitive to the dependence of order one only. Therefore, the CC test cannot be used to verify whether the current VaR exceedance depends on the sequence of states that preceded the last one.
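A minimal sketch of the CC test, assuming the standard first-order Markov likelihood ratio form, is given below. The function name `christoffersen_cc_test` and the two example series are illustrative. The $\chi^2(2)$ survival function has the closed form $\exp(-x/2)$, which is used for the p-value.

```python
import math

def christoffersen_cc_test(violations, q):
    """Likelihood ratio test of conditional coverage; returns (LR, p-value)."""
    # Count transitions i -> j between consecutive days
    counts = {(i, j): 0 for i in (0, 1) for j in (0, 1)}
    for prev, curr in zip(violations[:-1], violations[1:]):
        counts[(prev, curr)] += 1

    n0 = counts[(0, 0)] + counts[(1, 0)]      # days without a violation
    n1 = counts[(0, 1)] + counts[(1, 1)]      # days with a violation
    ll_null = 0.0
    if n0 > 0:
        ll_null += n0 * math.log(1.0 - q)
    if n1 > 0:
        ll_null += n1 * math.log(q)

    # Unrestricted Markov likelihood with estimated transition probabilities
    ll_alt = 0.0
    for i in (0, 1):
        row_total = counts[(i, 0)] + counts[(i, 1)]
        for j in (0, 1):
            if counts[(i, j)] > 0:
                ll_alt += counts[(i, j)] * math.log(counts[(i, j)] / row_total)

    lr = max(-2.0 * (ll_null - ll_alt), 0.0)
    p_value = math.exp(-lr / 2.0)             # chi-square(2) survival function
    return lr, p_value

# Both hypothetical series contain 10 violations in 1000 days (1% coverage)
spread = [1 if t % 100 == 50 else 0 for t in range(1000)]
clustered = [0] * 495 + [1] * 10 + [0] * 495
lr_spread, p_spread = christoffersen_cc_test(spread, 0.01)
lr_clustered, p_clustered = christoffersen_cc_test(clustered, 0.01)
print(round(lr_spread, 2), round(p_spread, 2), round(lr_clustered, 1), p_clustered < 0.01)
```

The two series have the same violation rate, so an unconditional test cannot tell them apart, whereas the CC statistic is large only for the clustered one.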

2.3.3. Dynamic Quantile Conditional Coverage Test

The next two backtesting methods shed more light on the higher-order autocorrelation in the process of VaR violations. They also allow us to conclude whether the violations are affected by some previously observed explanatory variables. The first test is a dynamic quantile (DQ) test [27] that is based on a hit function, as follows:

$$Hit_t = I_t - q.$$

The correctly specified VaR model should form the sequence $\{Hit_t\}$ with a mean value insignificantly different from 0, because $Hit_t$ equals $1 - q$ each time $X_t$ is larger than the daily VaR and $-q$ otherwise. Moreover, there should be no correlation between the current and the lagged values of the $Hit_t$ sequence or between the current values of the $Hit_t$ sequence and the current VaR. If the risk model corresponds to the true data generating process, the conditional expectation of $Hit_t$ should be 0 given any information known at $t - 1$. The DQ test that we use in the empirical section of our paper can be derived as the Wald statistic from an auxiliary regression, as follows:

$$Hit_t = \beta_0 + \sum_{k=1}^{4} \beta_k\, Hit_{t-k} + \beta_5\, \mathrm{VaR}_{t}^{q} + \varepsilon_t. \tag{30}$$

The null hypothesis states that the current value of the hit function (i.e., $Hit_t$) is not correlated with its four lags and the forecasted VaR (i.e., $\mathrm{VaR}_{t}^{q}$, which is based on information known at $t - 1$). Thus, $H_0\!: \beta_0 = \beta_1 = \cdots = \beta_5 = 0$. Hence, the null hypothesis states that the coverage probability produced by a risk model is correct (i.e., $\beta_0 = 0$) and none of the five explanatory variables affects $Hit_t$. The DQ test statistic is asymptotically $\chi^2$ distributed with six degrees of freedom:

$$DQ = \frac{\mathbf{Hit}'\, \mathbf{X} \left(\mathbf{X}'\mathbf{X}\right)^{-1} \mathbf{X}'\, \mathbf{Hit}}{q (1 - q)} \sim \chi^2(6),$$

where $\mathbf{Hit}$ denotes a vector with observations of the variable $Hit_t$ and $\mathbf{X}$ denotes the standard matrix containing a column of ones and observations on the five explanatory variables, according to the regression given in Equation (30).
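The DQ statistic can be sketched without external libraries by solving the normal equations of the auxiliary regression directly. The code below is an illustrative implementation under the assumption of four lags of the hit variable plus the current VaR forecast as regressors (six coefficients in total); the helper `solve_linear_system`, the function `dq_test`, and the example data are hypothetical. The $\chi^2(6)$ survival function is evaluated with its closed form for even degrees of freedom.

```python
import math

LAGS = 4  # number of lagged hits in the auxiliary regression

def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("regressor matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def dq_test(violations, var_forecasts, q):
    """Dynamic quantile statistic Hit'X (X'X)^-1 X'Hit / (q (1 - q)); returns (DQ, p-value)."""
    hit = [v - q for v in violations]
    rows, y = [], []
    for t in range(LAGS, len(hit)):
        rows.append([1.0] + [hit[t - k] for k in range(1, LAGS + 1)] + [var_forecasts[t]])
        y.append(hit[t])
    k = LAGS + 2
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    dq = max(sum(b * v for b, v in zip(beta, xty)) / (q * (1.0 - q)), 0.0)
    half = dq / 2.0
    p_value = math.exp(-half) * (1.0 + half + half * half / 2.0)  # chi-square(6) survival
    return dq, p_value

# Hypothetical 5% VaR backtest: 25 violations in 500 days, all in one cluster
violations = [1 if 100 <= t < 125 else 0 for t in range(500)]
var_forecasts = [0.02 + 0.005 * math.sin(0.1 * t) for t in range(500)]
dq_stat, dq_p = dq_test(violations, var_forecasts, 0.05)
print(round(dq_stat, 1), dq_p < 0.05)
```

In this example the violation rate matches the coverage level exactly, yet the statistic is large because the lagged hits strongly predict the current hit.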

2.3.4. Dynamic Logit Conditional Coverage Test

The dynamic logit test of conditional coverage might be treated as an extension of the DQ conditional coverage test [28]. This method considers the dichotomous nature of VaR violations. Accordingly, instead of the linear regression given by Equation (30), this test is established based on the dynamic logit model for the violation indicator $I_t$: $P(I_t = 1 \mid \mathcal{H}_{t-1}) = F(\pi_t)$, where $F(\cdot)$ denotes the cumulative distribution function of a logistic distribution and the index $\pi_t$ is specified as follows:

The autoregressive structure of Equation (32) allows us to better capture the dependence of a VaR violation probability upon possible explanatory factors. The null hypothesis states that the coverage probability delivered by a risk model corresponds to the assumed coverage rate for VaR (i.e., the intercept equals $F^{-1}(q) = \ln[q / (1 - q)]$) and that none of the regressors used in Equation (32) affects the incidence of a VaR violation (i.e., all slope coefficients are equal to zero). The test statistic can be established as a likelihood ratio test statistic. Accordingly, it requires estimating the model given by Equation (32) and comparing its empirical log likelihood with the restricted log likelihood under the null. Under the null, the LR test statistic is asymptotically $\chi^2$ distributed with four degrees of freedom:

3. Results and Discussion

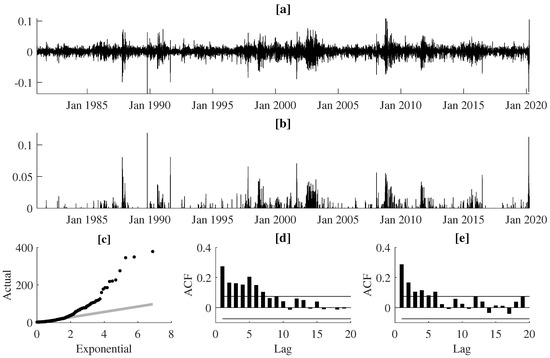

In our empirical study we use daily log returns from seven major stock indexes worldwide (CAC 40, DAX 30, FTSE 100, Hang Seng, KOSPI, NIKKEI, and S&P 500) and three currency pairs (JPY/USD, USD/GBP, USD/NZD). The CAC 40, DAX 30, and FTSE 100 are the major equity indexes in France, Germany, and the U.K., respectively, and they are often perceived as proxies or real-time indicators for a much broader European stock market. The Hang Seng, KOSPI, and NIKKEI represent the investment opportunities on the largest Asian equity markets in Hong Kong, South Korea, and Japan, respectively. The S&P 500 constitutes a widely investigated benchmark stock index reflecting the state of the overall U.S. economy. These seven indexes monitor the state of the international equity market in its three global financial centers: western Europe, eastern Asia, and the U.S. As far as the selection of the FX rates is concerned, according to [29], the JPY/USD and USD/GBP are the second and third most traded currency pairs in the world, after EUR/USD (We did not investigate the EUR/USD currency pair due to a much smaller number of observations compared with the other time series; the euro was launched on 1 January 1999). The NZD/USD, often nicknamed the Kiwi by FX traders, is a classical example of the commodity currency pair that co-fluctuates with the world prices of primary commodities (i.e., New Zealand exports oil, metals, dairy, and meat products). The New Zealand Dollar is also treated by international investors as a carry trade currency; therefore, it is very sensitive to interest rate risk. For each of these financial instruments we split the data spanning a four-decades-long period into: (1) the in-sample data (i.e., 2 January 1981–31 December 2014) dedicated to the estimation and evaluation of our models and (2) the out-of-sample data (i.e., 2 January 2015–31 March 2020), which are reserved for VaR backtesting purposes. 
For each of the time series, the initial threshold u was set as the 95%-quantile of the in-sample unconditional distribution of negated log returns. Hence, the 5% largest negated returns were defined as extreme losses, which means that, on average, an extreme loss can be observed with probability 0.05. The selection of the threshold value u was a compromise between (1) the desired number of observations in the tail of the distribution to reduce noise and to ensure stability in parameter estimates (i.e., the lower the u, the more observations used for estimation) and (2) the goodness-of-approximation of the threshold exceedance distribution with the GP distribution (i.e., the higher the u, the better the approximation with the GP distribution). The latter issue was addressed using two diagnostic tools that confirmed the adequate goodness-of-fit of the conditional GP distribution. We used the D-test proposed in Ref. [30] and the test for uniformity of probability integral transforms (PIT) based on the GP density estimates. Figure 3 illustrates extreme losses corresponding to the German DAX 30 index between January 1981 and March 2020. The examination of panels (a) and (b) allows us to conclude that the periodic volatility bursts are paralleled by strong clustering effects for both (1) the magnitudes of extreme losses and (2) the days on which they occur. Indeed, the quantile-quantile (QQ) plot (panel (c)) comparing empirical quantiles of the time intervals between subsequent extreme-loss days against the quantiles of an exponential distribution indicates that the times of extreme losses do not follow a homogeneous Poisson point process. Clustering of extreme events is also demonstrated by the shape of the autocorrelation function (ACF), indicating significant positive autocorrelations in both the time intervals between successive threshold exceedances and the observed magnitudes of such exceedances.
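The construction of the POT sample described above can be sketched as follows. This is a minimal illustration on simulated Gaussian returns, not on our data; the function name, the simple order-statistic rule for the empirical quantile, and the simulation settings are assumptions made for the example.

```python
import random

def extract_exceedances(log_returns, tail_prob=0.05):
    """Return the threshold u, exceedance magnitudes, and durations in days."""
    losses = [-r for r in log_returns]              # negated log returns
    ranked = sorted(losses)
    # Empirical (1 - tail_prob)-quantile via a simple order statistic (assumed rule)
    k = round((1.0 - tail_prob) * len(ranked))
    u = ranked[k - 1]
    # Days on which the loss exceeds the threshold
    days = [t for t, loss in enumerate(losses) if loss > u]
    # Magnitudes of losses over the threshold
    magnitudes = [losses[t] - u for t in days]
    # Integer-valued time intervals between successive extreme-loss days
    durations = [later - earlier for earlier, later in zip(days, days[1:])]
    return u, magnitudes, durations

random.seed(0)
simulated_returns = [random.gauss(0.0, 0.01) for _ in range(2000)]
u, magnitudes, durations = extract_exceedances(simulated_returns)
print(f"u = {u:.4f}, exceedances = {len(magnitudes)}, "
      f"shortest duration = {min(durations)} day(s)")
```

Because the simulated returns are independent, their durations should show no clustering, in contrast to the index data illustrated in Figure 3.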

Figure 3.

Panel (a) presents the daily log returns for the DAX index between January 1981 and March 2020, panel (b) shows the corresponding ground-up threshold exceedances (i.e., the magnitudes of losses over the threshold u), panel (c) illustrates the quantile-quantile plot of inter-exceedance durations (in number of days) against the exponential distribution, and panels (d,e) present the autocorrelation functions for the inter-exceedance durations and the threshold exceedances, respectively.

The descriptive statistics of the CAC 40, DAX 30, and FTSE 100 data are summarized in Table 1 (analogous results for the remaining time series can be obtained from the author upon request). We see that for the CAC 40, DAX 30, and FTSE 100, the threshold exceedances were obtained as the losses surpassing u equal to 0.021, 0.021, and 0.017, respectively. Out of 8574 (CAC 40), 8563 (DAX 30), and 7826 (FTSE 100) daily log returns in-sample, these threshold values allow us to identify, correspondingly, 429, 428, and 391 extreme losses that were used for model estimation purposes. For the FTSE 100 index, we have fewer observations (the difference corresponds to three years: 1981–1983), because the in-sample period starts on 3 January 1984, when the FTSE 100 index was established. Although the official base date for the DAX 30 index is 31 December 1987, the DAX 30 index was linked with the former DAX index, which dates back to 1959. The official base date for the CAC 40 is also 31 December 1987, but between 2 January 1981 and 30 December 1987 it could be measured as the “Insee de la Bourse de Paris.” The threshold-exceedance durations cover a very wide range of observed values. For example, for the FTSE 100 index, the range spans from one day (with the relative frequency equal to 12.8% in-sample and 11.3% out-of-sample) up to 304 days in-sample or 205 days out-of-sample. In-sample, the largest threshold exceedance, equal to 0.114, was observed on the Black Monday of 20 October 1987 and it corresponded to a 12.22% decrease of the index. Out-of-sample, the maximum threshold exceedance is equal to 0.099 (a 10.87% plunge in the index) and was observed on the Black Thursday of 12 March 2020, a single day in a chain of stock market crashes induced by the COVID-19 pandemic.

Table 1.

Descriptive statistics for the threshold exceedance durations and the threshold exceedance magnitudes for the CAC 40, DAX 30, and FTSE 100 indexes. ( denotes the Ljung-Box test statistics for the lack of autocorrelation up to k-th order; ***, **, and * denote the statistics significant at the 1%, 5%, and 10% levels).

Realized gains and losses are measured over distinct days, and hence, the time spans between extreme losses consist of discrete time units (i.e., days). The scale of this phenomenon can be seen in the considerable proportion of threshold exceedance durations equal to one, two, or three (business) days. Moreover, about 45% of such durations are less than or equal to five days and over 60% are less than or equal to ten days. Another striking observation from Table 1 is the clustering of extreme losses. Large losses tend to occur in waves, which is seen from the Ljung-Box test statistics (where ) for the lack of up to kth-order serial correlation. These test statistics are statistically significant, and hence, the null hypothesis of no autocorrelation in threshold exceedance durations must be rejected. Indeed, due to the COVID-19 outbreak, between 24 February and 31 March 2020 (i.e., over 27 business days) the CAC 40, DAX 30, and FTSE 100 suffered from as many as 10 (CAC 40 and DAX 30) or 11 (FTSE 100) extreme losses (with the shortest and the longest threshold exceedance durations equal to one and five business days only, respectively). Extreme-loss days tended to occur very close to each other, and this phenomenon is paralleled by the significant autocorrelation in the magnitudes of observed threshold exceedances. Based on the Ljung-Box test results, the null hypothesis of no autocorrelation in the threshold exceedance sizes needs to be rejected. The observed threshold exceedance durations are by their very nature discrete and feature strong positive autocorrelation. Therefore, our SEP-POT model is suitably tailored to these data.
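For readers who wish to reproduce this type of diagnostic, the Ljung-Box statistic can be computed as in the sketch below. The duration series is synthetic and only mimics a calm regime followed by a turbulent one; the function names are hypothetical, and the p-value helper covers only an even number of lags, for which the χ² survival function has a simple closed form.

```python
import math

def ljung_box(series, max_lag):
    """Ljung-Box Q statistic for no autocorrelation up to order max_lag."""
    n = len(series)
    mean = sum(series) / n
    dev = [v - mean for v in series]
    denom = sum(d * d for d in dev)
    total = 0.0
    for k in range(1, max_lag + 1):
        # Sample autocorrelation at lag k
        rho_k = sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
        total += rho_k ** 2 / (n - k)
    return n * (n + 2) * total

def chi2_sf_even(x, dof):
    """Chi-square survival function, valid for an even number of degrees of freedom."""
    if dof <= 0 or dof % 2 != 0:
        raise ValueError("dof must be a positive even integer")
    half = x / 2.0
    return math.exp(-half) * sum(half ** j / math.factorial(j) for j in range(dof // 2))

# Synthetic durations (in days): long waiting times, then a cluster of short ones
durations = [40, 55, 38, 61, 47] * 8 + [1, 2, 1, 1, 3] * 8
q_stat = ljung_box(durations, max_lag=4)
p_value = chi2_sf_even(q_stat, dof=4)
print(f"Q(4) = {q_stat:.1f}, p-value = {p_value:.3g}")
```

A large Q statistic with a p-value close to zero, as in this stylized example, corresponds to the rejection of the null of no autocorrelation reported in Table 1.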

The SEP-POT model was estimated by maximizing the log likelihood function given in Equations (17)–(19). To this end, we used the constrained maximum likelihood (CML) library of the Gauss mathematical and statistical system. The standard errors of the parameter estimates were derived from the asymptotic covariance matrix based on the inverse of the computed Hessian. Table 2 presents the estimation results for the CAC 40, DAX 30, and FTSE 100 (analogous results for the remaining time series can be obtained from the author upon request). The parameter estimates responsible for the self-excitement mechanism, both in the probability of threshold exceedances (i.e., , , ) and the magnitudes of these exceedances (i.e., , ), are highly statistically significant. The parameter estimates for the DAX 30 and CAC 40 indexes look very much alike, especially for the conditional probability of threshold exceedances, which means that these two stock markets are closely related to each other.

Table 2.

Maximum likelihood (ML) parameter estimates of the self-exciting probability peaks-over-threshold (SEP-POT) for the CAC 40 and DAX 30 indices. Standard errors given in brackets.

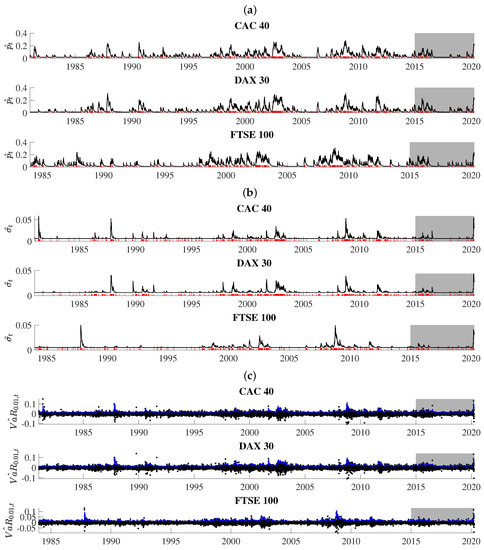

The obtained series for the conditional probability of a threshold exceedance, the time-varying scale parameter of the GP distribution, and the daily VaR are illustrated in Figure 4. The extreme loss probability features a strong self-excitation property because it reacts to extreme-loss days with abrupt increases and, if there are no further intervening events, it slowly decays. In calm and prosperous periods of stock market history, the probability path rests at very low levels. However, in turbulent periods, when extreme-loss days are densely located, it tends to reach very high levels. More specifically, persistently elevated levels can be seen during the market downturn of 2002–2003 and the global crisis of 2008–2009. For the CAC 40 and FTSE 100, the highest in-sample level, equal to 0.2834 (CAC 40) and 0.3082 (FTSE 100), was reached on Monday, 24 November 2008. Both maximum values were triggered by a self-excitation mechanism during the prevailing stock market turmoil. Directly before 24 November 2008 the market suffered three consecutive extreme-loss days: 19 (Wednesday), 20 (Thursday), and 21 (Friday) November. For the DAX 30 index, the in-sample probability peaked at its highest level (0.3126) on 11 November 1987, in the aftermath of 10 steep losses that started on the Black Monday of 19 October. The last three were observed on three business days, 6–10 November 1987. Out-of-sample, the highest levels of 0.2298 (CAC 40), 0.2416 (DAX 30), and 0.2339 (FTSE 100) corresponded to 24 March 2020 (CAC 40 and DAX 30) and 19 March 2020 (FTSE 100). COVID-19-induced anxiety before 24 March resulted in the concentration of six threshold exceedances for the CAC 40 and DAX 30 in March 2020 alone, the last of which took place on 23 March 2020, just one day before the highest level was reached.

Figure 4.

Estimation results from the SEP-POT models: the conditional probability of a threshold exceedance (panel (a)); the time-varying scale parameter of the generalized Pareto (GP) distribution for the magnitudes of threshold exceedances (panel (b)); the daily value at risk (VaR) at the 99% confidence level (in blue) that overlays the (negated) log returns (panel (c)). The days of extreme losses are marked in red. The shaded area corresponds to the out-of-sample period.

The observed fluctuations of the extreme loss probability are accompanied by the strongly time-varying behavior of the scale parameter (i.e., the estimate of the dispersion parameter in the conditional distribution of threshold exceedances). The losses exceeding u trigger upward jumps in both quantities, boosting the expected probability and the size of a threshold exceedance. For the CAC 40 index, the scale parameter peaked at its highest level (0.059) on 15 May 1981, due to enormous panic and sell-offs on the Paris Bourse just days before François Mitterrand announced hostile reforms for the stocks quoted at the Bourse. Indeed, the preceding days saw the CAC 40 index plunge by over 30%. The UK and German markets were mostly untouched by these French policy-oriented events, and their highest scale parameter was registered on 27 October 1987 (FTSE 100) and 29 October 1987 (DAX 30) at the levels of 0.051 (FTSE 100) and 0.042 (DAX 30), just after a few huge price drops were observed, including the famous Black Monday on 19 October 1987. Note that the maximum levels of the scale parameter do not have to coincide with those of the extreme loss probability. This is because the scale parameter is also affected by the magnitude of past threshold exceedances. For all data in this study, the highest out-of-sample levels were registered in the second half of March 2020.

The self-triggering nature of the extreme loss probability and the scale parameter gives rise to variations in the daily VaR, as shown in panel (c) of Figure 4. What deserves special attention is that the obtained path of VaR estimates tends to adjust to both periods of calm and turmoil in the history of equity markets: it quickly reacts to price jumps and bursts in volatility and accounts for persistent swings in stock prices.
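How the two time-varying quantities translate into VaR can be illustrated with the standard POT quantile formula, obtained by inverting the GP tail. The sketch below is written under that assumption; the symbols `p_t`, `sigma_t`, and `xi`, the function name, and the scale and shape values are illustrative and are not estimates from Table 2.

```python
import math

def pot_var(u, p_t, sigma_t, xi, q):
    """VaR at tail probability q from a POT model with a generalized Pareto tail.

    u: threshold; p_t: conditional probability of exceeding u;
    sigma_t: GP scale; xi: GP shape; q: assumed coverage rate, with q < p_t.
    """
    if not 0.0 < q < p_t:
        raise ValueError("the POT quantile is defined only for 0 < q < p_t")
    ratio = p_t / q
    if abs(xi) < 1e-12:
        # Exponential tail as the limiting case xi -> 0
        return u + sigma_t * math.log(ratio)
    return u + sigma_t / xi * (ratio ** xi - 1.0)

# Illustrative inputs: the same threshold under a calm and a turbulent regime
calm = pot_var(u=0.021, p_t=0.05, sigma_t=0.010, xi=0.1, q=0.01)
turbulent = pot_var(u=0.021, p_t=0.30, sigma_t=0.030, xi=0.1, q=0.01)
print(f"VaR (calm) = {calm:.4f}, VaR (turbulent) = {turbulent:.4f}")
```

Under these assumptions, a jump in either the exceedance probability or the scale parameter raises the VaR, which is consistent with the behavior seen in panel (c) of Figure 4.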

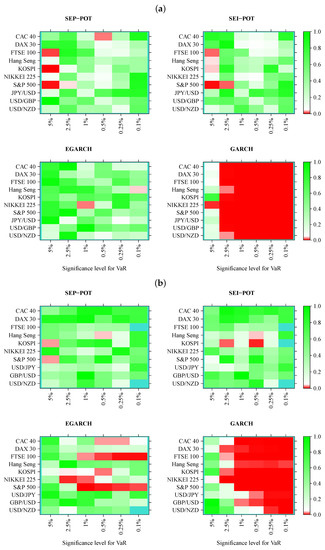

We verified whether the SEP-POT model is appropriate for forecasting the daily VaR. To ensure a big-picture perspective on its usefulness in diverse practical applications, we derived the daily VaR levels for six assumed theoretical coverage rates (i.e., for ), and compared them with the corresponding VaR numbers from three competing risk models (i.e., the self-exciting intensity (Hawkes) POT model (SEI-POT), the EGARCH(1,1) model with skewed-t distributed innovations, and the standard GARCH(1,1) model with normally distributed innovations). For the sake of a fair comparison between the four risk models under study, the accuracy of VaR forecasts was validated with four backtesting procedures. Moreover, each of these statistical routines was separately applied to examine the following: (1) the in-sample goodness-of-fit and (2) the out-of-sample accuracy. Considering ten financial instruments under study, six coverage levels for VaR (q), and four models (SEP-POT VaR, SEI-POT VaR, skewed-t-EGARCH VaR, and Gaussian GARCH VaR), we ended up with 240 VaR series in-sample and 240 series out-of-sample. Therefore, for clarity of exposition, the backtesting results are summarized in the form of heatmap graphs (cf. Figures 5–8). Heatmaps use a grid of colored rectangles across two axes, where the horizontal axis corresponds to the assumed VaR coverage level and the vertical axis corresponds to the financial instrument under study. The color of each rectangle (in shades of red and green) reflects the p-value of a backtesting procedure. White corresponds to a p-value equal to 0.05. Darker shades of red indicate increasingly smaller p-values below 0.05, and darker shades of green indicate increasingly higher p-values above 0.05. For example, panel (a) of Figure 5 presents the p-values corresponding to the UC test statistics. Each of the four heatmaps in panel (a) refers to the VaR delivered by a different model: the SEP-POT, SEI-POT, skewed-t-EGARCH, and Gaussian GARCH.

Figure 5.

Heatmap charts showing the p-values of the unconditional coverage (UC) tests in-sample (panel (a)) and out-of-sample (panel (b)). The VaR series were calculated from the self-exciting probability POT model (SEP-POT), the self-exciting intensity (Hawkes) POT model (SEI-POT), the EGARCH(1,1) model with the skewed-t distribution (EGARCH), and the standard GARCH(1,1) model with normally distributed innovations (GARCH). The rectangles of the heatmaps in shades of red correspond to p-values < 0.05. The rectangles in turquoise correspond to no VaR exceedances.

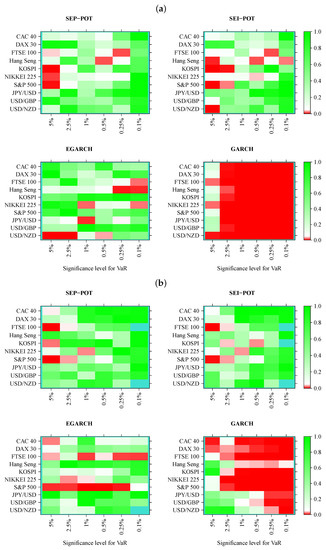

Figure 6.

Heatmap charts showing the p-values of the conditional coverage (CC) tests in-sample (panel (a)) and out-of-sample (panel (b)). The VaR series were calculated from the self-exciting probability POT model (SEP-POT), the self-exciting intensity (Hawkes) POT model (SEI-POT), the EGARCH(1,1) model with the skewed-t distribution (EGARCH), and the standard GARCH(1,1) model with normally distributed innovations (GARCH). The rectangles of the heatmaps in shades of red correspond to p-values < 0.05. The rectangles in turquoise correspond to no VaR exceedances.

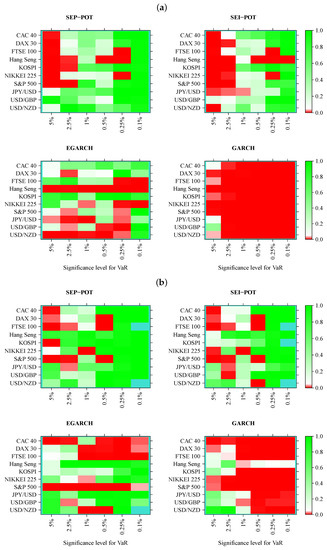

Figure 7.

Heatmap charts showing the p-values of the dynamic quantile (DQ) conditional coverage tests in-sample (panel (a)) and out-of-sample (panel (b)). The VaR series were calculated from the self-exciting probability POT model (SEP-POT), the self-exciting intensity (Hawkes) POT model (SEI-POT), the EGARCH(1,1) model with the skewed-t distribution (EGARCH), and the standard GARCH(1,1) model with normally distributed innovations (GARCH). The rectangles of the heatmaps in shades of red correspond to p-values < 0.05. The rectangles in turquoise correspond to no VaR exceedances.

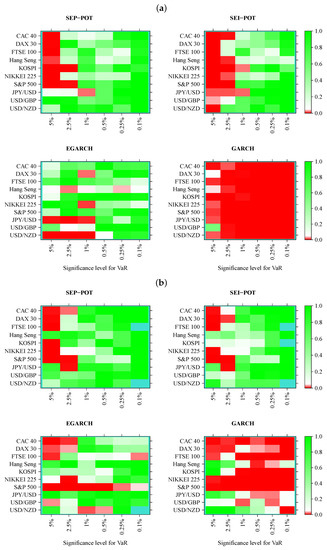

Figure 8.

Heatmap charts showing the p-values of the dynamic logit conditional coverage tests in-sample (panel (a)) and out-of-sample (panel (b)). The VaR series were calculated from the self-exciting probability POT model (SEP-POT), the self-exciting intensity (Hawkes) POT model (SEI-POT), the EGARCH(1,1) model with the skewed-t distribution (EGARCH), and the standard GARCH(1,1) model with normally distributed innovations (GARCH). The rectangles of the heatmaps in shades of red correspond to p-values < 0.05. The rectangles in turquoise correspond to no VaR exceedances.

According to the UC test results, the VaR based on the SEP-POT, SEI-POT, and skewed-t-EGARCH models produces, in-sample, a rather accurate proportion of violations. The best in-sample results were delivered by the skewed-t-EGARCH model; however, its superiority diminishes out-of-sample, where the skewed-t-EGARCH model failed in 13 out of 60 instances. Out-of-sample, the null of correct coverage was rejected only three times for the SEP-POT VaR and SEI-POT VaR models. The EGARCH model seems to produce good VaR forecasts for high coverage levels (i.e., ). For , the EGARCH VaR model falls behind the SEI-POT VaR model and the SEP-POT VaR model. As expected, the advantage of VaR models based on the POT methodology is most visible for the extreme quantiles. As far as the Gaussian GARCH VaR model is concerned, its performance is dramatically worse than that of the other risk models both in-sample and out-of-sample. The model produces incorrect VaR forecasts for small q (i.e., ), which can be explained by insufficient probability mass in the tails of the Gaussian distribution.
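For reference, an unconditional coverage test of this kind can be sketched with the standard Kupiec proportion-of-failures statistic [25], which we assume here as the textbook form of the UC test. The function name and the violation counts below are hypothetical and serve only as an illustration.

```python
import math

def kupiec_uc(n_obs, n_viol, q):
    """Kupiec unconditional coverage test: returns (LR statistic, p-value)."""
    def loglik(prob):
        # Bernoulli log likelihood with the convention 0 * log(0) = 0
        ll = 0.0
        if n_viol > 0:
            ll += n_viol * math.log(prob)
        if n_obs - n_viol > 0:
            ll += (n_obs - n_viol) * math.log(1.0 - prob)
        return ll

    pi_hat = n_viol / n_obs                      # empirical violation rate
    lr = max(-2.0 * (loglik(q) - loglik(pi_hat)), 0.0)
    # Chi-square survival function with 1 degree of freedom
    p_value = math.erfc(math.sqrt(lr / 2.0))
    return lr, p_value

# Hypothetical backtest: 1000 days at q = 0.01 with 10 and with 30 violations
lr_ok, p_ok = kupiec_uc(1000, 10, 0.01)
lr_bad, p_bad = kupiec_uc(1000, 30, 0.01)
print(f"10 violations: p-value = {p_ok:.3f}; 30 violations: p-value = {p_bad:.2g}")
```

In heatmap terms, the first hypothetical case would be colored green and the second one dark red.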

The results of the CC test, which checks both the correct coverage and the lack of first-order dependence in VaR violations, seem to support the SEP-POT VaR model (cf. Figure 6). The poorest fit corresponds to the highest q levels (i.e., ) because in such cases, the null of proper specification had to be rejected both in-sample and out-of-sample for the FTSE 100, KOSPI, NIKKEI, and S&P 500. However, the SEP-POT VaR model seems to be slightly superior to the SEI-POT VaR model. In-sample, the SEP-POT VaR model failed in only six instances out of 60. For the SEI-POT VaR model, the number of failures was 10, and for the skewed-t-EGARCH VaR model it was nine. As in the case of the UC test, the CC test results indicate that the Gaussian GARCH VaR model rendered the worst fit; the null was not rejected in only seven cases, mainly for the lowest quantiles (i.e., for ). Out-of-sample, the SEP-POT and the SEI-POT models deliver a similar quality of daily VaR forecasts and both outperform the GARCH-family models.
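The joint check of coverage and first-order independence can be sketched with the usual first-order Markov likelihood ratio in the spirit of [26]. The implementation below is an assumption about the textbook form of the CC test rather than a copy of our code; the function names and the violation sequence are hypothetical.

```python
import math

def _xlogy(count, prob):
    """count * log(prob), with the convention 0 * log(0) = 0."""
    return count * math.log(prob) if count > 0 else 0.0

def christoffersen_cc(hits, q):
    """Conditional coverage test on a 0/1 violation sequence: (LR, p-value)."""
    n = [[0, 0], [0, 0]]                 # n[i][j]: transitions from state i to j
    for prev, cur in zip(hits, hits[1:]):
        n[prev][cur] += 1
    n0 = n[0][0] + n[1][0]               # days without a violation
    n1 = n[0][1] + n[1][1]               # days with a violation
    # Log likelihood under the null: independent violations with probability q
    ll_null = _xlogy(n0, 1.0 - q) + _xlogy(n1, q)
    # Log likelihood under a first-order Markov chain with estimated transitions
    from_0, from_1 = n[0][0] + n[0][1], n[1][0] + n[1][1]
    pi01 = n[0][1] / from_0 if from_0 > 0 else 0.0
    pi11 = n[1][1] / from_1 if from_1 > 0 else 0.0
    ll_markov = (_xlogy(n[0][0], 1.0 - pi01) + _xlogy(n[0][1], pi01)
                 + _xlogy(n[1][0], 1.0 - pi11) + _xlogy(n[1][1], pi11))
    lr_cc = max(-2.0 * (ll_null - ll_markov), 0.0)
    # Chi-square survival function with 2 degrees of freedom: exp(-x/2)
    return lr_cc, math.exp(-lr_cc / 2.0)

# Hypothetical sequence with correct 5% coverage but heavily clustered violations
clustered_hits = ([0] * 95 + [1] * 5) * 10
lr_cc, p_cc = christoffersen_cc(clustered_hits, q=0.05)
print(f"LR_cc = {lr_cc:.1f}, p-value = {p_cc:.3g}")
```

The example shows why the CC test is more demanding than the UC test: the violation rate equals the assumed 5%, yet the clustering of violations alone leads to rejection.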

Turning our attention to Figure 7, which illustrates the results of the DQ test, the first striking observation is that a much larger area of all heatmaps is marked with shades of red when compared to the results of the CC tests. Indeed, the DQ test is more demanding than the CC test because it checks not only whether a VaR violation today is uncorrelated with a VaR violation yesterday, but also whether VaR violations are affected by covariates from a wider information set, for which we used the current VaR and the variable observations from one to four days ago (as in the original work [27]). The superiority of the SEP-POT VaR model over its competitors is clearly visible. Although the SEP-POT VaR model has a clear tendency to mis-specify VaR at the highest q levels (i.e., ), the DQ test results for the SEI-POT VaR and the VaR based on the GARCH family models are inferior. In-sample, the DQ test rejected 14 SEP-POT VaR models, 21 SEI-POT VaR models, 26 skewed-t-EGARCH VaR models, and 57 (i.e., nearly all) Gaussian GARCH VaR models. Out-of-sample, the advantage of the SEP-POT VaR model over the SEI-POT VaR model is less pronounced. The former failed in 12 instances and the latter failed in 14.

Figure 8 illustrates the results of the dynamic logit CC test. We can observe a systematic pattern as far as the SEP-POT VaR and SEI-POT VaR models are concerned. The area marked in red concentrates on the left-hand side of the heatmaps both in-sample and out-of-sample, which means that VaR is mis-specified if derived for high coverage rates (i.e., ). This deficit of POT VaR models is compensated for by their accuracy at low q levels. Indeed, for in-sample and for out-of-sample, the null cannot be rejected for either POT model. The SEP-POT VaR model was still slightly more successful than the remaining risk models. In-sample, it failed only 10 times (mainly for ), whereas the SEI-POT VaR model failed 18 times, the skewed-t-EGARCH model failed ten times, and the Gaussian GARCH VaR model managed to pass this test only twice. Out-of-sample, both POT VaR models were equally accurate. For both the SEP-POT and SEI-POT VaR models, the null of correct conditional coverage was rejected nine times. The dynamic logit CC test rejected the skewed-t-EGARCH model in 16 cases and the Gaussian GARCH model in the majority of cases.

The practical implications of the SEP-POT model stem from its suitability to provide adequate VaR and ES predictions. The VaR forecasts can be used by financial institutions as internal control measures of market risk. The adequacy of risk models used by financial institutions is of utmost importance for the market regulator. Commercial banks have used VaR models for several years to calculate regulatory capital charges using the internal model-based approach of the Basel II regulatory framework. According to the more recent recommendations of the Basel Committee on Banking Supervision (BCBS), banks should use ES to ensure a more prudent capture of “tail risk” and capital adequacy during periods of significant stress in the financial markets [31]. This attitude remains in line with the core objective of the dynamic POT models (including the SEP-POT model), as they focus on the quantification of both the forecasted probability and the awaited size of huge losses, also producing the time-varying ES forecasts. The recent Basel III accord, comprising a set of regulations developed by the BCBS, further reinforces the role of bank units responsible for internal model validations. For more about the current regulatory framework of market risk management see [32]. Despite the recent shift from VaR to ES models in the calculation of capital requirements, ES forecasts remain highly sensitive to the quality of VaR predictions.

All in all, our findings indicate that the SEP-POT model constitutes a reasonable and promising alternative for forecasting extreme quantiles of financial returns and the daily VaR, especially for very small coverage rates. Undoubtedly, further examination of the theoretical properties of the SEP-POT model and its forecasting accuracy is needed. The model should be backtested using other classes of financial instruments and compared against other extreme risk models. However, there is a plethora of VaR models in the literature; therefore, there is no single set of two or three candidate specifications against which the SEP-POT model should be benchmarked and compared. Among the point process-based POT models alone, several variants have been put forward, including the ACD-POT model (which is based on dynamic specifications of the time, i.e., duration, that elapses between consecutive extreme losses [6,7,8]) or the ACI-POT model (with its multivariate extensions) that provides an explicit autoregressive specification for the intensity function [13]. All these dynamic versions of POT models exploit both strands of the literature, the point process theory and the EVT, accounting for the clustering of extreme losses and the heavy-tailedness of the loss distribution. The SEP-POT model is suitably tailored to these features and also explicitly accounts for the discreteness of times between extreme losses. The empirical findings in this paper provide much support for our SEP-POT model. However, further efforts should be focused on benchmarking and comparison with a broader range of methods under the same settings (i.e., the same data and the same period).

4. Conclusions

We proposed a new self-exciting probability POT model for forecasting the risk of extreme losses. Existing methods within the point process approach to POT models pursue a continuous-time framework and therefore involve the specification of an intensity function. Our model is inspired by leading research in this area but, motivated by the properties of real-world data, it is built for discrete time. Hence, our model is a dynamic version of a POT model where extreme losses might occur upon a sequence of indivisible time units (i.e., days). Instead of delivering a new functional form for a conditional intensity of the point process, we propose its natural discrete counterpart: the conditional probability of experiencing an extreme event on a given day. This conditional probability is described in a dynamic fashion, allowing recent events to have a greater effect than distant ones. Thus, extreme losses arrive according to a self-exciting process, which allows for a realistic capturing of their clustering properties. The functional form of the conditional probability in the SEP-POT model resembles the conditional intensity function used in ETAS models. However, we rely on discrete weighting functions based on the at-zero-truncated negative binomial (NegBin) distribution to provide a weight for the influence of past events.

Our move toward the discrete-time setup is backed up by the descriptive analysis of the data. On average, the probability mass for nearly 45% of the time intervals between extreme-loss days is distributed upon a set of discrete values ranging from one up to five days, and the shortest one-day-long duration has a relative frequency of 12% (for the threshold u set equal to the 95%-quantile of the unconditional distribution for negated returns). Accordingly, the motivation of the SEP-POT model lies in allowing the data to speak for itself. Using the at-zero-truncated NegBin distribution as a weighting function in the equation for the conditional probability of extreme loss, we try to tailor the method to the data specificity. The conditional distribution for the magnitudes of threshold exceedances also remains in line with this approach. We specify the evolution of the threshold exceedance magnitudes in a self-exciting fashion utilizing the weighting scheme based on the geometric probability density function. Accordingly, the sizes of more distant threshold exceedances have less effect on the current magnitudes of extreme losses than the more recent events do.
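To make the idea of a discrete weighting function concrete, the sketch below evaluates an at-zero-truncated NegBin probability mass function over time lags measured in days. The parameterization (`r`, `prob`), the function name, and the parameter values are assumptions made for illustration; the exact specification used in the SEP-POT model is the one given in the model equations, not this sketch.

```python
import math

def zt_negbin_pmf(k, r, prob):
    """At-zero-truncated negative binomial pmf on k = 1, 2, ... (assumed form).

    Untruncated pmf: Gamma(k + r) / (k! * Gamma(r)) * prob**r * (1 - prob)**k.
    """
    if k < 1:
        return 0.0
    log_nb = (math.lgamma(k + r) - math.lgamma(k + 1) - math.lgamma(r)
              + r * math.log(prob) + k * math.log(1.0 - prob))
    # Renormalize by the probability mass remaining after removing k = 0
    return math.exp(log_nb) / (1.0 - prob ** r)

# Weights attached to events that occurred 1, 2, ..., 10 days ago (illustrative)
weights = [zt_negbin_pmf(lag, r=1.5, prob=0.3) for lag in range(1, 11)]
print([round(w, 4) for w in weights])

# With r = 1 the weights collapse to geometric ones: prob * (1 - prob)**(k - 1)
geometric_weight = zt_negbin_pmf(3, r=1.0, prob=0.4)
```

The special case r = 1 links the two weighting schemes mentioned above: the truncated NegBin weights reduce to the geometric weights, which decay monotonically with the distance to a past event.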

The backtesting results are in favor of the SEP-POT VaR model. We used four backtesting procedures to check the practical utility of our approach for seven major stock indexes and three currency pairs both in-sample and out-of-sample. The out-of-sample period covered more than five years, including the series of catastrophic downswings in equity prices due to the COVID-19 pandemic in March 2020. We compared VaR forecasts delivered by the SEP-POT model with three widely recognized alternatives: the self-exciting intensity (Hawkes) POT VaR, the skewed-t-EGARCH VaR, and the Gaussian GARCH VaR model. The outcomes of the backtesting procedures indicate that the SEP-POT model for VaR is a good alternative to existing methods.

The standard structure of the SEP-POT model offers several interesting generalizations. For example, it is possible to explain the conditional probability of an extreme loss with some covariates. Some potential candidate explanatory variables include price volatility measures such as high-low price ranges and measures of realized volatility. For stock indexes, some valuable information can be found in volatility indexes such as the CBOE volatility (VIX) index for the U.S. equity market. Unlike existing point process-based POT models, the merits of the SEP-POT model seem to lie in its discrete-time nature. Indeed, the Bernoulli log-likelihood function given in Equation (18) makes it easy to update an information set in the SEP-POT model on a regular, day-by-day basis. Another interesting generalization of the SEP-POT model could be to add the multi-excitation effect caused by different types of events. For example, the conditional probability of an extreme loss on one market could be additionally co-triggered by crashes observed in another market. Finally, the contemporaneous spillover effect between different markets can be captured using multivariate extensions of the SEP-POT model, for example based on extreme copula functions. These issues are left for further research.

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Jorion, P. Value at Risk: The New Benchmark for Managing Financial Risk, 3rd ed.; McGraw-Hill Companies: New York, NY, USA, 2006.

2. Christoffersen, P.F. Elements of Financial Risk Management; Elsevier: Amsterdam, The Netherlands, 2012.

3. McNeil, A.; Frey, R. Estimation of tail-related risk measures for heteroscedastic financial time series: An extreme value approach. J. Empir. Financ. 2000, 7, 271–300.

4. Chavez-Demoulin, V.; Davison, A.C.; McNeil, A.J. Estimating value-at-risk: A point process approach. Quant. Financ. 2005, 5, 227–234.

5. Chavez-Demoulin, V.; McGill, J.A. High-frequency financial data modeling using Hawkes processes. J. Bank. Financ. 2012, 36, 3415–3426.

6. Hamidieh, K.; Stoev, S.; Michailidis, G. Intensity-based estimation of extreme loss event probability and value at risk. Appl. Stoch Model Bus. 2013, 29, 171–186.

7. Herrera, R.; Schipp, B. Value at risk forecasts by extreme value models in a conditional duration framework. J. Empir. Financ. 2013, 23, 33–47.

8. Pyrlik, V. Autoregressive conditional duration as a model for financial market crashes prediction. Physica A 2013, 392, 6041–6051.

9. Herrera, R.; González, N. The modeling and forecasting of extreme events in electricity spot markets. Int. J. Forecast. 2014, 30, 477–490.

10. Grothe, O.; Korniichuk, V.; Manner, H. Modeling multivariate extreme events using self-exciting point processes. J. Economet. 2014, 182, 269–289.

11. Clements, A.E.; Herrera, R.; Hurn, A.S. Modelling interregional links in electricity price spikes. Energy Econ. 2015, 51, 383–393.

12. Herrera, R.; Clements, A.E. Point process models for extreme returns: Harnessing implied volatility. J. Bank. Financ. 2018, 88, 161–175.

13. Hautsch, N.; Herrera, R. Multivariate dynamic intensity peaks-over-threshold models. J. Appl. Econ. 2020, 35, 248–272.

14. Stindl, T.; Chen, F. Modeling extreme negative returns using marked renewal Hawkes processes. Extremes 2019, 22, 705–728.

15. Hautsch, N. Econometrics of Financial High-Frequency Data; Springer: Berlin, Germany, 2012.

16. Engle, R.F.; Russell, J.R. Autoregressive conditional duration: A new model for irregularly spaced transaction data. Econometrica 1998, 66, 1127–1162.

17. Pacurar, M. Autoregressive conditional duration (ACD) models in finance: A survey of the theoretical and empirical literature. J. Econ. Surv. 2008, 22, 711–751.

18. Porter, M.D.; White, G. Self-exciting hurdle models for terrorist activity. Ann. Appl. Stat. 2012, 6, 106–124.

19. Daley, D.J.; Vere-Jones, D. An Introduction to the Theory of Point Processes, Volume I: Elementary Theory and Methods, 2nd ed.; Springer: New York, NY, USA, 2003.

20. McNeil, A.J.; Frey, R.; Embrechts, P. Quantitative Risk Management: Concepts, Techniques and Tools; Princeton University Press: Princeton, NJ, USA, 2005.

21. Lewis, P.A.W.; Shedler, G.S. Statistical Analysis of Non-Stationary Series of Events in a Data Base System; Naval Postgraduate School: Monterey, CA, USA, 1976.

22. Liesenfeld, R.; Nolte, I.; Pohlmeier, W. Modelling Financial Transaction Price Movements: A Dynamic Integer Count Data Model. Appl. Econ. 2006, 30, 795–825.

23. Bień, K.; Nolte, I.; Pohlmeier, W. An inflated multivariate integer count hurdle model: An application to bid and ask quote dynamics. J. Appl. Economet. 2011, 26, 669–707.

24. Yamai, Y.; Yoshiba, T. Comparative analyses of expected shortfall and value-at-risk: Their validity under market stress. Monet. Econ. Stud. 2002, 20, 181–237.

25. Kupiec, P.H. Techniques for Verifying the Accuracy of Risk Measurement Models. J. Deriv. 1995, 3, 73–84.

26. Christoffersen, P.F. Evaluating Interval Forecasts. Int. Econ. Rev. 1998, 39, 841–862.

27. Engle, R.F.; Manganelli, S. CAViaR: Conditional Autoregressive Value at Risk by Regression Quantiles. J. Bus. Econ. Stat. 2004, 22, 367–381.

28. Dumitrescu, E.; Hurlin, C.; Pham, V. Backtesting Value-at-Risk: From Dynamic Quantile to Dynamic Binary Tests. Finance 2012, 33, 79–112.

29. Bank for International Settlements. Triennial Central Bank Survey. Foreign Exchange Turnover in April 2016; Bank for International Settlements: Basel, Switzerland, 2016.

30. Fernandes, M.; Grammig, J. Non-parametric specification tests for conditional duration models. J. Economet. 2005, 127, 35–68.

31. Basel Committee on Banking Supervision. Minimum Capital Requirements for Market Risk; Bank for International Settlements: Basel, Switzerland, 2015.

32. Roncalli, T. Handbook of Financial Risk Management; CRC Press: Boca Raton, FL, USA, 2020.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).