Abstract

Many algorithms described in the literature find the solutions of a given equation iteratively, and most of them require tuning. This article presents root-finding algorithms that are based on the Newton–Raphson method and likewise require tuning. The proposed modification uses the best position of the particle, similarly to particle swarm optimisation algorithms. The approach makes it possible to visualise the impact of the algorithm’s elements on its complex behaviour. Moreover, instead of the standard Picard iteration, various feedback iteration processes are used. The presented examples and the discussion of the algorithm’s operation show how the proposed modifications influence its behaviour, and this understanding can be helpful when applying the same modifications to other algorithms. The obtained images also have potential artistic applications.

1. Introduction

Most algorithms that determine the local extrema of a function, and especially its minimum, rely on evaluating the function or its gradient. In such an algorithm, the new position of the particle is calculated from its previous position and the gradient, which determines the direction of the movement [1]. An example of this approach is the gradient descent method:

where: —negative gradient, —step size, —the current position of the ith particle in a D dimensional environment, —the previous position of the ith particle. There are many algorithms implementing modifications of this method, and its operation is well described in the literature.
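The gradient descent update described above can be illustrated with a minimal sketch. The symbols of the original formula are not reproduced here, so the names `position`, `gradient` and `step_size`, as well as the test function f(x, y) = x^2 + y^2, are assumptions chosen only for illustration.

```python
def gradient_descent_step(position, gradient, step_size):
    """Move one step against the gradient, scaled by the step size."""
    grad = gradient(position)
    return [x - step_size * g for x, g in zip(position, grad)]


def grad_f(p):
    # Gradient of the hypothetical test function f(x, y) = x^2 + y^2.
    return [2.0 * p[0], 2.0 * p[1]]


# Repeating the step drives the particle towards the minimum at (0, 0).
position = [1.0, -2.0]
for _ in range(50):
    position = gradient_descent_step(position, grad_f, step_size=0.1)
```

For this quadratic function every step multiplies each coordinate by 0.8, so the particle approaches the minimum geometrically.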

Evolutionary algorithms are another group of algorithms used to solve optimisation problems, including minimisation. As shown in [2], the analysis of particle behaviour in evolutionary algorithms is a very complex problem. In practice, many algorithms similar to evolutionary algorithms are used [3]. Particularly noteworthy is the group of particle swarm optimisation (PSO) algorithms [4]. Because of the complex nature of the interaction between particles, their impact on the operation of the algorithm cannot be determined in a simple way. The behaviour of particles in the PSO algorithm can be described by the following equation:

where: —the current position of the ith particle in a D dimensional environment, —the previous position of the ith particle, —the current velocity of the ith particle in a D dimensional environment that is given by the following formula:

where: —the previous velocity of the ith particle, —inertia weight (), , —acceleration constants (), , —random numbers generated uniformly in the interval, —the best position of the ith particle, —the global best position of the particles. The best position of the particle and the best global position are calculated in each iteration. The position of the particle and the best global position have a significant impact on the behaviour of not only the particle, but the entire swarm, directing them towards the best solution. The particle behaviour is determined by three parameters: inertia weight () and two acceleration constants (). The PSO algorithm belongs to the group of stochastic algorithms for which the convergence can be proved [5]. The parameter informs about the influence of the particle velocity at the moment on its velocity at the moment t. On the other hand, the parameter affects the changes in the particle acceleration at the time t. For the particles slow down to reach the velocity of zero. For the value of close to and the value of and close to the PSO algorithm has good convergence. The cooperation of particles releases an adaptive mechanism that drives the particle and determines its dynamics. The selection of the proper values of inertia weight and acceleration constants is a very important problem for the algorithm [6]—the algorithm tuning is intuitive and based on developer’s experience.
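As a rough illustration of the velocity and position update described above, the following sketch uses the commonly cited textbook form of PSO. It is an assumption that this matches the stripped formulas term by term. The names `inertia`, `c1` and `c2` are illustrative stand-ins for the inertia weight and the two acceleration constants.

```python
import random


def pso_step(position, velocity, personal_best, global_best,
             inertia, c1, c2, rng):
    """One PSO update for a single particle (textbook form, assumed here)."""
    new_velocity = []
    for x, v, pb, gb in zip(position, velocity, personal_best, global_best):
        r1, r2 = rng.random(), rng.random()  # uniform random numbers in [0, 1)
        # Inertia term plus attraction towards the particle's own best
        # position and towards the global best position of the swarm.
        new_velocity.append(inertia * v
                            + c1 * r1 * (pb - x)
                            + c2 * r2 * (gb - x))
    new_position = [x + v for x, v in zip(position, new_velocity)]
    return new_position, new_velocity


rng = random.Random(0)
pos, vel = pso_step([0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0],
                    inertia=0.5, c1=0.0, c2=0.0, rng=rng)
```

With both acceleration constants set to zero, as in the usage line, only the inertia term remains and the velocity is simply scaled by the inertia weight.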

Another important problem that arises in practice is finding the solutions of a system of non-linear equations. Because such systems are difficult to solve analytically, many numerical methods have been developed. The most famous method of this type is Newton’s method [7]. In recent years, methods with different approaches have been proposed. In [8] Goh et al., inspired by the simplex algorithm, proposed a partial Newton’s method. Although this method is based on Newton’s method, it has slower convergence. Another method based on Newton’s method was proposed in [9], in which Saheya et al. revised the Jacobian matrix by a rank-one matrix in each iteration. Sharma et al. in [10] introduced a three-step iterative scheme that is based on Newton–Traub’s method. A method that is based on a Jarratt-like method was proposed by Abbasbandy et al. in [11]. This method uses only one LU factorisation, which reduces the computational complexity. An acceleration technique that can be used in many iterative methods was proposed in [12]. Moreover, the authors introduced a new family of two-step third-order methods for solving systems of non-linear equations. Recently, Alqahtani et al. proposed a three-step family of iterative methods [13]. This family is a generalisation of the fourth-order King’s family to the multidimensional case. Awwal et al. in [14] proposed a two-step iterative algorithm that is based on a projection technique and solves systems of monotone non-linear equations with convex constraints.

In addition to the methods based on classical root-finding methods (Newton, Traub, Jarratt, etc.), the literature contains approaches based on the PSO algorithm and other metaheuristic algorithms. In [15] Wang et al. proposed a modification of the PSO algorithm in which the search dynamics is controlled by a traditional controller such as the PID (Proportional–Integral–Derivative) controller. A combination of the Nelder–Mead simplex method and the PSO algorithm was proposed in [16]. To overcome the problems of being trapped in local minima and of slow convergence, Jaberipour et al. proposed a new way of updating the particle’s position [17]. Another modification of the PSO algorithm for solving systems of non-linear equations was proposed by Li et al. in [18]. The authors proposed: (1) a new way of selecting the inertia weight, (2) dynamic conditions for stopping the iteration, (3) calculation of the standardised number of restarts based on reliability theory. Ibrahim and Tawhid introduced a hybridisation of cuckoo search and PSO for solving systems of non-linear equations in [19], and a hybridisation of differential evolution and monarch butterfly optimisation in [20]. Recently, Liao et al. in [21] introduced a decomposition re-initialisation-based differential evolution to locate multiple roots of non-linear equation systems. A study of different metaheuristic methods, namely PSO, the firefly algorithm and the cuckoo search algorithm, applied to solving systems of non-linear equations was conducted in [22]. The study showed that the PSO algorithm obtains better results than the other two algorithms.

Dynamic analysis is a separate field of research using numerous investigation methods [23]. Among them is the graphical visualisation of dynamics [24]. In the analysis of the complex polynomial root-finding process, polynomiography is a very popular method of visualising the dynamics [25,26,27]. In this method the number of iterations required to obtain the solution is visualised, and the generated images show the dynamics of the root-finding process using colour maps. Today, the study of the dynamics of root-finding methods with polynomiography is an essential part of the modern analysis of the quality of these methods [28]. This form of dynamics study turns out to be a powerful tool for selecting optimal parameters in iterative methods for solving non-linear equations and for comparing these methods [29,30]. The visualisation methods proposed in this work will also be called polynomiography because of their similarity to the original polynomiography, even though we use arbitrary functions, not just polynomials.

This article proposes modifications of a root-finding algorithm based on Newton’s method. The modifications use the inertia weight, the acceleration constant and the best position of the particle, and implement various iteration methods. The conducted research serves to visualise the impact of the proposed modifications on the behaviour of the particle. The visualisations support not only a discussion of the particle’s behaviour but also the creation of images of aesthetic character and artistic meaning [31,32].

Nammanee et al. [33] introduced a strong convergence theorem for the modified Noor iterations in the framework of uniformly smooth Banach spaces. Their results suggest that the proposed modifications using the best particle position will be an effective way to improve the algorithm’s operation.

The rest of the paper is organised as follows. Section 2 introduces a root-finding algorithm that is based on the Newton–Raphson method. This method is used to illustrate the influence of tuning on its behaviour. Next, Section 3 introduces iteration processes known from the literature and, based on them, proposes iterations that use the best position of the particle. Section 4 presents algorithms for creating polynomiographs. Then, Section 5 discusses the research results illustrated by the obtained polynomiographs. Finally, Section 6 gives short concluding remarks.

2. The Algorithm

Numerous methods can be used to solve a system of D non-linear equations with D variables. One of the best known and intensively studied methods is the Newton–Raphson method [7]. Let and let

Let be a continuous function which has continuous first partial derivatives. Now, to solve the equation , where , by using the Newton–Raphson method, a starting point is selected and then the iterative formula is used as follows:

where:

is the Jacobian matrix of and is its inverse.

By taking the Newton–Raphson method can be described as follows:

To solve (4), the following algorithm can be used:

where: is a starting position, is a starting velocity, is the current velocity of particle (), is the previous position of particle (). The algorithm sums the position of the particle with its current velocity . The current velocity of the particle is determined by the inertia weight and the acceleration constants:

where: —the previous velocity of particle, —inertia weight, —acceleration constant. Let us notice that (8) reduces to the classical Newton’s method [25] if and , and to the relaxed Newton’s method [34] if and . Moreover, we can observe that the proposed method joins the PSO algorithm and the Newton’s method. The acceleration term in the PSO algorithm is responsible for pointing the particle in the direction of the best solution. In our method as the acceleration term we use the direction used in the Newton’s method, which is responsible for moving the current point into direction of the solution. Thus, in each iteration we are moving towards the solution and eventually we will stop in the solution, because for the particle slows down and , when we reach the solution.
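The combination of Newton’s direction with an inertia weight and an acceleration constant can be sketched as follows. The update form used here (new velocity = inertia × previous velocity + acceleration × Newton direction, new position = position + new velocity) is an assumption reconstructed from the prose. The example system is a hypothetical choice: the real form of z^3 − 1 = 0. The stopping rule on the residual norm is also an assumption.

```python
def F(p):
    # Hypothetical test system: real and imaginary parts of z^3 - 1.
    x, y = p
    return (x**3 - 3.0 * x * y**2 - 1.0, 3.0 * x**2 * y - y**3)


def J(p):
    # Jacobian matrix of F, stored row by row.
    x, y = p
    a = 3.0 * x**2 - 3.0 * y**2
    b = 6.0 * x * y
    return ((a, -b), (b, a))


def newton_direction(p):
    # Computes -J(p)^{-1} F(p) with the closed-form inverse of a 2x2 matrix.
    (j11, j12), (j21, j22) = J(p)
    f1, f2 = F(p)
    det = j11 * j22 - j12 * j21
    return (-(j22 * f1 - j12 * f2) / det, -(-j21 * f1 + j11 * f2) / det)


def solve(start, inertia, accel, tol=1e-10, max_iter=200):
    """Iterate until the residual norm drops below tol (assumed criterion)."""
    p, v = start, (0.0, 0.0)
    for n in range(max_iter):
        f1, f2 = F(p)
        if (f1 * f1 + f2 * f2) ** 0.5 < tol:
            return p, n
        d = newton_direction(p)
        v = (inertia * v[0] + accel * d[0], inertia * v[1] + accel * d[1])
        p = (p[0] + v[0], p[1] + v[1])
    return p, max_iter


# Zero inertia and unit acceleration give the classical Newton's method.
root, steps = solve((2.0, 0.5), inertia=0.0, accel=1.0)
```

Calling `solve` with a non-zero `inertia` or with `accel` different from 1 gives the other variants discussed in the text. How they behave depends on the chosen values and on the starting point.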

The introduction of the inertia weight and the acceleration constant allows the particle dynamics to be controlled over a wider range [4]. The selection of the values of these parameters is not deterministic: it depends on the problem being solved and on the selected iteration method. The values are selected by tuning, which is a kind of art. The relationship between the two parameters is complex, and changing them influences the particle’s dynamics, which can be visualised using the algorithms described in Section 4.

A very important feature of numerical methods for solving systems of equations is the order of convergence of the method. For instance, the order of convergence of Newton’s method is 2 [25]. For methods that are based on the Newton, Traub, Jarratt and similar methods the order of convergence is proved (see for instance [8,9,10,11,12,13,14]), but for methods that are based on metaheuristic algorithms, like the method presented in this paper, the order of convergence is not derived (see for instance [15,16,17,18,19,20,21,22]). This is due to the fact that the order varies greatly for different values of the parameters used in the metaheuristic algorithms. We will show this on a very simple example, in which we approximate the order of convergence by the computational order of convergence introduced in [35], i.e.,
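A minimal sketch of how a computational order of convergence can be estimated from four consecutive iterates is given below. The formula used, a ratio of logarithms of successive step lengths, is a commonly used approximation and is assumed here to correspond to the definition from [35]. The polynomial z^3 − 1 and the starting point are hypothetical.

```python
import math


def newton_iterates(z0, steps):
    """Classical Newton iterates for the hypothetical polynomial z^3 - 1."""
    zs = [z0]
    for _ in range(steps):
        z = zs[-1]
        zs.append(z - (z**3 - 1) / (3 * z**2))
    return zs


def computational_order(z_a, z_b, z_c, z_d):
    """Estimate the order from the lengths of three consecutive steps."""
    d1 = abs(z_b - z_a)
    d2 = abs(z_c - z_b)
    d3 = abs(z_d - z_c)
    return math.log(d3 / d2) / math.log(d2 / d1)


# Six steps are enough here; more steps would make the last differences
# vanish in floating point and the logarithms undefined.
iterates = newton_iterates(2 + 0j, 6)
order = computational_order(*iterates[-4:])
```

For this example the estimate is close to 2, in line with the second-order convergence of the classical Newton’s method mentioned above.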

Let us consider , . We will solve system (4) by using (8) with various values of and in (9) and three different starting points . The obtained results are presented in Table 1. For and (classic Newton’s method) we see that the method obtains second order of convergence, which is in accordance with the result proved in the literature. When we take a non-zero inertia, then we see that for a fixed starting point and varying and we obtain very different values of the order. The same situation happens when we fix and and change the starting points.

Table 1.

Computational order of convergence for system given by , solved by (8).

Another problem studied in the context of solving systems of non-linear equations with iterative methods is the problem of strange (extraneous) fixed points, i.e., fixed points which are found by the method but are not roots of the considered system [36]. Let us assume that (8) has converged to a solution. In such a case the in (9) is equal to 0. Therefore, method (8) behaves like the relaxed Newton’s method. Since the relaxed Newton’s method has no strange fixed points [25], the proposed method also has no strange fixed points.

3. Iteration Processes

Picard’s iteration [37] is widely used in many iterative algorithms. This iteration can be described as follows:

Notice that (8) uses the Picard iteration, where takes the form

Many other iteration processes can be found in the literature. The three most basic and commonly implemented processes for approximating fixed points of mappings are as follows:

- The Mann iteration [38]:where: for all , and the Mann iteration for reduces to the Picard iteration.

- The Ishikawa iteration [39]:where: and for all , the Ishikawa iteration reduces to the Mann iteration when , and to the Picard iteration when and .

- The Agarwal iteration [40] (S-iteration):where and for all , let us notice that the S-iteration reduces to the Picard iteration when , or and .

A survey of various iteration processes and their dependencies can be found in [41].
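The three iterations listed above can be sketched in their standard textbook forms, which are assumed here to agree with the stripped formulas. The names `alpha` and `beta` for the iteration parameters and the choice of the Newton map of z^3 − 1 as the mapping `T` are illustrative assumptions.

```python
def T(z):
    # Hypothetical mapping: Newton map of the polynomial z^3 - 1.
    return z - (z**3 - 1) / (3 * z**2)


def picard(T, z):
    return T(z)


def mann(T, z, alpha):
    # Convex combination of the current point and its image.
    return (1 - alpha) * z + alpha * T(z)


def ishikawa(T, z, alpha, beta):
    # First step: reference point u; second step: Mann-like step through u.
    u = (1 - beta) * z + beta * T(z)
    return (1 - alpha) * z + alpha * T(u)


def s_iteration(T, z, alpha, beta):
    # Agarwal (S) iteration: combines T(z) with T of the reference point.
    u = (1 - beta) * z + beta * T(z)
    return (1 - alpha) * T(z) + alpha * T(u)


z = 1.5 + 0.5j
# Reductions mentioned in the list above:
mann_as_picard = mann(T, z, 1.0)               # equals picard(T, z)
ishikawa_as_mann = ishikawa(T, z, 0.4, 0.0)    # equals mann(T, z, 0.4)
s_as_picard = s_iteration(T, z, 0.0, 0.7)      # equals picard(T, z)
```

The last three lines reproduce the reductions stated in the list: each two-step scheme collapses to a simpler one for particular parameter values.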

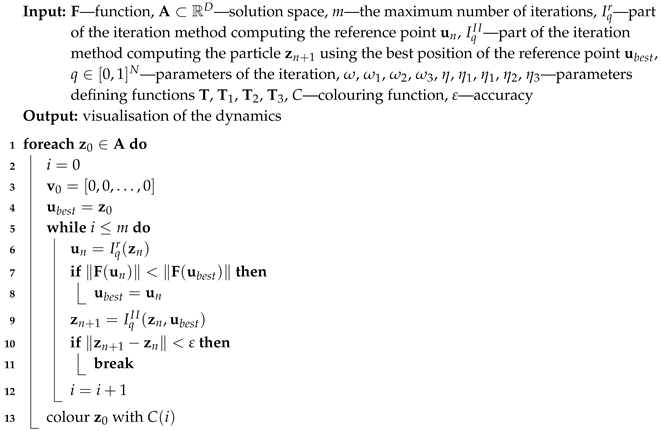

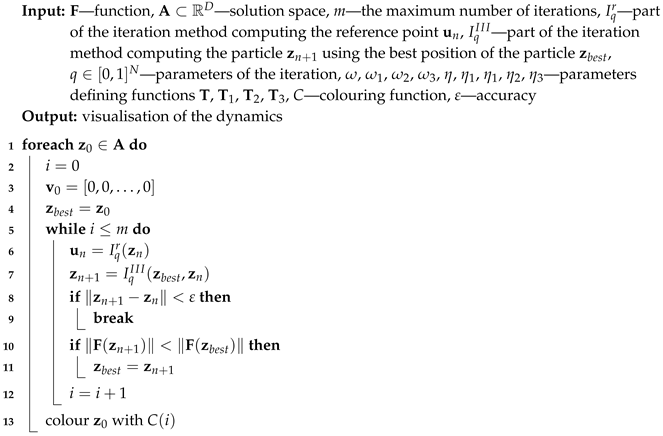

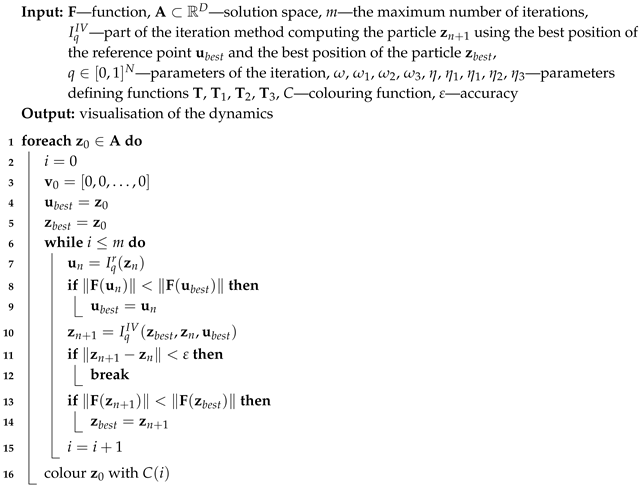

An additional particle (a sample in the solution space), acting as a reference point, is used in the Ishikawa and Agarwal iterations. Each of these iterations therefore performs two steps: the reference point is set in the first step, and a new particle position is then computed using the reference point. This allows better control of the particle’s motion. In this paper, the above-discussed iterations are modified by applying the best position of the reference point and the best position of the particle, similarly to the PSO algorithm.

The positions of the reference point and the particle are evaluated and compared to and . In solutions space, the lower value of determines the better position of the reference point or the particle (). The next and are updated if they are worse than and . The best position of the reference point or position of the reference point and the best position of the particle or the position of the particle (depending on the result of the evaluation) are used to determine the new position of the particle .
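The bookkeeping of a best position can be sketched as below. Using the residual |p(z)| as the quality measure and the polynomial z^3 − 1 are both assumptions made for illustration. The sketch only shows how a best position is retained; it does not show how the best position enters the modified iteration formulas.

```python
def residual(z):
    # Assumed quality measure: distance of p(z) = z^3 - 1 from zero.
    return abs(z**3 - 1)


def keep_better(candidate, best):
    """Return the candidate if it has a lower residual, else the old best."""
    return candidate if residual(candidate) < residual(best) else best


best = 2 + 0j
for candidate in (1.4 + 0j, 1.6 + 0j, 1.1 + 0j):
    best = keep_better(candidate, best)
```

After the loop `best` holds the visited point with the lowest residual. The intermediate candidate `1.6` is rejected because it is worse than the best position stored at that moment.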

As a result of this modification, the next position of the particle in the Equations (13)–(15) is determined by the following formulas:

- The modified Mann iteration

- The modified Ishikawa iteration

- The modified Agarwal iteration

All the iterations presented so far use only one mapping, but fixed point theory also contains iterations that use several mappings and are applied to find common fixed points of these mappings. Examples of this type of iteration are the following:

- The Das–Debata iteration [42]:where and for all , the Das–Debata iteration for reduces to the Ishikawa iteration.

- The Khan–Cho–Abbas iteration [43]:where and for all , let us notice that the Khan–Cho–Abbas iteration reduces to the Agarwal iteration when .

- The generalised Agarwal’s iteration [43]:where and for all , moreover the generalised Agarwal iteration reduces to the Khan–Cho–Abbas iteration when and to the Agarwal iteration when .
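The multi-mapping iterations can be sketched along the same lines. The assignment of the mappings `T1`, `T2`, `T3` to the individual terms is an assumption, since the original formulas are not reproduced here; only the two-step structure and the stated reductions are taken from the text. The relaxed Newton maps of z^3 − 1 serve as hypothetical mappings.

```python
def newton_map(relaxation):
    """Relaxed Newton map of the hypothetical polynomial z^3 - 1."""
    def mapping(z):
        return z - relaxation * (z**3 - 1) / (3 * z**2)
    return mapping


def das_debata(T1, T2, z, alpha, beta):
    # Ishikawa-like scheme with two different mappings (assumed roles).
    u = (1 - beta) * z + beta * T2(z)
    return (1 - alpha) * z + alpha * T1(u)


def generalised_agarwal(T1, T2, T3, z, alpha, beta):
    # S-iteration-like scheme with three different mappings (assumed roles).
    u = (1 - beta) * z + beta * T1(z)
    return (1 - alpha) * T3(z) + alpha * T2(u)


def khan_cho_abbas(T1, T2, z, alpha, beta):
    # Special case of the generalised scheme with two coinciding mappings.
    return generalised_agarwal(T1, T2, T1, z, alpha, beta)


z = 1.5 + 0.5j
new_z = das_debata(newton_map(1.0), newton_map(0.5), z, alpha=0.6, beta=0.3)
```

When all the mappings passed to these functions are the same, the schemes reduce to the single-mapping Ishikawa and Agarwal iterations, which matches the reductions described in the list.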

Iterations described by Equations (19)–(21) can be also modified by introducing the best position of the reference point and the best position of the particle. As a result of this modification, equations describing take the following form:

- The modified Das–Debata iteration

- The modified Khan–Cho–Abbas iteration

- The modified generalised Agarwal iteration

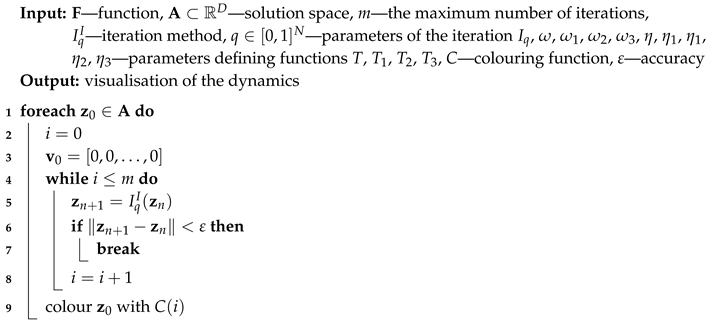

For all iterations is used for the base type of iteration (Algorithm 1), is used for the proposed algorithm with the best reference point (Algorithm 2), is used for the proposed algorithm with the best particle position (Algorithm 3) and both and are used for the proposed Algorithm 4. The variety of different iteration processes is implemented in our algorithms. In the iterations we use (12) as the mapping with different values of and parameters.

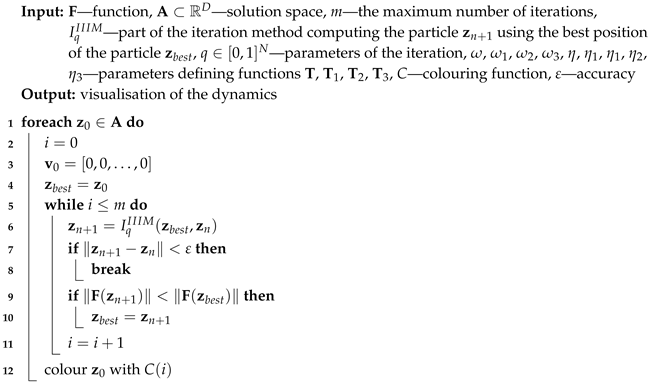

Algorithm 1: Visualisation of the dynamics (the base algorithm)

Algorithm 2: Visualisation of the dynamics with the best reference point

Algorithm 3: Visualisation of the dynamics with the best particle

Algorithm 4: Visualisation of the dynamics with both the best reference point and particle

4. Visualisation of the Dynamics

A very similar method to the polynomiography [44] is used to visualise the dynamics of the proposed algorithms. The iteration methods presented in Section 3 are implemented in the algorithms and proper values of parameters are selected. , for a single mapping or and for (depending on the chosen iteration). The maximum number of iterations m which algorithm should make, accuracy of the computations and a colouring function are set. Then, each in the solution space is used in the algorithm. The iterations of the algorithm proceed till the convergence criterion:

or the maximum number of iterations is reached. A colour corresponding to the performed number of iterations is assigned to using colouring function C. Iterations described in Section 3 and their modifications using the best particle position and the best reference point position allow proposing five algorithms. Algorithm 1 (denoted in the text by I) is the base algorithm, without modification. Algorithm 2 (denoted in the article by ) performs the selection of the best position of the reference point. Algorithm 3 (denoted by ) performs the selection of the best particle. Algorithm 4 (denoted by ) combines the modifications introduced in Algorithms 2 and 3. Algorithm 5 is a modification of Algorithm 3 because Mann iteration does not use a reference point and it is denoted by .
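The generation of a polynomiograph with the base algorithm can be sketched as a grid of iteration counts. The sketch assumes the Picard iteration, the polynomial z^3 − 1, a stopping rule |p(z)| < eps and complex arithmetic in place of the two-variable real system (for a holomorphic function the Newton direction is the same in both forms). All names, the area and the parameter values are illustrative, and the colouring step is left out.

```python
def p(z):
    return z**3 - 1          # hypothetical test polynomial


def dp(z):
    return 3 * z**2          # its derivative


def iteration_count(z0, inertia, accel, eps=1e-3, max_iter=30):
    """Number of steps performed before |p(z)| < eps, capped at max_iter."""
    z, v = z0, 0j
    for n in range(max_iter):
        if abs(p(z)) < eps:
            return n
        slope = dp(z)
        if slope == 0:
            return max_iter  # Newton direction undefined: treat as no convergence
        v = inertia * v - accel * p(z) / slope
        z = z + v
    return max_iter


def polynomiograph(width, height, area, inertia, accel):
    """Iteration counts for the pixel centres of a rectangular area."""
    x_min, x_max, y_min, y_max = area
    rows = []
    for j in range(height):
        y = y_min + (j + 0.5) * (y_max - y_min) / height
        row = []
        for i in range(width):
            x = x_min + (i + 0.5) * (x_max - x_min) / width
            row.append(iteration_count(complex(x, y), inertia, accel))
        rows.append(row)
    return rows


counts = polynomiograph(8, 8, (-2.0, 2.0, -2.0, 2.0), inertia=0.0, accel=1.0)
```

Each entry of `counts` would then be passed through a colouring function to obtain the pixel colour. Replacing the Picard step by one of the other iterations changes only the body of the loop in `iteration_count`.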

Algorithm 5: Visualisation of the dynamics with the best particle for the Mann iteration

The iteration method is denoted by for a given algorithm, where q is a vector of parameters of the iteration and a is one of the algorithms or r—it is the part of iteration determining position of the reference point.

The domain (solution space) is defined in a D-dimensional space, thus the algorithms return polynomiographs in this space. For a single image is obtained or for a two-dimensional cross-section of can be selected for visualisation.

5. Discussion on the Research Results

In this section we present and discuss the obtained results of visualising the dynamics of the method introduced in Section 2 together with the various iteration methods presented in Section 3 using algorithms described in Section 4. Let be the field of complex numbers with a complex number where and . In the experiments we want to solve the following non-linear equation

where .

This equation can be written in the following form:

Now, Equation (27) can be transformed into a system of two equations with two variables, i.e.,

where , . The set of solutions of this system is the following: , , and ( is used for magnification of the centre parts of polynomiographs).

Moreover, several additional test functions are used:

where: , and the set of solutions of this system is the following: , , , and ;

where: , and the set of solutions of this system is the following: , , , , and ;

where: , and the set of solutions of this system is the following (approximately): , , , , , and .

In the experiments, to solve (28) we use the method introduced in Section 2. The same colour map (Figure 1) was used to colour all the obtained polynomiographs. Furthermore, in every experiment we used the following common parameters: , , image resolution pixels.

Figure 1.

Colour map used in the experiments.

The algorithms used in the experiments were implemented in the C++ programming language. The experiments were conducted on a computer with an Intel Core i7 processor, 8 GB RAM and Linux Ubuntu 18.04 LTS.

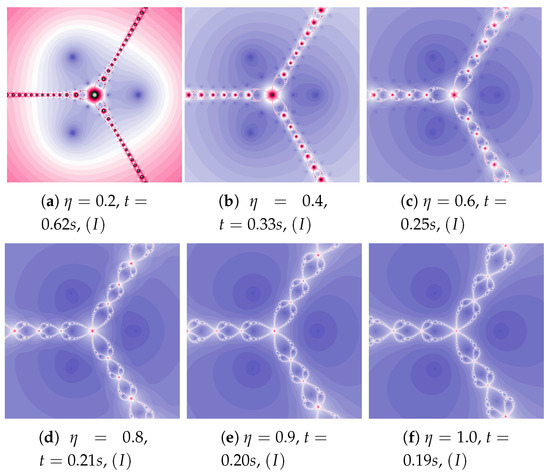

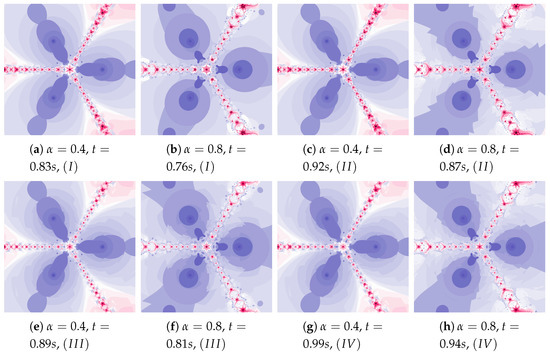

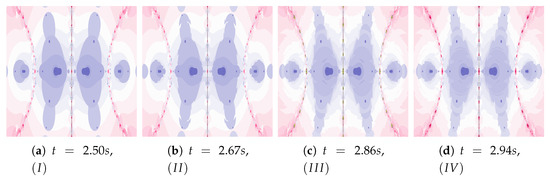

5.1. The Picard Iteration

The motion of particles depends on the acceleration constant () and inertia weight (). These parameters control dynamics of a particle behaviour. The polynomiograph visualises particle dynamics and allows analysing parameters impact on the algorithm’s operation. Polynomiographs are created using Algorithm 1. The dynamics visualisations for the Picard iteration using and varying are presented in Figure 2. Earlier, in Section 2, we noticed that the proposed method reduces to the relaxed Newton’s method for and and to the Newton’s method for and , so Figure 2a–e present polynomiographs for the relaxed Newton’s method, whereas Figure 2f for the classical Newton’s method. The polynomiograph generation times are also given in Figure 2. The time decreases as the dynamics controlled by the acceleration constant increases.

Figure 2.

Polynomiographs of the Picard iteration for and varying .

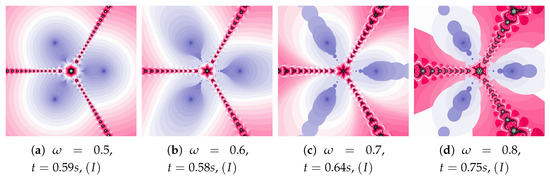

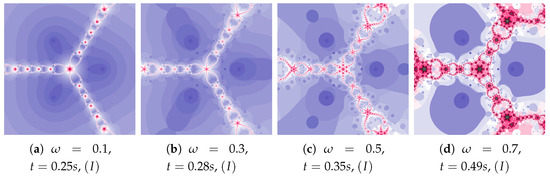

Polynomiographs of the Picard iteration for low value of acceleration constant () and varying are presented in Figure 3. The increase in the inertia weight results in the increase in particle dynamics. Both too small and too high particle dynamics cause the increase in the polynomiographs creation time. The shortest time was obtained for Figure 3b. Every change of dynamics creates a new image.

Figure 3.

Polynomiographs of the Picard iteration for and varying .

The acceleration constant is equal to for images in Figure 4. Change in particle’s dynamics is realised by the increase of the inertia weight. As in the previous example, the proper selection of particle’s dynamics minimises the creation time of the polynomiograph (see Figure 4b). The increase in the particle dynamics is expressively illustrated by polynomiographs.

Figure 4.

Polynomiographs of the Picard iteration for and varying .

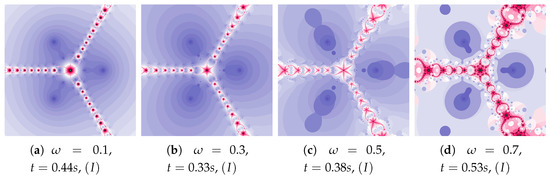

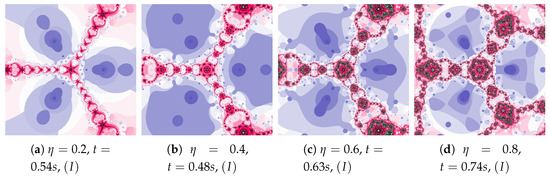

In Figure 5 we see polynomiographs generated using the same values of the inertia weight as in Figure 4, but with higher value of the acceleration constant—. The increase in the inertia weight causes the increase of the time of image creation. It is the consequence of the excessive growth of particle’s dynamics.

Figure 5.

Polynomiographs of the Picard iteration for and varying .

Polynomiographs of the Picard iteration for a constant value of the inertia weight () and varying acceleration constant are presented in Figure 6. For the images in Figure 6c,d the dynamics presented by the polynomiographs is high (the parts of the image are blurred—there are non-contrast patterns created by the points). Moreover, let us notice that the generation time for Figure 6a is lower than the time obtained for Figure 2a ( s—the Picard iteration with , ). Thus, by increasing the inertia we were able to obtain a shorter time than in the case of the relaxed Newton’s method with the same value of acceleration.

Figure 6.

Polynomiographs of the Picard iteration for and varying .

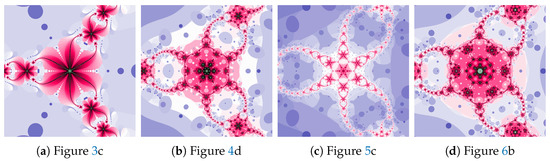

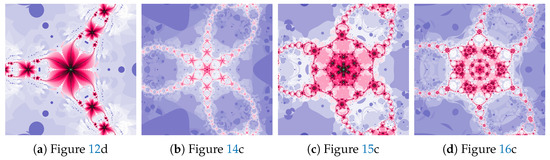

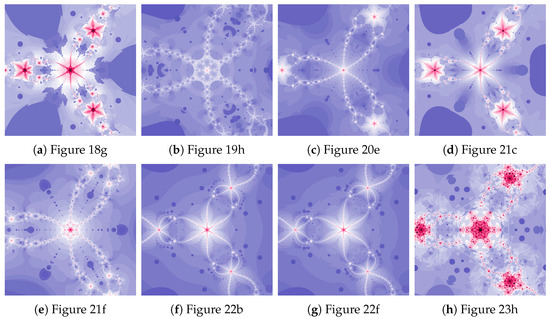

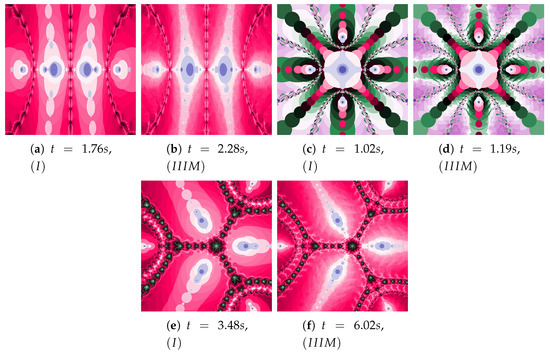

The magnifications of the central part of selected polynomiographs of the Picard iteration are presented in Figure 7. Changes in particle dynamics paint beautiful patterns. They can be an inspiration for creating mosaics.

Figure 7.

Magnification of the central part of selected polynomiographs of the Picard iteration.

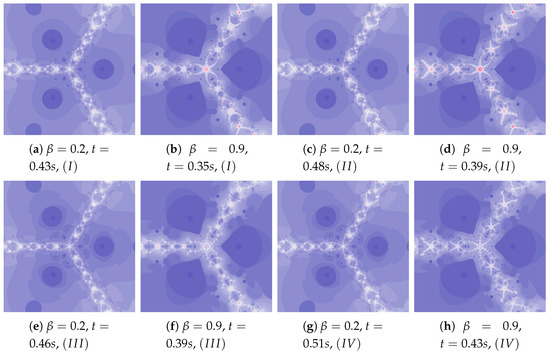

5.2. The Mann Iteration

Su and Qin in [45] discussed a strong convergence of iterations in the case of a fixed reference point. We start the examples for the Mann iteration with an example showing the behaviour of the Mann iteration implementing a fixed reference point. The results are presented in Figure 8. For polynomiograph presented in Figure 8a the fixed reference point is in its centre , whereas in Figure 8b this point is in the position —the behaviour of the algorithm is similar in both cases. For the polynomiograph in Figure 8c the fixed reference point is placed in the root position (in the best position). It causes a significant improvement in the algorithm operation near the root position. For polynomiograph presented in Figure 8d, a fixed reference point is the starting point—it results in greater efficiency in the algorithm operation near positions of the roots. A better position of the reference point can significantly improve the algorithm operation—however, it is impossible to determine its best position. In the proposed methods, instead of using a fixed reference point we use the locally best position of the reference point. This position is modified when the new position of the reference point is better than the previous one.

Figure 8.

Polynomiographs of the Mann iteration with the fixed reference point for , , . The positions of the reference point: (a) , (b) , (c) (one of the roots), (d) the starting point.

The Mann iteration allows to compare the operation of algorithm I with the Algorithm . The choice of the best position of the particle will cause the change in the dynamics, it can result in a better convergence of the particle—this mechanics is used in many algorithms. Visualisation is a great tool for presenting this mechanics of algorithm operation.

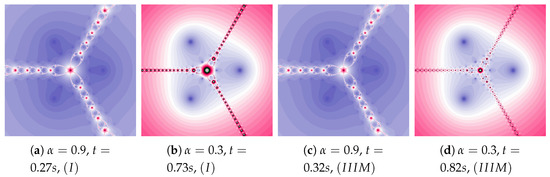

Polynomiographs of the Mann iteration for , and varying are presented in Figure 9. A small value of causes a reduction in particle’s dynamics. When Algorithm is used, the areas with the higher dynamics (areas of dynamically changing pattern) are narrowed (compare Figure 9a with Figure 9c).

Figure 9.

Polynomiographs of the Mann iteration for and varying .

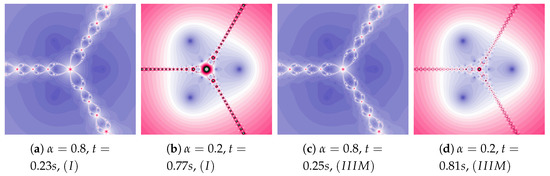

The particle dynamics for polynomiographs in Figure 10 is greater than in Figure 9. The high value of the coefficient limits the effect of selecting the best particle’s position in Algorithm . Nevertheless, in Figure 10c we observe a narrowing of areas of high dynamics and small changes in the shape of neighbouring areas. Limiting the particle’s dynamics by decreasing in the value of the coefficient affects the visualisation of the influence of the best particle position on the Algorithm operation (compare Figure 10b and Figure 10d)—similarly to Figure 9d.

Figure 10.

Polynomiographs of the Mann iteration for , and varying .

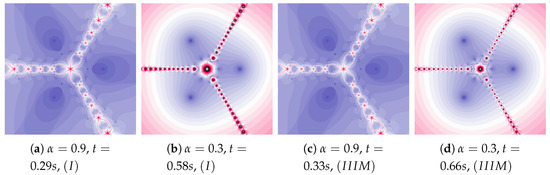

In the examples presented in Figure 11, the increase in particle dynamics is obtained by including inertia. The increase in particle dynamics is evident in polynomiographs in Figure 11a,c. As in the previous cases, Algorithm makes changes visible only in areas with higher dynamics.

Figure 11.

Polynomiographs of the Mann iteration for , and varying .

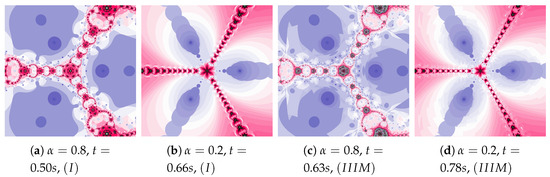

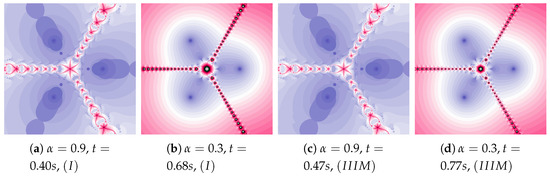

Another increase in the inertia weight () causes the increase in dynamics shown in images in Figure 12a,b. The changes in dynamics caused by the Algorithm operation are visible in the whole area of polynomiograph (see Figure 12c,d). The time of creation of polynomiograph in Figure 12d, when compared to Figure 12b, is shorter. The proposed modification of the algorithm can accelerate finding the solution.

Figure 12.

Polynomiographs of the Mann iteration for , and varying .

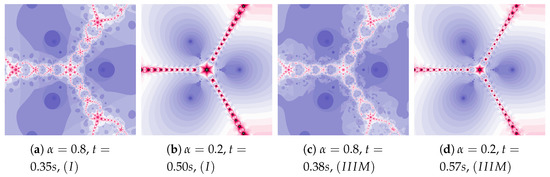

In polynomiographs in Figure 13, the particle dynamics are limited by the small value of (). The changes in dynamics caused by the Algorithm are in areas of high dynamics, the neighbourhood of these areas and places where the particle gets high velocity (the long distance from the centre of the image). This is clearly visible in images in Figure 13c,d.

Figure 13.

Polynomiographs of the Mann iteration for , and varying .

Polynomiographs presented in Figure 14 show the increase in dynamics due to the increase in the acceleration constant (). The dynamics is high for image in Figure 14a, and in Figure 14b the dynamics is limited by the small value of . Algorithm clearly influences the change in particle’s dynamics—Figure 14c. The dynamics caused by the Algorithm operation are much smaller in Figure 14d because the dynamics are limited by the small value of .

Figure 14.

Polynomiographs of the Mann iteration for , and varying .

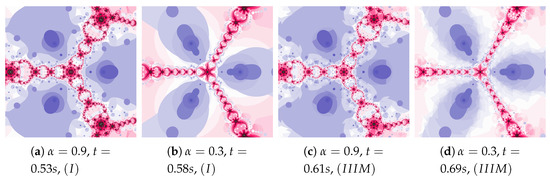

The inertia weight has much greater effect on particle dynamics than the acceleration constant. The dynamics presented in Figure 15 is high due to the high value of and . The dynamics changes, caused by the best position of the particle, are clearly visible.

Figure 15.

Polynomiographs of the Mann iteration for , and varying .

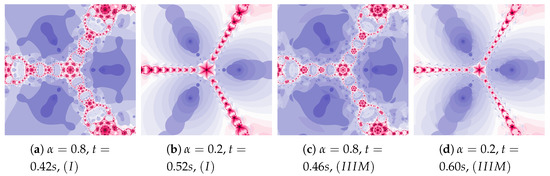

Similarly, as in the example presented in Figure 15, the dynamics in Figure 16 are shaped by the high value of and (, ). Additionally, in this case the dynamics paints some interesting images.

Figure 16.

Polynomiographs of the Mann iteration for , and varying .

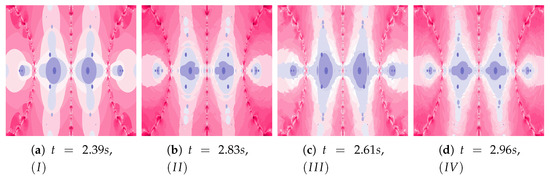

The magnifications of the central part of selected polynomiographs of the Mann iteration and its modifications are presented in Figure 17. Changes in the particle dynamics are visualised by colourful mosaics, which can be used in design to create ornaments.

Figure 17.

Magnification of the central part of selected polynomiographs of the Mann iteration.

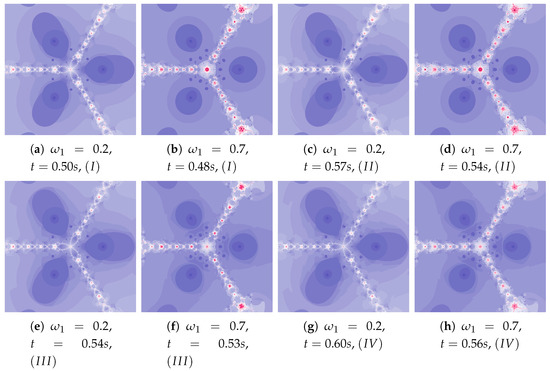

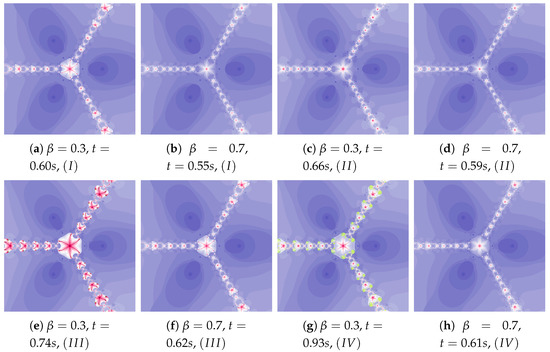

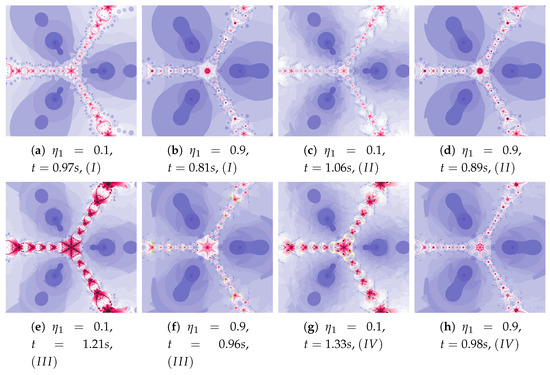

5.3. The Ishikawa and the Das–Debata Iterations

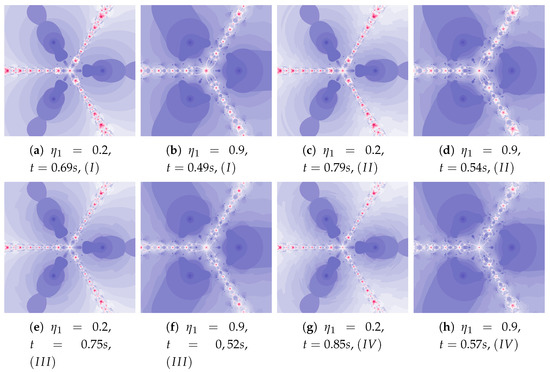

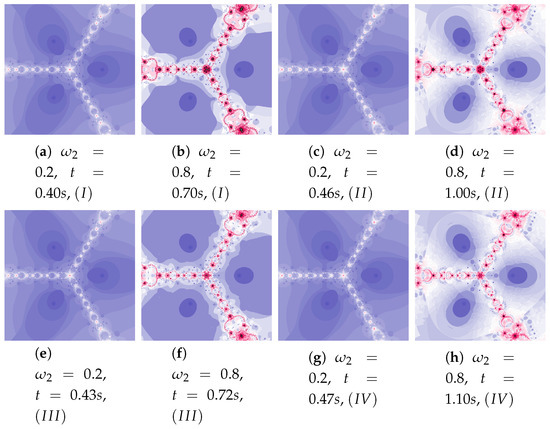

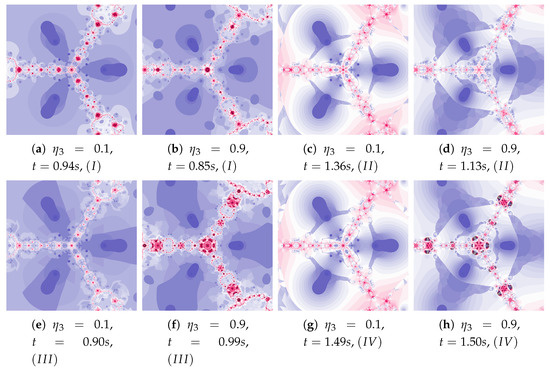

Ishikawa and Das–Debata iterations use a reference point to determine a new particle position. In the presented analysis, base Algorithm I and its three modifications are used: Algorithm using the best position of the reference point, the Algorithm using the best position of the particle, and both these modifications in the Algorithm .

Figure 18 shows polynomiographs of all four algorithms for two values of ( and ). The low value limits the influence of the reference point, so images in Figure 18a and Figure 18c are very similar. Algorithm using the best particle position has a significant influence on the form of the polynomiograph. It results in a strong similarity between images in Figure 18e and Figure 18g. The dynamics shown in Figure 18b is high. The influence of the best reference point is shown in Figure 18d because of the large value of . Changes in dynamics are also visible in Figure 18f created by the algorithm using the best position of particle. Each of the algorithms has a different influence on the dynamics of the particle. These features are combined in Algorithm —it clearly visualises the image in Figure 18h.

Figure 18.

Polynomiographs of the Ishikawa iteration for , , , , and varying .

A low value of reduces the impact of the transformation operator on the dynamics of reference point creation. It has the same effect as the low value of ; for this reason Figure 19a is similar to Figure 19c,e–g. Increasing the coefficient produces different features in the images in Figure 19b,d,f,h; the image in Figure 19h combines the features of the images in Figure 19d,f.

Figure 19.

Polynomiographs of the Ishikawa iteration for , , , , and varying .

The dynamics presented by the polynomiographs depend on the dynamics of the creation of the reference point and its processing. The parameters and are responsible for the dynamics of the reference point creation, and the parameters and are responsible for its processing. Changing these parameters makes it possible to obtain the effects described above.

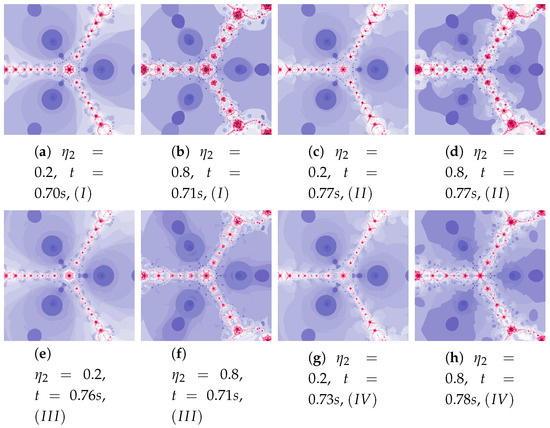

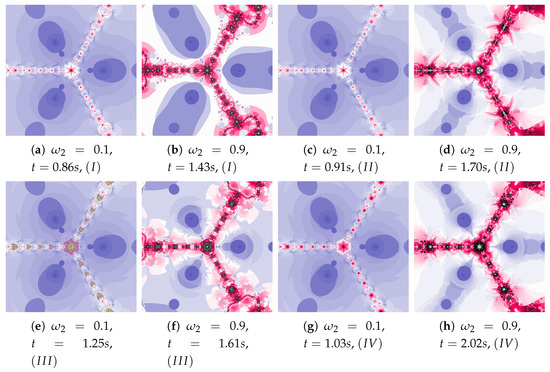

Polynomiographs of the Das–Debata iteration for varying are presented in Figure 20 and for varying in Figure 21. The polynomiographs created by Algorithms I and are similar, which means that the influence of choosing the best reference point is small for the selected parameters of the algorithm. The best position of the particle has a significant effect on the form of the polynomiographs created by Algorithm ; these polynomiographs have many features in common with the polynomiographs created by Algorithm (the influence of Algorithm is small).

Figure 20.

Polynomiographs of the Das–Debata iteration for , , , , and varying .

Figure 21.

Polynomiographs of the Das–Debata iteration for , , , , and varying .

The parameters and influence the processing of the reference point. Figure 22 shows polynomiographs for varying , and Figure 23 for varying . For small values of , the polynomiographs presented in Figure 22a,c,e,g are similar, which means that all the algorithms operate similarly. A significant increase in the value of reveals a significant influence of the reference point on the polynomiograph’s creation: Algorithms and create similar polynomiographs.

Figure 22.

Polynomiographs of the Das–Debata iteration for , , , , and varying .

Figure 23.

Polynomiographs of the Das–Debata iteration for , , , , and varying .

All the algorithms make characteristic changes in the polynomiographs shown in Figure 23. For a high value of , the polynomiographs created by Algorithms and are similar (see Figure 23d).

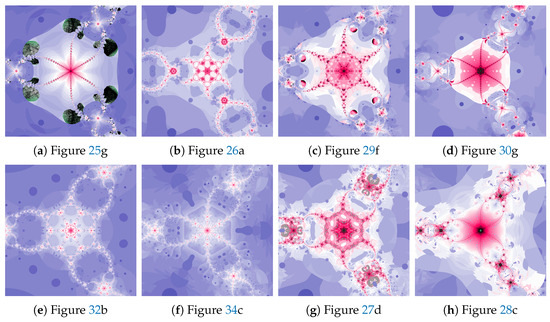

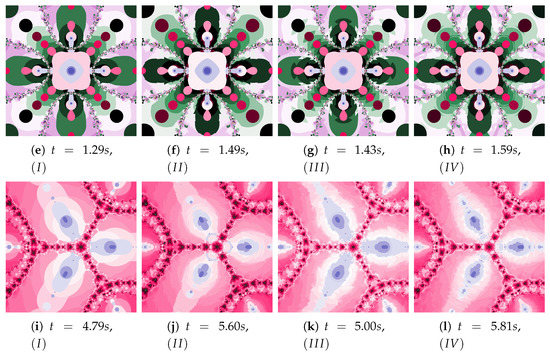

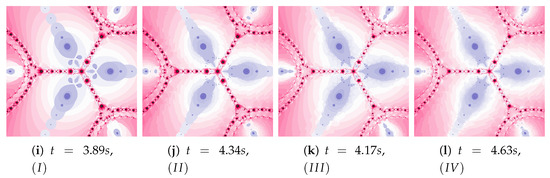

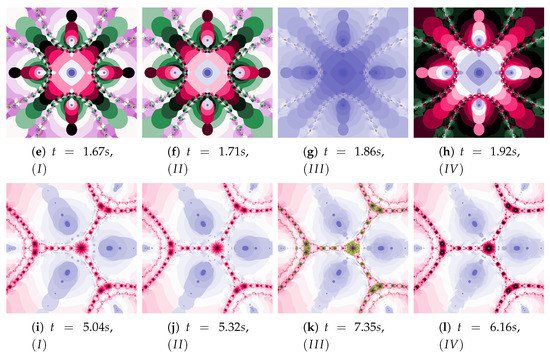

The magnifications of the central part of selected polynomiographs obtained with the Ishikawa and the Das–Debata iterations are presented in Figure 24. As in the previous examples, these images have artistic features and can be used, for instance, in ornamentation.

Figure 24.

Magnification of the central part of selected polynomiographs of Ishikawa and Das–Debata iterations.

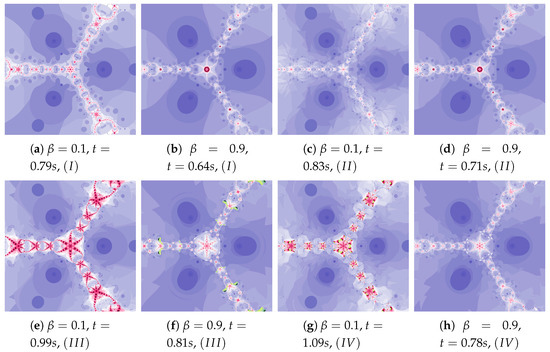

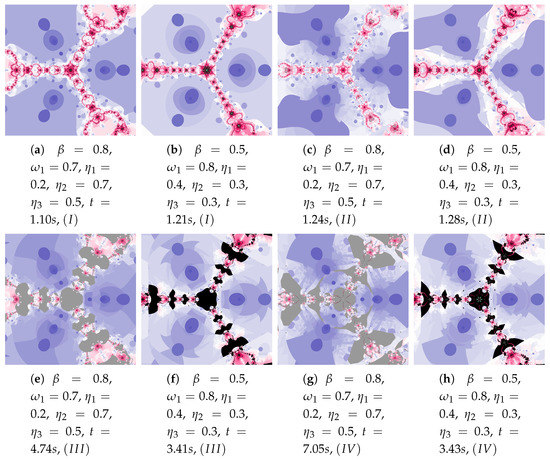

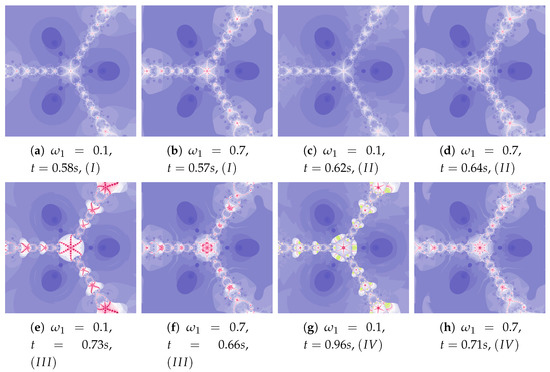

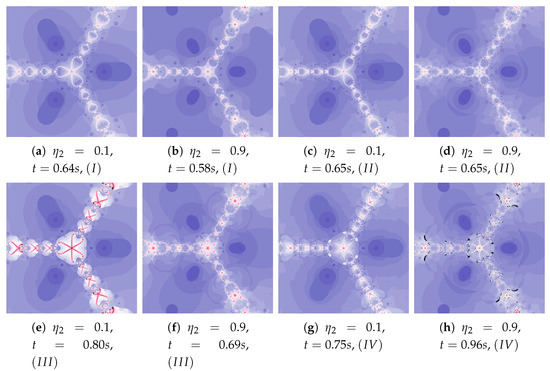

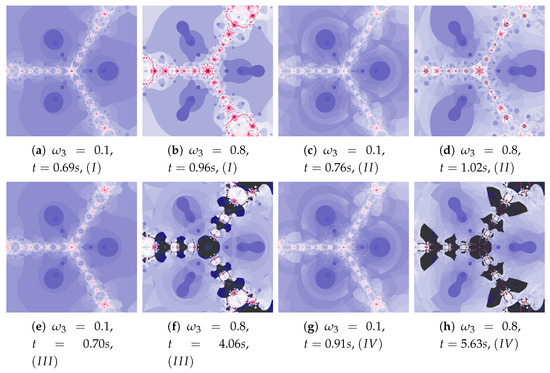

5.4. The Agarwal and the Khan–Cho–Abbas Iterations

The generalised Agarwal iteration introduces three operators whose parameters can be specified independently. This allows for a wide range of changes in the particle’s dynamics. In addition, the and parameters make it possible to determine the impact of these operators on the algorithm’s operation. Figure 25 presents polynomiographs of the Agarwal iteration for varying , and Figure 26 for varying . An incorrect selection of these parameters can prevent the algorithm from reaching a solution within the given number of iterations, which extends the algorithm’s operation time (see Figure 25e). Nevertheless, the increased particle dynamics give the opportunity to obtain interesting patterns. Poorly selected particle dynamics can significantly increase the creation time of a polynomiograph. This case is visible in the polynomiographs in Figure 27 and Figure 28 for Algorithms and .
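The effect of poorly chosen parameters on the iteration count can be illustrated with a small sketch. It uses a commonly cited three-operator form of the generalised Agarwal step and the two-operator Khan–Cho–Abbas step; the assignment of operators to positions, the relaxed Newton operator with its factor `a`, the polynomial p(z) = z^3 - 1, and all parameter values are assumptions made for illustration only, not the settings used in the figures.

```python
from typing import Callable, Tuple

Operator = Callable[[complex], complex]


def relaxed_newton(a: float) -> Operator:
    """Newton operator for the assumed p(z) = z**3 - 1, scaled by a hypothetical factor a."""
    def T(z: complex) -> complex:
        return z - a * (z**3 - 1) / (3 * z**2)
    return T


def generalised_agarwal_step(z: complex, T1: Operator, T2: Operator, T3: Operator,
                             alpha: float, beta: float) -> complex:
    """Three-operator Agarwal-type step (operator placement is an assumption)."""
    v = (1 - beta) * z + beta * T3(z)           # reference point
    return (1 - alpha) * T1(z) + alpha * T2(v)  # combine T1(z) with T2(v)


def khan_cho_abbas_step(z: complex, T1: Operator, T2: Operator,
                        alpha: float, beta: float) -> complex:
    """Khan-Cho-Abbas step: the reference point already mixes both operators."""
    v = (1 - beta) * T1(z) + beta * T2(z)
    return (1 - alpha) * T1(z) + alpha * T2(v)


def run(step: Callable[[complex], complex], z0: complex,
        max_iter: int = 30, eps: float = 1e-9) -> Tuple[complex, int]:
    """Iterate until convergence or until the iteration cap is reached."""
    z = z0
    for n in range(max_iter):
        z_next = step(z)
        if abs(z_next - z) < eps:
            return z_next, n + 1
        z = z_next
    return z, max_iter  # cap reached: no solution within max_iter iterations


def agarwal_run(a: float, z0: complex = complex(2, 0)) -> Tuple[complex, int]:
    """Run the generalised Agarwal step with the same relaxed operator in all three slots."""
    T = relaxed_newton(a)
    return run(lambda z: generalised_agarwal_step(z, T, T, T, 0.5, 0.5), z0)


fast_root, fast_n = agarwal_run(1.0)   # full Newton steps
slow_root, slow_n = agarwal_run(0.05)  # heavily damped steps exhaust the cap
```

With these illustrative values, the heavily damped operator uses all available iterations without meeting the stopping criterion, which corresponds to the longer operation time described above.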

Figure 25.

Polynomiographs of the Agarwal iteration for , , , , , , and varying .

Figure 26.

Polynomiographs of the Agarwal iteration for , , , , , , and varying .

Figure 27.

Polynomiographs of the generalised Agarwal iteration for , , , .

Figure 28.

Polynomiographs of the generalised Agarwal iteration for , , , , , .

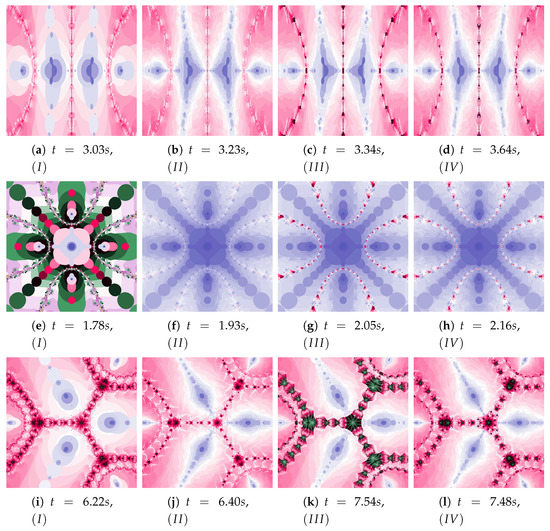

Depending on the choice of parameters, cases that have already been discussed for the previous iterations can be observed. A strong similarity between the polynomiographs created by Algorithms I and , as well as and , is observed in Figure 29 and Figure 30 (). When the particle dynamics are low, the polynomiographs are similar for all algorithms, as observed in Figure 31. The strong similarity of polynomiographs is shown in Figure 32 and Figure 33 for Algorithms and , which indicates a strong influence of the reference point on the polynomiographs’ creation. Finally, a strong similarity of polynomiographs is observed in Figure 34 for Algorithms I and , which results from the strong influence of the best particle position on the polynomiographs’ creation.

Figure 29.

Polynomiographs of the generalised Agarwal iteration for , , , , , , () and varying .

Figure 30.

Polynomiographs of the Khan–Cho–Abbas iteration for , , , , , , and varying .

Figure 31.

Polynomiographs of the generalised Agarwal iteration for , , , , , , () and varying .

Figure 32.

Polynomiographs of the Khan–Cho–Abbas iteration for , , , , , , and varying .

Figure 33.

Polynomiographs of the generalised Agarwal iteration for , , , , , , and varying .

Figure 34.

Polynomiographs of the generalised Agarwal iteration for , , , , , , and varying .

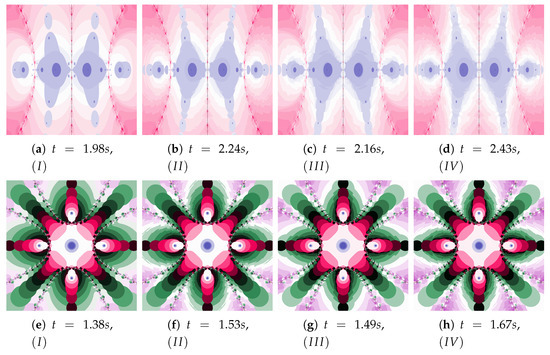

The magnifications of the central part of selected polynomiographs of Agarwal and Khan–Cho–Abbas iterations are presented in Figure 35. High dynamics can create interesting patterns, especially for the Agarwal iteration. These patterns can be an artistic inspiration.

Figure 35.

Magnification of the central part of selected polynomiographs of Agarwal and Khan–Cho–Abbas iterations.

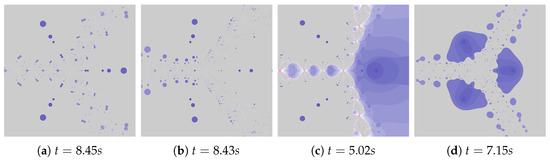

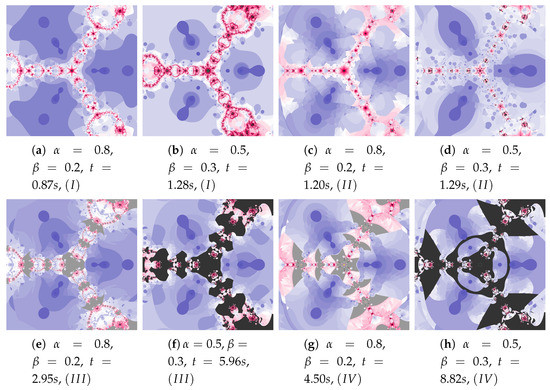

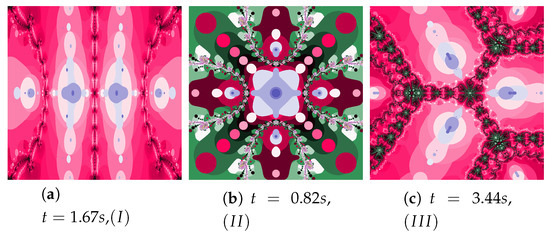

5.5. Algorithms Operation in the Selected Test Environments

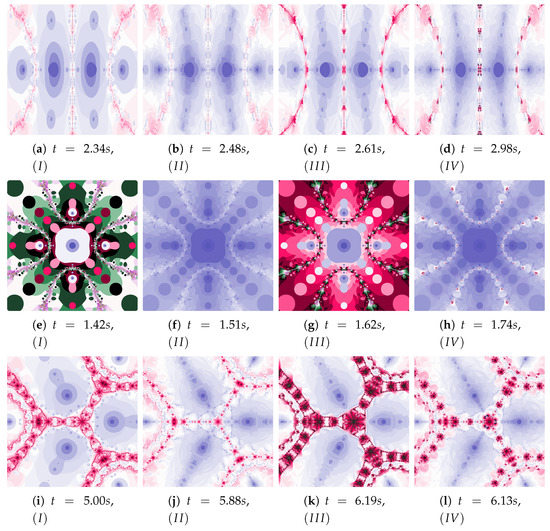

The discussion in the previous sections presented in detail the impact of individual factors on the operation of the proposed algorithm. The authors want to supplement this discussion with a presentation of the algorithms’ operation for the selected test environments, which can be found in the literature and were presented at the beginning of Section 5. Figures 36–42 present polynomiographs for three test environments and the iterations discussed in the article. They make it possible to generalise the observations discussed in the previous sections.

Polynomiographs produced by the base algorithm are presented in Figure 36 for the Picard iteration, in Figure 37a,c,e for the Mann iteration, and in sub-figures (a), (e), (i) of Figures 38–42. These polynomiographs are characterised by smooth boundaries between areas with different particle dynamics. The modifications of the algorithm that select the best position of the reference point and of the particle blur and fray the smooth edges of the areas visible in the polynomiographs obtained for the base algorithm. The selection of the best position of the reference point, implemented in Algorithm , results in a significant reduction of small elements (details) of the patterns visible in the polynomiograph. This is a significant limitation of particle dynamics, which can be seen in the (b) sub-figures of Figures 38–42. Figure 37b,d,f and the (c) sub-figures of Figures 38–42 present polynomiographs of Algorithm ( and ), which selects the best particle position. The operation of this algorithm results in a much better presentation of small details in the polynomiograph; these are the areas in which the particle tends towards a worse position.

Polynomiographs obtained by using the algorithm denoted by , i.e., with the selection of the best reference point and the best particle position, are shown in the (d) sub-figures of Figures 38–42. They combine the features of the polynomiographs from sub-figures (b) and (c) of Figures 38–42. However, the selection of the best particle position has the dominant influence on the polynomiograph’s features. The changes in particle dynamics resulting from the applied algorithm can also be analysed through the simulation time. The best position of the reference point has a significant impact on the reduction of particle dynamics. It remains to complete the observations regarding the test environments. In environments , , the particle obtains similar ranges of dynamics, whereas for the environment the particle achieves larger dynamics ranges.
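As a rough illustration of how such an image and its cost can be produced, the sketch below builds a small grid of iteration counts for the Picard iteration with the plain Newton operator. It assumes iteration-count colouring, the polynomial p(z) = z^3 - 1, the area [-2, 2]^2, and a coarse grid; none of these are the test environments or settings used in the article, and the mean iteration count is only a hypothetical proxy for simulation time.

```python
import cmath
from typing import List, Tuple

# Roots of the assumed polynomial p(z) = z**3 - 1.
ROOTS = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]


def newton_iterations(z: complex, max_iter: int = 30,
                      eps: float = 1e-6) -> Tuple[int, int]:
    """Return (iterations used, index of the reached root or -1 if none)."""
    for n in range(max_iter):
        dp = 3 * z**2
        if dp == 0:  # Newton step undefined at a critical point
            return max_iter, -1
        z_next = z - (z**3 - 1) / dp
        if abs(z_next - z) < eps:
            idx = min(range(3), key=lambda k: abs(z_next - ROOTS[k]))
            return n + 1, idx
        z = z_next
    return max_iter, -1


def polynomiograph(size: int = 21, half_width: float = 2.0,
                   max_iter: int = 30) -> List[List[Tuple[int, int]]]:
    """Evaluate every grid point of [-half_width, half_width]^2 as a starting point."""
    step = 2 * half_width / (size - 1)
    grid = []
    for row in range(size):
        y = half_width - row * step
        grid.append([newton_iterations(complex(-half_width + col * step, y), max_iter)
                     for col in range(size)])
    return grid


def mean_iterations(grid: List[List[Tuple[int, int]]]) -> float:
    """Average iteration count over the grid (a crude stand-in for simulation time)."""
    counts = [n for row in grid for n, _ in row]
    return sum(counts) / len(counts)


grid = polynomiograph()
average = mean_iterations(grid)
```

Mapping the first element of each grid entry to a colour gives an iteration-count image, while the second element distinguishes the basins of the three roots.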

Figure 36.

Polynomiographs of the Picard iteration for the polynomiograph settings from Figure 4d.

Figure 37.

Polynomiographs of the Mann iteration for the polynomiograph settings from Figure 15b.

Figure 38.

Polynomiographs of the Ishikawa iteration for the polynomiograph settings from Figure 18b.

Figure 39.

Polynomiographs of the Das–Debata iteration for the polynomiograph settings from Figure 21a.

Figure 40.

Polynomiographs of the Agarwal iteration for the polynomiograph settings from Figure 26a.

Figure 41.

Polynomiographs of the generalised Agarwal iteration for the polynomiograph settings from Figure 29a.

Figure 42.

Polynomiographs of the Khan–Cho–Abbas iteration for the polynomiograph settings from Figure 30a.

6. Conclusions

The paper proposes a modification of Newton’s method and introduces algorithms based on it. Similar approaches are present in many optimisation algorithms that require parameter tuning, e.g., the PSO algorithm. The discussion presented in the article shows the impact of the inertia weight and the acceleration constant on the operation of the proposed algorithms. Both too large and too small particle dynamics can have an adverse influence on the algorithm’s operation. Polynomiography is a tool illustrating the behaviour of particles. It also creates artistic mosaics and yields images that can be regarded as art.

Author Contributions

All the authors have contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Polak, E. Optimization Algorithms and Consistent Approximations; Springer: New York, NY, USA, 1997.

- Gosciniak, I. Discussion on semi-immune algorithm behaviour based on fractal analysis. Soft Comput. 2017, 21, 3945–3956.

- Weise, T. Global Optimization Algorithms—Theory and Application, 2nd ed. Self-Published. 2009. Available online: http://www.it-weise.de/projects/book.pdf (accessed on 1 May 2020).

- Zhang, W.; Ma, D.; Wei, J.; Liang, H. A Parameter Selection Strategy for Particle Swarm Optimization Based on Particle Positions. Expert Syst. Appl. 2014, 41, 3576–3584.

- Van den Bergh, F.; Engelbrecht, A.P. A Convergence Proof for the Particle Swarm Optimiser. Fundam. Inf. 2010, 105, 341–374.

- Freitas, D.; Lopes, L.; Morgado-Dias, F. Particle Swarm Optimisation: A Historical Review Up to the Current Developments. Entropy 2020, 22, 362.

- Cheney, W.; Kincaid, D. Numerical Mathematics and Computing, 6th ed.; Brooks/Cole: Pacific Grove, CA, USA, 2007.

- Goh, B.; Leong, W.; Siri, Z. Partial Newton Methods for a System of Equations. Numer. Algebr. Control Optim. 2013, 3, 463–469.

- Saheya, B.; Chen, G.Q.; Sui, Y.K.; Wu, C.Y. A New Newton-like Method for Solving Nonlinear Equations. SpringerPlus 2016, 5, 1269.

- Sharma, J.; Sharma, R.; Bahl, A. An Improved Newton-Traub Composition for Solving Systems of Nonlinear Equations. Appl. Math. Comput. 2016, 290, 98–110.

- Abbasbandy, S.; Bakhtiari, P.; Cordero, A.; Torregrosa, J.; Lotfi, T. New Efficient Methods for Solving Nonlinear Systems of Equations with Arbitrary Even Order. Appl. Math. Comput. 2016, 287–288, 94–103.

- Xiao, X.Y.; Yin, H.W. Accelerating the Convergence Speed of Iterative Methods for Solving Nonlinear Systems. Appl. Math. Comput. 2018, 333, 8–19.

- Alqahtani, H.; Behl, R.; Kansal, M. Higher-Order Iteration Schemes for Solving Nonlinear Systems of Equations. Mathematics 2019, 7, 937.

- Awwal, A.; Wang, L.; Kumam, P.; Mohammad, H. A Two-Step Spectral Gradient Projection Method for System of Nonlinear Monotone Equations and Image Deblurring Problems. Symmetry 2020, 12, 874.

- Wang, Q.; Zeng, J.; Jie, J. Modified Particle Swarm Optimization for Solving Systems of Equations. In Advanced Intelligent Computing Theories and Applications; Volume 2: Communications in Computer and Information Science; Huang, D., Heutte, L., Loog, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 361–369.

- Ouyang, A.; Zhou, Y.; Luo, Q. Hybrid Particle Swarm Optimization Algorithm for Solving Systems of Nonlinear Equations. In Proceedings of the 2009 IEEE International Conference on Granular Computing, Nanchang, China, 17–19 August 2009; pp. 460–465.

- Jaberipour, M.; Khorram, E.; Karimi, B. Particle Swarm Algorithm for Solving Systems of Nonlinear Equations. Comput. Math. Appl. 2011, 62, 566–576.

- Li, Y.; Wei, Y.; Chu, Y. Research on Solving Systems of Nonlinear Equations Based on Improved PSO. Math. Probl. Eng. 2015, 2015, 727218.

- Ibrahim, A.; Tawhid, M. A Hybridization of Cuckoo Search and Particle Swarm Optimization for Solving Nonlinear Systems. Evol. Intell. 2019, 12, 541–561.

- Ibrahim, A.; Tawhid, M. A hybridization of Differential Evolution and Monarch Butterfly Optimization for Solving Systems of Nonlinear Equations. J. Comput. Des. Eng. 2019, 6, 354–367.

- Liao, Z.; Gong, W.; Wang, L.; Yan, X.; Hu, C. A Decomposition-based Differential Evolution with Reinitialization for Nonlinear Equations Systems. Knowl. Based Syst. 2020, 191, 105312.

- Kamsyakawuni, A.; Sari, M.; Riski, A.; Santoso, K. Metaheuristic Algorithm Approach to Solve Non-linear Equations System with Complex Roots. J. Phys. Conf. Ser. 2020, 1494, 012001.

- Broer, H.; Takens, F. Dynamical Systems and Chaos; Springer: New York, NY, USA, 2011.

- Boeing, G. Visual Analysis of Nonlinear Dynamical Systems: Chaos, Fractals, Self-Similarity and the Limits of Prediction. Systems 2016, 4, 37.

- Kalantari, B. Polynomial Root-Finding and Polynomiography; World Scientific: Singapore, 2009.

- Gościniak, I.; Gdawiec, K. Control of Dynamics of the Modified Newton-Raphson Algorithm. Commun. Nonlinear Sci. Numer. Simul. 2019, 67, 76–99.

- Ardelean, G. A Comparison Between Iterative Methods by Using the Basins of Attraction. Appl. Math. Comput. 2011, 218, 88–95.

- Petković, I.; Rančić, L. Computational Geometry as a Tool for Studying Root-finding Methods. Filomat 2019, 33, 1019–1027.

- Chun, C.; Neta, B. Comparison of Several Families of Optimal Eighth Order Methods. Appl. Math. Comput. 2016, 274, 762–773.

- Chun, C.; Neta, B. Comparative Study of Methods of Various Orders for Finding Repeated Roots of Nonlinear Equations. J. Comput. Appl. Math. 2018, 340, 11–42.

- Kalantari, B. Polynomiography: From the Fundamental Theorem of Algebra to Art. Leonardo 2005, 38, 233–238.

- Gościniak, I.; Gdawiec, K. One More Look on Visualization of Operation of a Root-finding Algorithm. Soft Comput. 2020, in press.

- Nammanee, K.; Noor, M.; Suantai, S. Convergence Criteria of Modified Noor Iterations with Errors for Asymptotically Nonexpansive Mappings. J. Math. Anal. Appl. 2006, 314, 320–334.

- Gilbert, W. Generalizations of Newton’s Method. Fractals 2001, 9, 251–262.

- Cordero, A.; Torregrosa, J. Variants of Newton’s Method using Fifth-order Quadrature Formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Magreñán, A.; Argyros, I. A Contemporary Study of Iterative Methods: Convergence, Dynamics and Applications; Academic Press: San Diego, CA, USA, 2018.

- Picard, E. Mémoire sur la théorie des équations aux dérivées partielles et la méthode des approximations successives. J. De Mathématiques Pures Et Appliquées 1890, 6, 145–210.

- Mann, W. Mean Value Methods in Iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

- Ishikawa, S. Fixed Points by a New Iteration Method. Proc. Am. Math. Soc. 1974, 44, 147–150.

- Agarwal, R.; O’Regan, D.; Sahu, D. Iterative Construction of Fixed Points of Nearly Asymptotically Nonexpansive Mappings. J. Nonlinear Convex Anal. 2007, 8, 61–79.

- Gdawiec, K.; Kotarski, W. Polynomiography for the Polynomial Infinity Norm via Kalantari’s Formula and Nonstandard Iterations. Appl. Math. Comput. 2017, 307, 17–30.

- Das, G.; Debata, J. Fixed Points of Quasinonexpansive Mappings. Indian J. Pure Appl. Math. 1986, 17, 1263–1269.

- Khan, S.; Cho, Y.; Abbas, M. Convergence to Common Fixed Points by a Modified Iteration Process. J. Appl. Math. Comput. 2011, 35, 607–616.

- Gdawiec, K. Fractal Patterns from the Dynamics of Combined Polynomial Root Finding Methods. Nonlinear Dyn. 2017, 90, 2457–2479.

- Su, Y.; Qin, X. Strong Convergence of Modified Noor Iterations. Int. J. Math. Math. Sci. 2006, 2006, 21073.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).