Particle Swarm Optimisation: A Historical Review Up to the Current Developments

Abstract

1. Introduction

2. Particle Swarm Optimisation

The Gbest and Lbest Models
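
In the gbest model every particle is attracted to the single best position found by the whole swarm. In the lbest model each particle only uses the best position within its own neighbourhood. The sketch below shows that distinction. It assumes minimisation and, for the lbest case, a ring neighbourhood of `radius` particles on each side. The function name and parameters are illustrative and are not taken from the review.

```python
def neighbourhood_best(pbest, pbest_fit, model="gbest", radius=1):
    """Return, for each particle i, the personal-best position it is attracted to.

    pbest:     list of s personal-best positions (each a list of d floats)
    pbest_fit: list of s personal-best fitness values (lower is better)
    model:     "gbest" (whole swarm) or "lbest" (ring of +/- radius neighbours)
    """
    s = len(pbest_fit)
    if model == "gbest":
        # index of the global best particle; every particle shares this one target
        g = min(range(s), key=lambda j: pbest_fit[j])
        return [list(pbest[g]) for _ in range(s)]
    targets = []
    for i in range(s):
        # ring topology: particle i sees itself and `radius` neighbours on each side
        idx = [(i + k) % s for k in range(-radius, radius + 1)]
        best = min(idx, key=lambda j: pbest_fit[j])
        targets.append(list(pbest[best]))
    return targets
```

Because the ring neighbourhoods overlap, a good position still propagates through the swarm in the lbest model, only more slowly than in gbest.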

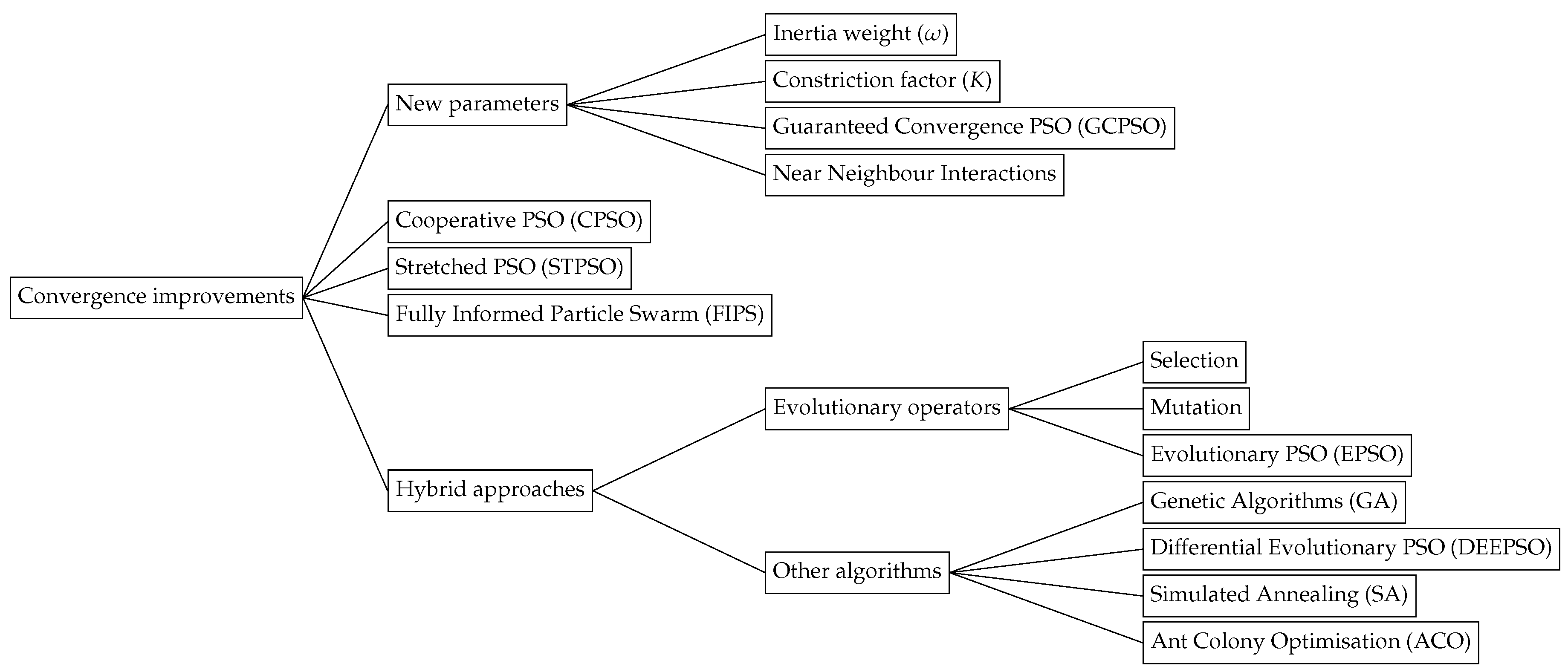

3. Modifications to the Particle Swarm Optimisation

3.1. Algorithm Convergence Improvements

3.1.1. The Inertia Weight Parameter
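
The inertia weight scales the previous velocity in the velocity update `v = w*v + c1*r1*(pbest - x) + c2*r2*(gbest - x)`, which is followed by `x = x + v`. The sketch below assumes a linearly decreasing schedule from 0.9 to 0.4 and c1 = c2 = 2.0. These defaults and the function names are assumptions chosen for illustration.

```python
import random

def linear_inertia(t, t_max, w_start=0.9, w_end=0.4):
    """Inertia weight decreasing linearly from w_start (t = 0) to w_end (t = t_max).
    The 0.9 -> 0.4 range is an assumed, commonly used choice."""
    return w_start - (w_start - w_end) * t / t_max

def update_particle(x, v, pbest, gbest, w, c1=2.0, c2=2.0, rng=random):
    """One inertia-weight PSO step for a single particle (all vectors are lists).
    c1 and c2 are assumed defaults; rng only needs a random() method."""
    new_v, new_x = [], []
    for xd, vd, pd, gd in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # fresh uniform numbers in [0, 1) per dimension
        vd = w * vd + c1 * r1 * (pd - xd) + c2 * r2 * (gd - xd)
        new_v.append(vd)
        new_x.append(xd + vd)  # position moves by the new velocity
    return new_x, new_v
```

A large early weight favours exploration. As `w` shrinks, the previous velocity contributes less and the swarm contracts around the best positions found.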

3.1.2. The Constriction Factor
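
Clerc and Kennedy's constriction factor multiplies the whole velocity update, not only the previous velocity: χ = 2κ / |2 − φ − sqrt(φ² − 4φ)|, with φ = c1 + c2 > 4. The sketch below assumes κ = 1 and the commonly used c1 = c2 = 2.05 (φ = 4.1), which gives χ ≈ 0.7298. The random numbers are passed in explicitly so the function stays deterministic. The function names are illustrative.

```python
import math

def constriction_factor(c1=2.05, c2=2.05, kappa=1.0):
    """Clerc-Kennedy constriction coefficient chi for phi = c1 + c2 > 4."""
    phi = c1 + c2
    if phi <= 4:
        raise ValueError("constriction requires phi = c1 + c2 > 4")
    return 2.0 * kappa / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))

def constricted_velocity(x, v, pbest, gbest, r1, r2, c1=2.05, c2=2.05):
    """New velocity chi * (v + c1*r1*(pbest - x) + c2*r2*(gbest - x)), per dimension.
    r1 and r2 are lists of uniform random numbers supplied by the caller."""
    chi = constriction_factor(c1, c2)
    return [chi * (vd + c1 * r1[d] * (pbest[d] - x[d]) + c2 * r2[d] * (gbest[d] - x[d]))
            for d, vd in enumerate(v)]
```

With these settings the velocity update is algebraically equivalent to an inertia-weight update with w = χ and acceleration coefficients χ·c1 and χ·c2.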

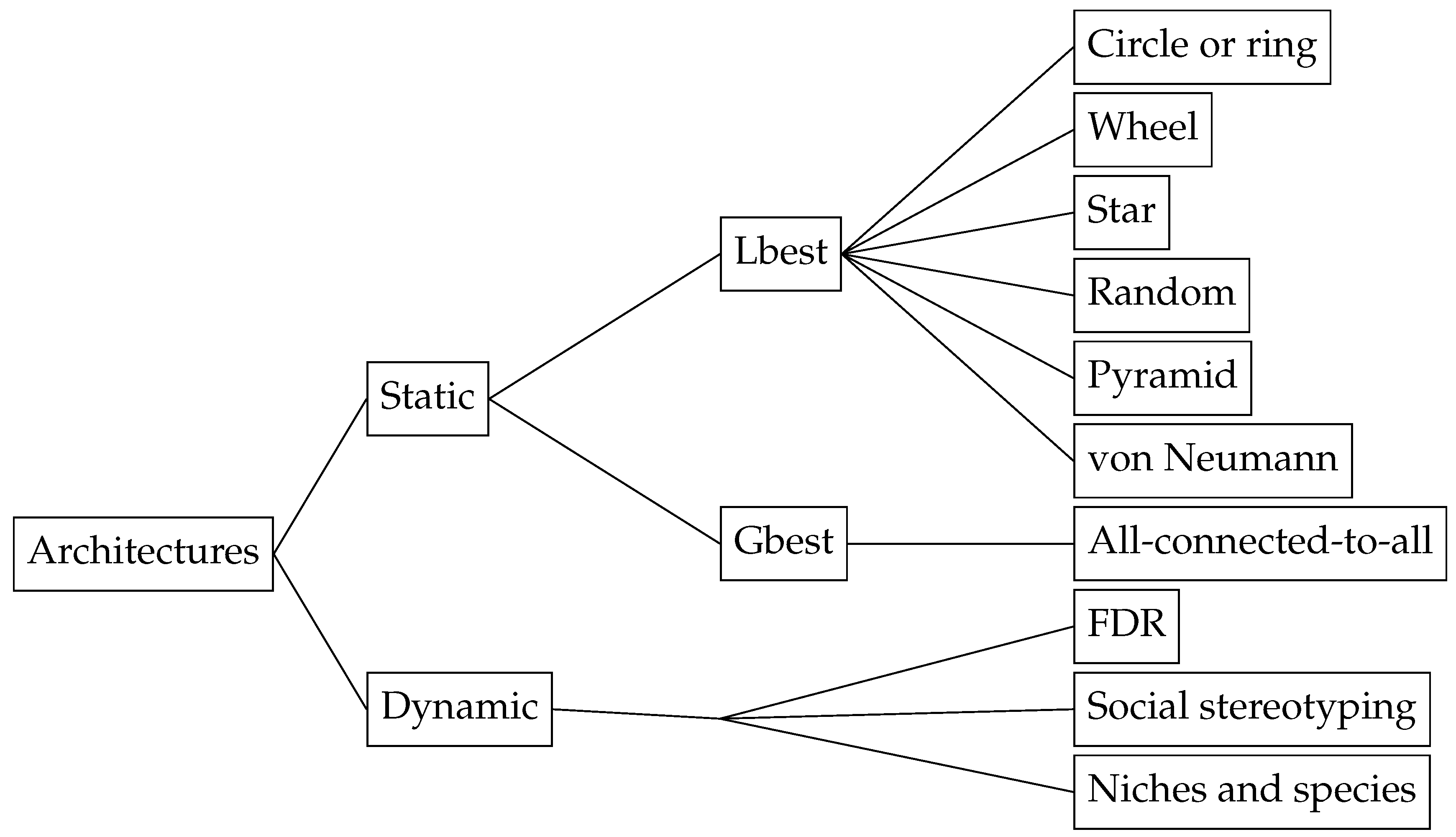

3.2. Neighbourhoods

3.2.1. Static Neighbourhood

3.2.2. Dynamic Neighbourhood

3.2.3. Near Neighbour Interactions

3.3. The Stagnation Problem

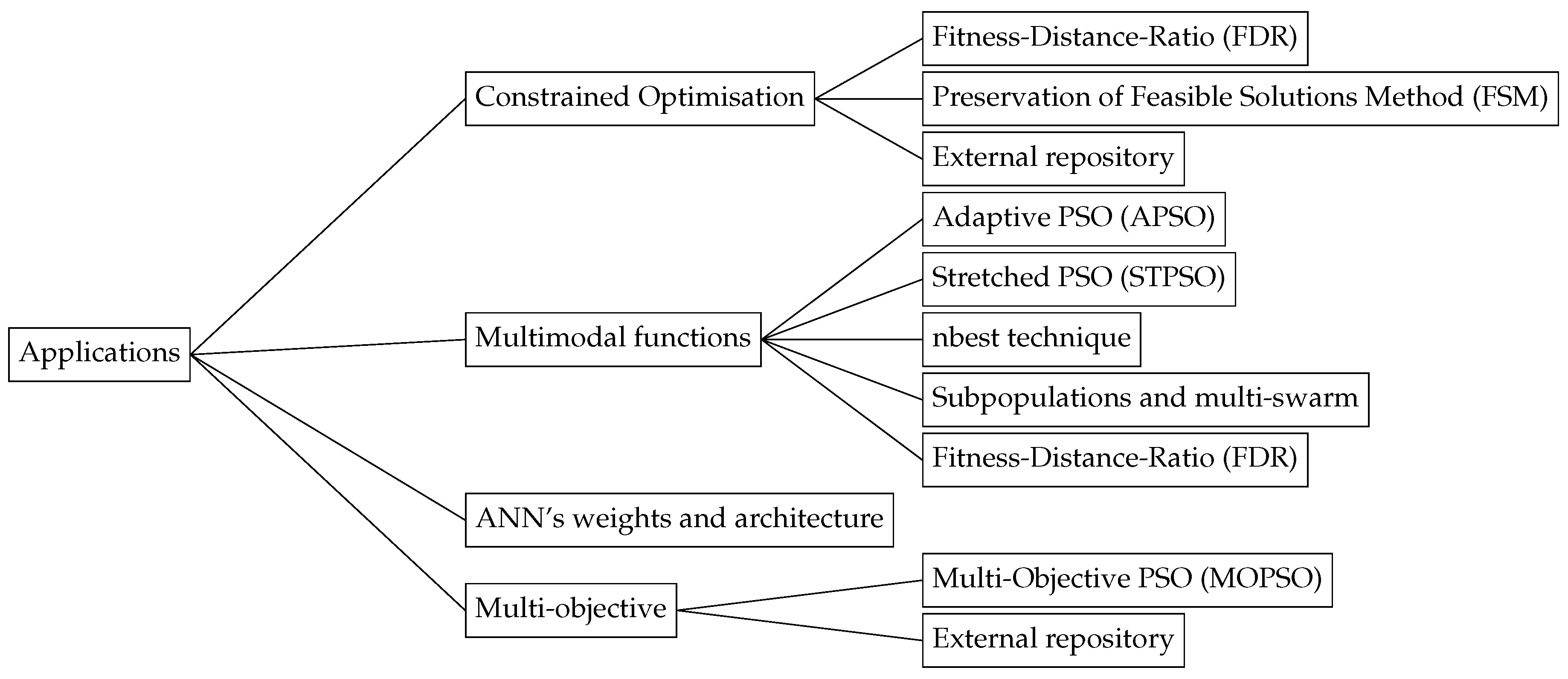

4. Particle Swarm Optimisation Variants

4.1. Cooperative Particle Swarm Optimisation

Two Steps Forward, One Step Back

4.2. Adaptive Particle Swarm Optimisation

4.3. Constrained Optimisation Problems

4.4. Multi-Objective Optimisation

4.5. Multimodal Function Optimisation

4.5.1. Objective Function Stretching

4.5.2. Nbest Technique

4.5.3. Subpopulations and Multi-Swarm

4.6. The Fully Informed Particle Swarm Optimisation

4.7. Parallel Implementations of Particle Swarm Optimisation

5. Connections to Other Artificial Intelligence Tools

5.1. Hybrid Variants of Particle Swarm Optimisation

5.1.1. Evolutionary Computation Operators

5.1.2. PSO with Genetic Algorithms

5.1.3. PSO with Differential Evolution

5.1.4. PSO with Simulated Annealing

5.1.5. PSO with Other Evolutionary Algorithms

5.2. Artificial Neural Networks with Particle Swarm Optimisation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| PSO | Particle Swarm Optimisation |
| GA | Genetic Algorithms |
| ANN | Artificial Neural Network |
| FSM | Preservation of Feasible Solutions Method |
| GCPSO | Guaranteed Convergence PSO |
| FIPS | Fully Informed Particle Swarm |
| STPSO | Stretched PSO |
| APSO | Adaptive PSO |
| DE | Differential Evolution |
| EA | Evolutionary Algorithms |
| SA | Simulated Annealing |
| PPSO | Parallelized PSO |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| COP | Constrained Optimisation Problem |
| FDR | Fitness-Distance-Ratio |
| MPPSO | Multi-Phase PSO |
| SPSO | Standard PSO |
| CPSO | Cooperative PSO |
| MOPSO | Multi-Objective PSO |
| SCD | Special Crowding Distance |
| EPSO | Evolutionary PSO |
| DEEPSO | Differential Evolutionary PSO |
| ACO | Ant Colony Optimisation |
| DNSPSO | Diversity Enhanced PSO with Neighborhood Search |
| ABC | Artificial Bee Colony |

Mathematical notation

| Symbol | Description |
| --- | --- |
| D | Mean distance of each particle to other particles |
|  | Penalty function to be minimised or maximised |
|  | Function stretching for multimodal function optimisation |
|  | Penalty factor in a penalty function |
|  | Constriction factor |
|  | Neighbourhood of the particle i |
|  | Cognitive uniformly distributed random vector used to compute the particle’s velocity |
|  | Social uniformly distributed random vector used to compute the particle’s velocity |
| S | Search space, defined by the domain of the function to be optimised, that contains all the feasible solutions for the problem |
|  | Diagonal matrix whose diagonal values are within the range of |
|  | Absolute difference between the last and the current best fitness value, or the algorithm accuracy |
|  | Power of a penalty function |
|  | Position of the best particle in the swarm or in the neighbourhood (target particle) |
|  | Inertia weight parameter used to compute the velocity of each particle |
|  | Diagonal matrix that represents the architecture of the swarm |
|  | Scale parameter of Cauchy mutation |
|  | Index of the global best particle in the swarm |
|  | Multi-stage assignment function in a penalty function |
|  | Cognitive real acceleration coefficient used to compute the particle’s velocity |
|  | Social real acceleration coefficient used to compute the particle’s velocity |
|  | Deviation real acceleration coefficient used to compute the particle’s velocity |
|  | Prior best position that maximises the FDR measure |
|  | Particle’s velocity |
|  | Position of the centroid of the group j |
|  | Global best position of a particle in the swarm |
|  | Personal best position of particle i |
|  | Position vector of a solution found in the search space |
|  | Upper limit of the dimension d in the search space |
|  | Lower limit of the dimension d in the search space |
|  | Set of feasible solutions that forms the Pareto front |
| d | Number of dimensions of the search space |
|  | Evolutionary factor used in the APSO |
|  | Objective function to be minimised or maximised |
| g | Set of inequality function constraints |
| h | Set of equality function constraints |
|  | Dynamic modified penalty value in a penalty function |
| l | Number of particles in the swarm or in the neighbourhood |
| m | Number of inequality constraints |
| n | Non-linear modulation index |
| p | Number of equality constraints |
|  | Relative violated function of the constraints in a penalty function |
| s | Number of particles in the swarm |
| t | Current iteration number |
|  | Parameter, in the form of a diagonal matrix, to add variability to the best position in the swarm |

References

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the 6th International Symposium on Micro Machine and Human Science (MHS), Nagoya, Japan, 4–6 October 1995; pp. 39–43.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the International Conference on Neural Networks (ICNN), Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Bonyadi, M.R.; Michalewicz, Z. Particle swarm optimization for single objective continuous space problems: A review. Evol. Comput. 2017, 25, 1–54.

- Løvbjerg, M.; Rasmussen, T.K.; Krink, T. Hybrid particle swarm optimiser with breeding and subpopulations. In Proceedings of the 3rd Annual Conference on Genetic and Evolutionary Computation (GECCO), San Francisco, CA, USA, 7–11 July 2001; Volume 24, pp. 469–476.

- Kennedy, J. Small worlds and mega-minds: Effects of neighborhood topology on particle swarm performance. In Proceedings of the Congress on Evolutionary Computation (CEC), Washington, DC, USA, 6–9 July 1999; Volume 3, pp. 1931–1938.

- Clerc, M. The swarm and the queen: Towards a deterministic and adaptive particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), Washington, DC, USA, 6–9 July 1999; Volume 3, pp. 1951–1957.

- Kennedy, J.; Eberhart, R.C. A discrete binary version of the particle swarm algorithm. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), Orlando, FL, USA, 12–15 October 1997; Volume 5, pp. 4104–4108.

- Rosendo, M.; Pozo, A. A hybrid particle swarm optimization algorithm for combinatorial optimization problems. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

- Rosendo, M.; Pozo, A. Applying a discrete particle swarm optimization algorithm to combinatorial problems. In Proceedings of the 11th Brazilian Symposium on Neural Networks (SBRN), São Paulo, Brazil, 23–28 October 2010; pp. 235–240.

- Junliang, L.; Wei, H.; Huan, S.; Yaxin, L.; Jing, L. Particle swarm algorithm based task scheduling for many-core systems. In Proceedings of the 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 1860–1864.

- Ozcan, E.; Mohan, C.K. Analysis of a simple particle swarm optimization system. In Proceedings of the Intelligent Engineering Systems Through Artificial Neural Networks (ANNIE), St. Louis, MO, USA, 1–4 November 1998; Volume 8, pp. 253–258.

- Ozcan, E.; Mohan, C.K. Particle swarm optimization: Surfing the waves. In Proceedings of the Congress on Evolutionary Computation (CEC), Washington, DC, USA, 6–9 July 1999; Volume 3, pp. 1939–1944.

- Sun, C.; Zeng, J.; Pan, J.; Xue, S.; Jin, Y. A new fitness estimation strategy for particle swarm optimization. Inf. Sci. 2013, 221, 355–370.

- Clerc, M.; Kennedy, J. The particle swarm – Explosion, stability, and convergence in a multidimensional complex space. IEEE Trans. Evol. Comput. 2002, 6, 58–73.

- Shi, Y.; Eberhart, R.C. A modified particle swarm optimizer. In Proceedings of the IEEE World Congress on Computational Intelligence (WCCI), Anchorage, AK, USA, 4–9 May 1998; pp. 69–73.

- Van den Bergh, F. An Analysis of Particle Swarm Optimizers. Ph.D. Thesis, University of Pretoria, Pretoria, South Africa, 2002.

- Sengupta, S.; Basak, S.; Peters, R.A., II. Particle swarm optimization: A survey of historical and recent developments with hybridization perspectives. Mach. Learn. Knowl. Extr. 2018, 1, 10.

- Eberhart, R.C.; Shi, Y. Tracking and optimizing dynamic systems with particle swarms. In Proceedings of the Congress on Evolutionary Computation (CEC), Seoul, Korea, 27–30 May 2001; Volume 1, pp. 94–100.

- Shi, Y.; Eberhart, R.C. Fuzzy adaptive particle swarm optimization. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Seoul, Korea, 27–30 May 2001; Volume 1, pp. 101–106.

- Eberhart, R.C.; Shi, Y. Comparing inertia weights and constriction factors in particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), La Jolla, CA, USA, 16–19 July 2000; Volume 1, pp. 84–88.

- Xin, J.; Chen, G.; Hai, Y. A particle swarm optimizer with multi-stage linearly-decreasing inertia weight. In Proceedings of the International Joint Conference on Computational Sciences and Optimization (CSO), Sanya, China, 24–26 April 2009; Volume 1, pp. 505–508.

- Chatterjee, A.; Siarry, P. Nonlinear inertia weight variation for dynamic adaptation in particle swarm optimization. Comput. Oper. Res. 2006, 33, 859–871.

- Eberhart, R.C.; Shi, Y. Particle swarm optimization: Developments, applications and resources. In Proceedings of the Congress on Evolutionary Computation (CEC), Seoul, Korea, 27–30 May 2001; Volume 1, pp. 81–86.

- Kar, R.; Mandal, D.; Bardhan, S.; Ghoshal, S.P. Optimization of linear phase FIR band pass filter using particle swarm optimization with constriction factor and inertia weight approach. In Proceedings of the IEEE Symposium on Industrial Electronics and Applications (ISIEA), Langkawi, Malaysia, 25–28 September 2011; pp. 326–331.

- Kennedy, J.; Mendes, R. Population structure and particle swarm performance. In Proceedings of the Congress on Evolutionary Computation (CEC), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1671–1676.

- Suganthan, P.N. Particle swarm optimiser with neighbourhood operator. In Proceedings of the Congress on Evolutionary Computation (CEC), Washington, DC, USA, 6–9 July 1999; Volume 3, pp. 1958–1962.

- Kennedy, J. Stereotyping: Improving particle swarm performance with cluster analysis. In Proceedings of the Congress on Evolutionary Computation (CEC), La Jolla, CA, USA, 16–19 July 2000; Volume 2, pp. 1507–1512.

- Veeramachaneni, K.; Peram, T.; Mohan, C.; Osadciw, L.A. Optimization using particle swarms with near neighbor interactions. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO), Chicago, IL, USA, 12–16 July 2003; pp. 110–121.

- Peram, T.; Veeramachaneni, K.; Mohan, C.K. Fitness-distance-ratio based particle swarm optimization. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 174–181.

- Van den Bergh, F.; Engelbrecht, A.P. A new locally convergent particle swarm optimiser. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), Yasmine Hammamet, Tunisia, 6–9 October 2002; Volume 3, pp. 94–99.

- Brits, R.; Engelbrecht, A.P.; van den Bergh, F. A niching particle swarm optimizer. In Proceedings of the 4th Conference on Simulated Evolution and Learning (SEAL), Singapore, 18–22 November 2002; Volume 2, pp. 692–696.

- Higashi, N.; Iba, H. Particle swarm optimization with Gaussian mutation. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 72–79.

- Tang, H.; Yang, X.; Xiong, S. Modified particle swarm algorithm for vehicle routing optimization of smart logistics. In Proceedings of the 2nd International Conference on Measurement, Information and Control (ICMIC), Harbin, China, 16–18 August 2013; Volume 2, pp. 783–787.

- Engelbrecht, A.P. Asynchronous particle swarm optimization with discrete crossover. In Proceedings of the IEEE Symposium on Swarm Intelligence (SIS), Orlando, FL, USA, 9–12 December 2014; pp. 1–8.

- Peer, E.S.; van den Bergh, F.; Engelbrecht, A.P. Using neighbourhoods with the guaranteed convergence PSO. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 235–242.

- Van den Bergh, F.; Engelbrecht, A.P. Cooperative learning in neural networks using particle swarm optimizers. S. Afr. Comput. J. 2000, 26, 84–90.

- Van den Bergh, F.; Engelbrecht, A.P. A cooperative approach to particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 225–239.

- Zhan, Z.; Zhang, J.; Li, Y.; Chung, H.S. Adaptive particle swarm optimization. IEEE Trans. Syst. Man Cybern. 2009, 39, 1362–1381.

- Parsopoulos, K.E.; Vrahatis, M.N. Particle swarm optimization method for constrained optimization problem. In Intelligent Technologies: From Theory to Applications; IOS Press: Amsterdam, The Netherlands, 2002; pp. 214–220. ISBN 978-1-58603-256-2.

- Hu, X.; Eberhart, R. Solving constrained nonlinear optimization problems with particle swarm optimization. In Proceedings of the 6th World Multiconference on Systemics, Cybernetics and Informatics (SCI), Orlando, FL, USA, 14–18 July 2002; Volume 5, pp. 203–206.

- Hu, X.; Eberhart, R.C.; Shi, Y. Engineering optimization with particle swarm. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 53–57.

- Coath, G.; Halgamuge, S.K. A comparison of constraint-handling methods for the application of particle swarm optimization to constrained nonlinear optimization problems. In Proceedings of the Congress on Evolutionary Computation (CEC), Canberra, Australia, 8–12 December 2003; Volume 4, pp. 2419–2425.

- He, S.; Prempain, E.; Wu, Q.H. An improved particle swarm optimizer for mechanical design optimization problems. Eng. Optim. 2004, 36, 585–605.

- Sun, C.L.; Zeng, J.C.; Pan, J.S. An improved vector particle swarm optimization for constrained optimization problems. Inf. Sci. 2011, 181, 1153–1163.

- Hu, X.; Eberhart, R.C. Multiobjective optimization using dynamic neighborhood particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1677–1681.

- Coello Coello, C.A.; Salazar Lechuga, M. MOPSO: A proposal for multiple objective particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1051–1056.

- Coello Coello, C.A.; Toscano Pulido, G.; Salazar Lechuga, M. Handling multiple objectives with particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 256–279.

- Fieldsend, J.E.; Singh, S. A multi-objective algorithm based upon particle swarm optimisation, an efficient data structure and turbulence. In Proceedings of the UK Workshop on Computational Intelligence (UKCI), Birmingham, UK, 2–4 September 2002; pp. 37–44.

- Mostaghim, S.; Teich, J. Strategies for finding good local guides in multi-objective particle swarm optimization (MOPSO). In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 26–33.

- Parsopoulos, K.E.; Plagianakos, V.P.; Magoulas, G.D.; Vrahatis, M.N. Stretching technique for obtaining global minimizers through particle swarm optimization. In Proceedings of the PSO Workshop (PSOW), Indianapolis, IN, USA, 6–7 April 2001; Volume 29, pp. 22–29.

- Parsopoulos, K.E.; Vrahatis, M.N. Modification of the particle swarm optimizer for locating all the global minima. In Proceedings of the International Conference on Artificial Neural Nets and Genetic Algorithms (ICANNGA), Prague, Czech Republic, 22–25 April 2001; pp. 324–327.

- Brits, R.; Engelbrecht, A.P.; van den Bergh, F. Solving systems of unconstrained equations using particle swarm optimization. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), Yasmine Hammamet, Tunisia, 6–9 October 2002; Volume 3, pp. 102–107.

- Parsopoulos, K.E.; Vrahatis, M.N. On the computation of all global minimizers through particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 211–224.

- Blackwell, T.; Branke, J. Multi-swarm optimization in dynamic environments. In Proceedings of the Workshops on Applications of Evolutionary Computation, Coimbra, Portugal, 5–7 April 2004; pp. 489–500.

- Kennedy, J. The particle swarm: Social adaptation of knowledge. In Proceedings of the IEEE International Conference on Evolutionary Computation (ICEC), Indianapolis, IN, USA, 13–16 April 1997; pp. 303–308.

- Schoeman, I.L.; Engelbrecht, A.P. Using vector operations to identify niches for particle swarm optimization. In Proceedings of the IEEE Conference on Cybernetics and Intelligent Systems (CIS), Singapore, 1–3 December 2004; Volume 1, pp. 361–366.

- Schoeman, I.L.; Engelbrecht, A.P. A parallel vector-based particle swarm optimizer. In Proceedings of the 7th International Conference on Adaptive and Natural Computing Algorithms (ICANNGA), Coimbra, Portugal, 21–23 March 2005; pp. 268–271.

- Li, X. A multimodal particle swarm optimizer based on fitness euclidean-distance ratio. In Proceedings of the 9th Annual Conference on Genetic and Evolutionary Computation (GECCO), London, UK, 7–11 July 2007; pp. 78–85.

- Li, J.; Wan, D.; Chi, Z.; Hu, X. An efficient fine-grained parallel particle swarm optimization method based on GPU-acceleration. Int. J. Innov. Comput. Inf. Control. 2007, 3, 1707–1714.

- Li, X. Adaptively choosing neighbourhood bests using species in a particle swarm optimizer for multimodal function optimization. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO), Seattle, WA, USA, 26–30 June 2004; pp. 105–116.

- Parrott, D.; Li, X. A particle swarm model for tracking multiple peaks in a dynamic environment using speciation. In Proceedings of the Congress on Evolutionary Computation (CEC), Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 98–103.

- Parrott, D.; Li, X. Locating and tracking multiple dynamic optima by a particle swarm model using speciation. IEEE Trans. Evol. Comput. 2006, 10, 440–458.

- Li, X. Niching without niching parameters: Particle swarm optimization using a ring topology. IEEE Trans. Evol. Comput. 2010, 14, 150–169.

- Yue, C.; Qu, B.; Liang, J. A multi-objective particle swarm optimizer using ring topology for solving multimodal multi-objective problems. IEEE Trans. Evol. Comput. 2018, 22, 805–817.

- Mendes, R.; Kennedy, J.; Neves, J. The fully informed particle swarm: Simpler, maybe better. IEEE Trans. Evol. Comput. 2004, 8, 204–210.

- Lalwani, S.; Sharma, H.; Satapathy, S.C.; Deep, K.; Bansal, J.C. A survey on parallel particle swarm optimization algorithms. Arab. J. Sci. Eng. 2019, 44, 2899–2923.

- Gupta, M.; Deep, K. A state-of-the-art review of population-based parallel meta-heuristics. In Proceedings of the World Congress on Nature and Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 1604–1607.

- Gies, D.; Rahmat-Samii, Y. Reconfigurable array design using parallel particle swarm optimization. In Proceedings of the IEEE Antennas and Propagation Society International Symposium, Columbus, OH, USA, 22–27 June 2003; Volume 1, pp. 177–180.

- Baskar, S.; Suganthan, P.N. A novel concurrent particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 792–796.

- Chu, S.C.; Pan, J.S. Intelligent parallel particle swarm optimization algorithms. In Parallel Evolutionary Computations; Springer: Berlin, Germany, 2006; pp. 159–175. ISBN 978-3-540-32839-1.

- Chang, J.F.; Chu, S.C.; Roddick, J.; Pan, J.S. A parallel particle swarm optimization algorithm with communication strategies. J. Inf. Sci. Eng. 2005, 21, 809–818.

- Schutte, J.F.; Fregly, B.J.; Haftka, R.T.; George, A.D. A Parallel Particle Swarm Optimizer; Technical Report; Department of Electrical and Computer Engineering, University of Florida: Gainesville, FL, USA, 2003.

- Schutte, J.F.; Reinbolt, J.A.; Fregly, B.J.; Haftka, R.T.; George, A.D. Parallel global optimization with the particle swarm algorithm. Int. J. Numer. Methods Eng. 2004, 61, 2296–2315.

- Venter, G.; Sobieszczanski-Sobieski, J. Parallel particle swarm optimization algorithm accelerated by asynchronous evaluations. J. Aerosp. Comput. Inf. Commun. 2006, 3, 123–137.

- Koh, B.I.; George, A.D.; Haftka, R.T.; Fregly, B.J. Parallel asynchronous particle swarm optimization. Int. J. Numer. Meth. Eng. 2006, 67, 578–595.

- McNabb, A.W.; Monson, C.K.; Seppi, K.D. Parallel PSO using MapReduce. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Singapore, 25–28 September 2007; pp. 7–14.

- Aljarah, I.; Ludwig, S.A. Parallel particle swarm optimization clustering algorithm based on MapReduce methodology. In Proceedings of the 4th World Congress on Nature and Biologically Inspired Computing (NaBIC), Mexico City, Mexico, 5–9 November 2012; pp. 104–111.

- Han, F.; Cui, W.; Wei, G.; Wu, S. Application of parallel PSO algorithm to motion parameter estimation. In Proceedings of the 9th International Conference on Signal Processing (SIP), Beijing, China, 26–29 October 2008; pp. 2493–2496.

- Gülcü, Ş.; Kodaz, H. A novel parallel multi-swarm algorithm based on comprehensive learning particle swarm optimization. Eng. Appl. Artif. Intell. 2015, 45, 33–45.

- Cao, B.; Zhao, J.; Lv, Z.; Liu, X.; Yang, S.; Kang, X.; Kang, K. Distributed parallel particle swarm optimization for multi-objective and many-objective large-scale optimization. IEEE Access 2017, 5, 8214–8221.

- Lorion, Y.; Bogon, T.; Timm, I.J.; Drobnik, O. An agent based parallel particle swarm optimization—APPSO. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Nashville, TN, USA, 30 March–2 April 2009; pp. 52–59.

- Dali, N.; Bouamama, S. GPU-PSO: Parallel particle swarm optimization approaches on graphical processing unit for constraint reasoning: Case of Max-CSPs. Procedia Comput. Sci. 2015, 60, 1070–1080.

- Rymut, B.; Kwolek, B. GPU-supported object tracking using adaptive appearance models and particle swarm optimization. In Proceedings of the International Conference on Computer Vision and Graphics (ICCVG), Warsaw, Poland, 20–22 September 2010; pp. 227–234.

- Zhou, Y.; Tan, Y. GPU-based parallel particle swarm optimization. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Trondheim, Norway, 18–21 May 2009; pp. 1493–1500.

- Hussain, M.M.; Hattori, H.; Fujimoto, N. A CUDA implementation of the standard particle swarm optimization. In Proceedings of the 18th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 24–27 September 2016; pp. 219–226.

- Mussi, L.; Daolio, F.; Cagnoni, S. Evaluation of parallel particle swarm optimization algorithms within the CUDA™ architecture. Inf. Sci. 2011, 181, 4642–4657.

- Zhu, H.; Pu, C.; Eguchi, K.; Gu, J. Euclidean particle swarm optimization. In Proceedings of the 2nd International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Tianjin, China, 1–3 November 2009; pp. 669–672.

- Zhu, H.; Guo, Y.; Wu, J.; Gu, J.; Eguchi, K. Paralleling Euclidean particle swarm optimization in CUDA. In Proceedings of the 4th International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Kunming, China, 1–3 November 2011; pp. 93–96.

- Awwad, O.; Al-Fuqaha, A.; Ben Brahim, G.; Khan, B.; Rayes, A. Distributed topology control in large-scale hybrid RF/FSO networks: SIMT GPU-based particle swarm optimization approach. Int. J. Commun. Syst. 2013, 26, 888–911.

- Hung, Y.; Wang, W. Accelerating parallel particle swarm optimization via GPU. Optim. Methods Softw. 2012, 27, 33–51.

- Angeline, P.J. Using selection to improve particle swarm optimization. In Proceedings of the IEEE World Congress on Computational Intelligence (WCCI), Anchorage, AK, USA, 4–9 May 1998; pp. 84–89.

- Yang, B.; Chen, Y.; Zhao, Z. A hybrid evolutionary algorithm by combination of PSO and GA for unconstrained and constrained optimization problems. In Proceedings of the IEEE International Conference on Control and Automation (ICCA), Guangzhou, China, 30 May–1 June 2007; pp. 166–170.

- Jana, J.; Suman, M.; Acharyya, S. Repository and mutation based particle swarm optimization (RMPSO): A new PSO variant applied to reconstruction of gene regulatory network. Appl. Soft Comput. 2019, 74, 330–355.

- Stacey, A.; Jancic, M.; Grundy, I. Particle swarm optimization with mutation. In Proceedings of the Congress on Evolutionary Computation (CEC), Canberra, Australia, 8–12 December 2003; Volume 2, pp. 1425–1430.

- Imran, M.; Jabeen, H.; Ahmad, M.; Abbas, Q.; Bangyal, W. Opposition based PSO and mutation operators. In Proceedings of the 2nd International Conference on Education Technology and Computer (ICETC), Shanghai, China, 22–24 June 2010; Volume 4, pp. 506–508.

- Miranda, V.; Fonseca, N. EPSO—Best-of-two-worlds meta-heuristic applied to power system problems. In Proceedings of the Congress on Evolutionary Computation (CEC), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1080–1085.

- Darwin, C. The Origin of Species; Oxford University Press: Oxford, UK, 1998; ISBN 978-019-283-438-6.

- Wang, H.; Sun, H.; Li, C.; Rahnamayan, S.; Pan, J.S. Diversity enhanced particle swarm optimization with neighborhood search. Inf. Sci. 2013, 223, 119–135.

- Robinson, J.; Sinton, S.; Rahmat-Samii, Y. Particle swarm, genetic algorithm, and their hybrids: Optimization of a profiled corrugated horn antenna. In Proceedings of the IEEE Antennas and Propagation Society International Symposium, San Antonio, TX, USA, 16–21 June 2002; Volume 1, pp. 314–317.

- Juang, C.F. A hybrid of genetic algorithm and particle swarm optimization for recurrent network design. IEEE Trans. Syst. Man Cybern. 2004, 34, 997–1006.

- Valdez, F.; Melin, P.; Castillo, O. Evolutionary method combining particle swarm optimization and genetic algorithms using fuzzy logic for decision making. In Proceedings of the IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Jeju Island, Korea, 20–24 August 2009; pp. 2114–2119.

- Alba, E.; Garcia-Nieto, J.; Jourdan, L.; Talbi, E. Gene selection in cancer classification using PSO/SVM and GA/SVM hybrid algorithms. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Singapore, 25–28 September 2007; pp. 284–290.

- Fu, Y.; Ding, M.; Zhou, C.; Hu, H. Route planning for unmanned aerial vehicle (UAV) on the sea using hybrid differential evolution and quantum-behaved particle swarm optimization. IEEE Trans. Syst. Man Cybern. Syst. 2013, 43, 1451–1465.

- Yang, J.; Zhang, H.; Ling, Y.; Pan, C.; Sun, W. Task allocation for wireless sensor network using modified binary particle swarm optimization. IEEE Sens. J. 2014, 14, 882–892.

- Tian, G.; Ren, Y.; Zhou, M. Dual-objective scheduling of rescue vehicles to distinguish forest fires via differential evolution and particle swarm optimization combined algorithm. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3009–3021.

- Senthilnath, J.; Kulkarni, S.; Benediktsson, J.A.; Yang, X.S. A novel approach for multispectral satellite image classification based on the Bat algorithm. IEEE Geosci. Remote. Sens. Lett. 2016, 13, 599–603.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Das, S.; Suganthan, P.N. Differential evolution: A survey of the state-of-the-art. IEEE Trans. Evol. Comput. 2011, 15, 4–31.

- Hendtlass, T. A combined swarm differential evolution algorithm for optimization problems. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems (IEA/AIE), Budapest, Hungary, 4–7 June 2001; pp. 11–18.

- Zhang, W.J.; Xie, X.F. DEPSO: Hybrid particle swarm with differential evolution operator. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), Washington, DC, USA, 5–8 October 2003; Volume 4, pp. 3816–3821.

- Luitel, B.; Venayagamoorthy, G.K. Differential evolution particle swarm optimization for digital filter design. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Hong Kong, China, 1–6 June 2008; pp. 3954–3961.

- Talbi, H.; Batouche, M. Hybrid particle swarm with differential evolution for multimodal image registration. In Proceedings of the IEEE International Conference on Industrial Technology (ICIT), Hammamet, Tunisia, 8–10 December 2004; Volume 3, pp. 1567–1572.

- Xu, R.; Xu, J.; Wunsch, D.C. Clustering with differential evolution particle swarm optimization. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

- Miranda, V.; Alves, R. Differential evolutionary particle swarm optimization (DEEPSO): A successful hybrid. In Proceedings of the BRICS Congress on Computational Intelligence and 11th Brazilian Congress on Computational Intelligence (BRICS-CCI & CBIC), Ipojuca, Brazil, 8–11 September 2013; pp. 368–374.

- Abdullah, A.; Deris, S.; Hashim, S.Z.M.; Mohamad, M.S.; Arjunan, S.N.V. An improved local best searching in particle swarm optimization using differential evolution. In Proceedings of the 11th International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 115–120.

- Omran, M.G.H.; Engelbrecht, A.P.; Salman, A. Differential evolution based particle swarm optimization. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Honolulu, HI, USA, 1–5 April 2007; pp. 112–119.

- Pant, M.; Thangaraj, R.; Grosan, C.; Abraham, A. Hybrid differential evolution—Particle swarm optimization algorithm for solving global optimization problems. In Proceedings of the 3rd International Conference on Digital Information Management, London, UK, 13–16 November 2008; pp. 18–24.

- Epitropakis, M.G.; Plagianakos, V.P.; Vrahatis, M.N. Evolving cognitive and social experience in particle swarm optimization through differential evolution. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

- Zhang, C.; Ning, J.; Lu, S.; Ouyang, D.; Ding, T. A novel hybrid differential evolution and particle swarm optimization algorithm for unconstrained optimization. Oper. Res. Lett. 2009, 37, 117–122.

- Xiao, L.; Zuo, X. Multi-DEPSO: A DE and PSO based hybrid algorithm in dynamic environments. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Brisbane, Australia, 10–15 June 2012; pp. 1–7.

- Omran, M.G.; Engelbrecht, A.P.; Salman, A. Bare bones differential evolution. Eur. J. Oper. Res. 2009, 196, 128–139.

- Das, S.; Konar, A.; Chakraborty, U.K. Annealed differential evolution. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Singapore, 25–28 September 2007; pp. 1926–1933.

- Yang, G.; Chen, D.; Zhou, G. A new hybrid algorithm of particle swarm optimization. In Proceedings of the International Conference on Intelligent Computing (ICICA), Kunming, China, 16–19 August 2006; pp. 50–60. [Google Scholar]

- Wang, X.H.; Li, J.J. Hybrid particle swarm optimization with simulated annealing. In Proceedings of the International Conference on Machine Learning and Cybernetics (ICMLC), Shanghai, China, 26–29 August 2004; Volume 4, pp. 2402–2405. [Google Scholar]

- Zhao, F.; Zhang, Q.; Yu, D.; Chen, X.; Yang, Y. A hybrid algorithm based on PSO and simulated annealing and its applications for partner selection in virtual enterprise. In Proceedings of the International Conference on Intelligent Computing (ICICA), Hefei, China, 23–26 August 2005; pp. 380–389. [Google Scholar]

- Sadati, N.; Zamani, M.; Mahdavian, H.R.F. Hybrid particle swarm-based-simulated annealing optimization techniques. In Proceedings of the 32nd Annual Conference on IEEE Industrial Electronics (IECON), Paris, France, 6–10 November 2006; pp. 644–648. [Google Scholar]

- Xia, W.J.; Wu, Z.M. A hybrid particle swarm optimization approach for the job-shop scheduling problem. Int. J. Adv. Manuf. Technol. 2006, 29, 360–366. [Google Scholar] [CrossRef]

- Chu, S.C.; Tsai, P.W.; Pan, J.S. Parallel particle swarm optimization algorithms with adaptive simulated annealing. In Stigmergic Optimization; Springer: Berlin, Germany, 2006; pp. 261–279. ISBN 978-3-540-34689-0. [Google Scholar]

- Shieh, H.L.; Kuo, C.C.; Chiang, C.M. Modified particle swarm optimization algorithm with simulated annealing behavior and its numerical verification. Appl. Math. Comput. 2011, 218, 4365–4383. [Google Scholar] [CrossRef]

- Deng, X.; Wen, Z.; Wang, Y.; Xiang, P. An improved PSO algorithm based on mutation operator and simulated annealing. Int. J. Multimed. Ubiquitous Eng. 2015, 10, 369–380. [Google Scholar] [CrossRef]

- Dong, X.; Ouyang, D.; Cai, D.; Zhang, Y.; Ye, Y. A hybrid discrete PSO-SA algorithm to find optimal elimination orderings for Bayesian networks. In Proceedings of the 2nd International Conference on Industrial and Information Systems (ICIIS), Dalian, China, 10–11 July 2010; Volume 1, pp. 510–513.

- He, Q.; Wang, L. A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl. Math. Comput. 2007, 186, 1407–1422.

- Shelokar, P.; Siarry, P.; Jayaraman, V.; Kulkarni, B. Particle swarm and ant colony algorithms hybridized for improved continuous optimization. Appl. Math. Comput. 2007, 188, 129–142.

- Ghodrati, A.; Lotfi, S. A hybrid CS/GA algorithm for global optimization. In Proceedings of the International Conference on Soft Computing for Problem Solving (SocProS), Roorkee, India, 20–22 December 2011; pp. 397–404.

- Shi, X.; Li, Y.; Li, H.; Guan, R.; Wang, L.; Liang, Y. An integrated algorithm based on artificial bee colony and particle swarm optimization. In Proceedings of the 6th International Conference on Natural Computation (ICNC), Yantai, China, 10–12 August 2010; Volume 5, pp. 2586–2590.

- Eberhart, R.C.; Hu, X. Human tremor analysis using particle swarm optimization. In Proceedings of the Congress on Evolutionary Computation (CEC), Washington, DC, USA, 6 August 2002; Volume 3, pp. 1927–1930.

- Engelbrecht, A.P.; Ismail, A. Training product unit neural networks. Stab. Control Theory Appl. 1999, 2, 59–74.

- Zhang, C.; Shao, H.; Li, Y. Particle swarm optimisation for evolving artificial neural network. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics (SMC), Nashville, TN, USA, 8–11 October 2000; Volume 4, pp. 2487–2490.

- Chatterjee, A.; Pulasinghe, K.; Watanabe, K.; Izumi, K. A particle-swarm-optimized fuzzy-neural network for voice-controlled robot systems. IEEE Trans. Ind. Electron. 2005, 52, 1478–1489.

- Gudise, V.G.; Venayagamoorthy, G.K. Comparison of particle swarm optimization and backpropagation as training algorithms for neural networks. In Proceedings of the IEEE Swarm Intelligence Symposium (SIS), Indianapolis, IN, USA, 26 April 2003; pp. 110–117.

- Mendes, R.; Cortez, P.; Rocha, M.; Neves, J. Particle swarms for feedforward neural network training. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1895–1899.

- Ince, T.; Kiranyaz, S.; Gabbouj, M. A generic and robust system for automated patient-specific classification of ECG signals. IEEE Trans. Biomed. Eng. 2009, 56, 1415–1426.

- Pehlivanoglu, Y.V. A new particle swarm optimization method enhanced with a periodic mutation strategy and neural networks. IEEE Trans. Evol. Comput. 2013, 17, 436–452.

- Quan, H.; Srinivasan, D.; Khosravi, A. Short-term load and wind power forecasting using neural network-based prediction intervals. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 303–315.

- Garro, B.A.; Sossa, H.; Vazquez, R.A. Design of artificial neural networks using a modified particle swarm optimization algorithm. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Atlanta, GA, USA, 14–19 June 2009; pp. 938–945.

- Al-Kazemi, B.; Mohan, C.K. Training feedforward neural networks using multi-phase particle swarm optimization. In Proceedings of the 9th International Conference on Neural Information Processing (ICONIP), Singapore, 18–22 November 2002; Volume 5, pp. 2615–2619.

- Al-Kazemi, B.; Mohan, C.K. Multi-phase discrete particle swarm optimization. In Proceedings of the 6th Joint Conference on Information Sciences (JCIS), Research Triangle Park, NC, USA, 8–15 March 2002; pp. 622–625.

- Al-Kazemi, B.; Mohan, C. Discrete multi-phase particle swarm optimization. In Information Processing with Evolutionary Algorithms: From Industrial Applications to Academic Speculations; Springer: London, UK, 2005; pp. 305–327. ISBN 978-1-85233-866-4.

- Conforth, M.; Meng, Y. Toward evolving neural networks using bio-inspired algorithms. In Proceedings of the International Conference on Artificial Intelligence (IC-AI), Las Vegas, NV, USA, 14–17 July 2008; pp. 413–419.

- Hamada, M.; Hassan, M. Artificial neural networks and particle swarm optimization algorithms for preference prediction in multi-criteria recommender systems. Informatics 2018, 5, 25.

- Zweiri, Y.H.; Whidborne, J.F.; Seneviratne, L.D. A three-term backpropagation algorithm. Neurocomputing 2003, 50, 305–318.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Freitas, D.; Lopes, L.G.; Morgado-Dias, F. Particle Swarm Optimisation: A Historical Review Up to the Current Developments. Entropy 2020, 22, 362. https://doi.org/10.3390/e22030362
