Abstract

Thermodynamics establishes a relation between the work that can be obtained in a transformation of a physical system and its relative entropy with respect to the equilibrium state. It also describes how the bits of an informational reservoir can be traded for work using Heat Engines. Therefore, an indirect relation between the relative entropy and the informational bits is implied. From a different perspective, we define procedures to store information about the state of a physical system into a sequence of tagging qubits. Our labeling operations provide reversible ways of trading the relative entropy gained from the observation of a physical system for adequately initialized qubits, which are used to hold that information. After taking into account all the qubits involved, we reproduce the relations mentioned above between relative entropies of physical systems and the bits of information reservoirs. Some of them hold only under a restricted class of coding bases. The reason for it is that quantum states do not necessarily commute. However, we prove that it is always possible to find a basis (equivalent to the total angular momentum one) for which Thermodynamics and our labeling system yield the same relation.

1. Introduction

The role of information in Thermodynamics [1,2] already has a long history. Arguably, it is best exhibited in Information Heat Engines [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22]. They are devices that cyclically extract energy from a thermal reservoir and deliver it as mechanical work. They do so by increasing the entropy of a set of bits from an information reservoir [1,23,24,25,26,27,28,29,30]. There are differences between classical bits and qubits [31,32,33,34,35,36], but they share the same maximum efficiency [37,38]. Appendix A describes a basic model of an Information Heat Engine.

Physical systems in a state out of thermal equilibrium also allow the production of work. It turns out to be related to the relative entropy $S(\rho\,\|\,\tau)$ of the system state $\rho$ with respect to the equilibrium state $\tau$, again an informational quantity (in this paper, $\log x$ always represents the binary logarithm of $x$). Appendix B contains a short derivation of this result. Some recent reviews compile a variety of properties and functional descriptions of relative entropy [39,40,41,42]. Probably, the one most closely connected to this paper is its interpretation as the average number of extra bits that are employed when a code optimized for a given probability distribution of words is used for another distribution. This paper contributes a new procedure that also reveals a direct connection between the relative entropy of physical systems and information reservoirs, circumventing Thermodynamics. It focuses on the quantum case, particularly when the relative entropy is defined for non-commuting density matrices.
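The coding interpretation mentioned above can be checked numerically in the classical case. The following is a minimal sketch, not part of the original derivation: the two distributions `p` and `q` are illustrative values, and the codeword lengths are idealized as $-\log q_i$ (no rounding to integers).

```python
from math import log2

def entropy(p):
    """Shannon entropy in bits."""
    return -sum(x * log2(x) for x in p if x > 0)

def cross_entropy(p, q):
    """Average ideal codeword length (bits) when words follow p but the code is tuned for q."""
    return -sum(x * log2(y) for x, y in zip(p, q) if x > 0)

def relative_entropy(p, q):
    """Relative entropy D(p||q) in bits; assumes q > 0 wherever p > 0."""
    return sum(x * log2(x / y) for x, y in zip(p, q) if x > 0)

p = [0.7, 0.2, 0.1]    # actual word frequencies (illustrative values)
q = [0.25, 0.25, 0.5]  # frequencies the code was optimized for (illustrative values)

# Extra bits per word paid for using the wrong code
extra_bits = cross_entropy(p, q) - entropy(p)
print(extra_bits, relative_entropy(p, q))
```

Both printed numbers coincide up to floating-point error, which is the classical statement that the relative entropy counts the average number of extra bits.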

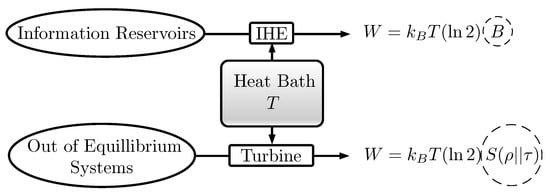

The generation of work in Information Heat Engines always requires the transfer of thermal energy from a heat reservoir and needs adequate steering of a Hamiltonian. In Szilard Engines [14,43,44], they occur at the same time as the piston moves within the cylinder. In the one particle case, every bit from an information reservoir enables the generation of mechanical work. Other thermal machines, such as turbines, also imply tuning Hamiltonians and heat transfer. Combining these devices, it is possible to produce work by increasing the entropy of informational qubits and use it to build up the relative entropy of a physical system with respect to its thermal equilibrium state. The net heat and work would vanish (see Figure 1). This consideration naturally motivates the question of whether it would be possible to do the same transformation only with informational manipulations, without any reference to Hamiltonians, temperatures or heat baths.

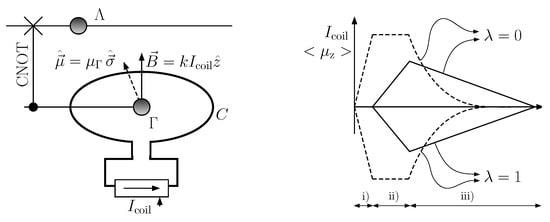

Figure 1.

According to Thermodynamics, work can be reversibly obtained from a heat bath either by consuming B bits of an information reservoir or by decreasing the relative entropy of a physical system with respect to the thermal state.

In order to simplify the quantification of the resources involved, we exclusively consider unitary operations. In addition, we only allow observational interactions on the physical system. This restricts the set of transformations to those defined by controlled unitary gates, in which the state of the physical system remains in the controlling part. Basically, we compute the informational cost of labeling a physical system as the number of pure-state tagging qubits at the input minus those recovered at the output. In the following, we may use the terms “initialize” or “reset” to denote the action of driving a tagging qubit to a $|0\rangle$ state.

The tagging operation implies using some coding procedure to correlate the quantum states of a physical system and its label. Conversely, we also consider the process of deleting that correlation and returning the tagging qubits to an information reservoir. We assume that the code has to be optimal in the sense that it uses the smallest average number of bits to identify the state of a physical system. Averaging is defined with respect to an underlying probability distribution of states. For this reason, we choose a Shannon coding technique; it is asymptotically optimal and provides a simple relation between the lengths and the probabilities of the codewords. We consider two degrees of tagging. Tight-labeling implies a reversible assignment of a label to every physical system. It is described in Section 2. Loose-labeling implies tight-labeling a collection of physical systems followed by a random shuffling. It is studied in Section 3. The discussion is presented in Section 4 and the conclusions in Section 5. A simple example, using magnetic spins, is given in Appendix E in order to illustrate some of the ideas presented in the paper.

2. Tight Labeling

In this section, we present a method for writing a label, consisting of a set of qubits, with the purpose of representing the quantum state of a physical system. We call it tight because each label is assigned unambiguously to the state of the physical system that it is attached to.

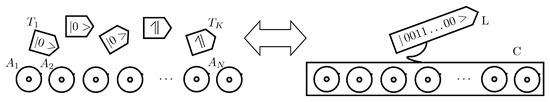

We consider a sufficiently large set of identical physical systems that will be referred to as atoms. For simplicity, a two-dimensional Hilbert space for them is assumed. The statistical distribution for each atom is determined by its quantum state, which is known to be . The eigenstates of are denoted by and their eigenvalues are ordered as . Atoms are grouped in clusters of a common length N. Besides the atoms, we assume unlimited availability of tagging qubits in either a pure or a maximally mixed state (see Figure 2).

Figure 2.

Our labeling procedures assume the availability of tagging qubits in either a $|0\rangle$ or a maximally mixed state, and physical systems, referred to as atoms. The labeling assigns a set of H tagging qubits to a cluster of N atoms. The cost is defined as the number of tagging qubits in state $|0\rangle$ employed.

We consider a coding basis that diagonalizes . Its vectors are denoted by , where can be either 0 or 1.

The operations considered are:

- Any unitary transformation on a system T of tagging qubits.

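- Unitary transformations on joint states of cluster C and a system T of K tagging qubits that are defined, using the coding basis for C and the computational basis for T, by

  $$U_f\,|b_x\rangle_C\,|t\rangle_T = |b_x\rangle_C\,|t \oplus f(x)\rangle_T, \tag{1}$$

  where $|b_x\rangle$ is the coding basis vector indexed by the $N$-bit string $x$, $\oplus$ is the bitwise sum modulo 2, and $f$ is any function that transforms binary strings of N bits into strings of K bits. They can be considered as just probing operations with respect to clusters. It is also easy to check that applying $U_f$ twice gives the identity.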
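The second kind of operation can be illustrated on basis states. This is a minimal sketch, assuming the controlled operation takes the usual XOR form (the cluster string is left untouched and the tagging bits are XORed with the function value); the function `f` below is a hypothetical choice made only for illustration.

```python
from itertools import product

N, K = 3, 2  # cluster bits and tagging bits (illustrative sizes)

def f(x):
    """Hypothetical probing function: N-bit string -> K-bit string (here, the number of 1s)."""
    return tuple(int(c) for c in format(sum(x), f"0{K}b"))

def U(x, t):
    """Controlled operation on basis states: x is left untouched, t is XORed with f(x)."""
    return x, tuple(a ^ b for a, b in zip(t, f(x)))

states = [(x, t) for x in product((0, 1), repeat=N) for t in product((0, 1), repeat=K)]
images = [U(x, t) for x, t in states]

# A map that only permutes basis states is unitary
is_permutation = sorted(images) == sorted(states)
# Applying the operation twice restores every basis state
is_self_inverse = all(U(*U(x, t)) == (x, t) for x, t in states)
print(is_permutation, is_self_inverse)
```

Because the cluster string is never modified, the operation only reads the cluster, which is what makes it a probing operation.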

The cost of any operation will be tallied as the number of tagging qubits in a pure state that are input and not recovered at the output.

We further choose a binary Shannon lossless code for the sequences according to a frequency given by the eigenvalue of that corresponds to . The procedure defines a function that assigns a binary codeword to every string . Let H be the maximum length of all the codewords. We further define a label as an array of H tagging qubits. The vectors of the label computational basis are denoted by , where can be either 0 or 1.

The coding procedure determines a unitary operator that acts on every cluster–label pair . It can be defined by specifying how the elements of the basis transform; it is given by:

where represents the Shannon codeword supplemented with the necessary trailing 0s to match a length H.
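As an illustration of the coding step, the sketch below builds a Shannon code for the $2^N$ strings of a small cluster and pads every codeword with trailing 0s up to the maximum length H. It assumes the standard Shannon construction (codeword of length $\lceil -\log p \rceil$ taken from the binary expansion of the cumulative probability); the eigenvalues 0.75 and 0.25 and the cluster size are illustrative values, not taken from the paper.

```python
from itertools import product
from math import ceil, log2

def shannon_code(probs):
    """Shannon code: each word gets the first ceil(-log2 p) bits of the binary
    expansion of the cumulative probability of all words sorted before it."""
    order = sorted(probs, key=probs.get, reverse=True)
    code, cumulative = {}, 0.0
    for word in order:
        length = ceil(-log2(probs[word]))
        bits, frac = "", cumulative
        for _ in range(length):
            frac *= 2
            bit = int(frac)
            bits += str(bit)
            frac -= bit
        code[word] = bits
        cumulative += probs[word]
    return code

q = {0: 0.75, 1: 0.25}  # single-atom eigenvalues used to tune the code (illustrative)
N = 3                   # atoms per cluster (illustrative)

# Frequency of each N-bit string: product of single-atom eigenvalues
probs = {}
for x in product((0, 1), repeat=N):
    p = 1.0
    for bit in x:
        p *= q[bit]
    probs[x] = p

code = shannon_code(probs)
H = max(len(c) for c in code.values())                   # label length
padded = {x: c.ljust(H, "0") for x, c in code.items()}   # trailing 0s up to H bits
for x in sorted(code, key=lambda w: len(code[w])):
    print(x, code[x], padded[x])
```

The unpadded codewords are prefix-free, so the padded labels remain distinct and the width of each codeword can be recovered from the cluster string.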

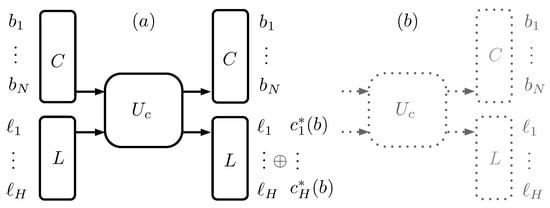

Labeling an N-atom cluster C means applying the coding unitary operator to the cluster and a label of H tagging qubits in a $|0\rangle$ state, as depicted in Figure 3. When the state of the cluster is diagonal in the coding basis, the operation is equivalent to the classical operation of coding according to the Shannon method and storing the codeword in the label. The resulting state is a classical statistical mixture of cluster–label pairs.

Figure 3.

(a) represents the coding procedure as a unitary operation, controlled by the cluster C, on the tagging qubits of the label L. If the tagging qubits are initially in a $|0\rangle$ state, they end up holding the coded string for the cluster; (b) represents the inverse operation, which is equal to the coding operation itself, so that applying it twice is the identity transformation.

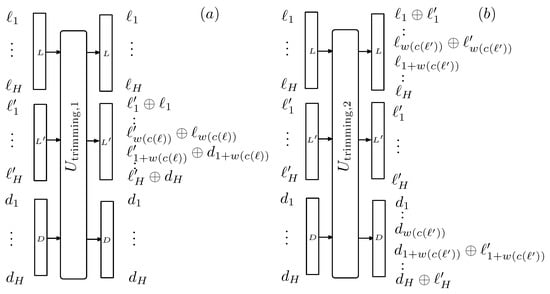

We define the width of the codeword assigned to a ket of the coding basis as the number of bits in its Shannon codeword, before padding. Only that many leading qubits of the label contain information. The trailing qubits of L are superfluous and should be replaced by others in a completely mixed state. For this purpose, we define a new unitary operation by which the trailing qubits are swapped with those of a new label D. In the labeling process, D contains H maximally mixed qubits. This operation is depicted in Figure 4 and explained in more detail in Appendix C.

Figure 4.

Representation of the procedure employed for replacing the trailing qubits that need not be used in the codeword by maximally mixed ones, as explained in Appendix C. It is split into two unitary transformations. The first one, represented in (a), copies the leading (codeword) qubits of L into a new label that enters with all its tagging qubits in the $|0\rangle$ state. The remaining qubits are copied from the maximally mixed qubits of D. The second transformation, represented in (b), resets the L label and the trailing qubits of D. The overall effect is to recover the trailing qubits in state $|0\rangle$ and to generate a new label with maximally mixed trailing qubits.

In our setting, the width of the codeword is a quantum variable represented by the operator , which operates on the Hilbert space of the cluster. Its eigenvectors are those of the basis, and its eigenvalues are the widths of their Shannon codewords. Its effect on the basis vectors is:

and also represents the cost of the labeling procedure. We further define the atomic width as . For sufficiently large N, converges to . Accordingly,

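Thus, for sufficiently large N, the average atomic width for a codeword of a cluster in state $\rho^{\otimes N}$, with a code optimized for the atomic state $\sigma$, is given by:

$$-\mathrm{Tr}\,(\rho \log \sigma), \tag{5}$$

which, taking into account that $S(\rho\,\|\,\sigma) = -S(\rho) - \mathrm{Tr}\,(\rho \log \sigma)$, can be rewritten as

$$S(\rho) + S(\rho\,\|\,\sigma), \tag{6}$$

and represents the atomic cost of labeling clusters in state $\rho^{\otimes N}$. It is straightforward to reverse the process and check that an atomic yield of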

is obtained.
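The convergence of the atomic cost can be explored numerically for qubit atoms. The sketch below is only an illustration under stated assumptions: the atomic cost is taken to converge to $-\mathrm{Tr}(\rho \log \sigma)$, that is, to the sum of the von Neumann and relative entropies quoted in the Conclusions; states are parametrized by Bloch vectors `r` (state of the atoms) and `s` (state the code is optimized for), whose values are arbitrary; and the coding basis is the product eigenbasis of the latter.

```python
from math import ceil, comb, log2, sqrt

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

r = (0.3, 0.0, 0.4)  # Bloch vector of the atomic state (illustrative)
s = (0.0, 0.0, 0.6)  # Bloch vector of the state the code is optimized for (illustrative)

s_len = sqrt(dot(s, s))
q1, q0 = (1 + s_len) / 2, (1 - s_len) / 2  # eigenvalues of the code-defining state
p1 = (1 + dot(r, s) / s_len) / 2           # weight of the atomic state on the first eigenvector
p0 = 1 - p1

# -Tr(rho log sigma), the assumed large-N limit of the atomic cost (bits per atom)
limit = -(p1 * log2(q1) + p0 * log2(q0))

def atomic_width(N):
    """Average Shannon codeword width per atom for clusters of N atoms."""
    total = 0.0
    for k in range(N + 1):  # k atoms found on the first eigenvector
        width = ceil(-(k * log2(q1) + (N - k) * log2(q0)))
        total += comb(N, k) * p1**k * p0**(N - k) * width
    return total / N

for N in (1, 10, 200):
    print(N, atomic_width(N), limit)
```

Rounding each width up to an integer adds less than one bit per cluster, so the average atomic width stays within $1/N$ of the limit.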

A cluster in state can be described as being in a probabilistic mixture of eigenstates of . Each of them defines a tight-label that allows for unambiguously identifying which eigenstate of it is attached to. The cost expressed by Equation (6) represents the average length of the codewords that correspond to clusters drawn according to the distribution defined by . The equivalent situation in Thermodynamics corresponds to averaging the work that is necessary to produce pure state physical systems (as spin systems in the example of Appendix E) out of equilibrium, following the distribution given by . However, there is a subtle difference from the case that we want to model in the next section. In it, we still have clusters whose states are drawn from the distribution defined by , but we ignore the particular state of each cluster. To cope with this new situation, it would be sufficient to overlook the precise label that is attached to each cluster, but keeping the distribution that corresponds to . This is implemented through a process of label shuffling for a collection of clusters in state . The procedure is described in Section 3.

3. Loose Labeling

The tagging procedure put forth in the previous section outputs maximally correlated cluster-label pairs. In this section, we describe a procedure that reduces the correlation. To this end, the label L assigned to cluster C will be the codeword of another cluster that belongs to a collection F of M clusters, all of them in state . Therefore, the state of F is . Figure 5 represents the process with unitary gates that acts on a collection of M labeled cluster pairs , a random set of P tagging qubits and an auxiliary label collection . The role played by is to generate a random shuffling of the labels. The process is analyzed in Appendix D, where it is shown that the average number of qubits in the array that exit in a state verifies that, for large M, converges to , where is the entropy associated with the probability distribution for the possible labels of the elements of the basis.

Figure 5.

The figure describes the unitary process used to shuffle the labels of a collection F of M tight-labeled clusters, as explained in Appendix D. Besides the labels, a set of P maximally mixed qubits and a set of M auxiliary labels, all in state $|0\rangle$, enter the first unitary gate (a). It shuffles the original labels according to the permutation indicated by the P qubits and stores the result in the auxiliary labels. The second gate (b) resets the qubits that signaled the permutation. The last gate resets the original labels by regenerating them from the clusters.

Therefore, taking into account the cost of tight-labeling the F collection, given by Equation (5), the one of loosely tagging the clusters of F is

which leads to a value per atom:

Next, we will find a suitable expression for . Each label is assigned to a vector of the basis. Its frequency is given by . The probabilities for the set of codewords are the eigenvalues of the density matrix

It is immediate to check that . Thus, Equation (9) can be rewritten as

Notice that represents a CPTP (Completely Positive Trace Preserving) map, which is not a unitary transformation. However, all the operations of our labeling system are unitary. The CPTP map is used here just as a means to find a convenient expression for , not as a real operation on the state of the clusters and labels.

Next, we define a particular basis for which Equation (11) will only retain the last term in the large-N limit.

Let be the operators represented by the Pauli matrices in the basis, which diagonalizes . In the Hilbert space of the -th atom of a cluster, they are denoted by . For the whole cluster, we define:

Let be the basis which diagonalizes (the well-known total angular momentum basis in Quantum Physics).

In the basis, any state is a mixture of states , where is a state defined within the invariant subspace of . The entropy of this mixture is the sum of the entropy associated with the mixture and the weighted entropy of the different :

The same decomposition can be applied to the state. Taking into account that commute,

so that using Equation (13) for , and subtracting both entropies, we arrive at

The maximum entropy of a state is , where is the dimension of the -th invariant subspace. Its maximum value is . Therefore, the absolute value of the right-hand side of Equation (15) cannot be greater than . In the limit,

so that, for sufficiently large N,

4. Discussion

A well-known situation in Thermodynamics is the availability of a thermal reservoir of freely available, non-interacting atoms in a Gibbs state $\tau$, given by:

$$\tau = \frac{e^{-H/kT}}{Z}, \tag{18}$$

where $H$ is the Hamiltonian of each atom, $T$ is the temperature, $k$ is the Boltzmann constant and $Z = \mathrm{Tr}\, e^{-H/kT}$ represents the partition function.

A cluster of atoms in another state $\rho$ can be used to obtain work from the energy of the thermal reservoir. The obtainable work per atom, as given in [41,45] and derived in Appendix B, is

$$W = kT \ln 2 \; S(\rho\,\|\,\tau), \tag{19}$$

with $\tau$ the Gibbs state of the reservoir atoms and the relative entropy measured in bits. It is usually collected by some physical means (mechanical, electromagnetic, etc.) which involves coupling to thermal baths and mechanical or electromagnetic energy-storage systems. Remarkably, it depends only on the relative entropy, which is connected to the physics through the dependence of $\tau$ on the Hamiltonian and the temperature T. The work obtained matches the heat transferred from the thermal reservoir plus the decrease of the internal energy of the atom. After the process, the state of the atom is $\tau$. The reverse operation, driving a system initially in state $\tau$ to an out-of-equilibrium state $\rho$, demands the same amount of work to be supplied in the process.

In this paper, we have come across the relative entropy from a very different approach. We have chosen to employ a coding system that asymptotically minimizes the labeling cost for the state for which the code is optimized. Shannon coding for that state satisfies this requirement. In the process of loose-labeling, we incur a cost that can be evaluated by substituting the Gibbs state for the code-defining state in Equation (17). It is equivalent to the process of driving a system from the Gibbs state to the out-of-equilibrium state, with the following observation: while in the Thermodynamic operation the transformation requires work, in the labeling approach it needs qubits in the $|0\rangle$ state.

In a different Thermodynamic setting, we know that work can also be obtained from informational qubits at Information Heat Engines in the presence of a heat bath. Typically, they enter in a known pure state (say $|0\rangle$) and exit in a maximally mixed one. They need to be coupled to a physical system that should be able to equilibrate with a thermal reservoir and couple to an external energy storage. The work obtained per qubit is

$$W = kT \ln 2. \tag{20}$$

Accordingly, it is clear that the relative entropy of physical systems with respect to a thermal state can be traded for bits of information reservoirs by means of engines and heat baths within a Thermodynamic context.
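The exchange rate between work and bits can be made concrete with a few lines of arithmetic. This is a hedged sketch: it assumes the standard value $kT \ln 2$ for the work per pure-state qubit and a work per atom equal to $kT \ln 2$ times the relative entropy in bits; the temperature and the relative entropy used below are illustrative values.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def work_per_bit(T):
    """Work (J) an ideal Information Heat Engine obtains per pure-state qubit at temperature T (K)."""
    return K_B * T * log(2)

def work_from_relative_entropy(T, rel_entropy_bits):
    """Work (J) per atom for a state whose relative entropy to the Gibbs state is given in bits."""
    return rel_entropy_bits * work_per_bit(T)

T = 300.0                                      # illustrative temperature (K)
w_bit = work_per_bit(T)
w_atom = work_from_relative_entropy(T, 0.25)   # illustrative relative entropy (bits)
bits_per_atom = w_atom / w_bit                 # exchange rate between atoms and reservoir bits
print(w_bit, w_atom, bits_per_atom)
```

Under these assumptions the temperature cancels out of the exchange rate, which is why the trade between relative entropy and reservoir bits can be stated without reference to the heat bath.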

We claim that, in this paper, we have described a way to do the same with purely informational manipulations. Furthermore, the physical system is accessed for probing operations that reduce to acting on the information qubits according to its state. Labeling is a particular kind of these processes.

However, the loose-labeling cost, given by Equation (11), depends on the labeling strategy through the choice of the coding basis. The atomic cost does not converge to the relative entropy unless the label entropy per atom converges to the von Neumann entropy of the atomic state. This is trivial if the state of the atoms and the state for which the code is optimized commute, but, in a general quantum scenario, it cannot be assumed. Nonetheless, even in the non-commuting case, it is accomplished by using the eigenbasis described in Section 3. For the general case, when another basis is chosen, this convergence fails and the loose-labeling costs are lower than the quantum relative entropy. This can be deduced by subtracting both:

where we have taken into account that, because is diagonal in , then

Notice that , so that Equation (21) can be written as

which is always non-negative by the monotonicity of quantum relative entropy.
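The effect of the coding basis can be checked for a single qubit atom. The sketch below is an illustration under assumptions, not the paper's derivation: it takes N = 1, uses the eigenbasis of the code-defining state as coding basis, and reads the loose-labeling cost as the relative entropy of the dephased atomic state. The Bloch vectors `r` (atomic state) and `s` (code-defining state) are illustrative and chosen so that the two states do not commute.

```python
from math import log2, sqrt

def h(p):
    """Binary entropy in bits."""
    return -sum(x * log2(x) for x in (p, 1 - p) if x > 0)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

r = (0.3, 0.0, 0.4)  # Bloch vector of the atomic state (illustrative)
s = (0.0, 0.0, 0.6)  # Bloch vector of the code-defining state (illustrative)

r_len, s_len = sqrt(dot(r, r)), sqrt(dot(s, s))
q = (1 + s_len) / 2              # larger eigenvalue of the code-defining state
p = (1 + dot(r, s) / s_len) / 2  # population of the atomic state on that eigenvector

S_rho = h((1 + r_len) / 2)       # von Neumann entropy of the atomic state
S_deph = h(p)                    # entropy after dephasing in the coding basis
cross = -(p * log2(q) + (1 - p) * log2(1 - q))  # -Tr(rho log sigma)

quantum_rel = cross - S_rho      # quantum relative entropy
dephased_rel = cross - S_deph    # relative entropy of the dephased state
gap = quantum_rel - dephased_rel # equals S_deph - S_rho
print(quantum_rel, dephased_rel, gap)
```

The gap is the entropy gained by dephasing, so it vanishes only when the atomic state is already diagonal in the coding basis.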

At any point, tracing out the label returns the reduced state of the cluster to its initial value. Therefore, we interact with the cluster just to obtain or delete information about it. The parallel with the situation in Thermodynamics is clear: bits from information reservoirs are traded for changing the relative entropy of the state of the physical system with respect to the equilibrium state. From this point of view, the most important aspect of a physical system in a thermodynamical setting is knowing its state, so that it can be used to supply the corresponding work. It is the state that fixes the process by which work is obtained.

The two types of labeling described exhibit different costs. This is to be expected, because loose-labeling implies shuffling. This leads to labels that are related not to a particular cluster, but to a collection of them that share some particular state. It is natural that the work given by Equation (19) is related to this cost because it assumes a process which is common to all of them. However, if we have a tight-labeled collection of clusters, we can process each one in a different way, chosen according to its label. Then, each cluster would contribute a work given by the relative entropy of the pure state identified according to the label. The average work value would be given by:

which corresponds to the cost of tight-labeling, given by Equation (6), irrespective of the particular choice of the coding basis.

From another perspective, loose-labeling is essentially the process of disarranging the tight-labels of a collection of M clusters. Let us first assume that commute. Asymptotically, as , the number of possible orders for the set of labels tends to , which is precisely the difference between Equations (6) and (17). From a physical point of view, both expressions point to slightly different situations. When a thermal engine is tuned to supply work from a physical system out of equilibrium, its configuration depends on the state of the system. Each pure or mixed state requires different settings. Let be the work obtained from a system in a pure state . Next, we consider two cases:

- (a) The pure state of each physical system is known, and the setting can be adjusted according to it. Then, the average work obtained by processing a collection of physical systems is the weighted average of the work obtained from each pure state, each one contributing according to its corresponding eigenvalue in the density matrix of the system. It is given by Equation (24).

- (b) Only the collective mixed state of the collection is known. Then, the engine is tuned with a different set of parameters, and the average value of the extracted work is lower than in the previous case. It is given in Equation (19).

Situations (a) and (b) correspond to the tight and loose-labeling techniques, respectively. Work is immediately translated by information heat engines into reservoir bits. The conversion factor is given in Equation (20). The difference in the average value of work in (a) and (b) translates exactly into bits. The same results can be extended to the case in which the two states do not commute, provided that coding is defined in a suitable basis.

5. Conclusions

In this paper, we have described two ways to label physical systems grouped in clusters. In both procedures, unitary transformations operate on a Hilbert space determined by the cluster and additional informational qubits. In the tight-labeling method, the label identifies pure states of the cluster, while, in the loose-labeling case, the label is chosen at random from a collection of clusters that share the same mixed quantum state. The costs of both procedures have been deduced in the asymptotic limit of infinitely many identical systems. The evaluation has been made by counting the number of informational qubits in the pure state $|0\rangle$ in the final and the initial situations. They are related to the von Neumann and relative entropies $S(\rho)$ and $S(\rho\,\|\,\sigma)$, where $\rho$ is the state of the physical systems and $\sigma$ is the state relative to which the labeling is optimized. In both processes, no manipulation of the physical system is attempted. Its intervention is purely observational.

We have shown that the atomic cost of tight-labeling converges to the sum of the von Neumann and relative entropies. Remarkably, this result does not depend on the coding basis. However, in the loose-labeling case, the atomic cost depends not only on the states involved, but also on the coding basis. It is bounded by the relative entropy, to which it can converge when a suitable basis is chosen, as explained in Section 3.

Assuming this choice of basis, we have shown that the costs of labeling, both in the tight and loose versions, correspond to what thermodynamical processing predicts by combining the models of (a) work extraction from physical systems out of equilibrium, and (b) information heat engines powered by pure state qubits. Through writing and erasing labels, we have presented a procedure to trade relative entropy for von Neumann entropy of the physical system just by informational manipulation.

Funding

The author acknowledges support from MINECO/FEDER Project FIS 2017-91460-EXP.

Acknowledgments

The author wishes to thank support from MINECO/FEDER Projects FIS 2017-91460-EXP, PGC2018-099169-B-I00 FIS-2018 and from CAM/FEDER Project No. S2018/TCS-4342 (QUITEMAD-CM).

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Magnetic Spin Information Heat Engine

In order to illustrate the connection between information reservoirs and thermal machines that is mentioned in the Introduction, this appendix describes a short version of a magnetic Quantum Information Heat Engine [17]. Its input is a low entropy qubit from an information reservoir, and its output is twofold: the same qubit in a higher entropy state, and electrical work delivered to a magnetic coil through an induced electromotive force. The energy stems from a thermal reservoir at temperature T. The engine is reversible and can be employed to lower the entropy of qubits from an information reservoir if some work is supplied.

Figure A1.

On the left, the basic components of the magnetic quantum information engine. The CNOT (Controlled NOT) gate is used to correlate the information and the magnetic qubits. The evolution of the electric current is controlled by the state of . The magnetic induction field at that is generated by the coil is . The right part of the figure contains a graph of the evolutions of (solid and dashed lines, respectively). The upper branches represent the case , whereas the lower branches take place when , as described in Appendix A.

The physical system within the engine (see Figure A1) consists of an internal magnetic spin particle that sits in the magnetic field generated by a classical induction coil C. The z-component of the magnetic moment of is , where is the third Pauli matrix. The machine operates cyclically, according to the following steps:

- (i) The electric current in the coil is initially null. The qubit from the information reservoir enters in a partially mixed state. The magnetic qubit is initially in a maximally mixed state. They undergo a CNOT (Controlled NOT) operation, controlled by the magnetic qubit. The result is an entangled mixed state:

  which defines two conditional states for the magnetic qubit, each one corresponding to a state of the informational qubit.

- (ii) In this stage, the magnetic qubit is isolated from the thermal reservoir. The current in the coil is raised to a value controlled by the informational qubit. It is determined by making each conditional state of the magnetic qubit equal to the Gibbs state for the corresponding value of the magnetic field generated by the current at the position of the magnetic qubit. The equation that fixes the two possible values of the current is

  which, together with Equation (A2), yields

- (iii) The current is gradually turned down until it is completely switched off. The process occurs slowly enough to assume a thermal equilibrium state for the magnetic qubit throughout this stage. Therefore, the final states for the magnetic qubit are maximally mixed. Only the informational qubit exits in a different state than it started in. The current and the magnetic qubit are reset to their initial conditions.

Let be the contributions to the magnetic flux through the coil from its own current and the magnetic spin , respectively. We further assume that the induction field generated by the coil current at the position of is . By the reciprocity theorem [46], we can state that . The differential work on the coil can be evaluated by

which, in a cycle, integrates to

where

The net energy supplied to the current source is

where is the value of throughout stage ii), which is easily evaluated to

Now, Equation (A8) can be rewritten as

which, in both cases , yields the same result:

which represents the potential of the qubits in the information reservoir to produce work. If qubits in a pure $|0\rangle$ state are used, Equation (20) is obtained.

Appendix B. Work Output from a System Out of Thermal Equilibrium

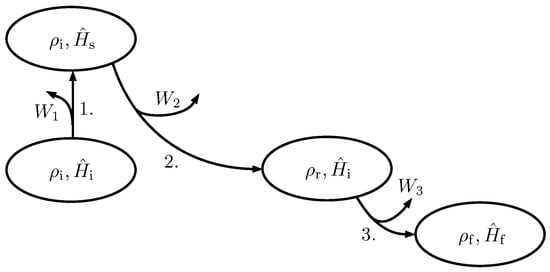

In this appendix, we derive the average work that can be obtained from a physical system in an initial state with Hamiltonian when we reversibly drive the system to a thermal equilibrium state with Hamiltonian , related through Equation (18). The conclusion has already been given elsewhere [41], and we only present an alternative derivation that fits better with this paper. The process is reversible so that the work obtained is the maximum that can be achieved. We split the transformation into three steps (represented in Figure A2):

Figure A2.

Representation of the process described in Appendix B for the work extraction in a process beginning with Hamiltonian in state and ending in Hamiltonian and state . Stages 2 and 3 are isothermal.

- The Hamiltonian is changed from its initial value to the operator for which the initial state is in thermal equilibrium at temperature T. It is given by

  In this step, the Hamiltonian varies quickly, so that the state does not change. Considering that there is no heat exchange in this step, the work obtained is

- The Hamiltonian is slowly taken back to , while thermal equilibrium is assured. Therefore, the system is driven to the state given by:

- While keeping thermal equilibrium, the Hamiltonian is taken from to . The work is obtained as in the previous step and yields:

The final count gives

The quantity is known as the free energy for a physical system with Hamiltonian in equilibrium at temperature T. Equation (A19) can be rewritten as

In the context of this paper, we assume that and the work that can be extracted from a system out of equilibrium is directly related to the relative entropy

Appendix C. Label Trimming

In this appendix, we describe the trimming operation depicted in Figure 4. The leading qubits of label L hold the codeword assigned to the cluster. The trailing qubits are in a $|0\rangle$ state. The purpose of the operation is to replace them with worthless qubits in a maximally mixed state. It is split into two unitary operations. The first one performs a transformation on three labels: L, a new label and D. The new label enters the process with all its tagging qubits in a $|0\rangle$ state, while those of D are maximally mixed. The operation is controlled by the qubits of L and copies its leading qubits to the new label. The remaining ones are copied from D. Now, the new label holds the desired codeword followed by maximally mixed qubits. The following operation, carried out by a second gate, aims at resetting the L label and the trailing qubits of D. It is controlled by the new label, where the codeword is now stored. Considering the whole operation, the valuable trailing qubits are recovered in the process.

Appendix D. Label Shuffling

In this appendix, we explain how the shuffling of labels depicted in Figure 5 can be implemented by a unitary transformation. We have split the process into three operations, each performed by a unitary gate. The first gate operates on three groups of tagging qubits: W and V are collections of M labels and J is a collection of P qubits, where P is large enough to index every permutation of M labels. Initially, W contains the labels generated for a group E of M clusters; all the tagging qubits in V are in a $|0\rangle$ state and all the qubits in J are in a maximally mixed state. The gate writes in V the values in W according to the p-th permutation of the labels. The value of p is read from the random group J. The unnecessary trailing qubits of each label are then trimmed according to the procedure described in Appendix C.

The second gate identifies the index p of the permutation from the values of W and V and resets the qubits of J that host the value of p.

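The number of possible values for each label is $2^N$, one for each vector of the coding basis. Let $l_i$ be the i-th one and $m_i$ the number of labels in the collection F that are equal to $l_i$. The number of distinct permutations of the labels is

$$\frac{M!}{\prod_i m_i!}.$$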

The leading qubits of are reset to state after the gate. For large M, , where is the entropy associated with the probability distribution for the possible labels of the elements of the basis.
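The large-M behavior of the permutation count can be verified with exact integer arithmetic. This is a minimal sketch assuming the count of distinct orderings is the multinomial coefficient of the label multiplicities; the three label frequencies are illustrative values.

```python
from math import factorial, log2

def label_orderings(counts):
    """Number of distinct orderings of a multiset with the given multiplicities."""
    total = factorial(sum(counts))
    for m in counts:
        total //= factorial(m)  # exact: every partial quotient is an integer
    return total

probs = [0.5, 0.25, 0.25]                   # illustrative label frequencies
entropy = -sum(p * log2(p) for p in probs)  # entropy of the label distribution (bits)

def bits_per_label(M):
    """log2 of the number of orderings, divided by the number of labels."""
    counts = [round(M * p) for p in probs]
    return log2(label_orderings(counts)) / sum(counts)

rates = [bits_per_label(M) for M in (40, 400, 4000)]
print(rates, entropy)
```

The number of bits needed to index an ordering, divided by M, approaches the entropy of the label distribution from below as M grows, consistent with the asymptotic statement above.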

After the second gate, W still holds the ordered collection of labels, while V holds a shuffled set. The last operation, performed by the third gate, resets the ordered set by using the collection of clusters that generated them. The final state is a collection of reset labels at W, a shuffled set at V, and a collection of qubits in state $|0\rangle$ that correspond to the leading members of J.

Appendix E. Example

In order to illustrate the contents of this paper, we next present a simple example. Let us assume that the atoms are magnetic spin systems in the presence of a Hamiltonian

which determines a Gibbs state

If we have a cluster of N spins in a pure state , we know, from Equation (A21), that we can obtain an average work of

The same value is necessary for the reverse operation, whereby a system of N spins in state is obtained from N systems in state , provided that work is supplied.

The same work can be obtained at an Information Heat Engine if bits from an information reservoir are used. This consideration sets an exchange rate between spins and bits given by bits per spin. We have described a particular model of Information Heat Engine that employs magnetic spins in [47]. Work is extracted by exposing the spins to a sequence of interactions with a suitable magnetic field in the presence of a heat bath at temperature T. The magnetic field is chosen according to the state of the spin.
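As a rough numerical illustration of this exchange rate, the sketch below evaluates the relative entropy (in bits) of a spin in a pure energy eigenstate with respect to the Gibbs state, together with the corresponding work per spin. Several ingredients are assumptions, since the explicit Hamiltonian is not reproduced here: each spin is modeled as a two-level system with a hypothetical energy gap `energy_gap`, the pure state is an eigenstate of the Hamiltonian, and the work is taken as kT ln 2 times the relative entropy in bits. All function names are illustrative.

```python
from math import exp, log, log2

K_B = 1.380649e-23  # Boltzmann constant in J/K


def gibbs_populations(energy_gap, temperature):
    """Gibbs populations (ground, excited) of an assumed two-level spin."""
    boltzmann_factor = exp(-energy_gap / (K_B * temperature))
    partition = 1.0 + boltzmann_factor
    return 1.0 / partition, boltzmann_factor / partition


def relative_entropy_bits(population):
    """Relative entropy of a pure energy eigenstate w.r.t. the Gibbs state.

    For a pure eigenstate whose Gibbs population is `population`,
    the relative entropy reduces to -log2(population).
    """
    return -log2(population)


def work_per_spin(population, temperature):
    """Work (J) associated with one spin, assuming W = kT ln2 * relative entropy."""
    return K_B * temperature * log(2) * relative_entropy_bits(population)


# Degenerate levels: both populations are 1/2, so each spin is worth one bit.
p_ground, p_excited = gibbs_populations(0.0, 300.0)
bits_per_spin = relative_entropy_bits(p_ground)
```

With degenerate levels the sketch returns one bit per spin and a work of kT ln 2, the familiar Szilard value; a nonzero gap makes the less populated eigenstate worth more bits than the more populated one.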

Tight-labeling a cluster C of N spins implies applying a Shannon coding procedure that assigns a sequence L of tagging qubits to C. The coding procedure defined in Section 2 consists of two steps. We have found that the best way to keep track of the costs is to employ unitary transformations. They are defined by their action on the elements of a particular basis . We require to be diagonal in . For , there are many possible choices of . Let be the eigenvalue of for the element b of . Our coding procedure begins by assigning to b a sequence of tagging qubits. The leading represents the Shannon code for b, while the trailing ones are state qubits (see Figure 3). The operation is defined in Equation (2). Then, the valuable trailing qubits are recovered by the trimming procedure described in Appendix C and represented in Figure 4.
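The length of the Shannon codeword assigned to a basis element can be illustrated with a few lines of Python. The sketch assumes a product basis of N two-level spins, a Gibbs state with a hypothetical ground-state population `p_ground`, and the usual Shannon length, namely the ceiling of minus the binary logarithm of the probability of the basis element. The function names are illustrative and not taken from Section 2.

```python
from math import ceil, log2


def shannon_length(log2_probability):
    """Shannon codeword length for an element with the given log2-probability."""
    return ceil(-log2_probability)


def label_length_per_spin(n_spins, n_excited, p_ground):
    """Codeword length per spin for a product-basis element of the cluster.

    The element has `n_excited` spins in the excited state and the rest in the
    ground state; its probability under the product Gibbs state factorizes.
    """
    log2_probability = ((n_spins - n_excited) * log2(p_ground)
                        + n_excited * log2(1.0 - p_ground))
    return shannon_length(log2_probability) / n_spins


# All spins in the state of population 0.25: two bits per spin.
print(label_length_per_spin(10, 0, 0.25))
```

Because the ceiling adds at most one bit to the whole codeword, the length per spin differs from minus the binary logarithm of the single-spin population by at most 1/N, which vanishes in the limit of large clusters.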

In Section 2, we prove that the tight-labeling of a cluster of N spins in state , according to a Shannon coding system optimized for a distribution of states given by , requires exactly bits per spin in the limit. This is the same number of bits per spin found for the Thermodynamic approach at the beginning of this section. However, we would like to underline the different contexts in which they have been derived. They come as answers to the following questions:

- How many bits from an information reservoir are needed to match the work that is required to take a cluster of N spins out of thermal equilibrium?

- How many bits are needed to label the state of a cluster of N spins using a coding system optimized for a given distribution of states?

Note that, in the second context, there are no concepts like temperature, heat, work or Hamiltonian. However, the coincidence of the answers to both questions could have been predicted by abstracting the first situation. Considering the initial and final states of the spins and of the information reservoir bits, the net result is that some spins are obtained in a known state at the expense of a number of bits from an information reservoir. The same has happened in the labeling scenario. Before the operation, in both cases, picking a cluster at random implies a quantum state for it. At the end, we can know that it is in state .

For the reverse process, given the unitarity of the tight-labeling procedure, un-labeling a state restores the state of the label qubits. In the Thermodynamic scenario, processing a spin in state , produces an average amount of work that can be used to reset the given number of informational bits. Again, in both contexts, we see the same initial and final situations.

Collections of spin clusters sampled from the same state can then undergo a loose-labeling procedure by which their tight-labels are reassigned at random. In this particular example, defined by a pure state , all the labels are equal and no informational qubits are recovered. In fact, , so that the unitary costs of tight- and loose-labeling are the same and are given by the relative entropy .

The most relevant result of the paper is that this situation holds even when the state to be labeled does not commute with the state that describes the underlying distribution, provided that a particular basis is chosen to define the coding procedure. In the example presented here, it is the basis that diagonalizes the magnitude and the z component of the total spin of the cluster.

Let us consider a state and its density matrix . Unlike , does not commute with . It is impossible to find a basis that simultaneously diagonalizes and . Therefore, the tight-label assigned to must be a linear combination of the labels defined for the elements of . In the basis, the collection of M systems in the state is described by a superposition of basis states. Some of them contain the same labels for the M clusters, but others do not and thus may undergo a shuffling process that allows the recovery of some reset qubits. Its average number per cluster is given by , as defined in Appendix D. However, if the basis is chosen, then tends to 0 and the relative entropy is recovered as the unit cost, as explained in Section 3.
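One possible way to see why the choice of basis matters is to compare the entropy of the label distribution in two bases. The sketch below is an illustration under explicit assumptions that go beyond the text: the spins are spin-1/2 systems, the cluster holds N copies of a pure state whose weight on the down state of the z basis is a hypothetical parameter `q`, labels identify the basis elements, and N copies of a pure state are taken to occupy only the maximal total-spin multiplet, so that the label distribution in the total-spin basis is the binomial distribution over the N + 1 values of the z component. Function names are illustrative.

```python
from math import exp, lgamma, log, log2


def product_basis_entropy_per_spin(q):
    """Entropy per spin (bits) of the labels in the product basis, 0 < q < 1."""
    return -q * log2(q) - (1.0 - q) * log2(1.0 - q)


def total_spin_entropy_per_spin(n_spins, q):
    """Entropy per spin (bits) of the binomial distribution over the z component."""
    entropy = 0.0
    for k in range(n_spins + 1):
        # Natural log of the binomial probability of k down spins.
        ln_p = (lgamma(n_spins + 1) - lgamma(k + 1) - lgamma(n_spins - k + 1)
                + k * log(q) + (n_spins - k) * log(1.0 - q))
        p = exp(ln_p)
        if p > 0.0:
            entropy -= p * ln_p / log(2)
    return entropy / n_spins


for n in (10, 100, 1000):
    print(n, total_spin_entropy_per_spin(n, 0.5), product_basis_entropy_per_spin(0.5))
```

Under these assumptions the entropy per spin stays constant in the product basis, whereas in the total-spin basis it is bounded by the logarithm of N + 1 divided by N and therefore decreases toward zero as the cluster grows.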

The same result extends to the case when the labels are shuffled in a collection of clusters at any mixed state , whether or not it commutes with , as described in Section 3.

References

- Deffner, S.; Jarzynski, C. Information Processing and the Second Law of Thermodynamics: An Inclusive, Hamiltonian Approach. Phys. Rev. X 2013, 3, 041003.

- Parrondo, J.M.; Horowitz, J.M.; Sagawa, T. Thermodynamics of information. Nat. Phys. 2015, 11, 131–139.

- Lloyd, S. Quantum-mechanical Maxwell’s demon. Phys. Rev. A 1997, 56, 3374–3382.

- Piechocinska, B. Information erasure. Phys. Rev. A 2000, 61, 062314.

- Scully, M.O. Extracting Work from a Single Thermal Bath via Quantum Negentropy. Phys. Rev. Lett. 2001, 87, 220601.

- Zurek, W.H. Maxwell’s Demon, Szilard’s Engine and Quantum Measurements. arXiv 2003, arXiv:quant-ph/0301076.

- Scully, M.O.; Rostovtsev, Y.; Sariyanni, Z.E.; Zubairy, M.S. Using quantum erasure to exorcize Maxwell’s demon: I. Concepts and context. Phys. E 2005, 29, 29–39.

- Sariyanni, Z.E.; Rostovtsev, Y.; Zubairy, M.S.; Scully, M.O. Using quantum erasure to exorcize Maxwell’s demon: III. Implementation. Phys. E 2005, 29, 47–52.

- Rostovtsev, Y.; Sariyanni, Z.E.; Zubairy, M.S.; Scully, M.O. Using quantum erasure to exorcise Maxwell’s demon: II. Analysis. Phys. E 2005, 29, 40–46.

- Quan, H.T.; Wang, Y.D.; Liu, Y.X.; Sun, C.P.; Nori, F. Maxwell’s Demon Assisted Thermodynamic Cycle in Superconducting Quantum Circuits. Phys. Rev. Lett. 2006, 97, 180402.

- Zhou, Y.; Segal, D. Minimal model of a heat engine: Information theory approach. Phys. Rev. E 2010, 82, 011120.

- Toyabe, S.; Sagawa, T.; Ueda, M.; Muneyuki, E.; Sano, M. Information heat engine: Converting information to energy by feedback control. Nat. Phys. 2010, 6, 988–992.

- Rio, L.D.; Aberg, J.; Renner, R.; Dahlsten, O.; Vedral, V. The thermodynamic meaning of negative entropy. Nature 2011, 474, 61–63.

- Kim, S.; Sagawa, T.; Liberato, S.D.; Ueda, M. Quantum Szilard Engine. Phys. Rev. Lett. 2011, 106, 070401.

- Plesch, M.; Dahlsten, O.; Goold, J.; Vedral, V. Comment on “Quantum Szilard Engine”. Phys. Rev. Lett. 2013, 111, 188901.

- Funo, K.; Watanabe, Y.; Ueda, M. Thermodynamic work gain from entanglement. Phys. Rev. A 2013, 88, 052319.

- Diaz de la Cruz, J.M.; Martin-Delgado, M.A. Quantum-information engines with many-body states attaining optimal extractable work with quantum control. Phys. Rev. A 2014, 89, 032327.

- Vidrighin, M.D.; Dahlsten, O.; Barbieri, M.; Kim, M.S.; Vedral, V.; Walmsley, I.A. Photonic Maxwell’s Demon. Phys. Rev. Lett. 2016, 116, 050401.

- Hewgill, A.; Ferraro, A.; De Chiara, G. Quantum correlations and thermodynamic performances of two-qubit engines with local and common baths. Phys. Rev. A 2018, 98, 042102.

- Paneru, G.; Lee, D.Y.; Tlusty, T.; Pak, H.K. Lossless Brownian Information Engine. Phys. Rev. Lett. 2018, 120, 020601.

- Seah, S.; Nimmrichter, S.; Scarani, V. Work production of quantum rotor engines. New J. Phys. 2018, 20, 043045.

- Uzdin, R. Coherence-Induced Reversibility and Collective Operation of Quantum Heat Machines via Coherence Recycling. Phys. Rev. Appl. 2016, 6, 024004.

- Mandal, D.; Quan, H.T.; Jarzynski, C. Maxwell’s Refrigerator: An Exactly Solvable Model. Phys. Rev. Lett. 2013, 111, 030602.

- Barato, A.C.; Seifert, U. Stochastic thermodynamics with information reservoirs. Phys. Rev. E 2014, 90, 042150.

- Chapman, A.; Miyake, A. How an autonomous quantum Maxwell demon can harness correlated information. Phys. Rev. E 2015, 92, 062125.

- Manzano, G.; Plastina, F.; Zambrini, R. Optimal Work Extraction and Thermodynamics of Quantum Measurements and Correlations. Phys. Rev. Lett. 2018, 121, 120602.

- Strasberg, P.; Schaller, G.; Brandes, T.; Esposito, M. Quantum and Information Thermodynamics: A Unifying Framework Based on Repeated Interactions. Phys. Rev. X 2017, 7, 021003.

- Zurek, W.H. Eliminating ensembles from equilibrium statistical physics: Maxwell’s demon, Szilard’s engine, and thermodynamics via entanglement. Phys. Rep. 2018, 755, 1–21.

- Šafránek, D.; Deffner, S. Quantum Zeno effect in correlated qubits. Phys. Rev. A 2018, 98, 032308.

- Stevens, J.; Deffner, S. Quantum to classical transition in an information ratchet. Phys. Rev. E 2019, 99, 042129.

- Ollivier, H.; Zurek, W. Quantum Discord: A measure of the quantumness of correlations. Phys. Rev. Lett. 2002, 88, 017901.

- Oppenheim, J.; Horodecki, M.; Horodecki, P.; Horodecki, R. Thermodynamical Approach to Quantifying Quantum Correlations. Phys. Rev. Lett. 2002, 89, 180402.

- Zurek, W.H. Quantum discord and Maxwell’s demons. Phys. Rev. A 2003, 67, 012320.

- De Liberato, S.; Ueda, M. Carnot’s theorem for nonthermal stationary reservoirs. Phys. Rev. E 2011, 84, 051122.

- Park, J.; Kim, K.; Sagawa, T.; Kim, S. Heat Engine Driven by Purely Quantum Information. 2013. Available online: http://xxx.lanl.gov/abs/1302.3011 (accessed on 23 January 2020).

- Dann, R.; Kosloff, R. Quantum Signatures in the Quantum Carnot Cycle. arXiv 2019, arXiv:1906.06946.

- Gardas, B.; Deffner, S. Thermodynamic universality of quantum Carnot engines. Phys. Rev. E 2015, 92, 042126.

- Alicki, R.; Kosloff, R. Introduction to Quantum Thermodynamics: History and Prospects. In Thermodynamics in the Quantum Regime: Fundamental Aspects and New Directions; Binder, F., Correa, L.A., Gogolin, C., Anders, J., Adesso, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–33.

- Vedral, V. The role of relative entropy in quantum information theory. Rev. Mod. Phys. 2002, 74, 197–234.

- Hasegawa, H.; Ishikawa, J.; Takara, K.; Driebe, D. Generalization of the second law for a nonequilibrium initial state. Phys. Lett. A 2010, 374, 1001–1004.

- Sagawa, T. Second Law-Like Inequalities with Quantum Relative Entropy: An Introduction. 2012. Available online: http://xxx.lanl.gov/abs/1202.0983 (accessed on 23 January 2020).

- Brandao, F.; Horodecki, M.; Ng, N.; Oppenheim, J.; Wehner, S. The second laws of quantum thermodynamics. Proc. Natl. Acad. Sci. USA 2015, 112, 3275–3279.

- Szilard, L. Über die Entropieverminderung in einem thermodynamischen System bei Eingriffen intelligenter Wesen. Z. Phys. 1929, 53, 840–856.

- Diazdelacruz, J. Entropy Distribution in a Quantum Informational Circuit of Tunable Szilard Engines. Entropy 2019, 21, 980.

- Goold, J.; Huber, M.; Riera, A.; del Rio, L.; Skrzypczyk, P. The role of quantum information in thermodynamics—A topical review. J. Phys. A 2016, 49, 143001.

- Insko, E.; Elliott, M.; Schotland, J.; Leigh, J. Generalized Reciprocity. J. Magn. Reson. 1998, 131, 111–117.

- Diaz de la Cruz, J.M.; Martin-Delgado, M.A. Enhanced Energy Distribution for Quantum Information Heat Engines. Entropy 2016, 18, 335.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).