Aerial Video Trackers Review

Abstract

1. Introduction

1.1. Target Specificity

- Dim small targets: Targets whose imaging size is relatively small because of the shooting angle and shooting distance; specifically, targets whose imaging size is less than 0.12% of the total number of pixels [9].

- Weakly blurred (fuzzy) targets: Targets whose image is blurred because of the exposure time or flight jitter.

- Weak-contrast targets: In a noisy recognition environment with a low signal-to-noise ratio (SNR), the target and the moving background are similar in color and texture features. Hence, the contrast between the target and the background is low and the target's texture features are not readily identified, although the target itself is still present in the scene.

- Occluded targets: Targets that are temporarily occluded by the complex environmental background or are hidden for a long time during aerial photography tracking.

- Fast-moving targets: Targets that dodge, flee or otherwise move quickly. This category also includes targets that appear to move quickly in the image because of shaking of the UAV fuselage, obstacle-avoidance maneuvers and wind.

- Common targets: Targets with normal behavior and clear images.
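
The size criterion for dim small targets is simple arithmetic on the bounding box. The sketch below is a minimal Python illustration, assuming the target's imaged size is approximated by its axis-aligned bounding-box area; the helper names and the 1280 x 720 example frame are hypothetical, and the intensity ("dim") aspect is not checked.

```python
# Hypothetical helpers illustrating the size criterion above: a target counts
# as "dim small" when its imaged area is below 0.12% of the frame's pixels.
# Assumption: the imaged size is approximated by the bounding-box area.
SMALL_TARGET_RATIO = 0.0012


def area_ratio(box_w: float, box_h: float, img_w: int, img_h: int) -> float:
    """Fraction of the frame covered by an axis-aligned bounding box."""
    if box_w < 0 or box_h < 0:
        raise ValueError("box sides must be non-negative")
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image size must be positive")
    return (box_w * box_h) / (img_w * img_h)


def is_dim_small(box_w: float, box_h: float, img_w: int, img_h: int) -> bool:
    """True when the box area is strictly below 0.12% of the image pixels."""
    return area_ratio(box_w, box_h, img_w, img_h) < SMALL_TARGET_RATIO


def max_small_area(img_w: int, img_h: int) -> float:
    """Largest box area, in pixels, that still falls under the threshold."""
    return SMALL_TARGET_RATIO * img_w * img_h


if __name__ == "__main__":
    # Example frame size (an assumption): 1280 x 720.
    print(max_small_area(1280, 720))        # about 1106 pixels
    print(is_dim_small(30, 30, 1280, 720))  # True: 900 px
    print(is_dim_small(40, 30, 1280, 720))  # False: 1200 px
```

For a 1280 x 720 frame the threshold is roughly 1106 pixels, so a 30 x 30 box qualifies while a 40 x 30 box does not.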

1.2. Background Complexity

1.3. Tracking Diversity

- We conduct a comprehensive benchmark test of aerial video trackers based on handcrafted features and on deep learning.

- We take target scale and image definition (sharpness) as the classification criteria and conduct a complete comparative analysis of the three tracking schemes.

- We benchmark 20 trackers based on handcrafted features, deep features, Siamese networks and attention mechanisms.

- We compare the performance of the trackers in various challenging environments so that researchers can better understand the progress of research on aerial video tracking.

2. Aerial Video Datasets

3. Traditional Target Tracking Algorithm

3.1. Common Targets

3.2. Weak Targets

3.2.1. Dim Small Targets

3.2.2. Weak Blurred Targets

3.2.3. Weak-Contrast Targets

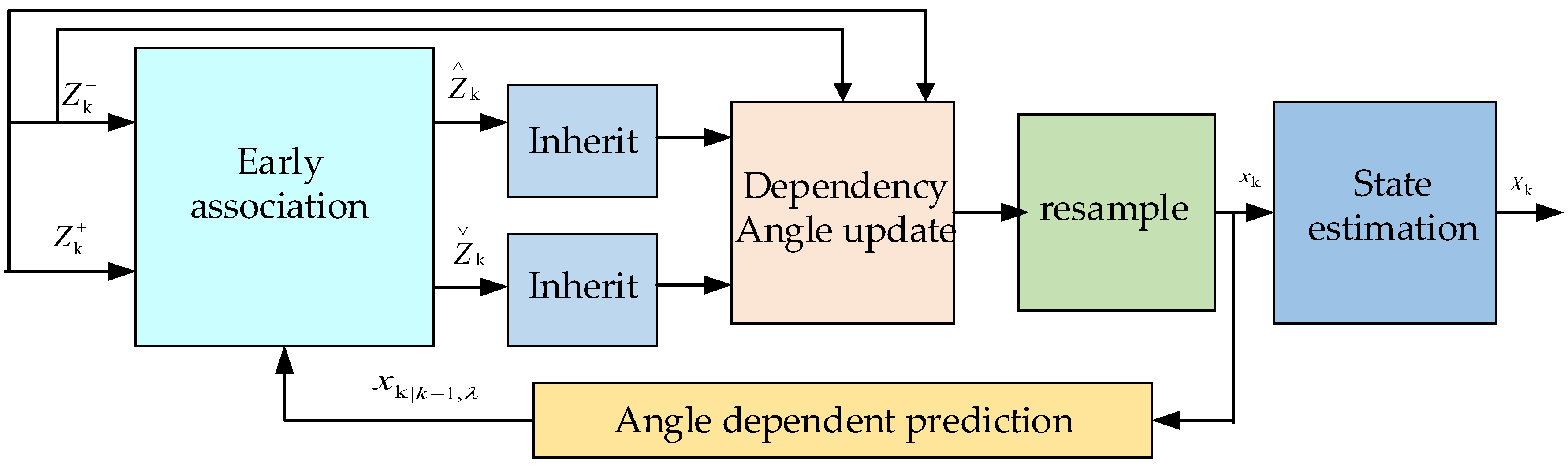

3.3. Occluded Targets and Fast-Moving Targets

4. Target Tracking Algorithm Based on a Deep Learning Network

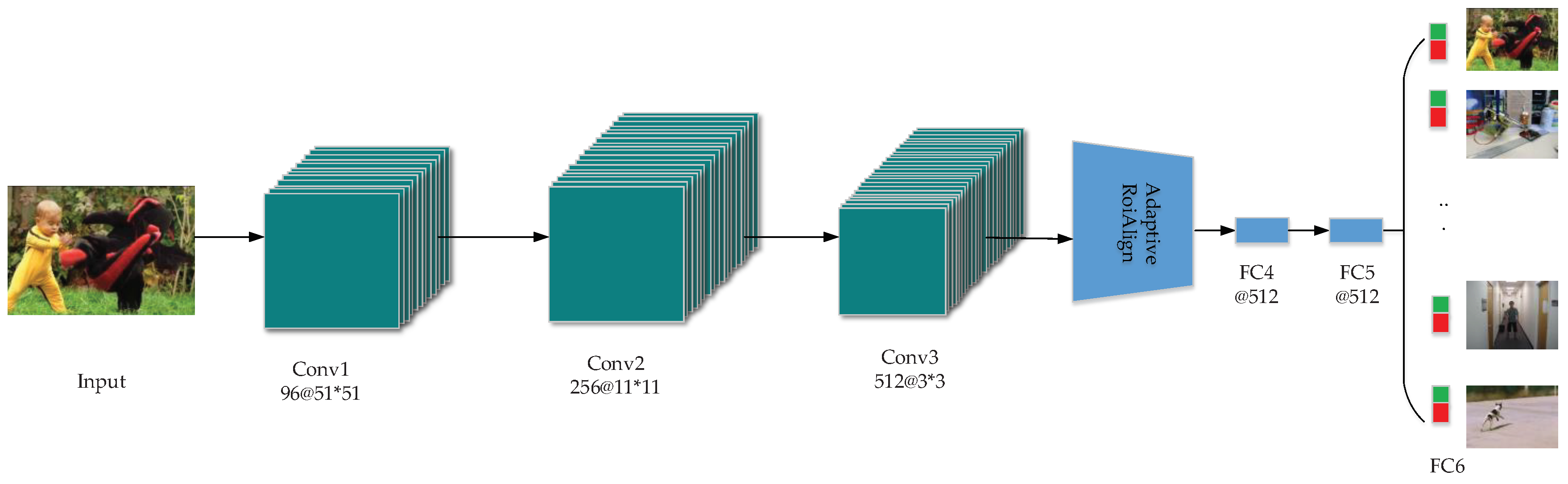

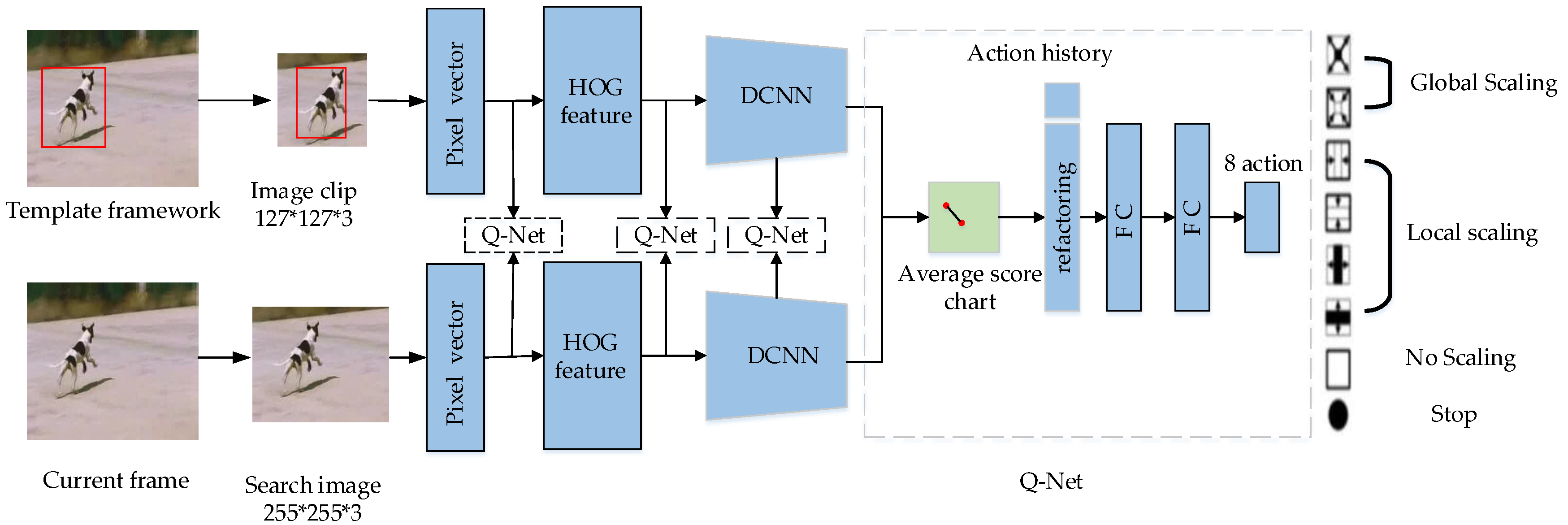

4.1. Depth Features

| Algorithm 1. Online tracking process of the RT-MDNet algorithm |
|---|
| Input: pretrained RT-MDNet convolution weights (the weight values of each convolution layer) and the initial target state. |
| Output: adjusted target state. |
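
The step-by-step body of Algorithm 1 did not survive extraction. As a hedged illustration of the general idea behind MDNet-family online tracking (sample candidate boxes around the previous target state, score them, keep the best), the sketch below uses a hypothetical scoring function in place of the pretrained network; the Gaussian noise levels, the candidate count and all function names are illustrative assumptions, and online model updating is omitted.

```python
import math
import random
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (center_x, center_y, width, height)


def sample_candidates(prev: Box, n: int, rng: random.Random,
                      trans_sigma: float = 0.1,
                      scale_sigma: float = 0.05) -> List[Box]:
    """Draw n candidate boxes from Gaussian perturbations of the previous state.

    Translation noise is proportional to the mean box side; scale noise is
    drawn in log space so that widths and heights stay positive.
    (Noise levels are illustrative assumptions.)
    """
    cx, cy, w, h = prev
    side = (w + h) / 2.0
    candidates = []
    for _ in range(n):
        s = math.exp(rng.gauss(0.0, scale_sigma))
        candidates.append((cx + rng.gauss(0.0, trans_sigma) * side,
                           cy + rng.gauss(0.0, trans_sigma) * side,
                           w * s, h * s))
    return candidates


def track_frame(prev: Box, score: Callable[[Box], float],
                rng: random.Random, n: int = 256) -> Tuple[Box, float]:
    """One online step: return the candidate with the highest target score."""
    candidates = sample_candidates(prev, n, rng)
    best = max(candidates, key=score)
    return best, score(best)


if __name__ == "__main__":
    # Hypothetical stand-in for the network's target score: the closer a
    # candidate's center is to a fixed "true" center, the higher the score.
    true_cx, true_cy = 105.0, 100.0

    def toy_score(box: Box) -> float:
        return -math.hypot(box[0] - true_cx, box[1] - true_cy)

    rng = random.Random(0)
    state: Box = (100.0, 100.0, 40.0, 40.0)  # initial target state
    for _ in range(10):
        state, best_score = track_frame(state, toy_score, rng)
    print(state, best_score)
```

In a real tracker the score would come from the network applied to each candidate region, and a low best score would typically trigger a model update or re-detection.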

| Algorithm 2. Action selection process for the EAST network |
|---|
| Input: feature map; action index eigth_actionindex; the action values h from the first four layers; the action list. |
| Output: action value of the current convolution layer. |
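
EAST learns a policy that decides, layer by layer, whether the features computed so far are sufficient or deeper layers are needed, so easy frames can leave the cascade early. The minimal sketch below shows only that greedy action-selection loop; the action values are hand-written numbers standing in for the learned agent's outputs, and the two-entry action list is a hypothetical simplification of the action list in Algorithm 2.

```python
from typing import List, Sequence, Tuple


def select_action(q_values: Sequence[float]) -> int:
    """Greedy policy: index of the largest action value (first index on ties)."""
    if not q_values:
        raise ValueError("q_values must be non-empty")
    return max(range(len(q_values)), key=lambda i: q_values[i])


def run_cascade(per_layer_q: List[Sequence[float]], actions: Sequence[str],
                stop_action: str = "stop") -> Tuple[int, List[str]]:
    """Walk a feature cascade layer by layer and exit early on the stop action.

    per_layer_q[k] holds the action values computed from layer k's features
    (hand-written here; a learned agent would produce them).
    Returns how many layers were evaluated and the actions chosen on the way.
    """
    taken: List[str] = []
    for depth, q in enumerate(per_layer_q, start=1):
        action = actions[select_action(q)]
        taken.append(action)
        if action == stop_action:
            return depth, taken
    return len(per_layer_q), taken


if __name__ == "__main__":
    actions = ["continue", "stop"]   # hypothetical action list
    q_per_layer = [[0.9, 0.1],       # layer 1: not confident yet
                   [0.6, 0.4],       # layer 2: still continue
                   [0.2, 0.8],       # layer 3: confident, stop here
                   [0.1, 0.9]]       # layer 4: never reached
    print(run_cascade(q_per_layer, actions))  # (3, ['continue', 'continue', 'stop'])
```

The saving comes from never computing the deeper layers once the stop action wins, which is why such cascades can speed up tracking on easy frames.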

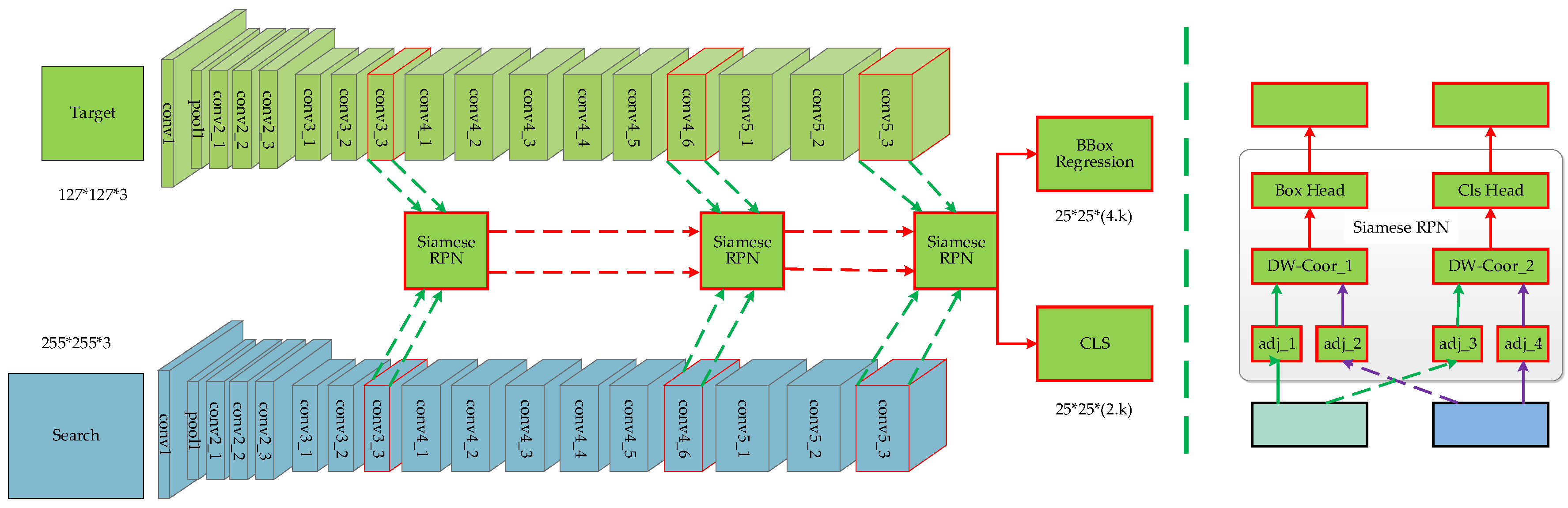

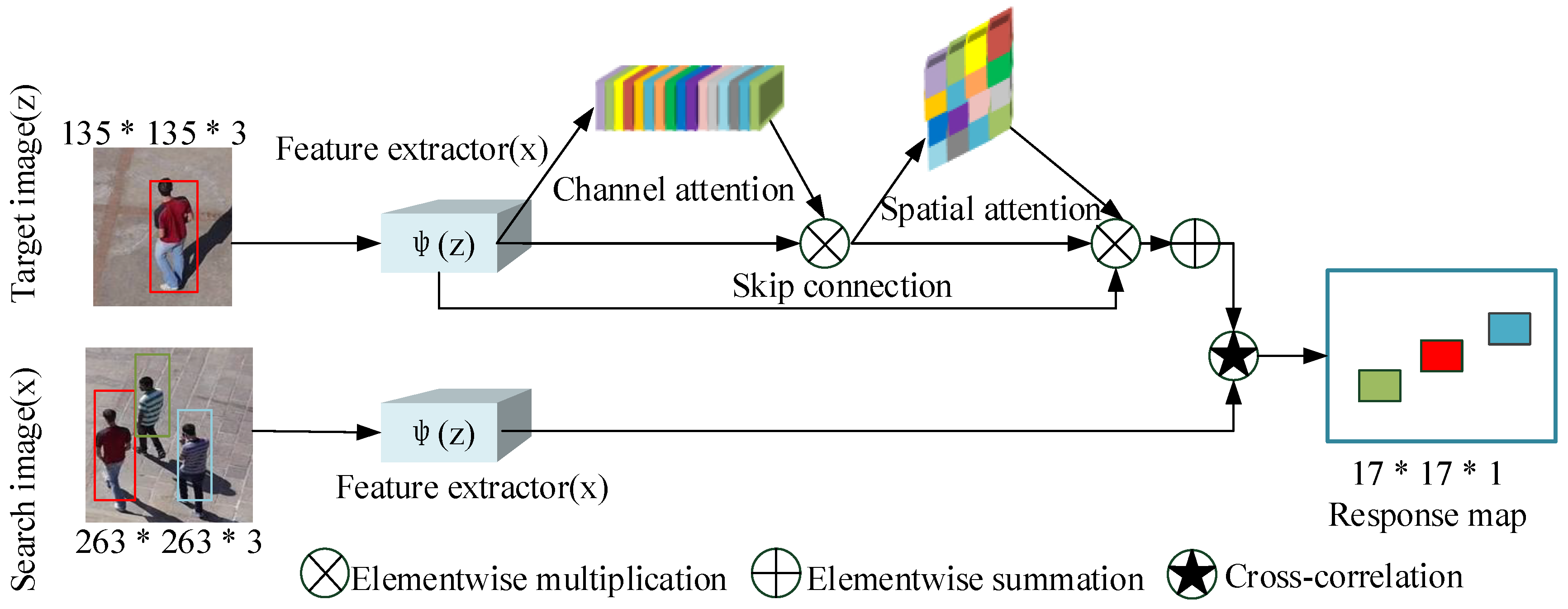

4.2. Siamese Network

| Algorithm 3. SiamRPN block |
|---|
| Input: feature maps of the template frame and of the detection frame (the template-branch and detection-branch feature vectors). |
| Output: classification results and bounding-box (bbox) regression results. |
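
A SiamRPN block correlates kernels derived from the template-frame features with the detection-frame features to produce per-anchor classification and regression maps. The pure-Python sketch below assumes k anchors, 2k classification kernels ordered as (background, foreground) per anchor, 4k regression kernels ordered as (dx, dy, dw, dh), and Faster R-CNN-style box decoding. In the real network the kernels are produced by learned convolutions on the template features; here they are hand-written, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Tensor = List[Matrix]  # indexed as [channel][row][col]


def xcorr(search: Tensor, kernel: Tensor) -> Matrix:
    """'Valid' cross-correlation of a C-channel kernel over a C-channel map,
    summed over channels to give one response map."""
    C = len(search)
    H, W = len(search[0]), len(search[0][0])
    h, w = len(kernel[0]), len(kernel[0][0])
    return [[sum(search[c][i + u][j + v] * kernel[c][u][v]
                 for c in range(C) for u in range(h) for v in range(w))
             for j in range(W - w + 1)]
            for i in range(H - h + 1)]


def rpn_head(search: Tensor, cls_kernels: List[Tensor],
             reg_kernels: List[Tensor]) -> Tuple[List[Matrix], List[Matrix]]:
    """2k classification maps and 4k regression maps for k anchors."""
    return ([xcorr(search, k) for k in cls_kernels],
            [xcorr(search, k) for k in reg_kernels])


def foreground_prob(bg_logit: float, fg_logit: float) -> float:
    """Two-way softmax for one anchor at one position."""
    return 1.0 / (1.0 + math.exp(bg_logit - fg_logit))


def decode(anchor: Tuple[float, float, float, float],
           deltas: Tuple[float, float, float, float]) -> Tuple[float, float, float, float]:
    """Apply regression offsets (dx, dy, dw, dh) to an anchor (cx, cy, w, h)."""
    ax, ay, aw, ah = anchor
    dx, dy, dw, dh = deltas
    return (ax + dx * aw, ay + dy * ah, aw * math.exp(dw), ah * math.exp(dh))


if __name__ == "__main__":
    search = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]       # 1 channel, 3 x 3
    cls_k = [[[[0, 0], [0, 0]]], [[[1, 0], [0, 1]]]]   # k = 1: (bg, fg) kernels
    reg_k = [[[[0, 0], [0, 0]]] for _ in range(4)]     # zero offsets
    cls, reg = rpn_head(search, cls_k, reg_k)
    print(cls[1])  # [[6, 8], [12, 14]]
    rows, cols = len(cls[0]), len(cls[0][0])
    best = max(((i, j) for i in range(rows) for j in range(cols)),
               key=lambda p: foreground_prob(cls[0][p[0]][p[1]], cls[1][p[0]][p[1]]))
    print(best)  # (1, 1)
    anchor = (10.0, 10.0, 4.0, 2.0)  # hypothetical anchor (cx, cy, w, h)
    print(decode(anchor, tuple(reg[m][best[0]][best[1]] for m in range(4))))
```

Splitting classification and regression into separate correlation branches is what lets the block output both a foreground score and a refined box per anchor in one pass.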

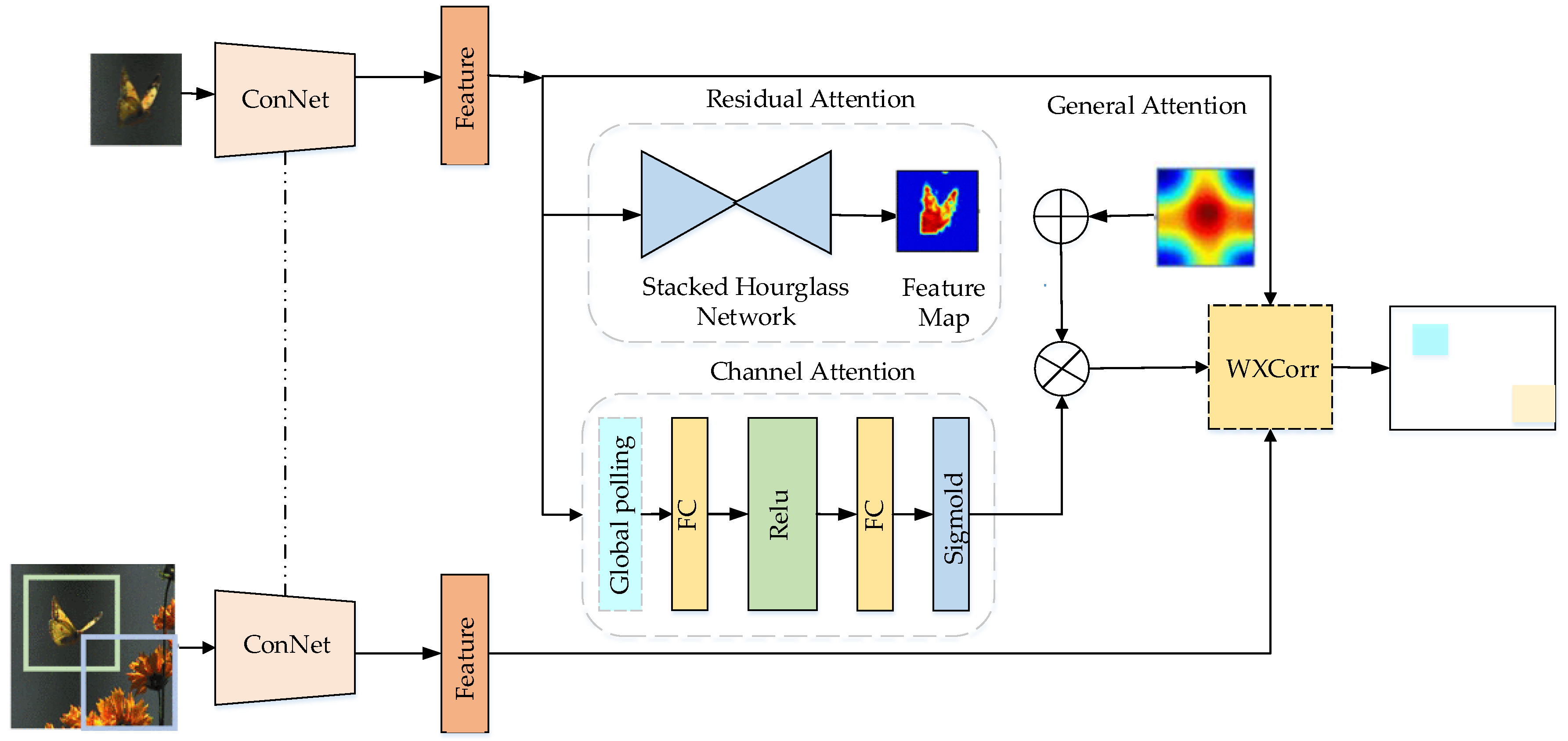

4.3. Attention Mechanism

| Algorithm 4. Attention fusion process of the RASNet algorithm |
|---|
| Input: feature map. |
| Output: tracking box q with the largest response value. |
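
RASNet weights its cross-correlation with attention: a spatial attention map formed from a general part and a residual part, combined with per-channel attention, with the tracking box placed at the peak of the weighted response. The sketch below is a simplified, hypothetical rendering of that structure with hand-set attention values; mapping the peak back to image coordinates (network stride, scale search) is omitted.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Tensor = List[Matrix]  # [channel][row][col]


def combine_spatial(general: Matrix, residual: Matrix) -> Matrix:
    """Spatial attention = general (shared) part + residual (per-target) part."""
    return [[g + r for g, r in zip(grow, rrow)]
            for grow, rrow in zip(general, residual)]


def weighted_xcorr(search: Tensor, template: Tensor,
                   spatial: Matrix, channel: List[float]) -> Matrix:
    """Cross-correlation in which each template element (c, u, v) is weighted
    by spatial[u][v] * channel[c] before being matched with the search map."""
    C = len(template)
    h, w = len(template[0]), len(template[0][0])
    H, W = len(search[0]), len(search[0][0])
    out = []
    for i in range(H - h + 1):
        row = []
        for j in range(W - w + 1):
            row.append(sum(channel[c] * spatial[u][v] * template[c][u][v]
                           * search[c][i + u][j + v]
                           for c in range(C) for u in range(h) for v in range(w)))
        out.append(row)
    return out


def peak(response: Matrix) -> Tuple[int, int]:
    """(row, col) of the largest response value."""
    return max(((i, j) for i in range(len(response)) for j in range(len(response[0]))),
               key=lambda p: response[p[0]][p[1]])


if __name__ == "__main__":
    search = [[[0, 0, 0], [0, 9, 0], [0, 0, 0]],   # channel 0: peak in the center
              [[5, 0, 0], [0, 0, 0], [0, 0, 0]]]   # channel 1: peak top-left
    template = [[[1.0]], [[1.0]]]                  # 1 x 1 template, 2 channels
    spatial = combine_spatial([[0.5]], [[0.5]])    # hand-set attention: [[1.0]]
    print(peak(weighted_xcorr(search, template, spatial, [1.0, 1.0])))  # (1, 1)
    print(peak(weighted_xcorr(search, template, spatial, [0.1, 1.0])))  # (0, 0)
```

The usage shows the point of the attention weights: down-weighting one channel moves the response peak, and hence the selected box, to a different location.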

| Algorithm 5. Channel-spatial attention calculation process in the SCSAtt algorithm |
|---|
| Input: feature map. |
| Output: channel-spatial attention. |
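
Stacked channel-spatial attention is commonly built in the CBAM style: channel weights from average- and max-pooled descriptors passed through a shared two-layer MLP, followed by a spatial map from a convolution over the channel-wise mean and max. Whether SCSAtt uses exactly this layout is an assumption here; the weight shapes, reduction ratio, kernel size and function names in the sketch are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]
Tensor = List[Matrix]  # [channel][row][col]


def _sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def _matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def channel_attention(f: Tensor, w1: Matrix, w2: Matrix) -> List[float]:
    """Mc = sigmoid(MLP(avg_pool(F)) + MLP(max_pool(F))), shared 2-layer MLP.

    w1 has shape [C/r][C] (reduction), w2 has shape [C][C/r] (expansion).
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]

    def mlp(v: List[float]) -> List[float]:
        hidden = [max(0.0, x) for x in _matvec(w1, v)]  # ReLU
        return _matvec(w2, hidden)

    return [_sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(f: Tensor, kernel: Tensor) -> Matrix:
    """Ms = sigmoid(conv_kxk([mean_c F ; max_c F])) with zero 'same' padding.

    kernel has shape [2][k][k] with odd k: one slice for the channel-mean map
    and one for the channel-max map.
    """
    C, H, W = len(f), len(f[0]), len(f[0][0])
    mean_map = [[sum(f[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    max_map = [[max(f[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps = (mean_map, max_map)
    k = len(kernel[0])
    p = k // 2
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for m in range(2):
                for u in range(k):
                    for v in range(k):
                        y, x = i + u - p, j + v - p
                        if 0 <= y < H and 0 <= x < W:
                            s += kernel[m][u][v] * maps[m][y][x]
            out[i][j] = _sigmoid(s)
    return out


def channel_spatial_attention(f: Tensor, w1: Matrix, w2: Matrix,
                              kernel: Tensor) -> Tensor:
    """Apply channel attention first, then spatial attention on the result."""
    C, H, W = len(f), len(f[0]), len(f[0][0])
    mc = channel_attention(f, w1, w2)
    f1 = [[[mc[c] * f[c][i][j] for j in range(W)] for i in range(H)] for c in range(C)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * f1[c][i][j] for j in range(W)] for i in range(H)] for c in range(C)]


if __name__ == "__main__":
    feat = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
            [[9.0, 8.0, 7.0], [6.0, 5.0, 4.0], [3.0, 2.0, 1.0]]]  # C = 2, 3 x 3
    w1 = [[0.0, 0.0]]        # C/r = 1 hidden unit (hypothetical weights)
    w2 = [[0.0], [0.0]]
    kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]  # 3 x 3 conv
    out = channel_spatial_attention(feat, w1, w2, kernel)
    # With all-zero weights both attention maps equal sigmoid(0) = 0.5,
    # so every feature value is scaled by 0.25.
    print(out[0][0])  # [0.25, 0.5, 0.75]
```

Applying channel attention before spatial attention lets the spatial map be computed from features that have already been re-weighted toward informative channels.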

5. Experiment

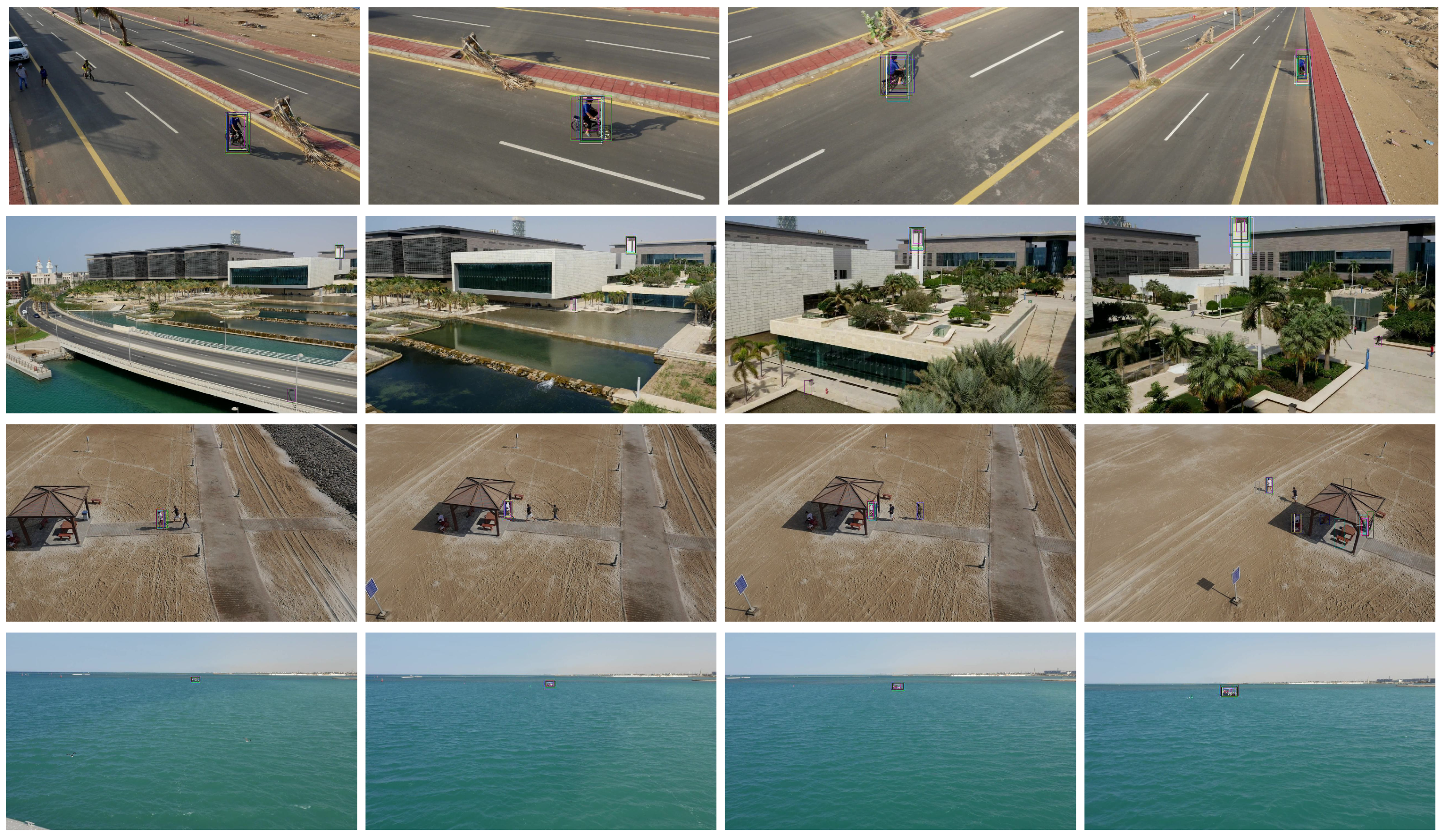

5.1. Datasets

5.1.1. Baseline Assessment

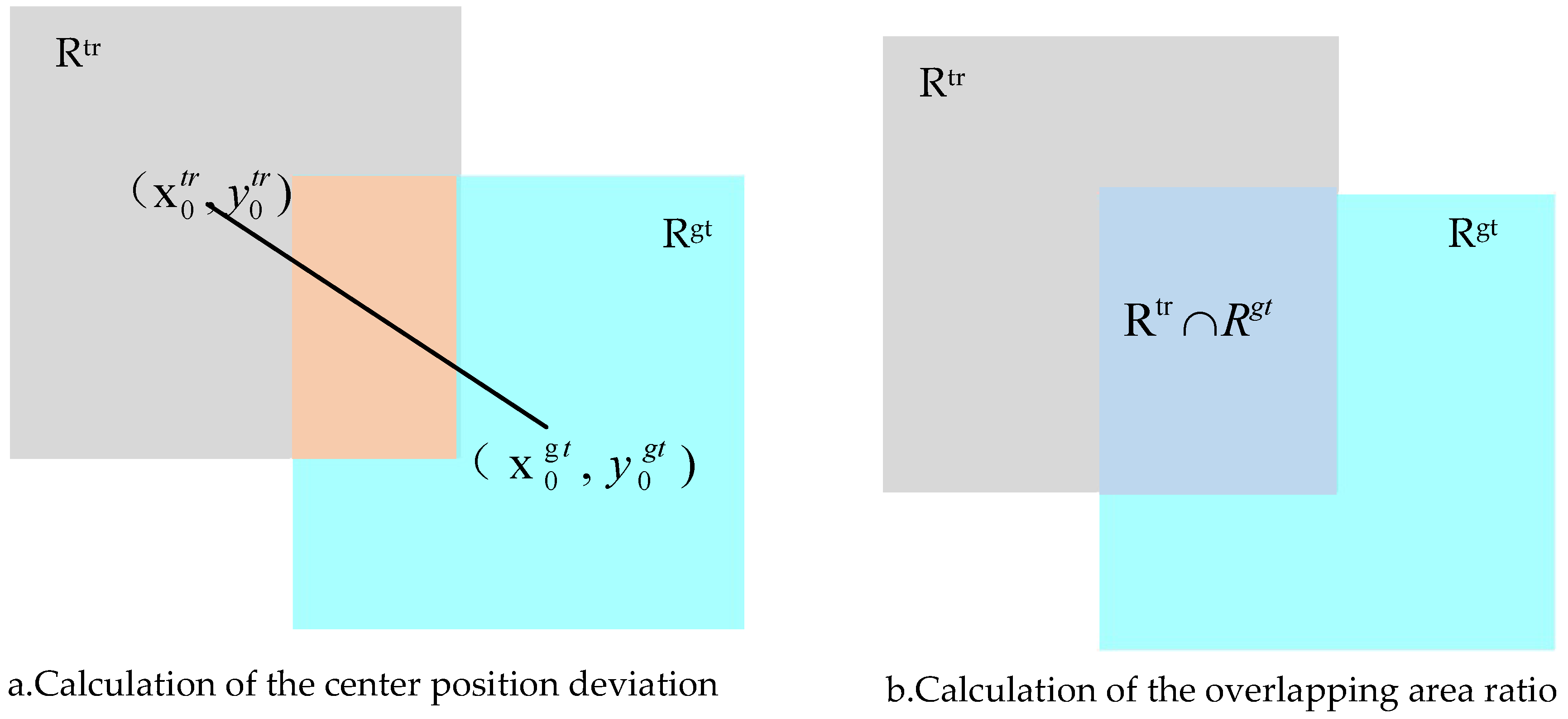

5.1.2. Evaluating Indicators

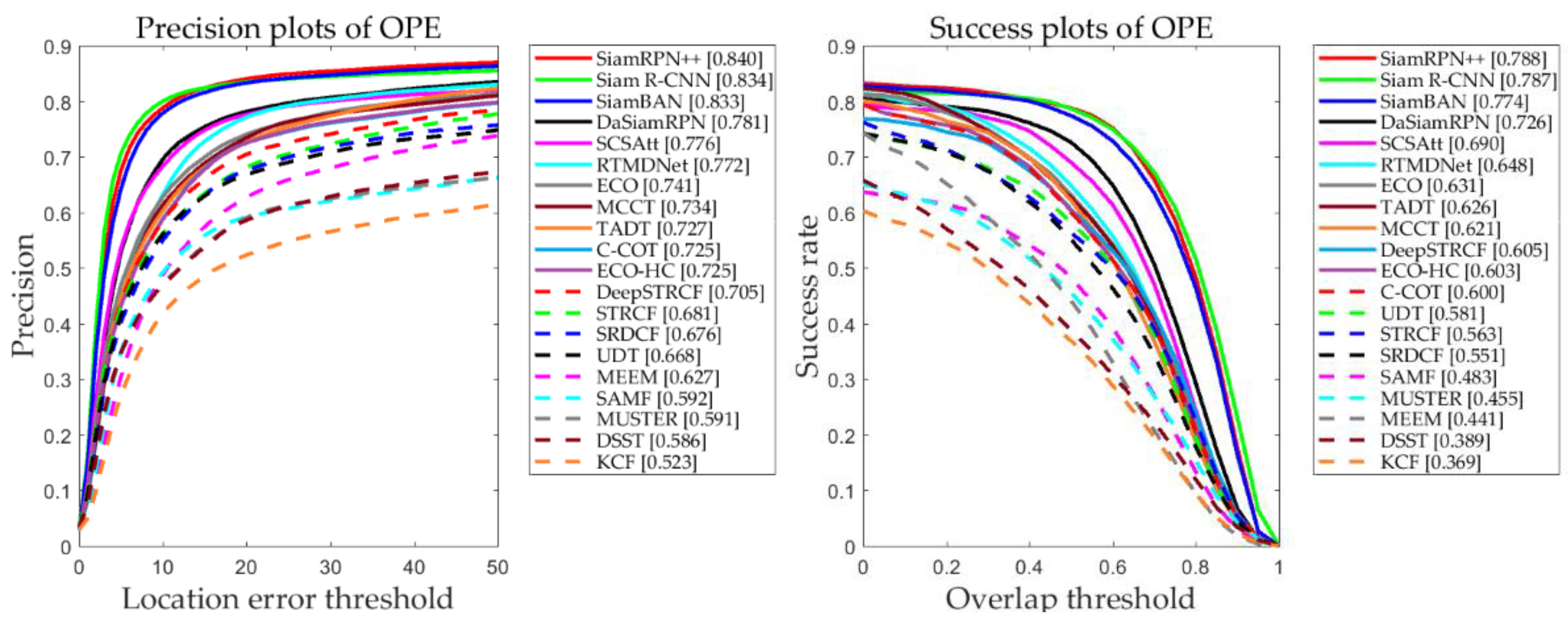
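
Assuming the one-pass evaluation metrics commonly reported on UAV123 (precision at a 20-pixel center location error, and the area under the success plot built from IoU thresholds), the indicators can be computed as in the sketch below. The 20-pixel threshold and the 21 evenly spaced IoU thresholds follow common OTB-style practice; the function names and the boxes in the usage example are made up.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner and size


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between box centers, in pixels."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision(pred: Sequence[Box], gt: Sequence[Box], threshold: float = 20.0) -> float:
    """Share of frames whose center location error is at most `threshold` pixels."""
    return sum(center_error(p, g) <= threshold for p, g in zip(pred, gt)) / len(gt)


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Mean success rate over evenly spaced IoU thresholds in [0, 1]
    (0.05 apart by default), i.e. the area under the success plot."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [k / (steps - 1) for k in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    gt = [(10, 10, 40, 40), (12, 10, 40, 40), (14, 11, 40, 40)]
    pred = [(10, 10, 40, 40), (20, 14, 40, 40), (60, 60, 40, 40)]
    print(precision(pred, gt))    # 2 of 3 frames within 20 px
    print(success_auc(pred, gt))
```

Precision rewards getting the center roughly right, while the success AUC also penalizes wrong box sizes, which is why the two rankings can differ.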

5.2. Evaluation in UAV123

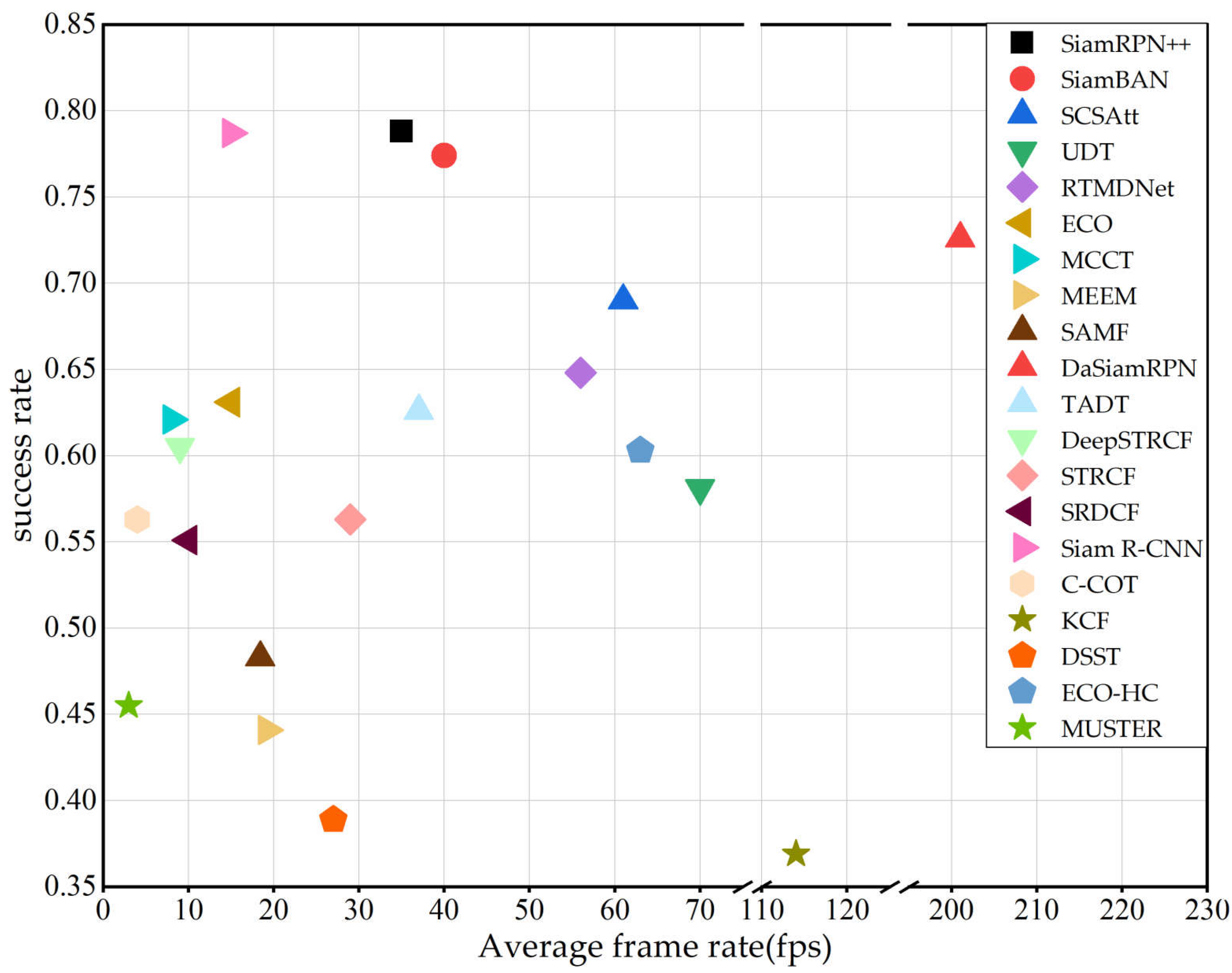

5.2.1. Overall Evaluation

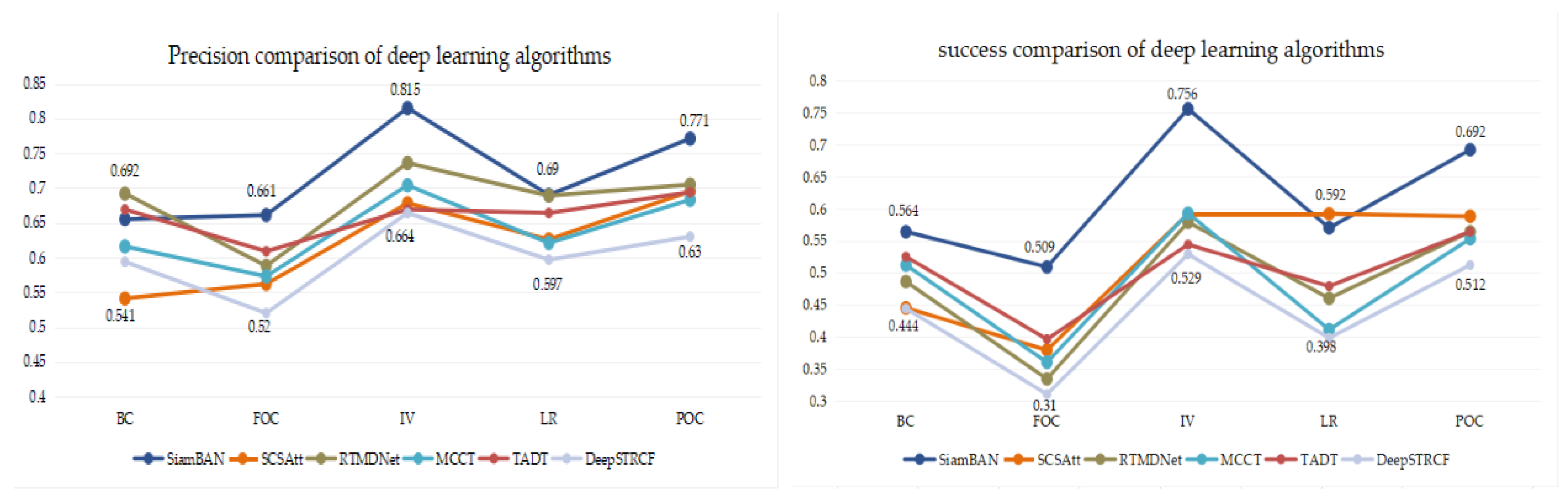

5.2.2. Attribute Evaluation

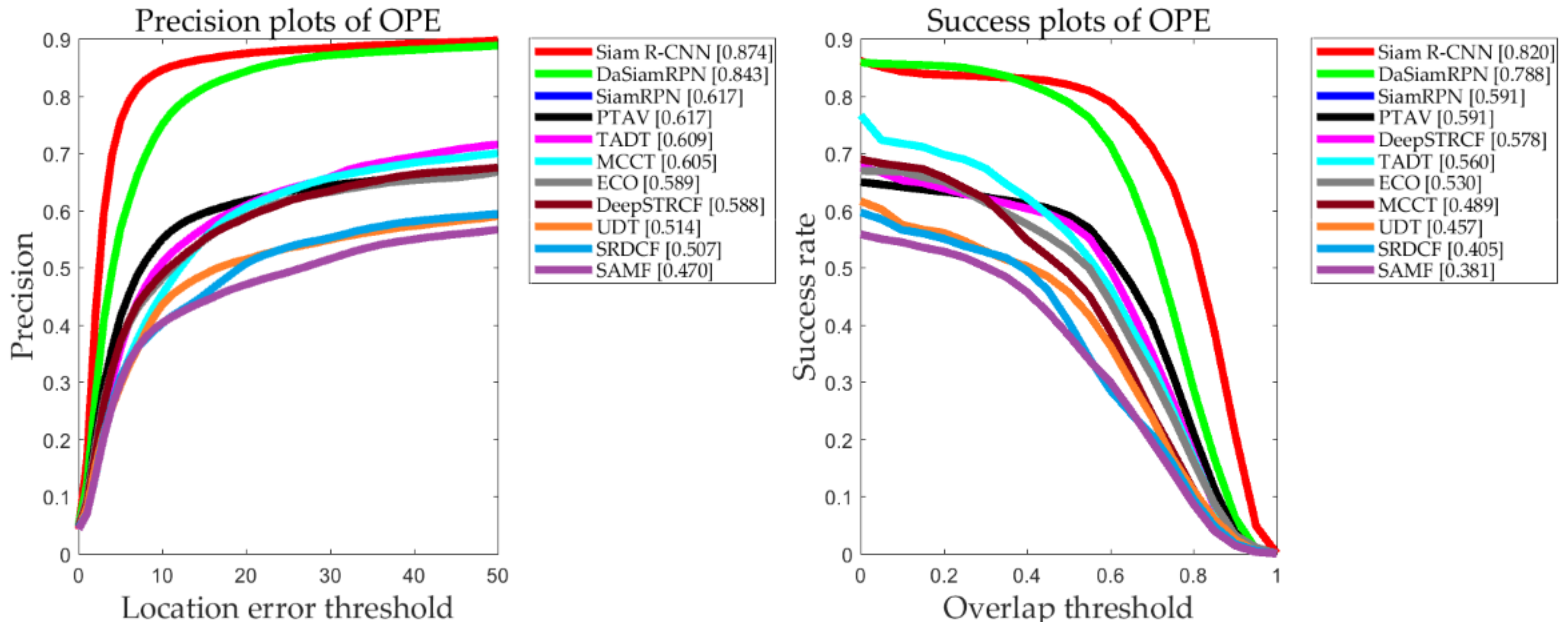

5.3. Evaluation in UAV20L

5.4. Comparison and Summary

- Changes in the target attitude. Multiple postures of the same moving target reduce the accuracy of target recognition, which is a common source of interference in target tracking. When the target attitude changes, the target's features differ from those in its original attitude, so the target is easily lost and tracking fails. An attention mechanism can help a network focus on the important information about a target and reduce the probability of losing the target during tracking. Using attention mechanisms in deep learning trackers to localize targets accurately is therefore a promising research direction.

- Long-term tracking. During long-term tracking, the altitude and speed limits of aerial photography cause the scale of the tracked target in the video to change as tracking time increases. When the target shrinks and the tracking box cannot adapt its scale, the box contains redundant background information, which leads to errors when the parameters of the target model are updated. Conversely, when accelerated flight makes the target scale increase continuously, the tracking box cannot contain all of the target's features, and parameter update errors also occur. According to the experimental results of this paper, Siamese networks perform satisfactorily in long-term tracking but cannot conduct online real-time tracking. Constructing a suitable long-term tracking model that reflects the characteristics of long-term tracking tasks and their connections with short-term tracking, and that combines deep features with transfer learning, remains a substantial challenge.

- Target tracking in a complex background environment. Against a complex background, such as at night, under substantial changes in illumination intensity or with heavy occlusion, the target may exhibit reflection, occlusion or transient disappearance during movement. If the moving target is similar to the background, tracking fails because the tracker cannot find the corresponding target model. The main strategies for solving the occlusion problem are as follows. First, the deep features of the target can be fully extracted so that the network can handle occlusion. Second, occluded targets can be added to the training samples during offline training so that the network fully learns how to cope with a blocked target; the trained offline network is then used to track the target. Third, multi-UAV collaborative tracking can use target information from multiple viewing angles and effectively addresses target tracking against a complex background.

- Real-time tracking. Real-time tracking has long been a difficult problem in target tracking. Current deep learning trackers have the advantage of learning from large amounts of data. However, during tracking only the annotation of the first frame is completely accurate, so it is difficult to obtain sufficient training data online. Deep network models are complex and have many trainable parameters, so adjusting the network online during tracking to preserve tracking performance severely reduces tracking speed. Large-scale aerial datasets, which include rich target classes and cover many situations encountered in practical applications, are gradually becoming available. Many tracking algorithms now learn deep features from these datasets end to end, which is expected to let trackers run in real time while maintaining satisfactory tracking performance.
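
Two of the points above can be made concrete. The first two helpers compute how much background a fixed-size tracking box absorbs when the target shrinks, and how much of the target it misses when the target grows (long-term tracking); the third synthesizes an occluded training patch by blanking a random rectangle, one simple way to add occluded targets to offline training samples. The centered-target geometry, the random-erasing style occlusion and all function names are illustrative assumptions, not the method of any tracker benchmarked here.

```python
import random
from typing import List


def background_fraction(box_w: float, box_h: float,
                        target_w: float, target_h: float) -> float:
    """Share of a fixed tracking box filled by background, assuming the target
    is centered in the box (0.0 once the target fills the box)."""
    inside = min(box_w, target_w) * min(box_h, target_h)
    return 1.0 - inside / (box_w * box_h)


def missed_target_fraction(box_w: float, box_h: float,
                           target_w: float, target_h: float) -> float:
    """Share of a centered target that falls outside a fixed tracking box."""
    inside = min(box_w, target_w) * min(box_h, target_h)
    return 1.0 - inside / (target_w * target_h)


def occlude(patch: List[List[float]], rng: random.Random,
            max_frac: float = 0.5, fill: float = 0.0) -> List[List[float]]:
    """Return a copy of a 2-D training patch with one random rectangle set to
    `fill`, imitating a partial occluder (hypothetical augmentation)."""
    if not 0.0 < max_frac <= 1.0:
        raise ValueError("max_frac must be in (0, 1]")
    h, w = len(patch), len(patch[0])
    oh = rng.randint(1, max(1, int(h * max_frac)))
    ow = rng.randint(1, max(1, int(w * max_frac)))
    top = rng.randint(0, h - oh)
    left = rng.randint(0, w - ow)
    out = [row[:] for row in patch]  # leave the original patch untouched
    for i in range(top, top + oh):
        for j in range(left, left + ow):
            out[i][j] = fill
    return out


if __name__ == "__main__":
    # A 100 x 100 box kept fixed while the target shrinks or grows.
    print(background_fraction(100, 100, 60, 60))       # about 0.64
    print(missed_target_fraction(100, 100, 125, 125))  # about 0.36
    patch = [[1.0] * 8 for _ in range(8)]
    occluded = occlude(patch, random.Random(0))
    print(sum(v == 0.0 for row in occluded for v in row), "pixels occluded")
```

A target shrinking from 100 x 100 to 60 x 60 inside an unchanged box leaves about 64% of the box as background, which illustrates how quickly a non-adaptive box contaminates model updates.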

6. Future Directions

6.1. Cooperative Tracking and Path Planning of Multiple Drones

6.2. Long-Term Tracking and Abnormal Discovery

6.3. Visualization and Intelligent Analysis of Aerial Photography Data

Author Contributions

Funding

Conflicts of Interest

References

- Bonatti, R.; Ho, C.; Wang, W.; Choudhury, S.; Scherer, S.A. Towards a Robust Aerial Cinematography Platform: Localizing and Tracking Moving Targets in Unstructured Environments. arXiv 2019, arXiv:1904.02319.

- Zheng, Z.; Yao, H. A Method for UAV Tracking Target in Obstacle Environment. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 4639–4644.

- Zhang, S.; Zhao, X.; Zhou, B. Robust Vision-Based Control of a Rotorcraft UAV for Uncooperative Target Tracking. Sensors 2020, 20, 3474.

- Wu, D.; Du, X.; Wang, K. An effective approach for underwater sonar image denoising based on sparse representation. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018; pp. 389–393.

- Chen, Y.; Yu, M.; Jiang, G.; Peng, Z.; Chen, F. End-to-end single image enhancement based on a dual network cascade model. J. Vis. Commun. Image Represent. 2019, 61, 284–295.

- Qiu, S.; Zhou, D.; Du, Y. The image stitching algorithm based on aggregated star groups. Signal Image Video Process. 2019, 13, 227–235.

- Laguna, G.J.; Bhattacharya, S. Path planning with Incremental Roadmap Update for Visibility-based Target Tracking. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 1159–1164.

- Yang, X.; Shi, J.; Zhou, Y.; Wang, C.; Hu, Y.; Zhang, X.; Wei, S. Ground Moving Target Tracking and Refocusing Using Shadow in Video-SAR. Remote Sens. 2020, 12, 3083.

- Zhang, W.; Cong, M.; Wang, L. Algorithms for optical weak small targets detection and tracking: Review. In Proceedings of the International Conference on Neural Networks and Signal Processing, Nanjing, China, 14–17 December 2003; Volume 1, pp. 643–647.

- De Oca, A.M.M.; Bahmanyar, R.; Nistor, N.; Datcu, M. Earth observation image semantic bias: A collaborative user annotation approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2462–2477.

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 445–461.

- Smeulders, A.W.; Chu, D.M.; Cucchiara, R.; Calderara, S.; Dehghan, A.; Shah, M. Visual tracking: An experimental survey. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1442–1468.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Cehovin Zajc, L.; Vojir, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The sixth visual object tracking VOT2018 challenge results. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–53.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Cehovin Zajc, L.; Vojir, T.; Hager, G.; Lukezic, A.; Eldesokey, A.; et al. The visual object tracking VOT2017 challenge results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 1949–1972.

- Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

- Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

- Liang, P.; Blasch, E.; Ling, H. Encoding color information for visual tracking: Algorithms and benchmark. IEEE Trans. Image Process. 2015, 24, 5630–5644.

- Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–21 June 2019; pp. 5374–5383.

- Kiani Galoogahi, H.; Fagg, A.; Huang, C.; Ramanan, D.; Lucey, S. Need for speed: A benchmark for higher frame rate object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1125–1134.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Ling, H.; Hu, Q.; Nie, Q.; Cheng, H.; Liu, C.; Liu, X.; et al. VisDrone-DET2018: The vision meets drone object detection in image challenge results. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 437–468.

- Hu, Y.; Xiao, M.; Zhang, K.; Wang, X. Aerial infrared target tracking in complex background based on combined tracking and detecting. Math. Probl. Eng. 2019, 2019, 1–17.

- Jia, X.; Lu, H.; Yang, M.H. Visual tracking via adaptive structural local sparse appearance model. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 18–20 June 2012; pp. 1822–1829.

- Hong, Z.; Chen, Z.; Wang, C.; Mei, X.; Prokhorov, D.; Tao, D. Multi-store tracker (MUSTer): A cognitive psychology inspired approach to object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 749–758.

- Raguram, R.; Chum, O.; Pollefeys, M.; Matas, J.; Frahm, J.M. USAC: A universal framework for random sample consensus. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2022–2038.

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. II-II.

- Zhou, X.; Li, J.; Chen, S.; Cai, H.; Liu, H. Multiple perspective object tracking via context-aware correlation filter. IEEE Access 2018, 6, 43262–43273.

- He, Z.; Yi, S.; Cheung, Y.M.; You, X.; Tang, Y.Y. Robust object tracking via key patch sparse representation. IEEE Trans. Cybern. 2016, 47, 354–364.

- Han, J.; Liang, K.; Zhou, B.; Zhu, X.; Zhao, J.; Zhao, L. Infrared small target detection utilizing the multiscale relative local contrast measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

- Sanchez-Matilla, R.; Poiesi, F.; Cavallaro, A. Online multi-target tracking with strong and weak detections. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 84–99.

- Wang, C.; Song, F.; Qin, S. Infrared small target tracking by discriminative classification based on Gaussian mixture model in compressive sensing domain. In International Conference on Optical and Photonics Engineering (icOPEN 2016); International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10250, p. 102502L.

- Liu, M.; Huang, Z.; Fan, Z.; Zhang, S.; He, Y. Infrared dim target detection and tracking based on particle filter. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 5372–5378.

- Li, S.J.; Fan, X.; Zhu, B.; Cheng, Z.D. A method for small infrared targets detection based on the technology of motion blur recovery. Acta Photonica Sin. 2017, 37, 06100011–06100017.

- Raj, N.N.; Vijay, A.S. Adaptive blind deconvolution and denoising of motion blurred images. In 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT); IEEE: Piscataway, NJ, USA, 2016; pp. 1171–1175.

- Shkurko, K.; Yuksel, C.; Kopta, D.; Mallett, I.; Brunvand, E. Time Interval Ray Tracing for Motion Blur. IEEE Trans. Vis. Comput. Graph. 2017, 24, 3225–3238.

- Inoue, M.; Gu, Q.; Jiang, M.; Takaki, T.; Ishii, I.; Tajima, K. Motion-blur-free high-speed video shooting using a resonant mirror. Sensors 2017, 17, 2483.

- Bi, Y.; Bai, X.; Jin, T.; Guo, S. Multiple feature analysis for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1333–1337.

- Qiang, Z.; Du, X.; Sun, L. Remote sensing image fusion for dim target detection. In Proceedings of the 2011 International Conference on Advanced Mechatronic Systems, Zhengzhou, China, 11–13 August 2011; pp. 379–383.

- Wu, D.; Zhang, L.; Lin, L. Based on the moving average and target motion information for detection of weak small target. In Proceedings of the 2018 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Xiamen, China, 25–26 January 2018; pp. 641–644.

- Rollason, M.; Salmond, D. Particle filter for track-before-detect of a target with unknown amplitude viewed against a structured scene. IET Radar Sonar Navig. 2018, 12, 603–609.

- Wang, H.; Peng, J.; Yue, S. A feedback neural network for small target motion detection in cluttered backgrounds. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2018; pp. 728–737.

- Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Cheng, H.; Lin, L.; Zheng, Z.; Guan, Y.; Liu, Z. An autonomous vision-based target tracking system for rotorcraft unmanned aerial vehicles. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1732–1738.

- Li, F.; Yao, Y.; Li, P.; Zhang, D.; Zuo, W.; Yang, M.H. Integrating boundary and center correlation filters for visual tracking with aspect ratio variation. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2001–2009.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

- Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 254–265.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

- Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Intermittent Contextual Learning for Keyfilter-Aware UAV Object Tracking Using Deep Convolutional Feature. IEEE Trans. Multimed. 2020.

- Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Keyfilter-aware real-time UAV object tracking. arXiv 2020, arXiv:2003.05218.

- Oh, H.; Kim, S.; Shin, H.S.; Tsourdos, A. Coordinated standoff tracking of moving target groups using multiple UAVs. IEEE Trans. Aerosp. Electron. Syst. 2015, 51, 1501–1514.

- Greatwood, C.; Bose, L.; Richardson, T.; Mayol-Cuevas, W.; Chen, J.; Carey, S.J.; Dudek, P. Tracking control of a UAV with a parallel visual processor. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4248–4254.

- Song, R.; Long, T.; Wang, Z.; Cao, Y.; Xu, G. Multi-UAV Cooperative Target Tracking Method using sparse A* search and Standoff tracking algorithms. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; pp. 1–6.

- Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning aberrance repressed correlation filters for real-time UAV tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2891–2900.

- Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 4310–4318.

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning spatial-temporal regularized correlation filters for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4904–4913.

- Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV with Automatic Spatio-Temporal Regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 11923–11932.

- Che, F.; Niu, Y.; Li, J.; Wu, L. Cooperative Standoff Tracking of Moving Targets Using Modified Lyapunov Vector Field Guidance. Appl. Sci. 2020, 10, 3709.

- Wang, L.; Ouyang, W.; Wang, X.; Lu, H. STCT: Sequentially training convolutional networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1373–1381.

- Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Young Choi, J. Action-decision networks for visual tracking with deep reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2711–2720.

- Zhang, X.; Zhang, X.; Du, X.; Zhou, X.; Yin, J. Learning Multi-Domain Convolutional Network for RGB-T Visual Tracking. In Proceedings of the 2018 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Beijing, China, 13–15 October 2018; pp. 1–6.

- Jung, I.; Son, J.; Baek, M.; Han, B. Real-time MDNet. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 83–98.

- Huang, C.; Lucey, S.; Ramanan, D. Learning policies for adaptive tracking with deep feature cascades. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 105–114.

- Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Convolutional features for correlation filter based visual tracking. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 13–16 December 2015; pp. 58–66.

- Qi, Y.; Zhang, S.; Qin, L.; Yao, H.; Huang, Q.; Lim, J.; Yang, M.H. Hedged deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4303–4311.

- Xia, H.; Zhang, Y.; Yang, M.; Zhao, Y. Visual tracking via deep feature fusion and correlation filters. Sensors 2020, 20, 3370.

- Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust Tracking via Multiple Experts Using Entropy Minimization. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 188–203.

- Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

- Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 472–488.

- Bhat, G.; Johnander, J.; Danelljan, M.; Shahbaz Khan, F.; Felsberg, M. Unveiling the power of deep tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 483–498.

- Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue correlation filters for robust visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4844–4853.

- Ke, H.; Chen, D.; Li, X.; Tang, Y.; Shah, T.; Ranjan, R. Towards brain big data classification: Epileptic EEG identification with a lightweight VGGNet on global MIC. IEEE Access 2018, 6, 14722–14733.

- Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.H. Target-aware deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1369–1378.

- Lukezic, A.; Matas, J.; Kristan, M. D3S - A Discriminative Single Shot Segmentation Tracker. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 7133–7142.

- Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. SiamFC++: Towards Robust and Accurate Visual Tracking with Target Estimation Guidelines; AAAI: Menlo Park, CA, USA, 2020; pp. 12549–12556.

- Tao, R.; Gavves, E.; Smeulders, A.W. Siamese instance search for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1420–1429.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 850–865.

- Tang, W.; Yu, P.; Wu, Y. Deeply learned compositional models for human pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 190–206.

- Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

- Wang, Q.; Gao, J.; Xing, J.; Zhang, M.; Hu, W. DCFNet: Discriminant correlation filters network for visual tracking. arXiv 2017, arXiv:1704.04057.

- Fan, H.; Ling, H. Parallel tracking and verifying: A framework for real-time and high accuracy visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5486–5494.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8971–8980.

- Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware siamese networks for visual object tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 101–117.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4282–4291.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam R-CNN: Visual tracking by re-detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 6578–6588.

- Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 6668–6677.

- Wang, N.; Song, Y.; Ma, C.; Zhou, W.; Liu, W.; Li, H. Unsupervised deep tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1308–1317.

- Zhao, L.; Ishag Mahmoud, M.A.; Ren, H.; Zhu, M. A Visual Tracker Offering More Solutions. Sensors 2020, 20, 5374.

- Wang, Q.; Teng, Z.; Xing, J.; Gao, J.; Hu, W.; Maybank, S. Learning attentions: Residual attentional siamese network for high performance online visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4854–4863.

- Rahman, M.M.; Fiaz, M.; Jung, S.K. Efficient Visual Tracking with Stacked Channel-Spatial Attention Learning. IEEE Access 2020.

- Li, D.; Wen, G.; Kuai, Y.; Porikli, F. End-to-end feature integration for correlation filter tracking with channel attention. IEEE Signal Process. Lett. 2018, 25, 1815–1819.

- Ru, C.J.; Qi, X.M.; Guan, X.N. Distributed cooperative search control method of multiple UAVs for moving target. Int. J. Aerosp. Eng. 2015, 2015.

- Nikodem, M.; Słabicki, M.; Surmacz, T.; Mrówka, P.; Dołęga, C. Multi-Camera Vehicle Tracking Using Edge Computing and Low-Power Communication. Sensors 2020, 20, 3334.

- Zhong, Y.; Yao, P.; Sun, Y.; Yang, J. Method of multi-UAVs cooperative search for Markov moving targets. In Proceedings of the 2017 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 November 2017; pp. 6783–6789.

- Ramirez-Atencia, C.; Bello-Orgaz, G.; R-Moreno, M.D.; Camacho, D. Solving complex multi-UAV mission planning problems using multi-objective genetic algorithms. Soft Comput. 2017, 21, 4883–4900.

- Oh, H.; Kim, S.; Tsourdos, A. Road-map–assisted standoff tracking of moving ground vehicle using nonlinear model predictive control. IEEE Trans. Aerosp. Electron. Syst. 2015, 51, 975–986.

- Da Costa, J.R.; Nedjah, N.; de Macedo Mourelle, L.; da Costa, D.R. Crowd abnormal detection using artificial bacteria colony and Kohonen’s neural network. In Proceedings of the 2017 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Arequipa, Peru, 8–10 November 2017; pp. 1–6.

- Cong, Y.; Yuan, J.; Liu, J. Abnormal event detection in crowded scenes using sparse representation. Pattern Recognit. 2013, 46, 1851–1864.

| Datasets | Number of Videos | Shortest Video Frames | Average Video Frames | Longest Video Frames | Total Video Frames |
|---|---|---|---|---|---|
| UAV123 [11] | 123 | 109 | 915 | 3085 | 112,578 |
| UAV20L [11] | 20 | 1717 | 2934 | 5527 | 58,670 |
| ALOV300++ [12] | 314 | 19 | 483 | 5975 | 151,657 |
| VOT-2014 [13] | 25 | 164 | 409 | 1210 | 10,000 |
| VOT-2017 [14] | 60 | 41 | 356 | 1500 | 21,000 |
| OTB2013 [15] | 51 | 71 | 578 | 3872 | 29,491 |
| OTB2015 [16] | 100 | 71 | 590 | 3872 | 59,040 |
| Temple Color 128 [17] | 129 | 71 | 429 | 3872 | 55,346 |
| LaSOT [18] | 1400 | 1000 | 2506 | 11,397 | 3.52 M |
| NFS [19] | 100 | 169 | 3830 | 20,665 | 383,000 |
| VisDrone 2018 [20] | 288 | - | 909 | - | 261,908 |

| Parameter Name | Version or Value |
|---|---|
| Operating system | Windows 10 |
| CPU | Intel Xeon 3.60 GHz |
| GPU | NVIDIA TITAN V (12 GB) |
| CUDA | CUDA 10.1 |
| RAM | 32 GB |

| Tracker | Base Network | Feature | Online Learning | Real-Time |
|---|---|---|---|---|
| SiamRPN++ | SiamRPN | CNN | N | Y |
| SiamBAN | SiamFC | CNN | N | Y |
| Siam R-CNN | SiamFC | CNN | Y | N |
| DaSiamRPN | SiamRPN | CNN | Y | Y |
| SCSAtt | SiamFC | CNN | N | Y |
| UDT | SiamFC | CNN | N | Y |
| RTMDNet | MDNet | CNN | Y | Y |
| ECO | C-COT | CNN, HOG, CN | Y | N |
| ECO-HC | C-COT | HOG, CN | Y | N |
| C-COT | C-COT | CNN | N | N |
| MCCT | DCF | CNN | Y | N |
| TADT | TADT | CNN | N | Y |
| DeepSTRCF | STRCF | CNN, HOG, CN | Y | N |
| MEEM | MEEM | CNN | Y | N |
| STRCF | SRDCF | HOG, CN, Gray | Y | N |
| SRDCF | SRDCF | HOG, CN | Y | N |
| SAMF | KCF | HOG, CN, Gray | N | N |
| MUSTER | MUSTER | HOG, CN | N | N |
| DSST | CF | HOG, CN, Gray | N | N |
| KCF | CF | HOG | N | N |

Attribute abbreviations: ARC, aspect ratio change; BC, background clutter; CM, camera motion; FM, fast motion; FOC, full occlusion; IV, illumination variation; LR, low resolution; OV, out-of-view; POC, partial occlusion; SOB, similar object; SV, scale variation; VC, viewpoint change.

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SOB | SV | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Siam R-CNN | 0.854 | 0.714 | 0.889 | 0.822 | 0.776 | 0.809 | 0.706 | 0.839 | 0.809 | 0.812 | 0.828 | 0.875 |
| SiamBAN | 0.796 | 0.645 | 0.848 | 0.805 | 0.671 | 0.766 | 0.719 | 0.789 | 0.765 | 0.777 | 0.813 | 0.824 |
| SiamRPN++ | 0.818 | 0.655 | 0.863 | 0.774 | 0.661 | 0.815 | 0.690 | 0.816 | 0.771 | 0.800 | 0.820 | 0.876 |
| DaSiamRPN | 0.756 | 0.668 | 0.786 | 0.737 | 0.633 | 0.710 | 0.663 | 0.693 | 0.701 | 0.747 | 0.754 | 0.753 |
| SCSAtt | 0.722 | 0.541 | 0.775 | 0.690 | 0.562 | 0.678 | 0.626 | 0.721 | 0.695 | 0.780 | 0.749 | 0.747 |
| ECO | 0.654 | 0.624 | 0.721 | 0.652 | 0.576 | 0.710 | 0.683 | 0.590 | 0.669 | 0.747 | 0.707 | 0.680 |
| RTMDNet | 0.720 | 0.689 | 0.767 | 0.641 | 0.579 | 0.723 | 0.689 | 0.659 | 0.700 | 0.754 | 0.735 | 0.702 |
| MCCT | 0.683 | 0.616 | 0.720 | 0.614 | 0.573 | 0.704 | 0.621 | 0.659 | 0.683 | 0.741 | 0.700 | 0.681 |
| TADT | 0.667 | 0.669 | 0.723 | 0.617 | 0.609 | 0.669 | 0.664 | 0.626 | 0.694 | 0.728 | 0.692 | 0.655 |
| DeepSTRCF | 0.644 | 0.594 | 0.696 | 0.586 | 0.520 | 0.664 | 0.597 | 0.618 | 0.630 | 0.717 | 0.667 | 0.640 |
| UDT | 0.618 | 0.516 | 0.654 | 0.600 | 0.474 | 0.599 | 0.585 | 0.580 | 0.578 | 0.668 | 0.639 | 0.599 |
| SRDCF | 0.587 | 0.526 | 0.627 | 0.524 | 0.501 | 0.600 | 0.579 | 0.576 | 0.608 | 0.678 | 0.639 | 0.593 |
| STRCF | 0.586 | 0.563 | 0.658 | 0.5554 | 0.488 | 0.538 | 0.589 | 0.570 | 0.587 | 0.648 | 0.643 | 0.581 |
| ECO-HC | 0.653 | 0.608 | 0.712 | 0.587 | 0.569 | 0.653 | 0.631 | 0.599 | 0.653 | 0.698 | 0.690 | 0.640 |
| C-COT | 0.586 | 0.502 | 0.658 | 0.554 | 0.487 | 0.536 | 0.584 | 0.388 | 0.587 | 0.648 | 0.643 | 0.581 |
| MEEM | 0.563 | 0.516 | 0.595 | 0.418 | 0.460 | 0.509 | 0.580 | 0.476 | 0.526 | 0.629 | 0.591 | 0.680 |
| SAMF | 0.497 | 0.530 | 0.558 | 0.402 | 0.458 | 0.524 | 0.539 | 0.469 | 0.506 | 0.611 | 0.541 | 0.518 |
| MUSTER | 0.516 | 0.581 | 0.570 | 0.406 | 0.463 | 0.489 | 0.527 | 0.296 | 0.495 | 0.629 | 0.552 | 0.537 |
| DSST | 0.482 | 0.500 | 0.520 | 0.367 | 0.406 | 0.524 | 0.475 | 0.256 | 0.505 | 0.604 | 0.538 | 0.502 |
| KCF | 0.424 | 0.454 | 0.483 | 0.300 | 0.374 | 0.418 | 0.436 | 0.386 | 0.451 | 0.578 | 0.471 | 0.436 |

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SOB | SV | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Siam R-CNN | 0.795 | 0.648 | 0.839 | 0.753 | 0.638 | 0.765 | 0.614 | 0.772 | 0.738 | 0.749 | 0.778 | 0.842 |
| SiamRPN++ | 0.751 | 0.564 | 0.804 | 0.706 | 0.509 | 0.756 | 0.570 | 0.728 | 0.692 | 0.721 | 0.761 | 0.832 |
| SiamBAN | 0.724 | 0.549 | 0.783 | 0.723 | 0.510 | 0.699 | 0.590 | 0.707 | 0.678 | 0.695 | 0.746 | 0.772 |
| DaSiamRPN | 0.680 | 0.574 | 0.738 | 0.660 | 0.464 | 0.653 | 0.524 | 0.631 | 0.625 | 0.659 | 0.692 | 0.709 |
| SCSAtt | 0.597 | 0.445 | 0.691 | 0.564 | 0.379 | 0.592 | 0.592 | 0.600 | 0.588 | 0.673 | 0.655 | 0.645 |
| ECO | 0.497 | 0.479 | 0.599 | 0.463 | 0.358 | 0.534 | 0.470 | 0.506 | 0.548 | 0.629 | 0.588 | 0.530 |
| RTMDNet | 0.524 | 0.463 | 0.608 | 0.454 | 0.326 | 0.574 | 0.464 | 0.553 | 0.596 | 0.617 | 0.622 | 0.536 |
| MCCT | 0.521 | 0.512 | 0.618 | 0.464 | 0.360 | 0.593 | 0.411 | 0.543 | 0.553 | 0.615 | 0.578 | 0.546 |
| TADT | 0.501 | 0.525 | 0.613 | 0.456 | 0.396 | 0.544 | 0.479 | 0.499 | 0.564 | 0.610 | 0.582 | 0.513 |
| DeepSTRCF | 0.503 | 0.444 | 0.605 | 0.427 | 0.318 | 0.529 | 0.398 | 0.513 | 0.512 | 0.601 | 0.560 | 0.519 |
| UDT | 0.499 | 0.422 | 0.569 | 0.480 | 0.308 | 0.499 | 0.499 | 0.500 | 0.482 | 0.563 | 0.548 | 0.481 |
| SRDCF | 0.431 | 0.401 | 0.545 | 0.366 | 0.301 | 0.457 | 0.359 | 0.465 | 0.468 | 0.532 | 0.510 | 0.441 |
| STRCF | 0.418 | 0.425 | 0.512 | 0.359 | 0.289 | 0.385 | 0.388 | 0.470 | 0.469 | 0.550 | 0.516 | 0.426 |
| ECO-HC | 0.491 | 0.459 | 0.598 | 0.414 | 0.368 | 0.511 | 0.404 | 0.520 | 0.525 | 0.585 | 0.561 | 0.476 |
| C-COT | 0.584 | 0.382 | 0.539 | 0.357 | 0.289 | 0.381 | 0.382 | 0.471 | 0.462 | 0.547 | 0.510 | 0.421 |
| MEEM | 0.362 | 0.389 | 0.426 | 0.242 | 0.258 | 0.360 | 0.304 | 0.329 | 0.380 | 0.516 | 0.405 | 0.357 |
| SAMF | 0.362 | 0.408 | 0.450 | 0.283 | 0.249 | 0.362 | 0.269 | 0.349 | 0.392 | 0.500 | 0.430 | 0.354 |
| MUSTER | 0.516 | 0.439 | 0.432 | 0.243 | 0.242 | 0.354 | 0.296 | 0.297 | 0.347 | 0.471 | 0.405 | 0.385 |
| DSST | 0.482 | 0.389 | 0.346 | 0.200 | 0.226 | 0.331 | 0.256 | 0.293 | 0.342 | 0.401 | 0.322 | 0.299 |
| KCF | 0.422 | 0.341 | 0.347 | 0.187 | 0.210 | 0.296 | 0.210 | 0.257 | 0.321 | 0.379 | 0.307 | 0.283 |
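Per-attribute scores such as those in these tables are commonly reported as precision (the share of frames whose predicted box centre lies within a fixed pixel distance of the ground truth) and success (the area under the curve of the share of frames whose intersection over union exceeds each overlap threshold). The tables do not restate which metric each one reports, so the following Python sketch only illustrates these common OTB/UAV123-style definitions. The function names, the (x, y, w, h) box format, the 20-pixel threshold and the 21-point threshold grid are assumptions for illustration, not the authors' evaluation code.

```python
import math
from typing import Sequence, Tuple

# Assumed box format: (x, y, w, h), with (x, y) the top-left corner, in pixels.
Box = Tuple[float, float, float, float]


def center_error(pred: Box, gt: Box) -> float:
    """Euclidean distance in pixels between the centres of two boxes."""
    px, py = pred[0] + pred[2] / 2, pred[1] + pred[3] / 2
    gx, gy = gt[0] + gt[2] / 2, gt[1] + gt[3] / 2
    return math.hypot(px - gx, py - gy)


def iou(pred: Box, gt: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(pred[0] + pred[2], gt[0] + gt[2]) - max(pred[0], gt[0]))
    iy = max(0.0, min(pred[1] + pred[3], gt[1] + gt[3]) - max(pred[1], gt[1]))
    inter = ix * iy
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


def precision_at(preds: Sequence[Box], gts: Sequence[Box], threshold: float = 20.0) -> float:
    """Fraction of frames whose centre error is at most `threshold` pixels (assumed 20 px)."""
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    return sum(e <= threshold for e in errors) / len(errors)


def success_auc(preds: Sequence[Box], gts: Sequence[Box], steps: int = 21) -> float:
    """Mean of the success curve sampled at `steps` evenly spaced IoU thresholds in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(preds, gts)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    curve = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(curve) / len(curve)


# Toy sequence: one perfect frame, one frame shifted by half a box width, one lost frame.
gts = [(0, 0, 10, 10)] * 3
preds = [(0, 0, 10, 10), (5, 0, 10, 10), (40, 40, 10, 10)]
print(precision_at(preds, gts))  # about 0.667 (2 of 3 frames within 20 px)
print(success_auc(preds, gts))   # about 0.429 (9 / 21)
```

An attribute score would then be the same computation restricted to the sequences tagged with that attribute. Evaluation toolkits differ in details such as `>` versus `>=` at the overlap thresholds, the threshold grid, and how frames without a visible target are handled, so numbers from this sketch are not directly comparable with the tables.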

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SOB | SV | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Siam R-CNN | 0.522 | 0.191 | 0.597 | 0.642 | 0.349 | 0.439 | 0.521 | 0.641 | 0.578 | 0.683 | 0.597 | 0.561 |
| DaSiamRPN | 0.517 | 0.191 | 0.595 | 0.641 | 0.346 | 0.436 | 0.520 | 0.637 | 0.572 | 0.667 | 0.584 | 0.558 |
| SiamRPN | 0.514 | 0.190 | 0.596 | 0.642 | 0.351 | 0.437 | 0.518 | 0.641 | 0.574 | 0.678 | 0.581 | 0.549 |
| MCCT | 0.516 | 0.382 | 0.540 | 0.534 | 0.418 | 0.563 | 0.475 | 0.575 | 0.573 | 0.618 | 0.586 | 0.495 |
| ECO | 0.489 | 0.382 | 0.567 | 0.493 | 0.409 | 0.551 | 0.486 | 0.546 | 0.554 | 0.559 | 0.567 | 0.507 |
| TADT | 0.521 | 0.383 | 0.588 | 0.614 | 0.444 | 0.518 | 0.550 | 0.534 | 0.577 | 0.587 | 0.588 | 0.505 |
| PTAV | 0.489 | 0.382 | 0.567 | 0.493 | 0.409 | 0.551 | 0.486 | 0.546 | 0.554 | 0.559 | 0.567 | 0.507 |
| DeepSTRCF | 0.488 | 0.381 | 0.566 | 0.508 | 0.429 | 0.523 | 0.512 | 0.549 | 0.556 | 0.563 | 0.566 | 0.503 |
| UDT | 0.446 | 0.378 | 0.496 | 0.492 | 0.427 | 0.437 | 0.445 | 0.478 | 0.487 | 0.521 | 0.489 | 0.402 |
| SRDCF | 0.389 | 0.252 | 0.482 | 0.327 | 0.331 | 0.411 | 0.429 | 0.495 | 0.491 | 0.522 | 0.481 | 0.414 |
| SAMF | 0.382 | 0.330 | 0.443 | 0.308 | 0.351 | 0.416 | 0.419 | 0.384 | 0.445 | 0.457 | 0.443 | 0.363 |

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SOB | SV | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Siam R-CNN | 0.490 | 0.137 | 0.569 | 0.544 | 0.241 | 0.431 | 0.432 | 0.623 | 0.549 | 0.691 | 0.691 | 0.570 |
| DaSiamRPN | 0.489 | 0.131 | 0.564 | 0.541 | 0.225 | 0.430 | 0.424 | 0.605 | 0.543 | 0.687 | 0.691 | 0.552 |
| SiamRPN | 0.483 | 0.136 | 0.557 | 0.537 | 0.238 | 0.427 | 0.416 | 0.618 | 0.533 | 0.682 | 0.678 | 0.561 |
| MCCT | 0.403 | 0.327 | 0.463 | 0.347 | 0.285 | 0.428 | 0.337 | 0.448 | 0.456 | 0.563 | 0.563 | 0.497 |
| ECO | 0.420 | 0.288 | 0.506 | 0.321 | 0.267 | 0.498 | 0.341 | 0.501 | 0.495 | 0.565 | 0.565 | 0.510 |
| TADT | 0.464 | 0.321 | 0.537 | 0.445 | 0.307 | 0.504 | 0.432 | 0.448 | 0.525 | 0.591 | 0.591 | 0.563 |
| PTAV | 0.420 | 0.288 | 0.506 | 0.321 | 0.267 | 0.498 | 0.341 | 0.501 | 0.495 | 0.565 | 0.565 | 0.510 |
| DeepSTRCF | 0.474 | 0.297 | 0.556 | 0.397 | 0.286 | 0.531 | 0.408 | 0.552 | 0.545 | 0.610 | 0.610 | 0.556 |
| UDT | 0.400 | 0.319 | 0.456 | 0.404 | 0.309 | 0.430 | 0.349 | 0.433 | 0.441 | 0.514 | 0.514 | 0.430 |
| SRDCF | 0.305 | 0.203 | 0.384 | 0.207 | 0.214 | 0.327 | 0.240 | 0.407 | 0.383 | 0.463 | 0.463 | 0.390 |
| SAMF | 0.281 | 0.268 | 0.349 | 0.143 | 0.220 | 0.370 | 0.275 | 0.307 | 0.356 | 0.371 | 0.371 | 0.349 |

| Category | Method | Applicable Target | Applicable Scenario | Number of Targets |
|---|---|---|---|---|
| Handcrafted features | ASLA [22] | Common targets | Severe target occlusion | Single target |
| | MUSTer [23] | Common targets | Short-/long-term tracking | Single target |
| | Cascaded features [62] | Common targets | Hovering aerial shots | Single target |
| | Moving average method [38] | Dim small targets | Small targets | Single target |
| | Grayscale and spatial features [35] | Weak targets/targets similar to the background | Complex background/small targets | Single target |
| Filter tracking | Bayesian trackers [39] | Blurred targets | Common scenario | Multiple targets |
| | Wiener filtering [32] | Blurred targets | Blurred targets | Single target |
| | Vector field characteristics [50] | Fast-moving/multiple targets | Fast motion/wide field of view | Multiple targets |
| | Feedback ESTMD [40] | Small moving targets | Complex background | Single target |
| | ARCF [53] | Moving targets | Severe occlusion/background interference | Single target |
| | DSST [41] | Moving targets | Common scenario | Single target |
| | KCF [47] | Moving targets | Common scenario | Single target |
| | SRDCF [54] | Moving targets | Large range of motion/complex scenes | Single target |
| | STRCF [55] | Moving targets | Common scenario | Single target |
| | AutoTrack [56] | Moving targets | Common scenario | Single target |
| Scale estimation | SAMF [46] | Moving targets | Scale change | Single target |
| Deep features | RT-MDNet [61] | Moving targets | Complex background | Single target |
| | MEEM [66] | Multiscale targets | General background | Single target |
| | C-COT [68] | Common targets | General background | Single target |
| | ECO [67] | Common targets | General background | Single target |
| | ECO+ [69] | Common targets | Complex background/multiscale | Single target |
| | MCCT [70] | Common targets | Target occlusion/complex background | Single target |
| | TADT [72] | Target deformation | Background interference/common scenario | Single target |
| | DeepSTRCF [55] | Similar targets | Common scenario | Single target |
| Siamese network | SiamFC [76] | Target deformation | General background | Single target |
| | PTAV [80] | Common targets | Common scenario | Single target |
| | SiamRPN [81] | Dim small targets | Common scenario | Single target |
| | DaSiamRPN [82] | Moving targets | Long-term tracking | Single target |
| | SiamRPN++ [83] | Moving targets | Various scenarios | Single target |
| | Siam R-CNN [85] | Multiscale targets | Severe occlusion/common scenario | Single target |
| | SiamBAN [86] | Common targets | Various scenarios | Single target |
| | UDT [87] | Multiscale targets | Severe occlusion | Single target |
| Attention mechanism | RASNet [89] | Common targets | General background | Single target |
| | SCSAtt [90] | Common targets | Large variation in target scale | Single target |
| | FICFNet [91] | Moving targets | Severe deformation/occlusion of the target | Single target |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jia, J.; Lai, Z.; Qian, Y.; Yao, Z. Aerial Video Trackers Review. Entropy 2020, 22, 1358. https://doi.org/10.3390/e22121358
