Abstract

We consider brain activity from an information-theoretic perspective. We analyze information processing in the brain, considering the optimality of Shannon entropy transport using the Monge–Kantorovich framework. We propose that some of these processes satisfy an optimal transport of informational entropy condition. This optimality condition allows us to derive an equation of the Monge–Ampère type for the information flow, whose linearization accounts for the branching structure of neurons. Based on this fact, we discuss a version of Murray’s law in this context.

1. Introduction

The brain, as the organ responsible for processing information in the body, has been subjected to evolutionary pressure. “The business of the brain is computation, and the task it faces is monumental. It must take sensory inputs from the external world, translate this information into a computationally accessible form, then use the results to make decisions about which course of action is appropriate given the most probable state of the world” []. It is therefore arguable that information processing has been optimized, at least to some extent, by natural selection [,]. This is a rather abstract claim that should ultimately be tested against experiments (in this respect, see [,]). In a subsequent work, we will explore this question in more detail, but a plausible connection can be established with experimental and theoretical results via fMRI in which different measures of cost have been proposed. The brain as an informational system is the subject of active research (see for instance [,,]). For example, “behavior analysis has adopted these tools [Information theory] as a novel means of measuring the interrelations between behavior, stimuli, and contingent outcomes” []. In this context, in [], it was shown that informational measures of contingency appear to be a reasonable basis for predicting behavior. We also refer the reader to the issue devoted to information theory in neuroscience [] for a recent account of this perspective. In the work just mentioned, several papers investigate optimization principles (for instance, the maximum entropy principle [] or the free energy principle; see [,]) as tools for understanding inference, coding, and other brain functionalities. Information theory may help bridge the gaps between disciplines such as psychology, neurobiology, physics, mathematics, and computer science. In the present paper, we adopt a related setting and mathematically formalize information processing in the brain within the framework of optimal transport.
The rationale for this is consistent with the view that some essential brain functionalities such as inference and the coordination of tasks (e.g., auditory and motor activities) involve the transportation of information and that such processes should be efficient, have been subjected to evolutionary pressure, and as a consequence, are (pseudo) optimal. As was already pointed out, our theoretical proposal should be contrasted with experimental results.

It is necessary to observe from the very beginning that information processing and transport are of an intrinsically spatiotemporal nature. Therefore, our proposal should include these two features. In doing so, we expect the spatial part of the optimization to give rise to spatial patterns, for instance network-like or branching hierarchical components, and the temporal part to give rise to temporal structure, such as periodic or synchronized patterns. However, in this paper, we begin by considering only the spatial part, except for a few general remarks. Since our proposal is an attempt to establish a methodological framework to study informational entropy transport in the brain, we deal with spatial aspects first, leaving a study of the dynamical aspects for a subsequent work. Furthermore, in order to simplify the problem, we consider only the one-dimensional case in the mathematical formalism. However, it is certain that the geometry of the brain has to play an essential role in all the processes [], and we extrapolate some of the results we obtain to two and three dimensions.

Now, we provide an overview of the paper. In Sections 2 and 3, we present a general framework for information processing in the brain as an optimal transport of entropy, as well as some mathematical results in the context of the Monge–Kantorovich problem. The main idea is that the quantity being transported is informational entropy rather than some sort of physical mass. In order to provide the mathematical results and for the sake of completeness, we adapt the material on the existence of a solution in the optimal mass transportation case as presented by Bonnotte [] to the informational case. We conclude Section 3 with a derivation of the Monge–Ampère equation in the one-dimensional case. We begin Section 4 by recalling the linearization of the Monge–Ampère equation around the square of the distance function, which involves the Laplacian. Then, we argue that adding a nonlinear term is justified by the physiological nature of transmission along neurons. The resulting model is a semilinear elliptic equation. At the end of that section, we relate the qualitative features of the solutions with the branching structure of neural networks in the brain. In other words, we show that the optimal transport process of informational entropy is consistent with the geometric branching structure of neurons. In Section 5, we elaborate on the relationship between the branching structure of neurons and Murray’s law [], which provides the optimal ratio of the parent to the daughter branch sections, as well as the optimal bifurcation angle. We propose a modified version of Murray’s law when the underlying transport network carries information instead of a fluid. The last section is devoted to concluding remarks, further research, and open questions.

2. The Monge–Kantorovich Problem

We present a general overview of the results on optimal transportation theory needed in this work. For a complete exposition, see [,,] or []. For the sake of completeness, we include a general discussion, omitting the proofs of the results but giving appropriate references. Our presentation closely follows [] and some parts of [].

The original Monge–Kantorovich problem was formulated in the context of mass transport (Monge) or budget allocation (Kantorovich). In a later section, we will adapt the setting to include the transport of informational entropy.

Monge’s problem: Given two probability measures and on and a cost function , the problem of Monge can be stated as follows:

The condition means that T transports onto ; that is, is the push-forward of by T: for any , .

Monge–Kantorovich problem: Monge’s problem might have no solution; hence, it is better to take the following generalization proposed by Leonid Kantorovich: instead of looking for a map, find a measure:

where stands for the set of all transport plans between and , i.e., the probability measures on with marginals and . This problem really extends Monge’s problem. For any transport map T, sending onto yields a measure , which is given by , i.e., the only measure on such that:

and the associated costs of transportation are the same. In this version, it is not difficult to show that there is always a solution ([] or []).
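For reference, the two problems above are usually written as follows in the optimal transport literature. This is a sketch in standard textbook notation: the symbols μ, ν, c, T and π follow the surrounding text, while the domain Ω and the exact layout are assumptions and may differ from Equations (1) and (2).

```latex
% Monge's problem: optimize over transport maps T with T_# mu = nu
\inf_{T \,:\, T_{\#}\mu = \nu} \int_{\Omega} c\bigl(x, T(x)\bigr)\, d\mu(x),
\qquad T_{\#}\mu(B) := \mu\bigl(T^{-1}(B)\bigr) \ \text{for every Borel set } B .

% Monge--Kantorovich problem: optimize over transport plans pi
\min_{\pi \in \Pi(\mu,\nu)} \int_{\Omega \times \Omega} c(x,y)\, d\pi(x,y),
\qquad
\Pi(\mu,\nu) := \bigl\{ \pi \ge 0 \,:\, \pi(A \times \Omega) = \mu(A),\ \pi(\Omega \times B) = \nu(B) \bigr\}.

% Plan induced by a map T: both problems then assign the same cost
\pi_T := (\mathrm{Id}, T)_{\#}\mu,
\qquad
\int c(x,y)\, d\pi_T(x,y) = \int c\bigl(x, T(x)\bigr)\, d\mu(x).
```

The relaxation from maps to plans is what makes existence easy: the set of plans is convex and never empty, since the product measure μ ⊗ ν always belongs to it.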

2.1. Dual Formulation

There is a duality between the Monge–Kantorovich problem (2) and the following problem:

It seems natural to look for a solution of this problem among the pairs that satisfy:

We will write and .

Definition 1.

A function ψ is said to be c-concave if for some function ϕ. In that case, and are called the c-transform of ψ and ϕ, respectively. We also say that is an admissible pair and ψ, are admissible potentials.

Then, the problem becomes:

The function is called a Kantorovich potential between and .

The following proposition, known as the Kantorovich duality principle, relates the Monge–Kantorovich problem (2) to the dual problem (3).

Proposition 1

(Kantorovich duality principle). Let μ and ν be Borel probability measures on X and , respectively. If the cost function is lower semi-continuous and:

then there is a Borel map that is c-concave and optimal for (3). Moreover, the resulting maximum is equal to the minimum of the Monge–Kantorovich problem (2); i.e.,

or:

where,

If is optimal, then almost everywhere for π.

Proof.

A proof of this result can be found in []. □
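As a reading aid, the duality of this subsection can be summarized in the usual textbook form. The sketch below uses the convention in which the c-transform is defined by an infimum; the paper's own Equations (3) to (5) may be normalized differently, so the precise expressions are an assumption.

```latex
% Dual problem: maximize over pairs of potentials constrained by the cost
\sup \Bigl\{ \int \psi \, d\mu + \int \phi \, d\nu \;:\;
             \psi(x) + \phi(y) \le c(x,y) \Bigr\}

% c-transforms, and c-concavity of psi
\psi^{c}(y) := \inf_{x} \bigl[ c(x,y) - \psi(x) \bigr],
\qquad
\phi^{c}(x) := \inf_{y} \bigl[ c(x,y) - \phi(y) \bigr],
\qquad
\psi \ \text{is } c\text{-concave} \iff \psi = \phi^{c} \ \text{for some } \phi .

% Duality: the maximum over admissible pairs equals the Kantorovich minimum
\max_{\psi \ c\text{-concave}} \Bigl\{ \int \psi \, d\mu + \int \psi^{c} \, d\nu \Bigr\}
= \min_{\pi \in \Pi(\mu,\nu)} \int c(x,y)\, d\pi(x,y),

% with equality in the constraint on the support of an optimal plan
\psi(x) + \psi^{c}(y) = c(x,y) \quad \text{for } \pi\text{-a.e. } (x,y).
```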

2.2. Solution in the Real Line: Optimal Transportation Case

For the rest of this section, we only consider the one-dimensional case, as discussed in the Introduction.

Let X and Y be two bounded smooth open sets in and , the probability measures of X and Y, respectively, with , , in X, and in Y. Let and be the cumulative distributions of and , respectively, defined by and .

Proposition 2.

Let h ∈ be a non-negative, strictly convex function. Let μ and ν be Borel probability measures on such that:

If μ has no atom and F and G stand for the cumulative distribution functions of μ and ν, respectively, then:

solves Monge’s problem (1) for the cost:

If π is the induced transport plan, that is:

defined as for , then π is optimal for the Monge–Kantorovich problem (2).

Proof.

A proof of Proposition 2 can be found in []. □
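The content of Proposition 2 can be checked numerically in a discrete analog. The sketch below is illustrative only: it replaces μ and ν by two empirical measures with the same number of equally weighted points (so the no-atom hypothesis is not satisfied literally), in which case the monotone map built from F and G reduces to pairing sorted sources with sorted targets. The function names and sample points are hypothetical.

```python
from itertools import permutations


def monotone_matching(xs, ys):
    """Pair the i-th smallest source point with the i-th smallest target point.

    For equally weighted atoms this is the discrete counterpart of the
    monotone (non-decreasing) map built from the two cumulative distributions.
    """
    return list(zip(sorted(xs), sorted(ys)))


def transport_cost(pairs, h):
    """Total cost: sum of h(x - y) over the matched pairs (x, y)."""
    return sum(h(x - y) for x, y in pairs)


def brute_force_optimum(xs, ys, h):
    """Minimum cost over all one-to-one assignments (only feasible for small n)."""
    return min(transport_cost(zip(xs, perm), h) for perm in permutations(ys))


if __name__ == "__main__":
    # Hypothetical sample points; h(z) = z**2 is non-negative and strictly convex.
    xs = [0.9, 0.1, 0.4, 0.7]
    ys = [1.5, 2.0, 1.1, 3.2]
    h = lambda z: z * z
    print("monotone cost   :", transport_cost(monotone_matching(xs, ys), h))
    print("brute-force cost:", brute_force_optimum(xs, ys, h))
```

With a strictly convex h, the two printed costs should agree up to rounding, which is the discrete shadow of the optimality statement in the proposition.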

In order to get the previous result, one has to consider the functional:

where is some given cost function and stands for the set of all transport plans between and , meaning the probability measures on with marginals and ; i.e.,

or more rigorously:

Our goal is to obtain a result similar to Proposition 2 in the case when entropy transportation is considered instead of mass transportation. This is the content of the next section.

3. Solution in the Real Line: Optimal Entropy Transportation Case

As was pointed out in [], “the fundamental measure in information theory is the entropy. It corresponds to the uncertainty associated with a signal. “Entropy” and “information” are used interchangeably, because the uncertainty about an outcome before it is observed, corresponds to the information gained by observing it” (for a review, see [,] or []). In that context, we will prove the existence of an optimal entropy transport for the cost function satisfying (6) and (7), similarly to the optimal transportation case discussed in the last section. We will also find the Monge–Ampère equation for quadratic cost for this optimal entropy transport. A few words are in order regarding the choice of c. From the mathematical perspective, it considerably simplifies the analysis. As a matter of fact, the optimal transportation problem has not been solved for the general case of nonquadratic costs. On the other hand, from the physiological point of view, it is natural to assume that the energy required to send a signal from one point of the brain to another can be taken as a monotone function of the distance.

Let and be probability measures defined as above, then take , and let the entropy be characterized by Shannon’s proposal:

where , , and are the distribution densities with respect to and , respectively, in the same way as the formulation of the optimal transportation problem states; i.e.,

satisfying (8). We wish (9) to be related to the probability measure in . It is natural to think that in passing from the state characterized by (9) to the one characterized by , where and wish it to be related to the probability measure in . Similarly, and will be the marginals of .

As a first concrete proposal, we consider the following functional:

where c is a spatial cost function. Notice that we have dropped the minus sign, so looking for maximal entropy is equivalent to minimizing the previous expression. The problem is then to find the optimal entropy transport strategy between x and y (analogous to Monge’s problem). There is, however, a standard difficulty: in the continuous case, the entropy can be negative, whereas in the discrete case (i.e., for discrete probability functions), the entropy is always non-negative. We therefore consider the absolute value of the entropy defined in (9). More precisely, define the following:

with:

It is natural to assume that:

for some constant , and let:

with:

Observation 1.

If is the distribution density of in , then (11) is a well-defined Borel probability measure on , and:

is also a well-defined Borel probability measure on . Then, we can define:

with and given by (11) and (12); or more rigorously:

with and given by (11) and (12); we can take the cumulative distributions of and , respectively, by and with and given as before. By definition, they are non-decreasing.

Now, we are in a position to state the analog of Monge’s problem (1), namely:

and analogous to the Monge–Kantorovich problem:

Next, we present the analog of Proposition 2 for the measures given by (11) and (12), namely:

Proposition 3.

Let h∈ be a non-negative, strictly convex function. Let μ and ν be Borel probability measures on given by (11) and (12), respectively. Suppose that:

for . If μ has no atom and F and G represent the corresponding cumulative distribution functions of μ and ν, respectively, then:

solves Problem (14) for the cost:

If π is the induced entropy transport plan, that is:

defined as for , then π is optimal for Problem (15).

Proof.

The proof of this result is adapted from [] and for the sake of completeness is given in Appendix A. □
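To make the construction of Proposition 3 concrete, the following sketch builds an entropy-weighted measure on a grid and then the monotone map obtained by composing the generalized inverse of G with F. It rests on a loudly stated assumption: the measure is taken to have density proportional to |f log f|, normalized by its total, which is one reading of the text and not necessarily the exact form of (11) and (12). The densities, grid and function names are hypothetical.

```python
import math
from bisect import bisect_left


def midpoint_grid(n):
    """Midpoints of n equal cells of the unit interval (0, 1)."""
    return [(i + 0.5) / n for i in range(n)]


def entropy_weighted_cdf(density, grid):
    """Cumulative distribution of a measure with density proportional to |f log f|.

    ASSUMPTION: normalizing |f log f| by its total mass is illustrative and
    may differ from the definitions (11) and (12) of the paper.
    """
    values = [density(x) for x in grid]
    weights = [abs(v * math.log(v)) if v > 0 else 0.0 for v in values]
    total = sum(weights)
    cdf, running = [], 0.0
    for w in weights:
        running += w / total
        cdf.append(running)
    return cdf


def monotone_map(cdf_source, cdf_target, grid_target):
    """Discrete version of T = G^{-1} o F.

    For each source node, return the first target node y with G(y) >= F(x).
    """
    image = []
    for level in cdf_source:
        j = min(bisect_left(cdf_target, level), len(grid_target) - 1)
        image.append(grid_target[j])
    return image


if __name__ == "__main__":
    grid = midpoint_grid(200)
    F = entropy_weighted_cdf(lambda x: 2.0 * x, grid)      # hypothetical source density
    G = entropy_weighted_cdf(lambda y: 3.0 * y * y, grid)  # hypothetical target density
    T = monotone_map(F, G, grid)
    print(T[:5], T[-5:])
```

Because F and G are non-decreasing, the resulting map is non-decreasing as well, which is the structural property used in the proof in Appendix A.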

Our next goal is to deduce the Monge–Ampère equation for this case. In order to do that, we will need an analog of the Kantorovich duality principle (Proposition 1) for the measures given by (11) and (12), namely:

Proposition 4.

Let μ and ν be the Borel probability measures on given by (11) and (12), respectively. If the cost function:

is lower semi-continuous and:

then there is a Borel map that is c-concave and optimal for (3). Moreover, the resulting maximum is equal to the minimum of Problem (15); i.e.,

or:

where,

and if given by (13) is optimal, then almost everywhere for π.

Proof.

A proof of this result can be found in Appendix A. □

If we propose , we wish T to be expressed as for some convex function and then to be able to find the corresponding Monge–Ampère equation related to the measures and given by (11) and (12). This fact is guaranteed by Brenier’s theorem. The details of its proof adapted for our case are important and can be found in Appendix A.

Theorem 1

(Brenier). Let μ and ν be the Borel probability measures on given by (11) and (12), respectively, and with finite second-order moments; that is, such that:

Then, if μ is absolutely continuous on Ω, there exists a unique

such that and:

with given by (13). Moreover, there is only one optimal transport plan, γ, which is necessarily , and T is the gradient of a convex function φ, which is therefore unique up to an additive constant. There is also a unique (up to an additive constant) Kantorovich potential, ψ, which is locally Lipschitz and linked to φ through the relation:

Proof.

See Appendix A. □

Observation 2.

Observe that Theorem 1 holds for the general case on .

The Monge–Ampère Equation

Let:

be two probability measures, absolutely continuous with respect to the Lebesgue measure. By Theorem 1, there exists a unique gradient of a convex function, , such that:

for all test functions . Since is strictly convex, then is and one-to-one. Hence, taking , we get:

From (20) and (21), we get:

and the Monge–Ampère equation:

corresponding to this case.
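The mechanism behind this derivation is a change of variables, which can be sketched for two generic densities. In the block below, f and g are placeholder densities for the source and target measures (for the measures of this section they would be the densities associated with (11) and (12)), ζ is a test function, and T = φ′ is the optimal map of Theorem 1; these names are assumptions made for illustration.

```latex
% Push-forward condition tested against zeta
\int \zeta\bigl(\varphi'(x)\bigr)\, f(x)\, dx = \int \zeta(y)\, g(y)\, dy .

% Change of variables y = \varphi'(x), dy = \varphi''(x)\, dx on the right-hand side
\int \zeta(y)\, g(y)\, dy
  = \int \zeta\bigl(\varphi'(x)\bigr)\, g\bigl(\varphi'(x)\bigr)\, \varphi''(x)\, dx .

% Since zeta is arbitrary, the integrands agree: Monge--Ampere in one dimension
f(x) = g\bigl(\varphi'(x)\bigr)\, \varphi''(x).

% Formal counterpart in higher dimensions
\det D^{2}\varphi(x) = \frac{f(x)}{g\bigl(\nabla \varphi(x)\bigr)} .
```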

4. Neural Branching Structure and the Linearization of the Monge–Ampère Equation

As was pointed out in the Introduction, the purpose of this section is to propose a model for the branching structure of the neurons, which is consistent with the process of information transport previously introduced. The basic idea is as follows. If we consider that information transport is optimized in some brain processes, we consequently have (as discussed in the previous section) an associated Monge–Ampère equation for the transport plan potential. Besides, it is natural to consider a cost that is close to some power of the distance function, since physiological cost can be taken to depend on the distance traveled by the corresponding signal, as is usually assumed in transport networks. For technical reasons, and in order to be able to adapt results well known in the literature, we take this cost function to be close to:

From a qualitative perspective, this choice should not change the results much, as long as the cost function remains convex (see []). If this is the case, we can then compute the linearization of the Monge–Ampère equation around this quadratic cost function. The resulting equation is a linear elliptic equation. We argue that a self-activating mechanism should be incorporated in the form of a nonlinear term (as a result of the excitable nature of the transport of electric impulses along axons). In this way, we end up with a semilinear equation that can be used to explain branching processes in biological networks (see []). More specifically, the solution to this equation could be associated with the concentration of a morphogen, e.g., a growth factor, and if such a concentration is above a certain threshold, a branching mechanism is triggered. It is then consistent to look for solutions that are close to the quadratic cost function and therefore to linearize around it.

In order to linearize the Monge–Ampère equation, we assume then that is very close to , so is very close to . In that case, following [,,], make:

and:

with and . We leave the details of this computation for Appendix B. Substituting (24) and (25) in the Monge–Ampère Equation (23), we get as the linearized operator:

with:

Then, the Laplacian plus a transport term can be seen as the linearized version of the Monge–Ampère equation for our proposal. We notice that the main mechanism responsible for the flow of information along the axons is the propagation of electrical impulses. This is well known to be an excitable process that involves, among others, a self-activating component, as for instance in the standard Hodgkin–Huxley or FitzHugh–Nagumo models. If we include this into the linearization previously obtained (Equation (26)), we get:

where F is a function describing the self-activating mechanism and can typically be taken as a power of :

with . Solutions of this type of equation have been studied by many authors since the pioneering work by Ni and Takagi ([] and the references therein), since they appear in different contexts. These solutions typically exhibit concentrations that can be responsible for branching structures.

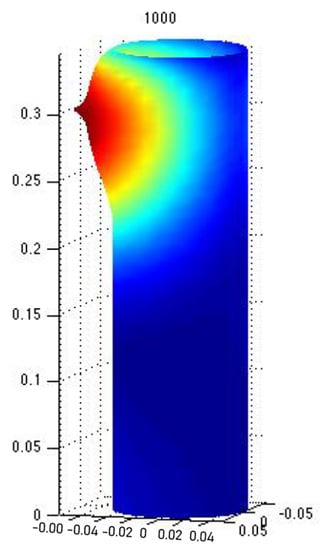

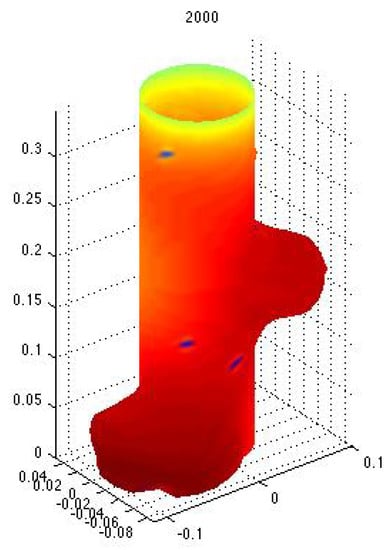

Indeed, if one assumes that the concentration of a solution to the previous equation is correlated with a growth factor morphogen, then a branch will stem out of the main branch. This or similar models have been proposed using reaction-diffusion models following Turing’s original proposal ([] or []), for pattern formation; in particular for branching structures in plants ([,] or []), lungs ([]), and other vascular systems ([,]). Figure 1 and Figure 2 show numerical simulations for a particular case of the linearization of the Monge–Ampère equation. Growth is induced by the concentration of the solution, and it can be seen that the process gives rise to lateral branches.

Figure 1.

Numerical solution of the linearization of the Monge–Ampère equation including growth. Branching occurs where the concentration of the solution (the morphogen) exceeds a certain threshold (the color code follows a standard heat map: red, high; blue, low). This simulation was provided by Jorge Castillo-Medina and developed in COMSOL. For more details, the reader is referred to [] and the references therein.

Figure 2.

A similar simulation as in the previous figure with a different growth rate. Notice the different branching structure. Simulation performed by J. Castillo as well; see also [].

5. Murray’s Law and Neural Branching

In the previous section, we argued that neuronal branching is compatible with reaction-diffusion processes. On the other hand, we deduced the corresponding equations by considering the transport of information along neural networks. The question of whether there is some connection with Murray’s law arises naturally. Recall that Murray’s law refers to a transport network ([,]).

In his original paper [], Murray obtained from optimization considerations a relationship between the different parameters associated with a branching transport network, which was later generalized in []. In what follows, we deduce it from scratch for the sake of completeness (we refer the reader to [,,] for further details). The total power required for the flow to overcome the viscous drag is described by:

where is the dynamic viscosity of the fluid, L is the vessel length, f is the volumetric flow rate, m is an all-encompassing metabolic coefficient that includes the chemical cost of keeping the blood constituents fresh and functional and the general cost owing to the weight of the blood and the vessel, and r is the vessel radius. For our purposes, we modify Equation (27) as follows:

where the first term corresponds to the power required for an electrical impulse to propagate along the axon. Notice in particular that the factor of r² in the denominator follows from the fact that electrical resistance is inversely proportional to the cross-sectional area of the conducting material. On the other hand, the second term is proportional to a power, , of the radius and describes the fact that metabolic cost can vary depending on the type of neurons with which we are dealing. For instance, the degree of myelination of the axon could determine the effective cost associated with information transport. The minimum power is found by differentiating with respect to r and equating to zero:

With this, the optimal radius:

and the optimal relation between volumetric flow rate and vessel radius, such that the power requirement is minimized, is obtained with:

where .
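The minimization step can be illustrated numerically. The sketch below assumes a cost per unit length of the form a·f²/r² + b·r^α, which matches the verbal description above (a transmission term with r² in the denominator plus a maintenance term proportional to a power of the radius) but is not guaranteed to coincide with the paper's Equation (28); the constants a and b, the exponent name alpha and all function names are hypothetical. Under this assumption, setting the derivative to zero gives r^(α+2) = 2af²/(αb), so that f scales as r^((α+2)/2).

```python
import math


def power_per_length(r, f, a, b, alpha):
    """ASSUMED cost: transmission term a*f**2/r**2 plus maintenance term b*r**alpha."""
    return a * f * f / (r * r) + b * r ** alpha


def optimal_radius(f, a, b, alpha):
    """Closed-form minimizer from dP/dr = -2*a*f**2/r**3 + alpha*b*r**(alpha-1) = 0."""
    return (2.0 * a * f * f / (alpha * b)) ** (1.0 / (alpha + 2.0))


def golden_section_min(func, lo, hi, tol=1e-10):
    """Minimize a unimodal function on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c = hi - inv_phi * (hi - lo)
    d = lo + inv_phi * (hi - lo)
    while hi - lo > tol:
        if func(c) < func(d):
            hi, d = d, c
            c = hi - inv_phi * (hi - lo)
        else:
            lo, c = c, d
            d = lo + inv_phi * (hi - lo)
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    f, a, b, alpha = 1.5, 1.0, 2.0, 2.0  # hypothetical parameter values
    numeric = golden_section_min(lambda r: power_per_length(r, f, a, b, alpha), 0.01, 10.0)
    print("numerical minimizer  :", numeric)
    print("closed-form minimizer:", optimal_radius(f, a, b, alpha))
```

If the flow is conserved at a bifurcation, this scaling would yield a branching relation of the form r₀^((α+2)/2) = r₁^((α+2)/2) + r₂^((α+2)/2) for the assumed cost; whether this matches the generalized law stated next depends on the exact form of the paper's cost.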

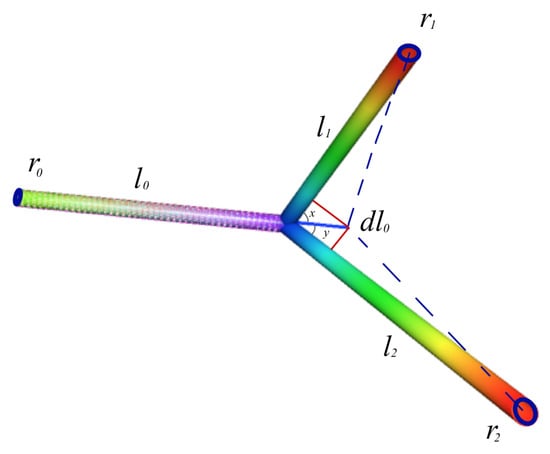

Using the construction of [], where () is the radius of the main branch, (, ) are the radii of the lateral branches, and x and y are the angles between the lateral branches and the main branch, we obtain a generalized version of Murray’s law:

thus, we get the general law:

for . Using these relations, we obtain three general equations associated with the branching angles (see Figure 3) x, y, and :

which correspond to a different generalized Murray’s law for different values of .

Figure 3.

The figure shows schematically the geometric configuration when branching occurs on a plane. Illustration after [] by the authors.

Observation 3.

If , then by (32)–(34), we get and then , which is consistent with the fact that , then and , and then, . This case corresponds to no branching. In terms of the cost, this would imply that it is more efficient for the network not to bifurcate.

If , then , , and , which corresponds to Murray’s original proposal [], which states that the angle in the bifurcation of an artery should not be less than ( to be more exact). This is consistent with the numerical and experimental results in [].

If , we get and then . On the other hand, , then since ; this implies that and . Similarly, . We obtain that and for this case. In other words, for , the angle between the bifurcated branches is , but orthogonal branching is ruled out.

We conclude then that the relevant values for our purposes are for . It would be interesting to contrast these possible scenarios with experimental data for different kinds of nervous tissues. To our knowledge, no systematic experimental study of branching angles has been carried out.

6. Conclusions

We proposed that information flow in some brain processes can be analyzed in the framework of optimal transportation theory. A Monge–Ampère equation was obtained for the optimal transportation plan potential in the one-dimensional case. Extrapolating to higher dimensions, the corresponding linearization around a quadratic distance cost was derived and shown to be consistent with the branching structure of the nervous system. Finally, a generalized version of Murray’s law was derived assuming different cost functions, depending on a parameter related to the metabolic maintenance term. Future work includes a detailed comparison of the methodological proposal with experimental data. In particular, it would be interesting to carry out the program proposed here in a concrete cognitive experiment. Possible concrete experiments to compare with can be found in [,,]. Here, we outline a simple procedure with fMRI data in which a direct connection with optimal transport theory can be tested. Consider the brain activity map for the resting state given by standard fMRI. Once normalized, this map will provide the initial probability density and entropy to be transported. In fact, in [], another possible methodology for measuring the entropy with fMRI can be found. Later on, the subject is asked to perform a simple motor task, for instance, moving the right hand. The corresponding density after the task is done can then be registered as before and will provide the final density in the optimal transport problem. Some intermediate densities should be determined as well. This information will provide a transport plan that can be compared with the mathematical solution of the problem. Correspondingly, the branching structures and their bifurcation angles and radii should be compared with experimental results as well. In principle, Murray’s law should be consistent with the Monge–Ampère equation and its linearization, and it should be possible to derive it from them.
A precise relationship between the maximum entropy principle and optimal entropy transport should be clarified.
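The first step of the fMRI procedure outlined above, normalizing an activity map and measuring its entropy, can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: the activity map is a flat list of non-negative values (one per voxel or region), and the discrete Shannon entropy in nats is used; the function names and the sample values are hypothetical and do not come from real data.

```python
import math


def normalize(activity):
    """Turn non-negative activity values into a discrete probability distribution."""
    total = sum(activity)
    if total <= 0:
        raise ValueError("activity map must have positive total mass")
    return [a / total for a in activity]


def shannon_entropy(p):
    """Discrete Shannon entropy in nats, with the convention 0 log 0 = 0."""
    return -sum(q * math.log(q) for q in p if q > 0)


if __name__ == "__main__":
    rest = normalize([4.0, 3.0, 2.0, 1.0])  # hypothetical resting-state map
    task = normalize([1.0, 1.0, 3.0, 5.0])  # hypothetical map after the motor task
    print("entropy at rest   :", shannon_entropy(rest))
    print("entropy after task:", shannon_entropy(task))
```

The two normalized maps would play the role of the initial and final densities in the transport problem.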

Author Contributions

All authors contributed equally to the manuscript: read and approved the final version, conceptualization, methodology, software, validation, formal analysis, investigation, resources, writing–original draft preparation, writing–review and editing, visualization, supervision and project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

M.A.P. was supported by Universidad Autónoma de la Ciudad de México, sabbatical approval UACM/CAS/010/19. This work was supported by the Departamento de Matemáticas y Mecánica (MyM) of the Instituto de Investigaciones en Matemáticas Aplicadas y en Sistemas (IIMAS) of the Universidad Nacional Autónoma de México (UNAM). M.A.P. would like to thank P.P. and IIMAS-UNAM for the support during his sabbatical leave. The authors would also like to thank Jorge Castillo-Medina at the Universidad Autónoma de Guerrero in Acapulco for his kind permission to use Figure 1 and Figure 2.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Relevant Theorems and Some Proofs

Theorem A1

(The continuity and support theorem). Let μ be a probability measure on with cumulative distribution function F. The following properties are equivalent:

- a. The function F is strictly increasing in the interval:

- b. The inverse function is continuous.

- c. The inverse measure is non-atomic.

- d. The support of μ, given by: with Σ a σ-algebra defined on , is a closed interval in the real line, finite or not.

Proof.

The proof of this result can be found in [], Appendix A. □

Proposition A1.

Let h ∈ be a non-negative, strictly convex function. Let μ and ν be Borel probability measures on given by (11) and (12), respectively. Suppose that:

for . If μ has no atom and F and G represent the cumulative distribution functions of μ and ν, respectively, then:

solves Problem (14) for the cost:

If π is the induced entropy transport plan, that is:

defined as for , then π is optimal for Problem (15).

Proof.

- T is well defined: The only problem we might have with the definition of could be when . However, if , then: but if for some , we have , then , which means that and T is well defined, as desired.

- : Let F and G be defined as in Observation 1. Then, is non-decreasing, since F and G are non-decreasing. Then: Since T is non-decreasing, is an interval. Claim 1. Since has no atom, F is increasing and continuous, and then, is a closed interval. If has no atom, let and such that . Then, given such that , there exists such that . Then, such that , there exists such that: then for all such that , and so, F is increasing and continuous; Theorem A1 implies that is continuous, and then: is closed. In particular, is closed. Then, we conclude that is a closed interval, as desired. We have proven Claim 1. Now, if , then , and we have: and then, , as desired.

- is optimal: Observe that given by (11) and (12) satisfy: where: and: then as given by (13). Now, observe that: since T and are non-decreasing, for . Then: On the other hand, for , and then: As a consequence, in any case, we have: Set: then (A2) becomes: which implies: as a consequence, is c-concave, according to Definition 1. Hypothesis A1 implies the existence of , such that: Claim 2: and : Observe that: and: hence: then, by (A4) and (A5), now, by Hypothesis A3 and since : therefore, integrating (A6) with respect to , we get: as a consequence: and we can conclude that . Similarly: by (A4) and (A5); using (A3) and (A7), if we integrate with respect to , therefore: Hence, . We have proven Claim 2. Integrating with respect to , we get: and then: Finally, observe that for every ; if is another entropy transport plan, the associated total entropy transport cost is greater, by the definition of and ; then, the equality holds only for the optimal entropy transport plan and ; hence, it solves Problem (14), and solves Problem (15). We have proven the proposition. □

Proposition A2.

Let μ and ν be the Borel probability measures on given by (11) and (12), respectively. If the cost function:

is lower semi-continuous and:

then there is a Borel map that is c-concave and optimal for (3). Moreover, the resulting maximum is equal to the minimum of Problem (15); i.e.,

or:

where,

and if given by (13) is optimal, then almost everywhere for π.

Proof.

The existence of a maximizing pair and Relation (A8) have been proven in Proposition A1. Now, choosing an optimal , we have:

and since in , it must vanish for -a.e. .

The proof is completed. □

Theorem A2

(Brenier). Let μ and ν be the Borel probability measures on given by (11) and (12), respectively, and with finite second-order moments; that is, such that:

Then, if μ is absolutely continuous on Ω, there exists a unique such that and:

with given by (13). Moreover, there is only one optimal transport plan γ, which is necessarily , and T is the gradient of a convex function φ, which is therefore unique up to an additive constant. There is also a unique (up to an additive constant) Kantorovich potential, ψ, which is locally Lipschitz and linked to φ through the relation:

Proof.

Claim 1: , and it is optimal:

Set . Proposition A1 guarantees the existence of the pair as given by (A9) such that for all ; then:

and then:

Set:

Hypothesis A10 means:

then:

hence, is always finite in given by (13). As a consequence,

where , given by (18), and:

Then, the Kantorovich duality principle (4) becomes:

Now, since ; hence:

is called the Legendre transform of and satisfies:

It can be proven that (see []; Theorem 2.9); now, Proposition A1 guarantees the existence of an optimal transport plan . As a consequence, Relation (A13) implies:

and then:

for all . However, by the definition of the Legendre transform. Then, for -a.e. , we have:

(by symmetry, we can also conclude that ). Now, since is convex, it can be proven that it is locally Lipschitz and continuous; Rademacher’s theorem (see []; Section 3.1.2) implies then that is differentiable -a.e. on . As a consequence:

One can prove that (see []). Therefore, , and it is optimal.

We have proven Claim 1.
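The role of the Legendre transform in Claim 1 can be illustrated numerically in one dimension. The sketch below is not the authors' construction: it assumes a hypothetical convex function φ(x) = x⁴/4 + x²/2, approximates φ*(y) = sup_x (xy − φ(x)) by a maximum over a grid, and checks the Fenchel–Young inequality φ(x) + φ*(y) ≥ xy, which becomes an equality (up to rounding) when y = φ′(x).

```python
# Discrete Legendre transform on a grid (illustrative sketch, 1D only).

def phi(x):
    # Hypothetical convex function chosen for illustration.
    return 0.25 * x**4 + 0.5 * x**2

def dphi(x):
    # Derivative of phi.
    return x**3 + x

# Grid on [-2, 2] with step 0.001.
GRID = [i / 1000.0 for i in range(-2000, 2001)]

def legendre(y):
    # phi*(y) = sup_x (x*y - phi(x)), approximated by a maximum over the grid.
    return max(x * y - phi(x) for x in GRID)

def fenchel_young_gap(x, y):
    # phi(x) + phi*(y) - x*y, non-negative by the Fenchel-Young inequality.
    return phi(x) + legendre(y) - x * y

x0 = 0.7
gap_on_graph = fenchel_young_gap(x0, dphi(x0))          # y = phi'(x0)
gap_off_graph = fenchel_young_gap(x0, dphi(x0) + 1.0)   # y != phi'(x0)
print(gap_on_graph, gap_off_graph)
```

The first gap is zero up to floating-point rounding, while the second is strictly positive, which is the one-dimensional picture behind the statement that equality in the duality relation forces y to be the gradient of the convex potential at x.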

Claim 2: is the only transport plan:

Let be another convex function such that .

We want to prove -a.e. .

By Claim 1, is an optimal transport plan, and the pair is optimal for the dual problem, just like . Therefore:

Let be the optimal transport plan associated with . Then:

hence:

and:

since , we conclude:

-a.e. on . Hence, by (A14):

Now, since is differentiable -a.e. on :

Claim 2 has been proven.

Finally, we have proven not only the uniqueness of the solution of Problem (15) (analogous to the Monge–Kantorovich problem), but also the uniqueness of the gradient of a convex function such that and:

We have proven the theorem.

□
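In one dimension, the content of Theorem A2 reduces to a statement that is easy to check by brute force: for the quadratic cost, the optimal map is non-decreasing, as the derivative of a convex function must be. The sketch below assumes two hypothetical empirical measures with equal weights on distinct points, so it illustrates a discrete analogue rather than the absolutely continuous case covered by the theorem. It enumerates all bijections and compares the minimizers with the sorted (monotone) matching.

```python
from itertools import permutations

# Hypothetical source and target points (equal weights 1/n each).
xs = [0.1, 0.9, 0.4, 1.7, 1.2]
ys = [2.0, 0.3, 1.1, 0.6, 2.8]

def quadratic_cost(perm):
    # Average of |x_i - y_perm(i)|^2 / 2 over the matching i -> perm[i].
    n = len(xs)
    return sum(0.5 * (xs[i] - ys[perm[i]]) ** 2 for i in range(n)) / n

# Brute force over all bijections between the two point sets.
costs = {perm: quadratic_cost(perm) for perm in permutations(range(len(ys)))}
best_cost = min(costs.values())
minimizers = [p for p, c in costs.items() if abs(c - best_cost) < 1e-12]

# Monotone matching: the k-th smallest x is sent to the k-th smallest y.
x_order = sorted(range(len(xs)), key=lambda i: xs[i])
y_order = sorted(range(len(ys)), key=lambda j: ys[j])
monotone = [None] * len(xs)
for i, j in zip(x_order, y_order):
    monotone[i] = j
monotone = tuple(monotone)

print(minimizers, monotone)
```

For these points the brute-force search returns a single minimizer, and it coincides with the monotone matching, mirroring both the existence and the uniqueness parts of the theorem in this simplified setting.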

Appendix B. Linearization of the Monge–Ampère Equation

Assume that is very close to , so is very close to . In that case, following [,,], set:

and:

with . Then, by the Neumann series:

On the other hand, it is easy to see that:

Definition A1.

The term is called the linearization of the Monge–Ampère equation, where is the matrix of co-factors of .
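The appearance of the cofactor matrix in Definition A1 comes from the first-order expansion of the determinant. In generic notation, which is an assumption here and need not coincide with the symbols of (A16) and (A17), for a smooth convex function $\varphi$ with invertible Hessian, a perturbation $w$, and a small parameter $\varepsilon$, one has:

```latex
% First-order expansion of the determinant (Jacobi's formula); the notation is illustrative.
\det\bigl(D^2\varphi + \varepsilon D^2 w\bigr)
  = \det D^2\varphi
  + \varepsilon\, \operatorname{tr}\bigl(\Phi\, D^2 w\bigr)
  + O(\varepsilon^2),
\qquad
\Phi = \operatorname{cof}\bigl(D^2\varphi\bigr)
     = \det\bigl(D^2\varphi\bigr)\,\bigl(D^2\varphi\bigr)^{-1}.

% Special case D^2\varphi = I, i.e. \varphi(x) = |x|^2/2:
% \Phi = I and the first-order term is the Laplacian of w.
\det\bigl(I + \varepsilon D^2 w\bigr) = 1 + \varepsilon\, \Delta w + O(\varepsilon^2).
```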

Then:

Substituting (A16) and (A17) into the Monge–Ampère Equation (22) and omitting higher-order terms, we get:

and:

by using the first-order Taylor expansion. As a consequence, (A18) becomes:

then:

and then:

omitting again the second-order terms, we get:

or simply:

with:

We have proven that the Laplace equation can be seen as the linearized version of the Monge–Ampère equation for our proposal.
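The conclusion can be sanity-checked numerically in two dimensions. The sketch below is an illustration under assumptions, not part of the derivation: it takes a hypothetical symmetric matrix H standing in for the Hessian of the perturbation at one point and verifies that det(I + εH) − 1 − ε tr(H) equals ε² det(H), so that what remains after the trace (Laplacian) term is of second order in ε.

```python
def det2(m):
    # Determinant of a 2x2 matrix given as nested lists.
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def trace2(m):
    # Trace of a 2x2 matrix.
    return m[0][0] + m[1][1]

# Hypothetical symmetric Hessian of the perturbation at one point.
H = [[0.8, -0.3],
     [-0.3, 1.5]]

def remainder(eps):
    # det(I + eps*H) minus its first-order approximation 1 + eps*tr(H).
    perturbed = [[1.0 + eps * H[0][0], eps * H[0][1]],
                 [eps * H[1][0], 1.0 + eps * H[1][1]]]
    return det2(perturbed) - (1.0 + eps * trace2(H))

# In 2D the remainder is exactly eps^2 * det(H), so remainder / eps^2 is constant.
ratios = [remainder(eps) / eps**2 for eps in (1e-1, 1e-2, 1e-3)]
print(ratios, det2(H))
```

The printed ratios agree with det(H) up to rounding, which confirms that dropping the second-order terms leaves only the trace of the Hessian, that is, the Laplacian of the perturbation.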

References

- Jensen, G.; Ward, R.D.; Balsam, P.D. Information: Theory, brain, and behavior. J. Exp. Anal. Behav. 2013, 100, 408–431.

- Pregowska, A.; Szczepanski, J.; Wajnryb, E. Temporal code versus rate code for binary Information Sources. Neurocomputing 2016, 216, 756–762.

- Pregowska, A.; Szczepanski, J.; Wajnryb, E. How Far can Neural Correlations Reduce Uncertainty? Comparison of Information Transmission Rates for Markov and Bernoulli Processes. Int. J. Neural Syst. 2019, 29, 1950003.

- Harris, J.J.; Jolivet, R.; Engl, E.; Attwell, D. Energy-Efficient Information Transfer by Visual Pathway Synapses. Curr. Biol. 2015, 25, 3151–3160.

- Harris, J.J.; Engl, E.; Attwell, D.; Jolivet, R.B. Energy-efficient information transfer at thalamocortical synapses. PLoS Comput. Biol. 2019, 15, 1–27.

- Keshmiri, S. Entropy and the Brain: An Overview. Entropy 2020, 22, 917.

- Salmasi, M.; Stemmler, M.; Glasauer, S.; Loebel, A. Synaptic Information Transmission in a Two-State Model of Short-Term Facilitation. Entropy 2019, 21, 756.

- Crumiller, M.; Knight, B.; Kaplan, E. The Measurement of Information Transmitted by a Neural Population: Promises and Challenges. Entropy 2013, 15, 3507–3527.

- Panzeri, S.; Piasini, E. Information Theory in Neuroscience. Entropy 2019, 21, 62.

- Isomura, T. A Measure of Information Available for Inference. Entropy 2018, 20, 512.

- Friston, K. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 2010, 11, 127–138.

- Ramstead, M.J.D.; Badcock, P.B.; Friston, K. Answering Schrödinger’s question: A free-energy formulation. Phys. Life Rev. 2016, 24, 1–16.

- Luczak, A. Measuring neuronal branching patterns using model-based approach. Front. Comput. Neurosci. 2010, 4, 135.

- Bonnotte, N. Unidimensional and Evolution Methods for Optimal Transportation. Ph.D. Thesis, Scuola Normale Superiore di Pisa and Université Paris-Sud XI, Orsay, France, 2013.

- Stephenson, D.; Patronis, A.; Holland, D.M.; Lockerby, D.A. Generalizing Murray’s Law: An optimization principle for fluidic networks of arbitrary shape and scale. J. Appl. Phys. 2015, 118, 174302.

- Villani, C. Topics in Optimal Transportation, 1st ed.; Graduate Studies in Mathematics; American Mathematical Society: Providence, RI, USA, 2003; Volume 58.

- Villani, C. Optimal Transport: Old and New, 1st ed.; Grundlehren der Mathematischen Wissenschaften: A Series of Comprehensive Studies in Mathematics; Springer: Berlin/Heidelberg, Germany, 2009; Volume 338.

- Evans, L.C. Partial Differential Equations and Monge–Kantorovich Mass Transfer. Curr. Dev. Math. 1997, 1997, 65–126.

- Shannon, C. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Applebaum, D. Probability and Information: An Integrated Approach, 2nd ed.; Cambridge University Press: Cambridge, UK, 2010.

- Alarcón, T.; Castillo, J.; García-Ponce, B.; Padilla, P. Growth rate and shape as possible control mechanisms for the selection of mode development in optimal biological branching processes. Eur. Phys. J. Spec. Top. 2016, 225, 2581–2589.

- Gutierrez, C.E.; Caffarelli, L.A. Properties of the solutions of the linearized Monge–Ampère equation. Am. J. Math. 1997, 119, 423–465.

- Gutiérrez, C.E. The Monge–Ampère Equation, 2nd ed.; Progress in Nonlinear Differential Equations and Their Applications; Birkhäuser: Basel, Switzerland, 2016; Volume 89.

- Ni, W.M. Diffusion, cross-diffusion, and their spike-layer steady states. Not. AMS 1998, 45, 9–18.

- Turing, A.M. The Chemical Basis of Morphogenesis. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1952, 237, 37–72.

- Zhu, X.; Yang, H. Turing Instability-Driven Biofabrication of Branching Tissue Structures: A Dynamic Simulation and Analysis Based on the Reaction–Diffusion Mechanism. Micromachines 2018, 9, 109.

- Meinhardt, H.; Koch, A.J.; Bernasconi, G. Models of pattern formation applied to plant development. In Symmetry in Plants; Series in Mathematical Biology and Medicine: Volume 4; World Scientific: Singapore, 1998; pp. 723–758.

- Cortes-Poza, Y.; Padilla-Longoria, P.; Alvarez-Buylla, E. Spatial dynamics of floral organ formation. J. Theor. Biol. 2018, 454, 30–40.

- Barrio, R.A.; Romero-Arias, J.R.; Noguez, M.A.; Azpeitia, E.; Ortiz-Gutiérrez, E.; Hernández-Hernández, V.; Cortes-Poza, Y.; Álvarez-Buylla, E. Cell Patterns Emerge from Coupled Chemical and Physical Fields with Cell Proliferation Dynamics: The Arabidopsis thaliana Root as a Study System. PLoS Comput. Biol. 2013, 9, e1003026.

- Serini, G.; Ambrosi, D.; Giraudo, E.; Gamba, A.; Preziosi, L.; Bussolino, F. Modeling the early stages of vascular network assembly. EMBO J. 2003, 22, 1771–1779.

- Köhn, A.; de Back, W.; Starruß, J.; Mattiotti, A.; Deutsch, A.; Perez-Pomares, J.M.; Herrero, M.A. Early Embryonic Vascular Patterning by Matrix-Mediated Paracrine Signalling: A Mathematical Model Study. PLoS ONE 2011, 6, e24175.

- Murray, C. The Physiological Principle of Minimum Work: I. The Vascular System and the Cost of Blood Volume. Proc. Natl. Acad. Sci. USA 1926, 12, 207–214.

- Murray, C. The Physiological Principle of Minimum Work Applied to the Angle of Branching of Arteries. J. Gen. Physiol. 1926, 9, 835–841.

- McCulloh, K.A.; Sperry, J.S.; Adler, F.R. Water transport in plants obeys Murray’s law. Nature 2003, 421, 939–942.

- Zheng, X.; Shen, G.; Wang, C.; Li, Y.; Dunphy, D.; Tawfique Hasan, T.; Brinker, C.J.; Su, B.L. Bio-inspired Murray materials for mass transfer and activity. Nat. Commun. 2017, 8, 14921.

- Özdemir, H.I. The structural properties of carotid arteries in carotid artery diseases—A retrospective computed tomography angiography study. Pol. J. Radiol. 2020, 85, e82–e89.

- Wang, Z.; Li, Y.; Childress, A.R.; Detre, J.A. Brain Entropy Mapping Using fMRI. PLoS ONE 2014, 9, e89948.

- Bobkov, S.; Ledoux, M. One-Dimensional Empirical Measures, Order Statistics, and Kantorovich Transport Distances, 1st ed.; Memoirs of the American Mathematical Society; American Mathematical Society: Providence, RI, USA, 2016; Volume 261.

- Evans, L.C.; Gariepy, R.F. Measure Theory and Fine Properties of Functions, Revised ed.; Textbooks in Mathematics; CRC Press: Boca Raton, FL, USA, 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).