Abstract

In this paper, we consider a surrogate modeling approach using a data-driven nonparametric likelihood function constructed on a manifold on which the data lie (or to which they are close). The proposed method represents the likelihood function using a spectral expansion formulation known as the kernel embedding of the conditional distribution. To respect the geometry of the data, we employ this spectral expansion using a set of data-driven basis functions obtained from the diffusion maps algorithm. The theoretical error estimate suggests that the error bound of the approximate data-driven likelihood function is independent of the variance of the basis functions, which allows us to determine the amount of training data needed for accurate likelihood function estimation. Supporting numerical results demonstrating the robustness of the data-driven likelihood functions for parameter estimation are given on instructive examples involving stochastic and deterministic differential equations. When the dimension of the data manifold is strictly less than the dimension of the ambient space, we found that the proposed approach (which does not require knowledge of the data manifold) is superior to likelihood functions constructed using standard parametric basis functions defined on the ambient coordinates. In an example where the data manifold is not smooth and unknown, the proposed method is more robust than an existing polynomial chaos surrogate model that assumes a parametric likelihood, the non-intrusive spectral projection. In fact, whenever direct MCMC can be performed, the estimation accuracy is comparable to direct MCMC estimates while requiring only eight likelihood function evaluations, which can be done offline, as opposed to 4000 sequential function evaluations. A robust and accurate estimation is also found using a likelihood function trained on statistical averages of the chaotic 40-dimensional Lorenz-96 model on a wide parameter domain.

1. Introduction

Bayesian inference is a popular approach for solving inverse problems with far-reaching applications, such as parameter estimation and uncertainty quantification (see for example [1,2,3]). In this article, we focus on a classical Bayesian inference problem: estimating the conditional distribution of hidden parameters of dynamical systems from a given set of noisy observations. In particular, let $x(t)$ be a time-dependent state variable, which implicitly depends on the parameter $\theta$ through the following initial value problem,

$$\frac{dx}{dt}=f(x,\theta),\qquad x(0)=x_0.\qquad(1)$$

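Here, for any fixed $\theta$, $f$ can be either deterministic or stochastic. Our goal is to estimate the conditional distribution of $\theta$, given discrete-time noisy observations $\mathbf y=\{y_1,\dots,y_T\}$, where:

$$y_i=g(x_i^\dagger,\eta_i),\qquad i=1,\dots,T.\qquad(2)$$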

Here, $x_i^\dagger=x(t_i;\theta^\dagger)$ are the solutions of Equation (1) for a specific hidden parameter $\theta^\dagger$, $g$ is the observation function, and $\eta_i$ are unbiased noises representing the measurement or model error. Although the proposed approach can also estimate the conditional density of the initial condition $x_0$, we will not explore this inference problem in this article.

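Given a prior density, $p_0(\theta)$, Bayes' theorem states that the conditional distribution of the parameter $\theta$ can be estimated as,

$$p(\theta\mid\mathbf y)\propto p(\mathbf y\mid\theta)\,p_0(\theta),\qquad(3)$$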

where $p(\mathbf y\mid\theta)$ denotes the likelihood function of $\theta$ given the measurements $\mathbf y$, which depend on a hidden parameter value $\theta^\dagger$ through (2). In most applications, the statistics of the conditional distribution $p(\theta\mid\mathbf y)$ are the quantity of interest. For example, one can use the mean as a point estimator of $\theta^\dagger$ and the higher-order moments for uncertainty quantification. To realize this goal, one draws samples of $p(\theta\mid\mathbf y)$ and estimates these statistics via Monte Carlo averages over these samples. In this application, Markov Chain Monte Carlo (MCMC) is a natural sampling method that plays a central role in the computational statistics behind most Bayesian inference techniques [4].

In our setup, we assume that for any $\theta$, one can simulate:

$$y_i=g(x_i,\eta_i),\qquad i=1,\dots,T,\qquad(4)$$

where $x_i=x(t_i;\theta)$ denote solutions to the initial value problem in Equation (1). If the observation function has the following form,

$$g(x_i,\eta_i)=h(x_i)+\eta_i,\qquad(5)$$

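where $\eta_i$ are i.i.d. noises with density $p_\eta$, then one can define the likelihood function of $\theta$, $p(\mathbf y\mid\theta)$, as a product of the density functions of the noises $\eta_i=y_i-h(x_i)$,

$$p(\mathbf y\mid\theta)=\prod_{i=1}^{T}p_\eta\big(y_i-h(x_i)\big).\qquad(6)$$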
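As a concrete instance of (6), suppose (our assumption, for illustration only) that the noises are additive Gaussian, $\eta_i\sim\mathcal N(0,\sigma^2 I)$. The log-likelihood is then a sum of Gaussian log-densities of the residuals. In the sketch below, the function name `gaussian_log_likelihood` is hypothetical, and `h_of_x` stands for the model outputs $h(x_i)$. Those outputs must be recomputed by solving (1) at every proposed $\theta$.

```python
import numpy as np

def gaussian_log_likelihood(y_obs, h_of_x, sigma):
    """Log of Eq. (6), assuming i.i.d. additive Gaussian noise N(0, sigma^2 I).

    y_obs  : (T, n) array of observations y_i
    h_of_x : (T, n) array of model outputs h(x_i), with x_i = x(t_i; theta) from Eq. (1)
    sigma  : noise standard deviation (assumed known)
    """
    resid = np.asarray(y_obs, float) - np.asarray(h_of_x, float)
    T, n = resid.shape
    # sum over i of log p_eta(y_i - h(x_i)) for an isotropic Gaussian density
    return (-0.5 * np.sum(resid**2) / sigma**2
            - T * n * np.log(np.sqrt(2.0 * np.pi) * sigma))
```

Each call needs a fresh set of model outputs $h(x_i)$, which is where the cost of repeatedly solving (1) enters an MCMC chain.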

When the observations are noise-less, $\eta_i=0$, and the underlying system is an Itô diffusion process with additive or multiplicative noises, one can use Bayesian imputation to approximate the likelihood function [5]. In both parametric approaches, it is worth noting that the dependence of the likelihood function on the parameter $\theta$ is implicit through the solutions $x_i$. In practice, this implicit dependence is the source of the computational burden in evaluating the likelihood function, since it requires solving the dynamical model in (1) for every proposal in the MCMC chain. When simulating (4) is computationally feasible but the likelihood function is intractable, one can use, e.g., the Approximate Bayesian Computation (ABC) rejection algorithm [6,7] for Bayesian inference. Basically, the ABC rejection scheme generates samples of $p(\theta\mid\mathbf y)$ by comparing the simulated observations $y_i$ to the observed data $\mathbf y$, using an appropriate choice of metric, for each proposal $\theta$. In general, however, repeated evaluation of (4) can be expensive when the dynamics in (1) are high-dimensional and/or stiff, when $T$ is large, or when the function $g$ is an average of a long time series. Our goal is to address this situation, including the case where no approximate likelihood function is known.

Broadly speaking, the existing approaches to avoiding repeated evaluation of (4) require knowledge of an approximate likelihood function such as (6). They can be grouped into two classes. The first class consists of methods that improve or accelerate the sampling strategy, for example, Hamiltonian Monte Carlo [8], adaptive MCMC [9], and delayed rejection adaptive Metropolis [10], to name a few. The second class consists of methods that avoid solving the dynamical model in (1) when running the MCMC chain by replacing it with a computationally more efficient model on a known parameter domain. This class of approaches, also known as surrogate modeling, includes Gaussian process models [11], polynomial chaos [12,13], and enhanced model error [14]. For example, the non-intrusive spectral projection [13] approximates the model output $h(x_i)$ in (6), as a function of $\theta$, with a polynomial chaos expansion. Another related approach, which avoids MCMC on top of integrating (1), is to employ a polynomial expansion of the likelihood function [15]. This method represents the parametric likelihood function in (6) with orthonormal basis functions of a Hilbert space weighted by the prior measure. This choice of basis functions makes the computation of the statistics of the posterior density straightforward, and thus MCMC is not needed.

In this paper, we consider a surrogate modeling approach where a nonparametric likelihood function is constructed using a data-driven spectral expansion. By nonparametric, we mean that our approach does not require any parametric form or assume any distribution as in (6). Instead, we approximate the likelihood function using the kernel embedding of the conditional distribution formulation introduced in [16,17]. In our application, we will extend their formulation to a Hilbert space weighted by the sampling measure of the training dataset, as in [18]. We will rigorously demonstrate that, using orthonormal basis functions of this data-driven weighted Hilbert space, the error bound is independent of the variance of the basis functions. This allows us to determine the amount of training data needed for accurate likelihood function estimation.

Computationally, assuming that the observations lie on (or close to) a Riemannian manifold $\mathcal M$ embedded in $\mathbb R^n$ with sampling density $q$, we apply the diffusion maps algorithm [19,20] to approximate orthonormal basis functions of $L^2(\mathcal M,q)$ using the training dataset. Subsequently, a nonparametric likelihood function is represented as a weighted sum of these data-driven basis functions, where the coefficients are precomputed using the kernel embedding formulation. In this fashion, our approach respects the geometry of the data manifold. Using this nonparametric likelihood function, we then generate the MCMC chain for estimating the conditional distribution of hidden parameters. In the present work, our aim is to demonstrate that one can obtain accurate and robust parameter estimation by implementing a simple Bayesian inference algorithm, the Metropolis scheme, with the data-driven nonparametric likelihood function. We should also point out that the present method, like any other surrogate modeling approach, is computationally feasible for low-dimensional parameter spaces. Possible ways to overcome this dimensionality issue will be discussed.

This paper is organized as follows. In Section 2, we review the formulation of the reproducing kernel Hilbert space for estimating conditional density functions. In Section 3, we discuss the error estimate of the likelihood function approximation. In Section 4, we discuss the construction of analytic basis functions for Euclidean data manifolds, as well as data-driven basis functions obtained with the diffusion maps algorithm for data that lie on an embedded Riemannian manifold. In Section 5, we provide numerical results for parameter estimation on instructive examples. In one example, where the dynamical model is low-dimensional and the observation has the form (5), we compare the proposed approach with direct MCMC and the non-intrusive spectral projection method (both of which use a likelihood of the form (6)). In addition, we demonstrate the robustness of the proposed approach on an example where $g$ is a statistical average of a long-time trajectory (so that the likelihood is intractable) and the dynamical model has relatively high-dimensional chaotic dynamics, so that repeated evaluation of (4) is numerically expensive. In Section 6, we conclude this paper with a short summary. The Appendices address the treatment of large amounts of data and provide additional numerical results.

2. Conditional Density Estimation via Reproducing Kernel Weighted Hilbert Spaces

Let $y\in\mathcal M\subseteq\mathbb R^n$, where $\mathcal M$ is a smooth manifold with intrinsic dimension $d\le n$. In practice, we measure the observations in the ambient coordinates and denote their components as $y=(y^1,\dots,y^n)$. The parameter space $\Theta\subseteq\mathbb R^m$ has a Euclidean structure with components $\theta=(\theta^1,\dots,\theta^m)$, so $\Theta$ is assumed to be either an $m$-dimensional hyperrectangle or $\mathbb R^m$. For training, we are given $M$ training parameters $\{\theta_j\}_{j=1}^M$. For each training parameter $\theta_j$, we generate a discrete time series of length $N$ of noisy observation data $y_{ij}\in\mathcal M$ for $i=1,\dots,N$ and $j=1,\dots,M$. Here, the sub-indices $i$ and $j$ of $y_{ij}$ correspond to the $i$-th observation for the $j$-th training parameter $\theta_j$. Our goal for training is to learn the conditional density $p(y\mid\theta)$ from the training dataset $\{y_{ij}\}$ and $\{\theta_j\}$ for arbitrary $y\in\mathcal M$ and $\theta$ within the range of $\{\theta_j\}$.

The construction of the conditional density is based on a machine learning tool known as the kernel embedding of the conditional distribution formulation introduced in [16,17]. In their formulation, the representation of conditional distributions is an element of a Reproducing Kernel Hilbert Space (RKHS).

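Recently, the representation using a Reproducing Kernel Weighted Hilbert Space (RKWHS) was introduced in [18]. That is, let $\{\varphi_k\}$ be the orthonormal basis of $L^2(\mathcal M,q)$, consisting of eigenfunctions of an integral operator,

$$\mathcal K f(y)=\int_{\mathcal M}K(y,y')\,f(y')\,q(y')\,dV(y'),\qquad(7)$$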

that is, $\mathcal K\varphi_k=\lambda_k\varphi_k$.

In the case where $\mathcal M$ is compact and $\mathcal K$ is Hilbert–Schmidt, the kernel can be written as,

$$K(y,y')=\sum_{k}\lambda_k\,\varphi_k(y)\,\varphi_k(y'),\qquad(8)$$

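which converges in $L^2(\mathcal M\times\mathcal M,q\otimes q)$. Define the feature map as,

$$\Phi(y)=\big(\sqrt{\lambda_1}\,\varphi_1(y),\ \sqrt{\lambda_2}\,\varphi_2(y),\ \dots\big).\qquad(9)$$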

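Therefore, any $f\in L^2(\mathcal M,q)$ can be represented as $f=\langle a,\Phi\rangle=\sum_k a_k\sqrt{\lambda_k}\,\varphi_k$, where $a_k=\langle f,\varphi_k\rangle_q/\sqrt{\lambda_k}$, provided that $\lambda_k\neq0$. If we define the inner product $\langle a,b\rangle=\sum_k a_kb_k$, we can write the kernel in (8) as $K(y,y')=\langle\Phi(y),\Phi(y')\rangle$. Throughout this manuscript, we define the RKHS generated by the feature map in (9) as the space of square-integrable functions with a reproducing property,

$$\mathcal H(\mathcal M,q)=\Big\{f\in L^2(\mathcal M,q):\ f(y)=\langle f,K(y,\cdot)\rangle_{\mathcal H}\ \text{for all } y\in\mathcal M\Big\},\qquad \langle f,g\rangle_{\mathcal H}=\sum_k\frac{\langle f,\varphi_k\rangle_q\,\langle g,\varphi_k\rangle_q}{\lambda_k},$$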

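induced by the basis $\{\varphi_k\}$ of $L^2(\mathcal M,q)$. While this definition deceptively suggests that $\mathcal H(\mathcal M,q)$ is similar to $L^2(\mathcal M,q)$, we should point out that an RKHS requires the Dirac functional $\delta_y$, defined as $\delta_y f=f(y)$, to be continuous. Since the elements of $L^2(\mathcal M,q)$ are equivalence classes of functions, on which point evaluation is not well defined, $L^2(\mathcal M,q)$ is not an RKHS and $\mathcal H(\mathcal M,q)\subsetneq L^2(\mathcal M,q)$. See, e.g., Chapter 4 of [21] for more details. Using the same definition, we denote by $\mathcal H(\Theta,\tilde q)$ the RKHS induced by the orthonormal basis $\{\psi_l\}$ of $L^2(\Theta,\tilde q)$, consisting of functions of the parameter $\theta$.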

In this work, we will represent conditional density functions using the RKWHS induced by the data, where the bases will be constructed using the diffusion maps algorithm. The outcome of the training is an estimate of the conditional density, $\hat p(y\mid\theta)$, for arbitrary $y\in\mathcal M$ and $\theta$ within the range of $\{\theta_j\}$.

2.1. Review of Nonparametric RKWHS Representation of Conditional Density Functions

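We first review the RKWHS representation of conditional density functions deduced in [18]. Let $\{\varphi_k\}$ be the orthonormal basis functions of $L^2(\mathcal M,q)$, where $\mathcal M$ contains the domain of the training data $\{y_{ij}\}$, and the weight function $q$ is defined with respect to the volume form $dV$ inherited by $\mathcal M$ from the ambient space $\mathbb R^n$. Let $\{\psi_l\}$ be the orthonormal basis functions of $L^2(\Theta,\tilde q)$ in the parameter space, where the training parameters are $\{\theta_j\}_{j=1}^M$, with weight function $\tilde q$. For finite modes, $k=1,\dots,K_1$, and $l=1,\dots,K_2$, a nonparametric RKWHS representation of the conditional density can be written as follows [18]:

$$\hat p(y\mid\theta)=\sum_{k=1}^{K_1}\hat c_k(\theta)\,\varphi_k(y)\,q(y),\qquad(10)$$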

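where $\hat p(y\mid\theta)$ denotes an estimate of the conditional density $p(y\mid\theta)$, and the expansion coefficients are defined as:

$$\hat c_k(\theta)=\sum_{l=1}^{K_2}\big[C_{Y\Theta}\,C_{\Theta\Theta}^{-1}\big]_{kl}\,\psi_l(\theta).\qquad(11)$$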

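Here, the matrix $C_{Y\Theta}$ is $K_1\times K_2$, and the matrix $C_{\Theta\Theta}$ is $K_2\times K_2$; their components can be approximated by Monte Carlo averages [18]:

$$[C_{Y\Theta}]_{kl}=\mathbb E_{Y\Theta}\big[\varphi_k(Y)\,\psi_l(\Theta)\big]\approx\frac{1}{NM}\sum_{j=1}^{M}\sum_{i=1}^{N}\varphi_k(y_{ij})\,\psi_l(\theta_j),\qquad(12)$$

$$[C_{\Theta\Theta}]_{ls}=\mathbb E_{\Theta}\big[\psi_l(\Theta)\,\psi_s(\Theta)\big]\approx\frac{1}{M}\sum_{j=1}^{M}\psi_l(\theta_j)\,\psi_s(\theta_j),\qquad(13)$$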

where the expectations are taken with respect to the sampling densities of the training dataset $\{y_{ij}\}$ and $\{\theta_j\}$. The expression for the expansion coefficients in Equation (11) is based on the theory of kernel embedding of the conditional distribution [16,17,18]. See [18] for the detailed proof of Equations (11)–(13). Note that, for the RKWHS representation, the weight functions $q$ and $\tilde q$ can be different from the sampling densities of the training dataset $\{y_{ij}\}$ and $\{\theta_j\}$, respectively. This generalizes the representation in [18], which sets the weights $q$ and $\tilde q$ to be the sampling densities of $\{y_{ij}\}$ and $\{\theta_j\}$, respectively. If the underlying assumption of the kernel embedding formulation [16,17] is not satisfied, then $C_{\Theta\Theta}$ can be singular. For example, the Monte Carlo estimate in (13) is rank-deficient whenever $K_2>M$. In such a case, one can follow the suggestion in [16,17] to regularize the linear regression in (11) by replacing $C_{\Theta\Theta}$ with $C_{\Theta\Theta}+\gamma I_{K_2}$, where $\gamma>0$ is an empirically chosen parameter and $I_{K_2}$ denotes the identity matrix of size $K_2\times K_2$.
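The following Python sketch makes the linear algebra in (11)–(13) concrete. It is our illustration and not the implementation of [18]. It assumes the basis functions have already been evaluated, with `Phi_Y[k, i, j]` $=\varphi_k(y_{ij})$ and `Psi_T[l, j]` $=\psi_l(\theta_j)$. The function name and the argument `reg` (the regularization parameter $\gamma$) are hypothetical.

```python
import numpy as np

def rkwhs_coefficients(Phi_Y, Psi_T, Psi_new, reg=0.0):
    """Coefficients c_k(theta) of Eq. (11) from the Monte Carlo estimates (12)-(13).

    Phi_Y   : (K1, N, M) array, Phi_Y[k, i, j] = phi_k(y_ij)
    Psi_T   : (K2, M) array,    Psi_T[l, j]    = psi_l(theta_j)
    Psi_new : (K2, P) array of psi_l evaluated at P query parameters
    reg     : regularization gamma >= 0; C_TT is replaced by C_TT + gamma * I
    """
    K1, N, M = Phi_Y.shape
    K2 = Psi_T.shape[0]
    # Eq. (12): [C_YT]_{kl} = (1/NM) sum_{i,j} phi_k(y_ij) psi_l(theta_j)
    C_YT = Phi_Y.sum(axis=1) @ Psi_T.T / (N * M)
    # Eq. (13), optionally regularized: [C_TT]_{ls} = (1/M) sum_j psi_l(theta_j) psi_s(theta_j)
    C_TT = Psi_T @ Psi_T.T / M + reg * np.eye(K2)
    # C_YT C_TT^{-1} via a linear solve (C_TT is symmetric, so C_TT^{-T} = C_TT^{-1})
    A = np.linalg.solve(C_TT, C_YT.T).T      # (K1, K2)
    return A @ Psi_new                        # (K1, P): column p holds c_k(theta_p)
```

Solving a linear system instead of forming $C_{\Theta\Theta}^{-1}$ explicitly is a standard numerical choice. Setting `reg > 0` gives the regularized variant described above, and the density estimate (10) is then obtained by weighting $\varphi_k(y)q(y)$ with the returned coefficients.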

Incidentally, it is worth mentioning that the conditional density in (10) and (11) is represented as a regression in infinite-dimensional spaces with basis functions $\{\varphi_k\}$ and $\{\psi_l\}$. The expression (10) is a nonparametric representation in the sense that we do not assume any particular distribution for the density function $p(y\mid\theta)$. In this representation, only the training dataset $\{y_{ij}\}$ and $\{\theta_j\}$, together with appropriate basis functions, are used to specify the coefficients $\hat c_k(\theta)$ and the densities $\hat p(y\mid\theta)$. In Section 4, we will demonstrate how to construct the appropriate basis entirely from the training data, motivated by the theoretical result in Section 3 below.

2.2. Simplification of the Expansion Coefficients (11)

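If the weight function $\tilde q$ is the sampling density of the training parameters $\{\theta_j\}$, the matrix $C_{\Theta\Theta}$ in (13) simplifies to the $K_2\times K_2$ identity matrix,

$$[C_{\Theta\Theta}]_{ls}=\mathbb E_{\Theta}\big[\psi_l(\Theta)\,\psi_s(\Theta)\big]=\int_{\Theta}\psi_l(\theta)\,\psi_s(\theta)\,\tilde q(\theta)\,d\theta=\delta_{ls},\qquad(14)$$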

where $\delta_{ls}$ is the Kronecker delta. Here, the second equality follows from the weight $\tilde q$ being the sampling density, and the third equality follows from the orthonormality of $\{\psi_l\}$ with respect to the weight function $\tilde q$. Then, the expansion coefficients in (11) simplify to,

$$\hat c_k(\theta)=\sum_{l=1}^{K_2}[C_{Y\Theta}]_{kl}\,\psi_l(\theta),\qquad(15)$$

with the matrix $C_{Y\Theta}$ still given by (12). In this work, we always take the weight function $\tilde q$ to be the sampling density of the training parameters $\{\theta_j\}$, so that the expansion coefficients simplify to (15). This assumption is not too restrictive, since the training parameters are specified by the user.

Finally, the formula in (10), combined with the expansion coefficients in (15) and the matrix $C_{Y\Theta}$ in (12), forms an RKWHS representation of the conditional density $p(y\mid\theta)$ for arbitrary $y\in\mathcal M$ and $\theta$. Numerically, the training outcome is the matrix $C_{Y\Theta}$ in (12). The conditional density can then be represented by (10) with coefficients (15), using the basis functions $\{\varphi_k\}$ and $\{\psi_l\}$. Two important questions naturally arise from using the RKWHS representation. First, is the representation in (10) valid for estimating the conditional density $p(y\mid\theta)$? Second, how should the orthonormal basis functions $\{\varphi_k\}$ and $\{\psi_l\}$ be constructed? We address these two questions in the next two sections.

3. Error Estimation

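In this section, we focus on the error estimation of the expansion coefficient $\hat c_{kj}$ and, later, of the conditional density at the training parameter $\theta_j$. The notation $\hat c_{kj}:=\hat c_k(\theta_j)$ denotes the expansion coefficient in (15), evaluated at the training parameter $\theta_j$. Let the total number of basis functions in parameter space, $K_2$, be equal to the total number of training parameters, $M$, that is, $K_2=M$. Denote by $\Psi\in\mathbb R^{M\times M}$ the matrix whose $(l,j)$ component approximates the basis function $\psi_l$ evaluated at the training parameter $\theta_j$. Then the Monte Carlo version of the last equality in (14), computed as in (13), can be written compactly as $\frac{1}{M}\Psi\Psi^\top=I_M$. Since $\Psi$ is square, $\Psi/\sqrt M$ is an orthogonal matrix, so that also $\frac{1}{M}\Psi^\top\Psi=I_M$, whose components are,

$$\frac{1}{M}\sum_{l=1}^{M}\psi_l(\theta_j)\,\psi_l(\theta_s)=\delta_{js}.\qquad(16)$$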

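For the training parameter $\theta_j$, we can simplify the expansion coefficient $\hat c_{kj}$ by substituting Equation (12) into Equation (15),

$$\hat c_{kj}=\sum_{l=1}^{M}\Big[\frac{1}{NM}\sum_{s=1}^{M}\sum_{i=1}^{N}\varphi_k(y_{is})\,\psi_l(\theta_s)\Big]\psi_l(\theta_j)=\frac{1}{N}\sum_{s=1}^{M}\sum_{i=1}^{N}\varphi_k(y_{is})\Big[\frac{1}{M}\sum_{l=1}^{M}\psi_l(\theta_s)\,\psi_l(\theta_j)\Big]=\frac{1}{N}\sum_{i=1}^{N}\varphi_k(y_{ij}),\qquad(17)$$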

where the last equality follows from (16).
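The collapse in (17) is easy to check numerically. In this sketch (ours), any $M\times M$ matrix $\Psi$ with $\frac1M\Psi^\top\Psi=I_M$ plays the role of the parameter-basis evaluations; here it is $\sqrt M$ times a random orthogonal matrix from a QR factorization. The "observation basis" values `Phi_Y` are random numbers. Both are hypothetical stand-ins chosen only to exercise the identity.

```python
import numpy as np

rng = np.random.default_rng(1)
K1, N, M = 3, 50, 6
# Hypothetical Psi with (1/M) Psi^T Psi = I (K2 = M): sqrt(M) times a random orthogonal matrix
Q, _ = np.linalg.qr(rng.normal(size=(M, M)))
Psi = np.sqrt(M) * Q                      # Psi[l, j] plays the role of psi_l(theta_j)
Phi_Y = rng.normal(size=(K1, N, M))       # Phi_Y[k, i, j] plays the role of phi_k(y_ij)

C_YT = Phi_Y.sum(axis=1) @ Psi.T / (N * M)   # Eq. (12)
c_hat = C_YT @ Psi                           # Eq. (15) evaluated at every theta_j -> (K1, M)
sample_means = Phi_Y.mean(axis=1)            # right-hand side of Eq. (17)
print(np.allclose(c_hat, sample_means))      # True
```

At the training parameters, therefore, the kernel embedding coefficients reduce to plain sample means of $\varphi_k$ over the $N$ observations generated with $\theta_j$.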

3.1. Error Estimation Using Arbitrary Bases

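We first study the error estimation for the expansion coefficient $\hat c_{kj}$. For each training parameter $\theta_j$, the conditional density function $p(y\mid\theta_j)$ can be analytically represented in the form,

$$p(y\mid\theta_j)=\sum_{k=1}^{\infty}c_{kj}\,\varphi_k(y)\,q(y),\qquad(18)$$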

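due to the completeness of $\{\varphi_k\}$ in $L^2(\mathcal M,q)$. Here, the analytic expansion coefficient $c_{kj}$ is given by,

$$c_{kj}=\Big\langle\frac{p(\cdot\mid\theta_j)}{q},\varphi_k\Big\rangle_q=\int_{\mathcal M}\varphi_k(y)\,p(y\mid\theta_j)\,dV(y).\qquad(19)$$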

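Note that the estimator $\hat c_{kj}$ in (17) is a Monte Carlo approximation of the expansion coefficient $c_{kj}$ in (19), i.e.,

$$\hat c_{kj}=\frac{1}{N}\sum_{i=1}^{N}\varphi_k(y_{ij})\approx\mathbb E_{Y\mid\theta_j}\big[\varphi_k(Y)\big]=c_{kj},\qquad(20)$$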

where the approximation holds because the training data $\{y_{ij}\}_{i=1}^N$ are samples of the conditional density $p(y\mid\theta_j)$, and the last equality follows from (19). Note also that the following theorems and propositions require the condition $p(\cdot\mid\theta_j)/q\in L^2(\mathcal M,q)$. In Section 5.2 and Appendix B, we will provide an example that discusses this condition in detail. Next, we establish the unbiasedness and consistency of the estimator $\hat c_{kj}$.

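Proposition 1.

Let $p(\cdot\mid\theta_j)/q\in L^2(\mathcal M,q)$. Then the estimator $\hat c_{kj}$ in (17) is an unbiased estimator of $c_{kj}$ in (19). If, in addition, $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite, then $\hat c_{kj}$ is consistent.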

Proof.

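The estimator $\hat c_{kj}$ is unbiased,

$$\mathbb E[\hat c_{kj}]=\frac{1}{N}\sum_{i=1}^{N}\mathbb E\big[\varphi_k(y_{ij})\big]=\mathbb E_{Y\mid\theta_j}\big[\varphi_k(Y)\big]=c_{kj},\qquad(21)$$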

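where the expectation is taken with respect to the conditional density $p(y\mid\theta_j)$. If the variance $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite, then the variance of $\hat c_{kj}$ converges to zero as the number of training data $N\to\infty$,

$$\mathrm{Var}[\hat c_{kj}]=\frac{1}{N}\,\mathrm{Var}\big[\varphi_k(Y)\mid\theta_j\big]\longrightarrow0.\qquad(22)$$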

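Then, the estimator $\hat c_{kj}$ is consistent: for any $\epsilon>0$,

$$\lim_{N\to\infty}\mathbb P\big(|\hat c_{kj}-c_{kj}|\ge\epsilon\big)\le\lim_{N\to\infty}\frac{\mathrm{Var}[\varphi_k(Y)\mid\theta_j]}{N\epsilon^2}=0,$$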

where Chebyshev’s inequality has been used. □
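A quick Monte Carlo check of Proposition 1, under assumptions chosen only for illustration: a single cosine basis function $\varphi(y)=\sqrt2\cos(\pi y)$ on $[0,1]$ (uniform weight) and the conditional density $p(y)=2y$ on $[0,1]$. For this pair, $c=\mathbb E[\varphi(Y)]=-4\sqrt2/\pi^2$ and $\mathrm{Var}[\varphi(Y)]=1-32/\pi^4$ can be computed by hand. Over many independent replicates, the estimator (17) should average to $c$ and have variance close to $\mathrm{Var}[\varphi(Y)]/N$.

```python
import numpy as np

rng = np.random.default_rng(2)
phi = lambda y: np.sqrt(2.0) * np.cos(np.pi * y)   # one cosine basis function on [0, 1]
c_true = -4.0 * np.sqrt(2.0) / np.pi**2            # c = E[phi(Y)] for the density p(y) = 2y
var_phi = 1.0 - 32.0 / np.pi**4                    # Var[phi(Y)] for the same density

N, R = 200, 2000                                   # N samples per estimate, R independent replicates
Y = np.sqrt(rng.uniform(size=(R, N)))              # inverse-CDF sampling, since F(y) = y^2
c_hat = phi(Y).mean(axis=1)                        # Eq. (17): one estimator per replicate

print(c_hat.mean() - c_true)        # close to 0 (unbiasedness, Eq. (21))
print(c_hat.var() * N / var_phi)    # close to 1 (Var[c_hat] = Var[phi(Y)] / N, Eq. (22))
```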

If the estimator of $p(y\mid\theta_j)$ is given by the representation with an infinite number of basis functions, $\sum_{k=1}^{\infty}\hat c_{kj}\varphi_k(y)q(y)$, then the estimator is pointwise unbiased for every observation $y\in\mathcal M$. However, in the numerical implementation, only a finite number of basis functions can be used in the representation (10). Numerically, the estimator of $p(y\mid\theta_j)$ is given by the representation (10) at the training parameter $\theta_j$,

$$\hat p(y\mid\theta_j)=\sum_{k=1}^{K_1}\hat c_{kj}\,\varphi_k(y)\,q(y).$$

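Then, the pointwise error of the estimator, $e_j(y)$, can be defined as:

$$e_j(y):=p(y\mid\theta_j)-\hat p(y\mid\theta_j)=\sum_{k=K_1+1}^{\infty}c_{kj}\,\varphi_k(y)\,q(y)+\sum_{k=1}^{K_1}\big(c_{kj}-\hat c_{kj}\big)\,\varphi_k(y)\,q(y).\qquad(23)$$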

It can be seen that the estimator $\hat p(y\mid\theta_j)$ is no longer unbiased or consistent, due to the first error term in (23), which is induced by the modes $k>K_1$. Next, we estimate the expectation and the variance of an $L^2$-norm error of $e_j$ over all training parameters $\{\theta_j\}_{j=1}^M$.

Theorem 1.

Let the condition in Proposition 1 be satisfied for all $j=1,\dots,M$, and let $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ be finite for all $k$ and $j$. Define the $L^2$-norm error $\mathcal E$,

where $e_j$ is the pointwise error in (23), and $dV$ is the volume form inherited by the manifold $\mathcal M$ from the ambient space $\mathbb R^n$ [18,20]. Then,

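where $\mathbb E[\mathcal E]$ and $\mathrm{Var}[\mathcal E]$ are defined with respect to the joint distribution of $\{y_{ij}\}_{i=1}^N$ for all $j=1,\dots,M$. Moreover, $\mathbb E[\mathcal E]$ and $\mathrm{Var}[\mathcal E]$ converge to zero as $K_1\to\infty$ and then $N\to\infty$, where the limiting operations in $K_1$ and $N$ do not commute.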

Proof.

The expectation of $\mathcal E$ can be estimated as,

where the first inequality follows from Jensen's inequality. Here, the randomness comes from the estimators $\{\hat c_{kj}\}$. Due to the orthonormality of the basis functions $\{\varphi_k\}$, the error estimate in (27) can be simplified as,

where the inequality follows from the linearity of expectation, and the equality follows from $\mathbb E[\hat c_{kj}]=c_{kj}$ in (21) and $\mathrm{Var}[\hat c_{kj}]$ in (22). In the error estimate (28), the first term is deterministic and the second term is random. We have thus proven that the expectation $\mathbb E[\mathcal E]$ is bounded by (25). Similarly, one can prove that the variance $\mathrm{Var}[\mathcal E]$ is bounded by (26).

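Next, we prove that the expectation $\mathbb E[\mathcal E]$ converges to zero as $K_1\to\infty$ and then $N\to\infty$. Parseval's theorem states that:

$$\sum_{k=1}^{\infty}c_{kj}^2=\Big\|\frac{p(\cdot\mid\theta_j)}{q}\Big\|^2_{L^2(\mathcal M,q)}<\infty,\qquad(29)$$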

where the inequality follows from $p(\cdot\mid\theta_j)/q\in L^2(\mathcal M,q)$ for all $j$. For any $\epsilon>0$, there exists an integer $K^{(j)}$ for each $j$ such that:

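Since the variance $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is assumed to be finite for all $k$ and $j$, there exists a constant $D$ that bounds it from above,

$$\mathrm{Var}\big[\varphi_k(Y)\mid\theta_j\big]\le D,\qquad k=1,\dots,K_1,\quad j=1,\dots,M.\qquad(33)$$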

Then, for any $\epsilon>0$, there exists a sufficiently large number of training data:

such that whenever $N>N_\epsilon$, then:

Since $\epsilon$ is arbitrary, substituting Equations (32) and (35) into the error estimate (28) shows that $\mathbb E[\mathcal E]$ converges to zero as $K_1\to\infty$ and then $N\to\infty$. Note that we first take $K_1\to\infty$ to ensure that the first error term in (28) vanishes, and then take $N\to\infty$ to ensure that the second error term in (28) vanishes. Since $N_\epsilon$ depends on $K_1$, the limiting operations in $K_1$ and $N$ do not commute. Similarly, one can prove that the variance $\mathrm{Var}[\mathcal E]$ converges to zero as $K_1\to\infty$ and then $N\to\infty$. □

Theorem 1 provides intuition for specifying the number of training observations $N$ needed to achieve a desired accuracy, given $M$ fixed training parameters and sufficiently large $K_1$. Theorem 1 shows that, numerically, the expectation $\mathbb E[\mathcal E]$ in (25) and the variance $\mathrm{Var}[\mathcal E]$ in (26) can be made arbitrarily small by choosing sufficiently large $K_1$ and $N$. Specifically, Equations (25) and (26) contain two error terms. The first is deterministic and induced by the modes $k>K_1$; the second is random and induced by the modes $k\le K_1$. For the deterministic term ($k>K_1$), the error can be bounded by $\epsilon$ by choosing $K_1$ large enough to satisfy (31). In our implementation, the number of basis functions $K_1$ is chosen empirically to be large enough to make the first error term in Equations (25) and (26) as small as possible.

For the random term ($k\le K_1$), the error can be bounded by $\epsilon$ by choosing $N$ large enough to satisfy (34). The minimum number of training data, $N_\epsilon$, depends on the upper bound $D$ of $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$. However, the upper bound $D$ may not exist for some problems; that is, the finite-variance assumption of Theorem 1 may not be satisfied. Even if $D$ exists, its value is typically not easy to evaluate for an arbitrary basis, since one needs to evaluate $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ for all $k=1,\dots,K_1$ and $j=1,\dots,M$. Note that Theorem 1 holds when $p(y\mid\theta_j)$ is represented with an arbitrary basis, as long as $p(\cdot\mid\theta_j)/q\in L^2(\mathcal M,q)$ for all $j$ and $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite for $k=1,\dots,K_1$ and $j=1,\dots,M$. Next, we provide several cases in which $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite for all $k$ and $j$.

Remark 1.

If the weighted Hilbert space $L^2(\mathcal M,q)$ is defined on a compact manifold $\mathcal M$ and has smooth basis functions $\{\varphi_k\}$, then $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite for fixed $k$ and $j$. This assertion follows from the fact that continuous functions on a compact manifold are bounded, so that $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]\le\mathbb E[\varphi_k^2(Y)\mid\theta_j]\le\sup_{y\in\mathcal M}\varphi_k^2(y)<\infty$. The smoothness assumption is not unreasonable in many applications, since the orthonormal basis functions are obtained as solutions of an eigenvalue problem for a self-adjoint second-order elliptic differential operator. Note that this bound is not necessarily uniform over all $k$ and $j$. As long as $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite for $k=1,\dots,K_1$ and $j=1,\dots,M$, the upper bound $D$ is finite, and Theorem 1 holds.

Remark 2.

If the manifold $\mathcal M$ is a hyperrectangle in $\mathbb R^n$ and the weight $q$ is a uniform distribution on $\mathcal M$, then $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$ is finite for fixed $k$ and $j$. This assertion is an immediate consequence of Remark 1.

In Theorem 1, $N_\epsilon$ depends on the upper bound $D$ of $\mathrm{Var}[\varphi_k(Y)\mid\theta_j]$, as shown in (34). In the following, we specify a Hilbert space, referred to as a data-driven Hilbert space, for which $N_\epsilon$ is independent of $D$ and depends only on $M$, $K_1$, and $\epsilon$. As a consequence, we can easily determine how many training data $N$ are needed to bound the second error term in Equations (25) and (26).

3.2. Error Estimation Using a Data-Driven Hilbert Space

We now turn to the discussion of a specific data-driven Hilbert space $L^2(\mathcal M,\bar q)$ with orthonormal basis functions $\{\bar\varphi_k\}$. Our goal is to specify the weight function $\bar q$ such that the minimum number of training data, $N_\epsilon$, depends only on $M$, $K_1$, and $\epsilon$. Here, the overline indicates the specific data-driven Hilbert space. The second error term in (28) can be further estimated as,

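where the basis functions $\varphi_k$ are replaced by the specific $\bar\varphi_k$. Notice that $\{\bar\varphi_k\}$ are orthonormal basis functions with respect to the weight $\bar q$ in $L^2(\mathcal M,\bar q)$. One specific choice of the weight function is:

$$\bar q(y)=\frac{1}{M}\sum_{j=1}^{M}p(y\mid\theta_j),\qquad(37)$$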

where $\bar q$ has been normalized, i.e.,

$$\int_{\mathcal M}\bar q(y)\,dV(y)=1.\qquad(38)$$

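For the data-driven Hilbert space, we always use a normalized weight function $\bar q$. Note that the weight function in (37) is a discretization of the marginal density function of $y$, with $\theta$ marginalized out,

$$p(y)=\int_{\Theta}p(y,\theta)\,d\theta=\int_{\Theta}p(y\mid\theta)\,\tilde q(\theta)\,d\theta,\qquad(39)$$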

where $p(y,\theta)$ denotes the joint density of $(y,\theta)$. Essentially, the weight function in (37) is the sampling density of all the training data $\{y_{ij}\}_{i,j}$, which motivates us to refer to $L^2(\mathcal M,\bar q)$ as a data-driven Hilbert space.

Next, we prove that, with the data-driven basis functions $\{\bar\varphi_k\}$, the variance $\mathrm{Var}[\bar\varphi_k(Y)\mid\theta_j]$ is finite for all $k$ and $j$. Consequently, we obtain a minimum number of training data, $N_\epsilon$, that depends only on $M$, $K_1$, and $\epsilon$, such that the expectation $\mathbb E[\mathcal E]$ in (25) and the variance $\mathrm{Var}[\mathcal E]$ in (26) are bounded above by any $\epsilon>0$.

Proposition 2.

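Let $\{y_{ij}\}_{i=1}^N$ be i.i.d. samples of $Y\mid\theta_j$ with density $p(y\mid\theta_j)$. Let $p(\cdot\mid\theta_j)/\bar q\in L^2(\mathcal M,\bar q)$ for all $j$, with the weight $\bar q$ specified in (37), and let $\{\bar\varphi_k\}$ be the complete orthonormal basis of $L^2(\mathcal M,\bar q)$. Then, $\mathrm{Var}[\bar\varphi_k(Y)\mid\theta_j]$ is finite for all $k$ and $j$.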

Proof.

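Notice that for all $k$, we have:

$$\frac{1}{M}\sum_{j=1}^{M}\mathbb E\big[\bar\varphi_k^2(Y)\mid\theta_j\big]=\int_{\mathcal M}\bar\varphi_k^2(y)\,\frac{1}{M}\sum_{j=1}^{M}p(y\mid\theta_j)\,dV(y)=\int_{\mathcal M}\bar\varphi_k^2(y)\,\bar q(y)\,dV(y)=1,\qquad(40)$$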

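where the last equality follows directly from the orthonormality of the basis functions $\{\bar\varphi_k\}$ in $L^2(\mathcal M,\bar q)$. Since every term in the average on the left-hand side of (40) is nonnegative, $\mathbb E[\bar\varphi_k^2(Y)\mid\theta_j]\le M$. Hence $\mathrm{Var}[\bar\varphi_k(Y)\mid\theta_j]\le\mathbb E[\bar\varphi_k^2(Y)\mid\theta_j]\le M$ is finite for all $k$ and $j$. □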
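The identity (40) has an exact discrete analog that is easy to check numerically. Suppose the basis vectors are orthonormal with respect to the empirical measure of all pooled training data. Then their per-parameter second moments average to one over $j$, and none can exceed $M$. The sketch below uses Gaussian toy data and monomials orthonormalized with a QR factorization. Both are hypothetical stand-ins of ours; the actual data-driven basis is constructed with diffusion maps in Section 4.2.

```python
import numpy as np

rng = np.random.default_rng(3)
M, N, K1 = 4, 500, 5
# Hypothetical training data: y_ij ~ N(theta_j, 1) for four training parameters
theta = np.array([-1.5, -0.5, 0.5, 1.5])
Y = theta[None, :] + rng.normal(size=(N, M))      # Y[i, j] = y_ij
y_all = Y.ravel()                                 # pooled samples from q_bar in Eq. (37)

# Stand-in for a data-driven basis: orthonormalize 1, y, ..., y^(K1-1) with respect to the
# empirical pooled measure (weights 1/(NM)), so that (1/NM) sum phi_bar_k^2 = 1 exactly.
V = np.vander(y_all, K1, increasing=True)
Q, _ = np.linalg.qr(V)
B = np.sqrt(y_all.size) * Q                       # B[:, k] = phi_bar_k at the pooled samples
B = B.reshape(N, M, K1)                           # back to the (i, j, k) layout of Y

second_moments = (B**2).mean(axis=0)              # (M, K1): estimates of E[phi_bar_k^2(Y) | theta_j]
print(second_moments.mean(axis=0))                # all ones (discrete analog of Eq. (40))
print(np.all(second_moments <= M))                # True, so each variance is at most M
```

The bound holds regardless of how the conditional densities differ across $j$, which is why $N_\epsilon$ no longer depends on an unknown constant $D$.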

Theorem 2.

Proof.

According to Proposition 2, the variance $\mathrm{Var}[\bar\varphi_k(Y)\mid\theta_j]$ is finite for all $k$ and $j$. Since this variance is finite, Proposition 1 implies that the estimator $\hat c_{kj}$ is both unbiased and consistent for all $k$ and $j$. All conditions of Theorem 1 are satisfied, so we obtain the error estimate of the expectation $\mathbb E[\mathcal E]$ in (25) and of the variance $\mathrm{Var}[\mathcal E]$ in (26). Moreover, the second error terms in (25) and (26) can both be bounded using Equation (40), which yields our error estimates (41) and (42).

Choose $K_1$ as in (31) such that the first term in (41) and (42) is bounded by $\epsilon$. The second term in (41) and (42) can be bounded by an arbitrarily small $\epsilon>0$ if the number of training data $N$ satisfies:

Then, both the expectation $\mathbb E[\mathcal E]$ and the variance $\mathrm{Var}[\mathcal E]$ can be bounded in terms of $\epsilon$. Since $\epsilon$ is arbitrary, the proof is complete. □

Recall that, when arbitrary basis functions are used to represent $p(y\mid\theta_j)$ in (10), the upper bound $D$ in (33) is typically not easy to evaluate. It is then also not easy to determine how many observations, $N_\epsilon$ (Equation (34)), should be used for training. By contrast, when the data-driven basis functions $\{\bar\varphi_k\}$ are used, Theorem 2 shows that the minimum number of training data, $N_\epsilon$ (Equation (43)), is independent of $D$ and depends only on $M$, $K_1$, and $\epsilon$. To make the error induced by the modes $k\le K_1$ smaller than a desired $\epsilon$, we can easily determine how many observations, $N_\epsilon$ (Equation (43)), should be used for training. In this sense, the specific data-driven Hilbert space with the corresponding basis functions $\{\bar\varphi_k\}$ is a good choice for the representation (10).

We have so far theoretically verified the validity of the representation (10) for estimating the conditional density $p(y\mid\theta_j)$ (Theorem 1). In particular, using the data-driven basis $\{\bar\varphi_k\}$, we can easily control the error of the conditional density estimate by specifying the number of training data $N$ (Theorem 2). To summarize, the training procedure can be outlined as follows:

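- (1-A) Generate the training dataset: the training parameters $\{\theta_j\}_{j=1}^M$ and, for each $\theta_j$, the observations $\{y_{ij}\}_{i=1}^N$ obtained by solving (1) and applying (4).

- (1-B) Construct the basis functions $\{\psi_l\}$ for the parameter space and $\{\varphi_k\}$ for the observation space using the training dataset. For $L^2(\mathcal M,q)$, the number of basis functions $K_1$ must be chosen empirically to make the error induced by the modes $k>K_1$ as small as possible. In particular, for the data-driven Hilbert space, Section 4 discusses in detail how to estimate the data-driven basis functions $\{\bar\varphi_k\}$ of $L^2(\mathcal M,\bar q)$, with the sampling density $\bar q$, from the training data. Note that this basis estimation introduces additional errors beyond the results in this section, which assumed the data-driven basis functions to be given.

- (1-C) Compute the matrix $C_{Y\Theta}$ in (12) from the training dataset; it determines the expansion coefficients (15).

- (1-D) Finally, for new observations $\mathbf y=\{y_1,\dots,y_T\}$, define the likelihood function as the product of the conditional densities of the new observations given any $\theta$,

$$\hat p(\mathbf y\mid\theta):=\prod_{i=1}^{T}\hat p(y_i\mid\theta),\qquad(44)$$

  where each factor is given by (10) with coefficients (15).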
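Once a density estimate is available, the likelihood (44) can drive the Metropolis scheme mentioned in the Introduction. The Python sketch below is illustrative only. It accepts any callable `cond_density(y, theta)` in place of the trained $\hat p(y\mid\theta)$; here that is a Gaussian stand-in, together with a uniform prior on $[-3,3]$ and synthetic data generated with $\theta^\dagger=0.7$. All of these choices and the function names are ours.

```python
import numpy as np

def log_likelihood(theta, y_new, cond_density):
    """Log of Eq. (44): sum_i log p_hat(y_i | theta) over the new observations."""
    vals = np.array([cond_density(y, theta) for y in y_new])
    # a truncated expansion (10) can be slightly negative; clipping at a tiny floor
    # is a pragmatic safeguard added here, not part of Eq. (44)
    return np.sum(np.log(np.maximum(vals, 1e-300)))

def metropolis(log_post, theta0, step, n_steps, rng):
    """Random-walk Metropolis with a symmetric Gaussian proposal."""
    theta = np.atleast_1d(np.asarray(theta0, float))
    lp = log_post(theta)
    chain = np.empty((n_steps, theta.size))
    for t in range(n_steps):
        prop = theta + step * rng.normal(size=theta.size)
        lp_prop = log_post(prop)
        if np.log(rng.uniform()) < lp_prop - lp:   # accept with probability min(1, ratio)
            theta, lp = prop, lp_prop
        chain[t] = theta
    return chain

rng = np.random.default_rng(4)
gauss = lambda y, th: np.exp(-0.5 * (y - th[0])**2) / np.sqrt(2 * np.pi)  # stand-in for p_hat
y_new = 0.7 + rng.normal(size=50)

def log_post(th):
    if not (-3.0 <= th[0] <= 3.0):       # uniform prior on [-3, 3]
        return -np.inf
    return log_likelihood(th, y_new, gauss)

chain = metropolis(log_post, [0.0], 0.3, 5000, rng)
print(chain[1000:].mean(), y_new.mean())   # posterior mean should be close to the sample mean
```

Each Metropolis step evaluates only the cheap surrogate density, so the dynamical model (1) is never solved inside the chain.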

Next, we address the second important question for the RKWHS representation (Procedure (1-B)): how to construct the basis functions for $L^2(\Theta,\tilde q)$ and $L^2(\mathcal M,q)$. In particular, we focus on how to construct the data-driven basis functions for $L^2(\mathcal M,\bar q)$.

4. Basis Functions

This section is organized as follows. In Section 4.1, we discuss how to employ analytic basis functions $\{\psi_l\}$ for the parameter $\theta$ and $\{\varphi_k\}$ for the observation $y$, as in the usual polynomial chaos expansion. In Section 4.2, we discuss how to construct the data-driven basis functions $\{\bar\varphi_k\}\in L^2(\mathcal M,\bar q)$, with $\mathcal M$ being the manifold of the training dataset $\{y_{ij}\}$ and the weight $\bar q$ in (37) being the sampling density of $\{y_{ij}\}$.

4.1. Analytic Basis Functions

If no prior information about the parameter space other than its domain is known, we can assume that the training parameters $\{\theta_j\}$ are uniformly distributed on the parameter space. In particular, we choose $M$ well-sampled training parameters in an $m$-dimensional box $\Theta$,

$$\Theta=[a_1,b_1]\times[a_2,b_2]\times\cdots\times[a_m,b_m],\qquad(45)$$

where × denotes a Cartesian product, and the two parameters $a_r$ and $b_r$ are the minimum and maximum values of the uniform distribution for the $r$-th coordinate of $\Theta$. Here, the well-sampled uniform distribution corresponds to a regular grid, which is a tessellation of $m$-dimensional Euclidean space by congruent parallelotopes. The two parameters $a_r$ and $b_r$ are determined by:

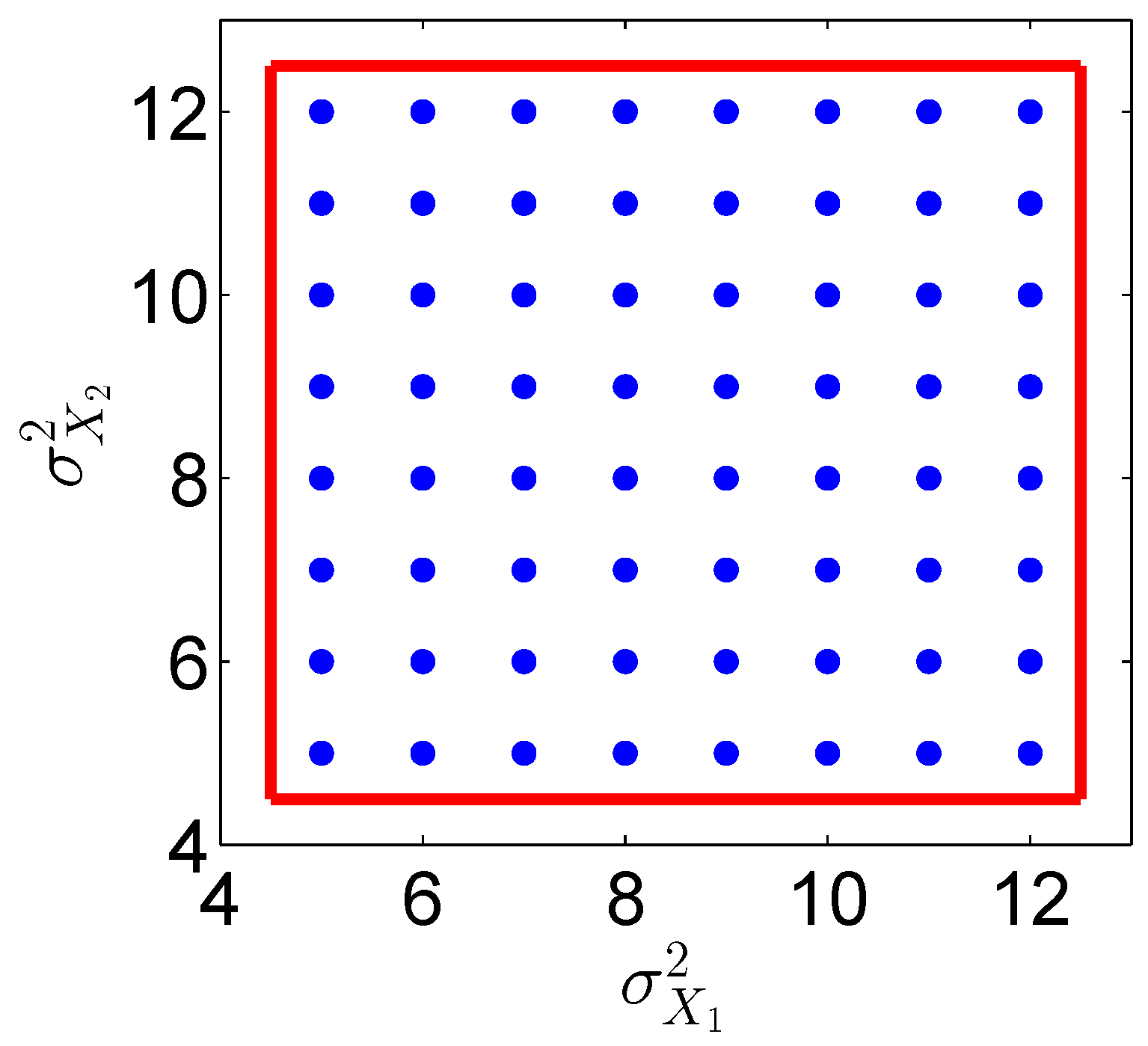

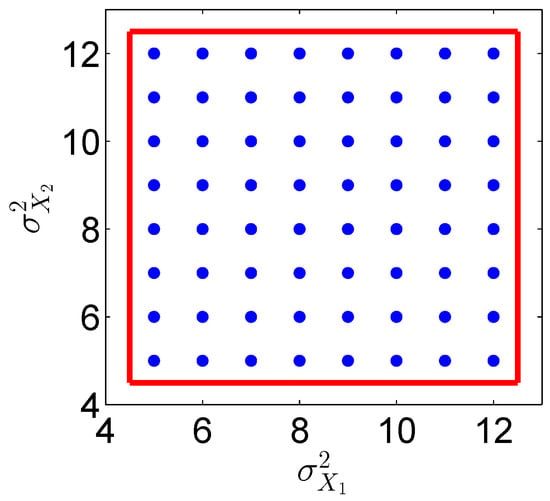

For $M$ regularly spaced grid points $\{\theta_j\}$, $M=\prod_{r=1}^{m}M_r$, where $M_r$ is the number of training parameters in the $r$-th coordinate. For example, Figure 1 shows 2D well-sampled uniformly distributed data (blue circles); in this case, the two-dimensional box $\Theta$ is depicted by the red square.

Figure 1.

(Color online) An example of well-sampled 2D uniformly distributed data points (blue circles). The boundary of the uniform distribution is depicted by the red square. These well-sampled data points correspond to the training parameters in Example I in Section 5. In this example, the training parameters (blue circles) are equally spaced, with a spacing of one in both coordinates, and the two-dimensional box $\Theta$ is shown as the red square.

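On this simple geometry, we choose $\{\psi_l\}$ to be the tensor product of the basis functions on each coordinate. Notice that we have taken the weight function $\tilde q$ to be the sampling density of the training parameters $\{\theta_j\}$ in order to simplify the expansion coefficient in (15). In this case, the weight $\tilde q$ is a uniform distribution on $\Theta$. Then, for the $r$-th coordinate of the parameter, $\theta^r$, the weight function $\tilde q_r$ is a uniform distribution on the interval $[a_r,b_r]$, and one can choose the following cosine basis functions,

$$\psi_{l_r}(\theta^r)=\begin{cases}1, & l_r=1,\\[2pt] \sqrt2\cos\!\Big(\dfrac{(l_r-1)\,\pi\,(\theta^r-a_r)}{b_r-a_r}\Big), & l_r=2,3,\dots\end{cases}\qquad(47)$$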

where $\{\psi_{l_r}\}$ form a complete orthonormal basis of $L^2([a_r,b_r],\tilde q_r)$. This choice of basis functions corresponds exactly to the data-driven basis functions produced by the diffusion maps algorithm on a uniformly distributed dataset on a compact interval, which will be discussed in Section 4.2. Other choices, such as the Legendre polynomials, can also be used, but they lead to a larger value of the constant $D$ in (34) that controls the minimum number of training data for accurate estimation. For instance, the orthonormal Legendre polynomial of degree $l$ reaches $\sqrt{2l+1}$ at the interval endpoints, whereas every cosine in (47) is bounded by $\sqrt2$.
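The cosine basis (47) has a convenient discrete property: on a regular midpoint grid with $M$ points and $K_2=M$ modes, the discrete orthonormality (16) holds exactly (it is the orthogonality of the type-II discrete cosine transform). The sketch below assumes that the box edges lie half a grid spacing outside the outermost training parameters. That is our reading for illustration, not a reproduction of (46). The function name `cosine_basis` is hypothetical.

```python
import numpy as np

def cosine_basis(theta, a, b, n_basis):
    """Cosine basis of Eq. (47) on [a, b], orthonormal w.r.t. the uniform density 1/(b - a).
    Returns an array of shape (n_basis, len(theta)); row l holds the (l+1)-th basis function."""
    theta = np.asarray(theta, float)
    l = np.arange(n_basis)[:, None]                   # 0-based frequency index (l_r - 1)
    vals = np.sqrt(2.0) * np.cos(l * np.pi * (theta[None, :] - a) / (b - a))
    vals[0] = 1.0                                     # constant function for the lowest mode
    return vals

# Hypothetical 1D grid: M equally spaced training parameters with spacing h,
# with the box edges half a spacing outside the outermost points (an assumption).
M, h = 7, 1.0
theta_train = 1.0 + h * np.arange(M)
a, b = theta_train[0] - h / 2, theta_train[-1] + h / 2

Psi = cosine_basis(theta_train, a, b, n_basis=M)      # K2 = M
print(np.allclose(Psi @ Psi.T / M, np.eye(M)))        # True: discrete orthonormality, Eq. (16)
```

With this grid, $C_{\Theta\Theta}$ computed from (13) is exactly the identity, so the simplified coefficients (15) involve no approximation on the parameter side.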

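Subsequently, we set $L^2(\Theta,\tilde q)=\bigotimes_{r=1}^{m}L^2([a_r,b_r],\tilde q_r)$, where ⊗ denotes the Hilbert tensor product, and $\tilde q=\prod_{r=1}^m\tilde q_r$ is the uniform distribution on the $m$-dimensional box $\Theta$. Correspondingly, the basis functions $\{\psi_l\}$ are tensor products of $\{\psi_{l_r}\}$ for $r=1,\dots,m$,

$$\psi_{l}(\theta)=\prod_{r=1}^{m}\psi_{l_r}(\theta^r),\qquad(48)$$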

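where $\theta=(\theta^1,\dots,\theta^m)$ and $l=(l_1,\dots,l_m)$ is a multi-index. By the properties of the tensor product of Hilbert spaces, $\{\psi_l\}$ forms a complete orthonormal basis of $L^2(\Theta,\tilde q)$.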
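The tensor product (48) is straightforward to assemble from one-dimensional factors by looping over all multi-indices. The following sketch is self-contained and uses a hypothetical $3\times4$ midpoint grid on the box $[0,3]\times[0,4]$. It checks that the discrete orthonormality of the 1D factors carries over to the product grid. The names `cos1d` and `tensor_basis` are ours.

```python
import itertools
import numpy as np

def cos1d(t, a, b, n):
    # 1D cosine basis on [a, b] with uniform weight; row l is the l-th mode, l = 0..n-1
    l = np.arange(n)[:, None]
    v = np.sqrt(2.0) * np.cos(l * np.pi * (np.asarray(t, float)[None, :] - a) / (b - a))
    v[0] = 1.0
    return v

def tensor_basis(theta, boxes, n_per_dim):
    """Eq. (48): psi_l(theta) = prod_r psi_{l_r}(theta^r) over all multi-indices l.
    theta: (P, m) points; boxes: list of (a_r, b_r); n_per_dim: number of modes per coordinate."""
    one_d = [cos1d(theta[:, r], a, b, n)
             for r, ((a, b), n) in enumerate(zip(boxes, n_per_dim))]
    rows = []
    for multi in itertools.product(*[range(n) for n in n_per_dim]):
        rows.append(np.prod([one_d[r][lr] for r, lr in enumerate(multi)], axis=0))
    return np.array(rows)   # shape (prod(n_per_dim), P)

# Hypothetical 3 x 4 midpoint grid on the box [0, 3] x [0, 4]
g1, g2 = np.arange(3) + 0.5, np.arange(4) + 0.5
theta = np.array(list(itertools.product(g1, g2)))
Psi = tensor_basis(theta, [(0.0, 3.0), (0.0, 4.0)], [3, 4])
print(Psi.shape, np.allclose(Psi @ Psi.T / len(theta), np.eye(12)))   # (12, 12) True
```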

We now turn to the construction of analytic basis functions $\{\varphi_k\}$ for $L^2(\mathcal M,q)$. The approach is similar to the one for the parameter $\theta$, except that the domain $\mathcal M$ of the data is specified empirically and the weight function $q$ is chosen to correspond to some well-known analytic basis functions, independent of the sampling distribution of the data $\{y_{ij}\}$. That is, we assume that the geometry of the data has the tensor structure $\mathcal M=\mathcal M_1\times\cdots\times\mathcal M_n$, where each $\mathcal M_s$ is specified empirically based on the ambient coordinates of $y$. For the $s$-th ambient component $y^s$ of $y$, we can choose a weighted Hilbert space $L^2(\mathcal M_s,q_s)$, with the weight $q_s$ depending on two parameters $\alpha_s,\beta_s$ and normalized to satisfy $\int_{\mathcal M_s}q_s(y^s)\,dy^s=1$. For each coordinate, let $\{\varphi_{k_s}\}$ be the corresponding orthonormal basis functions, which possess analytic expressions. Subsequently, we obtain a complete set of orthonormal basis functions for $L^2(\mathcal M,q)$, with $q=\prod_{s}q_s$, by taking the tensor product of these $\{\varphi_{k_s}\}$ as in (48).

For example, if the weight is uniform, is simply a one-dimensional interval. In this case, we can choose the cosine basis functions for as in (47) such that the parameters correspond to the boundaries of the domain , which can be estimated as in (46). In our numerical experiments below, we will set . Another choice is to set the weight to be Gaussian. In this case, the domain is assumed to be the real line, . For this choice, the corresponding orthonormal basis functions are Hermite polynomials, and the parameters , corresponding to the mean and variance of the Gaussian distribution, can be empirically estimated from the training data.
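For the Gaussian weight, a minimal Python sketch of the Hermite basis with empirically estimated mean and standard deviation is given below. It assumes the probabilists' Hermite polynomials He_k evaluated at the standardized coordinate and divided by sqrt(k!), which is one standard orthonormal choice under N(mu, sigma^2); the synthetic data and the function name are illustrative. Orthonormality is verified with Gauss-HermiteE quadrature from NumPy.

```python
import math

import numpy as np
from numpy.polynomial import hermite_e


def hermite_basis_1d(y, mu, sigma, K):
    """First K Hermite basis functions, orthonormal under the Gaussian weight N(mu, sigma^2).

    Uses probabilists' Hermite polynomials He_k evaluated at (y - mu) / sigma,
    normalized by sqrt(k!). Returns an array of shape (len(y), K).
    """
    z = (np.asarray(y, dtype=float) - mu) / sigma
    out = np.empty((z.size, K))
    for k in range(K):
        coeffs = np.zeros(k + 1)
        coeffs[k] = 1.0
        out[:, k] = hermite_e.hermeval(z, coeffs) / math.sqrt(math.factorial(k))
    return out


# Estimate the weight parameters empirically from (synthetic) training data.
rng = np.random.default_rng(0)
data = rng.normal(3.0, 2.0, size=10_000)
mu_hat, sigma_hat = data.mean(), data.std()

# Gauss-HermiteE quadrature integrates against exp(-z^2 / 2); dividing by sqrt(2 pi)
# turns the weighted sum into an expectation under the standard normal.
z, w = hermite_e.hermegauss(30)
phi = hermite_basis_1d(mu_hat + sigma_hat * z, mu_hat, sigma_hat, K=8)
gram = (phi * w[:, None]).T @ phi / math.sqrt(2.0 * math.pi)
```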

4.2. Data-Driven Basis Functions

In this section, we discuss how to construct a set of data-driven basis functions ∈ with being the manifold of the training dataset and weight in (37) being the sampling density of for all and . The issues here are that the analytical expression of the sampling density is unknown and the Riemannian metric inherited by the data manifold from the ambient space is also unknown. Fortunately, these issues can be overcome by the diffusion maps algorithm [18,19,20].

4.2.1. Learning the Data-Driven Basis Functions

Given a dataset with the sampling density (37), defined with respect to the volume form inherited by the manifold from the ambient space , one can use the kernel-based diffusion maps algorithm to construct an matrix that approximates a weighted Laplacian operator, , acting on functions that satisfy Neumann boundary conditions when the manifold is compact with a boundary. The eigenvectors of the matrix are discrete approximations of the eigenfunctions of the operator , which form an orthonormal basis of the weighted Hilbert space . Connecting to the discussion of the RKWHS in Section 2, the eigenfunctions of , that is, , can be approximated, up to a diagonal conjugation, by the integral operator in (7) with the appropriate kernel constructed by the diffusion maps algorithm. In other words, is the data-driven reproducing kernel Hilbert space defined by the feature map in (9), which is induced by the eigenfunctions of .

Each component of the eigenvector is a discrete estimate of the eigenfunction evaluated at the training data point . The sampling density defined in (37) is estimated with a kernel density estimation method [22]. In contrast to the analytic, continuous basis functions of Section 4.1, the data-driven basis functions are represented nonparametrically by the discrete eigenvectors produced by the diffusion maps algorithm. The outcome of the training is a discrete estimate of the conditional density, , which approximates the representation (10) at each training data point .
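To make the representation concrete, here is a toy Python sketch of one way to estimate a kernel-embedding representation from training pairs. It assumes (our assumption, not necessarily the exact form of (10) and (15)) that p(y|theta) is approximated by q(y) sum_k sum_j c_kj psi_j(theta) phi_k(y), where phi_k is orthonormal in L^2(q), psi_j is orthonormal under the parameter weight, and c_kj is the sample average of phi_k(y_i) psi_j(theta_i) over pairs whose theta_i are drawn from that weight. To stay self-contained, it uses cosine bases on [0, 1] for both theta and y with q = 1 and a made-up conditional density; with VBDM one would instead use the discrete eigenvectors and the estimated sampling density.

```python
import numpy as np


def cos_basis(x, K):
    """Cosine basis on [0, 1], orthonormal under the uniform density."""
    psi = np.sqrt(2.0) * np.cos(np.pi * np.outer(np.asarray(x, dtype=float), np.arange(K)))
    psi[:, 0] = 1.0
    return psi


def true_density(y, theta):
    """Made-up conditional density on [0, 1]; positive because 0.5 * sqrt(2) < 1."""
    return 1.0 + 0.5 * theta * np.sqrt(2.0) * np.cos(np.pi * y)


def sample_pairs(n, rng):
    """Draw theta ~ U[0, 1] and y | theta ~ true_density by rejection from U[0, 1]."""
    bound = 1.0 + 0.5 * np.sqrt(2.0)
    thetas, ys, count = [], [], 0
    while count < n:
        th, y, u = rng.uniform(size=(3, n))
        keep = u * bound < true_density(y, th)
        thetas.append(th[keep])
        ys.append(y[keep])
        count += int(keep.sum())
    return np.concatenate(thetas)[:n], np.concatenate(ys)[:n]


def fit_coefficients(theta, y, K, J):
    """Monte Carlo estimate c[k, j] = (1/N) sum_i phi_k(y_i) psi_j(theta_i)."""
    return cos_basis(y, K).T @ cos_basis(theta, J) / len(y)


def conditional_density(y, theta, C):
    """p(y | theta) ~ q(y) sum_{k,j} C[k, j] psi_j(theta) phi_k(y), with q(y) = 1 here."""
    K, J = C.shape
    psi = cos_basis(np.atleast_1d(theta), J)[0]
    return cos_basis(np.atleast_1d(y), K) @ (C @ psi)


rng = np.random.default_rng(1)
theta_train, y_train = sample_pairs(400_000, rng)
C = fit_coefficients(theta_train, y_train, K=4, J=4)
y_grid = np.linspace(0.05, 0.95, 10)
```

Because the parameters are drawn from the same weight under which psi_j is orthonormal, each coefficient reduces to a plain sample average; this is the kind of simplification alluded to when the weight is set to the sampling density of the training parameters.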

In our implementation, we use the Variable-Bandwidth Diffusion Maps (VBDM) algorithm introduced in [20], which extends diffusion maps to non-compact manifolds without boundary. See the supplementary material of [23] for a MATLAB implementation of this algorithm. We should point out that this discrete approximation introduces errors in the basis functions, which are analyzed in detail in [24]. These errors are in addition to those estimated in Section 3.

We note that if the data are uniformly distributed on a one-dimensional bounded interval, then the VBDM solutions are the cosine basis functions, which are the eigenfunctions of the Laplacian on a bounded interval with Neumann boundary conditions. This means that the cosine functions in (47) used to represent each component of are analogous to the data-driven basis functions. The difference is that, with the parametric choice in (47), one avoids VBDM at the expense of specifying the boundaries of the domain, . In the remainder of this paper, we refer to an application of (10) with cosine basis functions for and VBDM basis functions for as the VBDM representation.
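The VBDM algorithm itself (see [20,23]) uses a variable bandwidth and additional normalizations that we do not reproduce here. As a rough stand-in, the Python sketch below implements fixed-bandwidth diffusion maps with density normalization alpha = 1/2, a standard choice that approximates the weighted Laplacian Delta f + grad(log q) . grad f, whose eigenfunctions are orthogonal in L^2(q). The bandwidth eps is a hypothetical tuning value. The check reproduces the statement above on uniformly distributed one-dimensional data: the leading nontrivial eigenvector is close to cos(pi x).

```python
import numpy as np


def diffusion_maps_basis(X, eps, n_eig, alpha=0.5):
    """Fixed-bandwidth diffusion maps (a simplified stand-in for VBDM).

    With alpha = 1/2 the Markov matrix approximates the weighted Laplacian
    Delta f + grad(log q) . grad f, whose eigenfunctions are orthogonal in L^2(q).
    Returns basis values at the data (N, n_eig), eigenvalue estimates, and the degrees d.
    """
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    sq_dist = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-sq_dist / (4.0 * eps))
    q = K.sum(axis=1)  # unnormalized kernel density estimate at each data point
    K_alpha = K / np.outer(q ** alpha, q ** alpha)
    d = K_alpha.sum(axis=1)
    S = K_alpha / np.sqrt(np.outer(d, d))  # symmetric conjugate of P = D^{-1} K_alpha
    mu, U = np.linalg.eigh(S)
    order = np.argsort(mu)[::-1][:n_eig]
    mu, U = mu[order], U[:, order]
    # Right eigenvectors of P, scaled so that (1/N) sum_i w_i phi_k phi_l = delta_kl, w = d / mean(d).
    phi = U / np.sqrt(d)[:, None] * np.sqrt(d.sum())
    lam = (1.0 - mu) / eps  # approximate eigenvalues of minus the weighted Laplacian
    return phi, lam, d


rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0.0, 1.0, size=800))
phi, lam, d = diffusion_maps_basis(x, eps=0.002, n_eig=4)
w = d / d.mean()
gram = (phi * w[:, None]).T @ phi / len(x)
```

With alpha = 1/2 the degrees d are nearly constant, so the discrete orthonormality above is close to the empirical L^2(q) inner product over the samples. A fixed bandwidth like this one degrades where the density is small, which is the situation VBDM is designed to handle.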

Two practical issues remain. First, a direct application of the VBDM algorithm is computationally expensive for large training datasets, so we need an algorithm that subsamples the training dataset while preserving the sampling distribution of the full dataset. In Appendix A, we provide a simple box-averaging method that achieves this goal. In the remainder of this paper, we denote the reduced data obtained via the box-averaging method in Appendix A by , where , and refer to them as the box-averaged data points. When the training dataset is too large, we apply the VBDM algorithm to these box-averaged data to obtain the discrete estimates of the eigenfunctions .
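The procedure of Appendix A is not reproduced here. As one plausible reading of "box averaging" (an assumption on our part, which may differ from Appendix A), the Python sketch below splits the bounding box of the data into equal grid boxes and replaces the points in each non-empty box by their mean. It also returns the number of points absorbed by each box, which is one way to retain density information; the function name and box count are hypothetical, and the flat indexing assumes a small ambient dimension.

```python
import numpy as np


def box_average(Y, n_boxes_per_dim):
    """Hypothetical box averaging: replace all points in each non-empty grid box by their mean.

    Y: (N, n) data. The bounding box of Y is split into n_boxes_per_dim**n equal boxes.
    Returns (centroids, counts); counts record how many original points each centroid
    represents and can serve as weights if the sampling density must be retained.
    """
    Y = np.asarray(Y, dtype=float)
    lo, hi = Y.min(axis=0), Y.max(axis=0)
    width = np.where(hi > lo, (hi - lo) / n_boxes_per_dim, 1.0)
    cells = np.minimum(((Y - lo) / width).astype(int), n_boxes_per_dim - 1)
    flat = np.ravel_multi_index(tuple(cells.T), (n_boxes_per_dim,) * Y.shape[1])
    keys, inverse, counts = np.unique(flat, return_inverse=True, return_counts=True)
    sums = np.zeros((keys.size, Y.shape[1]))
    np.add.at(sums, inverse, Y)  # accumulate the points falling into each occupied box
    return sums / counts[:, None], counts


rng = np.random.default_rng(0)
Y = rng.normal(size=(5_000, 2))
centroids, counts = box_average(Y, n_boxes_per_dim=10)
```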

The second issue arises from the discrete representation of the conditional density in the observation space obtained with the VBDM algorithm. Notice that the VBDM representation, , is estimated only at the training data points . The natural problem is therefore to extend this representation to new observations that are not part of the training dataset (Procedure (1-D)). We address this issue next.

4.2.2. Nyström Extension

We now discuss an extension method to evaluate the basis functions at a new data point that does not belong to the training dataset. First, given such an extension method, we can carry out Procedure (1-D) by evaluating on new observations , which in turn gives . Second, this extension is also needed in the training Procedure (1-C) when is large. More specifically, to train the matrix in (12), we need estimates of the eigenfunctions at all of the original training data . Computationally, however, we can only construct discrete estimates of the eigenfunctions at the reduced box-averaged data points . Hence, we need to extend the eigenfunctions to all of the original training data .

For convenience, the training data used to construct the eigenfunctions are denoted by , and all data that are not part of are denoted by . To extend the eigenfunctions to a data point , one approach is the Nyström extension [25], which is based on the basic theory of RKHS [26]. Let be the RKWHS with a symmetric positive kernel defined as,

where is the eigenvalue of associated with the eigenfunction . Then, for any function , the reproducing property of this RKHS (whose existence is guaranteed by the Moore–Aronszajn theorem) allows one to evaluate f at through the inner product . In our application, this amounts to evaluating,

where the non-symmetric kernel function (constructed by the diffusion maps algorithm) is related to the symmetric kernel by,

with being the sampling density of at . See [27] for details on evaluating the kernels and in the Nyström extension. After obtaining the eigenfunction estimates via the Nyström extension, we can train the matrix in (12) for large and then obtain the representation of the conditional density at any new observation , .
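The kernels of [27] are not reproduced here. As a hedged illustration, the Python sketch below applies the standard Nyström formula phi_k(x) = (1/mu_k) sum_j P(x, x_j) phi_k(x_j) to a simplified fixed-bandwidth diffusion maps construction (a stand-in for VBDM, restricted to one-dimensional data for brevity), reusing the training density estimate when normalizing the kernel row of a new point. By construction, the extension reproduces the eigenvectors exactly at the training points.

```python
import numpy as np


def _gauss_kernel(a, b, eps):
    # 1-D data for brevity: k(a_i, b_j) = exp(-|a_i - b_j|^2 / (4 eps))
    return np.exp(-((a[:, None] - b[None, :]) ** 2) / (4.0 * eps))


def fit_basis(x, eps, n_eig, alpha=0.5):
    """Fixed-bandwidth diffusion maps on 1-D training data (a stand-in for VBDM)."""
    K = _gauss_kernel(x, x, eps)
    q = K.sum(axis=1)
    K_a = K / np.outer(q ** alpha, q ** alpha)
    d = K_a.sum(axis=1)
    mu, U = np.linalg.eigh(K_a / np.sqrt(np.outer(d, d)))
    order = np.argsort(mu)[::-1][:n_eig]
    phi = U[:, order] / np.sqrt(d)[:, None]  # eigenvectors of P = D^{-1} K_a
    return {"x": x, "eps": eps, "alpha": alpha, "q": q, "mu": mu[order], "phi": phi}


def nystrom_extend(model, x_new):
    """phi_k(x) = (1 / mu_k) sum_j P(x, x_j) phi_k(x_j), reusing the training normalizations."""
    x_new = np.atleast_1d(np.asarray(x_new, dtype=float))
    K = _gauss_kernel(x_new, model["x"], model["eps"])
    q_new = K.sum(axis=1)  # kernel density estimate at the new points
    K_a = K / np.outer(q_new ** model["alpha"], model["q"] ** model["alpha"])
    P = K_a / K_a.sum(axis=1, keepdims=True)
    return P @ model["phi"] / model["mu"]


rng = np.random.default_rng(0)
x_train = np.sort(rng.uniform(0.0, 1.0, size=600))
model = fit_basis(x_train, eps=0.002, n_eig=4)
x_test = np.linspace(0.1, 0.9, 9)
phi_test = nystrom_extend(model, x_test)
```

Dividing by mu_k amplifies errors for modes with small eigenvalues, which is one reason the high modes are the least reliable part of such an extension.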

To summarize this section, we have constructed two sets of basis functions for : the analytic basis functions of , such as the Hermite and cosine basis functions, which assume that the manifold is or a hyperrectangle, respectively; and the data-driven basis functions of , with being the data manifold and being the sampling density, which are computed using the VBDM algorithm.

5. Parameter Estimation Using the Metropolis Scheme

We first briefly review the Metropolis scheme for estimating the posterior density given new observations for a specific parameter . The key idea of the Metropolis scheme is to construct a Markov chain whose samples are asymptotically distributed according to the target density, namely the conditional density . In our application, the parameter estimation procedure can be outlined as follows:

- (2-A)

- Suppose we have ; then, for , we can sample . Here, is the proposal kernel density. For example, one can use the random walk Metropolis algorithm to generate proposals, , where , is the proposal covariance, and is a tunable nuisance parameter.

- (2-B)

- Accept the proposal, , with probability ; otherwise, set . Repeat Procedures (2-A) and (2-B). Notice that the posterior can be determined from the prior and the likelihood via Bayes’ theorem (3). The likelihood function is defined as the product of the conditional densities of the new observations in (44) (Procedure (1-D)). The conditional densities of new observations given are obtained from the training Procedure (1-C).

- (2-C)

- Generate a sufficiently long chain and use its statistics (e.g., the sample mean) as an estimator of the true parameter . Run multiple chains started from different initial , and check whether they all converge to the same distribution. The convergence in all of the examples below was validated using 10 randomly chosen initial conditions.
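Steps (2-A)–(2-C) can be sketched in Python as a generic random-walk Metropolis sampler. This is a minimal illustration under stated assumptions: log_post is a user-supplied unnormalized log posterior (log prior plus log likelihood, returning -inf outside the prior support), prop_cov plays the role of the scaled proposal covariance, and the toy target below is made up so that the output can be checked against a known mean and standard deviation.

```python
import numpy as np


def random_walk_metropolis(log_post, theta0, prop_cov, n_iter, rng):
    """Random-walk Metropolis sampler.

    log_post: unnormalized log posterior (log prior + log likelihood); return -np.inf
              outside the prior support. theta0 must lie inside the support.
    prop_cov: proposal covariance (for example, a scaled covariance delta * C).
    Returns the chain (n_iter, dim) and the acceptance rate.
    """
    theta = np.atleast_1d(np.asarray(theta0, dtype=float))
    chol = np.linalg.cholesky(np.atleast_2d(prop_cov))
    lp = log_post(theta)
    chain = np.empty((n_iter, theta.size))
    accepted = 0
    for i in range(n_iter):
        proposal = theta + chol @ rng.standard_normal(theta.size)  # step (2-A)
        lp_prop = log_post(proposal)
        if np.log(rng.uniform()) < lp_prop - lp:  # step (2-B): accept w.p. min(1, ratio)
            theta, lp = proposal, lp_prop
            accepted += 1
        chain[i] = theta
    return chain, accepted / n_iter


def log_post(theta):
    # Toy target: uniform prior on [0, 10] times a Gaussian likelihood centered at 3.
    if not 0.0 <= theta[0] <= 10.0:
        return -np.inf
    return -0.5 * ((theta[0] - 3.0) / 0.5) ** 2


rng = np.random.default_rng(0)
chain, acc_rate = random_walk_metropolis(log_post, 5.0, 0.5 ** 2, 40_000, rng)
samples = chain[5_000:, 0]  # step (2-C): discard burn-in, then summarize
```

In the setting of this paper, log_post would evaluate the trained likelihood (44) rather than an explicit Gaussian, so each iteration costs only an evaluation of the representation.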

In the remainder of this section, we present numerical results of the Metropolis scheme using the proposed data-driven likelihood function on several instructive examples, in which the likelihood function is either explicitly known, can be approximated as in (6), or is intractable. In the first example, where the likelihood is explicitly known, our goal is to show that the approach numerically converges to the true posterior estimate. In the second example, where the dimension of the data manifold is strictly less than the ambient dimension, we show that the RKHS framework with knowledge of the intrinsic geometry is superior, and that the proposed data-driven likelihood function is competitive when this geometric information is unknown. In the third example, with a low-dimensional dynamical and observation model of the form (5), we compare the proposed approach with standard methods, namely direct MCMC and nonintrusive spectral projection (both of which use a likelihood function of the form (6)). In the last example, we consider an observation model for which the likelihood function is intractable and evaluating the observation model in (4) is computationally expensive.

5.1. Example I: Two-Dimensional Ornstein–Uhlenbeck Process

Consider an Ornstein–Uhlenbeck (OU) process as follows:

where denotes the state variable, denotes a two-dimensional Wiener process, and is a diagonal matrix with main diagonal components and to be estimated. At statistical equilibrium, the solution of Equation (50) has a Gaussian distribution ,

Our goal here is to estimate the posterior density and the posterior mean of the parameters , given a finite number, T, of observations, , for hidden true parameters , where each is an i.i.d. sample of (51) for . This example serves to verify the validity of our RKWHS representations in a parameter estimation application.

One can show that the likelihood function for this problem is the inverse matrix gamma distribution, , where . If the prior is also an inverse matrix gamma distribution, , for some value of , then the posterior density can be obtained by applying Bayes’ theorem,

The posterior mean is then given by,

To compare with the analytic conditional density (51), we trained three RKWHS representations of the conditional density function, , using the same training dataset. For training, we used well-sampled, uniformly distributed training parameters (shown in Figure 1), , where , which are denoted by . For each training parameter , we generated 640,000 well-sampled, normally distributed observations from the density in (51) with . For the Hermite and cosine representations, we used 20 basis functions for each coordinate and constructed the basis functions of the two-dimensional observation, , by taking their tensor product. For the VBDM representation, we first reduced the data from 640,000 to B = 10,000 points using the box-averaging method (Appendix A). We then trained data-driven basis functions on the B box-averaged data using the VBDM algorithm [20].
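For intuition about the conjugate structure behind (52) and (53), consider a single diagonal component with a scalar inverse-gamma prior. This is a simplification we introduce: the text uses the inverse matrix gamma distribution for the full diagonal matrix, and we assume here that the parameter is the stationary variance of that component. The Python sketch below performs the familiar scalar conjugate update and checks the closed-form posterior mean against brute-force quadrature; the hyperparameters and data are illustrative.

```python
import numpy as np


def inverse_gamma_posterior(x, alpha, beta):
    """Conjugate update for a variance s with x_t ~ N(0, s) i.i.d. and prior s ~ IG(alpha, beta).

    The prior density is proportional to s^(-alpha-1) exp(-beta / s); the posterior is
    IG(alpha + T/2, beta + sum(x_t^2) / 2).
    """
    x = np.asarray(x, dtype=float)
    return alpha + 0.5 * x.size, beta + 0.5 * np.sum(x ** 2)


def inverse_gamma_mean(alpha, beta):
    if alpha <= 1.0:
        raise ValueError("the inverse-gamma mean exists only for alpha > 1")
    return beta / (alpha - 1.0)


rng = np.random.default_rng(0)
x_obs = rng.normal(0.0, np.sqrt(2.0), size=20)  # T = 20 draws of one component, variance 2
a_post, b_post = inverse_gamma_posterior(x_obs, alpha=3.0, beta=2.0)
post_mean = inverse_gamma_mean(a_post, b_post)

# Brute-force check: posterior mean from a Riemann sum on a fine grid.
s = np.linspace(1e-3, 50.0, 200_001)
log_p = -(a_post + 1.0) * np.log(s) - b_post / s
p = np.exp(log_p - log_p.max())
numeric_mean = np.sum(s * p) / np.sum(p)
```

The closed form (beta + sum(x^2)/2)/(alpha + T/2 - 1) mixes the prior with the sample sum of squares and approaches the sample variance only as T grows, which is consistent with the remark below that a small number of observations yields a posterior mean noticeably away from the true value.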

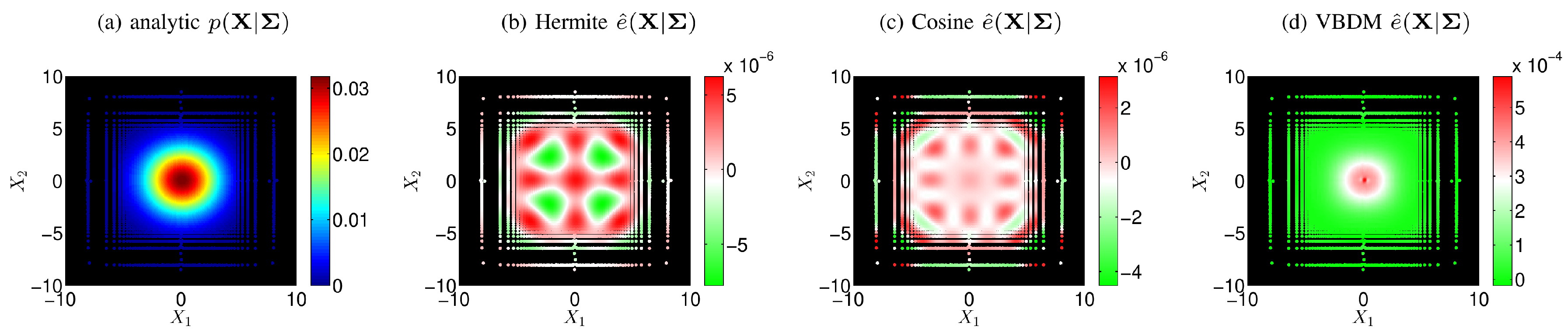

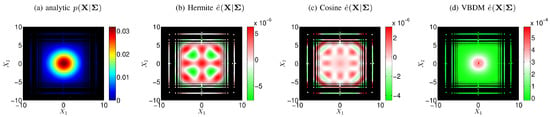

Figure 2a displays the analytic conditional density (51), and Figure 2b–d display the pointwise errors of the conditional density estimates for the training parameter . Figure 2b–d show that all pointwise errors are small relative to the analytic density in Figure 2a, so all three representations are in excellent agreement with the analytic conditional density. For the Hermite representation, this suggests that the upper bound D (33) in Theorem 1 is finite, so that the representation is valid for estimating the conditional density (Figure 2b). For the cosine and VBDM representations, the upper bounds D (33) are always finite, as noted in Remark 2 and Proposition 2, respectively. We should also point out that, for this example, the VBDM representation performed the worst, with errors of order compared to the Hermite and cosine representations, whose errors were of order . This larger error arises because the data-driven basis functions were estimated by the discrete eigenvectors , which introduces additional discretization errors [20] (especially in the high modes) on the B = 10,000 box-averaged data points. In contrast, the basis functions of the Hermite and cosine representations are known analytically, so their errors are characterized by (25) in Theorem 1.

Figure 2.

(Color online) (a) The analytic conditional density (51). For comparison, plotted are the pointwise errors of conditional density functions for (b) Hermite, (c) cosine, and (d) VBDM representations. The density and all the error functions are plotted on the 10,000 box-averaged data points. The training parameter .

We now estimate the posterior density (52) and mean (53) using the MCMC method (Procedures (2-A)–(2-C)). We generated well-sampled, normally distributed data as observations from the true variance values . From the analytic formula (53), the posterior mean is . Here, the posterior mean deviates noticeably from the true value because only a small number, , of normally distributed observations was used. With many more observations, the analytic posterior mean (53) would get closer to the true value, . In our simulation, we set the parameter in the prior, , and the proposal covariance, . For each chain, the initial condition was drawn randomly from , and 800,000 iterations were generated.

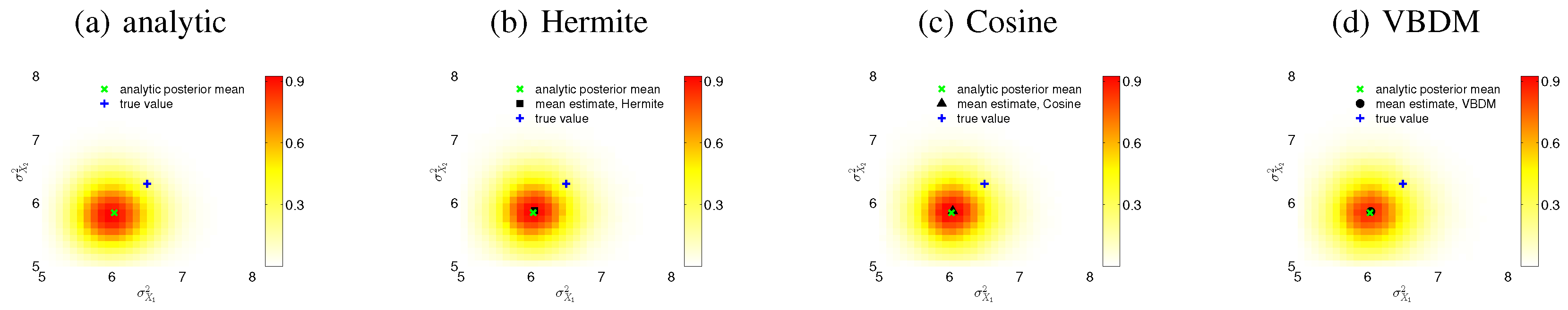

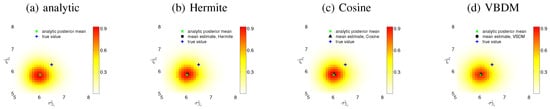

Figure 3b–d display the densities of the chains obtained with the Hermite, cosine, and VBDM representations, respectively. The densities are kernel density estimates computed from each chain after discarding the first 10,000 iterations as burn-in. For comparison, Figure 3a displays the analytic posterior density (52). Figure 3 shows that the posterior densities from the three representations are in excellent agreement with each other and with the analytic posterior density (52). Figure 3 also compares the posterior mean (53) with the MCMC mean estimates: the MCMC mean estimates from all representations agree with the analytic posterior mean (53) to within numerical accuracy. Therefore, for this 2D OU-process example, all representations are valid for estimating the posterior density and posterior mean of the parameter .

Figure 3.

(Color online) Comparison of the posterior density functions . (a) Analytic posterior density (52). (b) Hermite representation. (c) Cosine representation. (d) VBDM representation. The true value (blue plus). The analytic posterior mean is (green cross). The MCMC mean estimate using the Hermite representation is (black square). The MCMC mean estimate using the cosine representation is (black triangle). The MCMC mean estimate using the VBDM representation is (black circle).
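The post-processing used for these posterior plots (discarding the burn-in, then applying a kernel density estimate to the chain) can be sketched in Python as follows. The Gaussian kernel and Silverman's rule-of-thumb bandwidth are our assumptions, since the text does not specify which KDE was used; the synthetic chain with an artificial transient is illustrative.

```python
import numpy as np


def summarize_chain(chain, burn_in, grid):
    """Discard the burn-in, then return the mean, standard deviation and a Gaussian KDE on grid.

    The KDE bandwidth follows Silverman's rule of thumb, h = 1.06 * std * n^(-1/5).
    """
    samples = np.asarray(chain, dtype=float).ravel()[burn_in:]
    mean, std = samples.mean(), samples.std(ddof=1)
    h = 1.06 * std * samples.size ** (-0.2)
    density = np.empty(grid.size)
    for i, g in enumerate(grid):  # loop over grid points to keep memory O(n)
        density[i] = np.exp(-0.5 * ((g - samples) / h) ** 2).sum()
    density /= samples.size * h * np.sqrt(2.0 * np.pi)
    return mean, std, density


rng = np.random.default_rng(0)
chain = rng.normal(2.0, 0.5, size=20_000)
chain[:1_000] = 10.0  # mimic an initial transient before the chain reaches equilibrium
grid = np.linspace(-1.0, 5.0, 601)
mean, std, density = summarize_chain(chain, 1_000, grid)
```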

Next, we investigate a system for which the intrinsic dimension d of the data manifold on which the observations lie is smaller than the dimension n of the ambient space.

5.2. Example II: Three-Dimensional System of SDEs on a Torus

Consider a system of SDEs on a torus defined in the intrinsic coordinates :

where and are two independent Wiener processes, and the drift and diffusion coefficients are:

The initial condition is . Here, D is the parameter to be estimated. This example exhibits non-gradient drift, anisotropic diffusion, and multiple time scales. Both the observations and the training dataset were generated by numerically solving the SDE (54) for the appropriate values of D with a time step and then mapping these data into the ambient space, , via the standard embedding of the torus given by:

Here, are the observations. This system on a torus satisfies , where is the intrinsic dimension of and is the dimension of the ambient space . Our goal is to estimate the posterior density and the posterior mean of the parameter D given discrete-time observations of , which are solutions of (54) for a specific parameter .
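The torus example has intrinsic dimension d = 2 but ambient dimension n = 3. The Python sketch below illustrates this with the textbook embedding of a torus in R^3. The radii R = 2 and r = 1 and the assignment of the two angles are illustrative assumptions and are not taken from (55); the check confirms that every embedded point satisfies the implicit torus equation, so the data lie on a two-dimensional surface in three-dimensional space.

```python
import numpy as np


def torus_embedding(theta, phi, R=2.0, r=1.0):
    """Map intrinsic angles (theta, phi) to R^3 via the standard torus embedding.

    R (distance from the tube center to the axis) and r (tube radius) are
    illustrative values; they are not taken from (55).
    """
    ring = R + r * np.cos(theta)
    return np.stack([ring * np.cos(phi), ring * np.sin(phi), r * np.sin(theta)], axis=-1)


rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=1_000)
phi = rng.uniform(0.0, 2.0 * np.pi, size=1_000)
X = torus_embedding(theta, phi)
# Every embedded point satisfies (sqrt(x^2 + y^2) - R)^2 + z^2 = r^2.
residual = (np.hypot(X[:, 0], X[:, 1]) - 2.0) ** 2 + X[:, 2] ** 2 - 1.0
```

A tensor-product basis on the three ambient coordinates spends most of its functions on directions off this surface, which gives some intuition for the comparison reported below.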

For training, we used well-sampled, uniformly distributed training parameters, . For each training parameter , we generated 54,000 observations of by solving the SDEs in (54) with parameter . For the Hermite and cosine representations, we constructed 10 basis functions for each coordinate in Euclidean space; taking the tensor product of these basis functions yields basis functions on the ambient space . For the VBDM representation, we first computed box-averaged data points using the data reduction method in Appendix A. However, we found that some of the B box-averaged data points were far from the torus. After discarding these points, we retained 26,020 box-averaged data points that were sufficiently close to the torus for training. We then trained data-driven basis functions on from these 26,020 box-averaged data points using the VBDM algorithm.

Unlike in the previous example, deriving an analytic expression for the likelihood function is not trivial here, because the diffusion coefficient, , is state dependent. While direct MCMC with an approximate likelihood function constructed using Bayesian imputation [5] is possible in principle, we did not perform this computation, since generating the path of at each sampling step is too costly in our setup (T = 10,000 observations and a chain of 400,000 samples). For diagnostic comparisons, we constructed another representation , named the intrinsic Fourier representation, which can be regarded as an accurate approximation of , since it uses basis functions defined on the intrinsic coordinates instead of . See Appendix B for details on the construction and convergence of the intrinsic Fourier representation. We should point out that this intrinsic representation is not available in general, since one may not know the embedding of the data manifold.

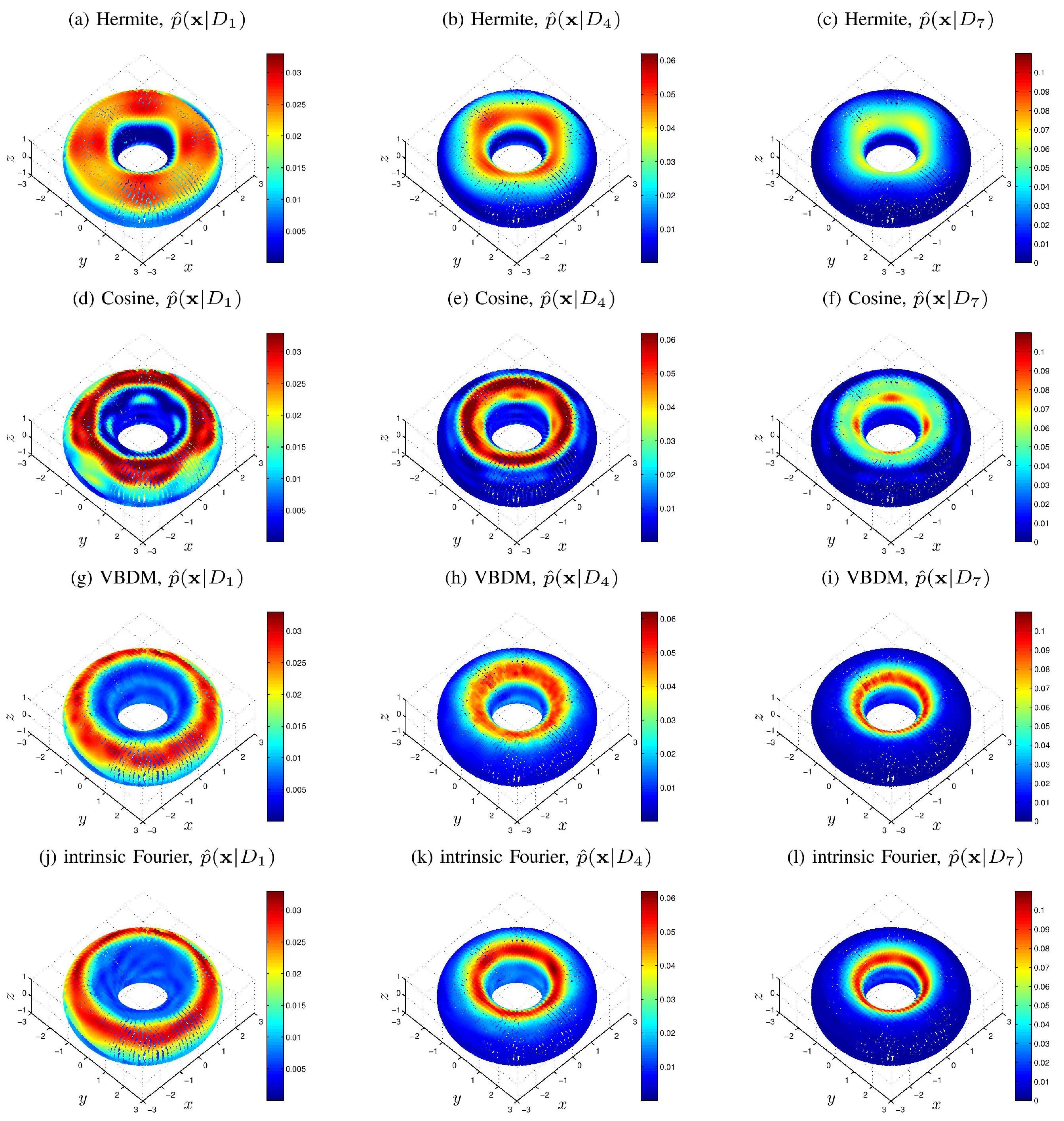

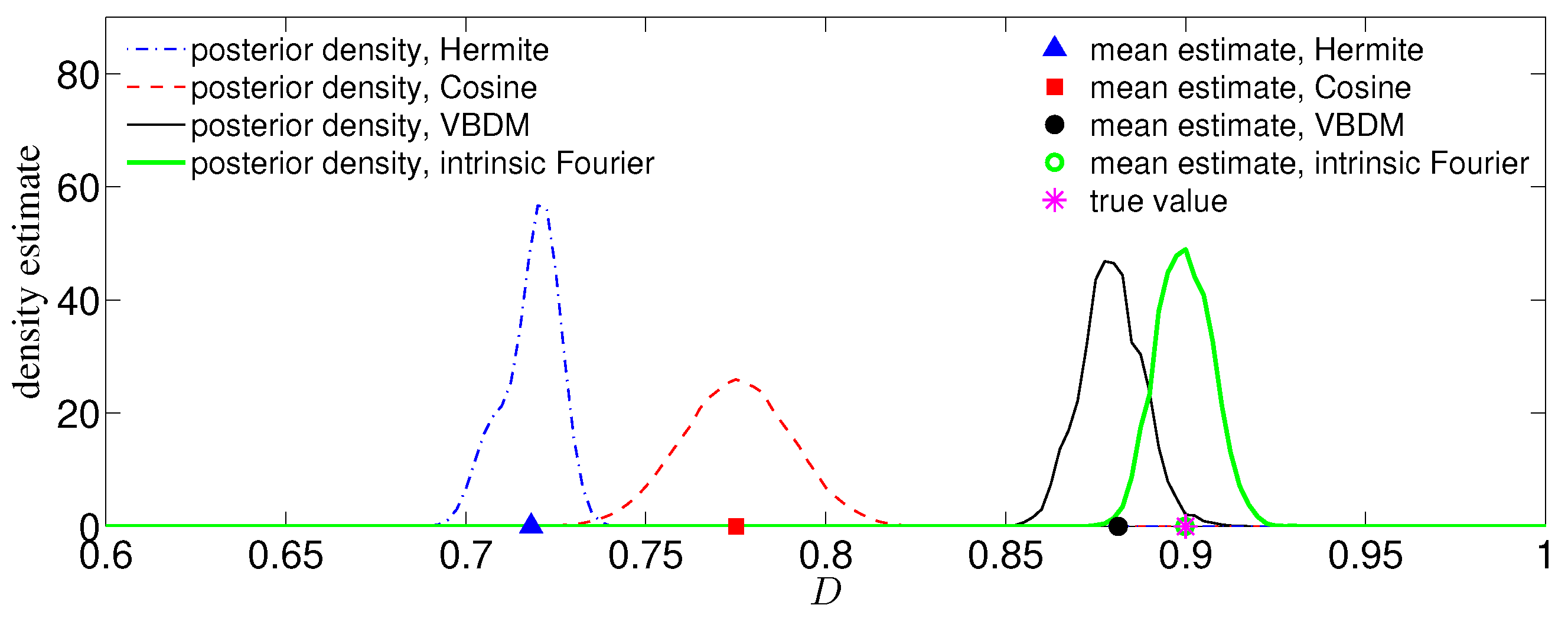

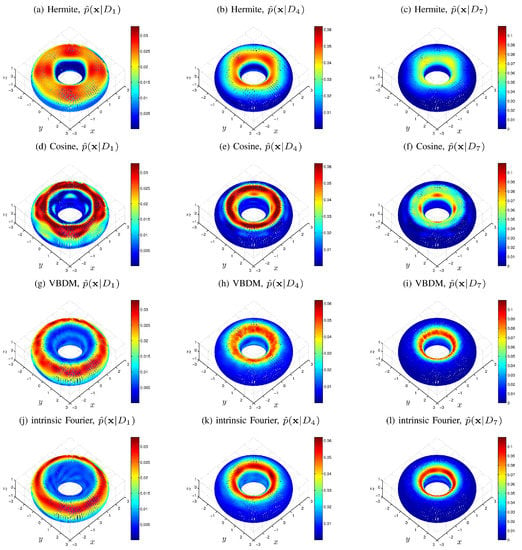

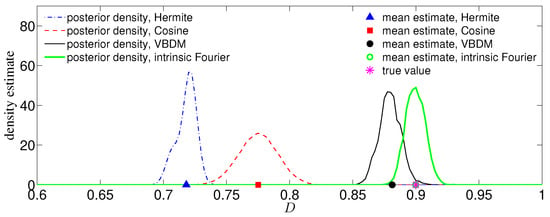

Figure 4 displays the comparison of the density estimates. The VBDM representation is in good agreement with the intrinsic Fourier representation, whereas the Hermite and cosine representations of deviate significantly from it. In short, the reason is that if the density in the intrinsic coordinates is in , then the corresponding VBDM representation with respect to is in ; however, the Hermite and cosine representations with respect to are not in . A more detailed explanation of this assertion is given in Appendix B.

Figure 4.

(Color online) Comparison of the conditional densities estimated by using Hermite representation (first row), cosine representation (second row), VBDM representation (third row), and intrinsic Fourier representation (fourth row). The left (a,d,g,j), middle (b,e,h,k), and right (c,f,i,l) columns correspond to the densities on the training parameters , , and , respectively. basis functions are used for all representations. For fair visual comparison, all conditional densities are plotted on the same box-averaged data points and normalized to satisfy with being the estimated sampling density of the box-averaged data .

We now compare the MCMC estimates with the true value, , using 10,000 observations. For this simulation, we set the prior to be uniform and empirically chose for the proposal. Figure 5 displays the posterior densities of the chains for all representations (each density estimate was constructed using KDE on a chain of length 400,000), together with a comparison between the true value and the MCMC mean estimates of all representations. The mean estimate of the intrinsic Fourier representation nearly overlaps with the true value . The mean estimate of the VBDM representation is closer to the true value than those of the Hermite and cosine representations. Moreover, Figure 5 shows that the posterior of the VBDM representation is close to that of the intrinsic Fourier representation, whereas the posterior densities of the Hermite and cosine representations are not. This result is encouraging, considering that the training parameter domain is rather wide, . It suggests that when the intrinsic dimension is less than the ambient dimension, , the VBDM representation (which does not require knowledge of the embedding function in (55)), with data-driven basis functions in , is superior to the representations with analytic basis functions defined on the ambient coordinates .

Figure 5.

(Color online) Comparison of the posterior density functions by all representations. Plotted also are mean estimates by Hermite representation (blue triangle), cosine representation (red square), VBDM representation (black circle), the intrinsic Fourier representation (green circle), and the true parameter value (magenta asterisk).

5.3. Example III: Five-Dimensional Lorenz-96 Model

Consider the Lorenz-96 model [28]:

with periodic boundary conditions, . For the example in this section, we set . The initial condition was . Our goal here is to estimate the posterior density and posterior mean of the hidden parameter F given a time series of noisy observations , where:

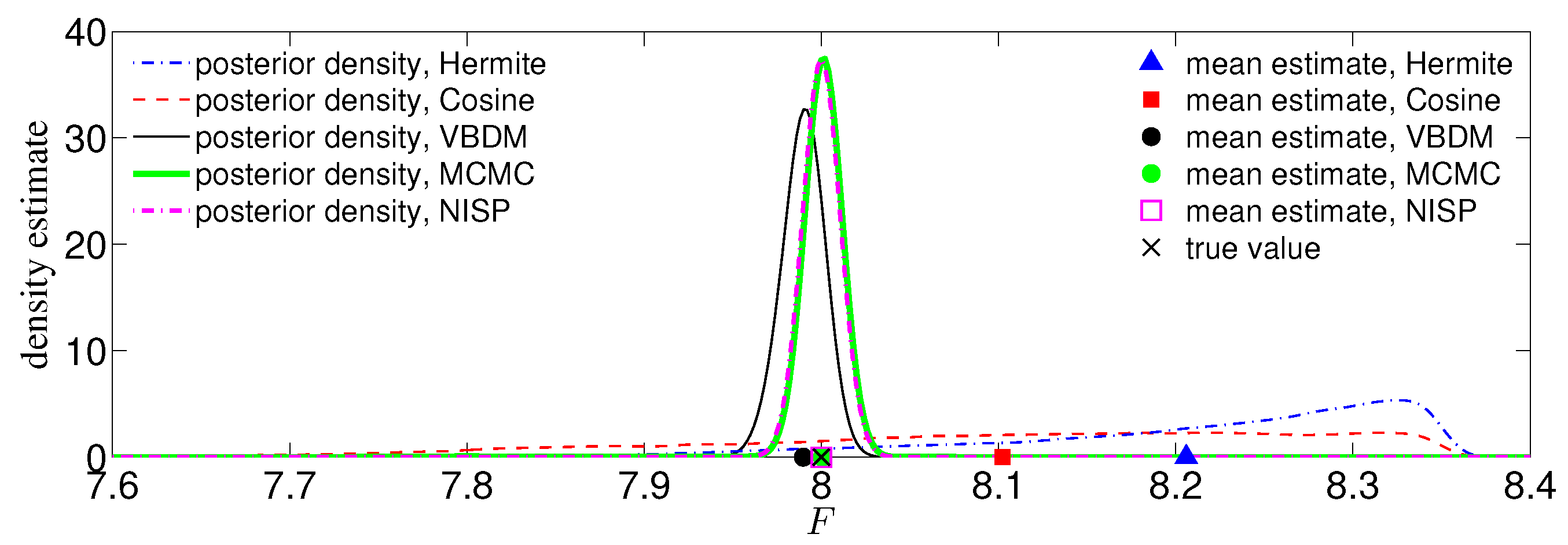

with noise variance . Here, denotes the approximate solution (computed with a Runge–Kutta method) for a specific parameter value at discrete times , where is the integration time step and s is the observation interval. Since the embedding function of the observation data is unknown, we do not have a parametric analog of the intrinsic Fourier representation used in the previous example.
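For reference, the standard Lorenz-96 model reads dx_j/dt = (x_{j+1} - x_{j-2}) x_{j-1} - x_j + F with periodic indices, which we assume matches (56) up to notation. The Python sketch below integrates it with the classical fourth-order Runge–Kutta method and records noisy observations. The observation operator (full state plus additive Gaussian noise), the step size, the observation spacing, and the value of F are illustrative assumptions rather than the settings used in this section.

```python
import numpy as np


def lorenz96_rhs(x, F):
    """Standard Lorenz-96 tendency with periodic indices: (x[j+1] - x[j-2]) * x[j-1] - x[j] + F."""
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1) - x + F


def rk4_step(x, F, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = lorenz96_rhs(x, F)
    k2 = lorenz96_rhs(x + 0.5 * dt * k1, F)
    k3 = lorenz96_rhs(x + 0.5 * dt * k2, F)
    k4 = lorenz96_rhs(x + dt * k3, F)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def noisy_observations(x0, F, dt, steps_per_obs, n_obs, noise_var, rng):
    """Record the full state plus N(0, noise_var I) noise every steps_per_obs RK4 steps.

    The observation operator (full state, additive Gaussian noise) is an assumption.
    """
    x = np.array(x0, dtype=float)
    obs = np.empty((n_obs, x.size))
    for t in range(n_obs):
        for _ in range(steps_per_obs):
            x = rk4_step(x, F, dt)
        obs[t] = x + np.sqrt(noise_var) * rng.standard_normal(x.size)
    return obs


rng = np.random.default_rng(0)
x0 = 8.0 + 0.01 * rng.standard_normal(5)  # small perturbation of the equilibrium x_j = F
y = noisy_observations(x0, F=8.0, dt=0.01, steps_per_obs=10, n_obs=50, noise_var=0.1, rng=rng)
```

The constant state x_j = F is an equilibrium of the model, which gives a simple consistency check on the tendency and the integrator.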

In this low-dimensional setting, we can compare the proposed method with standard techniques, namely direct MCMC and the Non-Intrusive Spectral Projection (NISP) method [13]. By direct MCMC, we mean applying the random walk Metropolis scheme directly to the following likelihood function,

where is the noise variance and is the solution of the initial value problem in Equation (56) with parameter F at time . Note that evaluating is time consuming if the model integration time is long or the MCMC chain has many iterations. In our implementation, we generated the chain for 4000 iterations. This amounts to 4000 sequential evaluations of the likelihood function in (57), where each evaluation requires integrating the model in (56) with the proposed parameter value up to model time . We used a uniform prior distribution and for the proposal.
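A minimal Python sketch of a Gaussian log-likelihood in the spirit of (57) is given below, up to an additive constant (which cancels in the Metropolis ratio). It assumes a user-supplied forward_model(F) that returns the model states at all observation times; in direct MCMC that call is where the expensive integration of (56) happens, once per proposal. The toy forward model is hypothetical and only serves to make the maximizer checkable.

```python
import numpy as np


def gaussian_log_likelihood(F, observations, forward_model, noise_var):
    """Log of a Gaussian likelihood with i.i.d. noise, up to an additive constant.

    forward_model(F) must return the model states at the observation times, with the
    same shape as observations; each call is where the model integration happens.
    """
    residual = np.asarray(observations, dtype=float) - forward_model(F)
    return -0.5 * np.sum(residual ** 2) / noise_var


def toy_model(F):
    # Hypothetical forward model: the state equals F at all T = 20 times in 3 components.
    return np.full((20, 3), F)


rng = np.random.default_rng(0)
obs = toy_model(3.0) + 0.1 * rng.standard_normal((20, 3))
F_grid = np.linspace(2.0, 4.0, 201)
ll = np.array([gaussian_log_likelihood(F, obs, toy_model, 0.01) for F in F_grid])
```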

For the NISP method [13], we used the same Gaussian likelihood function (57) with an approximated . In particular, we approximated the solutions with for in the form:

where are the orthonormal cosine basis functions, are the expansion coefficients, and K is the number of basis functions. We then prescribe a fixed set of nodes used for training . In practice, this training procedure requires only eight model evaluations, which can be done in parallel, where each evaluation involves integrating the model with the specified up to model time . The number of basis functions is . After determining the coefficients such that , we obtain the approximate solutions for all parameters F. Using these approximations in place of in (57), we can generate the Markov chain using the Metropolis scheme. Again, we used a uniform prior distribution and for the proposal. In our MCMC implementation, we generated the chain for 40,000 iterations; this only involved evaluating (58) rather than integrating the true dynamical model in (56) at each proposal parameter value .
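The surrogate (58) can be illustrated with the Python sketch below. It assumes (our assumption) that the coefficients are fixed by collocation, i.e., by requiring the cosine expansion to reproduce the model output exactly at the nodes, with as many basis functions as nodes; the parameter interval, the midpoint nodes, and the stand-in "model outputs" are illustrative. Once the coefficients are known, evaluating the surrogate at a new parameter value requires no model integration.

```python
import numpy as np


def cosine_basis(F, a, b, K):
    """Cosine basis on [a, b], orthonormal under the uniform density (assumed normalization)."""
    s = (np.atleast_1d(np.asarray(F, dtype=float)) - a) / (b - a)
    psi = np.sqrt(2.0) * np.cos(np.pi * np.outer(s, np.arange(K)))
    psi[:, 0] = 1.0
    return psi


def fit_surrogate(nodes, snapshots, a, b):
    """Collocation: choose coefficients so the expansion reproduces the model output at each node.

    nodes: (K,) parameter values; snapshots: (K, ...) model outputs, e.g. x(t_i; F_k) for all t_i.
    Uses as many basis functions as nodes, so the collocation matrix is square.
    """
    snapshots = np.asarray(snapshots, dtype=float)
    K = len(nodes)
    Psi = cosine_basis(nodes, a, b, K)
    coeffs = np.linalg.solve(Psi, snapshots.reshape(K, -1))
    return coeffs, snapshots.shape[1:]


def evaluate_surrogate(F, coeffs, out_shape, a, b):
    """Evaluate the expansion at new parameter values without integrating the model."""
    psi = cosine_basis(F, a, b, coeffs.shape[0])
    return (psi @ coeffs).reshape((psi.shape[0],) + tuple(out_shape))


a, b = 2.0, 4.0  # illustrative parameter interval
nodes = a + (np.arange(8) + 0.5) * (b - a) / 8  # eight uniformly spaced (midpoint) nodes
snapshots = np.stack([np.sin(nodes), nodes ** 2], axis=1)  # stand-in for model outputs
coeffs, out_shape = fit_surrogate(nodes, snapshots, a, b)
```

Exact reproduction at the nodes says nothing about accuracy between them; for chaotic trajectories the output varies increasingly irregularly with F as time grows, which is consistent with the short-time accuracy of NISP reported below.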

For the RKWHS representations, we also used uniformly distributed training parameters, . As with NISP, this training procedure required only eight model integrations with parameter values up to model time , resulting in a total of training data. In this example, we did not reduce the data using the box-averaging method in Appendix A. In fact, in some cases, such as and , the training dataset contained only points, too few to estimate the eigenfunctions. One could, of course, use more training parameters to enlarge the training dataset; for a fair comparison with NISP, however, we instead added 10 independent realizations of Gaussian noise to each data point, resulting in a total of training data. This small-data configuration is challenging for VBDM, since nonparametric methods are most advantageous in the limit of large datasets. When is sufficiently large, there is no need to enlarge the dataset by adding multiple i.i.d. Gaussian noise realizations.
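The augmentation step can be sketched in Python as follows. The noise level, whether the noise-free points are kept alongside the replicates, and the stand-in training set are assumptions, since the text does not specify them; the sketch simply replaces each point by ten noisy replicates.

```python
import numpy as np


def augment_with_noise(Y, n_copies, noise_std, rng):
    """Replace each training point by n_copies noisy replicates y + N(0, noise_std^2 I).

    Whether the noise-free points are also kept, and the noise level, are assumptions.
    """
    Y = np.asarray(Y, dtype=float)
    replicas = np.repeat(Y, n_copies, axis=0)  # each row repeated n_copies times, consecutively
    return replicas + noise_std * rng.standard_normal(replicas.shape)


rng = np.random.default_rng(0)
Y_train = rng.uniform(-5.0, 5.0, size=(40, 5))  # stand-in for a small training set
Y_aug = augment_with_noise(Y_train, n_copies=10, noise_std=0.1, rng=rng)
```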

For the Hermite and cosine representations, we constructed five basis functions for each coordinate, yielding a total of basis functions in . For the VBDM representation, we applied the VBDM algorithm directly to the training dataset to learn data-driven basis functions on the manifold . The VBDM algorithm estimated the intrinsic dimension as , which is smaller than the ambient dimension . We then used a uniform prior distribution and for the proposal. As with NISP, we generated the chain for 40,000 iterations, which amounted to evaluating (44) instead of integrating the true dynamical model in (56) at each iteration.

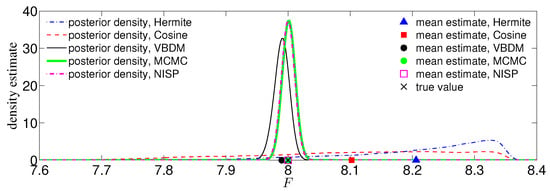

We now compare the posterior densities and mean estimates for the case of and noisy observations corresponding to the true parameter value . Figure 6 displays the posterior densities of the chains and the mean estimates for the direct MCMC method, the NISP method, and all representations. The mean estimate of the VBDM representation is in good agreement with the true value , whereas the mean estimates of the Hermite and cosine representations deviate substantially from it. Based on this result, where the estimated intrinsic dimension of the observations was lower than the ambient dimension , the data-driven VBDM representation is superior to the Hermite and cosine representations. Direct MCMC, NISP, and the VBDM representation all provide good estimates of the true value. Notice, however, that we ran the model only eight times for the NISP method and the VBDM representation, whereas we ran it 4000 times for the direct MCMC method.

Figure 6.

(Color online) Comparison of the posterior density functions among the direct MCMC method, NISP method, and all RKWHS representations. Plotted also are the true parameter value (black cross), the mean estimate by the direct MCMC method (green circle), the mean estimate by the NISP method (magenta square), and the mean estimates by the Hermite representation (blue triangle), cosine representation (red square), and VBDM representation (black circle). The noisy observations are for , .

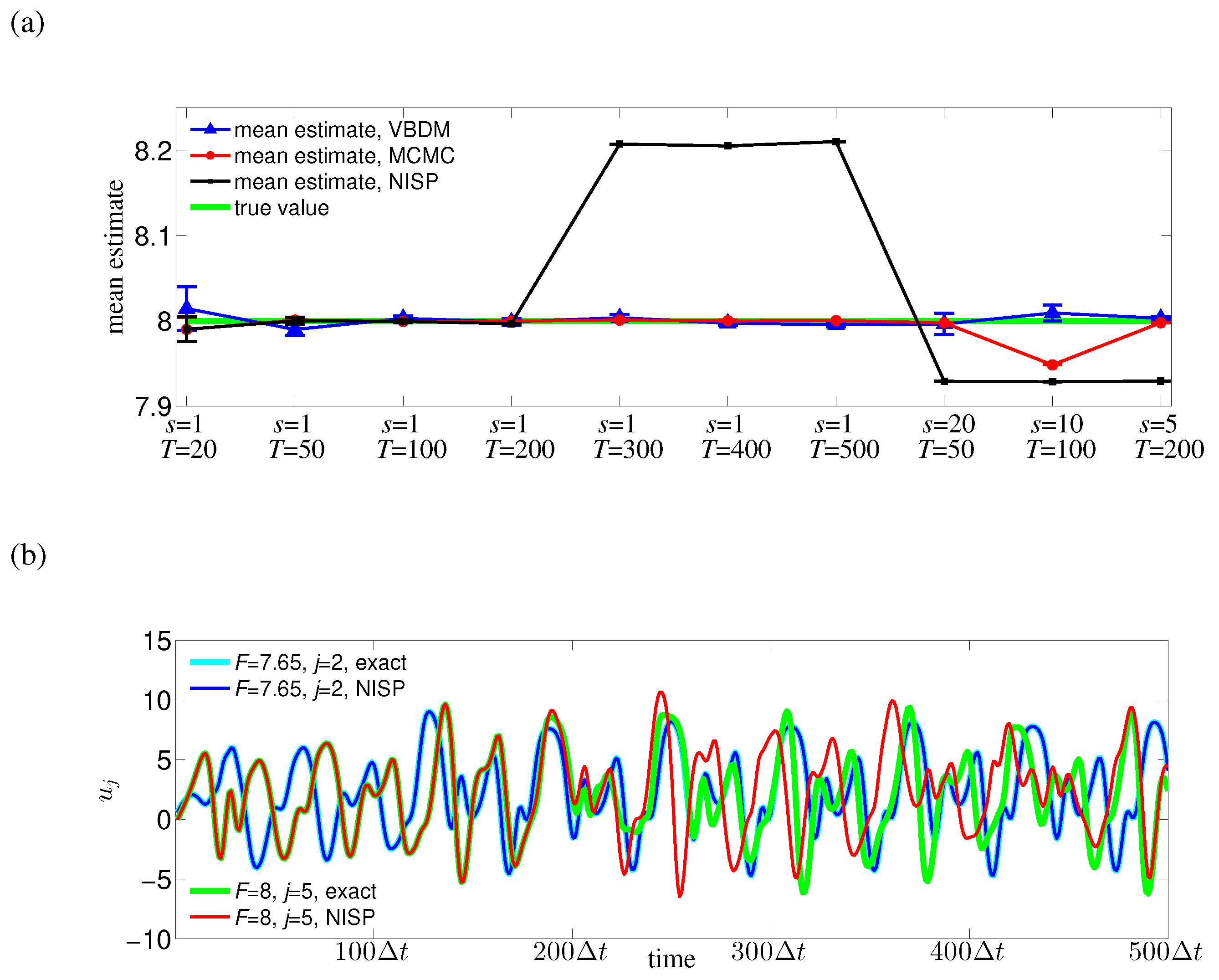

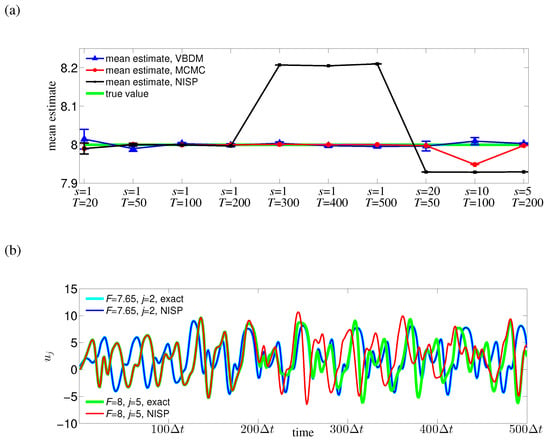

In real applications, where the observations are not simulated by the model, we expect the observation configuration to be predetermined. Therefore, it is important to have an algorithm that is robust across observation configurations. In our next numerical experiment, we checked this robustness by comparing the direct MCMC method, the NISP method, and the VBDM representation for different values of s and T (Figure 7a). Figure 7a shows that both direct MCMC and the VBDM representation provide reasonably accurate mean estimates for all cases of s and T. Again, however, the direct MCMC method requires many more model runs than the VBDM representation. Figure 7a also shows that, with eight uniform nodes, the NISP method provides a good mean estimate only for observation times up to . This is because the NISP approximation of the solution is only accurate for observation times up to (see the green and red curves in Figure 7b). This result suggests that our surrogate modeling approach using the VBDM representation can provide accurate and robust mean estimates under various observation configurations.

Figure 7.

(Color online) (a) Comparison of the mean estimates among the direct MCMC method, NISP method, and VBDM representation for different cases of s and T. Plotted also is the true parameter value (green curve). (b) Comparison of the exact solution by numerical integration and the approximated solution by the NISP method at the training parameter and at the parameter value , which is not among the training parameters.

5.4. Example IV: The 40-Dimensional Lorenz-96 Model

In this section, we consider estimating the parameter F in the Lorenz-96 model (56), but for a 40-dimensional system. We now observe the autocorrelation functions of several energetic Fourier modes of the system’s phase-space variables. In particular, let be the discrete Fourier mode of , where with . Let the observation function be defined as in (4) with a four-dimensional , whose components are the autocorrelation functions of the Fourier mode ,

of the energetic Fourier modes, . See [29] for a detailed discussion of the statistical equilibrium behavior of this model for various values of F. Such observations arise naturally, since some model parameters can be identified from non-equilibrium statistical information via linear response statistics [30,31]. In our numerics, we approximate the correlation function by averaging over a long trajectory,

with . Here, each of these Fourier modes is assumed to have zero empirical mean. We observe the autocorrelation function up to time lag (corresponding to 2.5 model time units).
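The time-averaged correlation in (59) can be sketched in Python as follows. The conventions are assumptions on our part: the Fourier modes use the 1/N-normalized forward DFT, the correlation is unnormalized (divide by R(0) for a correlation coefficient), the conjugate is placed on the earlier time, and the mode indices and time step are illustrative. The check uses a travelling wave whose mode k0 equals 0.5 exp(-i omega t), so the exact answer is 0.25 exp(-i omega tau).

```python
import numpy as np


def fourier_modes(X, modes):
    """Discrete Fourier modes u_k(t) = (1/N) sum_j x_j(t) exp(-2 pi i j k / N).

    X has shape (n_times, N); returns the requested modes, shape (n_times, len(modes)).
    """
    return np.fft.fft(X, axis=1)[:, modes] / X.shape[1]


def autocorrelation(u, max_lag):
    """Time-averaged correlation R(tau) = mean_i u(t_i + tau) conj(u(t_i)), tau = 0..max_lag.

    The empirical mean is removed first; normalize by R(0) if a correlation coefficient is wanted.
    """
    u = u - u.mean(axis=0)
    n = u.shape[0]
    return np.array([np.mean(u[lag:] * np.conj(u[: n - lag]), axis=0) for lag in range(max_lag + 1)])


# Check on a travelling wave x_j(t) = cos(2 pi k0 j / N - omega t), whose mode k0 is 0.5 exp(-i omega t).
N, k0, dt = 40, 8, 0.05
omega = 2.0 * np.pi / (50 * dt)  # exactly 50 samples per period
t = dt * np.arange(5_000)
j = np.arange(N)
X = np.cos(2.0 * np.pi * k0 * j[None, :] / N - omega * t[:, None])
u = fourier_modes(X, [k0])
R = autocorrelation(u, max_lag=20)
```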

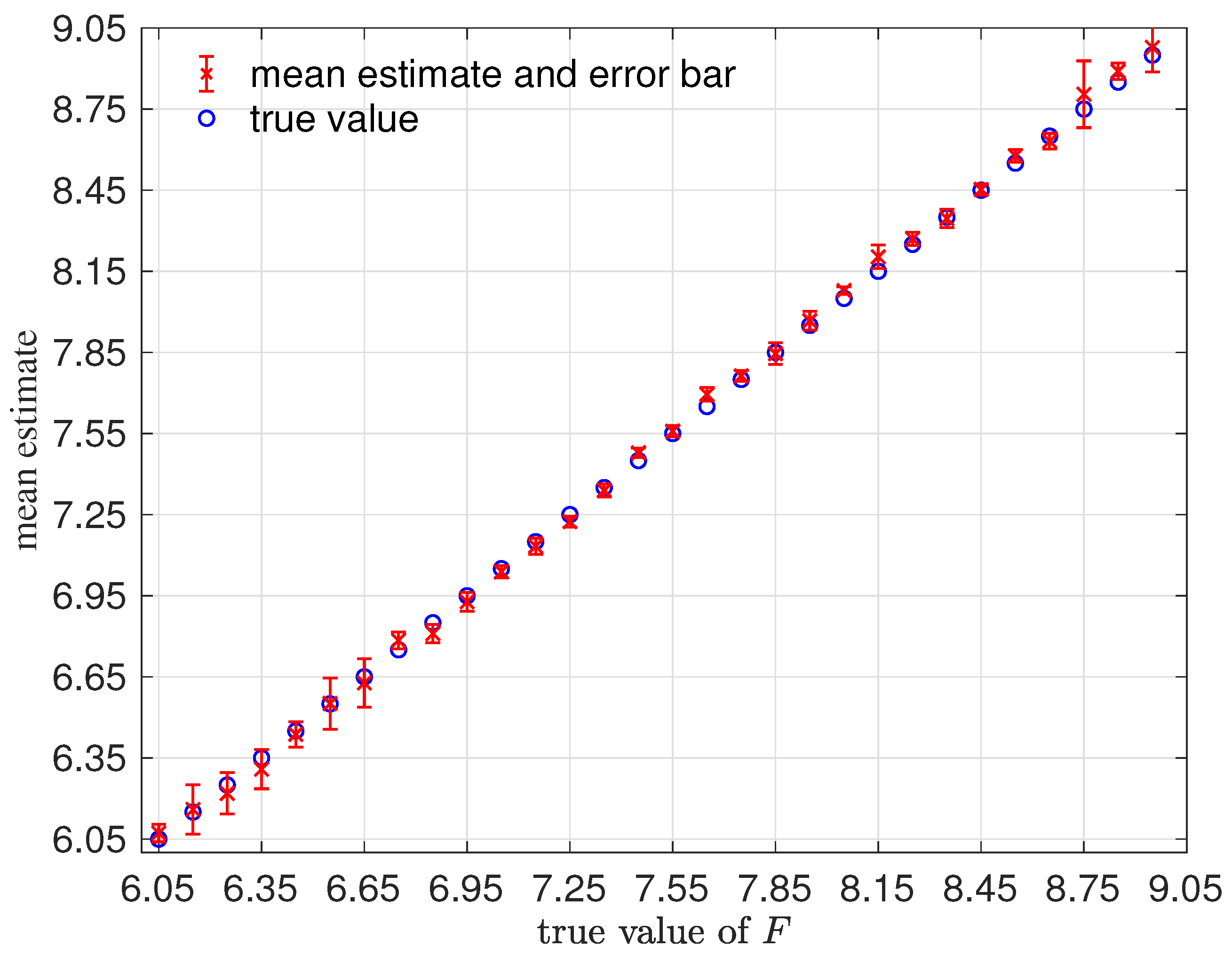

With this setup, the corresponding likelihood function for is not easily approximated (since it is not of the form (6)), and it is computationally demanding to evaluate, since each evaluation requires integrating the 40-dimensional Lorenz-96 model up to time index . This computational cost makes both direct MCMC and approximate Bayesian computation infeasible. We also note that a long trajectory is needed to evaluate (59), which makes this problem intractable for NISP even if a parametric likelihood function were available. This is because the trajectory approximated by the polynomial chaos expansion in NISP is only accurate for short times, as shown in the previous example. We construct the likelihood function from a wide range of training parameter values, . This parameter domain includes both the weakly chaotic regime () and the strongly chaotic regime (). See [32] for a complete list of chaotic measures in these regimes, including the largest Lyapunov exponent and the Kolmogorov–Sinai entropy.

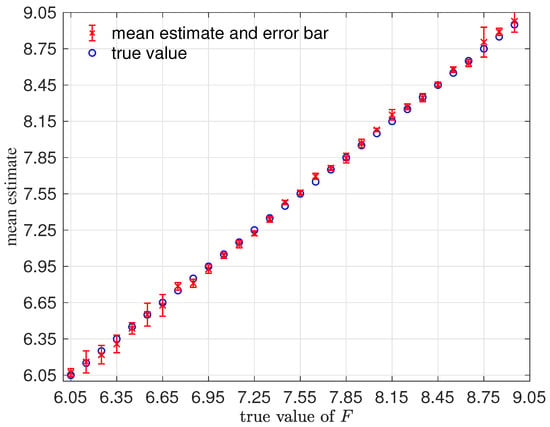

In this setup, we had a total of training data for training. We consider an RKHS representation with basis functions. We demonstrate the performance on 30 sets of observations , where in each case does not belong to the training parameter set, namely . In each simulation, the initial state of the MCMC chain is drawn at random, ; the prior is uniform; and for the proposal. Figure 8 shows the mean estimates and error bars (one standard deviation) computed from the MCMC chain of length 40,000 in each case. Notice the robustness of these estimates across a wide range of true parameter values, obtained with a likelihood function constructed from a single set of training parameter values on .

Figure 8.

(Color online) Mean error estimates and error bars for various true values of F that are not in the training parameters.
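The sampling loop described above can be sketched as a random-walk Metropolis scheme with a uniform prior. In this hedged Python sketch, `log_lik` stands for any (surrogate) log-likelihood, for instance one evaluated from the nonparametric representation; the Gaussian proposal, its standard deviation, the prior bounds, and the toy log-likelihood in the usage lines are hypothetical choices, not the settings used for Figure 8.

```python
import numpy as np


def random_walk_metropolis(log_lik, lower, upper, proposal_std, n_steps, seed=0):
    """Random-walk Metropolis for a scalar parameter with a Uniform(lower, upper) prior."""
    rng = np.random.default_rng(seed)
    theta = rng.uniform(lower, upper)  # random initial state of the chain
    current = log_lik(theta)
    chain = np.empty(n_steps)
    for i in range(n_steps):
        proposal = theta + proposal_std * rng.standard_normal()
        # The uniform prior is constant inside the domain and zero outside,
        # so proposals outside [lower, upper] are always rejected.
        if lower <= proposal <= upper:
            candidate = log_lik(proposal)
            if np.log(rng.uniform()) < candidate - current:
                theta, current = proposal, candidate
        chain[i] = theta
    return chain


# Hypothetical stand-in for the surrogate log-likelihood: Gaussian in theta.
def toy_log_lik(theta):
    return -0.5 * ((theta - 9.0) / 0.3) ** 2


chain = random_walk_metropolis(toy_log_lik, lower=5.0, upper=15.0,
                               proposal_std=0.5, n_steps=40_000)
mean, std = chain.mean(), chain.std()  # point estimate and one-standard-deviation error bar
```

Because a surrogate likelihood is cheap to evaluate, the 40,000 sequential evaluations in such a loop cost little compared with integrating the forward model at every step.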

6. Conclusions

We have developed a parameter estimation framework in which MCMC was employed with a nonparametric likelihood function. Our approach approximated the likelihood function using the kernel embedding of the conditional distribution, formulated on an RKWHS. The error analysis in Theorem 1 verified the validity of our RKWHS representation of the conditional density as long as induced by the basis in and Var is finite. Furthermore, the analysis suggests that if the weight q is chosen to be the sampling density of the data, then Var is always finite. This justifies the use of Variable Bandwidth Diffusion Maps (VBDM) for estimating the data-driven basis functions of the Hilbert space weighted by the sampling density on the data manifold.

We have demonstrated the proposed approach on four numerical examples. In the first example, where the dimension of the data manifold was exactly the dimension of the ambient space, , the RKHS representation with the VBDM basis yielded parameter estimates as accurate as those obtained with other analytic basis representations. However, in the examples where the dimension of the data manifold was strictly less than the dimension of the ambient space, , the VBDM representation provided more accurate estimates of the true parameter value than the other representations. We also found that the VBDM representation produced mean estimates that were robustly accurate (with accuracies comparable to direct MCMC) on various observation configurations where NISP was not accurate. This comparison was based on only eight model evaluations, which can be done in parallel for both VBDM and NISP, whereas direct MCMC involved 4000 sequential model evaluations. Finally, we demonstrated robust, accurate parameter estimation on an example where the analytic likelihood function was intractable and, even if it were available, computationally demanding. Most importantly, this result was based on training on a wide parameter domain that included different chaotic dynamical behaviors.

From our numerical experiments, we conclude that the proposed nonparametric representation was advantageous in either of the following configurations: (1) when the parametric likelihood function was not known, as in Example IV; (2) when the observation time stamp was long (as in Example II, or for large in Example III and Example IV). Ultimately, this method (as a surrogate model) offers a real advantage only when direct MCMC or ABC, which require sequential model evaluations, is computationally infeasible.

While the theoretical and numerical results are encouraging as a proof of concept for using the VBDM representation in other parameter estimation applications, there are still practical limitations that need to be overcome. As in other surrogate modeling approaches, one needs to know the feasible domain of the parameters. Even when the parameter domain is given and wide, it is not practically feasible to generate a training dataset by evaluating the model on the specified training grid points when the dimension of the parameter space is large (e.g., of order 10), even if a Smolyak sparse grid is used. One possible way to overcome these two issues simultaneously is to use “crude” methods, such as ensemble Kalman filtering or smoothing, to obtain the training parameters. We refer to such methods as “crude” since parameter estimation with ensemble Kalman filtering is sensitive to the initial conditions, especially when the persistent model is used as the dynamical model for the parameters [23]. However, such crude methods at least provide a set of parameters that reflect the observational data, instead of training parameters specified uniformly or at random, which can be unphysical. Another issue with the VBDM representation is its expensive computational cost when the amount of data is large. When the dimension of the observations is low (as in the examples in this paper), the data reduction technique described in Appendix A is sufficient. For higher-dimensional problems, a more sophisticated data reduction is needed. Alternatively, one can explore representations using other orthonormal data-driven bases, such as QR-factorized basis functions, as a less expensive alternative to the eigenbasis [27].

Author Contributions

Both authors contributed equally. Conceptualization, J.H.; methodology, J.H. and S.W.J.; software, S.W.J.; validation, S.W.J. and J.H.; formal analysis, S.W.J. and J.H.; investigation, S.W.J. and J.H.; resources, J.H.; data curation, S.W.J.; writing, original draft preparation, S.W.J. and J.H.; writing, review and editing, S.W.J. and J.H.; visualization, S.W.J.; supervision, J.H.; project administration, J.H.; funding acquisition, J.H.

Funding

This research was funded by the Office of Naval Research Grant Number N00014-16-1-2888. J.H. would also like to acknowledge support from the NSF Grant DMS-1619661.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; nor in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| VBDM | Variable Bandwidth Diffusion Maps |
| RKHS | Reproducing Kernel Hilbert Space |
| RKWHS | Reproducing Kernel Weighted Hilbert Space |
| MCMC | Markov Chain Monte Carlo |
| ABC | Approximate Bayesian Computation |
| NISP | Non-Intrusive Spectral Projection |

Appendix A. Data Reduction

When is very large, the VBDM algorithm becomes numerically expensive since it involves solving an eigenvalue problem for a matrix of size . Notice that the number of training parameters, M, grows exponentially with the dimension of the parameter space, m, if well-sampled uniformly distributed training parameters are used. To overcome this large-training-data problem, we employ an empirical data reduction method that reduces the original training data to a small number of training data points while preserving the sampling density in (37). Subsequently, we apply the VBDM algorithm to these reduced training data points. It is worth mentioning that this data reduction method is numerically practical for low-dimensional datasets, although we introduce it below for an arbitrary n-dimensional dataset.

The basic idea of our method is to first partition the training dataset into B boxes and then take the average of the data points in each box as a reduced training data point. First, we cluster the training data , based on the ascending order of the first coordinate of the training data, , into number of groups such that each group has the same number of data points. After the first clustering, we obtain groups, each denoted by for . Here, the superscript 1 denotes the first clustering and the subscript denotes the group. Second, for each group , we cluster the training data inside , based on the ascending order of the second coordinate of the training data, , into number of groups such that each group has the same number of data points. After the second clustering, we obtain a total of groups, each denoted by for and . We repeat this clustering n times, where n is the dimension of the observation ambient space. After n clusterings, we obtain groups, each denoted by with for all . Each group is a box (see Figure A1 for an example). After averaging the data points in each box , we obtain B reduced training data points. In the remainder of this paper, we denote these B reduced training data points by and refer to them as the box-averaged data points. Intuitively, this algorithm partitions the domain into hyperrectangles such that . Note that the idea of our data reduction method is analogous to that of multivariate k-nearest neighbor density estimates [33,34]; error estimates can be found in Refs. [33,34].
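The recursive clustering above can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions rather than the authors' implementation: the hypothetical argument `splits` holds the number of groups used at each of the n clustering steps (so B is their product), ties in a coordinate are broken by a stable sort, and the number of data points is assumed to be divisible by B so that every box holds the same number of points.

```python
import numpy as np


def box_average(data, splits):
    """Reduce an (N, n) dataset to prod(splits) box-averaged points.

    At step j, every current group is sorted by coordinate j and split into
    splits[j] groups of equal size; each final group is one box.
    """
    data = np.asarray(data, dtype=float)
    if data.ndim != 2 or data.shape[1] != len(splits):
        raise ValueError("need one split count per coordinate")
    if data.shape[0] % int(np.prod(splits)) != 0:
        raise ValueError("number of points must be divisible by the number of boxes")
    groups = [data]
    for j, n_groups in enumerate(splits):
        refined = []
        for group in groups:
            order = np.argsort(group[:, j], kind="stable")  # ascending j-th coordinate
            refined.extend(np.split(group[order], n_groups))  # equal-size groups
        groups = refined
    return np.array([box.mean(axis=0) for box in groups])


rng = np.random.default_rng(0)
uniform_data = rng.uniform(size=(64, 2))  # 64 points; the unit square is an assumed domain
reduced = box_average(uniform_data, splits=(4, 4))  # 16 boxes with 4 points each
```

Because all boxes contain the same number of points, the mean of the box-averaged points coincides with the mean of the original data, which is one simple sanity check on an implementation.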

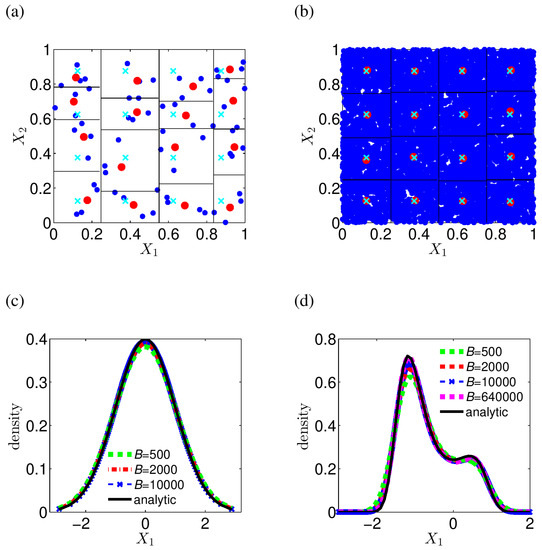

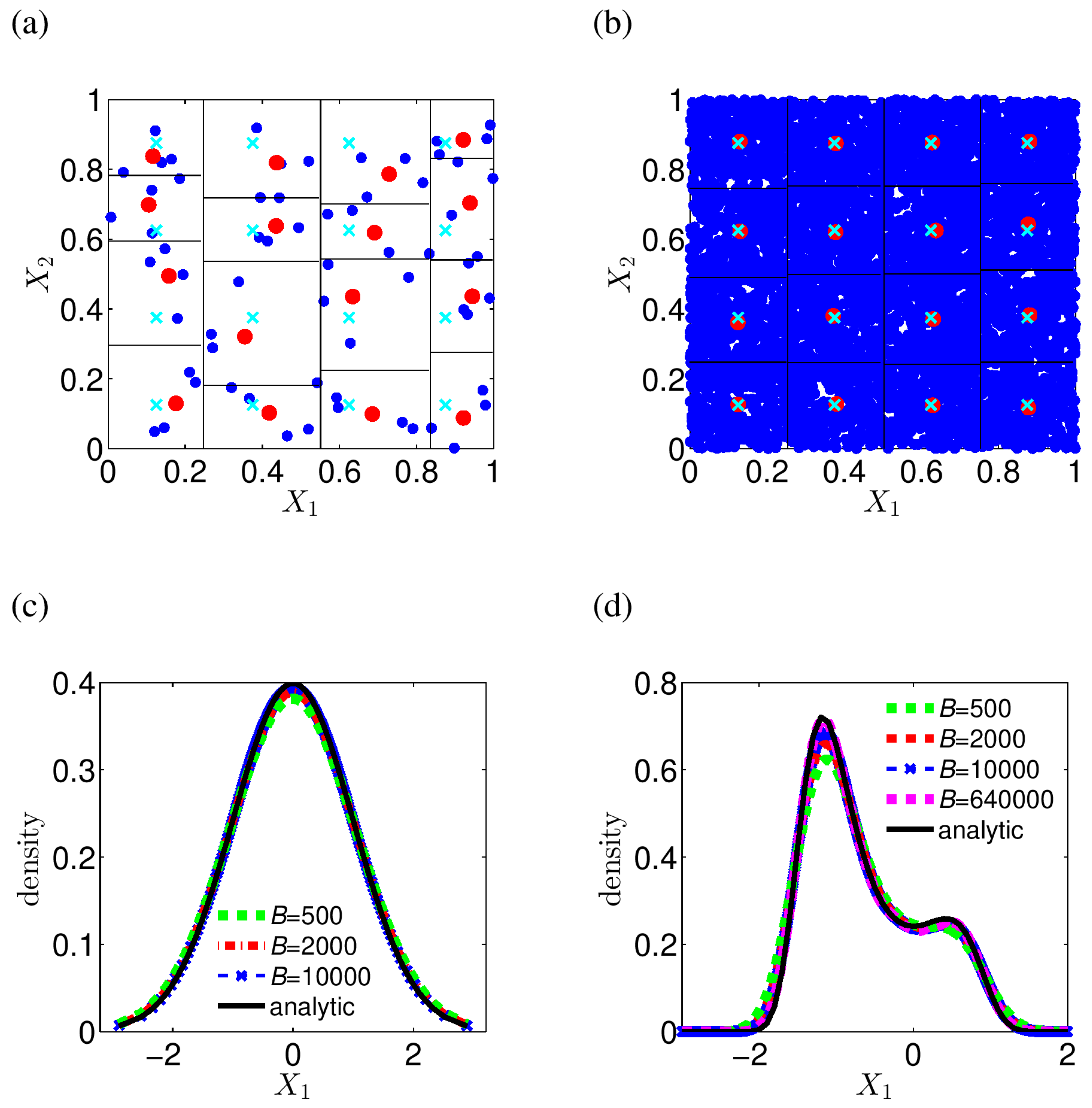

To examine whether the distribution of these box-averaged data points is close to the sampling density of the original dataset, we apply our reduction method to several numerical examples. Figure A1a shows the reduction result for a small number () of uniformly distributed data points in . Here, and , so that there are boxes in total and each box contains 4 uniformly distributed data points (blue circles). The box-averaged data points (red circles) are far from the well-sampled uniform data points (cyan crosses). However, when the number of uniformly distributed data points (blue circles) is large (), the box-averaged data points (red circles) are very close to the well-sampled uniform data points (cyan crosses), as shown in Figure A1b. This suggests that the box-averaged data points are nearly uniformly distributed when the original uniform dataset is large. Figures A1c and A1d compare kernel density estimates computed from the box-averaged data for different B, for the standard normal distribution and for the distribution proportional to , respectively. The reduced box-averaged data points nearly preserve the distribution of the original large dataset, .

Figure A1.

(Color online) Data reduction for (a) a small number () of uniformly distributed data points, and (b) a large number () of uniformly distributed data points. The 64 blue circles correspond to uniformly distributed data, the 16 cyan crosses correspond to well-sampled uniformly distributed data, and the 16 red circles correspond to box-averaged data. Boxes are partitioned by horizontal and vertical black lines: the vertical lines correspond to the first clustering and the horizontal lines to the second clustering. Panels (c) and (d) compare kernel density estimates on the box-averaged data for different numbers B for (c) the standard normal distribution and (d) the distribution proportional to , respectively. For comparison, the analytic probability density of each distribution is also plotted. The total number of points is . The reduced box-averaged data points nearly preserve the distribution of the original dataset.
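The comparison in Figure A1c can be mimicked in one dimension, where the recursive clustering reduces to sorting the data and averaging consecutive blocks of equal size. The Python sketch below assumes a Gaussian kernel with Silverman's rule-of-thumb bandwidth; the kernel density estimator and the values of B actually used for the figure are not specified here, so these choices are illustrative only.

```python
import numpy as np


def box_average_1d(x, B):
    """Sort 1D data and average B consecutive blocks of equal size."""
    x = np.sort(np.asarray(x, dtype=float))
    if x.size % B != 0:
        raise ValueError("len(x) must be divisible by B")
    return x.reshape(B, -1).mean(axis=1)


def gaussian_kde(points, grid):
    """Gaussian kernel density estimate with Silverman's rule-of-thumb bandwidth."""
    h = 1.06 * points.std(ddof=1) * points.size ** (-1 / 5)
    z = (grid[:, None] - points[None, :]) / h
    return np.exp(-0.5 * z**2).sum(axis=1) / (points.size * h * np.sqrt(2 * np.pi))


rng = np.random.default_rng(1)
samples = rng.standard_normal(10_000)
grid = np.linspace(-3.0, 3.0, 121)
exact = np.exp(-0.5 * grid**2) / np.sqrt(2 * np.pi)  # analytic standard normal density

errors = {}  # maximum absolute deviation from the analytic density for each B
for B in (25, 100, 400):
    reduced = box_average_1d(samples, B)
    errors[B] = float(np.max(np.abs(gaussian_kde(reduced, grid) - exact)))
```

Most of the remaining discrepancy in such a check comes from the smoothing bias of the kernel estimate, which shrinks as B (and hence the number of points fed to the estimator) grows.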

When is very large, the VBDM algorithm for constructing the data-driven basis functions [Procedure (1-B)] can be outlined as follows. We first use our data reduction method to obtain B box-averaged data points with sampling density in (37). The sampling density is estimated at each box-averaged data point from all the box-averaged data points by kernel density estimation. Applying the VBDM algorithm, we obtain orthonormal eigenvectors , which are discrete estimates of the eigenfunctions . The component of the eigenvector is a discrete estimate of the eigenfunction evaluated at the box-averaged data point . Because of the dramatic reduction of the training data, computing these eigenvectors is much cheaper than computing them from the original training dataset . We can then obtain a discrete representation (10) of the conditional density at the box-averaged data points , .
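For a concrete picture of the eigenvector computation on the reduced points, the Python sketch below uses a simplified stand-in for VBDM: fixed-bandwidth diffusion maps with the alpha = 1/2 density normalization, whose limiting operator is self-adjoint with respect to the sampling density, so that its eigenvectors approximate functions orthonormal in the Hilbert space weighted by that density. It is not the variable-bandwidth algorithm of the paper; the bandwidth `epsilon`, the kernel scaling, the number of basis functions, and the circle test data are hypothetical choices. The unnormalized kernel sums `q` play the role of the kernel density estimate at the points.

```python
import numpy as np


def diffusion_maps_basis(points, epsilon, n_basis):
    """Fixed-bandwidth diffusion maps with alpha = 1/2 (a simplified stand-in for VBDM).

    Returns the n_basis largest eigenvalues of the Markov matrix, the matching right
    eigenvectors scaled to unit empirical second moment, and the row sums d.
    """
    x = np.asarray(points, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    sq_dist = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    K = np.exp(-sq_dist / (4.0 * epsilon))
    q = K.sum(axis=1)  # unnormalized kernel density estimate at each point
    K_alpha = K / np.sqrt(np.outer(q, q))  # alpha = 1/2 density normalization
    d = K_alpha.sum(axis=1)
    # Symmetric conjugate D^{-1/2} K_alpha D^{-1/2} of the Markov matrix D^{-1} K_alpha.
    S = K_alpha / np.sqrt(np.outer(d, d))
    evals, evecs = np.linalg.eigh(S)
    top = np.argsort(evals)[::-1][:n_basis]
    evals, evecs = evals[top], evecs[:, top]
    phi = evecs / np.sqrt(d)[:, None]  # right eigenvectors of D^{-1} K_alpha
    phi /= np.sqrt(np.mean(phi**2, axis=0))
    return evals, phi, d


rng = np.random.default_rng(2)
angles = rng.uniform(0.0, 2.0 * np.pi, 300)
circle = np.column_stack([np.cos(angles), np.sin(angles)])  # 1D manifold in a 2D ambient space
evals, phi, d = diffusion_maps_basis(circle, epsilon=0.01, n_basis=5)
```

The leading eigenvector is constant (eigenvalue 1), and the eigenvectors are exactly orthogonal in the inner product weighted by the row sums `d`; with the alpha = 1/2 normalization these row sums are nearly constant, so plain sample averages approximate the weighted inner product.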

Appendix B. Additional Results on Example II

In this appendix, we discuss the intrinsic Fourier representation constructed for the numerical comparisons in Example II and discuss the numerical results in more detail.

We first discuss the construction of the intrinsic Fourier representation of the true conditional density, , defined with respect to the volume form inherited by from the ambient space, for the system on the torus (54) in Example II. Using the embedding (55) of in , we obtain the following equality,

where is the volume form and denotes the true conditional density as a function of the intrinsic coordinates, . Assuming that , and using the relation in (A1), we can construct the intrinsic Fourier representation as follows,

where is an RKWHS representation (10) of the conditional density with a set of orthonormal Fourier basis functions . Here, are formed by the tensor product of two sets of orthonormal Fourier basis functions, , , and , , for . Note that the intrinsic Fourier representation requires knowing the embedding (55) and having training data of in intrinsic coordinates, both of which are available for this example. In contrast, the Hermite, Cosine, and VBDM representations require only the observation data for training.
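As a hedged illustration of how a representation of this kind can be assembled, the Python sketch below assumes that (10) expands the density ratio p/q in an orthonormal basis of the Hilbert space weighted by q, so that each coefficient is the expectation of the corresponding basis function and can be estimated by a sample average. On the torus, with q taken as the uniform density 1/(4π²) on [0, 2π)², the tensor-product basis is built from 1, √2 cos(kθ), √2 sin(kθ), which are orthonormal with respect to the uniform probability on [0, 2π). The truncation `K`, the function names, and the wrapped-Gaussian test data are hypothetical, and the change of volume form in (A1) is not included.

```python
import numpy as np


def fourier_basis(theta, K):
    """1, sqrt(2) cos(k theta), sqrt(2) sin(k theta) for k = 1..K (orthonormal for the uniform law)."""
    theta = np.asarray(theta, dtype=float)
    columns = [np.ones_like(theta)]
    for k in range(1, K + 1):
        columns.append(np.sqrt(2.0) * np.cos(k * theta))
        columns.append(np.sqrt(2.0) * np.sin(k * theta))
    return np.stack(columns, axis=-1)  # shape (..., 2K + 1)


def torus_density(samples, K):
    """Return p(t1, t2) ~ q * sum_ab c_ab psi_a(t1) psi_b(t2) with q = 1 / (4 pi^2)."""
    basis1 = fourier_basis(samples[:, 0], K)  # (N, 2K + 1)
    basis2 = fourier_basis(samples[:, 1], K)
    coeffs = basis1.T @ basis2 / len(samples)  # c_ab = sample mean of psi_a(t1) psi_b(t2)
    q = 1.0 / (4.0 * np.pi**2)

    def density(t1, t2):
        return q * np.einsum("...a,ab,...b->...", fourier_basis(t1, K), coeffs,
                             fourier_basis(t2, K))

    return density


rng = np.random.default_rng(3)
# Hypothetical test data: a wrapped Gaussian centered at (pi, pi).
samples = np.mod(np.pi + 0.5 * rng.standard_normal((5000, 2)), 2.0 * np.pi)
p_hat = torus_density(samples, K=4)
n = 64
t = 2.0 * np.pi * np.arange(n) / n
T1, T2 = np.meshgrid(t, t, indexing="ij")
total_mass = p_hat(T1, T2).sum() * (2.0 * np.pi / n) ** 2  # equals c_00 = 1 up to rounding
```

Since the constant basis function has coefficient exactly one and every other basis function integrates to zero against q, the estimate always carries unit total mass, although it is not guaranteed to be nonnegative.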

The convergence of to the true density can be explained as follows. For the system (54) in the intrinsic coordinates , where for all parameters D, the statistics Var are bounded for all D and all , by the compactness of and the uniform boundedness of for all k. According to Theorem 1, this yields the convergence of the representation . Then, by the smoothness of on the torus, the intrinsic Fourier representation in (A2) also converges.
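The role of the bounded variance can be illustrated by an elementary calculation, offered as a hedged sketch rather than a restatement of Theorem 1. Assume, for illustration, that the representation expands the ratio p(·|θ)/q in an orthonormal basis {φ_k} of the Hilbert space weighted by q, with inner product ⟨f, g⟩_q = ∫ f g q dV; that θ denotes a generic parameter (D in this example); and that the training samples x_1, …, x_N are independent draws from p(·|θ). The last assumption is a simplification, since samples taken along a trajectory are correlated.

```latex
\begin{aligned}
\frac{p(x\mid\theta)}{q(x)} &= \sum_{k} c_k(\theta)\,\varphi_k(x), \\
c_k(\theta) &= \Big\langle \tfrac{p(\cdot\mid\theta)}{q}, \varphi_k \Big\rangle_{q}
  = \int_{\mathcal{M}} \varphi_k(x)\, p(x\mid\theta)\, dV(x)
  = \mathbb{E}_{p(\cdot\mid\theta)}[\varphi_k], \\
\hat{c}_k(\theta) &= \frac{1}{N}\sum_{i=1}^{N} \varphi_k(x_i), \qquad
\mathbb{E}\big[(\hat{c}_k(\theta) - c_k(\theta))^2\big]
  = \frac{\operatorname{Var}_{p(\cdot\mid\theta)}[\varphi_k]}{N}.
\end{aligned}
```

Under these assumptions, a uniform bound on the variances over θ and k gives coefficient errors of order N^{-1/2} uniformly in θ and k; the bound actually stated in Theorem 1 may involve additional terms.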

Next, we give an intuitive explanation of why, in the regime , the VBDM representation can provide a good approximation whereas the Hermite and Cosine representations cannot. Essentially, the VBDM representation uses basis functions of the weighted Hilbert space of functions defined with respect to a volume form that is conformally equivalent to the volume form V inherited by the data manifold from the ambient space, . That is, the weighted Hilbert space, , means,