Combining the Burrows-Wheeler Transform and RCM-LDGM Codes for the Transmission of Sources with Memory at High Spectral Efficiencies

Abstract

1. Introduction

2. Preliminaries

2.1. Markov Sources

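- $A = [a_{ij}]$ is the state transition probability matrix of dimension $N \times N$, with $a_{ij}$ the probability of transition from state $s_i$ to state $s_j$, i.e., $a_{ij} = P(S_{k+1} = s_j \mid S_k = s_i)$ for all $k$.

- $B = [b_j(v)]$ is the observation symbol probability matrix, with $b_j(v)$ the probability of getting the binary symbol $v$ in state $s_j$, i.e., $b_j(v) = P(U_k = v \mid S_k = s_j)$, $v \in \{0, 1\}$, $1 \le j \le N$.

- $\pi = [\pi_i]$ is the initial state distribution vector, with $\pi_i$ the probability for the initial state to be $s_i$, i.e., $\pi_i = P(S_1 = s_i)$, $1 \le i \le N$.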
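To make the three parameters above concrete, here is a minimal sketch of how a binary hidden Markov source could be simulated from them. The names `A`, `B`, `pi` and the two-state numbers are hypothetical illustration values (the paper's actual matrices are not reproduced here); `pi` is chosen as the stationary distribution of `A`.

```python
import random
from typing import List, Sequence

def sample_hmm(A: Sequence[Sequence[float]],
               B: Sequence[Sequence[float]],
               pi: Sequence[float],
               n: int,
               rng: random.Random) -> List[int]:
    """Draw n binary symbols from a hidden Markov source.

    A[i][j]: probability of moving from state i to state j.
    B[j][v]: probability of emitting bit v (0 or 1) while in state j.
    pi[i]:   probability that the initial state is i.
    """
    states = range(len(pi))
    s = rng.choices(states, weights=pi)[0]                # initial state drawn from pi
    out = []
    for _ in range(n):
        out.append(rng.choices((0, 1), weights=B[s])[0])  # emit one bit in state s
        s = rng.choices(states, weights=A[s])[0]          # then move to the next state
    return out

# Hypothetical two-state source; pi is the stationary distribution of A.
A = [[0.95, 0.05], [0.10, 0.90]]
B = [[0.9, 0.1], [0.2, 0.8]]
pi = [2 / 3, 1 / 3]
print(sample_hmm(A, B, pi, 20, random.Random(1)))
```

Each symbol is emitted from the current state before the transition, so the hidden state sequence is a Markov chain while the observed bits generally are not.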

2.2. Burrows-Wheeler Transform (BWT)

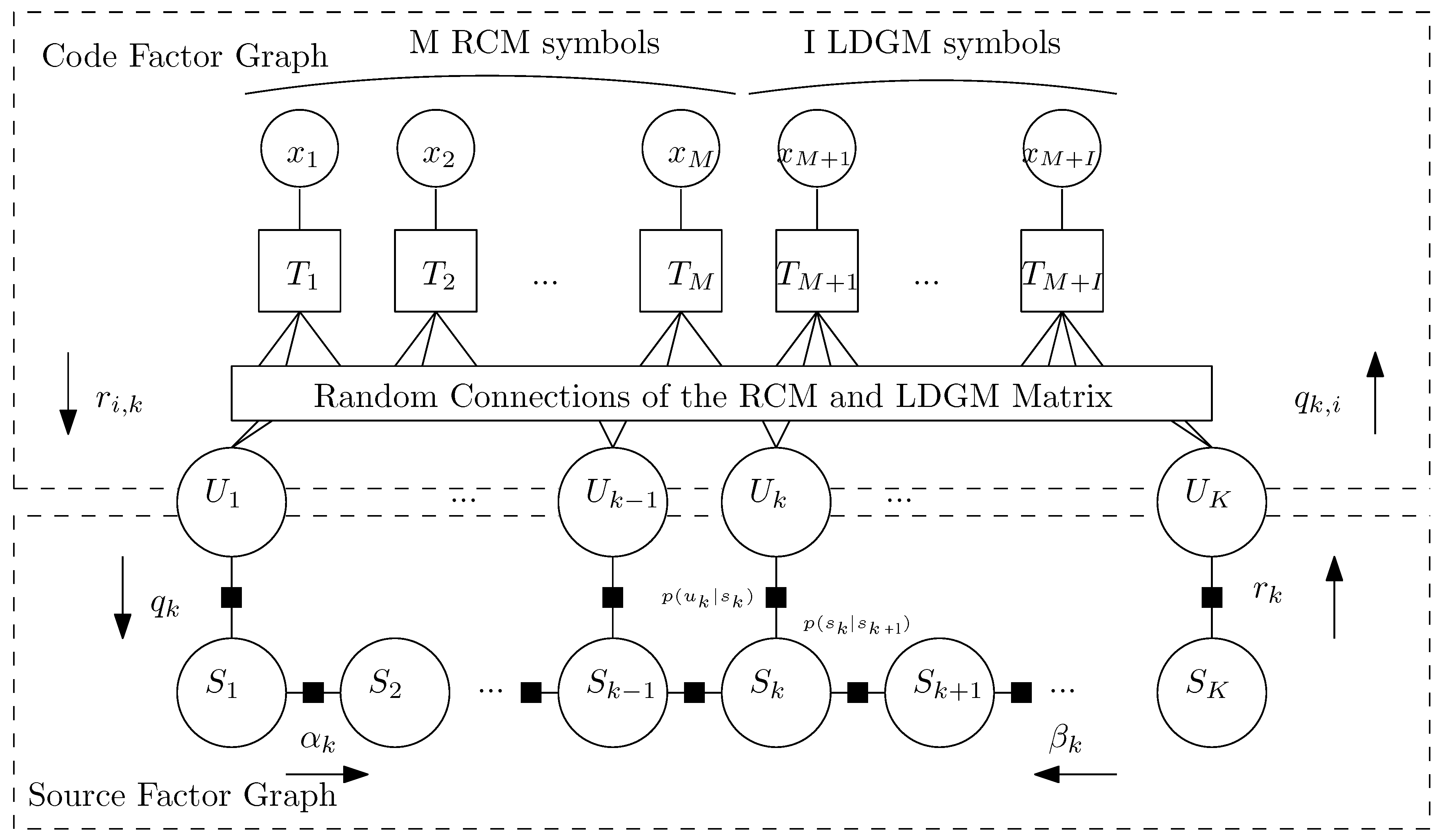
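A naive sketch of the forward and inverse BWT follows, written for clarity rather than speed (it sorts all cyclic rotations explicitly; practical implementations use suffix arrays). Returning the row index of the original sequence, instead of appending an end-of-block sentinel, is one common convention and an assumption here; it is convenient for binary sequences. After sorting, symbols that share the same following context end up adjacent, which is what makes the BWT output easier to model than the raw source output.

```python
from typing import Tuple

def bwt(s: str) -> Tuple[str, int]:
    """Naive BWT: sort all cyclic rotations of s and keep the last column.

    Returns (last_column, primary_index), where primary_index is the row of
    the sorted rotation matrix that holds s itself.
    """
    n = len(s)
    if n == 0:
        return "", 0
    rows = sorted(range(n), key=lambda i: s[i:] + s[:i])  # rotation start positions
    last = "".join(s[i - 1] for i in rows)                # s[-1] when i == 0
    return last, rows.index(0)

def inverse_bwt(last: str, primary_index: int) -> str:
    """Invert the BWT by following the first-column/last-column correspondence."""
    # order[j] is the position in `last` of the symbol that sits in row j of the
    # (sorted) first column; the stable sort keeps equal symbols in the same order.
    order = sorted(range(len(last)), key=lambda i: last[i])
    out = []
    r = primary_index
    for _ in range(len(last)):
        r = order[r]
        out.append(last[r])
    return "".join(out)

print(bwt("banana$"))                # last column and primary index
print(inverse_bwt(*bwt("0010110111")))
```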

2.3. Parallel RCM-LDGM Codes

2.3.1. Rate-Compatible Modulation (RCM) Codes
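RCM codes (introduced in the compressive coded modulation work of Cui et al. in the reference list) produce multilevel symbols as weighted sums of input bits, with the weights taken from a small multiset. The sketch below shows one such construction under stated assumptions: every output symbol uses each weight of the multiset once, with a random sign, on distinct randomly chosen input positions, and the bits enter the sum as 0/1. Reading weight sets such as {1, 1, 1, 2, 2} in the parameter tables as these multisets is our interpretation; `make_rcm_rows` and `rcm_encode` are hypothetical names.

```python
import random
from typing import List, Sequence, Tuple

Row = List[Tuple[int, int]]  # (input bit position, signed weight)

def make_rcm_rows(k: int, weights: Sequence[int], m: int,
                  rng: random.Random) -> List[Row]:
    """Random sparse RCM-style rows: each uses every weight of the multiset once,
    with a random sign, on len(weights) distinct input positions."""
    rows = []
    for _ in range(m):
        positions = rng.sample(range(k), len(weights))
        rows.append([(p, rng.choice((-1, 1)) * w) for p, w in zip(positions, weights)])
    return rows

def rcm_encode(bits: Sequence[int], rows: List[Row]) -> List[int]:
    """Each output symbol is the signed, weighted sum of the selected bits."""
    return [sum(w * bits[p] for p, w in row) for row in rows]

rng = random.Random(0)
rows = make_rcm_rows(k=12, weights=[1, 1, 1, 2, 2], m=4, rng=rng)
print(rcm_encode([1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1], rows))
```

Rate compatibility comes from the fact that more rows can be generated on demand and appended, so the number of output symbols is not fixed in advance.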

2.3.2. Low-Density Generator Matrix (LDGM) Codes
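An LDGM code generates its coded bits through a sparse generator matrix, so each coded bit is the mod-2 sum of only a few input bits and encoding cost grows linearly with the block length. The sketch below assumes a regular construction (every parity bit connects to the same number of randomly chosen input bits) and returns only the parity bits; both choices, and the names `make_ldgm_rows` and `ldgm_encode`, are illustrative assumptions.

```python
import random
from typing import List, Sequence

def make_ldgm_rows(k: int, degree: int, n_parity: int,
                   rng: random.Random) -> List[List[int]]:
    """Sparse generator: each parity bit connects to `degree` distinct input bits."""
    return [rng.sample(range(k), degree) for _ in range(n_parity)]

def ldgm_encode(bits: Sequence[int], rows: List[List[int]]) -> List[int]:
    """Each parity bit is the XOR (mod-2 sum) of the input bits in its row."""
    out = []
    for row in rows:
        parity = 0
        for p in row:
            parity ^= bits[p]
        out.append(parity)
    return out

rows = make_ldgm_rows(k=16, degree=5, n_parity=6, rng=random.Random(3))
print(ldgm_encode([1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1], rows))
```

Because encoding is linear over GF(2), the code of the XOR of two inputs equals the XOR of their codes.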

2.3.3. Parallel RCM-LDGM Code

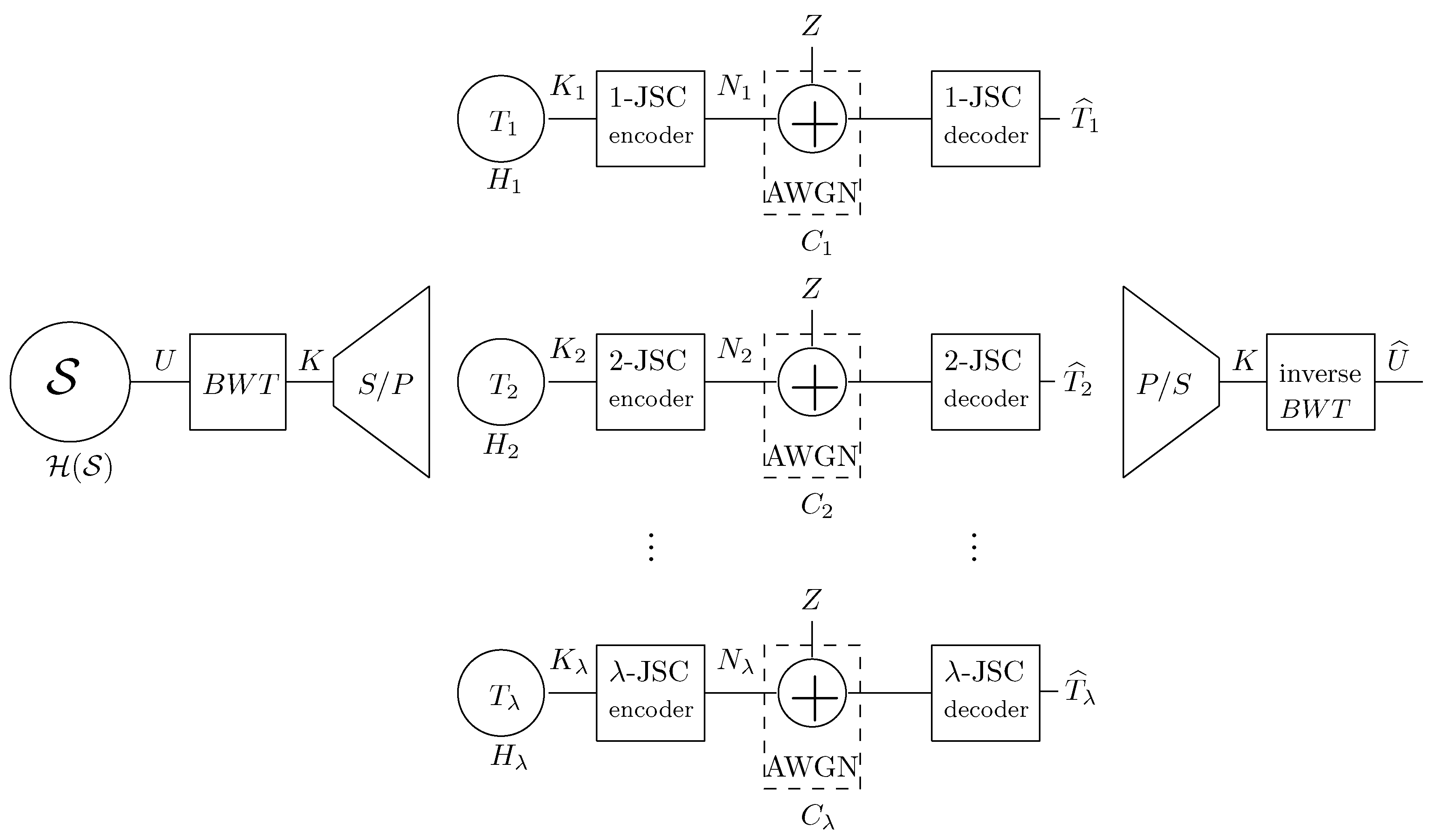
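In a parallel RCM-LDGM code both encoders see the same input block and their outputs are transmitted together. The sketch below assembles one transmitted frame under explicit assumptions: the M real RCM symbols come first, the I LDGM bits are mapped to BPSK levels of amplitude `amplitude` and appended, and consecutive real values are paired into the in-phase and quadrature parts of complex (QAM) symbols, zero-padding an odd count. Power normalization is omitted, and `parallel_frame` is a hypothetical name. With M + I = 10000, as in every row of the parameter tables, this pairing would give 5000 complex channel uses.

```python
from typing import List, Sequence

def parallel_frame(rcm_symbols: Sequence[float], ldgm_bits: Sequence[int],
                   amplitude: float = 1.0) -> List[complex]:
    """Concatenate RCM symbols and BPSK-mapped LDGM bits, then pair them into complex symbols."""
    real = [float(x) for x in rcm_symbols]
    real += [amplitude * (1 - 2 * b) for b in ldgm_bits]   # BPSK: 0 -> +A, 1 -> -A
    if len(real) % 2:
        real.append(0.0)                                   # pad to an even length
    return [complex(real[t], real[t + 1]) for t in range(0, len(real), 2)]

print(parallel_frame([3, -1, 2], [0, 1, 1], amplitude=2.0))
```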

3. Proposed BWT-JSC Scheme

4. Results

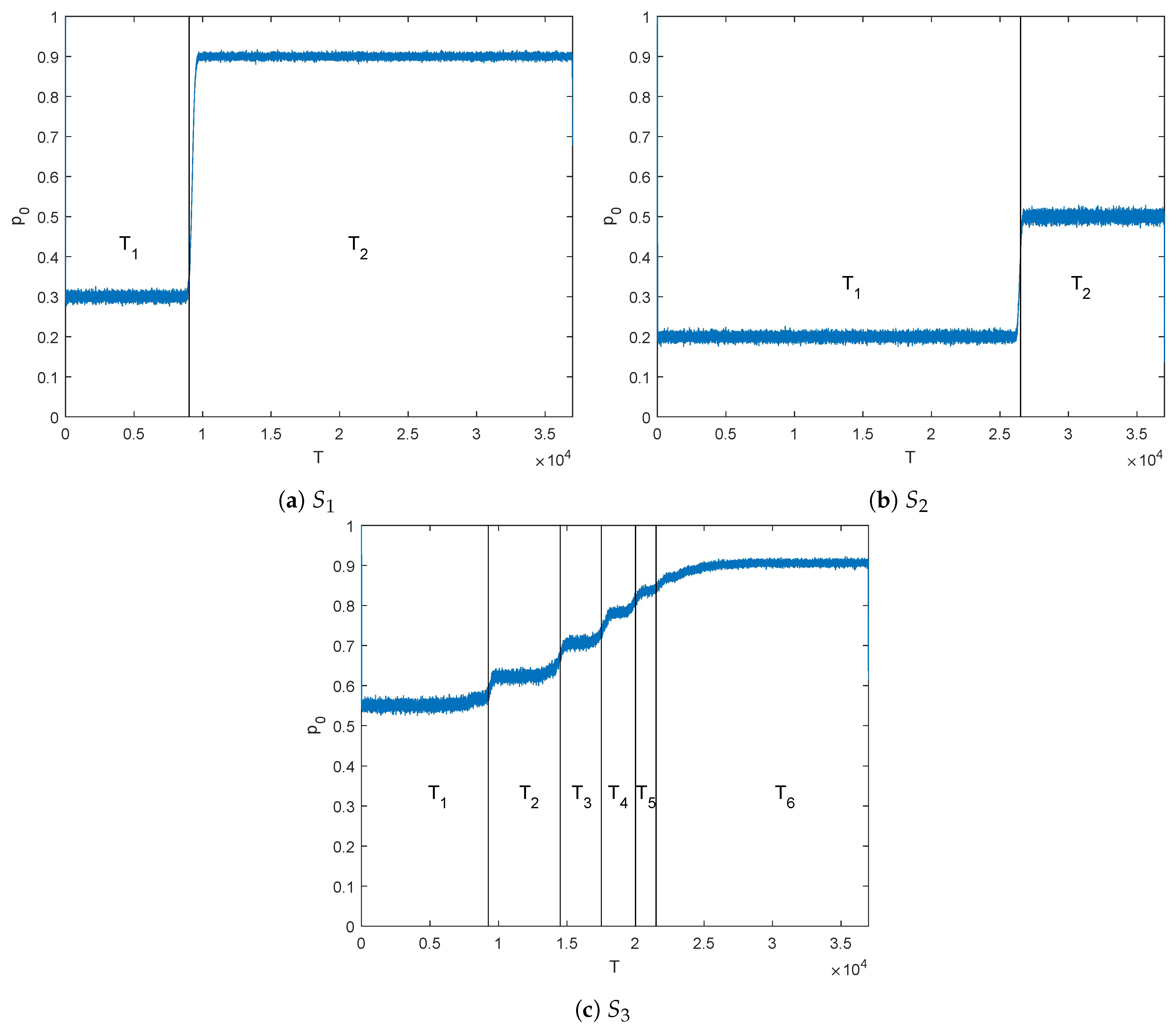

4.1. Simulated Sources and Their Output Probability Profile

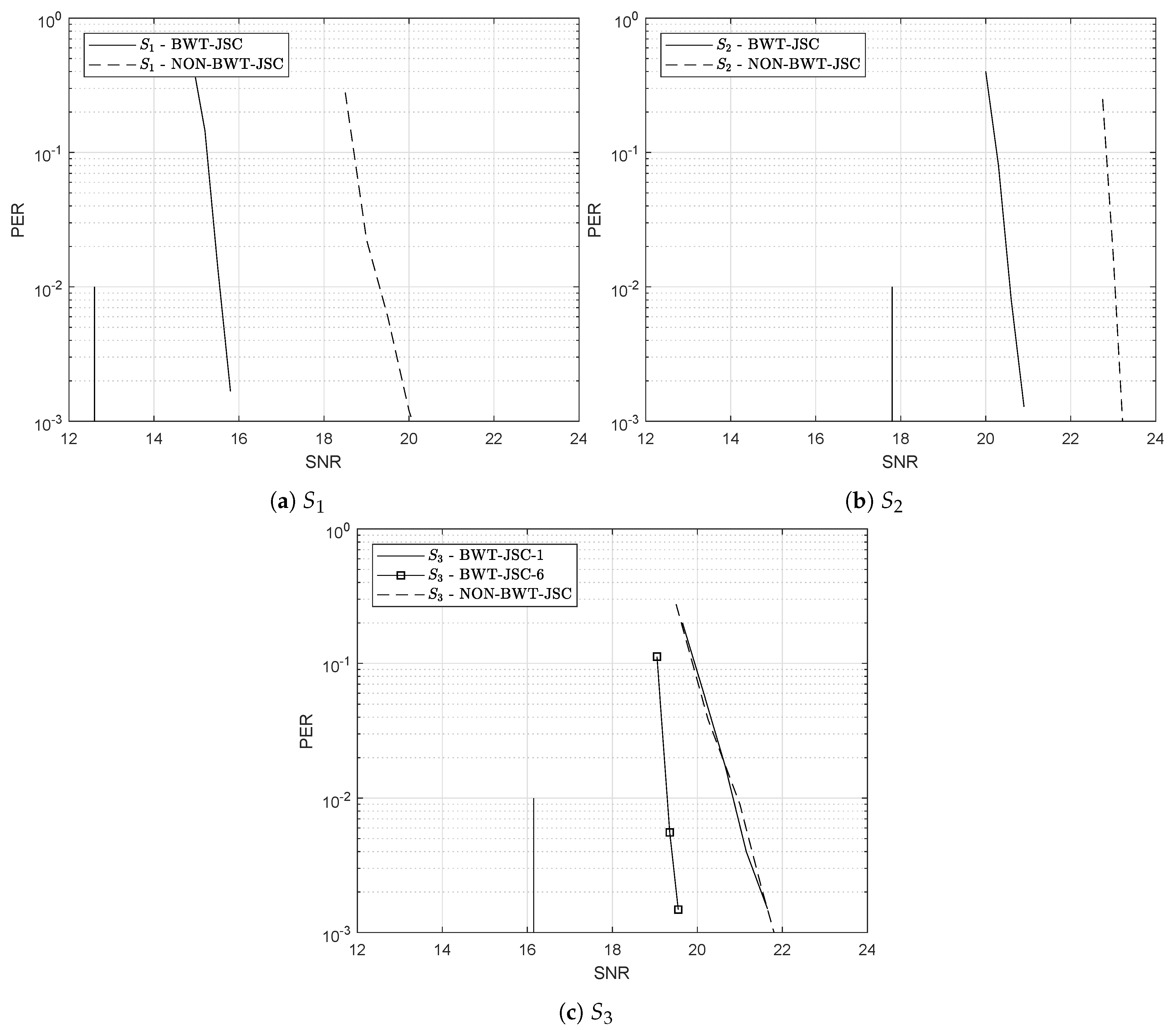
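One simple way to look at an output probability profile (our interpretation, not necessarily the procedure behind the figure) is the empirical fraction of ones in consecutive windows of the BWT output. The sketch uses a hypothetical first-order binary Markov chain rather than the paper's sources. For a stationary first-order chain, the BWT output is expected to be roughly piecewise memoryless: with the parameters below, about the first 80% of positions should show a fraction of ones near 0.05 and the remainder a fraction near 0.8, since each block of sorted rotations collects the symbols that precede one particular context.

```python
import random
from typing import List

def markov_bits(p01: float, p10: float, n: int, rng: random.Random) -> str:
    """First-order binary Markov chain started in state 0.

    p01 = P(next bit is 1 | current bit is 0), p10 = P(next bit is 0 | current bit is 1).
    """
    s, out = 0, []
    for _ in range(n):
        out.append("1" if s else "0")
        flip = p01 if s == 0 else p10
        if rng.random() < flip:
            s = 1 - s
    return "".join(out)

def bwt_last_column(s: str) -> str:
    """Naive BWT (last column of the sorted cyclic rotations)."""
    rows = sorted(range(len(s)), key=lambda i: s[i:] + s[:i])
    return "".join(s[i - 1] for i in rows)

def probability_profile(bits: str, window: int) -> List[float]:
    """Empirical fraction of ones in consecutive, non-overlapping windows."""
    chunks = [bits[t:t + window] for t in range(0, len(bits), window)]
    return [c.count("1") / len(c) for c in chunks]

x = markov_bits(0.05, 0.2, 1000, random.Random(7))   # hypothetical parameters
print([round(p, 2) for p in probability_profile(x, 100)])
print([round(p, 2) for p in probability_profile(bwt_last_column(x), 100)])
```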

4.2. Numerical Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BWT | Burrows-Wheeler Transform |
| DMS | Discrete Memoryless Source |
| JSC | Joint Source-Channel |
| MC | Markov Chain |
| HMM | Hidden Markov Model |
| RCM | Rate-Compatible Modulation |
| LDGM | Low-Density Generator Matrix |
| LDPC | Low-Density Parity Check |
| AWGN | Additive White Gaussian Noise |
| QAM | Quadrature Amplitude Modulation |
| SNR | Signal-to-Noise Ratio |
| PER | Packet Error Rate |

References

- Sayood, K.; Borkenhagen, J.C. Use of residual redundancy in the design of joint source/channel coders. IEEE Trans. Commun. 1991, 39, 838–846.

- Ramzan, N.; Wan, S.; Izquierdo, E. Joint source-channel coding for wavelet-based scalable video transmission using an adaptive turbo code. J. Image Video Process. 2007, 2007, 047517.

- Zhonghui, M.; Lenan, W. Joint source-channel decoding of Huffman codes with LDPC codes. J. Electron. China 2006, 23, 806–809.

- Pu, L.; Wu, Z.; Bilgin, A.; Marcellin, M.W.; Vasic, B. LDPC-based iterative joint source-channel decoding for JPEG2000. IEEE Trans. Image Process. 2007, 16, 577–581.

- Ordentlich, E.; Seroussi, G.; Verdu, S.; Viswanathan, K. Universal algorithms for channel decoding of uncompressed sources. IEEE Trans. Inf. Theory 2008, 54, 2243–2262.

- Hindelang, T.; Hagenauer, J.; Heinen, S. Source-controlled channel decoding: Estimation of correlated parameters. In Proceedings of the 3rd International ITG Conference on Source and Channel Coding, Munich, Germany, 17–19 January 2000.

- Garcia-Frias, J.; Villasenor, J.D. Combining hidden Markov source models and parallel concatenated codes. IEEE Commun. Lett. 1997, 1, 111–113.

- Garcia-Frias, J.; Villasenor, J.D. Joint turbo decoding and estimation of hidden Markov sources. IEEE J. Sel. Areas Commun. 2001, 19, 1671–1679.

- Shamir, G.I.; Xie, K. Universal lossless source controlled channel decoding for i.i.d. sequences. IEEE Commun. Lett. 2005, 9, 450–452.

- Yin, L.; Lu, J.; Wu, Y. LDPC based joint source-channel coding scheme for multimedia communications. In Proceedings of the 8th International Conference on Communication Systems (ICCS), Singapore, 28 November 2002; Volume 1, pp. 337–341.

- Yin, L.; Lu, J.; Wu, Y. Combined hidden Markov source estimation and low-density parity-check coding: A novel joint source-channel coding scheme for multimedia communications. Wirel. Commun. Mob. Comput. 2002, 2, 643–650.

- Ordentlich, E.; Seroussi, G.; Verdu, S.; Viswanathan, K.; Weinberger, M.J.; Weissman, T. Channel decoding of systematically encoded unknown redundant sources. In Proceedings of the International Symposium on Information Theory, Chicago, IL, USA, 27 June–2 July 2004.

- Shamir, G.I.; Wang, L. Context decoding of low density parity check codes. In Proceedings of the 2005 Conference on Information Sciences and Systems, New Orleans, LA, USA, 14–17 March 2005; Volume 62, pp. 597–609.

- Xie, K.; Shamir, G.I. Context and denoising based decoding of non-systematic turbo codes for redundant data. In Proceedings of the International Symposium on Information Theory, Adelaide, Australia, 4–9 September 2005; pp. 1280–1284.

- Del Ser, J.; Crespo, P.M.; Esnaola, I.; Garcia-Frias, J. Joint Source-Channel Coding of Sources with Memory using Turbo Codes and the Burrows-Wheeler Transform. IEEE Trans. Commun. 2010, 58, 1984–1992.

- Zhu, G.-C.; Alajaji, F. Joint source-channel turbo coding for binary Markov sources. IEEE Trans. Wirel. Commun. 2006, 5, 1065–1075.

- Li, L.; Garcia-Frias, J. Hybrid Analog Digital Coding Scheme Based on Parallel Concatenation of Linear Random Projections and LDGM Codes. In Proceedings of the 2014 48th Annual Conference on Information Sciences and Systems (CISS), Princeton, NJ, USA, 19–21 March 2014.

- Li, L.; Garcia-Frias, J. Hybrid Analog-Digital Coding for Nonuniform Memoryless Sources. In Proceedings of the 2015 49th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 18–20 March 2015.

- Burrows, M.; Wheeler, D. A Block Sorting Lossless Data Compression Algorithm; Research Report 124; Digital Systems Research Center: Palo Alto, CA, USA, 1994.

- Visweswariah, K.; Kulkarni, S.; Verdú, S. Output Distribution of the Burrows-Wheeler Transform. In Proceedings of the IEEE International Symposium on Information Theory, Sorrento, Italy, 25–30 June 2000.

- Effros, M.; Visweswariah, K.; Kulkarni, S.; Verdú, S. Universal lossless source coding with the Burrows-Wheeler transform. IEEE Trans. Inf. Theory 2002, 48, 1061–1081.

- Balkenhol, B.; Kurtz, S. Universal data compression based on the Burrows-Wheeler transform: Theory and practice. IEEE Trans. Comput. 2000, 49, 1043–1053.

- Caire, G.; Shamai, S.; Verdú, S. Universal data compression using LDPC codes. In Proceedings of the International Symposium on Turbo Codes and Related Topics, Brest, France, 1–5 September 2003.

- Caire, G.; Shamai, S.; Shokrollahi, A.; Verdú, S. Fountain Codes for Lossless Data Compression; DIMACS Series in Discrete Mathematics and Theoretical Computer Science; American Mathematical Society: Providence, RI, USA, 2005; Volume 68.

- Cui, H.; Luo, C.; Wu, J.; Chen, C.W.; Wu, F. Compressive Coded Modulation for Seamless Rate Adaption. IEEE Trans. Wirel. Commun. 2013, 12, 4892–4904.

- MacKay, D.J.C. Good Error-Correcting Codes Based on Very Sparse Matrices. IEEE Trans. Inf. Theory 1999, 45, 399–431.

- Granada, I.; Crespo, P.M.; Garcia-Frias, J. Asymptotic BER EXIT chart analysis for high rate codes based on the parallel concatenation of analog RCM and digital LDGM codes. J. Wirel. Commun. Netw. 2019, 11.

- Kschischang, F.R.; Frey, B.J.; Loeliger, H.-A. Factor Graphs and the Sum-Product Algorithm. IEEE Trans. Inf. Theory 2001, 47, 498–519.

| Source | Matrix A | Matrix B | Vector | Entropy |
|---|---|---|---|---|
| | | | | 0.57 |
| | | | | 0.8 |
| | | | | 0.73 |
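The entropy column gives one number per hidden Markov source. Unlike a plain Markov chain, an HMM has no closed-form entropy rate in general, but it can be estimated: for a stationary ergodic source, $-\frac{1}{n}\log_2 P(u_1,\dots,u_n)$ converges to the entropy rate, and $P(u_1,\dots,u_n)$ is computed exactly by the normalized forward recursion. The sketch below follows that route; it is an illustration under these assumptions, not necessarily how the tabulated values were obtained, and the example parameters are hypothetical.

```python
import math
import random
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]

def sample_hmm(A: Matrix, B: Matrix, pi: Sequence[float], n: int,
               rng: random.Random) -> List[int]:
    """Draw n binary symbols from the hidden Markov source (A, B, pi)."""
    states = range(len(pi))
    s = rng.choices(states, weights=pi)[0]
    out = []
    for _ in range(n):
        out.append(rng.choices((0, 1), weights=B[s])[0])
        s = rng.choices(states, weights=A[s])[0]
    return out

def log2_likelihood(bits: Sequence[int], A: Matrix, B: Matrix,
                    pi: Sequence[float]) -> float:
    """log2 P(bits) computed with the normalized forward recursion."""
    N = len(pi)
    alpha = [pi[i] * B[i][bits[0]] for i in range(N)]
    total = 0.0
    for t in range(len(bits)):
        if t > 0:
            v = bits[t]
            alpha = [sum(alpha[i] * A[i][j] for i in range(N)) * B[j][v]
                     for j in range(N)]
        c = sum(alpha)                  # P(bit_t | all previous bits)
        total += math.log2(c)
        alpha = [a / c for a in alpha]  # rescale to avoid numerical underflow
    return total

def entropy_rate_estimate(A: Matrix, B: Matrix, pi: Sequence[float],
                          n: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the entropy rate in bits per source symbol."""
    bits = sample_hmm(A, B, pi, n, rng)
    return -log2_likelihood(bits, A, B, pi) / n

# Hypothetical source; pi is the stationary distribution of A.
A = [[0.95, 0.05], [0.10, 0.90]]
B = [[0.9, 0.1], [0.2, 0.8]]
print(entropy_rate_estimate(A, B, [2 / 3, 1 / 3], 100000, random.Random(4)))
```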

| Scheme | Length | Weight set | M | I | |
|---|---|---|---|---|---|
| BWT-JSC | = 9020 | {1, 1, 1, 2, 2} | 3705 | 55 | 5 |
| | = 27980 | {1, 1, 1, 1, 2, 2, 2, 2} | 6140 | 100 | 5 |
| NON-BWT-JSC | K = 37000 | {2, 2, 3, 3, 4, 7} | 9860 | 140 | 5 |

| Scheme | Length | Weight set | M | I | |
|---|---|---|---|---|---|
| BWT-JSC | = 26500 | {2, 2, 3, 3, 4, 8} | 6310 | 110 | 5 |
| | = 10500 | {2, 3, 4, 7} | 3490 | 90 | 5 |
| NON-BWT-JSC | K = 37000 | {2, 3, 4, 4, 7} | 9940 | 60 | 3 |

| Scheme | Length | Weight set | M | I | |
|---|---|---|---|---|---|
| BWT-JSC- | = 9250 | {2, 3, 4, 4, 7} | 3376 | 29 | 7 |
| | = 5250 | {2, 3, 4, 4, 7} | 1839 | 19 | 7 |
| | = 3000 | {2, 3, 4, 4, 7} | 935 | 37 | 7 |
| | = 2500 | {2, 3, 4, 4, 7} | 673 | 35 | 6 |
| | = 1500 | {2, 2, 3, 3, 4, 7} | 351 | 18 | 6 |
| | = 15500 | {2, 2, 2, 3, 3, 4, 4, 7, 7} | 2632 | 56 | 6 |
| NON-BWT-JSC | K = 37000 | {2, 2, 3, 3, 4, 8} | 9880 | 120 | 3 |
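The three parameter tables can be cross-checked with a few lines of arithmetic. Assuming M and I count the coded symbols produced by the RCM and LDGM components of each code (our reading of the columns), the sketch below totals the segment lengths and coded symbols per scheme. It shows that every scheme, with or without the BWT, spends 10000 coded symbols on 37000 source bits, so the comparison is made at a fixed spectral efficiency, while the individual BWT-JSC segments are coded at quite different rates. The values are transcribed from the tables; the dictionary labels are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# (length, M, I) for every code, transcribed from the three parameter tables above.
CODES: Dict[str, List[Tuple[int, int, int]]] = {
    "first table, BWT-JSC": [(9020, 3705, 55), (27980, 6140, 100)],
    "first table, NON-BWT-JSC": [(37000, 9860, 140)],
    "second table, BWT-JSC": [(26500, 6310, 110), (10500, 3490, 90)],
    "second table, NON-BWT-JSC": [(37000, 9940, 60)],
    "third table, BWT-JSC-": [(9250, 3376, 29), (5250, 1839, 19), (3000, 935, 37),
                              (2500, 673, 35), (1500, 351, 18), (15500, 2632, 56)],
    "third table, NON-BWT-JSC": [(37000, 9880, 120)],
}

def budget(codes: List[Tuple[int, int, int]]) -> Tuple[int, int, float]:
    """Total source bits, total coded symbols (M + I) and their ratio."""
    bits = sum(k for k, _, _ in codes)
    symbols = sum(m + i for _, m, i in codes)
    return bits, symbols, bits / symbols

for name, codes in CODES.items():
    per_code = [round(k / (m + i), 2) for k, m, i in codes]  # source bits per coded symbol
    print(name, budget(codes), per_code)
```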

| Entropy Rate | Shannon Limit | BWT-JSC(-) | NON-BWT-JSC |
|---|---|---|---|
| 0.57 | 12.57 dB | 15.8 dB | 20 dB |
| 0.80 | 17.78 dB | 20.9 dB | 23.25 dB |
| 0.73 | 16.15 dB | 21.8 dB (1), 19.55 dB (6) | 21.8 dB |
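The Shannon limits in the last table can be reproduced approximately under one assumption: that the 10000 real coded symbols listed for every scheme in the parameter tables are paired into 5000 complex (QAM) channel uses, giving $R = 37000/5000 = 7.4$ source bits per channel use. Reliable transmission of a source with entropy rate $H$ over the complex AWGN channel then requires $H R \le \log_2(1 + \mathrm{SNR})$, i.e.,

$$\mathrm{SNR}_{\min} = 2^{H R} - 1.$$

The sketch below evaluates this bound; it lands within about 0.1 dB of the tabulated limits, consistent with, but not proof of, this reading of the setup.

```python
import math

def shannon_limit_db(entropy_rate: float, bits_per_channel_use: float) -> float:
    """Smallest SNR in dB such that entropy_rate * rate <= log2(1 + SNR)."""
    return 10 * math.log10(2 ** (entropy_rate * bits_per_channel_use) - 1)

RATE = 2 * 37000 / 10000   # assumed pairing: 10000 real symbols -> 5000 complex uses

for h, tabulated_db in [(0.57, 12.57), (0.80, 17.78), (0.73, 16.15)]:
    print(h, round(shannon_limit_db(h, RATE), 2), tabulated_db)
```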

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Granada, I.; Crespo, P.M.; Garcia-Frías, J. Combining the Burrows-Wheeler Transform and RCM-LDGM Codes for the Transmission of Sources with Memory at High Spectral Efficiencies. Entropy 2019, 21, 378. https://doi.org/10.3390/e21040378
