Deep Common Semantic Space Embedding for Sketch-Based 3D Model Retrieval

Abstract

1. Introduction

2. Related Work

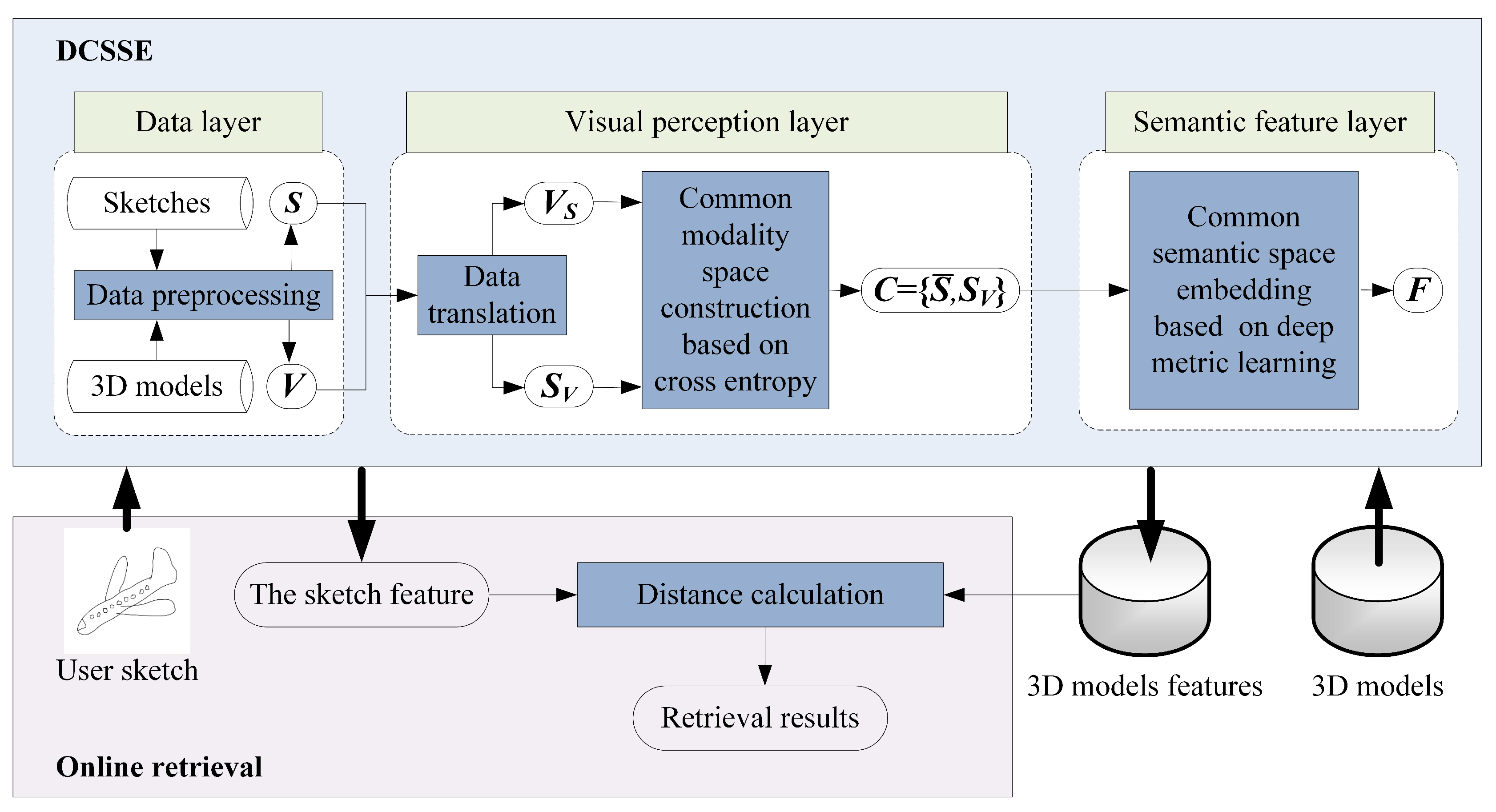

3. Proposed Method

- (1) DCSSE is composed of three layers: the data layer, the visual perception layer, and the semantic feature layer. The inputs of DCSSE are the labeled 3D model dataset and the sketch dataset. In the data layer, data preprocessing represents each 3D model as l views, so that the input 3D models and the sketches both occupy 2D space and share the same data form. In the visual perception layer, data translation constructs a common modality space based on cross entropy, so that all input data share a similar visual perception. Finally, in the semantic feature layer, deep metric learning generates a feature embedding for every 3D model and every sketch, and a common semantic space F is constructed.

- (2) The online retrieval: based on DCSSE, we extract the features of the user sketch and of the 3D models, calculate the distance between the user sketch and each 3D model, and return the retrieval results.
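The online retrieval step can be sketched roughly as follows. This is a minimal illustration rather than the authors' implementation: it assumes features have already been extracted as plain lists of floats, it assumes the sketch-to-model distance is the mean Euclidean distance over the views of each model, and the names `rank_models`, `euclidean`, and the toy feature values are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Feature = Sequence[float]


def euclidean(a: Feature, b: Feature) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_models(
    sketch_feature: Feature,
    model_views: Dict[str, List[Feature]],
    top_k: Optional[int] = None,
) -> List[Tuple[str, float]]:
    """Return (model_id, distance) pairs sorted from nearest to farthest.

    Assumption: the distance to a model is the mean distance from the
    sketch feature to every view feature of that model.
    """
    scored = []
    for model_id, views in model_views.items():
        dists = [euclidean(sketch_feature, view) for view in views]
        scored.append((model_id, sum(dists) / len(dists)))
    scored.sort(key=lambda pair: pair[1])
    return scored if top_k is None else scored[:top_k]


# Toy 2D features (hypothetical values, for illustration only).
models = {
    "plane": [[5.0, 5.0], [7.0, 5.0]],
    "chair": [[0.0, 1.0], [0.0, 3.0]],
}
ranking = rank_models([0.0, 0.0], models)
print([model_id for model_id, _ in ranking])  # ['chair', 'plane']
```

In a real system the view features of all 3D models would be computed once offline, so that only the query sketch needs a forward pass at retrieval time.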

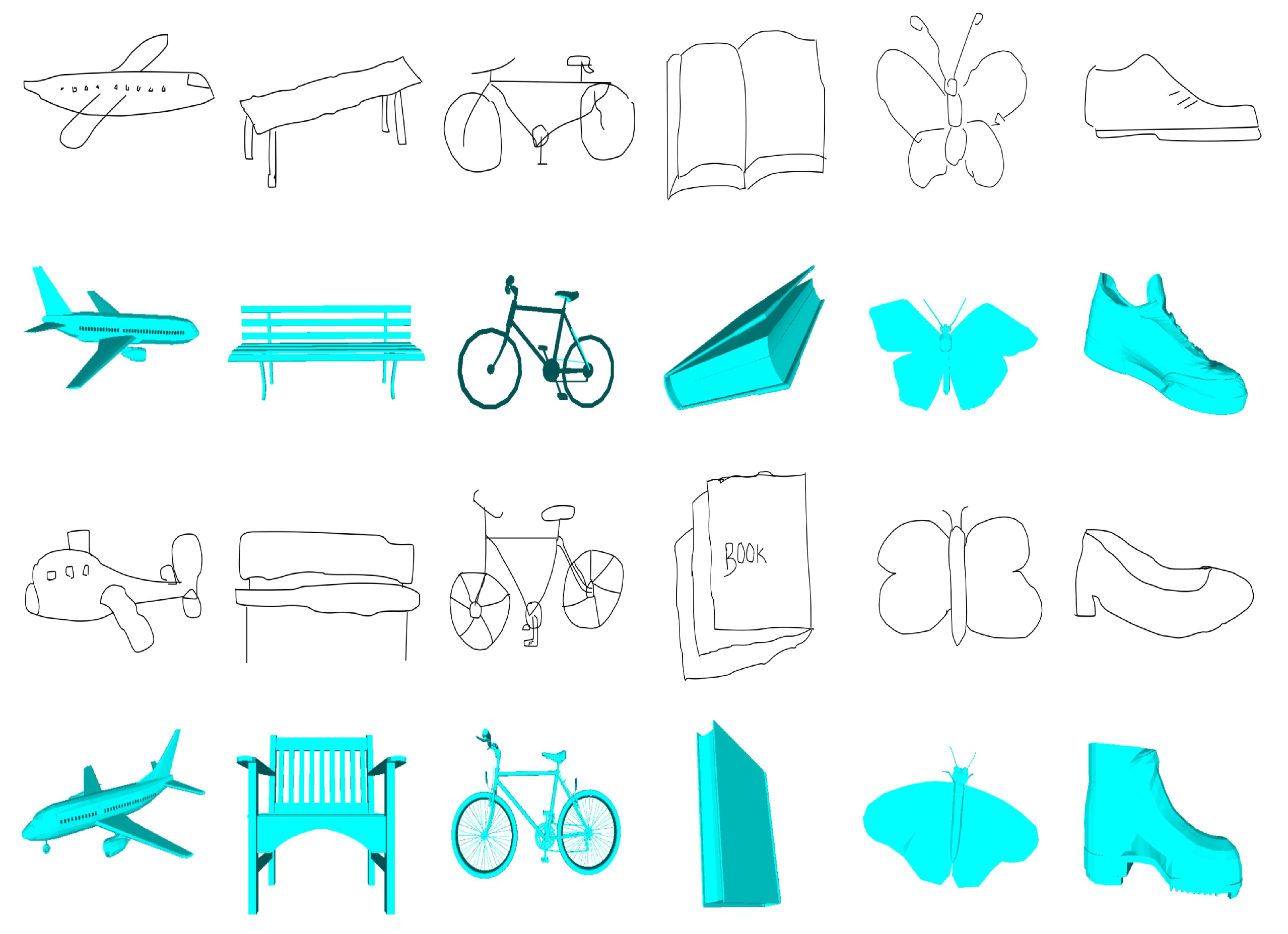

3.1. The Data Layer: Intradomain Data Preprocessing

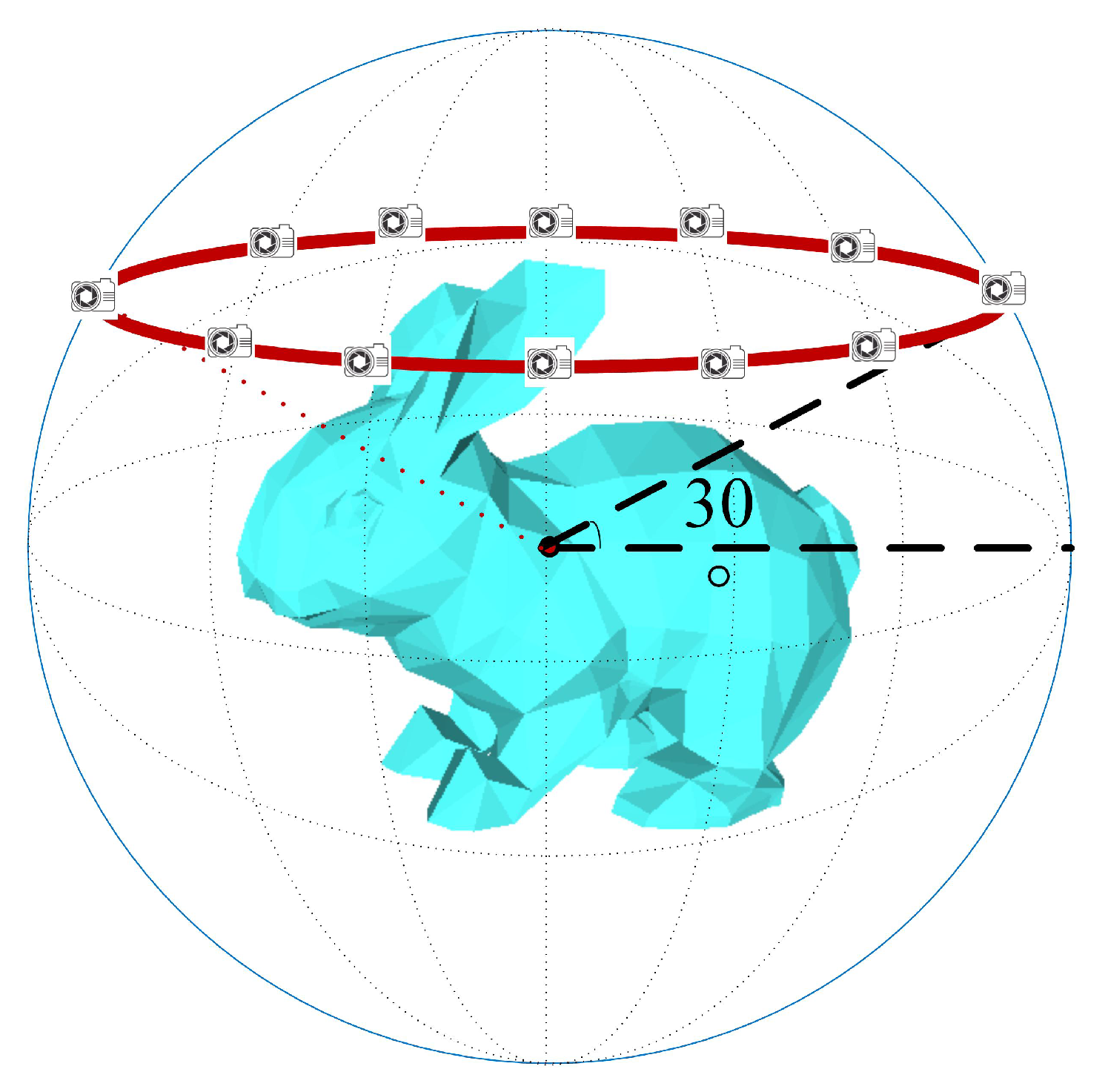

3.1.1. 3D Model Preprocessing

3.1.2. 2D Sketch Preprocessing

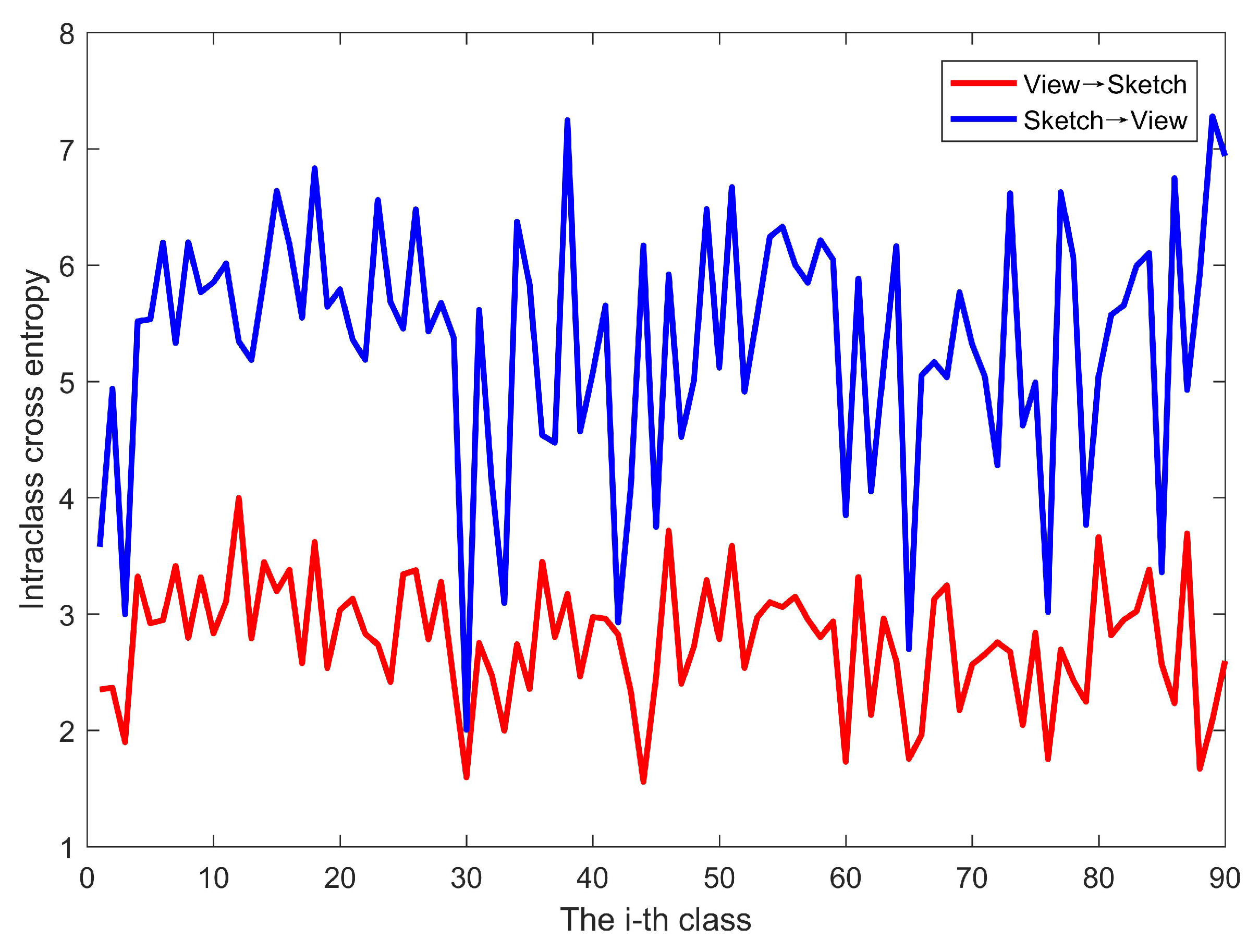

3.2. The Visual Perception Layer: Interdomain Data Translation

3.2.1. View-To-Sketch Translation

3.2.2. Sketch-To-View Translation

3.2.3. Construction of 2D Common Modality Space Based on Cross Entropy

3.3. The Semantic Feature Layer: Cross-Domain Common Semantic Space Embedding

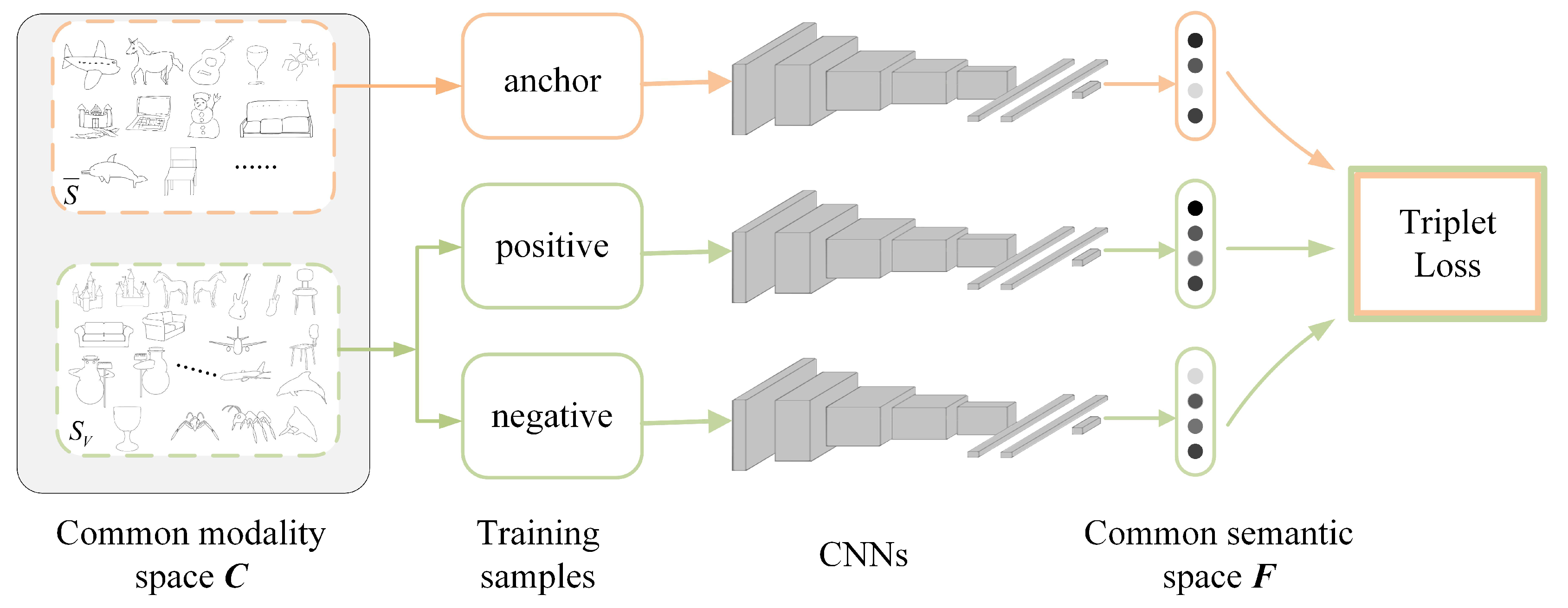

- (1) Selection of triplets. The anchor training samples in the triplets are selected from the normalized sketch dataset, and the positive and negative training samples are selected from the extended sketch dataset. A positive sample must have the same class as the given anchor sample, while a negative sample must have a different class.

- (2) Construction of the CNNs. Sketch-based 3D model retrieval is a complex task, but the information in each sketch is relatively sparse. Therefore, taking AlexNet as the prototype [31], a medium-sized CNN is constructed. This network consists of eight layers: the first five layers are convolutional layers, the middle two layers are fully connected layers, and the last layer is the feature output layer Feat. The details are shown in Table 1.

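- (3) Establishment of the loss function. Given a triplet $(x^a, x^p, x^n)$ of objects, we take $x^a$ to be the anchor sample, $x^p$ to be of the same class as $x^a$, $x^n$ to be of a different class, $f(\cdot)$ to be the embedded feature representation of the network, and F to be the embedded feature space. The metric function $D$ should satisfy the following:

  $$D\big(f(x^a), f(x^p)\big) + \alpha < D\big(f(x^a), f(x^n)\big),$$

  where $\alpha$ is the interval threshold, which requires the minimum distance difference between the same class and different classes to be $\alpha$. The corresponding loss function, summed over all training triplets, can be expressed as follows:

  $$L = \sum_{(x^a,\, x^p,\, x^n)} \Big[ D\big(f(x^a), f(x^p)\big) - D\big(f(x^a), f(x^n)\big) + \alpha \Big]_+ .$$

  Here, $[z]_+ = z$ if $z > 0$; otherwise $[z]_+ = 0$.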

- (4) Implementation.
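The triplet selection and the margin-based loss described in the steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: squared Euclidean distance stands in for the metric function, the margin value 1.0 is arbitrary, the toy 2D vectors stand in for already-embedded features, and the names `sample_triplet`, `squared_distance`, and `triplet_loss` are hypothetical. It also assumes every anchor class appears among the candidates and that there are at least two classes.

```python
import random
from typing import Dict, List, Sequence, Tuple

Feature = Sequence[float]


def sample_triplet(
    anchors_by_class: Dict[str, List[Feature]],
    candidates_by_class: Dict[str, List[Feature]],
    rng: random.Random,
) -> Tuple[Feature, Feature, Feature]:
    """Pick (anchor, positive, negative).

    The positive shares the anchor's class; the negative is drawn from a
    different class. Anchors and candidates come from separate pools.
    """
    anchor_class = rng.choice(sorted(anchors_by_class))
    other_classes = [c for c in sorted(candidates_by_class) if c != anchor_class]
    negative_class = rng.choice(other_classes)
    anchor = rng.choice(anchors_by_class[anchor_class])
    positive = rng.choice(candidates_by_class[anchor_class])
    negative = rng.choice(candidates_by_class[negative_class])
    return anchor, positive, negative


def squared_distance(a: Feature, b: Feature) -> float:
    """Squared Euclidean distance (an assumed choice of metric)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Feature, positive: Feature, negative: Feature,
                 margin: float) -> float:
    """Hinge on d(a, p) - d(a, n) + margin.

    The loss is zero once the negative is at least `margin` farther from
    the anchor than the positive is.
    """
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(0.0, gap + margin)


# Toy embedded features (hypothetical values).
anchors = {"cat": [[0.0, 0.0]], "car": [[4.0, 4.0]]}
extended = {"cat": [[0.0, 1.0]], "car": [[4.0, 3.0]]}

a, p, n = sample_triplet(anchors, extended, random.Random(0))
print(triplet_loss(a, p, n, margin=1.0))  # 0.0: classes are well separated

# A violating triplet: the negative is closer to the anchor than the positive.
print(triplet_loss([0.0, 0.0], [0.0, 2.0], [1.0, 0.0], margin=1.0))  # 4.0
```

Only triplets with a non-zero loss contribute a gradient during training, which is why the choice of positives and negatives matters in practice.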

3.4. Cross-Domain Distance Metric

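- (1) $D_{\mathrm{Ave}}$ is defined as the average distance from a sketch feature to all view features of a 3D model, calculated as follows:

  $$D_{\mathrm{Ave}}(s, M) = \frac{1}{l} \sum_{k=1}^{l} d\big(f(s), f(v_k)\big)$$

- (2) $D_{\mathrm{Min}}$ is defined as the minimum distance from a sketch feature to all view features of a 3D model, calculated as follows:

  $$D_{\mathrm{Min}}(s, M) = \min_{1 \le k \le l} d\big(f(s), f(v_k)\big)$$

  In both definitions, $f(s)$ is the feature of the sketch $s$, $f(v_k)$ is the feature of the $k$-th of the l views of the 3D model $M$, and $d$ is the distance between two features.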
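The two sketch-to-model distances defined in this list can be illustrated with a short example. This is a sketch under assumptions: Euclidean distance is used as the per-view distance, features are plain lists of floats, and the function names `distance_ave` and `distance_min` and the toy values are hypothetical.

```python
import math
from typing import List, Sequence

Feature = Sequence[float]


def euclidean(a: Feature, b: Feature) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def distance_ave(sketch: Feature, views: List[Feature]) -> float:
    """Average distance from the sketch feature to all view features."""
    return sum(euclidean(sketch, v) for v in views) / len(views)


def distance_min(sketch: Feature, views: List[Feature]) -> float:
    """Minimum distance from the sketch feature to any view feature."""
    return min(euclidean(sketch, v) for v in views)


# One 3D model represented by two view features (toy values).
views = [[3.0, 4.0], [6.0, 8.0]]
sketch = [0.0, 0.0]

print(distance_ave(sketch, views))  # 7.5
print(distance_min(sketch, views))  # 5.0
```

The minimum variant depends only on the single best-matching view, while the average variant takes every view of the model into account, so the minimum is never larger than the average.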

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Comparison of Different Distances

4.3. Comparison with the State-of-the-Art Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Funkhouser, T.; Min, P.; Kazhdan, M.; Chen, J.; Halderman, A.; Dobkin, D.; Jacobs, D. A search engine for 3D models. ACM Trans. Graph. 2003, 22, 83–105.

- Tangelder, J.W.H.; Veltkamp, R.C. A survey of content based 3D shape retrieval methods. Multimedia Tools Appl. 2008, 39, 441.

- Li, B.; Lu, Y.; Godil, A.; Schreck, T.; Bustos, B.; Ferreira, A.; Furuya, T.; Fonseca, M.J.; Johan, H.; Matsuda, T.; et al. A comparison of methods for sketch-based 3D shape retrieval. Comput. Vis. Image Underst. 2014, 119, 57–80.

- Li, B.; Lu, Y.; Li, C.; Godil, A.; Schreck, T.; Aono, M.; Burtscher, M.; Chen, Q.; Chowdhury, N.K.; Fang, B.; et al. A comparison of 3D shape retrieval methods based on a large-scale benchmark supporting multimodal queries. Comput. Vis. Image Underst. 2015, 131, 1–27.

- Li, B.; Lu, Y.; Godil, A. SHREC’13 Track: Large Scale Sketch-Based 3D Shape Retrieval. In Proceedings of the Eurographics Workshop on 3D Object Retrieval, Girona, Spain, 11–12 May 2013; Eurographics Association: Girona, Spain, 2013.

- Li, B.; Lu, Y.; Li, C.; Godil, A.; Schreck, T.; Aono, M.; Burtscher, M.; Fu, H.; Furuya, T.; Johan, H.; et al. SHREC’14 track: Extended large scale sketch-based 3D shape retrieval. In Proceedings of the Eurographics Workshop on 3D Object Retrieval, Strasbourg, France, 6 April 2014; Eurographics Association: Strasbourg, France, 2014.

- Yoon, S.M.; Scherer, M.; Schreck, T.; Kuijper, A. Sketch-based 3D model retrieval using diffusion tensor fields of suggestive contours. In Proceedings of the International Conference on Multimedia, Philadelphia, PA, USA, 29–31 March 2010; pp. 193–200.

- Eitz, M.; Richter, R.; Boubekeur, T.; Hildebrand, K.; Alexa, M. Sketch-based shape retrieval. ACM Trans. Graph. 2012, 31, 1–10.

- Li, B.; Johan, H. Sketch-based 3D model retrieval by incorporating 2D-3D alignment. Multimedia Tools Appl. 2013, 65, 363–385.

- Li, B.; Lu, Y.; Johan, H. Sketch-Based 3D Model Retrieval by Viewpoint Entropy-Based Adaptive View Clustering. In Proceedings of the Eurographics Workshop on 3D Object Retrieval, Girona, Spain, 11–12 May 2013; Eurographics Association: Lower Saxony, Germany, 2013.

- Huang, Z.; Fu, H.; Lau, R.W.H. Data-driven segmentation and labeling of freehand sketches. ACM Trans. Graph. 2014, 33, 175.

- Li, B.; Lu, Y.; Shen, J. A semantic tree-based approach for sketch-based 3D model retrieval. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016.

- Furuya, T.; Ohbuchi, R. Ranking on cross-domain manifold for sketch-based 3D model retrieval. In Proceedings of the 2013 International Conference on Cyberworlds, Yokohama, Japan, 21–23 October 2013; pp. 274–281.

- Tasse, F.P.; Dodgson, N.A. Shape2vec: Semantic based descriptors for 3D shapes, sketches and images. ACM Trans. Graph. 2016, 35, 208.

- Li, Y.; Lei, H.; Lin, S.; Luo, G. A new sketch-based 3D model retrieval method by using composite features. Multimedia Tools Appl. 2018, 77, 2921–2944.

- Zhu, F.; Xie, J.; Fang, Y. Learning Cross-Domain Neural Networks for Sketch-Based 3D Shape Retrieval. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 15–17 February 2016; pp. 3683–3689.

- Dai, G.; Xie, J.; Zhu, F.; Fang, Y. Deep Correlated Metric Learning for Sketch-based 3D Shape Retrieval. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4002–4008.

- Dai, G.; Xie, J.; Fang, Y. Deep Correlated Holistic Metric Learning for Sketch-based 3D Shape Retrieval. IEEE Trans. Image Process. 2018, 27, 3374–3386.

- Qi, A.; Song, Y.Z.; Xiang, T. Semantic Embedding for Sketch-Based 3D Shape Retrieval. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle, UK, 2–6 September 2018.

- Wang, F.; Kang, L.; Li, Y. Sketch-based 3d shape retrieval using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1875–1883.

- Xie, J.; Dai, G.; Zhu, F.; Fang, Y. Learning barycentric representations of 3D shapes for sketch-based 3D shape retrieval. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Venice, Italy, 21–26 July 2017; pp. 3615–3623.

- Chen, J.; Fang, Y. Deep Cross-modality Adaptation via Semantics Preserving Adversarial Learning for Sketch-based 3D Shape Retrieval. arXiv, 2018; arXiv:1807.01806.

- Xu, Y.; Hu, J.; Zeng, K.; Gong, Y. Sketch-Based Shape Retrieval via Multi-view Attention and Generalized Similarity. In Proceedings of the 2018 7th International Conference on Digital Home (ICDH), Guilin, China, 30 November–1 December 2018.

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 945–953.

- Guo, H.; Wang, J.; Gao, Y.; Li, J.; Lu, H. Multi-view 3D object retrieval with deep embedding network. IEEE Trans. Image Process. 2016, 25, 5526–5537.

- Phong, B.T. Illumination for computer generated pictures. Commun. ACM 1975, 18, 311–317.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–11 December 2014; MIT Press: Boston, MA, USA, 2014.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. arXiv, 2017; arXiv:1703.10593.

- Vedral, V. The role of relative entropy in quantum information theory. Rev. Mod. Phys. 2002, 74, 197.

- Hoffer, E.; Ailon, N. Deep metric learning using triplet network. In International Workshop on Similarity-Based Pattern Recognition; Springer: Cham, Switzerland, 2015; pp. 84–92.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012), Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

- Sousa, P.; Fonseca, M.J. Sketch-based retrieval of drawings using spatial proximity. J. Vis. Lang. Comput. 2010, 21, 69–80.

- Zou, C.; Wang, C.; Wen, Y.; Zhang, L.; Liu, J. Viewpoint-Aware Representation for Sketch-Based 3D Model Retrieval. IEEE Signal Process. Lett. 2014, 21, 966–970.

- Li, H.; Wu, H.; He, X.; Lin, S.; Wang, R.; Luo, X. Multi-view pairwise relationship learning for sketch based 3D shape retrieval. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 1434–1439.

| Layer | Filter Size | Stride | Pad | Feature Maps | Input Size | Output Size |
|---|---|---|---|---|---|---|
| Conv1 | | 4 | 0 | 96 | | |
| Relu1 | – | – | – | – | | |
| Norm1 | – | – | – | – | | |
| Pool1 | | 2 | 0 | 96 | | |
| Conv2 | | – | 2 | 256 | | |
| Relu2 | – | – | – | – | | |
| Norm2 | – | – | – | – | | |
| Pool2 | | 2 | 0 | 256 | | |
| Conv3 | | 0 | 1 | 384 | | |
| Relu3 | – | – | – | – | | |
| Conv4 | | 0 | 1 | 384 | | |
| Relu4 | – | – | – | – | | |
| Conv5 | | 0 | 1 | 256 | | |
| Relu5 | – | – | – | – | | |
| Pool5 | | 2 | 0 | 256 | | |
| Fc6 | – | – | – | – | | 4096 |
| Relu6 | – | – | – | – | 4096 | 4096 |
| Dropout6 | – | – | – | – | 4096 | 4096 |
| Fc7 | – | – | – | – | 4096 | 4096 |
| Relu7 | – | – | – | – | 4096 | 4096 |
| Dropout7 | – | – | – | – | 4096 | 4096 |
| Feat | – | – | – | – | 4096 | 200 |
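The input and output sizes of convolution and pooling layers in a network of this kind follow the standard output-size formula, floor((n + 2p - k) / s) + 1, for input width n, filter width k, stride s, and padding p. The example below is only a worked illustration of that formula: the input width 227 and the filter widths 11 and 3 are the values commonly quoted for the first layers of the original AlexNet, not values taken from the table above, and the function name `output_size` is hypothetical.

```python
def output_size(input_size: int, filter_size: int, stride: int, pad: int) -> int:
    """Spatial output width of a convolution or pooling layer.

    Standard formula: floor((n + 2p - k) / s) + 1.
    """
    return (input_size + 2 * pad - filter_size) // stride + 1


# Assumed AlexNet-style values (not taken from the table above).
conv1 = output_size(input_size=227, filter_size=11, stride=4, pad=0)
pool1 = output_size(input_size=conv1, filter_size=3, stride=2, pad=0)

print(conv1)  # 55
print(pool1)  # 27
```

Chaining the function layer by layer in this way gives the input size of each subsequent layer from the output size of the previous one.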

| Distance | NN | FT | ST | E | DCG | AP |
|---|---|---|---|---|---|---|
| EUD_Ave | 0.849 | 0.772 | 0.858 | 0.410 | 0.888 | 0.817 |
| EUD_Min | 0.816 | 0.744 | 0.847 | 0.406 | 0.869 | 0.790 |
| EMD_Ave | 0.844 | 0.764 | 0.852 | 0.407 | 0.886 | 0.812 |
| EMD_Min | 0.813 | 0.741 | 0.845 | 0.406 | 0.868 | 0.787 |

| Methods | NN | FT | ST | E | DCG | AP |
|---|---|---|---|---|---|---|
| CDMR [13] | 0.279 | 0.203 | 0.296 | 0.166 | 0.458 | 0.250 |
| SBR-VC [5] | 0.164 | 0.097 | 0.149 | 0.085 | 0.348 | 0.116 |
| SP [32] | 0.017 | 0.016 | 0.031 | 0.018 | 0.240 | 0.026 |
| FDC [5] | 0.053 | 0.038 | 0.068 | 0.041 | 0.279 | 0.051 |
| Siamese [20] | 0.405 | 0.403 | 0.548 | 0.287 | 0.607 | NA |
| DCML [17] | 0.650 | 0.634 | 0.719 | 0.348 | 0.766 | NA |
| LWBR [21] | 0.712 | 0.725 | 0.785 | 0.369 | 0.814 | NA |
| SEM [19] | 0.823 | 0.828 | 0.860 | 0.403 | 0.884 | NA |
| OURS | 0.849 | 0.772 | 0.858 | 0.410 | 0.888 | 0.817 |

| Methods | NN | FT | ST | E | DCG | AP |
|---|---|---|---|---|---|---|
| BF-fGALIF [8] | 0.115 | 0.051 | 0.078 | 0.036 | 0.321 | 0.044 |
| CDMR [13] | 0.109 | 0.057 | 0.089 | 0.041 | 0.328 | 0.054 |
| SBR-VC [5] | 0.095 | 0.050 | 0.081 | 0.037 | 0.319 | 0.050 |
| BOF-JESC (Words800-VQ) [33] | 0.086 | 0.043 | 0.068 | 0.030 | 0.310 | 0.041 |
| Siamese [20] | 0.239 | 0.212 | 0.316 | 0.140 | 0.496 | NA |
| DCML [17] | 0.272 | 0.275 | 0.345 | 0.171 | 0.498 | NA |
| LWBR [21] | 0.403 | 0.378 | 0.455 | 0.236 | 0.581 | NA |
| MVPR [34] | 0.546 | 0.506 | 0.642 | 0.301 | 0.715 | 0.543 |
| SEM [19] | 0.804 | 0.749 | 0.813 | 0.395 | 0.870 | NA |
| OURS | 0.830 | 0.708 | 0.807 | 0.384 | 0.871 | 0.745 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, J.; Wang, M.; Kong, D. Deep Common Semantic Space Embedding for Sketch-Based 3D Model Retrieval. Entropy 2019, 21, 369. https://doi.org/10.3390/e21040369
