The Understanding Capacity and Information Dynamics in the Human Brain

Abstract

1. Preview

- (1) Self-organization in the neuronal substrate;

- (2) Information production; and

- (3) Optimization of the organism–environment interaction.

2. Introduction

2.1. The Physical and the Mental

- (a) Material (physical) objects = ;

- (b) Mental objects = ;

- (c) Relationships between the material and the mental objects, responsible for making the former accessible to the latter (i.e., intelligible).

2.1.1. Physical Objects

2.1.2. Mental Objects

2.1.3. Requirements for Intelligibility

- The physical world is intelligible because it has a degree of order and consistency;

- Processes in the brain serve to apprehend that order and apply the results in regulating behavior;

- Efficient regulation is predicated on the availability of reversible operations performed on distinct (segregated), (quasi)stable, and flexible informational structures (mental objects).

2.2. Evolution of Regulatory Mechanisms

- (A) Primitive organisms. Regulation is confined to the boundary surface (e.g., opening or closing surface channels to allow or block access to the organism’s internals).

- (B) Animals. Regulation expands to the immediate surrounds and manifests in a range of behaviors extending from simple reactions (e.g., sea slugs extending or withdrawing their gills) to complex predatory or foraging behaviors in higher animals. In all cases, acting on conditions external to the surface involves establishing direct contact with the surface (reaching, grabbing, clawing, biting, etc.).

- (C) Humans. Regulation expands to outside conditions separated from the organism’s boundary surface by indefinitely large intervals. As formulated by Bertrand Russell: “…the essential practical function of “consciousness” and “thought” is that they enable us to act with reference to what is distant in time and space, although it is not at present stimulating our senses” [12].

2.3. Principles of Understanding

- (1)

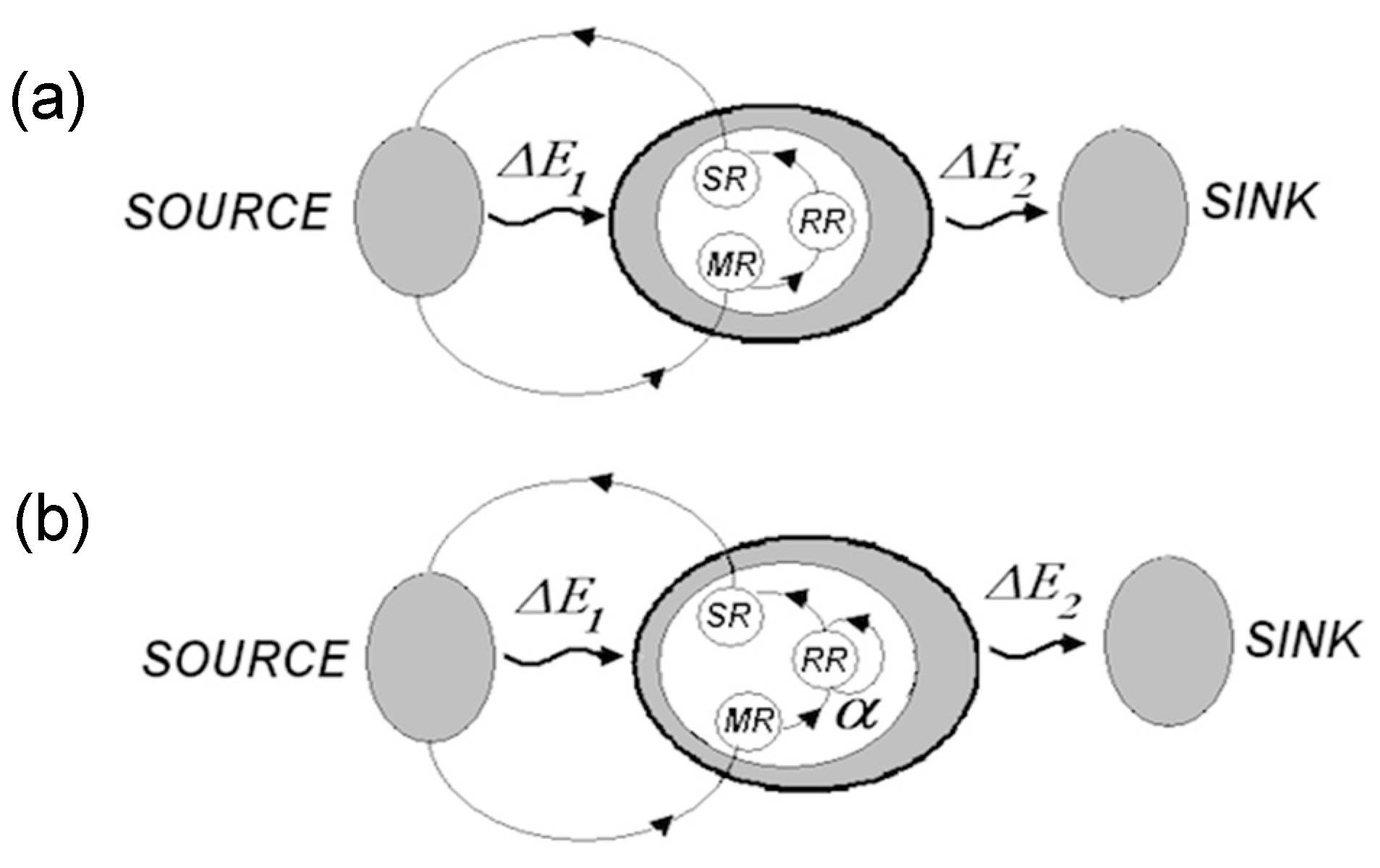

- Self-organization feeds on energy. More precisely, “the flow of energy through a system acts to organize that system” [13]. Entropy H of an open system connected to an energy source and energy sink is determined by entropy of the system and cumulative entropy of the source and the sink , H = +. According to the second law, + 0. Energy flow from the source to the sink leads to increasing entropy in the source-sink subsystem, > 0. The only demand on entropy change in placed by the second law is that . Accordingly, entropy decreases in are permitted, under the condition that system is open and serves as a conduit for energy flow [13].

- (2) Self-organization takes place in open systems driven away from equilibrium (“dissipative systems”) [14,15], and proceeds through phase transitions accompanied by entropy reduction and symmetry changes [16,17]. The rate of entropy generation declines as systems relax toward steady states [18]. Changes of symmetry manifest, for example, in the formation of Bénard cells when molecular mechanisms of heat transfer are replaced with convective heat transfer. In general, symmetry breaking accompanies transitions from disorganized movements of individual (micro) units to collective movement of ensembles comprising multiple units (as, for example, during transitions from laminar to turbulent flow in liquids [19,20]).

- (3)

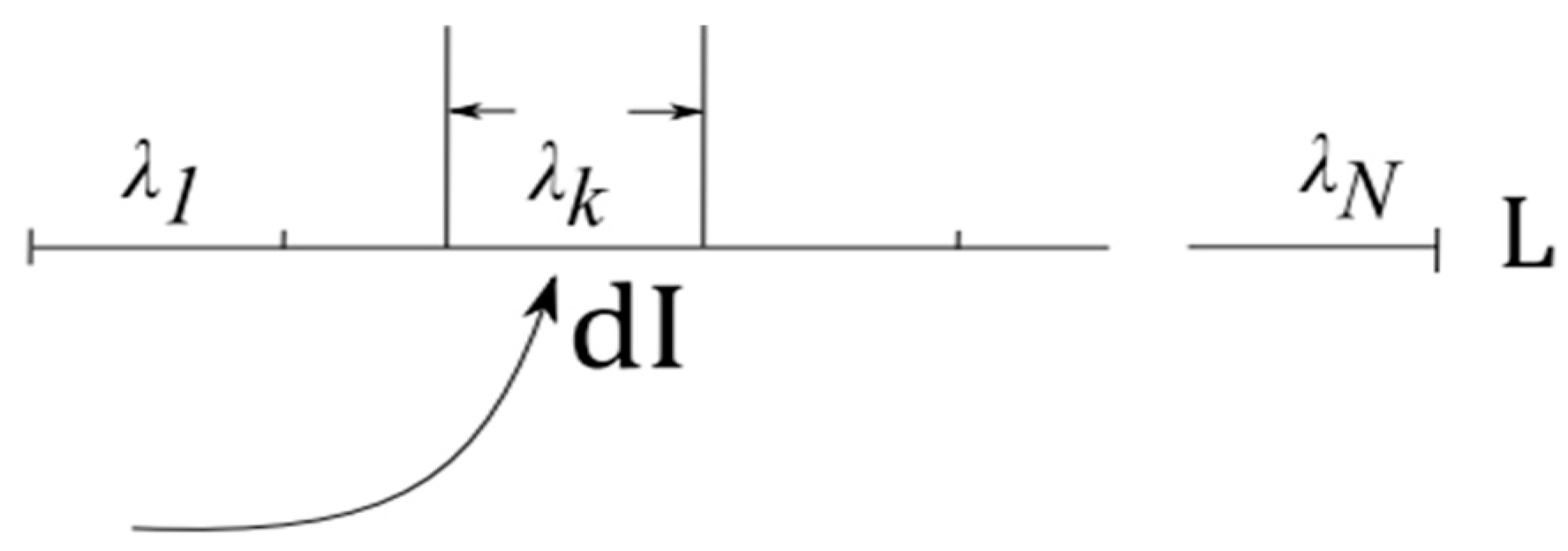

- Information absorption entails entropy reduction and extraction of free energy [21,22,23,24]. The notion dates back to the realization that measurements yielding information dI about a physical system cause entropy increase inside that system [21]. Reciprocally, absorbing information dI reduces entropy dH in the receiver, accompanied by extraction of free energy dF and conversion of heat into work dW. Roughly, the argument is as follows [24].
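The entropy bookkeeping in item (1) above can be checked with a few lines of arithmetic. The sketch below is an illustration under stated assumptions, not the derivation given in [13] or [24]: the function names and the numerical values are hypothetical, entropy changes are in arbitrary units, and the only rule encoded is the second-law requirement that the combined entropy change of the system and the source-sink subsystem be non-negative.

```python
def total_entropy_change(d_system: float, d_source_sink: float) -> float:
    """Combined entropy change of the open system and its source-sink subsystem."""
    return d_system + d_source_sink


def decrease_is_permitted(d_system: float, d_source_sink: float) -> bool:
    """Second law: the combined entropy change must not be negative."""
    return total_entropy_change(d_system, d_source_sink) >= 0.0


def largest_permitted_decrease(d_source_sink: float) -> float:
    """Largest entropy decrease the system may show for a given source-sink increase.

    With no net entropy production in the source-sink subsystem, no decrease is allowed.
    """
    return max(d_source_sink, 0.0)


if __name__ == "__main__":
    # Hypothetical numbers: energy flow raises source-sink entropy by 3 units.
    d_source_sink = 3.0
    for d_system in (-2.0, -3.0, -4.0):
        verdict = "permitted" if decrease_is_permitted(d_system, d_source_sink) else "forbidden"
        print(f"system change {d_system:+.1f}: {verdict}")
```

The point of the example is only that an entropy decrease inside the system is admissible as long as it does not exceed the entropy increase in the source-sink subsystem.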

- (1)

- Understanding is a product of self-organization in the neuronal substrate, involving self-directed construction and manipulation of mental models.

- (2)

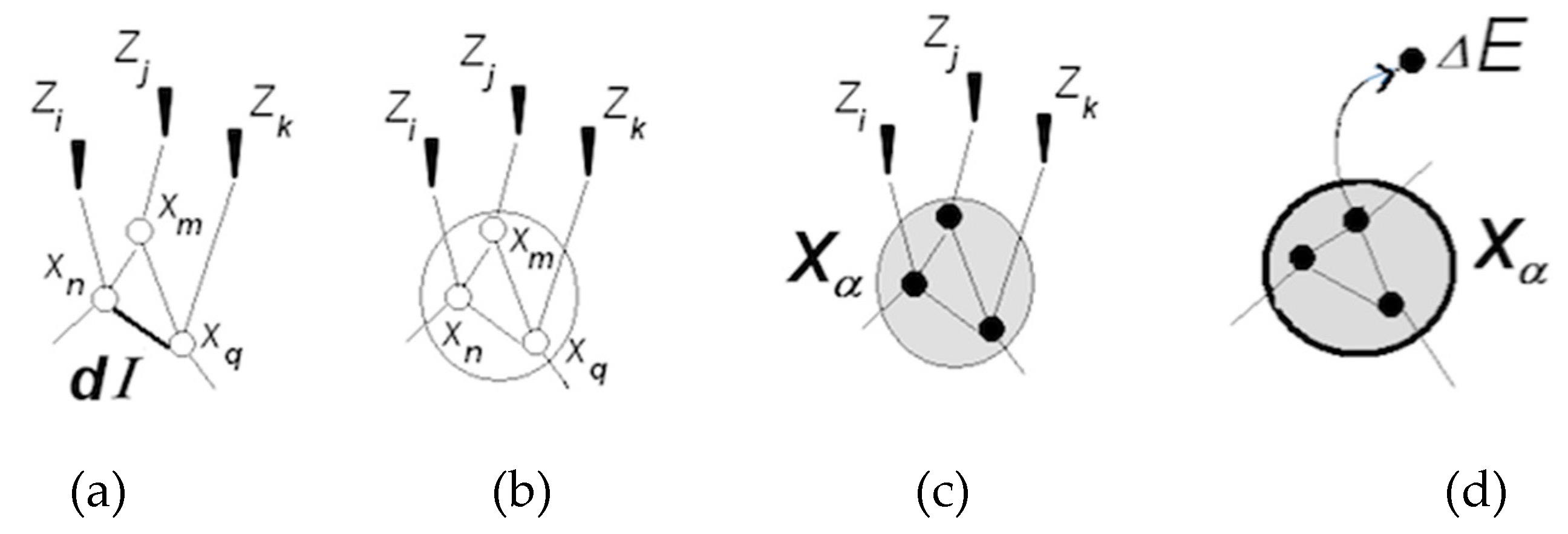

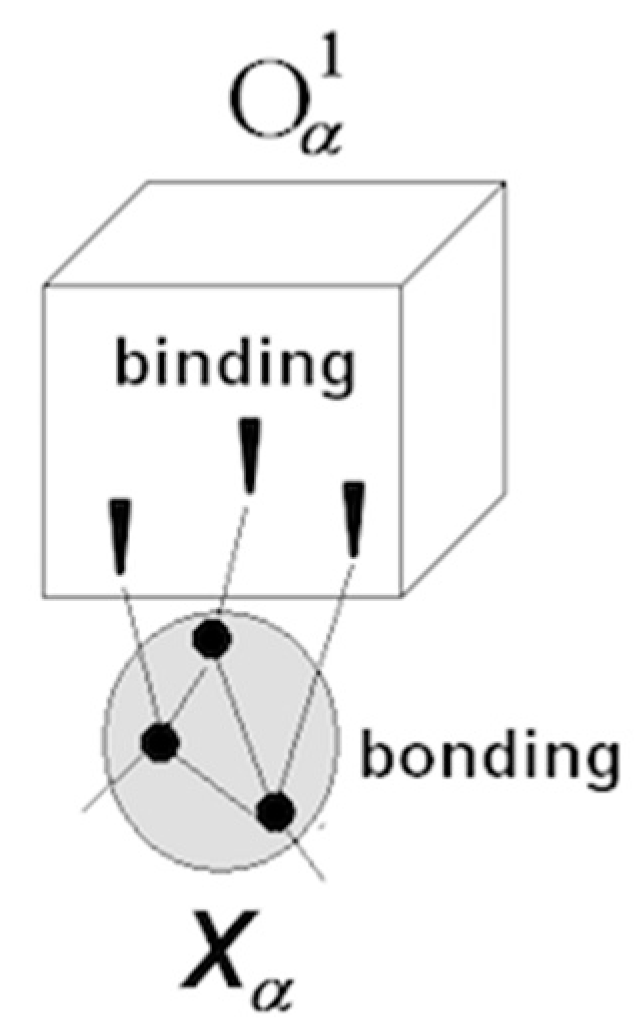

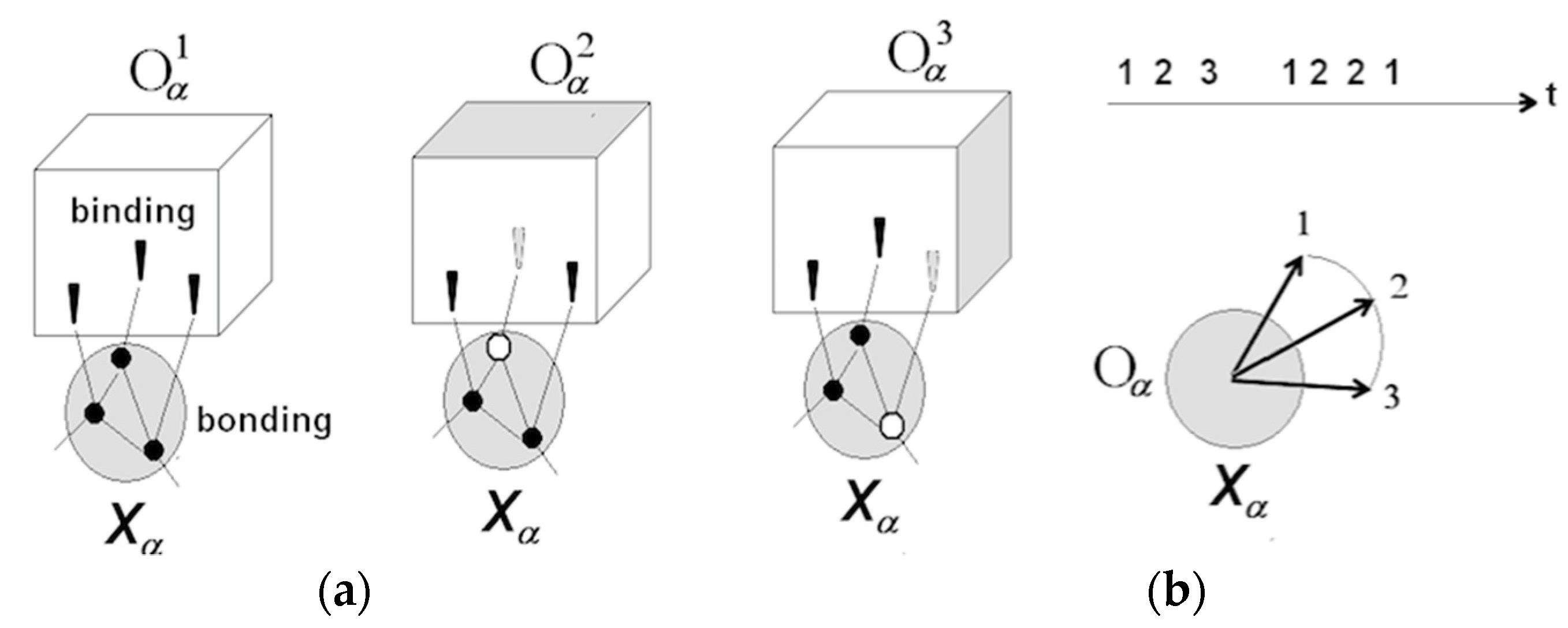

- Models are composed of quasi-stable neuronal groupings (packets).

- (3)

- Mental modeling involves work and is predicated on supplying free energy in the amounts sufficient for performing that work. The human brain regulates extraction of free energy from the environment and diverts a part of it towards the work of mental modeling.

- (4)

- Modeling produces information. Absorbing information from the environment equates to negentropy extraction, and reducing entropy as a result of internal information production equates to negentropy generation.

- (5)

- The process is self-catalytic, in the sense that modeling stimulates information seeking on the outside (significant information) that facilitates increasing order on the inside, via formation of new models, and expanding and unifying the already formed models.

- (6)

- Mental models function as synergistic complexes, focusing energy delivery and obtaining large amounts of work at low energy costs (high-cost attentive changes in any component produce mutually coordinated, low-cost changes throughout the model).

- (7)

- Modeling yields a quantum leap in regulatory efficiency by improving energy extraction from the outside (better predictions and robust response construction in unforeseen circumstances) and reducing unproductive energy expenditures inside the system.

3. Theory of Understanding: How the Intelligible World Arises from Sensory Flux

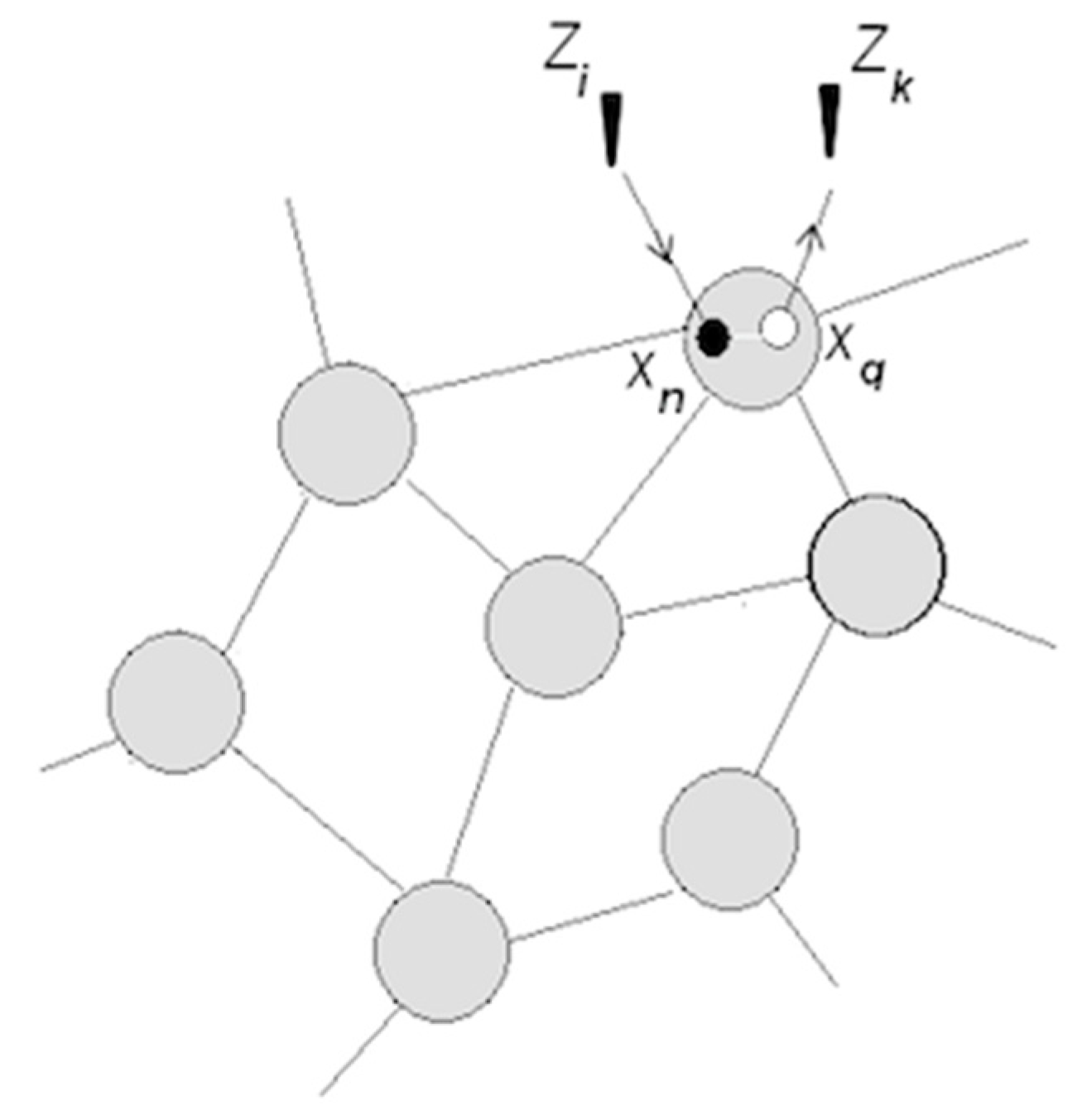

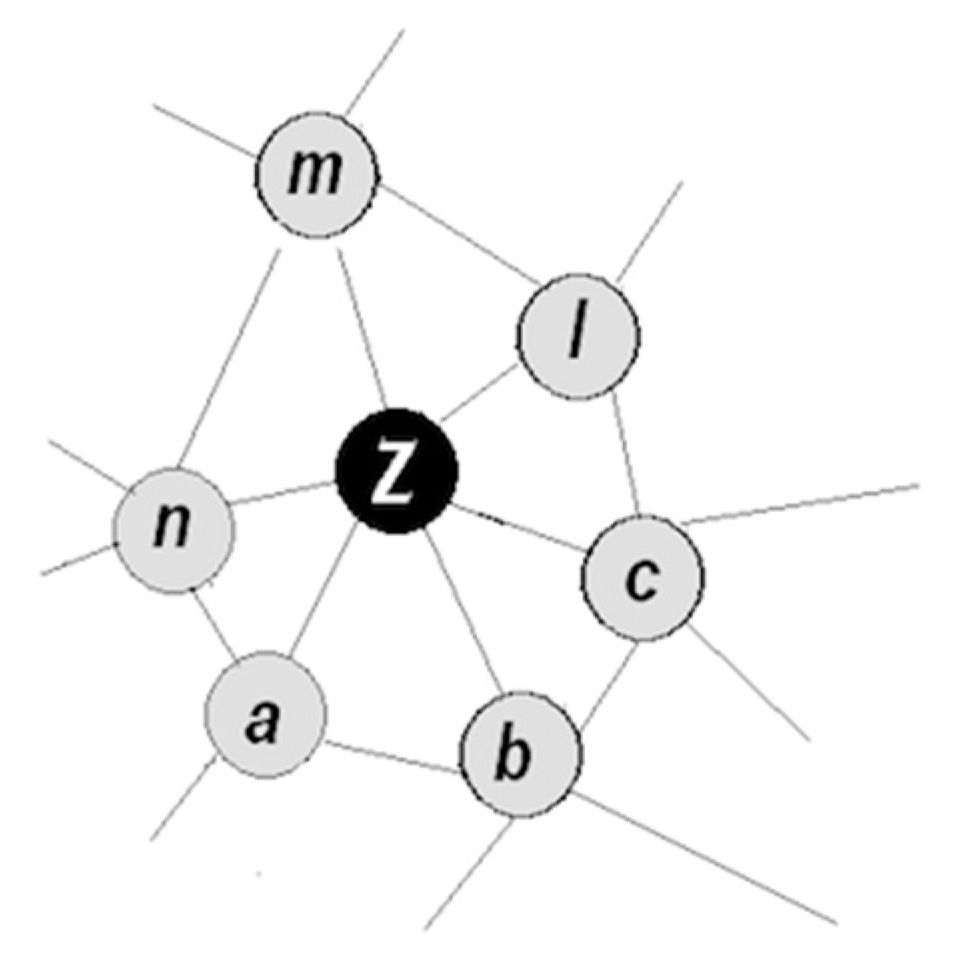

3.1. Neuronal Packets and Their Role in Understanding

3.1.1. Neuronal Packets: The Building Blocks of Understanding

3.1.2. Perception and Recognition

3.1.3. Apprehending Behavior

3.1.4. Rudimentary Understanding

- (1)

- Objects are in the immediate proximity of the animal (within the sensory-motor feedback loop);

- (2)

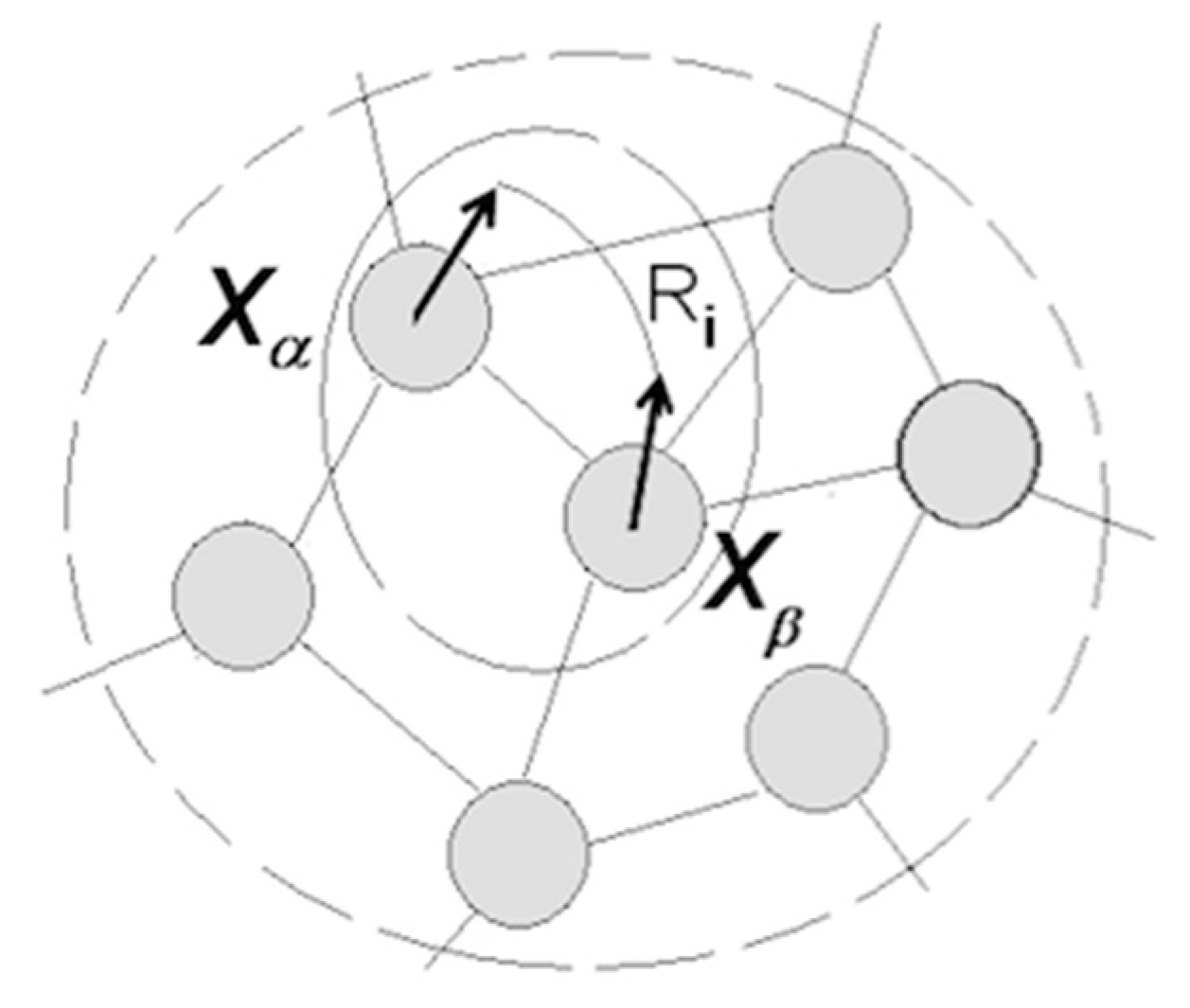

- Objects have familiar properties and are proximal in space and time, that is, have been co-occurring in the animal’s past history (accordingly, the corresponding packets occupy proximal positions in a small neighborhood in the packet network);

- (3)

- Manipulations are within the envelope of instinctive (genetically determined) responses (e.g., reaching, pulling, dragging, grabbing, etc.).

3.1.5. Cognitive Revolution: Emergence of Human Understanding

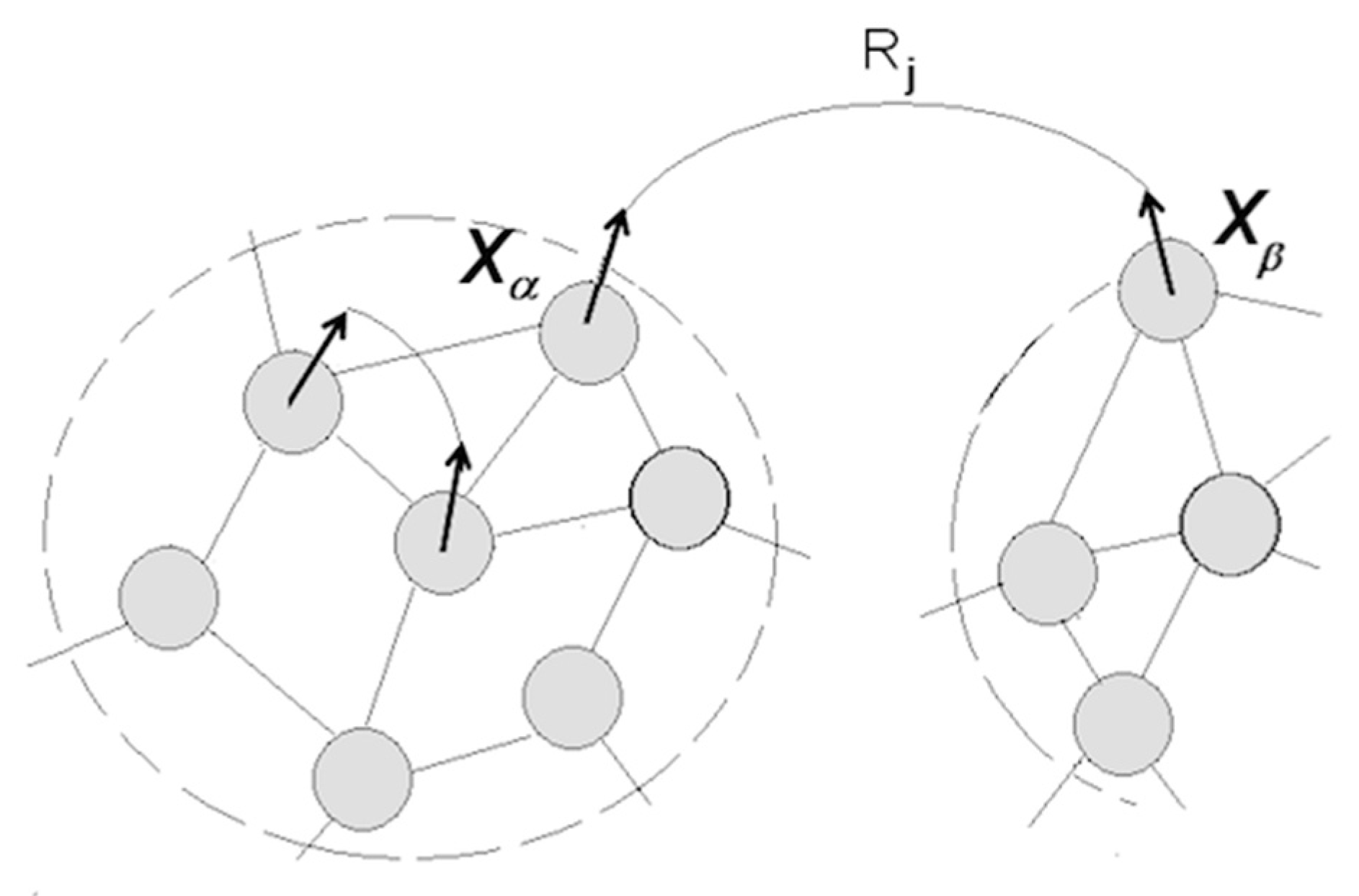

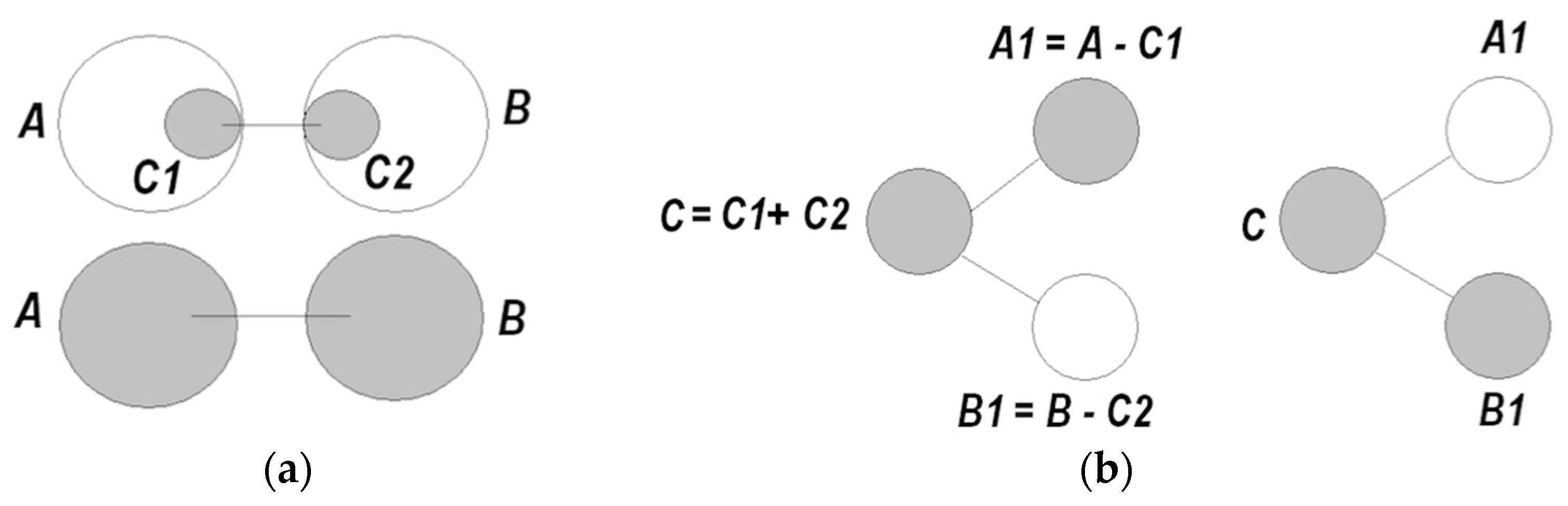

- (1) Coordinating packet vectors across unlimited spans in the packet network; and

- (2) Conducting such coordinations without motor-sensory feedback (i.e., while withdrawing from sensory inflows and suppressing overt motor activities).

- (1) If packet corresponds to a currently perceived object A, establishing coordination allows one to attribute causes of A’s behavior to some object B, which is not amenable to perception;

- (2) suggests the use of object B and deployment of coordination in order to produce some desired changes in the behavior of object A;

- (3) allows prediction of changes in B following changes in A;

- (4) Exercising coordination simulating interaction between ;

- (5) A coordinated pair becomes a functional unit → that can be coordinated with other units ( ) → , and so on;

- (6) Establishing coordinations equates to production of information, yielding reduction of entropy, and a growing degree of order in the system. Accordingly, information can be sought that facilitates entropy reduction, via self-directed construction, expansion, and integration of models;

- (7) Establishing coordination is experienced as attaining understanding, or grasping the meaning of behavior variations in A and B;

- (8) Understanding enables explanation.

- (1) Understanding entails entropy reduction, i.e., the resolution of uncertainty or expected surprise. Establishing relations (e.g., switch controls bulb) amounts to forming dependencies between packets, which is accompanied by producing information and reducing entropy; here H () denotes entropy before the dependency (relation, coordination) was established.

- (2) Understanding entails generalization, i.e., an increase in the marginal likelihood of internal models following a reduction in model complexity. Grasping a relation in a particular process enables transferring it to a variety of other processes different from the original one (e.g., having comprehended that switch controls bulb, the person can figure out how to handle desk lamps, floor lamps, fans, or other devices operated by switches, etc.). As formulated by Piaget: “…the subject must, in order to understand the process, be able to construct in thought an indefinite series … and to treat the series he has actually observed as just one sector of that unlimited range of possibilities” [6] (p. 222).

- (3) “Understanding brings out reason in things” [6] (p. 222), and thus enables explanations (“the bulb turned on because this switch controls it and it was turned up”).

- (4) Most importantly, understanding makes it possible to overcome the inertia of prior learning, and thus enables coping with disruptive changes and unprecedented conditions. Technically, intrinsic to modeling is the possibility of constructing, in thought, various packet groupings until a composition emerges fitting the situation at hand, and thus allowing explanation and prediction.
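The switch-and-bulb example in item (1) can be made concrete with Shannon entropies. The sketch below assumes two binary variables with hypothetical probabilities; neither the distributions nor the function names come from the source. It relies on the standard identity that the joint entropy equals the sum of the marginal entropies minus the mutual information, and it should be read as an illustration of "forming a dependency reduces entropy", not as a reconstruction of the source's own equation.

```python
import math
from typing import Dict, Iterable, Tuple

Joint = Dict[Tuple[str, str], float]


def entropy(probabilities: Iterable[float]) -> float:
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0.0)


def marginals(joint: Joint) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Marginal distributions of the first and second variable."""
    first: Dict[str, float] = {}
    second: Dict[str, float] = {}
    for (x, y), p in joint.items():
        first[x] = first.get(x, 0.0) + p
        second[y] = second.get(y, 0.0) + p
    return first, second


def mutual_information(joint: Joint) -> float:
    """I(X;Y) = H(X) + H(Y) - H(X,Y), in bits."""
    first, second = marginals(joint)
    return entropy(first.values()) + entropy(second.values()) - entropy(joint.values())


# Before the relation is grasped: switch and bulb treated as unrelated.
unrelated: Joint = {(s, b): 0.25 for s in ("up", "down") for b in ("on", "off")}
# After the relation is grasped: the switch position determines the bulb state.
related: Joint = {("up", "on"): 0.5, ("down", "off"): 0.5}

if __name__ == "__main__":
    for name, joint in (("unrelated", unrelated), ("related", related)):
        print(name, "joint entropy:", entropy(joint.values()),
              "mutual information:", mutual_information(joint))
```

In this toy case the joint entropy drops from 2 bits to 1 bit once the dependency is in place, and the 1-bit difference is the mutual information between the two variables.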

- (1)

- Intelligence derives from biophysical mechanisms allowing self-directed construction of mental models, establishing coordinated activities in neuronal packets residing in different domains in the packet network. Cognitive functions enabled by the mechanism range from figuring out methods for handling physical objects in order to achieve some desired objectives, to formulating scientific theories defining coordination between abstract variables.

- (2)

- Modeling entails entropy reduction. As a result, the process motivates extracting and producing information that is conducive to further entropy reduction, and thus has intrinsic worth to the system. Accounting for internal information production modifies Equation (1).here is the internal information increment. Entropy reduction inside the system— is compensated by entropy increases in the surrounds, keeping the overall process in line with the second law.

- (3)

- Along with maximizing intrinsic significance, modeling serves to maximize extrinsic value (utility) by supporting “mental simulation”, thus reducing prediction errors (minimizing variational free energy [11]). To underscore: In feedback-controlled coordinations, information has no intrinsic worth independent of the external conditions it signifies. Decoupling from feedback gives rise to intrinsic worth commensurate with the degree of entropy reduction the information obtains. The pursuit of intrinsic worth involves re-organizing and unifying mental models and seeking information that is subjectively significant, that is, conducive to further entropy reduction. Intrinsic worth motivates cognitive effort in search of understanding.

- (4)

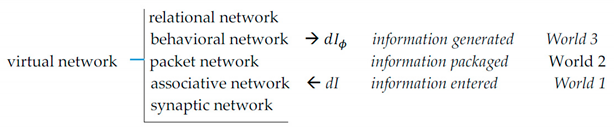

- The overall functional organization of the regulatory system is hierarchical, as shown below.

Synaptic wiring at the bottom gives rise to informational hierarchy, where each upper level is produced by operations on the lower one. The hierarchy extends upward indefinitely (models comprising models comprising models...).

- (5)

- The world is intelligible because its representation is constructed by the same mechanism that is employed in the attempts to understand it. Intelligibility does not equate to ready understanding, it only implies that understanding can be reached eventually with effort.
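Item (3) mentions minimizing variational free energy [11]. As a rough illustration of what that quantity is, the sketch below evaluates the standard textbook definition for a single observation and a two-valued hidden cause. Everything specific here is an assumption made for the example (the prior, the likelihood values, and the function names); it is not the model developed in this paper.

```python
import math
from typing import List, Sequence, Tuple


def free_energy(belief: Sequence[float], prior: Sequence[float],
                likelihood: Sequence[float]) -> float:
    """Variational free energy F = sum_s q(s) * (ln q(s) - ln p(o, s)).

    `likelihood[s]` is p(o | s) for the one outcome o that was observed.
    """
    total = 0.0
    for q_s, p_s, l_s in zip(belief, prior, likelihood):
        if q_s > 0.0:  # terms with q(s) = 0 contribute nothing
            total += q_s * (math.log(q_s) - math.log(p_s * l_s))
    return total


def exact_posterior(prior: Sequence[float],
                    likelihood: Sequence[float]) -> Tuple[List[float], float]:
    """Bayes rule for the observed outcome; also returns the evidence p(o)."""
    joint = [p_s * l_s for p_s, l_s in zip(prior, likelihood)]
    evidence = sum(joint)
    return [j / evidence for j in joint], evidence


# Hypothetical numbers: two equally likely hidden causes, one fits the data better.
PRIOR = [0.5, 0.5]
LIKELIHOOD = [0.9, 0.2]

if __name__ == "__main__":
    posterior, evidence = exact_posterior(PRIOR, LIKELIHOOD)
    print("surprise -ln p(o):       ", -math.log(evidence))
    print("F at the prior belief:   ", free_energy(PRIOR, PRIOR, LIKELIHOOD))
    print("F at the exact posterior:", free_energy(posterior, PRIOR, LIKELIHOOD))
```

Free energy is an upper bound on surprise, so it is lowest when the belief equals the exact posterior, where it coincides with the negative log evidence; any other belief, such as the unrevised prior, gives a larger value.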

3.2. Supportive Experimental Findings

3.2.1. Neuronal Packets—Are They “Real”?

3.2.2. Mental Modeling: From Fitting Sticks to Landing on the Moon

“There is, in fact, a very appreciable difference between the two types of co-ordination, the first having a material and causal character because it involves a co-ordination of movements, and the second being implicative. The co-ordinations of actions … must proceed by systematic steps, thus ensuring continual accommodation to the present and the conservation of the past, but impeding inferences as to the future, distant spaces, and possible developments. By contrast, mental co-ordination succeeds in combining all the multifarious data and successive data into an overall, simultaneous picture, which vastly multiplies the powers of spacio-temporal extension, and of deducing possible developments”.[6] (p. 219)

“In large complexes, each of which hangs together as a … functional or dynamic unit. Such a complex, an interrelated knot of pieces … is to be considered as a unit of perception and significance”.[48]

“consists essentially of taking stock of the spatial, functional, and dynamic relations among the perceived parts, so that they can be combined into one whole”.[48]

3.2.3. Language

“We can picture Merge’s output as a kind of triangle—the two arguments of Merge form the two legs of the triangle’s “base,” and the label sits on “top” of the triangle”.[50] (p. 114)

“Language evolved as an instrument of internal thought, with externalization as a secondary process”.[50] (p. 74)

4. Aspects of Human Cognition

4.1. Landscape Navigation in Norm and Pathology

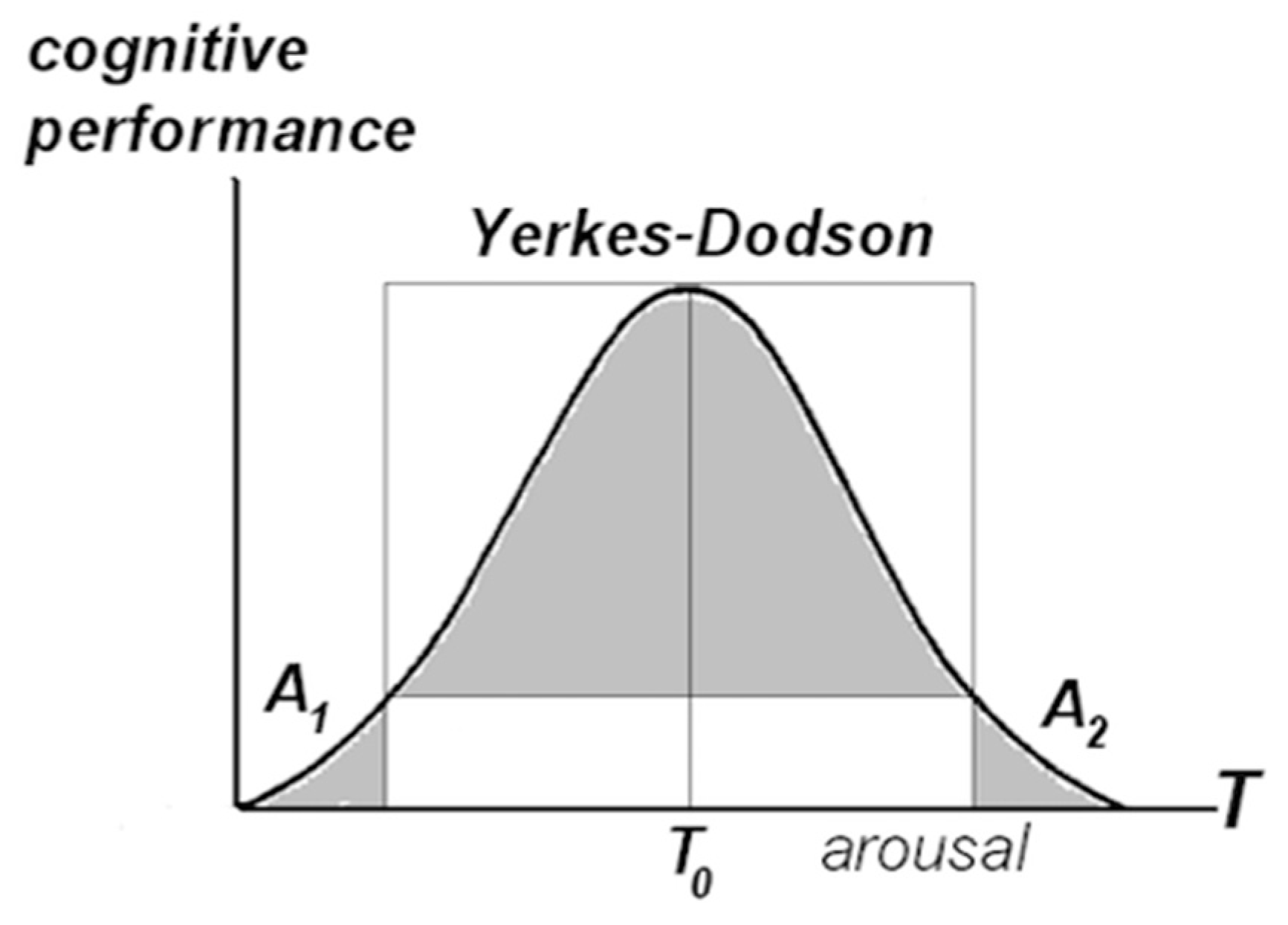

4.2. Cognitive Effort, Value Attribution, and Consciousness

4.3. Assimilation and Accommodation

4.4. Architecture for Coordination

4.5. From Self-Organization to Self-Realization

- (1) Genetics determines a person’s intellectual pursuits over the course of a lifetime and the ability to realize such pursuits within some range of conditions;

- (2) Absence of the requisite conditions can arrest self-realization and cause frustration.

5. Summary and Discussion

5.1. Discussion: How Neurons Make Us Smart

“Observe that a high level of mechanization can be achieved in executing the algorithm, without any evidence of understanding, and a high level of understanding can be achieved at a stage where the algorithm still has to be followed from an externally stored recipe”.[104]

“Abduction … is an inferential step … including preference for any one hypothesis over others which would equally explain the facts, so long as this preference is not based upon any previous knowledge bearing upon the truth of the hypothesis, nor on any testing of any of the hypotheses, after having admitted them on probation”.[105]

5.2. Clarifications and Definitions

5.2.1. The Brain Operates as a Resource Allocation System with Self-Adaptive Capabilities

5.2.2. Attention

“An attentional mechanism helps sets of the relevant neurons to fire in a coherent semi-oscillatory way … so that a temporary global unity is imposed on neurons in many different parts of the brain”.[123]

5.2.3. Motivation

5.2.4. Understanding in Humans and Animals

5.2.5. Neuronal Substrate of Relations

5.2.6. Relations as Objects

5.2.7. Thinking

5.2.8. Dynamics of Thinking

5.2.9. Learning with and without Understanding

5.2.10. Meaning and Value

5.2.11. Neuroenergetics

5.2.12. Gnostron

5.3. Further Research—A Fork in the Road

“Without offending against the principle of entropy in the physical sense … all organic creation achieves something that runs exactly counter to the purely probabilistic process in the inorganic realm. The organic world is constantly involved in a process of conversion from the more probable to the generally more improbable by continuously giving rise to higher, more complex states of harmony from lower, simpler grades of organization”.[147]

- Converting excessive heat into work;

- Biasing ATP hydrolysis towards accelerating release of Gibbs free energy and inhibiting release of metabolic heat;

- Reducing Landauer’s cost of information processing (by regulating access in the landscape).
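To give a sense of scale for the last two items, the sketch below computes the Landauer limit at body temperature and compares it with the free energy of hydrolyzing one ATP molecule. The assumptions are loud ones: 310 K is taken as body temperature, 30.5 kJ/mol is the commonly quoted standard-condition magnitude for ATP hydrolysis (values under cellular conditions differ), and the function names are hypothetical. Nothing here describes how the brain actually spends this energy.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23   # exact value in the SI
AVOGADRO_PER_MOL = 6.02214076e23   # exact value in the SI

BODY_TEMPERATURE_K = 310.0         # assumption for this example
ATP_HYDROLYSIS_KJ_PER_MOL = 30.5   # assumed textbook standard-condition magnitude


def landauer_cost_joules(bits: float, temperature_kelvin: float) -> float:
    """Minimum heat dissipated when erasing `bits` of information: bits * k_B * T * ln 2."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2.0)


def joules_per_molecule(kilojoules_per_mole: float) -> float:
    """Convert a molar energy into energy per single molecule."""
    return kilojoules_per_mole * 1000.0 / AVOGADRO_PER_MOL


if __name__ == "__main__":
    per_bit = landauer_cost_joules(1.0, BODY_TEMPERATURE_K)
    per_atp = joules_per_molecule(ATP_HYDROLYSIS_KJ_PER_MOL)
    print(f"Landauer limit at {BODY_TEMPERATURE_K:.0f} K: {per_bit:.3e} J per bit")
    print(f"ATP hydrolysis (assumed value): {per_atp:.3e} J per molecule")
    print(f"Bit erasures per ATP at the limit: {per_atp / per_bit:.1f}")
```

Under these assumptions the limit is about 3 × 10⁻²¹ J per bit, so one ATP molecule could in principle pay for on the order of ten to twenty bit erasures; real information processing runs far above this bound.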

(Recall that understanding was presumed to play a marginal role, if any, in problem solving [104], as seen in Section 5.1.) Neuropsychological theory of understanding has a dual objective: (a) analyzing performance benefits conferred by the understanding capacity, and (b) elucidating the underlying neuronal mechanisms, aiming at representing them within a unified functional architecture (architecture for understanding) (e.g., contingent on further analysis, the architecture might account for recent findings indicating that processing of plausible and implausible data engages different pathways in the brain [160]). The theory needs to be broad enough to allow comprehensive analysis of the role played by understanding in different manifestations of intelligence (“multiple intelligences” [161]).

“Unified theories of cognition are single sets of mechanisms that cover all of cognition—problem solving, decision making, routine action, memory, learning, skill, perception, motor activity, language, motivation, emotion, imagining, dreaming, daydreaming, etc. Cognition must be taken broadly, to include perception and motor activity”.[159]

5.4. Digest

Acknowledgments

Conflicts of Interest

References

- Bruner, J.S. Beyond the Information Given; W.W. Norton and Company: New York, NY, USA, 1973.

- Eddington, A. Space, Time and Gravitation; The University Press: Cambridge, UK, 1978; p. 198.

- Harari, Y.N. Sapiens: A Brief History of Humankind; Harper Perennial: New York, NY, USA, 2018.

- Popper, K.R. Knowledge and the Mind-Body Problem; Routledge: London, UK, 1994; p. 4.

- Popper, K.R.; Eccles, J.C. The Self and Its Brain; Springer: Berlin/Heidelberg, Germany, 1977.

- Piaget, J. Success and Understanding; Harvard University Press: Cambridge, MA, USA, 1978.

- Piaget, J. The Development of Thought: Equilibration of Cognitive Structures; The Viking Press: New York, NY, USA, 1975.

- Maturana, H.R.; Varela, F.J. The Tree of Knowledge: The Biological Roots of Human Understanding; Shambhala: Boston, MA, USA, 1998.

- Kirchhoff, M.; Parr, T.; Palacios, E.; Friston, K.; Kiverstein, J. The Markov blankets of life: Autonomy, active inference and the free energy principle. J. R. Soc. Interface 2018, 15, 20170792.

- Friston, K.J. Life as we know it. J. R. Soc. Interface 2013, 10, 20130475.

- Friston, K.J. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 2010, 11, 127–138.

- Russell, B. The Analysis of Mind; George Allen & Unwin LTD: London, UK, 1921; p. 292.

- Morowitz, H.J. Energy Flow in Biology; Ox Bow Press: Woodridge, CT, USA, 1979; p. 2.

- Prigogine, I. From Being to Becoming: Time and Complexity in the Physical Sciences; W.H. Freeman and Co.: San Francisco, CA, USA, 1980.

- Prigogine, I.; Stengers, I.; Toffler, A. Order Out of Chaos; Bantam: New York, NY, USA, 1984.

- Haken, H. Synergetics: Nonequilibrium Phase Transitions and Self-Organization in Physics, Chemistry and Biology; Springer: New York, NY, USA, 1977.

- Klimontovich, Y.L. Turbulent Motion and the Structure of Chaos: A New Approach to the Statistical Theory of Open Systems; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1991.

- Glansdorff, P.; Prigogine, I. Thermodynamic Theory of Structure, Stability and Fluctuations; Wiley-Interscience: London, UK, 1971.

- Klimontovich, Y.L. Statistical Physics; Harwood Academic Publishers: New York, NY, USA, 1986.

- Klimontovich, Y.L. Is turbulent motion chaos or order. Phys. B Condens. Matter 1996, 228, 51–62.

- Brillouin, L. Science and Information Theory; Academic Press: New York, NY, USA, 1956.

- Levitin, L.B. Physical information theory for 30 years: Basic concepts and results. In Quantum Aspects of Optical Communications; Bendjaballah, C., Hirota, O., Reynaud, S., Eds.; Springer: Berlin/Heidelberg, Germany, 1990; pp. 101–110.

- Stratonovich, R.L. On value of information. Izv. USSR Acad. Sci. Tech. Cybern. 1965, 5, 3–12. (In Russian)

- Stratonovich, R.L. Information Theory; Sovetskoe Radio: Moscow, Russia, 1975. (In Russian)

- Stratonovich, R.L. Nonlinear Nonequilibrium Thermodynamics: Linear and Nonlinear Fluctuation-Dissipation Theorems; Springer: Berlin/Heidelberg, Germany, 2012.

- Jarzynski, C. A nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997, 78, 2690.

- Still, S. Information bottleneck approach to predictive inference. Entropy 2014, 16, 968–989.

- Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2000.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 623–656.

- Yufik, Y.M. Virtual associative networks: A framework for cognitive modelling. In Brain and Values; Pribram, K.H., Ed.; Lawrence Erlbaum Associates: New York, NY, USA, 1998; pp. 109–177.

- Yufik, Y.M. How the mind works: An exercise in pragmatism. In Proceedings of the International Joint Conference on Neural Networks (IJCNN 02), Honolulu, HI, USA, 12–17 May 2002; pp. 2265–2269.

- Yufik, Y.M. Understanding, consciousness and thermodynamics of cognition. Chaos Solitons Fractals 2013, 55, 44–59.

- Yufik, Y.M.; Friston, K.J. Life and understanding: Origins of the understanding capacity in the self-organizing nervous system. Front. Syst. Neurosci. 2016, 10, 98.

- Hebb, D.O. The Organization of Behavior; Wiley & Sons: New York, NY, USA, 1949.

- Hebb, D.O. Essay on Mind; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1980.

- Briggman, K.L.; Abarbanel, H.D.; Kristan, W.B., Jr. From crawling to cognition: Analyzing the dynamical interactions among populations of neurons. Curr. Opin. Neurobiol. 2006, 16, 135–144.

- Briggman, K.L.; Abarbanel, H.D.; Kristan, W.B., Jr. Optical imaging of neuronal populations during decision-making. Science 2005, 307, 896–901.

- Calabrese, R.L. Motor networks: Shifting coalitions. Curr. Biol. 2006, 17, 139–141.

- Kristan, W.B., Jr.; Calabrese, R.L.; Friesen, W.O. Neuronal control of leech behavior. Prog. Neurobiol. 2005, 76, 279–327.

- Lin, L.; Osan, R.; Tsien, J.Z. Organizing principles of real-time memory encoding: Neural clique assemblies and universal neural codes. Trends Neurosci. 2006, 29, 48–57.

- Georgopoulos, A.P.; Lurito, J.T.; Petrides, M.; Schwartz, A.B.; Massey, J.T. Mental rotation of the neuronal population vector. Science 1989, 243, 234–236.

- Georgopoulos, A.P.; Massey, J.T. Cognitive spatial-motor processes 1. The making of movements at various angles from a stimulus direction. Exp. Brain Res. 1987, 65, 361–370.

- Georgopoulos, A.P.; Taira, M.; Lukashin, A. Cognitive neurophysiology of the motor cortex. Science 1993, 260, 47–52.

- Georgopoulos, A.P.; Kettner, R.E.; Schwartz, A.B. Primate motor cortex and free arm movements to visual targets in three-dimensional space. II. Coding of the direction of movement by a neuronal population. J. Neurosci. 1988, 8, 2928–2937.

- Amirikian, B.; Georgopoulos, A.P. Cortical populations and behaviour: Hebb’s thread. Can. J. Exp. Psychol. 1999, 53, 21–34.

- Chang, L.; Tsao, D.Y. The code for facial identity in the primate brain. Cell 2017, 169, 1013–1028.

- James, W. The Principles of Psychology; Dover Publications: New York, NY, USA, 1950; Volume 1, p. 586.

- De Groot, A.D. Thought and Choice in Chess; Basic Books Publishers: New York, NY, USA, 1965; pp. 330 & 333.

- Kasparov, G. How Life Imitates Chess; Bloomsbury: New York, NY, USA, 2007.

- Berwick, R.C.; Chomsky, N. Why Only Us? Language and Evolution; The MIT Press: Cambridge, MA, USA, 2016.

- Nelson, M.J.; Karoui, I.; Giber, K.; Yang, X.; Cohen, L.; Koopman, H.; Cash, S.C.; Naccache, L.; Hale, J.T.; Pallier, C.; et al. Neurophysiological dynamics of phrase-structure building during sentence processing. Proc. Natl. Acad. Sci. USA 2017, 114, E3669–E3678.

- Glenberg, A.M.; Robertson, D.A. Symbol grounding and meaning: A comparison of high-dimensional and embodied theories of meaning. J. Mem. Lang. 2000, 43, 379–401.

- Bower, T.G.R. Development in Infancy; W.H. Freeman & Co.: San Francisco, CA, USA, 1974.

- Bernstein, N. The Co-Ordination and Regulation of Movements; Pergamon Press: Oxford, UK, 1967.

- d’Avella, A.; Saltiel, P.; Bizzi, E. Combinations of muscle synergies in the construction of a natural motor behavior. Nat. Neurosci. 2003, 6, 300–308.

- d’Avella, A.; Bizzi, E. Shared and specific muscle synergies in natural motor behaviors. Proc. Natl. Acad. Sci. USA 2005, 102, 3076–3081.

- Nazifi, M.M.; Yoon, H.U.; Beschorner, K.; Hur, P. Shared and task-specific muscle synergies during normal walking and slipping. Front. Hum. Neurosci. 2017, 11, 40.

- Chvatal, S.A.; Ting, L.H. Voluntary and reactive recruitment of locomotor muscle synergies during perturbed walking. J. Neurosci. 2012, 32, 12237–12250.

- Overduin, S.A.; d’Avella, A.; Carmena, J.M.; Bizzi, E. Microstimulation activates a handful of muscle synergies. Neuron 2012, 76, 1071–1077.

- Sukstanskii, A.L.; Yablonskiy, D.A. Theoretical model of temperature regulation in the brain during changes in functional activity. Proc. Natl. Acad. Sci. USA 2006, 103, 12144–12149.

- Eysenck, M.W.; Keane, M.T. Cognitive Psychology; Psychology Press: London, UK, 1995.

- Horwitz, B.; Rumsey, J.M.; Grady, C.L. The cerebral metabolic landscape in autism; intercorrelations of regional glucose utilization. Arch. Neurol. 1988, 45, 749–755.

- Just, M.A.; Cherkassky, V.L.; Keller, T.A.; Minshew, N.J. Cortical activation and synchronization during sentence comprehension in high-functioning autism: Evidence of underconnectivity. Brain 2004, 127, 1811–1821.

- McAlonan, G.M.; Cheung, V.; Cheung, C.; Suckling, J.; Lam, J.Y.; Tai, S.; Yip, L.; Murphy, D.G.M.; Chua, S.E. Mapping the brain in autism: A voxel-based MRI study of volumetric differences and intercorrelations in autism. Brain 2005, 128, 268–276.

- Mayes, S.D.; Calhoun, S.L.; Murray, M.J.; Ahuja, M.; Smith, L.A. Anxiety, depression, and irritability in children with autism relative to children with other neuropsychiatric disorders and typical development. Res. Autism Spectr. Disord. 2011, 5, 474–485.

- Treffert, D.A. Savant syndrome: An extraordinary condition. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2009, 364, 1351–1357.

- Hardy, J.; Selkoe, D.J. The amyloid hypothesis of Alzheimer’s disease: Progress and problems on the road to therapeutics. Science 2002, 297, 353–358.

- Ahmed, R.M.; Devenney, E.M.; Irish, M.; Ittner, A.; Naismith, S.; Ittner, L.M.; Rohrer, J.D.; Halliday, G.M.; Eisen, A.; Hodges, J.R.; et al. Neuronal network disintegration: Common pathways linking neurodegenerative diseases. J. Neurol. Neurosurg. Psychiatry 2016, 87, 1234–1241.

- Descartes, R. Philosophical Essays; Bobbs-Merrill: Indianapolis, IN, USA, 1964.

- Libet, B.; Wright, E.W.; Feinstein, B.; Pearl, D.K. Subjective referral of the timing for a conscious sensory experience. Brain 1979, 102, 193–224.

- Stephens, G.L.; Graham, G. Self-Consciousness, Mental Agency, and the Clinical Psychopathology of Thought Insertion; Philosophy, Psychiatry and Psychology, Johns Hopkins University Press: Baltimore, MD, USA, 1994; pp. 1–10.

- Westbrook, A.; Braver, T.S. Cognitive effort: A neuroeconomic approach. Cogn. Affect. Behav. Neurosci. 2015, 15, 395–415.

- Kurzban, R.; Duckworth, A.; Kable, J.W.; Myers, J. An opportunity cost model of subjective effort and task performance. Behav. Brain Sci. 2013, 36, 661–726.

- Xu-Wilson, M.; Zee, D.S.; Shadmehr, R. The intrinsic value of visual information affects saccade velocities. Exp. Brain Res. 2009, 196, 475–481.

- Schütz, A.C.; Trommershäuser, J.; Gegenfurtner, K.R. Dynamic integration of information about salience and value for saccadic eye movements. Proc. Natl. Acad. Sci. USA 2012, 109, 7547–7552.

- Veale, D. Over-valued ideas: A conceptual analysis. Behav. Res. Ther. 2002, 40, 383–400.

- Bartra, O.; McGuire, J.T.; Kable, J.W. The valuation system: A coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage 2013, 76, 412–427.

- Diekhof, E.K.; Kaps, L.; Falkai, P.; Gruber, O. The role of the human ventral striatum and the medial orbitofrontal cortex in the representation of reward magnitude – An activation likelihood estimation meta-analysis of neuroimaging studies of passive reward expectancy and outcome processing. Neuropsychologia 2012, 50, 1252–1266.

- Peters, J.; Buchel, C. Neural representation of subjective reward value. Behav. Brain Res. 2010, 213, 135–141.

- Kurnianingsih, Y.A.; Mullette-Gillman, O.A. Neural mechanisms of the transformation from objective value to subjective utility: Converting from count to worth. Front. Neurosci. 2016, 10, 507.

- Raichle, M.E.; MacLeod, A.M.; Snyder, A.Z.; Powers, W.J.; Gusnard, D.A.; Shulman, G.L. A default mode of brain function. Proc. Natl. Acad. Sci. USA 2001, 98, 676–682. [Google Scholar] [CrossRef]

- Koestler, A. The Act of Creation; Arkana: London, UK, 1964. [Google Scholar]

- Noftzinger, E.A.; Mintun, M.A.; Wiseman, M.B.; Kupfer, D.J.; Moore, R.Y. Forebrain activation in REM sleep: An FDG PET study. Brain Res. 1997, 770, 192–201. [Google Scholar] [CrossRef]

- Vasquez, J.; Baghdoyan, H.A. Basal forebrain acetylcholine release during REM sleep is significantly greater than during waking. Am. J. Physiol. Regul. Integr. Comp. Physiol. 2001, 280, 598–601. [Google Scholar] [CrossRef]

- Tsujino, N.; Sakurai, T. Orexin/Hypocretin: A Neuropeptide at the interface of sleep, energy homeostasis, and reward system. Pharmacol. Rev. 2009, 61, 162–176. [Google Scholar] [CrossRef]

- Rechtschaffen, A.; Bregmann, B.M.; Everson, C.A.; Kushida, C.A.; Gulliland, M.A. Sleep deprivation in rats: Integration and discussion of the findings. Sleep 1989, 12, 68–87. [Google Scholar] [CrossRef] [PubMed]

- Bennion, K.A.; Mickley Stenmetz, K.R.; Kensinger, E.A.; Payne, J.D. Sleep and cortisol interact to support memory consolidation. Cereb. Cortex 2015, 25, 646–657. [Google Scholar] [CrossRef] [PubMed]

- Hobson, J.A. REM sleep and dreaming: Towards a theory of protoconsiousness. Nat. Rev. Neurosci. 2009, 10, 803–814. [Google Scholar] [CrossRef] [PubMed]

- Saalmann, Y.B.; Kastner, S. Cognitive and perceptual functions of the visual thalamus. Neuron 2011, 71, 209–223.

- Saalmann, Y.B.; Kastner, S. The cognitive thalamus. Front. Syst. Neurosci. 2015.

- Bruno, R.M.; Sakmann, B. Cortex is driven by weak but synchronously active thalamocortical synapses. Science 2006, 312, 1622–1627.

- Mitchell, A.S.; Chakraborty, S. What does the mediodorsal thalamus do? Front. Syst. Neurosci. 2013, 7, 37.

- Prevosto, V.; Sommer, M.A. Cognitive control of movement via the cerebellar-recipient thalamus. Front. Syst. Neurosci. 2013, 7, 56.

- Minamimoto, T.; Hori, Y.; Yamanaka, K.; Kimura, M. Neural signal for counteracting pre-action bias in the centromedian thalamic nucleus. Front. Syst. Neurosci. 2014, 8, 3.

- Klostermann, F.; Krugel, L.K.; Ehlen, F. Functional roles of the thalamus for language capacities. Front. Syst. Neurosci. 2013, 7, 32.

- Saalmann, Y.B. Intralaminar and medial thalamic influence on cortical synchrony, information transmission and cognition. Front. Syst. Neurosci. 2014, 8, 83.

- Ito, M. Control of mental activities by internal models in the cerebellum. Nat. Rev. Neurosci. 2008, 9, 304–313.

- Leisman, G.; Braun-Benjamin, O.; Melillo, R. Cognitive-motor interactions of the basal ganglia in development. Front. Syst. Neurosci. 2014, 8, 16.

- Cotterill, R.M. Cooperation of the basal ganglia, cerebellum, sensory cerebrum and hippocampus: Possible implications for cognition, consciousness, intelligence and creativity. Prog. Neurobiol. 2001, 64, 1–33.

- Yagi, T. Genetic basis of neuronal individuality in the mammalian brain. J. Neurogenet. 2013, 27, 97–105.

- Haken, H. Principles of Brain Functioning: A Synergetic Approach to Brain Activity, Behavior and Cognition; Springer: Berlin/Heidelberg, Germany, 1996.

- Rabinovich, M.I.; Huerta, R.; Varona, P.; Afraimovich, V.S. Transient cognitive dynamics, metastability, and decision making. PLoS Comput. Biol. 2008, 4, e1000072.

- Newborn, M. Kasparov vs. Deep Blue: Computer Chess Comes of Age; Springer: Berlin/Heidelberg, Germany, 1997.

- Newell, A.; Simon, H.A. Human Problem Solving; Prentice Hall: Upper Saddle River, NJ, USA, 1972.

- Peirce, C.S. Abduction and induction. In Philosophical Writings of Peirce; Buchler, J., Ed.; Dover Publications: New York, NY, USA, 1950; p. 161.

- Yufik, Y.; Yufik, T. Situational understanding. In Proceedings of the 7th International Conference on Advances in Computing, Communication, Information Technology, Rome, Italy, 27–28 October 2018; pp. 21–27.

- Yufik, Y.M. Gnostron: A framework for human-like machine understanding. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018.

- Yufik, Y.M. Systems and Method for Understanding Multi-Modal Data Streams. U.S. Patent 9,563,843,B2, 28 June 2016.

- Pellionisz, C.Z.; Llinas, R. Tensor network theory of the metaorganization of functional geometries in the central nervous system. Neuroscience 1985, 16, 245–273.

- Davies, P.C.W.; Rieper, E.; Tuszynski, J.A. Self-organization and entropy reduction in a living cell. Biosystems 2013, 111, 1–10.

- Daut, J. The living cell as an energy-transducing machine. Biochim. Biophys. Acta 1987, 895, 41–62.

- Marín, D.; Martín, M.; Sabater, B. Entropy decrease associated to solute compartmentalization in the cell. Biosystems 2009, 98, 31–36.

- Toyabe, S.; Sagawa, T.; Ueda, M.; Muneyuki, E.; Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nat. Phys. 2010, 6, 988–992.

- Faes, L.; Nollo, G.; Porta, A. Compensated transfer entropy as a tool for reliably estimating information transfer in physiological time series. Entropy 2013, 15, 198–219.

- Stramaglia, S.; Cortes, J.M.; Marinazzo, D. Synergy and redundancy in the Granger causal analysis of dynamical networks. New J. Phys. 2014, 16, 105003.

- Deutsch, D. Constructor theory of information. arXiv, 2014; arXiv:1405.5563.

- Deutsch, D. The Beginning of Infinity: Explanations That Transform the World; Penguin: London, UK, 2011.

- Bialek, W. Biophysics: Searching for Principles; Princeton University Press: Princeton, NJ, USA, 2012.

- Yufik, Y.M. Probabilistic Resource Allocation System with Self-Adaptive Capabilities. U.S. Patent 5,794,224, 11 August 1998.

- Sperry, R. A modified concept of consciousness. Psychol. Rev. 1969, 76, 534.

- Han, S.W.; Shin, H.; Jeong, D.; Jung, S.; Bae, E.; Kim, J.Y.; Baek, H.-M.; Kim, K. Neural substrates of purely endogenous, self-regulatory control of attention. Sci. Rep. 2018, 8, 925.

- Asplund, C.L.; Todd, J.J.; Snyder, A.P.; Marois, R. A central role for the lateral prefrontal cortex in goal-directed and stimulus-driven attention. Nat. Neurosci. 2010, 13, 507–512.

- Crick, F.; Koch, C. Towards a neurobiological theory of consciousness. Semin. Neurosci. 1990, 2, 263.

- Duffy, J.D. The neural substrate of consciousness. Psychiatr. Ann. 1997, 27, 24–29.

- Kimura, M.; Yamada, H.; Matsumoto, N. Tonically active neurons in the striatum encode motivational contexts of action. Brain Dev. 2003, 25 (Suppl. 1), S20–S23.

- Howard, S.R.; Avarguès-Weber, A.; Garcia, J.E.; Greentree, A.D.; Dyer, A.G. Numerical cognition in honeybees enables addition and subtraction. Sci. Adv. 2019, 5, eaav0961.

- Matzel, L.D.; Kolata, S. Selective attention, working memory, and animal intelligence. Neurosci. Biobehav. Rev. 2010, 34, 23–30.

- Banerjee, K.; Chabris, C.F.; Johnson, V.E.; Lee, J.J.; Tsao, F.; Hauser, M.D. General intelligence in another primate: Individual differences across cognitive task performance in a New World Monkey (Saguinus oedipus). PLoS ONE 2009, 4, e5883.

- Chagas, A.M.; Theis, L.; Sengupta, B.; Stuttgen, M.C.; Bethge, M.; Schwarz, C. Functional analysis of ultra high information rates conveyed by rat vibrissal primary afferents. Front. Neural Circuits 2013.

- Fried, S.I.; Munch, T.A.; Werblin, F.S. Directional selectivity is formed at multiple levels by laterally offset inhibition in the rabbit retina. Neuron 2005, 46, 1117–1127.

- Singh, R.E.; Iqbal, K.; White, G.; Hutchinson, T.E. A systematic review on muscle synergies: From building blocks of motor behavior to neurorehabilitation tool. Appl. Bionics Biomech. 2018, 2018, 3615368.

- Rosenbaum, D.A. Human Motor Control; Elsevier: New York, NY, USA, 2010.

- Mussa-Ivaldi, F.A.; Solla, S.A. Neural primitives for motion control. IEEE J. Ocean. Eng. 2004, 29, 640–650.

- Quiroga, R. Concept cells: The building blocks of declarative memory functions. Nat. Rev. Neurosci. 2012, 13, 587–597.

- Roberts, D.D. The Existential Graphs of Charles S. Peirce; Mouton: The Hague, The Netherlands, 1973.

- Rabinovich, M.I.; Varona, P. Robust transient dynamics and brain functions. Front. Comput. Neurosci. 2011.

- Beggs, J.M.; Plenz, D. Neuronal avalanches in neocortical circuits. J. Neurosci. 2003, 23, 11167–11177.

- Cattell, R.B. Intelligence: Its Structure, Growth, and Action; Elsevier Science: New York, NY, USA, 1987.

- Harris, J.; Jolivet, R.; Attwell, D. Synaptic energy use and supply. Neuron 2012, 75, 762–777.

- Aiello, L.C.; Wheeler, P. The expensive tissue hypothesis: The brain and the digestive system in human and primate evolution. Curr. Anthropol. 1995, 36, 199–221.

- Attwell, D.; Laughlin, S.B. An energy budget for signaling in the grey matter of the brain. J. Cereb. Blood Flow Metab. 2001, 21, 1133–1145.

- Sabour, S.; Frost, N.; Hinton, G.E. Dynamic Routing between Capsules. Available online: https://papers.nips.cc/paper/6975-dynamic-routing-between-capsules.pdf (accessed on 20 March 2019).

- Dorny, N.C. A Vector Space Approach to Models and Optimization; John Wiley & Sons: New York, NY, USA, 1975.

- Kozma, R.; Noack, R. Neuroenergetics of brain operation and implications for energy-aware computing. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 722–727.

- Swenson, R. Order, evolution, and natural law: Fundamental relations in complex systems theory. In Cybernetics and Applied Systems; Negoita, C.V., Ed.; Marcel Dekker, Inc.: New York, NY, USA, 1992; pp. 125–146.

- Swenson, R. Emergent attractors and the law of maximum entropy production: Foundations to a theory of general evolution. Sys. Res. 1989, 6, 187–197.

- Lorenz, K. The Natural Science of the Human Species; The MIT Press: Cambridge, MA, USA, 1997; p. 93.

- Kittel, C. Thermal Physics; John Wiley & Sons: New York, NY, USA, 1977.

- Haynie, D.T. Biological Thermodynamics; Cambridge University Press: Cambridge, UK, 2001.

- Lamprecht, I.; Zotin, A.I. Thermodynamics of Biological Processes; De Gruyter: New York, NY, USA, 1978.

- Werner, A.G.; Jirsa, V.K. Metastability, criticality and phase transitions in brain and its models. Biosystems 2007, 90, 496–508.

- Fingelkurts, A.; Fingelkurts, A. Making complexity simpler: Multivariability and metastability in the brain. Int. J. Neurosci. 2004, 114, 843–862.

- Bressler, S.L.; Kelso, J.A.S. Cortical coordination dynamics and cognition. Trends Cogn. Sci. 2001, 5, 26–36.

- Edelman, G. Neural Darwinism: The Theory of Neuronal Group Selection; Basic Books: New York, NY, USA, 1987.

- Van Gelder, T. What might cognition be, if not computation? J. Philos. 1995, 92, 345–381.

- Port, R.; van Gelder, T. Mind as Motion: Explorations in the Dynamics of Cognition; MIT Press: Cambridge, MA, USA, 1995.

- Kelso, J.A.S. Dynamic Patterns: The Self-Organization of Brain and Behavior; Bradford Book, The MIT Press: Cambridge, MA, USA, 1995.

- Lakoff, G.; Nunez, R.E. Where Mathematics Comes From: How the Embodied Mind Brings Mathematics into Being; Basic Books: New York, NY, USA, 2000.

- Newell, A. Précis of unified theories of cognition. Behav. Brain Sci. 1992, 15, 425–437.

- Fugelsang, J.A.; Dunbar, K.N. Brain-based mechanisms underlying complex causal thinking. Neuropsychologia 2005, 43, 1204–1213.

- Gardner, H. Frames of Mind: The Theory of Multiple Intelligences; Basic Books: New York, NY, USA, 2011.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yufik, Y.M. The Understanding Capacity and Information Dynamics in the Human Brain. Entropy 2019, 21, 308. https://doi.org/10.3390/e21030308