1. Introduction

Quantifying the similarity between two probability distributions is a central component of a variety of applications in the statistics, quantitative finance, and engineering literature. Such measures are one of the main inputs into classification, clustering, and optimization algorithms. In [

1], the author compiles and categorizes a collection of sixty-five similarity measures and stresses that the identification of a proper notion of similarity for the application at hand is crucial to its success. Financial securities are often compared based on the values of statistics associated with their return distributions. For example, the historical volatility of a stock is defined to be the standard deviation of its daily return distribution and the 95% Value-at-Risk is the

quantile of this distribution. Our main aim will be to extend beyond return distribution summary statistic based comparisons of financial securities by utilizing their full return distributions when assessing their similarity. Specifically, we will consider the Fisher information distance on a model space of distributions which in turn will be used in both nearest neighbor and clustering applications that focus on the entire risk profiles of financial securities.

Grouping related probability distributions according to their similarity has been considered in a number of clustering applications. In [

2], the authors develop a Kullback-Leibler divergence based clustering technique. More general Bergman divergences are used in [

3] for clustering applications. The authors of [

4] develop several distribution based clustering techniques using the

norm between distributions as a similarity measure. In [

5,

6,

7], the authors explore information geometry based clustering methods using the Fisher-Rao distance distributions as a similarity measure. The authors also mention that the development of clustering algorithms based upon probability distributions is a relatively unexplored area. The promise of these techniques is that they take into account the entirety of the distribution of the objects being clustered which may provide a more precise notion of similarity than traditional measures that use distribution summary statistics for comparison. For example, two distributions with equal means and variances can have significantly different probability density functions. A distribution based similarity measure should be able to distinguish such differences whereas a measure based on just the mean and variance statistics would conclude that these two models are identical.

Clustering techniques applied to financial return data predominately rely upon single statistics associated with these time series rather than a model for a full return distribution [

8]. Specifically, similarity measures are defined in terms of functions of the correlation between these series. Each of [

9,

10,

11], utilize correlation distance measures to develop hierarchical clustering algorithms in order to explore the network structures in equity markets. These techniques take only the return time series of a collection of stocks as input and are able to identify hierarchical sector structure solely from this information. The authors of [

12] utilize related clustering techniques to improve traditional index tracking strategies. Commodity network properties are explored with analogous methods in [

13]. Correlation based clustering methods are useful for extracting network and sector structures from financial return data; however, they have limited utility when comparing the risk profiles of these securities, which is a strength of distributional based similarity measures.

Our aim is to develop a similarity measure to compare the return distributions of financial time series. Specifically, given end of day return data for a collection of equities, we will use maximum likelihood estimation or related model fitting techniques to identify a single model from a parametric family of distributions with data. Given the parameters of two such models, we consider the question of defining a distance between the models, which is a function of these parameters. We use the Fisher information distance which is constructed from the Fisher information metric of the distribution family for this purpose. The main practical focus will be to group loss distributions by their risk profiles; specifically, how similar are the left tails of the two distributions? With this in mind, we focus on the generalized Pareto and generalized extreme value distributions from extreme value theory. This distance function may be used as an input into a nearest neighbor or hierarchical clustering method, both of which provide a risk based clustering of financial securities analogous to the previously mentioned correlation methods.

The Fisher information metric and its associated distance are central concepts in the subject of information geometry [

14,

15,

16,

17] which draws upon ideas from statistics, differential geometry, and information theory to study the geometric structure of statistical models. The main connection between a family of statistical models and differential geometry is the identification of the positive definite symmetric Fisher information matrix with a Riemannian metric on the space of all such distributions. The distance function associated with this metric is then taken to be a measure of similarity between models. In addition, a characterization theorem due to Chentsov [

18] states that this is the unique metric one can place on a space of probability distributions that has certain desirable statistical properties explained further below.

One of the main limitations of the Fisher distance, which has lead to its somewhat slow adoption in practical applications, is the difficulty encountered in its computation. It is difficult to develop robust methods to directly solve the geodesic equations since the form of these equations, and in particular their singularity structure, is model dependent; we provide an example of this issue below. An alternative technique is provided in [

19,

20], where the author developed a second order formula using Riemann normal coordinates that locally approximate geodesic distances. This technique may be merged with graph theoretic methods to develop a global geodesic distance approximation algorithm. However, coordinate transformations to and from normal coordinates make this technique quite complicated. We will utilize simple robust methods for computing geodesic distances in model spaces of dimension two to four developed in [

21]. Here, the authors construct a Hamiltonian Fast Marching technique that solves an Eikonal equation for the distance function of a Riemannian manifold. This technique is robust and works well in many singular geometries and complex manifolds that the authors feature. The method may be roughly viewed as a generalization of Dijkstra’s algorithm applied to a discretized version of the Riemannian manifold. We have found that it is well suited for the computation of geodesic distances for Fisher metric geometries, and we use it in the distance computations described in the below higher dimensional applications.

We provide several novel contributions to the information geometry, clustering, and financial risk literature. First, we develop a closed form expression for the Fisher information distance between one-dimensional models. This may be used to measure the distance between members of a distribution family that depends on a single parameter or higher dimensional models where all but a single parameter is fixed. Next, we compute the components of the Fisher information matrix for the generalized Pareto and generalized extreme value distributions. Both are well suited for modeling the left tail of return distributions of financial securities as well as the distribution of the worst single day loss over a yearly period [

10,

22,

23,

24,

25]. In particular, modeling the left tail of the distribution is important for identifying securities with similar risk metrics such as their Value-at-Risk and expected shortfall. We finally provide nearest neighbor and hierarchical clustering applications that group together equity return distributions based on the Fisher distance between them. This results in a new risk focused clustering technique of financial securities.

This article is organized as follows. In

Section 2, we review the relevant background in information geometry as well as complications that arise in developing robust techniques to directly solve the geodesic equations. In

Section 3, we develop a closed form expression, up to quadrature, for the Fisher information distance of one-dimensional models and provide several examples. In

Section 4, we examine difficulties involved in computing geodesic distances in higher dimensional models, and compute the Fisher information matrix for univariate normal distributions, the generalized Pareto distribution, and the generalized extreme value distribution. We also compare the Fisher distance to the Kullback-Leibler divergence in the case of univariate Gaussian models. In

Section 5, we utilize these expressions in risk focused nearest neighbor and worst daily loss clustering applications for equity securities. Finally, in

Section 6, we conclude and summarize future directions to explore.

2. Information Geometry Background

Information geometry is a relatively new discipline which combines statistics and differential geometry together in the study of statistical model Riemannian manifolds, c.f. [

14,

15,

16,

17,

26]. Our main aim is to utilize the Fisher information distance on such a manifold as a similarity measure between probability distributions in subsequent applications. With this end in mind, we define the Fisher distance by first considering a random variable with probability density function

for

with parameters

. Denote the set of all probability distributions for this model by

where here

is the set of admissible values of the parameters. We refer to an element of

by its model parameters. Given

, the Fisher distance is a function

constructed from the log-likelihood of this model

The components of the Fisher information matrix

are defined as the expected value of the negative Hessian matrix of the log likelihood of

.

The Fisher information matrix is positive definite symmetric and also has many applications in estimation and information theory, most notably the Cramér-Rao bounds [

27,

28] that enable one to establish lower bounds on the variance of unbiased estimators of deterministic model parameters.

In order to construct a distance function from the Fisher information matrix, we identify

with a Riemannian metric

referred to as the Fisher information metric on the model space

. This identification was first made in [

28] and is the cornerstone of the subject of information geometry. Given the Riemannian manifold

, we may define a distance function in terms of the Fisher information metric in the usual manner in Riemannian geometry. In particular, the distance between two models

is given by the arc-length of the geodesic that connects these two points. More specifically, the components of such a geodesic

, with

and

are determined by minimizing the length functional

over the space of all continuously differentiable curves

that satisfy

and

. One can show that this is equivalent to solving the second order quasi-linear system of geodesic equations boundary value problem

where here the Christoffel symbols are defined by

assuming that

is parameterized on the unit interval. Given a solution to these equations

, we can compute the distance between the two models by evaluating

.

The main motivation for using the Fisher information distance as a similarity measure between different models in the same family of distributions is due to a characterization theorem of Chentsov [

18]. As described in [

14], Chentsov’s theorem roughly states that the Fisher information metric equipped with any one of a special set of

-connections, which includes the Levi-Civita connection, are the unique metric/connection combinations that are invariant under sufficient statistic mappings of the model parameters; this includes all injective parameter mappings as well. This is a statistically desirable property as model re-parameterization, for example, should not influence any notion of distance between distinct models. There are several related characterization theorems for the Fisher metric [

16,

29,

30], which extend Chentsov’s initial work and establish this result in the setting of continuous distributions. As a result, the Fisher information metric and its associated distance function provide the most natural means of defining a distance between two distributions of the same parametric family.

There are two major difficulties in using the Fisher information distance in applications. First, it is often not possible to obtain simple closed form expressions for the values of

. This results in the geodesic equations taking a complex form which requires a numerical quadrature method to be used inside a differential equation solver to calculate distances. Such methods are often intractable and unstable. Second, solving the geodesic Equation (

5) numerically can be quite challenging due to singularities that arise in its coefficient functions. These issues are illustrated in the case of the Pareto Power Law distribution ([

10], p. 61):

The Fisher information metric and inverse take a simple closed form:

and geodesic equations for this model are given by

which need to be solved numerically. One such method to solve these equations is the shooting point method. Here given two models

and

, the boundary value problem defined by Equation (

9) with initial conditions

and terminal conditions

can be transformed into an initial value problem where the initial conditions are supplemented with a choice of the derivative

for a vector

v in the tangent space of the initial point. For a fixed

v, one then integrates outward and computes the distance between the geodesic and the target boundary point. The initial choice vector

v is refined through a search over the tangent space until this distance vanishes.

We have had some success using the shooting point method to solve these equations in specific instances of initial and terminal points; however, producing a robust solution which works for any boundary conditions can be quite challenging. In particular, note that there are two singularities in this equation when or ; these can cause the shooting point method to fail if the geodesic being solved for passes nearby these points. We discuss alternative methods to approximate geodesic distances in two or higher dimensions below, and now focus on the one-dimensional setting.

3. Distance Functions for One-Dimensional Models

In the case of one-dimensional models consisting of a single free parameter, we can determine the Fisher distance exactly up to numerical quadrature. These models may be specified in terms of a single parameter or result from a higher dimensional model that has had all but one parameter fixed. We first consider the case of the exponential distribution before treating the generic one-dimensional model.

The probability density of the exponential distribution is defined by

for

. The single component of the Fisher metric

is given by

One can compute the distance function for this model directly by solving the geodesic equation. The inverse metric is given by

and the Christoffel symbol is

. The geodesic equation for the model parameter

is

which has solution

. If we take

and

, then solving for

, we find that

and the distance between these two models is given by

We follow a similar process to develop a generic formula for the Fisher distance on one-dimensional model spaces. Consider a one-dimensional model

whose Fisher information matrix has a single component that takes the form

so that the information metric is given by

where here

is assumed to be at least

. The Christoffel symbol for this metric is given by

For any fixed

define

Then in the coordinate

s, the metric takes the form

which is the Euclidean metric. Here

s is an arc length coordinate, and the distance between two points

and

is given by

Thus one can compute the distance function for any one-dimensional model by integrating the Fisher metric and evaluating the difference of the result at the model parameters. We now turn to examining the additional complexities that are involved in the two or higher dimensional setting.

5. Applications

We now proceed to consider three applications of the Fisher distance. First, we compare the Kullback Leibler divergence to the Fisher distance in the case of the normal distribution model to develop intuition for differences in these similarity measures. Second, we consider a nearest neighbor clustering application where we initially fit generalized Pareto distributions to equity return time series of members of the NASDAQ 100 index and consider their associated loss distributions. We then consider a single stock, AAPL, and identify which securities are nearest AAPL in a Fisher distance sense; thus finding the stocks whose risk profiles most closely resemble that of AAPL. Finally, we extend this idea to a hierarchical clustering application. Instead of focusing on a single stock, we iteratively cluster groups of stocks with a bottom-up hierarchical clustering algorithm that utilizes the Fisher distance as a similarity measure between their pairwise loss distributions along with Ward’s linkage criteria for measuring the distance between clusters of stocks. This results in a risk focused hierarchical grouping of stocks.

5.1. Comparison between KL Divergence and Fisher Distance

We first examine differences between the Kullback Leibler (KL) divergence, which is widely used as a similarity measure between probability distributions, and the Fisher information distance in the case of a univariate normal distribution through an empirical example. A related comparison was developed in [

33] which we extend by considering an empirical example focused on equity returns from NASDAQ 100 stocks. To construct the dataset for this example, we download daily historical index components and end of day closing price data for the NASDAQ 100 index from Bloomberg between 1 January 2010 and 31 December 2015 and select stocks that remained in the index over this entire timeframe. Data was downloaded with Bloomberg’s Python API package blpapi. We forward fill missing data at the price level so that a stock whose price was filled for a single day will have a corresponding zero daily return. We note that the price data is split and dividend adjusted by Bloomberg and that, with a few exceptions, each time series is fully populated over the timeframe being considered.

Our aim is to study differences between the Fisher distance and widely used KL divergence similarity measure. It is known that these two distance measures agree to second order when measuring the disparity between infinitesimally close distributions [

43,

44]; however, significant differences arise on larger distance scales. Part of this comparison will examine how these distances differ when applied to the maximum likelihood parameters of each stock in the NASDAQ100 dataset. In particular, we fit the distribution to each of the return series using the unbiased MLE estimators for a normal distribution.

The general KL divergence for two probability distributions

is defined by

which for a univariate Gaussian distribution can be calculated explicitly

This is not a distance function as it is neither symmetric nor does it satisfy the triangle inequality. However, it has been widely used as a similarity measure between distributions, in particular, in information theory applications [

45]. We first provide a graphical comparison in

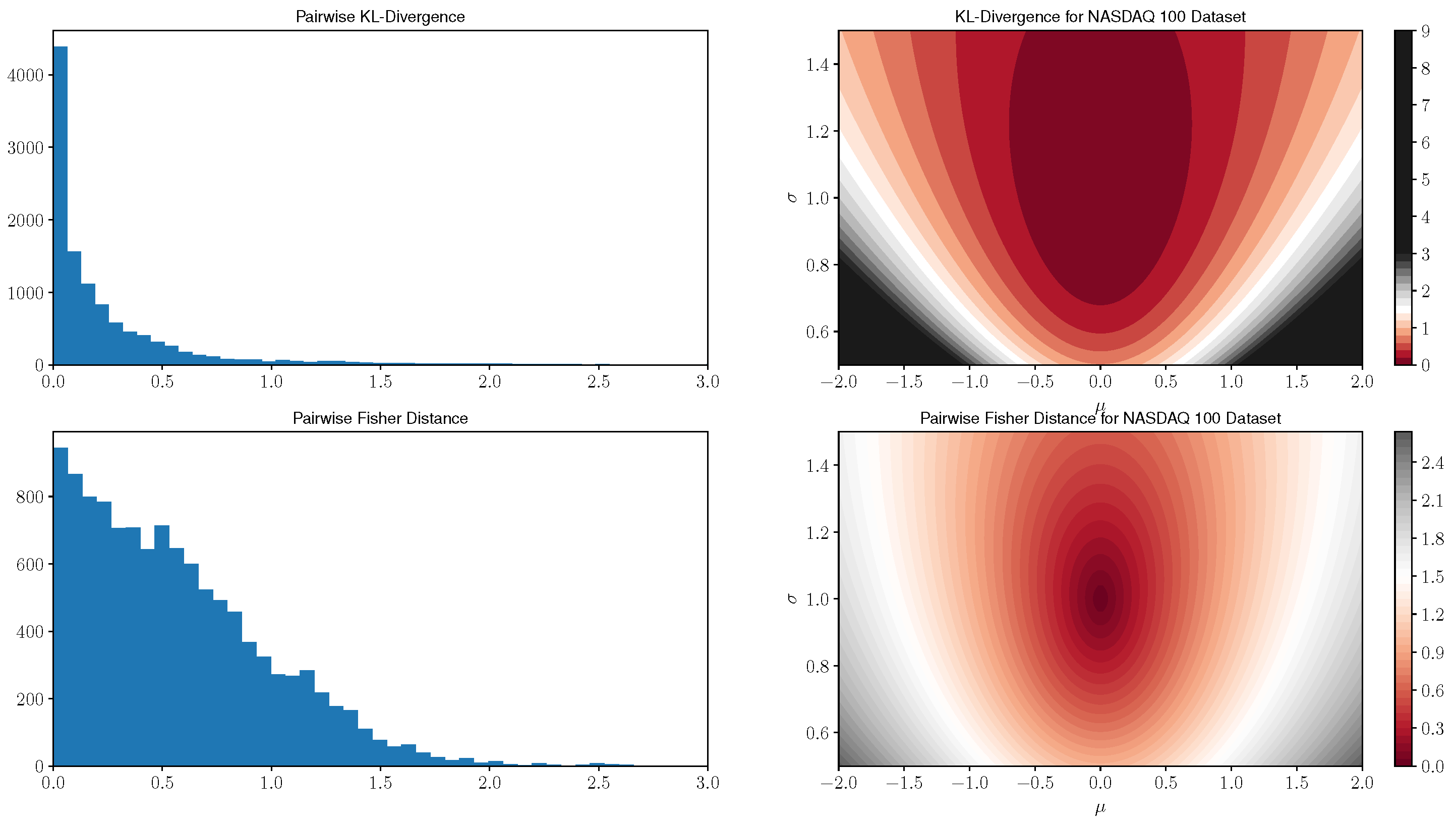

Figure 1 for pairwise distances between all models in this NASDAQ 100 dataset as well as a visualization of the distance functions to a standard normal distribution of both similarity measures.

Here, we compute all pairwise distances between the maximum likelihood model parameters models of 107 stocks which remained in the index over the timeframe of consideration. In the left most plot of

Figure 1, we display histograms of pairwise distances for both similarity measures. The right tail of the KL divergence histograms was truncated at an upper limit of 3.0; however, we note that the KL divergence has several outlier distances. In particular, 13 values greater than 10.0 with a maximum value of 19.2 were observed. The pairwise Fisher distance histogram contains all data points, and by comparison, the maximum value is 2.7. Next, note that the KL divergence histogram has roughly an exponential decay whereas the Fisher distance histogram declines linearly. The KL divergence tends to concentrate pairwise distances near zero values when compared with the Fisher distance; this provides an indication that the Fisher distance may distinguish subtle differences in the return distributions between the equity series more strongly than the KL divergence. In addition, the greater dispersion of Fisher distances, in relation to those corresponding to the KL divergence, motivates its utilization in clustering algorithms. Specifically, one would expect a more informative cluster structure using the Fisher distance as opposed to having many data points tightly grouped together in the case of the KL divergence.

In the right two plots of

Figure 1, we display contour plots that measure the similarity between all normal models in the domain and a standard

distribution, i.e., we plot level sets of the function

for each

in the domain of consideration. Here we can see that the KL divergence initially concentrates around zero for models close to the standard Gaussian, but rapidly expands for further away models which have increasingly large values in comparison to the Fisher distance. In addition, the Fisher distance has slower growth and is more granular for models near the standard Gaussian.

5.2. Generalized Pareto Nearest Neighbor Example

We now consider an application of identifying the nearest neighbors of a given stock based on the Fisher distance between the loss distribution of the stock and the securities to which it is being compared. We use the generalized Pareto distribution as a model for the loss distributions of these stocks.

There are many ways to fit a generalized Pareto distribution to data, c.f. [

46] for a comparison of a subset of such methods. Maximum likelihood estimation is notoriously difficult as the likelihood function is undefined for portions of the parameter domain. Multiple alternative fitting methods for the generalized Pareto distribution have been developed [

47,

48,

49,

50,

51] which are generally more stable and robust when compared with typical estimation techniques such as the maximum likelihood estimation or the method of moments. Although any of these fitting methods may be used in this application, since our focus is model comparison and not estimation, we use a hybrid estimation procedure developed in [

45] based on minimizing the Anderson-Darling statistic of this model which is both straightforward to implement and yields robust and intuitive results which we now briefly describe.

Given a sample

from an equity loss distribution, we note that the maximum likelihood estimator for the location parameter is

. We first compute

for the loss distribution of each stock, which is typically zero, and subtract means from the samples of every security. As this parameter does not vary significantly over models, we will fix it for the remainder of this application and fit and compare models with the two free parameters

. As in [

45], define

, and fit this model by defining a set of functions

for

where here

denotes the

i-th order statistic of the sample. Then we minimize

using a BFGS optimizer [

52] setting the initializer at 10% of the maximum value of the sample. Denote the minimum value by

, which defines the two model parameter estimators

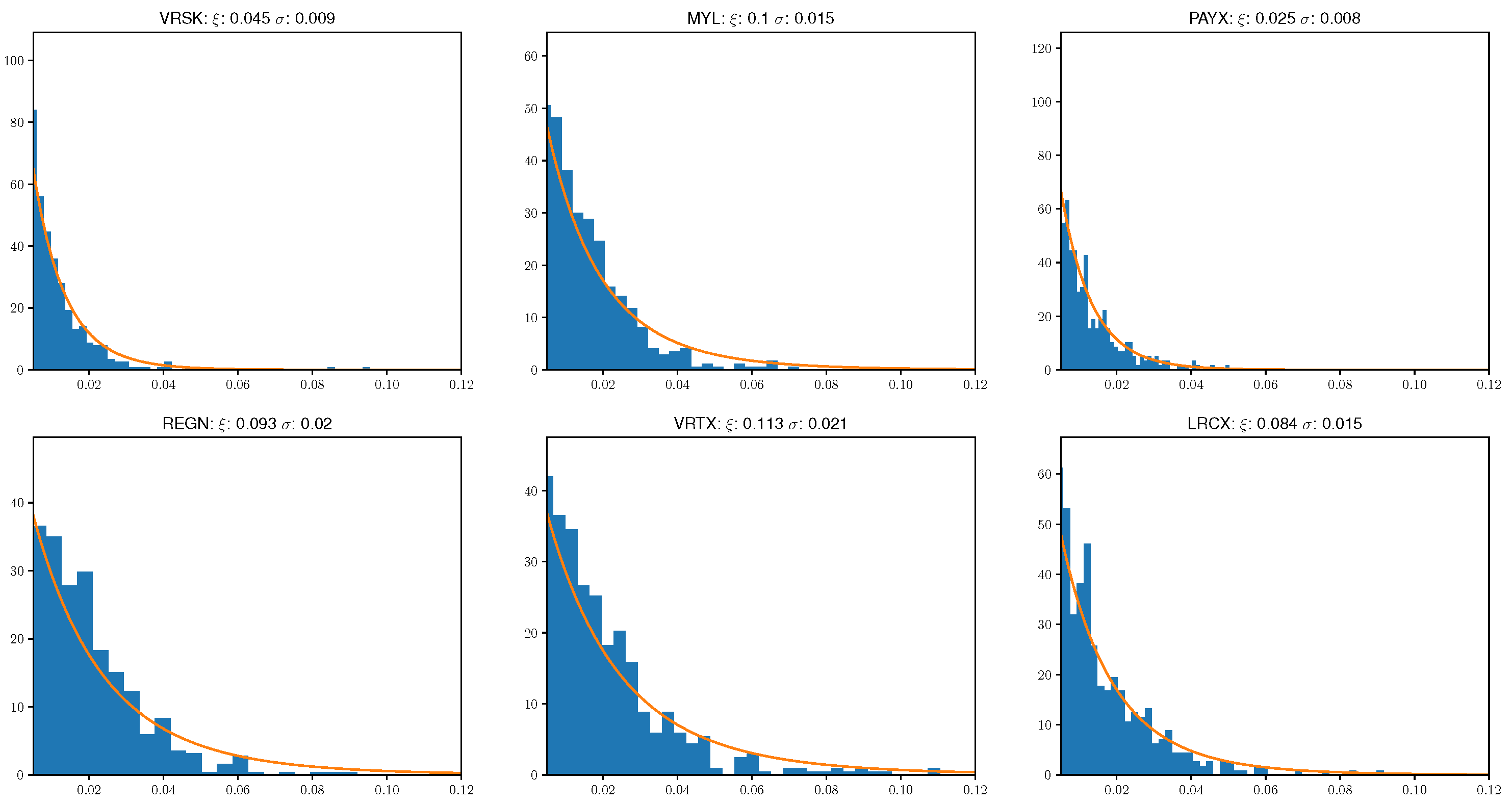

Overall, we have found this optimization problem to be more stable than threshold based fitting methods and it also leads to intuitive fits. Specifically, in

Figure 2, we display the best fit model parameters and distributions alongside return histograms for the loss distribution of six randomly selected stocks from our NASDAQ 100 sample.

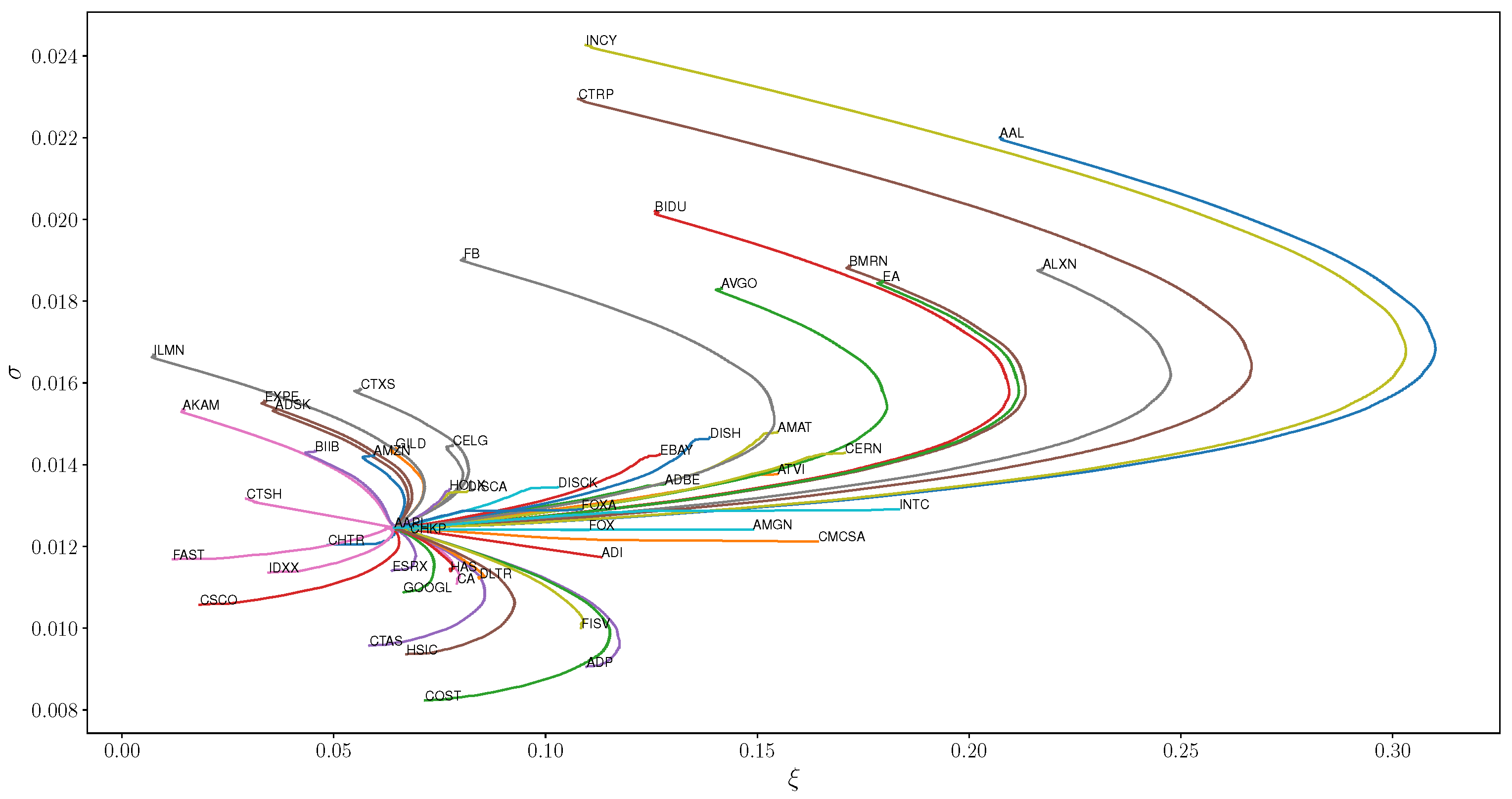

We note that this fitting technique works well for both low volatility stocks such as PAYX as well as those which consistently sustain larger losses like VRTX. Our next aim is to identify which stocks are the shortest distance away from a fixed stock. We fix APPL for this example, and calculate the geodesics that join the generalized Pareto models for each stock in the dataset to APPL and display the geodesics in

Figure 3 for fifty stocks in the sample.

First note that the Fisher geodesic paths which connect model parameters deviate significantly from Euclidean geodesic lines. For models with and values larger than those of AAPL, the geodesics have a strong arc which eventually reverts back and intersects the target model. If is larger but is similar, arclengths are much smaller and geodesics are approximately linear whereas lower values of exhibit the same bullet style geodesics of large values. In addition, different share classes such as FOX and FOXA have similar models and geodesics as expected since the return series of different share classes are highly correlated. In addition, note that this technique links several comparable technology stocks such as GOOGL and AMZN, which are more similar to AAPL than FB.

Given a geodesic path consisting of a sequence of parameter values

, for

, we approximate its arclength between models by first defining

,

and

,

analogously. We compute explicit distances using a discrete analogue of Equation (

4)

and gather results in

Table 1 for the 20 nearest and furthest away stocks from AAPL.

One example to note is that ADI and CHTR have similar Fisher distances; however, one can see from

Figure 3 that CHTR is considerably closer to AAPL in the Euclidean distance than ADI. In addition, the geodesic to ADI is nearly linear whereas that to CHTR is curved. This example demonstrates the potentially significant differences between the Fisher and Euclidean distance between model parameters.

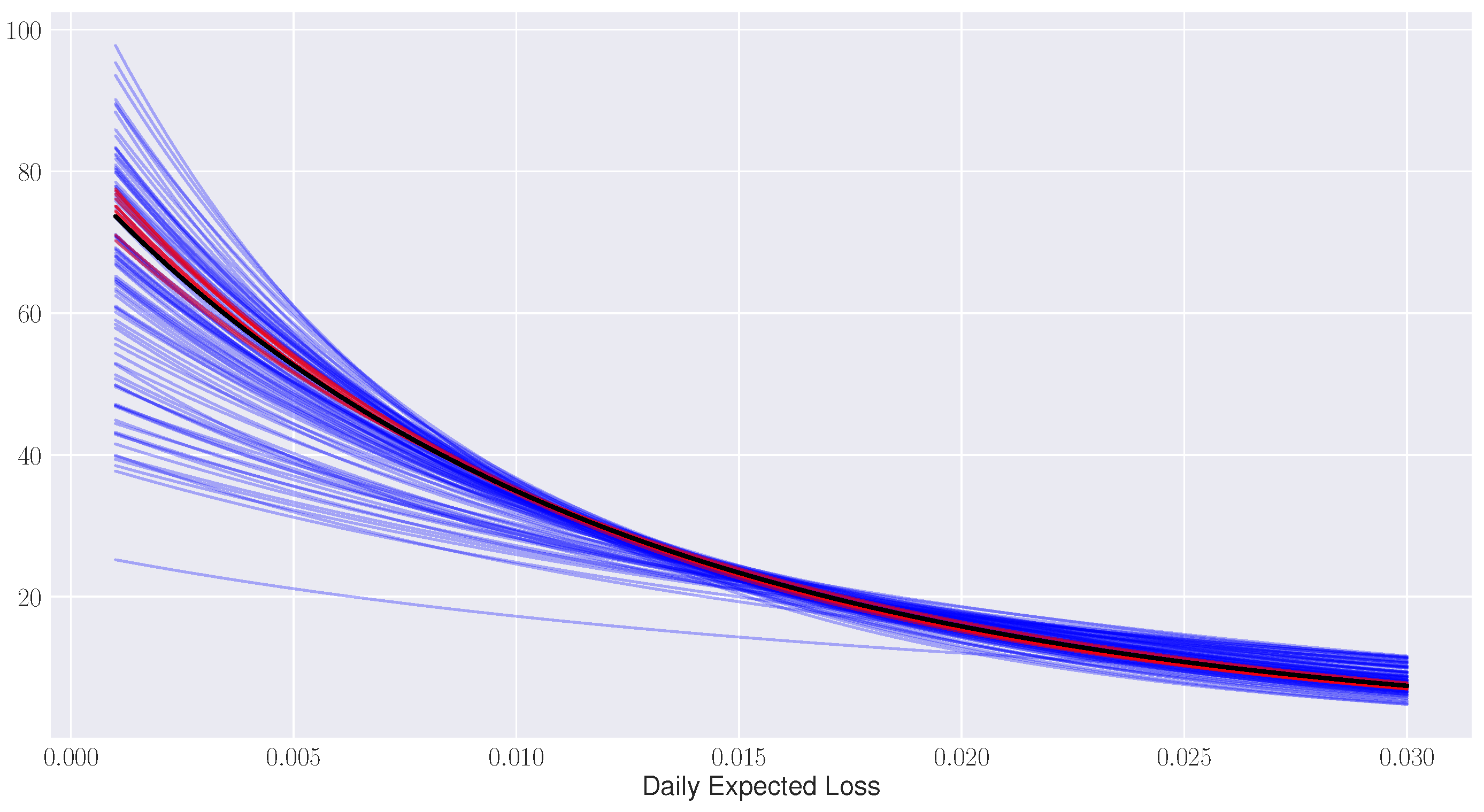

Finally, in

Figure 4, we plot the fitted generalized Pareto distributions to each of the NASDAQ 100 models being considered.

In black, we plot the best fit distribution to AAPL and in red the 10 nearest distributions to AAPL with respect to the Fisher distance. The remainder of model distributions are displayed in blue. This graph demonstrates that the Fisher distance groups stocks according to similarities in their distribution functional forms which was the original design intention of identifying stocks with common tail risk behavior.

5.3. Application to Clustering Based on Worst Annual Loss for S&P 500 Stocks

Next, we expand upon the nearest neighbor example by considering a clustering application using the Fisher distance based on an example of estimating the worst daily loss over an annual period provided in [

23]. This application stems from the Fisher-Tippett-Gnedenko theorem [

40,

53] which roughly states that for a set of independent identically distributed samples

from a probability distribution, the maximum

converges to a generalized extreme value distribution modulo a shift and scale sequence. Our application uses the GEV distribution as a model for the distribution of the maximum return of the loss distribution (worse single daily loss) for a series of stock returns.

We expand the number of securities in this application by extracting the components of the S&P 500 as of 31 December 2016 and keep stocks that remained in the index over the prior 30 years since 1 January 1987. For each stock, we find its minimum daily return over the prior calendar year and fit a three-dimensional GEV distribution with shift and scale parameters using maximum likelihood estimation to each set of 30 maximum loss values. The result is a set of 272 GEV model parameters which estimate the distribution of the maximum yearly loss for each stock.

Our aim will be to apply a hierarchical clustering method to these models in order to group stocks by their worst annual losses. Specifically, we use an agglomerative clustering method which requires two inputs. First, one must specify a notion of distance between two objects being clustered. In our example, these objects correspond to the max loss distributions of distinct stocks and the distance between them is given by the Fisher distance. Second, one must specify a notion of distance between clusters consisting of multiple points called the linkage function. We use Ward’s linkage which is designed to minimize the intra-cluster variance in each fusion step of the method [

54,

55].

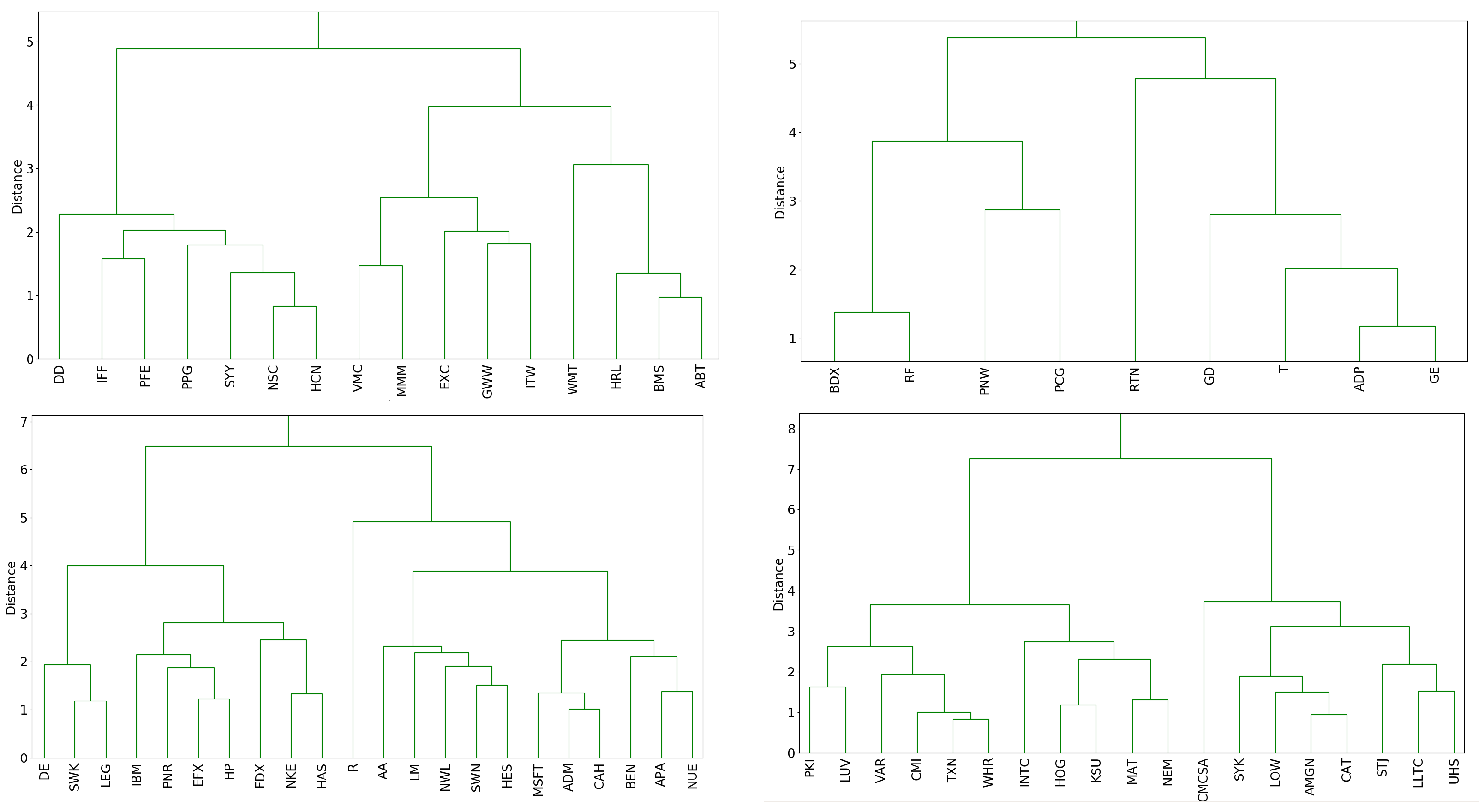

This clustering technique consists of initially grouping together the two securities with the smallest Fisher distance and then iteratively clustering the closest two security/cluster pairs until all distributions have been grouped into a single cluster. One way of visualizing this clustering algorithm is through the dendrogram of the clustering procedure, portions of which we display in

Figure 5.

Several dendrogram subclusters contain stocks belonging to the same sector. For example, in the upper left plot, the four stocks WMT, HRL, BMS, and ABT are part of the large right subcluster which is itself a subcluster of the entire group. In addition, we find that some clusters are composed of companies with similar businesses such as DE, SWK, and LEG which concentrate in the design and manufacturing of engineered products and heavy machinery. Other clusters such as GE, ADP, T, and GD in the upper right subplot contain securities from a mix of the aerospace, technology, industrial, and telecommunications industries. This clustering procedure provides a new way to group similar stocks according to their worst annual return.

6. Conclusions

In summary, we have reviewed relevant ideas in information geometry and discussed issues that arise when computing geodesics for statistical models. We then derived a closed form expression up to quadrature for the Fisher distance for one-dimensional models. Next, we compared the Fisher distance and KL divergence in an equity return distribution application for the univariate normal model. Finally, we developed two extreme value theory examples; a nearest neighbor comparison using the generalized Pareto distribution, and a maximum daily loss over an annual period distribution hierarchical clustering technique using the generalized extreme value distribution.

There are several further directions that may be pursued. First, it would be interesting to find and develop applications that involve the Fisher distance outside of quantitative finance, specifically in the areas of natural language and image processing. Second, the Fisher metric often cannot be represented in terms of a closed form algebraic expression. In such situations, one can develop numerical methods to compute geodesic distances given that the Fisher metric is only defined up to quadrature. Finally, one can consider Fisher distance analogous to clustering, classification, and regression techniques that take a distance function as input.