Information Theory for Non-Stationary Processes with Stationary Increments

Abstract

1. Introduction

2. Information Theory for Non-Stationary Processes

2.1. Non-Stationary Processes with Stationary Increments

2.2. General Framework

2.2.1. Shannon Entropy

2.2.2. Mutual Information and Auto-Mutual Information

2.2.3. Entropy Rate

2.3. Practical Time-Averaged Framework

2.3.1. Time-Averaged Framework

2.3.2. Practical Framework

2.3.3. Information Theory Quantities in the Practical Framework

Ersatz Shannon Entropy

Auto-Mutual Information

Entropy Rate

2.4. Self-Similar Processes

2.4.1. General Framework

2.4.2. Practical Time-Averaged Framework

3. Benchmarking the Practical Framework with the fBm

3.1. Characterization of the Estimates

3.1.1. Data

3.1.2. Procedure

3.1.3. Convergence/Bias

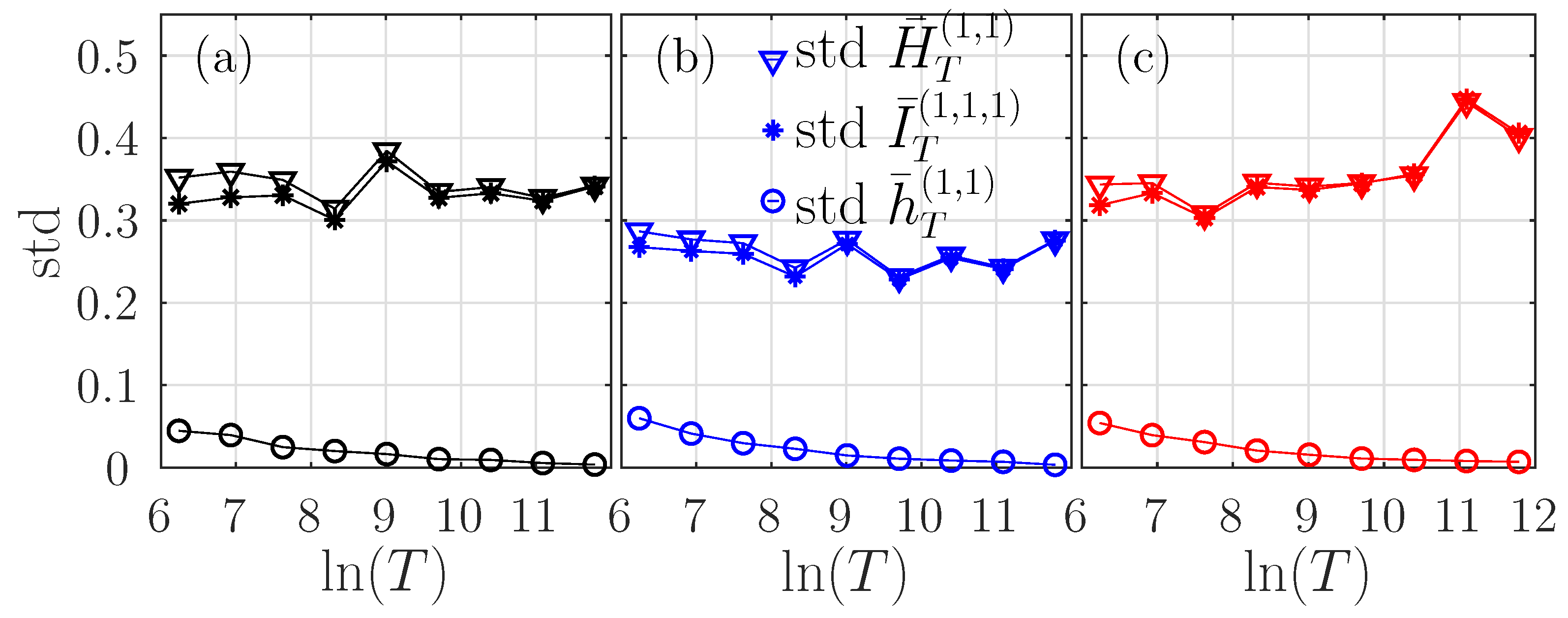

3.1.4. Standard Deviation of the Estimates

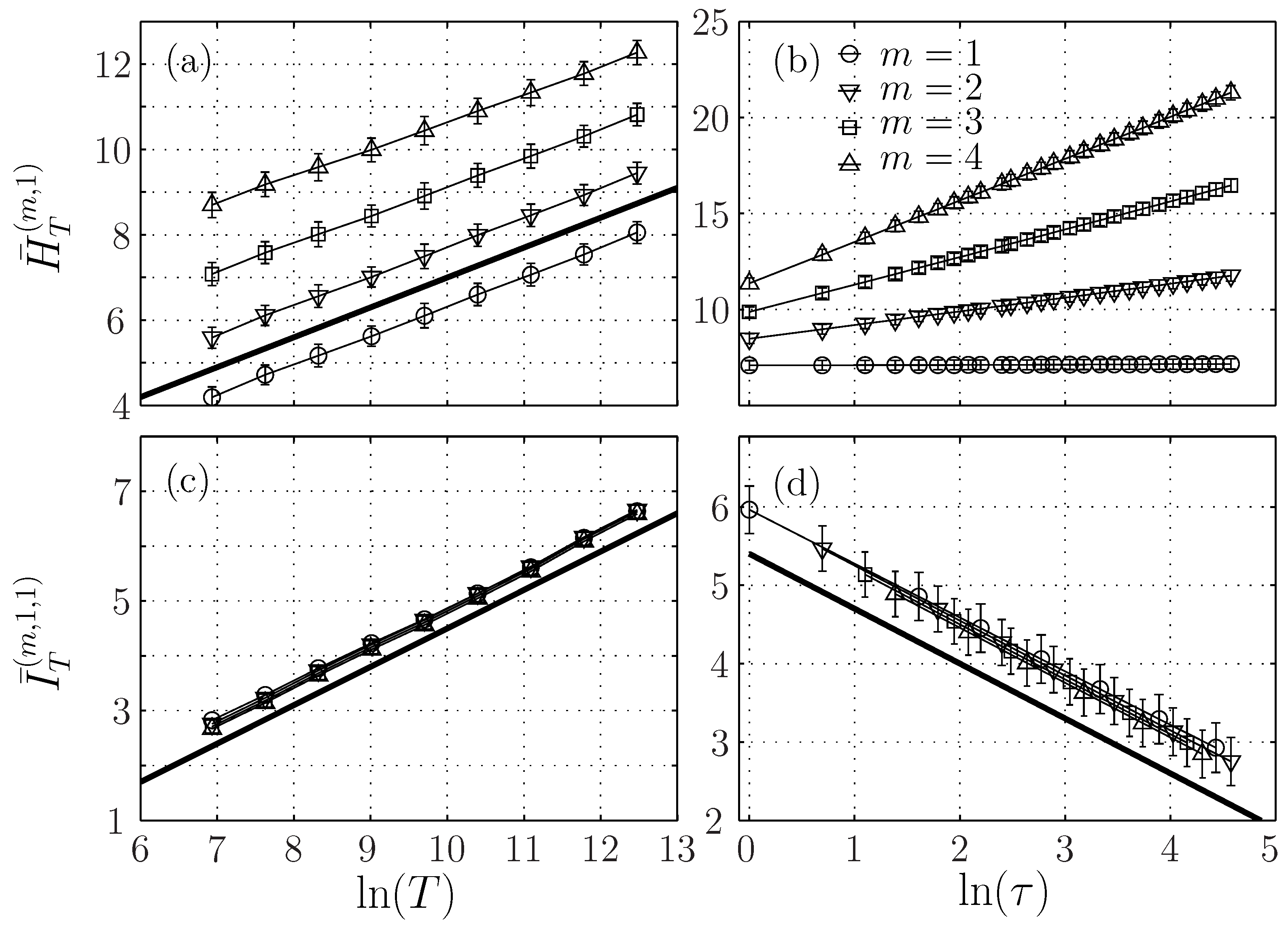

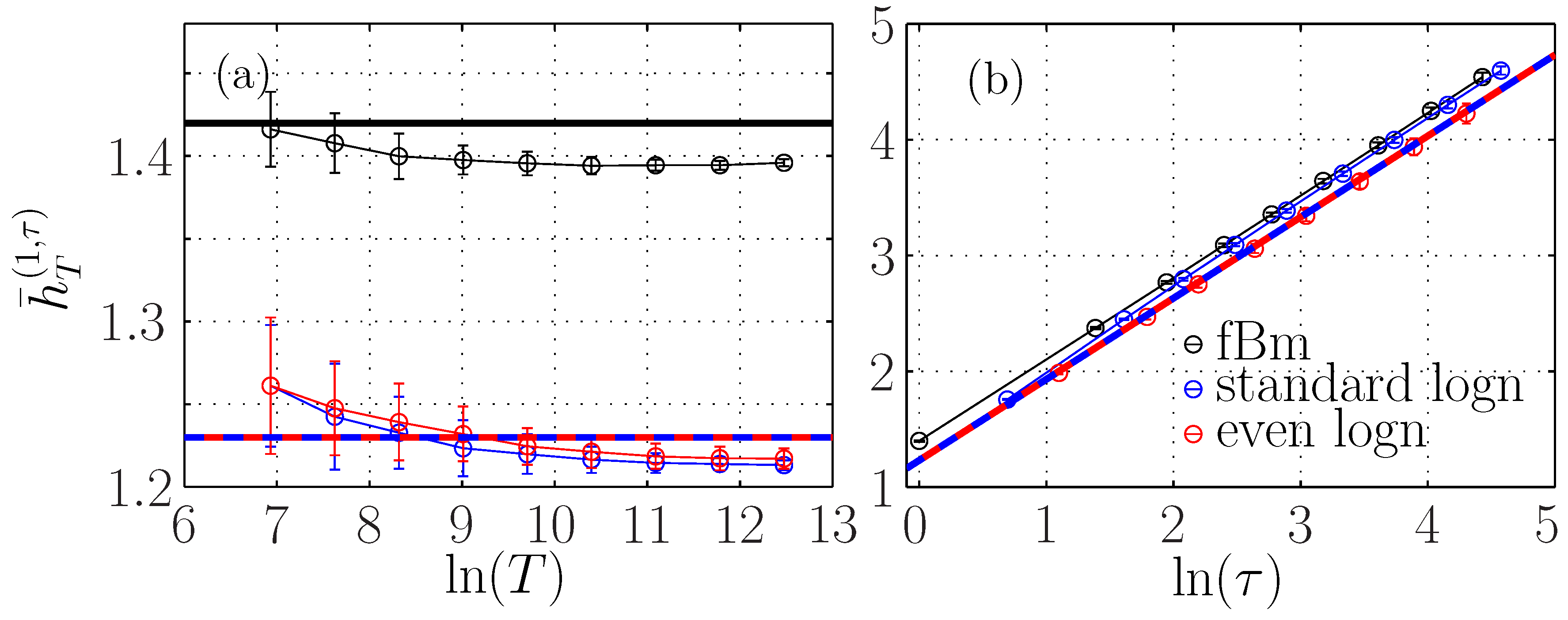

3.2. Dependence on Times T and

3.2.1. Entropy and Auto-Mutual Information

Dependence on T

Dependence on

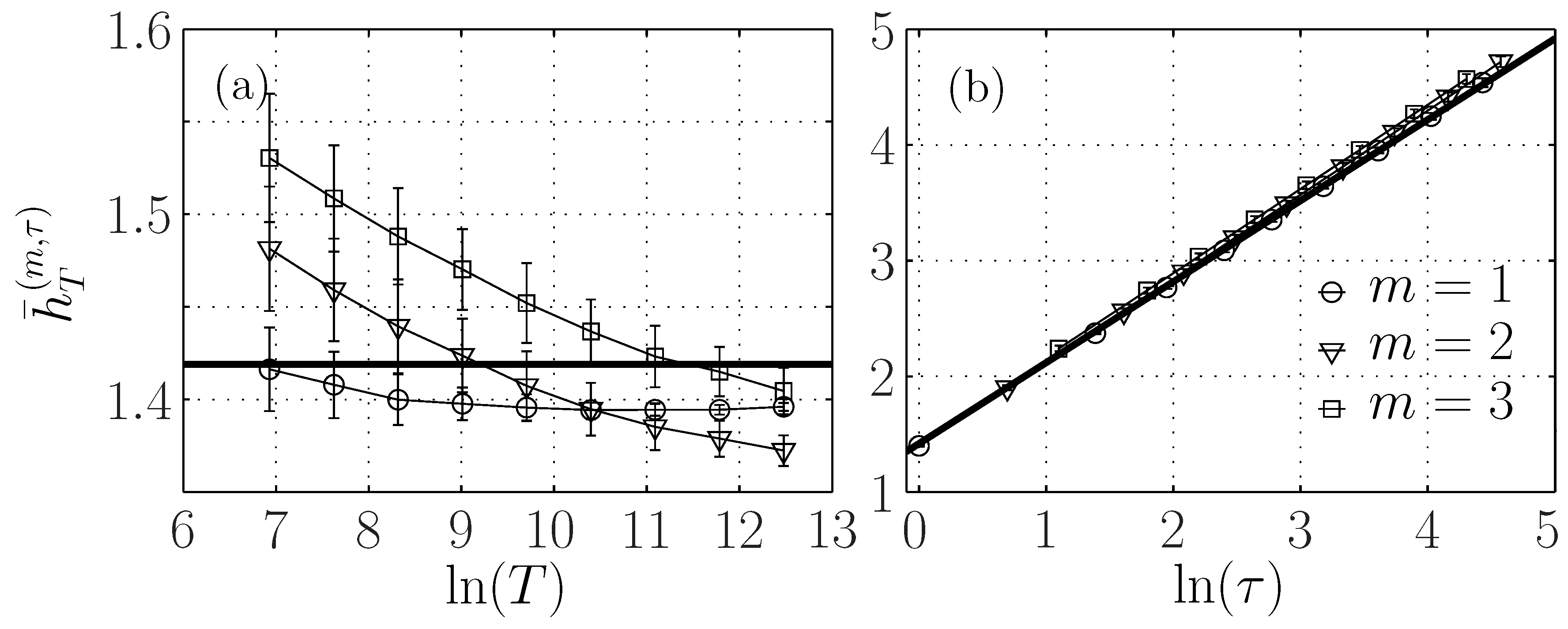

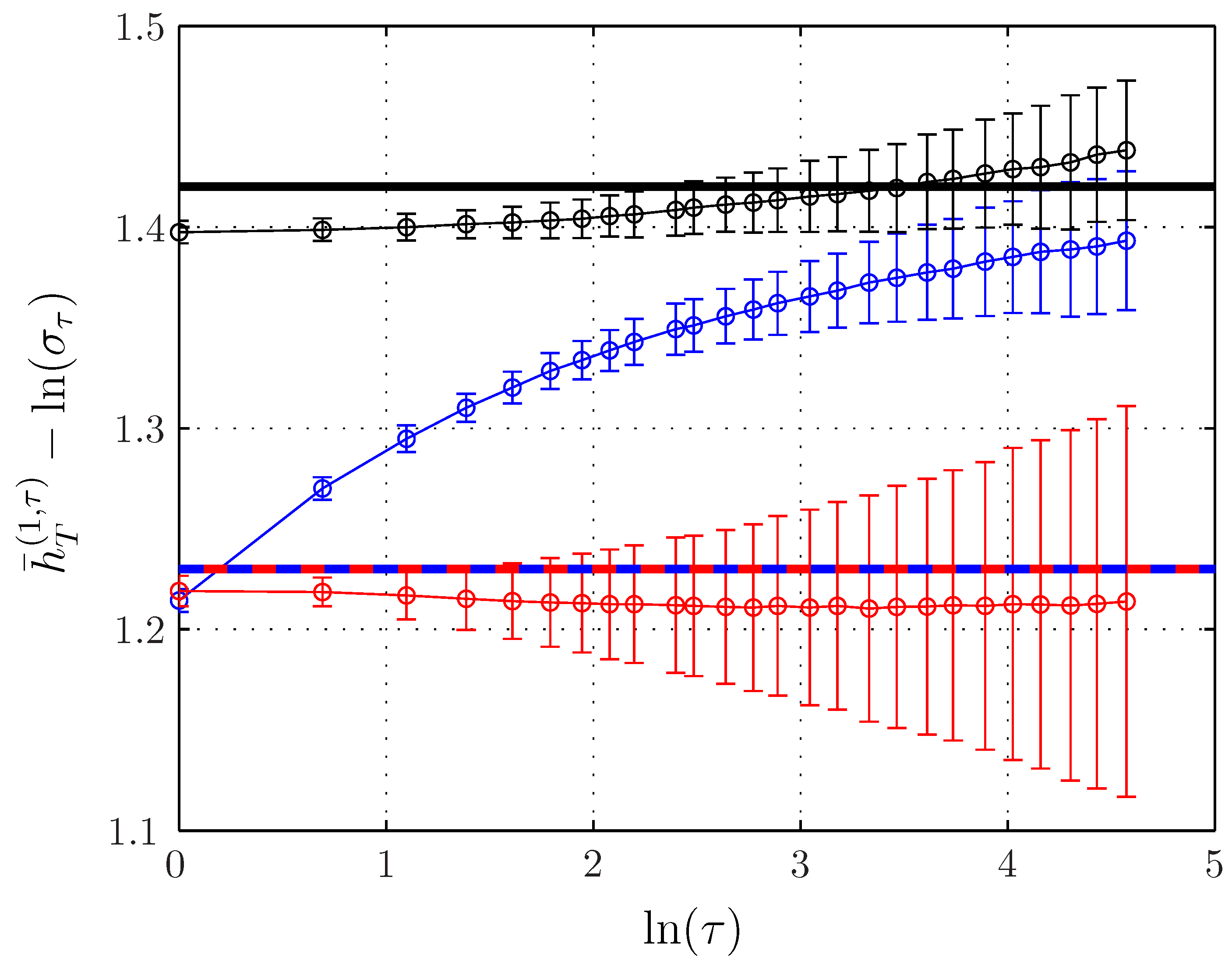

3.2.2. Stationarity of the Entropy Rate

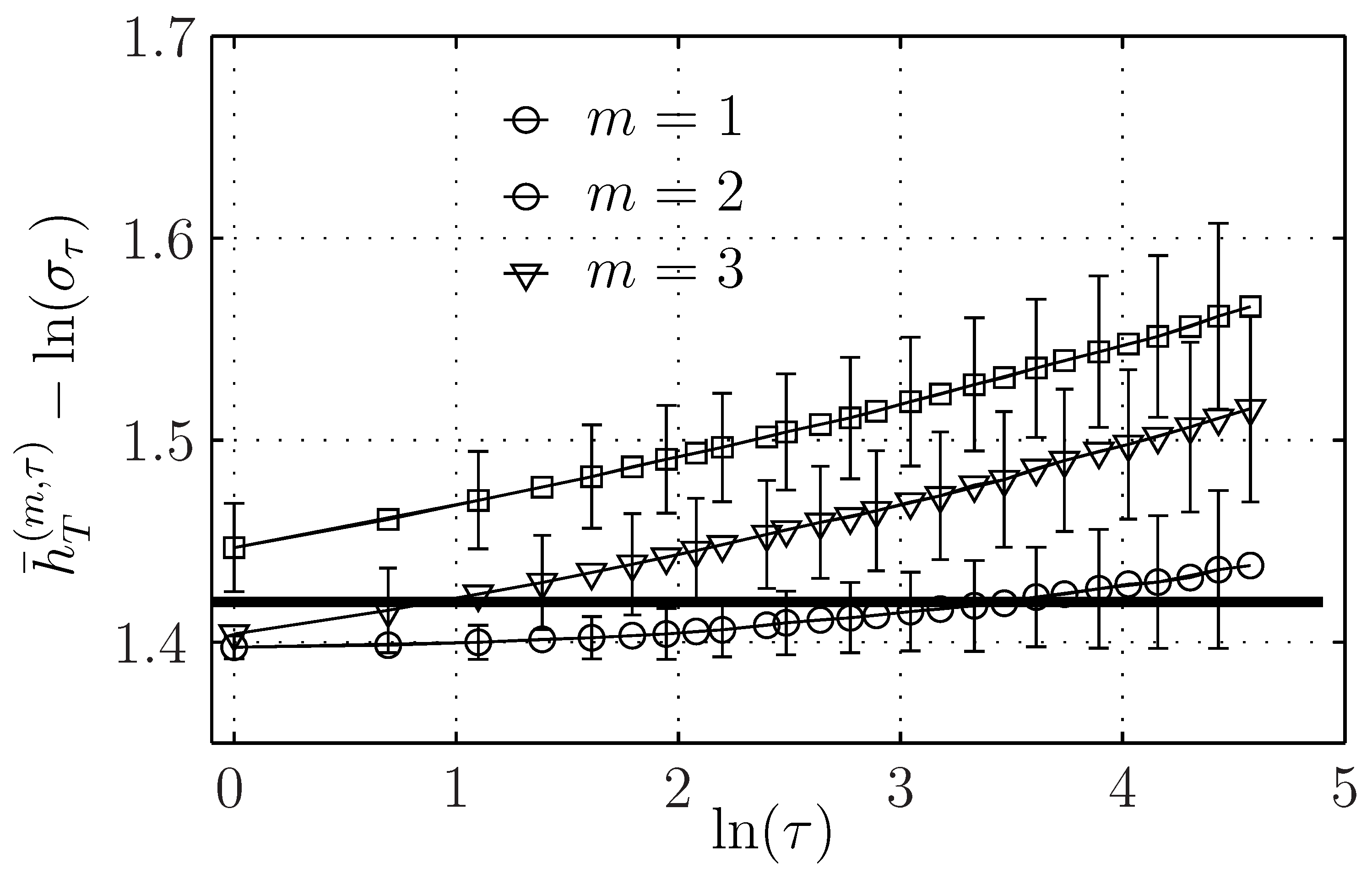

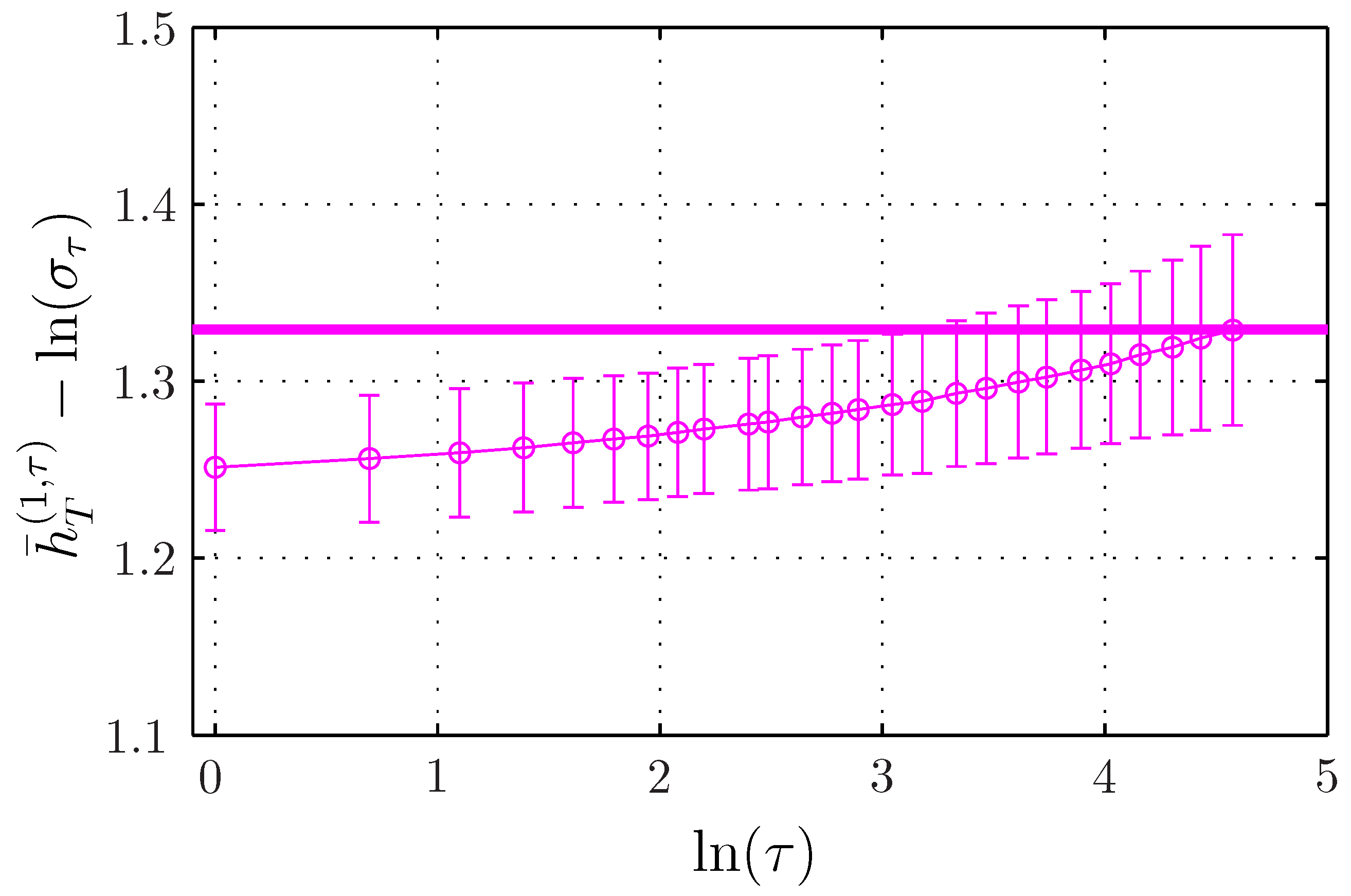

3.2.3. Entropy Rate Dependence on Scale

4. Application of the Practical Framework to Non-Gaussian Self-Similar Processes

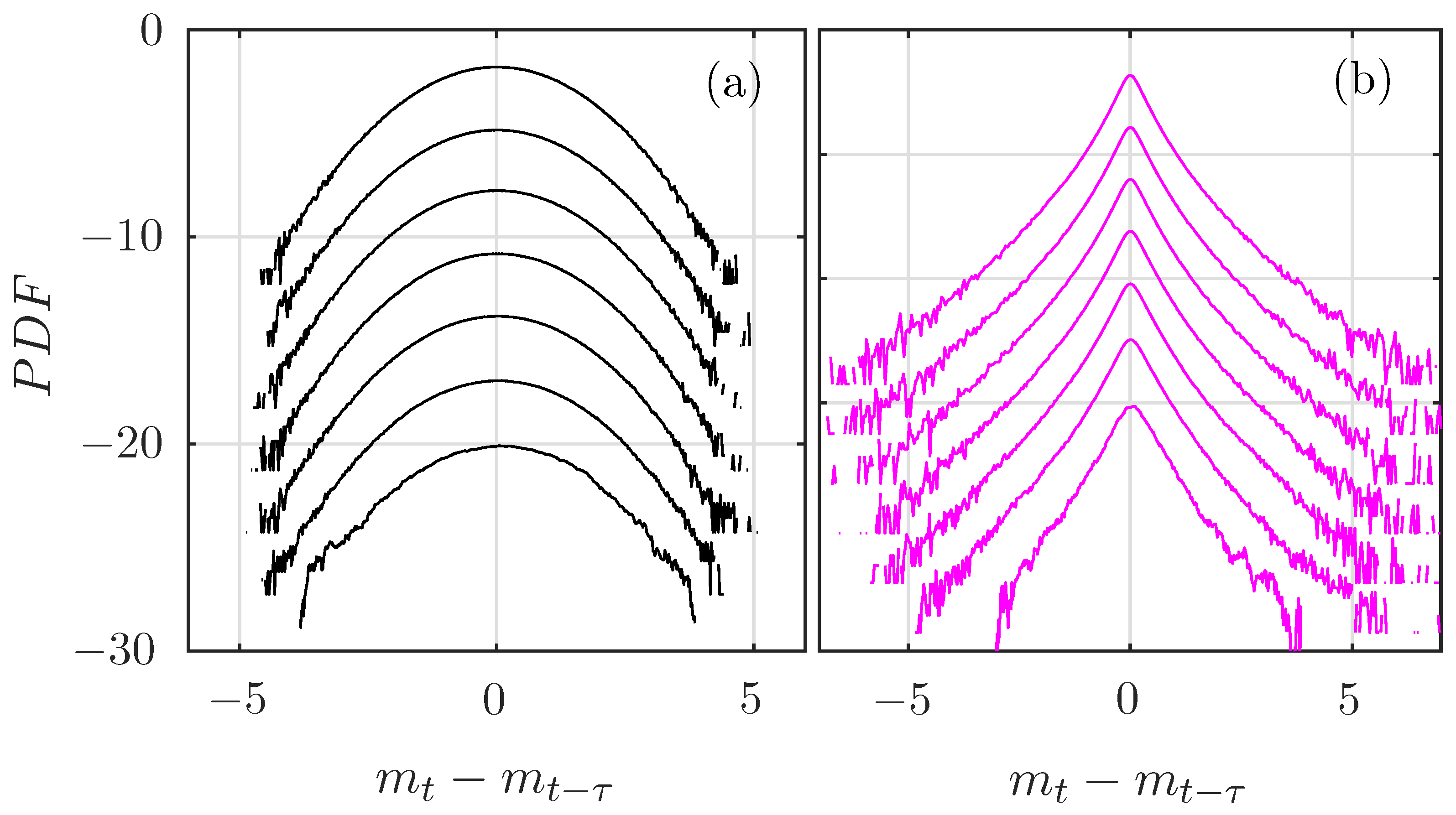

4.1. Procedure

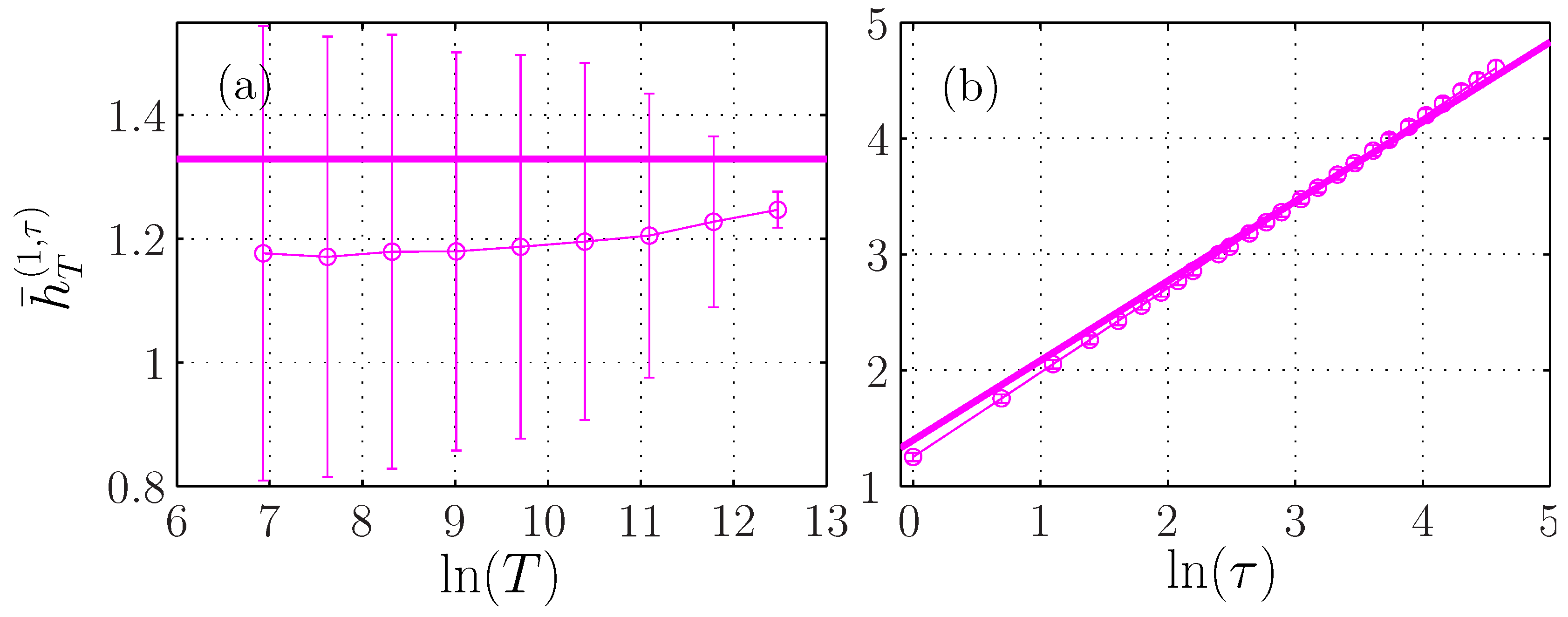

4.2. Bias and Standard Deviation

4.3. Dependence on Times T and

5. Application of the Practical Framework to a Multifractal Process

6. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Entropy of a Time-Embedded Signal

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Granero-Belinchón, C.; Roux, S.G.; Garnier, N.B. Information Theory for Non-Stationary Processes with Stationary Increments. Entropy 2019, 21, 1223. https://doi.org/10.3390/e21121223
