Semantic Entropy in Language Comprehension

Abstract

1. Introduction

2. Comprehension-Centric Surprisal and Entropy

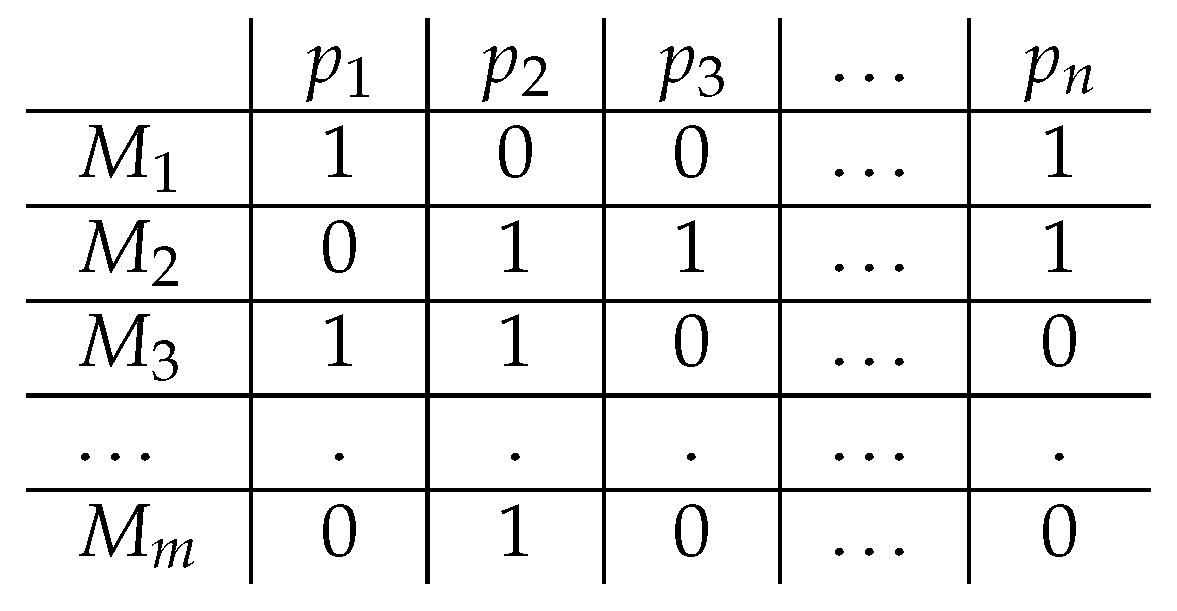

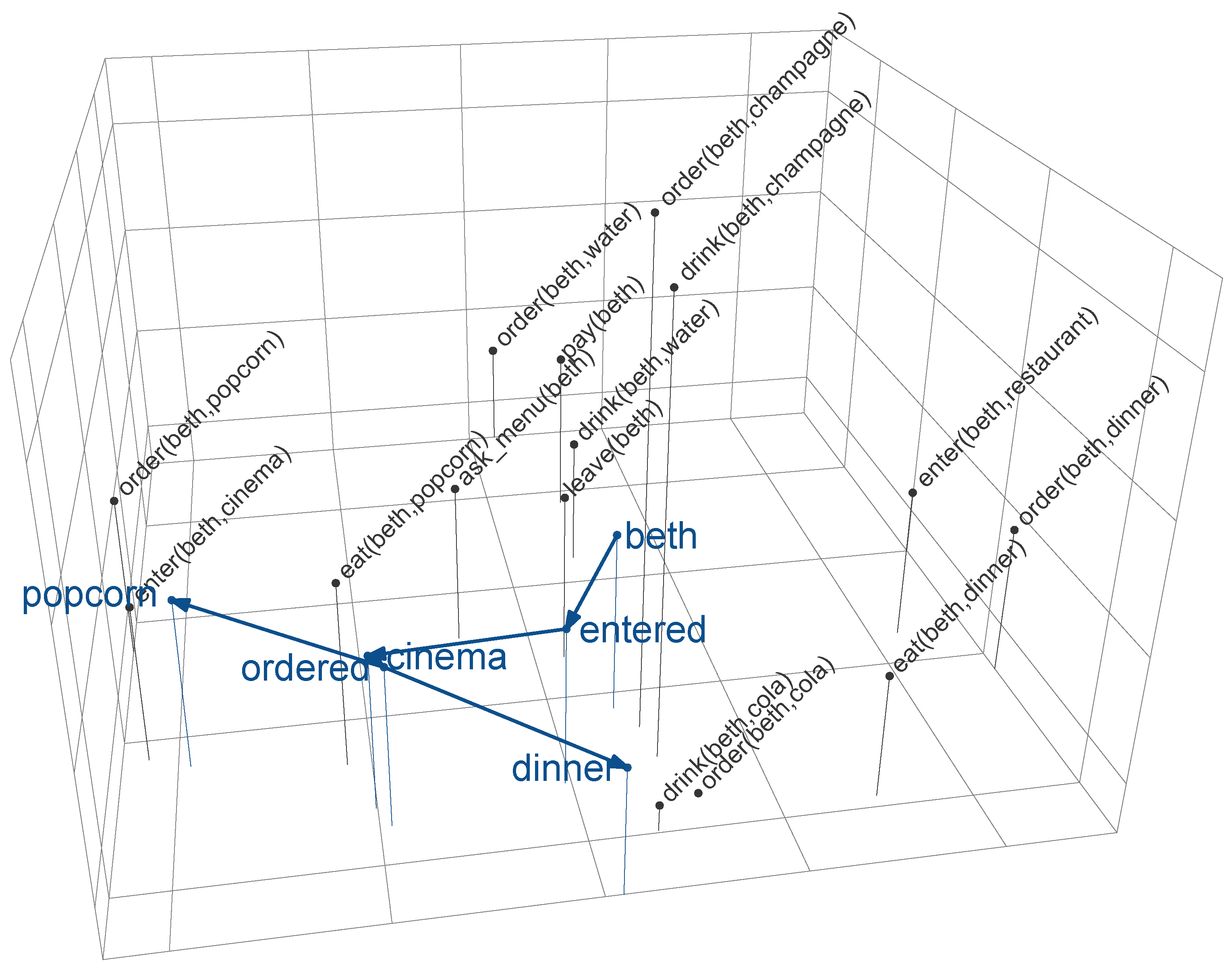

2.1. Meaning in a Distributional Formal Meaning Space

2.2. A Model of Surprisal Beyond the Words Given

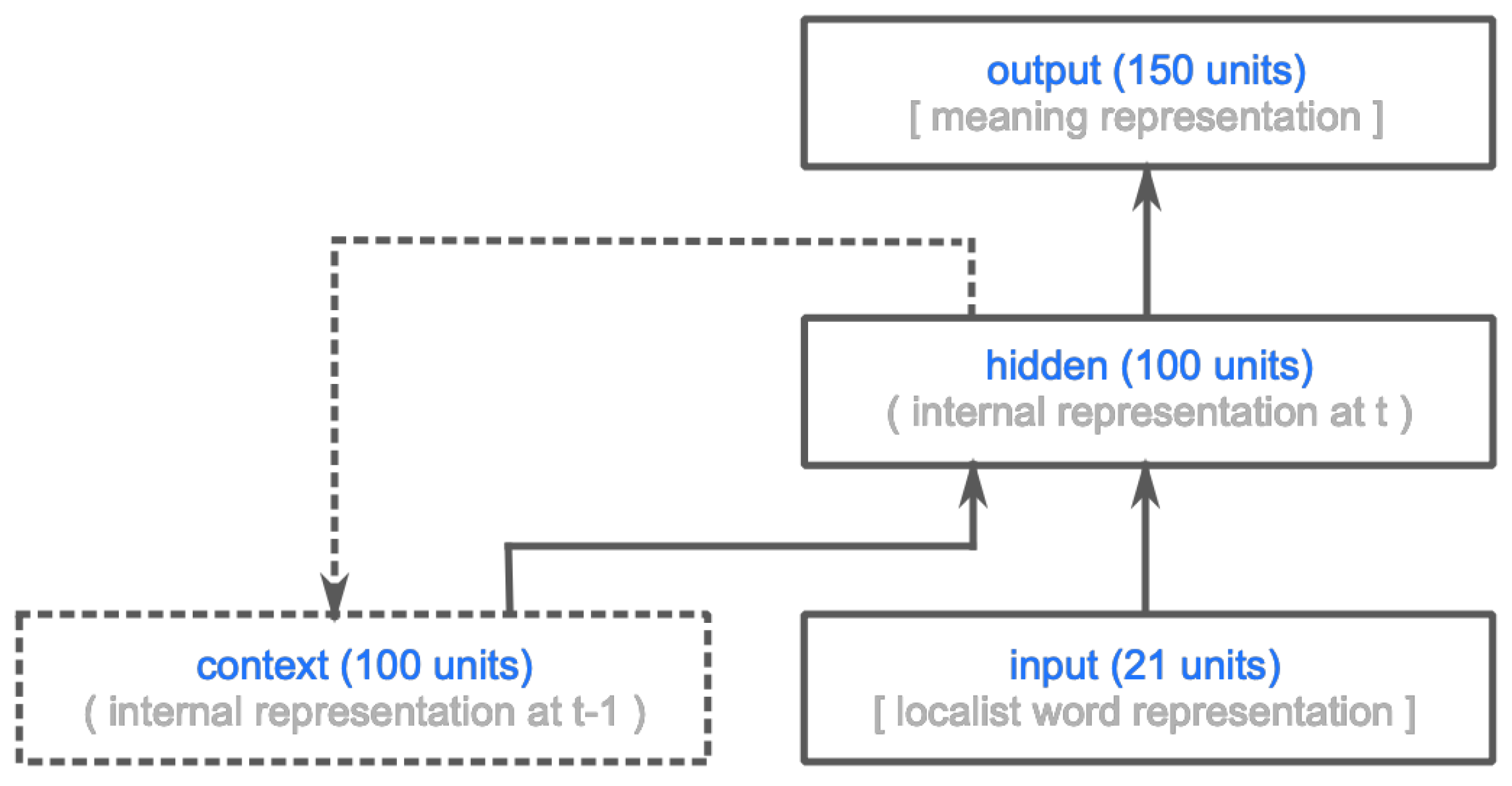

2.2.1. The Comprehension Model

2.2.2. A Comprehension-Centric Notion of Surprisal

2.3. Deriving a Comprehension-Centric Notion of Entropy

3. Entropy Reduction in Online Comprehension

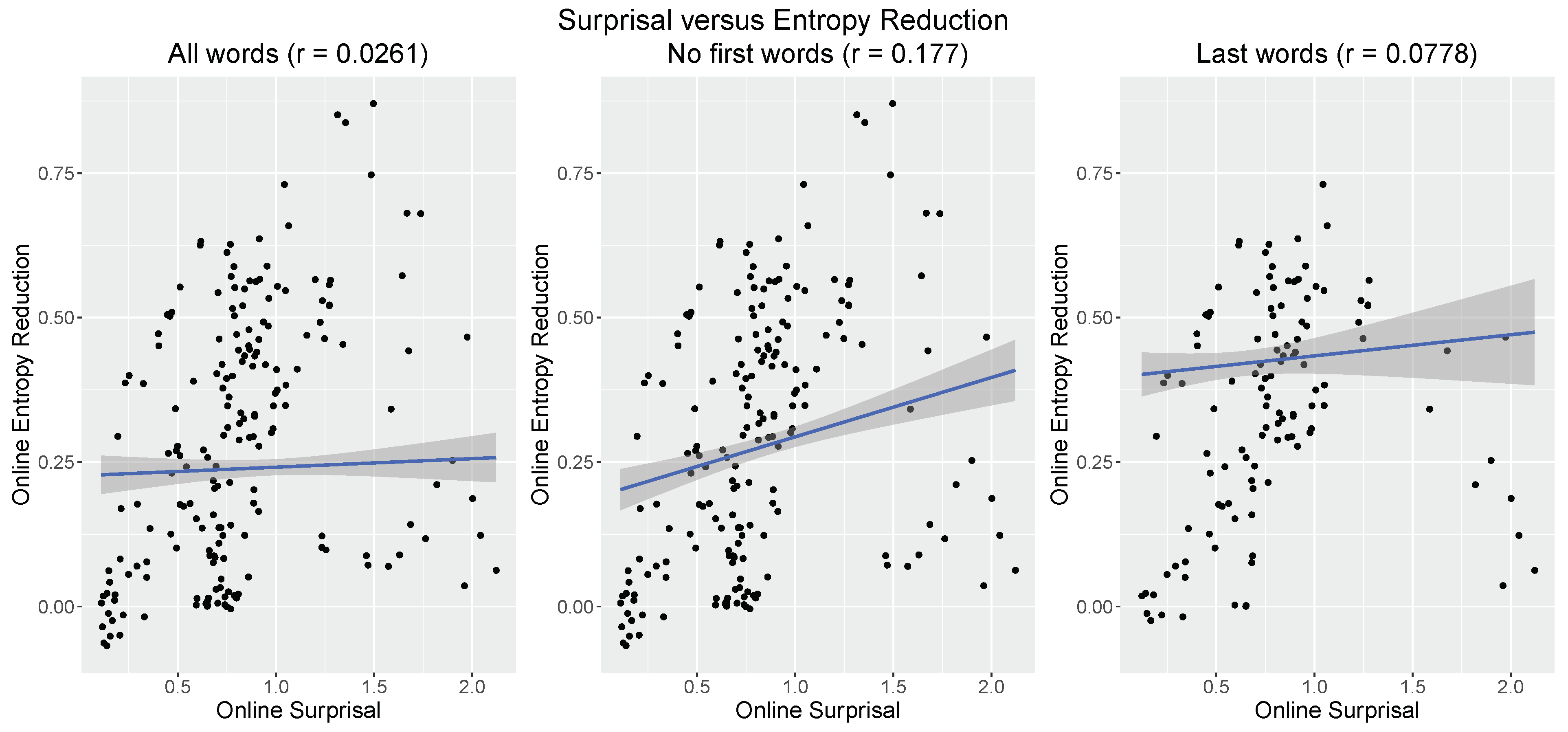

3.1. Comprehension-Centric Entropy Reduction versus Surprisal

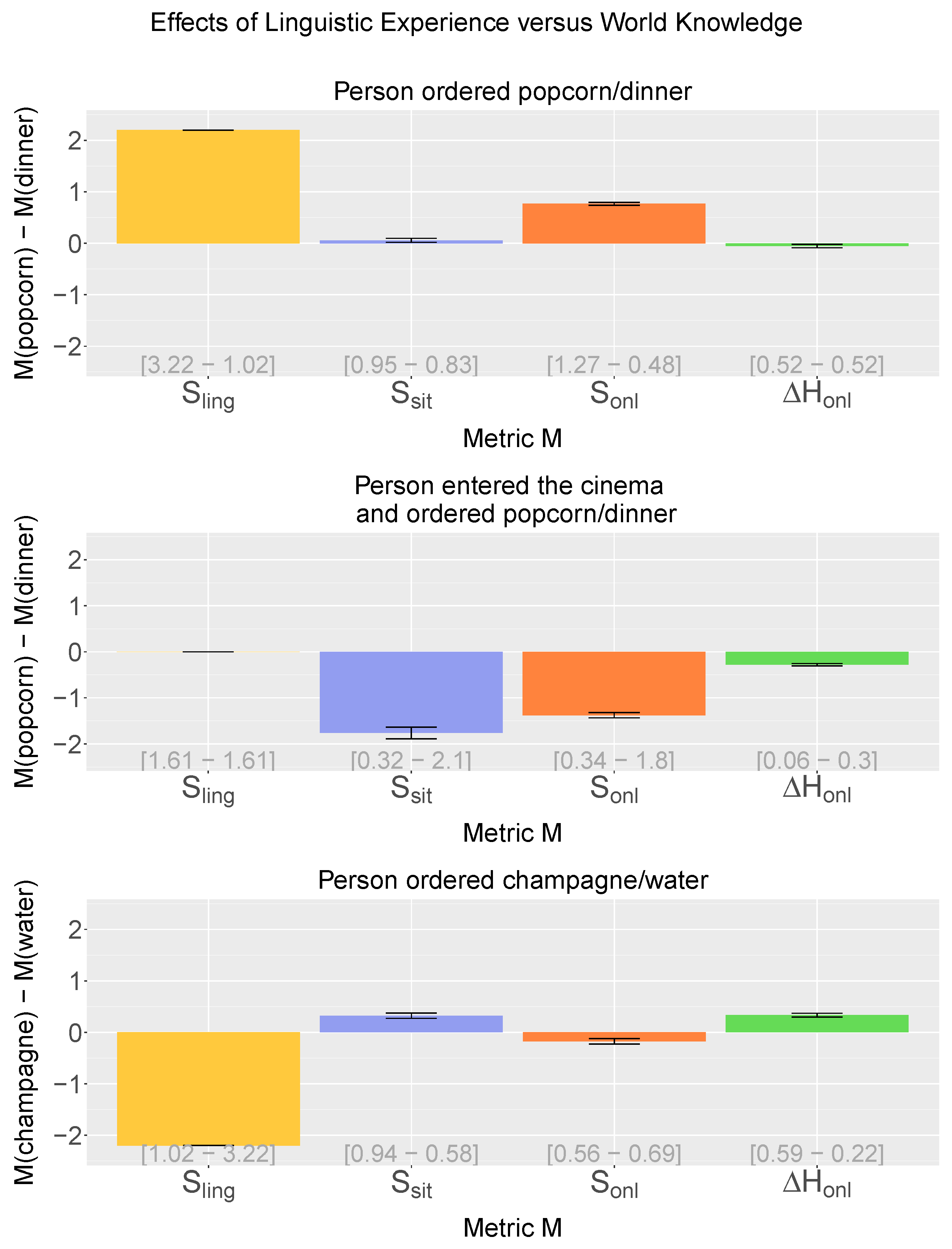

3.2. Effects of Linguistic Experience versus World Knowledge

- Manipulation of linguistic experience only: the model is presented with sentences that differ in their frequency of occurrence in the training data (i.e., differential linguistic surprisal) but whose meaning vectors are equally probable (i.e., equal situation surprisal).

- Manipulation of world knowledge only: the model is presented with sentences that occur equally frequently in the training data (i.e., equal linguistic surprisal) but differ with respect to their probabilities within the meaning space (i.e., differential situation surprisal).

- Manipulation of both linguistic experience and world knowledge: to investigate the interplay between the two, the model is presented with sentences in which linguistic experience and world knowledge conflict (i.e., linguistic experience dictates an increase in linguistic surprisal whereas world knowledge dictates a decrease in situation surprisal, or vice versa).
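The three manipulations can be illustrated with a toy calculation. This is a minimal sketch under stated assumptions, not the comprehension model reported here: linguistic surprisal is estimated from hypothetical continuation counts for a single prefix `"p"`, and situation surprisal is computed over hand-set binary meaning vectors spanning eight hypothetical models, taking the probability of a vector to be the fraction of models in which it holds, conjunction to be the component-wise product, and P(b | a) = P(a ∧ b) / P(a). The names `COUNTS`, `PREFIX`, `MEANINGS`, and the continuations `"a"` to `"d"` are illustrative only.

```python
import math

# Hypothetical continuation counts after the prefix "p"
# (stand-ins for occurrence frequencies in a training corpus).
COUNTS = {"p": {"a": 9, "b": 1, "c": 5, "d": 5}}

# Hypothetical binary meaning vectors over eight models: component i is 1
# if the proposition holds in model i. Each continuation vector stands for
# the meaning of the full sentence "p <word>", so it entails PREFIX.
PREFIX = [1, 1, 1, 1, 1, 1, 0, 0]
MEANINGS = {
    "a": [1, 1, 1, 0, 0, 0, 0, 0],
    "b": [0, 0, 0, 1, 1, 1, 0, 0],
    "c": [1, 1, 1, 1, 1, 0, 0, 0],
    "d": [0, 0, 0, 0, 0, 1, 0, 0],
}


def linguistic_surprisal(counts, prefix, word):
    """-log2 P(word | prefix), estimated from relative continuation counts."""
    continuations = counts[prefix]
    return -math.log2(continuations[word] / sum(continuations.values()))


def prob(v):
    """Probability of a meaning vector: fraction of models in which it holds."""
    return sum(v) / len(v)


def conj(a, b):
    """Conjunction of two meaning vectors (component-wise product)."""
    return [x * y for x, y in zip(a, b)]


def situation_surprisal(v_prev, v_next):
    """-log2 P(v_next | v_prev), with P(b | a) = P(a AND b) / P(a)."""
    return -math.log2(prob(conj(v_prev, v_next)) / prob(v_prev))


if __name__ == "__main__":
    for word, meaning in MEANINGS.items():
        ling = linguistic_surprisal(COUNTS, "p", word)
        sit = situation_surprisal(PREFIX, meaning)
        print(f"{word}: linguistic = {ling:.3f} bits, situation = {sit:.3f} bits")
```

In this toy setup, `"a"` and `"b"` have equal situation surprisal (1 bit) but differ in linguistic surprisal (the linguistic-experience manipulation); `"c"` and `"d"` have equal linguistic surprisal (2 bits) but differ in situation surprisal (the world-knowledge manipulation); and comparing `"b"` with `"d"` gives the conflict case, where linguistic surprisal is higher for `"b"` but situation surprisal is higher for `"d"`.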

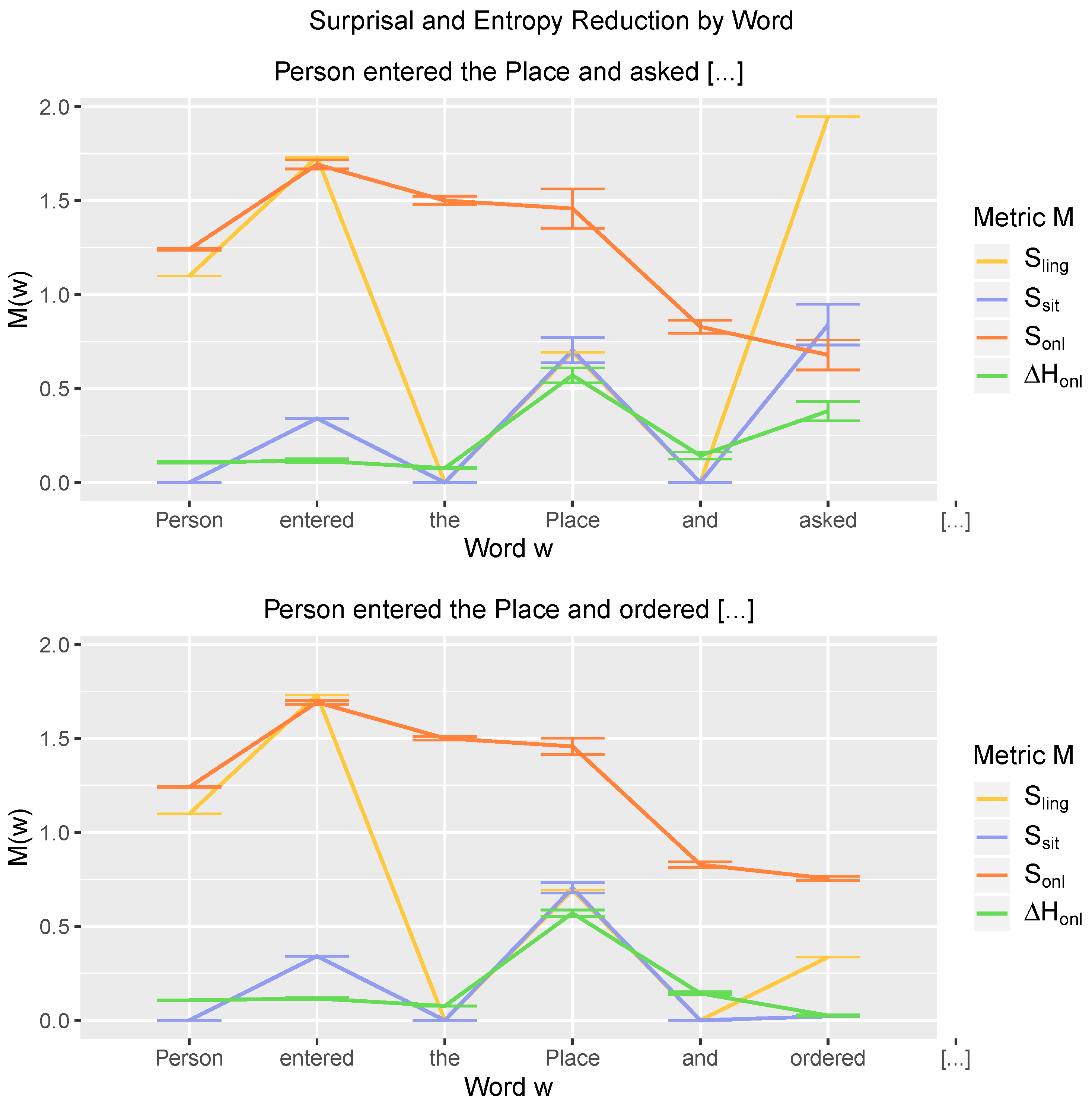

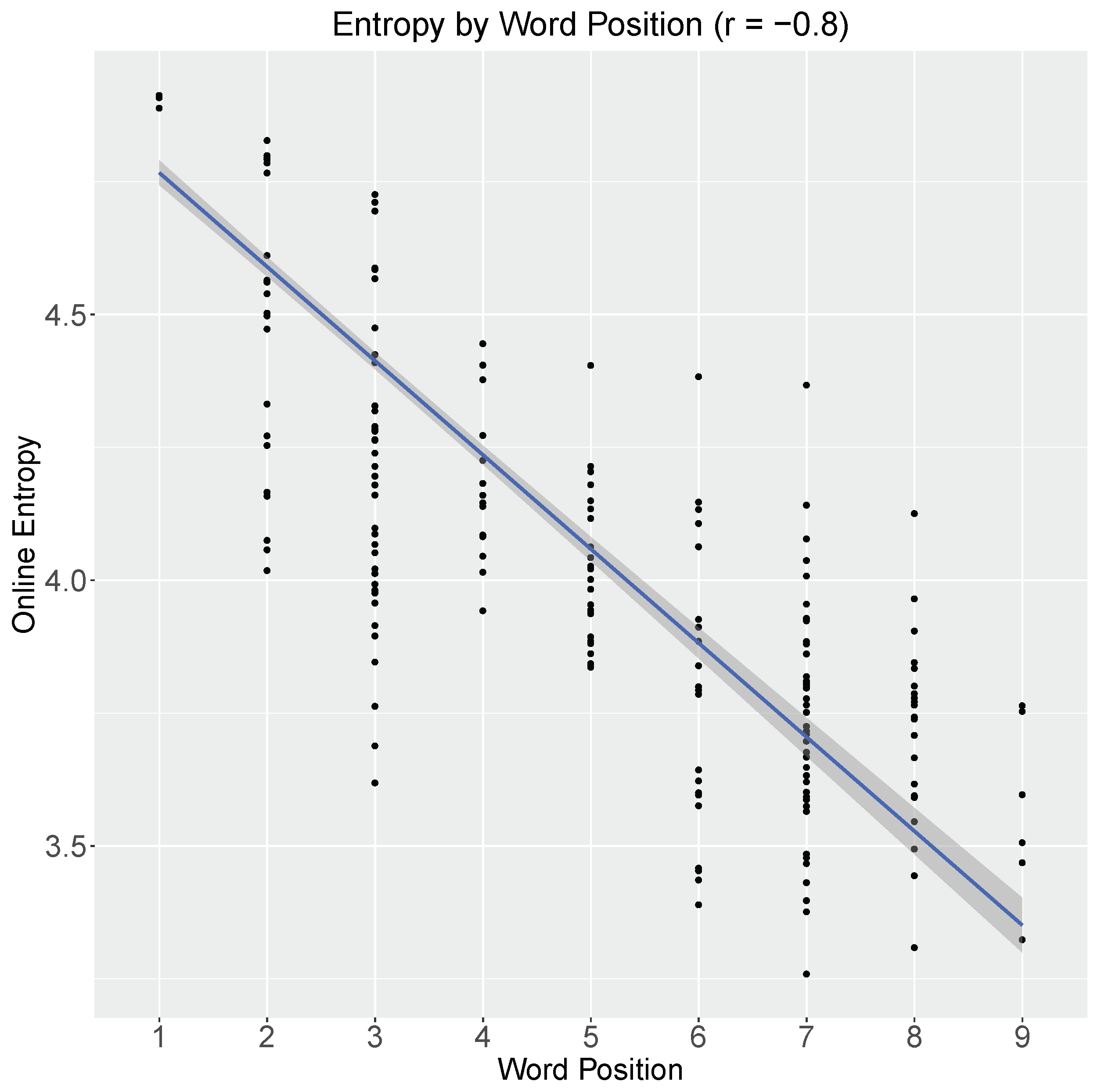

3.3. Online Entropy Reduction as the Sentence Unfolds

4. Discussion

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Venhuizen, N.J.; Crocker, M.W.; Brouwer, H. Semantic Entropy in Language Comprehension. Entropy 2019, 21, 1159. https://doi.org/10.3390/e21121159