An Improved Belief Entropy to Measure Uncertainty of Basic Probability Assignments Based on Deng Entropy and Belief Interval

Abstract

1. Introduction

2. Preliminaries

2.1. D-S Theory

2.2. Shannon Entropy and Derivatives for D-S Framework

2.3. Deng Entropy

3. The Improved Belief Entropy

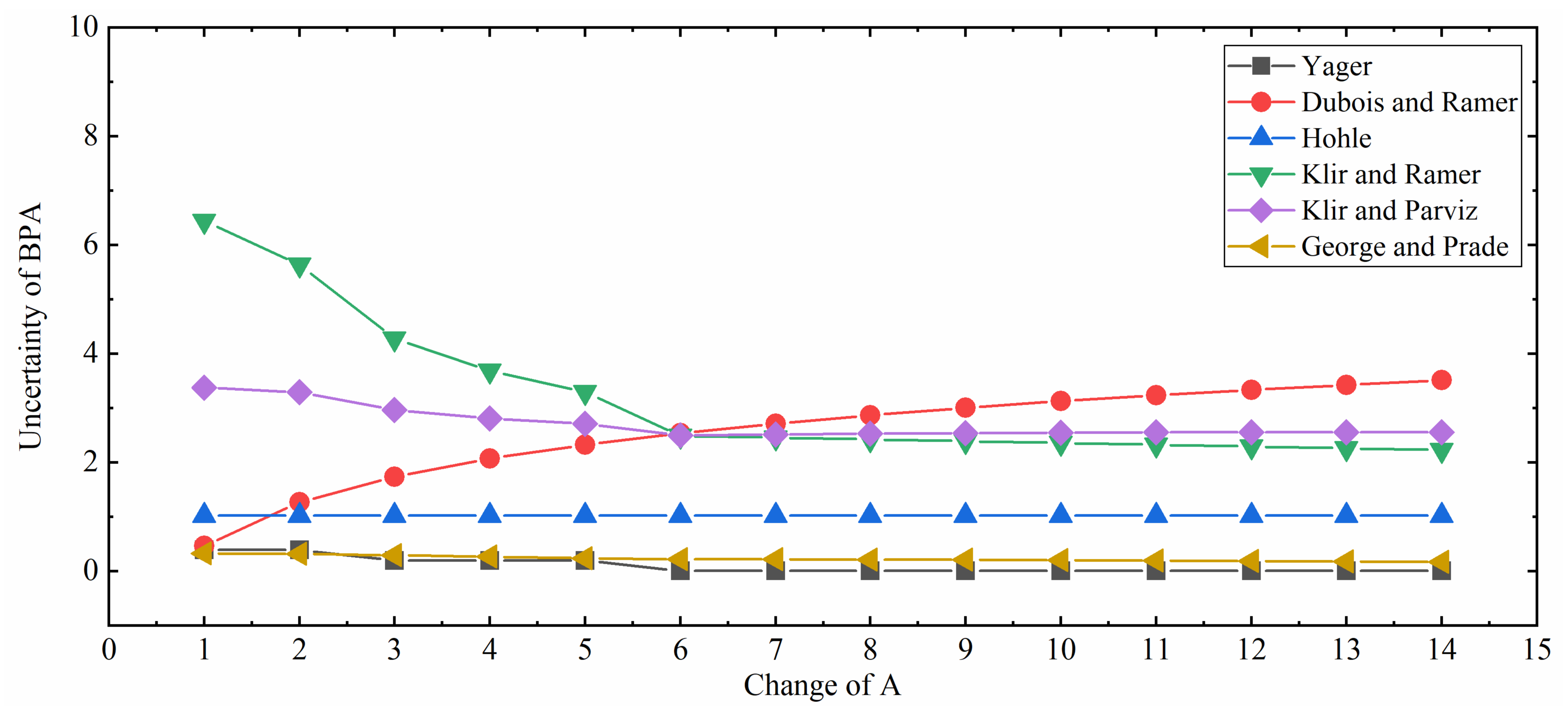

4. Numerical Examples

4.1. Example 1

4.2. Example 2

4.3. Example 3

4.4. Example 4

4.5. Example 5

5. Application

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Haukaas, T.; Der Kiureghian, A. Methods and object-oriented software for FE reliability and sensitivity analysis with application to a bridge structure. J. Comput. Civil. Eng. 2007, 21, 151–163.

2. Hattis, D.; Anderson, E.L. What should be the implications of uncertainty, variability, and inherent “biases”/“conservatism” for risk management decision-making? Risk Anal. 1999, 19, 95–107.

3. Der Kiureghian, A.; Ditlevsen, O. Aleatory or epistemic? Does it matter? Struct. Saf. 2009, 31, 105–112.

4. Zhou, D.; Tang, Y.; Jiang, W. An improved belief entropy and its application in decision-making. Complexity 2017, 2017, 4359195.

5. Deng, X.; Xiao, F.; Deng, Y. An improved distance-based total uncertainty measure in belief function theory. Appl. Intell. 2017, 46, 898–915.

6. Rényi, A. On measures of entropy and information. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability; Contributions to the Theory of Statistics; The Regents of the University of California: Oakland, CA, USA, 1961; Volume 1.

7. Shannon, C.E. A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2001, 5, 3–55.

8. Yin, L.; Deng, Y. Toward uncertainty of weighted networks: An entropy-based model. Physica A 2018, 508, 176–186.

9. Zavadskas, E.K.; Podvezko, V. Integrated determination of objective criteria weights in MCDM. Int. J. Inf. Technol. Decis. Mak. 2016, 15, 267–283.

10. Krylovas, A.; Kosareva, N.; Zavadskas, E.K. WEBIRA-comparative analysis of weight balancing method. Int. J. Comput. Commun. Control 2018, 12, 238–253.

11. Dempster, A.P. Upper and lower probabilities induced by a multivalued mapping. In Classic Works of the Dempster-Shafer Theory of Belief Functions; Springer: Berlin/Heidelberg, Germany, 2008; pp. 57–72.

12. Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976; Volume 42.

13. He, Z.; Jiang, W. A new belief Markov chain model and its application in inventory prediction. Int. J. Prod. Res. 2018, 56, 2800–2817.

14. Deng, X. Analyzing the monotonicity of belief interval based uncertainty measures in belief function theory. Int. J. Intell. Syst. 2018, 33, 1869–1879.

15. Dong, Y.; Zhang, J.; Li, Z.; Hu, Y.; Deng, Y. Combination of evidential sensor reports with distance function and belief entropy in fault diagnosis. Int. J. Comput. Commun. Control 2019, 14, 329–343.

16. Du, W.B.; Cao, X.B.; Hu, M.B.; Wang, W.X. Asymmetric cost in snowdrift game on scale-free networks. EPL 2009, 87, 60004.

17. Jiang, W.; Yang, Y.; Luo, Y.; Qin, X. Determining basic probability assignment based on the improved similarity measures of generalized fuzzy numbers. Int. J. Comput. Commun. Control 2015, 10, 333–347.

18. Neshat, A.; Pradhan, B. Risk assessment of groundwater pollution with a new methodological framework: Application of Dempster-Shafer theory and GIS. Nat. Hazards 2015, 78, 1565–1585.

19. Zhang, X.; Mahadevan, S.; Deng, X. Reliability analysis with linguistic data: An evidential network approach. Reliab. Eng. Syst. Saf. 2017, 162, 111–121.

20. Fu, C.; Wang, Y. An interval difference based evidential reasoning approach with unknown attribute weights and utilities of assessment grades. Comput. Ind. Eng. 2015, 81, 109–117.

21. Jiang, W.; Xie, C.; Zhuang, M.; Shou, Y.; Tang, Y. Sensor data fusion with z-numbers and its application in fault diagnosis. Sensors 2016, 16, 1509.

22. Jiang, W.; Xie, C.; Zhuang, M.; Tang, Y. Failure mode and effects analysis based on a novel fuzzy evidential method. Appl. Soft. Comput. 2017, 57, 672–683.

23. Vasu, J.Z.; Deb, A.K.; Mukhopadhyay, S. MVEM-based fault diagnosis of automotive engines using Dempster-Shafer theory and multiple hypotheses testing. IEEE Trans. Syst. Man Cybern.-Syst. 2015, 45, 977–989.

24. Xu, S.; Jiang, W.; Deng, X.; Shou, Y. A modified Physarum-inspired model for the user equilibrium traffic assignment problem. Appl. Math. Model. 2018, 55, 340–353.

25. Roychowdhury, S.; Koozekanani, D.D.; Parhi, K.K. DREAM: Diabetic retinopathy analysis using machine learning. IEEE J. Biomed. Health Inform. 2013, 18, 1717–1728.

26. Perez, A.; Tabia, H.; Declercq, D.; Zanotti, A. Using the conflict in Dempster-Shafer evidence theory as a rejection criterion in classifier output combination for 3D human action recognition. Image Vis. Comput. 2016, 55, 149–157.

27. Bhattacharyya, A.; Saraswat, V.; Manimaran, P.; Rao, S. Evidence theoretic classification of ballistic missiles. Appl. Soft. Comput. 2015, 37, 479–489.

28. Fei, L.; Deng, Y. A new divergence measure for basic probability assignment and its applications in extremely uncertain environments. Int. J. Intell. Syst. 2019, 34, 584–600.

29. Denoeux, T. Maximum likelihood estimation from uncertain data in the belief function framework. IEEE Trans. Knowl. Data Eng. 2011, 25, 119–130.

30. Deng, X.; Jiang, W.; Wang, Z. Zero-sum polymatrix games with link uncertainty: A Dempster-Shafer theory solution. Appl. Math. Comput. 2019, 340, 101–112.

31. Dzitac, I. The fuzzification of classical structures: A general view. Int. J. Comput. Commun. Control 2015, 10, 12–28.

32. Jiang, W.; Wei, B.; Zhan, J.; Xie, C.; Zhou, D. A visibility graph power averaging aggregation operator: A methodology based on network analysis. Comput. Ind. Eng. 2016, 101, 260–268.

33. Moosavian, A.; Khazaee, M.; Najafi, G.; Kettner, M.; Mamat, R. Spark plug fault recognition based on sensor fusion and classifier combination using Dempster-Shafer evidence theory. Appl. Acoust. 2015, 93, 120–129.

34. Yang, J.B.; Xu, D.L. Evidential reasoning rule for evidence combination. Artif. Intell. 2013, 205, 1–29.

35. Klir, G.J.; Ramer, A. Uncertainty in the Dempster-Shafer theory: A critical re-examination. Int. J. Gen. Syst. 1990, 18, 155–166.

36. Dubois, D.J.; Wellman, M.P.; D’Ambrosio, B. Uncertainty in Artificial Intelligence: Proceedings of the Eighth Conference (1992); Morgan Kaufmann: Burlington, MA, USA, 2014.

37. Dubois, D.; Prade, H. A note on measures of specificity for fuzzy sets. Int. J. Gen. Syst. 1985, 10, 279–283.

38. Höhle, U. Entropy with respect to plausibility measures. In Proceedings of the 12th IEEE International Symposium on Multiple-Valued Logic, Paris, France, 25–27 May 1982.

39. Yager, R.R. Entropy and specificity in a mathematical theory of evidence. Int. J. Gen. Syst. 1983, 9, 249–260.

40. Klir, G.J.; Parviz, B. A note on the measure of discord. In Uncertainty in Artificial Intelligence; Elsevier: Amsterdam, The Netherlands, 1992; pp. 138–141.

41. Körner, R.; Näther, W. On the specificity of evidences. Fuzzy Sets Syst. 1995, 71, 183–196.

42. Tang, Y.; Zhou, D.; Xu, S.; He, Z. A weighted belief entropy-based uncertainty measure for multi-sensor data fusion. Sensors 2017, 17, 928.

43. Deng, Y. Deng entropy. Chaos Solitons Fractals 2016, 91, 549–553.

44. Yuan, K.; Xiao, F.; Fei, L.; Kang, B.; Deng, Y. Modeling Sensor Reliability in Fault Diagnosis Based on Evidence Theory. Sensors 2016, 16, 113.

45. Yuan, K.; Xiao, F.; Fei, L.; Kang, B.; Deng, Y. Conflict management based on belief function entropy in sensor fusion. Springerplus 2016, 5, 638.

46. Pan, L.; Deng, Y. A new belief entropy to measure uncertainty of basic probability assignments based on belief function and plausibility function. Entropy 2018, 20, 842.

47. Yang, Y.; Han, D. A new distance-based total uncertainty measure in the theory of belief functions. Knowl.-Based Syst. 2016, 94, 114–123.

48. Li, Y.; Deng, Y. Generalized Ordered Propositions Fusion Based on Belief Entropy. Int. J. Comput. Commun. Control 2018, 13, 792–807.

49. Wang, X.; Song, Y. Uncertainty measure in evidence theory with its applications. Appl. Intell. 2018, 48, 1672–1688.

50. Pan, Q.; Zhou, D.; Tang, Y.; Li, X.; Huang, J. A Novel Belief Entropy for Measuring Uncertainty in Dempster-Shafer Evidence Theory Framework Based on Plausibility Transformation and Weighted Hartley Entropy. Entropy 2019, 21, 163.

51. George, T.; Pal, N.R. Quantification of conflict in Dempster-Shafer framework: A new approach. Int. J. Gen. Syst. 1996, 24, 407–423.

52. Jiang, W.; Wang, S. An Uncertainty Measure for Interval-valued Evidences. Int. J. Comput. Commun. Control 2017, 12, 631–644.

53. Deng, X.; Hu, Y.; Deng, Y.; Mahadevan, S. Environmental impact assessment based on D numbers. Expert Syst. Appl. 2014, 41, 635–643.

54. Klir, G.J.; Wierman, M.J. Uncertainty-Based Information: Elements of Generalized Information Theory; Physica: Berlin, Germany, 2013; Volume 15.

55. Jiroušek, R.; Shenoy, P.P. A new definition of entropy of belief functions in the Dempster-Shafer theory. Int. J. Approx. Reason. 2018, 92, 49–65.

56. Abellán, J.; Masegosa, A. Requirements for total uncertainty measures in Dempster-Shafer theory of evidence. Int. J. Gen. Syst. 2008, 37, 733–747.

57. Fan, X.; Zuo, M.J. Fault diagnosis of machines based on D-S evidence theory. Part 1: D-S evidence theory and its improvement. Pattern Recognit. Lett. 2006, 27, 366–376.

58. Zhou, D.; Tang, Y.; Jiang, W. A modified belief entropy in Dempster-Shafer framework. PLoS ONE 2017, 12, e0176832.

| Cases | Pan and Zhou | Yager | Deng Entropy | Dubois and Prade | Höhle | Klir and Ramer | Klir and Parviz | George and Pal | Zhou et al. | The Improved Belief Entropy |
|---|---|---|---|---|---|---|---|---|---|---|
| A = {1} | 1.9757 | 0.3952 | 2.6623 | 0.4699 | 1.0219 | 6.4419 | 3.3804 | 0.3317 | 2.5180 | 5.9870 |
| A = {1, 2} | 2.3362 | 0.3952 | 3.9303 | 1.2699 | 1.0219 | 5.6419 | 3.2956 | 0.3210 | 3.7090 | 9.2881 |
| A = {1, 2, 3} | 2.5232 | 0.1997 | 4.9082 | 1.7379 | 1.0219 | 4.2823 | 2.9709 | 0.2943 | 4.6100 | 11.2461 |
| A = {1, 2, 3, 4} | 2.7085 | 0.1997 | 5.7878 | 2.0699 | 1.0219 | 3.6863 | 2.8132 | 0.2677 | 5.4127 | 12.9904 |
| A = {1, 2, 3, 4, 5} | 2.8749 | 0.1997 | 6.6256 | 2.3274 | 1.0219 | 3.2946 | 2.7121 | 0.2410 | 6.1736 | 14.6352 |
| A = {1, 2, ..., 6} | 3.0516 | 0.0074 | 7.4441 | 2.5379 | 1.0219 | 2.4888 | 2.4992 | 0.2250 | 6.9151 | 16.3330 |
| A = {1, 2, ..., 7} | 3.0647 | 0.0074 | 8.2532 | 2.7158 | 1.0219 | 2.4562 | 2.5198 | 0.2219 | 7.6473 | 17.9447 |
| A = {1, 2, ..., 8} | 3.2042 | 0.0074 | 9.0578 | 2.8699 | 1.0219 | 2.4230 | 2.5336 | 0.2170 | 8.3749 | 19.6287 |
| A = {1, 2, ..., 9} | 3.3300 | 0.0074 | 9.8600 | 3.0059 | 1.0219 | 2.3898 | 2.5431 | 0.2108 | 9.1002 | 21.3103 |
| A = {1, 2, ..., 10} | 3.4445 | 0.0074 | 10.6612 | 3.1275 | 1.0219 | 2.3568 | 2.5494 | 0.2037 | 9.8244 | 22.9908 |
| A = {1, 2, ..., 11} | 3.5497 | 0.0074 | 11.4617 | 3.2375 | 1.0219 | 2.3241 | 2.5536 | 0.1959 | 10.5480 | 24.6708 |
| A = {1, 2, ..., 12} | 3.6469 | 0.0074 | 12.2620 | 3.3379 | 1.0219 | 2.2920 | 2.5562 | 0.1877 | 11.2714 | 26.3504 |
| A = {1, 2, ..., 13} | 3.7374 | 0.0074 | 13.0622 | 3.4303 | 1.0219 | 2.2605 | 2.5577 | 0.1791 | 11.9946 | 28.0300 |
| A = {1, 2, ..., 14} | 3.8219 | 0.0074 | 13.8622 | 3.5158 | 1.0219 | 2.2296 | 2.5582 | 0.1701 | 12.7177 | 29.7094 |
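The Deng Entropy column can be checked numerically. The sketch below is a minimal illustration that assumes a test BPA not stated in this section: Θ = {1, 2, ..., 15}, m({3, 4, 5}) = 0.05, m({6}) = 0.05, m(A) = 0.8 and m(Θ) = 0.1. It evaluates Deng entropy, E_d(m) = -Σ m(A) log2(m(A) / (2^|A| - 1)), for A = {1} through A = {1, ..., 14}; the helper names `deng_entropy` and `example_bpa` are hypothetical. Under that assumed BPA the printed values agree with the Deng Entropy column to four decimal places.

```python
import math


def deng_entropy(bpa):
    """Deng entropy: -sum of m(A) * log2(m(A) / (2**|A| - 1)) over focal elements A."""
    total = 0.0
    for focal, mass in bpa.items():
        if mass > 0:
            total -= mass * math.log2(mass / (2 ** len(focal) - 1))
    return total


# Assumed frame of discernment for the test case: {1, ..., 15}.
THETA = frozenset(range(1, 16))


def example_bpa(size):
    """Assumed test BPA with A = {1, ..., size}; the other three masses stay fixed."""
    return {
        frozenset({3, 4, 5}): 0.05,
        frozenset({6}): 0.05,
        frozenset(range(1, size + 1)): 0.8,
        THETA: 0.1,
    }


for size in range(1, 15):
    print(f"|A| = {size:2d}  Deng entropy = {deng_entropy(example_bpa(size)):.4f}")
```

Because the divisor 2^|A| - 1 grows with the cardinality of A, moving the 0.8 mass onto larger sets raises the entropy steadily, which is the monotone trend visible in that column.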

| Sensor Report | {F1} | {F2} | {F2,F3} | Θ |
|---|---|---|---|---|
| E1 | 0.60 | 0.10 | 0.10 | 0.20 |
| E2 | 0.05 | 0.80 | 0.05 | 0.10 |
| E3 | 0.70 | 0.10 | 0.10 | 0.10 |
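The belief interval named in the title is the pair [Bel(A), Pl(A)], where Bel(A) sums the masses of focal elements contained in A and Pl(A) sums the masses of focal elements that intersect A. As a minimal sketch, assuming the frame Θ = {F1, F2, F3} implied by the focal elements of the sensor reports, the code below prints the interval of every singleton for each report; `belief`, `plausibility` and `REPORTS` are illustrative names, not taken from the paper.

```python
# Assumed frame of discernment, inferred from the focal elements in the table.
THETA = frozenset({"F1", "F2", "F3"})

# The three sensor reports, in the order listed in the table.
REPORTS = [
    {frozenset({"F1"}): 0.60, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.20},
    {frozenset({"F1"}): 0.05, frozenset({"F2"}): 0.80, frozenset({"F2", "F3"}): 0.05, THETA: 0.10},
    {frozenset({"F1"}): 0.70, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.10},
]


def belief(bpa, a):
    """Bel(A): total mass of the focal elements that are subsets of A."""
    return sum(m for b, m in bpa.items() if b <= a)


def plausibility(bpa, a):
    """Pl(A): total mass of the focal elements that intersect A."""
    return sum(m for b, m in bpa.items() if b & a)


for i, bpa in enumerate(REPORTS, start=1):
    for h in sorted(THETA):
        a = frozenset({h})
        print(f"report {i}  {h}: [{belief(bpa, a):.2f}, {plausibility(bpa, a):.2f}]")
```

For the first report, for instance, F1 gets [0.60, 0.80]: only m({F1}) lies inside {F1}, while m(Θ) = 0.20 also intersects it.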

| | {F1} | {F2} | {F2,F3} | Θ |
|---|---|---|---|---|
| Fused Results | 0.4519 | 0.5048 | 0.0336 | 0.0096 |
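The fused row can be reproduced with Dempster's rule, m(A) = Σ_{B∩C=A} m1(B) m2(C) / (1 - K), where the conflict is K = Σ_{B∩C=∅} m1(B) m2(C). Because the rule is commutative and associative, the three reports can be folded pairwise. This is a minimal sketch that assumes the frame {F1, F2, F3}; `dempster_combine` and `REPORTS` are illustrative names.

```python
from functools import reduce

# Assumed frame of discernment, inferred from the focal elements in the table.
THETA = frozenset({"F1", "F2", "F3"})

REPORTS = [
    {frozenset({"F1"}): 0.60, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.20},
    {frozenset({"F1"}): 0.05, frozenset({"F2"}): 0.80, frozenset({"F2", "F3"}): 0.05, THETA: 0.10},
    {frozenset({"F1"}): 0.70, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.10},
]


def dempster_combine(m1, m2):
    """Dempster's rule: conjunctive combination, then normalisation by 1 - K."""
    combined = {}
    conflict = 0.0
    for b, mb in m1.items():
        for c, mc in m2.items():
            inter = b & c
            if inter:
                combined[inter] = combined.get(inter, 0.0) + mb * mc
            else:
                conflict += mb * mc  # mass assigned to the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {a: v / (1.0 - conflict) for a, v in combined.items()}


fused = reduce(dempster_combine, REPORTS)
for focal in (frozenset({"F1"}), frozenset({"F2"}), frozenset({"F2", "F3"}), THETA):
    print(sorted(focal), round(fused[focal], 4))
```

The exact results are 94/208, 105/208, 7/208 and 2/208, about 0.4519, 0.5048, 0.03365 and 0.0096; the table's 0.0336 for {F2,F3} corresponds to truncating 0.03365 rather than rounding it. Note that the fused mass favours F2 even though two of the three reports favour F1, because the second report conflicts strongly with the others.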

| E1 | E2 | E3 |
|---|---|---|
| 1.0000 | 0.2040 | 1.0000 |
| 1.0000 | 0.5523 | 0.9660 |
| 2.2909 | 1.3819 | 1.7960 |
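Applying the Deng entropy formula, E_d(m) = -Σ m(A) log2(m(A) / (2^|A| - 1)), to the three sensor reports gives 2.2909, 1.3819 and 1.7960, matching the third row of the table above, so that row appears to hold the Deng entropy of E1, E2 and E3. The sketch assumes the frame {F1, F2, F3}, so m(Θ) is divided by 2^3 - 1 = 7; `deng_entropy` and `REPORTS` are illustrative names.

```python
import math

# Assumed frame of discernment, inferred from the focal elements of the reports.
THETA = frozenset({"F1", "F2", "F3"})

REPORTS = {
    "E1": {frozenset({"F1"}): 0.60, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.20},
    "E2": {frozenset({"F1"}): 0.05, frozenset({"F2"}): 0.80, frozenset({"F2", "F3"}): 0.05, THETA: 0.10},
    "E3": {frozenset({"F1"}): 0.70, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.10},
}


def deng_entropy(bpa):
    """Deng entropy: each mass is spread over the 2**|A| - 1 non-empty subsets of A."""
    return -sum(m * math.log2(m / (2 ** len(a) - 1)) for a, m in bpa.items() if m > 0)


for name, bpa in REPORTS.items():
    print(name, f"{deng_entropy(bpa):.4f}")
```

The second report, which concentrates 0.80 on a single fault, has the lowest entropy; the first, with the most mass on Θ, has the highest.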

| Methods | {F1} | {F2} | {F2,F3} | Θ |
|---|---|---|---|---|
| Dempster’s rule of combination [11,12] | 0.4519 | 0.5048 | 0.0336 | 0.0096 |
| Fan et al.’s method [57] | 0.8119 | 0.1096 | 0.0526 | 0.0259 |
| Yuan et al.’s method [44] | 0.8948 | 0.0739 | 0.0241 | 0.0072 |
| Zhou et al.’s method [58] | 0.8951 | 0.0738 | 0.0240 | 0.0071 |
| The improved belief entropy | 0.8763 | 0.0961 | 0.0219 | 0.0057 |
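Unlike plain Dempster combination, the weighted methods in this comparison shift most of the mass back to F1. One common pattern for such conflict handling is to average the reports with per-report weights and then combine the averaged BPA with itself n - 1 times using Dempster's rule; with equal weights this is Murphy's simple averaging. The sketch below assumes that scheme with caller-supplied weights. It does not implement the weighting of any method in the table, and `weighted_average` and `averaged_fusion` are hypothetical names.

```python
from functools import reduce

# Assumed frame of discernment, inferred from the focal elements of the reports.
THETA = frozenset({"F1", "F2", "F3"})

REPORTS = [
    {frozenset({"F1"}): 0.60, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.20},
    {frozenset({"F1"}): 0.05, frozenset({"F2"}): 0.80, frozenset({"F2", "F3"}): 0.05, THETA: 0.10},
    {frozenset({"F1"}): 0.70, frozenset({"F2"}): 0.10, frozenset({"F2", "F3"}): 0.10, THETA: 0.10},
]


def dempster_combine(m1, m2):
    """Dempster's rule: conjunctive combination, then normalisation by 1 - K."""
    combined, conflict = {}, 0.0
    for b, mb in m1.items():
        for c, mc in m2.items():
            inter = b & c
            if inter:
                combined[inter] = combined.get(inter, 0.0) + mb * mc
            else:
                conflict += mb * mc
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {a: v / (1.0 - conflict) for a, v in combined.items()}


def weighted_average(bpas, weights):
    """Average BPAs focal element by focal element, with weights normalised to sum to 1."""
    total = sum(weights)
    avg = {}
    for bpa, w in zip(bpas, weights):
        for a, m in bpa.items():
            avg[a] = avg.get(a, 0.0) + (w / total) * m
    return avg


def averaged_fusion(bpas, weights):
    """Combine the weighted-average BPA with itself n - 1 times using Dempster's rule."""
    avg = weighted_average(bpas, weights)
    return reduce(dempster_combine, [avg] * len(bpas))


# Hypothetical equal weights (Murphy's averaging); an entropy-based method would
# instead derive unequal weights from the uncertainty of each report.
fused = averaged_fusion(REPORTS, [1.0, 1.0, 1.0])
for focal in (frozenset({"F1"}), frozenset({"F2"}), frozenset({"F2", "F3"}), THETA):
    print(sorted(focal), round(fused[focal], 4))
```

Even with equal weights, averaging dilutes the outlying second report enough for F1 to regain the largest mass; the weighted methods in the table push further by lowering the weight of that report.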

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, Y.; Ji, D.; Yang, X.; Fei, L.; Zhai, C. An Improved Belief Entropy to Measure Uncertainty of Basic Probability Assignments Based on Deng Entropy and Belief Interval. Entropy 2019, 21, 1122. https://doi.org/10.3390/e21111122
