Texture Classification Using Spectral Entropy of Acoustic Signal Generated by a Human Echolocator

Abstract

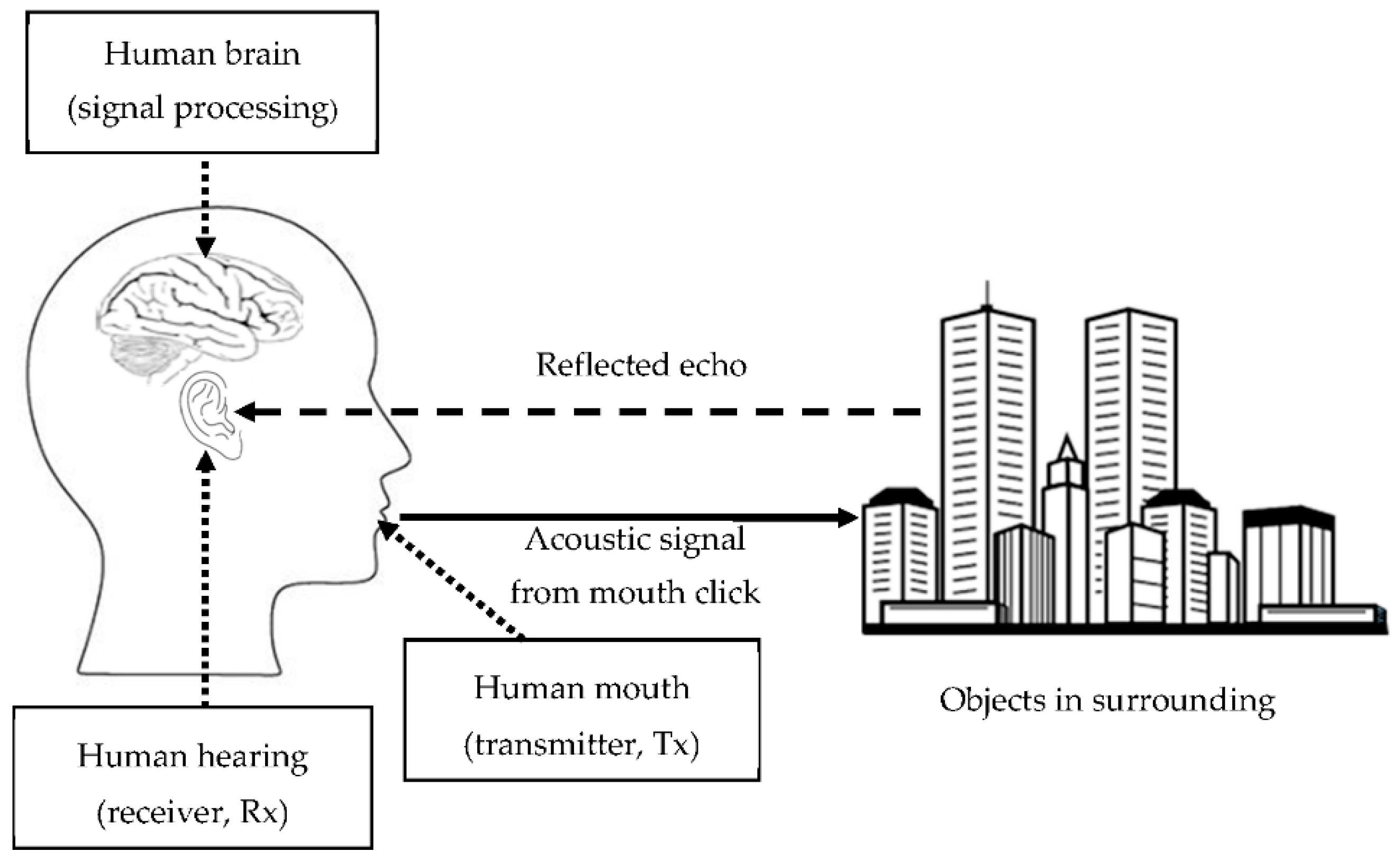

1. Introduction

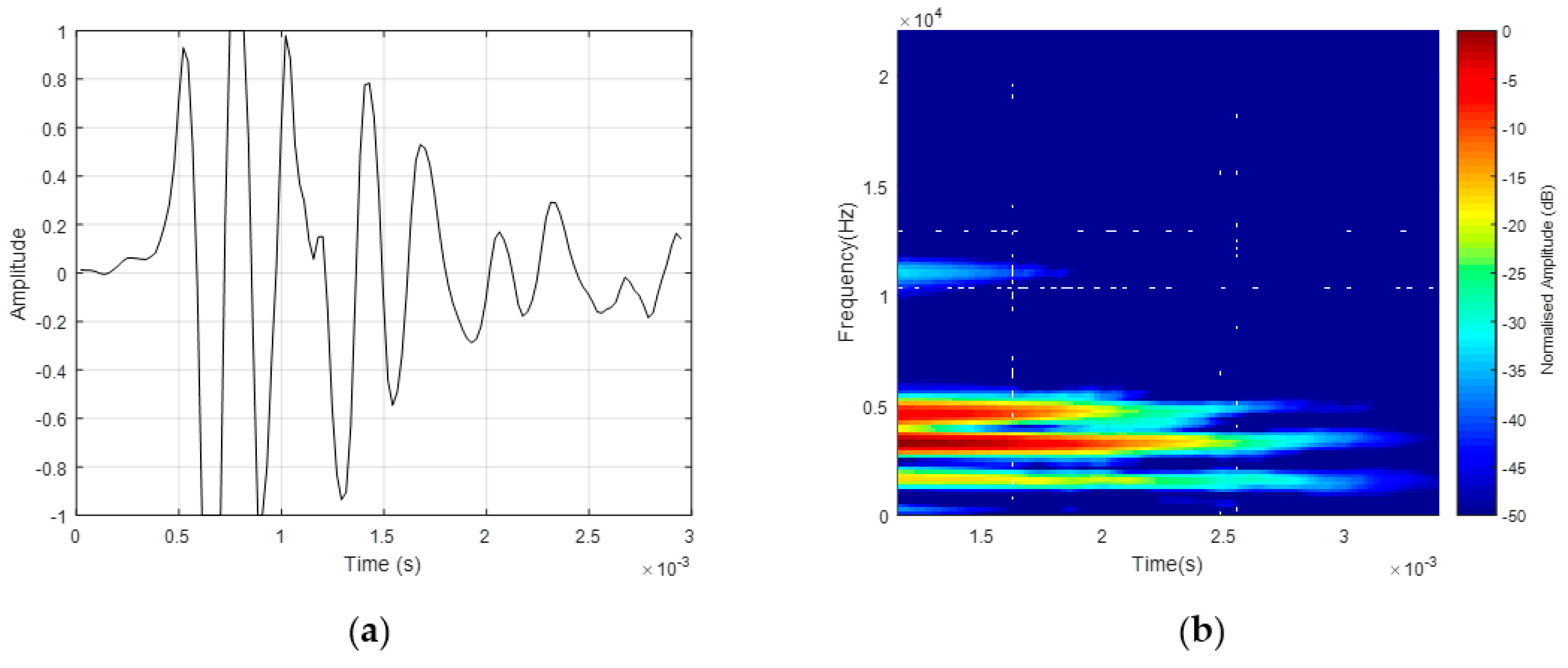

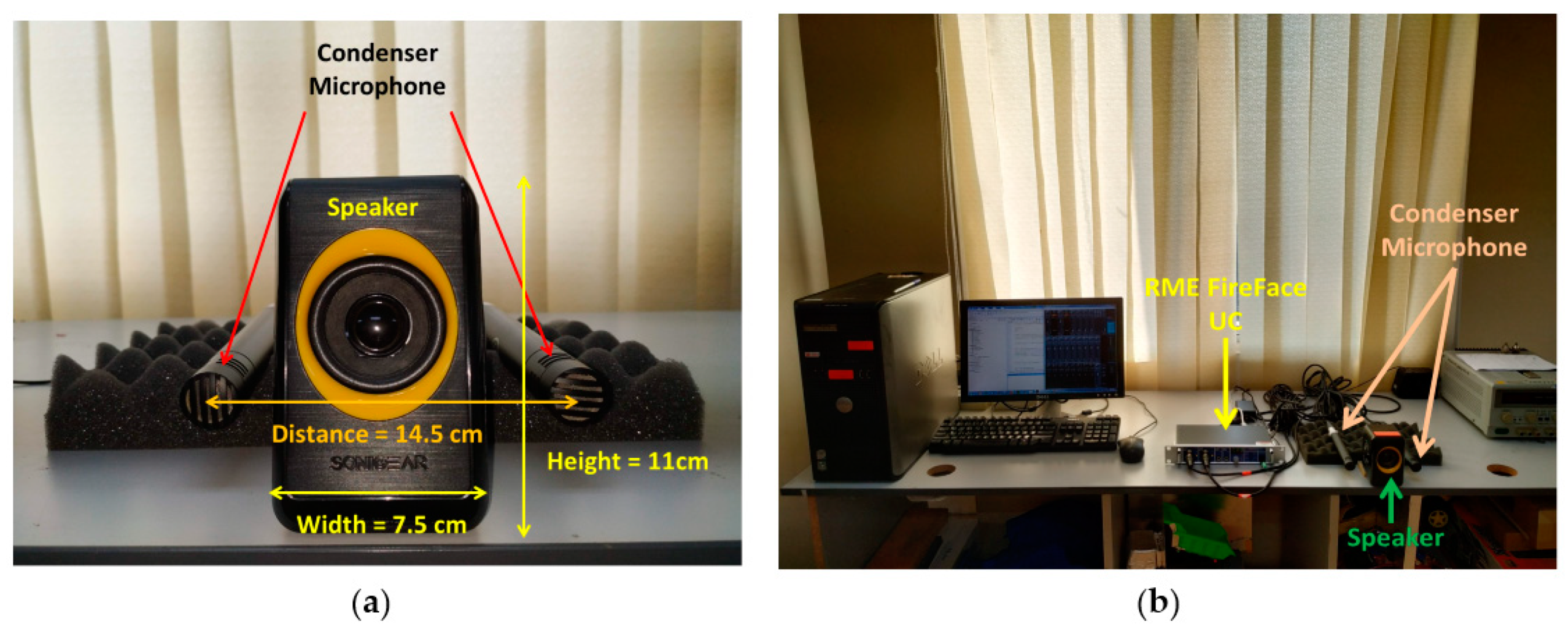

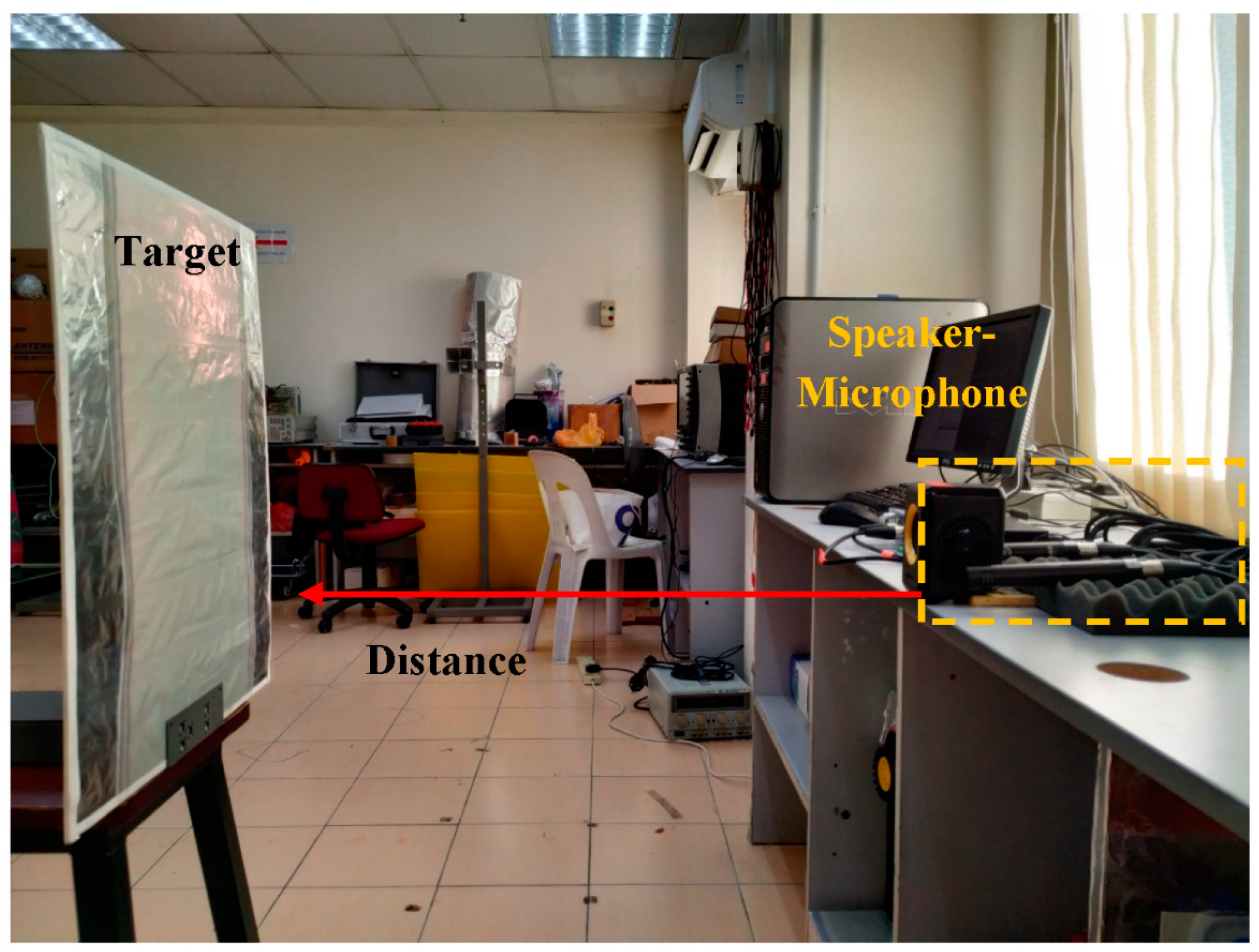

2. Signal Characteristics and Experimental Setup

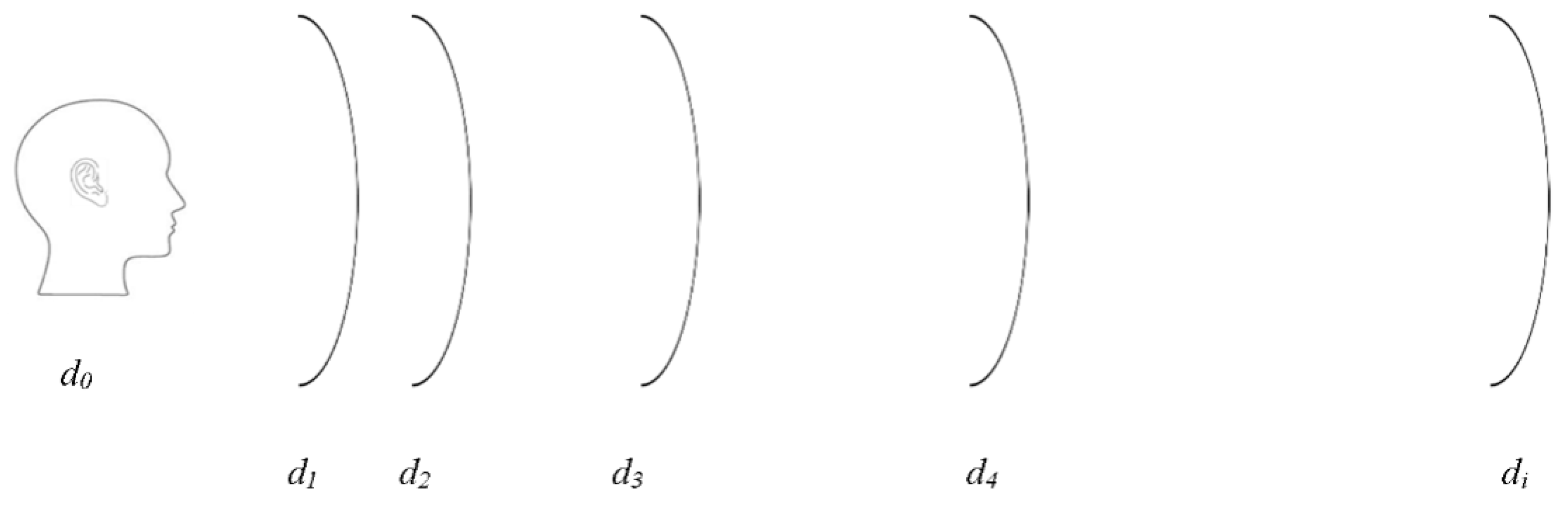

2.1. Human Mouth-Click Signal Characteristics

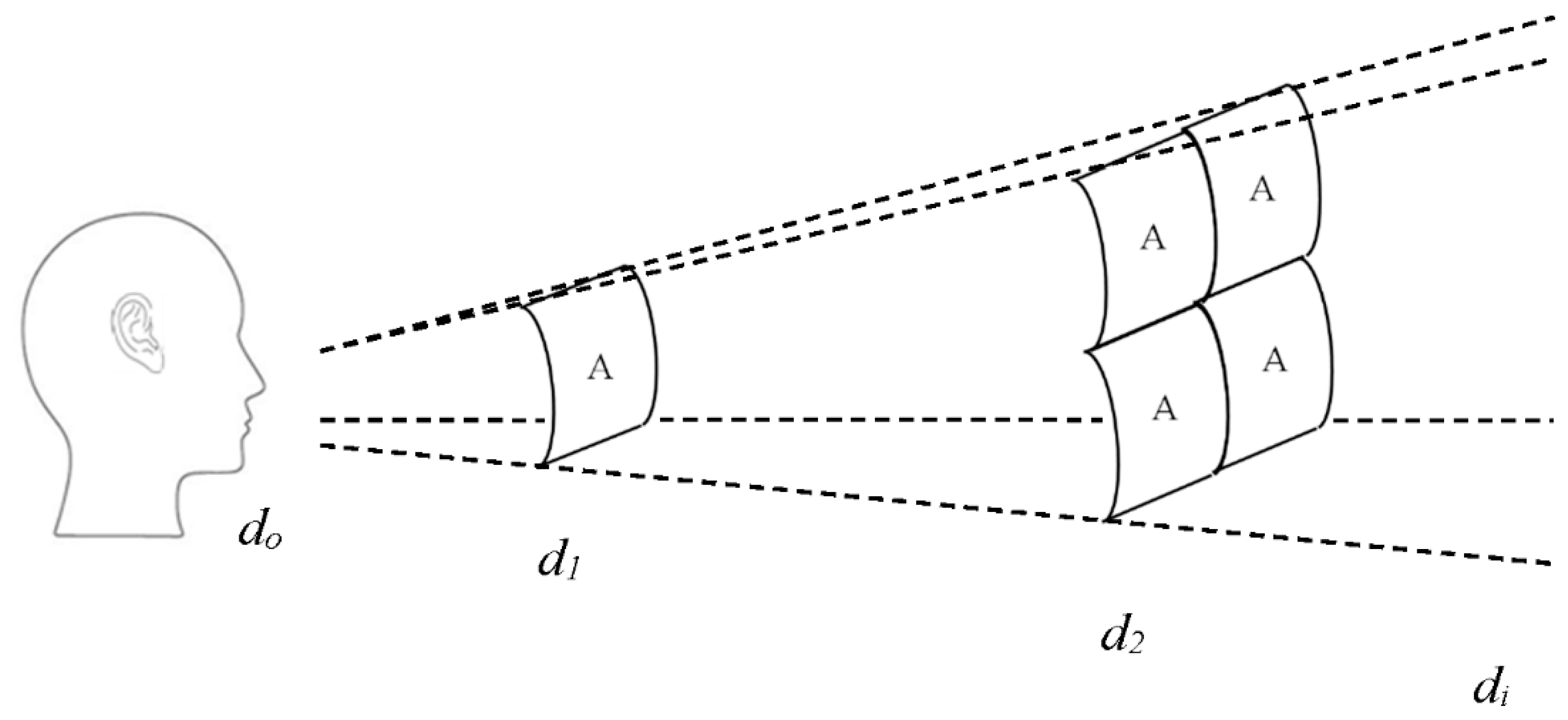

2.2. Human Mouth–Ear Experimental Setup

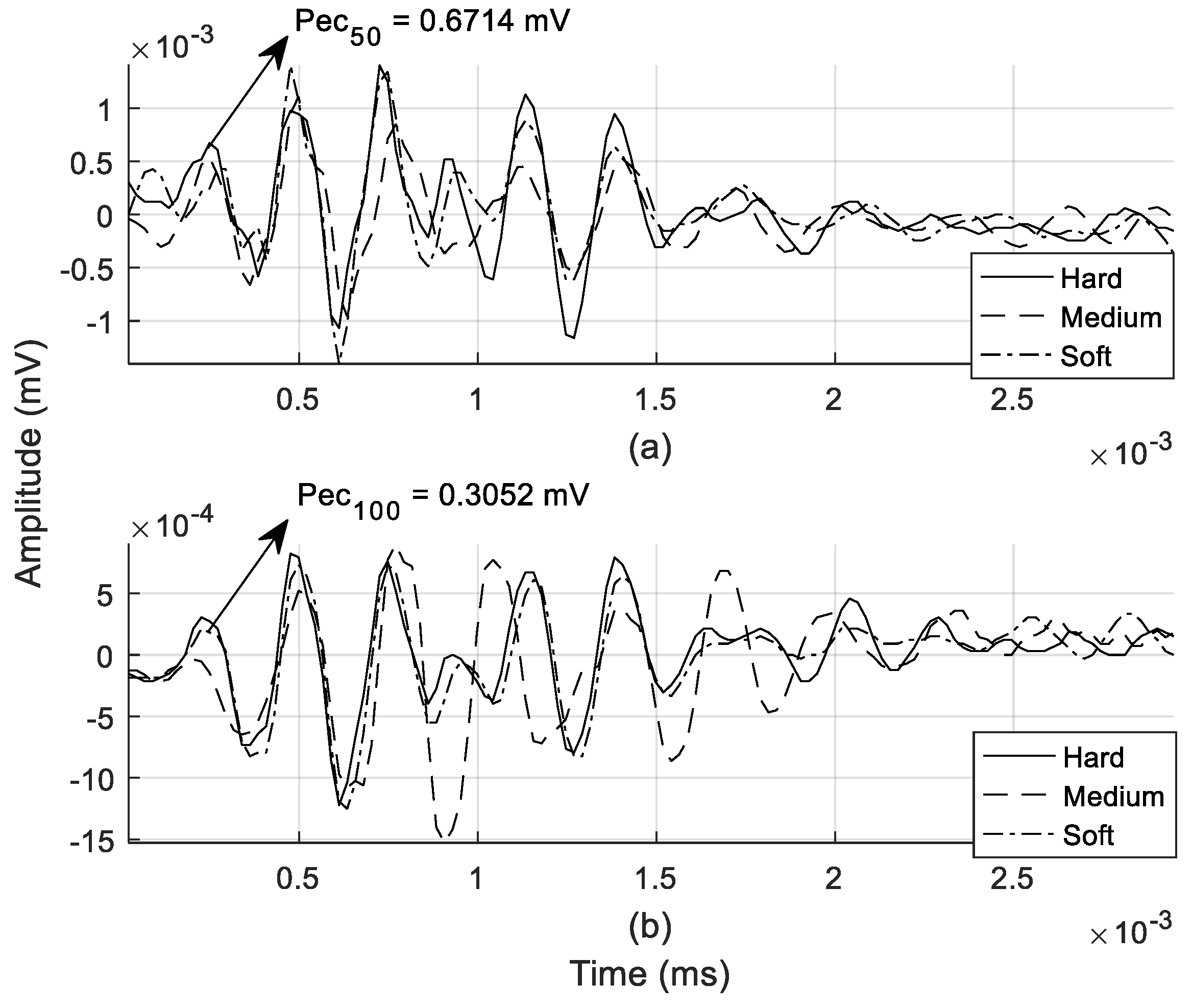

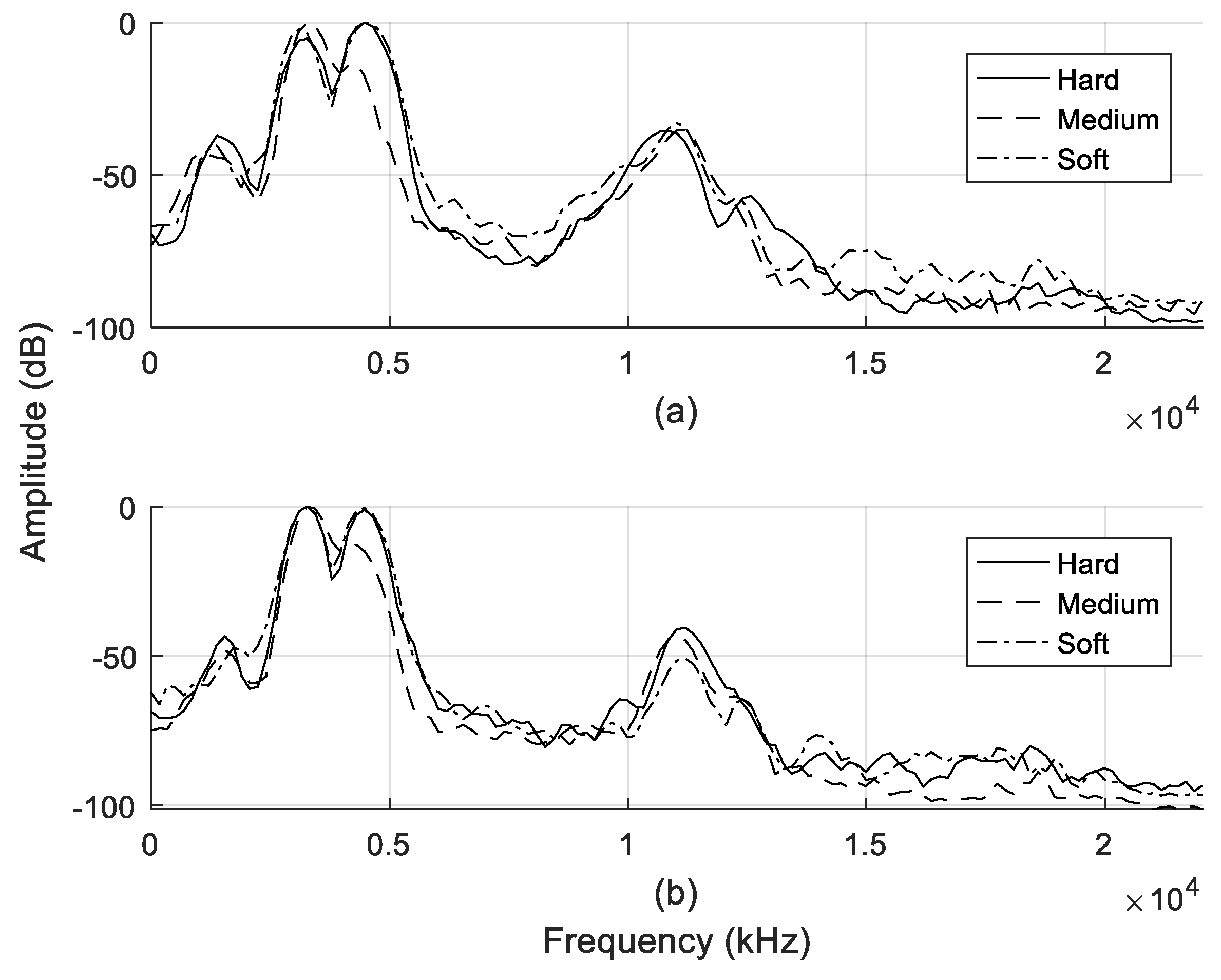

2.3. Signature of Reflected Echo Signal

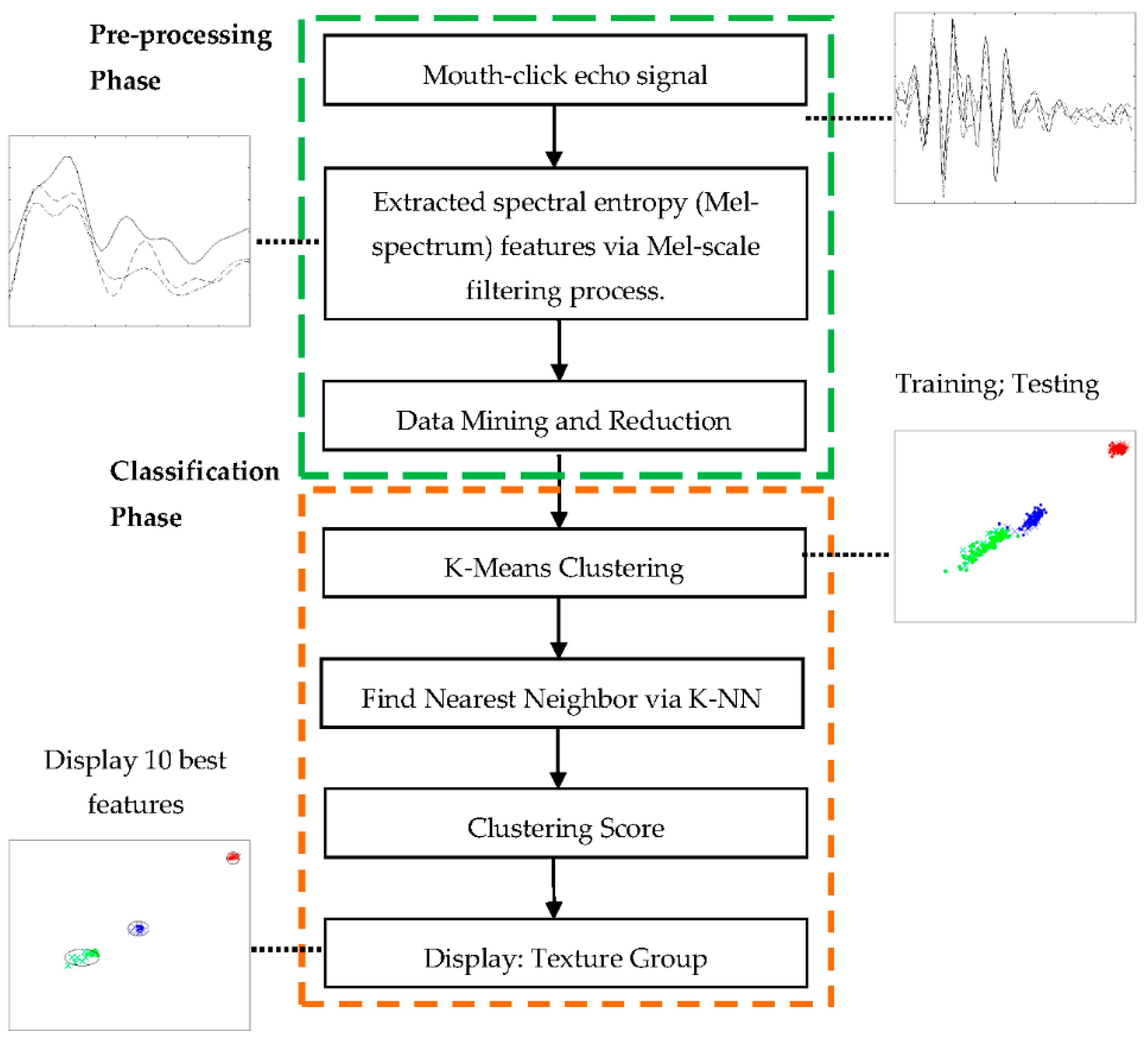

3. Spectral Entropy Features and Classification Framework

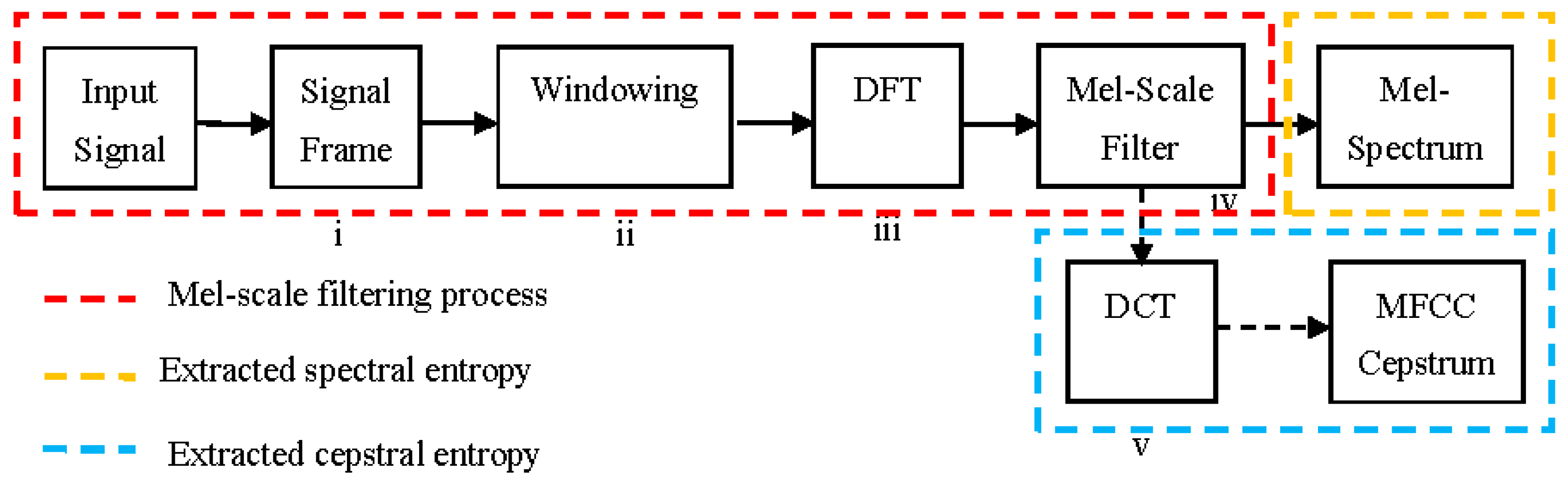

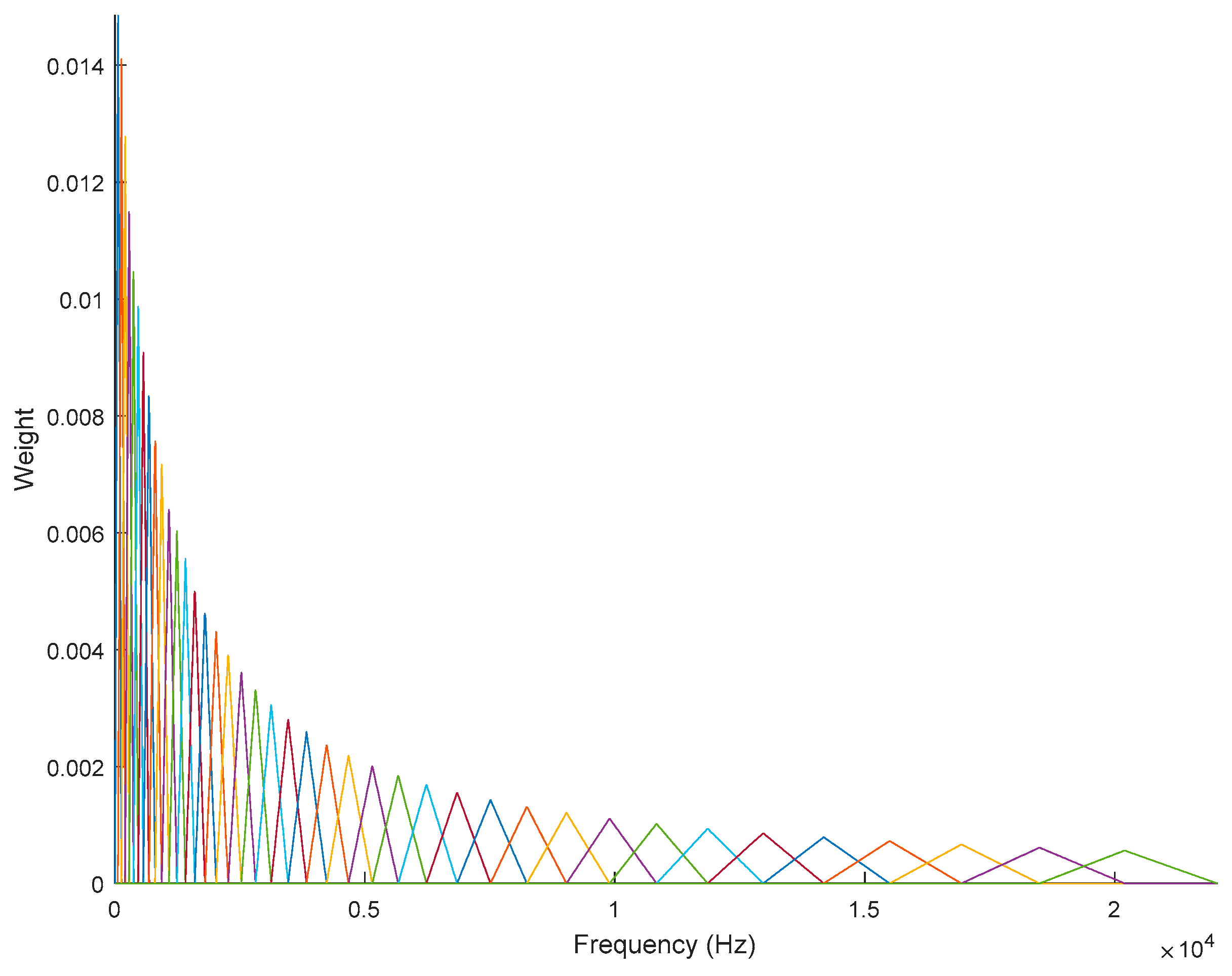

3.1. MFCC Structure

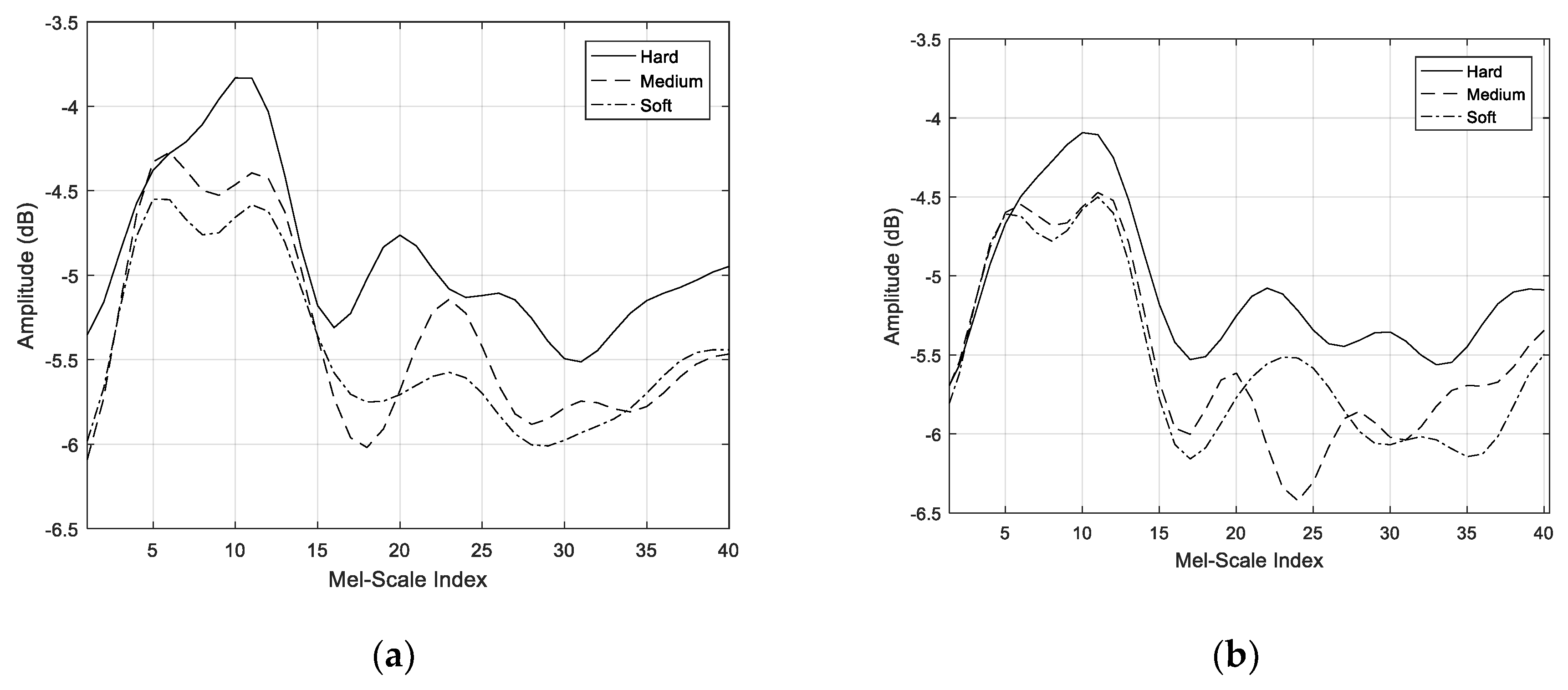

3.2. Mel-Spectrum Output

3.3. Classification Assignment Using K-Means and K-NN Validation

4. Results Evaluation and Discussion

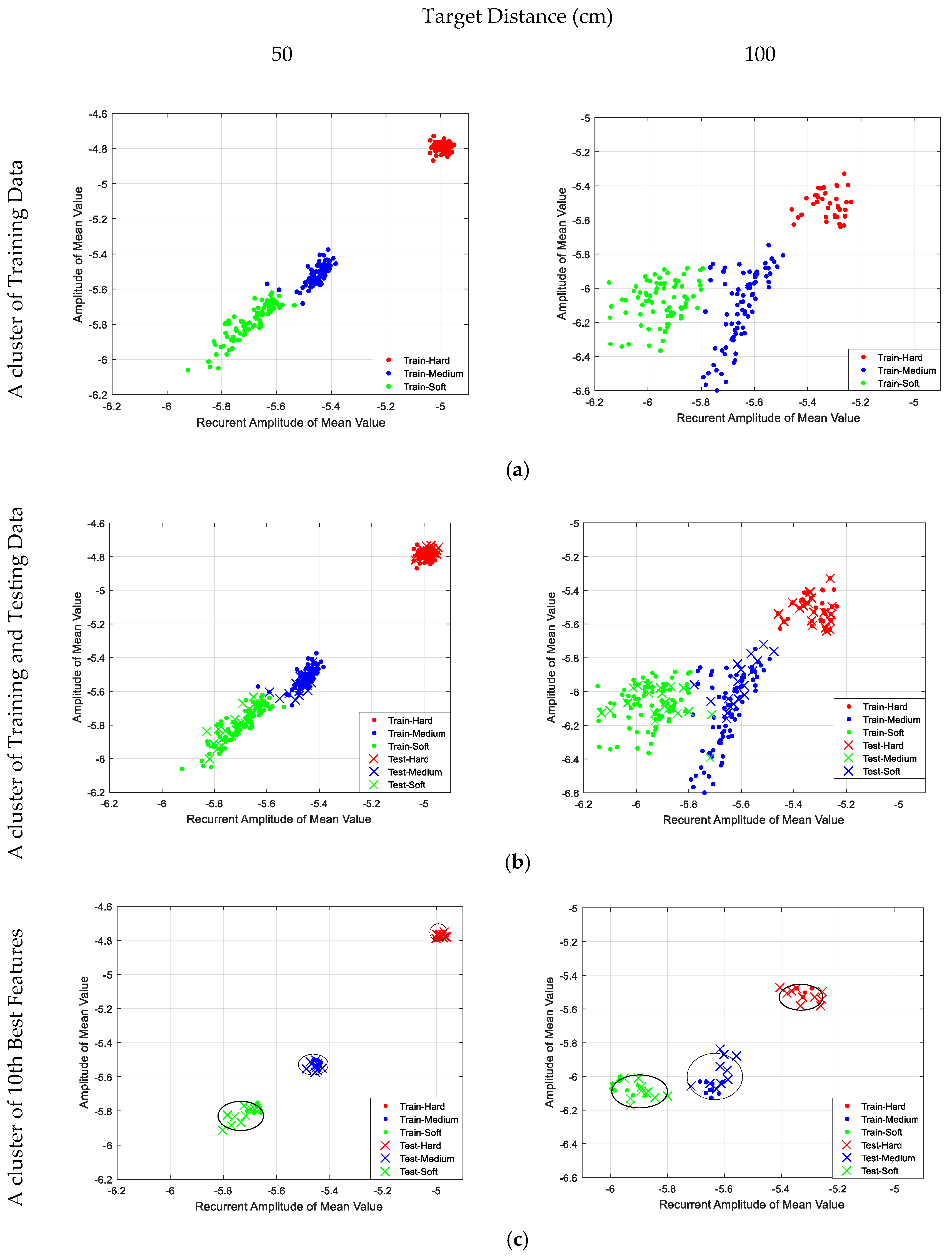

4.1. Clustering Results

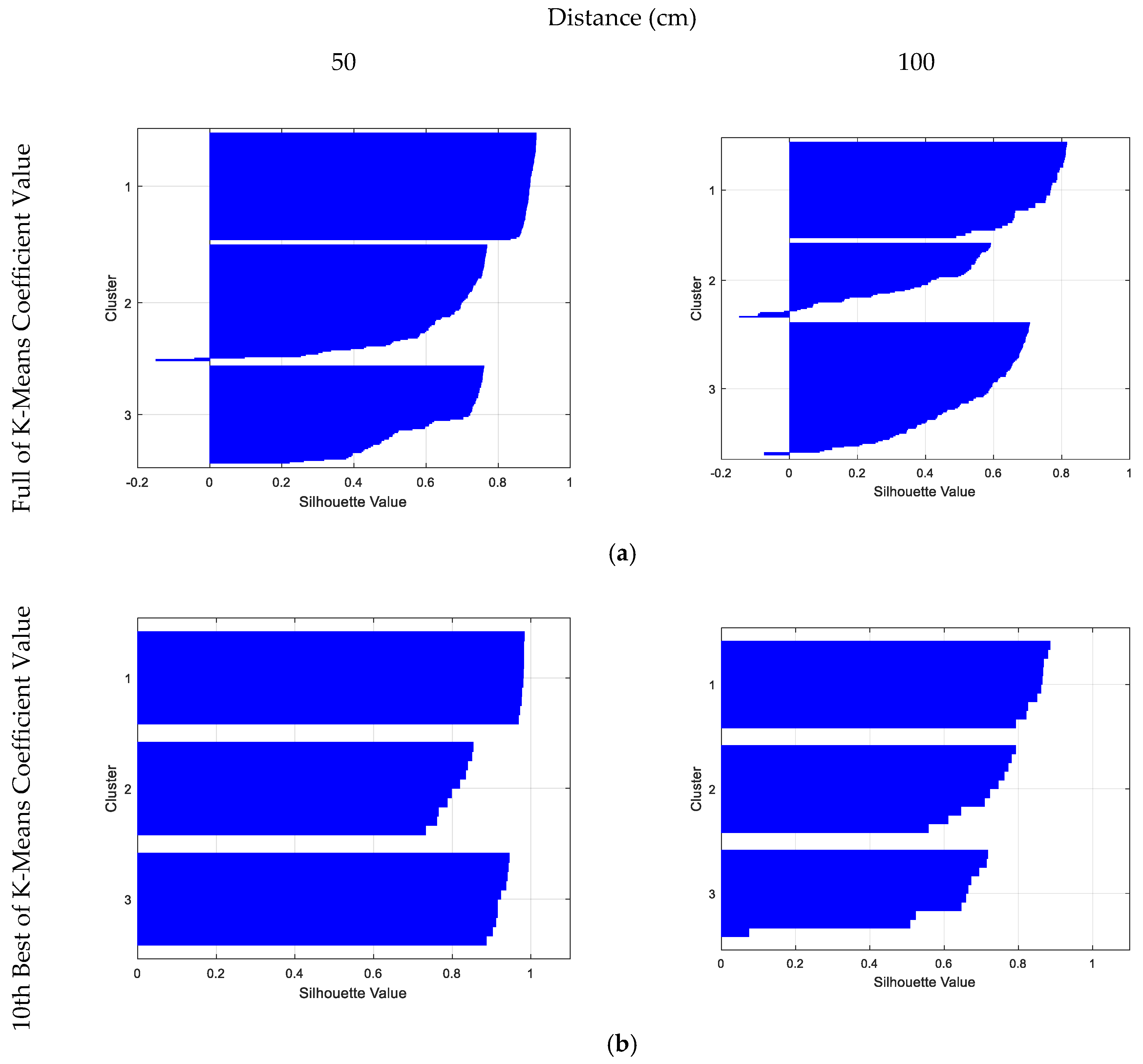

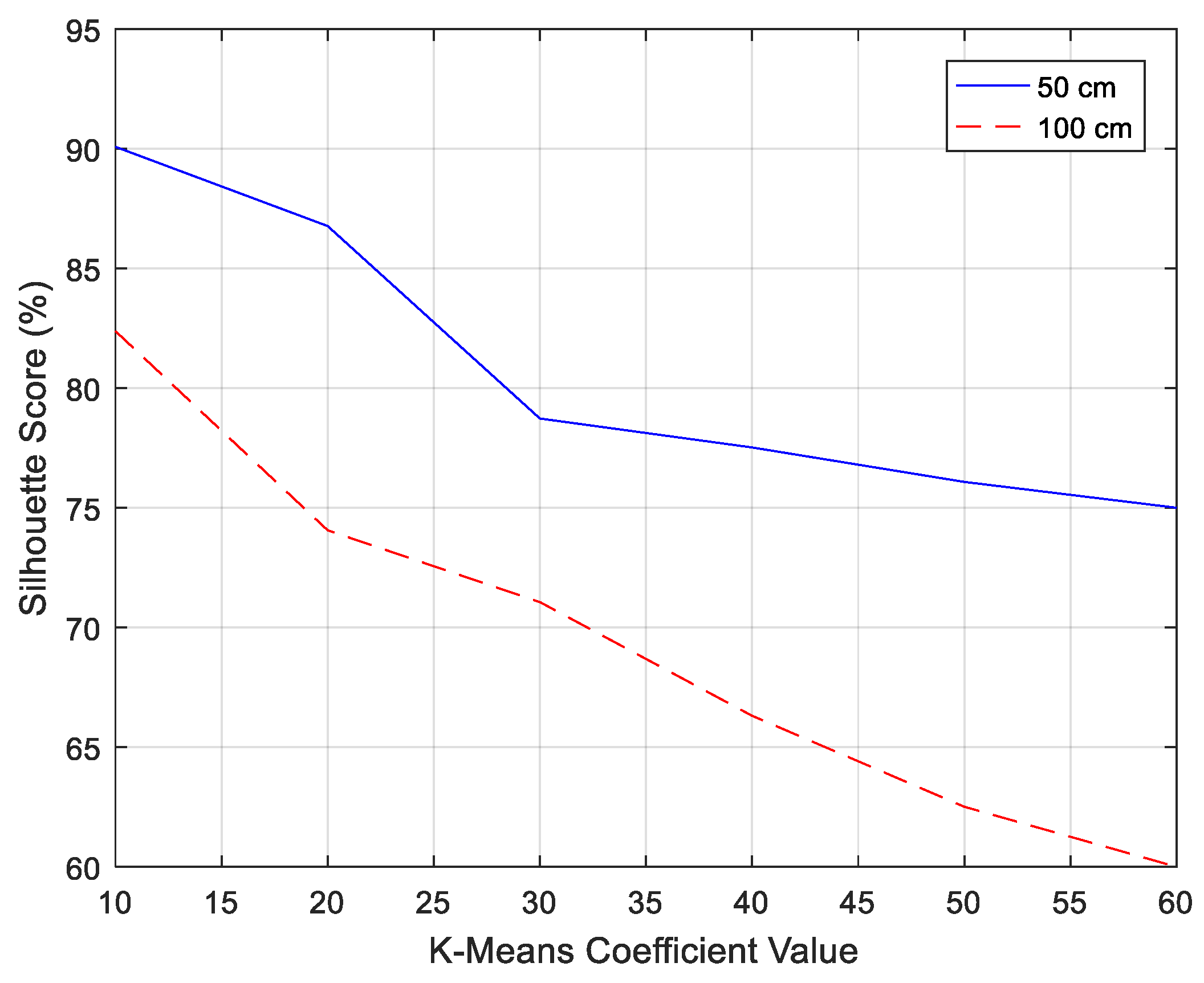

4.2. Silhouette Results

4.3. Confusion Matrix

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Texture | Material | Dimension (cm) |
|---|---|---|
| Hard | Flat PVC board wrapped with aluminum foil | L = 50, H = 50, W = 0.5 |
| Medium | Rubber mat | L = 50, H = 50, W = 0.8 |
| Soft | Sponge | L = 50, H = 50, W = 4 |

Dataset of signals (recorded by microphone):

| Distance | Texture | Training (Left) | Training (Right) | ANOVA Probability | Testing (Left) | Testing (Right) |
|---|---|---|---|---|---|---|
| 50 cm | Hard | 40 | 40 | 0.9990 | 10 | 10 |
| 50 cm | Medium | 40 | 40 | 0.9810 | 10 | 10 |
| 50 cm | Soft | 40 | 40 | 0.9850 | 10 | 10 |
| 100 cm | Hard | 40 | 40 | 0.9266 | 10 | 10 |
| 100 cm | Medium | 40 | 40 | 0.9031 | 10 | 10 |
| 100 cm | Soft | 40 | 40 | 0.9132 | 10 | 10 |

Confusion matrix score (%):

| Distance | Texture | Hard (%) | Medium (%) | Soft (%) |
|---|---|---|---|---|
| 50 cm | Hard | 100 | 0 | 0 |
| 50 cm | Medium | 0 | 100 | 0 |
| 50 cm | Soft | 0 | 0 | 100 |
| 100 cm | Hard | 100 | 0 | 0 |
| 100 cm | Medium | 0 | 100 | 0 |
| 100 cm | Soft | 0 | 0 | 100 |
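
The percentages in the confusion matrix table above can be read as row-normalised counts: for each texture, the share of test signals assigned to each class. The following is a minimal sketch of that arithmetic, not the authors' implementation. It assumes rows are the true texture and columns the assigned class, and it assumes the 10 left and 10 right test signals are pooled into 20 signals per texture. The function name `confusion_percent` and the label lists are hypothetical.

```python
import numpy as np

# Class names taken from the texture table; the ordering is an assumption.
LABELS = ["Hard", "Medium", "Soft"]


def confusion_percent(true_labels, predicted_labels, labels=LABELS):
    """Return a row-normalised confusion matrix in percent.

    Rows are true classes, columns are assigned classes (assumed convention).
    """
    index = {name: i for i, name in enumerate(labels)}
    counts = np.zeros((len(labels), len(labels)), dtype=float)
    for true_name, predicted_name in zip(true_labels, predicted_labels):
        counts[index[true_name], index[predicted_name]] += 1

    # Divide each row by its total; rows with no samples stay at zero.
    row_totals = counts.sum(axis=1, keepdims=True)
    fractions = np.divide(
        counts, row_totals, out=np.zeros_like(counts), where=row_totals > 0
    )
    return 100.0 * fractions


# Hypothetical test set: 20 signals per texture, every one assigned correctly.
true_labels = [name for name in LABELS for _ in range(20)]
predicted_labels = list(true_labels)
scores = confusion_percent(true_labels, predicted_labels)
print(scores)
```

With every test signal assigned to its own texture, each diagonal entry is 100 and every off-diagonal entry is 0, which is the pattern reported for both distances. A single misassigned signal out of 20 would lower the corresponding diagonal entry to 95.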

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Raja Abdullah, R.S.A.; Saleh, N.L.; Syed Abdul Rahman, S.M.; Zamri, N.S.; Abdul Rashid, N.E. Texture Classification Using Spectral Entropy of Acoustic Signal Generated by a Human Echolocator. Entropy 2019, 21, 963. https://doi.org/10.3390/e21100963
