Abstract

Globally-constrained classical fields provide an unexplored framework for modeling quantum phenomena, including apparent particle-like behavior. By allowing controllable constraints on unknown past fields, these models are retrocausal but not retro-signaling, respecting the conventional block universe viewpoint of classical spacetime. Several example models are developed that resolve the most essential problems with using classical electromagnetic fields to explain single-photon phenomena. These models share some similarities with Stochastic Electrodynamics, but without the infinite background energy problem, and with a clear path to explaining entanglement phenomena. Intriguingly, the average intermediate field intensities share a surprising connection with quantum “weak values”, even in the single-photon limit. It is hoped that this new class of models will guide further research into spacetime-based accounts of weak values, entanglement, and other quantum phenomena.

1. Introduction

In principle, retrocausal models of quantum phenomena offer the enticing possibility of replacing the high-dimensional configuration space of quantum mechanics with ordinary spacetime, without breaking Lorentz covariance or utilizing action-at-a-distance [1,2,3,4,5,6]. Any quantum model based entirely on spacetime-localized parameters would obviously be much easier to reconcile with general relativity, not to mention macroscopic classical observations. (In general, block-universe retrocausal models can violate Bell-type inequalities because they contain hidden variables that are constrained by the future measurement settings. These constraints can be mediated via continuous influence on the particle worldlines, explicitly violating the independence assumption utilized in Bell-type no-go theorems.)

In practice, however, the most sophisticated spacetime-based retrocausal models to date only apply to a pair of maximally entangled particles [3,7,8,9]. A recent retrocausal proposal from Sen [10] is more likely to extend to more of quantum theory, but without a retrocausal mechanism it would have to use calculations in configuration space, preparing whatever initial distribution is needed to match the expected final measurement. Sutherland’s retrocausal Bohmian model [11] also uses some calculations in configuration space. Given the difficulties in extending known retrocausal models to more sophisticated situations, further development may require entirely new approaches.

One obvious way to change the character of existing retrocausal models is to replace the usual particle ontology with a framework built upon spacetime-based fields. Every quantum “particle”, after all, is thought to actually be an excitation of a quantum field, and every quantum field has a corresponding classical field that could exist in ordinary spacetime. The classical Dirac field, for example, is a Dirac-spinor-valued function of ordinary spacetime, and is arguably a far closer analog to the electrons of quantum theory than a classical charged particle. This point is even more obvious when it comes to photons, which have no classical particle analog at all, but of course have a classical analog in the ordinary electromagnetic field.

This paper will outline a new class of field-based retrocausal models. Field-based accounts of particle phenomena are rare but not unprecedented, one example being the Bohmian account of photons [12,13], using fields in configuration space. One disadvantage to field-based models is that they are more complicated than particle models. However, if the reason that particle-based models cannot be extended to more realistic situations is that particles are too simple, then moving to the closer analog of classical fields might arguably be beneficial. Indeed, many quantum phenomena (superposition, interference, importance of relative phases, etc.) have excellent analogs in classical field behavior. In contrast, particles have essentially only one phenomenological advantage over fields: localized position measurements. The class of models proposed here may contain a solution to this problem, but the primary goal will be to set up a framework in which more detailed models can be developed (and to show that this framework is consistent with some known experimental results).

Apart from being an inherently closer analog to standard quantum theory, retrocausal field models have a few other interesting advantages over their particle counterparts. One intriguing development, outlined in detail below, is an account of the average “weak values” [14,15] measured in actual experiments, naturally emerging from the analysis of the intermediate field values. Another point of interest is that the framework here bears similarities to Stochastic Electrodynamics (SED), but without some of the conceptual difficulties encountered by that program (namely, infinite background energy and the lack of a response to Bell’s theorem) [16,17]. Therefore, there is reason to hope that many of the successes of SED might be applied to a further development of this framework.

The plan of this paper is to start with a conceptual framework, motivating and explaining the general approach that will be utilized by the specific models. Section 3 then explores a simple example model that illustrates the general approach, as well as demonstrating how discrete outcomes can still be consistent with a field-based model. Section 4 then steps back to examine a large class of models, calculating the many-run average predictions given a minimal set of assumptions. These averages are then shown to essentially match the weak-value measurements. The results are then used to motivate an improved model, as discussed in Section 5, followed by preliminary conclusions and future research directions.

2. Conceptual Framework

Classical fields generally have Cauchy data on every spacelike hypersurface. Specifically, for second order field equations, knowledge of the field and its time derivative everywhere at one time is sufficient to calculate the field at all times. However, the uncertainty principle, applied in a field framework, implies that knowledge of this Cauchy data can never be obtained: No matter how precise a measurement, some components of the field can always elude detection. Therefore, it is impossible to assert that either the preparation or the measurement of a field represents the precise field configuration at that time. This point casts serious doubt on the way that preparations are normally treated as exact initial boundary conditions (and, in most retrocausal models, the way that measurements are treated as exact final boundary conditions).

In accordance with this uncertainty, the field of Stochastic Electrodynamics (SED) explores the possibility that in addition to measured electromagnetic (EM) field values, there exists an unknown and unmeasured “classical zero-point” EM field that interacts with charges in the usual manner [16,17]. Starting from the assumption of relativistic covariance, a natural Gaussian noise spectrum is derived, fixing one free parameter to match the effective quantum zero-point spectrum of a half-photon per EM field mode. Using classical physics, a remarkable range of quantum phenomena can be recovered from this assumption. However, these SED successes come with two enormous problems. First, the background spectrum diverges, implying an infinite stress-energy tensor at every point in spacetime. Such a field would clearly be in conflict with our best understanding of general relativity, even with some additional ultraviolet cutoff. Second, there is no path to recovering all quantum phenomena via locally interacting fields, because of Bell-inequality violations in entanglement experiments.
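For orientation, the divergence mentioned above can be written out explicitly. The expression below is the standard textbook form of a zero-point spectrum with half a quantum per mode (the free-space mode density times $\hbar\omega/2$); it is not taken from this paper, and the cutoff frequency $\Omega$ is a symbol introduced here purely for illustration.

$$\rho(\omega)\,d\omega = \frac{\omega^2}{\pi^2 c^3}\cdot\frac{\hbar\omega}{2}\,d\omega = \frac{\hbar\omega^3}{2\pi^2 c^3}\,d\omega, \qquad \int_0^{\Omega}\rho(\omega)\,d\omega = \frac{\hbar\,\Omega^4}{8\pi^2 c^3}.$$

The integrated energy density grows as the fourth power of the cutoff, so it has no finite limit as $\Omega \to \infty$.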

Both of these problems have a potential resolution when using the Lagrangian Schema [3] familiar from least-action principles in classical physics. Instead of treating a spacetime system as a computer program that takes the past as an input and generates the future as an output, the Lagrangian Schema utilizes both past and future constraints, solving for entire spacetime structures “all at once”. Unknown past parameters (say, the initial angle of a ray of light constrained by Fermat’s principle of least time) are the outputs of such a calculation, not inputs. Crucially, the action S that is utilized by these calculations is a covariant scalar, and therefore provides a path to a Lorentz covariant calculation of unknown field parameters, different from the divergent spectrum considered by SED. The key idea is to keep the action extremized as usual ($\delta S = 0$), while also imposing some additional constraint on the total action of the system. One intriguing option is to quantize the action ($S = nh$), a successful strategy from the “old” quantum theory that has not been pursued in a field context, and would motivate $\delta S = 0$ in the first place. (Here, the action S is the usual functional of the fields throughout any given spacetime subsystem, calculated by integrating the classical Lagrangian density over spacetime.)
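The sense in which an initial angle is an output rather than an input can be illustrated with a small numerical example. The sketch below is not part of the models in this paper: it fixes only the two endpoints of a light ray crossing a flat interface between two media, minimizes the travel time, and reads off the initial angle afterwards. The geometry, the function names, and the use of a golden-section search are all choices made here for illustration.

```python
import math

def travel_time(x, a, b, d, n1, n2):
    """Optical travel time (in units of 1/c) from (0, a) to (d, -b),
    crossing the interface y = 0 at horizontal position x."""
    return n1 * math.hypot(x, a) + n2 * math.hypot(d - x, b)

def least_time_crossing(a, b, d, n1, n2, tol=1e-12):
    """Golden-section search for the crossing point of least travel time.
    The travel time is convex in x, so there is a single minimum in [0, d]."""
    inv_phi = (math.sqrt(5) - 1) / 2
    lo, hi = 0.0, d
    while hi - lo > tol:
        x1 = hi - inv_phi * (hi - lo)
        x2 = lo + inv_phi * (hi - lo)
        if travel_time(x1, a, b, d, n1, n2) < travel_time(x2, a, b, d, n1, n2):
            hi = x2
        else:
            lo = x1
    return 0.5 * (lo + hi)

def ray_angles(a, b, d, n1, n2):
    """Angles from the interface normal on each side. Both are outputs of the
    endpoint constraints; neither is supplied as an input."""
    x = least_time_crossing(a, b, d, n1, n2)
    return math.atan2(x, a), math.atan2(d - x, b)

theta1, theta2 = ray_angles(a=1.0, b=1.0, d=2.0, n1=1.0, n2=1.5)
print(theta1, theta2)
```

The resulting angles satisfy Snell's law, $n_1 \sin\theta_1 = n_2 \sin\theta_2$, to within the accuracy of the search, even though that law was never imposed directly.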

Constraining the action does not merely ensure relativistic covariance. When complex macroscopic systems are included in the spacetime subsystem (i.e., preparation and measurement devices), they will obviously dominate the action, acting as enormous constraints on the microscopic fields, just as a thermal reservoir acts as a constraint on a single atom. The behavior of microscopic fields would therefore depend on what experimental apparatus is considered. Crucially, the action is an integral over spacetime systems, not merely spatial systems. Therefore, the future settings and orientations of measurement devices strongly influence the total action, and unknown microscopic fields at earlier times will be effectively constrained by those future devices. Again, those earlier field values are literally “outputs” of the full calculation, while the measurement settings are inputs.

Such models are correctly termed “retrocausal”. Given the usual block universe framework from classical field theory and the interventionist definition of causation [18,19,20,21], any devices with free external settings are “causes”, and any constrained parameters are “effects” (including field values at spacetime locations before the settings are chosen). Such models are retrocausal but not retro-signaling, because the future settings constrain unknown past field parameters, hidden by the uncertainty principle. (These models are also forward-causal, because the preparation is another intervention.) It is important not to view causation as a process—certainly not one “flowing” back-and-forth through time—as this would violate the block universe perspective. Instead, such systems are consistently solved “all-at-once”, as in action principles. Additional discussion of this topic can be found in [2,4,22].

The retrocausal character of these models immediately provides a potential resolution to both of the problems with SED. Concerning the infinite-density zero point spectrum, SED assumes that all possible field modes are required because one never knows which ones will be relevant in the future. However, a retrocausal model is not “in the dark” about the future, because (in this case) the action is an integral that includes the future. The total action might very well only be highly sensitive to a bare few field modes. (Indeed, this is usually the case; consider an excited atom, waiting for a zero-point field to trigger “spontaneous” emission. Here, only one particular EM mode is required to explain the eventual emission of a photon, with the rest of the zero point field modes being irrelevant to a future photon detector.) As is shown below, it is not difficult to envision action constraints where typically only a few field modes need to be populated in the first place, resolving the problem of infinities encountered by SED. Furthermore, it is well-known that retrocausal models can naturally resolve Bell-inequality violations without action-at-a-distance, because the past hidden variables are naturally correlated with the future measurement settings [4,23]. (Numerous proof-of-principle retrocausal models of entanglement phenomena have been developed over the past decade [3,7,8,9,10].)

Unfortunately, solving for the exact action of even the simplest experiments is very hard. The macroscopic nature of preparation and measurement that makes them so potent as boundary constraints also makes them notoriously difficult to calculate exactly, especially when the relevant changes in the action are on the order of Planck’s constant. Therefore, to initially consider such models, this paper will assume that any constraint on the total action manifests itself as certain rules constraining how microscopic fields are allowed to interact with the macroscopic devices. (Presumably, such rules would include quantization conditions, for example only allowing absorption of EM waves in packets of energy $\hbar\omega$.) This assumption will allow us to focus on what is happening between devices rather than in the devices themselves, setting aside those difficulties as a topic for future research.

This paper will proceed by simply exploring some possible higher-level interaction constraints (guided by other general principles such as time-symmetry), and determining whether they might plausibly lead to an accurate explanation of observed phenomena. At this level, the relativistic covariance will not be obvious; after all, when considering intermediate EM fields in a laboratory experiment, a special reference frame is determined by the macroscopic devices which constrain those fields. However, it seems plausible that if some higher-level model matches known experiments then a lower-level covariant account would eventually be achievable, given that known experiments respect relativistic covariance.

The following examples will be focused on simple problems, with much attention given to the case where a single photon passes through a beamsplitter and is then measured on one path or the other. This is precisely the case where field approaches are thought to fail entirely, and therefore the most in need of careful analysis. In addition, bear in mind that these are representative examples of an entire class of models, not one particular model. It is hoped that, by laying out this new class of retrocausal models, one particular model will eventually emerge as a possible basis for a future reformulation of quantum theory.

3. Constrained Classical Fields

3.1. Classical Photons

Ordinary electromagnetism provides a natural analog to a single photon: a finite-duration electromagnetic wave with total energy $\hbar\omega$. Even in classical physics, all of the usual uncertainty relations exist between the wave’s duration and its frequency $\omega$; in the analysis below, we assume long-duration EM waves that have a reasonably well-defined frequency, in some well-defined beam such as the Gaussian mode of a narrow-bandwidth laser. By normalizing the peak intensity I of this wave so that a total energy of $\hbar\omega$ corresponds to $I = 1$, one can define a “Classical Photon Analog” (CPA).

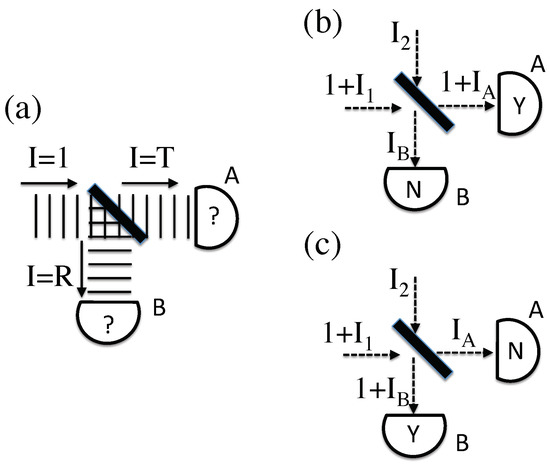

Such CPAs are rarely considered, for the simple reason that they seem incompatible with the simple experiment shown in Figure 1a. If such a CPA were incident upon a beamsplitter, some fraction T of the energy would be transmitted and the remaining fraction $R = 1 - T$ would be reflected. This means that detectors A and B on these two paths would never see what actually happens, which is a full amount of energy $\hbar\omega$ on either A or B, with probabilities T and R, respectively. Indeed, this very experiment is usually viewed as proof that classical EM is incorrect.

Figure 1. (a) A classical photon analog encounters a beamsplitter, and is divided among two detectors, in contradiction with observation. (b) A classical photon analog, boosted by some unknown peak intensity $I_1$, encounters the same beamsplitter. Another beam with unknown peak intensity $I_2$ enters the dark port. This is potentially consistent with a classical photon detection in only detector A (“Y” for yes, “N” for no), so long as the output intensities $I_A$ and $I_B$ remain unobserved. (The wavefronts have been replaced by dashed lines for clarity.) (c) The same inputs as in (b), but with outputs consistent with classical photon detection in only detector B, where the output intensities $I_A$ and $I_B$ again remain unobserved.

Notice that the analysis in the previous paragraph assumed that the initial conditions were exactly known, which would violate the uncertainty principle. If unknown fields existed on top of the original CPA, boosting its total energy to something larger than $\hbar\omega$, it would change the analysis. For example, if the CPA resulted from a typical laser, the ultimate source of the photon could be traced back to a spontaneous emission event, and (in SED-style theories) such “spontaneous” emission is actually stimulated emission, due to unknown incident zero-point radiation. This unknown background would then still be present, boosting the intensity of the CPA such that $I = 1 + I_1$. Furthermore, every beamsplitter has a “dark” input port, from which any input radiation would also end up on the same two detectors, A and B. In quantum electrodynamics, it is essential that one remember to put an input vacuum state on such dark ports; the classical analog of this well-known procedure is to allow for possible unknown EM wave inputs from this direction.

The uncertain field strengths apply to the outputs as well as the inputs, from both time-symmetry and the uncertainty principle. Just because a CPA is measured on some detector A, it does not follow that there is no additional EM wave energy that goes unmeasured. Just because nothing is measured on detector B does not mean that there is no EM wave energy there at all. If one were to insist on a perfectly energy-free detector, one would violate the uncertainty principle.

By adding these unknown input and output fields, Figure 1b demonstrates a classical beamsplitter scenario that is consistent with an observation of one CPA on detector A. In this case, two incoming beams, with peak intensities $1 + I_1$ and $I_2$, interfere to produce two outgoing beams with peak intensities $1 + I_A$ and $I_B$. The four unknown intensities are related by energy conservation, $I_1 + I_2 = I_A + I_B$, where the exact relationship between these four parameters is determined by the unknown phase difference between the incoming beams. Different intensities and phases could also result in the detection of exactly one CPA on detector B, as shown in Figure 1c. These scenarios are allowed by classical EM and consistent with observation, subject to known uncertainties in measuring field values, pointing the way towards a classical account of “single-photon” experiments. This is also distinct from prior field-based accounts of beamsplitter experiments [13]; here there is no need to non-locally transfer field energy from one path to another.
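The interference bookkeeping in this paragraph can be checked numerically. The following is a minimal sketch, not the paper's own calculation: it assumes an ideal lossless beamsplitter with amplitude transmission $\sqrt{T}$ and amplitude reflection $i\sqrt{R}$ (one common convention), and the function name `beamsplitter_outputs` and its arguments are introduced here for illustration only.

```python
import cmath
import math

def beamsplitter_outputs(main_in, dark_in, phase, T):
    """Return the peak intensities (out_A, out_B) on the transmitted and
    reflected sides of a lossless beamsplitter with transmission fraction T.

    main_in, dark_in: total peak intensities entering the two input ports
    phase: phase of the main-port beam relative to the dark-port beam (radians)
    Assumed convention: amplitude transmission sqrt(T), reflection 1j*sqrt(R).
    """
    R = 1.0 - T
    e_main = math.sqrt(main_in) * cmath.exp(1j * phase)
    e_dark = math.sqrt(dark_in)
    e_A = math.sqrt(T) * e_main + 1j * math.sqrt(R) * e_dark
    e_B = 1j * math.sqrt(R) * e_main + math.sqrt(T) * e_dark
    return abs(e_A) ** 2, abs(e_B) ** 2

# Equal inputs of one unit each, 50/50 beamsplitter, quarter-cycle phase offset
out_A, out_B = beamsplitter_outputs(1.0, 1.0, math.pi / 2, 0.5)
print(out_A, out_B)
```

With these inputs the sketch sends essentially all of the energy to output A and none to output B, while the sum of the two outputs always equals the sum of the two inputs, whatever the phase.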

Some potential objections should be addressed. One might claim that quantum theory does allow certainty in the total energy of a photon, at the expense of timing and phase information. However, in quantum field theory, one can only arrive at this conclusion after one has renormalized the zero-point values of the electromagnetic field, which is the very motivation for $I_1$ and $I_2$ in the first place. (Furthermore, when hunting for some more-classical formulation of quantum theory, one should not assume that the original formulation is correct in every single detail.)

Another objection would be to point out the sheer implausibility of any appropriate beam $I_2$. Indeed, to interfere with the original CPA, it would have to come in with just the right frequency, spatial mode, pulse shape, and polarization. However, this concern makes the error of thinking of all past parameters as logical inputs. In the Lagrangian Schema, the logical inputs are the known constraints at the beginning and end of the relevant system. The unknown parameters are logical outputs of this Schema, just like the initial angle of the light ray in Fermat’s principle. The models below aim to generate the parameters of the incoming beam $I_2$, as constrained by the entire experiment. In action principles, just because a parameter is coming into the system at the temporal beginning does not mean that it is a logical input. In retrocausal models, these are the parameters that are the effects of the constraints, not causes in their own right. (Such unknown background fields do not have external settings by which they can be independently controlled, even in principle, and therefore they are not causal interventions.)

Even if the classical field configurations depicted in Figure 1 are possible, it remains to explain why the observed transmission shown in Figure 1b occurs with a probability T, while the observed reflection shown in Figure 1c occurs with a probability R. To extract probabilities from such a formulation, one obviously needs to assign probabilities to the unknown parameters, $I_1$, $I_2$, etc. However, use of the Lagrangian Schema requires an important distinction, in that the probabilities an agent would assign to the unknown fields would depend on that agent’s information about the experimental geometry. In the absence of any information whatsoever, one would start with an “a priori probability distribution” $P_0(I_1, I_2)$, effectively a Bayesian prior that would be updated upon learning about any experimental constraints. Any complete model would require both a probability distribution $P_0$ and rules for how the experimental geometry might further constrain the allowed field values.

Before giving an example model, one further problem should be noted. Even if one were successful in postulating some prior distributions $P_0(I_1)$ and $P_0(I_2)$ that eventually recovered the correct probabilities, this might very well break an important time symmetry. Specifically, the time-reverse of this situation would instead depend on $P_0(I_A)$ and $P_0(I_B)$. For that matter, if both outgoing ports have a wave with a peak intensity of at least $I = 1$, then the only parameters sensitive to which detector fires are the unobserved intensities $I_A$ and $I_B$. Both arguments encourage us to include a consideration of the unknown outgoing intensities $I_A$ and $I_B$ in any model, not merely the unknown incoming fields.

3.2. Simple Model Example

The model considered in this section is meant to be an illustrative example of the class of retrocausal models described above, illustrating that it is possible to get particle-like phenomena from a field-based ontology, and also indicating a connection to some of the existing retrocausal accounts of entanglement.

One way to resolve the time-symmetry issues noted above is to impose a model constraint whereby the two unobserved incoming intensities $I_1$ and $I_2$ are always exactly equal to the unobserved outgoing intensities $I_A$ and $I_B$ (either $I_1 = I_A$ and $I_2 = I_B$, or $I_1 = I_B$ and $I_2 = I_A$). If this constraint is enforced, then assigning a probability of $P_0(I_1) P_0(I_2)$ to each diagram does not break any time symmetry, as this quantity will always be equal to $P_0(I_A) P_0(I_B)$. One simple rule that seems to work well in this case is the a priori distribution

$$P_0(I_Z) = \frac{Q}{\sqrt{I_Z}} \qquad (I_Z > \epsilon). \tag{1}$$

Here, $I_Z$ is any of the allowed unobserved background intensities, Q is a normalization constant, and $\epsilon$ is some vanishingly small minimum intensity to avoid the pole at $I_Z = 0$. (While there may be a formal need to normalize this expression, there is never a practical need; these prior probabilities will be restricted by the experimental constraints before being utilized, and will have to be normalized again.) The only additional rule to recover the appropriate probabilities is that $I_1 \gg \epsilon$. (This might be motivated by the above analysis that laser photons would have to be triggered by background fields, so the known incoming CPA would have to be accompanied by a non-vanishing unobserved field.)

To see how these model assumptions lead to the appropriate probabilities, first consider that it is overwhelmingly probable that $I_2 \approx \epsilon$. Thus, in this case, we can ignore the input on the dark port of the beamsplitter. However, with only one non-vanishing input, there can be no interference, and both outputs must have non-vanishing intensities. The only way it is possible for detector A to fire, given the above constraints, is if $I_1 = I_B = R/T$ in Figure 1b (such that $I_A = I_2 \approx 0$). The only way it is possible for detector B to fire, in Figure 1c, is if $I_1 = I_A = T/R$.
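As a quick arithmetic check of this step, the sketch below sends a single non-vanishing input through the beamsplitter and confirms which background values leave exactly one CPA on the firing detector. It is illustrative only: the symbol `I1` for the unobserved intensity accompanying the CPA, the neglect of the dark-port input, and the helper names are assumptions made here.

```python
def split_single_input(I1, T):
    """Outputs (port A, port B) when only the main port carries intensity 1 + I1
    and the dark-port input is negligible, so that no interference occurs."""
    R = 1.0 - T
    total = 1.0 + I1
    return T * total, R * total

def background_for_detection(T, detector):
    """Background I1 for which the firing detector receives exactly one CPA
    (intensity 1) while the other port carries I1 itself."""
    R = 1.0 - T
    if detector == "A":
        return R / T  # solves R * (1 + I1) = I1
    if detector == "B":
        return T / R  # solves T * (1 + I1) = I1
    raise ValueError("detector must be 'A' or 'B'")

T = 0.8
for detector in ("A", "B"):
    I1 = background_for_detection(T, detector)
    out_A, out_B = split_single_input(I1, T)
    print(detector, I1, out_A, out_B)
```

For any other value of `I1`, neither output equals one CPA plus a background that is paired with an input, so the constraints leave only these two cases.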

With this added information from the experimental geometry, one would update the prior distribution by constraining the only allowed values of $I_1$ to be $R/T$ or $T/R$ (and then normalizing). The relative probabilities of these two cases are therefore

$$\frac{P(A)}{P(B)} = \frac{P_0(R/T)}{P_0(T/R)} = \frac{\sqrt{T/R}}{\sqrt{R/T}} = \frac{T}{R}, \tag{2}$$

yielding the appropriate ratio of possible outcomes.

Taking stock of this result, here are the assumptions of this example model:

- The a priori probability distribution on each unknown field intensity is given by Equation (1), to be updated for any given experiment.

- The unknown field values are further constrained to be equal as pairs, $\{I_1, I_2\} = \{I_A, I_B\}$.

- $I_1$ is non-negligible because it accompanies a known “photon”.

- The probability of each diagram is given by $P_0(I_1) P_0(I_2)$, or equivalently, $P_0(I_A) P_0(I_B)$.

Note that it does not seem reasonable to assign the prior probability to the total incoming field $1 + I_1$, because Equation (1) should refer to the probability given no further information, not even the knowledge that there is an incoming photon’s worth of energy on that channel. (The known incoming photon that defines this experiment is an addition to the a priori intensity, not a part of it.) Given these assumptions, one finds the appropriate probabilities for a detected transmission as compared to a detected reflection.
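The way these assumptions combine can be sketched in a few lines. The code below is a hedged illustration rather than the paper's own calculation: it assumes an inverse-square-root prior weight, $P_0(I) \propto 1/\sqrt{I}$, which is one form consistent with a pole at zero intensity, and it takes the two allowed background values to be $R/T$ (detector A) and $T/R$ (detector B). The function names are hypothetical.

```python
import math

def prior_weight(intensity):
    """Unnormalized prior weight, assumed here to scale as 1/sqrt(intensity)."""
    return 1.0 / math.sqrt(intensity)

def detection_probabilities(T):
    """Update the prior on the two allowed background values and renormalize.
    The dark-port weight is common to both cases and cancels in the ratio."""
    R = 1.0 - T
    weight_A = prior_weight(R / T)  # background that lets detector A fire
    weight_B = prior_weight(T / R)  # background that lets detector B fire
    norm = weight_A + weight_B
    return weight_A / norm, weight_B / norm

for T in (0.5, 0.8, 0.95):
    print(T, detection_probabilities(T))
```

Under these assumptions the renormalized probabilities reduce to T and R for any transmission fraction, since $\sqrt{T/R}\,/\,(\sqrt{T/R} + \sqrt{R/T}) = T$.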

There are several other features of this example model. Given Equation (1), it should be obvious that the total energy in most zero-point fields should be effectively zero, resolving the standard SED problem of infinite zero-point energy. In addition, this model would work for any device that splits a photon into two paths (such as a polarizing cube), because the only relevant parameters are the classical transmission and reflection, T and R.

More importantly, this model allows one to recover the correct measurement probabilities for two maximally entangled photons in essentially the same way as several existing retrocausal models in the literature [3,7,8]. Consider two CPAs produced by parametric down-conversion in a nonlinear crystal, with identical but unknown polarizations (a standard technique for generating entangled photons). The three-wave mixing that classically describes the down-conversion process can be strongly driven by the presence of background fields matching one of the two output modes, M1, even if there is no background field on the other output mode, M2. (Given Equation (1), having essentially no background field on one of these modes is overwhelmingly probable.) Thus, in this case, the polarization of M2 necessarily matches the polarization of the unknown background field on M1 (the field that strongly drives the down-conversion process).

Now, assume both output photons are measured by polarizing cubes set at arbitrary polarization angles, followed by detectors. With no extra background field on M2, the only way that M2 could satisfy the above constraints at measurement would be if its polarization was already exactly aligned (modulo $\pi/2$) with the angle of the future polarizing cube. (In that case, no background field would be needed on that path; the bare CPA would fully arrive at one detector or the other.) However, we have established that the polarization of M2 was selected by the background field on M1, so the background field on M1 is also forced to align with the measurement angle on M2 (modulo $\pi/2$). In other words, solving the whole experiment “all at once”, the polarization of both photons is effectively constrained to match one of the two future measurement angles.

This is essentially what happens in several previously-published retrocausal models of maximally entangled particles [3,7,8]. In these models, the properties of both particles (spin or polarization, depending on the context) are constrained to be aligned with one of the two future settings. The resulting probabilities are then entirely determined by the mismatched particle, the one that doesn’t match the future settings. However, this is just a single-particle problem, and in this case the corresponding classical probabilities (R and T, given by Malus’s Law at the final polarizer) are enforced by the above rules, matching experimental results for maximally entangled particles. The whole picture almost looks as if the measurement on one photon has collapsed the other photon into that same polarization, but in these models it is clear that the CPAs had the correct polarization all along, due to future constraints on the appropriate hidden fields.
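The argument above can be turned into a short calculation. The sketch below is a toy rendering under stated assumptions, not the models of [3,7,8] themselves: it assumes that the shared polarization is constrained to lie along one of the two axes of the first polarizing cube with equal weight (the 50/50 weighting and all function names are introduced here), and it applies Malus’s Law to the other photon.

```python
import math

def malus(polarization, cube_angle):
    """Probability that a photon with the given linear polarization exits the
    '+' port of a polarizing cube set at cube_angle (Malus's Law)."""
    return math.cos(polarization - cube_angle) ** 2

def joint_probabilities(a, b):
    """Joint outcome probabilities for cube angles a and b (radians).
    Assumption: the shared polarization is a or a + pi/2, each with weight 1/2,
    so photon 1 is deterministic and photon 2 is the mismatched one."""
    probs = {(+1, +1): 0.0, (+1, -1): 0.0, (-1, +1): 0.0, (-1, -1): 0.0}
    for polarization, outcome_1 in ((a, +1), (a + math.pi / 2, -1)):
        p_plus = malus(polarization, b)
        probs[(outcome_1, +1)] += 0.5 * p_plus
        probs[(outcome_1, -1)] += 0.5 * (1.0 - p_plus)
    return probs

def correlation(a, b):
    """Expectation value of the product of the two +1/-1 outcomes."""
    return sum(o1 * o2 * p for (o1, o2), p in joint_probabilities(a, b).items())

def chsh(a, a_alt, b, b_alt):
    """CHSH combination of four correlations."""
    return (correlation(a, b) - correlation(a, b_alt)
            + correlation(a_alt, b) + correlation(a_alt, b_alt))

print(chsh(0.0, math.pi / 4, math.pi / 8, 3 * math.pi / 8))
```

Under these assumptions the correlation comes out as $\cos 2(a - b)$, and the CHSH combination at the angles shown reaches $2\sqrt{2}$, the usual quantum value for this kind of polarization-entangled pair. Constraining the shared polarization to the second cube instead gives the same joint probabilities by symmetry.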

3.3. Discussion

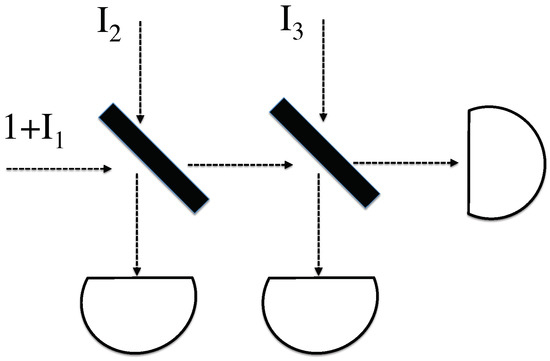

The above model was presented as an illustrative example, demonstrating one way to resolve the most obvious problems with classical photon analogs and SED-style approaches. Unfortunately, it does not seem to extend to more complicated situations. For example, if one additional beamsplitter is added, as in Figure 2, no obvious time-symmetric extension of the assumptions in the previous section leads to the correct results. In this case, one of the two dark ports would have to have non-negligible input fields. Performing this analysis, it is very difficult to invent any analogous rules that lead to the correct distribution of probabilities on the three output detectors.

Figure 2. A classical photon analog encounters two beamsplitters, and is divided among three detectors. The CPA is boosted by some unknown peak intensity $I_1$, and each beamsplitter’s dark port has an additional incident field with unknown intensity.

In Section 5, we show that it is possible to resolve this problem, using different assumptions to arrive at another model which works fine for multiple beamsplitters. However, before proceeding, it is worth reviewing the most important accomplishment so far. We have shown that it is possible to give a classical field account of an apparent single photon passing through a beamsplitter, matching known observations. Such models are generally thought to be impossible (setting aside nonlocal options [13]). Given that they are possible—if using the Lagrangian Schema—the next-level concern could be that such models are simply implausible. For phenomena that look so much like particle behavior, such classical-field-based models might seem to be essentially unmotivated.

The next section addresses this concern in two different ways. First, the experiments considered in Section 4 are expanded to include clear wave-like behavior, by combining two beamsplitters into an interferometer. Again, the input and output look like single particles, but now some essential wave interference is clearly occurring in the middle. Second, the averaged and post-selected results of these models can be compared with “weak values” that can be measured in actual experiments [14,15]. Notably, the results demonstrate a new connection between the average intermediate classical fields and experimental weak values. This correspondence is known in the high-field case [24,25,26,27,28], but here it is shown to apply even in the single-photon regime. Such a result will boost the general plausibility of this classical-field-based approach, and will also motivate an improved model for Section 5.

4. Averaged Fields and Weak Values

Even without a particular retrocausal model, it is still possible to draw conclusions as to the long-term averages predicted over many runs of the same experiment. The only assumption made here will be that every relevant unknown field component for a given experiment (both inputs and outputs) is treated the same as every other. In Figure 1, this would imply an equality between the averaged values $\langle I_1 \rangle = \langle I_2 \rangle = \langle I_A \rangle = \langle I_B \rangle$, each defined to be the quantity $\bar{I}_Z$.

Not every model will lead to this assumption; indeed, the example model above does not, because the CPA-accompanying field $I_1$ was treated differently from the dark port field $I_2$. However, for models which do not treat these fields differently, the averages converge onto parameters that can actually be measured in the laboratory: weak values [14,15]. This intriguing correspondence is arguably an independent motivation to pursue this style of retrocausal models.

4.1. Beamsplitter Analysis

Applying this average condition to the simple beamsplitter example of Figure 1b,c requires a phase relationship between the incoming beams in order to retain the proper outputs. If is the phase difference between and before the beamsplitter, then taking into account the relative phase shift caused by the beamsplitter itself, a simple calculation for Figure 1b reveals that

Given the above restrictions on the average values, this is only possible if there exists a non-zero average correlation

between the inputs, such that . The same analysis applied to Figure 1c reveals that in this case . (This implies some inherent probability distribution to yield the correct distribution of outcomes, which will inform some of the model-building in the next section.) In this case, there are no intermediate fields to analyze, as every mode is either an input or an output. To discuss intermediate fields, we must go to a more complicated scenario.
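As a minimal numerical sketch of the bookkeeping behind this analysis, the following Python snippet propagates two classical input fields through a lossless beamsplitter and shows how the relative input phase redistributes intensity between the two outputs. It assumes a symmetric beamsplitter with a factor of i on reflection, takes output A to be the transmitted port of the CPA input, and uses the normalization in which the CPA alone has unit intensity. The names `beamsplitter_outputs`, `I1`, `I2`, and `phi` are illustrative choices, not notation taken from the equations above.

```python
import numpy as np

def beamsplitter_outputs(T, I1, I2, phi):
    """Output intensities (I_A, I_B) of a lossless beamsplitter.

    Assumed convention: transmission amplitude sqrt(T), reflection
    amplitude 1j*sqrt(R) with R = 1 - T. Input 1 is the classical photon
    analog (unit intensity) plus an unknown intensity I1; input 2 is the
    dark-port field with intensity I2 and relative phase phi.
    """
    R = 1.0 - T
    a1 = np.sqrt(1.0 + I1)                    # CPA plus accompanying field
    a2 = np.sqrt(I2) * np.exp(1j * phi)       # dark-port field
    out_A = np.sqrt(T) * a1 + 1j * np.sqrt(R) * a2
    out_B = 1j * np.sqrt(R) * a1 + np.sqrt(T) * a2
    return abs(out_A) ** 2, abs(out_B) ** 2

if __name__ == "__main__":
    T, I1, I2 = 0.8, 0.5, 0.5
    for phi in (0.0, -np.pi / 2, np.pi / 2):
        I_A, I_B = beamsplitter_outputs(T, I1, I2, phi)
        print(f"phi={phi:+.3f}  I_A={I_A:.4f}  I_B={I_B:.4f}  total={I_A + I_B:.4f}")
```

In this sketch the total output intensity always equals the total input intensity; only the interference cross term depends on the phase. A non-zero average of that cross term is what a correlation between the inputs amounts to under these assumptions.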

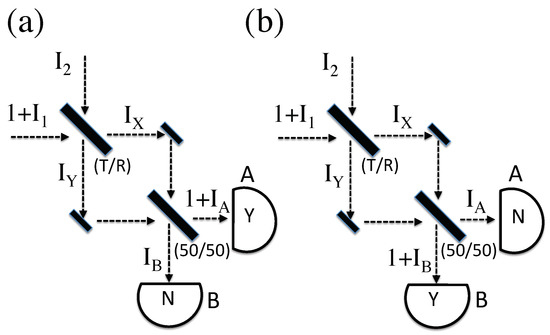

4.2. Interferometer Analysis

Consider the simple interferometer shown in Figure 3. For these purposes, we assume it is aligned such that the path length on the two arms is exactly equal. For further simplicity, the final beamsplitter is assumed to be 50/50. Again, the global constraints imply that either Figure 3a or Figure 3b actually happens. A calculation of the average intermediate value of yields the same result as Equation (3), while the average value of is the same as Equation (4). For Figure 3a, further interference at the final beamsplitter then yields, after some simplifying algebra,

Figure 3. (a) A classical photon analog, boosted by some unknown peak intensity , enters an interferometer through a beamsplitter with transmission fraction T. An unknown field also enters from the dark port. Both paths to the final 50/50 beamsplitter are the same length; the intermediate field intensities on these paths are and . Here, detector A fires, leaving unmeasured output fields and . (b) The same situation as (a), except here detector B fires.

The first term on the right of these expressions is the outgoing classical field intensity one would expect for a single CPA input, with no unknown fields. Because of our normalization, it is also the expected probability of a single-photon detection on that arm. The second term is just the average unknown field , and the final term is a correction to this average that is non-zero if the incoming unknown fields are correlated. Note that the quantity C defined in Equation (5) again appears in this final term.

To make this end result compatible with the condition that , the correlation term C must be constrained to be . For Figure 3b, with detector B firing, this term must be . (As in the beamsplitter case, the quantity happens to be proportional to the probability of the corresponding outcome, for allowed values of C.) Notice that as the original beamsplitter approaches 50/50, the required value of C diverges for Figure 3b, but not for Figure 3a. That is because this case corresponds to a perfectly tuned interferometer, where detector A is certain to fire, but never B. (This analysis also goes through for an interferometer with an arbitrary phase shift, and arbitrary final beamsplitter ratio; these results will be detailed in a future publication.)

In this interferometer, once the outcome is known, it is possible to use C to calculate the average intensities and on the intermediate paths. For Figure 3a, some algebra yields:

Remarkably, as we are about to see, the non- portion of these calculated average intensities can actually be measured in the laboratory.

4.3. Weak Values

When the final outcome of a quantum experiment is known, it is possible to elegantly calculate the (averaged) result of a weak intermediate measurement via the real part of the “Weak Value” equation [14]:

Here, is the initial wavefunction evolved forward to the intermediate time of interest, is the final (measured) wavefunction evolved backward to the same time, and is the operator for which one would like to calculate the expected weak value. (Note that weak values by themselves are not retrocausal; post-selecting an outcome is not a causal intervention. However, if one takes the backward-evolved wavefunction to be an element of reality, as done by one of the authors here [29], then one does have a retrocausal model—albeit in configuration space rather than spacetime.) Equation (12) yields the correct answer in the limit that the measurement is sufficiently weak, so that it does not appreciably affect the intermediate dynamics. The success of this equation has been verified in the laboratory [26], but is subject to a variety of interpretations. For example, can be negative, seemingly making a classical interpretation impossible.

In the case of the interferometer, the intermediate weak values can be calculated by recalling that it is the square root of the normalized intensity that maps to the wavefunction. (Of course, the standard wavefunction knows nothing about ; only the prepared and detected photon are relevant in a quantum context.) Taking into account the phase shift due to a reflection, the wavefunction between the two beamsplitters is simply , where is the state of the photon on the upper (lower) arm of the interferometer.

The intermediate value of depends on whether the photon is measured by detector A or B. The two possibilities are:

Notice that, in this case, the reflection off the beamsplitter is associated with a negative phase shift, because we are evolving the final state in the opposite time direction.

These are easily inserted into Equation (12), where for a weak measurement of , and for a weak measurement of . (Given our normalization, probability maps to peak intensity.) If the outcome is a detection on A, this yields

If instead the outcome is a detection on B, one finds

Except for the background average intensity , these quantum weak values are precisely the same intermediate intensities computed in the previous section.
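The weak-value side of this comparison can be checked numerically with a short sketch. The snippet below evaluates the real part of the standard weak-value expression [14] for the two arm projectors of an equal-path interferometer with a 50/50 final beamsplitter. It assumes a symmetric beamsplitter convention (a factor of i on reflection) and that the transmitted beam takes the upper arm; the function names and the basis ordering are illustrative, and the background average intensity is not included.

```python
import numpy as np

def weak_value(post, pre, op):
    """Real part of <post|op|pre> / <post|pre> for state vectors and a matrix."""
    return float(np.real(np.vdot(post, op @ pre) / np.vdot(post, pre)))

def interferometer_weak_values(T):
    """Detection probabilities and arm weak values for detectors A and B.

    Not defined for detector B at T = 0.5, where B never fires.
    """
    R = 1.0 - T
    # Basis order: (upper arm, lower arm). Assumption: the transmitted beam
    # takes the upper arm and the reflected beam picks up a factor of 1j.
    pre = np.array([np.sqrt(T), 1j * np.sqrt(R)])
    # States that reach each detector with certainty through the final 50/50
    # beamsplitter, evolved backward to the arms (reflection gives -1j).
    post = {
        "A": np.array([-1j, 1.0]) / np.sqrt(2),
        "B": np.array([1.0, -1j]) / np.sqrt(2),
    }
    P_upper = np.diag([1.0, 0.0])   # projector onto the upper arm
    P_lower = np.diag([0.0, 1.0])   # projector onto the lower arm
    results = {}
    for det, phi in post.items():
        results[det] = {
            "prob": float(abs(np.vdot(phi, pre)) ** 2),
            "upper": weak_value(phi, pre, P_upper),
            "lower": weak_value(phi, pre, P_lower),
        }
    return results

if __name__ == "__main__":
    for det, vals in interferometer_weak_values(0.8).items():
        print(det, vals)
```

Under these assumptions, T = 0.8 gives weak values of 2/3 and 1/3 on the two arms when detector A fires, and 2 and −1 when detector B fires. In both cases the two weak values sum to one, and the negative value appears only for the improbable outcome B.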

The earlier results were framed in an essentially classical context, but these weak values come from an inherently quantum calculation, with no clear interpretation. Among the strangest features of weak values is the appearance of a negative probability/intensity, which seems to have no classical analog whatsoever. For example, whenever detector B fires, either Equation (17) or Equation (18) will be negative. (Recall that if , then B never fires.) Nevertheless, a classical interpretation of this negative weak value is still consistent with the earlier results of Equations (10) and (11), because those cases also include an additional unknown intensity . It is perfectly reasonable to have classical destructive interference that would decrease the average value of to below that of ; after all, the latter is just an unknown classical field.

One objection here might be that for values of , the weak values of Equations (17) and (18) could get arbitrarily large, such that would have to be very large as well to maintain a positive intensity for both Equations (10) and (11). However, consider that if were not large enough, then there would be no classical solution at all, in contradiction to the Lagrangian Schema assumptions considered above (requiring a global solution to the entire problem). Furthermore, if the weak values get very large, that is only because the outcome at B becomes very improbable, meaning that would rarely have to take a large value. As we show in the next section, there are reasonable a priori distributions of which would be consistent with this occasional restriction.

Such connections between uncertain classical fields and quantum weak values are certainly intriguing, and are also under current investigation by at least one other group [30]. However, while the unknown-classical-field framework might help make some conceptual sense of quantum weak values, the main point here is simply that these two perspectives are mutually consistent. Specifically, the known experimental success of weak value predictions seems to equally support the unknown-field formalism presented above. It remains to be seen whether (and why) these two formalisms give compatible answers in every case, but this paper will set that question aside for future research.

For the purposes of this introductory paper, the final task will be to consider whether the above results indicate a more promising model of these experiments.

5. An Improved Model

Given the intriguing connection to weak values demonstrated in the previous section, it seems worth trying to revise the example model from Section 3. In Section 4, the new assumption which led to the successful result was that every unknown field component , should be treated on an equal footing, not singling out for accompanying a known photon. (Recall the average value of each of these was assumed to be some identical parameter .) Meanwhile, the central idea of the model in Section 3 is that time-symmetry could be enforced by demanding an exact equivalence between the two input fields () and the two output fields ().

One obvious way to combine all these ideas is to instead demand an equivalence between all four of these intensities, not merely on average but on every run of the experiment. This might seem to be in conflict with the weak value measurements, which are not the same on every run, but only converge to the weak values after an experimental averaging. However, these measurements are necessarily weak/noisy, so these results are inconclusive as to whether the underlying signal is constant or varying. (Alternatively, one could consider a class of models that on average converge to the model below, but this option will also be set aside for the purposes of this paper.)

With the very strict constraint that each of () are always equal to the same intensity , the only two free parameters are and the relative initial phase (between the two incoming modes and ). In addition, and must be correlated, depending on the experimental parameters, in order to fulfill these constraints. For the case of the beamsplitter (Figure 1b,c), this amounts to removing all the time-averages from the analysis of Section 4.1. This leads to the conditions

Here, is the value of needed for an outcome on detector A (as in Figure 1b), and is the value of needed for an outcome on detector B (as in Figure 1c). Both are functions of .
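To make the run-by-run constraint concrete, the sketch below solves it for a single lossless beamsplitter and then checks the solution by propagating the fields. It is an illustration under stated assumptions, not a transcription of Equations (19) and (20). The assumptions are that the CPA input has intensity 1 + I_Z, the dark-port input has intensity I_Z, the firing detector receives 1 + I_Z while the other output receives I_Z, detector A sits on the transmitted port, and `theta` is the relative phase including the shift from reflection. The names `required_background` and `output_intensities` are hypothetical.

```python
import numpy as np

def required_background(T, theta, outcome):
    """Background intensity I_Z that satisfies the equal-intensity constraint.

    With an interference term 2*sqrt(T*R*I_Z*(1+I_Z))*cos(theta), outcome A
    needs that term to equal +R and outcome B needs it to equal -T.
    """
    R = 1.0 - T
    c = np.cos(theta)
    if outcome == "A":
        if c <= 0:
            raise ValueError("outcome A requires cos(theta) > 0")
        k = R / (4.0 * T * c ** 2)
    else:
        if c >= 0:
            raise ValueError("outcome B requires cos(theta) < 0")
        k = T / (4.0 * R * c ** 2)
    # Positive root of I_Z**2 + I_Z - k = 0
    return 0.5 * (np.sqrt(1.0 + 4.0 * k) - 1.0)

def output_intensities(T, I_Z, theta):
    """Propagate the two inputs; reflection multiplies the amplitude by 1j."""
    R = 1.0 - T
    phi = theta - np.pi / 2          # raw input phase before the reflection shift
    a1 = np.sqrt(1.0 + I_Z)
    a2 = np.sqrt(I_Z) * np.exp(1j * phi)
    out_A = np.sqrt(T) * a1 + 1j * np.sqrt(R) * a2
    out_B = 1j * np.sqrt(R) * a1 + np.sqrt(T) * a2
    return abs(out_A) ** 2, abs(out_B) ** 2

if __name__ == "__main__":
    T = 0.8
    I_Z = required_background(T, 0.3, "A")
    print("I_Z:", I_Z, "outputs:", output_intensities(T, I_Z, 0.3))
```

In this sketch each allowed phase fixes one background intensity per outcome, so the two-parameter space collapses to a single free parameter once the outcome is specified.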

This model requires a priori probability distributions and (the prime is to distinguish these two functions). The hope is that these distributions can then be restricted by the global constraints such that the correct outcome probabilities are recovered. To implement the above constraints, instead of integrating over the two-dimensional space [], the correlations between and essentially make this a one-dimensional space, which can be calculated with a delta function:

It is very hard to imagine any rule whereby would not start out as a flat distribution, since all relative phases should be a priori equally likely. The earlier observation that the appropriate probability was always proportional to (in both the beamsplitter and the interferometer geometries) motivates the following guess for an a priori probability distribution for background fields:

assuming the normalization where corresponds to a single classical photon. This expression diverges as , which is appropriate for avoiding the infinities of SED, although some cutoff would be required to form a normalized distribution. (Again, it is unclear whether an a priori assessment of relative likelihood would actually have to be normalized, given that in any experimental instance there would only be some values of which were possible, and only these probabilities would have to be normalized.)

Inserting Equation (22) into Equation (21), along with a flat distribution for , the beamsplitter conditions from Equations (19) and (20) yield

as desired. Here, the limits on come from the range of possible solutions to Equations (19) and (20). A similar successful result is found in the above case of the interferometer, because is again proportional to the outcome probability. This model also works well for the previously-problematic case of multiple beamsplitters shown in Figure 2. Now, because the incoming fields are all equal, this essentially splits into two consecutive beamsplitter problems, and the probabilities of these two beamsplitters combine in an ordinary manner.
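The way a flat phase distribution and a background-intensity prior can combine into the expected outcome probabilities can be illustrated numerically. The sketch below is hedged in several respects. It assumes an unnormalized prior of the form 1/sqrt(I(1+I)), which is one form consistent with the divergence at small intensity described above and should be read as a stand-in rather than as Equation (22) itself. It assumes the delta function simply evaluates the prior at the constrained intensity for each phase. It also assumes detector A sits on the transmitted port. All function names are hypothetical.

```python
import numpy as np

def prior(I):
    """Assumed unnormalized a priori weight for a background intensity I."""
    return 1.0 / np.sqrt(I * (1.0 + I))

def constrained_intensity(T, theta, outcome):
    """Intensity fixed by the equal-intensity constraint at phase theta.

    Assumes I*(1+I) = (R/T)/(4 cos^2 theta) for outcome A and
    (T/R)/(4 cos^2 theta) for outcome B, with R = 1 - T.
    """
    R = 1.0 - T
    ratio = R / T if outcome == "A" else T / R
    k = ratio / (4.0 * np.cos(theta) ** 2)
    return 0.5 * (np.sqrt(1.0 + 4.0 * k) - 1.0)

def outcome_probabilities(T, n=20000):
    """Midpoint-rule integral over the flat phase distribution."""
    dtheta = np.pi / n
    # Outcome A is only possible for cos(theta) > 0, outcome B for cos(theta) < 0.
    theta_A = -np.pi / 2 + (np.arange(n) + 0.5) * dtheta
    theta_B = theta_A + np.pi
    w_A = np.sum(prior(constrained_intensity(T, theta_A, "A"))) * dtheta
    w_B = np.sum(prior(constrained_intensity(T, theta_B, "B"))) * dtheta
    total = w_A + w_B
    return w_A / total, w_B / total

if __name__ == "__main__":
    print(outcome_probabilities(0.8))
```

Under these assumptions the relative weights of the two outcomes reduce to T and 1 − T, so the run-by-run constraint reproduces the ordinary beamsplitter statistics after normalizing over the allowed phases only.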

Summarizing the assumptions behind this improved model:

- The unknown field values are constrained to all be equal: .

- The probability distribution on each unknown field intensity is given by Equation (22)—but must be updated for any given experiment.

- The relative phase between the incoming fields is a priori completely unknown—but must be updated for any given experiment.

However, there is still a conceptual difficulty in this new model: all considered incoming field modes are constrained to have equal intensities, but the unconsidered modes (those with the wrong frequencies, or coming in from the wrong direction, etc.) have been left equal to zero. If literally all zero-point modes were non-zero, it would not only change the above calculations, but would also run directly into the usual infinities of SED. Thus, if this improved model were to be further developed, there would have to be some way to determine certain groups of background modes that were linked together through the model assumptions, while other background modes could be neglected.

This point is also essential if such a revised model is to apply to entangled particles. For two down-converted photons with identical polarizations, each measured by a separate beamsplitter, there are actually four relevant incoming field modes: the unknown intensity accompanying each photon, as well as the unknown intensity incident upon the dark port of each beamsplitter. If one sets all four of these peak intensities to the same , one does not recover the correct joint probabilities of the two measurements. However, if two of these fields are (nearly) zero, as described in Section 3.2, then the correct probabilities are recovered in the usual retrocausal manner (see Section 3.2 or [3,7,8]). Again, it seems that there must be some way to parse the background modes into special groups.

The model in this section is meant to be an example starting point, not some final product. Additional features and ideas that might prove useful for future model development will now be addressed in the final section.

6. Summary and Future Directions

Retrocausal accounts of quantum phenomena have come a long way since the initial proposal by Costa de Beauregard [31]. Notably, the number of retrocausal models in the literature has expanded significantly in the past decade alone [3,7,8,9,10,11,22,32,33,34,35,36,37,38,39,40], but more ideas are clearly needed. The central novelties in the class of models discussed here are: (1) using fields (exclusively) rather than particles; and (2) introducing uncertainty to even the initial and final boundary constraints. Any retrocausal model must have hidden variables (or else there is nothing for the future measurement choices to constrain), but it has always proved convenient to segregate the known parameters from the unknown parameters in a clear manner. Nature, however, may not respect such a convenience. In the case of realistic measurements on fields, there is every reason to think that our best knowledge of the field strength may not correspond to the actual value.

Although the models considered here obey classical field equations (in this case, classical electromagnetism), they only make sense in terms of the Lagrangian Schema, where the entire experiment is solved “all-at-once”. Only then does it make sense to consider incoming dark-port fields (such as ), because the global solution may require these incoming modes in order to have a solution. However, despite the presence of such fields at the beginning of the experiment (and, presumably, before it even begins), they are not “inputs” in the conventional sense; they are literally outputs of the retrocausal model.

The above models have demonstrated a number of features and consequences, most notably:

- Distributed classical fields can be consistent with particle-like detection events.

- There exist simple constraints and a priori field intensity distributions that yield the correct probabilities for basic experimental geometries.

- Most unobserved field modes are expected to have zero intensity (unlike in SED).

- The usual retrocausal account for maximally entangled photons still seems to be available.

- The average intermediate field values, minus the unobserved background, are precisely equal to the “weak values” predicted by quantum theory (in the cases considered so far).

- Negative weak values can have a classical interpretation, provided the unobserved background is sufficiently large.

This seems to be a promising start, but there are many other research directions that might be inspired by these models. For example, consider the motivation of action constraints, raised in Section 2. If the total action is ultimately important, then any constraint or probability rule would have to consider the contribution to the action of the microscopic intermediate fields. Even the simple case of a CPA passing through a finite-thickness beamsplitter has a non-trivial action. (A single free-field EM wave has a vanishing Lagrangian density at every point, but two crossing or interfering waves generally do not.) It certainly seems worth developing models that constrain not only the inputs and outputs, but also these intermediate quantities (which would have the effect of further constraining the inputs and outputs).

Another possibility is to make the incoming beams more realistic, introducing spatially-varying noise, not just a single unknown parameter per beam. It is well-known that such spatial noise introduces bright speckles into laser profiles, and in some ways these speckles are analogous to detected photons—in terms of both probability distributions as well as their small spatial extent (compared to the full laser profile). A related point would be to introduce unknown matter fields, say some zero-point equivalent of the classical Dirac field, which would introduce further uncertainty and effective noise sources into the electromagnetic field. These research ideas, and other related approaches, are wide open for exploration.

Certainly, there are also conceptual and technical problems that need to be addressed, if such models are to be further developed. The largest unaddressed issue is how a global action constraint applied to macroscopic measurement devices might lead to specific rules that constrain the microscopic fields in a manner consistent with observation. (In general, two-time boundary constraints can be shown to lead to intermediate particle-like behavior [41], but different global rules will lead to different intermediate consequences.) The tension between a covariant action and the special frame of the measurement devices also needs to be treated consistently. Another topic that is in particular need of progress is an extension of retrocausal entanglement models to handle partially-entangled states, and not merely the maximally-entangled Bell states.

Although the challenges remain significant, the above list of accomplishments arising from this new class of models should give some hope that further accomplishments are possible. By branching out from particle-based models to field-based models, novel research directions are clearly motivated. The promise of such research, if successful, would be to supply a nearly-classical explanation for all quantum phenomena: realistic fields as the solution to a global constraint problem in spacetime.

Acknowledgments

The author would like to thank Justin Dressel for very helpful advice, Aephraim Steinberg for unintentional inspiration, Jan Walleczek for crucial support and encouragement, Ramen Bahuguna for insights concerning laser speckles, and Matt Leifer for hosting a productive visit to Chapman University. This work is supported in part by the Fetzer Franklin Fund of the John E. Fetzer Memorial Trust.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Sutherland, R.I. Bell’s theorem and backwards-in-time causality. Int. J. Theor. Phys. 1983, 22, 377–384.

2. Price, H. Time’s Arrow & Archimedes’ Point: New Directions for the Physics of Time; Oxford University Press: Oxford, UK, 1997.

3. Wharton, K. Quantum states as ordinary information. Information 2014, 5, 190–208.

4. Price, H.; Wharton, K. Disentangling the quantum world. Entropy 2015, 17, 7752–7767.

5. Leifer, M.S.; Pusey, M.F. Is a time symmetric interpretation of quantum theory possible without retrocausality? Proc. R. Soc. A 2017, 473, 20160607.

6. Adlam, E. Spooky Action at a Temporal Distance. Entropy 2018, 20, 41.

7. Argaman, N. Bell’s theorem and the causal arrow of time. Am. J. Phys. 2010, 78, 1007–1013.

8. Almada, D.; Ch’ng, K.; Kintner, S.; Morrison, B.; Wharton, K. Are Retrocausal Accounts of Entanglement Unnaturally Fine-Tuned? Int. J. Quantum Found. 2016, 2, 1–14.

9. Weinstein, S. Learning the Einstein-Podolsky-Rosen correlations on a Restricted Boltzmann Machine. arXiv, 2017; arXiv:1707.03114.

10. Sen, I. A local ψ-epistemic retrocausal hidden-variable model of Bell correlations with wavefunctions in physical space. arXiv, 2018; arXiv:1803.06458.

11. Sutherland, R.I. Lagrangian Description for Particle Interpretations of Quantum Mechanics: Entangled Many-Particle Case. Found. Phys. 2017, 47, 174–207.

12. Bohm, D.; Hiley, B.J.; Kaloyerou, P.N. An ontological basis for the quantum theory. Phys. Rep. 1987, 144, 321–375.

13. Kaloyerou, P. The GRA beam-splitter experiments and particle-wave duality of light. J. Phys. A 2006, 39, 11541.

14. Aharonov, Y.; Albert, D.Z.; Vaidman, L. How the result of a measurement of a component of the spin of a spin-1/2 particle can turn out to be 100. Phys. Rev. Lett. 1988, 60, 1351.

15. Dressel, J.; Malik, M.; Miatto, F.M.; Jordan, A.N.; Boyd, R.W. Colloquium: Understanding quantum weak values: Basics and applications. Rev. Mod. Phys. 2014, 86, 307.

16. Boyer, T.H. A brief survey of stochastic electrodynamics. In Foundations of Radiation Theory and Quantum Electrodynamics; Springer: Berlin/Heidelberg, Germany, 1980; pp. 49–63.

17. De La Pena, L.; Cetto, A.M. The Quantum Dice: An Introduction to Stochastic Electrodynamics; Springer Science & Business Media: Berlin, Germany, 2013.

18. Woodward, J. Making Things Happen: A Theory of Causal Explanation; Oxford University Press: Oxford, UK, 2005.

19. Price, H. Agency and probabilistic causality. Br. J. Philos. Sci. 1991, 42, 157–176.

20. Pearl, J. Causality; Cambridge University Press: Cambridge, UK, 2009.

21. Menzies, P.; Price, H. Causation as a secondary quality. Br. J. Philos. Sci. 1993, 44, 187–203.

22. Price, H. Toy models for retrocausality. Stud. Hist. Philos. Sci. Part B 2008, 39, 752–761.

23. Leifer, M.S. Is the Quantum State Real? An Extended Review of ψ-ontology Theorems. Quanta 2014, 3, 67–155.

24. Dressel, J.; Bliokh, K.Y.; Nori, F. Classical field approach to quantum weak measurements. Phys. Rev. Lett. 2014, 112, 110407.

25. Dressel, J. Weak values as interference phenomena. Phys. Rev. A 2015, 91, 032116.

26. Ritchie, N.; Story, J.G.; Hulet, R.G. Realization of a measurement of a “weak value”. Phys. Rev. Lett. 1991, 66, 1107.

27. Bliokh, K.Y.; Bekshaev, A.Y.; Kofman, A.G.; Nori, F. Photon trajectories, anomalous velocities and weak measurements: A classical interpretation. New J. Phys. 2013, 15, 073022.

28. Howell, J.C.; Starling, D.J.; Dixon, P.B.; Vudyasetu, P.K.; Jordan, A.N. Interferometric weak value deflections: Quantum and classical treatments. Phys. Rev. A 2010, 81, 033813.

29. Aharonov, Y.; Vaidman, L. The two-state vector formalism: An updated review. In Time in Quantum Mechanics; Springer: Berlin/Heidelberg, Germany, 2008; pp. 399–447.

30. Sinclair, J.; Spierings, D.; Brodutch, A.; Steinberg, A. Weak values and neoclassical realism. 2018; in press.

31. De Beauregard, O.C. Une réponse à l’argument dirigé par Einstein, Podolsky et Rosen contre l’interprétation bohrienne des phénomènes quantiques. C. R. Acad. Sci. 1953, 236, 1632–1634. (In French)

32. Wharton, K. A novel interpretation of the Klein-Gordon equation. Found. Phys. 2010, 40, 313–332.

33. Wharton, K.B.; Miller, D.J.; Price, H. Action duality: A constructive principle for quantum foundations. Symmetry 2011, 3, 524–540.

34. Evans, P.W.; Price, H.; Wharton, K.B. New slant on the EPR-Bell experiment. Br. J. Philos. Sci. 2012, 64, 297–324.

35. Harrison, A.K. Wavefunction collapse via a nonlocal relativistic variational principle. arXiv, 2012; arXiv:1204.3969.

36. Schulman, L.S. Experimental test of the “Special State” theory of quantum measurement. Entropy 2012, 14, 665–686.

37. Heaney, M.B. A symmetrical interpretation of the Klein-Gordon equation. Found. Phys. 2013, 43, 733–746.

38. Corry, R. Retrocausal models for EPR. Stud. Hist. Philos. Sci. Part B 2015, 49, 1–9.

39. Lazarovici, D. A relativistic retrocausal model violating Bell’s inequality. Proc. R. Soc. A 2015, 471, 20140454.

40. Silberstein, M.; Stuckey, W.M.; McDevitt, T. Beyond the Dynamical Universe: Unifying Block Universe Physics and Time as Experienced; Oxford University Press: Oxford, UK, 2018.

41. Wharton, K. Time-symmetric boundary conditions and quantum foundations. Symmetry 2010, 2, 272–283.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).