1. Introduction

The Price equation is an abstract mathematical description of the change in populations. The most general form describes a way to map entities between two sets. That abstract set mapping partitions the forces that cause change between populations into two components: the direct and inertial forces.

The direct forces change frequencies. The inertial forces change the values associated with population members. Changed values can be thought of as an altered frame of reference driven by the inertial forces.

From the abstract perspective of the Price equation, one can see the same partition of direct and inertial forces in the fundamental equations of many different subjects. That abstract unity clarifies understanding of natural selection and its relations to such disparate topics as thermodynamics, information, the common forms of probability distributions, Bayesian inference, and physical mechanics.

In a special form of the Price equation, the changes caused by the direct and inertial forces cancel so that the total remains conserved. That conservation law defines a universal invariance and canonical separation of the direct and inertial forces. The canonical separation of forces clarifies the common mathematical structure of seemingly different topics.

This article sketches the overall argument for the common mathematical structure of different subjects. The argument is, at present, a broad framing of conjectures. The conjectures raise many interesting problems that require further work. Consult Frank [1,2] for mathematical details, open problems, and citations to additional literature.

2. The Abstract Price Equation

The Price equation describes the change in the average value of some property between two populations [1,3]. Consider a population as a set of things. Each thing has a property indexed by $i$. Those things with a common property index comprise a fraction, $q_i$, of the population and have average value, $z_i$, for whatever we choose to measure by $z$. Write $\mathbf{q}$ and $\mathbf{z}$ as the vectors over all $i$. The population average value is $\bar z = \mathbf{q}\cdot\mathbf{z} = \sum q_i z_i$, summed over $i$.

A second population has matching vectors $\mathbf{q}'$ and $\mathbf{z}'$. Those vectors for the second population are defined by the special set mapping of the abstract Price equation. In particular, $q_i'$ is the fraction of the second population derived from entities with index $i$ in the first population. The second population does not have its own indexing by $i$. Instead, the second population’s indices derive from the mapping of the second population’s members to the members of the first population.

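Similarly, $z_i'$ is the average value in the second population of members derived from entities with index $i$ in the first population. Let $\Delta$ be the difference between the derived population and the original population, $\Delta\mathbf{q} = \mathbf{q}' - \mathbf{q}$ and $\Delta\mathbf{z} = \mathbf{z}' - \mathbf{z}$.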

To calculate the change in average value, it is useful to begin by considering $q$ and $z$ as abstract variables associated with the first set, and $q'$ and $z'$ as corresponding variables from the second set.

The change in the product of $q$ and $z$ is $\Delta(qz) = q'z' - qz$. Note that $q' = q + \Delta q$ and $z' = z + \Delta z$. We can write the total change in the product as a discrete analog of the chain rule for differentiation of a product, yielding two partial change terms

$$\Delta(qz) = (\Delta q)z + q'(\Delta z).$$

The first term, $(\Delta q)z$, is the partial difference of $q$ holding $z$ constant. The second term, $q'(\Delta z)$, is the partial difference of $z$ holding $q$ constant. In the second term, we use $q'$ as the constant value because, with discrete differences, one of the partial change terms must be evaluated in the context of the second set.

The same product rule can be applied to vectors, yielding the abstract form of the Price equation

$$\Delta\bar z = \Delta\mathbf{q}\cdot\mathbf{z} + \mathbf{q}'\cdot\Delta\mathbf{z}. \qquad (1)$$

The abstract Price equation simply partitions the total change in the average value into two partial change terms.
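As a numerical illustration of Equation (1), the following minimal Python sketch assumes made-up frequencies and values for three types and checks that the direct and inertial terms sum to the total change. The helper names `dot` and `price_partition` are illustrative only.

```python
# Sketch of the abstract Price equation, Equation (1), with illustrative numbers.
# q, z: frequencies and values in the first set; q2, z2: the matching vectors q', z'
# for the second set, indexed by the first set's types through the set mapping.

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(u, v))

def price_partition(q, z, q2, z2):
    """Return (total change, direct term, inertial term)."""
    dq = [b - a for a, b in zip(q, q2)]   # Δq = q' - q
    dz = [b - a for a, b in zip(z, z2)]   # Δz = z' - z
    total = dot(q2, z2) - dot(q, z)       # Δz̄ = q'·z' - q·z
    direct = dot(dq, z)                   # Δq·z: frequencies change, values held at z
    inertial = dot(q2, dz)                # q'·Δz: values change, frequencies held at q'
    return total, direct, inertial

q, z = [0.5, 0.3, 0.2], [1.0, 2.0, 4.0]
q2, z2 = [0.4, 0.35, 0.25], [1.5, 2.0, 3.0]
total, direct, inertial = price_partition(q, z, q2, z2)
print(f"total={total:.4f} direct={direct:.4f} inertial={inertial:.4f}")
```

With these numbers the sketch prints `total=0.1500 direct=0.2000 inertial=-0.0500`: the frequency shift toward high-value types raises the average, and the drop in the third type's value partly offsets it.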

Note that $\mathbf{q}$ has a clearly defined meaning as frequency, whereas $\mathbf{z}$ may be chosen arbitrarily as any values assigned to members. The values, $\mathbf{z}$, define the frame of reference. Because frequency is clearly defined, whereas values are arbitrary, the frequency changes, $\Delta\mathbf{q}$, take on the primary role in analyzing the structural aspects of change that unify different subjects.

The primacy of frequency change naturally labels the first term, $\Delta\mathbf{q}\cdot\mathbf{z}$, with $\Delta\mathbf{q}$, as the changes caused by the direct forces acting on populations. Because $\mathbf{q}$ and $\mathbf{q}'$ define a sequence of probability distributions, the primary aspect of change concerns the dynamics of probability distributions.

The arbitrary aspect of the values, $\mathbf{z}$, naturally labels the second term, $\mathbf{q}'\cdot\Delta\mathbf{z}$, with $\Delta\mathbf{z}$, as the changes caused by the forces that alter the frame of reference, the inertial forces.

Table 1 defines commonly used symbols. Table A1 and Table A2 summarize mathematical forms and relations between disciplines.

3. Canonical Form

The prior section emphasized the primary role of the dynamics of probability distributions, $\Delta\mathbf{q}$, which follow as a consequence of the forces acting on populations.

The canonical form of the Price equation focuses on the dynamics of probability distributions and the associated forces that cause change. To obtain the canonical form, define

$$a_i = \frac{\Delta q_i}{q_i} \qquad (2)$$

as the relative change in the frequency of the $i$th type.

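We can use any value for $\mathbf{z}$ in the Price equation. Choose $\mathbf{z} \equiv \mathbf{a}$. Then

$$\Delta\bar a = \Delta\mathbf{q}\cdot\mathbf{a} + \mathbf{q}'\cdot\Delta\mathbf{a} = 0 \qquad (3)$$

in which the equality to zero expresses the conservation of total probability

$$\bar a = \sum q_i a_i = \sum \Delta q_i = 0$$

because the total changes in probability must cancel to keep the sum of the probabilities constant at one. The same holds for the relative changes measured in each successive set, so $\bar a$ remains zero and its change, $\Delta\bar a$, vanishes.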

Thus, Equation (3) appears as a seemingly trivial result, a notational spin on $\sum \Delta q_i = 0$. However, many generalities and connections between seemingly different disciplines follow from the partition of conserved probability into the two terms of Equation (3).
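A quick numerical check of Equation (3) needs a second step, because $\Delta\mathbf{a}$ compares the relative change $\mathbf{a}$ with a relative change $\mathbf{a}'$ measured in the frame of the second set. The sketch below assumes a sequence of three made-up distributions and takes $\mathbf{a}'$ to be the relative change from the second to the third set, so that $\mathbf{q}'\cdot\mathbf{a}' = 0$ just as $\mathbf{q}\cdot\mathbf{a} = 0$. That choice of $\mathbf{a}'$ is an assumption of this illustration.

```python
# Sketch: the canonical Price equation, Equation (3), for a made-up sequence q -> q' -> q''.

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def relative_change(q_from, q_to):
    """a_i = Δq_i / q_i, Equation (2)."""
    return [(b - a) / a for a, b in zip(q_from, q_to)]

q0 = [0.5, 0.3, 0.2]    # q
q1 = [0.4, 0.35, 0.25]  # q'
q2 = [0.3, 0.4, 0.3]    # q'', used only to define a' (assumption of this sketch)

a = relative_change(q0, q1)
a_next = relative_change(q1, q2)
dq = [y - x for x, y in zip(q0, q1)]
da = [y - x for x, y in zip(a, a_next)]

direct = dot(dq, a)       # Δq·a, a sum of squares Σ Δq²/q, so never negative
inertial = dot(q1, da)    # q'·Δa, the balancing term
print(direct, inertial, direct + inertial)   # the sum is zero up to rounding
```

The direct term is positive whenever frequencies change, so the inertial term must carry an equal and opposite amount for total probability to stay at one.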

4. Preliminary Interpretation

The Price equation by itself does not calculate the particular $\Delta\mathbf{q}$ values of dynamics. Instead, the equation emphasizes the fundamental constraint on dynamics that arises from invariant total probability. The changes, $\Delta\mathbf{q}$, must satisfy the constraint in Equation (3), specifying certain properties that any possible dynamical path must have.

Put another way, all possible dynamical paths will share certain invariant properties. It is those invariant properties that reveal the ultimate unity between different applications and disciplines.

Note that $\mathbf{q}$ is fundamental, whereas $\mathbf{z}$ is an arbitrary assignment of value or meaning. The focus on $\mathbf{q}$ corresponds to the reason why information theory considers only probabilities, without consideration of meaning or values. In general, the unifying fundamental aspect among disciplines concerns the dynamics of probability distributions. We can then add values or meaning to that underlying fundamental basis.

In particular, we can first study universal aspects of the canonical invariant form based on $\mathbf{a}$. We can then derive broader results by simply making the coordinate transformation $\mathbf{a} \mapsto \mathbf{z}$, yielding the most general expression of the abstract Price equation in Equation (1).

Constraints on $\mathbf{z}$ or $\Delta\mathbf{z}$ specify additional invariances, which determine further structure of the possible dynamical paths and equilibria. Each $z_i$ may be a vector of values, allowing multiple constraints associated with the values.

Alternatively, one can study the conditions required for $\bar z$ to change in particular ways. For example, what are the necessary and sufficient patterns of association between initial frequency, $\mathbf{q}$, relative frequency change, $\mathbf{a}$, and value, $\mathbf{z}$, to drive the change, $\Delta\bar z$, in a particular direction?

5. Temporal Dynamics

The frequency change terms, $\Delta\mathbf{q}$, arise from the abstract set mapping assignment of members in the second set to members in the first set. In some cases, the abstract set mapping may differ from the traditional notion of dynamics as a temporal sequence, in which $q_i'$ is the frequency of type $i$ in the second set.

We may add various assumptions to achieve a temporal interpretation in which $i$ retains its meaning as a type through time. For example, following Price [4], we may partition $\Delta\mathbf{q} = \Delta_1\mathbf{q} + \Delta_2\mathbf{q}$ into two steps. In the initial step, $\Delta_1\mathbf{q}$, the mapping preserves type, such that $q_i + \Delta_1 q_i$ describes the frequency of type $i$ in the second set.

In the subsequent step, $\Delta_2\mathbf{q}$, the mapping accounts for the forces that change type. For a force that makes the change $i \to j$, we map type $j$ members in the second set to type $j$ members in the first set. Thus, $\Delta_2\mathbf{q}$ describes the net frequency change from the gains and losses caused by the forces of type reassignment.

For this two-step process that preserves type, the net change combines the type-changing forces with other forces that alter frequency. Thus, we may consider type-preserving maps as a special case of the general abstract set mapping. In this article, I focus on the properties of the general abstract set mapping.

6. Key Results

Later sections use the abstract Price equation to show formal relations between natural selection and information theory, the dynamics of entropy and probability, basic aspects of physical dynamics, and other fundamental principles [2]. Here, I list some key results without derivation or discussion. This listing gives a sense of where the argument will go, providing a target for further development in later sections.

Throughout this article, I use ratios of vectors to denote elementwise division, for example $\Delta\mathbf{q}/\mathbf{q} = (\Delta q_1/q_1, \Delta q_2/q_2, \ldots)$. A constant added to or multiplied by a vector applies the operation to each element of the vector, for example, $a + b\mathbf{z}$, for constants $a$ and $b$, yields $a + bz_i$ for each $i$.

D’Alembert’s principle of physical mechanics. We can write the canonical Price equation of Equation (3) as d’Alembert’s partition [2,5] between the direct forces, $\mathbf{F} = \mathbf{a}$, and the inertial forces of acceleration, $\mathbf{I}$, with $I_i = -q_i'\,\Delta a_i/\Delta q_i$, as

$$\Delta\mathbf{q}\cdot(\mathbf{F} - \mathbf{I}) = 0.$$

This equation generalizes Newton’s second law that force equals mass times acceleration, describing the balance between force and acceleration. Here, the direct forces, $\mathbf{F}$, balance the inertial forces of acceleration, $\mathbf{I}$, along the path of change, $\Delta\mathbf{q}$. The condition $\Delta\bar a = 0$ describes conservative systems. For nonconservative systems, we can use the transformation $\mathbf{a} \mapsto \mathbf{z}$, with $\bar z$ not necessarily conserved.

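Information theory. For small changes, $\Delta\mathbf{q} \to \mathrm{d}\mathbf{q}$ and $\mathbf{a} \to \mathrm{d}\log\mathbf{q}$, the direct force term is

$$\mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q} = \mathcal{D}(\mathbf{q}'\,\|\,\mathbf{q}) + \mathcal{D}(\mathbf{q}\,\|\,\mathbf{q}') = \mathcal{F} \qquad (5)$$

in which $\mathcal{D}$ is the Kullback–Leibler divergence, a fundamental measure of information, and $\mathcal{F} = \sum \mathrm{d}q_i^2/q_i$ is a nondimensional expression of Fisher information [6].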

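Extreme action. The term for direct force, or action, $\Delta\mathbf{q}\cdot\mathbf{F}$, yields frequency change dynamics, $\Delta\mathbf{q}$, determined by the extremum of the action, subject to constraints, expressed by the Lagrangian

$$\mathcal{L} = \Delta\mathbf{q}\cdot\mathbf{F} - \frac{\kappa}{2}\left(\Delta\mathbf{q}\cdot\frac{\Delta\mathbf{q}}{\mathbf{q}} - C^2\right) - \xi\left(\sum\Delta q_i\right) \qquad (6)$$

in which $\mathbf{F}$ is a given force vector. The first parenthetical term constrains the incremental distance between probability distributions to be $\Delta\mathbf{q}\cdot(\Delta\mathbf{q}/\mathbf{q}) = C^2$, for a given constant, $C$. The second parenthetical term constrains the total probability to remain invariant.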

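Entropy and thermodynamics. The force vector, $\mathbf{F}$, can be described as a growth process, $q_i' = q_i e^{F_i}$, with $F_i = \log q_i' - \log q_i$. A constraint on the system’s partial change in some quantity, $\Delta\mathbf{q}\cdot\mathbf{z} = B$, constrains the new frequency vector, $\mathbf{q}'$. We may write the constraint as $\log\mathbf{q}' = \log k - \lambda\mathbf{z}$, thus

$$\mathcal{L} = -\Delta\mathbf{q}\cdot\log\mathbf{q} + \Delta\mathbf{q}\cdot\log\mathbf{q}' - \frac{\kappa}{2}\left(\Delta\mathbf{q}\cdot\frac{\Delta\mathbf{q}}{\mathbf{q}} - C^2\right) - \xi\left(\sum\Delta q_i\right).$$

The action term, $-\Delta\mathbf{q}\cdot\log\mathbf{q}$, is the increase in entropy, $\Delta\mathcal{E}$. Maximizing the action maximizes the production of entropy.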

Maximum entropy and statistical mechanics. In the prior example, the work done by the force of constraint is $\Delta\mathbf{q}\cdot\log\mathbf{q}' = -\lambda B$, with $\sum\Delta q_i = 0$ removing the $\log k$ term. At maximum entropy, we obtain an equilibrium, $\Delta\mathbf{q} = 0$. Thus, the maximum entropy equilibrium probability distribution is

$$\mathbf{q}^* = k e^{-\lambda\mathbf{z}}. \qquad (7)$$

This Gibbs–Boltzmann-exponential distribution is the principal result of statistical mechanics. Here, we obtained that result through a Price equation abstraction that led to maximum entropy production, subject to a constraining invariance on a component of change in $\bar z$.

Constraint, invariance and sufficiency. The maximum entropy probability distribution expresses the forces of constraint, $\mathbf{z}$, acting on $\mathbf{q}^*$. Different constraints yield different distributions. For example, the constraint $\mathbf{z} = (\mathbf{x} - \mu)^2$, for underlying values $\mathbf{x}$, yields a Gaussian distribution for given mean, $\mu$, and variance, $\sigma^2$. This constraint is sufficient to determine the form of the distribution. Similarly, for small changes, the total change of the direct forces, $\mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q}$, does not require the exact form of the frequency changes, $\mathrm{d}\mathbf{q}$. It is sufficient to know the Fisher information distance, $\mathcal{F}$, which determines the subsets of the possible change vectors, $\mathrm{d}\mathbf{q}$, with the same invariant Fisher distance, $\mathcal{F}$. Many results from the abstract Price equation express invariance and sufficiency.

Inference: data as a force. Use $\theta$ as an index for different parameter values. Then $q_\theta$ matches the Bayesian notion of a prior probability distribution for the values of $\theta$. The posterior distribution is

$$q_\theta' = q_\theta\,\frac{L(D\mid\theta)}{\bar L}, \qquad \bar L = \sum_\theta q_\theta L(D\mid\theta),$$

in which the normalized likelihood, $L(D\mid\theta)/\bar L$, describes the force of the data that drives the change in probability. In Price notation, the normalized likelihood is equivalent to the force vector, $\mathbf{q}'/\mathbf{q}$, and also $\mathbf{a} = \Delta\mathbf{q}/\mathbf{q} = \mathbf{q}'/\mathbf{q} - 1$. With that definition for $\mathbf{a}$ in terms of the force of the data, the structure and general properties of Bayesian inference follow as a special case of the abstract Price equation.
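A minimal sketch of this correspondence, assuming three hypothetical parameter values with a made-up prior and made-up likelihoods: the normalized likelihood plays the role of $\mathbf{q}'/\mathbf{q}$, and $\mathbf{a}$ is that ratio minus one.

```python
# Sketch: Bayesian updating written in Price-equation terms (illustrative numbers only).
prior = [0.2, 0.5, 0.3]        # q_θ for three hypothetical parameter values θ
likelihood = [0.9, 0.2, 0.4]   # L(D|θ) for some observed data D (made up)

mean_likelihood = sum(p * l for p, l in zip(prior, likelihood))   # L̄ = Σ q_θ L(D|θ)
force = [l / mean_likelihood for l in likelihood]                  # normalized likelihood = q'/q
posterior = [p * f for p, f in zip(prior, force)]                  # q'_θ
a = [f - 1.0 for f in force]                                       # a = Δq/q
dq = [post - p for p, post in zip(prior, posterior)]               # Δq = q' - q

print(posterior)   # approximately [0.45, 0.25, 0.3]
```

Because the force is normalized by its prior average, $\mathbf{q}\cdot\mathbf{a} = 0$ automatically, which is the conservation of total probability in Equation (3).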

Invariance, scale and probability distributions. The maximum entropy probability distribution in Equation (7) is invariant to affine transformation, $\mathbf{z} \mapsto a + b\mathbf{z}$, because $k$ and $\lambda$ adjust to $a$ and $b$. That affine invariance with respect to $\mathbf{z}$, which arises directly from the abstract Price equation, is sufficient by itself to determine the structure of commonly observed probability distributions, without need of invoking entropy maximization. The structure of common probability distributions is

$$\mathbf{q}^* = k e^{-\lambda w(\mathbf{z})}.$$

The function $w(z)$ is a scale for $z$, such that a shift in that scale, $w(z) \mapsto a + w(z)$, only multiplies $\mathbf{q}^*$ by the constant $e^{-\lambda a}$, which $k$ absorbs, and therefore does not change the probability pattern. Simple forms of $w$ lead to the various commonly observed continuous probability distributions. For example, $w(z) = z^\gamma$ yields the stretched exponential distribution.
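The affine invariance of Equation (7) can be checked directly. The sketch below, assuming made-up values $\mathbf{z}$ and a made-up $\lambda$, computes $k e^{-\lambda\mathbf{z}}$ with $k$ set by normalization, then applies $\mathbf{z} \mapsto a + b\mathbf{z}$ and rescales $\lambda$ to $\lambda/b$. The shift $a$ is absorbed by $k$, so the distribution is unchanged.

```python
import math

def gibbs(z, lam):
    """q*_i = k exp(-lam z_i), with k chosen so that the q*_i sum to one."""
    weights = [math.exp(-lam * zi) for zi in z]
    k = 1.0 / sum(weights)
    return [k * w for w in weights]

z = [0.0, 1.0, 2.0, 3.5]   # illustrative values
lam = 0.7                  # illustrative constraint strength λ
q_star = gibbs(z, lam)

shift, stretch = 5.0, 2.0                          # a and b in z -> a + b z
z_affine = [shift + stretch * zi for zi in z]
q_affine = gibbs(z_affine, lam / stretch)          # λ adjusts to b; k adjusts to a

print(max(abs(x - y) for x, y in zip(q_star, q_affine)))   # ~0, up to rounding
```

Normalizing inside `gibbs` is what lets $k$ absorb the shift; only the stretch needs an explicit change in $\lambda$.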

7. History of Earlier Forms

Before analyzing the abstract Price equation and the unification of disciplines, it is useful to write down some of the earlier expressions and applications of the Price equation from biology [1,7,8,9].

7.1. Fitness and Average Excess

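This section extends the definition of relative changes in Equation (2). Let $w_i = q_i'/q_i$ be the relative growth, or relative fitness, of the $i$th type. Then we may define

$$a_i = \frac{\Delta q_i}{q_i} = w_i - 1$$

which, in biology, is Fisher’s average excess in fitness [10]. Note that $\bar a = \sum q_i a_i = 0$ and that the average value of $w$ is $\bar w = \sum q_i w_i = \sum q_i' = 1$, thus $a_i = w_i - \bar w$.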

7.2. Variance in Fitness

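Considering $\mathbf{a}$ as a measure of fitness, the first term of Equation (3) becomes the partial change in average fitness caused by the direct forces, $\Delta_{\mathbf{q}}\bar a$. In symbols

$$\Delta_{\mathbf{q}}\bar a = \Delta\mathbf{q}\cdot\mathbf{a} = \sum q_i a_i^2 = V_w$$

in which $\Delta_{\mathbf{q}}$ is the partial change caused by the direct forces, and $V_w$ is the variance in fitness.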
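The identities of Sections 7.1 and 7.2 can be checked on made-up frequencies: relative fitness averages to one, average excess averages to zero, and the direct term $\Delta\mathbf{q}\cdot\mathbf{a}$ equals the variance in fitness. The variable names are illustrative.

```python
q = [0.5, 0.3, 0.2]        # frequencies in the first set (illustrative)
q2 = [0.4, 0.35, 0.25]     # frequencies in the second set (illustrative)

w = [b / a for a, b in zip(q, q2)]                  # relative fitness w_i = q'_i / q_i
w_bar = sum(qi * wi for qi, wi in zip(q, w))        # mean fitness, equal to Σ q'_i = 1
excess = [wi - w_bar for wi in w]                   # average excess a_i = w_i - w̄
dq = [b - a for a, b in zip(q, q2)]

direct = sum(d * ai for d, ai in zip(dq, excess))              # Δq·a
var_w = sum(qi * (wi - w_bar) ** 2 for qi, wi in zip(q, w))    # V_w
print(w_bar, direct, var_w)
```

The equality holds because $\Delta q_i = q_i a_i$, so $\Delta\mathbf{q}\cdot\mathbf{a} = \sum q_i a_i^2$.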

7.3. Fundamental Theorem

If we let

be the regression of fitness,

, on some predictor,

, and define

, then

If one interprets

as an inherited gene, and

as an environmental effect that is not transmitted to the next generation, then the partial change in fitness by natural selection that is transmitted to the next generation is

. This result is analogous to Fisher’s fundamental theorem of natural selection [8,11,12,13].

The analysis tracks three sets. The initial set before selection with , the second set after selection with , and the third set after transmission with . The set after transmission retains only those changes associated with , interpreted as an inherited gene, such that .

7.4. Covariance Form and Replicators

Using the definitions of relative fitness and average excess, the first term of the Price equation is

$$\Delta\mathbf{q}\cdot\mathbf{z} = \sum q_i a_i z_i = \mathrm{Cov}(\mathbf{w}, \mathbf{z}) \qquad (13)$$

in which $\mathrm{Cov}(\mathbf{w}, \mathbf{z})$ is the covariance between fitness and value. This covariance implies that natural selection tends to increase the average value of $z$ in proportion to the association between fitness and value. If the values do not change, $\Delta\mathbf{z} = 0$, then the total change is

$$\Delta\bar z = \mathrm{Cov}(\mathbf{w}, \mathbf{z}).$$

This covariance equation has been widely used to study natural selection [9,14,15,16,17].
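A small numerical check of the covariance form, assuming made-up frequencies and values that do not change between sets ($\Delta\mathbf{z} = 0$): the $\mathbf{q}$-weighted covariance of fitness and value equals the total change in the average value.

```python
q = [0.5, 0.3, 0.2]        # frequencies in the first set (illustrative)
q2 = [0.4, 0.35, 0.25]     # frequencies in the second set (illustrative)
z = [1.0, 2.0, 4.0]        # values held fixed between sets, so Δz = 0

w = [b / a for a, b in zip(q, q2)]                  # relative fitness
w_bar = sum(qi * wi for qi, wi in zip(q, w))
z_bar = sum(qi * zi for qi, zi in zip(q, z))

cov_wz = sum(qi * (wi - w_bar) * (zi - z_bar) for qi, wi, zi in zip(q, w, z))
total_change = sum(b * zi for b, zi in zip(q2, z)) - z_bar   # Δz̄ with Δz = 0
print(cov_wz, total_change)   # equal up to rounding
```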

In one common application, sometimes referred to as the replicator problem, we label each individual in a population by its own unique index, $i$, and let $z_i$ be 0 or 1 to specify if each individual is a type 0 or type 1 individual [18,19]. We can think of $z_i$ as the frequency of type 1 in individual $i$. Then $\bar z$ is the frequency of type 1 individuals in the population, and $\Delta\bar z = \mathrm{Cov}(\mathbf{w}, \mathbf{z})$ is the frequency change of types in the population [20]. Here, we assume that individuals do not change their type during transmission, $\Delta\mathbf{z} = 0$, so that the second Price equation term is zero. This assumption is usually interpreted in biology as the absence of mutation.

7.5. Levels of Selection

We can write the second Price equation term as

$$\mathbf{q}'\cdot\Delta\mathbf{z} = \sum q_i w_i\,\Delta z_i = \mathrm{E}(\mathbf{w}\,\Delta\mathbf{z})$$

in which E denotes the expectation operator for the average value. Combining this expression with Equation (13), we obtain an alternative form of the Price equation

$$\Delta\bar z = \mathrm{Cov}(\mathbf{w}, \mathbf{z}) + \mathrm{E}(\mathbf{w}\,\Delta\mathbf{z}).$$

This form is often used to analyze how selection acts at different levels, such as individual versus group selection [3,21]. As an example, consider a variant of the replicator problem in which $0 \le z_i \le 1$, so that the same expression, $\Delta\bar z = \mathrm{Cov}(\mathbf{w}, \mathbf{z}) + \mathrm{E}(\mathbf{w}\,\Delta\mathbf{z})$, applies with a new interpretation, in which $z_i$ now denotes the frequency of type 1 individuals within the $i$th group of individuals, $w_i$ is the fitness of the $i$th group relative to all other groups, and $\Delta z_i$ is the change in the frequency of type 1 individuals within the $i$th group. Thus, the two terms can be interpreted as the change caused by selection between groups and the change caused by selection between individuals within groups.
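A sketch of the multilevel partition with three hypothetical groups: $q_i$ is the frequency of group $i$, $z_i$ the within-group frequency of type 1 individuals, $q_i'$ the group's share of the second set, and $z_i'$ the within-group frequency in the second set. All numbers are made up to show a case in which selection between groups favors type 1 while change within groups opposes it.

```python
q = [0.5, 0.3, 0.2]        # group frequencies
z = [0.2, 0.5, 0.9]        # frequency of type 1 within each group
q2 = [0.4, 0.35, 0.25]     # group shares in the second set
z2 = [0.15, 0.45, 0.88]    # within-group frequencies in the second set

w = [b / a for a, b in zip(q, q2)]                 # group relative fitness
w_bar = sum(qi * wi for qi, wi in zip(q, w))       # equals 1
z_bar = sum(qi * zi for qi, zi in zip(q, z))

between = sum(qi * (wi - w_bar) * (zi - z_bar) for qi, wi, zi in zip(q, w, z))   # Cov(w, z)
within = sum(qi * wi * (zn - zi) for qi, wi, zi, zn in zip(q, w, z, z2))         # E(w Δz)
total = sum(qn * zn for qn, zn in zip(q2, z2)) - z_bar                           # Δz̄
print(between, within, total)
```

Here the between-group term is $0.05$, the within-group term is $-0.0425$, and the net change is their sum, $0.0075$, up to rounding.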

8. Mathematical Properties

This section illustrates mathematical properties of the Price equation. These mathematical properties set the foundation for unifying apparently different kinds of problems from different disciplines.

8.1. Geometry and Work

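Write the standard Euclidean geometry vector length as the square root of the sum of squares

$$\|\mathbf{z}\| = \sqrt{\mathbf{z}\cdot\mathbf{z}} = \sqrt{\sum z_i^2}.$$

For any vector $\mathbf{z}$,

$$\Delta\mathbf{q}\cdot\mathbf{z} = \|\Delta\mathbf{q}\|\,\|\mathbf{z}\|\cos\omega$$

in which $\omega$ is the angle between the vectors $\Delta\mathbf{q}$ and $\mathbf{z}$. If we interpret $\mathbf{z}$ as an abstract, nondimensional force, then $\Delta\mathbf{q}\cdot\mathbf{z}$ expresses an abstract notion of work as the distance moved, $\|\Delta\mathbf{q}\|$, multiplied by the component of force acting along the path, $\|\mathbf{z}\|\cos\omega$.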

8.2. Divergence between Sets

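If we let $\mathbf{a} = \Delta\mathbf{q}/\mathbf{q}$ describe the relative growth of the various frequencies, $\mathbf{q}$, then the divergence between sets can be expressed as

$$\Delta\mathbf{q}\cdot\mathbf{a} = \sum\frac{\Delta q_i^2}{q_i} = \left\|\frac{\Delta\mathbf{q}}{\sqrt{\mathbf{q}}}\right\|^2 = R^2$$

in which $R$ is the radius of a sphere on which must lie all possible $\Delta\mathbf{q}/\sqrt{\mathbf{q}}$ changes with the same divergence between sets. If we choose to interpret $\mathbf{a}$ as an abstract notion of force, or fitness, acting on frequency changes, then $\Delta\mathbf{q}\cdot\mathbf{a}$ is the work, with magnitude $R^2$, that separates the probability distribution $\mathbf{q}'$ from $\mathbf{q}$.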
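The geometric reading of work can be checked numerically. This sketch, with made-up frequencies and an arbitrary value vector treated as a force, recovers $\Delta\mathbf{q}\cdot\mathbf{z}$ from lengths and the angle between vectors, and confirms that $\Delta\mathbf{q}\cdot\mathbf{a}$ is the squared radius $R^2$ of $\Delta\mathbf{q}/\sqrt{\mathbf{q}}$.

```python
import math

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))                       # Euclidean length

q = [0.5, 0.3, 0.2]
q2 = [0.4, 0.35, 0.25]
z = [1.0, 2.0, 4.0]                                   # treated as an abstract force

dq = [b - a for a, b in zip(q, q2)]
cos_omega = dot(dq, z) / (norm(dq) * norm(z))         # cosine of the angle between Δq and z
work = norm(dq) * norm(z) * cos_omega                 # distance × force component along path

a = [d / qi for d, qi in zip(dq, q)]                  # relative growth a = Δq/q
R = norm([d / math.sqrt(qi) for d, qi in zip(dq, q)]) # radius of Δq/√q
print(work, dot(dq, z), dot(dq, a), R ** 2)
```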

8.3. Small Changes, Paths and Logarithms

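If we think of the separation between sets as a sequence of small changes along a path, with each small change as $\Delta\mathbf{q} \to \mathrm{d}\mathbf{q}$, then

$$\mathbf{a} = \frac{\dot{\mathbf{q}}}{\mathbf{q}} = \frac{\mathrm{d}\mathbf{q}}{\mathbf{q}} = \mathrm{d}\log\mathbf{q}$$

in which the overdot and the symbol “d” equivalently describe the differential. Then the partial change by direct forces separates the probability distributions of the two sets by the path length

$$\mathrm{d}\mathbf{q}\cdot\mathbf{a} = \mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q} = \sum\frac{\mathrm{d}q_i^2}{q_i} = \mathcal{F}$$

in which $\mathcal{F}$ is an abstract, nondimensional expression of the Fisher information distance metric.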

8.4. Unitary and Canonical Coordinates

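Let $\mathbf{r} = \sqrt{\mathbf{q}}$. Then $\mathbf{r}\cdot\mathbf{r} = \sum q_i = 1$, expressing the conservation of total probability as a vector of unit length, in which all possible probability combinations of $\mathbf{r}$ define the surface of a unit sphere. In Hamiltonian analyses of d’Alembert’s principle for the canonical Price equation, $\mathbf{r}$ is a canonical coordinate system [5].

The unitary coordinates, $\mathbf{r}$, also provide a direct description of Fisher information path length as a distance between two probability distributions

$$\mathcal{F} = \mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q} = 4\,\mathrm{d}\mathbf{r}\cdot\mathrm{d}\mathbf{r}.$$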

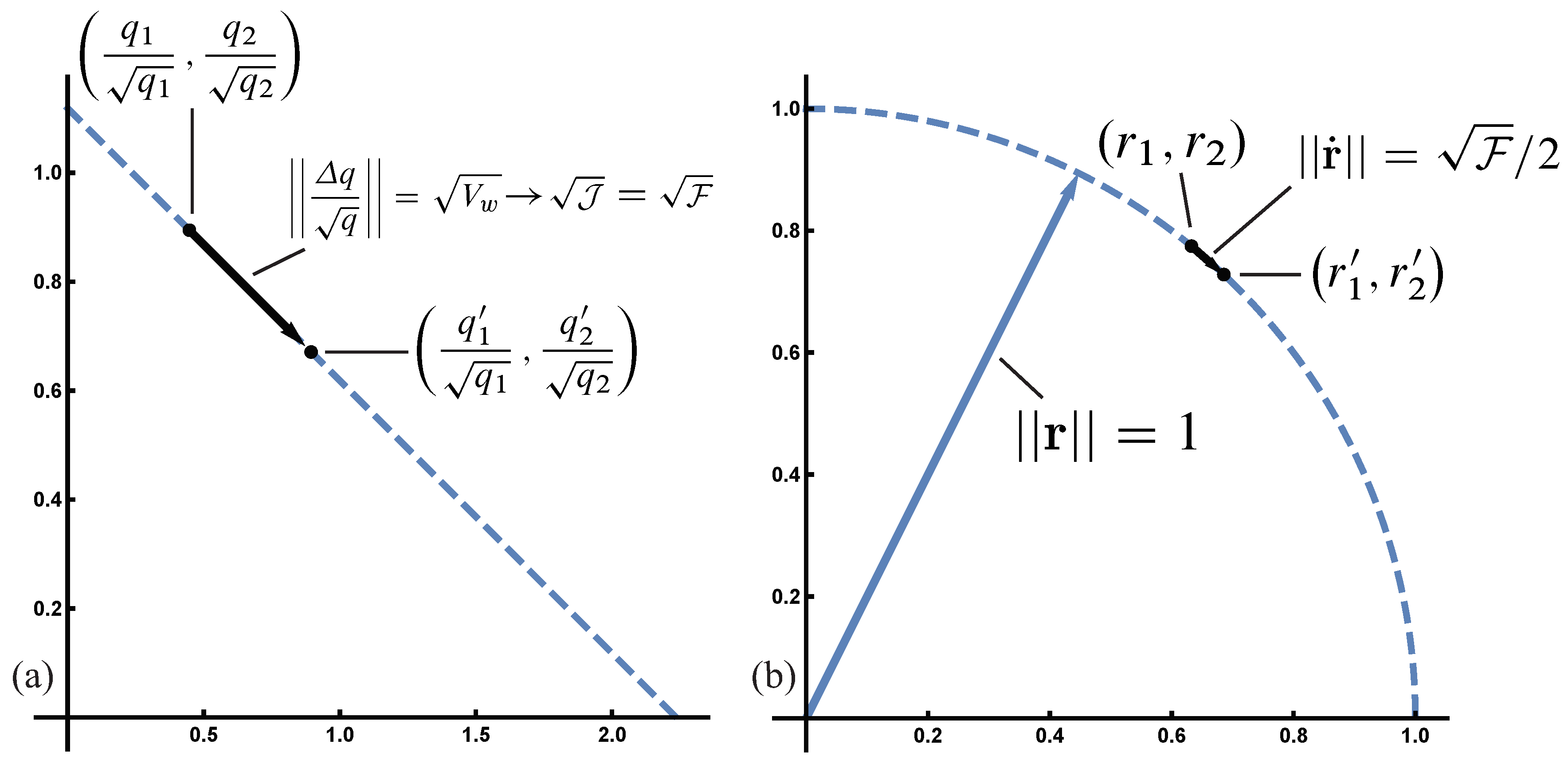

The constraint on total probability makes square root coordinates the natural system in which to analyze Euclidean distances, which are the sums of squares. See Figure 1.
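A numerical sketch of the unitary coordinates, assuming made-up frequencies and a small perturbation that conserves total probability: $\mathbf{r} = \sqrt{\mathbf{q}}$ has unit length, and $4\,\Delta\mathbf{r}\cdot\Delta\mathbf{r}$ approximates the Fisher form $\sum\Delta q_i^2/q_i$ when the step is small.

```python
import math

q = [0.5, 0.3, 0.2]
direction = [1.0, -0.5, -0.5]        # sums to zero, so Σ q stays equal to one
eps = 1e-4                           # small step size (assumption: "small change")
q2 = [qi + eps * d for qi, d in zip(q, direction)]

r = [math.sqrt(x) for x in q]        # unitary coordinates r = √q
r2 = [math.sqrt(x) for x in q2]
dr = [b - a for a, b in zip(r, r2)]

on_sphere = sum(x * x for x in r)                         # r·r = Σ q = 1
fisher = sum((b - a) ** 2 / a for a, b in zip(q, q2))     # Σ Δq²/q
four_dr2 = 4.0 * sum(x * x for x in dr)                   # 4 Δr·Δr
print(on_sphere, fisher, four_dr2)
```

The agreement improves as `eps` shrinks, since the two expressions differ only at third order in the step.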

8.5. Affine Invariance

Affine transformation shifts and stretches (multiplies) values, $z \mapsto a + bz$, for shift by $a$ and stretch by $b$. Here, addition or multiplication of a vector by a constant applies to each element of the vector.

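In the abstract Price equation

$$\Delta\bar z = \Delta\mathbf{q}\cdot\mathbf{z} + \mathbf{q}'\cdot\Delta\mathbf{z},$$

affine transformation, $\mathbf{z} \mapsto a + b\mathbf{z}$, alters the terms as: $\Delta\bar z \mapsto b\,\Delta\bar z$, because the shift constant cancels in the differences; $\Delta\mathbf{q}\cdot\mathbf{z} \mapsto b\,\Delta\mathbf{q}\cdot\mathbf{z}$, because in $\Delta\mathbf{q}\cdot(a + b\mathbf{z})$, we have $a\sum\Delta q_i = 0$; and $\mathbf{q}'\cdot\Delta\mathbf{z} \mapsto b\,\mathbf{q}'\cdot\Delta\mathbf{z}$, because the shift constant cancels in the differences. The stretch factor $b$ multiplies each term and therefore cancels, leaving the Price equation invariant to affine transformation of the $\mathbf{z}$ values. Much of the universal structure expressed by the Price equation follows from this affine invariance.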
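The term-by-term argument above can be confirmed with made-up numbers. The sketch applies $\mathbf{z} \mapsto a + b\mathbf{z}$ to both sets of values and checks that each of the three Price terms is multiplied by $b$, with the shift $a$ dropping out.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def price_terms(q, z, q2, z2):
    """(Δz̄, Δq·z, q'·Δz) for the abstract Price equation."""
    dq = [b - a for a, b in zip(q, q2)]
    dz = [b - a for a, b in zip(z, z2)]
    return dot(q2, z2) - dot(q, z), dot(dq, z), dot(q2, dz)

q, z = [0.5, 0.3, 0.2], [1.0, 2.0, 4.0]
q2, z2 = [0.4, 0.35, 0.25], [1.5, 2.0, 3.0]
shift, stretch = 10.0, 3.0                         # a and b in z -> a + b z

original = price_terms(q, z, q2, z2)
transformed = price_terms(q, [shift + stretch * x for x in z],
                          q2, [shift + stretch * x for x in z2])
print([t / o for t, o in zip(transformed, original)])   # each ratio is b = 3, up to rounding
```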

8.6. Probability vs. Frequency

In this article, I use probability and frequency interchangeably. Many subtle issues distinguish the concepts and applications associated with those alternative words. However, in this attempt to identify common mathematical structure between various subjects, those distinctions are not essential. See Jaynes [22] for discussion.

9. D’Alembert’s Principle

The remaining sections repeat the list of topics in the Key Results section. Prior publications discussed these topics [1,2]. Here, I present additional details, roughly sketching how the structure provided by the abstract Price equation unifies various subjects.

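We can rewrite the canonical Price equation for the conservation of total probability in Equation (3) as

$$\Delta\mathbf{q}\cdot(\mathbf{F} - \mathbf{I}) = 0.$$

Here, $\Delta\mathbf{q}$ satisfies the constraint on total probability and any other specified constraints. The direct forces are $\mathbf{F} = \mathbf{a}$. The inertial forces are

$$I_i = -\frac{q_i'\,\Delta a_i}{\Delta q_i}$$

in which $\Delta\mathbf{a} = \Delta(\Delta\mathbf{q}/\mathbf{q})$ is a second difference of $\mathbf{q}$, which is roughly like an acceleration.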

D’Alembert’s principle is a generalization of Newton’s second law, force equals mass times acceleration [23]. In one dimension, Newton’s law is $F = m\ddot{x}$, for force, $F$, and mass times acceleration, $m\ddot{x}$, so that $F - m\ddot{x} = 0$. D’Alembert generalizes Newton’s law to a statement about motion in multiple dimensions such that, in conservative systems, the total work for a displacement, $\delta\mathbf{x}$, and total forces, $\mathbf{F} - m\ddot{\mathbf{x}}$, is zero: $(\mathbf{F} - m\ddot{\mathbf{x}})\cdot\delta\mathbf{x} = 0$. Work is the distance moved multiplied by the force acting in the direction of the movement.

The canonical Price equation of Equation (3) is an abstract, nondimensional generalization of d’Alembert’s principle for probability distributions that conserve total probability. The movement of the probability distribution between two populations, or sets, can be partitioned into the balancing work components of the direct forces, $\Delta\mathbf{q}\cdot\mathbf{F}$, and the inertial forces, $\Delta\mathbf{q}\cdot\mathbf{I}$. We can often specify the direct forces in a simple and clear way. The balancing inertial forces may then be analyzed by d’Alembert’s principle [23].

The movement of probability distributions in the canonical Price equation is always conservative, $\Delta\bar a = 0$, so that d’Alembert’s principle holds. When we transform to the general Price equation by $\mathbf{a} \mapsto \mathbf{z}$, then it may be that $\Delta\bar z \neq 0$ and the system is not conservative. In that case, we may consider constraints on $\mathbf{z}$ and how those constraints influence the possible paths of change for $\mathbf{q}$.

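We can obtain a simple form of d’Alembert’s principle for probability distributions when displacements are small, $\Delta\mathbf{q} \to \mathrm{d}\mathbf{q}$. Define the relative change operator as $\mathrm{d}\log$, the differential of the logarithm. Then $\mathbf{a} = \mathrm{d}\log\mathbf{q}$ and $\Delta\mathbf{a} \to \mathrm{d}^2\log\mathbf{q}$, yielding

$$\mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q} + \mathbf{q}\cdot\mathrm{d}^2\log\mathbf{q} = 0$$

with the direct force proportional to the relative change in frequencies, and the inertial force proportional to the relative nondimensional acceleration in frequencies.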

From Equation (5), the work of the direct forces, $\mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q}$, is the Fisher information path length that separates the probability distributions, $\mathbf{q}$ and $\mathbf{q}'$, associated with the two sets. The inertial forces cause a balancing loss, $\mathbf{q}\cdot\mathrm{d}^2\log\mathbf{q}$, which describes the loss in Fisher information that arises from the recalculation of the relative forces in the new frame of reference, $\mathbf{q}'$. The balancing loss occurs because the average relative force, or fitness, is always zero in the current frame of reference, for example, $\bar a = \mathbf{q}\cdot\mathbf{a} = 0$. Any gain in relative fitness, $\Delta\mathbf{q}\cdot\mathbf{a}$, must be balanced by an equivalent loss in relative fitness, $\mathbf{q}'\cdot\Delta\mathbf{a}$.
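To check the small-change form numerically, the sketch below assumes a smooth, hypothetical path $q_i(t) \propto q_i(0)\,e^{t m_i}$ with made-up growth rates $\mathbf{m}$, and estimates the derivatives of $\log\mathbf{q}$ by central finite differences. Along this particular path, the direct term equals the $\mathbf{q}$-weighted variance of $\mathbf{m}$ and the inertial term equals its negative, so the two balance.

```python
import math

def path(q0, m, t):
    """Hypothetical smooth path q_i(t) ∝ q0_i exp(t m_i), normalized to sum to one."""
    w = [a * math.exp(t * mi) for a, mi in zip(q0, m)]
    s = sum(w)
    return [x / s for x in w]

q0 = [0.5, 0.3, 0.2]
m = [0.3, -0.1, -0.8]         # made-up growth rates
t, h = 0.4, 1e-4              # evaluation point and finite-difference step

qm, q, qp = path(q0, m, t - h), path(q0, m, t), path(q0, m, t + h)
dq = [(b - a) / (2 * h) for a, b in zip(qm, qp)]                          # dq/dt
dlog = [(math.log(b) - math.log(a)) / (2 * h) for a, b in zip(qm, qp)]    # d log q/dt
d2log = [(math.log(b) - 2 * math.log(c) + math.log(a)) / h ** 2
         for a, c, b in zip(qm, q, qp)]                                   # d² log q/dt²

direct = sum(x * y for x, y in zip(dq, dlog))       # dq·d log q
inertial = sum(x * y for x, y in zip(q, d2log))     # q·d² log q
m_bar = sum(x * y for x, y in zip(q, m))
var_m = sum(x * (y - m_bar) ** 2 for x, y in zip(q, m))
print(direct, inertial, var_m)
```

The exponential path is only a convenient example with a closed-form answer; the balance itself follows from differentiating $\mathbf{q}\cdot\mathrm{d}\log\mathbf{q} = 0$ along any smooth path.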

Here, the notions of force, inertia, and work are nondimensional mathematical abstractions that arise from the common underlying structure between the Price equation and the equations of physical mechanics. Similarly, the Fisher information measure here is an abstraction of the standard usage of the Fisher metric.

By equating force with relative frequency change, we intentionally blur the distinction between external causes and internal effects. By describing change as the difference between two abstract sets rather than change through time or space, we intentionally blur the scale of change. By separating frequencies, $\mathbf{q}$, from property values, $\mathbf{z}$, we intentionally distinguish universal aspects of structural change between sets from the particular interpretations of property values in each application. The blurring of cause, effect and scale, and the separation of frequency from value, lead to abstract mathematical expressions that reveal the common underlying structure between seemingly different subjects.

10. Information Theory

When changes are small, the direct force term of the canonical Price equation expresses classic measures of information theory (Equation (5)). In particular, $\mathrm{d}\mathbf{q}\cdot\mathrm{d}\log\mathbf{q}$ is a symmetric expression of the Kullback–Leibler divergence, which measures the change in information associated with the separation between two probability distributions [6].

For small changes, the Kullback–Leibler divergence is equivalent to a nondimensional expression of the Fisher information metric. The Fisher metric provides the foundation for much of classic statistical theory and for the subject of information geometry [24,25]. The Fisher metric also arises as an equivalent description for dynamics in many classic problems in physics and other subjects [26].
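The small-change equivalence can be seen numerically. In this sketch, assuming made-up frequencies and a small perturbation that conserves total probability, the symmetric sum of the two Kullback–Leibler divergences, which equals $\Delta\mathbf{q}\cdot\Delta\log\mathbf{q}$ exactly, is compared with the Fisher form $\sum\Delta q_i^2/q_i = \Delta\mathbf{q}\cdot\mathbf{a}$.

```python
import math

def kl(p, q):
    """Kullback–Leibler divergence D(p || q) for strictly positive probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

q = [0.5, 0.3, 0.2]
direction = [1.0, -0.5, -0.5]          # sums to zero: total probability is conserved
eps = 1e-3                             # step size; the equivalence needs eps to be small
q2 = [qi + eps * d for qi, d in zip(q, direction)]

dq = [b - a for a, b in zip(q, q2)]
a = [d / qi for d, qi in zip(dq, q)]                                  # a = Δq/q
fisher = sum(d * ai for d, ai in zip(dq, a))                          # Δq·a = Σ Δq²/q
jeffreys = kl(q2, q) + kl(q, q2)                                      # symmetric KL sum
log_form = sum(d * math.log(b / x) for d, x, b in zip(dq, q, q2))     # Δq·Δlog q
print(fisher, jeffreys, log_form)
```

Increasing `eps` makes the Fisher form drift away from the divergence, which is the large-change problem discussed below.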

What does it mean that the Price equation matches classic measures of information, which also arise in other subjects? That remains an open question. I suggest that the Price equation reveals the common mathematical structure among those seemingly different subjects. That mathematical structure arises from the conserved quantities, invariances, or constraints that impose a common pattern on dynamics. By this interpretation, dynamics is just a description of the changes between a sequence of sets.

The key aspect of the Price equation seems to be the separation of frequencies from property values. That separation shadows Shannon’s separation of the information in a message, expressed by frequencies of symbols in sets, from the meaning of a message, expressed by the properties associated with the message symbols. The Price equation takes that separation further by considering the abstract description of the separation between sets rather than the information in messages. Price [4] was clearly influenced by the information theory separation between frequency and property in his discussion of a generalized notion of natural selection that might unify disparate subjects.

The equivalence of the Price equation and information measures arises directly from the assumption of small changes. For larger changes, the relation between the Price equation and information remains an open problem. We might, for example, describe larger changes as

$$q_i' = q_i e^{m_i} \qquad (27)$$

in which $\mathbf{m}$ is a nondimensional expression for the total force that separates frequencies. From that expression,

$$\mathbf{m} = \log\frac{\mathbf{q}'}{\mathbf{q}} = \log\mathbf{w}$$

in which $\mathbf{w} = \mathbf{q}'/\mathbf{q}$ is a form of relative fitness, and $\mathbf{m}$ is called the Malthusian parameter in biology. Then, similarly to Equation (5), we have

$$\Delta\mathbf{q}\cdot\mathbf{m} = \mathcal{D}(\mathbf{q}'\,\|\,\mathbf{q}) + \mathcal{D}(\mathbf{q}\,\|\,\mathbf{q}') = \mathcal{J}$$

which is known as the Jeffreys divergence. In this case, with $\mathbf{m}$ not necessarily small, we no longer have a direct equivalence to Fisher information.

Information geometry, which analyzes continuous paths along contours of conserved total probability, describes the relations between Fisher information and this discrete divergence [27]. The idea is that big changes, $\Delta\mathbf{q}$, become a series of small changes, $\mathrm{d}\mathbf{q}$, along a continuous path that connects the endpoints, $\mathbf{q}$ to $\mathbf{q}'$. Each small step along the path can be described as a Fisher information path length, and the sum of those small lengths equals the Jeffreys divergence.
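One way to make this idea concrete, under the assumption that the connecting path is the normalized geometric interpolation $q_i(s) \propto q_i^{1-s}(q_i')^{s}$ for $0 \le s \le 1$ (the growth form $q_i e^{s m_i}$ with $\mathbf{m}$ scaled by $s$): summing the Fisher increments $\sum \mathrm{d}q_i^2/q_i$, each divided by its parameter step $\mathrm{d}s$, over many small steps approaches the Jeffreys divergence $\Delta\mathbf{q}\cdot\mathbf{m}$ between the endpoints. The sketch is illustrative; other paths need not give the same sum.

```python
import math

def geometric_path(q, q2, s):
    """Normalized interpolation q_i^(1-s) q2_i^s, i.e. q_i exp(s m_i) with m = log(q2/q)."""
    w = [a ** (1.0 - s) * b ** s for a, b in zip(q, q2)]
    total = sum(w)
    return [x / total for x in w]

def jeffreys(q, q2):
    """Δq·m = D(q2||q) + D(q||q2), with m = log(q2/q)."""
    return sum((b - a) * math.log(b / a) for a, b in zip(q, q2))

def fisher_path_sum(q, q2, steps=20000):
    """Sum over small steps of Σ (Δq_i)^2 / q_i, each divided by the step size Δs."""
    ds = 1.0 / steps
    total = 0.0
    prev = geometric_path(q, q2, 0.0)
    for k in range(1, steps + 1):
        cur = geometric_path(q, q2, k * ds)
        total += sum((c - p) ** 2 / p for p, c in zip(prev, cur)) / ds
        prev = cur
    return total

q, q2 = [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]
J = jeffreys(q, q2)
S = fisher_path_sum(q, q2)
print(J, S)   # close when the number of steps is large
```

Dividing each increment by `ds` turns the squared step into a Fisher information rate; without that scaling, the plain sum of squared steps would shrink toward zero as the steps become finer.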

Earlier work in population genetics theory derived the total change caused by natural selection as

(reviewed by [28,29,30]). That initial work did not emphasize the equivalence of the change by natural selection and Fisher information [31]. Here, the Fisher metric arises most simply as the continuous limiting form of the canonical Price equation description for the distance between two sets.

11. Extreme Action

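We can write Equation (6) as

$$\mathcal{L} = \sum_i \Delta q_i F_i - \frac{\kappa}{2}\left(\sum_i \frac{\Delta q_i^2}{q_i} - C^2\right) - \xi\sum_i \Delta q_i. \qquad (29)$$

By the principle of extreme action, the dynamics, $\Delta\mathbf{q}$, maximize or minimize (extremize) the action, $\Delta\mathbf{q}\cdot\mathbf{F}$, subject to the constraints. In this case, maximizing the action simply describes the fact that the movement, $\Delta\mathbf{q}$, tends to be in the direction of the force vector, $\mathbf{F}$, subject to any constraints on motion.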

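The Lagrangian, $\mathcal{L}$, combines the action and the constraints into one expression. To illustrate the principle of extreme action with the Lagrangian above, we maximize the action subject to the constraints by solving $\partial\mathcal{L}/\partial\Delta q_i = 0$ for each $i$, while also solving for $\kappa$ and $\xi$ by requiring that $\partial\mathcal{L}/\partial\kappa = 0$ and $\partial\mathcal{L}/\partial\xi = 0$. The solution is

$$\Delta q_i = \frac{q_i F_i^*}{\kappa} \qquad (30)$$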

in which $F_i^* = F_i - \bar F$ is the excess force relative to the average, and $\xi = \bar F = \mathbf{q}\cdot\mathbf{F}$ follows from satisfying the constraint on total probability under the assumption of small changes. The constant, $\kappa = \sigma_F/C$, satisfies the constraint on total path length, $\sum\Delta q_i^2/q_i = C^2$, in which $\sigma_F$ is the standard deviation of the forces, weighted by $\mathbf{q}$. We can rewrite the solution as

$$\mathbf{F}^* = \kappa\frac{\Delta\mathbf{q}}{\mathbf{q}} = \kappa\mathbf{a}.$$

This expression shows that we can determine the frequency changes, $\Delta\mathbf{q}$, from the given forces, $\mathbf{F}$, or we can determine the forces from the given frequency changes. The mathematics is neutral about what is given and what is derived.
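The closed-form solution can be sketched directly. The function below, with a made-up frequency vector, an arbitrary made-up force vector $\mathbf{F}$, and a made-up path length $C$, returns $\Delta q_i = q_i F_i^*/\kappa$ with $\kappa = \sigma_F/C$. The name `extreme_action_step` is hypothetical.

```python
import math

def extreme_action_step(q, force, C):
    """Δq maximizing Δq·F subject to Σ Δq_i = 0 and Σ Δq_i^2/q_i = C^2 (small-change form)."""
    f_bar = sum(qi * fi for qi, fi in zip(q, force))                 # ξ = F̄
    excess = [fi - f_bar for fi in force]                            # F* = F - F̄
    sigma_f = math.sqrt(sum(qi * e * e for qi, e in zip(q, excess))) # q-weighted std of F
    kappa = sigma_f / C                                              # κ = σ_F / C
    dq = [qi * e / kappa for qi, e in zip(q, excess)]                # Δq_i = q_i F*_i / κ
    return dq, kappa

q = [0.5, 0.3, 0.2]
force = [1.0, 0.0, -0.5]   # an arbitrary, made-up force vector F
C = 0.01                   # made-up Fisher path length
dq, kappa = extreme_action_step(q, force, C)
print(dq, kappa)
```

By the Cauchy–Schwarz inequality in the $\mathbf{q}$-weighted geometry, no other probability-conserving change with the same Fisher length $C$ does more work $\Delta\mathbf{q}\cdot\mathbf{F}$.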

In this case, $\mathbf{F}$ is an arbitrary force vector. Using $\mathbf{z} = \mathbf{F}$ in the general Price equation does not necessarily yield $\Delta\bar z = 0$. A nonconservative system does not satisfy d’Alembert’s principle. Often, we can specify certain invariances associated with $\mathbf{z}$, and use those invariances as additional forces of constraint on $\Delta\mathbf{q}$ in the Lagrangian. The additional forces of constraint typically alter the dynamics and the potential equilibria, as shown in the following section.

Across many disciplines, problems can often be solved by this variational method of writing a Lagrangian and then extremizing the action subject to the constraints [23]. The difficulty is determining the correct Lagrangian for a particular problem. No general method specifies the correct form.

In this example, the Price equation essentially gave us the form of the action and the constraints. Here, the action is the frequency displacement multiplied by the arbitrary force vector, $\Delta\mathbf{q}\cdot\mathbf{F}$, which is analogous to the physical work done in the movement of the probability distribution. The constraints follow from the conservation of total probability and the description of total distance moved as Fisher information, $\Delta\mathbf{q}\cdot(\Delta\mathbf{q}/\mathbf{q}) = C^2$, which arises from the canonical Price equation.

12. Entropy and Thermodynamics

The tendency for systems to increase in entropy provides the foundation for much of thermodynamics [32]. Entropy can be studied abstractly by the information entropy quantity, $\mathcal{E} = -\mathbf{q}\cdot\log\mathbf{q}$. For small changes in frequencies, the change in entropy is $\Delta\mathcal{E} = -\Delta\mathbf{q}\cdot\log\mathbf{q}$.

System dynamics often maximize the production of entropy [33]. Maximum entropy production suggests that the dynamics may be analyzed by a Lagrangian in which the action to be maximized is the production of entropy, $-\Delta\mathbf{q}\cdot\log\mathbf{q}$.

In the basic Lagrangian for dynamics given by Equation (29), the action is the abstract notion of physical work, $\Delta\mathbf{q}\cdot\mathbf{F}$, the displacement, $\Delta\mathbf{q}$, multiplied by the force, $\mathbf{F}$.

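The force vector, $\mathbf{F}$, can be related to frequency change in a growth process, $q_i' = q_i e^{F_i}$, with $F_i = \log q_i' - \log q_i$, as in Equation (27). The work becomes

$$\Delta\mathbf{q}\cdot\mathbf{F} = \Delta\mathbf{q}\cdot\log\mathbf{q}' - \Delta\mathbf{q}\cdot\log\mathbf{q} \qquad (31)$$

in which the second term on the right, $-\Delta\mathbf{q}\cdot\log\mathbf{q}$, is the production of entropy.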

If the system conserves the change in some quantity, $\Delta\bar z = B$, then that invariant change imposes a constraint on the possible change in the probability distribution, $\mathbf{q}'$. Suppose that the value is a property of a type, $i$, such that each type does not change its property value between sets, $\Delta\mathbf{z} = 0$. Then, from the general Price equation, $\Delta\bar z = B$ implies $\Delta\mathbf{q}\cdot\mathbf{z} = B$. This constraint acts as a force that limits the possible probability distributions, $\mathbf{q}'$, given the initial distribution, $\mathbf{q}$.

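We can express the constraint $\Delta\mathbf{q}\cdot\mathbf{z} = B$ on $\Delta\mathbf{q}$ in terms of a constraint on $\mathbf{q}'$ as $\log\mathbf{q}' = \log k - \lambda\mathbf{z}$, for constants $k$ and $\lambda$. Then, because $\sum\Delta q_i = 0$ removes the $\log k$ term, the constraint $\Delta\mathbf{q}\cdot\mathbf{z} = B$ has an equivalent expression in terms of $\log\mathbf{q}'$ as

$$\Delta\mathbf{q}\cdot\log\mathbf{q}' = -\lambda B.$$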

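We can now split the total force, $\mathbf{F}$, as in Equation (31) and, considering $\log\mathbf{q}'$ as a force of constraint, we can rewrite the Lagrangian of Equation (29) as

$$\mathcal{L} = -\Delta\mathbf{q}\cdot\log\mathbf{q} + \Delta\mathbf{q}\cdot\log\mathbf{q}' - \frac{\kappa}{2}\left(\Delta\mathbf{q}\cdot\frac{\Delta\mathbf{q}}{\mathbf{q}} - C^2\right) - \xi\left(\sum\Delta q_i\right).$$

The action term, $-\Delta\mathbf{q}\cdot\log\mathbf{q}$, is the increase in entropy, $\Delta\mathcal{E}$. Maximizing the action maximizes the production of entropy.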

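The maximization by solving $\partial\mathcal{L}/\partial\Delta q_i = 0$ subject to the constraints yields a solution with the same form as Equation (30). The force term is replaced by a partition of forces into components that match the direct entropy increase and the constraint on $\mathbf{z}$ as

$$\Delta q_i = \frac{q_i}{\kappa}\left[-(\log q_i)^* - \lambda z_i^*\right] \qquad (34)$$

in which the star superscripts denote the deviations from average values, $(\log q_i)^* = \log q_i - \mathbf{q}\cdot\log\mathbf{q}$ and $z_i^* = z_i - \bar z$, thus

$$\mathbf{F}^* = -(\log\mathbf{q})^* - \lambda\mathbf{z}^*.$$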

The value of

is

, as in the previous section. In this case, we use for

the partition of the forces on the right side of Equation (

34) into the direct entropy and the constraining forces.

The constraint

implies

The term is the regression of on , which acts to transform the scale for the forces of constraint imposed by to be on a common scale with the direct forces of entropy, . The term describes the required force of constraint on frequency changes so that the new frequencies move by the amount . The term is the variance in .
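The partition of forces and the implied constraint multiplier can be checked numerically. The Python sketch below is illustrative, not a procedure from the text: it fixes a hypothetical distance multiplier `gamma` ($\gamma$) and a hypothetical conserved change `dzbar` ($\Delta\bar z$), computes $\lambda = [\mathrm{Cov}(-\log q, z) - \gamma\,\Delta\bar z]/\sigma_z^2$, forms $\Delta q_i = q_i(-\log q_i^* - \lambda z_i^*)/\gamma$, and confirms that total probability is conserved, that $\Delta q \cdot z$ equals the target, and that $\sum_i (\Delta q_i)^2/q_i = (\sigma_F/\gamma)^2$.

```python
import math

q = [0.4, 0.3, 0.2, 0.1]   # initial frequencies (illustrative)
z = [0.0, 1.0, 2.0, 3.0]   # fixed values of each type, so Δz = 0
gamma = 20.0               # hypothetical multiplier for the distance constraint
dzbar = 0.01               # hypothetical conserved change, Δq·z

def avg(x):
    """Average of x weighted by the initial frequencies q."""
    return sum(qi * xi for qi, xi in zip(q, x))

neg_log_q = [-math.log(qi) for qi in q]
e_star = [x - avg(neg_log_q) for x in neg_log_q]  # direct entropy force, -log q_i*
z_star = [x - avg(z) for x in z]                  # deviations z_i*

cov = avg([a * b for a, b in zip(e_star, z_star)])  # Cov(-log q, z)
var_z = avg([b * b for b in z_star])                # variance of z
lam = (cov - gamma * dzbar) / var_z                 # multiplier on the constraint force

force = [a - lam * b for a, b in zip(e_star, z_star)]  # centered total force F_i*
dq = [qi * f / gamma for qi, f in zip(q, force)]       # Δq_i = q_i F_i* / γ

sigma_F = math.sqrt(avg([f * f for f in force]))
distance_sq = sum(d * d / qi for d, qi in zip(dq, q))  # Σ (Δq_i)^2 / q_i
```

Fixing the distance $C$ instead of $\gamma$ would require solving for $\gamma$ and $\lambda$ jointly, because each depends on the other.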

In these examples of dynamics derived from Lagrangians, the action is the partial change term of the direct forces derived from the universal properties of the Price equation. Thus, the maximum entropy production in this case can be interpreted as a universal partial maximum entropy production principle, in the Price equation sense of the partial change associated with the direct forces, holding the inertial frame constant [2].

In many applications, causal analysis reduces to this pattern of partial change by direct focal causes, holding other causes constant. The particular partition into direct, constraining, and inertial forces is a choice that we make to isolate or highlight particular causes [23].

13. Entropy and Statistical Mechanics

When entropy reaches its maximum value subject to the forces of constraint, equilibrium occurs at $\Delta q = 0$. From the force of constraint given in the previous section, $\log q_i' = \log k - \lambda z_i$, and the equilibrium condition $q' = q$, the equilibrium can be written as

$$q = k e^{-\lambda z}, \tag{36}$$

in which I have dropped the $i$ subscript. This Gibbs–Boltzmann-exponential distribution is the principal result of statistical mechanics [34]. Here, we obtained the exponential distribution through a Price equation abstraction that led to maximum entropy production.
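To see the equilibrium concretely, the sketch below builds $q_i = k e^{-\lambda z_i}$ on a small, arbitrary set of values, finds $\lambda$ by bisection so that the mean of $z$ matches a chosen target, and checks that the centered force $-\log q_i^* - \lambda z_i^*$ vanishes, so that frequencies no longer change. Bisection is simply one convenient root finder, assumed here for illustration.

```python
import math

def gibbs(z, lam):
    """Return q_i = k * exp(-lam * z_i), with k chosen so that the q_i sum to one."""
    w = [math.exp(-lam * zi) for zi in z]
    k = 1.0 / sum(w)
    return [k * wi for wi in w]

def mean(q, z):
    return sum(qi * zi for qi, zi in zip(q, z))

def solve_lambda(z, target, lo=-50.0, hi=50.0, steps=200):
    """Bisection on lam; the mean of z under gibbs(z, lam) decreases as lam increases."""
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if mean(gibbs(z, mid), z) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

z = [0.0, 1.0, 2.0, 3.0, 4.0]
target = 1.5                    # hypothetical constrained mean
lam = solve_lambda(z, target)
q = gibbs(z, lam)

# Centered force from the entropy and constraint terms; zero at equilibrium.
log_q = [math.log(qi) for qi in q]
avg_log_q = mean(q, log_q)
zbar = mean(q, z)
force = [-(lq - avg_log_q) - lam * (zi - zbar) for lq, zi in zip(log_q, z)]
```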

This result suggests that equilibrium probability distributions are simple expressions of maximum entropy subject to the forces of constraint. Jaynes [35,36] developed this maximum entropy perspective in his quest to overthrow Boltzmann’s canonical ensemble for statistical mechanics. The canonical ensemble describes macroscopic probability patterns by aggregation over a large number of equivalent microscopic particles.

The theory of statistical mechanics, based on the microcanonical ensemble, yields several commonly observed probability distributions. However, Jaynes [22] emphasized that the same probability distributions commonly arise in economics, biology, and many other disciplines. In those nonphysical disciplines, there is no meaningful canonical ensemble of identical microscopic particles. According to Jaynes, there must be another, more general cause of the common probability patterns. The maximization of entropy is one possibility [37].

Jaynes emphasized that increase in entropy is equivalent to loss of information. The inherent randomizing tendency in all systems causes loss of information. Maximum entropy is simply a consequence of that loss of information. Because systems lose all information except the forces of constraint, common probability distributions simply reflect those underlying forces of constraint.

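The Gibbs–Boltzmann-exponential distribution in Equation (36) expresses the simple force of constraint on the mean of some value, $z$, associated with the system. Different constraints lead to different distributions. For example, the constraint $\overline{(z-\mu)^2} = \sigma^2$ on the average squared deviation from $\mu$ yields a Gaussian distribution, $q = k e^{-\lambda (z-\mu)^2}$ with $\lambda = 1/(2\sigma^2)$, for mean $\mu$ and variance $\sigma^2$.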
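As a numerical illustration of the Gaussian case, the following sketch evaluates $q(z) = k e^{-\lambda (z-\mu)^2}$ with $\lambda = 1/(2\sigma^2)$ on a fine grid, for arbitrary choices of $\mu$ and $\sigma$, and confirms by simple Riemann sums that the resulting density has mean $\mu$, variance $\sigma^2$, and normalizing constant $k = 1/(\sigma\sqrt{2\pi})$.

```python
import math

mu, sigma = 1.0, 0.5              # illustrative target mean and standard deviation
lam = 1.0 / (2.0 * sigma ** 2)    # multiplier for the constraint on (z - mu)^2

dz = 0.001
z = [-5.0 + i * dz for i in range(10001)]          # grid on [-5, 5]
w = [math.exp(-lam * (zi - mu) ** 2) for zi in z]
k = 1.0 / (sum(w) * dz)                            # normalizing constant
q = [k * wi for wi in w]                           # density values on the grid

mean_z = sum(qi * zi for qi, zi in zip(q, z)) * dz
var_z = sum(qi * (zi - mean_z) ** 2 for qi, zi in zip(q, z)) * dz
```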

Jaynes invoked maximum entropy as a consequence of the thermodynamic principle that systems increase in entropy. Here, I developed the maximization of entropy from the abstract Price equation expression for frequency dynamics and the extreme action principle.

Extreme action simply expresses the notion that changing frequencies align with the direction of the force vector. That geometric alignment is equivalent to the maximization of frequency change multiplied by force, an abstract notion of physical work.

Jaynes argued that the fundamental notion of information sets the underlying structural unity of thermodynamics, probability, and many aspects of statistical inference. I argue for underlying unity based on abstract properties of invariance and geometry [2]. Those properties of invariance and geometry give a common mathematical structure to any problem that can be considered abstractly by the Price equation’s description of the change between two sets. The next section reviews and extends these notions of invariance and common mathematical structure.

14. Invariance and Sufficiency

The Price equation expresses constraints on the change in probability distributions between sets, $\Delta q$. For example, if $\bar z$ is a constant, conserved value, then the changes, $\Delta q$, must satisfy that constraint; when each type keeps its value, $\Delta z = 0$, conservation requires $\Delta q \cdot z = 0$. We may say that the conserved value of $\bar z$ imposes a force of constraint on the frequency changes. This section relates the Price equation’s abstract notions of change and constraint to Jaynes’ arguments.

Jaynes emphasized that systems tend to increase in entropy or, equivalently, to lose information. Entropy increase is a force that drives a system to an equilibrium at which entropy is maximized subject to any forces of constraint.

Because entropy increase is essentially universal, it is sufficient to know the particular forces of constraint to determine the most likely form of a probability distribution. Sufficiency expresses the forces of constraint in terms of conserved quantities.

Put another way, sufficiency partitions all possible populations into subsets. Each subset contains all of those populations with the same invariant conserved quantity. For example, if the constraint is a conserved value of $\bar z$, then all populations with the same invariant value of $\bar z$ fall into the same subset.

To analyze the force arising from constraint on $\bar z$ and the most likely form of the associated probability distribution, it is sufficient to know that the dynamics of populations driven by entropy increase must remain within the subset with invariant values defined by the constraints of the conserved quantities.
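The claim that the constraint alone picks out the most likely distribution can be illustrated numerically. In this sketch, populations that share the same total probability and the same mean of $z$ form one subset; starting from $q_i \propto e^{-\lambda z_i}$ with an arbitrary $\lambda$, every tested move that stays inside that subset lowers the entropy. The perturbation directions are hand-picked examples, not an exhaustive search.

```python
import math

def entropy(q):
    return -sum(qi * math.log(qi) for qi in q)

def mean(q, z):
    return sum(qi * zi for qi, zi in zip(q, z))

z = [0.0, 1.0, 2.0, 3.0, 4.0]
lam = 0.3                                # arbitrary multiplier
w = [math.exp(-lam * zi) for zi in z]
q_max = [wi / sum(w) for wi in w]        # exponential (maximum entropy) form

# Each direction d has sum(d) == 0 and sum(d_i * z_i) == 0,
# so q_max + eps * d keeps total probability and the mean of z unchanged.
directions = [
    [1.0, -2.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, -2.0, 1.0, 0.0],
    [1.0, -1.0, -1.0, 1.0, 0.0],
]
others = []
for d in directions:
    for eps in (-0.05, -0.01, 0.01, 0.05):
        others.append([qi + eps * di for qi, di in zip(q_max, d)])
```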

Jaynesian thermodynamics follows from the general force of information loss, in which the constraints sufficiently describe the only information that remains after maximum information loss.

The Price equation goes beyond Jaynes in revealing the underlying abstract mathematical structure that unifies seemingly different subjects. In all of the disciplines we have discussed, the key results for each discipline arise from the basic description of change between sets constrained by invariant conditions that we place on frequency, $q$, and value, $z$. In addition, the Price equation expresses the intrinsic invariance to affine transformation, $z \mapsto a + bz$.

From the perspective of the abstract Price equation, notions of information and entropy increase arise as secondary descriptions of the underlying primary geometric aspects of change between sets subject to intrinsic invariances and to invariant conditions imposed as constraints. Those aspects of geometry and invariance set the shared foundations for many seemingly different disciplines.

15. Inference: Data as a Force

Jaynes considered information as a force that changes probability distributions. Entropy increase is the force that causes loss of information, driving probability distributions to maximum entropy subject to constraint. For inference, data provide an informational force that drives the Bayesian dynamics of probability distributions to provide estimates of parameter values. The parameters are typically the conserved, constrained quantities that are sufficient to define maximum entropy probability distributions.

How does the Jaynesian interpretation of data as an informational force in statistical inference follow from the underlying Price equation abstraction? Consider the estimation of a parameter, $\theta$, such as the mean of an exponential probability distribution. In the Bayesian framework, we describe the current information that we have about $\theta$ by the probability distribution, $q_\theta$.

The value of $q_\theta$ represents the relative likelihood that the true value of the parameter is $\theta$. The probability distribution over alternative values of $\theta$ represents our current knowledge, or information, about $\theta$. To relate this to the Price framework, note that we are now using $\theta$ as the subscript for types instead of $i$. The vector $q$ now implicitly describes the set of values for $q_\theta$.

Our problem concerns how new information about $\theta$ changes the probability values to $q_\theta'$. The new probability values summarize the combination of our prior information in $q_\theta$ and the force of the new information in the data. This problem is the Bayesian dynamics of combining a prior distribution, $q_\theta$, with new data to generate a posterior distribution, $q_\theta'$, with $\Delta q_\theta = q_\theta' - q_\theta$.

We have from our universal definitions for change given earlier the relation $q_\theta' = q_\theta w_\theta$, in which we called $w_\theta$ the relative fitness, describing the force of change on probabilities. Here, the force arises from the way in which new data alter the net likelihood associated with a value of $\theta$.

Following Bayesian tradition, denote that force of the data as $L(D|\theta)$, the likelihood of observing the data, $D$, given a value for the parameter, $\theta$. To interpret a force as equivalent to relative fitness, the average value of the force must be one to satisfy the conservation of total probability. Thus, define

$$\tilde{L}(D|\theta) = \frac{L(D|\theta)}{\sum_{\vartheta} q_{\vartheta} L(D|\vartheta)}.$$

We can now write the classic expression for Bayesian updating of a prior, $q_\theta$, driven by the force of new data, $\tilde{L}(D|\theta)$, to yield the posterior, $q_\theta'$, as

$$q_\theta' = \tilde{L}(D|\theta)\, q_\theta.$$
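The update can be written as a one-step selection process. The Python sketch below assumes, for illustration only, a grid of candidate means $\theta$ for an exponential distribution, a uniform prior, and a few hypothetical observations; it normalizes the likelihood so that its prior-weighted average is one and then multiplies the prior by that normalized force.

```python
import math

thetas = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]    # candidate means of an exponential distribution
prior = [1.0 / len(thetas)] * len(thetas)  # uniform prior q_theta (an assumption)
data = [1.2, 0.7, 2.9, 1.8]                # hypothetical observations D

def likelihood(data, theta):
    """L(D|theta) for independent exponential observations with mean theta."""
    return math.prod(math.exp(-x / theta) / theta for x in data)

raw = [likelihood(data, th) for th in thetas]
norm = sum(q * lik for q, lik in zip(prior, raw))      # prior-weighted average likelihood
L_tilde = [lik / norm for lik in raw]                  # normalized force; average is one
posterior = [q * lt for q, lt in zip(prior, L_tilde)]  # q'_theta = L~(D|theta) q_theta

# Same result from the textbook form: posterior proportional to prior times likelihood.
unnormalized = [q * lik for q, lik in zip(prior, raw)]
textbook = [u / sum(unnormalized) for u in unnormalized]
```

With a uniform prior, the posterior peaks at the grid value closest in likelihood to the sample mean, here $\theta = 1.5$.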

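By recognizing $\tilde{L}$ as a force vector acting on frequency change, we can use all of the general results derived from the Price equation. For example, the Malthusian parameter, $m_\theta$, relates to the log-likelihood as

$$m_\theta = \log w_\theta = \log \tilde{L}(D|\theta) = \log \frac{q_\theta'}{q_\theta}.$$

This equivalence for log-likelihood relates frequency change to the Kullback–Leibler expressions for the change in information

$$\Delta q \cdot m = \mathcal{D}(q' \,\|\, q) + \mathcal{D}(q \,\|\, q'),$$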

which we may think of as the gain of information from the force of the data. Perhaps the most general expression of change describes the relative separation within the unitary square root coordinates as the squared Euclidean length

$$\sum_\theta \left(\frac{\Delta q_\theta}{\sqrt{q_\theta}}\right)^2 = \Delta q \cdot \tilde{L},$$

which is an abstract, nondimensional expression for the work done by the displacement of the frequencies, $\Delta q$, in relation to the force of the data, $\tilde{L}$.
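The identities $\Delta q \cdot m = \mathcal{D}(q'\|q) + \mathcal{D}(q\|q')$ and $\sum_\theta (\Delta q_\theta)^2/q_\theta = \Delta q \cdot \tilde{L}$ hold exactly for any finite update, because $m_\theta = \log(q_\theta'/q_\theta)$, $\Delta q_\theta = q_\theta(\tilde{L}_\theta - 1)$, and $\sum_\theta \Delta q_\theta = 0$. The sketch below checks both on a hypothetical four-value prior and made-up likelihoods.

```python
import math

prior = [0.2, 0.3, 0.3, 0.2]    # hypothetical prior over four parameter values
lik = [0.05, 0.20, 0.40, 0.10]  # hypothetical likelihoods L(D|theta)

norm = sum(q * x for q, x in zip(prior, lik))
L_tilde = [x / norm for x in lik]
post = [q * lt for q, lt in zip(prior, L_tilde)]
dq = [b - a for a, b in zip(prior, post)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def kl(p, r):
    """Kullback-Leibler divergence D(p || r) in natural units."""
    return sum(pi * math.log(pi / ri) for pi, ri in zip(p, r) if pi > 0)

m = [math.log(lt) for lt in L_tilde]          # Malthusian parameter = log normalized likelihood
info_gain = dot(dq, m)                        # Δq · m
jeffreys = kl(post, prior) + kl(prior, post)  # D(q'||q) + D(q||q')

length_sq = sum(d * d / q for d, q in zip(dq, prior))  # Σ (Δq_θ)^2 / q_θ
work = dot(dq, L_tilde)                                # Δq · L~
```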

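I defined $\tilde{L}$ as a normalized form of the likelihood, $L$, such that the average value is one, $q \cdot \tilde{L} = 1$. Thus, we have a canonical form of the Price equation for normalized likelihood

$$\Delta(q \cdot \tilde{L}) = \Delta q \cdot \tilde{L} + q' \cdot \Delta\tilde{L} = 0,$$

in which $\Delta\tilde{L} = \tilde{L}' - \tilde{L}$ and $\tilde{L}'$ is normalized by the updated frequencies, $q' \cdot \tilde{L}' = 1$. The second term, $q' \cdot \Delta\tilde{L}$, shows how the inertial forces alter the frame of reference that determines the normalization of the likelihoods, $\sum_\theta q_\theta L(D|\theta)$. Typically, as information is gained from data, the normalizing force of the frame of reference reduces the force of the same data in subsequent updates.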
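A minimal sketch of the canonical identity, assuming a second update with the same hypothetical data: the likelihood is renormalized by the posterior to give $\tilde{L}'$, and the frequency term $\Delta q \cdot \tilde{L}$ is exactly offset by the frame-of-reference term $q' \cdot \Delta\tilde{L}$.

```python
prior = [0.2, 0.3, 0.3, 0.2]    # hypothetical prior q
lik = [0.05, 0.20, 0.40, 0.10]  # hypothetical likelihoods L(D|theta)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize_force(freqs, lik):
    """Likelihood rescaled so that its frequency-weighted average is one."""
    avg = dot(freqs, lik)
    return [x / avg for x in lik]

L_tilde = normalize_force(prior, lik)       # normalized by the prior, q·L~ = 1
post = [q * lt for q, lt in zip(prior, L_tilde)]
L_tilde_next = normalize_force(post, lik)   # same data, normalized by the posterior

dq = [b - a for a, b in zip(prior, post)]
dL = [b - a for a, b in zip(L_tilde, L_tilde_next)]

direct = dot(dq, L_tilde)    # Δq · L~, frequency change term
inertial = dot(post, dL)     # q' · ΔL~, change in the frame of reference
```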

All of this simply shows that Bayesian updating describes the change in probability distributions between two sets. That change between sets follows the universal principles given by the abstract Price equation.

Prior work noted the analogy between natural selection and Bayesian updating [38,39,40]. Here, I emphasized a more general perspective that includes natural selection and Bayesian updating as examples of the common invariances and geometry that unify many topics.

16. Invariance and Probability

In the earlier section Affine invariance, I showed that the Price equation is invariant to affine transformations, $z \mapsto a + bz$. This section suggests that the Price equation’s intrinsic affine invariance explains universal aspects of probability distributions in a more general and fundamental manner than Jaynes’ focus on entropy and information.

The general form of probability distributions in Equation (36) followed from the constraint on the mean, $\bar z = \sum_i q_i z_i$. Affine transformation does not change the force imposed by that constraint, because

$$k e^{-\lambda (a + bz)} = \tilde{k} e^{-\tilde{\lambda} z},$$

in which $\tilde{k} = k e^{-\lambda a}$ and $\tilde{\lambda} = \lambda b$. Because the constants, $k$ and $\lambda$, adjust to satisfy underlying constraints, the shift and stretch constants $a$ and $b$ do not alter the constraints or the final form of the probability distribution.
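The affine argument is easy to check on a discrete set of values. The sketch below, with arbitrary $a$, $b$, and $\lambda$, normalizes $e^{-\lambda(a + bz_i)}$ and $e^{-\lambda b z_i}$ and confirms that they give the same distribution: the shift $a$ is absorbed into the normalizing constant and the stretch $b$ into the multiplier.

```python
import math

def exp_family(values, lam):
    """Normalized q_i proportional to exp(-lam * value_i)."""
    w = [math.exp(-lam * v) for v in values]
    total = sum(w)
    return [wi / total for wi in w]

z = [0.0, 0.5, 1.0, 2.0, 3.5]
a, b, lam = 3.0, 2.0, 0.4   # arbitrary shift, stretch, and multiplier

q_affine = exp_family([a + b * zi for zi in z], lam)  # k e^{-lam (a + b z)}
q_rescaled = exp_family(z, lam * b)                   # k~ e^{-(lam b) z}
```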

Thus, the probability distribution in Equation (36), arising from analysis of extreme action applied to a Lagrangian, is affine invariant with respect to $z$. We can make a more fundamental argument by deriving the form of the probability distribution solely as a consequence of the intrinsic affine invariance of the Price equation.

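In particular, shift invariance by itself explains why the probability distribution in Equation (36) has an exponential form [41]. If we assume that the functional form for the probability distribution, $q(z)$, is invariant to a constant shift, $z \mapsto a + z$, then, dropping the $i$ subscripts and using continuous notation, by the conservation of total probability

$$q(z) = \alpha_a\, q(a + z)$$

holds for any magnitude of the shift, $a$, in which the proportionality constant, $\alpha_a$, changes with the magnitude of the shift, $a$, independently of the value of $z$, in order to satisfy the conservation of total probability.

Because $\alpha_a$ is independent of $z$, the condition for the conservation of total probability is

$$\int q(z)\,\mathrm{d}z = \alpha_a \int q(a + z)\,\mathrm{d}z = 1.$$

The invariance holds for any shift, $a$, so it must hold for an infinitesimal shift, $a = \epsilon$. We can write the Taylor series expansion for an infinitesimal shift as

$$q(z) = \alpha_\epsilon\, q(z + \epsilon) = \alpha_\epsilon \left(q(z) + \epsilon \frac{\mathrm{d}q}{\mathrm{d}z}\right),$$

with $\alpha_\epsilon = 1 + \lambda\epsilon$ for some constant $\lambda$, because $\epsilon$ is small and independent of $z$, and $\alpha_0 = 1$. Thus, to first order in $\epsilon$,

$$\frac{\mathrm{d}q}{\mathrm{d}z} = -\lambda\, q(z)$$

is a differential equation with solution

$$q(z) = k e^{-\lambda z},$$

in which $k$ is determined by the conservation of total probability, and $\lambda$ is determined by the mean, $\bar z$. When $z$ ranges over positive values, $z > 0$, then $k = \lambda = 1/\bar z$. Invariance to stretch transformation by $b$ follows from the adjustment, $\tilde{\lambda} = \lambda b$, given above.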

Affine invariance of the probability distribution with respect to $z$ implies additional structure. In particular, we can write $z = e^{\beta w}$, in which a shift $w \mapsto a + w$ multiplies $z$ by a constant, $e^{\beta a}$, which does not change the form of the probability distribution. Thus, in terms of the shift-invariant scale, $w$, we obtain the canonical expression that describes nearly all commonly observed continuous probability distributions [41,42]

$$q_z = k\, m_z\, e^{-\lambda e^{\beta w}},$$

when we add a few additional details about the measure, $m_z$, and the commonly observed base scales, $w(z)$. Understanding the abstract form of common probability patterns clarifies the study of many problems [42,43,44] (see Appendix A).
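A short sketch of the shift-to-stretch correspondence in the canonical form, ignoring the measure term as a simplifying assumption: shifting $w$ by $a$ gives the same kernel as multiplying $\lambda$ by $e^{\beta a}$. With the base scale $w = \log z$, the kernel becomes $e^{-\lambda z^{\beta}}$, so $\beta = 1$ recovers the exponential form and $\beta = 2$ the Gaussian-shaped form $e^{-\lambda z^2}$.

```python
import math

def canonical_kernel(w, lam, beta):
    """Unnormalized kernel exp(-lam * exp(beta * w)); the measure term is omitted."""
    return math.exp(-lam * math.exp(beta * w))

lam, beta, a = 0.7, 1.5, 0.4   # arbitrary illustrative constants
ws = [-1.0, 0.0, 0.5, 2.0]

# A shift w -> w + a multiplies exp(beta * w) by exp(beta * a), which rescales lam.
shifted = [canonical_kernel(w + a, lam, beta) for w in ws]
rescaled = [canonical_kernel(w, lam * math.exp(beta * a), beta) for w in ws]

# With the base scale w = log z, the kernel is exp(-lam * z**beta).
zs = [0.5, 1.0, 2.0]
gaussian_shape = [canonical_kernel(math.log(z), lam, 2.0) for z in zs]
direct = [math.exp(-lam * z ** 2) for z in zs]
```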

17. Meaning

One cannot explain mathematical form by appeal to extrinsic physical notions. The structure of mathematical results does not follow from energy or heat or natural selection. Instead, those extrinsic phenomena arise as consistent interpretations for the structure of the mathematics.

The mathematical structure can only be analyzed, explained and understood by reference to mathematical properties. For example, we may invoke invariance, conserved values, and geometry to understand why certain mathematical forms arise in the abstract Price equation description for changes in frequency, and why those same forms recur in many different applications. We may not invoke entropy or information as a cause, only as a description.

My goal has been to reveal the common mathematical structure that unifies seemingly disparate results from different subjects. The common mathematical structure arises primarily through simple invariances and their expression in geometry.