1. Introduction

This paper develops Bayesian analogs of minimum Hellinger distance methods. In particular, we aim to produce methods that enable a Bayesian analysis to be both robust to unusual values in the data and to retain their asymptotic precision when a proposed parametric model is correct.

All statistical models include assumptions which may or may not be true of the mechanisms producing a given data set. Robustness is a desired property in which a statistical procedure is relatively insensitive to deviations from these assumptions. For frequentist inference, concerns are largely associated with distributional robustness: the shape of the true underlying distribution deviates slightly from the assumed model. Usually, this deviation represents the situation where there are some outliers in the observed data set; see [

1] for example. For Bayesian procedures, the deviations may come from the model, prior distribution, or utility function, or some combination thereof. Much of the literature on Bayesian robustness has been concerned with the prior distribution or utility function. By contrast, the focus of this paper is robustness with respect to outliers in a Bayesian context, a relatively understudied form of robustness for Bayesian models. For example, we know that Bayesian models with heavy tailed data distributions are robust with respect to outliers for the case of one single location parameter estimated by many observations. However, as a consequence of the Crámer–Rao lower bound and the efficiency of the MLE, modifying likelihoods to account for outliers will usually result in a loss of precision in parameter estimates when they are not necessary. The methods we propose, and the study of their robustness properties, will provide an alternative means of making any i.i.d. data distribution robust to outliers that do not lose efficiency when no outliers are present. We speculate that they can be extended beyond i.i.d. data as in [

2], but we do not pursue this here.

Suppose we are given the task of estimating

from independent and identically distributed univariate random variables

, where we assume each

has density

. Within the frequentist literature, minimum Hellinger distance estimates proceed by first estimating a kernel density

and then choosing

to minimize the Hellinger distance

. The minimum Hellinger distance estimator was shown in [

3] to have the remarkable properties of being both robust to outliers and statistically efficient, in the sense of asymptotically attaining the information bound, when the data are generated from

. These methods have been generalized to a class of minimum disparity estimators, based on alternative measures of the difference between a kernel density estimate and a parametric model, which have been studied since then, e.g., [

4,

5,

6,

7,

8]. While some adaptive M-estimators can be shown to retain both robustness and efficiency, e.g., [

9], minimum disparity methods are the only generic methods we are aware of that retain both properties and can also be readily employed within a Bayesian context. In this paper, we only consider Hellinger distance in order to simplify the mathematical exposition; the extension to more general disparity methods can be made following similar developments to those in [

5,

7].

Recent methodology proposed in [

2] suggested the use of disparity-based methods within Bayesian inference via the construction of a “disparity likelihood” by replacing the likelihood function when calculating the Bayesian posterior distribution; they demonstrated that the resulting expected

a posteriori estimators retain the frequentist properties studied above. These methods first obtain kernel density estimates from data and then calculate the disparity between the estimated density function and the corresponding density functions in the parametric family.

In this paper, we propose the use of Bayesian non-parametric methods instead of the classical kernel methods in applying the minimum Hellinger distance method. One method we proposed is just to replace the kernel density estimate used in classical minimum Hellinger distance estimate by the Bayesian nonparametric expected a posteriori density, which we denote by MHB (minimum Hellinger distance method using a Bayesian nonparametric density estimate). The second method combines the minimum Hellinger distance estimate with the Bayesian nonparametric posterior to give a posterior distribution of the parameter of interest. This latter method is our main focus. We show that it is more robust than usual Bayesian methods and demonstrate that it retains asymptotic efficiency, hence the precision of the estimate is maintained. So far as we are aware, this is the first Bayesian method that can be applied generically and retain both robustness and (asymptotic) efficiency. We denote it by BHM (Bayesian inference using a minimum Hellinger distance).

To study the properties of the proposed new methods, we treat both MHB and BMH as special cases of semi-parametric models. The general form of a semi-parametric model has a natural parametrization

, where

is a Euclidean parameter and

belongs to an infinite-dimensional set. For such models,

is the parameter of primary interest, while

is a nuisance parameter. Asymptotic properties of some of Bayesian semi-parametric models have been discussed in [

10]. Our disparity based methods involve parameters in Euclidean space and Hilbert space, with the former being of most interest. However, unlike many semi-parametric models in which

is specified jointly by

and

, in our case, the finite dimensional parameter and the nonparametric density functions are parallel specifications of the data distribution. Therefore, standard methods to study asymptotic properties of semi-parametric models will not apply to the study of disparity-based methods. Nevertheless, considering the problem of estimating

of some function

, where

is the space of the probability models

P, semi-parametric models and disparity-based methods can be unified into one framework.

The MHB and BMH methods are introduced in detail in

Section 2, where we also discuss some related concepts and results, such as tangent sets, information, consistency, and the specific nonparametric prior that we employ. In

Section 3, both MHB and BMH are shown to be efficient, in the sense that asymptotically the variance of the estimate achieves the lower bound of the Cramér–Rao theorem. For MHB, we show that asymptotic normality of the estimate holds, where the asymptotic variance is the inverse of the Fisher information. For BMH, we show that the Bernstein von Mises (BvM) theorem holds. The robustness property and further discussion of these two methods are given in

Section 4 and

Section 5, respectively. A broader discussion is given in

Section 6.

2. Minimum Hellinger Distance Estimates

Assume that random variables are independent and identically distributed (iid) with density belonging to a specified parametric family , where all the in the family have the same support, denoted by . For simplicity, we use to denote the random variables . More flexibly, we model , where g is a probability density function with respect to the Lebesgue measure on . Let denote the collection of all such probability density functions. If the parametric family contains the data-generating distribution, then for some . Formally, we can denote the probability model of the observations in the form of a semi-parametric model . We aim at estimating and consider g as a nuisance parameter, which is typical of semi-parametric models.

Let

denote a prior on

, and for any measurable subset

, the posterior probability of

given

is

Let

denote the Bayesian nonparametric expected

a posteriori estimate. Our first proposed method can be described formally as follows:

MHB: Minimum Hellinger distance estimator with Bayesian nonparametric density estimation:

This estimator replaces the kernel density estimate in the classical minimum Hellinger distance method introduced in [

3] by the posterior expectation of the density function.

For this method, we will view

as the value at

of a functional

, which is defined via

where

denotes the

metric. We can also write

as

.

In a more general form, what we estimate is the value of some functional , where the P stands for the common distribution from which data are generated, and is the set of all possible values of P, which also denotes the corresponding probability model. In the setting of minimum Hellinger distance estimation, the model is set as , P can be specified as , and . For the methods we proposed in this paper, we will focus on the functional , for a given , as defined above. Note that the constraint associated with the family is implicitly applied by T.

Using functional T, we can also propose a Bayesian method, which assigns nonparametric prior on the density space and gives inference on the unknown parameter of a parametric family as follows:

BMH: Bayesian inference with minimum Hellinger distance estimation:

A nonparametric prior

on the space

and the observation

leads to the posterior distribution

, which can then be converted to the posterior distribution of the parameter

through the functional

.

In the following subsections, we discuss properties associated with the functional

T as well as the consistency of MHB and BHM, and we provide a detailed example of the random histogram prior that we will employ and its properties that will be used for the discussion of efficiency in

Section 2.1.

2.1. Tangent Space and Information

In this subsection, we obtain the efficient influence function of the functional T on the linear span of the tangent set on and show that the local asymptotic normality (LAN) expansion related to the norm of the efficient influence function attains the Caramér–Rao bound. These results play important roles in showing that BvM holds for the BMH method in the next section.

Estimating the parameter by under the assumption uses less information than estimating this parameter for . Hence, the lower bound of the variance of for should be at least the supremum of the lower bounds of all parametric sub-models .

To use mathematical tools such as functional analysis to study the properties of the proposed methods, we introduce some notations and concepts below. Without loss of generality, we consider one-dimensional sub-models

, which pass through the “true” distribution, denoted by

with density function

. We say a sub-model indexed by

t,

, is differentiable in quadratic mean at

if we have that, for some measurable function

,

where

is the cumulative distribution function associated with

. Functions

s are known as the score functions associated with each sub-model. The collection of these score functions, which is called a tangent set of the model

at

and denoted by

, is induced by the collection of all sub-models that are differentiable at

.

We say that

T is differentiable at

relative to a given tangent set

, if there exists a continuous linear map

such that for every

and a sub-model

with score function

q, there is

where

. By the Riesz representation theorem for Hilbert spaces, the map

can always be written in the form of an inner product with a fixed vector-valued, measurable function

,

Let

denote the unique function in

, the closure of the linear span of the tangent set. The function

is the efficient influence function and can be found as the projection of any other “influence function” onto the closed linear span of the tangent set.

For a sub-model

whose score function is

q, the Fisher information about

t at 0 is

. In this paper, we use the notation

to denote

for a general function

g and distribution

F. Therefore, the “optimal asymptotic variance” for estimating the functional

, evaluated at

, is greater than or equal to the Caramér–Rao bound

The supremum of the right-hand side (RHS) of the above expression over all elements of the tangent set is a lower bound for estimating

given model

, if the true model is

. The supremum can be expressed in the norm of the efficient influence function

by Lemma 25.19 in [

11]. The lemma and its proof is quite neat, and we reproduce it here for the completeness of the argument.

Lemma 1. Suppose that the functional is differentiable at relative to the tangent set . Then Proof. This is a consequence of the Cauchy–Schwarz inequality and the fact that, by definition, the efficient influence function, , is contained in the closure of . □

Now we show that functional T is differentiable under some mild conditions and construct its efficient influence function in the following theorem.

Theorem 1. For the functional T defined in Equation (2), and for , let denote for . We assume that there exist and both in , such that for α in a neighborhood of zero,where and converge to zero as . Assuming , the efficient influence function of T iswhere converges to 0 as . In particular, for , Proof. Let the

t-indexed sub-model be

where

satisfies

and

. By direct calculation, we see that

q is the score function associated with such a sub-model at

in the sense of Equation (

4) and thus the collection of

q is the maximal tangent set.

By the definition of

T,

maximizes

. From Equation (

6), we have that

Since

, we have that

Similarly,

. Using Equation (7) to substitute

, we have that

where

converge in

to zero as

since

. Thus,

Since by the definition of

, which requires

, we have that

By the same argument we can show that, when

, Equation (

9) holds. □

Some relatively accessible conditions under which Equations (

6) and (7) hold are given by Lemmas 1 and 2 in [

3]. We do not repeat them here.

Now we can expand

T at

as

where

is given in Theorem 1 and

.

2.2. Consistency of MHB and BMH

Since

may have more than one value, the notation

is used to denote any arbitrary one of the possible values. In [

3], the existence, continuity in Hellinger distance, and uniqueness of functional

T are ensured under the following condition:

- A1

(i) is compact, (ii) implies on a set of positive Lebesgue measures, and (iii), for almost every x, is continuous in .

When a Bayesian nonparametric density estimator is used, we assume the posterior consistency:

- A2

For any given , in probability.

Under Conditions A1 and A2, consistency holds for MHB and BMH.

Theorem 2. Suppose that Conditions A1 and A2 hold, then

- 1.

in probability, in probability, and hence in probability;

- 2.

For any given , in probability.

Proof. Part 1: To show that

in probability, which is equivalent to showing that

in probability, it is sufficient to show that

in probability, since

. We have that

Note that the change of order of integration is due to Fubini’s theorem and the last inequality is due to

. Split the integral on the right-hand side of the above expression into two parts:

where

for any given

. The first term is bounded by

by construction. By Condition A1, the posterior of measure of

to 0 in probability as

. Since Hellinger distance is bounded by 2, so does the second term above. This completes the proof for

in probability.

To show

and

in probability, we need that the functional

T is continuous and unique at

, which is proved by Theorem 1 in [

3] under Condition A1.

Part 2: By Condition A1 and Theorem 1 in [

3], the functional

T is continuous and unique at

. Hence, for any given

, there exist

such that

when

. By Condition A2, we have that

, which implies that

in probability. □

It should be noted that, if we change the

in Condition A2 to

, a sequence converging to 0, then we can apply the results for the concentration rate of the Bayesian nonparametric density estimation here. However, such an approach cannot lead to the general “efficiency” claim, no matter in the form of rate of concentration or asymptotic normality. There are two reasons for this. First, the rate of concentration for Bayesian nonparametric posterior is about

for a rather general situation and

, where

, for some special cases (see [

12,

13,

14]). This concentration rate is not sufficient in many situations to directly imply that the concentration of the corresponding parametric estimates achieves the lower bound of the variance given in the Cramér–Rao theorem. Second, the Hellinger distances between pairs of densities as functions of parameters vary among different parametric families. Therefore, obtaining the rate of concentration in parameters from the rate of convergence in the densities cannot be generally applied to different distribution families.

It should also be noted that, although

is required to be compact in Condition A1, Theorem 2 is useful for a

that is not compact, as long as the parametric family

can be re-parameterized where the space of new parameters can be embedded within a compact set. An example of re-parameterizing a general location-scale family with parameters

and

to a family with parameters

and

, where

and

, is discussed in [

3], and the conclusions of Theorem 1 in [

3] is still valid for a location-scale family. Therefore, Theorem 2 remains valid for the same type of the families, whose parameter space may not be compact and for the same reasons; the compactness requirement stated in the theorem is mainly for mathematical simplicity.

2.3. Prior on Density Functions

We introduce a random histogram as an example for priors used in Bayesian nonparametric density estimation. It can be seen as a simplified version of a Dirichlet process mixture (DPM) prior, which is commonly used in practice. Both DPM and random histogram are mixture densities. While DPM uses a Dirichlet process to model the weights within an infinite mixture of kernels, the random histogram prior only has a finite number of components. Another difference is that, although we specify the form of the kernel function for DPM, the kernel function could be any density function in general, while the random histogram uses only the uniform density as its mixing kernel. Nevertheless, the limit on the finite number of the mixing components is not that important in practice, since the Dirichlet process will always be truncated in computation. In the next section, we will verify that the random histogram satisfies the conditions that are needed for our proposed methods to be efficient. On the other hand, although we believe that DPM should also lead to efficiency, the authors are unaware of the theoretical results or tools required to prove it. This is mostly due to the flexibility of DPM, which in turn significantly increases the mathematical complexity of the analysis.

For any , denote the set of all regular k bin histograms on by where . Denote the unit simplex in by . The subset of , , denotes the collection of densities on in the form of a histogram.

The set is a closed subset of . For any function , denote its projection in the sense on by , where .

We assign priors on

via

k and

for each

k. A degenerate case is to let

. Otherwise, let

be a distribution on positive integers, where

for all

k large enough and some

. For example, Condition (

13) is satisfied by the Poisson distribution, which is commonly used in Bayesian nonparametric models.

Conditionally on

k, we consider a Dirichlet prior on

:

for some fixed constants

and any

. For posterior consistency, we need the following condition:

where

.

The consistency result of this prior is given by Proposition 1 in the supplement to [

15]. For

, let

where

denote a neighborhood of

, and we have that

(a) there exist

c,

such that

(b) Suppose

with

, if

and

, then, for

and a sufficiently large

M,

where

denotes the class of

-Hölder functions on

.

This means that the posterior of the density function concentrates around the projection

of

and around

itself in terms of the Hellinger distance. We can easily conclude that

from Equation (

18) for

.

It should be noted that, although the priors we defined above are on the densities on

, this is for mathematical simplicity, which could easily be extended to the space of probability densities on any given compact set. Further, transformations of

, similar to those discussed at the end of

Section 2.2, can extend the analysis to the real line (refer to [

3,

16] for more example and details).

4. Robustness Properties

In frequentist analysis, robustness is usually measured by the influence function and breakdown point of estimators. These have been used to study robustness in minimum Hellinger distance estimators in [

3] and in more general minimum disparity estimators in [

2,

7].

In Bayesian inference, robustness is labeled “outlier rejection” and is studied under the framework of the “theory of conflict resolution”. There is a large literature on this topic, e.g., [

20,

21,

22]. While the results of [

22] are only about symmetric distributions, [

23] provides corresponding results covering a wider class of distributions with tails in the general exponential power family. These results provide a complete theory for the case of many observations and a single location parameter.

We examine the behavior of the methods MHB and BMH under a mixture model for gross errors. Let denote the uniform density of the interval , where is small, and let , where and and z is a real number. The density models a situation, where observations are distributed from , and of the observations are the gross errors located near z.

Theorem 5. For every and every , denote the mixture model for gross errors by . We then have that , under the assumptions of Theorem 3 and that, for the BMH method, in the distribution as and , where ϕ denotes the probability function of the normal distribution, when conditions in Theorem 4 are satisfied.

Proof. By Theorem 7 in [

3], for functional

T, as we defined and under the conditions in this theorem, we have that

We also have that, for MHB, under conditions of Theorem 3,

in probability. Combining the two results,

, when the data is generated from a contaminated distribution as

. Similarly, by Theorem 4, we have that

in distribution as

, and which converges to

, as

. □

5. Demonstration

We provide a demonstration of both BMH and MHB methods on two data sets: the classical Newcomb light speed data (see [

24,

25]), in which 2 out of 66 values are clearly negative oultiers, and a bivariate simulation containing 10% contamination in two asymmetric locations.

We have implemented the BMH and MHB methods using two Bayesian nonparametric priors:

We obtain posteriors for both using BUGS. We have elected to use BUGS here as opposed to the package DPpackage within R despite the latter’s rather efficient MCMC algorithms because our BMH method requires direct access to samples from the posterior distribution as opposed to the expected a posteriori estimate. The R package distrEx is then used to construct the sampled density functions and calculated the Hellinger distance between the sampled densities from the nonparametric model and the assumed normal distribution. The R package optimx is also used to find the minima of the Hellinger distances. The time cost of our methods are dominated by the optimization step rather than by the obtaining of samples from the posterior density.

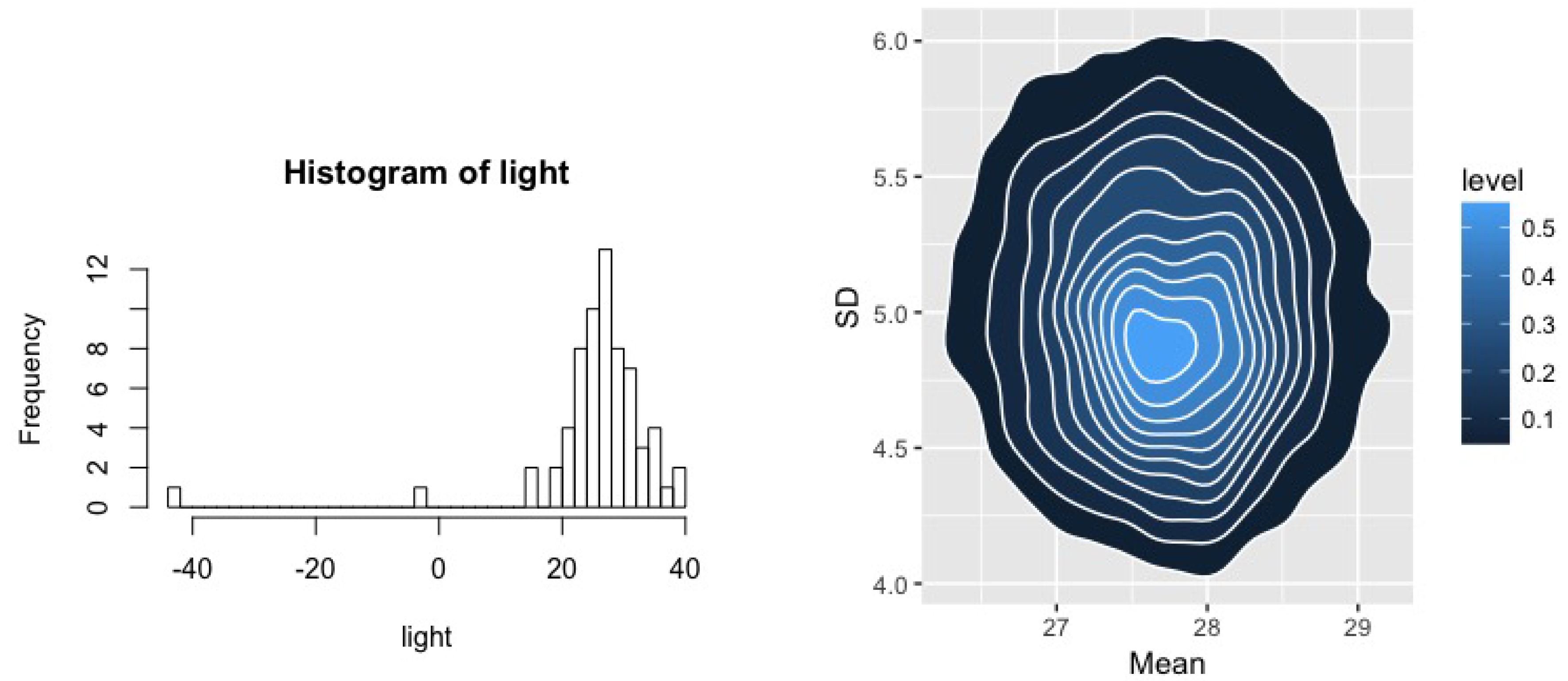

We first apply BMH and MHB on the Simon Newcomb’s measurements to measure the speed of light. The data contains 66 observations. For this example, we specify the parameters and hyper-parameters of the DPM as

,

,

, and

. We plot the data and a bivariate contour of the BMH posterior for both the mean and variance of the assumed normal in

Figure 1, where, despite outliers, the BvM result is readily apparent.

Table 1 summarizes these estimates. We report the estimated mean and variance with and without the obvious outliers as well as the same quantities estimated using both MHB and BMH methods with the last of these being the expected

a posteriori estimates. Quantities in parentheses given the “natural” standard error for each quantity: likelihood estimates correspond to standard normal theory—dividing the estimated standard error by

, and BMH standard errors are obtained from the posterior distribution. For MHB, we used a bootstrap and note that, while the computational cost involved in estimating MHB is significantly lower than BMH when obtaining a point estimate, the standard errors require and MCMC chain for each bootstrap, significantly raising the cost of obtaining these estimates. We observe that both prior specifications result in parameter estimates that are identical to two decimal places and very close to those obtained after removing outliers.

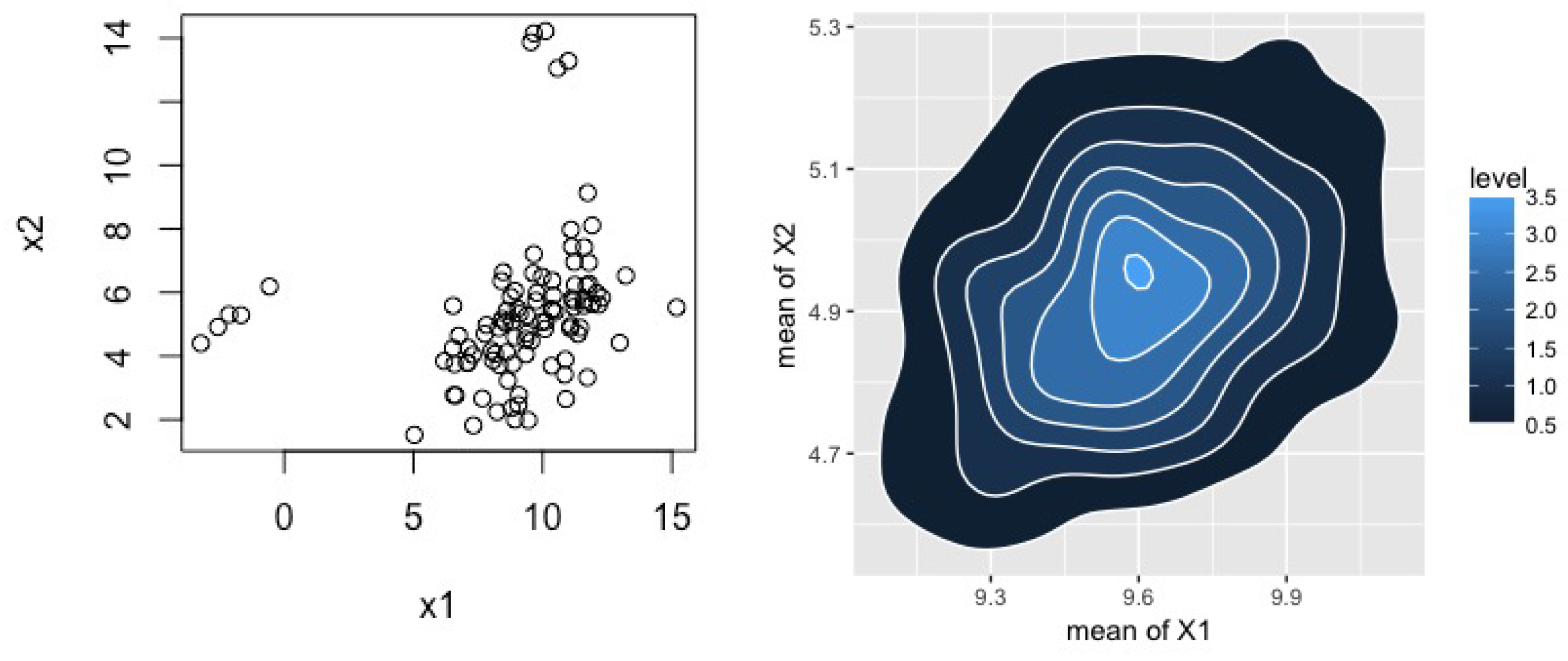

To examine the practical implementation of methods that go beyond our theoretical results, we applied these methods to a simulated two-dimensional data set of 100 data points generated from a standard normal with two contamination distributions. Specifically, our data distribution comes from

where exactly five points were generated from each of the second-two Gaussians. Our DP prior used the same hyper-parameters as above with the exception that

was obtained from the empirical variance of the (contaminated) data, and

were extended to their 2-dimensional form as

.

Figure 2 plots these data along with the posterior for the two means.

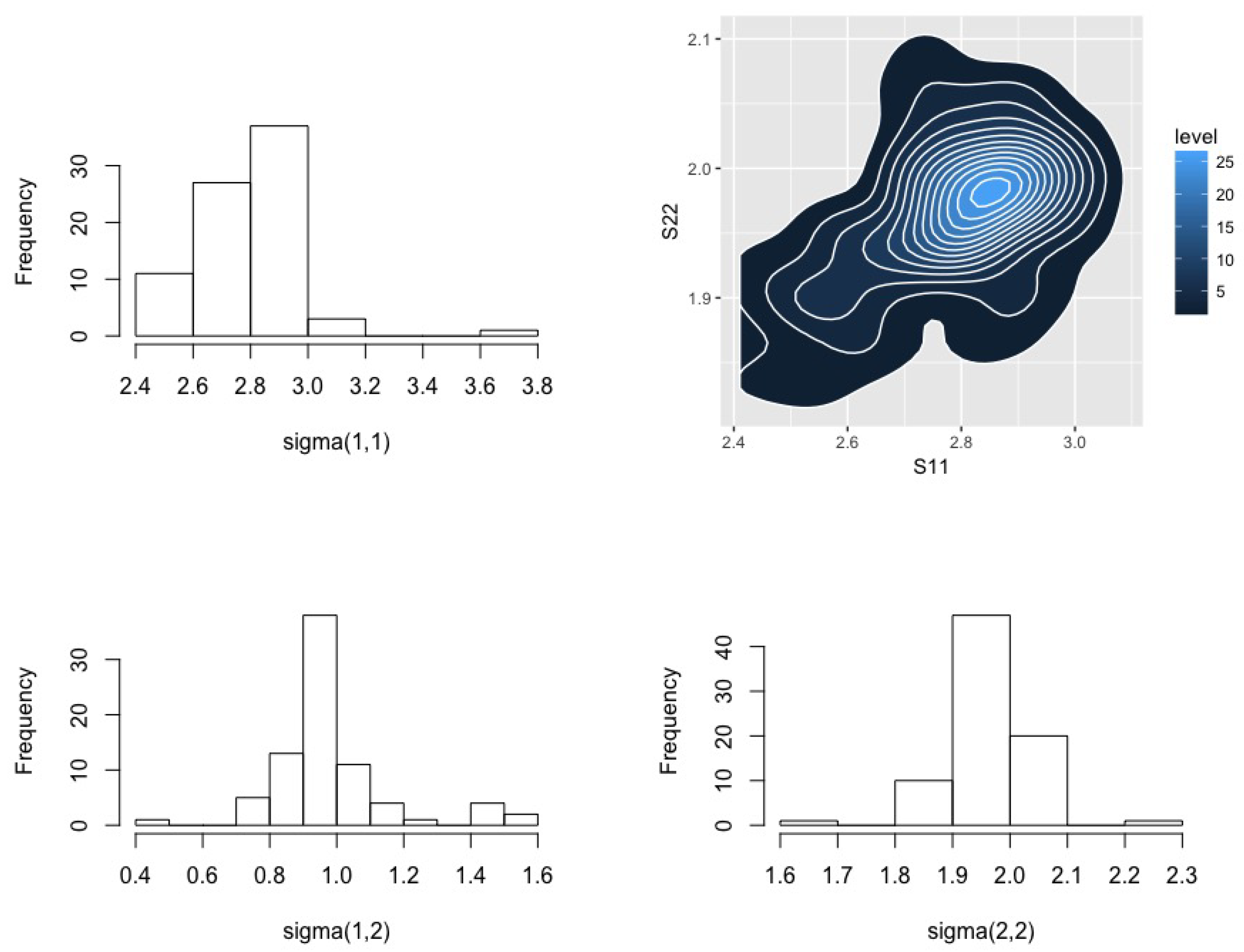

Figure 3 provides posterior distributions for the components of the variance matrix.

Table 2 presents estimation results for the full data and those with the contaminating distributions removed as well as from the BMH method. Here we again observe that BMH yields results that are very close to those obtained using the uncontaminated data. There is some more irregularity in our estimates, particularly in

Figure 3, which we speculate is due to poor optimization. There is considerable scope to improve the numerics of minimum Hellinger distance methods more generally, but this is beyond the scope of this paper.

6. Discussion

This paper investigates the use of minimum Hellinger distance methods that replace kernel density estimates with Bayesian nonparametric models. We show that simply substituting the expected

a posteriori estimator will reproduce the efficiency and robustness properties of the classical disparity methods first derived in [

3]. Further, inducing a posterior distribution on

through the posterior for

g results in a Bernstein von Mises theorem and a distributional robustness result.

There are multiple potential extensions of this work. While we have focused on the specific pairing of Hellinger distance and random histogram priors, both of these can be generalized. A more general class of disparities was examined in [

7], and we believe the extension of our methods to this class are straightforward. More general Bayesian nonparametric priors are discussed in [

14], where the Dirichlet process prior has been particularly popular. Extensions to each of these priors will require separate analysis (e.g., [

26]). Extensions of disparities to regression models were examined in [

27] using a conditional density estimate, where equivalent Bayesian nonparametrics are not as well developed. Other modeling domains such as time series may require multivariate density estimates, resulting in further challenges.

Our results are a counterpoint to the Bayesian extensions of Hellinger distance methods in [

2] where the kernel density was retained for

but a prior was given for

and the disparity treated as a log likelihood. Combining both these approaches represents a fully Bayesian implementation of disparity methods and is an important direction of future research.