Abstract

Entropy appears in many contexts (thermodynamics, statistical mechanics, information theory, measure-preserving dynamical systems, topological dynamics, etc.) as a measure of different properties (energy that cannot produce work, disorder, uncertainty, randomness, complexity, etc.). In this review, we focus on the so-called generalized entropies, which from a mathematical point of view are nonnegative functions defined on probability distributions that satisfy the first three Shannon–Khinchin axioms: continuity, maximality and expansibility. While these three axioms are expected to be satisfied by all macroscopic physical systems, the fourth axiom (separability or strong additivity) is in general violated by non-ergodic systems with long range forces, this having been the main reason for exploring weaker axiomatic settings. Currently, non-additive generalized entropies are being used also to study new phenomena in complex dynamics (multifractality), quantum systems (entanglement), soft sciences, and more. Besides going through the axiomatic framework, we review the characterization of generalized entropies via two scaling exponents introduced by Hanel and Thurner. In turn, the first of these exponents is related to the diffusion scaling exponent of diffusion processes, as we also discuss. Applications are addressed as the description of the main generalized entropies advances.

1. Introduction

The concept of entropy was introduced by Clausius [1] in thermodynamics to measure the amount of energy in a system that cannot produce work, and was given an atomic interpretation in the foundational works of statistical mechanics and gas dynamics by Boltzmann [2,3], Gibbs [4], and others. Since then, entropy has played a central role in many-particle physics, notably in the description of non-equilibrium processes through the second principle of thermodynamics and the principle of maximum entropy production [5,6]. Moreover, Shannon made entropy the cornerstone of his theory of information and communication [7]. Entropy and the associated entropic forces also play a leading role in recent innovative approaches to artificial intelligence and collective behavior [8,9]. Our formalism is information-theoretic (i.e., entropic forms are functions of probability distributions) owing to the mathematical properties that we discuss along the way, but it can be translated to a physical context through the concept of microstate.

The prototype of entropy that we are going to consider below is the Boltzmann–Gibbs–Shannon (BGS) entropy,

$$S_{BGS}(p_1,\ldots,p_W) = -k\sum_{i=1}^{W} p_i \ln p_i. \tag{1}$$

In its physical interpretation, $k \approx 1.38\times 10^{-23}$ J/K is the Boltzmann constant, $W$ is the number of microstates consistent with the macroscopic constraints of a given thermodynamical system, and $p_i$ is the probability (i.e., the asymptotic fraction of time) that the system is in the microstate i. In information theory, k is set equal to 1 for mathematical convenience, as we do hereafter, and $S_{BGS}$ measures the average information conveyed by the outcomes of a random variable with probability distribution $\{p_1,\ldots,p_W\}$. We use natural logarithms unless otherwise stated, although logarithms to base 2 are the natural choice in binary communications (the difference being the units, nats or bits, respectively). Remarkably enough, Shannon proved in Appendix B of his seminal paper [7] that Equation (1) follows necessarily from three properties or axioms (actually, four are needed; more on this below).

The BGS entropy was later generalized by other “entropy-like” quantities in dynamical systems (Kolmogorov–Sinai entropy [10], etc.), information theory (Rényi entropy [11], etc.), and statistical physics (Tsallis entropy [12], etc.), to mention the most familiar ones (see, e.g., [13] for an account of some entropy-like quantities and their applications, especially in time series analysis). As with $S_{BGS}$, the essence of these new entropic forms was distilled into a small number of properties that allow sorting them out in a more systematic way [13,14]. Currently, the uniqueness of $S_{BGS}$ is derived from the four Shannon–Khinchin axioms (Section 2). However, the fourth axiom, called the separability or strong additivity axiom (which implies additivity, i.e., $S(A_1\times A_2)=S(A_1)+S(A_2)$, where $A_1\times A_2$ stands for a system composed of any two probabilistically independent subsystems $A_1$ and $A_2$), is violated by physical systems with long-range interactions [15,16]. This poses the question of which mathematical properties are shared by the “generalized entropies” satisfying only the other three axioms. These are the primary candidates for extensive entropic forms, i.e., functions S such that $S(A_1\cup A_2)=S(A_1)+S(A_2)$, the shorthand $A_1\cup A_2$ standing for the physical system composed of the subsystems $A_1$ and $A_2$. Note that $A_1\cup A_2\neq A_1\times A_2$ in non-ergodic interacting systems just because the number of states in $A_1\cup A_2$ is different from the number of states in $A_1\times A_2$. A related though different question is how to weaken the separability axiom to identify the extensive generalized entropies; we come back briefly to this point in Section 2 when speaking of the composability property.

Along with $S_{BGS}$, typical examples of generalized entropies are the Tsallis entropy [12],

$$T_q(p_1,\ldots,p_W) = \frac{1}{1-q}\left(\sum_{i=1}^{W} p_i^q - 1\right) \tag{2}$$

($q\in\mathbb{R}$, $q\neq 1$, with the proviso that for $q<0$ terms with $p_i=0$ are omitted), and the Rényi entropy [11],

$$R_q(p_1,\ldots,p_W) = \frac{1}{1-q}\ln\left(\sum_{i=1}^{W} p_i^q\right) \tag{3}$$

($q\geq 0$, $q\neq 1$). The Tsallis and Rényi entropies are related to the BGS entropy through the limits

$$\lim_{q\to 1} T_q = \lim_{q\to 1} R_q = S_{BGS},$$

this being one of the reasons they are considered generalizations of the BGS entropy. Both $T_q$ and $R_q$ have found interesting applications [15,17]; in particular, the parametric weighting of the probabilities in their definitions endows data analysis with additional flexibility. Other generalized entropies that we consider in this paper are related to ongoing work on graphs [18]. Further instances of generalized entropies are also referred to below.
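The three entropies above and their limit relation can be checked numerically. The following is a minimal sketch, assuming the standard definitions of Equations (1)–(3) with $k=1$; the function names and the test distribution are illustrative choices, not part of the original text.

```python
import math

def bgs_entropy(p):
    """BGS entropy in nats (k = 1); terms with p_i = 0 contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0)

def tsallis_entropy(p, q):
    """Tsallis entropy T_q; falls back to the BGS entropy at q = 1."""
    if q == 1:
        return bgs_entropy(p)
    # Zero probabilities are skipped, which matches the proviso for q < 0.
    return (sum(x ** q for x in p if x > 0) - 1.0) / (1.0 - q)

def renyi_entropy(p, q):
    """Renyi entropy R_q; falls back to the BGS entropy at q = 1."""
    if q == 1:
        return bgs_entropy(p)
    return math.log(sum(x ** q for x in p if x > 0)) / (1.0 - q)

# Illustrative distribution (an assumption made for this example).
p = [0.5, 0.25, 0.125, 0.125]
for q in (0.9, 0.99, 0.999):
    # Both generalized entropies approach the BGS value as q -> 1.
    print(q, tsallis_entropy(p, q), renyi_entropy(p, q), bgs_entropy(p))
```

Skipping zero probabilities also means that, at $q=0$, the sums count only the outcomes with nonzero probability.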

Let us remark at this point that $S_{BGS}$, $T_q$, $R_q$ and other generalized entropies considered in this review can be viewed as special cases of the $(h,\phi)$-entropies introduced in [19] for the study of asymptotic probability distributions. In turn, $(h,\phi)$-entropies were generalized to quantum information theory in [20]. Quantum $(h,\phi)$-entropies, which include von Neumann’s entropy [21] as well as the quantum versions of Tsallis’ and Rényi’s entropies, have been applied, for example, to the detection of quantum entanglement (see [20] and references therein). In this review, we do not consider quantum entropies, which would require advanced mathematical concepts, but only entropies defined on classical, discrete and finite probability distributions. If necessary, the transition to continuous distributions is done by formally replacing probability mass functions by densities and sums by integrals. For other approaches to the concept of entropy in more general settings, see [22,23,24,25].

Generalized entropies can be characterized by two scaling exponents in the limit $W\to\infty$, which we call Hanel–Thurner exponents [16]. For the simplest generalized entropies, which include $T_q$ but not $R_q$ (see Section 2), these exponents allow establishing a relationship between the abstract concept of generalized entropy and the physical properties of the system they describe through their asymptotic scaling behavior in the thermodynamic limit. That is, the two exponents label equivalence classes of systems which are universal in that the corresponding entropies have the same thermodynamic limit. In this regard, it is interesting to mention that, for any pair of Hanel–Thurner exponents (at least within certain ranges), there is a generalized entropy with those exponents, i.e., systems with the sought asymptotic behavior. Furthermore, the first Hanel–Thurner exponent also allows establishing a second relation with physical properties, namely, with the diffusion scaling exponents of diffusion processes, under some additional assumptions.

The rest of this review is organized as follows. The concept of generalized entropy, along with some formal preliminaries and its basic properties, is discussed in Section 2. By way of illustration, we discuss in Section 3 the Tsallis and Rényi entropies, as well as more recent entropic forms. The choice of the former ones is justified by their uniqueness properties under quite natural axiomatic formulations. The Hanel–Thurner exponents are introduced in Section 4, where their computation is also exemplified. Their aforementioned relation to diffusion scaling exponents is explained in Section 5. The main messages are recapped in Section 6. There is no section devoted to the applications; rather, these are progressively addressed as the different generalized entropies are presented. The main text has been supplemented with three appendices at the end of the paper.

2. Generalized Entropies

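Let $\mathcal{P}$ be the set of probability mass distributions $\{p_1,\ldots,p_W\}$ for all $W\geq 2$. For any function $H:\mathcal{P}\to\mathbb{R}_+$ ($\mathbb{R}_+$ being the nonnegative real numbers), the Shannon–Khinchin axioms for an entropic form H are the following.

- SK1. Continuity. $H(p_1,\ldots,p_W)$ depends continuously on all variables for each W.

- SK2. Maximality. For all W,

$$H(p_1,\ldots,p_W)\leq H\left(\tfrac{1}{W},\ldots,\tfrac{1}{W}\right).$$

- SK3. Expansibility. For all W and $1\leq i\leq W$,

$$H(0,p_1,\ldots,p_W)=H(p_1,\ldots,p_i,0,p_{i+1},\ldots,p_W)=H(p_1,\ldots,p_W).$$

- SK4. Separability (or strong additivity). For all $W$, $U$,

$$H(p_{11},\ldots,p_{1U},p_{21},\ldots,p_{WU}) = H(p_{1\cdot},\ldots,p_{W\cdot}) + \sum_{i=1}^{W} p_{i\cdot}\, H\left(\frac{p_{i1}}{p_{i\cdot}},\ldots,\frac{p_{iU}}{p_{i\cdot}}\right),$$

where $p_{i\cdot}=\sum_{j=1}^{U}p_{ij}$.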

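Let $p_{ij}$ be the joint probability distribution of the random variables X and Y, with marginal distributions $p_{i\cdot}=\sum_j p_{ij}$ and $p_{\cdot j}=\sum_i p_{ij}$, respectively. Then, axiom SK4 can be written as

$$H(X,Y)=H(X)+H(Y\,|\,X),$$

where $H(Y\,|\,X)$ is the entropy of Y conditional on X. In particular, if X and Y are independent (i.e., $p_{ij}=p_{i\cdot}\,p_{\cdot j}$), then $H(Y\,|\,X)=H(Y)$ and

$$H(X,Y)=H(X)+H(Y). \tag{5}$$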

A function H such that Equation (5) holds (for independent random variables X and Y) is called additive. Physicists prefer writing for composed systems with microstate probabilities ; this condition holds approximately only for weakly interacting systems X and Y.

With regard to Equation (5), let us recall that, for two general random variables X and Y, the difference $S_{BGS}(X)+S_{BGS}(Y)-S_{BGS}(X,Y)\geq 0$ is the mutual information $I(X;Y)$ of X and Y. It holds $I(X;Y)=0$ if and only if X and Y are independent [26].
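The strong additivity of the BGS entropy and the behavior of the mutual information can be verified on small joint distributions. The sketch below assumes the usual chain rule $H(X,Y)=H(X)+H(Y\,|\,X)$ for the BGS entropy; the helper names and the two example joint distributions are hypothetical and chosen only for illustration.

```python
import math

def shannon(p):
    """BGS entropy in nats of a list of probabilities."""
    return -sum(x * math.log(x) for x in p if x > 0)

def joint_entropies(joint):
    """joint[i][j] = P(X = i, Y = j). Returns H(X), H(Y), H(X,Y), H(Y|X)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    h_xy = shannon([x for row in joint for x in row])
    # Conditional entropy: p_i-weighted average of the entropies of the
    # conditional distributions p_ij / p_i (the second term of axiom SK4).
    h_y_given_x = sum(pi * shannon([x / pi for x in row])
                      for pi, row in zip(px, joint) if pi > 0)
    return shannon(px), shannon(py), h_xy, h_y_given_x

def mutual_information(joint):
    h_x, h_y, h_xy, _ = joint_entropies(joint)
    return h_x + h_y - h_xy

# Example joint distributions (assumptions made for this illustration).
dependent = [[0.4, 0.1], [0.1, 0.4]]
independent = [[0.3 * 0.6, 0.3 * 0.4], [0.7 * 0.6, 0.7 * 0.4]]

h_x, h_y, h_xy, h_y_given_x = joint_entropies(dependent)
print("chain rule gap:", h_xy - (h_x + h_y_given_x))
print("I(X;Y), dependent case:", mutual_information(dependent))
print("I(X;Y), independent case:", mutual_information(independent))
```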

More generally, a function H such that

() is called -additive. With the same notation as above, we can write this property as

where, again, X and Y are independent random variables. In a statistical mechanical context, X and Y may stand also for two probabilistically independent (or weakly interacting) physical systems. If , we recover additivity (Equation (5)).

In turn, additivity and the generalized additivity just defined are special cases of composability [15,27]:

$$H(X,Y)=\Phi\big(H(X),H(Y)\big),$$

with the same caveats for X and Y. Here, $\Phi$ is a symmetric function of two variables. Composability was proposed in [15] to replace axiom SK4. Interestingly, it has been proved in [27] that, under some technical assumptions, the only composable generalized entropy of the form in Equation (10) is $T_q$, up to a multiplicative constant.

As mentioned in Section 1, a function satisfying axioms SK1–SK4 is necessarily of the form $S_{BGS}$ (Equation (1)) for every W, where k is a positive constant ([28], Theorem 1). The same conclusion can be derived using other equivalent axioms [14,29]. For instance, Shannon used continuity, the property that $H(1/n,\ldots,1/n)$ increases with n, and a property called grouping [29] or decomposability [30], which he defined graphically in Figure 6 of [7]:

(). This property allows reducing the computation of to the computation of the entropy of dichotomic random variables. According to ([15], Section 2.1.2.7), Shannon missed in his uniqueness theorem to formulate the condition in Equation (5), X and Y being independent random variables.

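Nonnegative functions defined on $\mathcal{P}$ that satisfy axioms SK1–SK3 are called generalized entropies [16]. In the simplest situation, a generalized entropy has the sum property [14], i.e., the algebraic form

$$F_g(p_1,\ldots,p_W)=\sum_{i=1}^{W} g(p_i), \tag{10}$$

with $g$ a real function defined on $[0,1]$.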

The following propositions are immediate.

- (i) Symmetry: $F_g$ is invariant under permutation of $p_1,\ldots,p_W$.

- (ii) $F_g$ satisfies axiom SK1 if and only if g is continuous.

- (iii) If $F_g$ satisfies axiom SK2, then

$$\sum_{i=1}^{W} g(p_i)\leq W\,g\left(\tfrac{1}{W}\right)$$

for all $W$ and $p_1,\ldots,p_W\geq 0$ with $p_1+\cdots+p_W=1$.

- (iv) If g is concave (i.e., ∩-convex), then $F_g$ satisfies axiom SK2.

- (v) $F_g$ satisfies axiom SK3 if and only if $g(0)=0$.

Note that Proposition (iv) follows from the symmetry and concavity of $F_g$ (since the unique maximum of $F_g$ must occur at equal probabilities).

We conclude from Propositions (ii), (iv) and (v) that, for $F_g$ to be a generalized entropy, the following three conditions suffice:

- (C1) g is continuous.

- (C2) g is concave.

- (C3) $g(0)=0$.

As in [16], we say that a macroscopic statistical system is admissible if it is described by a generalized entropy $F_g$ of the form in Equation (10) such that g verifies Conditions (C1)–(C3). By extension, we say also that the generalized entropy $F_g$ is admissible. Admissible systems and generalized entropies are the central subject of this review. Clearly, $S_{BGS}$ is admissible because it corresponds to

$$g(x)=-x\ln x \tag{11}$$

(with $g(0)=0$ by continuity). On the other hand, $T_q$ corresponds to

$$g(x)=\frac{x^q-x}{1-q}. \tag{12}$$

For $T_q$ to be admissible, Condition (C1) requires $q\geq 0$ and Condition (C3) requires $q>0$.
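Conditions (C2) and (C3) lend themselves to a quick numerical sanity check. The sketch below is only a heuristic, not a proof: it tests $g(0)=0$ and a midpoint concavity inequality on a finite grid, assuming $g(x)=-x\ln x$ for the BGS entropy and $g(x)=(x^q-x)/(1-q)$ for the Tsallis entropy. All function names are hypothetical.

```python
import math

def g_bgs(x):
    """g(x) = -x ln x, extended by continuity with g(0) = 0."""
    return 0.0 if x == 0 else -x * math.log(x)

def g_tsallis(q):
    """Return g(x) = (x**q - x) / (1 - q) for a fixed q != 1 with q >= 0."""
    def g(x):
        return (x ** q - x) / (1.0 - q)
    return g

def looks_concave(g, n=200, tol=1e-12):
    """Midpoint test g((a+b)/2) >= (g(a)+g(b))/2 on a grid of [0, 1]."""
    grid = [i / n for i in range(n + 1)]
    return all(g((a + b) / 2) >= (g(a) + g(b)) / 2 - tol
               for a in grid for b in grid)

def looks_admissible(g):
    """Grid-based check of (C2) and (C3); continuity (C1) is not tested."""
    return abs(g(0.0)) < 1e-12 and looks_concave(g)

print(looks_admissible(g_bgs))             # BGS entropy
print(looks_admissible(g_tsallis(0.5)))    # Tsallis, 0 < q < 1
print(looks_admissible(g_tsallis(2.0)))    # Tsallis, q > 1
print(looks_admissible(g_tsallis(0.0)))    # q = 0: g(0) = 1, so (C3) fails
print(looks_admissible(lambda x: x * x))   # convex g: (C2) fails
```

Note that Python evaluates `0.0 ** 0.0` as `1.0`, which is why the case $q=0$ violates $g(0)=0$ in this sketch.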

An example of a function with the sum property that does not qualify for admissible generalized entropy is

Indeed, is not ∩-convex but ∪-convex and . This probability functional was used in [31] to classify sleep stages.

Other generalized entropies that are considered below have the form

$$F_{G,g}(p_1,\ldots,p_W)=G\left(\sum_{i=1}^{W} g(p_i)\right), \tag{14}$$

where G is a continuous monotonic function, and g is continuous with $g(0)=0$. By definition, $F_{G,g}$ is also symmetric, and Proposition (iii) holds with the obvious changes. However, the concavity of g is not a sufficient condition any more for $F_{G,g}$ to be a generalized entropy. Such is the case of the Rényi entropy (Equation (3)); here

$$G(u)=\frac{\ln u}{1-q},\qquad g(x)=x^q, \tag{15}$$

but $g$ (and, hence, $R_q$) is not ∩-convex for $q>1$. Furthermore, note that axiom SK3 requires $q>0$ for $R_q$ to be a generalized entropy.

Since Equation (10) is a special case of Equation (14) (set G to be the identity map $G(u)=u$), we can refer to both cases just by using the notation $F_{G,g}$, as we do hereafter.

We say that two probability distributions and , , are close if

where ; other norms, such as the two-norm and the max-norm, will do as well since they are all equivalent in the metric sense. A function is said to be Lesche-stable if for all W and there exists such that

where . It follows that

Lesche stability is called experimental robustness in [15] because it guarantees that similar experiments performed on similar physical systems provide similar results for the function F. According to [16], all admissible systems are Lesche stable.

3. Examples of Generalized Entropies

By way of illustration, we focus in this section on two classical generalized entropies as well as on some newer ones. The classical examples are the Tsallis entropy and the Rényi entropy, because they have also been studied extensively in the literature from an axiomatic point of view. As it turns out, they are unique under some natural assumptions, such as additivity, $q$-additivity or composability (see below for details). The newer entropies are related to potential applications of the concept of entropy to graph theory [18]. Other examples of generalized entropies are listed in Appendix A for further reference.

3.1. Tsallis Entropy

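A simple way to introduce Tsallis’ entropy as a generalization of the BGS entropy is the following [15]. Given $q\in\mathbb{R}$, define the q-logarithm of a real number $x>0$ as

$$\ln_q x=\begin{cases}\dfrac{x^{1-q}-1}{1-q} & \text{if } q\neq 1,\\[1ex] \ln x & \text{if } q=1.\end{cases} \tag{16}$$

Note that $\ln_1 x$ is defined by continuity since $\lim_{q\to 1}\ln_q x=\ln x$. If the logarithm in the definition of $S_{BGS}$, Equation (1), is replaced by $\ln_q$, then we obtain the Tsallis entropy:

$$T_q(p_1,\ldots,p_W)=\sum_{i=1}^{W}p_i\ln_q\frac{1}{p_i}=-\sum_{i=1}^{W}p_i^q\ln_q p_i. \tag{17}$$

As noted before, $q>0$ for $T_q$ to be an admissible generalized entropy.

Alternatively, the definition

$$S_{BGS}(p_1,\ldots,p_W)=-\left.\frac{d}{dx}\sum_{i=1}^{W}p_i^x\right|_{x=1}$$

can also be generalized to provide the Tsallis entropy via the q-derivative,

$$T_q(p_1,\ldots,p_W)=-\left.D_q\sum_{i=1}^{W}p_i^x\right|_{x=1},$$

where

$$D_q f(x)=\frac{f(qx)-f(x)}{qx-x}.$$

Set $qx=x+h$, i.e., $h=(q-1)x$, and let $h\to 0$ to check that $D_q f(x)\to df(x)/dx$.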
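The two routes to the Tsallis entropy described above can be compared numerically. The following sketch assumes the q-logarithm $\ln_q x=(x^{1-q}-1)/(1-q)$ and the q-derivative $D_qf(x)=(f(qx)-f(x))/(qx-x)$; these explicit forms, like the function names, are assumptions made for the example, and the probabilities are taken strictly positive.

```python
import math

def ln_q(x, q):
    """q-logarithm of x > 0; reduces to the natural logarithm at q = 1."""
    if q == 1:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)

def tsallis_direct(p, q):
    """T_q computed from the closed form (sum_i p_i**q - 1) / (1 - q)."""
    return (sum(x ** q for x in p) - 1.0) / (1.0 - q)

def tsallis_via_ln_q(p, q):
    """T_q computed as -sum_i p_i**q * ln_q(p_i)."""
    return -sum(x ** q * ln_q(x, q) for x in p)

def q_derivative(f, x, q):
    """Finite-difference quotient (f(qx) - f(x)) / (qx - x), for q != 1."""
    return (f(q * x) - f(x)) / (q * x - x)

def tsallis_via_q_derivative(p, q):
    """T_q computed as minus the q-derivative of s -> sum_i p_i**s at s = 1."""
    return -q_derivative(lambda s: sum(x ** s for x in p), 1.0, q)

p = [0.5, 0.3, 0.2]  # illustrative distribution
for q in (0.5, 2.0):
    print(q, tsallis_direct(p, q), tsallis_via_ln_q(p, q),
          tsallis_via_q_derivative(p, q))
```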

Although Tsallis proposed his entropy (Equation (17)) in 1988 to go beyond the standard statistical mechanics [12], basically the same formula had already been proposed in 1967 by Havrda and Charvát (with a different multiplying factor) in the realm of cybernetics and control theory [32].

Some basic properties of follow.

- (T1)

- because (or ).

- (T2)

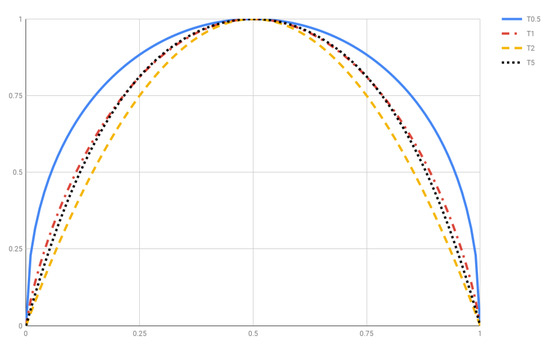

- is (strictly) ∩-convex for . Figure 1 plots for , 1, 2 and 5. Let us mention in passing that is ∪-convex for .

Figure 1. Tsallis entropy for and 5.

Figure 1. Tsallis entropy for and 5. - (T3)

- is Lesche-stable for all [33,34]. Actually, we stated at the end of Section 2 that all admissible systems are Lesche stable.

- (T4) $T_q$ is q-additive, i.e., for independent random variables X and Y, $T_q(X,Y)=T_q(X)+T_q(Y)+(1-q)\,T_q(X)\,T_q(Y)$.

- (T5) Similar to what happens with the BGS entropy, the Tsallis entropy can be uniquely determined (except for a multiplicative positive constant) by a small number of axioms. Thus, Abe [35] characterized the Tsallis entropy by: (i) continuity; (ii) the increasing monotonicity of $T_q(1/W,\ldots,1/W)$ with respect to W; (iii) expansibility; and (iv) a property involving conditional entropies. Dos Santos [36], on the other hand, used the previous Axioms (i) and (ii), q-additivity, and a generalization of the grouping axiom (Equation (9)). Suyari [37] derived $T_q$ from the first three Shannon–Khinchin axioms and a generalization of the fourth one. Perhaps the most economical characterization of $T_q$ was given by Furuichi [38]; it consists of continuity, symmetry under the permutation of $p_1,\ldots,p_W$, and a property called q-recursivity. As mentioned in Section 2, the Tsallis entropy was recently shown [27] to be the only composable generalized entropy of the form in Equation (10) under some technical assumptions. Further axiomatic characterizations of the Tsallis entropy can be found in [39].
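The q-additivity mentioned among the axiomatic characterizations can be illustrated on a product distribution. The sketch below assumes the commonly used form $T_q(X,Y)=T_q(X)+T_q(Y)+(1-q)\,T_q(X)\,T_q(Y)$ for independent X and Y; the marginal distributions are hypothetical examples.

```python
def tsallis(p, q):
    """Tsallis entropy T_q of a strictly positive distribution, q != 1."""
    return (sum(x ** q for x in p) - 1.0) / (1.0 - q)

def product_distribution(px, py):
    """Joint distribution p_ij = p_i * p_j of two independent variables."""
    return [a * b for a in px for b in py]

def q_additivity_gap(px, py, q):
    """Difference between T_q(X,Y) and the q-additive combination."""
    tx, ty = tsallis(px, q), tsallis(py, q)
    joint = tsallis(product_distribution(px, py), q)
    return joint - (tx + ty + (1.0 - q) * tx * ty)

px = [0.7, 0.2, 0.1]  # illustrative marginal of X
py = [0.6, 0.4]       # illustrative marginal of Y
for q in (0.5, 1.5, 3.0):
    # The gap vanishes up to rounding, while plain additivity fails for q != 1.
    print(q, q_additivity_gap(px, py, q),
          tsallis(product_distribution(px, py), q) - tsallis(px, q) - tsallis(py, q))
```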

An observable of a thermodynamical (i.e., many-particle) system, say its energy or entropy, is said to be extensive if (among other characterizations), for a large number N of particles, that observable is (asymptotically) proportional to N. For example, for a system whose particles are weakly interacting (think of a dilute gas), the additive $S_{BGS}$ is extensive, whereas the non-additive $T_q$ ($q\neq 1$) is non-extensive. The same happens with ergodic systems [40]. However, according to [15], for a non-ergodic system with strong correlations, $S_{BGS}$ can be non-extensive while $T_q$ can be extensive for a particular value of q; such is the case of a microcanonical spin system on a network with growing constant connectancy [40]. This is why $T_q$ represents a physically relevant generalization of the traditional $S_{BGS}$. Axioms SK1–SK3 are expected to hold true also in strongly interacting systems.

Further applications of the Tsallis entropy include astrophysics [41], fractal random walks [42], anomalous diffusion [43,44], time series analysis [45], classification [46,47], and artificial neural networks [48].

3.2. Rényi Entropy

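A simple way to introduce Rényi’s entropy as a generalization of $S_{BGS}$ is the following [17]. By definition, the BGS entropy of the probability distribution $\{p_1,\ldots,p_W\}$ (or of a random variable X with that probability distribution) is the linear average of the information function

$$I(p_i)=\ln\frac{1}{p_i}$$

or, equivalently, the expected value of the random variable $I(X)=-\ln p(X)$:

$$S_{BGS}(X)=\mathbb{E}[I(X)]=\sum_{i=1}^{W}p_i\ln\frac{1}{p_i}.$$

In the general theory of expected values, for any invertible function $\phi$ and realizations $x_1,\ldots,x_W$ of X in the definition domain of $\phi$, an expected value can be defined as

$$\mathbb{E}_\phi[X]=\phi^{-1}\left(\sum_{i=1}^{W}p_i\,\phi(x_i)\right).$$

Applying this definition to $I(X)$, we obtain

$$\mathbb{E}_\phi[I(X)]=\phi^{-1}\left(\sum_{i=1}^{W}p_i\,\phi\left(\ln\frac{1}{p_i}\right)\right).$$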

If this generalized average has to be additive for independent events, i.e., it has to satisfy Equation (6) with , then

must hold, where , are positive constants, and . The first case leads to , Equation (1), after choosing . The second case leads to the Rényi entropy (actually, a one-parameter family of entropies) , Equation (3), after choosing as well.
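The construction of the Rényi entropy as a generalized average of the information $-\ln p_i$ can be reproduced in a few lines. This is a sketch under stated assumptions: the exponential choice $\phi(x)=e^{(1-q)x}$ (with unit multiplicative constant) is one standard form of the second case, and the linear choice $\phi(x)=x$ corresponds to the first case; the function names are illustrative.

```python
import math

def phi_average(values, probs, phi, phi_inv):
    """Generalized mean phi^{-1}(sum_i p_i * phi(x_i))."""
    return phi_inv(sum(p * phi(v) for v, p in zip(values, probs)))

def information(probs):
    """Information content -ln p_i of each outcome (probabilities > 0)."""
    return [-math.log(p) for p in probs]

def entropy_linear_phi(probs):
    """Linear phi(x) = x: the ordinary expectation, i.e., the BGS entropy."""
    return phi_average(information(probs), probs, lambda x: x, lambda y: y)

def entropy_exponential_phi(probs, q):
    """Exponential phi(x) = exp((1 - q) x), assumed here with constant 1."""
    phi = lambda x: math.exp((1.0 - q) * x)
    phi_inv = lambda y: math.log(y) / (1.0 - q)
    return phi_average(information(probs), probs, phi, phi_inv)

def renyi_direct(probs, q):
    """Renyi entropy from its closed form, for comparison."""
    return math.log(sum(p ** q for p in probs)) / (1.0 - q)

probs = [0.5, 0.3, 0.2]  # illustrative distribution
for q in (0.5, 2.0):
    print(q, entropy_exponential_phi(probs, q), renyi_direct(probs, q))
print(entropy_linear_phi(probs))
```

Any positive multiplicative constant in $\phi$ cancels between $\phi$ and $\phi^{-1}$, so fixing it to 1 does not change the result.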

Next, we summarize some important properties of the Rényi entropy.

- (R1)

- is additive by construction.

- (R2)

- . Indeed, use L’Hôpital’s Rule to derive

- (R3)

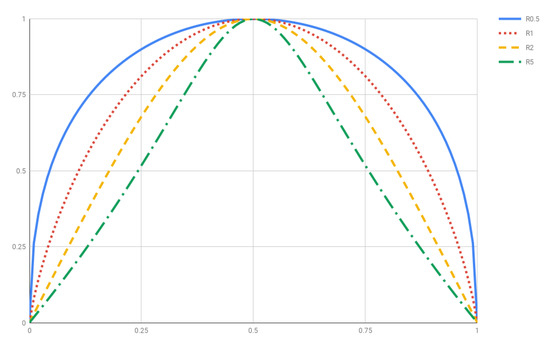

- is ∩-convex for and it is neither ∩-convex nor ∪-convex for . Figure 2 plots for , 1, 2 and 5.

Figure 2. Rényi entropy for and 5.

Figure 2. Rényi entropy for and 5. - (R4)

- is Lesche-unstable for all , [49].

- (R5)

- The entropies are monotonically decreasing with respect to the parameter q for any distribution of probabilities, i.e.,This property follows from the formulawhere , and is the Kullback–Leibler divergence of the probability distributions and . vanishes only in the event that both probability distributions coincide, otherwise is positive [26].

- (R6)

- A straightforward relation between Rényi’s and Tsallis’ entropies is the following [50]:However, the axiomatic characterizations of the Rényi entropy are not as simple as those for the Tsallis entropy. See [27,51,52] for some contributions in this regard.

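For some values of q, $R_q$ has particular names. Thus, $R_0=\ln W$ is called Hartley or max-entropy, which coincides numerically with $S_{BGS}$ for a uniform probability distribution. We saw in (R2) that $R_q$ converges to the BGS entropy in the limit $q\to 1$. $R_2=-\ln\sum_{i}p_i^2$ is called collision entropy. In the limit $q\to\infty$, $R_q$ converges to the min-entropy

$$R_\infty(p_1,\ldots,p_W)=\min_{1\leq i\leq W}\left(-\ln p_i\right)=-\ln\max_{1\leq i\leq W}p_i.$$

The name of $R_\infty$ is due to property (R5).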
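The named special cases and the monotonicity in q can be checked together. The following sketch assumes the standard closed forms (Hartley entropy $\ln W$ at $q=0$, collision entropy $-\ln\sum_i p_i^2$ at $q=2$, min-entropy $-\ln\max_i p_i$ at $q=\infty$) and the relation $R_q=\ln(1+(1-q)T_q)/(1-q)$; the distribution is an illustrative choice.

```python
import math

def renyi(p, q):
    """Renyi entropy R_q in nats, including the limits q = 1 and q = inf."""
    if q == 1:
        return -sum(x * math.log(x) for x in p if x > 0)
    if q == math.inf:
        return -math.log(max(p))
    return math.log(sum(x ** q for x in p if x > 0)) / (1.0 - q)

def tsallis(p, q):
    """Tsallis entropy T_q for q != 1."""
    return (sum(x ** q for x in p if x > 0) - 1.0) / (1.0 - q)

def renyi_from_tsallis(p, q):
    """R_q recovered from T_q via ln(1 + (1 - q) T_q) / (1 - q)."""
    return math.log(1.0 + (1.0 - q) * tsallis(p, q)) / (1.0 - q)

p = [0.5, 0.3, 0.15, 0.05]  # illustrative distribution
qs = [0, 0.5, 1, 2, 5, 50, math.inf]
values = [renyi(p, q) for q in qs]
for q, v in zip(qs, values):
    print(q, v)  # the values do not increase as q grows
```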

Rényi entropy has found interesting applications in random search [53], information theory (especially in source coding [54,55]), cryptography [56], time series analysis [57], and classification [46,58], as well as in statistical signal processing and machine learning [17].

3.3. Graph Related Entropies

As part of ongoing work on graph entropy [18], the following generalized entropies are defined:

and

Note that , while . Other oddities of the above entropies include the terms in their definitions, as well as the presence of products instead of sums in the definition of .

First, is of the type in Equation (10) with

By definition, is continuous (even smooth), concave on the interval , and . Therefore (see Conditions (C1)–(C3) in Section 2), H1 satisfies the axioms SK1–SK3, hence it is a generalized entropy.

As for , this probability functional is of the type in Equation (14) with

and . To prove that is a generalized entropy, note that

satisfies axioms SK1–SK3 for the same reasons as does. Therefore, the same happens with on account of the exponential function being continuous (SK1), increasingly monotonic (SK2), and univalued (SK3).

Finally, is of the type in Equation (10) with

Since , it is a generalized entropy because, as shown above, satisfies axioms SK1–SK3.

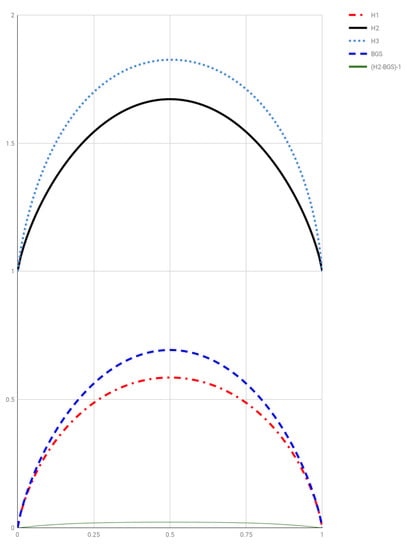

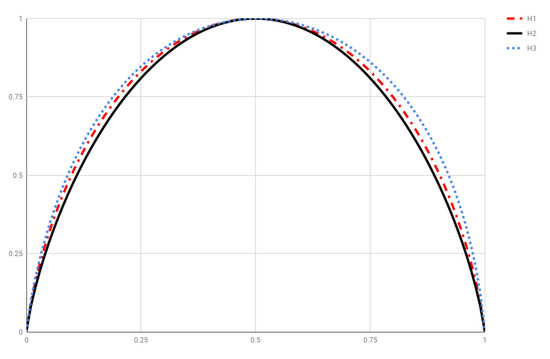

Figure 3 depicts , , , along with and for comparison. As a curiosity, let us point out that the scaled versions

(), see Figure 4, approximate measured in bits very well. In particular, the relative error in the approximation of by is less than , so their graphs overlap when plotted.

Figure 3.

Entropies , , along with and for comparison.

Figure 4.

Scaled entropies , see Equation (24).

4. Hanel–Thurner Exponents

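All generalized entropies group in classes labeled by two exponents $(c,d)$ introduced by Hanel and Thurner [16], which are determined by the limits

$$\lim_{W\to\infty}\frac{F_{G,g}(p_1,\ldots,p_{\lambda W})}{F_{G,g}(p_1,\ldots,p_W)}=\lambda^{1-c} \tag{25}$$

(W being as before the cardinality of the probability distribution or the total number of microstates in the system, $\lambda>1$) and

$$\lim_{W\to\infty}\frac{F_{G,g}(p_1,\ldots,p_{W^{1+a}})}{F_{G,g}(p_1,\ldots,p_W)\,W^{a(1-c)}}=(1+a)^d \tag{26}$$

($a>0$). Note that the limit in Equation (26) does not depend actually on c. The limits in Equations (25) and (26) can be computed via the asymptotic equipartition property [26]. Thus,

$$\frac{F_{G,g}(p_1,\ldots,p_{\lambda W})}{F_{G,g}(p_1,\ldots,p_W)}\simeq\frac{G\big(\lambda W\,g(1/(\lambda W))\big)}{G\big(W\,g(1/W)\big)}$$

and

$$\frac{F_{G,g}(p_1,\ldots,p_{W^{1+a}})}{F_{G,g}(p_1,\ldots,p_W)\,W^{a(1-c)}}\simeq\frac{G\big(W^{1+a}\,g(1/W^{1+a})\big)}{W^{a(1-c)}\,G\big(W\,g(1/W)\big)}$$

asymptotically with ever larger W (thermodynamic limit). Set now $x=1/W$ to derive

$$\lim_{x\to 0^+}\frac{G\big(\lambda\,g(x/\lambda)/x\big)}{G\big(g(x)/x\big)}=\lambda^{1-c} \tag{27}$$

and

$$\lim_{x\to 0^+}\frac{G\big(g(x^{1+a})/x^{1+a}\big)}{x^{-a(1-c)}\,G\big(g(x)/x\big)}=(1+a)^d. \tag{28}$$

Clearly, the scaling exponents c, d of a generalized entropy $F_{G,g}$ depend on the behavior of g in an infinitesimal neighborhood of 0 (i.e., $g(x)$ with $x\to 0^+$), as well as on the properties of G if $G\neq\mathrm{id}$. We call $(c,d)$ the Hanel–Thurner (HT) exponents of the generalized entropy $F_{G,g}$.

When $G=\mathrm{id}$, Equations (27) and (28) abridge to

$$\lim_{x\to 0^+}\frac{g(zx)}{g(x)}=z^c \tag{29}$$

(after replacing $1/\lambda$ by z), and

$$\lim_{x\to 0^+}\frac{g(x^{1+a})}{x^{ac}\,g(x)}=(1+a)^d, \tag{30}$$

respectively. In this case, $0<c\leq 1$, while d can be any real number. If $c=1$, the concavity of g implies $d\geq 0$ [16]. The physical properties of admissible systems are uniquely characterized by their HT exponents, i.e., by their asymptotic properties in the limit $W\to\infty$ [16]. In this sense, we can also speak of the universality class $(c,d)$.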

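By way of illustration, we are going to derive the HT exponents of $S_{BGS}$, $T_q$ and $R_q$.

- (E1) For the BGS entropy, $g(x)=-x\ln x$ (see Equation (11)), so

$$\lim_{x\to 0^+}\frac{g(zx)}{g(x)}=\lim_{x\to 0^+}\frac{z(\ln z+\ln x)}{\ln x}=z,\qquad \lim_{x\to 0^+}\frac{g(x^{1+a})}{x^{a}\,g(x)}=1+a.$$

Hence, $(c,d)=(1,1)$.

- (E2) For the Tsallis entropy, see Equation (12),

$$\lim_{x\to 0^+}\frac{g(zx)}{g(x)}=\lim_{x\to 0^+}\frac{(zx)^q-zx}{x^q-x}=\begin{cases}z^q & \text{if } 0<q<1,\\ z & \text{if } q>1.\end{cases}$$

It follows readily that $(c,d)=(q,0)$ if $0<q<1$, and $(c,d)=(1,0)$ if $q>1$. Hence, although $\lim_{q\to 1}T_q=S_{BGS}$, there is no parallel convergence concerning the HT exponents.

- (E3) For the Rényi entropy, $G(u)=\ln u/(1-q)$ and $g(x)=x^q$ (see Equation (15)), so

$$\frac{G\big(\lambda\,g(x/\lambda)/x\big)}{G\big(g(x)/x\big)}=\frac{\ln\left(\lambda^{1-q}x^{q-1}\right)}{\ln\left(x^{q-1}\right)}=1-\frac{\ln\lambda}{\ln x}\to 1$$

as $x\to 0^+$ (both for $0<q<1$ and $q>1$). Therefore, $c=1$. Furthermore,

$$\frac{G\big(g(x^{1+a})/x^{1+a}\big)}{x^{-a(1-c)}\,G\big(g(x)/x\big)}=\frac{(1+a)(q-1)\ln x}{(q-1)\ln x}=1+a$$

for all $x\in(0,1)$, so that $d=1$. In sum, $(c,d)=(1,1)$ for all q.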
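For entropies with the sum property, the HT exponents can be estimated numerically by evaluating the defining ratios at a very small argument. The sketch below assumes the limits $g(zx)/g(x)\to z^c$ and $g(x^{1+a})/(x^{ac}g(x))\to(1+a)^d$ as $x\to 0^+$, taken here in the form of the Hanel–Thurner characterization; the function names, the evaluation point $x=10^{-100}$ and the parameters $z$, $a$ are illustrative assumptions. Convergence for the BGS entropy is only logarithmic in $x$, so the estimate of c is approximate, and the exact limiting c is passed to the estimate of d.

```python
import math

def g_bgs(x):
    """g(x) = -x ln x for x > 0."""
    return -x * math.log(x)

def g_tsallis(q):
    """g(x) = (x**q - x) / (1 - q) for a fixed q > 0, q != 1."""
    return lambda x: (x ** q - x) / (1.0 - q)

def estimate_c(g, x=1e-100, z=0.5):
    """Finite-x estimate of c from g(z*x) / g(x) ~ z**c."""
    return math.log(g(z * x) / g(x)) / math.log(z)

def estimate_d(g, c, x=1e-100, a=0.5):
    """Finite-x estimate of d from g(x**(1+a)) / (x**(a*c) * g(x)) ~ (1+a)**d.

    The limiting value of c must be supplied, since a small error in c is
    strongly amplified by the factor x**(a*c).
    """
    ratio = g(x ** (1.0 + a)) / (x ** (a * c) * g(x))
    return math.log(ratio) / math.log(1.0 + a)

print("BGS:", estimate_c(g_bgs), estimate_d(g_bgs, c=1.0))
print("Tsallis q=0.5:", estimate_c(g_tsallis(0.5)), estimate_d(g_tsallis(0.5), c=0.5))
print("Tsallis q=2:", estimate_c(g_tsallis(2.0)), estimate_d(g_tsallis(2.0), c=1.0))
```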

As for the generalized entropies $H_1$, $H_2$ and $H_3$ considered in Section 3.3, their HT exponents are derived in Appendix B. In particular, $H_1$ and $H_3$ belong to the same universality class as $S_{BGS}$, namely $(c,d)=(1,1)$, while the HT exponents of $H_2$ and $R_q$ (both of the type in Equation (14)) are different. Moreover, the interested reader will find in Table 1 of [16] the HT exponents of the generalized entropies listed in Appendix A.

An interesting issue that arises at this point is the inverse question: Given and , is there an admissible system such that its HT exponents are precisely ? The answer is yes, at least under some restrictions on the values of c and d. Following [16], we show in Appendix C that, if

then the “generalized -entropy”

has HT exponents . Here, and is the incomplete Gamma function (Section 6.5 of [59]), that is,

Several application cases where generalized -entropies are relevant have been discussed by Hanel and Thurner in [40] (super-diffusion, spin systems, binary processes, and self-organized critical systems) and [60] (aging random walks, i.e., random walks whose transition rates between states are path- and time-dependent).

5. Asymptotic Relation between the HT Exponent c and the Diffusion Scaling Exponent

In contrast to “non-interacting” systems, where both the additivity and extensivity of the BGS entropy hold, in the case of general interacting statistical systems these properties can no longer be simultaneously satisfied, requiring a more general concept of entropy [16,40]. Following [16] (Section 4), a possible generalization of for admissible systems is defined via the two asymptotic scaling relations in Equations (29) and (30), i.e., the HT exponents c and d, respectively. These asymptotic exponents can be interpreted as a measure of deviation from the “non-interacting” case regarding the stationary behavior.

5.1. The Non-Stationary Regime

In this section, we describe a relation between the exponent c and a similar macroscopic measure that characterizes the system in the non-stationary regime, thus providing a meaningful interpretation of the exponent. The non-stationary behavior of a system can possibly be described by the Fokker–Planck (FP) equation governing the time evolution of a probability density function . In this continuous limit, the generalized entropy is assumed to be written as , where g is asymptotically characterized by Equation (29) and is a time-independent scalar function of the space coordinate x (for example, a potential) [61,62].

Going beyond the scope of the simplest FP equation, we consider systems for which the correlation among their (sub-)units can be taken into account by replacing the diffusive term with an effective term , where is a pre-defined functional of the probability density. can be either derived directly from the microscopical transition rules or it may be defined based on macroscopic assumptions. The resulting FP equation can be written as

where are constants and is a time-independent external potential.

For simplicity, hereafter we exclusively focus on one dimensional FP equations. In the special case of and no external forces, Equation (34) reduces to the well-known linear diffusion equation

The above equation is invariant under the space-time scaling transformation

with [63,64]. This scaling property opens up the possibility of a phenomenological and macroscopic characterization of anomalous diffusion processes [15,44] as well, which correspond to more complicated non-stationary processes described by FP equations in the form of Equation (34) with a non-trivial value of . With the help of the transformation in Equation (36), we can also classify correlated statistical systems according to the rate of the spread of their probability density functions over time in the asymptotic limit and, thus, quantitatively describe their behavior in the non-stationary regime.
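The scaling behavior of the linear diffusion equation can be illustrated with a simple numerical experiment. The sketch below is an assumption-laden illustration rather than the procedure of the cited works: it integrates $\partial p/\partial t=D\,\partial^2 p/\partial x^2$ with an explicit finite-difference scheme, starting from a point mass, and estimates the exponent $\gamma$ in the standard relation "width $\propto t^{\gamma}$", which for normal diffusion is $\gamma=1/2$. The grid size, the ratio `r = D*dt/dx**2` and the function names are hypothetical choices.

```python
import math

def width_after(steps, r=0.25, half_width=300):
    """Standard deviation (in grid units) of p after a number of time steps.

    Uses the explicit scheme p_i <- p_i + r * (p_{i+1} - 2 p_i + p_{i-1}),
    which is stable for r <= 0.5; the grid is wide enough that no mass
    reaches the boundary for steps <= half_width.
    """
    size = 2 * half_width + 1
    p = [0.0] * size
    p[half_width] = 1.0  # initial condition: all mass at the origin
    for _ in range(steps):
        padded = [0.0] + p + [0.0]
        p = [padded[i + 1] + r * (padded[i + 2] - 2.0 * padded[i + 1] + padded[i])
             for i in range(size)]
    mean = sum((i - half_width) * w for i, w in enumerate(p))
    var = sum((i - half_width - mean) ** 2 * w for i, w in enumerate(p))
    return math.sqrt(var)

def scaling_exponent(t1=64, t2=256):
    """Slope of log(width) versus log(time) between two times."""
    return math.log(width_after(t2) / width_after(t1)) / math.log(t2 / t1)

print(scaling_exponent())
```

For this scheme the variance grows by exactly $2r$ per step, so the estimated slope reproduces the normal-diffusion exponent up to rounding; anomalous diffusion would show up as a different slope.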

5.2. Relation between the Stationary and Non-Stationary Regime

To reasonably and consistently relate the generalized entropies to the formalism of FP equations—corresponding to the stationary and non-stationary regime, respectively—the functional has to be chosen such that the stationary solution of the general FP equation becomes equivalent to the Maximum Entropy (MaxEnt) probability distribution calculated with the generalized entropies. These MaxEnt distributions can be obtained analogously to the results by Hanel and Thurner in [16,40], where they used standard constrained optimization to find the most general form of MaxEnt distributions, which turned out to be with

Here, are constants depending only on the parameters and is the kth branch of the Lambert-W function (specifically, branch for and branch for ). The consistency criterion imposed above accords with the fact that many physical systems tend to converge towards maximum entropy configuration over time, however, it specifies the limits of our assumptions.

Consider systems described by Equation (34) in the absence of external force, i.e.,

By assuming that the corresponding stationary solutions can be identified with the MaxEnt distributions in Equation (37), it can be shown that the functional form of the effective density must be expressed as

where we neglected additive and multiplicative constant factors for the sake of simplicity. Similar implicit equations have already been investigated in [61,62,65]. Once the asymptotic phase space volume scaling relation in Equation (29) holds, it can also be shown that the generalized FP in Equation (38) (with as in Equation (39)) obeys the diffusion scaling property in Equation (36) with a non-trivial value of in the asymptotic limit [66] (assuming additionally the existence of the solution of Equation (38), at least from an appropriate initial condition). A simple algebraic relation between the diffusion scaling exponent and the phase space volume scaling exponent c can be established [66], which can be written as

Therefore, this relation between c and defines families of FP equations which show asymptotic invariance under the scaling relation in Equation (36).

6. Conclusions

This review concentrates on the concept of generalized entropy (Section 2), which is relevant in the study of real thermodynamical systems and, more generally, in the theory of complex systems. Possibly the first example of a generalized entropy was introduced by Rényi (Section 3.2), who was interested in the most general information measure which is additive in the sense of Equation (5), with the random variables X and Y being independent. Another very popular generalized entropy was introduced by Tsallis as a generalization of the Boltzmann–Gibbs entropy (Section 3.1) to describe the properties of physical systems with long range forces and complex dynamics in equilibrium. Some more exotic generalized entropies are considered in Section 3.3, while other examples that have been published in the last two decades are gathered in Appendix A. Our approach was to a great extent formal, with special emphasis in Section 2 and Section 3 on axiomatic formulations and mathematical properties. For expository reasons, applications are mentioned and the original references given as our description of the main generalized entropies progressed, rather than addressing them jointly in a separate section.

An alternative approach to generalized entropies other than the axiomatic one (Section 2) consists in characterizing their asymptotic behavior in the thermodynamic limit $W\to\infty$. Hanel and Thurner showed that two scaling exponents $(c,d)$ suffice for admissible generalized entropies, i.e., those entropies of the form in Equation (10) with g continuous, concave and $g(0)=0$ (Section 4); it holds $c\in(0,1]$ and $d\in\mathbb{R}$. As a result, the admissible systems fall in equivalence classes labeled by the exponents $(c,d)$ of the corresponding entropies. Conversely, to each $(c,d)$, there is a generalized entropy with those Hanel–Thurner exponents (see Equation (32)), at least for the most interesting value ranges.

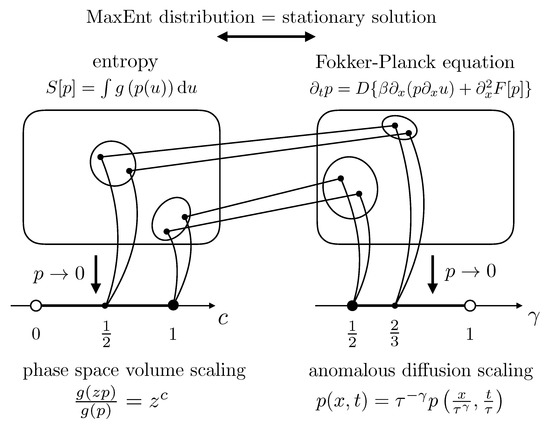

It is also remarkable that, at asymptotically large times and volumes, there is a 1-to-1 relation between the equivalence class of generalized entropies with a given and the equivalence class of Fokker–Planck equations in which the invariance in Equation (36) holds with (Section 5). This means that the equivalence classes of admissible systems can generally be mapped into anomalous diffusion processes and vice versa, thus conveying the same information about the system in the asymptotic limit (i.e., when ) [66]. A schematic visualization of this relation is provided in Figure 5. Moreover, the above result can actually be understood as a possible generalization of the Tsallis–Bukman relation [44].

Figure 5. Visual summary of the main result presented in Section 5, schematically depicting the relation between the diffusion scaling exponent and the exponent c. Source: [66].

Author Contributions

All the authors have contributed to conceptualization, methodology, validation, formal analysis, investigation, writing, review and editing, both of the initial draft and the final version.

Funding

J.M.A. was supported by the Spanish Ministry of Economy, Industry and Competitiveness, grant MTM2016-74921-P (AEI/FEDER, EU). S.G.B. was partially supported by the Hungarian National Research, Development and Innovation Office (grant no. K 128780) and the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No. 740688.

Acknowledgments

We thank our referees for their helpful and constructive criticism.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

We list in this appendix further generalized entropies of the form in Equation (10) and the original references (notation as in Table 1 of [16]). $\Gamma(a, x)$ denotes the incomplete Gamma function defined in Equation (33).

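- $S_\eta = \sum_{i=1}^{W} \left[ \Gamma\!\left(\tfrac{\eta+1}{\eta}, -\ln p_i\right) - p_i\, \Gamma\!\left(\tfrac{\eta+1}{\eta}\right) \right]$ $(\eta > 0)$ [67].

- $S_\kappa = -\sum_{i=1}^{W} p_i\, \dfrac{p_i^{\kappa} - p_i^{-\kappa}}{2\kappa}$ $(0 < \kappa \le 1)$ [68].

- $S_b = \sum_{i=1}^{W} \left(1 - e^{-b p_i}\right) + e^{-b} - 1$ $(b > 0)$ [69].

- $S_E = \sum_{i=1}^{W} p_i \left(1 - e^{(p_i - 1)/p_i}\right)$ [70].

- $S_\beta = \sum_{i=1}^{W} p_i^{\beta} \ln(1/p_i)$ [71].

- $S_\gamma = \sum_{i=1}^{W} p_i \ln^{1/\gamma}(1/p_i)$ ([15], page 60).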

Appendix B

Since and are generalized entropies of the type in Equation (10), we conclude that both belong to the same class as (see Equation (11)), hence .

is a generalized entropy of the type in Equation (14) with . Therefore,

Comparison with Equation (27) shows that .

Moreover,

Comparison with Equation (28) shows that .

Appendix C

1. First, note from Equation (32) that

where the incomplete Gamma function exists for and all (see Equation (33)), with .

Among Conditions (C1)–(C3) on (Section 2), for the entropy in Equation (32) to be admissible, only concavity (Condition (C2)) needs to be checked. Since

it holds if and only if where for each and . Therefore, for all if and only if , where . On the other hand, for the integral to exist. Both restrictions together lead then to the condition in Equation (31) on d for to be a generalized entropy.

2. Use the asymptotic approximation 6.5.32 of [59]

(, ) to obtain the leading approximation of in an infinitesimal neighborhood of 0:

Using Equation (A3), the following can be derived:

References

1. Clausius, R. The Mechanical Theory of Heat; McMillan and Co.: London, UK, 1865.

2. Boltzmann, L. Weitere Studien über das Wärmegleichgewicht unter Gasmolekülen. Sitz. Ber. Akad. Wiss. Wien (II) 1872, 66, 275–370.

3. Boltzmann, L. Über die Beziehung eines allgemeinen mechanischen Satzes zum zweiten Hauptsatz der Wärmetheorie. Sitz. Ber. Akad. Wiss. Wien (II) 1877, 75, 67–73.

4. Gibbs, J.W. Elementary Principles in Statistical Mechanics—Developed with Especial References to the Rational Foundation of Thermodynamics; C. Scribner’s Sons: New York, NY, USA, 1902.

5. Dewar, R. Information theory explanation of the fluctuation theorem, maximum entropy production and self-organized criticality in nonequilibrium stationary state. J. Phys. A Math. Gen. 2003, 36, 631–641.

6. Martyushev, L.M. Entropy and entropy production: old misconceptions and new breakthroughs. Entropy 2013, 15, 1152–1170.

7. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

8. Wissner-Gross, A.D.; Freer, C.E. Causal entropic forces. Phys. Rev. Lett. 2013, 110, 168702.

9. Mann, R.P.; Garnett, R. The entropic basis of collective behaviour. J. R. Soc. Interface 2015, 12, 20150037.

10. Kolmogorov, A.N. A new metric invariant of transitive dynamical systems and Lebesgue space endomorphisms. Dokl. Acad. Sci. USSR 1958, 119, 861–864.

11. Rényi, A. On measures of entropy and information. In Proceedings of the 4th Berkeley Symposium on Mathematics, Statistics and Probability; Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1961; pp. 547–561.

12. Tsallis, C. Possible generalization of Boltzmann–Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

13. Amigó, J.M.; Keller, K.; Unakafova, V. On entropy, entropy-like quantities, and applications. Disc. Cont. Dyn. Syst. B 2015, 20, 3301–3343.

14. Csiszár, I. Axiomatic characterization of information measures. Entropy 2008, 10, 261–273.

15. Tsallis, C. Introduction to Nonextensive Statistical Mechanics; Springer: New York, NY, USA, 2009.

16. Hanel, R.; Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. EPL 2011, 93, 20006.

17. Principe, J.C. Information Theoretic Learning: Renyi’s Entropy and Kernel Perspectives; Springer: New York, NY, USA, 2010.

18. Hernández, S. Introducing Graph Entropy. Available online: http://entropicai.blogspot.com/search/label/Graph%20entropy (accessed on 22 October 2018).

19. Salicrú, M.; Menéndez, M.L.; Morales, D.; Pardo, L. Asymptotic distribution of (h, ϕ)-entropies. Commun. Stat. Theory Meth. 1993, 22, 2015–2031.

20. Bosyk, G.M.; Zozor, S.; Holik, F.; Portesi, M.; Lamberti, P.W. A family of generalized quantum entropies: Definition and properties. Quantum Inf. Process. 2016, 15, 3393–3420.

21. Von Neumann, J. Thermodynamik quantenmechanischer Gesamtheiten. Nachrichten von der Gesellschaft der Wissenschaften zu Göttingen 1927, 1927, 273–291. (In German)

22. Hein, C.A. Entropy in Operational Statistics and Quantum Logic. Found. Phys. 1979, 9, 751–786.

23. Short, A.J.; Wehner, S. Entropy in general physical theories. New J. Phys. 2010, 12, 033023.

24. Holik, F.; Bosyk, G.M.; Bellomo, G. Quantum information as a non-Kolmogorovian generalization of Shannon’s theory. Entropy 2015, 17, 7349–7373.

25. Portesi, M.; Holik, F.; Lamberti, P.W.; Bosyk, G.M.; Bellomo, G.; Zozor, S. Generalized entropies in quantum and classical statistical theories. Eur. Phys. J. Spec. Top. 2018, 227, 335–344.

26. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley and Sons: Hoboken, NJ, USA, 2006.

27. Enciso, A.; Tempesta, P. Uniqueness and characterization theorems for generalized entropies. J. Stat. Mech. 2017, 123101.

28. Khinchin, A.I. Mathematical Foundations of Information Theory; Dover Publications: New York, NY, USA, 1957.

29. Ash, R.B. Information Theory; Dover Publications: New York, NY, USA, 1990.

30. MacKay, D.J. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

31. Bandt, C. A new kind of permutation entropy used to classify sleep stages from invisible EEG microstructure. Entropy 2017, 19, 197.

32. Havrda, J.; Charvát, F. Quantification method of classification processes. Concept of structural α-entropy. Kybernetika 1967, 3, 30–35.

33. Abe, S. Stability of Tsallis entropy and instabilities of Renyi and normalized Tsallis entropies. Phys. Rev. E 2002, 66, 046134.

34. Tsallis, C.; Brigatti, E. Nonextensive statistical mechanics: A brief introduction. Contin. Mech. Thermodyn. 2004, 16, 223–235.

35. Abe, S. Tsallis entropy: How unique? Contin. Mech. Thermodyn. 2004, 16, 237–244.

36. Dos Santos, R.J.V. Generalization of Shannon’s theorem for Tsallis entropy. J. Math. Phys. 1997, 38, 4104–4107.

37. Suyari, H. Generalization of Shannon–Khinchin axioms to nonextensive systems and the uniqueness theorem for the nonextensive entropy. IEEE Trans. Inf. Theory 2004, 50, 1783–1787.

38. Furuichi, S. On uniqueness theorems for Tsallis entropy and Tsallis relative entropy. IEEE Trans. Inf. Theory 2005, 51, 3638–3645.

39. Jäckle, S.; Keller, K. Tsallis entropy and generalized Shannon additivity. Axioms 2016, 6, 14.

40. Hanel, R.; Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 2011, 96, 50003.

41. Plastino, A.R.; Plastino, A. Stellar polytropes and Tsallis’ entropy. Phys. Lett. A 1993, 174, 384–386.

42. Alemany, P.A.; Zanette, D.H. Fractal random walks from a variational formalism for Tsallis entropies. Phys. Rev. E 1994, 49, R956–R958.

43. Plastino, A.R.; Plastino, A. Non-extensive statistical mechanics and generalized Fokker–Planck equation. Physica A 1995, 222, 347–354.

44. Tsallis, C.; Bukman, D.J. Anomalous diffusion in the presence of external forces: Exact time-dependent solutions and their thermostatistical basis. Phys. Rev. E 1996, 54, R2197.

45. Capurro, A.; Diambra, L.; Lorenzo, D.; Macadar, O.; Martin, M.T.; Mostaccio, C.; Plastino, A.; Rofman, E.; Torres, M.E.; Velluti, J. Tsallis entropy and cortical dynamics: The analysis of EEG signals. Physica A 1998, 257, 149–155.

46. Maszczyk, T.; Duch, W. Comparison of Shannon, Renyi and Tsallis entropy used in decision trees. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 22–26 June 2008; Springer: Berlin, Germany, 2008; pp. 643–651.

47. Gajowniczek, K.; Karpio, K.; Łukasiewicz, P.; Orłowski, A.; Zabkowski, T. Q-Entropy approach to selecting high income households. Acta Phys. Pol. A 2015, 127, 38–44.

48. Gajowniczek, K.; Orłowski, A.; Zabkowski, T. Simulation study on the application of the generalized entropy concept in artificial neural networks. Entropy 2018, 20, 249.

49. Lesche, B. Instabilities of Renyi entropies. J. Stat. Phys. 1982, 27, 419–422.

50. Mariz, A.M. On the irreversible nature of the Tsallis and Renyi entropies. Phys. Lett. A 1992, 165, 409–411.

51. Aczél, J.; Daróczy, Z. Charakterisierung der Entropien positiver Ordnung und der Shannonschen Entropie. Acta Math. Acad. Sci. Hung. 1963, 14, 95–121. (In German)

52. Jizba, P.; Arimitsu, T. The world according to Rényi: Thermodynamics of multifractal systems. Ann. Phys. 2004, 312, 17–59.

53. Rényi, A. On the foundations of information theory. Rev. Inst. Int. Stat. 1965, 33, 1–4.

54. Campbell, L.L. A coding theorem and Rényi’s entropy. Inf. Control 1965, 8, 423–429.

55. Csiszár, I. Generalized cutoff rates and Rényi information measures. IEEE Trans. Inf. Theory 1995, 41, 26–34.

56. Bennett, C.; Brassard, G.; Crépeau, C.; Maurer, U. Generalized privacy amplification. IEEE Trans. Inf. Theory 1995, 41, 1915–1923.

57. Kannathal, N.; Choo, M.L.; Acharya, U.R.; Sadasivan, P.K. Entropies for detection of epilepsy in EEG. Comput. Meth. Prog. Biomed. 2005, 80, 187–194.

58. Contreras-Reyes, J.E.; Cortés, D.D. Bounds on Rényi and Shannon Entropies for Finite Mixtures of Multivariate Skew-Normal Distributions: Application to Swordfish (Xiphias gladius Linnaeus). Entropy 2016, 11, 382.

59. Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions; Dover Publications: New York, NY, USA, 1972.

60. Hanel, R.; Thurner, S. Generalized (c, d)-entropy and aging random walks. Entropy 2013, 15, 5324–5337.

61. Chavanis, P.H. Nonlinear mean field Fokker–Planck equations. Application to the chemotaxis of biological populations. Eur. Phys. J. B 2008, 62, 179–208.

62. Martinez, S.; Plastino, A.R.; Plastino, A. Nonlinear Fokker–Planck equations and generalized entropies. Physica A 1998, 259, 183–192.

63. Bouchaud, J.P.; Georges, A. Anomalous diffusion in disordered media: Statistical mechanisms, models and physical applications. Phys. Rep. 1990, 195, 127–293.

64. Dubkov, A.A.; Spagnolo, B.; Uchaikin, V.V. Lévy flight superdiffusion: An introduction. Int. J. Bifurcat. Chaos 2008, 18, 2649–2672.

65. Schwämmle, V.; Curado, E.M.F.; Nobre, F.D. A general nonlinear Fokker–Planck equation and its associated entropy. Eur. Phys. J. B 2007, 58, 159–165.

66. Czégel, D.; Balogh, S.G.; Pollner, P.; Palla, G. Phase space volume scaling of generalized entropies and anomalous diffusion scaling governed by corresponding nonlinear Fokker–Planck equations. Sci. Rep. 2018, 8, 1883.

67. Anteneodo, C.; Plastino, A.R. Maximum entropy approach to stretched exponential probability distributions. J. Phys. A Math. Gen. 1999, 32, 1089–1098.

68. Kaniadakis, G. Statistical mechanics in the context of special relativity. Phys. Rev. E 2002, 66, 056125.

69. Curado, E.M.; Nobre, F.D. On the stability of analytic entropic forms. Physica A 2004, 335, 94–106.

70. Tsekouras, G.A.; Tsallis, C. Generalized entropy arising from a distribution of q indices. Phys. Rev. E 2005, 71, 046144.

71. Shafee, F. Lambert function and a new non-extensive form of entropy. IMA J. Appl. Math. 2007, 72, 785–800.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).