Abstract

The geometric process (GP) is a simple and direct approach to modeling successive inter-arrival time data with a monotonic trend, and it is an important alternative to the non-homogeneous Poisson process. In the present paper, the parameter estimation problem for the GP is considered when the distribution of the first occurrence time is Power Lindley with parameters $\alpha$ and $\lambda$. The maximum likelihood, modified moments, modified L-moments and modified least-squares estimators are obtained for the parameters $a$, $\alpha$ and $\lambda$. The mean, bias and mean squared error (MSE) values of these estimators are evaluated for small, moderate and large sample sizes by Monte Carlo simulation. Furthermore, two illustrative examples using real data sets are presented.

1. Introduction

The renewal process (RP) is a commonly used model for the statistical analysis of successive inter-arrival times observed from a counting process. When the data are trend-free, that is, independent and identically distributed (iid), the RP is a suitable model. However, if the data follow a monotone trend, they can be modeled more suitably by the non-homogeneous Poisson process with a monotone intensity function or by a GP.

The GP was first introduced by Lam [1] as a direct approach to modeling inter-arrival times with a monotone trend. The GP generalizes the RP by adding a ratio parameter, which makes it more flexible than the RP for modeling successive inter-arrival times with a trend. Because of this feature, the GP has been used successfully as a model in many real-life problems in science, engineering, and health.

Before progressing further, let us recall the following definition of GP given in [2].

Definition 1.

Let $X_i$ be the inter-arrival time between the $(i-1)$th and $i$th events of a counting process $\{N(t), t \ge 0\}$ for $i = 1, 2, \ldots$. Then, the stochastic process $\{X_i, i = 1, 2, \ldots\}$ is said to be a geometric process (GP) with parameter $a$ if there exists a real number $a > 0$ such that

$$Y_i = a^{i-1} X_i, \quad i = 1, 2, \ldots \qquad (1)$$

are iid random variables with the distribution function $F$, where $a$ is called the ratio parameter of the GP.

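Clearly, the parameter $a$ determines the monotonic behavior of the GP: the process is stochastically increasing if $a < 1$, stochastically decreasing if $a > 1$, and reduces to an RP if $a = 1$. This behavior is summarized in Table 1.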

Table 1.

Behavior of GP according to values of ratio parameter a.

In the GP, the assumption on the distribution of $X_1$ has a special significance because the distributions of $X_1$ and of the other random variables $X_i$, $i = 2, 3, \ldots$, belong to the same family of distributions with different parameter sets. That is, the $X_i$s are independently but not identically distributed, which follows directly from Definition 1. By this property, the expectation and variance of the $X_i$s are immediately obtained as

$$E(X_i) = \frac{\mu}{a^{i-1}}, \qquad (2)$$

$$Var(X_i) = \frac{\sigma^2}{a^{2(i-1)}}, \qquad (3)$$

where $\mu$ and $\sigma^2$ are the expectation and variance of the random variable $X_1$, respectively. Since the distribution of $X_1$ determines the distribution of the other variables, selecting the distribution of $X_1$ based on the observed data is important for optimal statistical inference [3,4]. Many studies in the literature address the parameter estimation problem for the GP under special distributions for $X_1$. Chan et al. [5] investigated the parameter estimation problem for the GP by assuming that the distribution of $X_1$ was Gamma. Lam and Chan [6] investigated the statistical inference problem for the GP with the Log-Normal distribution using parametric and non-parametric methods. When the distribution of $X_1$ was inverse Gaussian, Rayleigh, two-parameter Rayleigh and Lindley, statistical inference for the GP was investigated using the ML and modified moment methods in [7,8,9,10], respectively.
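The scaling in Definition 1 and the moment formulas above can be illustrated with a short Python sketch. The function names `simulate_gp` and `gp_mean_var` are hypothetical helpers introduced here for illustration; the sampler passed as `draw_y` stands for any distribution of $Y_i$ and is not tied to a particular choice made in the paper.

```python
import random


def simulate_gp(n, a, draw_y, rng=None):
    """Return X_1..X_n with X_i = Y_i / a**(i-1), where the Y_i are iid draws.

    draw_y is a callable taking a random.Random instance and returning one
    positive draw of Y (an assumption of this sketch).
    """
    rng = rng or random.Random()
    # index i = 0 corresponds to X_1, so the divisor is a**(i-1) in 1-based terms
    return [draw_y(rng) / a ** i for i in range(n)]


def gp_mean_var(mu, sigma2, a, i):
    """Expectation and variance of X_i (i is 1-based) given E(X_1) and Var(X_1)."""
    return mu / a ** (i - 1), sigma2 / a ** (2 * (i - 1))
```

With $a > 1$ the simulated inter-arrival times shrink on average, and with $a < 1$ they grow, which matches the monotone behavior governed by the ratio parameter.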

The main objective of this study is to investigate extensively the parameter estimation problem for the GP when the distribution of the first arrival time is Power Lindley. To this end, estimators for the parameters of the GP with the Power Lindley distribution are obtained by the methods of maximum likelihood (ML), modified moments (MM), modified L-moments (MLM) and modified least squares (MLS). The method-of-moments and least-squares estimators of the Power Lindley distribution are available in the literature [11]. The L-moments estimator of the Power Lindley distribution is obtained in this paper. In addition, the novelty of this paper is that the distribution of the first inter-arrival time is assumed to be Power Lindley for the GP, and the ML and MLM estimators are obtained under this assumption.

The rest of this study is organized as follows. Section 2 gives detailed information about the Power Lindley distribution. In Section 3, the ML, MLS, MM and MLM estimators of the parameters $a$, $\alpha$ and $\lambda$ are obtained. Section 4 presents the results of Monte Carlo simulations comparing the performance of the estimators obtained in Section 3. For illustrative purposes, two examples with real data sets are given in Section 5. Section 6 concludes the study.

2. An Overview of the Power Lindley Distribution

The Power Lindley distribution was originally introduced by Ghitany et al. [11] as an important alternative for modeling failure times. The distribution has a strong modeling capability for positive data from different areas, such as reliability and lifetime testing. In addition, to model data sets with various shapes, many extensions of the Power Lindley distribution have been proposed under different scenarios [12,13,14,15,16,17,18,19,20].

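Let $X$ be a Power Lindley distributed random variable with parameters $\alpha$ and $\lambda$. From now on, a Power Lindley distributed random variable $X$ will be denoted by $X \sim PL(\alpha, \lambda)$ for brevity. The probability density function (pdf) of the random variable $X$ is

$$f(x; \alpha, \lambda) = \frac{\alpha \lambda^{2}}{\lambda + 1} \left(1 + x^{\alpha}\right) x^{\alpha - 1} e^{-\lambda x^{\alpha}}, \quad x > 0, \qquad (4)$$

and the corresponding cumulative distribution function (cdf) is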

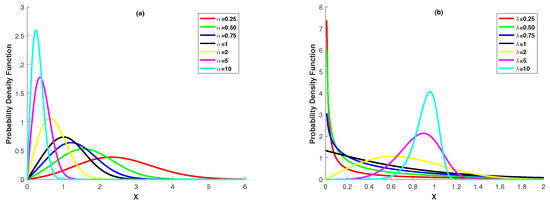

where $\lambda$ and $\alpha$ are the positive, real-valued scale and shape parameters of the distribution, respectively. Essentially, the Power Lindley distribution is a two-component mixture distribution with mixing ratio $\lambda/(1+\lambda)$, in which the first component is a Weibull distribution with parameters $\alpha$ and $\lambda$ and the second component is a generalized Gamma distribution with parameters 2, $\alpha$ and $\lambda$. The Power Lindley distribution is an important alternative to standard distribution families for analyzing lifetime data, since its distribution function, survival function and hazard function can be expressed in explicit form. The Power Lindley distribution is also unimodal and belongs to the exponential family. The shape of the distribution is shown in Figure 1. Figure 1a,b show the behavior of the pdf of the Power Lindley distribution for different values of the parameters $\alpha$ and $\lambda$.
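The mixture representation described above suggests a simple way to evaluate and sample the distribution. The following is a minimal sketch, assuming the parameterization of Ghitany et al. [11] in which $X^{\alpha}$ follows a Lindley distribution with parameter $\lambda$; the function names `pl_pdf`, `pl_cdf` and `pl_rvs` are hypothetical and only the Python standard library is used.

```python
import math
import random


def pl_pdf(x, alpha, lam):
    """Power Lindley pdf for x > 0 (assumed form, see lead-in)."""
    u = x ** alpha
    return alpha * lam ** 2 / (lam + 1.0) * (1.0 + u) * x ** (alpha - 1.0) * math.exp(-lam * u)


def pl_cdf(x, alpha, lam):
    """Power Lindley cdf; returns 0 for non-positive x."""
    if x <= 0.0:
        return 0.0
    u = x ** alpha
    return 1.0 - (1.0 + lam * u / (lam + 1.0)) * math.exp(-lam * u)


def pl_rvs(alpha, lam, rng):
    """Draw one value using the two-component mixture.

    With probability lam/(lam+1), X**alpha is exponential with rate lam
    (the Weibull component); otherwise X**alpha is Gamma(shape 2, rate lam).
    """
    if rng.random() < lam / (lam + 1.0):
        u = rng.expovariate(lam)
    else:
        u = rng.gammavariate(2.0, 1.0 / lam)  # second argument is the scale
    return u ** (1.0 / alpha)
```

A quick consistency check is that the numerical derivative of `pl_cdf` agrees with `pl_pdf`, and that the empirical distribution of draws from `pl_rvs` follows `pl_cdf`.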

Figure 1.

Pdf of the Power Lindley distribution (a): and ; (b) and .

Some basic measures of the Power Lindley distribution are tabulated in Table 2.

Table 2.

Some basic measures of the Power Lindley distributions.

Advanced readers can refer to [11] for more information on the Power Lindley distribution.

2.1. Shannon and Rényi Entropy of the Power Lindley Distribution

Entropy is a measure of the variation or uncertainty of a random variable. In this subsection, we investigate the Shannon and Rényi entropies, the two most popular entropy measures, for the Power Lindley distribution. The Shannon entropy (SE) of a random variable $X$ with pdf $f$ is defined as (see [21])

$$SE(X) = -E\left[\log f(X)\right] = -\int f(x) \log f(x)\, dx. \qquad (6)$$

Then, by using the pdf (4), the SE of the Power Lindley distribution is found as

where $\psi(\cdot)$ is the digamma function [22]. The Rényi entropy of a random variable with pdf $f$ is defined as

By using the pdf (4), Rényi entropy of the Power Lindley distribution is obtained as follows:

Applying the power expansion formula and gamma function to Equation (9), is obtained as
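As a numerical cross-check of entropy expressions of this kind, both entropies can be approximated by direct integration. The sketch below is not the closed form derived in the paper: it assumes the standard definitions (Shannon entropy $-\int f \log f\,dx$ and Rényi entropy $\frac{1}{1-q}\log\int f^{q}\,dx$ of order $q \neq 1$), the Power Lindley pdf of Ghitany et al. [11], and a midpoint rule after the substitution $u = x^{\alpha}$. All function names are hypothetical.

```python
import math


def _integrate(func, upper, steps=100000):
    """Midpoint rule on (0, upper); avoids evaluating the integrand at 0."""
    h = upper / steps
    return h * sum(func((k + 0.5) * h) for k in range(steps))


def _lindley_pdf(u, lam):
    """Density of U = X**alpha, which is Lindley with parameter lam."""
    return lam ** 2 / (lam + 1.0) * (1.0 + u) * math.exp(-lam * u)


def _log_pl_pdf(u, alpha, lam):
    """log f(x) of the Power Lindley pdf evaluated at x = u**(1/alpha)."""
    return (math.log(alpha) + 2.0 * math.log(lam) - math.log(lam + 1.0)
            + math.log1p(u) + (alpha - 1.0) / alpha * math.log(u) - lam * u)


def shannon_entropy(alpha, lam):
    """Numerical Shannon entropy, -E[log f(X)], integrated over u = x**alpha."""
    upper = 60.0 / lam  # the neglected tail mass is of order exp(-60)
    return -_integrate(lambda u: _lindley_pdf(u, lam) * _log_pl_pdf(u, alpha, lam), upper)


def renyi_entropy(alpha, lam, order):
    """Numerical Renyi entropy of the given order (order > 0, order != 1)."""
    upper = 60.0 / lam
    integral = _integrate(
        lambda u: _lindley_pdf(u, lam) * math.exp((order - 1.0) * _log_pl_pdf(u, alpha, lam)),
        upper)
    return math.log(integral) / (1.0 - order)
```

Two properties that any correct implementation should reproduce are that the Rényi entropy is non-increasing in its order and that it approaches the Shannon entropy as the order tends to 1.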

3. Estimation of Parameters of GP with Power Lindley Distribution

3.1. Maximum Likelihood Estimation

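Let $X_1, X_2, \ldots, X_n$ be a random sample from a GP with ratio $a$ and $X_1 \sim PL(\alpha, \lambda)$. The likelihood function for $X_i$, $i = 1, 2, \ldots, n$, is

$$L(a, \alpha, \lambda) = \left(\frac{\alpha \lambda^{2}}{\lambda + 1}\right)^{n} a^{\alpha n(n-1)/2} \prod_{i=1}^{n} x_i^{\alpha - 1}\left[1 + \left(a^{i-1} x_i\right)^{\alpha}\right] \exp\left\{-\lambda \sum_{i=1}^{n} \left(a^{i-1} x_i\right)^{\alpha}\right\}. \qquad (11)$$

From Equation (11), the corresponding log-likelihood function can be written as below:

$$\ln L = n \ln \alpha + 2n \ln \lambda - n \ln(\lambda + 1) + \frac{\alpha n(n-1)}{2} \ln a + (\alpha - 1) \sum_{i=1}^{n} \ln x_i + \sum_{i=1}^{n} \ln\left[1 + \left(a^{i-1} x_i\right)^{\alpha}\right] - \lambda \sum_{i=1}^{n} \left(a^{i-1} x_i\right)^{\alpha}. \qquad (12)$$

If the first derivatives of Equation (12) with respect to $a$, $\alpha$ and $\lambda$ are taken and set equal to zero, we have the following likelihood equations:

$$\frac{\partial \ln L}{\partial a} = \frac{\alpha n(n-1)}{2a} + \frac{\alpha}{a} \sum_{i=1}^{n} \frac{(i-1)\left(a^{i-1} x_i\right)^{\alpha}}{1 + \left(a^{i-1} x_i\right)^{\alpha}} - \frac{\lambda \alpha}{a} \sum_{i=1}^{n} (i-1)\left(a^{i-1} x_i\right)^{\alpha} = 0, \qquad (13)$$

$$\frac{\partial \ln L}{\partial \alpha} = \frac{n}{\alpha} + \frac{n(n-1)}{2} \ln a + \sum_{i=1}^{n} \ln x_i + \sum_{i=1}^{n} \frac{\left(a^{i-1} x_i\right)^{\alpha} \ln\left(a^{i-1} x_i\right)}{1 + \left(a^{i-1} x_i\right)^{\alpha}} - \lambda \sum_{i=1}^{n} \left(a^{i-1} x_i\right)^{\alpha} \ln\left(a^{i-1} x_i\right) = 0 \qquad (14)$$

and

$$\frac{\partial \ln L}{\partial \lambda} = \frac{2n}{\lambda} - \frac{n}{\lambda + 1} - \sum_{i=1}^{n} \left(a^{i-1} x_i\right)^{\alpha} = 0. \qquad (15)$$

From the solution of likelihood Equations (13)–(15), the parameter $\lambda$ is obtained as

$$\hat{\lambda} = \frac{-(\bar{S} - 1) + \sqrt{(\bar{S} - 1)^{2} + 8\bar{S}}}{2\bar{S}}, \qquad (16)$$

where $\bar{S} = \frac{1}{n} \sum_{i=1}^{n} \left(a^{i-1} x_i\right)^{\alpha}$. However, analytical expressions for the ML estimators of the parameters $a$ and $\alpha$ cannot be obtained from likelihood Equations (13)–(15). In order to estimate these parameters, Equations (13)–(15) must be solved simultaneously by using a numerical method such as Newton’s method.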
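The log-likelihood of a GP with Power Lindley first inter-arrival time can be coded directly from Definition 1, since $Y_i = a^{i-1}X_i$ are iid and each $X_i$ therefore has density $a^{i-1} f(a^{i-1}x_i)$. The sketch below assumes the Power Lindley pdf of Ghitany et al. [11]; the helper names `gp_pl_loglik` and `lambda_given` are hypothetical. Setting the derivative with respect to $\lambda$ to zero gives a quadratic in $\lambda$, whose positive root is returned by `lambda_given` for fixed $a$ and $\alpha$.

```python
import math


def gp_pl_loglik(x, a, alpha, lam):
    """Log-likelihood of (a, alpha, lam) for GP data x = [x_1, ..., x_n]."""
    n = len(x)
    total = n * (math.log(alpha) + 2.0 * math.log(lam) - math.log(lam + 1.0))
    for i, xi in enumerate(x):          # i = 0 corresponds to X_1
        y = a ** i * xi                 # Y_i = a**(i-1) * X_i in 1-based terms
        u = y ** alpha
        # log Jacobian a**(i-1) plus the log pdf terms that depend on the data
        total += i * math.log(a) + math.log1p(u) + (alpha - 1.0) * math.log(y) - lam * u
    return total


def lambda_given(x, a, alpha):
    """Maximizer of the log-likelihood in lam for fixed a and alpha.

    Positive root of s*lam**2 + (s - 1)*lam - 2 = 0, where s is the mean of
    (a**(i-1) * x_i)**alpha.
    """
    s = sum((a ** i * xi) ** alpha for i, xi in enumerate(x)) / len(x)
    return (-(s - 1.0) + math.sqrt((s - 1.0) ** 2 + 8.0 * s)) / (2.0 * s)
```

Substituting `lambda_given` back into `gp_pl_loglik` yields a profile log-likelihood in $(a, \alpha)$ only, which reduces the numerical search from three dimensions to two.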

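Let $\theta = (a, \alpha, \lambda)^{T}$ be the parameter vector and $\nabla(\theta)$ be the corresponding gradient vector, i.e.,

$$\nabla(\theta) = \left(\frac{\partial \ln L}{\partial a}, \frac{\partial \ln L}{\partial \alpha}, \frac{\partial \ln L}{\partial \lambda}\right)^{T}. \qquad (17)$$

Under this notation, Newton’s method is given as

$$\theta^{(m+1)} = \theta^{(m)} - H^{-1}\left(\theta^{(m)}\right) \nabla\left(\theta^{(m)}\right), \qquad (18)$$

where $m$ is the iteration number and $H^{-1}(\theta)$ is the inverse of the Hessian matrix $H(\theta) = \partial^{2} \ln L / \partial\theta\, \partial\theta^{T}$. The elements of the matrix $H$ are the second derivatives of the log-likelihood function given in Equation (12) with respect to the parameters $a$, $\alpha$ and $\lambda$. Let $h_{ij}$ be the $(i,j)$th element of the matrix $H$. The $h_{ij}$s are obtained as below:

Hence, $H^{-1}$ is obtained as

where $\det(H)$ is the determinant of the matrix $H$ and it is calculated by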

Thus, starting from an initial estimate $\theta^{(0)}$, the parameter vector $\theta$ can be estimated with the iterative method given by (18). Hence, the ML estimates of the parameters $a$, $\alpha$ and $\lambda$, say $\hat{a}_{ML}$, $\hat{\alpha}_{ML}$ and $\hat{\lambda}_{ML}$, respectively, are obtained as the respective elements of $\hat{\theta}$. The joint distribution of the estimators $\hat{a}_{ML}$, $\hat{\alpha}_{ML}$ and $\hat{\lambda}_{ML}$ is asymptotically normal with mean vector $(a, \alpha, \lambda)$ and covariance matrix $I^{-1}$ (see [23]), where $I$ is the Fisher information matrix, i.e.,

$$I = -E\left[\frac{\partial^{2} \ln L}{\partial\theta\, \partial\theta^{T}}\right]. \qquad (21)$$

The elements of the Fisher information matrix $I$ given by (21) can be written immediately from the elements of the Hessian matrix $H$. However, an explicit form of the Fisher information matrix $I$ cannot be derived. Fortunately, the observed information matrix can be used as an estimator in place of $I$. Note that the observed information matrix is the negative of the matrix $H$ obtained at the last iteration.
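The Newton iteration can be sketched generically. The version below is an illustration rather than the paper's implementation: it replaces the analytic gradient and Hessian with central finite differences (an assumption made to keep the example short) and solves the linear system by Gaussian elimination instead of forming the inverse explicitly. The names `gradient`, `hessian`, `solve` and `newton_maximize` are hypothetical.

```python
def gradient(f, theta, h=1e-5):
    """Central-difference gradient of f at theta."""
    g = []
    for j in range(len(theta)):
        up, dn = list(theta), list(theta)
        up[j] += h
        dn[j] -= h
        g.append((f(up) - f(dn)) / (2.0 * h))
    return g


def hessian(f, theta, h=1e-4):
    """Central-difference Hessian of f at theta."""
    k = len(theta)
    H = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            pp, pm, mp, mm = list(theta), list(theta), list(theta), list(theta)
            pp[i] += h; pp[j] += h
            pm[i] += h; pm[j] -= h
            mp[i] -= h; mp[j] += h
            mm[i] -= h; mm[j] -= h
            H[i][j] = (f(pp) - f(pm) - f(mp) + f(mm)) / (4.0 * h * h)
    return H


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def newton_maximize(f, theta0, tol=1e-8, max_iter=100):
    """Iterate theta <- theta - H^{-1} grad until the step is below tol."""
    theta = list(theta0)
    for _ in range(max_iter):
        step = solve(hessian(f, theta), gradient(f, theta))
        theta = [t - s for t, s in zip(theta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return theta
```

In practice the function `f` would be the log-likelihood in $(a, \alpha, \lambda)$, and the negative of the Hessian at the final iterate would serve as the observed information matrix. Newton's method is sensitive to the starting point, so a reasonable initial estimate is needed.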

3.2. Modified Methods

Since the GP is a monotonic stochastic process, some divergence problems may arise when estimating the ratio parameter $a$. To overcome this problem, estimating the ratio parameter $a$ nonparametrically is a widely used method in statistical inference for the GP [2,24]. A nonparametric estimator for the ratio parameter $a$ is given by (see [6,25])

$$\hat{a} = \exp\left\{\frac{6}{(n-1)n(n+1)} \sum_{i=1}^{n} (n - 2i + 1) \ln X_i\right\}. \qquad (22)$$

The estimator $\hat{a}$ is an unbiased estimator and is asymptotically normally distributed, see [25]. When the $\hat{a}$ given by (22) is substituted into Equation (1), it can be immediately written

$$\hat{Y}_i = \hat{a}^{i-1} X_i, \quad i = 1, 2, \ldots, n.$$

Thus, the parameters $\alpha$ and $\lambda$ can be estimated with a selected estimation method by using the estimators $\hat{Y}_i$. This estimation rule is called the modified method (see [6]).
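The nonparametric step can be sketched as follows. The estimator coded here is the least-squares slope from regressing $\ln X_i$ on $i-1$, which is the form usually attributed to Lam; treating it as the estimator referred to in (22) is an assumption of this sketch, and the names `estimate_ratio` and `detrend` are hypothetical.

```python
import math


def estimate_ratio(x):
    """Least-squares estimate of the ratio a from log inter-arrival times."""
    n = len(x)
    s = sum((n - 2 * i + 1) * math.log(xi) for i, xi in enumerate(x, start=1))
    return math.exp(6.0 * s / ((n - 1) * n * (n + 1)))


def detrend(x, a_hat):
    """Return Y_hat_i = a_hat**(i-1) * X_i, the approximately iid series."""
    return [a_hat ** i * xi for i, xi in enumerate(x)]
```

For noise-free data such as `[8, 4, 2, 1]` the estimator recovers the ratio 2 and the detrended series is constant; with real data the detrended values are then passed to a moment, L-moment or least-squares fit of $\alpha$ and $\lambda$.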

3.2.1. MM Estimation

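Let $X_1, X_2, \ldots, X_n$ be a random sample from a GP with ratio $a$ and $X_1 \sim PL(\alpha, \lambda)$. In addition, we assume that the ratio parameter $a$ is nonparametrically estimated by (22). For the sample $\hat{Y}_i$, $i = 1, 2, \ldots, n$, the first and second sample moments, $m_1$ and $m_2$, are calculated by

$$m_1 = \frac{1}{n} \sum_{i=1}^{n} \hat{Y}_i$$

and

$$m_2 = \frac{1}{n} \sum_{i=1}^{n} \hat{Y}_i^{2},$$

respectively. On the other hand, from Table 2, the first and second population moments of the $PL(\alpha, \lambda)$ distribution, say $\mu_1$ and $\mu_2$, can be easily written as

$$\mu_1 = \frac{\Gamma(1/\alpha)\left[\alpha(\lambda + 1) + 1\right]}{\alpha^{2} \lambda^{1/\alpha} (\lambda + 1)}$$

and

$$\mu_2 = \frac{2\,\Gamma(2/\alpha)\left[\alpha(\lambda + 1) + 2\right]}{\alpha^{2} \lambda^{2/\alpha} (\lambda + 1)},$$

respectively. Thus, the MM estimators of the parameters $\alpha$ and $\lambda$, say $\hat{\alpha}_{MM}$ and $\hat{\lambda}_{MM}$, respectively, can be obtained from the solution of the following nonlinear equation system:

$$m_1 = \mu_1, \qquad m_2 = \mu_2.$$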
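One way to solve the two moment equations numerically is nested bisection, sketched below. It assumes the raw moment formula of Ghitany et al. [11], uses the fact that the mean is decreasing in $\lambda$ for fixed $\alpha$, and assumes that the second-moment equation changes sign exactly once over the bracket for $\alpha$ (an assumption that is not proven here). The names `pl_raw_moment`, `_bisect` and `mm_estimate` are hypothetical.

```python
import math


def pl_raw_moment(r, alpha, lam):
    """r-th raw moment of the Power Lindley distribution (assumed form)."""
    return (r * math.gamma(r / alpha) * (alpha * (lam + 1.0) + r)
            / (alpha ** 2 * lam ** (r / alpha) * (lam + 1.0)))


def _bisect(func, lo, hi, iters=200):
    """Bisection for a root of func, assuming a sign change on [lo, hi]."""
    f_lo = func(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def mm_estimate(m1, m2, alpha_bounds=(0.2, 20.0)):
    """Solve mu_1 = m1 and mu_2 = m2 for (alpha, lam) by nested bisection."""
    def lam_for(alpha):
        # match the first moment; search on the log scale since lam > 0
        g = lambda t: pl_raw_moment(1, alpha, math.exp(t)) - m1
        return math.exp(_bisect(g, -25.0, 25.0))

    h = lambda alpha: pl_raw_moment(2, alpha, lam_for(alpha)) - m2
    alpha = _bisect(h, *alpha_bounds)
    return alpha, lam_for(alpha)
```

Here `m1` and `m2` would be the sample moments of the detrended values $\hat{Y}_i$. A general-purpose root finder could be used instead; bisection is chosen only because it needs no derivatives and no external libraries.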

3.2.2. MLM Estimation

In this subsection, the MLM estimators $\hat{\alpha}_{MLM}$ and $\hat{\lambda}_{MLM}$ are obtained for the parameters $\alpha$ and $\lambda$, respectively, when the ratio parameter $a$ is estimated by (22). L-moment estimators were proposed by Hosking [26] as a method based on linear combinations of order statistics. Owing to their useful and robust structure, L-moment estimators have been studied intensively and have been derived for the unknown parameters of many probability distributions. For more information about L-moments, we refer the reader to [26].

As in the method of moments, L-moment estimators are obtained by equating the population L-moments to the sample L-moments. In our problem, the first two sample and population L-moments are needed to obtain the estimators $\hat{\alpha}_{MLM}$ and $\hat{\lambda}_{MLM}$. Under the transform $\hat{Y}_i = \hat{a}^{i-1} X_i$, the first and second sample L-moments are calculated as follows (see [26]):

$$l_1 = \frac{1}{n} \sum_{i=1}^{n} \hat{Y}_{(i)}, \qquad l_2 = \frac{2}{n(n-1)} \sum_{i=1}^{n} (i-1)\, \hat{Y}_{(i)} - l_1,$$

where $\hat{Y}_{(i)}$, $i = 1, 2, \ldots, n$, represents the ordered observations. On the other hand, using the notation in [26], the first and second population L-moments of $PL(\alpha, \lambda)$ are

and

See Appendix A for the derivation of population L-moments.
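The sample side of the L-moment equations is straightforward to compute. The sketch below uses Hosking's unbiased estimators for the first two sample L-moments; the function name `sample_l_moments` is hypothetical.

```python
def sample_l_moments(values):
    """Return the first two sample L-moments (l1, l2) of the given values."""
    y = sorted(values)
    n = len(y)
    l1 = sum(y) / n
    # b1 = (1/n) * sum over ordered values of ((i-1)/(n-1)) * y_(i), i 1-based
    b1 = sum(i * yi for i, yi in enumerate(y)) / (n * (n - 1))
    return l1, 2.0 * b1 - l1
```

The second sample L-moment equals half the mean absolute difference between all pairs of observations, which gives an independent way to check the computation on small samples.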

Thus, the estimators $\hat{\alpha}_{MLM}$ and $\hat{\lambda}_{MLM}$ are obtained from the numerical solution of the following nonlinear system:

3.2.3. MLS Estimation

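The least-squares estimator (LSE) is a regression-based method proposed by Swain et al. [27]. Essentially, the method is a nonlinear curve fit of the cdf of a random variable to the empirical distribution function. In the least-squares method, the estimators are chosen so that the squared difference between the empirical distribution function and the fitted curve is minimized. Let $X_1, X_2, \ldots, X_n$ be a random sample from a distribution function $F(\cdot)$, and let the order statistics of the random sample be denoted by $X_{(1)} \le X_{(2)} \le \cdots \le X_{(n)}$. In this situation, the LSEs of the parameters are obtained by minimizing

$$\sum_{i=1}^{n} \left[F\left(X_{(i)}\right) - E\left(F\left(X_{(i)}\right)\right)\right]^{2}$$

with respect to the parameters of $F(\cdot)$ [27], where the expectation is calculated by

$$E\left(F\left(X_{(i)}\right)\right) = \frac{i}{n+1}, \quad i = 1, 2, \ldots, n.$$

Therefore, in our problem, the MLS estimators of the parameters $\alpha$ and $\lambda$, say $\hat{\alpha}_{MLS}$ and $\hat{\lambda}_{MLS}$, respectively, are obtained by minimizing

$$\sum_{i=1}^{n} \left[1 - \left(1 + \frac{\lambda \hat{Y}_{(i)}^{\alpha}}{\lambda + 1}\right) e^{-\lambda \hat{Y}_{(i)}^{\alpha}} - \frac{i}{n+1}\right]^{2}$$

with respect to $\alpha$ and $\lambda$.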
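The least-squares criterion can be written as a small objective function. The sketch below assumes the Power Lindley cdf of Ghitany et al. [11] and the plotting positions $i/(n+1)$; the crude grid search and the bisection-based quantile function are included only to demonstrate the objective and are not the optimizer used in the paper. All names are hypothetical.

```python
import math


def pl_cdf(y, alpha, lam):
    """Power Lindley cdf (assumed form); 0 for non-positive y."""
    if y <= 0.0:
        return 0.0
    u = y ** alpha
    return 1.0 - (1.0 + lam * u / (lam + 1.0)) * math.exp(-lam * u)


def mls_objective(y_hat, alpha, lam):
    """Sum of squared gaps between the fitted cdf and i/(n+1)."""
    y = sorted(y_hat)
    n = len(y)
    return sum((pl_cdf(v, alpha, lam) - i / (n + 1.0)) ** 2
               for i, v in enumerate(y, start=1))


def mls_grid_search(y_hat, alphas, lams):
    """Return the (alpha, lam) pair on the grid with the smallest objective."""
    grid = ((al, la) for al in alphas for la in lams)
    return min(grid, key=lambda p: mls_objective(y_hat, p[0], p[1]))


def pl_quantile(p, alpha, lam):
    """Invert the cdf by bisection (used here to build test data)."""
    lo, hi = 0.0, 1.0
    while pl_cdf(hi, alpha, lam) < p:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if pl_cdf(mid, alpha, lam) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

In an application, `y_hat` would hold the detrended values $\hat{Y}_i$, and the grid search would normally be replaced by a proper numerical minimizer started from a rough estimate.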

4. Simulation Study

In order to evaluate the estimation performance of the ML, MLS, MM and MLM estimators obtained in the previous section, some Monte Carlo simulation experiments are presented in this section. Throughout the simulation experiments, the scale parameter is assumed to be , without loss of generality and also the parameter set as and 2. For different sample sizes $n$ and values of the ratio parameter $a$, the means, biases and MSEs of the ML, MLS, MM and MLM estimators have been calculated. The results, based on 10,000 replications, are displayed in Tables 3–6.
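The structure of such a Monte Carlo experiment can be sketched for a single estimator. The example below only studies the nonparametric estimator of the ratio parameter and does not reproduce the tables of the paper; the sampler assumes the mixture form of the Power Lindley distribution, and the parameter values, seed and function names are illustrative choices.

```python
import math
import random


def pl_rvs(alpha, lam, rng):
    """Draw from Power Lindley via its Lindley mixture for X**alpha (assumed form)."""
    if rng.random() < lam / (lam + 1.0):
        u = rng.expovariate(lam)
    else:
        u = rng.gammavariate(2.0, 1.0 / lam)
    return u ** (1.0 / alpha)


def estimate_ratio(x):
    """Least-squares estimate of the ratio a from log inter-arrival times."""
    n = len(x)
    s = sum((n - 2 * i + 1) * math.log(xi) for i, xi in enumerate(x, start=1))
    return math.exp(6.0 * s / ((n - 1) * n * (n + 1)))


def simulate_ratio_estimator(n, a, alpha, lam, reps, seed=0):
    """Return (mean, bias, MSE) of the ratio estimator over reps replications."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        # X_i = Y_i / a**(i-1) with Y_i iid Power Lindley
        x = [pl_rvs(alpha, lam, rng) / a ** i for i in range(n)]
        estimates.append(estimate_ratio(x))
    mean = sum(estimates) / reps
    mse = sum((e - a) ** 2 for e in estimates) / reps
    return mean, mean - a, mse
```

The same loop extends to the other estimators by replacing `estimate_ratio` with the corresponding fitting routine and collecting the mean, bias and MSE for each parameter.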

Table 3.

The simulated Means, Biases and n × MSE values for the MLE, MLS, MM and MLM estimators of the parameters a, and , when .

Table 4.

The simulated Means, Biases and n × MSE values for the MLE, MLS, MM and MLM estimators of the parameters a, and , when .

Table 5.

The simulated Means, Biases and n × MSE values for the MLE, MLS, MM and MLM estimators of the parameters a, and , when .

Table 6.

The simulated Means, Biases and n × MSE values for the MLE, MLS, MM and MLM estimators of the parameters a, and , when .

When the results of the simulation experiments given in Tables 3–6 are analyzed, it is seen that the ML estimators have smaller MSE values than the other estimators in all cases. Therefore, the ML estimators outperform the modified estimators, with smaller bias and MSE. In addition, as the sample size $n$ increases, the bias and MSE values decrease for all estimators. These results suggest that all estimators obtained in the previous section are asymptotically unbiased and consistent.

5. Illustrative Examples

Practical applications of the parameter estimation procedures developed in Section 3 are illustrated in this section with two data sets: the aircraft data set and the coal mining disaster data set.

In the examples, we use two criteria, the mean-squared error (MSE*) [28] for the fitted values and the maximum percentage error (MPE), which are defined in [25], for comparing the stochastic processes GP and RP. The MSE* and MPE are described as follows:

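- MSE* = $\frac{1}{n} \sum_{k=1}^{n} \left(X_k - \hat{X}_k\right)^{2}$

- MPE = $\max_{1 \le k \le n} \left\{ \left|S_k - \hat{S}_k\right| / S_k \right\}$

where $\hat{X}_k$ is calculated by

$$\hat{X}_k = \begin{cases} \hat{\mu} / \hat{a}^{k-1}, & \text{GP, with } \hat{\mu} \text{ and } \hat{a} \text{ from the respective estimator (ML, MLS, MM or MLM)}, \\ \bar{X}, & \text{RP}, \end{cases}$$

and $S_k = \sum_{j=1}^{k} X_j$ and $\hat{S}_k = \sum_{j=1}^{k} \hat{X}_j$. Then, in order to compare the relative performances of the RP and the four GPs with the ML, MLS, MM and MLM estimators, the plot of $S_k$ and $\hat{S}_k$ against $k$ can be used.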
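The two comparison criteria can be computed with a few lines of Python. The sketch below assumes the definitions commonly used in the GP literature following Lam et al. [25]: MSE* is the average squared difference between observed and fitted inter-arrival times, and MPE is the largest relative gap between the cumulative observed and cumulative fitted times. The fitted values are taken to be the estimated mean of each inter-arrival time, and all function names are hypothetical.

```python
from itertools import accumulate


def mse_star(x, x_hat):
    """Mean squared error between observed and fitted inter-arrival times."""
    return sum((xi - fi) ** 2 for xi, fi in zip(x, x_hat)) / len(x)


def mpe(x, x_hat):
    """Maximum percentage error between cumulative observed and fitted times."""
    return max(abs(s - sh) / s for s, sh in zip(accumulate(x), accumulate(x_hat)))


def gp_fitted(mu_hat, a_hat, n):
    """Fitted values mu_hat / a_hat**(k-1), k = 1..n, for a GP model."""
    return [mu_hat / a_hat ** k for k in range(n)]


def rp_fitted(x):
    """Fitted values under an RP: every inter-arrival time equals the sample mean."""
    m = sum(x) / len(x)
    return [m] * len(x)
```

For example, with observations `[1, 2, 3]` and fitted values `[1, 2, 4]`, the squared errors average to 1/3 and the cumulative sums differ only at the last point, giving an MPE of 1/6.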

Example 1.

Aircraft data.

The aircraft dataset consists of 30 observations that deal with the air-conditioning system failure times of a Boeing 720 aircraft (aircraft number 7912). The aircraft dataset was originally studied by Proschan [29]. The successive failure times in aircraft dataset are 23, 261, 87, 7, 120, 14, 62, 47, 225, 71, 246, 21, 42, 20, 5, 12, 120, 11, 3, 14, 71, 11, 14, 11, 16, 90, 1, 16, 52, 95.

We first investigate the underlying distribution of the data set. To test whether the underlying distribution of the data is Power Lindley, the following procedure can be used. From Definition 1, we know that $Y_i = a^{i-1} X_i$ and that the $Y_i$s follow the Power Lindley distribution. Taking the logarithm of $Y_i$, we can immediately write $\ln Y_i = \ln X_i + (i-1) \ln a$. Note that the $\ln Y_i$s follow the Log-Power Lindley distribution. Therefore, a linear regression model

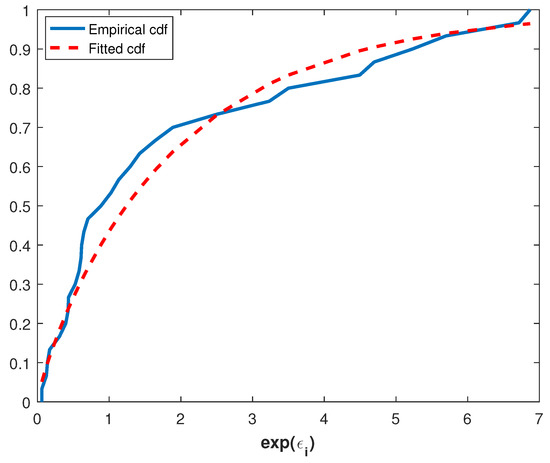

can be defined, where and . If the exponentiated errors are Power Lindley distributed, then the underlying distribution of the data set is Power Lindley. The error term in Equation (39) can be estimated by

where . Thus, consistency of the exponentiated errors to Power Lindley distribution can be tested by using a goodness of fit test such as Kolmogorov–Smirnov (K-S). The parameter estimates of the exponentiated errors are and and also the value of the K-S test is 0.1225 and the corresponding p-value is 0.7134. Therefore, it can be said that the underlying distribution of this data set is Power Lindley. This can also be seen from Figure 2, which illustrates the plots of the empirical and the fitted cdf.
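The goodness-of-fit step can be sketched as follows. The code assumes that the error term of the regression corresponds to $\ln Y_i$, so that the exponentiated residuals equal $\hat{a}^{i-1} X_i$; this interpretation, like the function names, is an assumption of the sketch. Only the Kolmogorov–Smirnov statistic is computed; a p-value would require the K-S distribution, which is not implemented here.

```python
import math


def exponentiated_errors(x, a_hat):
    """Return exp(ln x_i + (i-1) ln a_hat) for i = 1..n."""
    return [math.exp(math.log(xi) + i * math.log(a_hat)) for i, xi in enumerate(x)]


def pl_cdf(y, alpha, lam):
    """Power Lindley cdf (assumed form); 0 for non-positive y."""
    if y <= 0.0:
        return 0.0
    u = y ** alpha
    return 1.0 - (1.0 + lam * u / (lam + 1.0)) * math.exp(-lam * u)


def ks_statistic(sample, cdf):
    """Two-sided Kolmogorov-Smirnov distance between a sample and a cdf."""
    y = sorted(sample)
    n = len(y)
    d = 0.0
    for i, v in enumerate(y, start=1):
        F = cdf(v)
        d = max(d, i / n - F, F - (i - 1) / n)
    return d
```

A call such as `ks_statistic(errors, lambda v: pl_cdf(v, alpha_hat, lam_hat))` gives the distance between the exponentiated residuals and the fitted Power Lindley cdf, where `alpha_hat` and `lam_hat` stand for whatever estimates are being checked.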

Figure 2.

Empirical and fitted cdf of the .

When a GP with the Power Lindley distribution is used to model this dataset, the ML, MLS, MM and MLM estimates of the parameters $a$, $\alpha$ and $\lambda$, together with the MSE* and MPE values, are as tabulated in Table 7.

As can be seen from Table 7, the ML estimates have the smallest MSE* value, consistent with the simulation experiments. The estimator with the smallest MPE is the MM, but the MPE values of the ML and MLM estimators are very close to that of the MM.

Table 7.

Estimation of parameters for the Aircraft data set and the process comparison.

Now, we check the model optimality. The Akaike information criterion (AIC) and the maximized log-likelihood (L) value are commonly used to select an optimal model for a data set. To decide on an optimal model among the Power Lindley and its alternatives (Log-Normal, Gamma and inverse Gaussian) for this data set, we compute the -L and AIC values, which are given in Table 8.

Table 8.

AIC and negative Log-Likelihood values for the Aircraft data set.

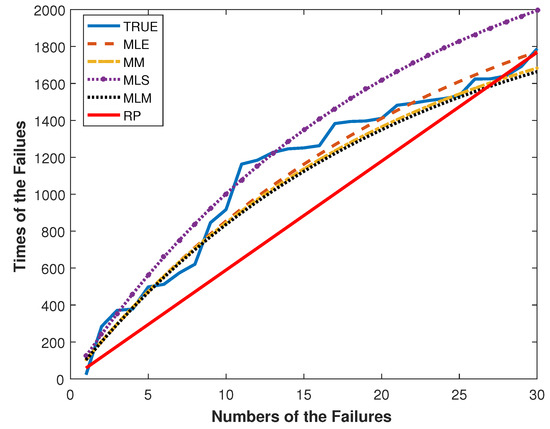

The results given in Table 8 show that the Power Lindley distribution is an optimal model for the aircraft dataset, as it has the smallest AIC and -L values. In Figure 3, the failure times for the aircraft data and their fitted times are plotted.

Figure 3.

The plots of and against the number of failures for the aircraft data.

The modeling performances of the RP and of the GP with the ML, MLS, MM and MLM estimators can be easily compared using Figure 3, which shows that the GP with each of the four estimators outperforms the RP. This result is consistent with Table 7.

Example 2.

Coal mining disaster data.

This example, taken from [30], concerns the intervals in days between successive coal mining disasters in Great Britain from 1851 to 1962. The coal mining disaster data set, which includes 190 successive intervals, was used as an illustrative example for the GP in [7,10,24].

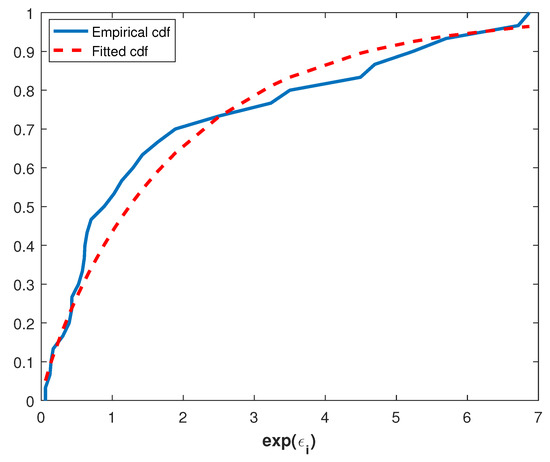

For this data set, the value of the K-S test statistic is 0.0396 and the corresponding p-value is 0.9148. Thus, the Power Lindley distribution is an appropriate model for the coal mining disaster data. This is also supported by the Q-Q plot in Figure 4, which is constructed by plotting the ordered exponentiated errors against the quantiles of the fitted Power Lindley distribution: the data points fall approximately on a straight line.

Figure 4.

Q-Q plot of the coal mining disaster data.

For the coal mining disaster data set, the estimates of the parameters $a$, $\alpha$ and $\lambda$ are given in Table 9.

Table 9.

Estimation of parameters for the coal mining disaster data and process comparison.

Moreover, the AIC and -L values calculated for the coal mining disaster data set under the different models are tabulated in Table 10.

Table 10.

AIC and negative Log-Likelihood values for coal mining disaster data.

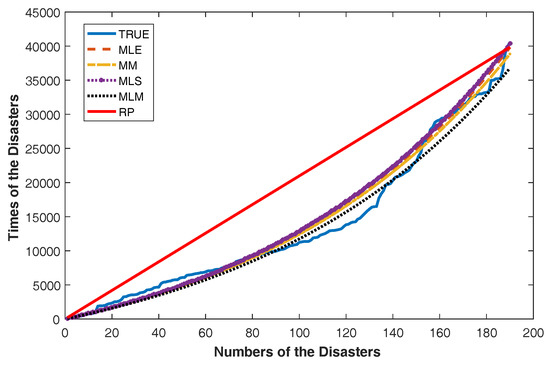

We can say that the Power Lindley distribution is an optimal model for this data set since it has minimum AIC and -L values. Under the assumption that the underlying distribution of the data is Power Lindley, we present Figure 5 for comparing the modeling performance of the RP and the GP with four estimators obtained in the previous section. Figure 5 plots and versus the number of disasters .

Figure 5.

The plots and against the number of failures for the coal mining disaster data.

As can be seen in Figure 5, the GP with the ML, MLS, MM and MLM estimators follows the observed values more closely than the RP. It can also be seen from Table 9 that the MSE* and MPE values of the GP models are much smaller than those of the RP. Thus, according to Figure 5 and Table 9, it is concluded that the GP provides a better fit to the data than the RP.

6. Conclusions

In this paper, we have discussed the parameter estimation problem for the GP under the assumption that the distribution of the first inter-arrival time is Power Lindley with parameters $\alpha$ and $\lambda$. The problem has been approached from two points of view: parametric (ML) and nonparametric (MLS, MM and MLM). The parametric ML estimators of the parameters $a$, $\alpha$ and $\lambda$ are asymptotically normally distributed. However, more work is needed to establish the asymptotic properties of the nonparametric estimators MLS, MM and MLM. This is usually not an easy task because these estimators cannot be written in analytical form.

The numerical results have shown that the ML estimators outperform the MLS, MM and MLM estimators, with smaller bias and MSE. In addition, it has been observed that both the bias and the MSE of all estimators decrease as the sample size increases. Hence, in light of the numerical studies, all of the estimators appear to be asymptotically unbiased and consistent.

In the illustrative examples presented to demonstrate the data modeling performance of the GP with the obtained estimators, the GP with the Power Lindley distribution gives a better fit than the RP in both examples. In addition, according to the AIC and -L values, modeling both the aircraft dataset and the coal mining disaster dataset with a GP with the Power Lindley distribution is more appropriate than with a GP with the Gamma, Log-Normal or inverse Gaussian distribution. Therefore, a GP with the Power Lindley distribution is an important alternative to a GP with well-known distributions such as the Gamma, Log-Normal or inverse Gaussian for modeling successive inter-arrival times.

Funding

This research received no external funding.

Acknowledgments

The author is sincerely grateful to the anonymous referees for their comments and suggestions that greatly improved an early version of this paper.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

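According to [26], the first two population L-moments of a continuous distribution are calculated by

$$\lambda_1 = \int x f(x)\, dx \qquad (A1)$$

and

$$\lambda_2 = \int x \left[2F(x) - 1\right] f(x)\, dx, \qquad (A2)$$

respectively, where $f(x)$ and $F(x)$ are the pdf and the cdf of the random variable $X$ (the subscripted $\lambda_1$ and $\lambda_2$ denote L-moments, following [26], and are distinct from the parameter $\lambda$). Obviously, the first population L-moment is the expectation of the random variable $X$. Thus, from Table 2, the first L-moment of the Power Lindley distribution is

$$\lambda_1 = \frac{\Gamma(1/\alpha)\left[\alpha(\lambda + 1) + 1\right]}{\alpha^{2} \lambda^{1/\alpha} (\lambda + 1)}.$$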

Now, we calculate the second population L-moment of Power Lindley distribution. Substituting the cdf given by (5) into (A2), we can write

In the last equality, using given in (4), it can be easily written

Therefore, by using the gamma function, we have

References

1. Lam, Y. Geometric processes and replacement problem. Acta Math. Appl. Sin. 1988, 4, 366–377.

2. Lam, Y. The Geometric Process and Its Applications; World Scientific: Singapore, 2007.

3. Demirci Bicer, H.; Bicer, C. Discrimination Between Gamma and Lognormal Distributions for Geometric Process Data. In Researches on Science and Art in 21st Century Turkey; Gece Publishing: Ankara, Turkey, 2017; Chapter 315; pp. 2830–2836.

4. Demirci Bicer, H.; Bicer, C. Discriminating between the Gamma and Weibull Distributions for Geometric Process Data. Int. J. Econ. Admin. Stud. 2018, 18, 239–252.

5. Chan, J.S.; Lam, Y.; Leung, D.Y. Statistical inference for geometric processes with gamma distributions. Comput. Stat. Data Anal. 2004, 47, 565–581.

6. Lam, Y.; Chan, S.K. Statistical inference for geometric processes with lognormal distribution. Comput. Stat. Data Anal. 1998, 27, 99–112.

7. Kara, M.; Aydogdu, H.; Turksen, O. Statistical inference for geometric process with the inverse Gaussian distribution. J. Stat. Comput. Simul. 2015, 85, 3206–3215.

8. Biçer, C.; Biçer, H.D.; Kara, M.; Aydoğdu, H. Statistical inference for geometric process with the Rayleigh distribution. Commun. Fac. Sci. Univ. Ankara Ser. A1 Math. Stat. 2019, 68, 149–160.

9. Bicer, C. Statistical Inference for Geometric Process with the Two-parameter Rayleigh Distribution. In The Most Recent Studies in Science and Art; Gece Publishing: Ankara, Turkey, 2018; Chapter 43; pp. 576–583.

10. Bicer, C.; Demirci Bicer, H. Statistical Inference for Geometric Process with the Lindley Distribution. In Researches on Science and Art in 21st Century Turkey; Gece Publishing: Ankara, Turkey, 2017; Chapter 314; pp. 2821–2829.

11. Ghitany, M.; Al-Mutairi, D.K.; Balakrishnan, N.; Al-Enezi, L. Power Lindley distribution and associated inference. Comput. Stat. Data Anal. 2013, 64, 20–33.

12. Pararai, M.; Warahena-Liyanage, G.; Oluyede, B.O. Exponentiated power Lindley–Poisson distribution: Properties and applications. Commun. Stat. Theory Methods 2017, 46, 4726–4755.

13. Ashour, S.K.; Eltehiwy, M.A. Exponentiated power Lindley distribution. J. Adv. Res. 2015, 6, 895–905.

14. Alizadeh, M.; MirMostafaee, S.; Ghosh, I. A new extension of power Lindley distribution for analyzing bimodal data. Chil. J. Stat. 2017, 8, 67–86.

15. Warahena-Liyanage, G.; Pararai, M. A generalized power Lindley distribution with applications. Asian J. Math. Appl. 2014, 2014, ama0169.

16. Alizadeh, M.; Altun, E.; Ozel, G. Odd Burr power Lindley distribution with properties and applications. Gazi Univ. J. Sci. 2017, 30, 139–159.

17. Iriarte, Y.A.; Rojas, M.A. Slashed power-Lindley distribution. Commun. Stat. Theory Methods 2018, 1–12.

18. Barco, K.V.P.; Mazucheli, J.; Janeiro, V. The inverse power Lindley distribution. Commun. Stat. Simul. Comput. 2017, 46, 6308–6323.

19. Alizadeh, M.; K MirMostafaee, S.; Altun, E.; Ozel, G.; Khan Ahmadi, M. The odd log-logistic Marshall-Olkin power Lindley Distribution: Properties and applications. J. Stat. Manag. Syst. 2017, 20, 1065–1093.

20. Demirci Bicer, H. Parameter Estimation for Finite Mixtures of Power Lindley Distributions. In The Most Recent Studies in Science and Art; Gece Publishing: Ankara, Turkey, 2018; Chapter 128; pp. 1659–1669.

21. Arellano-Valle, R.B.; Contreras-Reyes, J.E.; Stehlík, M. Generalized skew-normal negentropy and its application to fish condition factor time series. Entropy 2017, 19, 528.

22. Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables; Courier Corporation: Chelmsford, MA, USA, 1964; Volume 55.

23. Cox, D.; Barndorff-Nielsen, O. Inference and Asymptotics; CRC Press: Boca Raton, FL, USA, 1994.

24. Yeh, L. Nonparametric inference for geometric processes. Commun. Stat. Theory Methods 1992, 21, 2083–2105.

25. Lam, Y.; Zhu, L.X.; Chan, J.S.; Liu, Q. Analysis of data from a series of events by a geometric process model. Acta Math. Appl. Sin. Engl. Ser. 2004, 20, 263–282.

26. Hosking, J.R. L-moments: Analysis and estimation of distributions using linear combinations of order statistics. J. R. Stat. Soc. Ser. B (Methodol.) 1990, 52, 105–124.

27. Swain, J.J.; Venkatraman, S.; Wilson, J.R. Least-squares estimation of distribution functions in Johnson’s translation system. J. Stat. Comput. Simul. 1988, 29, 271–297.

28. Wand, M.; Jones, M. Kernel Smoothing; Chapman & Hall: London, UK, 1995.

29. Proschan, F. Theoretical explanation of observed decreasing failure rate. Technometrics 1963, 5, 375–383.

30. Andrews, D.; Herzberg, A. Data; Springer: New York, NY, USA, 1985.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).