A Cost/Speed/Reliability Tradeoff to Erasing

Abstract

1. Introduction

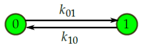

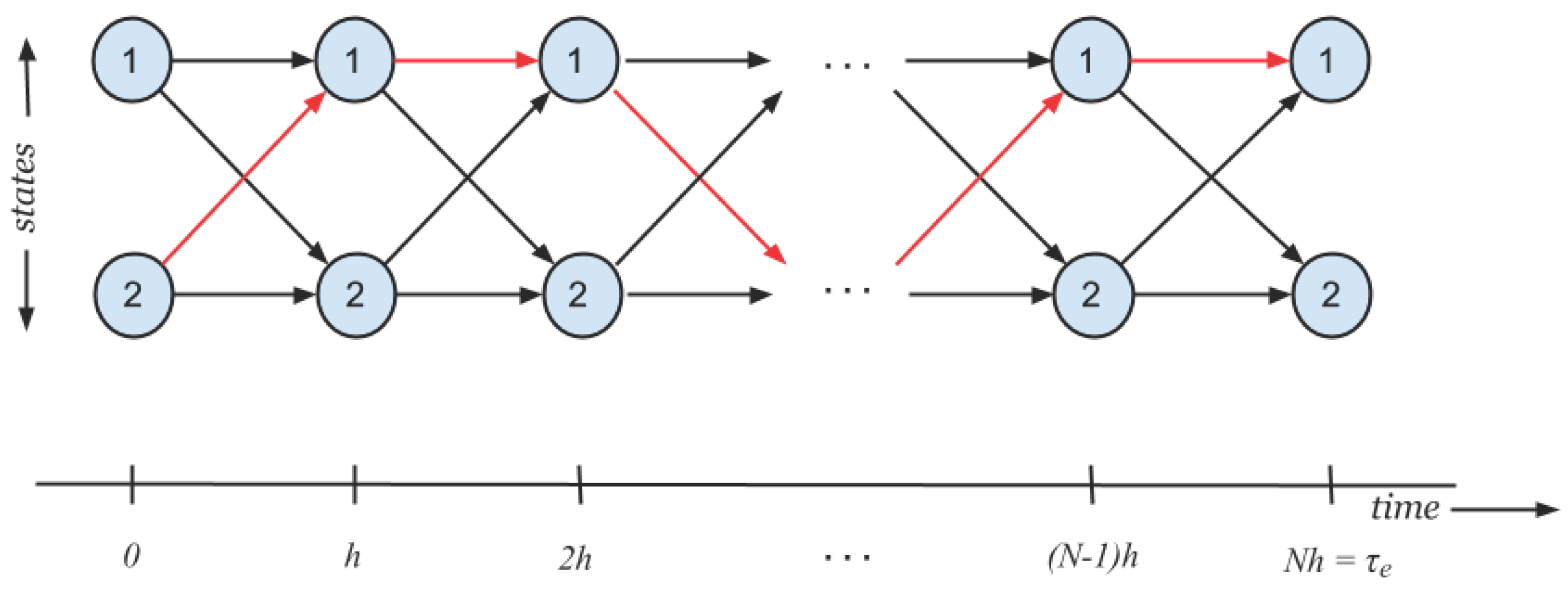

2. The Erasing Problem

Kullback–Leibler Cost

3. Solution to the Erasing Problem

4. Interpreting the KL Cost

4.1. Path Space Szilard–Landauer Correspondence

4.2. Thermodynamic Interpretation

4.2.1. Thermodynamics on a Two-State Markov Chain

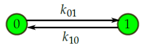

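- Consider again the two-state continuous-time Markov chain with passive dynamics given by transition rates $k_{01}$ (from “0” to “1”) and $k_{10}$ (from “1” to “0”). Let $E_0$ and $E_1$ denote the internal energy of states “0” and “1”, respectively. Then, the equilibrium distribution is given by $\pi_0 \propto e^{-E_0/k_B T}$ and $\pi_1 \propto e^{-E_1/k_B T}$. We also have $\pi_0 k_{01} = \pi_1 k_{10}$ from detailed balance. Together this yields

  $$\frac{k_{01}}{k_{10}} = e^{-(E_1 - E_0)/k_B T}. \tag{9}$$

- Now consider the same two-state system with a control applied to it by means of a field of potential $V$ so that the potential energy in state $i$ becomes $E_i + V_i$. The transition rates due to the control become $u_{01}$ and $u_{10}$. By a reasoning similar to how we derived Equation (9), we get

  $$\frac{u_{01}}{u_{10}} = e^{-(E_1 + V_1 - E_0 - V_0)/k_B T}.$$

  Combining with Equation (9), this yields

  $$\frac{u_{01}}{u_{10}} = \frac{k_{01}}{k_{10}}\, e^{-(V_1 - V_0)/k_B T}.$$

- Given a distribution $p = (p_0, p_1)$ on the states, we can define the following thermodynamic quantities:

  - Expected internal energy $E(p) = p_0 E_0 + p_1 E_1$.

  - Entropy $S(p) = -k_B \left(p_0 \log p_0 + p_1 \log p_1\right)$.

  - Nonequilibrium free energy $F(p) = E(p) - T S(p)$.

- Given a transition from state $i$ to state $j$ in the presence of the control field, we can define the following thermodynamic quantities:

  - Heat dissipated $Q_{ij} = (E_i + V_i) - (E_j + V_j) = k_B T \log \dfrac{u_{ij}}{u_{ji}}$.

  - Entropy increase of the system $\Delta S_{ij} = k_B \log \dfrac{p_i}{p_j}$.

- Suppose the system is described at time $t$ by a distribution $p(t) = (p_0(t), p_1(t))$. Define the Current $J(t) = p_0(t)\, u_{01} - p_1(t)\, u_{10}$ so that $\dot{p}_1(t) = J(t) = -\dot{p}_0(t)$.

- We can further compute

  $$\frac{d}{dt} E(p(t)) = J(t)\,(E_1 - E_0), \qquad \frac{d}{dt} S(p(t)) = k_B\, J(t) \log \frac{p_0(t)}{p_1(t)}.$$

- Define Total Entropy Production $\Delta S_{\mathrm{tot}}(t)$ to be the total entropy produced from time 0 to time $t$. In other words,

  $$\Delta S_{\mathrm{tot}}(t) = \int_0^t J(s) \left( \Delta S_{01} + \frac{Q_{01}}{T} \right) ds.$$

  After simplification,

  $$\Delta S_{\mathrm{tot}}(t) = k_B \int_0^t \left( p_0(s)\, u_{01} - p_1(s)\, u_{10} \right) \log \frac{p_0(s)\, u_{01}}{p_1(s)\, u_{10}} \, ds \;\ge\; 0,$$

  which is a statement of the second law of thermodynamics.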

- The following identity is immediate and is another form of the first law.
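The bookkeeping for the controlled two-state chain can be checked numerically. The following is a minimal sketch, not the paper's own procedure. The names `k01`, `k10` (passive rates), `u01`, `u10` (controlled rates), `v0`, `v1` (control potentials) and `kT` are assumed notation. Splitting the potential bias symmetrically between the two controlled rates is one illustrative choice among many that satisfy detailed balance. The entropy production uses the standard flux-ratio expression for Markov jump processes, in units of the Boltzmann constant, and the integrator is a plain Euler scheme.

```python
import math

def controlled_rates(k01, k10, v0, v1, kT=1.0):
    """Return (u01, u10) with u01/u10 = (k01/k10) * exp(-(v1 - v0)/kT).

    Assumption: the bias is split symmetrically between the two rates;
    detailed balance only fixes the ratio, not the individual rates.
    """
    bias = math.exp(-(v1 - v0) / (2.0 * kT))
    return k01 * bias, k10 / bias

def simulate(p1_init, u01, u10, t_end, steps=20000):
    """Euler-integrate dp1/dt = J, where J = p0*u01 - p1*u10.

    Returns the final p1 and the accumulated total entropy production
    (in units of k_B), using the rate J * log(p0*u01 / (p1*u10)).
    """
    dt = t_end / steps
    p1 = p1_init
    sigma = 0.0
    for _ in range(steps):
        p0 = 1.0 - p1
        flux_fwd = p0 * u01   # probability flux from state 0 to state 1
        flux_bwd = p1 * u10   # probability flux from state 1 to state 0
        current = flux_fwd - flux_bwd
        if flux_fwd > 0.0 and flux_bwd > 0.0:
            sigma += current * math.log(flux_fwd / flux_bwd) * dt
        p1 += current * dt
    return p1, sigma

# Hypothetical parameters: symmetric passive rates, field raising state 1 by 2 kT.
u01, u10 = controlled_rates(1.0, 1.0, v0=0.0, v1=2.0)
p1_final, sigma = simulate(0.5, u01, u10, t_end=10.0)
print(p1_final, sigma)
```

With a field held constant in time, the distribution relaxes to the Boltzmann distribution of the biased energies, and the accumulated entropy production stays nonnegative, consistent with the second-law statement above.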

4.2.2. Thermodynamic Cost for Rapid Erasing of a Reliable Bit

4.2.3. Link between KL-Cost and Thermodynamic Work

4.3. Large Deviations Interpretation

4.4. Gibbs Measure

5. Conclusions

Acknowledgments

Conflicts of Interest


© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Gopalkrishnan, M. A Cost/Speed/Reliability Tradeoff to Erasing. Entropy 2016, 18, 165. https://doi.org/10.3390/e18050165
