Abstract

This paper proposes a novel estimator of mutual information for discrete and continuous variables. The main feature of this estimator is that, for a large sample size n, it is zero if and only if the two variables are independent. The estimator constructs several histograms, computes estimations of mutual information for them, and chooses the maximum value. We prove that the number of histograms constructed has an upper bound of $O(\log n)$ and apply this fact to the search. We compare the performance of the proposed estimator with that of an estimator of the Hilbert-Schmidt independence criterion (HSIC), although the proposed method is based on the minimum description length (MDL) principle whereas the HSIC provides a statistical test. The proposed method completes the estimation in $O(n\log n)$ time, whereas the HSIC kernel computation requires $O(n^3)$ time. We also present examples in which the HSIC fails to detect dependence but the proposed method successfully detects it.

1. Introduction

Shannon’s information theory [1] has contributed to the development of communication and storage systems in which sequences can be compressed up to the entropy of the source assuming that the sender and receiver know the probability of each sequence. In the 30 years since its birth, information theory has developed such that sequences can be compressed without sharing the associated probability (universal coding): the probability of each future sequence can be learned from the past sequence such that the compression ratio of the total sequence converges to its entropy.

Mutual information is a quantity that can be used to analyze the performance of encoding and decoding in information theory. Its value expresses the dependency between two random variables: it is nonnegative, and it is zero if and only if they are independent. Mutual information can be estimated from actual sequences. In this paper, we construct an estimator of mutual information based on the minimum description length (MDL) principle [2] such that, for long sequences, the estimator is zero if and only if the two variables are independent.

In any science, a law is determined based on experiments: the law should be simple and explain the experiments. Suppose that we generate pairs of a rule and its exceptions for the experiments and describe the pairs using universal coding. Then, the MDL principle chooses the rule of the pair that has the shortest description length (the number of bits) as the scientific law: the simpler the rule is, the more exceptions there are. In our situation, two variables may be either independent or dependent, and we compute the values of the corresponding description lengths to choose one of them based on which length is shorter. We estimate mutual information based on the difference between the description length values assuming that the two variables are independent and dependent, divided by the original sequence length n.

Let X and Y be discrete random variables. Suppose that we have n examples $(x_1,y_1),\dots,(x_n,y_n)$ and that we wish to know whether X and Y are independent, denoted as $X \perp\!\!\!\perp Y$, without knowing the distributions $P_X$, $P_Y$, and $P_{XY}$ of X, Y, and (X, Y), respectively.

One way of approaching this problem would be to estimate the correlation coefficient $\rho(X,Y)$ and determine whether it is close to zero. Although the notions of independence and correlation are close, $\rho(X,Y) = 0$ does not by itself imply that X and Y are independent. For example, let X and U be mutually independent variables such that X follows the standard Gaussian distribution and U takes the values $\pm 1$ with probability 0.5 each, and let $Y = XU$. Clearly, X and Y are not independent (because $|Y| = |X|$), but note that $E[X] = 0$, $E[U] = 0$, $E[Y] = E[X]E[U] = 0$, and

$$E[XY] = E[X^2]\,E[U] = 0,$$

which means that

$$\rho(X,Y) = \frac{E[XY] - E[X]E[Y]}{\sqrt{V[X]\,V[Y]}} = 0.$$

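For this problem, we know that the mutual information defined by

$$I(X,Y) := \sum_{x}\sum_{y} P_{XY}(x,y)\log\frac{P_{XY}(x,y)}{P_X(x)\,P_Y(y)}$$

satisfies

$$I(X,Y) \ge 0, \qquad I(X,Y) = 0 \iff X \perp\!\!\!\perp Y.$$

Thus, it is sufficient to estimate $I(X,Y)$ and determine whether it is positive.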

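Given $x^n = (x_1,\dots,x_n)$ and $y^n = (y_1,\dots,y_n)$, one might estimate $I(X,Y)$ by plugging the frequencies $c_n(x)$, $c_n(y)$, and $c_n(x,y)$ of $x$, $y$, and $(x,y)$ in $x^n$, $y^n$, and $(x^n,y^n)$, divided by n, into $P_X(x)$, $P_Y(y)$, and $P_{XY}(x,y)$, respectively, to obtain the quantity

$$I_n(x^n,y^n) := \sum_{x}\sum_{y}\frac{c_n(x,y)}{n}\log\frac{n\,c_n(x,y)}{c_n(x)\,c_n(y)}. \qquad (1)$$

However, we observe that $I_n(x^n,y^n) > 0$ even when $X \perp\!\!\!\perp Y$ for large values of n. In fact, since Equation (1) is the Kullback-Leibler divergence between the empirical joint distribution $c_n(x,y)/n$ and the product $c_n(x)c_n(y)/n^2$ of the empirical marginals, we have $I_n(x^n,y^n) \ge 0$, and $I_n(x^n,y^n) = 0$ if and only if

$$\frac{c_n(x,y)}{n} = \frac{c_n(x)}{n}\cdot\frac{c_n(y)}{n}$$

for all $(x,y)$, a condition that, with positive probability, fails infinitely many times even when $X \perp\!\!\!\perp Y$. Thus, we need to guess $X \perp\!\!\!\perp Y$ when $I_n(x^n,y^n)$ is small, say when $I_n(x^n,y^n) \le \delta(n)$ for some appropriate function $\delta$ of n:

$$X \perp\!\!\!\perp Y \iff I_n(x^n,y^n) \le \delta(n). \qquad (2)$$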
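As a minimal sketch (Python standard library only; the function name `plugin_mi` is ours, not the paper's), the plug-in quantity of Equation (1) can be computed directly from counts. Running it on independently generated data illustrates the point made above: the estimate is nonnegative and typically strictly positive even though the true mutual information is zero.

```python
import math
import random
from collections import Counter


def plugin_mi(xs, ys):
    """Plug-in estimate I_n(x^n, y^n) of Equation (1), in bits.

    The relative frequencies c_n(x)/n, c_n(y)/n and c_n(x, y)/n replace
    P_X(x), P_Y(y) and P_XY(x, y); pairs that never occur contribute 0.
    """
    n = len(xs)
    cx, cy, cxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    return sum((c / n) * math.log2(n * c / (cx[x] * cy[y]))
               for (x, y), c in cxy.items())


if __name__ == "__main__":
    rng = random.Random(0)
    for n in (100, 1000, 10000):
        # X and Y are drawn independently, so the true I(X, Y) is 0 ...
        xs = [rng.randint(0, 1) for _ in range(n)]
        ys = [rng.randint(0, 2) for _ in range(n)]
        # ... but the plug-in estimate is (almost always) strictly positive.
        print(n, plugin_mi(xs, ys))
```

Because the estimate is a Kullback-Leibler divergence between empirical distributions, it can only be exactly zero when the empirical joint table factorizes exactly.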

Nobody was certain that such a function of sample size n exists.

In 1993, Suzuki [3] identified such a function δ and proposed a new mutual information estimator $J_n$ such that

$$J_n(x^n,y^n) \le 0 \iff X \perp\!\!\!\perp Y \qquad (3)$$

for large n based on the minimum description length (MDL) principle. The exact form of δ is presented in Section 2. In this paper, we extend the estimation of mutual information to the case where X and Y may be continuous.

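There are many ways of estimating mutual information for continuous variables. If we assume that X and Y are Gaussian, then the mutual information is expressed by

$$I(X,Y) = -\frac{1}{2}\log\left(1-\rho(X,Y)^2\right), \qquad (4)$$

and we can show that

$$-\frac{1}{2}\log\left(1-\hat{\rho}_n^2\right) - \frac{\log n}{2n} \le 0 \iff X \perp\!\!\!\perp Y$$

for large n, where $\hat{\rho}_n$ is the maximum likelihood estimator of $\rho(X,Y)$. However, this equivalence holds only for Gaussian variables.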
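The Gaussian route can be sketched as follows (Python, standard library only; all names are ours). `gaussian_mi` evaluates Equation (4) in bits. `gaussian_mdl_statistic` subtracts a penalty of $\frac{\log n}{2n}$ for the single parameter ρ; this penalized form is our assumption, modeled on the $\frac{k}{2n}\log n$ penalties used elsewhere in the paper, and nonpositive values are read as independence.

```python
import math
import random


def sample_correlation(xs, ys):
    """Maximum likelihood (sample) estimate of rho(X, Y)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def gaussian_mi(rho):
    """Equation (4) in bits: I(X, Y) = -(1/2) log2(1 - rho^2)."""
    return -0.5 * math.log2(1.0 - rho ** 2)


def gaussian_mdl_statistic(xs, ys):
    """Assumed penalized form: Equation (4) at the MLE minus log2(n) / (2n)."""
    n = len(xs)
    return gaussian_mi(sample_correlation(xs, ys)) - math.log2(n) / (2 * n)


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.gauss(0, 1) for _ in range(1000)]
    indep = [rng.gauss(0, 1) for _ in range(1000)]
    dep = [x + rng.gauss(0, 1) for x in xs]  # correlation about 0.71
    print(gaussian_mdl_statistic(xs, indep), gaussian_mdl_statistic(xs, dep))
```

The statistic depends on the data only through the sample correlation, which is why it is blind to dependence that leaves ρ at zero.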

For general settings, several mutual information estimators are available, such as kernel density-based estimators [4], k-nearest-neighbor estimators [5,6], and estimators based on quantizers [7]. In general, the kernel-based method requires an extraordinarily large computational effort to test for independence. To overcome this problem, more efficient estimators have been proposed, such as the computationally efficient estimator of [5]. However, correctness, such as consistency, is required and has a higher priority than efficiency. Although some of these methods converge to the correct value for large n [7], the estimated values are positive with nonzero probability for large n when X and Y are independent ($X \perp\!\!\!\perp Y$).

Currently, the construction of nonlinear alternatives to $\rho(X,Y)$ for testing independence between X and Y by using positive definite kernels is becoming popular. In particular, a quantity known as the Hilbert-Schmidt independence criterion (HSIC) [8], which is defined in Section 2.3, is extensively used for independence testing. It is known that the HSIC value depends on the kernels k and l defined on the ranges of X and Y, respectively, and

$$\mathrm{HSIC}_{k,l}(X,Y) = 0 \iff X \perp\!\!\!\perp Y \qquad (5)$$

if the kernel pair is chosen properly. In this paper, we assume that we always use such a kernel pair and simply denote $\mathrm{HSIC}_{k,l}(X,Y)$ by $\mathrm{HSIC}(X,Y)$. For the estimation of $\mathrm{HSIC}(X,Y)$ given $x^n$ and $y^n$, the most popular estimator $\mathrm{HSIC}_n(x^n,y^n)$, which is defined in Section 2.3, always takes positive values; given a significance level α (typically, α = 0.05), we need to obtain a threshold $\delta^*_n$ such that the decision

$$X \perp\!\!\!\perp Y \iff \mathrm{HSIC}_n(x^n,y^n) \le \delta^*_n$$

is as accurate as possible.

In this paper, we propose a new estimator of mutual information. This new estimator quantizes the two-dimensional Euclidean space of X and Y into $2^u \times 2^u$ bins for $u = 0, 1, 2, \dots$. For each value of u, which indicates a histogram, we obtain the estimation $J_n(u)$ of mutual information for discrete variables. The maximum value of $J_n(u)$ over u is the final estimation. We prove that the optimal value of u is $O(\log n)$. In particular, the proposed method divides the data without distinguishing between discrete and continuous data, and it satisfies Equation (3).

Then, we experimentally compare the proposed estimator of mutual information with the HSIC estimator in terms of independence testing. Although we obtained several insights, we could not confirm that either estimator outperforms the other. However, we found that the HSIC only considers the magnitude of the data and can fail to detect relations among the data that cannot be identified by simply observing changes in magnitude. We present two examples for which the HSIC fails to detect the dependence between X and Y owing to this limitation. The proposed estimation procedure completes the computation in $O(n\log n)$ time, whereas the HSIC requires $O(n^3)$ time. In this sense, the proposed method based on mutual information would be useful in many situations.

The remainder of this paper is organized as follows. Section 2 provides the background for this work: Section 2.1 explains the estimation of mutual information for discrete variables, Section 2.2 relates it to maximizing the posterior probability, and Section 2.3 explains the HSIC and its estimation. Section 3 presents the contributions of this paper: Section 3.1 proposes the new algorithm for estimating mutual information, Section 3.2 mathematically proves the merits of the proposed method, and Section 3.3 presents the results of preliminary experiments. Section 4 presents the results of experiments using the R language that compare the proposed estimator of mutual information with its HSIC counterpart in terms of independence testing. Section 5 summarizes the contributions and discusses opportunities for future work.

Throughout the paper, the base two logarithm is assumed unless specified otherwise.

2. Background

This section describes the basic properties of the estimation of mutual information for discrete variables and of the HSIC.

2.1. Mutual Information for Discrete Variables

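In 1993, Suzuki [3] proposed an estimator of mutual information based on the minimum description length (MDL) principle [2]. Given examples, the MDL principle chooses the rule that minimizes the total description length when the examples are described in terms of a rule and its exceptions. In this case, there are two candidate rules: X and Y are either independent or not. When they are independent, we first describe the probability values of X and of Y separately, and using them, the examples can be described. The total length will be

$$nH_n(x^n) + \frac{\alpha-1}{2}\log n \qquad (6)$$

plus

$$nH_n(y^n) + \frac{\beta-1}{2}\log n \qquad (7)$$

up to constant values, where α and β are the cardinalities of X and Y, respectively, and $H_n(x^n) := -\sum_x \frac{c_n(x)}{n}\log\frac{c_n(x)}{n}$ is the empirical entropy, with $c_n(x)$ the number of occurrences of x in $x^n$ (similarly for $H_n(y^n)$ and $H_n(x^n,y^n)$). When they are not independent, we describe the examples in length

$$nH_n(x^n,y^n) + \frac{\alpha\beta-1}{2}\log n \qquad (8)$$

up to constant values. Hence, the difference Equation (6) + Equation (7) − Equation (8) divided by n is

$$J_n(x^n,y^n) := I_n(x^n,y^n) - \frac{(\alpha-1)(\beta-1)}{2n}\log n. \qquad (9)$$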

It is known that

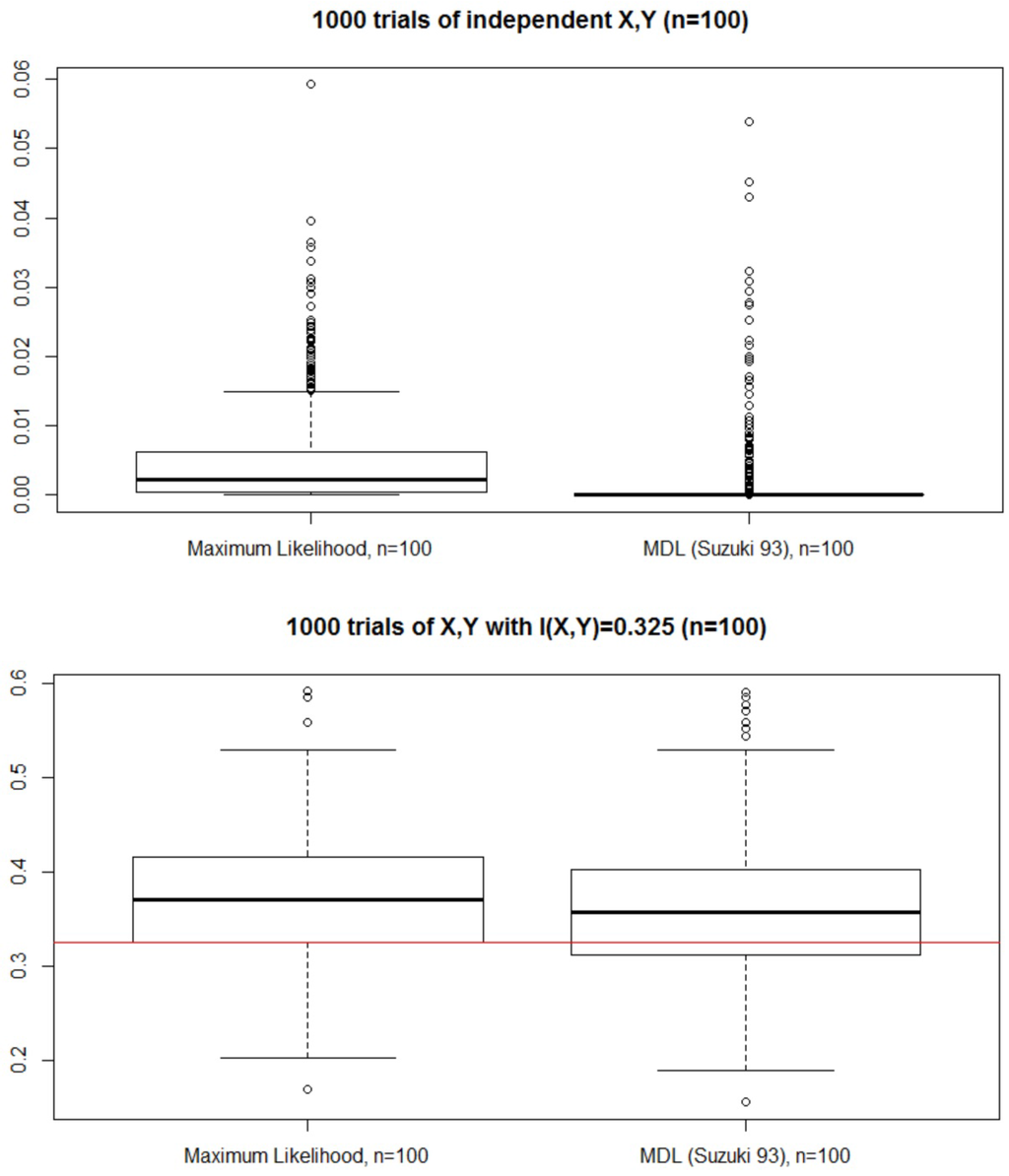

for large n [9], which means that in Equation (2). Figure 1 presents a box plot of 1000 trials for the two estimations for and , where X and Y are independent and dependent, respectively.

Figure 1.

Estimating mutual information: the minimum description length (MDL) computes the correct values, whereas the maximum likelihood yields values that are larger than the correct values.
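A minimal Python sketch of Equation (9) follows. Here α and β are passed explicitly as the cardinalities of X and Y, and the helper names are ours. The decision rule reads $J_n \le 0$ as independence, which is how we read the equivalence stated above.

```python
import math
import random
from collections import Counter


def empirical_entropy(seq):
    """H_n in bits, computed from the relative frequencies of the symbols in seq."""
    n = len(seq)
    return -sum((c / n) * math.log2(c / n) for c in Counter(seq).values())


def mdl_mi(xs, ys, alpha, beta):
    """J_n(x^n, y^n) of Equation (9): I_n minus (alpha-1)(beta-1) log2(n) / (2n)."""
    n = len(xs)
    i_n = (empirical_entropy(xs) + empirical_entropy(ys)
           - empirical_entropy(list(zip(xs, ys))))
    return i_n - (alpha - 1) * (beta - 1) * math.log2(n) / (2 * n)


def decide_independent(xs, ys, alpha, beta):
    """MDL decision: X and Y are judged independent iff J_n <= 0."""
    return mdl_mi(xs, ys, alpha, beta) <= 0


if __name__ == "__main__":
    rng = random.Random(0)
    n = 2000
    xs = [rng.randint(0, 2) for _ in range(n)]
    indep = [rng.randint(0, 1) for _ in range(n)]
    dep = [(x + (rng.random() < 0.2)) % 2 for x in xs]  # parity of x, flipped 20% of the time
    print(decide_independent(xs, indep, 3, 2), decide_independent(xs, dep, 3, 2))
```

The penalty shrinks like $\log n / n$, so any fixed positive mutual information eventually exceeds it, while the fluctuations of $I_n$ under independence (of order $1/n$) eventually fall below it.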

2.2. Maximizing the Posterior Probability

Note that this paper seeks to determine whether or not $X \perp\!\!\!\perp Y$ rather than the mutual information value itself.

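We claim that the decision based on Equation (9), i.e., deciding $X \perp\!\!\!\perp Y$ if and only if $J_n(x^n,y^n) \le 0$, asymptotically maximizes the posterior probability of $X \perp\!\!\!\perp Y$ given $x^n$ and $y^n$. Let

$$Q^n(x^n) := \int \prod_{x}\theta(x)^{c_n(x)}\,w(\theta)\,d\theta,$$

where $c_n(x)$ is the number of occurrences of x in $x^n$, and $w(\theta)$ is the prior probability (density) of the probability $\theta = (\theta(x))_x$ of X, namely the Dirichlet distribution with hyper-parameters $a(x) > 0$. Suppose that we similarly construct $Q^n(y^n)$ and $Q^n(x^n,y^n)$ with hyper-parameters $b(y) > 0$ and $d(x,y) > 0$. It is known that if we choose $a(x) = b(y) = d(x,y) = 1/2$, then

$$-\log Q^n(x^n) - nH_n(x^n) - \frac{\alpha-1}{2}\log n,\quad -\log Q^n(y^n) - nH_n(y^n) - \frac{\beta-1}{2}\log n,\quad -\log Q^n(x^n,y^n) - nH_n(x^n,y^n) - \frac{\alpha\beta-1}{2}\log n$$

are bounded by constants [10], where $H_n$ denotes the empirical entropy. Hence, for large n, we have

$$\frac{1}{n}\log\frac{p\,Q^n(x^n)\,Q^n(y^n)}{(1-p)\,Q^n(x^n,y^n)} = -J_n(x^n,y^n) + O\!\left(\frac{1}{n}\right),$$

where the prior probability p of $X \perp\!\!\!\perp Y$ is a constant and is negligible for large n.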
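The asymptotic link between the posterior ratio and $J_n$ can be checked numerically. The sketch below (Python, standard library only; all names are ours) computes the Dirichlet(1/2) mixture probability with `math.lgamma`, assuming the hyper-parameter choice 1/2 attributed to [10] above and an equal prior probability p = 1/2, and shows that n times (normalized log posterior ratio + $J_n$) stays bounded as n grows.

```python
import math
import random
from collections import Counter


def log2_kt(seq, alphabet_size):
    """log2 Q^n(seq) for the Dirichlet(1/2, ..., 1/2) mixture (Krichevsky-Trofimov)."""
    n, a = len(seq), alphabet_size
    ln_q = math.lgamma(a / 2) - math.lgamma(n + a / 2)
    for c in Counter(seq).values():  # symbols that never occur contribute a factor 1
        ln_q += math.lgamma(c + 0.5) - math.lgamma(0.5)
    return ln_q / math.log(2)


def empirical_entropy(seq):
    n = len(seq)
    return -sum((c / n) * math.log2(c / n) for c in Counter(seq).values())


def mdl_mi(xs, ys, alpha, beta):
    """J_n of Equation (9), in bits."""
    n = len(xs)
    i_n = (empirical_entropy(xs) + empirical_entropy(ys)
           - empirical_entropy(list(zip(xs, ys))))
    return i_n - (alpha - 1) * (beta - 1) * math.log2(n) / (2 * n)


def log_posterior_ratio(xs, ys, alpha, beta, p=0.5):
    """(1/n) log2 [p Q(x^n) Q(y^n) / ((1 - p) Q(x^n, y^n))]; positive favours independence."""
    n = len(xs)
    indep = math.log2(p) + log2_kt(xs, alpha) + log2_kt(ys, beta)
    dep = math.log2(1 - p) + log2_kt(list(zip(xs, ys)), alpha * beta)
    return (indep - dep) / n


if __name__ == "__main__":
    rng = random.Random(1)
    for n in (200, 2000, 20000):
        xs = [rng.randint(0, 1) for _ in range(n)]
        ys = [x if rng.random() < 0.7 else 1 - x for x in xs]
        gap = n * (log_posterior_ratio(xs, ys, 2, 2) + mdl_mi(xs, ys, 2, 2))
        print(n, gap)  # stays O(1) while n grows
```

Since the gap is bounded, the sign of the log posterior ratio agrees with the sign of $-J_n$ whenever $|J_n|$ is not of order $1/n$.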

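On the other hand, Nemenman, Shafee, and Bialek [11] proposed a Bayesian estimator of entropy,

$$\hat{H}_\kappa(x^n) := E\left[-\sum_{x}\theta(x)\log\theta(x)\,\middle|\,x^n\right],$$

the posterior mean under a Dirichlet prior with hyper-parameter κ, and its expectation $\hat{H}(x^n)$ w.r.t. a prior over the hyper-parameter κ, where $\theta(x)$ is the probability of the event X = x. If we similarly construct Bayesian estimators $\hat{H}(y^n)$ and $\hat{H}(x^n,y^n)$ of the entropies H(Y) and H(X, Y), respectively, then we also obtain a Bayesian estimator

$$\hat{I}(x^n,y^n) := \hat{H}(x^n) + \hat{H}(y^n) - \hat{H}(x^n,y^n)$$

of mutual information [12]. M. Hutter [13] proposed another estimator,

$$\tilde{I}_\kappa(x^n,y^n) := E\left[\sum_{x,y}\theta(x,y)\log\frac{\theta(x,y)}{\theta(x)\,\theta(y)}\,\middle|\,x^n,y^n\right],$$

and its expectation $\tilde{I}(x^n,y^n)$ w.r.t. a prior over the hyper-parameter κ, where $\theta(x,y)$ is the probability of the event (X, Y) = (x, y), and $\theta(x)$ and $\theta(y)$ are the corresponding marginal probabilities.

The main drawback of the estimators $\hat{I}$ and $\tilde{I}$ is that both of the equivalences

$$\hat{I}(x^n,y^n) = 0 \iff X \perp\!\!\!\perp Y, \qquad \tilde{I}(x^n,y^n) = 0 \iff X \perp\!\!\!\perp Y$$

fail for large n. For example, we have $\tilde{I}(x^n,y^n) > 0$ unless the posterior distribution concentrates on the case $\theta(x,y) = \theta(x)\theta(y)$ for all (x, y), which occurs with probability zero even when $X \perp\!\!\!\perp Y$. Note that these estimators seek the mutual information value itself rather than whether or not $X \perp\!\!\!\perp Y$.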

2.3. HSIC

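The HSIC is formally defined by

$$\mathrm{HSIC}(X,Y) := E[k(X,X')\,l(Y,Y')] + E[k(X,X')]\,E[l(Y,Y')] - 2E\big[E[k(X,X')\mid X]\;E[l(Y,Y')\mid Y]\big] \qquad (12)$$

using the positive definite kernels $k: \mathcal{X}\times\mathcal{X}\to\mathbb{R}$ and $l: \mathcal{Y}\times\mathcal{Y}\to\mathbb{R}$, where $\mathcal{X}$ and $\mathcal{Y}$ are the ranges of X and Y, respectively, and $(X',Y')$ is an independent copy of $(X,Y)$. The most common estimator of $\mathrm{HSIC}(X,Y)$, given $x^n$ and $y^n$, would be

$$\mathrm{HSIC}_n(x^n,y^n) := \frac{1}{n^2}\sum_{i,j}k(x_i,x_j)\,l(y_i,y_j) + \frac{1}{n^4}\sum_{i,j}k(x_i,x_j)\sum_{q,r}l(y_q,y_r) - \frac{2}{n^3}\sum_{i,j,q}k(x_i,x_j)\,l(y_i,y_q). \qquad (13)$$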
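Equation (13) can be sketched in Python as follows, assuming the Gaussian kernel with bandwidth σ = 1 for both k and l (the bandwidth and the names `gaussian_gram` and `hsic_n` are our assumptions). The three sums are evaluated with precomputed row sums of the Gram matrices; the result coincides with the familiar trace form $\frac{1}{n^2}\mathrm{tr}(KHLH)$ with centering matrix $H = I - \frac{1}{n}\mathbf{1}\mathbf{1}^\top$.

```python
import math


def gaussian_gram(v, sigma=1.0):
    """Gram matrix of the Gaussian kernel exp(-(a - b)^2 / (2 sigma^2))."""
    return [[math.exp(-((a - b) ** 2) / (2 * sigma ** 2)) for b in v] for a in v]


def hsic_n(xs, ys, sigma=1.0):
    """Biased HSIC estimate of Equation (13) with Gaussian kernels for k and l."""
    n = len(xs)
    k, l = gaussian_gram(xs, sigma), gaussian_gram(ys, sigma)
    k_rows = [sum(row) for row in k]  # sum_j k(x_i, x_j)
    l_rows = [sum(row) for row in l]  # sum_q l(y_i, y_q)
    term1 = sum(k[i][j] * l[i][j] for i in range(n) for j in range(n)) / n ** 2
    term2 = sum(k_rows) * sum(l_rows) / n ** 4
    # sum over i, j, q of k(x_i, x_j) l(y_i, y_q) factorizes row by row
    term3 = 2 * sum(k_rows[i] * l_rows[i] for i in range(n)) / n ** 3
    return term1 + term2 - term3
```

If one of the sequences is constant, its Gram matrix is all ones and the three terms cancel, so the estimate is zero.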

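We fix a significance level α (for example, α = 0.05). Then, there exists a threshold $\delta^*_n$ such that if the null hypothesis $X \perp\!\!\!\perp Y$ is true, then $\mathrm{HSIC}_n(x^n,y^n)$ is less than $\delta^*_n$ with probability $1-\alpha$. The decision is based on

$$X \perp\!\!\!\perp Y \iff \mathrm{HSIC}_n(x^n,y^n) \le \delta^*_n.$$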

Applying HSIC to independence testing is widely accepted in the machine learning community; in addition to the equivalence in Equation (5) with independence, (weak) consistency has been shown in the sense that the difference between Equations (12) and (13) is at most $O(n^{-1/2})$ in probability [8]. Furthermore, HSIC exhibits satisfactory performance in actual situations and is currently considered to be the de facto method for independence testing.

However, we still encounter serious problems when applying HSIC. The most significant problem is that HSIC requires $O(n^3)$ computational time, so n must be small if the test must be completed within a predetermined time. Moreover, computing an accurate value of $\delta^*_n$ requires many hours for simulating the null hypothesis: given $x^n$ and $y^n$, we randomly reorder $y^n$ to obtain independent pairs of examples and compute $\mathrm{HSIC}_n$ many times to obtain the $100(1-\alpha)$ percentile point $\delta^*_n$. If α = 0.05, then obtaining 10 samples above that percentile point, so that the value of $\delta^*_n$ is reliable, requires executing the computation more than 200 times.
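The reordering procedure for $\delta^*_n$ can be sketched as follows (Python, standard library only). The Gaussian kernel bandwidth σ = 1, the seed, and the names `permutation_threshold` and `hsic_test_independent` are our assumptions. Each trial shuffles $y^n$, which destroys any dependence on $x^n$ while keeping both marginals, and the empirical $100(1-\alpha)$ percentile of the resulting values serves as the threshold.

```python
import math
import random


def hsic_n(xs, ys, sigma=1.0):
    """Biased HSIC estimate of Equation (13) with Gaussian kernels (sigma assumed)."""
    n = len(xs)
    k = [[math.exp(-(a - b) ** 2 / (2 * sigma ** 2)) for b in xs] for a in xs]
    l = [[math.exp(-(a - b) ** 2 / (2 * sigma ** 2)) for b in ys] for a in ys]
    kr, lr = [sum(r) for r in k], [sum(r) for r in l]
    t1 = sum(k[i][j] * l[i][j] for i in range(n) for j in range(n)) / n ** 2
    t2 = sum(kr) * sum(lr) / n ** 4
    t3 = 2 * sum(kr[i] * lr[i] for i in range(n)) / n ** 3
    return t1 + t2 - t3


def permutation_threshold(xs, ys, alpha=0.05, trials=200, seed=0):
    """Estimate delta*_n as the empirical 100(1 - alpha) percentile of HSIC_n
    over random reorderings of ys, which simulate the null hypothesis."""
    rng = random.Random(seed)
    shuffled = list(ys)
    null_values = []
    for _ in range(trials):
        rng.shuffle(shuffled)
        null_values.append(hsic_n(xs, shuffled))
    null_values.sort()
    # roughly alpha * trials simulated values lie above the returned point
    index = min(trials - 1, math.ceil((1 - alpha) * trials) - 1)
    return null_values[index]


def hsic_test_independent(xs, ys, **kwargs):
    """Decide X and Y independent iff HSIC_n(x^n, y^n) <= delta*_n."""
    return hsic_n(xs, ys) <= permutation_threshold(xs, ys, **kwargs)
```

Each trial costs a full kernel evaluation, so with the default 200 trials the threshold is far more expensive than the test statistic itself.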

3. Estimation of Mutual Information for both Discrete and Continuous Variables

This paper proposes a new estimator of mutual information that is able to address both discrete and continuous variables and that becomes zero if and only if X and Y are independent for large n.

3.1. Proposed Algorithm

The proposed estimation consists of three steps:

- prepare nested histograms [14],

- compute the estimation $J_n(u)$ of mutual information for each histogram u, and

- choose the maximum among the estimations $J_n(u)$ over the histograms u.

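Suppose that we are given examples $x^n$ and $y^n$ and that they have been sorted as

$$x_{(1)} \le x_{(2)} \le \dots \le x_{(n)}, \qquad y_{(1)} \le y_{(2)} \le \dots \le y_{(n)}. \qquad (14)$$

First, we assume that no two consecutive values are equal in either of the two sequences in Equation (14), which holds with probability one when a density function exists. Let s be an integer, and for each $u = 0, 1, \dots, s$, we prepare histograms with $2^u$ bins for X and Y and $2^u \times 2^u$ bins for (X, Y). The sequences in Equation (14) are divided into $2^u$ clusters of consecutive order statistics of (nearly) equal size, such as

$$\{x_{(1)},\dots,x_{(n/2^u)}\},\ \{x_{(n/2^u+1)},\dots,x_{(2n/2^u)}\},\ \dots,\ \{x_{(n-n/2^u+1)},\dots,x_{(n)}\}$$

and

$$\{y_{(1)},\dots,y_{(n/2^u)}\},\ \{y_{(n/2^u+1)},\dots,y_{(2n/2^u)}\},\ \dots,\ \{y_{(n-n/2^u+1)},\dots,y_{(n)}\}.$$

Thus, we obtain quantized sequences $x^n_u$ and $y^n_u$ taking $2^u$ values by using the clusters. For example, suppose that we generate positively correlated standard Gaussian random sequences $x^n$ and $y^n$ of length n = 1000. The frequency distribution tables of $x^n_3$ and $y^n_3$ for u = 3 are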

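| Bin | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Frequency | 125 | 125 | 125 | 125 | 125 | 125 | 125 | 125 |

and that of $(x^n_3, y^n_3)$ for u = 3 is as follows:

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1 | 75 | 32 | 12 | 5 | 1 | 0 | 0 | 0 |
| 2 | 25 | 41 | 25 | 18 | 9 | 7 | 0 | 0 |
| 3 | 15 | 23 | 32 | 27 | 14 | 11 | 1 | 2 |
| 4 | 5 | 17 | 24 | 22 | 27 | 19 | 11 | 0 |
| 5 | 5 | 9 | 19 | 24 | 23 | 23 | 17 | 5 |
| 6 | 0 | 3 | 7 | 18 | 26 | 26 | 28 | 17 |
| 7 | 0 | 0 | 6 | 9 | 19 | 21 | 45 | 25 |
| 8 | 0 | 0 | 0 | 2 | 6 | 18 | 23 | 76 |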

Thus, the marginal distributions of the quantized sequences are (nearly) uniform. Because a sufficient number of samples is allocated to each cluster, at least in each one-dimensional histogram when n is large, the estimations are more robust than those of other histogram-based methods [9].
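The equal-frequency quantization can be sketched as below (Python, standard library only; `quantize_by_rank` and `joint_table` are our names). It assumes no ties, as in the continuous case above. The r-th smallest value (0-based) is assigned to cluster $\lfloor r\,2^u/n \rfloor$, which yields 125 values per bin for n = 1000 and u = 3, and the labels at level u + 1 refine those at level u, so the histograms are nested. The correlation 0.8 in the usage example is illustrative only; the correlation behind the table in the text is not reproduced here.

```python
import random
from collections import Counter


def quantize_by_rank(values, u):
    """Label each value with one of 2**u clusters of consecutive order statistics.

    The r-th smallest value (r = 0, ..., n-1) goes to cluster floor(r * 2**u / n),
    so every cluster receives about n / 2**u values. Ties are not treated specially.
    """
    n = len(values)
    order = sorted(range(n), key=values.__getitem__)
    labels = [0] * n
    for rank, index in enumerate(order):
        labels[index] = rank * 2 ** u // n
    return labels


def joint_table(xs, ys, u):
    """Frequency table of the quantized pairs, as in the 8 x 8 example (u = 3)."""
    qx, qy = quantize_by_rank(xs, u), quantize_by_rank(ys, u)
    return Counter(zip(qx, qy))


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.gauss(0, 1) for _ in range(1000)]
    ys = [0.8 * x + 0.6 * rng.gauss(0, 1) for x in xs]
    print(sorted(Counter(quantize_by_rank(xs, 3)).items()))  # 125 values per bin
    table = joint_table(xs, ys, 3)
    for i in range(8):
        print([table[(i, j)] for j in range(8)])
```

Sorting dominates the cost, so computing all levels u = 0, ..., s from one sort is consistent with the $O(n\log n)$ running time claimed for the method.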

Because the obtained sequences $x^n_u$ and $y^n_u$ are discrete, we can compute

$$J_n(u) := I_n(u) - \frac{k(u)}{2n}\log n, \qquad (15)$$

where $I_n(u)$ is the empirical mutual information w.r.t. histogram u and $k(u) := (2^u-1)^2$ is the number of independent parameters. The derivation of Equation (15) is similar to that of Equation (9).

Let $X_u, Y_u$ and $X_v, Y_v$ be the random variables quantized by histograms u and v, respectively, such that u < v. Suppose that examples $x^n_v$ and $y^n_v$ have been emitted from $(X_v, Y_v)$; based on the MDL principle, we wish to know whether $X_v$ and $Y_v$ are conditionally independent given $(X_u, Y_u)$. Then, we can answer the question affirmatively if we compare the description length values and find that $J_n(u) \ge J_n(v)$. This means that, according to the MDL principle, we can decide that $X_v$ and $Y_v$ are conditionally independent given $(X_u, Y_u)$ if and only if $J_n(u) \ge J_n(v)$. Hence, if u provides the maximum value of $J_n(u)$, then we choose the histogram u. Thus, we propose the estimation given by

$$J_n := \max_{u} J_n(u),$$

and we prove in Section 3.2 (Theorem 1) that the optimal value of u is $O(\log n)$.
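Putting the pieces together, a minimal sketch of the proposed estimator is given below (Python, standard library only; all names are ours). It assumes no ties and $k(u) = (2^u-1)^2$ as above, and it searches $u = 0, 1, \dots, \lceil \frac{1}{2}\log n \rceil$, our reading of the range allowed by the bound in Section 3.2. Since $J_n(0) = 0$, the returned value is nonnegative. The usage example applies it to a dependent but uncorrelated pair, $Y = XU$ with X standard Gaussian and U = ±1 with probability 0.5 each, which is our choice of test case.

```python
import math
import random
from collections import Counter


def quantize_by_rank(values, u):
    """Cluster index floor(rank * 2**u / n) for each value (no ties assumed)."""
    n = len(values)
    labels = [0] * n
    for rank, index in enumerate(sorted(range(n), key=values.__getitem__)):
        labels[index] = rank * 2 ** u // n
    return labels


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def j_n_u(xs, ys, u):
    """Equation (15): I_n(u) - k(u) log2(n) / (2n) with k(u) = (2**u - 1)**2."""
    n = len(xs)
    qx, qy = quantize_by_rank(xs, u), quantize_by_rank(ys, u)
    i_n = entropy(qx) + entropy(qy) - entropy(list(zip(qx, qy)))
    return i_n - (2 ** u - 1) ** 2 * math.log2(n) / (2 * n)


def mdl_histogram_mi(xs, ys):
    """J_n = max_u J_n(u); u = 0 (a single bin) contributes J_n(0) = 0."""
    u_max = math.ceil(math.log2(len(xs)) / 2)
    return max(j_n_u(xs, ys, u) for u in range(u_max + 1))


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1000
    xs = [rng.gauss(0, 1) for _ in range(n)]
    indep = [rng.gauss(0, 1) for _ in range(n)]
    zero_cov = [x * rng.choice((-1, 1)) for x in xs]  # uncorrelated with xs, yet dependent
    print(mdl_histogram_mi(xs, indep), mdl_histogram_mi(xs, zero_cov))
```

For the uncorrelated pair, a histogram with at least four bins per axis already separates $|X|$ from its sign, so some $J_n(u)$ is clearly positive, whereas the coarse split at u = 1 sees nothing.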

Another interpretation is that if the sample size in each bin is smaller, then the estimation is less robust. However, if the number of bins is smaller, then the approximation of the histogram is less appropriate. These two factors are balanced by the MDL principle.

For example, suppose that we obtain the following four values of $J_n(u)$:

| u | $J_n(u)$ |
|---|---|
| 1 | 0.2664842 |
| 2 | 0.5077115 |
| 3 | 0.5731657 |
| 4 | 0.4601272 |

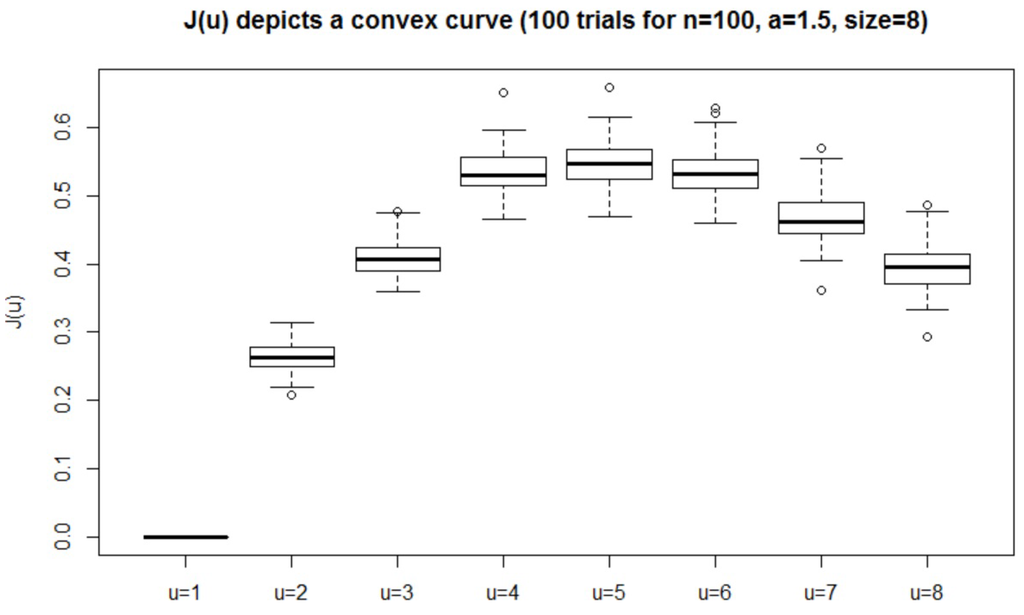

then the final estimation will be 0.5731657 (). Note that there are other methods for finding the maximum mutual information. For example, and clusters for each of work if (the smaller a is, the larger s is). For , we experimentally find (Figure 2) that the value of depicts a concave curve, i.e., the maximum value is obtained at the point at which the sample size of each bin (robustness of the estimation) and the number of bins (approximation of the histogram) are balanced.

Figure 2.

Values of $J_n(u)$: the maximum value is obtained at the point where the sample size of each bin and the number of bins are balanced.

Next, we consider the case in which two consecutive values in one of the two sequences in Equation (14) are equal. In general, we divide each cluster in half at each stage $u = 1, \dots, s$. If two consecutive values are equal and would need to be separated, then we choose another border: suppose that k values are equal from the (j+1)-th location,

$$x_{(j)} < x_{(j+1)} = \dots = x_{(j+k)} < x_{(j+k+1)},$$

and that we need to divide between the i-th and (i+1)-th positions ($j < i < j+k$); instead, we divide either between the j-th and (j+1)-th positions or between the (j+k)-th and (j+k+1)-th positions, depending on which of these borders is closer to the i-th position.

In this way, even when the sequence is discrete, we can obtain the quantization $x^n_u$. In particular, if X takes finitely many values, then each cluster contains only one distinct value of X when u is sufficiently large. The proposed scheme does not distinguish whether each of the given sequences is discrete or continuous.
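The tie-handling rule can be sketched as follows (Python; `run_bounds`, `tie_aware_cut`, and `split_levels` are our names, and clusters are half-open index ranges [lo, hi) into the sorted sequence). A halving cut that would fall inside a run of equal values is moved to the nearer end of the run. Three details are our assumptions: ends that coincide with the cluster boundary are skipped, equally near ends go to the start of the run, and a cluster consisting of a single run is kept whole.

```python
def run_bounds(sorted_values, t):
    """Positions [start, end) of the run of values equal to sorted_values[t]."""
    value = sorted_values[t]
    start, end = t, t + 1
    while start > 0 and sorted_values[start - 1] == value:
        start -= 1
    while end < len(sorted_values) and sorted_values[end] == value:
        end += 1
    return start, end


def tie_aware_cut(sorted_values, lo, hi):
    """Cut position halving cluster [lo, hi) without separating equal values, or None."""
    if hi - lo < 2:
        return None
    t = (lo + hi) // 2
    if sorted_values[t - 1] != sorted_values[t]:
        return t  # the plain halving cut does not split a run
    start, end = run_bounds(sorted_values, t)
    candidates = [c for c in (start, end) if lo < c < hi]
    if not candidates:
        return None  # the whole cluster is one run of equal values
    return min(candidates, key=lambda c: (abs(c - t), c))


def split_levels(sorted_values, depth):
    """Clusters [lo, hi) for stages u = 0, ..., depth, halving each cluster per stage."""
    levels = [[(0, len(sorted_values))]]
    for _ in range(depth):
        next_level = []
        for lo, hi in levels[-1]:
            cut = tie_aware_cut(sorted_values, lo, hi)
            next_level += [(lo, hi)] if cut is None else [(lo, cut), (cut, hi)]
        levels.append(next_level)
    return levels


if __name__ == "__main__":
    # a discrete sequence: at u = 2 every cluster holds a single distinct value
    print(split_levels([1, 1, 1, 2, 2, 3, 3, 3], 2))
```

For continuous data without ties, `split_levels` reduces to plain halving, so the same routine covers both cases.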

3.2. Properties

In this subsection, we prove three fundamental claims:

- The optimal u that maximizes $J_n(u)$ is $O(\log n)$.

- For large n, the mutual information estimation of each histogram converges to the correct value for that histogram.

- For large n, the estimation $J_n$ is zero if and only if X and Y are independent.

First, we have the following lemma from the law of large numbers:

Lemma 1.

The breaking points of the histograms converge to the correct values (the $100j/2^u$ percentile points, $j = 1, \dots, 2^u-1$) with probability one as the sample size n (and hence the maximum depth s) increases, where the value of a is assumed to be two for simplicity.

Let $I(u)$ be the true mutual information w.r.t. the correct breaking points of histogram u.

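Theorem 1.

For $n \ge 5$, the optimal u is no larger than $\lceil \frac{1}{2}\log n \rceil$.

Proof. We observe that for all $u \ge 0$,

$$I_n(u+1) - I_n(u) \le 2. \qquad (16)$$

In fact, from u to u + 1, the empirical entropies of X and Y increase by at most one each, and the empirical entropy of (X, Y) does not decrease. If we have the inequality

$$I_n(u+1) - I_n(u) < \frac{k(u+1)-k(u)}{2n}\log n \qquad (17)$$

for some u, then $J_n(u+1) < J_n(u)$, and we cannot expect u + 1 to be the optimal value. When $u \ge \frac{1}{2}\log n$, under Equation (16), Equation (17) holds whenever

$$2 < \frac{(2^{u+1}-1)^2 - (2^u-1)^2}{2n}\log n,$$

which is equivalent to

$$4n < 2^u\left(3\cdot 2^u - 2\right)\log n,$$

and this is true for $n \ge 5$, because $2^u \ge \sqrt{n}$ implies $2^u(3\cdot 2^u - 2)\log n \ge (3n - 2\sqrt{n})\log n > 4n$. Hence, $J_n(u)$ strictly decreases in u for all $u \ge \frac{1}{2}\log n$, and the maximum is attained at some $u \le \lceil \frac{1}{2}\log n \rceil$. This completes the proof. ☐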
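The inequality at the heart of this argument can be checked numerically. The sketch below (Python; all names are ours) assumes $k(u) = (2^u-1)^2$ and base-2 logarithms, and verifies that for $n \ge 5$ the penalty increase from u to u + 1 exceeds 2 bits, the largest possible increase of $I_n(u)$, for every $u \ge \frac{1}{2}\log n$. At n = 4 the two quantities tie exactly, which is why the check starts at n = 5.

```python
import math


def k(u):
    """Number of independent parameters of a 2**u x 2**u table: (2**u - 1)**2."""
    return (2 ** u - 1) ** 2


def penalty_step(u, n):
    """Increase of the penalty k(u) log2(n) / (2n) when moving from u to u + 1."""
    return (k(u + 1) - k(u)) * math.log2(n) / (2 * n)


def first_forced_decrease(n):
    """Smallest integer u with u >= (1/2) log2 n."""
    return math.ceil(math.log2(n) / 2)


if __name__ == "__main__":
    # I_n(u + 1) - I_n(u) <= 2 bits, so once the penalty step exceeds 2,
    # J_n(u + 1) < J_n(u) holds for every sample.
    for n in (10, 100, 1000, 10 ** 6):
        u0 = first_forced_decrease(n)
        print(n, u0, [round(penalty_step(u, n), 2) for u in range(u0, u0 + 3)])
```

Because the search over u can stop at $\lceil \frac{1}{2}\log n \rceil$, only $O(\log n)$ histograms are ever evaluated.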

Theorem 2.

For large n, the estimation $I_n(u)$ of the mutual information of each histogram u converges to the correct value $I(u)$.

Proof. Each boundary converges to its true value for each histogram (Lemma 1), and the number of samples in each bin increases as n becomes larger; therefore, the estimation $I_n(u)$ for histogram u converges to the correct mutual information value $I(u)$.

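Theorem 3.

With probability one as $n \to \infty$, $J_n = 0$ if and only if X and Y are independent.

Proof. Suppose that X and Y are not independent. Because $I(X,Y) > 0$ and $I(u)$ approaches $I(X,Y)$ as u increases, we have $I(u) > 0$ for some value of u. For this u, $I_n(u)$ converges to the positive value $I(u)$ with probability one as $n \to \infty$ (Theorem 2), while the penalty $\frac{k(u)}{2n}\log n$ vanishes; hence, $J_n \ge J_n(u) > 0$ for large n. For the proof of the other direction, see the Appendix.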

3.3. Preliminary Experiments

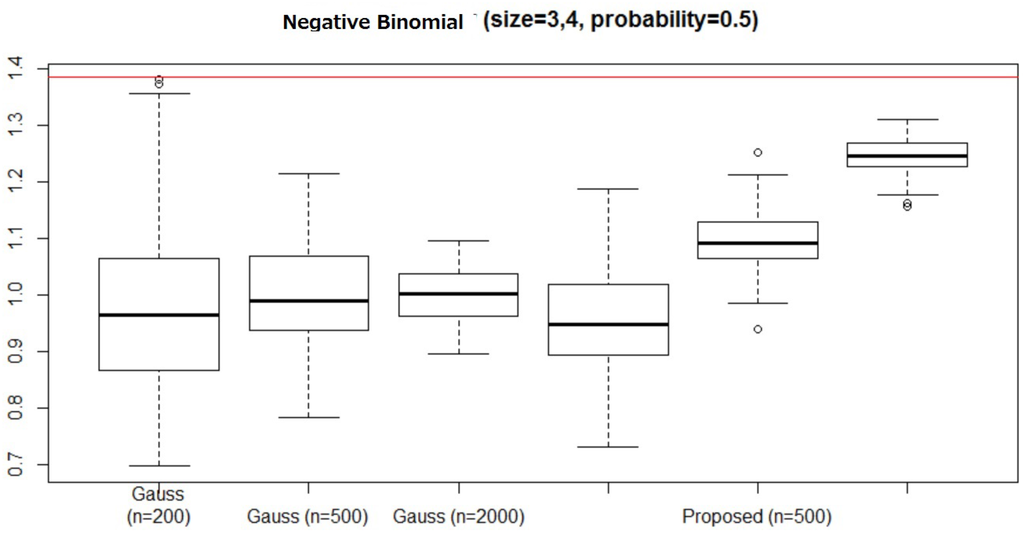

If the random variables are known to be Gaussian a priori, it may seem easier to use a Gaussian method, which estimates the correlation coefficient and computes the estimation based on Equation (4), than to use the proposed method, which does not require the variables to be Gaussian. We compared the proposed algorithm with the Gaussian method in the following three experiments:

- X and Y follow the negative binomial distribution with parameters and such that X and Y are the numbers of occurrences before an event with probability P occurs and () times, respectively. In particular, we set , , , and .

- with , with probability 0.5, , and .

- with probability 0.5, with , , and .

For the first experiment, because X and Y are discrete, we expect the proposed method to successfully compute the mutual information values even though neither X nor Y has a bounded range. The Gaussian method only considers the correlation between the two variables, whereas the proposed method counts the occurrences of the pairs. Consequently, particularly for large n, the proposed method outperformed the Gaussian method and tended to converge to the true mutual information value as n increased (Figure 3).

Figure 3.

Experiment 1: for large n, the proposed method outperforms the Gaussian method.

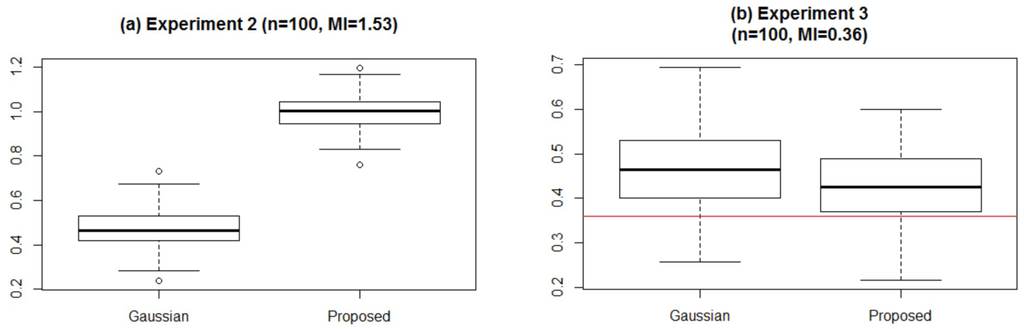

For the second experiment, although X and Y are continuous, the difference is discrete, as is the probabilistic relation. The proposed method can count the differences (integers) and the quantized values. Consequently, the proposed method estimated the mutual information values more correctly than the Gaussian method (Figure 4a).

Figure 4.

Experiments 2 and 3: the proposed method outperformed the Gaussian method for both experiments. The difference is less in Experiment 3 than in Experiment 2.

For the ANOVA case (Experiment 3), the mutual information values obtained using the proposed method are also closer to the true value than those obtained using the Gaussian method (Figure 4b). We expected the Gaussian method to outperform the proposed method, but in this case, X is discrete and the mutual information is at most the entropy of X; thus, the proposed method shows slightly better performance than the Gaussian method. However, the difference between the two methods is not as large as that in Experiment 2, because the noise is Gaussian and the Gaussian method is designed to address Gaussian noise.

4. Application to Independence Tests

We conducted experiments using the R language and obtained evidence that supports the “No Free Lunch” theorem [15] for independence tests: no single independence test is capable of outperforming all the other tests. The proposed and HSIC methods require $O(n\log n)$ and $O(n^3)$ time, respectively, to perform the computation; thus, the former is considerably faster than the latter, particularly for large values of n.

For the HSIC method, we used the Gaussian kernel [8]

$$k(x,x') = \exp\left(-\frac{(x-x')^2}{2\sigma^2}\right)$$

with the same bandwidth σ for both k and l. We set the significance level α to 0.05. To compute the threshold $\delta^*_n$ such that we decide $X \perp\!\!\!\perp Y$ if and only if $\mathrm{HSIC}_n(x^n,y^n) \le \delta^*_n$, because only $x^n$ and $y^n$ are available, we would need to repeatedly and randomly reorder $y^n$ to generate mutually independent pairs and thereby simulate the null hypothesis. However, this process is time-consuming for our experiments; instead, we generate mutually independent sequences $x^n$ and $y^n$ and compute $\mathrm{HSIC}_n$ 200 times to estimate the distribution of $\mathrm{HSIC}_n$ under the null hypothesis and its 95th percentile point $\delta^*_n$.

For the proposed method, we set the prior probability of $X \perp\!\!\!\perp Y$ to 0.5.

4.1. Binary and Gaussian Sequences

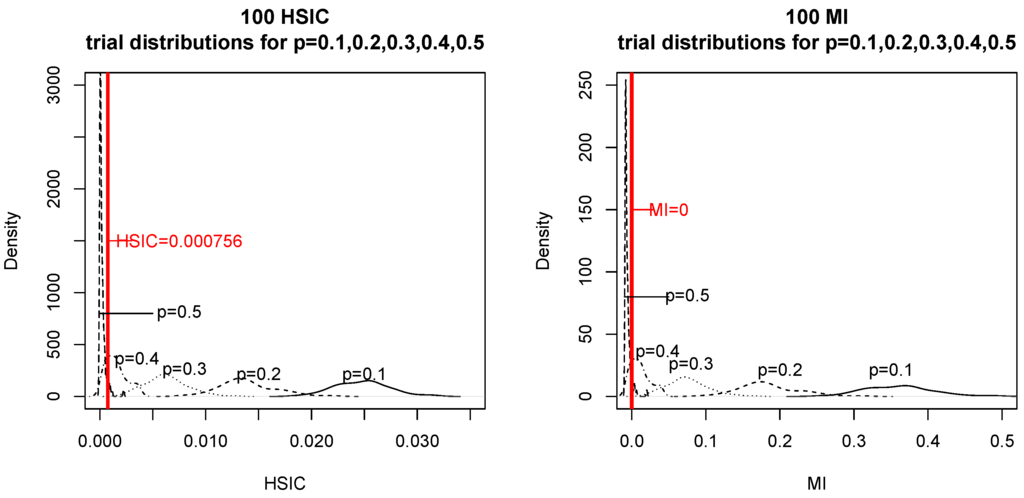

First, we generated mutually independent binary X and U, with the probabilities of X = 1 and U = 1 being 0.5 and p, respectively, to obtain $Y = X + U \bmod 2$. When we simulated the null hypothesis, we generated the second sequence in the same way as that used for generating $y^n$. We computed $\mathrm{HSIC}_n$ and $J_n$ 100 times for each combination of n and p.
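The data-generating process of this experiment can be mimicked with the discrete estimator of Equation (9) (α = β = 2), reading $J_n \le 0$ as independence. In the sketch below (Python; names, n = 1000, and the values p = 0.5 and p = 0.8 are our illustrative choices, not the settings behind Figure 5), the test is run 100 times for each p and the number of "independent" decisions is counted. Because X is uniform, Y is independent of X exactly when p = 0.5.

```python
import math
import random
from collections import Counter


def entropy(seq):
    n = len(seq)
    return -sum((c / n) * math.log2(c / n) for c in Counter(seq).values())


def mdl_mi(xs, ys):
    """J_n of Equation (9) for binary sequences (alpha = beta = 2)."""
    n = len(xs)
    i_n = entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))
    return i_n - math.log2(n) / (2 * n)


def binary_pair(n, p, rng):
    """X ~ Bernoulli(0.5) and U ~ Bernoulli(p) independent, Y = X + U mod 2."""
    xs = [rng.randint(0, 1) for _ in range(n)]
    ys = [(x + (1 if rng.random() < p else 0)) % 2 for x in xs]
    return xs, ys


def count_independent(n, p, trials=100, seed=0):
    """How many of the trials the MDL test declares X and Y independent."""
    rng = random.Random(seed)
    return sum(mdl_mi(*binary_pair(n, p, rng)) <= 0 for _ in range(trials))


if __name__ == "__main__":
    for p in (0.5, 0.8):
        print(p, count_independent(1000, p))
```

No null-hypothesis simulation is needed here: the MDL penalty plays the role of the threshold that the HSIC test has to estimate.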

The obtained results are presented in Figure 5. For each p and n, we depict the distributions of $\mathrm{HSIC}_n$ and $J_n$ in the plots on the left and right, respectively. If the data occur to the left of the red vertical line, then the tests consider $x^n$ and $y^n$ to be independent. In particular, for $p = 0.5$ ($X \perp\!\!\!\perp Y$) and $p \neq 0.5$ ($X \not\!\perp\!\!\!\perp Y$), we counted how many times the two tests chose $X \perp\!\!\!\perp Y$ and $X \not\!\perp\!\!\!\perp Y$ (see Table 1).

Figure 5.

Experiments for binary sequences.

Table 1.

Experiments for binary sequences: the figures show how many times (out of 100) the HSIC and the proposed method regarded the two sequences as being independent ($X \perp\!\!\!\perp Y$) and dependent ($X \not\!\perp\!\!\!\perp Y$) for each setting of n and p.

We could not find any significant difference in the correctness of testing between the two tests.

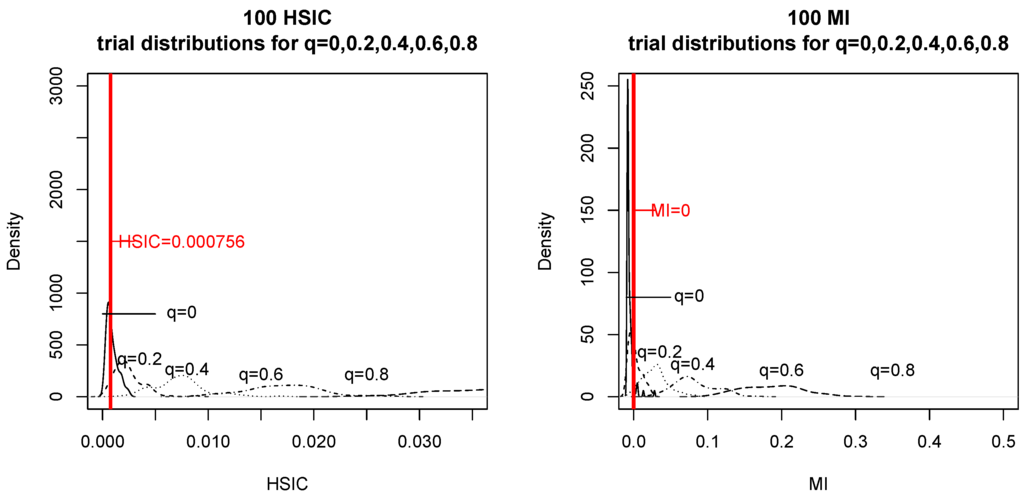

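Next, we generated mutually independent Gaussian X and U with mean zero and variance one, and generated Y from X and U with a parameter q that controls the strength of the dependence. When we simulated the null hypothesis, we generated the second sequence in the same way as that used for generating $y^n$. We computed $\mathrm{HSIC}_n$ and $J_n$ 100 times for each combination of n and q.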

The obtained results are presented in Figure 6. For each q and n, we depict the distributions of $\mathrm{HSIC}_n$ and $J_n$ on the left and right, respectively. If the data occur to the left of the red vertical line, then the tests consider $x^n$ and $y^n$ to be independent. In particular, for the settings of q in which $X \perp\!\!\!\perp Y$ and $X \not\!\perp\!\!\!\perp Y$, we counted how many times the two tests chose $X \perp\!\!\!\perp Y$ and $X \not\!\perp\!\!\!\perp Y$ (see Table 2).

Figure 6.

Experiments for Gaussian sequences.

Table 2.

Experiments for Gaussian sequences: the figures show how many times (out of 100) the HSIC and the proposed method regarded the two sequences as being independent ($X \perp\!\!\!\perp Y$) and dependent ($X \not\!\perp\!\!\!\perp Y$) for each setting of n and q.

We could not find any significant difference in the correctness of testing between the two tests.

4.2. When is the Proposed Method Superior?

We found two cases in which the proposed method outperforms the HSIC:

- X and U are mutually independent and follow the Gaussian distribution with mean 0 and variance 0.25, and , where is the rounded integer of x ( = 1) (ROUNDING).

- X takes a value in uniformly and Y takes a value in either or uniformly depending on the value of X such that is an even number (INTEGER).

We refer to the two problems as ROUNDING and INTEGER, respectively. Clearly, in both problems X and Y are not independent, although the correlation coefficient is zero.

Table 3 shows the number of times the tests chose $X \perp\!\!\!\perp Y$ and $X \not\!\perp\!\!\!\perp Y$ in these experiments. We observed that the HSIC failed to detect the dependencies in both problems, whereas the proposed method successfully found X and Y to be dependent.

Table 3.

Hilbert-Schmidt independence criterion (HSIC) fails to detect dependencies.

The main reason appears to be that the HSIC simply considers the magnitudes of X and Y. In the ROUNDING problem, X and Y are independent in their integer parts, but their fractional parts are related. However, because the integer part contributes to the HSIC score considerably more than the fractional part, the HSIC cannot detect the relation between the fractional parts.

The same reasoning can be applied to the INTEGER problem. In fact, the values of and are independent, where denotes the largest integer not exceeding x. However, the relation mod 2 always holds, and this cannot be detected by the HSIC.

Note that we do not claim that the proposed method is always superior to the HSIC. Admittedly, the HSIC performs better for many problems, including the following typical ones (Table 4):

- 3

- X and U follow the standard Gaussian and binary (probability 0.5) distributions, respectively, and Y is constructed from them as in the zero-correlation example of Section 1 (ZERO-COV).

Table 4.

HSIC outperforms the proposed method.

Rather, we claim that no single independence test outperforms all the others.

4.3. Execution Time

We compare the execution times for the Gaussian sequences. Table 5 lists the average execution times for several sample sizes n, up to n = 2000, and a single value of q (the results were almost identical for the other values of q).

Table 5.

Execution time (seconds).

We find that the proposed method is considerably faster than the HSIC, particularly for large n. This result occurs because the proposed method requires $O(n\log n)$ time for the computation, whereas the HSIC requires $O(n^3)$ time. Although the HSIC is expected to reach correct decisions for large n because of its (weak) consistency, it appears that the HSIC is not efficient for large n. Moreover, because the HSIC requires the null hypothesis to be simulated, a considerable amount of additional computation would be required.

5. Concluding Remarks

We proposed an estimator of mutual information and demonstrated the effectiveness of the algorithm in solving the independence testing problem.

Although estimating the mutual information of continuous variables has been considered difficult, the proposed estimator was shown to be zero for a large sample size if and only if the two variables are independent. The estimator constructs histograms with $2^u \times 2^u$ bins, estimates their mutual information $J_n(u)$, and chooses the maximum value over u. We find that the optimal u is $O(\log n)$. The proposed algorithm requires $O(n\log n)$ time to perform the computation.

Then, we compared the performance of our proposed estimator with that of the HSIC estimator, the de facto standard for independence testing. The two methods differ in that the proposed method applies the MDL principle to the given data, whereas the HSIC detects deviations from the null hypothesis given the data. We could not obtain a definite answer as to which method is superior in general settings; rather, we obtained evidence that no single statistical test outperforms all the others for all problems. In fact, although HSIC will clearly be superior when certain specific dependency structures form the alternative hypothesis, the proposed estimator is more universal.

One meaningful insight obtained is that the HSIC only considers the magnitude of the data and neglects to find relations that cannot be detected by simply considering the changes in magnitude.

The most notable merit of the proposed algorithm compared to the HSIC is its efficiency. The HSIC requires $O(n^3)$ computational time for one test. Moreover, prior to the test, it is necessary to simulate the null hypothesis and set the threshold such that the algorithm determines that the data are independent if and only if the HSIC value does not exceed the threshold. In this sense, executing the HSIC would be time-consuming, and it would be safe to say that the proposed algorithm is useful for designing intelligent machines, whereas the HSIC is appropriate for scientific discovery.

In future work, we will consider exactly when the proposed method exhibits a particularly good performance.

Moreover, we should address the question of how generalizations to three variables might work. It is not yet clear whether one would want to estimate some form of total independence, such as $H(X)+H(Y)+H(Z)-H(X,Y,Z)$, or conditional mutual information, such as $I(X,Y\mid Z)$. In fact, for Bayesian network structure learning (BNSL), we need to compute Bayesian scores of conditional mutual information from the data to apply a standard scheme of BNSL based on dynamic programming [16]. Currently, a constraint-based approach for estimating conditional mutual information values using positive definite kernels is available [17], but no theoretical guarantee, such as consistency, is provided for this method.

Conflicts of Interest

The author declares no conflict of interest.

Appendix

Proof of Theorem 3 (Necessity).

We assume that X and Y are independent and show that $J_n = 0$ with probability one as $n \to \infty$.

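To this end, we use the following fact: when X and Y are independent, $2n I_n(u)\ln 2$ asymptotically follows the $\chi^2$ distribution with $k(u) = (2^u-1)^2$ degrees of freedom for large n, for each u [18,19]. If we write the Gamma density and functions as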

and set , the fact implies that

First, to show with probability one as , we set for each , and to obtain an upper bound on the probability

of . Note that Theorem 2 does not necessarily mean with probability one as .

Let (integer). Because

and

only but for a finite number of k, we find that

for all but finitely many k. Moreover, by the mean value theorem, there exists such that

Furthermore, if we let as a function of m and n, from () and the Stirling formula , we have

which means that

for all but finitely many n (n such that ), where we have written the quantity such that as as .
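
The final step uses the first Borel-Cantelli lemma. For reference, its standard statement is given below; the events $A_n$ are generic symbols, not the events defined in this proof, which did not survive extraction. It is what turns a summable bound on the probabilities of the "bad" events into a statement that holds with probability one for all but finitely many n.

```latex
\text{If } A_1, A_2, \ldots \text{ are events with } \sum_{n=1}^{\infty} P(A_n) < \infty, \text{ then }
P\Big(\limsup_{n\to\infty} A_n\Big)
  = P\Big(\bigcap_{m=1}^{\infty} \bigcup_{n \ge m} A_n\Big) = 0,
\text{ i.e., with probability one only finitely many of the } A_n \text{ occur.}
```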

Hence, by the Borel-Cantelli lemma, with probability one as , which, combined with with probability one, means that with probability one as . This completes the proof. ☐
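
The exact form of the Stirling formula used in the proof did not survive extraction. The standard version for the Gamma function, $\log\Gamma(m) = (m-\tfrac12)\log m - m + \tfrac12\log(2\pi) + O(1/m)$, can be checked numerically with the minimal sketch below, which compares it with Python's `math.lgamma`; the helper names are hypothetical. The error is positive and behaves like $1/(12m)$, so the printed value of $12m$ times the error should approach 1 as m grows.

```python
import math


def stirling_log_gamma(m):
    """Stirling's formula for log Gamma(m): (m - 1/2) log m - m + (1/2) log(2 pi)."""
    return (m - 0.5) * math.log(m) - m + 0.5 * math.log(2 * math.pi)


def stirling_error(m):
    """Exact log Gamma(m) minus the Stirling approximation; about 1/(12 m) for large m."""
    return math.lgamma(m) - stirling_log_gamma(m)


for m in (2, 10, 100, 1000):
    err = stirling_error(m)
    print(f"m={m:5d}  error={err:.3e}  12*m*error={12 * m * err:.6f}")
```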

References

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Rissanen, J. Modeling by shortest data description. Automatica 1978, 14, 465–471.

- Suzuki, J. A Construction of Bayesian Networks from Databases on an MDL Principle. In Proceedings of the Conference on Uncertainty in Artificial Intelligence, Washington, DC, USA, 9–11 July 1993; pp. 266–273.

- Härdle, W.; Müller, M.; Sperlich, S.; Werwatz, A. Nonparametric and Semiparametric Models; Springer-Verlag: Berlin/Heidelberg, Germany, 2004.

- Evans, D. A computationally efficient estimator for mutual information. Proc. R. Soc. A 2008, 464, 1203–1215.

- Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138.

- Silva, J.; Narayanan, S.S. Nonproduct Data-Dependent Partitions for Mutual Information Estimation: Strong Consistency and Applications. IEEE Trans. Signal Process. 2010, 58, 3497–3511.

- Gretton, A.; Bousquet, O.; Smola, A.J.; Schölkopf, B. Measuring Statistical Dependence with Hilbert-Schmidt Norms. In Proceedings of the 16th Conference on Algorithmic Learning Theory, Singapore, Singapore, 8–11 October 2005.

- Suzuki, J. The Bayesian Chow-Liu Algorithm. In Proceedings of the Sixth European Workshop on Probabilistic Graphical Models, Granada, Spain, 19–21 September 2012.

- Krichevsky, R.E.; Trofimov, V.K. The Performance of Universal Encoding. IEEE Trans. Inf. Theory 1981, 27, 199–207.

- Nemenman, I.; Shafee, F.; Bialek, W. Entropy and inference, revisited. In Advances in Neural Information Processing Systems 14; MIT Press: Cambridge, MA, USA, 2002; pp. 471–478.

- Archer, E.; Park, I.M.; Pillow, J. Bayesian and Quasi-Bayesian Estimators for Mutual Information from Discrete Data. Entropy 2013, 15, 1738–1755.

- Hutter, M. Distribution of mutual information. In Advances in Neural Information Processing Systems 14; MIT Press: Cambridge, MA, USA, 2002; pp. 399–406.

- Gessaman, M.P. A Consistent Nonparametric Multivariate Density Estimator Based on Statistically Equivalent Blocks. Ann. Math. Stat. 1970, 41, 1344–1346.

- Wolpert, D.; Macready, W. No Free Lunch Theorems for Optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Silander, T.; Myllymäki, P. A simple approach for finding the globally optimal Bayesian network structure. In Proceedings of the 22nd Conference on Uncertainty in Artificial Intelligence, Cambridge, MA, USA, 13–16 July 2006.

- Zhang, K.; Peters, J.; Janzing, D.; Schölkopf, B. Kernel-based Conditional Independence Test and Application in Causal Discovery. In Proceedings of the Conference on Uncertainty in Artificial Intelligence, Barcelona, Spain, 14–17 July 2011; pp. 804–813.

- Suzuki, J. On Strong Consistency of Model Selection in Classification. IEEE Trans. Inf. Theory 2006, 52, 4767–4774.

- Suzuki, J. Consistency of Learning Bayesian Network Structures with Continuous Variables: An Information Theoretic Approach. Entropy 2015, 17, 5752–5770.

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).