Entropy vs. Energy Waveform Processing: A Comparison Based on the Heat Equation

Abstract

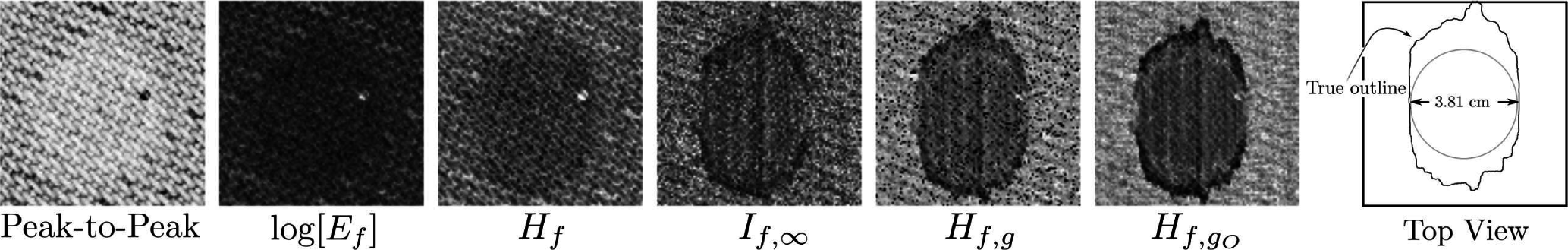

1. Introduction

2. The Main Result

- Then, for $\Delta$ small, there exists a constant $K_1$, depending on $\|\eta'\|_\infty$ but not on $g$ or $\sigma$, such that:

- There exists $K_2 > 0$, depending on $\|\eta\|_\infty$ but not on $\Delta$ or $\sigma$, such that:

3. Approach

3.1. The Physical Setup

3.2. Characteristics of the Measurement Window $\mu_\Delta(t)$ Relevant to the Variation and Variance of $H_{f+\epsilon\eta+\sigma\varsigma,g}$

3.3. Characteristics of the Measurement Window $\mu_\Delta(t)$ Relevant to the Variation and Variance of $E_{f+\epsilon\eta+\sigma\varsigma}$

4. Calculation of the Variations of Joint Entropy and Signal Energy

4.1. Calculation of the Variation, $\delta H_{f+\sigma\varsigma,g}(\eta)$

4.1.1. The First Term

4.1.2. Logarithmic Term

4.1.3. Total Variation

4.2. Calculation of the Variation, $\delta E_{f+\sigma\varsigma}(\eta)$, for $E_f$
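Reading the subscript $f+\epsilon\eta$ as a perturbation of $f$ in the direction $\eta$, the variation here is presumably the directional (Gâteaux) derivative $\delta E_f(\eta) = \frac{d}{d\epsilon} E_{f+\epsilon\eta}\big|_{\epsilon=0}$. The Python sketch below assumes the unwindowed energy $E_f = \int_0^T f(t)^2\,dt$ (the paper's functional may also include the measurement window $\mu_\Delta(t)$), for which the variation is $2\int_0^T f\eta\,dt$, and checks that closed form against a central finite difference in $\epsilon$. The function names, the test waveform, and the grid size are illustrative assumptions, not taken from the paper.

```python
import math

def energy(f, T=1.0, n=2000):
    """Assumed energy functional E_f = ∫_0^T f(t)^2 dt, via the midpoint rule."""
    h = T / n
    return h * sum(f((i + 0.5) * h) ** 2 for i in range(n))

def variation_fd(f, eta, eps=1e-4, T=1.0, n=2000):
    """Central finite-difference estimate of d/dε E_{f+εη} at ε = 0."""
    plus = energy(lambda t: f(t) + eps * eta(t), T, n)
    minus = energy(lambda t: f(t) - eps * eta(t), T, n)
    return (plus - minus) / (2 * eps)

def variation_exact(f, eta, T=1.0, n=2000):
    """Closed form for the assumed functional: δE_f(η) = 2 ∫_0^T f η dt."""
    h = T / n
    return 2 * h * sum(f((i + 0.5) * h) * eta((i + 0.5) * h) for i in range(n))

# Hypothetical test waveform (damped sinusoid) and perturbation direction
f = lambda t: math.sin(2 * math.pi * 5 * t) * math.exp(-3 * t)
eta = lambda t: t * (1 - t)
print(variation_fd(f, eta), variation_exact(f, eta))
```

Because the assumed functional is quadratic in $f$, the central difference is exact apart from rounding, so the two printed numbers should agree to many digits.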

5. Calculation of the Average Variations of Joint Entropy and Signal Energy

5.1. Calculation of the Average Variation, $\langle \delta H_{f+\sigma\varsigma,g}(\eta) \rangle$, by Wiener Integration

5.1.1. The Average Variation, $\langle \delta H_{f+\sigma\varsigma,g}[\eta] \rangle$, and the Heat Equation

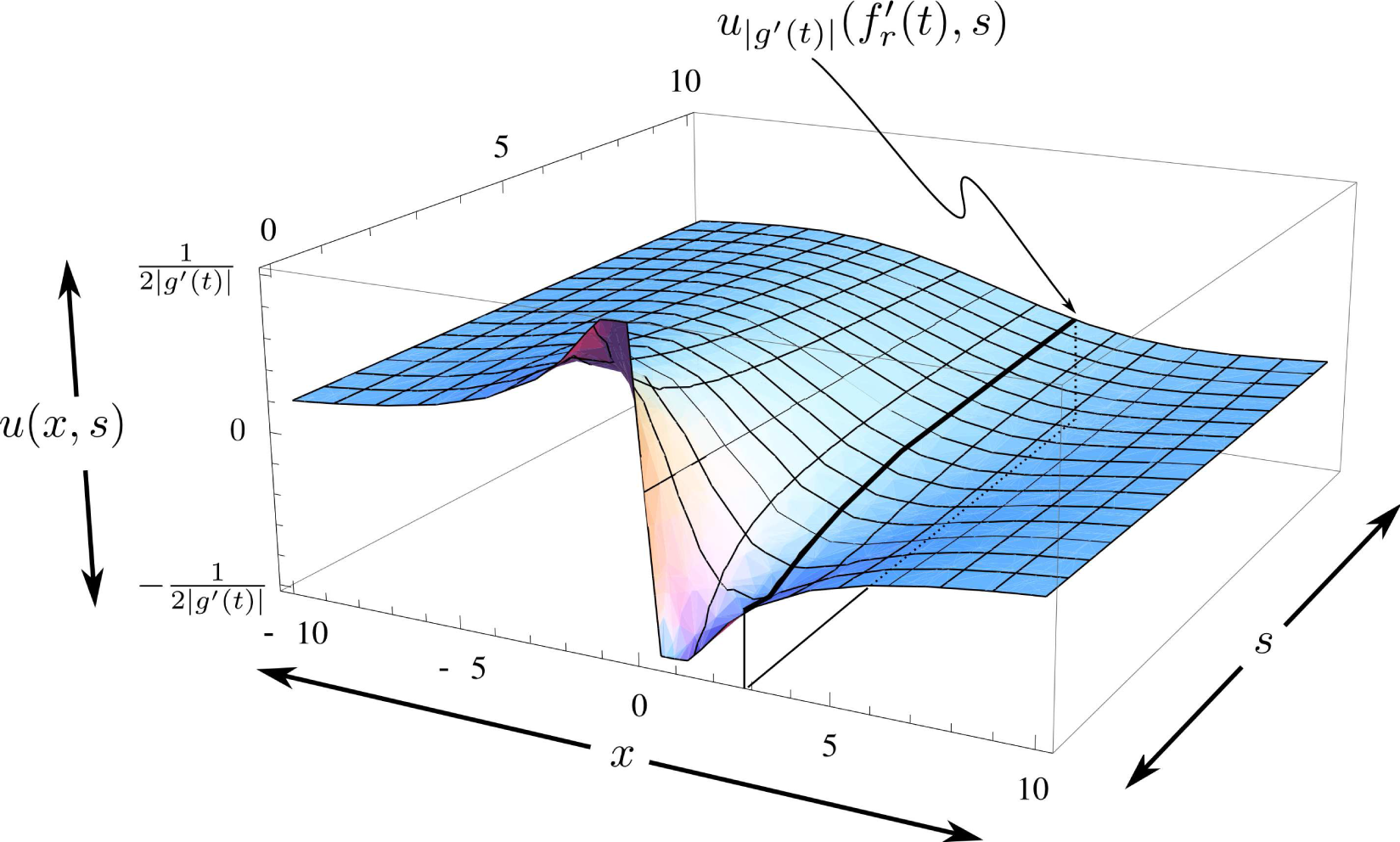
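Section 5.1 computes the average over noise paths by Wiener integration, and Section 5.1.1 links that average to the heat equation. The underlying classical fact is that an average over Brownian (Wiener) paths, $u(x,t) = \mathbb{E}[\varphi(x + W_t)]$, solves $u_t = \tfrac{1}{2}u_{xx}$ with $u(x,0) = \varphi(x)$. The Python sketch below illustrates only this general fact, not the paper's specific derivation: it takes $\varphi = \cos$, for which the solution is $\cos(x)\,e^{-t/2}$, compares a Monte Carlo Wiener average with that closed form, and checks the PDE residual by finite differences. The choice of $\varphi$, the sample count, and the helper names are assumptions.

```python
import math
import random

def wiener_average(phi, x, t, samples=100_000, seed=0):
    """Monte Carlo estimate of E[phi(x + W_t)] for standard Brownian motion W."""
    rng = random.Random(seed)
    sd = math.sqrt(t)  # W_t ~ N(0, t)
    return sum(phi(x + rng.gauss(0.0, sd)) for _ in range(samples)) / samples

def heat_solution_cos(x, t):
    """Closed-form solution of u_t = (1/2) u_xx with u(x, 0) = cos(x)."""
    return math.cos(x) * math.exp(-t / 2)

def heat_residual(u, x, t, h=1e-3):
    """Finite-difference residual u_t - (1/2) u_xx; near zero for a solution."""
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_xx = (u(x + h, t) - 2 * u(x, t) + u(x - h, t)) / h**2
    return u_t - 0.5 * u_xx

x, t = 0.3, 0.8
print(wiener_average(math.cos, x, t), heat_solution_cos(x, t))
print(heat_residual(heat_solution_cos, x, t))
```

The Monte Carlo estimate has a standard error of roughly $1/\sqrt{\text{samples}}$, so agreement to about two decimal places is expected; the residual should be close to zero up to discretization error.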

5.1.2. The Structure of the Solution-Surfaces Associated with $\langle \delta H_{f+\sigma\varsigma,g}[\eta] \rangle$

5.2. Calculation of the Average Variation, $\langle \delta E_{f+\sigma\varsigma}(\eta) \rangle$
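As a minimal sketch of what averaging does to the energy variation, assume $E_{f+\sigma\varsigma} = \int_0^T (f+\sigma\varsigma)^2\,dt$ with $\varsigma$ a standard Brownian path, so that $\delta E_{f+\sigma\varsigma}(\eta) = 2\int_0^T (f+\sigma\varsigma)\eta\,dt$. Since $\mathbb{E}[\varsigma(t)] = 0$, the average $\langle \delta E_{f+\sigma\varsigma}(\eta) \rangle$ reduces to the noise-free $2\int_0^T f\eta\,dt$. The Python code checks this by Monte Carlo; the noise model, the absence of the window $\mu_\Delta(t)$, and all names and parameter values are assumptions made for illustration.

```python
import math
import random

def brownian_midpoints(n, T, rng):
    """Standard Brownian motion sampled at the midpoints (i + 1/2) T/n of [0, T]."""
    h = T / n
    w, path = 0.0, []
    for i in range(n):
        step = h / 2 if i == 0 else h  # first midpoint sits at h/2
        w += rng.gauss(0.0, math.sqrt(step))
        path.append(w)
    return path

def delta_energy(f, eta, noise, sigma, T):
    """Assumed variation δE_{f+σς}(η) = 2 ∫ (f + σς) η dt, midpoint rule."""
    h = T / len(noise)
    return 2 * h * sum(
        (f((i + 0.5) * h) + sigma * w) * eta((i + 0.5) * h)
        for i, w in enumerate(noise)
    )

def average_delta_energy(f, eta, sigma, T=1.0, n=100, paths=2000, seed=1):
    """Monte Carlo estimate of ⟨δE_{f+σς}(η)⟩ over Brownian paths ς."""
    rng = random.Random(seed)
    total = sum(
        delta_energy(f, eta, brownian_midpoints(n, T, rng), sigma, T)
        for _ in range(paths)
    )
    return total / paths

# Hypothetical waveform, perturbation, and noise level
f = lambda t: math.sin(2 * math.pi * 5 * t) * math.exp(-3 * t)
eta = lambda t: t * (1 - t)
noisy = average_delta_energy(f, eta, sigma=0.5)
clean = delta_energy(f, eta, [0.0] * 100, 0.0, 1.0)  # δE_f(η), no noise
print(noisy, clean)
```

The deterministic part is evaluated on the same grid in both calls, so any gap between the two printed values is Monte Carlo error in the zero-mean noise term.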

6. The Variances of the Variations

- Then, there exists a constant $K_1$, depending on $\|\eta'\|_\infty$ but not on $g$ or $\sigma$, such that:

- There exists $K_2 > 0$, depending on $\|\eta\|_\infty$ but not on $\Delta$ or $\sigma$, such that:
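Under the same illustrative assumptions ($\varsigma$ a standard Brownian path, energy $\int_0^T (f+\sigma\varsigma)^2\,dt$ without the window), the variance of the energy variation has a closed form, because $\operatorname{Cov}(\varsigma(s),\varsigma(t)) = \min(s,t)$:

$$\operatorname{Var}[\delta E_{f+\sigma\varsigma}(\eta)] = 4\sigma^2 \int_0^T\!\!\int_0^T \min(s,t)\,\eta(s)\,\eta(t)\,ds\,dt \le \tfrac{4}{3}\sigma^2 \|\eta\|_\infty^2 T^3.$$

After factoring out $\sigma^2$, the constant depends on $\eta$ (through $\|\eta\|_\infty$) and not on $\sigma$, which is in the spirit of the $K_2$ statement above; it is not the paper's bound, which is not reproduced here. The Python sketch evaluates the double integral by the midpoint rule and checks the scaling and the bound; the names and parameter values are hypothetical.

```python
def variance_delta_energy(eta, sigma, T=1.0, n=200):
    """Var[δE_{f+σς}(η)] = 4σ² ∬ min(s,t) η(s) η(t) ds dt, midpoint rule.

    Only the noise term 2σ∫ςη dt is random, so f drops out of the variance.
    """
    h = T / n
    ts = [(i + 0.5) * h for i in range(n)]
    ev = [eta(t) for t in ts]
    acc = sum(min(s, t) * es * et
              for s, es in zip(ts, ev)
              for t, et in zip(ts, ev))
    return 4 * sigma**2 * acc * h * h

def sup_norm_bound(eta_sup, sigma, T=1.0):
    """Crude bound 4σ²‖η‖∞² T³/3, using ∬_{[0,T]²} min(s,t) ds dt = T³/3."""
    return 4 * sigma**2 * eta_sup**2 * T**3 / 3

eta = lambda t: t * (1 - t)  # hypothetical perturbation, ‖η‖∞ = 1/4
for sigma in (0.1, 0.5, 1.0):
    v = variance_delta_energy(eta, sigma)
    print(sigma, v, v / sigma**2, sup_norm_bound(0.25, sigma))
```

For this $\eta$ the exact value of the double integral is $13/1260$, so $v/\sigma^2 \approx 0.0413$ for every $\sigma$, below the crude bound $1/12 \approx 0.0833$.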

7. A Short List of Wiener Integrals

7.1. The First Wiener Integral

7.1.1. Random Variables Derivation

7.2. The Second Wiener Integral
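Section 7 gives a short list of Wiener integrals. That list is not reproduced here; as an illustration only, the Python sketch below checks four standard Wiener integrals for a standard Brownian path $x(\cdot)$ by Monte Carlo: $\mathbb{E}[x(t)] = 0$, $\mathbb{E}[x(t)^2] = t$, $\mathbb{E}[x(s)x(t)] = \min(s,t)$, and $\mathbb{E}[e^{\lambda x(t)}] = e^{\lambda^2 t/2}$. These are textbook identities and may differ from the ones in Sections 7.1 and 7.2; the function names and parameter values are assumptions.

```python
import math
import random

def sample_pair(s, t, rng):
    """Draw (x(s), x(t)) for standard Brownian motion with 0 <= s <= t."""
    xs = rng.gauss(0.0, math.sqrt(s))
    xt = xs + rng.gauss(0.0, math.sqrt(t - s))  # independent increment
    return xs, xt

def wiener_checks(s=0.4, t=0.9, lam=0.7, samples=100_000, seed=2):
    """Return {name: (Monte Carlo estimate, exact value)} for four identities."""
    rng = random.Random(seed)
    m1 = m2 = cross = mgf = 0.0
    for _ in range(samples):
        xs, xt = sample_pair(s, t, rng)
        m1 += xt
        m2 += xt * xt
        cross += xs * xt
        mgf += math.exp(lam * xt)
    n = samples
    return {
        "E[x(t)]": (m1 / n, 0.0),
        "E[x(t)^2]": (m2 / n, t),
        "E[x(s)x(t)]": (cross / n, min(s, t)),
        "E[exp(lam x(t))]": (mgf / n, math.exp(lam**2 * t / 2)),
    }

for name, (estimate, exact) in wiener_checks().items():
    print(f"{name:18s} Monte Carlo {estimate:+.4f}  exact {exact:+.4f}")
```

With 100,000 paths each estimate should match its exact value to roughly two decimal places.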

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Hughes, M.S.; McCarthy, J.E.; Bruillard, P.J.; Marsh, J.N.; Wickline, S.A. Entropy vs. Energy Waveform Processing: A Comparison Based on the Heat Equation. Entropy 2015, 17, 3518-3551. https://doi.org/10.3390/e17063518
