Abstract

We show that the maximin average redundancy in pattern coding is eventually larger than $1.84\,(n/\log n)^{1/3}$ for messages of length $n$. This improves recent results on pattern redundancy, although it does not fill the gap between known lower- and upper-bounds. The pattern of a string is obtained by replacing each symbol by the index of its first occurrence. The problem of pattern coding is of interest because strongly universal codes have been proved to exist for patterns while universal message coding is impossible for memoryless sources on an infinite alphabet. The proof uses fine combinatorial results on partitions with small summands.

1. Introduction

1.1. Universal Coding

Let $P_\theta$ be a stationary source on an alphabet $A$, both known by the coder and the decoder. Let $X = (X_n)_{n \ge 1}$ be a random process with distribution $P_\theta$. For a positive integer $n$, we denote by $X_1^n$ the vector of the $n$ first components of $X$ and by $P^n_\theta$ the distribution of $X_1^n$ on $A^n$. We denote the logarithm with base 2 by log and the natural logarithm by ln. Shannon’s classical bound [1] states that the average bit length of codewords for any coding function is lower-bounded by the $n$-th order entropy $H_n(\theta) = -\sum_{x \in A^n} P^n_\theta(x)\log P^n_\theta(x)$; moreover, this code length can be nearly achieved, see [2]. One important idea in the proof of this result is the following: every code on the strings of length $n$ is associated with a coding distribution $Q^n$ on $A^n$ in such a way that the code length for $x$ is $-\log Q^n(x)$, and conversely any distribution $Q^n$ on $A^n$ can be associated with a coding function whose code length is approximately $-\log Q^n(x)$. When $P_\theta$ is ergodic, its entropy rate $H(\theta) = \lim_{n\to\infty} H_n(\theta)/n$ exists. It is a tight lower bound on the number of bits required per character.

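The correspondence between codes and coding distributions can be illustrated with a small Python sketch. It assumes a finite alphabet and uses Shannon code lengths $\lceil -\log Q(x) \rceil$, a standard construction chosen here for illustration; all function names are hypothetical. These lengths satisfy Kraft's inequality, so a prefix code with these lengths exists, and coding with a mismatched distribution $q$ costs, in ideal code length, exactly the Kullback-Leibler divergence $D(p\,\|\,q)$ above the entropy.

```python
import math

def shannon_code_lengths(q):
    """Integer codeword lengths ceil(-log2 q(x)) derived from a coding distribution q."""
    return {x: math.ceil(-math.log2(px)) for x, px in q.items() if px > 0}

def kraft_sum(lengths):
    """Kraft sum; a binary prefix code with these lengths exists iff it is <= 1."""
    return sum(2.0 ** -l for l in lengths.values())

def entropy(p):
    """Entropy of p in bits."""
    return -sum(px * math.log2(px) for px in p.values() if px > 0)

def expected_length(p, lengths):
    """Average codeword length when symbols are drawn from p."""
    return sum(px * lengths[x] for x, px in p.items() if px > 0)

def ideal_redundancy(p, q):
    """E_p[-log2 q(X)] - H(p), i.e. the Kullback-Leibler divergence D(p || q) in bits."""
    return sum(px * math.log2(px / q[x]) for x, px in p.items() if px > 0)

p = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = shannon_code_lengths(p)
print(lengths)                                  # {'a': 1, 'b': 2, 'c': 3, 'd': 3}
print(kraft_sum(lengths))                       # 1.0
print(entropy(p), expected_length(p, lengths))  # 1.75 1.75
uniform = {x: 0.25 for x in p}
print(ideal_redundancy(p, uniform))             # 0.25
```

For a dyadic distribution the Shannon code meets the entropy exactly; in general its expected length lies between $H$ and $H + 1$.
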
If $P_\theta$ is only known to be an element of some class $\{P_\theta : \theta \in \Theta\}$, universal coding consists in finding a single code, or equivalently a single sequence of coding distributions $(Q^n)_{n \ge 1}$, approaching the entropy rate for all sources at the same time. Such versatility has a price: for any given source $P_\theta$, there is an additional cost called the (expected) redundancy of the coding distribution $Q^n$, defined as the difference between the expected code length and the $n$-th order entropy: $R_n(Q^n, \theta) = \mathbb{E}_\theta\left[-\log Q^n(X_1^n)\right] - H_n(\theta)$. Two criteria measure the universality of $(Q^n)_{n \ge 1}$:

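- First, a deterministic approach judges the performance of $Q^n$ in the worst case by the maximal redundancy $R^*(Q^n, \Theta) = \sup_{\theta \in \Theta} R_n(Q^n, \theta)$. The lowest achievable maximal redundancy is called the minimax redundancy: $$R^*_n(\Theta) = \inf_{Q^n} \sup_{\theta \in \Theta} R_n(Q^n, \theta).$$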

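- Second, a Bayesian approach consists in providing Θ with a prior distribution π, and then considering the expected redundancy $R_n(Q^n, \pi) = \int_\Theta R_n(Q^n, \theta)\,d\pi(\theta)$ (the expectation is here taken over θ). Let $Q^n_\pi$ be the coding distribution minimizing $R_n(Q^n, \pi)$. The maximin redundancy of class Θ is the supremum of all $R_n(Q^n_\pi, \pi)$ over all possible prior distributions π: $$\bar R_n(\Theta) = \sup_\pi R_n(Q^n_\pi, \pi).$$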

A classical minimax theorem (see [3]) states that mild hypotheses are sufficient to ensure that $\bar R_n(\Theta) = R^*_n(\Theta)$. Class Θ is said to be strongly universal if $R^*_n(\Theta) = o(n)$: then universal coding is possible uniformly on Θ. An important result by Rissanen [4] asserts that if the parameter set Θ is $k$-dimensional, and if there exists a consistent estimator for θ, then $$\bar R_n(\Theta) \ge \frac{k}{2}\log n\,\big(1 - o(1)\big). \qquad (1)$$

This well-known bound has many applications in information theory, often related to the Minimum Description Length Principle. It is remembered as a “rule of thumb” that redundancy is $\frac{1}{2}\log n$ for each parameter of the model. This result actually covers a large variety of cases, among others: memoryless processes, Markov chains, context tree sources and hidden Markov chains. However, further generalizations have been investigated. Shields (see [5]) proved that no coder can achieve a non-trivial redundancy rate on all stationary ergodic processes. Csiszár and Shields [6] gave an example of a non-parametric class of intermediate complexity, known as renewal processes, for which $\bar R_n$ and $R^*_n$ are both of order $\sqrt{n}$. If alphabet $A$ is not known, or if its size is not negligible compared to $n$, Rissanen’s bound (1) is uninformative. If the alphabet $A$ is infinite, Kieffer [7] showed that no universal coding is possible even for the class of memoryless processes.

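To see the “rule of thumb” at work in the simplest parametric case ($k = 1$), the sketch below computes exactly the expected redundancy, for a Bernoulli(θ) source, of the Laplace coding distribution, that is the Bayes mixture of Bernoulli sources under a uniform prior. The choice of the Laplace mixture and the function name are assumptions made for illustration only; the point is that its redundancy grows like $\frac{1}{2}\log n$ plus a bounded term depending on θ.

```python
import math

LN2 = math.log(2)

def laplace_redundancy(n, theta):
    """Exact expected redundancy D(P_theta^n || Q^n), in bits, of the Laplace coding
    distribution Q^n(x) = k! (n-k)! / (n+1)!  (k = number of ones in x)
    for a Bernoulli(theta) source, 0 < theta < 1."""
    total = 0.0
    for k in range(n + 1):
        # ln of the Binomial(n, theta) probability of observing k ones
        ln_binom = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * math.log(theta) + (n - k) * math.log1p(-theta))
        # ln P_theta(x) and ln Q^n(x) for any single string x with k ones
        ln_p = k * math.log(theta) + (n - k) * math.log1p(-theta)
        ln_q = math.lgamma(k + 1) + math.lgamma(n - k + 1) - math.lgamma(n + 2)
        total += math.exp(ln_binom) * (ln_p - ln_q)
    return total / LN2

for n in (100, 1000, 10000):
    print(n, round(laplace_redundancy(n, 0.3), 3), round(0.5 * math.log2(n), 3))
```

Multiplying $n$ by 100 should add close to $\frac{1}{2}\log 100 \approx 3.32$ bits to the redundancy, while the gap to $\frac{1}{2}\log n$ stays bounded.
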
1.2. Dictionary and Pattern

Those negative results prompted the idea of coding separately the structure of string x and the symbols present in x. It was first introduced by Åberg in [8] as a solution to the multi-alphabet coding problem, where the message x contains only a small subset of the known alphabet A. It was further studied and motivated in a series of articles by Shamir [9,10,11,12] and by Jevtić, Orlitsky, Santhanam and Zhang [13,14,15,16] for practical applications: the alphabet is unknown and has to be transmitted separately anyway (for instance, transmission of a text in an unknown language), or the alphabet is very large in comparison to the message (consider the case of images with colors, or texts when taking words as the alphabet units).

To explain the notion of pattern, let us take the example of [9]: string “abracadabra” is made of $n = 11$ characters. The information it conveys can be separated into two blocks:

- a dictionary $\Delta$ defined as the sequence of different characters present in $x$ in order of appearance; in the example $\Delta = (a, b, r, c, d)$.

- a pattern $\psi$ defined as the sequence of positive integers pointing to the indices of each letter in $\Delta$; here, $\psi = 1\,2\,3\,1\,4\,1\,5\,1\,2\,3\,1$.

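The split of a string into dictionary and pattern is a single left-to-right pass. A minimal Python sketch (hypothetical function names) that reproduces the “abracadabra” example and checks that the two blocks together determine the string:

```python
def dictionary_and_pattern(x):
    """Return (dictionary, pattern) of string x.

    dictionary: distinct symbols of x in order of first appearance;
    pattern:    for each position, the index (from 1) of its symbol in the dictionary."""
    index = {}
    dictionary, pattern = [], []
    for symbol in x:
        if symbol not in index:
            dictionary.append(symbol)
            index[symbol] = len(dictionary)   # indices start at 1
        pattern.append(index[symbol])
    return dictionary, pattern

def rebuild(dictionary, pattern):
    """Recover x from its dictionary and its pattern."""
    return "".join(dictionary[j - 1] for j in pattern)

delta, psi = dictionary_and_pattern("abracadabra")
print(delta)                # ['a', 'b', 'r', 'c', 'd']
print(psi)                  # [1, 2, 3, 1, 4, 1, 5, 1, 2, 3, 1]
print(rebuild(delta, psi))  # abracadabra
```
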
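Let $\Psi^n$ be the set of all possible patterns of $n$-strings. For instance, $\Psi^1 = \{1\}$, $\Psi^2 = \{11, 12\}$, $\Psi^3 = \{111, 112, 121, 122, 123\}$. Using the same notations as in [15], we call multiplicity of symbol $j$ in pattern $\psi \in \Psi^n$ the number $\mu^\psi_j = |\{1 \le i \le n : \psi_i = j\}|$ of occurrences of $j$ in $\psi$; the multiplicity of pattern $\psi$ is the vector made of all symbols’ multiplicities: $\mu^\psi = (\mu^\psi_1, \mu^\psi_2, \dots)$; in the former example, $\mu^\psi = (5, 2, 2, 1, 1)$. Note that $\sum_j \mu^\psi_j = n$. Moreover, the profile $\varphi^\psi = (\varphi^\psi_1, \dots, \varphi^\psi_n)$ of pattern $\psi$ provides, for every multiplicity $\mu$, its frequency in $\mu^\psi$. It can be formally defined as the multiplicity of $\psi$’s multiplicity: $\varphi^\psi_\mu = |\{j : \mu^\psi_j = \mu\}|$. The profile of string “abracadabra” is $\varphi = (2, 2, 0, 0, 1, 0, \dots, 0)$, as two symbols (c and d) appear once, two symbols (b and r) appear twice and one symbol (a) appears five times. We denote by $\Phi^n$ the set of possible profiles for patterns of length $n$, so that $\Phi^1 = \{(1)\}$, $\Phi^2 = \{(2, 0), (0, 1)\}$, $\Phi^3 = \{(3, 0, 0), (1, 1, 0), (0, 0, 1)\}$. Note that $\sum_{\mu=1}^n \mu\,\varphi^\psi_\mu = n$. As explained in [15], there is a one-to-one mapping between $\Phi^n$ and the set of unordered partitions of integer $n$. In Section 3, this point will be used and specified.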

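The definitions of multiplicity and profile translate directly into code. The sketch below, with hypothetical helper names, recomputes the “abracadabra” example, reads off the unordered partition of $n$ encoded by a profile, and enumerates $\Psi^n$ by brute force for small $n$. The counts it prints are the Bell numbers for $|\Psi^n|$ and the partition numbers for the number of distinct profiles, consistent with the one-to-one mapping between profiles and unordered partitions.

```python
from collections import Counter
from itertools import product

def pattern_of(x):
    """Pattern: replace each symbol by the index (from 1) of its first occurrence."""
    index = {}
    return tuple(index.setdefault(s, len(index) + 1) for s in x)

def multiplicity(psi):
    """mu^psi = (mu_1, mu_2, ...): number of occurrences of each pattern symbol j."""
    counts = Counter(psi)
    return [counts[j] for j in range(1, len(counts) + 1)]

def profile(psi):
    """phi^psi = (phi_1, ..., phi_n): phi_mu = number of symbols of multiplicity mu."""
    mult_of_mult = Counter(multiplicity(psi))
    return tuple(mult_of_mult[mu] for mu in range(1, len(psi) + 1))

def partition_of(phi):
    """Unordered partition of n (non-increasing summands) encoded by a profile."""
    return tuple(mu for mu in range(len(phi), 0, -1) for _ in range(phi[mu - 1]))

def all_patterns(n):
    """Psi^n by brute force: patterns of all strings over an n-letter alphabet."""
    return sorted({pattern_of(x) for x in product(range(n), repeat=n)})

psi = pattern_of("abracadabra")
print(multiplicity(psi))           # [5, 2, 2, 1, 1]
print(profile(psi))                # (2, 2, 0, 0, 1, 0, 0, 0, 0, 0, 0)
print(partition_of(profile(psi)))  # (5, 2, 2, 1, 1)
print(all_patterns(3))             # [(1, 1, 1), (1, 1, 2), (1, 2, 1), (1, 2, 2), (1, 2, 3)]
print([len(all_patterns(n)) for n in range(1, 7)])                        # [1, 2, 5, 15, 52, 203]
print([len({profile(p) for p in all_patterns(n)}) for n in range(1, 7)])  # [1, 2, 3, 5, 7, 11]
```
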
1.3. Pattern Coding

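Any process $X$ from a source $P_\theta$ induces a pattern process $\Psi_1^n = \Psi(X_1^n)$, where $\Psi(x)$ denotes the pattern of string $x$, with marginal distributions $P^\Psi_\theta$ on $\Psi^n$ defined by $P^\Psi_\theta(\psi) = P^n_\theta\left(\{x \in A^n : \Psi(x) = \psi\}\right)$. Thus, we can define an $n$-th block pattern entropy $H^\Psi_n(\theta) = -\sum_{\psi \in \Psi^n} P^\Psi_\theta(\psi)\log P^\Psi_\theta(\psi)$. For stationary ergodic $P_\theta$, Orlitsky et al. [16] prove that the pattern entropy rate $H^\Psi(\theta) = \lim_{n\to\infty} H^\Psi_n(\theta)/n$ exists and is equal to $H(\theta)$ (whether this quantity is finite or not). This result was independently discovered by Gemelos and Weissman [17].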

In the sequel, we shall consider only the case of memoryless sources $P_\theta$, with marginal distributions $\theta = (\theta_a)_{a \in A}$ on a (possibly infinite) alphabet $A$. Hence, Θ will be the set parameterizing all probability distributions on $A$.

Obviously, the process they induce on patterns is not memoryless. But as patterns convey less information than the initial strings, coding them seems to be an easier task. The expected pattern redundancy of a coding distribution $Q^n$ on $\Psi^n$ can be defined by analogy as the difference between the expected code length under distribution $P^\Psi_\theta$ and the $n$-th block pattern entropy: $$R^\Psi_n(Q^n, \theta) = \mathbb{E}_\theta\left[-\log Q^n(\Psi_1^n)\right] - H^\Psi_n(\theta).$$

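For a memoryless source on a small finite alphabet, the pattern distribution and the pattern entropy can be computed by brute force over $A^n$. The sketch below compares $H^\Psi_n(\theta)$ with $H_n(\theta) = nH(\theta)$ and evaluates the pattern redundancy of the uniform coding distribution on $\Psi^n$, whose size is the Bell number of $n$. The source θ = (0.5, 0.3, 0.2), the length $n = 6$ and all names are arbitrary choices for illustration.

```python
import math
from collections import defaultdict
from itertools import product

def pattern_of(x):
    """Pattern: index (from 1) of each symbol's first occurrence."""
    index = {}
    return tuple(index.setdefault(s, len(index) + 1) for s in x)

def bell(n):
    """|Psi^n|: number of patterns of length n (Bell number), via the Bell triangle."""
    row = [1]
    for _ in range(n - 1):
        nxt = [row[-1]]
        for v in row:
            nxt.append(nxt[-1] + v)
        row = nxt
    return row[-1]

def pattern_distribution(theta, n):
    """P^Psi_theta on Psi^n for a memoryless source theta on {0, ..., k-1},
    obtained by summing P_theta^n(x) over all strings x with the same pattern."""
    dist = defaultdict(float)
    for x in product(range(len(theta)), repeat=n):
        dist[pattern_of(x)] += math.prod(theta[a] for a in x)
    return dict(dist)

def entropy(probs):
    """Entropy in bits of a collection of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

theta, n = [0.5, 0.3, 0.2], 6        # illustrative source and length
p_psi = pattern_distribution(theta, n)
h_block = n * entropy(theta)         # H_n(theta) = n H(theta) for a memoryless source
h_pattern = entropy(p_psi.values())  # H^Psi_n(theta)
# Uniform coding distribution on Psi^n: expected code length is log2 |Psi^n|
redundancy_uniform = math.log2(bell(n)) - h_pattern
print(round(h_block, 3), round(h_pattern, 3), round(redundancy_uniform, 3))
```

The pattern entropy is strictly smaller than the block entropy, since relabelling symbols does not change the pattern.
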
As the alphabet is unknown, the maximal pattern redundancy must be defined as the supremum of $R^\Psi_n(Q^n, \theta)$ over all alphabets $A$ and all memoryless distributions θ on $A$: $R^*_\Psi(Q^n) = \sup_A \sup_\theta R^\Psi_n(Q^n, \theta)$. Of course, the minimax pattern redundancy is defined as $R^*_\Psi(n) = \inf_{Q^n} R^*_\Psi(Q^n)$. Similarly, the maximin pattern redundancy is defined as the supremum with respect to all possible alphabets $A$ and all prior distributions π of the lowest achievable average redundancy, that is: $$\bar R_\Psi(n) = \sup_A \sup_\pi \inf_{Q^n} \int R^\Psi_n(Q^n, \theta)\,d\pi(\theta).$$

2. Theorem

There is still uncertainty on the true order of magnitude of $\bar R_\Psi(n)$ and $R^*_\Psi(n)$. However, Orlitsky et al. in [15] and Shamir in [11] proved, for some constants $c_1$ and $c_2$, lower- and upper-bounds on these quantities that are of different orders in $n$. There is hence a gap between upper- and lower-bounds. This gap has been reduced in an article by Shamir [10], where the upper-bound is improved. The following theorem contributes to the evaluation of $\bar R_\Psi(n)$ by providing a slightly better and more explicit lower-bound, the proof of which is particularly elegant.

Theorem 1 For all integers $n$ large enough, the maximin pattern redundancy is lower-bounded as: $$\bar R_\Psi(n) \ge 1.84\left(\frac{n}{\log n}\right)^{1/3}.$$

Gil Shamir [18] suggests that a bound of similar order can be obtained by properly updating (B12) in [11]. The proof provided in this paper was elaborated independently; both of them use the channel capacity inequality described in Section 3. However, it is interesting to note that they rely on different ideas (unordered partitions of integers and Bernstein’s inequality here, sphere packing arguments or inhomogeneous grids there). An important difference appears in the treatment of the quantization, see Equation 2. [11] provides fine relations between the minimax average redundancy and the alphabet size. The approach presented here does not discriminate between alphabet sizes; in a short and elegant proof, it leads to a slightly better bound for infinite alphabets.

3. Proof

We use here a standard technique for lower-bounds (see [19]): the $n$-th order maximin redundancy is bounded from below by (and asymptotically equivalent to) the capacity of the channel joining an input variable $W$ with distribution π on Θ to the output variable $\Psi_1^n$ with conditional probabilities $\mathbb{P}(\Psi_1^n = \psi \mid W = \theta) = P^\Psi_\theta(\psi)$. Let $H(\Psi_1^n \mid W)$ be the conditional entropy of $\Psi_1^n$ given $W$, and let $I(W; \Psi_1^n) = H(\Psi_1^n) - H(\Psi_1^n \mid W)$ denote the mutual information of these two random variables, see [2]. Then from [19] and [4] we know that the inequality

$$\bar R_\Psi(n) \ge I(W; \Psi_1^n)$$

holds for all alphabets $A$ and all prior distributions π on the set of memoryless distributions on $A$: it is sufficient to give a lower-bound for the mutual information between parameter $W$ and observation $\Psi_1^n$. In words, $\bar R_\Psi(n)$ is larger than the logarithm of the number of memoryless sources that can be distinguished from one observation of $\Psi_1^n$.

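Since the bound by mutual information holds for any prior, it is enough to evaluate $I(W; \Psi_1^n)$ for a well-chosen finite set of sources. Below is a small Python helper for finite channels (a sketch; names are hypothetical) and a toy example with two sources observed through patterns of length 2, where $\Psi^2 = \{11, 12\}$: a source concentrated on one symbol always emits pattern 11, while a fair source on two symbols emits 11 or 12 with probability 1/2 each.

```python
import math

def mutual_information(prior, channel):
    """I(W; Y) in bits.  prior[w] = P(W = w); channel[w][y] = P(Y = y | W = w)."""
    n_out = len(channel[0])
    p_y = [sum(prior[w] * channel[w][y] for w in range(len(prior)))
           for y in range(n_out)]
    info = 0.0
    for w, pw in enumerate(prior):
        for y, pyw in enumerate(channel[w]):
            if pw > 0 and pyw > 0:
                info += pw * pyw * math.log2(pyw / p_y[y])
    return info

# Toy channel: W uniform on two sources, output = pattern of length 2 (11 or 12).
prior = [0.5, 0.5]
channel = [[1.0, 0.0],   # source concentrated on a single symbol
           [0.5, 0.5]]   # fair source on two symbols
print(mutual_information(prior, channel))  # about 0.311 bits, below log2(2) = 1
```

With a single observation of length 2 the two sources cannot be told apart reliably, so the mutual information stays well below $\log 2 = 1$ bit; longer patterns separate them better.
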
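Given the positive integer $n$, let $c$ be an integer growing with $n$ to infinity in a way defined later, let λ be a positive constant to be specified later, and let $d$ be an integer of order $\lambda\sqrt{c}$. We denote by $\Theta_{c,d}$ the set of all unordered partitions of $c$ made of summands at most equal to $d$, written as non-increasing sequences: $$\Theta_{c,d} = \left\{\theta = (\theta_1, \dots, \theta_c) \in \mathbb{N}^c : d \ge \theta_1 \ge \dots \ge \theta_c \ge 0,\ \sum_{j=1}^c \theta_j = c\right\}.$$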

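Then $\Theta_{c,c}$ is the set of all unordered partitions of $c$. Let also $\Phi^c_d$ be the subset of $\Phi^c$ containing the profiles of all patterns of length $c$ whose symbols appear at most $d$ times:

$$\Phi^c_d = \left\{\varphi \in \Phi^c : \varphi_\mu = 0 \text{ for all } \mu > d\right\}.$$

There is a one-to-one mapping between $\Theta_{c,d}$ and $\Phi^c_d$ defined by

$$\theta \mapsto \varphi, \qquad \varphi_\mu = \left|\{j : \theta_j = \mu\}\right|, \quad 1 \le \mu \le c.$$

It is immediately verified that $\sum_\mu \mu\,\varphi_\mu = \sum_j \theta_j = c$, so that this mapping is well defined; in particular $|\Theta_{c,d}| = |\Phi^c_d|$. In [20], citing [21], Dixmier and Nicolas show the existence of an increasing function $f$ such that $\ln|\Theta_{c,d}| \sim f(v)\sqrt{c}$ as $c \to \infty$, where $v = d/\sqrt{c}$ is held fixed. Numerous properties of function $f$, and numerical values, are given in [20]; notably, $f$ is an infinitely differentiable and concave function which satisfies $f(v) \sim 2v\ln(1/v)$ when $v \to 0$ and $f(v) \to \pi\sqrt{2/3}$ when $v \to \infty$.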

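The cardinality $|\Theta_{c,d}|$ can be computed exactly with the classical dynamic program for partitions into parts taken from $\{1, \dots, d\}$. The sketch below (hypothetical names) also prints $\ln|\Theta_{c,d}|/\sqrt{c}$ with $d$ close to $v\sqrt{c}$ for a few values of $v$, as a rough finite-$c$ proxy for the limiting function $f$, assuming $f$ is defined under this scaling. Convergence in $c$ is slow, so these numbers are only indicative and are not the values tabulated in [20].

```python
import math

def partitions_bounded(c, d):
    """|Theta_{c,d}|: number of unordered partitions of c whose summands are all <= d."""
    ways = [1] + [0] * c   # ways[m]: partitions of m using the part sizes processed so far
    for part in range(1, min(c, d) + 1):
        for m in range(part, c + 1):
            ways[m] += ways[m - part]
    return ways[c]

print(partitions_bounded(5, 5), partitions_bounded(5, 2))  # 7 3

# Finite-c proxy for f(v): ln |Theta_{c,d}| / sqrt(c) with d close to v * sqrt(c).
c = 2500
for v in (0.5, 1.0, 2.0, 4.0):
    d = max(1, round(v * math.sqrt(c)))
    print(v, d, round(math.log(partitions_bounded(c, d)) / math.sqrt(c), 3))
print("limit for v -> infinity:", round(math.pi * math.sqrt(2 / 3), 3))
```

Python integers are unbounded, so the counts are exact; `math.log` accepts these large integers directly.
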
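For $\theta \in \Theta_{c,d}$, let $P_\theta$ be the distribution on $\{1, \dots, c\}$ defined by $P_\theta(j) = \theta_j/c$, and let $P_\theta^{\otimes\mathbb{N}}$ be the memoryless process with marginal distribution $P_\theta$. Let $W$ be a random variable with uniform distribution on the set $\Theta_{c,d}$. Let $X = (X_i)_{i \ge 1}$ be a random process such that, conditionally on the event $\{W = \theta\}$, the distribution of $X$ is $P_\theta^{\otimes\mathbb{N}}$, and let $\Psi_1^n = \Psi(X_1^n)$ be the induced pattern process.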

We want to bound from below. As

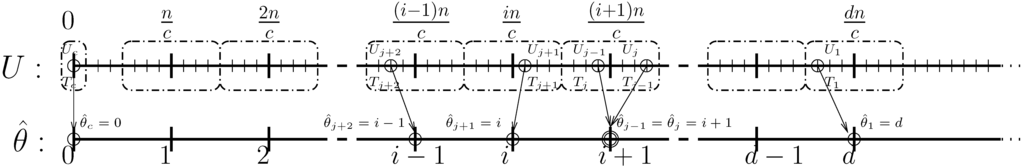

we need to find an upper-bound for $H(W \mid \Psi_1^n)$. The idea of the proof is the following. From Fano’s inequality, upper-bounding $H(W \mid \Psi_1^n)$ reduces to finding a good estimator for $W$: conditionally on $W = \theta$, string $X_1^n$ is a memoryless process with distribution $P_\theta$ and we aim at recovering parameter θ from its pattern $\Psi_1^n$. Each parameter $\theta \in \Theta_{c,d}$ is here an unordered partition of integer $c$ with small summands. Let $T_j$ be the number of occurrences of the $j$-th most frequent symbol in $\psi$. Then $T = (T_1, T_2, \dots)$ constitutes a random unordered partition of $n$. We show that by “shrinking” $T$ by a factor $n/c$ we build an unordered partition of $c$ that is equal to parameter θ with high probability, see Figure 1. Note that only partitions with small summands are considered: this allows a better uniform control on the probabilities of deviation of each symbol’s frequency, while the cardinality of $\Theta_{c,d}$ remains of the same (logarithmic) order as that of $\Theta_{c,c}$. Parameters $c$ and $d$ are chosen in order to optimize the rate in Theorem 1, while the value of λ is chosen at the end to maximize the constant.

Figure 1. The profile of pattern ψ forms a partition of n that can be “shrunk” to θ, the parameter partition of c, with high probability.

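Let us now give the details of the proof. If $W = \theta$ and if we observe string $X_1^n$ having pattern $\psi = \Psi(X_1^n)$, we construct an estimator $\hat\theta$ of θ in the following way: let $\varphi$ be the profile of $\psi$, and $T = (T_1 \ge T_2 \ge \dots)$ be the corresponding partition of $n$. For $1 \le j \le c$, let $\hat\theta_j = [cT_j/n]$, where $[x]$ denotes the nearest integer to $x$. Observe that as the alphabet $\{1, \dots, c\}$ contains only $c$ different symbols, for all $j > c$ we have $T_j = 0$.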

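The distribution of $T$ is difficult to study, but is closely related to much simpler random variables. For $1 \le i \le n$ and $1 \le j \le c$, let $Y_{i,j} = \mathbb{1}\{X_i = j\}$; as $Y_{i,j}$ has a Bernoulli distribution with parameter $\theta_j/c$, and as process $X$ is memoryless, we observe that $U_j = \sum_{i=1}^n Y_{i,j}$, the number of occurrences of symbol $j$ in $x$, has a binomial distribution $\mathcal{B}(n, \theta_j/c)$. Let $U = (U_1, \dots, U_c)$, and $\tilde\theta_j = [cU_j/n]$; $\tilde\theta$ would be an estimator of θ if we had access to $x$, but here estimators may only be constructed from $\psi$. However, there is a strong connection between $T$ and $U$: the symbols in $x$ are in one-to-one correspondence with the symbols in $\psi$. Hence, $T$ is just the order statistics of $U$: $T = (U_{(1)}, \dots, U_{(c)})$, where $U_{(1)} \ge \dots \ge U_{(c)}$ are the values of $U$ sorted in non-increasing order, and thus $\hat\theta = (\tilde\theta_{(1)}, \dots, \tilde\theta_{(c)})$.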

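The shrinking estimator can be simulated directly. The sketch below draws a string from $P_\theta$ for the arbitrary example θ = (3, 2, 1, 1, 1), a partition of $c = 8$ with summands at most $d = 3$, computes its pattern, keeps only the sorted symbol counts $T$ (which depend on the pattern alone), and rounds $cT_j/n$ to the nearest integer. Names, parameters and the random seed are illustrative assumptions.

```python
import random
from collections import Counter

def pattern_of(x):
    """Pattern of a string: index (from 1) of each symbol's first occurrence."""
    index = {}
    return [index.setdefault(s, len(index) + 1) for s in x]

def sample_string(theta, n, rng):
    """n i.i.d. draws from P_theta(j) = theta_j / c on the alphabet {1, ..., len(theta)}."""
    c = sum(theta)
    symbols = list(range(1, len(theta) + 1))
    return rng.choices(symbols, weights=[t / c for t in theta], k=n)

def shrink_estimator(psi, c):
    """theta_hat_j = [c T_j / n], where T is the partition of n given by the
    symbol counts of the pattern psi, sorted in non-increasing order."""
    n = len(psi)
    t = sorted(Counter(psi).values(), reverse=True)
    theta_hat = (round(c * tj / n) for tj in t)
    return tuple(v for v in theta_hat if v > 0)  # drop zero summands

theta, c = (3, 2, 1, 1, 1), 8   # a partition of c = 8 with summands <= d = 3
rng = random.Random(0)
x = sample_string(theta, 200_000, rng)
print(shrink_estimator(pattern_of(x), c) == theta)  # True
```

With $n = 200\,000$ each count deviates from its mean by far less than the tolerance $n/(2c)$, so the estimate is exact; for small $n$ (say 20) it is frequently wrong.
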
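Now, if $|cU_j/n - \theta_j| < 1/2$ then $\tilde\theta_j = \theta_j$. Thus, if for all $j$ in the set $\{1, \dots, c\}$ it holds that $|cU_j/n - \theta_j| < 1/2$, then $\tilde\theta = \theta$ and $\tilde\theta$, as a non-increasing sequence, is equal to its order statistics $\hat\theta$. It follows that

$$\mathbb{P}\big(\hat\theta \ne \theta \mid W = \theta\big) \le \mathbb{P}\big(\exists j \in \{1, \dots, c\} : |cU_j/n - \theta_j| \ge 1/2 \mid W = \theta\big)$$

and hence, using the union bound:

$$\mathbb{P}\big(\hat\theta \ne \theta \mid W = \theta\big) \le \sum_{j=1}^{c} \mathbb{P}\big(|U_j - n\theta_j/c| \ge n/(2c) \mid W = \theta\big). \qquad (3)$$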

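We chose the parameter set $\Theta_{c,d}$ so that all summands in partition θ are small with respect to $c$. Consequently, the variance of the $U_j$ is uniformly bounded: $\operatorname{Var}(U_j) = n\frac{\theta_j}{c}\left(1 - \frac{\theta_j}{c}\right) \le \frac{nd}{c}$. Recall the following Bernstein inequality [22]: if $Z_1, \dots, Z_n$ are independent random variables such that $Z_i$ takes its values in $[-b, b]$ and such that $\mathbb{E}[Z_i] = 0$, and if $v \ge \sum_{i=1}^n \mathbb{E}[Z_i^2]$, then for any positive $x$ it holds that:

$$\mathbb{P}\left(\left|\sum_{i=1}^n Z_i\right| \ge x\right) \le 2\exp\left(-\frac{x^2}{2(v + bx/3)}\right).$$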

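Using this inequality for the $Z_i = Y_{i,j} - \theta_j/c$, which take their values in $[-1, 1]$, with $b = 1$, $v = nd/c$ and $x = n/(2c)$, we obtain, conditionally on $W = \theta$:

$$\mathbb{P}\big(|U_j - n\theta_j/c| \ge n/(2c) \mid W = \theta\big) \le 2\exp\left(-\frac{n}{8c\,(d + 1/6)}\right).$$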

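Thus, we obtain from (3):

$$\mathbb{P}\big(\hat\theta \ne \theta \mid W = \theta\big) \le 2c\exp\left(-\frac{n}{8c\,(d + 1/6)}\right).$$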

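Assuming the Bernstein step is applied with $b = 1$, $v = nd/c$ and $x = n/(2c)$, the union bound gives $\mathbb{P}(\hat\theta \ne \theta \mid W = \theta) \le 2c\exp\left(-\frac{n}{8c(d + 1/6)}\right)$. The Monte Carlo sketch below compares this bound with the empirical frequency of the bad event $\{\exists j : |cU_j/n - \theta_j| \ge 1/2\}$ for the arbitrary example θ = (3, 3, 2, 1, 1), so $c = 10$ and $d = 3$; names, sizes and seed are hypothetical. The bound is valid but conservative: it drops below 1 only once $n$ exceeds $8c(d + 1/6)\ln(2c)$.

```python
import math
import random

def error_bound(n, c, d):
    """Union + Bernstein bound 2c exp(-n / (8c(d + 1/6))) on P(theta_hat != theta | W = theta)."""
    return 2 * c * math.exp(-n / (8 * c * (d + 1 / 6)))

def empirical_error(theta, n, trials, rng):
    """Monte Carlo frequency of the event {exists j : |c U_j / n - theta_j| >= 1/2},
    outside of which the shrinking estimator returns theta exactly."""
    c = sum(theta)
    symbols = range(len(theta))
    weights = [t / c for t in theta]
    failures = 0
    for _ in range(trials):
        counts = [0] * len(theta)  # U_j: occurrences of symbol j
        for s in rng.choices(symbols, weights=weights, k=n):
            counts[s] += 1
        if any(abs(c * u / n - t) >= 0.5 for u, t in zip(counts, theta)):
            failures += 1
    return failures / trials

theta = (3, 3, 2, 1, 1)  # c = 10, d = 3 (illustrative)
rng = random.Random(1)
for n in (500, 1000, 2000):
    print(n, empirical_error(theta, n, 500, rng), round(error_bound(n, 10, 3), 4))
```

At $n = 500$ the bound exceeds 1 and is vacuous, while the empirical error is already small; the gap reflects the worst-case nature of the union and Bernstein bounds.
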
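Now, using Fano’s inequality [2], and since $\hat\theta$ is a function of $\Psi_1^n$:

$$H(W \mid \Psi_1^n) \le 1 + \mathbb{P}(\hat\theta \ne W)\log|\Theta_{c,d}| \le 1 + 2c\exp\left(-\frac{n}{8c\,(d + 1/6)}\right)\log|\Theta_{c,d}|.$$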

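Hence,

$$\bar R_\Psi(n) \ge I(W; \Psi_1^n) \ge \left(1 - 2c\exp\left(-\frac{n}{8c\,(d + 1/6)}\right)\right)\log|\Theta_{c,d}| - 1.$$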

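Combining Fano’s inequality with the error bound, the lower bound $(1 - P_e)\log|\Theta_{c,d}| - 1$ on $I(W; \Psi_1^n)$ can be evaluated numerically for concrete $n$, $c$ and $d$, with $P_e$ replaced by $2c\exp\left(-\frac{n}{8c(d + 1/6)}\right)$ capped at 1. The sketch below uses the exact value of $|\Theta_{c,d}|$; the grid of $c$ values and the coupling $d = \lambda\sqrt{c}$ with λ = 1 are hypothetical choices for illustration, not the choices made in the proof. Scanning $c$ shows the trade-off: larger $c$ gives more distinguishable sources, but the error bound deteriorates once $8c(d + 1/6)\ln(2c)$ approaches $n$.

```python
import math

def partitions_bounded(c, d):
    """|Theta_{c,d}|: partitions of c with summands at most d (dynamic programming)."""
    ways = [1] + [0] * c
    for part in range(1, min(c, d) + 1):
        for m in range(part, c + 1):
            ways[m] += ways[m - part]
    return ways[c]

def error_bound(n, c, d):
    """Union + Bernstein bound on P(theta_hat != W), capped at 1."""
    return min(1.0, 2 * c * math.exp(-n / (8 * c * (d + 1 / 6))))

def fano_lower_bound(n, c, d):
    """(1 - P_e) * log2 |Theta_{c,d}| - 1, a lower bound on I(W; Psi_1^n) in bits."""
    return (1 - error_bound(n, c, d)) * math.log2(partitions_bounded(c, d)) - 1

n, lam = 10**6, 1.0  # hypothetical message length and coupling constant
for c in (100, 300, 500, 1000):
    d = max(1, round(lam * math.sqrt(c)))
    print(c, d, round(fano_lower_bound(n, c, d), 1))
```

When the error bound saturates at 1, the lower bound degenerates to $-1$, which is why $c$ must grow more slowly than $n$.
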
By choosing we get:

By looking at the table of f given at page 151 of [20], we see that function reaches its maximum around ; for that choice, and we obtain:

Acknowledgment

I would particularly like to thank Professor Jean-Louis Nicolas from Institut Girard Desargues, who very kindly helped with the combinatorial arguments.

References

- Shannon, C.E. A mathematical theory of communication. Bell System Tech. J. 1948, 27, 379–423, 623–656.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons Inc.: New York, NY, USA, 1991.

- Haussler, D. A general minimax result for relative entropy. IEEE Trans. Inform. Theory 1997, 43, 1276–1280.

- Rissanen, J. Universal coding, information, prediction, and estimation. IEEE Trans. Inform. Theory 1984, 30, 629–636.

- Shields, P.C. Universal redundancy rates do not exist. IEEE Trans. Inform. Theory 1993, 39, 520–524.

- Csiszár, I.; Shields, P.C. Redundancy rates for renewal and other processes. IEEE Trans. Inform. Theory 1996, 42, 2065–2072.

- Kieffer, J.C. A unified approach to weak universal source coding. IEEE Trans. Inform. Theory 1978, 24, 674–682.

- Åberg, J.; Shtarkov, Y.M.; Smeets, B.J. Multialphabet Coding with Separate Alphabet Description. In Proceedings of Compression and Complexity of Sequences; IEEE Computer Society Press: Palermo, Italy, 1997; pp. 56–65.

- Shamir, G.I.; Song, L. On the entropy of patterns of i.i.d. sequences. In Proceedings of 41st Annual Allerton Conference on Communication, Control and Computing; Curran Associates, Inc.: Monticello, IL, USA, 2003; pp. 160–169.

- Shamir, G.I. A new redundancy bound for universal lossless compression of unknown alphabets. In Proceedings of the 38th Annual Conference on Information Sciences and Systems - CISS; IEEE: Princeton, NJ, USA, 2004; pp. 1175–1179.

- Shamir, G.I. Universal lossless compression with unknown alphabets-the average case. IEEE Trans. Inform. Theory 2006, 52, 4915–4944.

- Shamir, G.I. On the MDL principle for i.i.d. sources with large alphabets. IEEE Trans. Inform. Theory 2006, 52, 1939–1955.

- Orlitsky, A.; Santhanam, N.P. Speaking of infinity. IEEE Trans. Inform. Theory 2004, 50, 2215–2230.

- Jevtić, N.; Orlitsky, A.; Santhanam, N.P. A lower bound on compression of unknown alphabets. Theoret. Comput. Sci. 2005, 332, 293–311.

- Orlitsky, A.; Santhanam, N.P.; Zhang, J. Universal compression of memoryless sources over unknown alphabets. IEEE Trans. Inform. Theory 2004, 50, 1469–1481.

- Orlitsky, A.; Santhanam, N.P.; Viswanathan, K.; Zhang, J. Limit Results on Pattern Entropy of Stationary Processes. In Proceedings of the 2004 IEEE Information Theory workshop; IEEE: San Antonio, TX, USA, 2004; pp. 2954–2964.

- Gemelos, G.; Weissman, T. On the entropy rate of pattern processes; Technical report hpl-2004-159; HP Laboratories Palo Alto: San Antonio, TX, USA, 2004.

- Shamir, G.I. (University of Utah, Electrical and Computer Engineering). Private communication, 2006.

- Davisson, L.D. Universal noiseless coding. IEEE Trans. Inform. Theory 1973, IT-19, 783–795.

- Dixmier, J.; Nicolas, J.L. Partitions sans petits sommants. In A Tribute to Paul Erdös; Cambridge University Press: New York, NY, USA, 1990; Chapter 8; pp. 121–152.

- Szekeres, G. An asymptotic formula in the theory of partitions. Quart. J. Math. Oxford 1951, 2, 85–108.

- Massart, P. Ecole d’Eté de Probabilités de Saint-Flour XXXIII; LNM; Springer-Verlag: London, UK, 2003; Chapter 2.

© 2009 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license http://creativecommons.org/licenses/by/3.0/.