A Diversity Model Based on Dimension Entropy and Its Application to Swarm Intelligence Algorithm

Abstract

1. Introduction

1.1. Optimization Problems and Swarm Intelligence Algorithms

1.2. An Overview of the Diversity of Swarm Intelligence Algorithms

- (1) It is robust to parameters such as population size and problem dimension.

- (2) It is repeatable across different populations.

- (3) It responds directly to changes in the population.

2. Diversity Model Based on Dimension Entropy

2.1. General Concept

2.2. Research Status of Diversity

2.3. Some Fundamental Flaws in Current Metrics

2.4. Diversity Model Based on Dimension Entropy

2.5. A Comparative Study

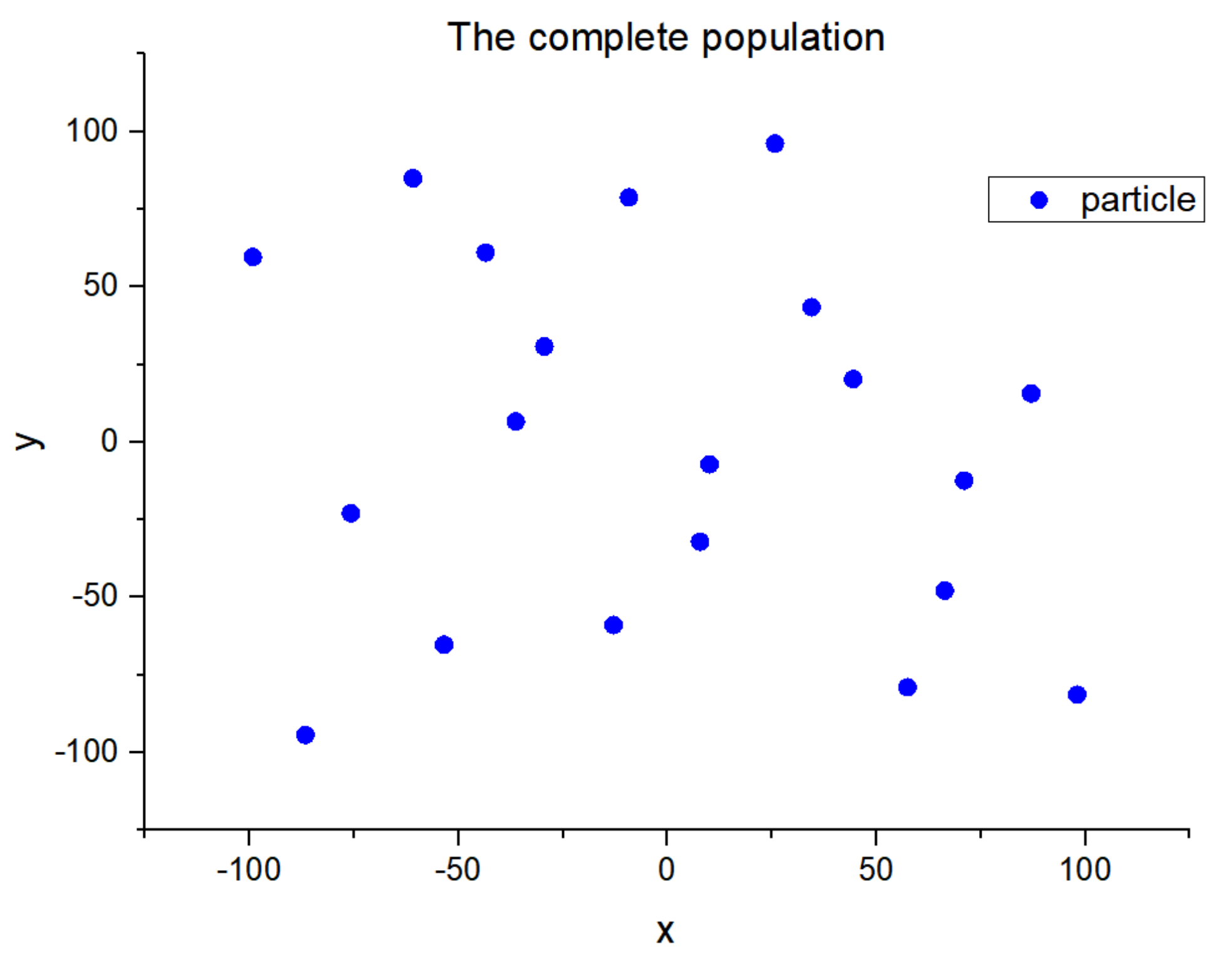

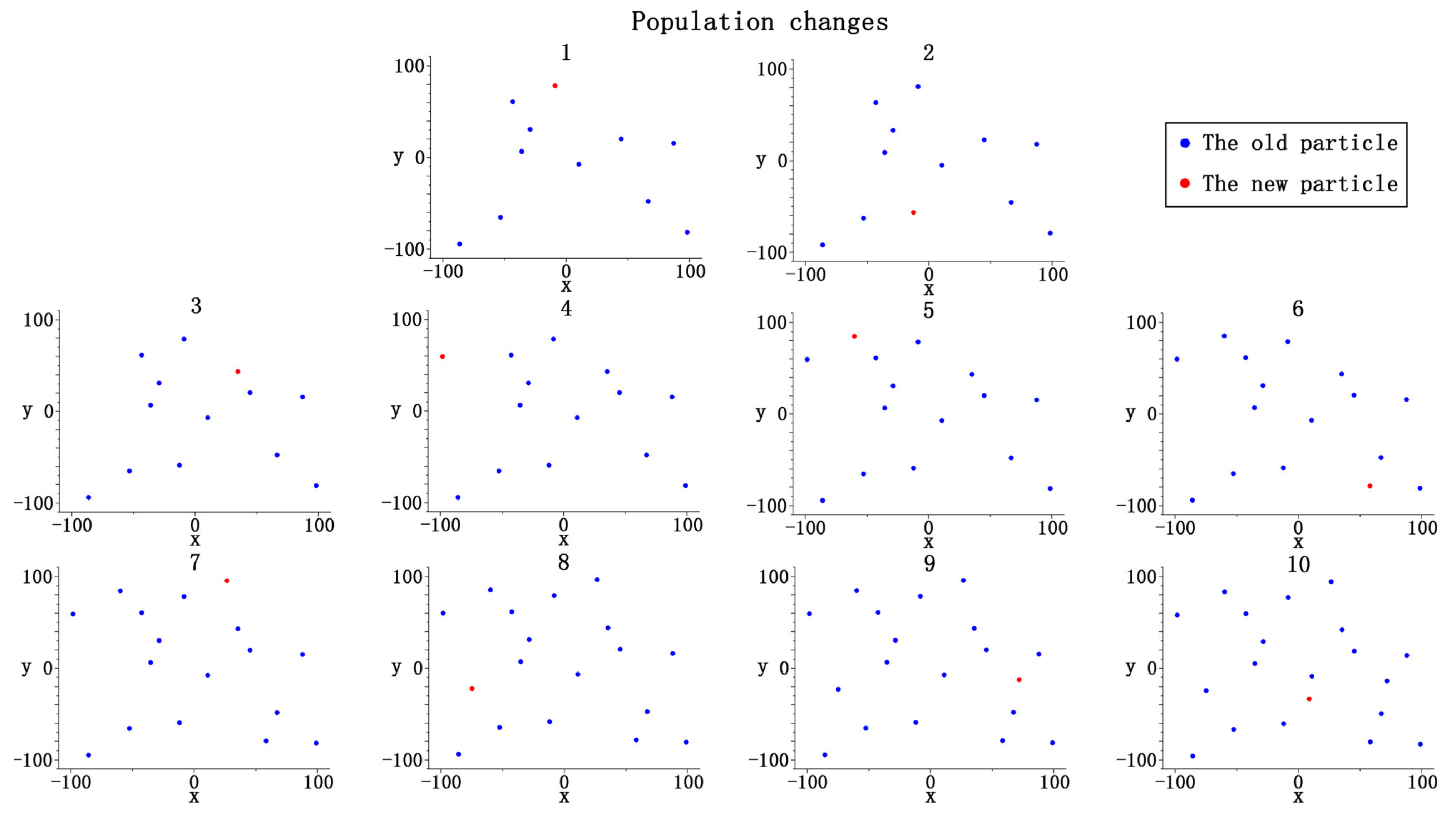

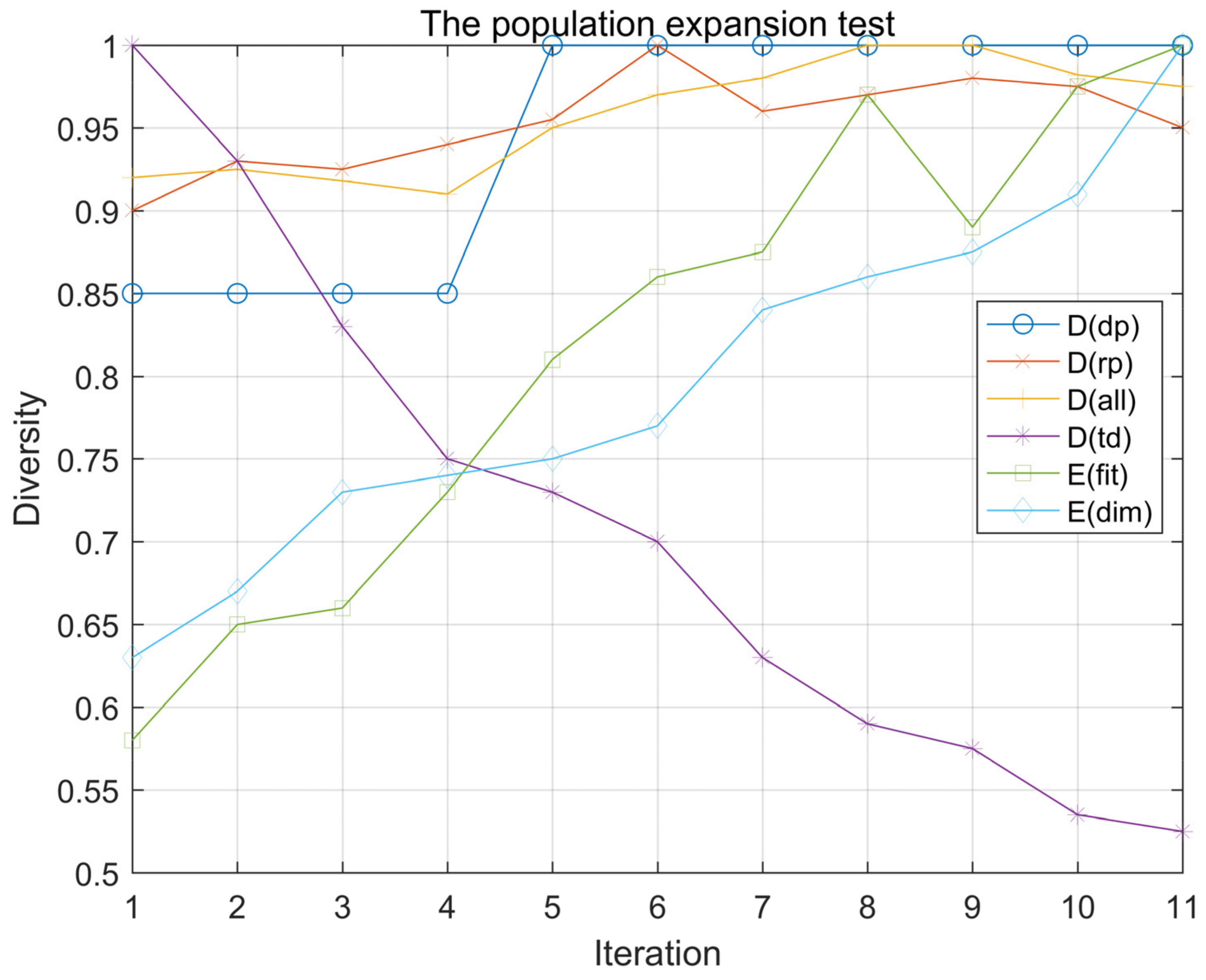

2.5.1. Population Expansion Experiment

- (1) All particles in the population are uniformly distributed in all dimensions.

- (2) No two particles are the same.
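One way to build a population that meets both conditions is stratified sampling in the style of a Latin hypercube. This is an illustrative assumption, not necessarily how the experiment was set up. The function name `stratified_population` and its parameters are hypothetical.

```python
import random
from typing import List


def stratified_population(n_particles: int, n_dims: int,
                          lower: float, upper: float,
                          rng: random.Random) -> List[List[float]]:
    """Place one particle in each of n_particles equal-width strata per dimension.

    Assumption: "uniformly distributed" is read as "every stratum of every
    dimension holds exactly one particle". Because the particles differ in
    every coordinate, no two particles are the same.
    """
    width = (upper - lower) / n_particles
    columns = []
    for _ in range(n_dims):
        # Midpoints of the strata, shuffled independently for each dimension.
        column = [lower + (k + 0.5) * width for k in range(n_particles)]
        rng.shuffle(column)
        columns.append(column)
    # Transpose the per-dimension columns into per-particle rows.
    return [[columns[d][i] for d in range(n_dims)] for i in range(n_particles)]


population = stratified_population(8, 3, -1.0, 1.0, random.Random(0))
```

Growing `n_particles` while keeping the bounds fixed gives populations of different sizes that all satisfy the two conditions, which is the kind of input a population expansion test needs.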

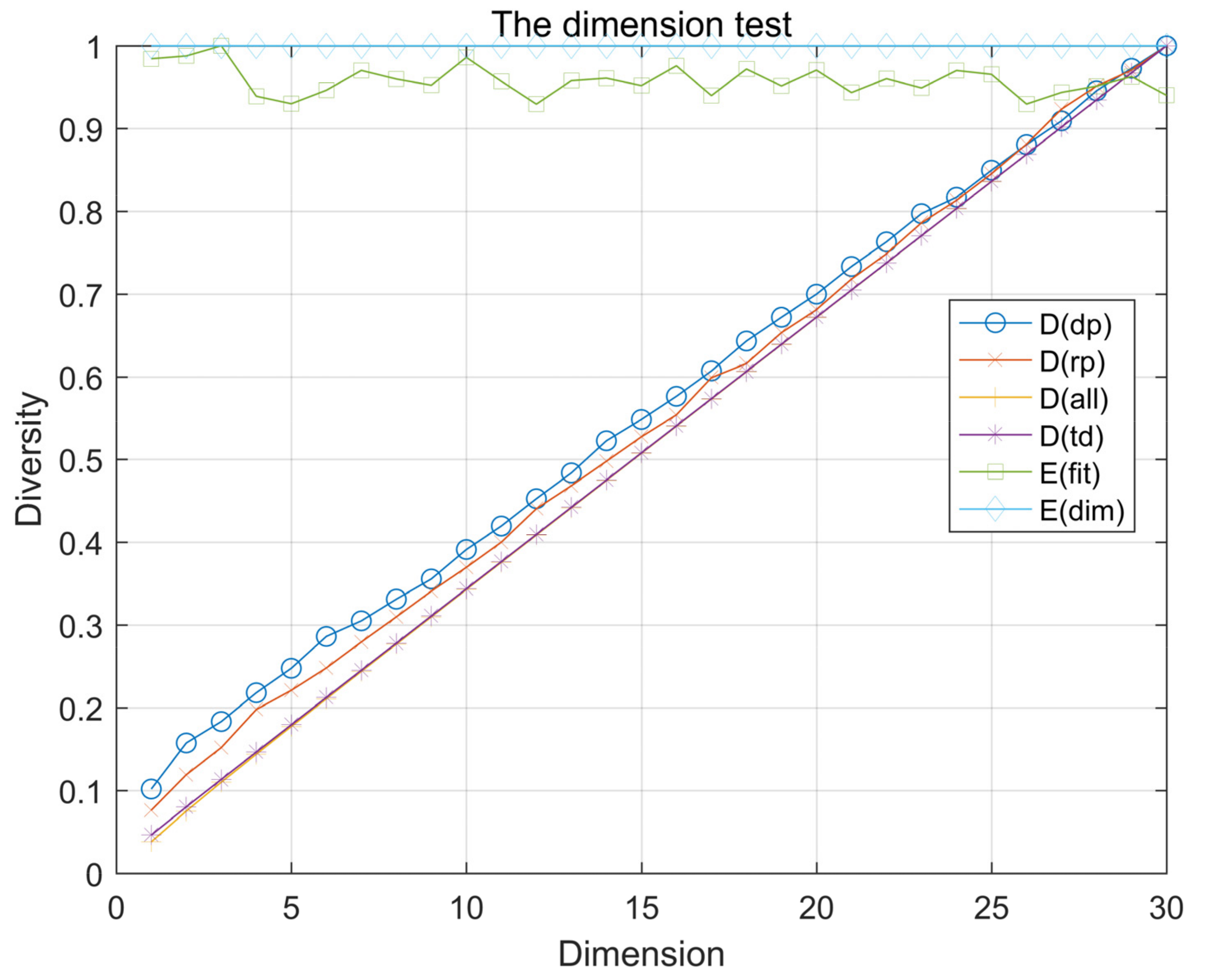

2.5.2. Dimensional Change Experiment

2.5.3. Practical Problem Testing

3. Swarm Intelligence Algorithm Control Method Based on Dimension Entropy

3.1. Introduction to Swarm Intelligence Algorithms

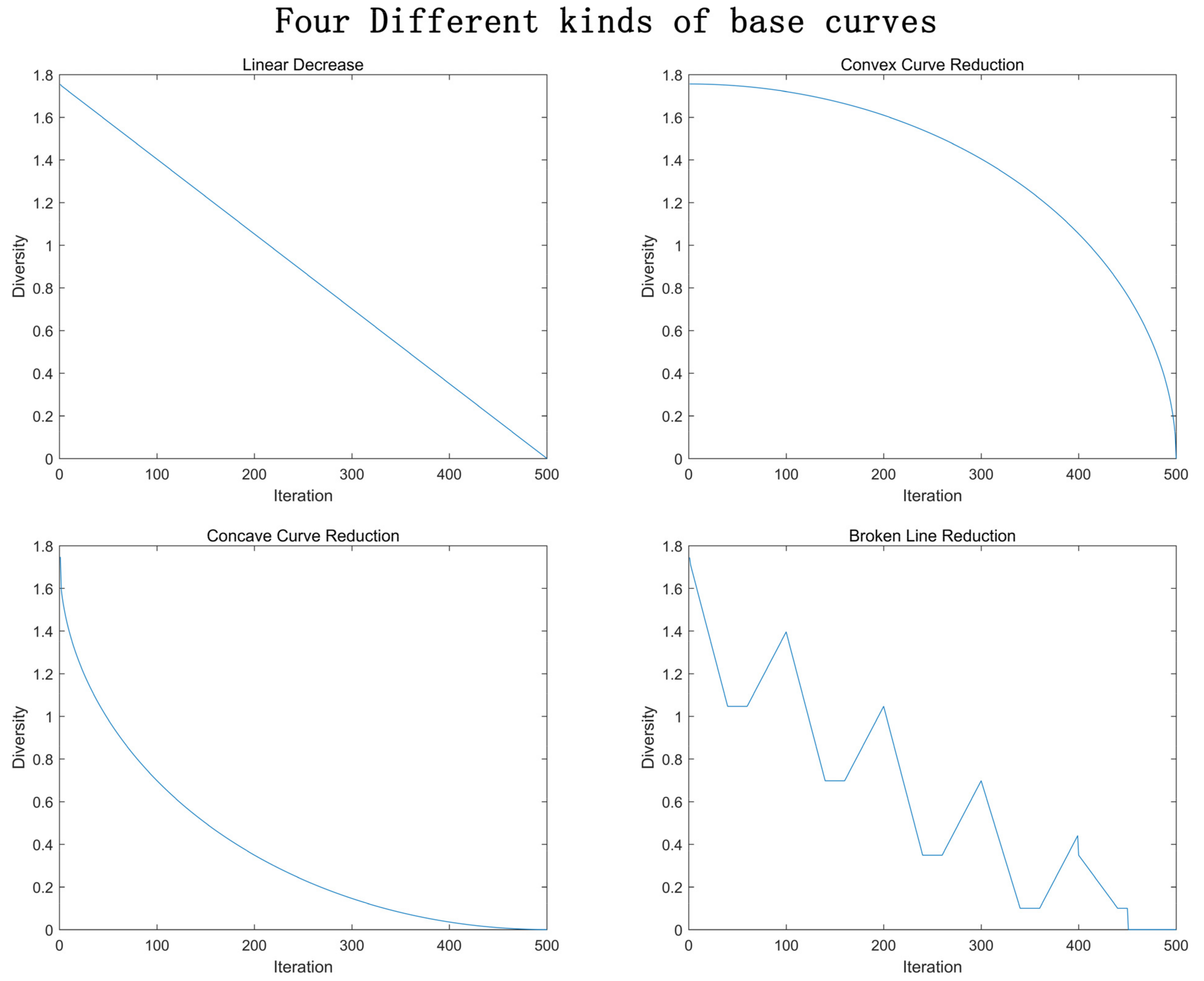

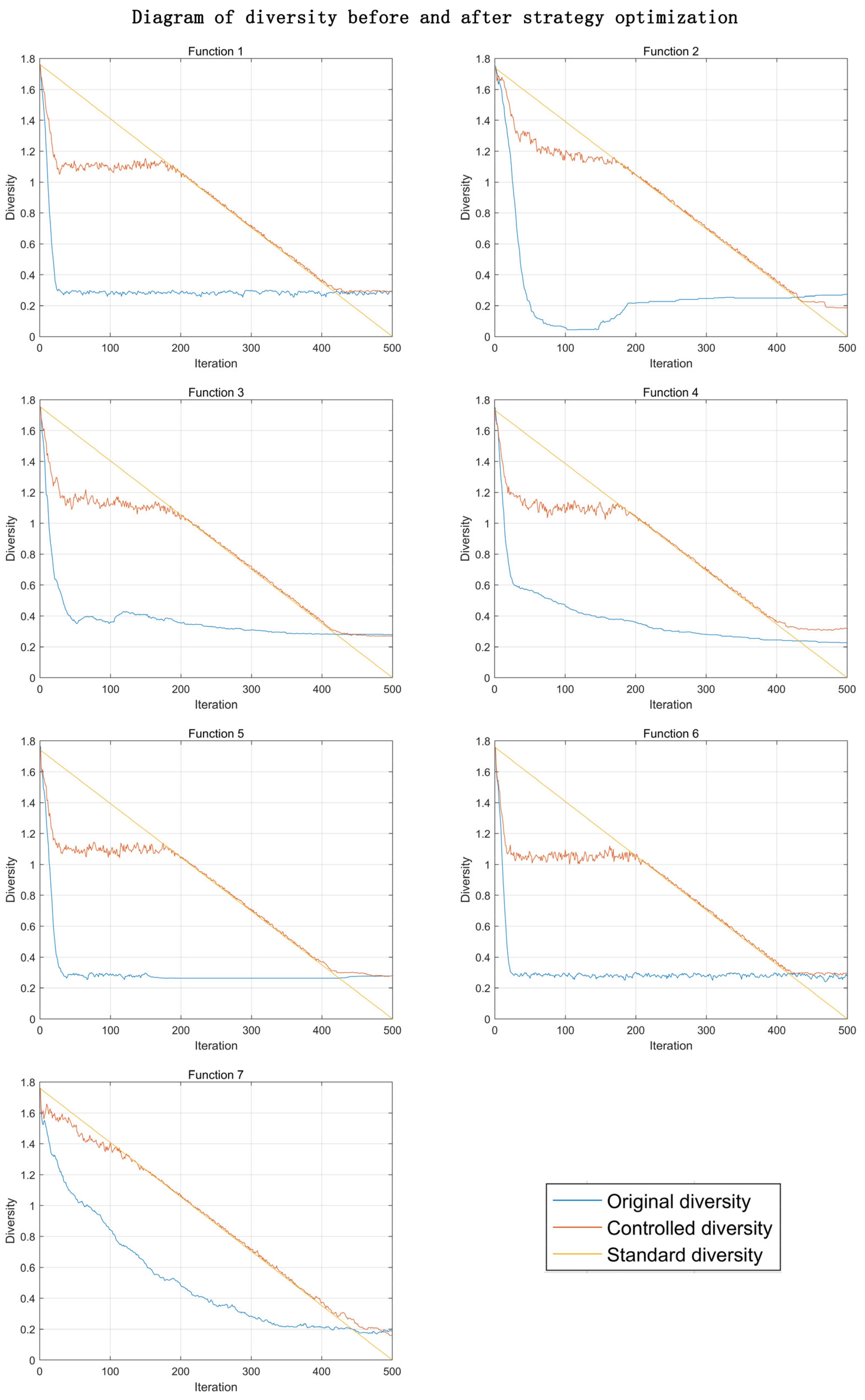

3.2. The Swarm Intelligence Algorithm Control Method Based on Dimension Entropy

Algorithm 1 Diversity control

Input: the dimension entropy of the population in this iteration; the expected entropy of this iteration; Coord: the population

Output: the new population

- 1: if the dimension entropy is less than the expected entropy

- 2: delete a redundant particle;

- 3: add a new particle;

- 4: else if the dimension entropy is greater than the expected entropy

- 5: delete a worst particle;

- 6: copy a redundant particle;

- 7: end
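The control step of Algorithm 1 can be sketched in Python as follows. This extract does not say how a "redundant" or a "worst" particle is chosen, so the sketch makes explicit assumptions: the redundant particle is the one with the smallest distance to any other particle, the worst particle has the largest fitness value (minimization), and a new particle is drawn uniformly within shared bounds. All function and parameter names are hypothetical.

```python
import math
import random
from typing import Callable, List

Particle = List[float]


def most_redundant(pop: List[Particle]) -> int:
    """Index of the particle closest to some other particle (assumed meaning of 'redundant')."""
    best_index, best_distance = 0, math.inf
    for i, p in enumerate(pop):
        for j, q in enumerate(pop):
            if i != j:
                distance = math.dist(p, q)
                if distance < best_distance:
                    best_index, best_distance = i, distance
    return best_index


def diversity_control(pop: List[Particle], entropy: float, expected: float,
                      fitness: Callable[[Particle], float],
                      lower: float, upper: float,
                      rng: random.Random) -> List[Particle]:
    """Return a new population of the same size, nudged toward the expected entropy."""
    new_pop = [list(p) for p in pop]  # work on a copy; the input is not modified
    r = most_redundant(new_pop)
    if entropy < expected:
        # Diversity too low: drop a redundant particle, add a fresh random one.
        n_dims = len(new_pop[r])
        del new_pop[r]
        new_pop.append([rng.uniform(lower, upper) for _ in range(n_dims)])
    elif entropy > expected:
        # Diversity too high: drop the worst particle, duplicate a redundant one.
        redundant = list(new_pop[r])
        worst = max(range(len(new_pop)), key=lambda i: fitness(new_pop[i]))
        del new_pop[worst]
        new_pop.append(redundant)
    return new_pop
```

Each branch removes one particle and adds one, so the population size stays constant; that invariant is also an assumption of this sketch.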

4. Experiment and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Symbol | Definition |
|---|---|
| | Variable |
| | Dimension number |
| | Interval number |
| | Total number of intervals |
| | Total number of dimensions |
| | The position of the particle on the dimension |
| | Average value of the population on the dimension |
| | Fraction of the population that belongs to interval m on the dimension |
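The definitions above suggest an entropy computed per dimension from interval occupancy. The following is a minimal sketch under that assumption, not the paper's exact formula: the range of each dimension is split into equal-width intervals, the fraction of particles in each interval is treated as a probability, and the Shannon entropy is averaged over all dimensions. The function name `dimension_entropy`, the shared bounds `lower` and `upper`, and the lack of any normalization are assumptions made for illustration.

```python
import math
from typing import Sequence


def dimension_entropy(population: Sequence[Sequence[float]],
                      lower: float, upper: float,
                      n_intervals: int) -> float:
    """Mean over dimensions of the Shannon entropy of interval occupancy."""
    n_particles = len(population)
    n_dims = len(population[0])
    width = (upper - lower) / n_intervals
    total = 0.0
    for d in range(n_dims):
        counts = [0] * n_intervals
        for particle in population:
            # Interval index on dimension d, clamped into [0, n_intervals - 1]
            # so that coordinates on or outside the bounds still land in a bin.
            m = int((particle[d] - lower) / width)
            m = max(0, min(m, n_intervals - 1))
            counts[m] += 1
        entropy_d = 0.0
        for count in counts:
            if count > 0:
                fraction = count / n_particles  # share of particles in this interval
                entropy_d -= fraction * math.log(fraction)
        total += entropy_d
    return total / n_dims
```

With this definition a fully collapsed population has entropy 0, and a population spread evenly over all intervals reaches the maximum `math.log(n_intervals)`.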

| Time | |||||||

|---|---|---|---|---|---|---|---|

| 1 | 0.782 | 0.692 | 0.997 | 0.983 | 0.967 | 0.779 | 0.977 |

| 2 | 0.925 | 0.855 | 1.000 | 0.980 | 0.950 | 0.889 | 0.984 |

| 3 | 0.708 | 0.889 | 0.997 | 0.973 | 0.947 | 0.840 | 0.976 |

| 4 | 0.715 | 0.642 | 0.997 | 0.978 | 0.942 | 0.758 | 0.977 |

| 5 | 0.821 | 0.714 | 0.998 | 0.986 | 0.961 | 0.747 | 0.989 |

| 6 | 0.931 | 0.852 | 0.992 | 0.942 | 0.951 | 0.875 | 0.979 |

| 7 | 0.949 | 0.618 | 1.000 | 0.990 | 0.978 | 0.907 | 0.980 |

| 8 | 0.925 | 0.844 | 0.998 | 0.989 | 0.975 | 0.907 | 0.980 |

| 9 | 0.799 | 0.699 | 0.996 | 0.976 | 0.952 | 0.805 | 0.984 |

| 10 | 0.818 | 0.712 | 0.937 | 0.982 | 0.953 | 0.807 | 0.972 |

| Mean | 0.837 | 0.752 | 0.991 | 0.978 | 0.958 | 0.831 | 0.980 |

| Rank | 5 | 7 | 1 | 3 | 4 | 6 | 2 |

| Num | Function Name | Property | Best Value |

|---|---|---|---|

| 1 | Sphere’s Function | U | 0 |

| 2 | Rosenbrock’s Function | M | 0 |

| 3 | Rastrigin’s Function | M | 0 |

| 4 | Griewank’s Function | M | 0 |

| 5 | Ackley’s Function | M | 0 |

| 6 | Schwefel’s Problem 2.22 | M | 0 |

| 7 | Schwefel’s Problem 1.2 | M | 0 |
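For reference, four of the functions listed above can be written from their standard textbook definitions. This is a sketch only: the search ranges and dimensions used in the experiments are not given in this extract, and the function names are chosen here for illustration.

```python
import math
from typing import Sequence


def sphere(x: Sequence[float]) -> float:
    """Unimodal; minimum 0 at the origin."""
    return sum(v * v for v in x)


def rosenbrock(x: Sequence[float]) -> float:
    """Minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def rastrigin(x: Sequence[float]) -> float:
    """Multimodal; minimum 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def ackley(x: Sequence[float]) -> float:
    """Multimodal; minimum 0 at the origin (up to floating-point rounding)."""
    n = len(x)
    mean_square = sum(v * v for v in x) / n
    mean_cosine = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_square))
            - math.exp(mean_cosine) + 20.0 + math.e)
```

All four have a best value of 0, matching the "Best Value" column of the table.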

| No | | Line | Convex Curve | Concave Curve | Broken Line |
|---|---|---|---|---|---|
| 1 | Min | 9.47 × 10^−57 | 3.26 × 10^−57 | 6.86 × 10^−58 | 6.69 × 10^−58 |
| | Max | 3.45 × 10^−51 | 6.43 × 10^−51 | 2.48 × 10^2 | 5.81 × 10^−48 |
| | Mean | 1.93 × 10^−52 | 3.51 × 10^−52 | 8.27 × 10^0 | 1.94 × 10^−49 |
| | DimEnt | 0.820 | 1.039 | 0.574 | 0.756 |
| 2 | Min | 8.83 × 10^−1 | 9.40 × 10^−1 | 9.00 × 10^−1 | 9.45 × 10^−1 |
| | Max | 4.80 × 10^1 | 2.26 × 10^2 | 1.69 × 10^5 | 6.99 × 10^2 |
| | Mean | 8.30 × 10^0 | 2.23 × 10^1 | 5.65 × 10^3 | 5.65 × 10^1 |
| | DimEnt | 0.831 | 1.019 | 0.596 | 0.744 |
| 3 | Min | 8.53 × 10^−14 | 9.95 × 10^−1 | 0.00 × 10^0 | 8.27 × 10^−12 |
| | Max | 6.81 × 10^0 | 6.96 × 10^0 | 2.22 × 10^2 | 4.97 × 10^0 |
| | Mean | 1.94 × 10^0 | 2.89 × 10^0 | 2.00 × 10^1 | 2.04 × 10^0 |
| | DimEnt | 0.834 | 1.039 | 0.626 | 0.771 |
| 4 | Min | 1.23 × 10^−2 | 1.23 × 10^−2 | 1.97 × 10^−2 | 1.23 × 10^−2 |
| | Max | 1.26 × 10^−1 | 1.43 × 10^−1 | 1.65 × 10^−1 | 1.68 × 10^1 |
| | Mean | 6.08 × 10^−2 | 5.83 × 10^−2 | 6.69 × 10^−2 | 6.15 × 10^−1 |
| | DimEnt | 0.792 | 1.012 | 0.540 | 0.750 |
| 5 | Min | 4.44 × 10^−15 | 4.44 × 10^−15 | 8.88 × 10^−16 | 4.44 × 10^−15 |
| | Max | 1.21 × 10^1 | 4.44 × 10^−15 | 1.42 × 10^1 | 4.44 × 10^−15 |
| | Mean | 4.03 × 10^−1 | 4.44 × 10^−15 | 8.08 × 10^−1 | 4.44 × 10^−15 |
| | DimEnt | 0.830 | 1.036 | 0.585 | 0.753 |
| 6 | Min | 3.98 × 10^−33 | 1.33 × 10^−32 | 1.09 × 10^−32 | 3.11 × 10^−32 |
| | Max | 1.20 × 10^−27 | 3.46 × 10^−29 | 1.32 × 10^1 | 4.30 × 10^−29 |
| | Mean | 4.34 × 10^−29 | 3.78 × 10^−30 | 1.71 × 10^0 | 5.57 × 10^−30 |
| | DimEnt | 0.818 | 1.047 | 0.664 | 0.751 |
| 7 | Min | 7.57 × 10^−1 | 6.30 × 10^−1 | 2.19 × 10^−2 | 1.60 × 10^−1 |
| | Max | 2.18 × 10^3 | 3.08 × 10^1 | 5.34 × 10^3 | 1.23 × 10^3 |
| | Mean | 9.04 × 10^1 | 7.58 × 10^0 | 5.60 × 10^2 | 5.27 × 10^1 |
| | DimEnt | 1.003 | 1.188 | 0.886 | 0.964 |

| Num | Function Name | Property | Best Value |

|---|---|---|---|

| F01 | Shifted and Rotated Bent Cigar Function | U | 100 |

| F03 | Shifted and Rotated Zakharov Function | M | 300 |

| F04 | Shifted and Rotated Rosenbrock’s Function | M | 400 |

| F05 | Shifted and Rotated Rastrigin’s Function | M | 500 |

| F06 | Shifted and Rotated Expanded Scaffer’s F6 Function | M | 600 |

| F07 | Shifted and Rotated Lunacek Bi_Rastrigin Function | M | 700 |

| F08 | Shifted and Rotated Non-Continuous Rastrigin’s Function | M | 800 |

| F09 | Shifted and Rotated Levy Function | M | 900 |

| F10 | Shifted and Rotated Schwefel’s Function | M | 1000 |

| F11 | Hybrid Function 1 (N = 3) | H | 1100 |

| F12 | Hybrid Function 2 (N = 3) | H | 1200 |

| F13 | Hybrid Function 3 (N = 3) | H | 1300 |

| F14 | Hybrid Function 4 (N = 4) | H | 1400 |

| F15 | Hybrid Function 5 (N = 4) | H | 1500 |

| F16 | Hybrid Function 6 (N = 4) | H | 1600 |

| F17 | Hybrid Function 6 (N = 5) | H | 1700 |

| F18 | Hybrid Function 6 (N = 5) | H | 1800 |

| F19 | Hybrid Function 6 (N = 5) | H | 1900 |

| F20 | Hybrid Function 6 (N = 6) | H | 2000 |

| F21 | Composition Function 1 | C | 2100 |

| F22 | Composition Function 2 | C | 2200 |

| F23 | Composition Function 3 | C | 2300 |

| F24 | Composition Function 4 | C | 2400 |

| F25 | Composition Function 5 | C | 2500 |

| F26 | Composition Function 6 | C | 2600 |

| F27 | Composition Function 7 | C | 2700 |

| F28 | Composition Function 8 | C | 2800 |

| F29 | Composition Function 9 | C | 2900 |

| F30 | Composition Function 10 | C | 3000 |

| Algorithm | Parameter Setting |

|---|---|

| PSO | , |

| fun | PSO(Dim = 10) | | | | PSOG(Dim = 10) | | | |
|---|---|---|---|---|---|---|---|---|
| | min | max | mean | std | min | max | mean | std |
| F01 | 1.02 × 10^2 | 2.54 × 10^3 | 1.16 × 10^3 | 8.87 × 10^2 | 1.01 × 10^2 | 1.53 × 10^3 | 5.89 × 10^2 | 4.20 × 10^2 |
| F03 | 3.00 × 10^2 | 3.00 × 10^2 | 3.00 × 10^2 | 0.00 × 10^0 | 3.00 × 10^2 | 3.00 × 10^2 | 3.00 × 10^2 | 0.00 × 10^0 |
| F04 | 4.00 × 10^2 | 4.35 × 10^2 | 4.26 × 10^2 | 1.46 × 10^1 | 4.00 × 10^2 | 4.35 × 10^2 | 4.09 × 10^2 | 1.36 × 10^1 |
| F05 | 5.07 × 10^2 | 5.34 × 10^2 | 5.18 × 10^2 | 6.35 × 10^0 | 5.04 × 10^2 | 5.17 × 10^2 | 5.13 × 10^2 | 3.95 × 10^0 |
| F06 | 6.00 × 10^2 | 6.07 × 10^2 | 6.00 × 10^2 | 1.22 × 10^0 | 6.00 × 10^2 | 6.00 × 10^2 | 6.00 × 10^2 | 0.00 × 10^0 |
| F07 | 7.13 × 10^2 | 7.38 × 10^2 | 7.21 × 10^2 | 5.54 × 10^0 | 7.12 × 10^2 | 7.21 × 10^2 | 7.18 × 10^2 | 2.12 × 10^0 |
| F08 | 8.06 × 10^2 | 8.36 × 10^2 | 8.16 × 10^2 | 6.94 × 10^0 | 8.07 × 10^2 | 8.21 × 10^2 | 8.14 × 10^2 | 4.62 × 10^0 |
| F09 | 9.00 × 10^2 | 9.00 × 10^2 | 9.00 × 10^2 | 1.63 × 10^−2 | 9.00 × 10^2 | 9.00 × 10^2 | 9.00 × 10^2 | 0.00 × 10^0 |
| F10 | 1.13 × 10^3 | 1.85 × 10^3 | 1.48 × 10^3 | 1.96 × 10^2 | 1.13 × 10^3 | 1.45 × 10^3 | 1.31 × 10^3 | 9.45 × 10^1 |
| F11 | 1.10 × 10^3 | 1.14 × 10^3 | 1.12 × 10^3 | 8.54 × 10^0 | 1.10 × 10^3 | 1.12 × 10^3 | 1.11 × 10^3 | 4.58 × 10^0 |
| F12 | 2.05 × 10^3 | 4.35 × 10^5 | 2.75 × 10^4 | 7.79 × 10^4 | 1.88 × 10^3 | 2.13 × 10^4 | 9.67 × 10^3 | 6.94 × 10^3 |
| F13 | 1.34 × 10^3 | 9.10 × 10^3 | 3.39 × 10^3 | 2.16 × 10^3 | 1.31 × 10^3 | 3.43 × 10^3 | 2.06 × 10^3 | 6.48 × 10^2 |
| F14 | 1.43 × 10^3 | 1.77 × 10^3 | 1.49 × 10^3 | 6.32 × 10^1 | 1.44 × 10^3 | 1.47 × 10^3 | 1.45 × 10^3 | 7.40 × 10^0 |
| F15 | 1.51 × 10^3 | 1.76 × 10^3 | 1.56 × 10^3 | 6.06 × 10^1 | 1.51 × 10^3 | 1.53 × 10^3 | 1.52 × 10^3 | 5.95 × 10^0 |
| F16 | 1.60 × 10^3 | 1.86 × 10^3 | 1.72 × 10^3 | 6.14 × 10^1 | 1.60 × 10^3 | 1.72 × 10^3 | 1.64 × 10^3 | 5.27 × 10^1 |
| F17 | 1.73 × 10^3 | 1.78 × 10^3 | 1.75 × 10^3 | 1.39 × 10^1 | 1.71 × 10^3 | 1.75 × 10^3 | 1.73 × 10^3 | 8.66 × 10^0 |
| F18 | 1.84 × 10^3 | 1.29 × 10^4 | 5.07 × 10^3 | 2.91 × 10^3 | 1.93 × 10^3 | 5.67 × 10^3 | 3.66 × 10^3 | 1.04 × 10^3 |
| F19 | 1.90 × 10^3 | 1.96 × 10^3 | 1.92 × 10^3 | 1.07 × 10^1 | 1.91 × 10^3 | 1.92 × 10^3 | 1.91 × 10^3 | 3.93 × 10^0 |
| F20 | 2.01 × 10^3 | 2.20 × 10^3 | 2.07 × 10^3 | 5.31 × 10^1 | 2.00 × 10^3 | 2.04 × 10^3 | 2.03 × 10^3 | 7.21 × 10^0 |
| F21 | 2.20 × 10^3 | 2.20 × 10^3 | 2.20 × 10^3 | 2.67 × 10^−13 | 2.20 × 10^3 | 2.20 × 10^3 | 2.20 × 10^3 | 2.09 × 10^−13 |
| F22 | 2.30 × 10^3 | 2.30 × 10^3 | 2.30 × 10^3 | 2.39 × 10^−13 | 2.21 × 10^3 | 2.30 × 10^3 | 2.30 × 10^3 | 2.06 × 10^1 |
| F23 | 2.40 × 10^3 | 2.82 × 10^3 | 2.71 × 10^3 | 7.80 × 10^1 | 2.40 × 10^3 | 2.67 × 10^3 | 2.62 × 10^3 | 9.54 × 10^1 |
| F24 | 2.50 × 10^3 | 2.80 × 10^3 | 2.61 × 10^3 | 5.79 × 10^1 | 2.50 × 10^3 | 2.60 × 10^3 | 2.59 × 10^3 | 3.08 × 10^1 |
| F25 | 2.89 × 10^3 | 2.95 × 10^3 | 2.94 × 10^3 | 2.04 × 10^1 | 2.90 × 10^3 | 2.95 × 10^3 | 2.93 × 10^3 | 2.32 × 10^1 |
| F26 | 2.80 × 10^3 | 3.49 × 10^3 | 2.94 × 10^3 | 2.04 × 10^2 | 2.60 × 10^3 | 2.90 × 10^3 | 2.83 × 10^3 | 7.33 × 10^1 |
| F27 | 3.10 × 10^3 | 3.50 × 10^3 | 3.29 × 10^3 | 1.15 × 10^2 | 3.10 × 10^3 | 3.23 × 10^3 | 3.16 × 10^3 | 4.45 × 10^1 |
| F28 | 3.10 × 10^3 | 3.23 × 10^3 | 3.15 × 10^3 | 2.52 × 10^1 | 3.10 × 10^3 | 3.15 × 10^3 | 3.13 × 10^3 | 2.40 × 10^1 |
| F29 | 3.15 × 10^3 | 3.30 × 10^3 | 3.18 × 10^3 | 3.45 × 10^1 | 3.14 × 10^3 | 3.17 × 10^3 | 3.16 × 10^3 | 1.05 × 10^1 |
| F30 | 3.49 × 10^3 | 3.85 × 10^4 | 9.59 × 10^3 | 7.45 × 10^3 | 3.71 × 10^3 | 7.49 × 10^3 | 5.22 × 10^3 | 1.13 × 10^3 |
| count | 0 | | | | 24 | | | |

| fun | PSO(Dim = 30) | | | | PSOG(Dim = 30) | | | |
|---|---|---|---|---|---|---|---|---|
| | min | max | mean | std | min | max | mean | std |
| F01 | 1.00 × 10^2 | 1.22 × 10^9 | 1.37 × 10^8 | 3.26 × 10^8 | 1.00 × 10^2 | 1.01 × 10^2 | 1.00 × 10^2 | 2.95 × 10^−1 |
| F03 | 3.05 × 10^2 | 3.93 × 10^2 | 3.34 × 10^2 | 2.29 × 10^1 | 3.09 × 10^2 | 3.53 × 10^2 | 3.31 × 10^2 | 1.51 × 10^1 |
| F04 | 4.00 × 10^2 | 6.38 × 10^2 | 4.89 × 10^2 | 5.06 × 10^1 | 4.04 × 10^2 | 4.71 × 10^2 | 4.66 × 10^2 | 1.48 × 10^1 |
| F05 | 5.73 × 10^2 | 6.71 × 10^2 | 6.05 × 10^2 | 2.42 × 10^1 | 5.64 × 10^2 | 5.95 × 10^2 | 5.80 × 10^2 | 9.27 × 10^0 |
| F06 | 6.00 × 10^2 | 6.23 × 10^2 | 6.08 × 10^2 | 5.93 × 10^0 | 6.00 × 10^2 | 6.08 × 10^2 | 6.04 × 10^2 | 2.85 × 10^0 |
| F07 | 7.68 × 10^2 | 8.46 × 10^2 | 8.10 × 10^2 | 2.12 × 10^1 | 7.77 × 10^2 | 8.22 × 10^2 | 7.99 × 10^2 | 1.40 × 10^1 |
| F08 | 8.67 × 10^2 | 9.89 × 10^2 | 9.18 × 10^2 | 2.93 × 10^1 | 8.75 × 10^2 | 9.39 × 10^2 | 9.09 × 10^2 | 1.90 × 10^1 |
| F09 | 9.08 × 10^2 | 4.85 × 10^3 | 2.61 × 10^3 | 1.02 × 10^3 | 9.31 × 10^2 | 2.88 × 10^3 | 1.87 × 10^3 | 6.15 × 10^2 |
| F10 | 2.80 × 10^3 | 5.20 × 10^3 | 4.07 × 10^3 | 6.36 × 10^2 | 2.96 × 10^3 | 4.15 × 10^3 | 3.63 × 10^3 | 3.18 × 10^2 |
| F11 | 1.18 × 10^3 | 1.43 × 10^3 | 1.25 × 10^3 | 5.91 × 10^1 | 1.17 × 10^3 | 1.27 × 10^3 | 1.23 × 10^3 | 3.01 × 10^1 |
| F12 | 2.64 × 10^3 | 3.35 × 10^8 | 1.12 × 10^7 | 6.12 × 10^7 | 3.63 × 10^3 | 1.50 × 10^4 | 7.33 × 10^3 | 3.51 × 10^2 |
| F13 | 1.35 × 10^3 | 1.02 × 10^4 | 2.05 × 10^3 | 1.80 × 10^3 | 1.41 × 10^3 | 2.71 × 10^3 | 1.85 × 10^3 | 4.06 × 10^2 |
| F14 | 1.48 × 10^3 | 1.97 × 10^3 | 1.68 × 10^3 | 1.12 × 10^2 | 1.53 × 10^3 | 1.71 × 10^3 | 1.64 × 10^3 | 5.51 × 10^1 |
| F15 | 1.53 × 10^3 | 1.92 × 10^3 | 1.59 × 10^3 | 7.02 × 10^1 | 1.52 × 10^3 | 1.61 × 10^3 | 1.57 × 10^3 | 2.55 × 10^1 |
| F16 | 1.86 × 10^3 | 2.91 × 10^3 | 2.35 × 10^3 | 2.68 × 10^2 | 1.96 × 10^3 | 2.44 × 10^3 | 2.23 × 10^3 | 1.67 × 10^2 |
| F17 | 1.80 × 10^3 | 2.52 × 10^3 | 2.09 × 10^3 | 1.83 × 10^2 | 1.79 × 10^3 | 2.02 × 10^3 | 1.89 × 10^3 | 7.44 × 10^1 |
| F18 | 5.96 × 10^3 | 1.26 × 10^5 | 3.88 × 10^4 | 2.60 × 10^4 | 9.55 × 10^3 | 4.17 × 10^4 | 2.33 × 10^4 | 1.11 × 10^4 |
| F19 | 1.98 × 10^3 | 2.93 × 10^4 | 6.50 × 10^3 | 6.01 × 10^3 | 1.95 × 10^3 | 4.41 × 10^3 | 2.77 × 10^3 | 8.62 × 10^2 |
| F20 | 2.20 × 10^3 | 2.71 × 10^3 | 2.42 × 10^3 | 1.08 × 10^2 | 2.13 × 10^3 | 2.44 × 10^3 | 2.31 × 10^3 | 1.05 × 10^2 |
| F21 | 2.20 × 10^3 | 2.20 × 10^3 | 2.20 × 10^3 | 2.25 × 10^−1 | 2.25 × 10^3 | 2.25 × 10^3 | 2.25 × 10^3 | 4.67 × 10^−13 |
| F22 | 2.30 × 10^3 | 2.30 × 10^3 | 2.30 × 10^3 | 2.24 × 10^−1 | 2.35 × 10^3 | 2.35 × 10^3 | 2.35 × 10^3 | 4.55 × 10^−13 |
| F23 | 3.04 × 10^3 | 4.20 × 10^3 | 3.54 × 10^3 | 3.24 × 10^2 | 2.83 × 10^3 | 2.88 × 10^3 | 2.87 × 10^3 | 1.30 × 10^1 |
| F24 | 2.60 × 10^3 | 2.61 × 10^3 | 2.60 × 10^3 | 1.55 × 10^0 | 2.60 × 10^3 | 2.60 × 10^3 | 2.60 × 10^3 | 4.43 × 10^−13 |
| F25 | 2.90 × 10^3 | 3.05 × 10^3 | 2.94 × 10^3 | 4.21 × 10^1 | 2.90 × 10^3 | 2.97 × 10^3 | 2.94 × 10^3 | 2.79 × 10^1 |
| F26 | 2.80 × 10^3 | 2.90 × 10^3 | 2.80 × 10^3 | 1.83 × 10^1 | 2.80 × 10^3 | 2.80 × 10^3 | 2.80 × 10^3 | 5.00 × 10^−13 |
| F27 | 3.78 × 10^3 | 5.06 × 10^3 | 4.39 × 10^3 | 3.41 × 10^2 | 3.38 × 10^3 | 3.59 × 10^3 | 3.51 × 10^3 | 5.25 × 10^1 |
| F28 | 3.17 × 10^3 | 3.95 × 10^3 | 3.31 × 10^3 | 1.39 × 10^2 | 3.17 × 10^3 | 3.28 × 10^3 | 3.24 × 10^3 | 3.29 × 10^1 |
| F29 | 3.35 × 10^3 | 4.11 × 10^3 | 3.59 × 10^3 | 2.12 × 10^2 | 3.29 × 10^3 | 3.65 × 10^3 | 3.49 × 10^3 | 1.10 × 10^2 |
| F30 | 4.19 × 10^3 | 1.88 × 10^5 | 1.60 × 10^4 | 3.39 × 10^4 | 4.44 × 10^3 | 1.60 × 10^4 | 9.79 × 10^3 | 3.31 × 10^3 |
| count | 2 | | | | 24 | | | |

| fun | BBPSO(Dim = 10) | | | | BBPSOG(Dim = 10) | | | |
|---|---|---|---|---|---|---|---|---|
| | min | max | mean | std | min | max | mean | std |
| F01 | 1.28 × 10^2 | 2.54 × 10^3 | 1.28 × 10^3 | 6.85 × 10^2 | 1.50 × 10^2 | 2.12 × 10^3 | 1.21 × 10^3 | 5.45 × 10^2 |
| F03 | 3.00 × 10^2 | 3.00 × 10^2 | 3.00 × 10^2 | 0.00 × 10^0 | 3.00 × 10^2 | 3.00 × 10^2 | 3.00 × 10^2 | 0.00 × 10^0 |
| F04 | 4.00 × 10^2 | 5.21 × 10^2 | 4.30 × 10^2 | 2.23 × 10^1 | 4.00 × 10^2 | 4.35 × 10^2 | 4.17 × 10^2 | 1.65 × 10^1 |
| F05 | 5.04 × 10^2 | 5.27 × 10^2 | 5.13 × 10^2 | 5.83 × 10^0 | 5.05 × 10^2 | 5.12 × 10^2 | 5.09 × 10^2 | 2.08 × 10^0 |
| F06 | 6.00 × 10^2 | 6.01 × 10^2 | 6.00 × 10^2 | 1.76 × 10^−1 | 6.00 × 10^2 | 6.00 × 10^2 | 6.00 × 10^2 | 3.69 × 10^−14 |
| F07 | 7.08 × 10^2 | 7.26 × 10^2 | 7.18 × 10^2 | 4.38 × 10^0 | 7.13 × 10^2 | 7.22 × 10^2 | 7.18 × 10^2 | 2.75 × 10^0 |
| F08 | 8.05 × 10^2 | 8.22 × 10^2 | 8.12 × 10^2 | 4.40 × 10^0 | 8.05 × 10^2 | 8.13 × 10^2 | 8.09 × 10^2 | 2.63 × 10^0 |
| F09 | 9.00 × 10^2 | 9.02 × 10^2 | 9.00 × 10^2 | 4.54 × 10^−1 | 9.00 × 10^2 | 9.00 × 10^2 | 9.00 × 10^2 | 0.00 × 10^0 |
| F10 | 1.03 × 10^3 | 1.77 × 10^3 | 1.34 × 10^3 | 2.04 × 10^2 | 1.04 × 10^3 | 1.35 × 10^3 | 1.17 × 10^3 | 1.10 × 10^2 |
| F11 | 1.10 × 10^3 | 1.12 × 10^3 | 1.11 × 10^3 | 5.17 × 10^0 | 1.10 × 10^3 | 1.11 × 10^3 | 1.10 × 10^3 | 1.91 × 10^0 |
| F12 | 2.40 × 10^3 | 4.36 × 10^5 | 3.59 × 10^4 | 7.77 × 10^4 | 3.97 × 10^3 | 2.58 × 10^4 | 1.19 × 10^4 | 5.72 × 10^3 |
| F13 | 1.31 × 10^3 | 9.37 × 10^3 | 4.41 × 10^3 | 2.86 × 10^3 | 1.32 × 10^3 | 4.04 × 10^3 | 2.27 × 10^3 | 9.62 × 10^2 |
| F14 | 1.43 × 10^3 | 1.54 × 10^3 | 1.46 × 10^3 | 2.87 × 10^1 | 1.43 × 10^3 | 1.44 × 10^3 | 1.43 × 10^3 | 5.87 × 10^0 |
| F15 | 1.51 × 10^3 | 1.69 × 10^3 | 1.59 × 10^3 | 5.23 × 10^1 | 1.51 × 10^3 | 1.59 × 10^3 | 1.54 × 10^3 | 2.34 × 10^1 |
| F16 | 1.60 × 10^3 | 1.81 × 10^3 | 1.68 × 10^3 | 7.19 × 10^1 | 1.60 × 10^3 | 1.64 × 10^3 | 1.61 × 10^3 | 1.26 × 10^1 |
| F17 | 1.71 × 10^3 | 1.85 × 10^3 | 1.75 × 10^3 | 3.17 × 10^1 | 1.72 × 10^3 | 1.74 × 10^3 | 1.73 × 10^3 | 5.67 × 10^0 |
| F18 | 1.86 × 10^3 | 2.34 × 10^4 | 6.48 × 10^3 | 5.58 × 10^3 | 2.09 × 10^3 | 5.37 × 10^3 | 3.03 × 10^3 | 8.68 × 10^2 |
| F19 | 1.90 × 10^3 | 2.12 × 10^3 | 1.94 × 10^3 | 5.16 × 10^1 | 1.90 × 10^3 | 1.92 × 10^3 | 1.91 × 10^3 | 4.37 × 10^0 |
| F20 | 2.00 × 10^3 | 2.08 × 10^3 | 2.03 × 10^3 | 1.79 × 10^1 | 2.00 × 10^3 | 2.04 × 10^3 | 2.03 × 10^3 | 1.05 × 10^1 |
| F21 | 2.20 × 10^3 | 2.20 × 10^3 | 2.20 × 10^3 | 2.53 × 10^−13 | 2.25 × 10^3 | 2.27 × 10^3 | 2.26 × 10^3 | 7.21 × 10^0 |
| F22 | 2.21 × 10^3 | 2.30 × 10^3 | 2.30 × 10^3 | 1.70 × 10^1 | 2.24 × 10^3 | 2.39 × 10^3 | 2.35 × 10^3 | 4.83 × 10^1 |
| F23 | 2.65 × 10^3 | 2.71 × 10^3 | 2.68 × 10^3 | 1.31 × 10^1 | 2.65 × 10^3 | 2.67 × 10^3 | 2.67 × 10^3 | 5.07 × 10^0 |
| F24 | 2.50 × 10^3 | 2.82 × 10^3 | 2.74 × 10^3 | 1.21 × 10^2 | 2.50 × 10^3 | 2.81 × 10^3 | 2.72 × 10^3 | 1.39 × 10^2 |
| F25 | 2.89 × 10^3 | 2.97 × 10^3 | 2.93 × 10^3 | 2.54 × 10^1 | 2.89 × 10^3 | 2.94 × 10^3 | 2.91 × 10^3 | 1.86 × 10^1 |
| F26 | 2.90 × 10^3 | 3.62 × 10^3 | 3.21 × 10^3 | 2.42 × 10^2 | 2.60 × 10^3 | 3.37 × 10^3 | 3.00 × 10^3 | 1.92 × 10^2 |
| F27 | 3.14 × 10^3 | 3.31 × 10^3 | 3.17 × 10^3 | 4.30 × 10^1 | 3.12 × 10^3 | 3.15 × 10^3 | 3.14 × 10^3 | 5.87 × 10^0 |
| F28 | 3.10 × 10^3 | 3.37 × 10^3 | 3.18 × 10^3 | 6.51 × 10^1 | 3.10 × 10^3 | 3.15 × 10^3 | 3.13 × 10^3 | 2.50 × 10^1 |
| F29 | 3.14 × 10^3 | 3.29 × 10^3 | 3.18 × 10^3 | 3.12 × 10^1 | 3.14 × 10^3 | 3.17 × 10^3 | 3.16 × 10^3 | 7.45 × 10^0 |
| F30 | 3.97 × 10^3 | 2.32 × 10^5 | 1.58 × 10^4 | 4.10 × 10^4 | 4.66 × 10^3 | 1.03 × 10^4 | 7.68 × 10^3 | 1.52 × 10^3 |
| count | 2 | | | | 22 | | | |

| fun | BBPSO(Dim = 30) | | | | BBPSOG(Dim = 30) | | | |
|---|---|---|---|---|---|---|---|---|
| | min | max | mean | std | min | max | mean | std |
| F01 | 1.00 × 10^2 | 5.10 × 10^9 | 1.58 × 10^9 | 1.52 × 10^9 | 1.00 × 10^2 | 2.91 × 10^3 | 1.22 × 10^3 | 1.41 × 10^3 |
| F03 | 9.72 × 10^3 | 3.63 × 10^4 | 2.08 × 10^4 | 6.19 × 10^3 | 6.85 × 10^3 | 2.74 × 10^4 | 2.05 × 10^4 | 5.65 × 10^3 |
| F04 | 4.06 × 10^2 | 9.89 × 10^2 | 6.01 × 10^2 | 1.48 × 10^2 | 4.63 × 10^2 | 4.76 × 10^2 | 4.69 × 10^2 | 4.71 × 10^0 |
| F05 | 5.52 × 10^2 | 7.29 × 10^2 | 6.31 × 10^2 | 3.50 × 10^1 | 5.51 × 10^2 | 5.91 × 10^2 | 5.76 × 10^2 | 1.04 × 10^1 |
| F06 | 6.00 × 10^2 | 6.26 × 10^2 | 6.06 × 10^2 | 5.35 × 10^0 | 6.00 × 10^2 | 6.01 × 10^2 | 6.00 × 10^2 | 2.04 × 10^−1 |
| F07 | 7.72 × 10^2 | 9.96 × 10^2 | 8.53 × 10^2 | 5.20 × 10^1 | 7.81 × 10^2 | 8.34 × 10^2 | 8.16 × 10^2 | 1.54 × 10^1 |
| F08 | 8.69 × 10^2 | 1.02 × 10^3 | 9.28 × 10^2 | 3.52 × 10^1 | 8.53 × 10^2 | 8.85 × 10^2 | 8.71 × 10^2 | 1.00 × 10^1 |
| F09 | 1.39 × 10^3 | 1.06 × 10^4 | 2.93 × 10^3 | 1.90 × 10^3 | 9.16 × 10^2 | 1.22 × 10^3 | 1.03 × 10^3 | 9.76 × 10^1 |
| F10 | 2.63 × 10^3 | 5.80 × 10^3 | 4.24 × 10^3 | 7.55 × 10^2 | 2.76 × 10^3 | 3.82 × 10^3 | 3.40 × 10^3 | 2.87 × 10^2 |
| F11 | 1.21 × 10^3 | 1.97 × 10^3 | 1.43 × 10^3 | 1.49 × 10^2 | 1.18 × 10^3 | 1.30 × 10^3 | 1.25 × 10^3 | 3.68 × 10^1 |
| F12 | 2.25 × 10^4 | 5.70 × 10^8 | 5.68 × 10^7 | 1.31 × 10^8 | 6.28 × 10^3 | 9.46 × 10^4 | 3.70 × 10^4 | 2.61 × 10^4 |
| F13 | 1.92 × 10^3 | 9.56 × 10^5 | 4.38 × 10^4 | 1.73 × 10^5 | 3.58 × 10^3 | 1.03 × 10^4 | 6.82 × 10^3 | 1.86 × 10^3 |
| F14 | 1.46 × 10^3 | 1.38 × 10^6 | 4.90 × 10^4 | 2.51 × 10^5 | 1.50 × 10^3 | 1.64 × 10^3 | 1.55 × 10^3 | 4.16 × 10^1 |
| F15 | 1.88 × 10^3 | 3.36 × 10^4 | 9.47 × 10^3 | 8.20 × 10^3 | 1.83 × 10^3 | 3.81 × 10^3 | 2.37 × 10^3 | 4.74 × 10^2 |
| F16 | 1.83 × 10^3 | 3.09 × 10^3 | 2.53 × 10^3 | 3.53 × 10^2 | 1.97 × 10^3 | 2.53 × 10^3 | 2.28 × 10^3 | 1.73 × 10^2 |
| F17 | 1.89 × 10^3 | 2.51 × 10^3 | 2.07 × 10^3 | 1.41 × 10^2 | 1.87 × 10^3 | 2.06 × 10^3 | 1.95 × 10^3 | 5.21 × 10^1 |
| F18 | 2.58 × 10^4 | 7.94 × 10^5 | 1.65 × 10^5 | 1.55 × 10^5 | 2.09 × 10^4 | 1.25 × 10^5 | 7.43 × 10^4 | 2.93 × 10^4 |
| F19 | 2.02 × 10^3 | 4.68 × 10^4 | 1.18 × 10^4 | 1.19 × 10^4 | 2.00 × 10^3 | 1.23 × 10^4 | 5.57 × 10^3 | 3.89 × 10^3 |
| F20 | 2.09 × 10^3 | 2.57 × 10^3 | 2.30 × 10^3 | 1.22 × 10^2 | 2.09 × 10^3 | 2.29 × 10^3 | 2.21 × 10^3 | 6.24 × 10^1 |
| F21 | 2.10 × 10^3 | 2.82 × 10^3 | 2.25 × 10^3 | 1.33 × 10^2 | 2.25 × 10^3 | 2.25 × 10^3 | 2.25 × 10^3 | 4.30 × 10^−13 |
| F22 | 2.26 × 10^3 | 2.40 × 10^3 | 2.31 × 10^3 | 3.30 × 10^1 | 2.35 × 10^3 | 2.35 × 10^3 | 2.35 × 10^3 | 4.55 × 10^−13 |
| F23 | 2.88 × 10^3 | 3.06 × 10^3 | 2.93 × 10^3 | 3.57 × 10^1 | 2.85 × 10^3 | 2.88 × 10^3 | 2.86 × 10^3 | 8.17 × 10^0 |
| F24 | 3.43 × 10^3 | 3.55 × 10^3 | 3.47 × 10^3 | 3.19 × 10^1 | 3.38 × 10^3 | 3.41 × 10^3 | 3.40 × 10^3 | 9.35 × 10^0 |
| F25 | 2.90 × 10^3 | 3.25 × 10^3 | 3.00 × 10^3 | 8.65 × 10^1 | 2.91 × 10^3 | 2.98 × 10^3 | 2.93 × 10^3 | 2.68 × 10^1 |
| F26 | 5.26 × 10^3 | 6.77 × 10^3 | 5.93 × 10^3 | 3.56 × 10^2 | 3.54 × 10^3 | 5.50 × 10^3 | 5.13 × 10^3 | 5.39 × 10^2 |
| F27 | 3.44 × 10^3 | 3.79 × 10^3 | 3.58 × 10^3 | 8.82 × 10^1 | 3.41 × 10^3 | 3.50 × 10^3 | 3.46 × 10^3 | 2.89 × 10^1 |
| F28 | 3.28 × 10^3 | 5.56 × 10^3 | 4.34 × 10^3 | 9.07 × 10^2 | 3.18 × 10^3 | 5.16 × 10^3 | 3.80 × 10^3 | 9.12 × 10^2 |
| F29 | 3.41 × 10^3 | 4.13 × 10^3 | 3.70 × 10^3 | 1.82 × 10^2 | 3.31 × 10^3 | 3.57 × 10^3 | 3.46 × 10^3 | 7.66 × 10^1 |
| F30 | 7.84 × 10^3 | 9.17 × 10^5 | 1.52 × 10^5 | 2.42 × 10^5 | 5.25 × 10^3 | 4.65 × 10^4 | 2.07 × 10^4 | 1.24 × 10^4 |
| count | 1 | | | | 27 | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, H.; Bei, F.; Shen, Y.; Sun, X.; Chen, Q. A Diversity Model Based on Dimension Entropy and Its Application to Swarm Intelligence Algorithm. Entropy 2021, 23, 397. https://doi.org/10.3390/e23040397
