How Perceived Motivations Influence User Stickiness and Sustainable Engagement with AI-Powered Chatbots—Unveiling the Pivotal Function of User Attitude

Abstract

1. Introduction

2. Theoretical Framework and Hypotheses Development

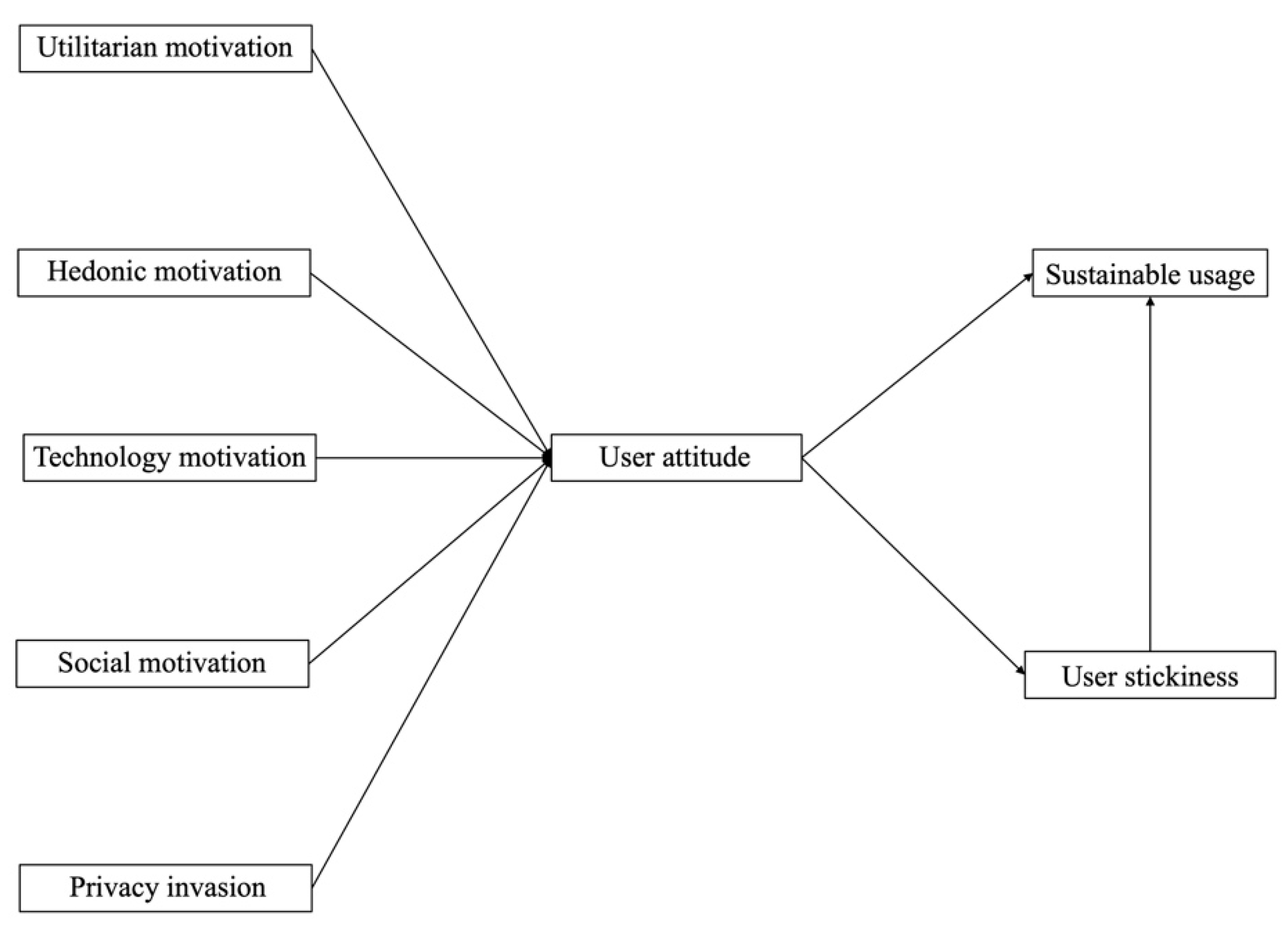

2.1. Research Model

2.2. Linking Utilitarian and Hedonic Motivations to User Attitude

2.3. Linking Technology and Social Motivations to User Attitude

2.4. Linking Privacy Invasion to User Attitude

2.5. Linking User Attitude and Stickiness to Sustainable Usage

3. Research Methodology

3.1. Subjects and Data Collection

3.2. Measurement

3.2.1. Utilitarian Motivation

3.2.2. Hedonic Motivation

3.2.3. Technology Motivation

3.2.4. Social Motivation

3.2.5. Privacy Invasion

3.2.6. User Attitude

3.2.7. Sustainable Usage

3.2.8. User Stickiness

4. Results

4.1. Measurement Model

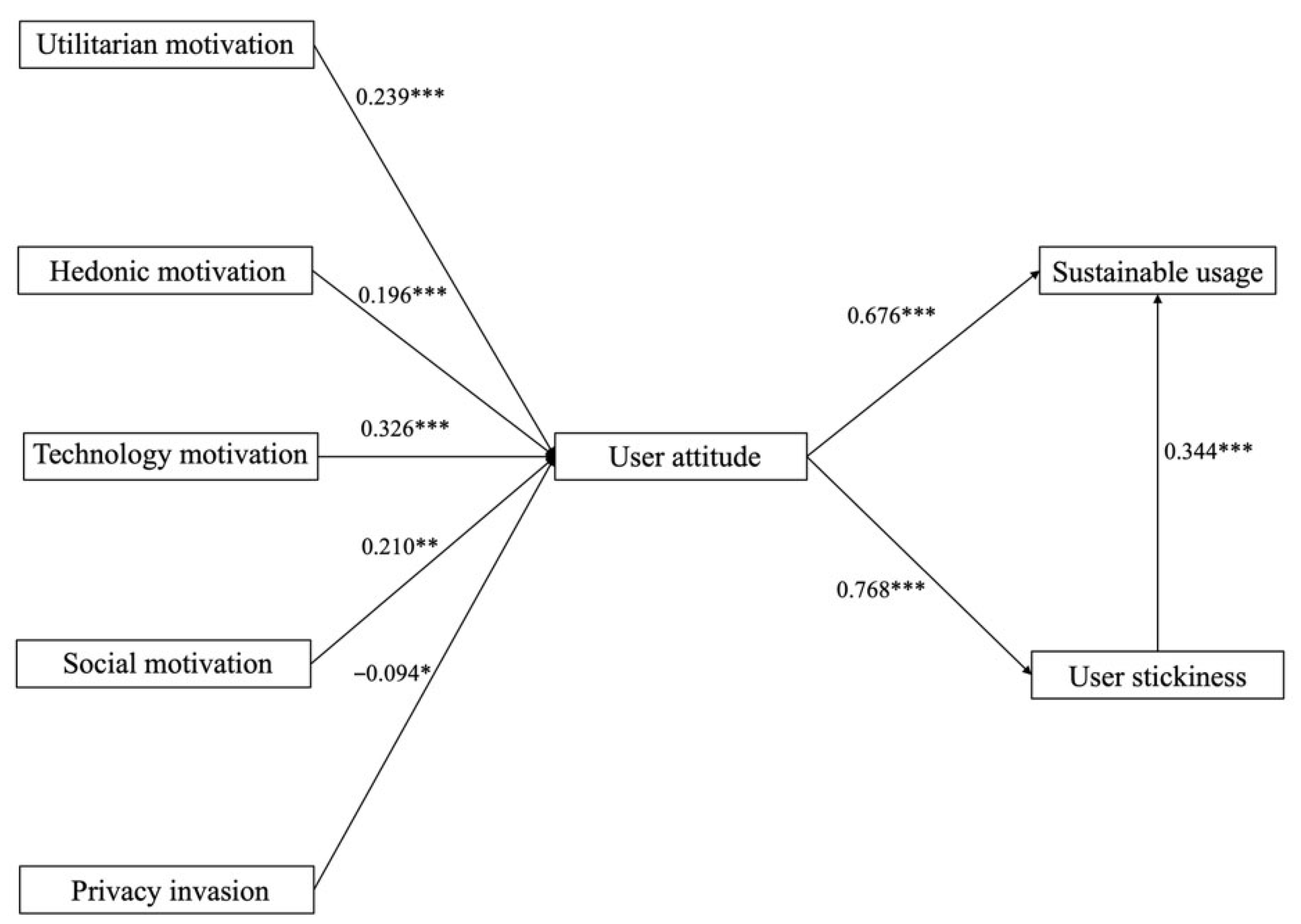

4.2. Structural Model

5. Discussion

6. Limitations and Implications for Future Research

6.1. Theoretical Implications

6.2. Practical Implications

6.3. Limitations and Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chaturvedi, R.; Verma, S. Opportunities and challenges of AI-driven customer service. In Artificial Intelligence in Customer Service: The Next Frontier for Personalized Engagement; Springer: Berlin/Heidelberg, Germany, 2023; pp. 33–71.
2. Foroughi, B.; Huy, T.Q.; Iranmanesh, M.; Ghobakhloo, M.; Rejeb, A.; Nikbin, D. Why users continue E-commerce chatbots? Insights from PLS-fsQCA-NCA approach. Serv. Ind. J. 2024, 45, 935–965.
3. Sundjaja, A.M.; Utomo, P.; Colline, F. The determinant factors of continuance use of customer service chatbot in Indonesia e-commerce: Extended expectation confirmation theory. J. Sci. Technol. Policy Manag. 2024, 16, 182–203.
4. Tsai, W.-H.S.; Liu, Y.; Chuan, C.-H. How chatbots’ social presence communication enhances consumer engagement: The mediating role of parasocial interaction and dialogue. J. Res. Interact. Mark. 2021, 15, 460–482.
5. Shahzad, M.F.; Xu, S.; An, X.; Javed, I. Assessing the impact of AI-chatbot service quality on user e-brand loyalty through chatbot user trust, experience and electronic word of mouth. J. Retail. Consum. Serv. 2024, 79, 103867.
6. Ashfaq, M.; Yun, J.; Yu, S.; Loureiro, S.M.C. I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telemat. Inform. 2020, 54, 101473.
7. Yang, J.; Chen, Y.-L.; Por, L.Y.; Ku, C.S. A systematic literature review of information security in chatbots. Appl. Sci. 2023, 13, 6355.
8. Rajaobelina, L.; Prom Tep, S.; Arcand, M.; Ricard, L. Creepiness: Its antecedents and impact on loyalty when interacting with a chatbot. Psychol. Mark. 2021, 38, 2339–2356.
9. Bhardwaj, V.; Khan, S.S.; Singh, G.; Patil, S.; Kuril, D.; Nahar, S. Risks for Conversational AI Security. Conversational Artif. Intell. 2024, 557–587.
10. Nguyen, T.H.; Le, X.C. Artificial intelligence-based chatbots–a motivation underlying sustainable development in banking: Standpoint of customer experience and behavioral outcomes. Cogent Bus. Manag. 2025, 12, 2443570.
11. Pang, H.; Ruan, Y.; Zhang, K. Deciphering technological contributions of visibility and interactivity to website atmospheric and customer stickiness in AI-driven websites: The pivotal function of online flow state. J. Retail. Consum. Serv. 2024, 78, 103795.
12. Rese, A.; Ganster, L.; Baier, D. Chatbots in retailers’ customer communication: How to measure their acceptance? J. Retail. Consum. Serv. 2020, 56, 102176.
13. Trivedi, J. Examining the customer experience of using banking chatbots and its impact on brand love: The moderating role of perceived risk. J. Internet Commer. 2019, 18, 91–111.
14. Pang, H.; Wang, Y. Deciphering dynamic effects of mobile app addiction, privacy concern and cognitive overload on subjective well-being and academic expectancy: The pivotal function of perceived technostress. Technol. Soc. 2025, 81, 102861.
15. Ernest Mfumbilwa, E.; Mnong’one, C.; Chao, E.; Amani, D. Invigorating continuance intention among users of AI chatbots in the banking industry: An empirical study from Tanzania. Cogent Bus. Manag. 2024, 11, 2419482.
16. Florenthal, B. Students’ motivation to participate via mobile technology in the classroom: A uses and gratifications approach. J. Mark. Educ. 2019, 41, 234–253.
17. Xu, X.-Y.; Tayyab, S.M.U.; Jia, Q.; Huang, A.H. A multi-model approach for the extension of the use and gratification theory in video game streaming. Inf. Technol. People 2023, 38, 137–179.
18. Luo, X.; Tong, S.; Fang, Z.; Qu, Z. Frontiers: Machines vs. humans: The impact of artificial intelligence chatbot disclosure on customer purchases. Mark. Sci. 2019, 38, 937–947.
19. Mailizar, M.; Burg, D.; Maulina, S. Examining university students’ behavioural intention to use e-learning during the COVID-19 pandemic: An extended TAM model. Educ. Inf. Technol. 2021, 26, 7057–7077.
20. Pang, H.; Zhang, K. Determining influence of service quality on user identification, belongingness, and satisfaction on mobile social media: Insight from emotional attachment perspective. J. Retail. Consum. Serv. 2024, 77, 103688.
21. Aguirre-Rodriguez, A.; Bagozzi, R.P.; Torres, P.L. Beyond craving: Appetitive desire as a motivational antecedent of goal-directed action intentions. Psychol. Mark. 2021, 38, 2169–2190.
22. Widagdo, B.; Roz, K. Hedonic shopping motivation and impulse buying: The effect of website quality on customer satisfaction. J. Asian Financ. Econ. Bus. 2021, 8, 395–405.
23. Camilleri, M.A.; Falzon, L. Understanding motivations to use online streaming services: Integrating the technology acceptance model (TAM) and the uses and gratifications theory (UGT). Span. J. Mark. ESIC 2021, 25, 217–238.
24. Yin, J.; Goh, T.-T.; Hu, Y. Interactions with educational chatbots: The impact of induced emotions and students’ learning motivation. Int. J. Educ. Technol. High. Educ. 2024, 21, 47.
25. Kim, J.S.; Lee, T.J.; Kim, N.J. What motivates people to visit an unknown tourist destination? Applying an extended model of goal-directed behavior. Int. J. Tour. Res. 2021, 23, 13–25.
26. Pang, H.; Hu, Z. Detrimental influences of social comparison and problematic WeChat use on academic achievement: Significant role of awareness of inattention. Online Inf. Rev. 2025, 49, 552–569.
27. Bagozzi, R.P. The self-regulation of attitudes, intentions, and behavior. Soc. Psychol. Q. 1992, 55, 178–204.
28. Ashraf, A.R.; Thongpapanl, N. Connecting with and converting shoppers into customers: Investigating the role of regulatory fit in the online customer’s decision-making process. J. Interact. Mark. 2015, 32, 13–25.
29. Anifa, N.; Sanaji, S. Augmented Reality Users: The Effect of Perceived Ease of Use, Perceived Usefulness, and Customer Experience on Repurchase Intention. J. Bus. Manag. Rev. 2022, 3, 252–274.
30. Akdim, K.; Casaló, L.V.; Flavián, C. The role of utilitarian and hedonic aspects in the continuance intention to use social mobile apps. J. Retail. Consum. Serv. 2022, 66, 102888.
31. Thongpapanl, N.; Ashraf, A.R.; Lapa, L.; Venkatesh, V. Differential effects of customers’ regulatory fit on trust, perceived value, and m-commerce use among developing and developed countries. J. Int. Mark. 2018, 26, 22–44.
32. Ashraf, A.R.; Razzaque, M.A.; Thongpapanl, N.T. The role of customer regulatory orientation and fit in online shopping across cultural contexts. J. Bus. Res. 2016, 69, 6040–6047.
33. Wang, K.-Y.; Ashraf, A.R.; Thongpapanl, N.; Iqbal, I. How perceived value of augmented reality shopping drives psychological ownership. Internet Res. 2025, 35, 1213–1251.
34. Schmuck, D.; Karsay, K.; Matthes, J.; Stevic, A. “Looking Up and Feeling Down”. The influence of mobile social networking site use on upward social comparison, self-esteem, and well-being of adult smartphone users. Telemat. Inform. 2019, 42, 101240.
35. Tamborini, R.; Eden, A.; Bowman, N.D.; Grizzard, M.; Weber, R.; Lewis, R.J. Predicting media appeal from instinctive moral values. Mass Commun. Soc. 2013, 16, 325–346.
36. Gan, C.; Li, H. Understanding the effects of gratifications on the continuance intention to use WeChat in China: A perspective on uses and gratifications. Comput. Hum. Behav. 2018, 78, 306–315.
37. Johnson, E.K.; Hong, S.C. Instagramming social presence: A test of social presence theory and heuristic cues on Instagram sponsored posts. Int. J. Bus. Commun. 2023, 60, 543–559.
38. Kreijns, K.; Xu, K.; Weidlich, J. Social presence: Conceptualization and measurement. Educ. Psychol. Rev. 2022, 34, 139–170.
39. Araujo, T. Living up to the chatbot hype: The influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Comput. Hum. Behav. 2018, 85, 183–189.
40. Chakraborty, U.; Biswal, S.K. Is digital social communication effective for social relationship? A study of online brand communities. J. Relatsh. Mark. 2024, 23, 94–118.
41. Chong, S.-E.; Lim, X.-J.; Ng, S.I.; Kamal Basha, N. Unlocking the enigma of social commerce discontinuation: Exploring the approach and avoidance drivers. Mark. Intell. Plan. 2025, 43, 952–976.
42. Wang, K.-Y.; Ashraf, A.R.; Thongpapanl, N.T.; Nguyen, O. Influence of social augmented reality app usage on customer relationships and continuance intention: The role of shared social experience. J. Bus. Res. 2023, 166, 114092.
43. Söderlund, M. Employee encouragement of self-disclosure in the service encounter and its impact on customer satisfaction. J. Retail. Consum. Serv. 2020, 53, 102001.
44. Franque, F.B.; Oliveira, T.; Tam, C.; Santini, F.d.O. A meta-analysis of the quantitative studies in continuance intention to use an information system. Internet Res. 2021, 31, 123–158.
45. Shao, Z.; Zhang, L.; Chen, K.; Zhang, C. Examining user satisfaction and stickiness in social networking sites from a technology affordance lens: Uncovering the moderating effect of user experience. Ind. Manag. Data Syst. 2020, 120, 1331–1360.
46. Khairawati, S. Effect of customer loyalty program on customer satisfaction and its impact on customer loyalty. Int. J. Res. Bus. Soc. Sci. (2147-4478) 2020, 9, 15–23.
47. Ashraf, A.R.; Thongpapanl, N.T.; Spyropoulou, S. The connection and disconnection between e-commerce businesses and their customers: Exploring the role of engagement, perceived usefulness, and perceived ease-of-use. Electron. Commer. Res. Appl. 2016, 20, 69–86.
48. Van Lierop, D.; Badami, M.G.; El-Geneidy, A.M. What influences satisfaction and loyalty in public transport? A review of the literature. Transp. Rev. 2018, 38, 52–72.
49. Jung, J.-H.; Shin, J.-I. The effect of choice attributes of internet specialized banks on integrated loyalty: The moderating effect of gender. Sustainability 2019, 11, 7063.
50. Pöyry, E.; Parvinen, P.; Malmivaara, T. Can we get from liking to buying? Behavioral differences in hedonic and utilitarian Facebook usage. Electron. Commer. Res. Appl. 2013, 12, 224–235.
51. Chung, M.; Ko, E.; Joung, H.; Kim, S.J. Chatbot e-service and customer satisfaction regarding luxury brands. J. Bus. Res. 2020, 117, 587–595.
52. Godey, B.; Manthiou, A.; Pederzoli, D.; Rokka, J.; Aiello, G.; Donvito, R.; Singh, R. Social media marketing efforts of luxury brands: Influence on brand equity and consumer behavior. J. Bus. Res. 2016, 69, 5833–5841.
53. Kang, Y.J.; Lee, W.J. Effects of sense of control and social presence on customer experience and e-service quality. Inf. Dev. 2018, 34, 242–260.
54. Pang, H.; Zhao, Y.; Yang, Y. Struggling or Shifting? Deciphering potential influences of cyberbullying perpetration and communication overload on mobile app switching intention through social cognitive approach. Inf. Process. Manag. 2025, 62, 104167.
55. Gerber, N.; Gerber, P.; Volkamer, M. Explaining the privacy paradox: A systematic review of literature investigating privacy attitude and behavior. Comput. Secur. 2018, 77, 226–261.

| Characteristic | Frequency | % |
|---|---|---|
| Gender | | |
| Males | 375 | 51.0 |
| Females | 360 | 49.0 |
| Age | | |
| 18–21 | 375 | 51.0 |
| 22–25 | 320 | 43.5 |
| 26–29 | 35 | 4.8 |
| 30–33 | 5 | 0.7 |
| Have you ever used an AI-powered chatbot? | | |
| Yes | 663 | 90.2 |
| No | 72 | 9.8 |
| How often do you use AI-powered chatbots? | | |
| Almost every day | 125 | 17.0 |
| Several times a week | 300 | 40.8 |
| Several times a month | 195 | 26.5 |
| Very rarely | 115 | 15.7 |

| Variable | Item | Source |
|---|---|---|
| Utilitarian motivation | | [50] |
| Hedonic motivation | | [50,51] |
| Technology motivation | | [36] |
| Social motivation | | [39] |
| Privacy invasion | | [7,9] |
| User attitude | | [51] |
| Sustainable usage | | [36] |
| User stickiness | | [52] |

| Model Fit Measures | Model Fit Criterion | Index Value | Good Model Fit (Y/N) |
|---|---|---|---|
| Absolute fit indices | | | |
| RMSEA | <0.08 | 0.065 | Y |
| RMR | <0.08 | 0.064 | Y |
| χ²/d.f. (χ² = 2195.656, d.f. = 1062) | <3 | 2.067 | Y |
| Incremental fit indices | | | |
| CFI | >0.9 | 0.928 | Y |
| AGFI | >0.8 | 0.864 | Y |
| IFI | >0.9 | 0.930 | Y |
| TLI | >0.9 | 0.917 | Y |
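
The fit verdicts in the table above can be re-derived from the reported values. The following is a minimal sketch, not the authors' analysis script: the variable and function names are illustrative assumptions, and the cutoffs are simply those listed in the table's criterion column.

```python
# Reported statistics copied from the model-fit table.
chi_square = 2195.656
degrees_of_freedom = 1062

# index name -> (reported value, comparison operator, cutoff from the table)
fit_indices = {
    "RMSEA": (0.065, "<", 0.08),
    "RMR": (0.064, "<", 0.08),
    "CFI": (0.928, ">", 0.9),
    "AGFI": (0.864, ">", 0.8),
    "IFI": (0.930, ">", 0.9),
    "TLI": (0.917, ">", 0.9),
}


def meets_criterion(value, comparison, cutoff):
    """Return True when the value satisfies the table's cutoff."""
    return value < cutoff if comparison == "<" else value > cutoff


# Normed chi-square: chi-square divided by its degrees of freedom.
normed_chi_square = chi_square / degrees_of_freedom
fit_indices["chi2/df"] = (normed_chi_square, "<", 3.0)

verdicts = {
    name: meets_criterion(value, comparison, cutoff)
    for name, (value, comparison, cutoff) in fit_indices.items()
}

for name, (value, comparison, cutoff) in fit_indices.items():
    flag = "Y" if verdicts[name] else "N"
    print(f"{name:8s} {value:.3f} ({comparison}{cutoff}) -> {flag}")
```

Under these assumptions every index satisfies its cutoff, which agrees with the Y entries in the table.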

| Constructs and Items | Loading (>0.7) | SMC (>0.5) | CR (>0.7) | AVE (>0.5) |
|---|---|---|---|---|
| Utilitarian Motivation (UM) | | | 0.940 | 0.724 |
| UM1 | 0.888 | 0.789 | | |
| UM2 | 0.849 | 0.721 | | |
| UM3 | 0.846 | 0.716 | | |
| UM4 | 0.813 | 0.661 | | |
| UM5 | 0.845 | 0.714 | | |
| UM6 | 0.863 | 0.745 | | |
| Hedonic Motivation (HM) | | | 0.946 | 0.716 |
| HM1 | 0.728 | 0.530 | | |
| HM2 | 0.813 | 0.661 | | |
| HM3 | 0.875 | 0.766 | | |
| HM4 | 0.877 | 0.770 | | |
| HM5 | 0.846 | 0.716 | | |
| HM6 | 0.897 | 0.805 | | |
| HM7 | 0.877 | 0.770 | | |
| Technology Motivation (TM) | | | 0.918 | 0.617 |
| TM1 | 0.760 | 0.578 | | |
| TM2 | 0.815 | 0.664 | | |
| TM3 | 0.777 | 0.604 | | |
| TM4 | 0.716 | 0.513 | | |
| TM5 | 0.831 | 0.691 | | |
| TM6 | 0.845 | 0.714 | | |
| TM7 | 0.747 | 0.558 | | |
| Social Motivation (SM) | | | 0.904 | 0.611 |
| SM1 | 0.763 | 0.582 | | |
| SM2 | 0.812 | 0.659 | | |
| SM3 | 0.788 | 0.621 | | |
| SM4 | 0.854 | 0.729 | | |
| SM5 | 0.736 | 0.542 | | |
| SM6 | 0.730 | 0.533 | | |
| Privacy Invasion (PI) | | | 0.937 | 0.750 |
| PI1 | 0.915 | 0.837 | | |
| PI2 | 0.892 | 0.796 | | |
| PI3 | 0.852 | 0.726 | | |
| PI4 | 0.856 | 0.733 | | |
| PI5 | 0.810 | 0.656 | | |
| User Attitude (UA) | | | 0.918 | 0.617 |
| UA1 | 0.812 | 0.659 | | |
| UA2 | 0.749 | 0.561 | | |
| UA3 | 0.754 | 0.569 | | |
| UA4 | 0.771 | 0.594 | | |
| UA5 | 0.809 | 0.654 | | |
| UA6 | 0.795 | 0.632 | | |
| UA7 | 0.805 | 0.648 | | |
| Sustainable Usage (SU) | | | 0.853 | 0.537 |
| SU1 | 0.730 | 0.533 | | |
| SU2 | 0.727 | 0.529 | | |
| SU3 | 0.707 | 0.501 | | |
| SU4 | 0.749 | 0.561 | | |
| SU5 | 0.751 | 0.564 | | |
| User Stickiness (US) | | | 0.902 | 0.650 |
| US1 | 0.784 | 0.615 | | |
| US2 | 0.743 | 0.552 | | |
| US3 | 0.864 | 0.746 | | |
| US4 | 0.871 | 0.759 | | |
| US5 | 0.759 | 0.576 | | |
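
The CR and AVE columns above follow from the standardized loadings. The sketch below assumes the conventional formulas (AVE as the mean squared loading, CR as the squared sum of loadings over that quantity plus the summed error variances); the source does not state which formulas were used, and the function names are illustrative. It is applied to the six utilitarian motivation items only.

```python
# Standardized loadings for UM1..UM6, copied from the measurement table.
um_loadings = [0.888, 0.849, 0.846, 0.813, 0.845, 0.863]


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    The error variance of each item is taken as 1 - loading^2, which
    assumes standardized loadings and uncorrelated errors.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


um_ave = average_variance_extracted(um_loadings)
um_cr = composite_reliability(um_loadings)

print(f"AVE = {um_ave:.3f}, CR = {um_cr:.3f}")
```

With these formulas the utilitarian motivation values round to the AVE of 0.724 and CR of 0.940 reported in the table; the same two functions can be applied to the loadings of any other construct.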

| | PI | SM | TM | HM | UM | UA | US | SU |
|---|---|---|---|---|---|---|---|---|
| PI | 0.866 | | | | | | | |
| SM | 0.190 | 0.781 | | | | | | |
| TM | 0.176 | 0.289 | 0.785 | | | | | |
| HM | 0.309 | 0.473 | 0.329 | 0.846 | | | | |
| UM | 0.144 | 0.246 | 0.486 | 0.310 | 0.850 | | | |
| UA | 0.256 | 0.401 | 0.451 | 0.537 | 0.434 | 0.781 | | |
| US | 0.197 | 0.308 | 0.347 | 0.412 | 0.334 | 0.430 | 0.806 | |
| SU | 0.241 | 0.377 | 0.424 | 0.505 | 0.408 | 0.526 | 0.469 | 0.733 |
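
A matrix of this shape is usually read with the Fornell-Larcker criterion: the square root of each construct's AVE (the diagonal) should exceed that construct's correlations with every other construct. The source does not name the criterion in this section, so the check below is an assumption about how the table is meant to be read, with illustrative variable names. Because it recomputes the diagonal from the rounded AVE values, results can differ from the printed diagonal in the third decimal.

```python
import math

constructs = ["PI", "SM", "TM", "HM", "UM", "UA", "US", "SU"]

# AVE values copied from the measurement table.
ave = {"PI": 0.750, "SM": 0.611, "TM": 0.617, "HM": 0.716,
       "UM": 0.724, "UA": 0.617, "US": 0.650, "SU": 0.537}

# Off-diagonal correlations, one row per construct (lower triangle only).
lower_triangle = [
    [],
    [0.190],
    [0.176, 0.289],
    [0.309, 0.473, 0.329],
    [0.144, 0.246, 0.486, 0.310],
    [0.256, 0.401, 0.451, 0.537, 0.434],
    [0.197, 0.308, 0.347, 0.412, 0.334, 0.430],
    [0.241, 0.377, 0.424, 0.505, 0.408, 0.526, 0.469],
]


def correlation(i, j):
    """Look up the correlation between constructs i and j (i != j)."""
    row, col = max(i, j), min(i, j)
    return lower_triangle[row][col]


results = {}
for i, name in enumerate(constructs):
    root_ave = math.sqrt(ave[name])
    largest_r = max(
        abs(correlation(i, j)) for j in range(len(constructs)) if j != i
    )
    # Criterion: sqrt(AVE) must exceed the largest inter-construct correlation.
    results[name] = (root_ave, largest_r, root_ave > largest_r)

for name, (root_ave, largest_r, ok) in results.items():
    print(f"{name}: sqrt(AVE)={root_ave:.3f}, max r={largest_r:.3f}, pass={ok}")
```

On the reported values every construct passes, which is consistent with adequate discriminant validity.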

| Hypotheses | Paths | Standardized Coefficient | p-Value |
|---|---|---|---|
| H1 | Utilitarian motivation → User attitude | 0.239 | 0.000 |
| H2 | Hedonic motivation → User attitude | 0.196 | 0.000 |
| H3 | Technology motivation → User attitude | 0.326 | 0.000 |
| H4 | Social motivation → User attitude | 0.210 | 0.008 |
| H5 | Privacy invasion → User attitude | −0.094 | 0.032 |
| H6 | User attitude → Sustainable usage | 0.676 | 0.000 |
| H7 | User attitude → User stickiness | 0.768 | 0.000 |
| H8 | User stickiness → Sustainable usage | 0.344 | 0.000 |
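
Because user attitude reaches sustainable usage both directly (H6) and through user stickiness (H7, H8), the standardized coefficients can be combined with the product-of-coefficients rule to gauge the indirect route. This is an illustrative calculation, not a result reported in this section: it involves no significance test such as bootstrapping, and the variable names are assumptions.

```python
# Standardized path coefficients copied from the hypotheses table.
attitude_to_usage = 0.676        # H6: user attitude -> sustainable usage
attitude_to_stickiness = 0.768   # H7: user attitude -> user stickiness
stickiness_to_usage = 0.344      # H8: user stickiness -> sustainable usage

# Indirect effect of attitude on usage via stickiness: product of the two paths.
indirect_effect = attitude_to_stickiness * stickiness_to_usage

# Total effect: direct path plus the indirect path.
total_effect = attitude_to_usage + indirect_effect

# Share of the total effect that runs through stickiness.
indirect_share = indirect_effect / total_effect

print(f"indirect = {indirect_effect:.3f}")
print(f"total    = {total_effect:.3f}")
print(f"share    = {indirect_share:.1%}")
```

Under this rule the indirect effect is about 0.264 and the total effect about 0.940, so roughly 28% of the attitude effect on sustainable usage passes through stickiness.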

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pang, H.; Hu, Z.; Wang, L. How Perceived Motivations Influence User Stickiness and Sustainable Engagement with AI-Powered Chatbots—Unveiling the Pivotal Function of User Attitude. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 228. https://doi.org/10.3390/jtaer20030228
