How Doctors’ Proactive Crafting Behaviors Influence Performance Outcomes: Evidence from an Online Healthcare Platform

Abstract

1. Introduction

2. Literature Review

2.1. Studies on Job Crafting Theory

2.2. Studies on Doctors’ Behavior

2.3. Studies on Doctors’ Performance

2.4. Review Summary

3. Research Hypotheses

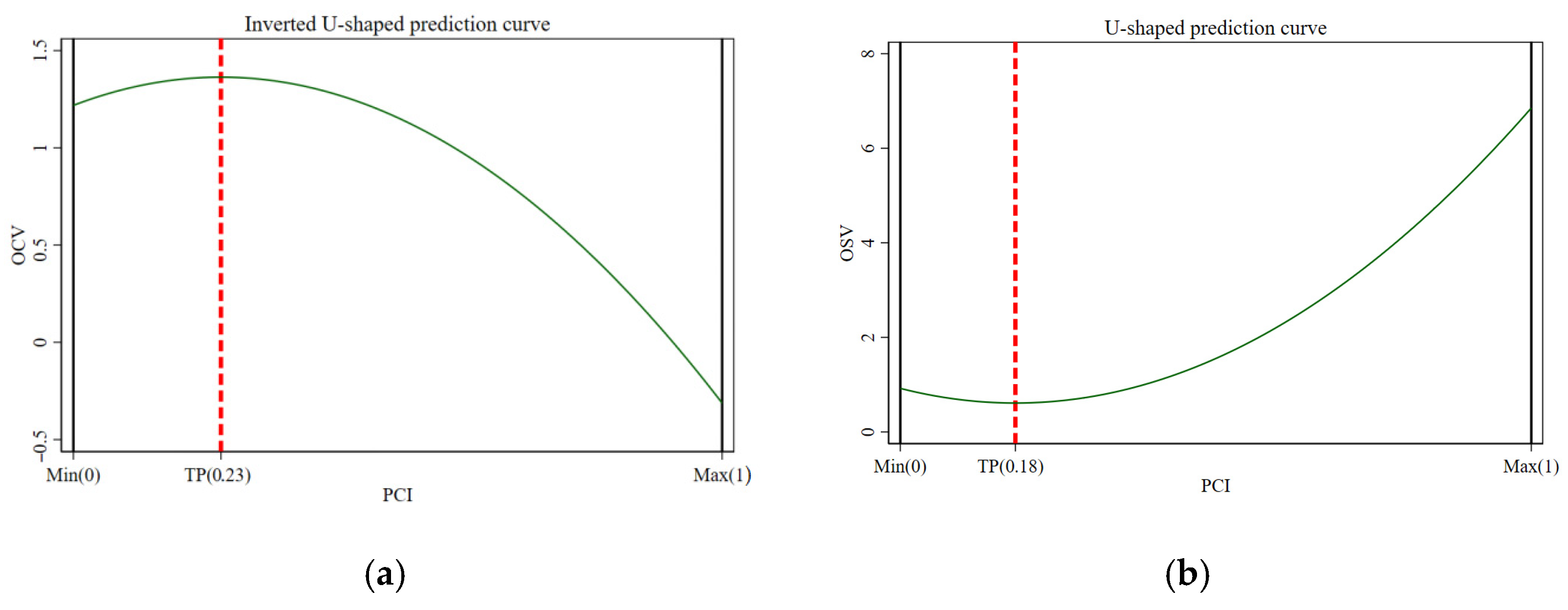

3.1. Proactive Crafting and Online Consultation Volume

3.2. Proactive Crafting and Offline Service Volume

3.3. Proactive Crafting and User Evaluation Performance

3.4. Heterogeneity by Professional Rank, Disease Type, and Regional Context

4. Research Design

4.1. Data Source and Processing

4.2. Variable Selection and Measurement

4.3. Research Model
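
The result tables report the following for each outcome:

- coefficients on $\mathrm{PCI}_t$ and, for OCV and OSV only, $\mathrm{PCI}^2_t$;
- the controls LR, OAP, OSP and NOOS;
- doctor (ID) and month fixed effects.

Read from that layout, the estimated equation appears to take the form below. This is a reconstruction from the tables, not the authors' stated model, and the symbols $\beta$, $\gamma$, $\mu_i$ (doctor effect), $\lambda_t$ (month effect) and $\varepsilon_{it}$ are notation introduced here. For UEP the squared term is absent ($\beta_2 = 0$).

```latex
Y_{i,t+1} = \beta_0 + \beta_1\,\mathrm{PCI}_{it} + \beta_2\,\mathrm{PCI}^2_{it}
          + \gamma_1\,\mathrm{LR}_{it} + \gamma_2\,\mathrm{OAP}_{it}
          + \gamma_3\,\mathrm{OSP}_{it} + \gamma_4\,\mathrm{NOOS}_{it}
          + \mu_i + \lambda_t + \varepsilon_{it},
\qquad Y \in \{\mathrm{OCV}, \mathrm{OSV}, \mathrm{UEP}\}

% With the squared term, the marginal effect of PCI changes sign at one point:
\frac{\partial Y_{i,t+1}}{\partial \mathrm{PCI}_{it}}
  = \beta_1 + 2\beta_2\,\mathrm{PCI}_{it} = 0
\;\Longrightarrow\;
\mathrm{PCI}^{*} = -\frac{\beta_1}{2\beta_2}
% beta_2 < 0: inverted U (maximum at PCI*); beta_2 > 0: U shape (minimum at PCI*).
```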

5. Results Analysis

5.1. Descriptive Statistics

5.2. Correlation Analysis

5.3. Multicollinearity Test

5.4. Two-Way Fixed Effects Analysis

5.5. Robustness Tests

5.5.1. Removal of Outliers
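
This subsection re-estimates the models after handling outliers, but the rule used is not stated in this extract. The descriptive statistics show why the step matters: $\mathrm{LR}_t$ and $\mathrm{NOOS}_t$ have maxima (29,132 and 98,797) far above their medians (27 and 379).

The sketch below shows two common percentile-based options:

- trimming, which drops extreme observations;
- winsorizing, which caps extreme values and keeps every observation.

The 1st/99th percentile cut-offs and the linear-interpolation percentile are assumptions, not the authors' procedure.

```python
import math


def percentile(values, q):
    """Linear-interpolation percentile of a non-empty list, with q in [0, 1]."""
    s = sorted(values)
    pos = (len(s) - 1) * q
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def winsorize(values, lower=0.01, upper=0.99):
    """Cap values at the lower/upper percentiles; every observation is kept."""
    lo, hi = percentile(values, lower), percentile(values, upper)
    return [min(max(v, lo), hi) for v in values]


def trim(values, lower=0.01, upper=0.99):
    """Drop observations outside the lower/upper percentile range."""
    lo, hi = percentile(values, lower), percentile(values, upper)
    return [v for v in values if lo <= v <= hi]


# Hypothetical heavy-tailed counts, capped at the 10th/90th percentiles.
print(winsorize([1, 2, 3, 4, 5, 6, 7, 8, 9, 500], 0.1, 0.9))
```

Winsorizing leaves the sample size unchanged, while trimming reduces it. Comparing the N rows of a robustness table with the main table is one way to tell which approach was applied.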

5.5.2. Control Variable Adjustment: Replacing LR with NNR

5.5.3. Recalculation of the Proactive Crafting Index

5.6. Endogeneity Test
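
In the endogeneity table, the first-stage columns regress $\mathrm{PCI}_t$ and $\mathrm{PCI}^2_t$ on their lags ($\mathrm{L.PCI}_t$, $\mathrm{L.PCI}^2_t$) before the outcome equations are estimated. This suggests a two-stage least squares (2SLS) design with lagged PCI as the instrument.

The sketch below shows only the core mechanics, for one endogenous regressor and one instrument, with no controls or fixed effects. The toy data and the function names are illustrative assumptions.

```python
def _mean(v):
    return sum(v) / len(v)


def ols_line(y, x):
    """Intercept and slope of a simple OLS regression of y on x."""
    mx, my = _mean(x), _mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return my - slope * mx, slope


def two_stage_least_squares(y, x, z):
    """Just-identified 2SLS slope: instrument the regressor x with z."""
    a0, a1 = ols_line(x, z)              # first stage: x on the instrument z
    x_hat = [a0 + a1 * zi for zi in z]   # part of x explained by z
    _, beta = ols_line(y, x_hat)         # second stage: y on fitted x
    return beta


# Toy data: x is correlated with the error u, z is not; the true slope is 2.
z = [1, 2, 3, 4, 5, 6]
u = [1, -1, -1, -1, -1, 1]
x = [zi + ui for zi, ui in zip(z, u)]
y = [2 * xi + 3 * ui for xi, ui in zip(x, u)]
print("OLS slope: ", ols_line(y, x)[1])
print("2SLS slope:", two_stage_least_squares(y, x, z))
```

Running the second stage by hand gives the 2SLS point estimate, but not valid standard errors: its residuals are computed with fitted rather than observed x. Dedicated IV estimators correct for this.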

5.7. Heterogeneity Analysis

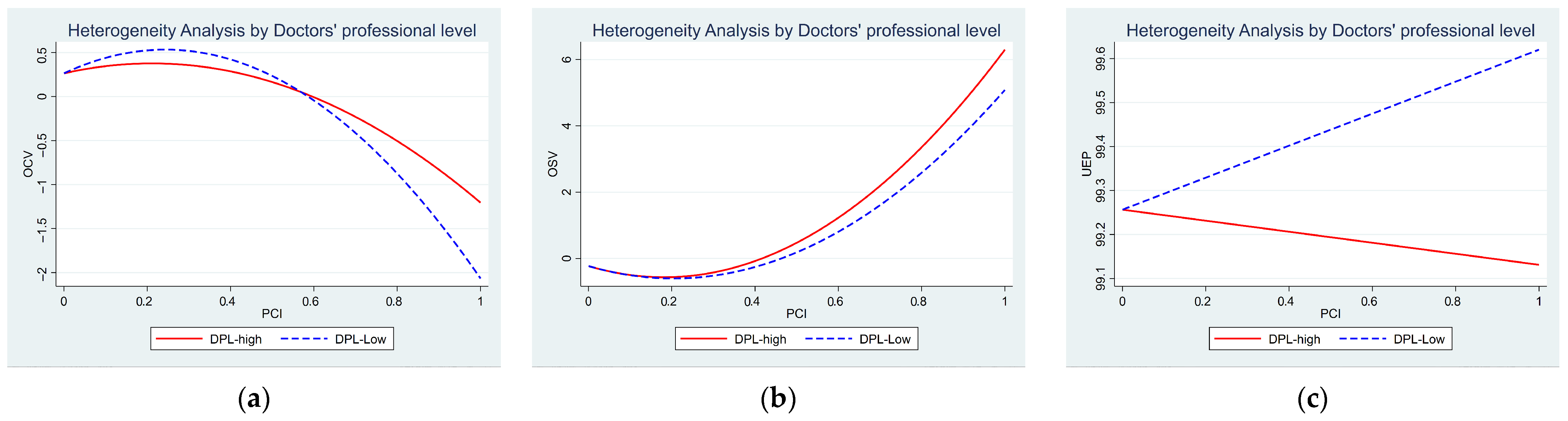

5.7.1. Heterogeneity Analysis by Doctors’ Professional Level

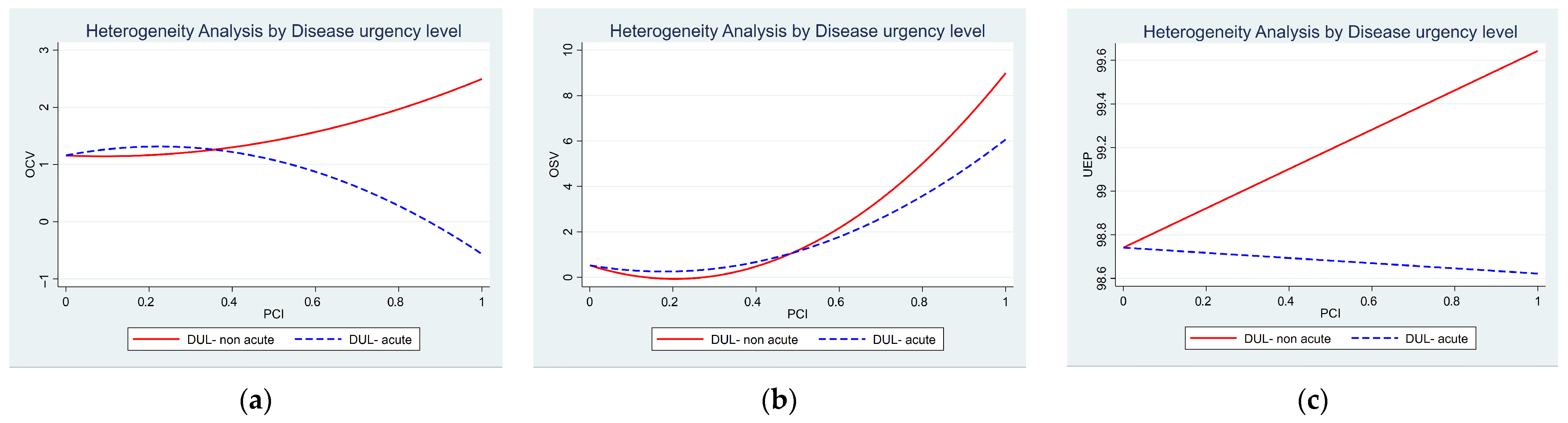

5.7.2. Heterogeneity Analysis by Disease Urgency Level

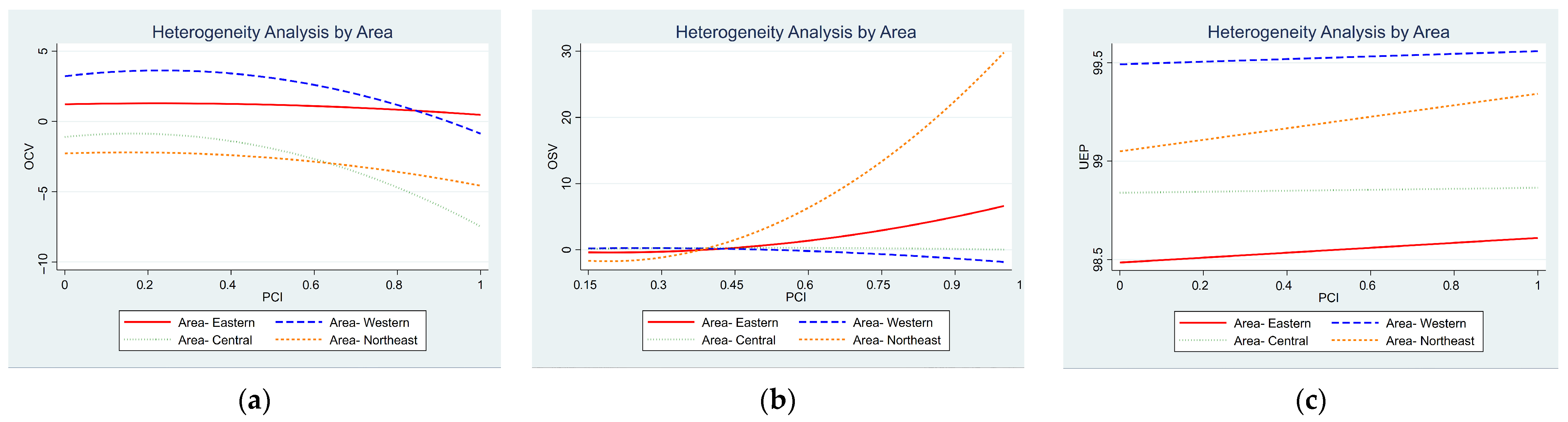

5.7.3. Heterogeneity Analysis by Area

6. Discussion and Conclusions

6.1. Implications for Research

6.2. Implications for Practice

6.3. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| $\mathrm{OCV}_{t+1}$ | Online consultation volume (t+1) |
| $\mathrm{OSV}_{t+1}$ | Offline service volume (t+1) |
| $\mathrm{UEP}_{t+1}$ | User evaluation performance (t+1) |
| $\mathrm{PCI}_t$ | Proactive crafting index (t) |
| $\mathrm{LR}_t$ | Latest review (t) |
| $\mathrm{OAP}_t$ | Online average price (t) |
| $\mathrm{OSP}_t$ | Offline service price (t) |
| $\mathrm{NOOS}_t$ | Number of online and offline services (t) |
| $\mathrm{DUL}_t$ | Disease urgency level (t) |
| $\mathrm{DPL}_t$ | Doctors’ professional level (t) |
| $\mathrm{Area}_t$ | Area (t) |
| $\mathrm{NNR}_t$ | Number of negative reviews (t) |
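
The outcomes above are indexed t+1, while PCI and the controls are indexed t. Each doctor-month's predictors are therefore paired with the following month's outcome.

Below is a minimal sketch of building such a one-month lead. It assumes three things:

- months are stored as a consecutive integer index;
- a missing month should yield no lead, rather than the next available month;
- the field names used (`doctor`, `month`, `y`) are hypothetical.

```python
def add_next_month_outcome(records, key="y"):
    """Attach each doctor's value of `key` in the following month.

    records: list of dicts with 'doctor', 'month' (consecutive integer index)
    and the outcome field. The lead is None when the doctor has no record for
    month + 1, so gaps in the panel are not bridged.
    """
    lookup = {(r["doctor"], r["month"]): r[key] for r in records}
    return [
        {**r, key + "_next": lookup.get((r["doctor"], r["month"] + 1))}
        for r in records
    ]


panel = [
    {"doctor": "A", "month": 1, "y": 5},
    {"doctor": "A", "month": 2, "y": 7},
    {"doctor": "A", "month": 4, "y": 3},   # month 3 is missing for doctor A
    {"doctor": "B", "month": 1, "y": 2},
]
for row in add_next_month_outcome(panel):
    print(row)
```

Rows whose lead is None drop out of the t+1 regressions. This is one reason the N counts differ across outcomes.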

Appendix A

| | $\mathrm{OCV}_{t+1}$ (DPL-High) | $\mathrm{OCV}_{t+1}$ (DPL-Low) | $\mathrm{OSV}_{t+1}$ (DPL-High) | $\mathrm{OSV}_{t+1}$ (DPL-Low) | $\mathrm{UEP}_{t+1}$ (DPL-High) | $\mathrm{UEP}_{t+1}$ (DPL-Low) |
|---|---|---|---|---|---|---|
| $\mathrm{PCI}_t$ | 1.198 ** | 2.541 *** | −2.020 ** | −2.412 | −0.007 | −0.011 |
| | (0.409) | (0.763) | (0.853) | (2.496) | (0.078) | (0.189) |
| $\mathrm{PCI}^2_t$ | −2.890 ** | −5.528 ** | 5.359 ** | 5.312 | | |
| | (1.049) | (1.95) | (2.362) | (7.026) | | |
| $\mathrm{LR}_t$ | 0.000 | 0.000 | 0.001 | −0.001 | 0.000 ** | 0.000 * |
| | (0.000) | (0.000) | (0.000) | (0.001) | (0.000) | (0.000) |
| $\mathrm{OAP}_t$ | 0.002 * | 0.005 * | 0.001 | 0.006 | 0.000 | 0.000 |
| | (0.001) | (0.002) | (0.001) | (0.006) | (0.000) | (0.001) |
| $\mathrm{OSP}_t$ | −0.013 | 0.068 | −0.044 | 0.000 | 0.018 | −0.002 |
| | (0.021) | (0.089) | (0.05) | (0.001) | (0.022) | (0.002) |
| $\mathrm{NOOS}_t$ | 0.000 ** | 0.000 ** | 0.000 *** | 0.001 *** | 0.000 | −0.000 ** |
| | (0.000) | (0.000) | (0.000) | (0.000) | (0.000) | (0.000) |
| Constant | 1.111 | 0.325 | 2.724 | −0.186 | 98.024 *** | 99.306 *** |
| | (0.77) | (1.424) | (1.78) | (0.467) | (0.707) | (0.102) |
| ID fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |
| N | 13,000 | 4965 | 7405 | 1422 | 22,000 | 8302 |
| R² | 0.067 | 0.115 | 0.497 | 0.321 | 0.006 | 0.013 |
| Adjusted R² | 0.067 | −0.379 | 0.496 | 0.317 | 0.006 | 0.012 |
| F | 74.176 | 45.982 | 440.212 | 21.308 | 5.545 | 2.818 |
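
Split-sample tables like this one report each group's coefficient separately, but not a test of whether the two coefficients differ. One common check is the z-statistic $(b_1 - b_2)/\sqrt{se_1^2 + se_2^2}$.

This sketch rests on two assumptions that are not stated in this extract:

- the parenthesized values are standard errors;
- the two subsamples are independent.

The test ignores any covariance between the subsample estimates. A pooled model with an interaction term is the usual alternative.

```python
import math


def coefficient_difference_test(b1, se1, b2, se2):
    """z-test for equality of one coefficient across two independent subsamples."""
    z = (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))  # = 2 * (1 - Phi(|z|))
    return z, p_two_sided


# PCI_t coefficients for OCV_{t+1} in the DPL-High and DPL-Low columns above.
z, p = coefficient_difference_test(1.198, 0.409, 2.541, 0.763)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

For the OCV column this gives z of about −1.55.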

| | $\mathrm{OCV}_{t+1}$ (DUL-Non-acute) | $\mathrm{OCV}_{t+1}$ (DUL-Acute) | $\mathrm{OSV}_{t+1}$ (DUL-Non-acute) | $\mathrm{OSV}_{t+1}$ (DUL-Acute) | $\mathrm{UEP}_{t+1}$ (DUL-Non-acute) | $\mathrm{UEP}_{t+1}$ (DUL-Acute) |
|---|---|---|---|---|---|---|
| $\mathrm{PCI}_t$ | 0.304 | 1.545 *** | −4.911 ** | −1.314 | 0.376 * | −0.062 |
| | (1.25) | (0.408) | (1.831) | (0.8) | (0.225) | (0.081) |
| $\mathrm{PCI}^2_t$ | −0.055 | −3.554 *** | 11.574 ** | 3.531 | | |
| | (3.334) | (1.04) | (5.016) | (2.208) | | |
| $\mathrm{LR}_t$ | 0.000 | 0.000 | 0.002 | 0.000 | 0.000 | 0.000 ** |
| | (0.000) | (0.000) | (0.002) | (0.000) | (0.000) | (0.000) |
| $\mathrm{OAP}_t$ | 0.001 | 0.003 ** | 0.003 ** | 0.000 | 0.000 | 0.000 |
| | (0.002) | (0.001) | (0.002) | (0.002) | (0.000) | (0.000) |
| $\mathrm{OSP}_t$ | 0.035 | −0.017 | 0.146 *** | −0.015 | −0.009 | 0.007 |
| | (0.058) | (0.012) | (0.01) | (0.054) | (0.011) | (0.009) |
| $\mathrm{NOOS}_t$ | 0.000 * | 0.000 ** | −0.001 ** | 0.001 *** | 0.000 | −0.000 ** |
| | (0.000) | (0.000) | (0.000) | (0.000) | (0.000) | (0.000) |
| Constant | −0.248 | 1.348 *** | 0.2 | 0.471 | 99.118 *** | 98.644 *** |
| | (1.692) | (0.402) | (1.232) | (1.758) | (0.308) | (0.247) |
| ID fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |
| N | 2357 | 16,000 | 1094 | 7733 | 3915 | 26,000 |
| R² | 0.063 | 0.065 | 0.513 | 0.45 | 0.006 | 0.009 |
| Adjusted R² | 0.06 | 0.064 | 0.509 | 0.449 | 0.003 | 0.009 |
| F | 11.21 | 90.526 | . | 365.699 | 2.735 | 6.211 |

| | $\mathrm{OCV}_{t+1}$ (Eastern) | $\mathrm{OCV}_{t+1}$ (Western) | $\mathrm{OCV}_{t+1}$ (Central) | $\mathrm{OCV}_{t+1}$ (Northeast) |
|---|---|---|---|---|
| $\mathrm{PCI}_t$ | 0.906 ** | 3.753 ** | 2.646 * | 0.998 |
| | (0.413) | (1.594) | (1.401) | (2.325) |
| $\mathrm{PCI}^2_t$ | −2.201 ** | −8.266 ** | −8.368 ** | −3.273 |
| | (1.052) | (4.003) | (3.792) | (6.125) |
| $\mathrm{LR}_t$ | 0.000 | 0.000 | −0.001 | 0.001 * |
| | (0.000) | (0.000) | (0.001) | (0.000) |
| $\mathrm{OAP}_t$ | 0.002 ** | −0.012 *** | 0.001 | 0.013 |
| | (0.001) | (0.003) | (0.002) | (0.01) |
| $\mathrm{OSP}_t$ | −0.009 | −0.057 | −0.025 | 0.153 *** |
| | (0.018) | (0.064) | (0.018) | (0.02) |
| $\mathrm{NOOS}_t$ | 0.000 ** | 0.000 * | 0.001 ** | 0.001 |
| | (0.000) | (0.000) | (0.000) | (0.000) |
| Constant | 1.267 * | 3.027 *** | −0.051 | −2.042 ** |
| | (0.651) | (0.861) | (0.692) | (1.006) |
| ID fixed effects | Yes | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes | Yes |
| N | 12,000 | 1159 | 2101 | 602 |
| R² | 0.047 | 0.097 | 0.065 | 0.097 |
| Adjusted R² | 0.046 | 0.09 | 0.061 | 0.084 |
| F | 55.985 | 9.68 | 10.343 | . |

| | $\mathrm{OSV}_{t+1}$ (Eastern) | $\mathrm{OSV}_{t+1}$ (Western) | $\mathrm{OSV}_{t+1}$ (Central) | $\mathrm{OSV}_{t+1}$ (Northeast) |
|---|---|---|---|---|
| $\mathrm{PCI}_t$ | −2.070 ** | 2.171 | 1.395 | −18.281 *** |
| | (0.766) | (4.044) | (1.69) | (4.167) |
| $\mathrm{PCI}^2_t$ | 4.732 ** | −4.266 | −2.222 | 48.097 *** |
| | (2.071) | (9.601) | (4.917) | (11.991) |
| $\mathrm{LR}_t$ | 0.001 | 0.000 | 0.003 ** | −0.001 |
| | (0.001) | (0.001) | (0.001) | (0.009) |
| $\mathrm{OAP}_t$ | −0.001 | 0.010 ** | 0.008 | −22.033 ** |
| | (0.001) | (0.004) | (0.007) | (10.031) |
| $\mathrm{OSP}_t$ | 0.038 | 0.000 | −0.108 ** | 0.000 |
| | (0.067) | (0.000) | (0.041) | (0.000) |
| $\mathrm{NOOS}_t$ | 0.001 *** | 0.001 *** | −0.002 *** | 0.014 *** |
| | (0.000) | (0.000) | (0.000) | (0.004) |
| Constant | −1.431 | −0.569 | 8.282 *** | 1975.360 ** |
| | (2.59) | (0.545) | (1.357) | (900.741) |
| ID fixed effects | Yes | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes | Yes |
| N | 6053 | 370 | 1207 | 157 |
| R² | 0.474 | 0.438 | 0.579 | 0.469 |
| Adjusted R² | 0.474 | 0.426 | 0.575 | 0.44 |
| F | 326.709 | 23.779 | 107.349 | . |

| | $\mathrm{UEP}_{t+1}$ (Eastern) | $\mathrm{UEP}_{t+1}$ (Western) | $\mathrm{UEP}_{t+1}$ (Central) | $\mathrm{UEP}_{t+1}$ (Northeast) |
|---|---|---|---|---|
| $\mathrm{PCI}_t$ | −0.013 | 0.071 | 0.025 | 0.293 |
| | (0.091) | (0.072) | (0.143) | (0.223) |
| $\mathrm{LR}_t$ | 0.000 | 0.000 ** | 0.000 ** | 0.000 |
| | (0.001) | (0.000) | (0.000) | (0.001) |
| $\mathrm{OAP}_t$ | 0.000 | 0.000 | −0.002 | 0.000 |
| | (0.000) | (0.000) | (0.002) | (0.002) |
| $\mathrm{OSP}_t$ | 0.013 | −0.032 | −0.006 ** | 0.012 |
| | (0.012) | (0.023) | (0.003) | (0.009) |
| $\mathrm{NOOS}_t$ | 0.000 | −0.000 ** | −0.001 ** | −0.000 * |
| | (0.000) | (0.001) | (0.001) | (0.001) |
| Constant | 98.383 *** | 99.499 *** | 98.844 *** | 99.058 *** |
| | (0.417) | (0.286) | (0.232) | (0.196) |
| ID fixed effects | Yes | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes | Yes |
| N | 20,000 | 1937 | 3476 | 982 |
| R² | 0.006 | 0.004 | 0.015 | 0.022 |
| Adjusted R² | 0.005 | −0.001 | 0.012 | 0.012 |
| F | 3.02 | 1.16 | 2.651 | 2.515 |


| Variable | Abbreviation | Variable Definition | Data Type |
|---|---|---|---|
| Online consultation volume (t+1) | $\mathrm{OCV}_{t+1}$ | Number of online consultations completed by the doctor in the following month; a higher value indicates a greater volume of online consultations. The value is natural-log (ln) transformed. | Continuous |
| Offline service volume (t+1) | $\mathrm{OSV}_{t+1}$ | Number of offline outpatient visits completed by the doctor in the following month; a higher value indicates a greater volume of offline services. The value is natural-log (ln) transformed. | Continuous |
| User evaluation performance (t+1) | $\mathrm{UEP}_{t+1}$ | Subjective rating that platform patients give to the doctor’s services, ranging from 0 to 100; a score closer to 100 indicates higher patient satisfaction. | Continuous |
| Proactive crafting index (t) | $\mathrm{PCI}_t$ | Overall degree to which the doctor actively shapes their work behavior on the platform, calculated with the entropy weight method. Ranges from 0 to 1; a value closer to 1 indicates more proactive crafting behavior. | Continuous |
| Latest review (t) | $\mathrm{LR}_t$ | Number of the most recent patient reviews of the doctor on the platform; a higher value reflects a greater number of recent evaluations. | Continuous |
| Online average price (t) | $\mathrm{OAP}_t$ | Average price of the doctor’s online consultations; a higher value indicates a higher online service fee. | Continuous |
| Offline service price (t) | $\mathrm{OSP}_t$ | Price of the doctor’s offline consultations; a higher value indicates a higher offline service fee. | Continuous |
| Number of online and offline services (t) | $\mathrm{NOOS}_t$ | Total number of online and offline consultations provided by the doctor; a higher value indicates a greater total number of services. | Continuous |
| Disease urgency level (t) | $\mathrm{DUL}_t$ | Urgency of the disease for which the patient consulted, determined by whether the consultation was with an emergency department: acute = 1, non-acute = 0. | Binary (nominal) |
| Doctors’ professional level (t) | $\mathrm{DPL}_t$ | Doctor’s professional title: chief physicians are coded as high-level (1), all others as non-high-level (0). | Binary (ordinal) |
| Area (t) | $\mathrm{Area}_t$ | Doctor’s location, grouped by region following the National Bureau of Statistics classification: Eastern = 1, Western = 2, Central = 3, Northeastern = 4. | Categorical |
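
The definitions table states that PCI is computed with the entropy weight method, but the underlying indicators are not listed in this extract. The sketch below shows the standard steps:

1. Min-max normalize each indicator to [0, 1].
2. Compute each observation's share of its indicator column.
3. Compute the entropy of each indicator.
4. Weight each indicator in proportion to 1 minus its entropy, then take the weighted sum.

The indicator columns are hypothetical. Treating every indicator as "larger is better" and the handling of constant columns are also assumptions, not the authors' specification.

```python
import math


def entropy_weights(rows):
    """Entropy weights for an n x m matrix of 'larger is better' indicators.

    Returns (weights, normalized_rows); the weights are non-negative and sum to 1.
    """
    n, m = len(rows), len(rows[0])
    # Step 1: min-max normalize each indicator column to [0, 1].
    norm = [[0.0] * m for _ in range(n)]
    for j in range(m):
        col = [r[j] for r in rows]
        lo, hi = min(col), max(col)
        for i in range(n):
            norm[i][j] = (rows[i][j] - lo) / (hi - lo) if hi > lo else 0.0
    # Steps 2-3: each observation's share of the column, then the column entropy.
    k = 1.0 / math.log(n)
    divergence = []
    for j in range(m):
        total = sum(norm[i][j] for i in range(n))
        if total == 0:
            divergence.append(0.0)  # a constant column carries no information
            continue
        entropy = 0.0
        for i in range(n):
            p = norm[i][j] / total
            if p > 0:  # convention: 0 * ln(0) = 0
                entropy -= p * math.log(p)
        divergence.append(1.0 - k * entropy)
    # Step 4: weights proportional to the divergence 1 - e_j.
    s = sum(divergence)
    weights = [d / s for d in divergence] if s > 0 else [1.0 / m] * m
    return weights, norm


def composite_index(rows):
    """Weighted sum of the normalized indicators; lies in [0, 1]."""
    weights, norm = entropy_weights(rows)
    return [sum(w * x for w, x in zip(weights, r)) for r in norm]


# Hypothetical doctor-month indicators: [articles posted, free replies, profile edits]
sample = [[0, 2, 1], [3, 10, 0], [1, 5, 1], [6, 0, 2]]
print(entropy_weights(sample)[0])
print(composite_index(sample))
```

Indicators that vary more across doctors receive larger weights. Because the weights sum to 1 and each normalized indicator lies in [0, 1], the resulting index stays within the 0 to 1 range given in the definitions table.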

| Variable | Obs | Mean | SD | Min | Median | Max |
|---|---|---|---|---|---|---|
| $\mathrm{OCV}_{t+1}$ | 23,455 | 1.602 | 1.346 | 0 | 1.386 | 5.659 |
| $\mathrm{OSV}_{t+1}$ | 10,969 | 1.985 | 1.499 | 0 | 1.792 | 5.591 |
| $\mathrm{UEP}_{t+1}$ | 42,500 | 98.392 | 3.311 | 75 | 99.700 | 100 |
| $\mathrm{PCI}_t$ | 30,102 | 0.243 | 0.120 | 0 | 0.287 | 1 |
| $\mathrm{LR}_t$ | 42,211 | 129.092 | 645.516 | 0 | 27 | 29,132 |
| $\mathrm{OAP}_t$ | 42,504 | 104.191 | 103.566 | 0 | 75 | 1333.333 |
| $\mathrm{OSP}_t$ | 42,504 | 27.240 | 16.337 | 4 | 25 | 100 |
| $\mathrm{NOOS}_t$ | 42,504 | 1778.830 | 4458.605 | 0 | 379 | 98,797 |
| $\mathrm{DUL}_t$ | 42,504 | 0.133 | 0.339 | 0 | 0 | 1 |
| $\mathrm{DPL}_t$ | 42,504 | 0.715 | 0.451 | 0 | 1 | 1 |
| $\mathrm{Area}_t$ | 42,504 | 13.294 | 12.027 | 1 | 11 | 29 |

| | $\mathrm{OCV}_{t+1}$ | $\mathrm{OSV}_{t+1}$ | $\mathrm{UEP}_{t+1}$ | $\mathrm{PCI}_t$ | $\mathrm{LR}_t$ | $\mathrm{OAP}_t$ | $\mathrm{OSP}_t$ | $\mathrm{NOOS}_t$ | $\mathrm{DPL}_t$ | $\mathrm{DUL}_t$ | $\mathrm{Area}_t$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $\mathrm{OCV}_{t+1}$ | 1 | | | | | | | | | | |
| $\mathrm{OSV}_{t+1}$ | 0.286 *** | 1 | | | | | | | | | |
| $\mathrm{UEP}_{t+1}$ | 0.076 *** | 0.018 * | 1 | | | | | | | | |
| $\mathrm{PCI}_t$ | 0.160 *** | −0.011 *** | 0.054 *** | 1 | | | | | | | |
| $\mathrm{LR}_t$ | 0.375 *** | 0.215 *** | 0.042 *** | 0.236 *** | 1 | | | | | | |
| $\mathrm{OAP}_t$ | 0.051 *** | 0.280 *** | 0.024 *** | −0.062 *** | −0.001 | 1 | | | | | |
| $\mathrm{OSP}_t$ | −0.043 *** | 0.281 *** | 0.010 ** | −0.081 *** | −0.043 *** | 0.485 *** | 1 | | | | |
| $\mathrm{NOOS}_t$ | 0.348 *** | 0.464 *** | 0.016 *** | 0.114 *** | 0.461 *** | 0.258 *** | 0.156 *** | 1 | | | |
| $\mathrm{DPL}_t$ | −0.063 *** | 0.188 *** | 0.029 *** | −0.085 *** | −0.042 *** | 0.287 *** | 0.451 *** | 0.125 *** | 1 | | |
| $\mathrm{DUL}_t$ | −0.015 ** | −0.013 | 0.021 *** | 0.014 ** | 0.006 | 0.030 *** | −0.012 ** | −0.032 *** | −0.006 | 1 | |
| $\mathrm{Area}_t$ | 0.002 | −0.254 *** | −0.020 *** | −0.031 *** | −0.008 * | −0.179 *** | −0.419 *** | −0.106 *** | −0.023 *** | −0.035 *** | 1 |

| Variable | $\mathrm{OCV}_{t+1}$ | $\mathrm{OSV}_{t+1}$ | $\mathrm{UEP}_{t+1}$ | $\mathrm{PCI}_t$ | $\mathrm{LR}_t$ | $\mathrm{OAP}_t$ | $\mathrm{OSP}_t$ | $\mathrm{NOOS}_t$ | $\mathrm{DPL}_t$ | $\mathrm{DUL}_t$ | $\mathrm{Area}_t$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VIF | 1.32 | 1.36 | 1.06 | 1.08 | 1.25 | 1.37 | 2.11 | 1.56 | 1.32 | 1.01 | 1.52 |
| 1/VIF | 0.757 | 0.735 | 0.947 | 0.929 | 0.803 | 0.728 | 0.474 | 0.641 | 0.757 | 0.992 | 0.658 |
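
The multicollinearity table reports two quantities:

- $\mathrm{VIF}_j = 1/(1 - R_j^2)$, where $R_j^2$ comes from regressing variable j on the other variables;
- its reciprocal 1/VIF (the tolerance).

All reported VIFs are at most 2.11, well below the cut-off of 10 that is often cited as a rule of thumb. Below is a minimal pure-Python sketch of the computation. It assumes an intercept in each auxiliary regression and a full-rank design, and the function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))  # pivot row
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def r_squared(y, xs):
    """R^2 of an OLS regression of y on the columns in xs plus an intercept."""
    n = len(y)
    design = [[1.0] + [col[i] for col in xs] for i in range(n)]
    k = len(design[0])
    xtx = [[sum(design[i][a] * design[i][b] for i in range(n)) for b in range(k)]
           for a in range(k)]
    xty = [sum(design[i][a] * y[i] for i in range(n)) for a in range(k)]
    beta = solve_linear(xtx, xty)
    fitted = [sum(bj * dj for bj, dj in zip(beta, row)) for row in design]
    ybar = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def vif(columns):
    """VIF_j = 1 / (1 - R_j^2), regressing column j on all other columns."""
    result = []
    for j, col in enumerate(columns):
        others = [c for i, c in enumerate(columns) if i != j]
        result.append(1.0 / (1.0 - r_squared(col, others)))
    return result


x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 6]
print(vif([x1, x2]))
```

With only two variables, both VIFs equal 1/(1 − r²), where r is their Pearson correlation. Uncorrelated regressors give a VIF of exactly 1.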

| | (1) $\mathrm{OCV}_{t+1}$ | (2) $\mathrm{OSV}_{t+1}$ | (3) $\mathrm{UEP}_{t+1}$ |
|---|---|---|---|
| $\mathrm{PCI}_t$ | 1.279 ** | −3.413 ** | 0.011 |
| | (0.46) | (1.351) | (0.167) |
| $\mathrm{PCI}^2_t$ | −2.809 ** | 9.341 ** | |
| | (1.213) | (3.831) | |
| $\mathrm{LR}_t$ | 0.001 | 0.000 | 0.000 ** |
| | (0.000) | (0.001) | (0.000) |
| $\mathrm{OAP}_t$ | 0.003 ** | 0.001 | −0.001 |
| | (0.001) | (0.001) | (0.000) |
| $\mathrm{OSP}_t$ | −0.017 | 0.009 | 0.003 |
| | (0.012) | (0.05) | (0.008) |
| $\mathrm{NOOS}_t$ | 0.001 *** | 0.001 *** | −0.001 ** |
| | (0.000) | (0.000) | (0.001) |
| Constant | 1.218 ** | −0.077 | 98.754 *** |
| | (0.411) | (1.647) | (0.243) |
| ID fixed effects | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes |
| N | 18,000 | 8827 | 30,000 |
| R² | 0.064 | 0.443 | 0.004 |
| Adjusted R² | 0.064 | 0.442 | 0.004 |
| F | 97.673 | 404.094 | 4.843 |
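
PCI enters the OCV and OSV equations with a squared term. The sign of the $\mathrm{PCI}^2_t$ coefficient therefore gives the curve's shape: negative means an inverted U, positive means a U. The turning point lies at $-\beta_1/(2\beta_2)$.

The arithmetic below applies that formula to the coefficients in the two-way fixed effects table above. It yields point estimates only; a confidence interval (for example via the delta method) is not computed here.

```python
def turning_point(b1, b2):
    """PCI value at which b1*PCI + b2*PCI**2 reaches its extremum."""
    if b2 == 0:
        raise ValueError("no squared term, so no turning point")
    return -b1 / (2.0 * b2)


# (b1, b2) = coefficients on PCI_t and PCI^2_t, copied from the table above.
main_results = {"OCV_t+1": (1.279, -2.809), "OSV_t+1": (-3.413, 9.341)}

for outcome, (b1, b2) in main_results.items():
    shape = "inverted U (maximum)" if b2 < 0 else "U (minimum)"
    print(f"{outcome}: {shape} at PCI = {turning_point(b1, b2):.3f}")
```

This gives turning points of roughly 0.228 for OCV (inverted U) and 0.183 for OSV (U shape). Both lie inside the observed PCI range of 0 to 1 in the descriptive statistics, where the mean is 0.243.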

| | $\mathrm{OCV}_{t+1}$ | $\mathrm{OSV}_{t+1}$ | $\mathrm{UEP}_{t+1}$ |
|---|---|---|---|
| $\mathrm{PCI}_t$ | 1.466 *** | −1.719 ** | −0.006 |
| | (0.385) | (0.739) | (0.076) |
| $\mathrm{PCI}^2_t$ | −3.348 *** | 4.456 ** | |
| | (0.986) | (2.039) | |
| $\mathrm{LR}_t$ | 0.001 | 0.000 | 0.000 ** |
| | (0.000) | (0.001) | (0.000) |
| $\mathrm{OAP}_t$ | 0.002 ** | 0.001 | 0.000 |
| | (0.001) | (0.001) | (0.000) |
| $\mathrm{OSP}_t$ | −0.016 | 0.008 | 0.007 |
| | (0.012) | (0.05) | (0.008) |
| $\mathrm{NOOS}_t$ | 0.000 ** | 0.001 *** | −0.000 ** |
| | (0.001) | (0.001) | (0.001) |
| Constant | 1.375 *** | −0.122 | 98.657 *** |
| | (0.389) | (1.633) | (0.232) |
| ID fixed effects | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes |
| N | 18,000 | 8827 | 30,000 |
| R² | 0.063 | 0.451 | 0.008 |
| Adjusted R² | 0.062 | 0.451 | 0.008 |
| F | 100.639 | 420.567 | 6.728 |

| | $\mathrm{OCV}_{t+1}$ | $\mathrm{OSV}_{t+1}$ | $\mathrm{UEP}_{t+1}$ |
|---|---|---|---|
| $\mathrm{PCI}_t$ | 1.473 *** | −1.292 * | −0.002 |
| | (0.385) | (0.676) | (0.076) |
| $\mathrm{PCI}^2_t$ | −3.375 *** | 3.223 * | |
| | (0.985) | (1.855) | |
| $\mathrm{NNR}_t$ | 0.015 | 0.037 ** | −0.062 ** |
| | (0.01) | (0.014) | (0.021) |
| $\mathrm{OAP}_t$ | 0.002 ** | 0.001 | 0.000 |
| | (0.001) | (0.001) | (0.000) |
| $\mathrm{OSP}_t$ | −0.016 | 0.008 | 0.006 |
| | (0.012) | (0.05) | (0.008) |
| $\mathrm{NOOS}_t$ | 0.000 ** | 0.001 *** | 0.001 |
| | (0.000) | (0.000) | (0.000) |
| Constant | 1.378 *** | 0.034 | 98.666 *** |
| | (0.387) | (1.617) | (0.238) |
| ID fixed effects | Yes | Yes | Yes |
| Month fixed effects | Yes | Yes | Yes |
| N | 18,000 | 8827 | 30,000 |
| R² | 0.063 | 0.452 | 0.014 |
| Adjusted R² | 0.063 | 0.452 | 0.013 |
| F | 90.615 | 389.142 | 6.792 |

| | OCVt+1 | OSVt+1 | UEPt+1 |
|---|---|---|---|
| NewPCIt | 1.635 *** | −2.404 *** | −0.036 |
| | (0.462) | (0.44) | (0.123) |
| NewPCIt² | −6.797 ** | 12.568 *** | |
| | (2.316) | (1.924) | |
| LRt | 0.001 | 0.000 | 0.000 ** |
| | (0.000) | (0.001) | (0.000) |
| OAPt | 0.002 ** | 0.001 | 0.000 |
| | (0.001) | (0.001) | (0.000) |
| OSPt | −0.016 | 0.008 | 0.007 |
| | (0.012) | (0.05) | (0.008) |
| NOOSt | 0.000 *** | 0.001 *** | −0.000 ** |
| | (0.000) | (0.001) | (0.000) |
| _cons | 1.331 *** | 0.175 | 98.660 *** |
| | (0.39) | (1.633) | (0.231) |
| ID Fixed | Yes | Yes | Yes |
| Month Fixed | Yes | Yes | Yes |
| N | 18,000 | 8826 | 30,000 |
| R² | 0.063 | 0.454 | 0.008 |
| Adj. R² | 0.063 | 0.453 | 0.008 |
| F | 100.359 | 440.954 | 6.724 |
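In the OCV and OSV models, PCIt enters together with its square, so the fitted relationship β1·PCI + β2·PCI² has a turning point where its derivative β1 + 2β2·PCI equals zero, at PCI* = −β1/(2β2). A negative β2 implies an inverted U (a maximum), and a positive β2 implies a U shape (a minimum). The sketch below applies this arithmetic to the point estimates in the four two-way fixed-effects tables above. The row labels follow the order of Section 5.5 and are an assumption, because the tables in this section carry no captions. The results are illustrative point calculations with no confidence intervals. The NewPCI values are on the scale of the recalculated index and are not directly comparable with the others. Whether each turning point falls inside the observed range of PCI should be checked against the descriptive statistics.

```python
from dataclasses import dataclass


@dataclass
class QuadFit:
    label: str
    b1: float  # coefficient on the linear PCI term
    b2: float  # coefficient on the squared PCI term

    def turning_point(self) -> float:
        # d/dPCI (b1*PCI + b2*PCI^2) = b1 + 2*b2*PCI = 0  =>  PCI* = -b1 / (2*b2)
        return -self.b1 / (2.0 * self.b2)

    def shape(self) -> str:
        # b2 < 0: concave, so the turning point is a maximum; b2 > 0: convex, a minimum
        return "inverted U (maximum)" if self.b2 < 0 else "U (minimum)"


# Point estimates copied from the four fixed-effects tables above;
# the labels are assumed from the order of Section 5.5.
fits = {
    "OCV": [QuadFit("baseline", 1.279, -2.809), QuadFit("outliers removed", 1.466, -3.348),
            QuadFit("NNR control", 1.473, -3.375), QuadFit("NewPCI", 1.635, -6.797)],
    "OSV": [QuadFit("baseline", -3.413, 9.341), QuadFit("outliers removed", -1.719, 4.456),
            QuadFit("NNR control", -1.292, 3.223), QuadFit("NewPCI", -2.404, 12.568)],
}

for outcome, rows in fits.items():
    for f in rows:
        print(f"{outcome:4s} {f.label:17s} PCI* = {f.turning_point():.3f}  {f.shape()}")
```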

| | PCIt | PCIt² | OCVt+1 | PCIt | PCIt² | OSVt+1 | PCIt | UEPt+1 |
|---|---|---|---|---|---|---|---|---|
| L.PCIt | −0.05 ** | −0.088 *** | | −0.111 ** | −0.074 *** | | 0.222 *** | |
| | (0.033) | (0.013) | | (0.055) | (0.021) | | (0.009) | |
| L.PCIt² | 0.629 *** | 0.437 *** | | 0.737 *** | 0.379 *** | | | |
| | (0.083) | (0.032) | | (0.143) | (0.055) | | | |
| PCIt | | | 5.443 ** | | | −37.303 *** | | −0.194 |
| | | | (2.598) | | | (13.344) | | (0.232) |
| PCIt² | | | −13.955 ** | | | 93.726 *** | | |
| | | | (5.696) | | | (31.165) | | |
| LRt | 0.001 | 0.000 | 0.000 ** | 0.001 | 0.000 | 0.000 ** | 0.000 | 0.000 ** |
| | (0.000) | (0.001) | (0.000) | (0.000) | (0.001) | (0.000) | (0.001) | (0.000) |
| OAPt | 0.000 | −0.000 ** | 0.002 ** | 0.001 | −0.000 ** | 0.003 * | 0.001 | 0.001 |
| | (0.001) | (0.000) | (0.001) | (0.000) | (0.001) | (0.002) | (0.001) | (0.002) |
| OSPt | 0.002 ** | 0.001 | −0.015 | 0.004 | 0.002 | 0.019 | −0.001 | 0.005 |
| | (0.001) | (0.000) | (0.013) | (0.003) | (0.001) | (0.048) | (0.001) | (0.007) |
| NOOSt | 0.000 | 0.000 *** | 0.000 *** | 0.001 | 0.000 *** | 0.000 | 0.000 ** | −0.000 ** |
| | (0.001) | (0.001) | (0.001) | (0.000) | (0.001) | (0.000) | (0.000) | (0.000) |
| ID Fixed | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Month Fixed | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| N | 14,827 | 14,827 | 14,827 | 6000 | 6000 | 6000 | 16,154 | 16,154 |
| R² | | | 0.006 | | | 0.635 | | 0.001 |
| F | | | 11.455 | | | 20.08 | | 1.644 |
| CD Wald F | | | 93.058 | | | 17.14 | | 662.02 |
| SW S stat. | | | 11.987 | | | 35.434 | | 0.981 |
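In the endogeneity table, the one-period lags L.PCIt and L.PCIt² act as instruments in the first-stage columns, whose dependent variables are PCIt and PCIt², and the outcome columns report the second stage. The following is a minimal sketch of two-stage least squares with a lagged regressor as the excluded instrument, written in NumPy with simulated data. The regressor follows an AR(1) process and shares its contemporaneous shock with the error term, so OLS is biased upward. Its lag is correlated with the regressor but not with the current error. Fixed effects and controls are omitted for brevity, and `two_sls` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def two_sls(y: np.ndarray, X: np.ndarray, Z: np.ndarray) -> np.ndarray:
    """Two-stage least squares point estimates.

    X: regressors (n x k), including the endogenous ones and an intercept.
    Z: instruments (n x m, m >= k), including the exogenous regressors.
    """
    # First stage: project every column of X on the instrument set
    pi, *_ = np.linalg.lstsq(Z, X, rcond=None)
    X_hat = Z @ pi
    # Second stage: regress y on the fitted regressors
    beta, *_ = np.linalg.lstsq(X_hat, y, rcond=None)
    return beta


# Simulated panel (placeholder data, not the platform sample)
rng = np.random.default_rng(2)
n_ids, n_periods, rho, beta_true = 1000, 6, 0.6, 0.5
v = rng.normal(size=(n_ids, n_periods))            # shocks to the regressor
x = np.zeros((n_ids, n_periods))
x[:, 0] = v[:, 0]
for t in range(1, n_periods):
    x[:, t] = rho * x[:, t - 1] + v[:, t]          # persistent AR(1) regressor
e = 0.8 * v + rng.normal(size=(n_ids, n_periods))  # error shares the current shock, so x is endogenous
y = 1.0 + beta_true * x + e

# Drop the first period (no lag available); the lagged regressor is the excluded instrument
x_t, x_lag, y_t = x[:, 1:].ravel(), x[:, :-1].ravel(), y[:, 1:].ravel()
ones = np.ones_like(x_t)
X = np.column_stack([ones, x_t])
Z = np.column_stack([ones, x_lag])

b_ols, *_ = np.linalg.lstsq(X, y_t, rcond=None)
b_iv = two_sls(y_t, X, Z)
print(f"OLS slope: {b_ols[1]:.3f}, 2SLS slope: {b_iv[1]:.3f}")
```

The lag is a valid instrument here only because the simulated errors are not serially correlated, and that assumption has to be argued for real data. Standard errors from a manual second stage are also not valid. Dedicated IV routines correct them and report weak-instrument diagnostics such as the Cragg-Donald Wald F shown in the table.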

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, W.; Yuan, Y.; Bai, Z.; Sang, S. How Doctors’ Proactive Crafting Behaviors Influence Performance Outcomes: Evidence from an Online Healthcare Platform. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 226. https://doi.org/10.3390/jtaer20030226
