1. Introduction

Short text generation is becoming increasingly critical in electronic commerce, where real-time communication and engagement play a central role in marketing, branding, and community building. Platforms such as Twitter (now X), Instagram, and Reddit have transformed interactions between consumers and organizations, relying heavily on short-form content like tweets, captions, and announcements to convey information quickly and effectively. Unlike long-form narratives, short texts must be concise, emotionally resonant, and optimized for platform constraints, including strict character limits and evolving linguistic norms. Micro-content must also adapt to audience preferences, trending topics, and time-sensitive events—making automation in this domain both technically challenging and commercially valuable.

From a societal perspective, short text generation enables high-impact applications in domains such as emergency communication, sentiment monitoring, and political discourse analysis. A particularly dynamic and commercially significant use case is the Non-Fungible Token (NFT) ecosystem, which has rapidly evolved since 2020 into a core pillar of the digital economy. NFTs intersect with diverse domains including digital art, gaming, collectibles, and metaverse assets, with platforms like Twitter serving as the primary channel for community engagement and promotional activity.

Recent advances in generative artificial intelligence, particularly large language models (LLMs) such as GPT [1], BLOOM [2], LLaMA [3], Mistral [4], DeepSeek [5], and Claude [6], have significantly improved the quality of machine-generated text. Domain-specific models such as BERTweet and TwHIN-BERT [7,8] are pre-trained on social media text and better capture the informal, emoji-rich language of social platforms.

However, existing LLM-based generation techniques rarely employ reinforcement-based optimization grounded in real-world engagement metrics, and most fail to adapt to NFT-specific terminology, style, and community expectations. Engagement indicators such as likes, replies, and retweets are seldom included in generation objectives, and reinforcement learning remains underutilized for optimizing social media content toward concrete performance goals.

To address these limitations, we propose RL-TweetGen, an AI-powered socio-technical framework that integrates large language models with reinforcement learning to automate short-form content generation optimized for engagement. RL-TweetGen combines:

Domain-specific dataset construction and intent-aware prompt engineering;

Modular fine-tuning using Low-Rank Adaptation (LoRA);

Multi-dimensional output control via a Length–Style–Context (LSC) Variation Algorithm;

Reinforcement learning optimization guided by engagement-prediction models and expert feedback.

Research Contributions

RL-TweetGen presents a novel end-to-end system for generating high-quality, semantically aligned, and engagement-optimized tweets, specifically tailored for NFT marketing, branding, and community engagement. The key contributions of the system are outlined below:

Domain-Specific Dataset Construction: Curated NFT-related tweets with structured prompts, labeled metadata, and engagement metrics for model training and evaluation.

Intent-Aware Prompt Engineering: Semantic classification combined with domain expertise to generate structured, goal-aligned prompts.

Contextual and Semantic Fine-Tuning: Fine-tuning three instruction-tuned LLMs (LLaMA-3.1-8B, Mistral 7B, and DeepSeek 7B) using LoRA to align outputs with NFT-specific language and tone.

LSC Variation Algorithm: A novel mechanism for generating diverse outputs across length, style, and context dimensions for targeted audience engagement.

Reinforcement Learning Engagement Optimizer (RLEO): A blended reward function combining engagement score predictions (via XGBoost) and expert feedback, integrated with advanced decoding strategies (Tailored Beam Search, Enhanced Nucleus Sampling, and Contextual Temperature Scaling).

Together, these components enable RL-TweetGen to produce domain-aware, style-controllable, and engagement-optimized tweets, addressing a key gap in existing RL-NLP systems for digital commerce.
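The decoding strategies listed for RLEO are not detailed at this point in the paper. For orientation only, the following Python sketch shows the standard techniques they are named after: temperature scaling of next-token logits, followed by nucleus (top-p) filtering and sampling. The function names and default values (`temperature=0.8`, `top_p=0.9`) are illustrative assumptions, not RL-TweetGen's tailored or enhanced variants.

```python
import math
import random
from typing import List, Optional


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; lower temperatures sharpen the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_filter(probs: List[float], top_p: float) -> List[int]:
    """Return the smallest set of token ids whose cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for idx in order:
        kept.append(idx)
        cumulative += probs[idx]
        if cumulative >= top_p:
            break
    return kept


def sample_next_token(logits: List[float], temperature: float = 0.8,
                      top_p: float = 0.9, rng: Optional[random.Random] = None) -> int:
    """Temperature scaling, then top-p filtering, then sampling from the nucleus."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    kept = nucleus_filter(probs, top_p)
    # random.choices normalizes the weights, so the nucleus is implicitly renormalized
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]
```

For example, with logits `[2.0, 1.0, 0.1, -1.0]`, temperature 1.0, and `top_p=0.8`, the nucleus contains only the two most likely tokens, so the lower-probability continuations are never sampled.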

The remainder of this paper is organized as follows:

Section 2 reviews the literature on short-text generation, LLMs, reinforcement learning, and engagement optimization in digital commerce.

Section 3 details the RL-TweetGen methodology, including architecture, data curation, and model tuning.

Section 4 describes the experimental setup and reinforcement learning strategy.

Section 5 presents the evaluation results and analysis.

Section 7 includes representative use cases.

Section 8 concludes with key contributions and future directions.

2. Related Work

This section provides a comprehensive review of the key research areas that underpin the development of the proposed RL-TweetGen. It examines four interconnected domains: the evolution of short-text-generation techniques, the advancement and domain adaptation of large language models (LLMs), the application of reinforcement learning for goal-directed text generation, and the engagement dynamics driven by social media within the NFT ecosystem. Collectively, these areas establish both the technical foundation and the socio-commercial rationale for building an engagement-optimized, domain-aware tweet-generation system for digital commerce platforms.

2.1. Evolution of Short-Text Generation

Short-text generation has progressed from rule-based systems to sophisticated neural models capable of generating concise and contextually appropriate content. Early approaches relied on templates, statistical language models (e.g., n-grams), and Hidden Markov Models (HMMs). While these techniques produced grammatically valid sentences, they lacked semantic richness, adaptability, and user-centric tone—key requirements for micro-content on platforms like Twitter and Instagram.

The introduction of Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks significantly improved sequence modeling and long-range dependency handling [9]. Yet these models still exhibited limitations in capturing global context, especially for shorter, multi-intent messages. The advent of Transformer-based models marked a paradigm shift, enabling more robust contextual representation via self-attention mechanisms and parallel processing [10]. This breakthrough gave rise to general-purpose architectures that could be repurposed across NLP tasks, including summarization, question answering, and short-text generation.

Further innovations introduced controllability and semantic grounding into generation. Approaches such as semantic-key-conditioned generation [11], style-conditioned decoding, and emotion-aware microtext generation [12,13] improved output alignment with user intent. Evolutionary algorithms and neuro-symbolic hybrids have also been employed to optimize generation strategies for domain-specific tasks, including low-resource language processing and context-sensitive advertising [14,15].

While transformer-based models have greatly improved the fluency and contextual relevance of short-text generation, they often lack mechanisms to align outputs with task-specific objectives such as engagement optimization. Prior work on style-conditioned and emotion-aware generation has shown that microtext can be tailored for sentiment or tone, but these methods typically overlook real-time user interaction data and commercial performance indicators. RL-TweetGen extends these advances by integrating predictive engagement feedback directly into the generation process, enabling the creation of concise, personalized, and engagement-driven content that fits within platform character limits while preserving semantic and emotional resonance.

2.2. Large Language Models and Domain Adaptation

Large Language Models (LLMs) such as OpenAI’s GPT, Meta’s LLaMA, Mistral AI’s Mistral, and DeepSeek have revolutionized natural language generation. Trained on massive web-scale corpora, these models achieve state-of-the-art performance in zero-shot and few-shot scenarios across diverse tasks. However, their generality can be a limitation: without domain-specific adaptation, outputs may exhibit hallucinations, lack context awareness, or deviate from user expectations in specialized settings such as NFTs or digital marketing [16,17].

To address these challenges, fine-tuning strategies have been developed to tailor pre-trained models to specific application areas. Instruction tuning aligns outputs with task phrasing, while supervised fine-tuning with labeled examples improves task consistency. Parameter-efficient approaches such as Low-Rank Adaptation (LoRA) [18], adapters, and prompt tuning reduce the computational and memory costs of adaptation, making customization feasible even on consumer-grade hardware [19,20]. Extensions such as D²LoRA [21] further enhance efficiency in low-resource settings, enabling effective fine-tuning for domain-specific tasks such as title generation and healthcare communication.

Beyond supervised methods, alternative alignment techniques have emerged. Direct Preference Optimization (DPO) [22] offers an alternative to reinforcement learning from human feedback (RLHF) that requires no reinforcement learning loop; it can be used standalone or combined with LoRA, and has shown benefits in reducing bias and improving relevance in applications such as e-commerce search. In RL-TweetGen, LoRA-based fine-tuning is combined with domain-informed prompt engineering and reinforcement learning to inject NFT-specific terminology, hashtags, slang, and discourse patterns into the generation pipeline.
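The DPO objective can be made concrete. For a prompt x with a preferred response y_w and a dispreferred response y_l, DPO minimizes -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), where π_ref is a frozen reference model. The Python sketch below evaluates this loss for one pair from precomputed sequence log-probabilities; `beta=0.1` is an illustrative assumption, and the sketch does not describe how RL-TweetGen itself relates to DPO.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen log-ratio - rejected log-ratio)))."""
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)
```

Averaging this quantity over a batch of preference pairs gives the training loss. When the policy matches the reference, the loss is log 2; raising the policy's relative likelihood of the preferred response lowers it.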

Evaluating domain-adapted LLMs requires more than surface-level lexical metrics. Semantic-aware measures such as BERTScore [23] and BLEURT [24] capture contextual fidelity, while traditional metrics like ROUGE-L remain useful for structural comparison. These automated measures are often complemented by human-in-the-loop assessments to ensure alignment with domain expectations and engagement objectives.

Despite these advances, several challenges remain, including overfitting, domain drift, and maintaining robustness under evolving platform norms. Continuous validation, real-time feedback integration, and iterative training loop optimization are essential for reliable deployment in dynamic environments like social media. Moreover, the ethical implications of optimizing content solely for engagement cannot be overlooked. Prior work in persuasive computing [25] and socio-technical AI design [26] highlights risks such as manipulative algorithmic behavior, bias reinforcement, and clickbait tendencies, underscoring the need for ethically grounded generative systems.

In summary, while existing LLMs offer powerful general-purpose generation capabilities, they often lack domain specificity in highly contextual environments like NFTs. Domain adaptation methods such as instruction tuning, LoRA, and adapters improve linguistic alignment but rarely address downstream impact on audience engagement. RL-TweetGen bridges this gap by combining domain-specific fine-tuning with engagement-aware reinforcement learning, ensuring both linguistic fidelity and measurable performance in social media marketing.

2.3. Reinforcement Learning for Controlled and Goal-Aware Generation

Reinforcement Learning (RL) has emerged as a powerful paradigm for aligning text generation with human-defined objectives such as factual accuracy, safety, persuasion, and user engagement. Unlike supervised fine-tuning, which focuses on replicating labeled examples, RL optimizes models based on outcome-driven feedback, enabling adaptive and goal-oriented content generation.

Reinforcement Learning from Human Feedback (RLHF) has been central to this evolution, using reward signals from preference comparisons, annotation scores, or proxy metrics (e.g., engagement statistics) to guide optimization [27]. This approach has been applied to improve helpfulness, reduce toxicity, and align tone with social norms. More recent methods simplify or reduce the cost of this pipeline: Direct Preference Optimization (DPO) trains directly on preference pairs without fitting a separate reward model or running an RL loop, while Reinforced Self-Training (ReST) improves sample efficiency by generating outputs from the model itself, filtering them with a reward signal, and fine-tuning on the retained samples.

For short-text generation, RL supports optimization for complex, multi-objective goals—maximizing engagement (likes, retweets), adhering to platform constraints, and matching stylistic preferences. Custom reward functions, such as those used in RL-TweetGen, blend predictive engagement models (e.g., XGBoost-based scores) with domain expert evaluations to steer generation toward real-world effectiveness. However, challenges remain, including training instability, reward sparsity, credit assignment in short sequences, and the computational cost of exploration.
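A blended reward of this kind can be sketched as a convex combination of a predicted engagement score and an expert rating, followed by the batch-mean baseline subtraction commonly used in REINFORCE-style policy-gradient updates. The weighting `alpha=0.7`, the 1-to-5 expert rating scale, and all function names are hypothetical. The predicted engagement could, for instance, be the probability of the High class returned by an XGBoost classifier's `predict_proba`, but the exact formulation used by RLEO is not given in this section.

```python
from statistics import mean
from typing import List


def scale_rating(rating: float, low: float = 1.0, high: float = 5.0) -> float:
    """Map an expert rating on a hypothetical [low, high] scale to [0, 1]."""
    return (rating - low) / (high - low)


def blended_reward(engagement_pred: float, expert_score: float, alpha: float = 0.7) -> float:
    """alpha * predicted engagement + (1 - alpha) * expert score, both assumed in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * engagement_pred + (1.0 - alpha) * expert_score


def advantages(rewards: List[float]) -> List[float]:
    """Subtract the batch-mean baseline so above-average tweets get positive advantages."""
    baseline = mean(rewards)
    return [r - baseline for r in rewards]
```

The single `alpha` makes the trade-off between predicted engagement and expert judgment explicit; subtracting the baseline reduces gradient variance without changing which candidates are favored.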

While prior RL-based text-generation systems have primarily targeted safety, helpfulness, or factuality, they often overlook domain-specific engagement factors and user behavior modeling. RL-TweetGen addresses this gap by defining a custom reward function grounded in actual Twitter engagement metrics (likes, retweets) and domain-specific sentiment signals, aligning the RL signal with real-world success criteria in NFT communities.

2.4. NFTs, Social Platforms, and Community Behavior

Non-Fungible Tokens (NFTs) have rapidly evolved from niche digital assets into a major pillar of the decentralized digital economy. Their use spans digital art, gaming, collectibles, identity, and metaverse assets. As NFTs gained traction, platforms like Twitter emerged as the central communication hub for creators, collectors, influencers, and marketplaces. These platforms are used not only for announcements and marketing but also for managing sentiment, building trust, and coordinating community behavior [28,29].

Studies show that tweet engagement (likes, comments, and retweets) directly affects NFT visibility and valuation. Emotions like excitement, trust, and curiosity are linked to increased user interaction and price fluctuations [28]. The social layer of NFTs, built from shared memes, cultural references, and reply threads, forms a participatory ecosystem where community feedback loops shape success. However, these dynamics are vulnerable to manipulation through bot amplification, influencer-led hype cycles, and misinformation [30].

Transparency, authenticity, and ethical communication have therefore become critical for sustained community trust [31]. Despite this, most generative NLP systems lack the granularity to interpret or generate content that aligns with NFT-specific discourse and audience expectations. There is a growing need for domain-aware generative tools that adapt to evolving vocabulary, meme culture, and sentiment trends.

By using NFTs as a real-world evaluation domain, RL-TweetGen demonstrates how socio-technical alignment—between language models, social context, and platform norms—can improve automated communication in commerce. Tweets announcing drops, responding to followers, or celebrating milestones are not just content—they are signals in a trust-based market.

While prior studies have explored how social media sentiment influences NFT valuation [28,29], few have translated these insights into generative modeling techniques. Moreover, traditional content-generation tools fail to engage with the fast-paced, meme-driven, and community-centric nature of NFT discourse. RL-TweetGen directly responds to this gap by combining domain-adapted LLMs with real-time engagement signals, making it capable of producing socially resonant content that aligns with cultural and emotional expectations of NFT communities. This socio-technical alignment is critical for trust-building and visibility in decentralized digital economies.

2.5. Toward an Integrated Framework for Engagement-Optimized Generation

While considerable progress has been made in short-text modeling, domain-specific fine-tuning, and RL-based control, most existing systems operate in silos. There is a lack of unified frameworks that combine all three elements in a scalable, modular, and socially grounded manner. Additionally, few systems incorporate engagement prediction directly into the learning loop, despite its clear importance in social commerce.

RL-TweetGen addresses this gap by integrating instruction-tuned LLMs, parameter-efficient domain adaptation, and reward-based optimization into a unified, modular pipeline designed for high-impact content creation in dynamic commercial settings. It operationalizes insights from prior work, such as fine-tuning efficiency, reward-based optimization, and NFT community behavior, into a cohesive architecture. Its evaluation within the NFT domain, which is characterized by emotional volatility, content saturation, and fast trend cycles, offers insights into how generative AI can be made responsive to both social and commercial imperatives. This synthesis enables RL-TweetGen to generate content that is not only grammatical and domain-correct but also strategically optimized for interaction, relevance, and ethical resonance within the social web.

The design of RL-TweetGen is grounded in the four interrelated research areas reviewed above, each contributing a critical dimension to its overall architecture. Advances in short-text generation provide the foundational modeling strategies for producing concise and coherent content. Building on this, developments in large language models (LLMs) and domain adaptation enable the deployment of instruction-tuned models tailored specifically for NFT-related discourse. Reinforcement learning frameworks introduce mechanisms for optimizing tweet generation based on engagement-oriented reward signals and expert feedback, aligning outputs with user interaction goals. Insights from the social dynamics of NFT communities further inform the system’s emphasis on stylistic variability, credibility markers, and audience sensitivity. These elements converge within a modular architecture designed to integrate and operationalize these research strands into a scalable, flexible, and engagement-aware framework.

More broadly, RL-TweetGen contributes to a growing movement toward responsible AI for digital commerce. By enabling controllable, engagement-driven, and ethically guided generation, the system advances the design of communication technologies that are not only linguistically fluent but also socially resonant and commercially effective.

3. Methodology

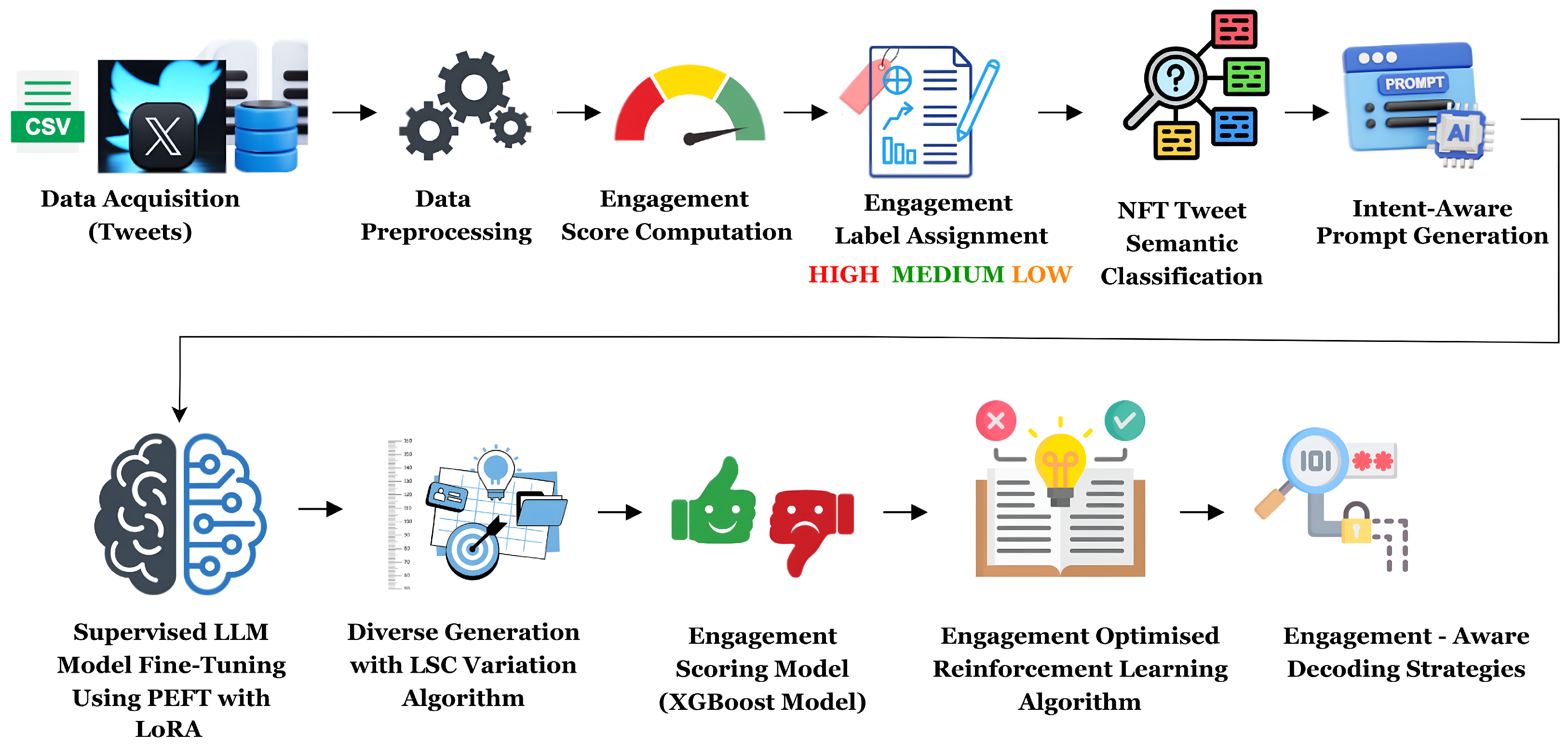

This section outlines the socio-technical architecture and implementation strategy of the proposed RL-TweetGen system, an integrated framework for generating engagement-optimized tweets in the NFT domain. The system is composed of multiple interlinked modules, including data collection, semantic classification, structured prompt generation, large language model (LLM) fine-tuning, decoding strategy selection, reinforcement learning-based optimization, and iterative output refinement. The complete system architecture is depicted in Figure 1.

3.1. Dataset Acquisition, Characteristics, and Preprocessing

The dataset used in this study was derived from the publicly available “Verified NFT Tweets” collection on Kaggle [32], spanning tweets posted between 2020 and 2022. It provides a rich corpus of NFT-related tweets accompanied by detailed metadata and engagement statistics. All data usage strictly complied with the dataset’s Creative Commons Attribution–NonCommercial–ShareAlike 4.0 International License (CC BY-NC-SA 4.0) and Twitter’s data redistribution policy. No data was collected via the Twitter API, and the dataset was used solely for non-commercial, academic research purposes. A curated subset of 2134 high-quality tweets was selected for analysis and model development.

Each tweet entry includes metadata such as tweet ID, timestamp, username, tweet content, language, mentioned users, hashtags, cashtags, quoted users, engagement indicators (likes, retweets, replies), and media presence flags. These features enable comprehensive semantic and contextual modeling, supporting both generation and engagement-scoring tasks.

3.1.1. Preprocessing Pipeline

A multi-stage preprocessing pipeline was implemented to ensure textual quality, linguistic uniformity, and format consistency:

Duplicate Removal: Identical tweets were removed to reduce redundancy and prevent data leakage.

Short Text Filtering: Tweets with fewer than 20 characters were excluded to eliminate low-information posts.

Language Filtering: The langid library was used to retain only English-language tweets, ensuring linguistic consistency.

Encoding Normalization: A Latin-1 to UTF-8 fallback decoding procedure was applied to resolve encoding artifacts and recover any corrupted text. The conversion re-encodes tweet content into UTF-8, ensuring compatibility with modern NLP pipelines that require UTF-8 input.

Character Cleaning: Non-ASCII and corrupted characters were removed using regular expressions to preserve tokenizability.

Whitespace and Punctuation Normalization: Irregular spacing and malformed punctuation were standardized to improve parsing and formatting.
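The preprocessing stages listed above can be sketched in standard-library Python as follows. The sketch rests on several assumptions. The Latin-1 to UTF-8 fallback is interpreted as repairing UTF-8 bytes that were mis-decoded as Latin-1, with the original string kept when that fails. Duplicates are detected on the raw text. Language identification is passed in as a callable. With langid installed, that callable could be `lambda t: langid.classify(t)[0] == "en"`; the default used here accepts every tweet so the example runs without third-party packages.

```python
import re
from typing import Callable, Iterable, List

NON_ASCII = re.compile(r"[^\x00-\x7F]+")
WHITESPACE = re.compile(r"\s+")
SPACE_BEFORE_PUNCT = re.compile(r"\s+([,.!?;:])")


def fix_mojibake(text: str) -> str:
    """Assumed Latin-1 -> UTF-8 fallback: undo UTF-8 bytes that were mis-decoded as Latin-1."""
    try:
        return text.encode("latin-1").decode("utf-8")
    except UnicodeError:  # covers both UnicodeEncodeError and UnicodeDecodeError
        return text       # not repairable this way: keep the original string


def clean_text(text: str) -> str:
    text = fix_mojibake(text)
    text = NON_ASCII.sub(" ", text)             # drop non-ASCII characters (including emoji)
    text = WHITESPACE.sub(" ", text)            # collapse irregular spacing
    text = SPACE_BEFORE_PUNCT.sub(r"\1", text)  # "drop , frens" -> "drop, frens"
    return text.strip()


def preprocess(tweets: Iterable[str],
               is_english: Callable[[str], bool] = lambda t: True,
               min_chars: int = 20) -> List[str]:
    seen = set()
    cleaned = []
    for raw in tweets:
        if len(raw) < min_chars or raw in seen:  # short-text filter and duplicate removal
            continue
        seen.add(raw)
        if not is_english(raw):                  # language filter
            continue
        cleaned.append(clean_text(raw))
    return cleaned
```

As in the described pipeline, removing all non-ASCII characters also removes emoji and accented letters. This trades some expressive content for tokenizer-friendly input.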

3.1.2. Dataset Statistics

The step-by-step preprocessing results are summarized in Table 1.

The resulting dataset constitutes a semantically coherent, structurally clean, and contextually diverse corpus of NFT-specific tweets, suitable for training both generative language models and engagement-prediction modules. The engagement-level stratification ensures balanced representation across low-, medium-, and high-engagement tweets, enabling fair evaluation of the proposed RL-TweetGen framework.

3.2. Engagement Score Computation and Labeling

To quantify and categorize tweet-level user engagement, a composite engagement score was computed based on user interaction metrics. The scoring formula, presented in Equation (1), assigns differential weights to likes, retweets, and replies, reflecting the relative depth of interaction; replies are deemed the most indicative of meaningful engagement.

The weights 1, 2, and 3 in Equation (1) represent a hierarchy of user engagement: likes denote passive approval, retweets reflect content amplification, and replies suggest deeper interaction. This heuristic captures the increasing effort and intent behind each action.

Following score computation, a quantile-based binning strategy was applied to discretize engagement values into three balanced classes: Low, Medium, and High. This stratification enables effective training of classification models and facilitates multi-class evaluation.
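The scoring and binning steps can be sketched as follows. This assumes Equation (1) is the unnormalized weighted sum E = 1 × likes + 2 × retweets + 3 × replies implied by the weights described above. The exact binning implementation is not stated; the sketch uses Python's `statistics.quantiles` to obtain the two tercile cut points.

```python
from statistics import quantiles
from typing import List


def engagement_score(likes: int, retweets: int, replies: int) -> int:
    """Assumed form of Equation (1): weights 1, 2, 3 for likes, retweets, replies."""
    return 1 * likes + 2 * retweets + 3 * replies


def tercile_labels(scores: List[float]) -> List[str]:
    """Quantile-based binning into Low / Medium / High using the two tercile cut points."""
    q1, q2 = quantiles(scores, n=3, method="inclusive")
    labels = []
    for s in scores:
        if s <= q1:
            labels.append("Low")
        elif s <= q2:
            labels.append("Medium")
        else:
            labels.append("High")
    return labels
```

Because many tweets can share identical low scores (for example, zero engagement), tied values may pile up below a cut point and make the classes uneven; a rank-based split is one way to enforce strictly equal class sizes.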

The full preprocessing and labeling workflow is outlined in Algorithm 1.

This preprocessing and engagement annotation procedure ensures clean, structured input data for model fine-tuning and facilitates reliable performance evaluation under a supervised learning framework.

| Algorithm 1 Data preprocessing and engagement scoring |

Input: Raw_Tweets
Output: Cleaned_Tweets with Engagement Labels
Load raw dataset
for each tweet t in Raw_Tweets do
    if length(t) < 20 or t is a duplicate then
        Remove t
    else
        Detect language using langid; retain only English tweets
        Normalize encoding from Latin-1 to UTF-8
        Remove non-ASCII characters using regular expressions
        Normalize whitespace and punctuation
    end if
end for
for each tweet t in the cleaned set do
    Compute engagement score E using Equation (1)
end for
Apply quantile-based binning to all E
Assign label: Low, Medium, or High

|

3.3. Tweet Semantic Classification

To enable domain-aware NFT tweet generation, a semantic classification pipeline was designed to categorize tweets into six predefined domains: Art, Gaming, Music, Photography, Membership, and Profile Pictures (PFPs).

Annotation and Feature Extraction: Tweets were annotated using a rule-based strategy combining keyword matching with regex-based pattern detection to extract domain-indicative cues. This heuristic labeling approach produced a weakly supervised training set aligned with the semantic content of each domain. For feature extraction, TF-IDF vectorization with bigrams was applied to capture discriminative token patterns relevant to NFT subject areas.
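The weak labeling and feature extraction steps can be illustrated with a small standard-library sketch. The keyword patterns are hypothetical stand-ins, since the paper does not list its rules, and ties between domains are broken by dictionary order. The TF-IDF weighting uses unigrams plus bigrams with the smoothed IDF, ln((1 + n) / (1 + df)) + 1, that scikit-learn's `TfidfVectorizer` applies by default, followed by L2 normalization; tokenization is simpler than scikit-learn's.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical keyword rules; the paper's actual keyword lists are not given.
DOMAIN_PATTERNS = {
    "Art": re.compile(r"\b(art|artist|artwork|generative|painting)\b", re.I),
    "Gaming": re.compile(r"\b(game|gaming|gamer|play-to-earn|p2e|metaverse)\b", re.I),
    "Music": re.compile(r"\b(music|song|album|track|beats?)\b", re.I),
    "Photography": re.compile(r"\b(photo|photos|photography|photographer|camera)\b", re.I),
    "Membership": re.compile(r"\b(membership|members?|holders?|allowlist|whitelist)\b", re.I),
    "PFPs": re.compile(r"\b(pfps?|avatars?|profile pictures?)\b", re.I),
}


def weak_label(text: str) -> Optional[str]:
    """Return the domain with the most keyword hits, or None if nothing matches."""
    counts = {domain: len(p.findall(text)) for domain, p in DOMAIN_PATTERNS.items()}
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else None


def ngrams(text: str) -> List[str]:
    """Lower-cased unigrams followed by adjacent-token bigrams."""
    tokens = re.findall(r"\w+", text.lower())
    return tokens + [f"{a} {b}" for a, b in zip(tokens, tokens[1:])]


def tfidf(docs: List[str]) -> List[Dict[str, float]]:
    """Sparse L2-normalized TF-IDF vectors with smoothed IDF."""
    grams = [Counter(ngrams(d)) for d in docs]
    n = len(docs)
    df = Counter(g for counts in grams for g in counts)  # each doc's n-grams counted once
    vectors = []
    for counts in grams:
        vec = {g: tf * (math.log((1 + n) / (1 + df[g])) + 1) for g, tf in counts.items()}
        norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
        vectors.append({g: v / norm for g, v in vec.items()})
    return vectors
```

N-grams shared by every tweet (such as "nft") receive the minimum IDF, so domain-specific terms like "art" dominate each vector.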

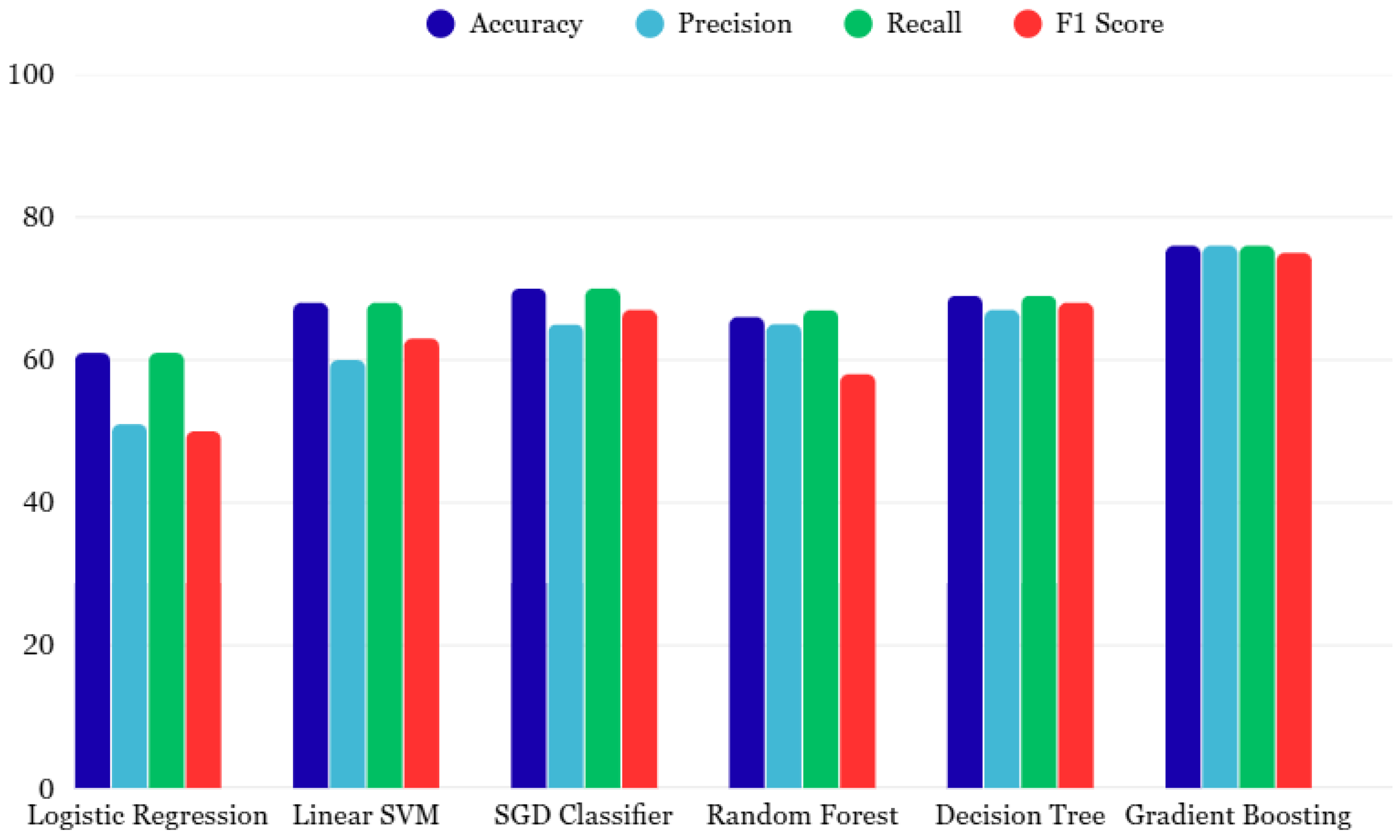

Model Training: The annotated dataset was used to train and evaluate a suite of traditional machine learning classifiers [33], including Logistic Regression, Linear Support Vector Machine (SVM), Stochastic Gradient Descent (SGD) Classifier, Decision Tree, Random Forest, and Gradient Boosting.

Evaluation Strategy: Model training and evaluation were performed with stratified data splits to maintain balanced label distributions across semantic categories. Standard classification metrics were used to assess performance.
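A stratified split keeps each semantic category's share the same in the training and test portions. The sketch below shows one way to produce such a split, together with macro-averaged F1, which weights all six categories equally. The 80/20 ratio and fixed seed are illustrative assumptions; the paper's exact split ratio is not stated in this section.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[str], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[int], List[int]]:
    """Split example indices so each label keeps roughly the same proportion in both parts."""
    by_label: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for idxs in by_label.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    f1s = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1s.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return sum(f1s) / len(f1s)
```

Macro averaging keeps a rare category from being masked by frequent ones, which plain accuracy would not.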

Model Selection and Prompt Construction: Among the evaluated models, the Gradient Boosting classifier achieved the highest validation accuracy (see Section 5.1 for detailed results) and was selected to generate semantic labels for guiding the construction of prompts. The output of this classification pipeline consisted of structured semantic labels, which were subsequently integrated into prompt templates to ensure that generated NFT tweets reflected coherent, domain-specific content.

3.4. Intent-Aware Prompt Generation

To generate relevant, coherent, and intent-aligned NFT tweets, a structured prompt-generation module was developed. This module transformed raw tweet text into natural language prompts that conditioned the generative model on both the semantic category and the extracted textual components of each tweet. The process began with a dataset of pre-labeled tweets, each annotated with one of six semantic intent categories—Art, Gaming, Music, Photography, Membership, or Profile Pictures (PFPs)—as determined by the semantic classification pipeline. These semantic intent labels acted as guiding signals for prompt construction, enabling intent-aware generation where the communicative goal of the tweet directly influenced the prompt’s content and structure. Each tweet underwent linguistic preprocessing using NLTK and spaCy to extract structured features:

Hashtag, Mention, and URL Extraction: Up to five hashtags, user mentions, and URLs were extracted using regular expressions.

Named Entity Recognition (NER): Named entities (e.g., organizations, people, products, locations) were identified using spaCy’s en_core_web_sm Named Entity Recognition model.

Keyword Extraction: Content-bearing keywords were extracted through tokenization, stopword removal, and frequency-based filtering.
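The regular-expression and keyword steps above can be sketched as follows. The patterns, the short inline stopword list, and the five-keyword cap are illustrative assumptions; NLTK's English stopword list would normally replace the inline set. URLs are extracted and stripped first so that fragments such as `#now` inside a link are not mistaken for hashtags. NER is omitted so the example needs no model download; with spaCy, entities could be collected as `[(ent.text, ent.label_) for ent in spacy.load("en_core_web_sm")(text).ents]`.

```python
import re
from collections import Counter
from typing import Dict, List

URL = re.compile(r"https?://\S+")
HASHTAG = re.compile(r"#\w+")
MENTION = re.compile(r"@\w+")
# Small illustrative stopword list (an assumption, not the paper's list).
STOPWORDS = {"the", "a", "an", "and", "or", "is", "are", "to", "of", "in", "on",
             "for", "with", "our", "now", "this", "it", "at", "be"}


def extract_features(tweet: str, max_items: int = 5, max_keywords: int = 5) -> Dict[str, List[str]]:
    urls = URL.findall(tweet)[:max_items]
    no_urls = URL.sub(" ", tweet)  # remove links before looking for hashtags/mentions
    hashtags = HASHTAG.findall(no_urls)[:max_items]
    mentions = MENTION.findall(no_urls)[:max_items]
    body = MENTION.sub(" ", HASHTAG.sub(" ", no_urls))
    # Tokenize, drop stopwords and very short tokens, then keep the most frequent terms
    tokens = [t for t in re.findall(r"[a-z0-9']+", body.lower())
              if t not in STOPWORDS and len(t) > 2]
    keywords = [word for word, _ in Counter(tokens).most_common(max_keywords)]
    return {"urls": urls, "hashtags": hashtags, "mentions": mentions, "keywords": keywords}
```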

Based on each tweet’s assigned semantic category, the module mapped it to a predefined intent-specific template encoding the communicative function of the tweet. These intent-aware templates were dynamically populated with the extracted features to construct complete, interpretable prompts, ensuring that the generated tweets clearly reflected the semantic purpose of the original content. The entire prompt construction process was implemented in batch using a CSV-based pipeline, generating a parallel dataset that pairs each constructed prompt with its original tweet.

These prompt–tweet pairs were subsequently used as inputs for the RL-TweetGen System, which combined supervised fine-tuning with simulated reinforcement learning to train a generative model capable of producing tweets that are semantically grounded, intent-aligned, and optimized for engagement. Algorithm 2 outlines the proposed semantic and intent-aware prompt-generation process, enabling tailored input construction for NFT tweet generation.

| Algorithm 2 Proposed semantic and intent-aware prompt generation |

Input: Cleaned_Tweet_Dataset
Output: Final_Prompt_Dataset
Semantic Annotation and Classification:
    Annotate each tweet with domain labels using keyword heuristics
    Extract TF-IDF features with bigrams
    Train classifiers: Logistic Regression, SVM, SGD, Decision Tree, Random Forest, Gradient Boosting
    Evaluate each classifier using a stratified split and metrics: accuracy, precision, recall, F1
    Select the best-performing classifier (Gradient Boosting)
    Predict semantic label: {Art, Gaming, Music, Photography, Membership, PFPs}
Prompt Feature Extraction:
for each tweet in dataset do
    Extract structural elements: hashtags, mentions, and URLs (up to 5)
    Apply Named Entity Recognition (NER) using spaCy
    Extract key tokens via tokenization and frequency filtering
    Retrieve semantic label and compute engagement level
    Select intent-specific prompt template based on semantic label
    Populate prompt with extracted features and labels
end for
Save constructed prompts to Final_Prompt_Dataset

|
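The template-selection and population steps of Algorithm 2 can be sketched as below. The template wording is hypothetical, written only to show how a semantic label selects a template and how the extracted features (keywords, entities, mentions, hashtags) and the engagement level fill it; the paper's actual templates are not reproduced in this section.

```python
from typing import Dict, List, Optional

# Hypothetical intent-specific templates, one per semantic category.
TEMPLATES: Dict[str, str] = {
    "Art": "Write a tweet announcing a new NFT art release focused on {topic}.",
    "Gaming": "Write a tweet promoting an NFT gaming update about {topic}.",
    "Music": "Write a tweet introducing a music NFT drop featuring {topic}.",
    "Photography": "Write a tweet showcasing a photography NFT collection about {topic}.",
    "Membership": "Write a tweet describing the benefits of an NFT membership pass related to {topic}.",
    "PFPs": "Write a tweet hyping a profile-picture (PFP) NFT collection centered on {topic}.",
}
DEFAULT_TEMPLATE = "Write an engaging NFT tweet about {topic}."


def build_prompt(label: str, features: Dict[str, List[str]],
                 engagement_level: Optional[str] = None) -> str:
    """Select the template for `label` and fill it with whichever features are present."""
    template = TEMPLATES.get(label, DEFAULT_TEMPLATE)
    topic = ", ".join(features.get("keywords", [])) or "the project"
    parts = [template.format(topic=topic)]
    if features.get("entities"):
        parts.append("Refer to: " + ", ".join(features["entities"]) + ".")
    if features.get("mentions"):
        parts.append("Tag: " + " ".join(features["mentions"]) + ".")
    if features.get("hashtags"):
        parts.append("Use hashtags: " + " ".join(features["hashtags"]) + ".")
    if engagement_level:
        parts.append(f"Aim for {engagement_level.lower()} engagement.")
    return " ".join(parts)
```

Optional clauses are appended only when the corresponding feature exists, so a tweet without mentions never produces an empty "Tag:" instruction. Pairing each prompt with its source tweet then yields the prompt–tweet examples used downstream.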

3.5. Model Selection Overview

To establish a strong foundation and performance baseline for NFT tweet generation, three state-of-the-art instruction-tuned language models were selected: Mistral-7B Instruct v0.1, LLaMA-3.1-8B Instruct, and DeepSeek LLM 7B Chat. These models were chosen for their robust instruction-following capabilities, efficient low-latency generation, and adaptability to domain-specific tasks, which are key requirements for producing short-form, high-impact content such as NFT-related tweets. A comparative summary of their architectural characteristics, model capacities, and instruction-following strengths is presented in Table 2. This comparison underscores each model’s suitability for specific aspects of NFT tweet generation, such as contextual reasoning, sampling efficiency, and compatibility with LoRA-based fine-tuning.
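Since LoRA compatibility is a selection criterion for all three models, the mechanism is worth making concrete. LoRA freezes a pre-trained weight matrix W (d_out × d_in) and learns a low-rank update, so the adapted layer computes y = Wx + (α / r)·B(Ax), where A has shape r × d_in, B has shape d_out × r, and B is initialized to zero so training starts from the unmodified model. The pure-Python sketch below illustrates this forward pass and the parameter savings. The rank and α values are illustrative, not the configuration used in RL-TweetGen; in practice, the Hugging Face PEFT library exposes the same hyperparameters through `LoraConfig(r=..., lora_alpha=..., target_modules=...)`.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B would be trained."""

    def __init__(self, weight: Matrix, rank: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight                                       # frozen, d_out x d_in
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # r x d_in
        self.B = [[0.0] * rank for _ in range(d_out)]               # d_out x r, zero-init
        self.scale = alpha / rank

    def forward(self, x: List[float]) -> List[float]:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]


def param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """(full matrix parameters, LoRA parameters) for one weight matrix."""
    return d_out * d_in, rank * (d_in + d_out)
```

For a single 4096 × 4096 projection with r = 8, the update trains 65,536 parameters instead of 16,777,216, roughly 0.4% of the full matrix.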

The following sections provide deeper insights into each model’s architecture and its role within the proposed RL-TweetGen framework, highlighting how their distinct capabilities contribute to stylistically consistent, semantically grounded, and engagement-optimized tweet generation.

3.5.1. Mistral-7B Instruct v0.1

Mistral-7B Instruct v0.1, developed by Mistral AI, is a 7-billion-parameter decoder-only transformer model optimized for fast and memory-efficient inference. Its low-latency generation capabilities make it particularly well suited for time-sensitive tasks such as NFT tweet generation, where rapid content creation and iteration are critical.

Architecture: Mistral-7B incorporates architectural innovations including Grouped Query Attention (GQA), Sliding Window Attention (SWA), and FlashAttention v2, which collectively enhance sequence processing efficiency and reduce computational overhead. These features enable the model to effectively handle short-form, contextually rich text generation while maintaining high throughput and responsiveness.
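The two attention mechanisms named above can be illustrated with small index computations. With sliding window attention, each position attends only to itself and the preceding window - 1 positions rather than to the whole prefix; with grouped-query attention, several query heads share one key/value head, which shrinks the key/value cache. The defaults below follow the published Mistral-7B configuration (32 query heads, 8 key/value heads). The tiny window in the usage example is purely illustrative, since Mistral-7B v0.1 uses a 4096-token window.

```python
from typing import List


def sliding_window_causal_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and limited to the most recent `window` positions (i - j < window).
    """
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]


def kv_head_for_query_head(q_head: int, n_q_heads: int = 32, n_kv_heads: int = 8) -> int:
    """Grouped-query attention: consecutive groups of query heads share one key/value head."""
    if n_q_heads % n_kv_heads:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size
```

For example, `sliding_window_causal_mask(5, 3)[4]` is `[False, False, True, True, True]`, and query heads 0 to 3 all read from key/value head 0.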

Key Features and Role in RL-TweetGen: Mistral-7B is designed to support generation of stylistically diverse outputs with minimal latency. Its efficient architecture allows for quick generation of multiple tweet variants, making it ideal for live updates, community engagement, and A/B testing in NFT marketing workflows. Within the RL-TweetGen system, these capabilities facilitate rapid experimentation and iteration, ensuring tweets are not only timely but also aligned with current trends and audience expectations. Its balance of speed, efficiency, and coherence positions Mistral-7B as a strong candidate for applications requiring high-frequency, high-quality text outputs.

3.5.2. LLaMA-3.1-8B Instruct

LLaMA-3.1-8B Instruct, developed by Meta, is an 8-billion-parameter decoder-only transformer model optimized for instruction following and extended context handling. It is well suited for NFT tweet-generation tasks that require consistency, contextual awareness, and precise control over tone and structure.

Architecture: The model builds on the transformer architecture and incorporates Rotary Positional Embeddings (RoPE) with extended-context scaling and grouped-query attention (GQA), allowing it to process longer and more structured inputs. These capabilities are particularly useful for generating sequenced or grouped tweets, such as mint announcements, update threads, or promotional campaigns. Additional features include RMSNorm pre-normalization, instruction tuning, and mixed-precision training, along with full LoRA compatibility to support efficient domain-specific fine-tuning.

Key Features and Role in RL-TweetGen: LLaMA-3.1-8B excels in generating contextually rich, semantically coherent, and style-consistent tweets. Its support for long prompts and structured outputs enables it to maintain alignment across multiple tweet variants or parts of a campaign. Within the RL-TweetGen system, LLaMA is especially effective in tasks requiring expressive flexibility, semantic preservation, and uniform tone, making it ideal for generating tweets related to NFT project updates, event promotions, and community engagement.

3.5.3. DeepSeek LLM 7B Chat

DeepSeek LLM 7B Chat, developed by DeepSeek AI, is a 7-billion-parameter decoder-only transformer designed with a focus on extensibility, lightweight deployment, and real-time performance. It supports efficient adaptation to domain-specific tasks, such as NFT tweet generation, through instruction tuning and modular fine-tuning techniques.

Architecture: The model employs Rotary Positional Embeddings and Pre-Layer Normalization, which enhance training stability and decoding efficiency. Its architecture is optimized for token-level performance and is fully compatible with Low-Rank Adaptation (LoRA), allowing for rapid fine-tuning to match domain-specific tone, vocabulary, and structure.

Key Features and Role in RL-TweetGen: DeepSeek 7B Chat offers robust instruction-following capability and is optimized for generating concise, clear, and domain-aligned outputs. Its design emphasizes scalability and responsiveness, making it suitable for narrow-domain tweet-generation tasks such as reusable NFT templates, collection highlights, FAQs, and community engagement messages. Within RL-TweetGen, DeepSeek functions as a lightweight and adaptable model for automated NFT tweet generation.

3.6. Baseline Generation (Zero-Shot LLM)

To establish a performance benchmark, a baseline tweet-generation module was implemented using zero-shot prompting on state-of-the-art open-weight large language models (LLMs), specifically LLaMA-3.1-8B Instruct, Mistral-7B Instruct v0.1, and DeepSeek LLM 7B Chat. This component evaluated the capacity of pretrained, unadapted models to generate coherent NFT-related tweets from structured prompts without any domain-specific fine-tuning. The procedure was as follows:

Data Preparation: Prompts were extracted from the structured prompt dataset produced by the prompt-generation module.

Model Configuration: Each LLM was loaded using the Hugging Face Transformers library with quantized 4-bit weights (BitsAndBytes) for efficient inference on available GPUs. Sampling parameters were configured to encourage diverse but controlled outputs, including settings for maximum token-generation length, temperature-based sampling, nucleus sampling, and repetition penalties.

Prompt Formatting: Prompts were wrapped in a structured instruction template aligned with the Instruct format to encourage concise, engaging, and professional tweet generation.

Generation and Postprocessing: Each prompt was fed into the model’s text-generation pipeline to produce candidate tweets. The generated outputs were truncated to Twitter’s 280-character limit and filtered for minimum length to discard failures or incoherent generations.

This baseline zero-shot generation step provided a reference point for evaluating the effectiveness of supervised fine-tuning and reinforcement learning in subsequent stages of the RL-TweetGen system.
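The prompt-formatting and postprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the instruction wording in `TEMPLATE`, the 20-character floor in `MIN_TWEET_CHARS`, and `max_new_tokens` are hypothetical, while the sampling values mirror those reported in Section 4.3 (temperature 0.9, top-p 0.7, repetition penalty 1.1). The keyword names in `GENERATE_KWARGS` are ones accepted by `generate` in Hugging Face Transformers; in a real run, each model's own chat template (for example via `tokenizer.apply_chat_template`) would replace the generic template.

```python
# Sampling settings as reported in Section 4.3; max_new_tokens is a hypothetical value.
GENERATE_KWARGS = {
    "max_new_tokens": 80,
    "do_sample": True,
    "temperature": 0.9,
    "top_p": 0.7,
    "repetition_penalty": 1.1,
}

# Hypothetical instruction wrapper, used only for illustration.
TEMPLATE = (
    "Write a concise, engaging, and professional tweet about the following "
    "NFT topic.\nTopic: {prompt}\nTweet:"
)

MAX_TWEET_CHARS = 280  # Twitter/X character limit
MIN_TWEET_CHARS = 20   # hypothetical floor used to discard failed generations


def format_prompt(prompt: str) -> str:
    """Wrap a structured prompt in the instruction template."""
    return TEMPLATE.format(prompt=prompt.strip())


def postprocess(candidates: list[str]) -> list[str]:
    """Collapse whitespace, truncate to 280 characters, and drop too-short outputs."""
    kept = []
    for text in candidates:
        tweet = " ".join(text.split())[:MAX_TWEET_CHARS].rstrip()
        if len(tweet) >= MIN_TWEET_CHARS:
            kept.append(tweet)
    return kept


outputs = ["x" * 300, "ok", "  A new  drop is live now, join us!  "]
print(postprocess(outputs)[1])  # A new drop is live now, join us!
```

Collapsing whitespace before truncation is a choice of this sketch; it keeps the 280-character budget from being spent on stray spaces.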

3.7. Supervised Model Fine-Tuning Using PEFT with LoRA

To adapt large language models (LLMs) for the domain-specific task of NFT tweet generation, we employ Parameter-Efficient Fine-Tuning (PEFT) using the Low-Rank Adaptation (LoRA) technique. This method introduces a minimal number of additional trainable parameters into the model, significantly reducing computational and memory requirements during fine-tuning, while keeping the pretrained weights frozen.

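LoRA approximates the full-rank weight update $\Delta W \in \mathbb{R}^{d \times k}$ using a low-rank decomposition, as shown in Equation (2):

$$\Delta W = BA \tag{2}$$

where $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$, and $r \ll \min(d, k)$. The matrices A and B are the only trainable parameters introduced during fine-tuning.

Equation (2) represents the low-rank factorization of the weight update matrix $\Delta W$ into two smaller matrices. It rests on the premise that the updates needed to adapt a pretrained model to a new task have low intrinsic rank, so they can be closely approximated by the product of two thin matrices (an arbitrary full-rank matrix, by contrast, generally cannot). This reduces the number of trainable parameters from $d \times k$ to $r(d + k)$, enabling more efficient optimization.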
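A short worked count makes the saving concrete. For an update of size d × k, a full update has d·k trainable entries, whereas B (d × r) and A (r × k) together have r(d + k). The sketch assumes a square 4096 × 4096 projection such as `q_proj` at the 7B scale used here, and a hypothetical rank r = 8; the configured rank is not reproduced in this section.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters if the full d x k update Delta W were learned."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters of B (d x r) plus A (r x k), i.e. r * (d + k)."""
    return d * r + r * k


d = k = 4096  # square attention projection (e.g. q_proj) at 7B scale
r = 8         # hypothetical LoRA rank, for illustration only

full, lora = full_update_params(d, k), lora_params(d, k, r)
print(full, lora, full // lora)  # 16777216 65536 256
```

Under these assumptions, each adapted matrix trains about 0.4% of the parameters that a full update would require.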

The final adapted weight matrix is obtained by adding the low-rank update to the frozen pretrained weight matrix, as defined in Equation (3):

$$W' = W + \Delta W = W + BA \tag{3}$$

Equation (3) shows how the low-rank update $\Delta W = BA$ is applied to the original weight matrix W. From an engineering perspective, this formulation enables fine-tuning with minimal additional memory and computation, as only A and B are updated while the original weights remain unchanged.
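The merge W' = W + BA can be checked on toy matrices. This plain-Python sketch assumes the initialization described in the original LoRA work (A drawn from a Gaussian, B set to zero), under which the adapted weights equal W at the start of training. The `scale` argument stands in for the α/r factor that PEFT applies to BA; `scale = 1` reproduces the plain update exactly. Matrix sizes and seeds are arbitrary.

```python
import random


def matmul(X, Y):
    """Plain-Python matrix product for small illustrative matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def merge_lora(W, B, A, scale=1.0):
    """Return W' = W + scale * (B @ A) without modifying the frozen W."""
    delta = matmul(B, A)
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


d, k, r = 4, 3, 2
rng = random.Random(0)
W = [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(d)]   # frozen weights
A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # random init
B = [[0.0] * r for _ in range(d)]                                 # zero init

# With B = 0 the adapted model starts exactly at the pretrained weights.
assert merge_lora(W, B, A) == W
```

Because W is never modified, the same frozen base can be shared across several task-specific (A, B) pairs.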

This design preserves the generalization capabilities of the base model while introducing sufficient flexibility to support efficient domain adaptation for NFT-specific linguistic patterns and engagement styles.

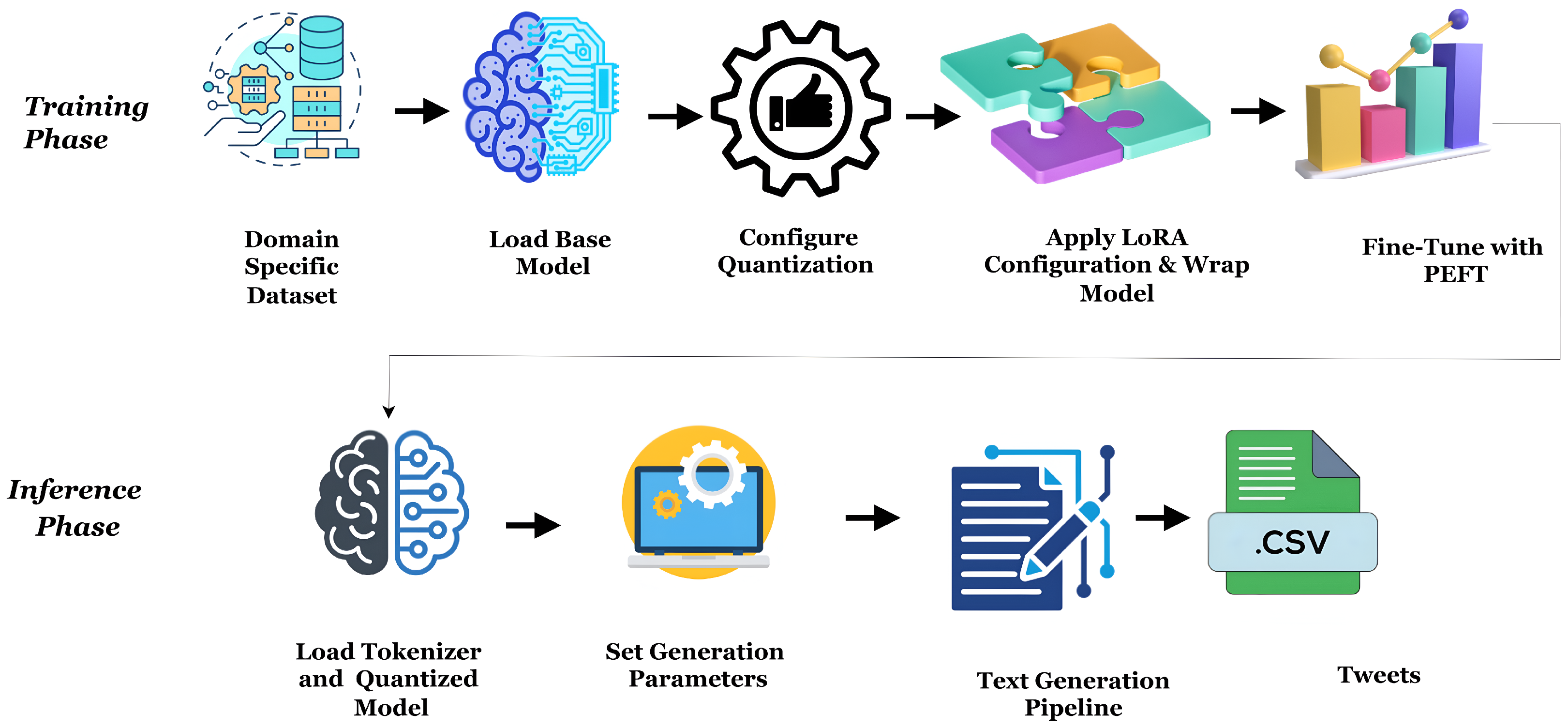

Figure 2 illustrates the LoRA-based Parameter-Efficient Fine-Tuning (PEFT) strategy, which adapts pretrained language models to the domain-specific task of NFT tweet generation by introducing low-rank updates while keeping the base model weights frozen.

3.7.1. Base Model and Tokenizer Initialization

The fine-tuning process builds upon the zero-shot generation setup described earlier in Section 3.6. We initialize three pretrained, instruction-tuned, decoder-only transformer models: LLaMA, Mistral, and DeepSeek. These models are specifically designed for instruction-following tasks and possess the architectural capacity to generate high-quality, coherent, and context-aware NFT-related tweets.

Each model is coupled with its corresponding tokenizer, configured with consistent settings for padding, truncation, and attention masking. These configurations ensure uniform input sequence lengths across training batches, contributing to training stability and facilitating efficient inference during generation. This initialization step forms the foundation for subsequent domain-specific adaptation using parameter-efficient fine-tuning strategies.

3.7.2. Dataset Loading and Preprocessing

A curated dataset of NFT-related tweets is used for supervised fine-tuning. Each tweet is first tokenized using the corresponding tokenizer of the selected base model. The tokenized sequences are then truncated and padded to a fixed maximum length (typically 64 tokens) to ensure uniform input dimensions across batches.

The data is structured for Causal Language Modeling (CLM), where the objective is to predict the next token given all previous tokens. This autoregressive setup aligns naturally with the task of tweet generation, enabling the model to learn the sequential dependencies necessary for producing fluent and context-aware NFT tweets.
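To illustrate the causal language modeling setup, the sketch below pads or truncates one tokenized tweet to a fixed length and builds labels in the style used by Hugging Face causal LM models: labels equal the input IDs, padded positions are set to -100 (ignored by PyTorch's cross-entropy loss by default), and the one-position shift happens inside the model. The token IDs and `pad_id = 0` are hypothetical; `next_token_pairs` exists only to make the shift explicit.

```python
IGNORE_INDEX = -100  # ignored by PyTorch's cross-entropy loss by default


def build_clm_example(token_ids, max_len=64, pad_id=0):
    """Truncate/pad one tokenized tweet and build causal-LM labels."""
    ids = list(token_ids[:max_len])
    n_pad = max_len - len(ids)
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "attention_mask": [1] * len(ids) + [0] * n_pad,
        "labels": ids + [IGNORE_INDEX] * n_pad,
    }


def next_token_pairs(example):
    """Make the implicit shift explicit: the prefix up to t predicts token t + 1."""
    ids, labels = example["input_ids"], example["labels"]
    return [(ids[: t + 1], labels[t + 1])
            for t in range(len(ids) - 1)
            if labels[t + 1] != IGNORE_INDEX]


example = build_clm_example([5, 6, 7], max_len=5)
print(example["input_ids"])       # [5, 6, 7, 0, 0]
print(next_token_pairs(example))  # [([5], 6), ([5, 6], 7)]
```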

3.7.3. Base Models and Checkpoints

We fine-tuned the following frozen base model checkpoints using parameter-efficient LoRA adapters:

LLaMA-7B: meta-llama/Llama-2-7b-hf

Mistral-7B-Instruct: mistralai/Mistral-7B-Instruct-v0.2

DeepSeek-7B-Base: deepseek-ai/deepseek-llm-7b-base

3.7.4. LoRA Configuration and Target Modules

To enable parameter-efficient fine-tuning, LoRA adapters were injected into the attention projection layers of each transformer block. Only the LoRA parameters were updated; all pretrained model weights remained frozen.

Targets (LLaMA and DeepSeek): q_proj, v_proj

Targets (Mistral): q_proj, k_proj, v_proj, o_proj

LoRA Hyperparameters: rank , scaling factor , dropout

This configuration preserves the generalization capabilities of the base models while reducing GPU memory usage and enabling domain-specific adaptation for NFT tweet generation.
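The per-model target lists above can be captured in a small lookup, sketched below. The rank, alpha, and dropout values are hypothetical placeholders because the configured values are not reproduced here. The returned dictionary uses keyword names accepted by PEFT's `LoraConfig` (`r`, `lora_alpha`, `lora_dropout`, `target_modules`, `bias`, `task_type`), so it could be passed as `LoraConfig(**lora_kwargs("mistral"))` and applied with `get_peft_model`, which leaves only the adapter weights trainable.

```python
# Target modules per model family, as listed in Section 3.7.4.
TARGET_MODULES = {
    "llama": ["q_proj", "v_proj"],
    "deepseek": ["q_proj", "v_proj"],
    "mistral": ["q_proj", "k_proj", "v_proj", "o_proj"],
}

# Hypothetical placeholder hyperparameters (not the values used in the study).
LORA_R = 8
LORA_ALPHA = 16
LORA_DROPOUT = 0.05


def lora_kwargs(family: str) -> dict:
    """Keyword arguments for a LoRA adapter on the given model family."""
    if family not in TARGET_MODULES:
        raise KeyError(f"unknown model family: {family}")
    return {
        "r": LORA_R,
        "lora_alpha": LORA_ALPHA,
        "lora_dropout": LORA_DROPOUT,
        "target_modules": list(TARGET_MODULES[family]),
        "bias": "none",          # bias terms stay frozen
        "task_type": "CAUSAL_LM",
    }
```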

3.7.5. Training Configuration and Steps

Fine-tuning was implemented with the Hugging Face PEFT library under the Causal Language Modeling (CLM) objective, where the model learns to predict the next token by minimizing the negative log-likelihood loss.

Optimizer/Scheduler: AdamW, weight decay 0.01, linear warmup ratio 0.03;

Learning rate: 5 × 10−5;

Precision: Mixed FP16;

Batching: per-device batch size = 8; gradient accumulation steps = 4 (effective batch = 32);

Sequence length: T = 256 tokens with dynamic padding;

Max training steps: 1800 with early stopping after 3 consecutive evaluations without validation improvement;

Evaluation/Save frequency: every 100 steps; best checkpoint selected by validation perplexity.
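The schedule and stopping rule above can be sketched as follows. The learning-rate function assumes linear decay to zero after warmup (as in Hugging Face's `linear` scheduler) and computes warmup steps as ceil(0.03 × 1800) = 54. The early-stopping helper assumes patience is counted in evaluations that fail to lower validation perplexity (the exponential of the mean token-level cross-entropy). The validation values in the example are made up.

```python
import math

PEAK_LR = 5e-5
MAX_STEPS = 1800
WARMUP_RATIO = 0.03
PER_DEVICE_BATCH, GRAD_ACCUM = 8, 4

EFFECTIVE_BATCH = PER_DEVICE_BATCH * GRAD_ACCUM    # 32 on a single GPU
WARMUP_STEPS = math.ceil(MAX_STEPS * WARMUP_RATIO)  # 54


def lr_at(step: int) -> float:
    """Linear warmup to PEAK_LR, then (assumed) linear decay to 0 at MAX_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / max(1, WARMUP_STEPS)
    return PEAK_LR * max(0.0, (MAX_STEPS - step) / max(1, MAX_STEPS - WARMUP_STEPS))


def early_stop(val_ppl, eval_every=100, patience=3):
    """Return (best_step, stop_step) for a sequence of validation perplexities."""
    best, best_step, bad = math.inf, None, 0
    for i, ppl in enumerate(val_ppl, start=1):
        step = i * eval_every
        if ppl < best:
            best, best_step, bad = ppl, step, 0
        else:
            bad += 1
            if bad >= patience:
                return best_step, step
    return best_step, len(val_ppl) * eval_every


print(early_stop([10.0, 8.0, 7.0, 7.5, 7.2, 7.1]))  # (300, 600)
```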

3.7.6. Checkpointing and Artifacts

A structured checkpointing mechanism was implemented to ensure reliability, reproducibility, and rollback capability. Checkpoints were saved at multiple stages (e.g., steps 20, 1720, 1722) to capture both early and late training progress.

Saved: LoRA adapter weights, optimizer/scheduler states, random number generator state, tokenizer configuration and vocabulary, training arguments (including precision), and prompt template configuration.

Not saved: frozen base model weights (linked from the original checkpoint during loading).

Only LoRA adapter weights were stored to minimize storage overhead while retaining compatibility with the frozen base model.

This modular checkpointing strategy enables seamless recovery, reproducibility, and model portability while reducing resource usage—making it ideal for iterative, instruction-tuned fine-tuning pipelines.

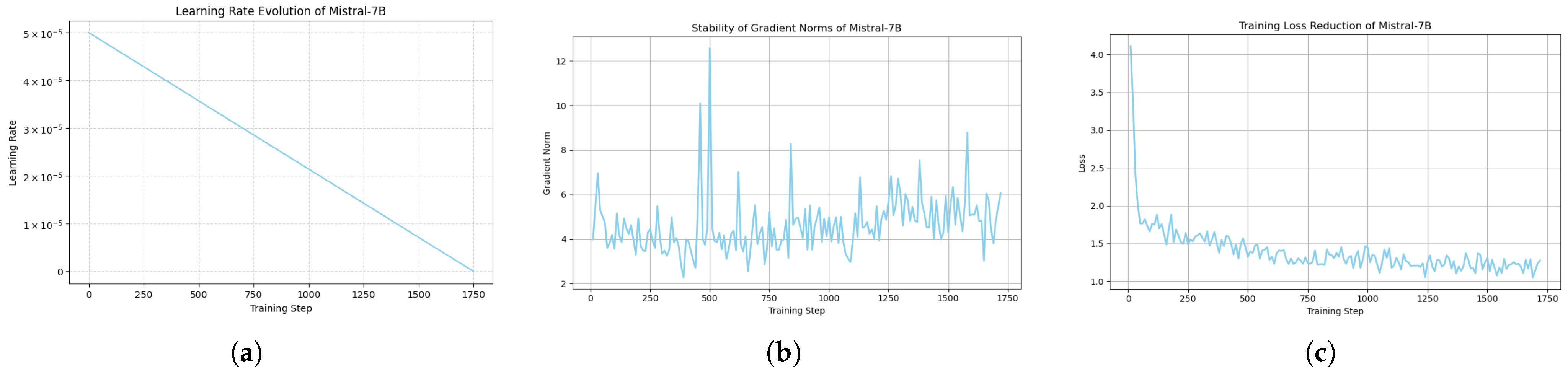

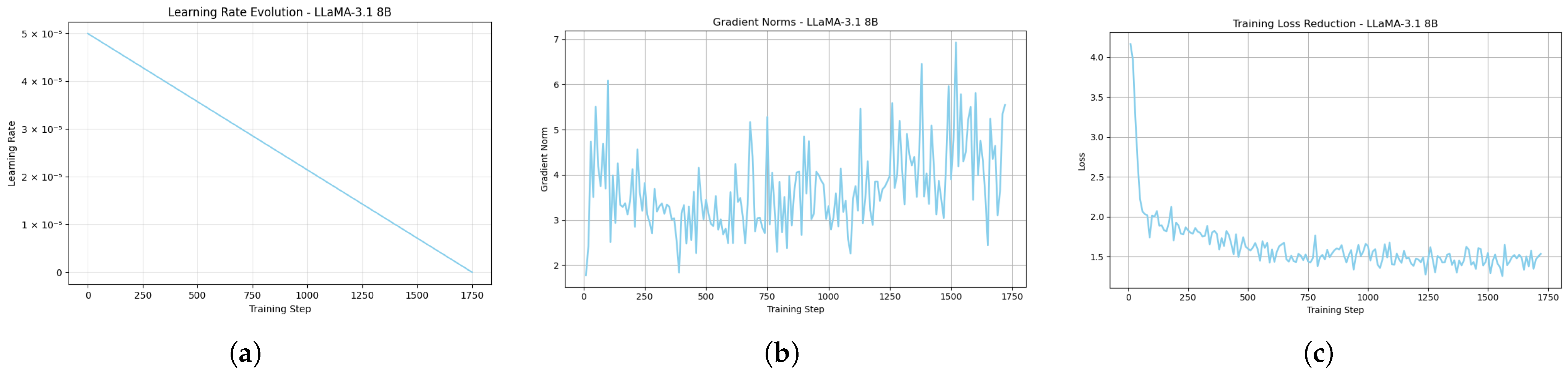

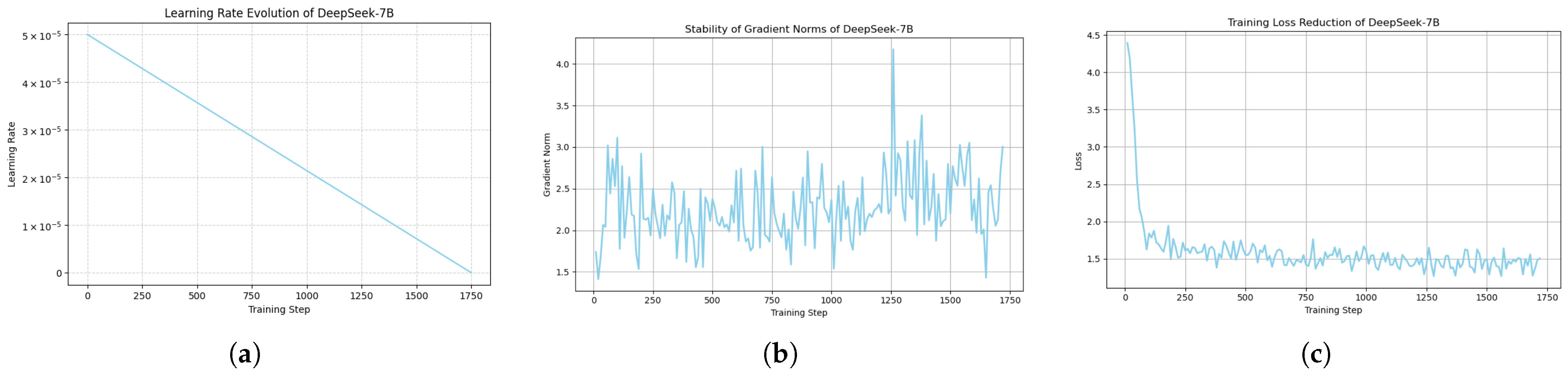

Figures 3, 4, and 5 visualize key fine-tuning indicators for the Mistral, LLaMA, and DeepSeek models, including learning rate evolution, gradient norm fluctuations, and loss reduction over training steps.

3.7.7. Inference and NFT Tweet Generation

Following fine-tuning, the trained model is reloaded alongside its tokenizer and LoRA adapter modules for inference. Structured prompts (as in Section 3.4) are used as inputs to guide generation in an intent-aware, domain-specific manner.

To balance creativity and coherence during generation, we employ top-p (nucleus) sampling with threshold p, which samples from the smallest set of tokens whose cumulative probability reaches or exceeds the threshold. This decoding strategy introduces controlled diversity while keeping the output fluent and contextually appropriate.
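A minimal sketch of top-p filtering over a toy next-token distribution is shown below. The tokens and probabilities are invented for illustration. After filtering, the kept probabilities are renormalized and the next token is sampled from them.

```python
import random


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of most likely tokens whose cumulative probability
    reaches p, then renormalize the kept probabilities."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, total = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        total += prob
        if total >= p:
            break
    return {token: prob / total for token, prob in nucleus}


def sample_top_p(probs: dict[str, float], p: float, rng=random) -> str:
    """Sample one token from the renormalized nucleus."""
    kept = top_p_filter(probs, p)
    tokens = list(kept)
    weights = [kept[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


next_token_probs = {"mint": 0.5, "drop": 0.3, "floor": 0.15, "rug": 0.05}
print(top_p_filter(next_token_probs, p=0.7))  # keeps only "mint" and "drop"
```

Lower thresholds shrink the nucleus toward greedy decoding; higher thresholds admit more of the tail and increase diversity.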

The resulting NFT tweets are concise, semantically aligned, and reflect the linguistic tone, vocabulary, and communicative intent expected within NFT-related discourse, spanning various categories.

3.8. Diverse Generation with LSC Variation

To ensure that the generated NFT-related tweets are diverse, engaging, and tailored to a broad range of audience segments, we introduce the LSC Diverse Generation algorithm—where LSC refers to Length, Style, and Context. This method systematically generates tweet variations by iterating over all possible combinations of:

Length: short, medium, long;

Style: formal, informal, excited, casual, professional, friendly, humorous;

Context: art, investment, technology, community, gaming, collectibles, fashion, music.

For each unique combination, a structured prompt is dynamically constructed. This prompt integrates key elements such as context-specific hooks, relevant hashtags, strategically placed emojis, and an appropriate call-to-action (CTA) phrase—each tailored to reflect the intended length, stylistic tone, and thematic focus.
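The LSC enumeration can be sketched with `itertools.product`. The three dimensions listed above yield 3 × 7 × 8 = 168 prompt variants. The hashtag mapping, default hashtag, and call-to-action text are hypothetical placeholders (the hashtags echo examples given in Section 3.10.2), not the prompt assets used in the study.

```python
from itertools import product

LENGTHS = ["short", "medium", "long"]
STYLES = ["formal", "informal", "excited", "casual", "professional", "friendly", "humorous"]
CONTEXTS = ["art", "investment", "technology", "community", "gaming",
            "collectibles", "fashion", "music"]

# Hypothetical prompt assets, for illustration only.
HASHTAGS = {"art": "#NFTArt", "collectibles": "#CryptoCollectibles"}
DEFAULT_HASHTAG = "#NFT"
CTA = "Join the community today!"


def build_prompt(length: str, style: str, context: str) -> str:
    """Assemble one LSC prompt with a hashtag, an emoji request, and a CTA."""
    tag = HASHTAGS.get(context, DEFAULT_HASHTAG)
    return (f"Write a {length}, {style} tweet about an NFT {context} project. "
            f"Include the hashtag {tag}, a fitting emoji, and this call to action: {CTA}")


prompts = [build_prompt(length, style, context)
           for length, style, context in product(LENGTHS, STYLES, CONTEXTS)]
print(len(prompts))  # 168 = 3 lengths x 7 styles x 8 contexts
```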

To optimize decoding performance, we empirically tuned the temperature and top-p parameters across multiple validation runs. The selected values—temperature = 0.9 and top-p = 0.7 (nucleus sampling)—consistently produced high-quality, engaging, and coherent NFT-related tweets, balancing creativity and relevance. Emojis were incorporated to enhance emotional resonance and stylistic expression in alignment with the tone specified in the prompt.

This LSC-driven approach promotes extensive linguistic and contextual coverage, resulting in tweet outputs that are not only coherent and fluent, but also stylistically diverse, semantically relevant, and well-aligned with varied NFT audiences. As detailed in Algorithm 3, the tweet-generation process leverages supervised fine-tuning with LoRA (PEFT) and incorporates Length, Style, and Context (LSC) variations to enhance output diversity, coherence, and relevance for NFT-specific communication.

Algorithm 3 Tweet generation using supervised fine-tuning with LoRA (PEFT) and LSC variation

1: Input: Structured Prompt Dataset

2: Output: Diverse NFT Tweets

3: Supervised Fine-Tuning using LoRA (PEFT)

4: Initialize pretrained decoder-only LLMs and tokenizer

5: Format data for Causal Language Modeling (CLM) with input-output prompt pairs

6: Tokenize sequences with padding and truncation

7: Inject LoRA adapters into attention layers

8: Freeze base weights and train only LoRA parameters

9: Set training configuration

10: Optimize model using CLM loss (next-token prediction)

11: Reload model with trained LoRA adapters

12: Generate tweets from fine-tuned model using top-p sampling

13: LSC-Based Diverse Generation

14: Define generation dimensions: Length: short, medium, long; Style: formal, informal, excited, casual, professional, friendly, humorous; Context: art, investment, technology, community, gaming, collectibles, fashion, music

15: for each LSC combination do

16: Construct prompt using selected Length, Style, and Context

17: Generate tweet using decoding parameters: temperature = 0.9, top-p = 0.7

18: end for

3.9. Engagement Scoring Model

To estimate the potential engagement of generated NFT tweets, we employ an XGBoost-based regression model trained on historical NFT-related tweet data. The model integrates both textual and structural features to predict an engagement score S, which is later incorporated into the reinforcement learning reward function.

Feature Set: The input features used for training include:

Tweet Length: Total number of characters.

Word Count: Total number of words.

Presence of Emojis and Hashtags: Binary indicators capturing stylistic and topical emphasis.

Textual Features: TF-IDF vectorized representations of tweet content, including unigrams and bigrams.

Preprocessing Pipeline: The modeling pipeline begins with a TF-IDF Vectorizer to convert raw tweet text into a sparse, weighted vector format based on token frequency and importance. Simultaneously, numerical features (length, word count, emoji/hashtag presence, sentiment polarity, POS proportions) are normalized using a StandardScaler to ensure uniform scaling. Both branches—TF-IDF features and normalized numerical features—are fused via a ColumnTransformer, producing a unified feature matrix compatible with the XGBoost regressor.

Model Training and Output: The final feature matrix is used to train an XGBoost regression model, which learns to predict the engagement score S for each tweet. A log1p transformation is applied to the raw engagement counts (likes, retweets, replies) to mitigate skewness caused by outliers. The predicted engagement score serves as a quantitative signal in the blended reward function during reinforcement learning, guiding generation toward content with higher potential for audience interaction.

Model Performance: The engagement predictor achieved an R² score of 0.823 and an RMSE of 0.041 (normalized scale) on the held-out test set. Feature importance rankings, derived from XGBoost's gain-based metric, and performance metrics are summarized in Table 3.

By combining textual semantics with structural indicators, the engagement scoring model enables RL-TweetGen to prioritize content that balances domain relevance, stylistic appeal, and high predicted engagement.
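The structural part of this feature set and the log1p target transform can be sketched in plain Python. The emoji test is a rough approximation over common pictograph Unicode ranges, and the example tweet is invented. In a full pipeline, these columns would be combined with TF-IDF features, for example through scikit-learn's `ColumnTransformer` with a `TfidfVectorizer(ngram_range=(1, 2))` branch and a `StandardScaler` branch, before fitting an `xgboost.XGBRegressor`; that wiring is described here rather than shown.

```python
import math
import re

HASHTAG_RE = re.compile(r"#\w+")
# Rough emoji detector over common pictograph ranges (an approximation).
EMOJI_RE = re.compile("[\u2600-\u27BF\U0001F300-\U0001FAFF]")


def structural_features(tweet: str) -> dict:
    """Length, word count, and binary emoji/hashtag indicators for one tweet."""
    return {
        "length": len(tweet),
        "word_count": len(tweet.split()),
        "has_emoji": int(EMOJI_RE.search(tweet) is not None),
        "has_hashtag": int(HASHTAG_RE.search(tweet) is not None),
    }


def log1p_target(raw_engagement: float) -> float:
    """Compress heavy-tailed engagement counts before regression."""
    return math.log1p(raw_engagement)


print(structural_features("Mint is live 🚀 #NFTArt"))
# {'length': 22, 'word_count': 5, 'has_emoji': 1, 'has_hashtag': 1}
```

Because log1p(0) = 0, tweets with no engagement stay at zero while very large counts are compressed, which is what reduces the influence of outliers.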

3.10. Reinforcement Learning Algorithm for Engagement-Optimized Tweet Generation

In this module, a novel reinforcement learning algorithm is proposed, specifically designed to optimize short text generation, such as tweets, for social media platforms, with a focus on the NFT ecosystem. This algorithm integrates predicted engagement modeling, human-in-the-loop feedback, advantage-based learning dynamics, and context-aware decoding strategies to maximize both quality and audience engagement in generated tweets. As presented in Algorithm 4, the proposed reinforcement learning strategy optimizes NFT tweet generation by maximizing engagement-driven rewards, aligning outputs with audience preferences, emotional tone, and community-specific language.

3.10.1. Reinforcement Learning Optimization

After supervised fine-tuning, we applied Proximal Policy Optimization (PPO) [34] to optimize tweet generation for engagement signals (likes, retweets, replies).

Hybrid Reward Function: the reward combines three engagement terms, corresponding to likes, retweets, and replies, each a min–max normalized prediction from a reward model trained on historical tweet engagement data.

Key Training Parameters:

RL algorithm: PPO;

Learning rate: 5 × 10−5;

Batch size: 64 episodes;

PPO epochs: 5 per update;

Clipping range: 0.2;

Entropy coefficient: 0.01;

Early stopping: 3 epochs without reward improvement.
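For reference, the clipped PPO surrogate governed by the clipping range and entropy coefficient above can be written as a small function. This is a sketch of the generic PPO-Clip objective computed over per-sample probability ratios and advantage estimates; it omits the value-function loss and anything specific to token-level text generation, and the example numbers are arbitrary.

```python
def ppo_clip_objective(ratios, advantages, entropies, clip_eps=0.2, ent_coef=0.01):
    """PPO-Clip objective to maximize: mean clipped surrogate + entropy bonus.

    ratios[i] = pi_new(a_i | s_i) / pi_old(a_i | s_i); value-function loss omitted.
    """
    if not (len(ratios) == len(advantages) == len(entropies)) or not ratios:
        raise ValueError("inputs must be non-empty and of equal length")
    surrogates = []
    for ratio, adv in zip(ratios, advantages):
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        surrogates.append(min(ratio * adv, clipped * adv))
    mean_surrogate = sum(surrogates) / len(surrogates)
    mean_entropy = sum(entropies) / len(entropies)
    return mean_surrogate + ent_coef * mean_entropy


print(ppo_clip_objective([1.5, 0.5], [1.0, -1.0], [0.0, 0.0]))
```

In the example, the first term is clipped to 1.2 and the second to -0.8, so a single update cannot move the policy far from the one that generated the samples.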

Algorithm 4 Proposed reinforcement learning for engagement-optimized NFT tweet generation

1: Input: Structured prompt dataset, pretrained LLM, reward components

2: Output: High-engagement NFT tweets

3: Tweet-Generation Environment

4: Load Models

5: Set max tweet length

6: Generate tweet candidates from structured prompts with CTAs, emojis, hashtags

7: Reward Computation

8: Predict engagement score using XGBoost

9: Collect human feedback (1–5 scale), normalize as F = Feedback/5

10: Compute reward R (Equation (5))

11: Advantage Calculation and Policy Update

12: Compute advantage: $A = R - \text{Baseline}$ (Equation (6))

13: if then

14: Increase temperature T (exploration)

15: else

16: Decrease temperature T (precision)

17: end if

18: Engagement-Aware Decoding Strategies

19: Tailored Beam Search (TBS): score candidates using keyword and intent relevance (Equation (7))

20: Enhanced Nucleus Sampling (Top-p): select tokens whose cumulative probability exceeds p (Equation (8))

21: Contextual Temperature Scaling (CTS): temperature-scaled softmax (Equation (9)), with T adjusted by context and A

22: Normalize expert feedback and update reward for next generation cycle

3.10.2. Tweet-Generation Environment

The algorithm operates in a simulated tweet-generation environment, where an instruction-tuned language model (LLaMA 3.1-8B, Mistral 7B, DeepSeek 7B) produces candidate tweets in response to structured prompts. These tweets are constrained to 280 characters and optimized for NFT marketing, incorporating elements such as calls-to-action (CTAs), emojis, and topic-specific hashtags (e.g., #NFTArt, #CryptoCollectibles).

3.10.3. Blended Reward Function

Each generated tweet is evaluated using a hybrid reward signal that combines predicted and qualitative feedback, as defined in Equation (5), where S is the predicted engagement score from an XGBoost model trained on historical NFT tweets using features such as keyword frequency, sentiment scores, punctuation usage, and historical engagement metrics, and the Feedback Score is a human rating on a 1–5 scale.

This hybrid reward strikes a balance between quantitative virality and linguistic and stylistic resonance for the NFT audience.
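Because the exact weighting in Equation (5) is not reproduced here, the sketch below assumes one natural form: a convex combination R = λS + (1 − λ)F with a hypothetical weight λ, where S is the min–max normalized engagement prediction and F is the human rating divided by 5, as in Algorithm 4. Treat the weighting as illustrative only.

```python
def min_max(x: float, lo: float, hi: float) -> float:
    """Min-max normalize x into [0, 1] given the observed range [lo, hi]."""
    return 0.0 if hi == lo else (x - lo) / (hi - lo)


def normalize_feedback(rating: int) -> float:
    """F = Feedback / 5 for a 1-5 human rating, as in Algorithm 4."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be on the 1-5 scale")
    return rating / 5


def blended_reward(s_norm: float, rating: int, lam: float = 0.5) -> float:
    """Assumed form R = lam * S + (1 - lam) * F; lam is a hypothetical weight."""
    return lam * s_norm + (1 - lam) * normalize_feedback(rating)


s = min_max(0.62, lo=0.10, hi=0.90)  # normalized engagement prediction
print(blended_reward(s, rating=4))
```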

3.10.4. Advantage-Based Policy Update

To guide reinforcement learning and dynamically adapt generation strategies, the algorithm computes an advantage value, as defined in Equation (6):

$$A = R - \text{Baseline} \tag{6}$$

where R is the blended reward from Equation (5) and the Baseline is the moving average of recent reward scores, serving as a dynamic performance reference.

Based on the computed advantage, the system adjusts the generation temperature (T) as follows:

If , increase T→ encourages exploration and creative diversity.

If , decrease T→ promotes precision and adherence to proven tweet patterns.

This dynamic adjustment mechanism allows the algorithm to fine-tune its balance between exploration and exploitation.
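The advantage computation can be sketched with a fixed-size moving average. The window size, step size, and temperature bounds are hypothetical; the first reward serves as its own baseline (giving A = 0); and the mapping from the sign of A to a direction is left to the caller, following the rules above, so the temperature helper takes an explicit `increase` flag rather than hard-coding it.

```python
from collections import deque


class MovingBaseline:
    """Moving average of recent rewards, used as the Baseline in Equation (6)."""

    def __init__(self, window: int = 20):  # hypothetical window size
        self.rewards = deque(maxlen=window)

    def advantage(self, reward: float) -> float:
        """Return A = R - Baseline, then add R to the moving window."""
        baseline = sum(self.rewards) / len(self.rewards) if self.rewards else reward
        self.rewards.append(reward)
        return reward - baseline


def adjust_temperature(T: float, increase: bool, step: float = 0.05,
                       t_min: float = 0.3, t_max: float = 1.5) -> float:
    """Raise T (exploration) or lower it (precision), clamped to [t_min, t_max]."""
    T = T + step if increase else T - step
    return min(max(T, t_min), t_max)
```

Computing A before adding the current reward to the window keeps the baseline independent of the sample being scored.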

3.10.5. Engagement-Aware Decoding Strategies

To improve the quality, diversity, and contextual alignment of generated tweets, the proposed algorithm integrates three advanced decoding strategies:

Tailored Beam Search (TBS): An enhanced version of standard beam search that incorporates domain-specific heuristics for output reranking. Candidate sequences are evaluated based on factors such as keyword relevance, intent alignment, and domain-specific embedding similarity. The score of a candidate sequence is computed by summing token-level contributions, as defined in Equation (7).

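Enhanced Top-p (Nucleus) Sampling: A controlled sampling strategy that selects the next token from the smallest set of tokens whose cumulative probability reaches or exceeds a predefined threshold p. This encourages stylistic diversity and creativity while maintaining fluency, as shown in Equation (8):

$$\sum_{w \in V^{(p)}} P(w \mid w_{<t}) \ge p \tag{8}$$

where $V^{(p)}$ is the smallest subset of the vocabulary V satisfying this condition, and the next token is sampled from P renormalized over $V^{(p)}$.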

Contextual Temperature Scaling (CTS): This method dynamically adjusts the softmax temperature based on the thematic context of the tweet. The temperature T is initialized per NFT topic and updated online using the computed advantage signal. The probability distribution over tokens is computed as shown in Equation (9):

$$P(w_i \mid w_{<t}) = \frac{\exp(z_i / T)}{\sum_{j} \exp(z_j / T)} \tag{9}$$

where $z_i$ is the logit of token $w_i$.
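The two context-aware components can be sketched together. `tbs_score` is a hypothetical instance of "summing token-level contributions": it adds a fixed bonus for tokens in an illustrative NFT keyword list to each token's log-probability, and it leaves out the intent-alignment and embedding-similarity terms mentioned above. `softmax_with_temperature` implements the temperature-scaled softmax of Equation (9) with the usual max-subtraction for numerical stability. The keyword list, bonus, and per-topic starting temperatures are placeholders.

```python
import math

# Hypothetical keyword list and bonus weight for Tailored Beam Search.
NFT_KEYWORDS = {"mint", "nft", "drop", "whitelist", "collection"}
KEYWORD_BONUS = 0.5

# Hypothetical initial temperatures per NFT topic for Contextual Temperature Scaling.
TOPIC_T0 = {"art": 0.9, "investment": 0.6, "gaming": 1.0}


def tbs_score(tokens, logprobs, bonus=KEYWORD_BONUS):
    """Sum of token-level contributions: log-probability plus a keyword bonus."""
    return sum(lp + (bonus if tok.lower() in NFT_KEYWORDS else 0.0)
               for tok, lp in zip(tokens, logprobs))


def rerank(beams):
    """beams: list of (tokens, logprobs) pairs; best tailored score first."""
    return sorted(beams, key=lambda beam: tbs_score(*beam), reverse=True)


def softmax_with_temperature(logits, T):
    """Equation (9): exp(z_i / T) / sum_j exp(z_j / T), computed stably."""
    if T <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


beams = [(["Hello", "world"], [-0.8, -0.8]), (["Mint", "live"], [-1.0, -1.0])]
print(rerank(beams)[0][0])  # ['Mint', 'live']
```

In the example, the keyword bonus lifts the lower-likelihood "Mint live" beam (score -1.5) above "Hello world" (score -1.6); a lower per-topic T sharpens the token distribution, and a higher T flattens it.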

3.10.6. Evaluation and Iteration

A continuous feedback loop evaluates each generated tweet along four dimensions: contextual relevance, engagement potential, clarity and coherence, and stylistic quality. This process is driven by expert-based evaluation, where scores are assigned on a 5-point Likert scale, normalized to the range [0, 1], and integrated into the reward function to refine the reinforcement learning process. To benchmark model performance, tweet outputs from each language model (LLaMA, Mistral, and DeepSeek) are independently assessed through domain-informed expert judgment, with model identities withheld to ensure unbiased scoring. A weighted average of the expert scores, with greater emphasis on engagement potential, reflects the practical priorities of NFT-focused content generation.
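The weighted aggregation of expert ratings can be sketched as follows. The weights are hypothetical (the text states only that engagement potential receives the greatest emphasis), and the linear map (s − 1)/4 is one way to place 1–5 Likert scores on [0, 1].

```python
# Hypothetical weights; engagement potential is weighted most heavily.
WEIGHTS = {"relevance": 0.2, "engagement": 0.4, "clarity": 0.2, "style": 0.2}


def likert_to_unit(score: int) -> float:
    """Map a 1-5 Likert score linearly onto [0, 1]."""
    if not 1 <= score <= 5:
        raise ValueError("Likert score must be between 1 and 5")
    return (score - 1) / 4


def weighted_expert_score(ratings: dict) -> float:
    """Weighted average over the four evaluation dimensions."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise KeyError(f"missing ratings: {sorted(missing)}")
    return sum(w * likert_to_unit(ratings[dim]) for dim, w in WEIGHTS.items())


print(weighted_expert_score({"relevance": 3, "engagement": 5, "clarity": 3, "style": 3}))
```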

4. Experimental Procedure

This section outlines the experimental setup used to evaluate the RL-TweetGen system. It includes details on system configuration, dataset preparation, model configuration, and the design of a simulated reinforcement learning environment. These components collectively ensure a comprehensive and rigorous evaluation of the proposed system.

4.1. System Configuration

The RL-TweetGen system was implemented and evaluated on a local high-performance workstation equipped with an NVIDIA Quadro P5000 GPU (16 GB VRAM), CUDA version 12.4, and Python 3.10.12. The system utilized PyTorch 2.4.1 for deep learning and model training, while integration with large language models (LLMs) was facilitated via Hugging Face Transformers v4.41.0. TensorFlow 2.8.0 supported auxiliary tasks. For natural language preprocessing and evaluation, the system employed NLTK, spaCy, scikit-learn, and SentenceTransformers. Engagement-prediction modeling was performed using XGBoost. Logging and visualization were managed with Weights and Biases (wandb), Matplotlib 3.9.2, and Seaborn 0.13.2. This software and hardware configuration enabled efficient tokenization, supervised fine-tuning, reinforcement learning optimization, and evaluation of tweet-generation quality and engagement potential.

4.2. Dataset Preparation

The dataset preparation process for NFT tweet generation was meticulously carried out through a series of steps including dataset selection, sampling, preprocessing, semantic annotation, engagement scoring, and partitioning. Each phase was designed to ensure the quality, representativeness, and relevance of data for training engagement-optimized text-generation models.

Dataset Source: The primary data source was the publicly available "Verified NFT Tweets" dataset from Kaggle [32], which comprises tweets from verified NFT-related accounts posted between 2020 and 2022. This dataset provides a diverse and temporally rich collection of domain-specific content relevant to NFT discourse across various communities.

Sampling Strategy: To curate a high-quality and balanced subset, stratified sampling was employed. The sampling was guided by two key stratification variables:

This resulted in a curated dataset of 1436 representative tweets, ensuring both engagement diversity and semantic coverage.

Preprocessing Steps: To prepare the dataset for downstream modeling tasks, a comprehensive preprocessing pipeline was applied:

Deduplication: Removed exact duplicate tweets.

Minimum Length Filter: Excluded tweets with fewer than 20 characters to eliminate noise.

Language Filtering: Retained only English tweets using the langid library for language detection.

Encoding Normalization: Converted from Latin-1 to UTF-8 to ensure compatibility.

Character Cleaning: Removed corrupted and non-ASCII characters using regular expressions.

Formatting Standardization: Normalized punctuation, whitespace, and special formatting artifacts.

This pipeline ensured clean, consistent input for both semantic and engagement modeling.
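The cleaning steps above can be sketched in plain Python. The ordering here (normalize first, then deduplicate and filter) is a design choice of this sketch, and the language detector is passed in as a function so the example runs without extra packages; with the `langid` package, `lambda t: langid.classify(t)[0]` could play that role. Note that stripping non-ASCII characters, as described, also removes emojis.

```python
import re

# Map typographic quotes and dashes to ASCII before non-ASCII characters are dropped.
PUNCT_MAP = str.maketrans({
    "\u2018": "'", "\u2019": "'",
    "\u201c": '"', "\u201d": '"',
    "\u2013": "-", "\u2014": "-",
})
NON_ASCII_RE = re.compile(r"[^\x00-\x7F]+")


def decode_latin1(raw: bytes) -> str:
    """Encoding normalization: decode Latin-1 bytes into a Unicode string."""
    return raw.decode("latin-1")


def clean_text(text: str) -> str:
    text = text.translate(PUNCT_MAP)   # punctuation normalization
    text = NON_ASCII_RE.sub("", text)  # drop corrupted / non-ASCII characters
    return " ".join(text.split())      # whitespace normalization


def preprocess(tweets, detect_lang, min_chars=20):
    """Clean, then deduplicate, length-filter, and keep English tweets only."""
    seen, kept = set(), []
    for raw in tweets:
        text = clean_text(decode_latin1(raw) if isinstance(raw, bytes) else raw)
        if len(text) < min_chars or text in seen:
            continue
        if detect_lang(text) != "en":
            continue
        seen.add(text)
        kept.append(text)
    return kept
```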

Engagement Score Computation: Each tweet was assigned an Engagement Score, computed using a weighted formula that prioritizes deeper interactions by assigning higher importance to replies and retweets than to likes, following the scoring strategy outlined in Equation (1). To categorize tweets into discrete levels, quantile-based binning was applied, resulting in three engagement classes: Low, Medium, or High. These engagement labels served as key supervision signals during reinforcement learning optimization.
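A sketch of the scoring and binning step follows. The weights are placeholders that merely respect the stated ordering (replies and retweets above likes); the actual coefficients are those of Equation (1). Tertile cut points come from `statistics.quantiles`.

```python
from statistics import quantiles

# Placeholder weights that only respect the stated ordering (replies, retweets > likes);
# the actual coefficients are those of Equation (1).
W_LIKE, W_RETWEET, W_REPLY = 1.0, 2.0, 3.0


def engagement_score(likes: int, retweets: int, replies: int) -> float:
    return W_LIKE * likes + W_RETWEET * retweets + W_REPLY * replies


def bin_engagement(scores):
    """Quantile (tertile) binning into Low / Medium / High."""
    q1, q2 = quantiles(scores, n=3)
    return ["Low" if s <= q1 else "Medium" if s <= q2 else "High" for s in scores]


print(bin_engagement([1, 2, 3, 4, 5, 6, 7, 8, 9]))
# ['Low', 'Low', 'Low', 'Medium', 'Medium', 'Medium', 'High', 'High', 'High']
```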


Semantic Intent Classification: Tweets were also categorized into one of six semantic intent classes, capturing their primary communicative goals: Art, Gaming, Music, Photography, Membership, and Profile Pictures (PFPs).

Annotation Methodology

Weak Supervision: Employed domain-specific keyword heuristics and regular expression rules to generate initial pseudo-labels.

Feature Engineering: Used TF-IDF vectorization including bigrams to capture relevant n-gram patterns.

Model Selection: Evaluated several classifiers; a Gradient Boosting Classifier was selected based on superior validation performance.

This two-stage process enabled high-quality semantic labeling with minimal manual effort.
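The weak-supervision stage can be sketched as a keyword matcher that emits a pseudo-label, or `None` when no rule fires. The keyword lists are hypothetical examples. In the second stage, these pseudo-labels would train a classifier on TF-IDF unigram and bigram features, for instance scikit-learn's `TfidfVectorizer(ngram_range=(1, 2))` feeding a `GradientBoostingClassifier`.

```python
import re

# Hypothetical keyword heuristics for the six intent classes (illustrative only).
INTENT_KEYWORDS = {
    "Art": ["art", "artist", "artwork", "painting"],
    "Gaming": ["game", "gaming", "play-to-earn", "p2e"],
    "Music": ["music", "album", "track", "song"],
    "Photography": ["photo", "photography", "camera"],
    "Membership": ["membership", "access pass", "holders only"],
    "Profile Pictures (PFPs)": ["pfp", "avatar", "profile picture"],
}


def pseudo_label(tweet: str):
    """Return the intent with the most keyword hits, or None if no rule fires."""
    text = tweet.lower()
    hits = {
        label: sum(1 for kw in keywords
                   if re.search(r"\b" + re.escape(kw) + r"\b", text))
        for label, keywords in INTENT_KEYWORDS.items()
    }
    best = max(hits, key=hits.get)
    return best if hits[best] > 0 else None
```

Tweets left unlabeled (`None`) would simply be excluded from the classifier's training set in this sketch.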

Dataset Partitioning: To facilitate effective training, fine-tuning, and evaluation of language models, the dataset was partitioned as follows: 80% Training, 10% Validation, 10% Testing. This stratified split preserved the class distribution across both engagement and semantic categories to avoid data imbalance and ensure fair evaluation.
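A stratified 80/10/10 split can be sketched by grouping tweets on their joint (engagement class, intent class) key and splitting each group separately. The seed is hypothetical, and the test split receives whatever remains after rounding, so very small strata may contribute nothing to validation or test.

```python
import random
from collections import defaultdict


def stratified_split(items, key, fractions=(0.8, 0.1), seed=42):
    """Split items within each stratum so class proportions are preserved.

    fractions gives the train and validation shares; test gets the remainder.
    """
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)
    rng = random.Random(seed)  # hypothetical seed
    train, val, test = [], [], []
    for members in strata.values():
        rng.shuffle(members)
        n = len(members)
        n_train = round(fractions[0] * n)
        n_val = round(fractions[1] * n)
        train.extend(members[:n_train])
        val.extend(members[n_train:n_train + n_val])
        test.extend(members[n_train + n_val:])
    return train, val, test
```

For this dataset, the key would be something like `lambda t: (t["engagement"], t["intent"])`, with field names assumed for illustration.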

4.3. Model Configuration

To evaluate the effectiveness of different strategies in optimizing NFT tweet generation for engagement, we implemented a multi-stage model configuration pipeline. This pipeline integrates baseline generation, supervised fine-tuning, stylistic and contextual diversification, engagement prediction, reinforcement learning, and decoding enhancements. Each stage is detailed below:

Baseline Zero-Shot Tweet Generation:

As an initial benchmark, we assessed the generative capabilities of pretrained instruction-tuned large language models (LLMs) without any domain-specific adaptation or fine-tuning.

Models Evaluated: We employed three open-weight LLMs: LLaMA-3.1-8B Instruct, Mistral-7B Instruct v0.1, DeepSeek LLM 7B Chat.

Prompting Strategy: Prompts were derived from cleaned NFT tweets using templates aligned with instruction-tuning conventions.

Model Inference Details: Generation was performed using the Hugging Face Transformers library with 4-bit quantization through BitsAndBytes to minimize memory overhead and enable multi-model experimentation.

Decoding Configuration: Temperature = 0.9, Top-p = 0.7, and Repetition penalty = 1.1.

Post-Processing: Outputs were truncated to 280 characters (Twitter constraint) and filtered for coherence and completeness.

Supervised Fine-Tuning with LoRA:

To adapt general-purpose LLMs to the NFT domain, we applied Low-Rank Adaptation (LoRA) using the PEFT (Parameter-Efficient Fine-Tuning) approach.

LoRA adapters were injected into the q_proj and v_proj layers in all models, with k_proj and o_proj also used in Mistral for greater expressiveness.

Fine-tuning parameters:


Epochs: 3, Learning rate: , Batch size: 8–16, Sequence length: 64 tokens.

Objective: Causal Language Modeling (CLM) to train next-token prediction.

Inference used decoding settings: temperature = 0.7, top-p = 0.9, repetition penalty = 1.2.

Outputs were limited to 280 characters for platform compatibility.

LSC-Based Diverse Tweet Generation:

To enhance stylistic diversity and better target varied NFT audiences, the proposed system applied the LSC (Length, Style, Context) framework post fine-tuning.

The three LSC dimensions consisted of:

Length: short, medium, long;

Style: formal, informal, excited, casual, professional, friendly, humorous;

Context: art, investment, technology, community, gaming, collectibles, fashion, music.

For each LSC combination, custom prompts were generated using targeted hashtags, emojis, call-to-actions (CTAs), and domain-specific hooks.

Sampling temperatures were dynamically adjusted based on the style dimension. For instance, higher temperatures were used to support creativity in humorous tweets, whereas lower temperatures supported coherence in professional or formal styles.

Engagement Scoring Model:

An XGBoost-based regression model was developed to predict engagement scores for generated tweets and serve as a reward signal.

Input features included: tweet length, word count, presence of emojis and hashtags, TF-IDF vectors, sentiment polarity scores, and part-of-speech tags.

Feature preprocessing was conducted using StandardScaler and ColumnTransformer to normalize numeric and text features appropriately.

The model was trained on historical NFT-related tweets with annotated engagement metrics (likes, retweets, replies).

The predicted engagement score was used as a core component of the reward function during reinforcement learning.

Simulated Reinforcement Learning Environment:

A simulated tweet-generation environment was constructed to optimize output quality using a blended reward mechanism.

Fine-tuned models generated tweets conditioned on various LSC-driven prompt combinations.

The reward signal consisted of a weighted combination of the predicted engagement score (from the XGBoost model) and expert-curated human feedback scores.

A moving average reward baseline was maintained to stabilize policy updates.

The advantage term $A = R - \text{Baseline}$ was used for reinforcement learning. Based on the sign of A, contextual temperature was adjusted:

: temperature was increased to promote exploration.

: temperature was reduced to improve consistency and precision.

Advanced Decoding Strategies:

To balance creativity and relevance in generation, the system implemented three decoding techniques:

Tailored Beam Search (TBS): Beam candidates were re-ranked using NFT-specific heuristics such as keyword alignment, semantic similarity to training data, and domain topic relevance (Equation (

7)).

Enhanced Top-p Sampling: Tokens were selected from the minimal set whose cumulative probability exceeded a threshold $p$, enabling more diverse output while controlling randomness (Equation (8)).

Contextual Temperature Scaling (CTS): The decoding temperature was adapted to the thematic context (e.g., art vs. gaming), ensuring stylistic consistency (Equation (9)).
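Top-p sampling and contextual temperature scaling can be combined in a few lines. The sketch below is illustrative only. It works on a plain list of logits rather than a real model and uses the common "cumulative mass reaches p" form of the nucleus rule. The per-theme temperatures in `THEME_TEMPERATURE` are hypothetical, not the settings used in the study. Tailored Beam Search re-ranking is not shown.

```python
import math
import random

# Hypothetical per-theme temperatures for contextual temperature scaling.
THEME_TEMPERATURE = {"art": 0.9, "gaming": 1.1, "default": 1.0}


def apply_temperature(logits, temperature):
    """Softmax over logits divided by the temperature (numerically stabilized)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, p):
    """Keep the smallest set of most probable token ids whose cumulative
    probability reaches p, then renormalize within that nucleus."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, theme="default", p=0.9, rng=random):
    """Sample one token id using a theme-dependent temperature and top-p."""
    temperature = THEME_TEMPERATURE.get(theme, THEME_TEMPERATURE["default"])
    nucleus = top_p_filter(apply_temperature(logits, temperature), p)
    ids, weights = zip(*nucleus.items())
    return rng.choices(ids, weights=weights, k=1)[0]


rng = random.Random(42)
print(sample_token([2.0, 1.5, 0.3, -1.0], theme="art", p=0.9, rng=rng))
```

Temperature is applied before the nucleus is formed. A lower temperature sharpens the distribution, so fewer tokens are needed to reach $p$.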

4.4. Evaluation and Feedback Loop

A comprehensive evaluation loop was designed using both automated and human-in-the-loop feedback. Human evaluators scored generated tweets on four axes (contextual relevance, engagement potential, clarity and coherence, and stylistic quality) using a 5-point Likert scale. These scores were normalized and reintegrated into the reward function. Additionally, model outputs were benchmarked by sampling tweets per model across three phases: zero-shot, supervised fine-tuning, and RL-optimized generation. Performance was assessed using predicted engagement, Likert-based feedback scores, and qualitative analysis, allowing detailed comparison across models and stages.
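The text does not specify how Likert ratings were normalized before entering the reward. One plausible choice, shown here purely as an assumption, is min-max scaling of each 1 to 5 rating onto [0, 1], followed by an unweighted mean over the four axes. The axis keys are hypothetical names.

```python
AXES = ("relevance", "engagement", "clarity", "style")  # hypothetical keys for the four axes


def normalize_likert(score, low=1, high=5):
    """Min-max map a Likert rating from [low, high] onto [0, 1]."""
    if not low <= score <= high:
        raise ValueError(f"rating {score} outside [{low}, {high}]")
    return (score - low) / (high - low)


def human_feedback_score(ratings):
    """Unweighted mean of the normalized ratings across the four axes."""
    return sum(normalize_likert(ratings[axis]) for axis in AXES) / len(AXES)


print(human_feedback_score({"relevance": 5, "engagement": 3, "clarity": 4, "style": 2}))  # 0.625
```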

4.5. Statistical and Reliability Analysis

To ensure the robustness and interpretability of our evaluation results, we incorporated the following statistical tests, variance reporting, and inter-rater reliability measures:

Assumptions and Variance Testing: Before conducting statistical comparisons between model outputs, we performed Levene’s test to assess homogeneity of variances. For normally distributed metrics, ANOVA was used; otherwise, non-parametric tests were employed.

Statistical Significance Testing: Pairwise model comparisons were conducted using the Wilcoxon signed-rank test (non-parametric) and validated through pairwise bootstrap resampling with iterations to assess the stability of observed differences. Statistical significance was reported at .
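The paired bootstrap comparison can be sketched as follows. The number of iterations was not preserved in the text, so the default of 10,000 is an assumption. The percentile interval and the one-sided proportion used here as an approximate p-value are also assumptions. For the Wilcoxon signed-rank test itself, SciPy provides `scipy.stats.wilcoxon`.

```python
import random
from statistics import mean


def paired_bootstrap(scores_a, scores_b, iterations=10_000, seed=0):
    """Paired bootstrap over per-item differences (A - B).

    Returns the observed mean difference, a 95% percentile interval for it,
    and the share of resamples in which A did not beat B (a one-sided p-value estimate).
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("paired score lists must be non-empty and equal in length")
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    # Resample items with replacement, keeping each A/B pair together.
    boot = sorted(mean(rng.choices(diffs, k=n)) for _ in range(iterations))
    lower = boot[int(0.025 * iterations)]
    upper = boot[int(0.975 * iterations) - 1]
    p_one_sided = sum(d <= 0 for d in boot) / iterations
    return mean(diffs), (lower, upper), p_one_sided


print(paired_bootstrap([0.80, 0.90, 0.85, 0.95, 0.90], [0.60, 0.70, 0.65, 0.70, 0.75], iterations=2000))
```

Resampling differences rather than the two score lists independently preserves the pairing: both models are scored on the same prompts.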

Error Bars, Standard Deviation, and Confidence Intervals: All reported metric means are accompanied by standard deviations. For plots, 95% confidence intervals were visualized using error bars to illustrate variance across multiple runs and prompt conditions.

Engagement Model Reporting: The XGBoost engagement predictor achieved an $R^2$ score of 0.823 and an RMSE of 0.041 (normalized scale) on the test set. Input features included tweet length, word count, emoji/hashtag presence, sentiment polarity, TF-IDF vectors, and POS-tag proportions. A log1p transformation was applied to likes, retweets, and replies to mitigate skewness.
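The log1p target transform and the two reported regression metrics are simple to state in code. The sketch below is illustrative. The text does not say how the three transformed counts were combined into a single engagement target, or how the normalized scale was defined, so the example stops at the per-count transform.

```python
import math


def log_engagement(counts):
    """Apply log(1 + x) to raw likes/retweets/replies counts to tame their long tail."""
    return {name: math.log1p(value) for name, value in counts.items()}


def rmse(y_true, y_pred):
    """Root-mean-squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mu = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mu) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


print(log_engagement({"likes": 1200, "retweets": 85, "replies": 0}))
```

`log1p` maps a count of zero to exactly zero. This is why it is preferred over a plain logarithm for tweets that received no replies.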

Inter-Rater Reliability: Agreement among the three annotators' human evaluation scores was tested using both Cohen's κ (pairwise) and Krippendorff's α (multi-rater). Results indicated substantial agreement on both measures.
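For reference, unweighted Cohen's κ for one pair of annotators can be computed as below. This is a sketch, not the authors' script. For ordinal Likert ratings a weighted κ is often preferred, and scikit-learn offers `sklearn.metrics.cohen_kappa_score` with a `weights` option. Krippendorff's α for all three raters is not shown.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e).

    Undefined (division by zero) when chance agreement p_e equals 1,
    e.g. when both raters always use the same single label.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    labels = count_a.keys() | count_b.keys()
    p_e = sum(count_a[k] * count_b[k] for k in labels) / (n * n)
    return (p_o - p_e) / (1 - p_e)


print(round(cohens_kappa([5, 4, 4, 3, 5, 2], [5, 4, 3, 3, 5, 2]), 3))
```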

Semantic Similarity Metrics: In addition to BLEU, ROUGE, and METEOR, we included BERTScore (F1) using a pre-trained all-MiniLM-L6-v2 model from SentenceTransformers to capture semantic alignment between generated and reference tweets.

5. Results

This section validates the effectiveness of the proposed RL-TweetGen system through quantitative and qualitative analysis. It shows how each component contributes to performance gains and how reinforcement learning affects the generation of engagement-optimized tweets. It covers a comparative evaluation of tweet semantic classification, lexical and semantic performance metrics, and a detailed analysis across baseline and fine-tuned models. Throughout, it places particular emphasis on the role of reinforcement learning in improving output quality and engagement relevance.

5.1. Comparative Analysis for Tweet Semantic Classification

To assess the effectiveness of the semantic classification pipeline, all models were trained and evaluated using stratified splits of the annotated dataset. The performance of each model was measured using accuracy, precision, recall, and F1-score, as summarized in Table 4 and visualized in Figure 6.

Among the classifiers, Gradient Boosting achieved the best overall results, with a validation accuracy of %, precision of 0.76, recall of 0.76, and F1-score of 0.75, outperforming all other classifiers evaluated in this study, including Logistic Regression (61%), Linear SVM (68%), SGD Classifier (70%), Random Forest (66%), and Decision Tree (69%).

Gradient Boosting works by iteratively refining predictions through the stage-wise addition of weak learners. At each iteration $m$, it updates the model using the formula shown in Equation (10):

$$F_m(x) = F_{m-1}(x) + \eta \, h_m(x)$$

where $F_{m-1}(x)$ is the ensemble prediction from the previous iteration, $h_m(x)$ is the weak learner trained on the residuals (i.e., the negative gradient of the loss function), and $\eta$ is the learning rate that controls the contribution of each learner.

This process allows the model to progressively correct errors and capture complex nonlinear relationships. It primarily reduces bias, while the learning rate acts as shrinkage that limits overfitting. Its superior performance in capturing semantic patterns in NFT-related tweets supports its selection as the final classifier for generating structured labels in downstream tasks.
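The update in Equation (10) can be demonstrated with a toy regressor. This sketch makes two assumptions. It uses squared-error loss, for which the negative gradient is simply the residual. It uses one-dimensional inputs with decision stumps as weak learners. The study's classifier ran on multi-dimensional text features with a classification loss, which this does not reproduce.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump to the residuals by minimizing squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all inputs identical: fall back to a constant learner
        constant = sum(residuals) / len(residuals)
        return lambda x: constant
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, eta=0.1):
    """Stage-wise boosting: F_m(x) = F_{m-1}(x) + eta * h_m(x)."""
    f0 = sum(ys) / len(ys)  # F_0: the constant that minimizes squared error
    learners = []

    def predict(x):
        return f0 + eta * sum(h(x) for h in learners)

    for _ in range(n_rounds):
        # For the loss (1/2)(y - F)^2, the negative gradient w.r.t. F(x) is the residual y - F(x).
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        learners.append(fit_stump(xs, residuals))
    return predict


model = gradient_boost([0, 1, 2, 3], [0.0, 0.0, 1.0, 1.0], n_rounds=100, eta=0.1)
print(round(model(0), 3), round(model(3), 3))
```

With $\eta = 0.1$, each round closes only a tenth of the remaining gap. This shrinkage is what the learning rate in Equation (10) controls.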

5.2. Lexical Performance Evaluation: Metrics and Results

Lexical metrics reveal how closely generated tweets match reference tweets at the surface level, focusing on n-gram precision, recall, and sequence similarity. We report BLEU, METEOR, ROUGE-1, ROUGE-2, and ROUGE-L. As shown in Table 5, lexical performance varied considerably across models and training stages.

5.2.1. BLEU Score

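The BLEU (Bilingual Evaluation Understudy) score computes modified precision over n-grams. A brevity penalty (BP) is applied to penalize overly short hypotheses. The BLEU score is computed using the formula shown in Equation (11):

$$\text{BLEU} = \text{BP} \cdot \exp\left( \sum_{n=1}^{N} w_n \log p_n \right), \qquad \text{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases}$$

where $w_n$ is the weight assigned to the $n$-gram precision $p_n$, BP is the brevity penalty, and $c$ and $r$ are the candidate and reference lengths.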
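A single-reference, sentence-level BLEU following Equation (11) can be sketched as below, with uniform weights $w_n = 1/N$. The small `eps` floor on zero precisions is an assumption that keeps the logarithm finite on short tweets. Established toolkits such as NLTK or SacreBLEU apply proper smoothing methods instead, so scores from this sketch will not match theirs exactly.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4, eps=1e-9):
    """Single-reference BLEU with uniform weights w_n = 1 / max_n."""
    if not candidate:
        return 0.0
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
        # Modified precision: each candidate n-gram count is clipped by its reference count.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = max(sum(cand.values()), 1)
        p_n = max(clipped / total, eps)  # assumed floor instead of a real smoothing method
        log_sum += math.log(p_n) / max_n
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_sum)


ref = "huge nft drop coming tonight".split()
hyp = "new nft drop tonight".split()
print(round(sentence_bleu(hyp, ref, max_n=2), 4))
```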

For the BLEU scores, which reflect the n-gram precision of generated tweets against references, the Mistral model demonstrated clear and consistent improvement across all training stages. Starting with a BLEU score of 0.2144 at the base stage, it increased to 0.2240 after domain-specific fine-tuning and further rose to 0.2285 following reinforcement learning optimization. This steady upward trend indicates that both fine-tuning and the engagement-driven RL reward were effective in aligning Mistral's lexical choices with those found in authentic NFT tweets.

In contrast, the LLaMA model experienced only a modest improvement: it began with a BLEU score of 0.2058 at the base stage, climbed to 0.2110 after fine-tuning, but then slightly declined to 0.2075 post-RL. This suggests that while LLaMA benefited somewhat from exposure to NFT tweet data during fine-tuning, the RL stage did not consistently reinforce lexical alignment, possibly due to increased generation diversity aimed at engagement.

Meanwhile, the DeepSeek model exhibited minimal variation in BLEU scores during training, consistently ranging between 0.1890 and 0.1900. This stagnation suggests a limited ability to capture or adapt to the nuanced lexical patterns characteristic of NFT-related tweets, potentially due to architectural constraints or less domain-aligned pretraining data.

5.2.2. METEOR Score

METEOR (Metric for Evaluation of Translation with Explicit ORdering) improves upon BLEU by combining unigram precision and recall while introducing a penalty for fragmented alignments. The score is computed using Equation (12):

$$\text{METEOR} = F_{\text{mean}} \cdot (1 - P)$$

where $F_{\text{mean}}$ is the harmonic mean of unigram precision and recall (weighted toward recall in the standard formulation), and $P$ is the fragmentation penalty based on the number of chunks in the alignment.
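A simplified METEOR consistent with Equation (12) is sketched below. It is an approximation under explicit assumptions:

- Only exact unigram matches are used, with no stemming or synonym modules.
- The alignment is greedy rather than the crossing-minimizing alignment of the original metric.
- The parameters follow the widely used setting α = 0.9, β = 3, γ = 0.5, so that F_mean = PR / (αP + (1 − α)R) and the penalty is γ(chunks / matches)^β.

```python
def meteor_exact(candidate, reference, alpha=0.9, beta=3.0, gamma=0.5):
    """Simplified METEOR using exact unigram matches and a greedy alignment."""
    used = set()
    alignment = []  # (candidate position, reference position) pairs
    for i, token in enumerate(candidate):
        for j, ref_token in enumerate(reference):
            if j not in used and token == ref_token:
                used.add(j)
                alignment.append((i, j))
                break
    matches = len(alignment)
    if matches == 0:
        return 0.0
    precision = matches / len(candidate)
    recall = matches / len(reference)
    # Recall-weighted harmonic mean; alpha = 0.9 gives 10PR / (R + 9P).
    f_mean = precision * recall / (alpha * precision + (1 - alpha) * recall)
    # A chunk is a maximal run of matches that are contiguous in both sequences.
    chunks = 1
    for (i1, j1), (i2, j2) in zip(alignment, alignment[1:]):
        if i2 != i1 + 1 or j2 != j1 + 1:
            chunks += 1
    penalty = gamma * (chunks / matches) ** beta
    return f_mean * (1 - penalty)


print(meteor_exact("new nft drop tonight".split(), "huge nft drop coming tonight".split()))
```

Reordering the same words leaves precision and recall unchanged but increases the chunk count, so the fragmentation penalty alone lowers the score.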

The METEOR scores, which evaluate phrase-level alignment through a combination of precision, recall, and semantic similarity, revealed contrasting dynamics across the three models. Mistral began with a METEOR of 0.3120 in the zero-shot baseline and showed a modest improvement to 0.3164 after supervised fine-tuning, demonstrating its ability to better capture paraphrastic variations and synonymic patterns common in NFT discourse. However, after reinforcement learning, its METEOR score dipped slightly to 0.3110. This suggests that while the RL phase optimized for engagement, it may have introduced more creative but less directly reference-aligned phrasing, slightly sacrificing phrase-level precision for diversity.

Conversely, LLaMA exhibited a gradual rise, with its METEOR peaking at 0.3169 after RL fine-tuning—higher than both its base and fine-tuned-only scores—indicating that LLaMA benefited from the RL stage in terms of learning richer and more flexible phrase patterns that better matched NFT tweet references.

DeepSeek, however, exhibited comparatively lower METEOR scores, never surpassing 0.2399 at any stage. This consistent lag suggests it struggled to capture the syntactic and phrasal patterns typical of NFT marketing tweets, highlighting its limited adaptability to the nuanced linguistic demands of this specialized domain.

5.2.3. ROUGE Metrics

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) evaluates the quality of generated text by measuring its overlap with reference text. ROUGE-1, ROUGE-2, and ROUGE-L assess unigram, bigram, and longest common subsequence (LCS) similarity, respectively, as shown in Equations (13)–(15).
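The three ROUGE variants can be sketched as follows for a single reference. This is an illustrative implementation that assumes whitespace tokenization, no stemming, and F1 computed with equal weight on precision and recall. Packages such as Google's `rouge-score` add tokenization and stemming options, so their numbers may differ.

```python
from collections import Counter


def ngram_counter(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n):
    """ROUGE-N recall, precision and F1 from clipped n-gram overlap."""
    cand, ref = ngram_counter(candidate, n), ngram_counter(reference, n)
    overlap = sum((cand & ref).values())  # Counter '&' keeps the minimum count per n-gram
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(cand.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return recall, precision, f1


def lcs_length(a, b):
    """Length of the longest common subsequence via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(candidate, reference):
    """ROUGE-L F1 from LCS-based precision and recall (equal weighting)."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    p, r = lcs / len(candidate), lcs / len(reference)
    return 2 * p * r / (p + r)


cand = "new nft drop tonight".split()
ref = "huge nft drop coming tonight".split()
print(rouge_n(cand, ref, 1), rouge_n(cand, ref, 2), rouge_l(cand, ref))
```

ROUGE-L rewards in-order matches that need not be contiguous. Here "nft drop tonight" counts fully even though "coming" interrupts it in the reference.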

Among the three models, Mistral showed the most consistent improvements across the ROUGE metrics. ROUGE-1 increased steadily from 0.3362 (base) to 0.3484 (fine-tuned) and further to 0.3542 after RL. ROUGE-2 improved from 0.2178 to 0.2409 with fine-tuning but declined slightly to 0.2357 post-RL. ROUGE-L rose from 0.3137 (base) to 0.3366 (fine-tuned) and peaked at 0.3413 with RL. These gains indicate that Mistral became increasingly effective at reproducing not just key lexical items but also the structural patterns characteristic of NFT-related social media content.

In contrast, LLaMA demonstrated particular strength in semantic fluency and phrase-level diversity. Its ROUGE-2 score improved from 0.2350 to 0.2521 after fine-tuning and further increased to 0.2588 following RL—representing the highest bigram overlap among all models. However, ROUGE-1 and ROUGE-L showed a marginal decline post-RL, decreasing from 0.3368 to 0.3305 and from 0.3315 to 0.3281, respectively. This suggests that while RL enhanced LLaMA’s expressive variation, it slightly reduced its ability to reproduce exact lexical sequences and structural matches.

DeepSeek consistently delivered weaker performance across all evaluation stages. Although fine-tuning led to modest improvements, such as an increase in ROUGE-1 from 0.2422 to 0.2471 and in ROUGE-2 from 0.2008 to 0.2118, the reinforcement learning phase did not yield meaningful gains. In fact, some metrics declined slightly, with ROUGE-2 dropping to 0.2074 and ROUGE-L to 0.2341. These findings indicate DeepSeek's limited effectiveness in capturing domain-specific lexical patterns and in reproducing the stylistic and structural nuances characteristic of NFT-related tweets.

Collectively, the ROUGE-based analysis highlights the distinct strengths of each model. Mistral excels in lexical fidelity and structural fluency, making it well suited to precise, high-clarity tweet generation. LLaMA leads in bigram overlap and phrase-level diversity, positioning it well for stylistically varied and engaging content. DeepSeek trails on all three ROUGE metrics.

5.3. Semantic Performance Evaluation: Metrics and Results

Lexical scores alone cannot guarantee that meaning is preserved. We therefore computed BERT-based metrics and cosine similarity to evaluate whether generated tweets capture the semantics of the references. As shown in Table 6, BERT-based and cosine similarity scores indicate that semantic consistency improves after fine-tuning.

5.3.1. BERTScore

BERTScore measures semantic similarity between a generated tweet and its reference by computing cosine similarity between contextualized BERT embeddings.

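Let $\hat{x}$ and $x$ be the token sets of the candidate and reference, respectively. The score is:

$$P_{\text{BERT}} = \frac{1}{|\hat{x}|} \sum_{\hat{x}_j \in \hat{x}} \max_{x_i \in x} \cos\big(E(\hat{x}_j), E(x_i)\big), \qquad R_{\text{BERT}} = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} \cos\big(E(x_i), E(\hat{x}_j)\big)$$

$$F_{\text{BERT}} = \frac{2 \, P_{\text{BERT}} \, R_{\text{BERT}}}{P_{\text{BERT}} + R_{\text{BERT}}}$$

where $E(\cdot)$ denotes BERT embeddings and cos is cosine similarity.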
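The greedy matching behind BERTScore is easy to see once embeddings are available. In this sketch, tiny hand-written vectors stand in for contextual BERT outputs, an assumption made so the example runs without a model. The `bert-score` package provides the reference implementation, including optional IDF weighting and baseline rescaling, both of which this sketch omits.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def bertscore_greedy(cand_emb, ref_emb):
    """Greedy-matching precision, recall and F1 over per-token embeddings."""
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(cosine(c, r) for r in ref_emb) for c in cand_emb) / len(cand_emb)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(cosine(r, c) for c in cand_emb) for r in ref_emb) / len(ref_emb)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy 3-d "embeddings" standing in for contextual BERT vectors (purely illustrative).
candidate = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.2]]
reference = [[1.0, 0.0, 0.0], [0.1, 0.9, 0.3], [0.0, 0.0, 1.0]]
print(bertscore_greedy(candidate, reference))
```

Because matching is soft, a paraphrased token can still score highly. This is the property that lets BERTScore reward meaning-preserving rewording that n-gram metrics miss.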

Semantic analysis based on BERTScore F1 revealed a consistent improvement in Mistral's ability to generate tweets that not only match target vocabulary but also capture the underlying meaning and conceptual alignment of reference NFT tweets. Specifically, Mistral's BERT_F1 increased from 0.8240 in the zero-shot baseline to 0.8323 following supervised fine-tuning, culminating at 0.8461 after reinforcement learning. This steady upward trend indicates that each stage of adaptation enabled Mistral to produce outputs with higher semantic fidelity, capturing nuanced references, context-specific terms, and implied meanings critical in NFT marketing discourse.

Conversely, LLaMA exhibited a smaller improvement trajectory, with BERT_F1 peaking at 0.8155 post-RL. While this suggests some gains in phrase-level understanding, it consistently lagged behind Mistral, highlighting LLaMA’s relative difficulty in grasping nuanced NFT-specific semantics even after fine-tuning and RL optimization.