Abstract

This work investigates the utility of multimodal vision–language models (VLMs) for visual product understanding in e-commerce, focusing on two complementary models: ColQwen2 (vidore/colqwen2-v1.0) and ColPali (vidore/colpali-v1.2-hf). These models are integrated into two architectures and evaluated on several product interpretation tasks, including image-grounded question answering, brand recognition and visual retrieval from natural language prompts. ColQwen2, built on the Qwen2-VL backbone with LoRA-based adapter hot-swapping, performs strongly and enables end-to-end image querying and text response synthesis. It excels at identifying attributes such as brand, color or usage from product images alone and responds fluently to user questions. In contrast, ColPali, which uses the PaliGemma backbone, is oriented toward explainable retrieval. It produces detailed visual-token alignment maps that reveal how specific image regions contribute to retrieval decisions, offering transparency suited to diagnostic or educational applications. Comparative experiments on footwear imagery show that ColQwen2 is highly effective at generating accurate responses to product-related questions, while ColPali provides fine-grained visual explanations that reinforce trust and model accountability.

1. Introduction

Extracting information from product images, and letting users ask questions about products based on those images, is useful in e-commerce. For example, AI can analyze product photos to identify key attributes such as category, color, material or style automatically [1,2]. This streamlines product listing by reducing the need for manual tagging or description writing, and it improves consistency across the catalog. Visual search and classification is another useful application [3]. A customer can upload a photo of a product they saw somewhere (a chair in a magazine, say, or a jacket worn by someone on the street), and the system finds visually similar items available for sale [4]. This makes discovery more intuitive and engaging, especially in sectors such as fashion, furniture and home decoration [5,6]. Extracting information from images also supports quality control and trust. For example, e-commerce platforms may use AI to flag counterfeit items by comparing product photos with a library of genuine images. Similarly, user-uploaded content such as review photos can be screened automatically for inappropriate or poor-quality images [7].

Customers may also ask questions about products based on images. For instance, someone might upload a picture and ask, “Is this bag leather or synthetic?” or “What’s the size of this table?” Using multimodal AI models that understand both images and text, the system either infers the answer directly from the image or retrieves it from the linked product database. This capability enhances the overall customer experience [8]. It also enables smarter recommendations: combining a user’s preferences with visual features extracted from product images makes suggestions feel more tailored [9]. Another study used multimodal features to predict product returns [10]. Multimodal features have further been used in e-commerce to predict customer needs and sales and to detect emotions [11,12,13].

Recent advances in VLMs have made it possible to integrate image and text understanding. Our research explores two such models, ColQwen2 and ColPali, in the e-commerce field. ColQwen is a family of VLMs built on the Qwen2-VL architecture, which integrates a vision transformer (ViT) frontend with a transformer-based language decoder. Its hallmark feature is the use of lightweight, LoRA-based adapters that can be “hot-swapped” at runtime to pivot between dense retrieval and multimodal generation [14,15]. In retrieval mode, ColQwen projects visual patches and text queries into a shared embedding space, performing ColBERT-style token-level matching to efficiently surface the most relevant images or document pages. When switched to generation mode, the same underlying model (and often the identical weights) serves as an image-grounded text generator, capable of answering questions directly from visual context without the need for external OCR or layout parsers [16]. Because only small adapter modules are trained for each task, ColQwen requires less memory overhead than maintaining two separate models, and it can run on modest GPUs such as those provided by Google Colab’s free tier. Its primary limitations stem from model scale: smaller checkpoints (e.g., 2 billion parameters) may struggle with complex chart understanding or detailed scene reasoning, leading to occasional hallucinations in generated answers, while larger variants demand more VRAM and computation [17,18].
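The memory argument above can be made concrete with a weights-only, back-of-the-envelope estimate that compares one shared backbone plus a LoRA adapter with two full copies of the model. Only the 2-billion-parameter figure comes from the text; 16-bit weights, adapter rank 32, and 28 layers with four 1536 × 1536 adapted projections each are illustrative assumptions rather than the published ColQwen2 configuration, and activations, KV caches and optimizer state are ignored.

```python
# Back-of-the-envelope weight-memory estimate (weights only; activations,
# KV cache and optimizer state are ignored). All sizes below are assumptions.

GIB = 2 ** 30


def weight_memory_gib(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold n_params weights, in GiB (2 bytes = fp16/bf16)."""
    return n_params * bytes_per_param / GIB


def lora_params(rank: int, d_in: int, d_out: int, n_matrices: int) -> int:
    """Extra parameters added by LoRA: each adapted d_out x d_in matrix gains
    factors B (d_out x rank) and A (rank x d_in)."""
    return n_matrices * rank * (d_in + d_out)


BACKBONE_PARAMS = 2e9  # "2 billion parameters", as quoted in the text
# Hypothetical adapter: rank 32 on 4 square 1536x1536 projections in each of 28 layers.
adapter = lora_params(rank=32, d_in=1536, d_out=1536, n_matrices=4 * 28)

shared = weight_memory_gib(BACKBONE_PARAMS + adapter)  # one backbone + one adapter
separate = 2 * weight_memory_gib(BACKBONE_PARAMS)      # two full models

print(f"adapter parameters: {adapter:,}")
print(f"shared backbone + adapter: {shared:.2f} GiB")
print(f"two separate models:       {separate:.2f} GiB")
```

Under these assumptions the adapter adds about 11 million parameters (roughly half a percent of the backbone), so one backbone with a swappable adapter needs close to half the weight memory of two separate models.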

ColPali, by contrast, is geared explicitly toward explainable retrieval [19,20]. Built atop Google’s PaliGemma vision-language backbone, it focuses on projecting images and text into a dense, multi-vector space via a single retrieval adapter. Rather than generating prose, ColPali excels at producing token-level similarity maps that highlight which regions of an image align most strongly with individual words or subwords of a query. These heatmaps provide clear, interpretable insight into the model’s reasoning, a feature prized in research, diagnostics and educational applications where transparency is paramount [21]. Because PaliGemma is architecturally larger (approximately 3 billion parameters in its mixed configuration), ColPali typically requires more memory and can only process one image at a time in its default adapter setup. It also lacks end-to-end generation capability, meaning that any answer synthesis must rely on an external language model or manual interpretation of similarity outputs.
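A token-level similarity map of the kind described above can be sketched as follows: for each query-token embedding, take its dot product with every image-patch embedding and arrange the scores on the patch grid, so the highest cells mark the regions that token matches best. This is a minimal pure-Python illustration; the 2-dimensional vectors and the 2 × 2 grid are made-up toy values, whereas ColPali produces the embeddings itself and uses a much larger grid.

```python
from typing import List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def similarity_maps(query_tokens: List[Vector], patches: List[Vector],
                    n_rows: int, n_cols: int) -> List[List[List[float]]]:
    """Return one n_rows x n_cols map of dot-product scores per query token.

    patches must be listed in row-major order (left to right, top to bottom).
    """
    if len(patches) != n_rows * n_cols:
        raise ValueError("patch count must equal n_rows * n_cols")
    maps = []
    for q in query_tokens:
        scores = [dot(q, p) for p in patches]
        maps.append([scores[r * n_cols:(r + 1) * n_cols] for r in range(n_rows)])
    return maps


def hottest_cell(sim_map: List[List[float]]) -> Tuple[int, int, float]:
    """(row, col, score) of the best-matching patch, e.g. for a heatmap label."""
    return max(((r, c, s) for r, row in enumerate(sim_map) for c, s in enumerate(row)),
               key=lambda t: t[2])


# Toy values, not model outputs: token 0 aligns with the top-left patch,
# token 1 with the bottom-right patch.
patches = [[1.0, 0.0], [0.5, 0.5], [0.2, 0.1], [0.0, 1.0]]
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
maps = similarity_maps(query_tokens, patches, n_rows=2, n_cols=2)
for i, m in enumerate(maps):
    print(i, m, hottest_cell(m))
```

Drawing each map as a heatmap over the image, after interpolating it from patch resolution up to pixel resolution, gives the per-token overlays described above.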

Taken together, ColQwen and ColPali occupy two complementary niches in modern multimodal AI. ColQwen offers a unified, efficient solution for systems that must both retrieve pertinent visual evidence and produce fluent, grounded answers. ColPali delivers strong interpretability for applications where understanding the “why” behind retrieval is as important as the retrieval itself [22]. Neither model is a panacea: ColQwen’s strength in generation can come at the cost of reduced clarity about how it arrived at a given answer, while ColPali’s transparency comes with the trade-off of requiring downstream tools for any actual text production. Researchers and practitioners should therefore choose between or combine these tools based on the particular use case.

The primary objective of our research is to evaluate and compare two state-of-the-art vision–language models, ColQwen2 and ColPali, in their ability to transform visual product understanding and multimodal interaction within e-commerce contexts. As online retail platforms increasingly rely on visual content to engage users, the need for intelligent systems that can interpret, retrieve and reason over product images becomes essential. Our paper investigates how these models can be leveraged not only to automate backend operations such as tagging, classification and catalog curation, but also to enhance frontend applications, including Visual Question Answering (VQA), intelligent search and conversational customer support. Specifically, we aim to

- (a) Demonstrate the effectiveness of ColQwen2 pipelines for image-grounded question answering in e-commerce applications;

- (b) Assess the explainability potential of ColPali through the token-level similarity maps it generates, which offer interpretable visual reasoning;

- (c) Establish a comparative framework for selecting and integrating these models in diverse e-commerce use cases, from automated cataloging to customer interaction;

- (d) Highlight the operational trade-offs between multimodal generation capabilities and interpretability, supporting informed deployment decisions in practical retail environments.

The contribution to the field of e-commerce is both technological and operational. Technologically, our research showcases how cutting-edge multimodal AI can streamline the traditionally labor-intensive processes of image annotation, product metadata generation and consumer inquiry handling. Operationally, it provides a blueprint for retailers and developers to adopt flexible, lightweight and transparent AI architectures that suit various stages of the product lifecycle from onboarding and quality control to real-time user engagement.

The novelty of this research lies in three key areas. First, it presents a dual-model comparative evaluation on real-world product imagery, exploring how ColQwen2 and ColPali behave under identical prompts and visual conditions. Second, it introduces a unified methodological framework for e-commerce applications using adapter-based model switching in ColQwen2 that enables both dense image retrieval and grounded answer generation without the need for multiple disjoint systems. Third, it integrates explainability into the evaluation of multimodal models in retail by visually analyzing token-image alignment, a dimension often overlooked in performance-driven benchmarks.

The originality and significance of our work consist of the following:

- Comparative evaluation in real-world contexts. Although ColQwen2 and ColPali are both available on the Hugging Face platform, our research contributes the first comprehensive head-to-head evaluation of these two VLMs under identical e-commerce scenarios, using product imagery.

- Novel methodological framework. Our work introduces a Retrieval-Augmented Generation (RAG) pipeline for e-commerce that uses adapter hot-swapping to integrate retrieval and generation within a single lightweight architecture. This removes the need for fragmented RAG systems and supports efficient multimodal model deployment.

- Explainability in VLMs. A notable advancement is our treatment of token-level visual reasoning (via ColPali) as a core evaluation dimension rather than a peripheral add-on. By visualizing token–image alignment, our research advances interpretability in multimodal AI.

- Operationalization of AI trust in e-commerce. From a theoretical standpoint, our research links technical model capabilities to trustworthy AI deployment in commercial settings.

Our research presents architectural innovations integrating the core models (vidore/colqwen2-v1.0 and vidore/colpali-v1.2-hf) and methodological advancements.

2. Literature Review

2.1. Visual Language Models

Earlier visual recognition studies relied on crowd-labeled data and trained a separate deep neural network (DNN) for each task, making the process laborious and time-consuming [21]. To overcome these challenges, researchers investigated VLMs, which learn from large-scale image–text pairs and enable zero-shot predictions across tasks. That survey systematically reviewed VLMs for visual recognition, covering their foundations, pre-training methods, datasets, transfer techniques, benchmarking results and future research directions. Large-scale contrastive pretraining advanced vision–language learning [3] by aligning images with open-vocabulary text descriptions, departing from traditional label-based training. To reduce reliance on manual prompt engineering for downstream tasks, methods such as context optimization were introduced to learn prompts automatically. That study proposed CLIP-Adapter, a simple alternative that fine-tuned models by adding bottleneck layers to the visual or language branch and blending the new features with the pretrained ones. In extensive experiments and ablation studies, CLIP-Adapter outperformed context optimization on multiple visual classification benchmarks.
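The feature blending in CLIP-Adapter can be written compactly. In the original formulation for the visual branch, f is the pretrained CLIP image feature, W_1^v and W_2^v are the weights of a two-layer bottleneck adapter, and the residual ratio α ∈ [0, 1] controls how much of the adapted feature is mixed in; the text branch is treated analogously:

$$
A_v(f) = \mathrm{ReLU}\!\left(f^{\top} W_1^{v}\right) W_2^{v}, \qquad
f^{\star} = \alpha\, A_v(f)^{\top} + (1 - \alpha)\, f
$$

Setting α = 0 recovers the pretrained CLIP feature unchanged, while α = 1 uses only the adapter output.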

VLMs trained with contrastive pre-training demonstrated strong zero-shot classification abilities [23] but struggled with imbalanced datasets, where minority classes were poorly predicted. For example, CLIP achieved only 5% accuracy on iNaturalist18. To address this, the authors added a lightweight decoder to VLMs to handle many classes efficiently and better capture the features of rare categories. They further enhanced VLMs using prompt tuning, fine-tuning and imbalanced learning strategies such as Focal Loss, Balanced Softmax and Distribution Alignment. Experiments showed that the improved models significantly boosted classification performance on ImageNet-LT, iNaturalist18 and Places-LT. The study also analyzed the effects of pre-training dataset size, model backbone and training cost, underscoring the importance of applying imbalanced learning techniques even to large-scale pretrained VLMs. Moreover, pre-trained VLMs such as CLIP enabled flexible zero-shot learning by matching images with text descriptions, but their practical use was limited by the need for careful and time-consuming prompt design [24]. To overcome this, the authors proposed Context Optimization (CoOp), a method that learned contextual vectors in place of manual prompts while keeping the base model unchanged. They introduced two variants, shared and class-specific contexts, and showed that CoOp consistently outperformed manual prompts across 11 datasets, even with very limited labeled data, and achieved strong generalization to new domains. Additionally, with the growing use of pre-trained VLMs like CLIP, researchers explored ways to adapt these models to new tasks [25]. CoOp had introduced prompt learning to vision tasks by replacing static prompt words with trainable vectors, achieving strong performance with only a few labeled examples. 
However, this study identified that CoOp tended to overfit to seen classes and struggled to generalize within the same dataset. To address this, the authors proposed Conditional Context Optimization (CoCoOp), which generated input-specific prompts using a lightweight network. Unlike CoOp’s fixed prompts, CoCoOp’s dynamic approach improved generalization to unseen classes and even demonstrated cross-dataset and domain transferability in experiments.
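The difference between the two prompt-learning schemes can be stated compactly in the notation of the original CoOp and CoCoOp papers: v_1, …, v_M are learnable context vectors, c_i is the word embedding of the name of class i, g is the frozen text encoder, f is the image feature of input x, τ is a temperature and K is the number of classes. CoOp learns one context shared by all inputs:

$$
t_i = [v_1, v_2, \dots, v_M, c_i], \qquad
p(y = i \mid x) = \frac{\exp\!\big(\cos(g(t_i), f)/\tau\big)}{\sum_{j=1}^{K} \exp\!\big(\cos(g(t_j), f)/\tau\big)}
$$

CoCoOp adds a lightweight meta-network h_θ whose output shifts every context vector, so the prompt becomes conditioned on the input image:

$$
\pi = h_\theta(x), \qquad v_m(x) = v_m + \pi, \qquad t_i(x) = [v_1(x), v_2(x), \dots, v_M(x), c_i]
$$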

Because pretrained models were able to learn broad semantic knowledge without relying on extensive labeled data, they performed strongly across natural language and vision tasks [26]. In response, research increasingly focused on multimodal pretraining techniques that bridge language and vision. That survey provided a comprehensive overview of vision–language pretraining, outlining key task categories and summarizing advances in both image–text and video–text models. It also discussed current challenges in the field and highlighted future research directions for vision–language multimodal learning. Furthermore, VLMs, situated at the intersection of computer vision and NLP, gained significant attention with the rise of transformer architectures and large-scale web data [27]. While models like CLIP and DALL·E achieved state-of-the-art performance in retrieval and generation tasks, their computational demands made full fine-tuning impractical for many researchers. Efficient Fine-Tuning (EFT) emerged as a cost-effective alternative, prompting a shift in the fine-tuning paradigm. This review analyzed recent advances in EFT for VLMs, focusing on two widely used methods: prompt tuning and adapter-based tuning. The authors categorized prompt usage into three main types (with seven subtypes) based on modality, and adapter usage into two patterns depending on their fusion role. Applications across vision-only and vision–language tasks were discussed, along with reflections on stability, ethical concerns and future research directions in EFT.

Large VLMs (LVLMs), trained to follow instructions over image data, extended the capabilities of large language models to multimodal settings [28]. However, existing evaluations primarily addressed coarse-grained hallucinations, in which models generated entirely nonexistent objects, while overlooking finer-grained errors involving incorrect attributes or behaviors. To tackle this issue, the authors introduced ReCaption, a framework that rewrote image captions using ChatGPT and fine-tuned LVLMs on these refined descriptions. They also proposed a new metric, Fine-Grained Object Hallucination Evaluation (FGHE), to assess such errors more precisely. Experiments showed that ReCaption significantly reduced fine-grained hallucinations and improved output quality across multiple LVLMs. Vision–language pre-training had also shown strong potential for open-vocabulary object detection by enabling models trained on base classes to detect previously unseen categories [29]. These methods typically fed prompts to a text encoder to generate class embeddings, which were then used to guide detector training. However, their effectiveness depended heavily on manually crafted prompts, and existing prompt learning methods, developed for classification, were suboptimal for detection. To address this, the authors proposed DetPro, a method for learning continuous prompt representations tailored to open-vocabulary detection. DetPro introduced two key mechanisms: a background interpretation module that incorporates background proposals into training, and a context grading scheme that refines how foreground proposals influence prompt learning. When combined with the ViLD detector, DetPro achieved consistent performance gains across benchmarks including LVIS, Pascal VOC, COCO and Objects365, notably improving detection of novel classes.

2.2. Applications in E-Commerce

Another study addressed the importance of jointly understanding visual and textual product representations for improving e-commerce search and recommendation systems [30]. The authors proposed a contrastive learning framework that aligned image and text modalities using large-scale, unlabeled product data. They detailed training strategies and domain-specific adaptations for representation learning in the retail setting. The resulting pre-trained models were evaluated as backbones for multiple downstream tasks, including classification, attribute extraction, product matching, clustering and adult content detection. Experimental results demonstrated consistent improvements over baseline models in both unimodal and multimodal scenarios. Another paper introduced ECLIP, an instance-centric multimodal pretraining framework designed to serve as a general-purpose foundation model for large-scale e-commerce applications [31]. Recognizing the domain gap between natural and product images, the authors developed a decoder architecture with learnable instance queries to capture instance-level semantics more effectively. To avoid reliance on manual annotations, they proposed two self-supervised pretext tasks tailored to e-commerce content. Trained on 100 million product-related image–text pairs, ECLIP learned rich and transferable representations. Experimental results showed that ECLIP significantly outperformed prior methods on multiple downstream tasks without additional fine-tuning, highlighting its robustness and scalability in real-world e-commerce scenarios.

Ref. [32] addressed the emerging task of generating detailed natural language descriptions for fashion items, which is gaining traction in the computer vision and multimedia communities but remains underdeveloped. To improve on prior methods, the authors proposed a transformer-based captioning model enhanced with an external textual memory accessed via k-nearest neighbor (kNN) retrieval. The model used cross-attention to read from memory and a novel fully attentive gating module to regulate the memory’s influence. Evaluated on the FACAD dataset, which includes over 130,000 fine-grained fashion descriptions, the method consistently outperformed strong baselines and state-of-the-art models, demonstrating its effectiveness in fashion image captioning. Another study addressed same-style product retrieval in e-commerce, where identifying identical or highly similar items with varying images or text descriptions is critical for improving product discovery and duplicate detection [33]. Whereas traditional image-based methods overlooked textual attributes and struggled in text-dominant categories such as hardware or electronics, the authors proposed a unified VLM that learned jointly from both modalities. The approach used user click logs to construct positive training pairs under category and relevance constraints, and employed a contrastive loss to align image, text and combined representations in a shared embedding space. The model supported cross-modal retrieval, style transfer and user-interactive search. It demonstrated strong offline performance on annotated datasets and improved user engagement in online deployments on Alibaba.com, confirming its practical impact in large-scale B2B e-commerce.

Ref. [34] also examined concerns about AI technologies, particularly ethical issues such as bias, inequality, lack of transparency and data protection, which have undermined public trust. In response, the European Commission proposed the AI Act to regulate AI products and services. Key topics identified included AI in industry; transparency and accountability; and the testing of AI technologies.

3. Methodology

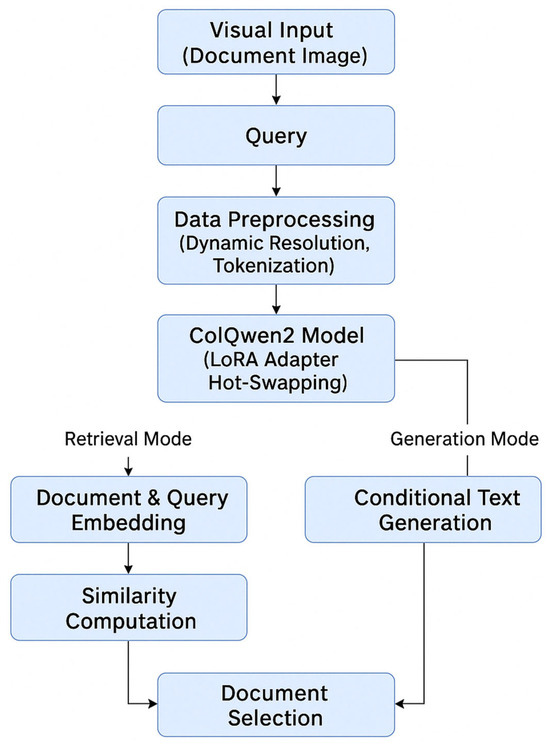

Our research proposes a novel end-to-end methodology for Retrieval-Augmented Generation (RAG) applications using ColQwen2, a multimodal model that integrates both retrieval and generation capabilities through adapter hot-swapping (Figure 1). Hot-swapping means switching parts of a system on the fly without stopping or restarting the whole model. With LoRA-based adapters (lightweight modules used to customize a large AI model for specific tasks), hot-swapping lets the system change its behavior dynamically between (a) retrieval mode, in which the model searches for relevant images or documents, and (b) generation mode, in which it writes natural language answers based on what it sees. This is done without reloading or retraining the entire model, which saves memory and computation.
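What hot-swapping a LoRA adapter does can be illustrated on a single linear layer. LoRA keeps the base weight W frozen and adds a low-rank update (alpha / r) · B · A, so toggling a flag switches the layer between base and adapted behavior without reloading W. This is a toy pure-Python sketch with made-up 2 × 2 weights and rank 1, not the PEFT implementation used by ColQwen2; mapping "adapter on" to retrieval and "adapter off" to generation follows the description in Section 3.1.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Frozen base weight W plus a switchable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W: Matrix, A: Matrix, B: Matrix, alpha: float):
        self.W, self.A, self.B = W, A, B   # W: d_out x d_in, A: r x d_in, B: d_out x r
        self.scale = alpha / len(A)        # len(A) is the rank r
        self.adapter_enabled = True

    def __call__(self, x: Vector) -> Vector:
        out = matvec(self.W, x)            # base path, always used
        if self.adapter_enabled:           # adapter path, toggled at runtime
            delta = matvec(self.B, matvec(self.A, x))
            out = [o + self.scale * d for o, d in zip(out, delta)]
        return out


# Toy weights (assumptions): identity base weight and a rank-1 adapter.
layer = LoRALinear(W=[[1.0, 0.0], [0.0, 1.0]],
                   A=[[1.0, 1.0]],
                   B=[[0.5], [0.0]],
                   alpha=2.0)
x = [1.0, 2.0]

layer.adapter_enabled = True    # e.g. "retrieval mode"
print(layer(x))                 # [4.0, 2.0]
layer.adapter_enabled = False   # e.g. "generation mode": same W, nothing reloaded
print(layer(x))                 # [1.0, 2.0]
```

Because W is never modified, switching modes is only a flag change, which is the property that lets one set of backbone weights serve both roles.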

Figure 1. Methodological flow.

The proposed approach simplifies the traditional RAG pipeline by unifying document indexing and answer generation in a single architecture that processes visual and textual modalities together. We adopt ColQwen2, a lightweight VLM based on the Qwen2-VL-2B-Instruct checkpoint and optimized for document understanding and Visual Question Answering (VQA). The model encodes each document as visual patches and, through a projection layer applied to the model’s output embeddings for those patches, produces multi-vector embeddings (one vector per patch) that give dense, semantically meaningful document representations.
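The multi-vector idea can be sketched as follows: every patch representation passes through the same linear projection and is then L2-normalized, so an image is represented by one vector per patch rather than by a single pooled vector. ColPali-style models are reported to project to 128 dimensions; the hidden size of 4, output size of 2 and the weight values here are toy assumptions.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def project(hidden: Vector, W: Matrix) -> Vector:
    """Linear projection: W has shape out_dim x hidden_dim."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in W]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else v


def multi_vector_embedding(patch_states: List[Vector], W: Matrix) -> List[Vector]:
    """One normalized embedding per patch (no pooling), as in late-interaction models."""
    return [l2_normalize(project(h, W)) for h in patch_states]


# Toy, assumed values: 3 patch states of size 4 projected to size 2 (real models: 128).
W = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0]]
patch_states = [[3.0, 4.0, 9.0, 9.0], [0.0, 2.0, 1.0, 1.0], [1.0, 0.0, 5.0, 5.0]]
embeddings = multi_vector_embedding(patch_states, W)
print(len(embeddings), embeddings)
```

Normalizing each vector makes the later dot products behave like cosine similarities, so no single patch dominates the score merely because of a large magnitude.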

3.1. Model Architecture

ColQwen2 builds on the Qwen2-VL backbone and extends its functionality via Low-Rank Adaptation (LoRA), which allows the model to switch dynamically between retrieval and generation modes. In retrieval mode, the adapter weights are activated to map image-patch representations into a shared embedding space aligned with query representations. In generation mode, the adapters are disabled and the full capacity of the VLM is used for conditional generation. We further employ a custom wrapper, ColQwen2_4RAG, to streamline adapter switching and provide a unified API for both retrieval and generation workflows. The wrapper determines the operational context from the task (retrieval or generation) and routes the forward pass accordingly.
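The routing logic of a wrapper like ColQwen2_4RAG can be sketched with a mode flag and a context manager. The class and method names below (ToyRAGWrapper, retrieval_mode, forward) are hypothetical, and both backends are stubs; in a real implementation the flag would also enable or disable the LoRA adapters (for instance through the adapter enable/disable methods that Hugging Face Transformers provides for PEFT-integrated models) before delegating to the retrieval or generation forward pass.

```python
from contextlib import contextmanager
from typing import Callable, Iterator, List


class ToyRAGWrapper:
    """Hypothetical sketch: one model object, two behaviours selected by a flag."""

    def __init__(self, embed_fn: Callable[[str], List[float]],
                 generate_fn: Callable[[str], str]):
        self._embed_fn = embed_fn          # stub for the adapter-enabled retrieval path
        self._generate_fn = generate_fn    # stub for the base-model generation path
        self.retrieval_enabled = False

    @contextmanager
    def retrieval_mode(self) -> Iterator[None]:
        """Temporarily enable retrieval; always restore the previous mode."""
        previous = self.retrieval_enabled
        self.retrieval_enabled = True
        try:
            yield
        finally:
            self.retrieval_enabled = previous

    def forward(self, x: str):
        # Route the same call to the retrieval or the generation path.
        if self.retrieval_enabled:
            return self._embed_fn(x)
        return self._generate_fn(x)


model = ToyRAGWrapper(embed_fn=lambda s: [float(len(s))],
                      generate_fn=lambda s: f"answer to: {s}")
with model.retrieval_mode():
    print(model.forward("Nike"))        # retrieval path -> [4.0]
print(model.forward("What brand?"))     # generation path -> "answer to: What brand?"
```

Using a context manager guarantees that the model returns to generation mode even if an exception occurs during retrieval.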

3.2. Data Processing and Retrieval

For document indexing, visual inputs (e.g., pictures, scanned pages, infographics or documents) are preprocessed with a dynamic resolution strategy that scales images to a height of 512 pixels while maintaining aspect ratio, which preserves legibility and reduces GPU memory usage. Queries are tokenized and encoded with the retrieval-specific processor. Both images and queries are then passed through the model to obtain their respective multi-vector embeddings. Query–image similarity is computed with a ColBERT-inspired late-interaction score: for each query-token embedding, the maximum similarity over all image-patch embeddings is taken, and these maxima are summed over the query tokens. The top-k most relevant documents (typically top-1 for demonstration purposes) are selected for downstream generation.
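The late-interaction score can be written as s(q, d) = Σ_i max_j ⟨q_i, d_j⟩, where q_i are the query-token embeddings and d_j the image-patch embeddings. The sketch below implements this ColBERT-style MaxSim score and top-k selection in plain Python; the two-dimensional vectors and file names are invented for illustration, and a production system would compute the same quantity with batched tensor operations.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def maxsim_score(query: List[Vector], doc: List[Vector]) -> float:
    """ColBERT-style late interaction: for each query token, keep its best
    dot product over all document (image-patch) vectors, then sum."""
    return sum(max(sum(a * b for a, b in zip(q, d)) for d in doc) for q in query)


def top_k(query: List[Vector], corpus: Dict[str, List[Vector]],
          k: int = 1) -> List[Tuple[str, float]]:
    """Rank documents by MaxSim score and return the k best (name, score) pairs."""
    scored = [(name, maxsim_score(query, doc)) for name, doc in corpus.items()]
    return sorted(scored, key=lambda t: t[1], reverse=True)[:k]


# Invented toy corpus: each "image" is a list of patch embeddings (real models use 128-d).
corpus = {
    "shoe_a.jpg": [[0.9, 0.1], [0.2, 0.8]],
    "shoe_b.jpg": [[0.1, 0.2], [0.3, 0.1]],
    "shoe_c.jpg": [[0.5, 0.5], [0.4, 0.4]],
}
query = [[1.0, 0.0], [0.0, 1.0]]   # two query-token embeddings
print(top_k(query, corpus, k=1))
```

Summing per-token maxima, rather than comparing two pooled vectors, lets each query token match its own region of the image.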

3.3. Generation and Response Synthesis

For generation, the selected top-ranked document is reprocessed using the generation-specific processor. A conversational prompt is constructed in a chat-like format that fuses the query and image into a single input. The model, operating in generation mode, decodes the response using conditional text generation informed by the visual context of the retrieved document. This setup allows the model to answer questions directly grounded in visual evidence (Figure 1), such as tables, charts or layout-aware textual cues, without requiring traditional OCR or layout-parsing pipelines. The methodological flow is presented in Figure 1.
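Two details of this step can be sketched independently of the model: building a chat-style message that places the retrieved image and the question in one user turn, and keeping only the newly generated tokens, since a decoder-only generate() call returns the prompt tokens followed by the continuation. The message layout follows the list-of-content-parts convention used by Qwen2-VL chat templates, but the exact schema a given processor expects should be checked; the token IDs are hypothetical.

```python
from typing import Dict, List


def build_messages(query: str) -> List[Dict]:
    """One user turn containing an image placeholder followed by the question."""
    return [{
        "role": "user",
        "content": [
            {"type": "image"},               # the processor fills in the retrieved image
            {"type": "text", "text": query},
        ],
    }]


def trim_prompt(input_ids: List[List[int]], output_ids: List[List[int]]) -> List[List[int]]:
    """Drop the echoed prompt from each generated sequence, keeping only new tokens."""
    return [out[len(inp):] for inp, out in zip(input_ids, output_ids)]


messages = build_messages("What brand are these shoes?")
prompt_ids = [[101, 7, 8, 9]]                # hypothetical prompt token IDs
generated = [[101, 7, 8, 9, 42, 43, 2]]      # prompt echoed + 3 new tokens
print(messages[0]["content"][1]["text"])
print(trim_prompt(prompt_ids, generated))    # [[42, 43, 2]]
```

Decoding only the trimmed IDs keeps the question and chat-template markup out of the printed answer.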

All experiments were conducted in a Google Colab environment equipped with a T4 GPU. The model checkpoint vidore/colqwen2-v1.0 was used with the colpali-engine Python package (version 0.3.1). While ColQwen2-2B is computationally efficient and suited to text-heavy documents (e.g., DocVQA), we acknowledge its limitations in interpreting complex data visualizations. In future work, larger variants such as Qwen2-VL-72B or Pixtral-12B may be considered to improve performance on chart- and infographic-centric datasets.

3.4. Architecture Comparison

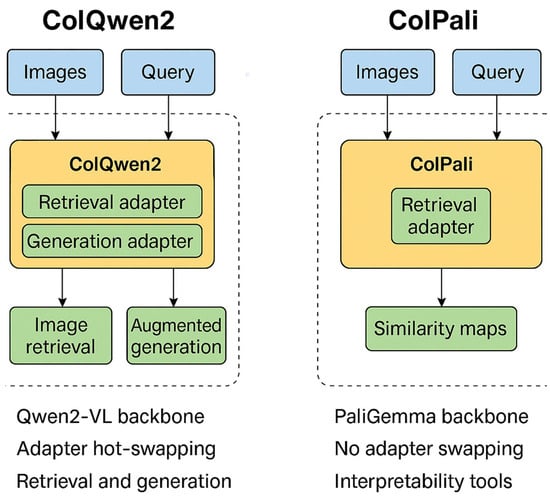

Figure 2 shows a side-by-side comparison of two state-of-the-art VLMs used in RAG pipelines: ColQwen2 and ColPali. At the top of both sections, the diagram shows that each model accepts two types of inputs: images and natural language queries. This illustrates their multimodal nature, allowing them to jointly process visual and textual information for downstream reasoning tasks.

Figure 2. VLM architecture comparison.

On the left, the ColQwen2 architecture is depicted as a unified model incorporating two distinct functional adapters: one for retrieval and one for generation. These adapters are managed through a mechanism known as adapter hot-swapping, which allows the model to dynamically switch between retrieval and generation modes at runtime. This modular design enables ColQwen2 to perform dense image or document retrieval using multi-vector embeddings and then generate text-based answers conditioned on the retrieved content, all within the same model. The underlying model is based on the Qwen2-VL backbone, which integrates vision and language understanding in a compact and efficient form, making it especially well-suited for deployment in environments with limited computational resources.

On the right, ColPali is shown as a model focused solely on the retrieval phase. It is based on the PaliGemma backbone and includes a single retrieval adapter without generation capabilities. Rather than producing textual outputs, ColPali excels in interpretability by generating visual similarity maps. These maps illustrate the alignment between specific tokens in the query and visual regions in the input image, offering a clear view into how the model relates language with visual features. This feature makes ColPali particularly valuable in use cases that demand explainability, such as learning tools, medical/diagnostic systems or AI accountability scenarios.

At the bottom of the diagram, the core distinctions between the two models are summarized. ColQwen2, powered by the Qwen2-VL backbone, supports both retrieval and generation in an integrated manner and uses adapter hot-swapping for operational flexibility. In contrast, ColPali, built on the PaliGemma backbone, supports only retrieval and does not incorporate adapter switching or generation, but it provides explainability.

This diagram highlights the practical divergence between the two systems. ColQwen2 is ideal for building end-to-end RAG systems that require both document retrieval and answer generation, whereas ColPali is more appropriate for tasks that benefit from visual transparency and fine-grained interpretability. Together, they represent two complementary approaches to multimodal document understanding and retrieval. A more comprehensive comparison is provided in Table 1, and Tables 2 and 3 compare ColQwen2 and ColPali, respectively, with similar tools.

Table 1. ColQwen and ColPali comparison.

Table 2. Comparison of ColQwen2 with similar tools.

Table 3. Comparison of ColPali with similar tools.

The following versions were used in this comparison: GPT-4o (June 2024 release), Gemini 1.5 Pro (May 2024) and Claude 3 Opus (March 2024).

In short, ColQwen2 is more suitable when a compact, all-in-one RAG model that can both retrieve and generate is needed, whereas ColPali is an interpretable retrieval option, suited to token-level visual matching or to analyzing how models align language with visual input.

Algorithms 1 and 2, which represent our original contribution, give pseudocode for Retrieval-Augmented Generation using ColQwen2_4RAG and for similarity mapping using ColPali, respectively. Each specifies its input, output and procedure: image retrieval and answer generation in Algorithm 1, and similarity mapping in Algorithm 2.

| Algorithm 1. Retrieval-Augmented Generation using ColQwen2_4RAG. |

1: Input: list of images, list of text queries
2: Output: retrieved image(s) and generated answer(s)
3: procedure COLQWEN2_4RAG_PIPELINE(images, queries)
4:   Install and import the required libraries
5:   Authenticate with Hugging Face
6:   Load model checkpoint: vidore/colqwen2-v1.0
7:   Set device using get_torch_device("auto")
8:   Load the LoRA adapter configuration
9:   Initialize the retrieval and generation processors
10:  Define class ColQwen2_4RAG:
11:    enables switching between retrieval and generation modes via adapters
12:    implements a unified forward pass for dual-mode support
13:  Preprocess input images:
14:    resize images to a fixed height while maintaining aspect ratio
15:  for query ∈ retrieval queries do
16:    Enable retrieval mode
17:    Preprocess images and query using the retrieval processor
18:    Run forward pass to obtain image and query embeddings
19:    Compute similarity scores using the multi-vector dot product
20:    Identify and display the top-ranked image
21:  end for
22:  for query ∈ generation queries do
23:    Enable generation mode
24:    Construct a conversational prompt using the chat template
25:    Preprocess image and prompt using the generation processor
26:    Run generate() to obtain output tokens
27:    Keep only the newly generated tokens (trim the prompt tokens)
28:    Decode and print the output
29:  end for
30: end procedure
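Step 14 of Algorithm 1, like the 512-pixel scaling in Section 3.2, only requires a target size that fixes the height and preserves the aspect ratio. A minimal sketch, assuming the width is rounded to the nearest whole pixel; with an image library such as Pillow, the result would then be passed to a resize call.

```python
def target_size(width: int, height: int, target_height: int = 512) -> tuple:
    """(new_width, new_height) that scales an image to target_height pixels tall
    while keeping width / height constant (width rounded to the nearest pixel,
    an assumption of this sketch)."""
    if width <= 0 or height <= 0 or target_height <= 0:
        raise ValueError("dimensions must be positive")
    new_width = max(1, round(width * target_height / height))
    return new_width, target_height


print(target_size(1024, 768))    # landscape product photo -> (683, 512)
print(target_size(600, 1200))    # portrait product photo  -> (256, 512)
```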

| Algorithm 2. Similarity mapping using ColPali. |

1: Input: image URL or file path, natural language query
2: Output: token-level similarity maps overlaid on the image
3: procedure GENERATESIMILARITYMAPS(image_url, query)
4:   Install dependencies: colpali-engine, huggingface_hub
5:   Authenticate with Hugging Face: login()
6:   Load model: ColPali.from_pretrained(model_name)
7:   Load processor: ColPaliProcessor.from_pretrained(model_name)
8:   Set device: get_torch_device("auto")
9:   if image file path is provided then
10:    Load image from path
11:  else
12:    Download and load image from URL
13:  end if
14:  Scale image to height n pixels (maintain aspect ratio)
15:  Process image and query with processor:
       batch_images ← processor.process_images([image])
       batch_queries ← processor.process_queries([query])
16:  Move inputs to device
17:  Perform forward pass to get embeddings:
       image_embeddings ← model.forward(batch_images)
       query_embeddings ← model.forward(batch_queries)
18:  Compute number of image patches: n_patches
19:  Generate image mask: image_mask
20:  Compute similarity maps: similarity_maps ← get_similarity_maps_from_embeddings(image_embeddings, query_embeddings, n_patches, image_mask)
21:  Decode and tokenize the query: query_tokens
22:  for each token index i in query_tokens do
23:    Extract the similarity map for token i
24:    Plot heatmap over image with token label and max similarity score
25:  end for
26: end procedure
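Steps 18 and 19 of Algorithm 2 determine the patch grid and separate image-patch embeddings from the other tokens in the sequence. For a square input of S pixels and patch size P, the grid is (S/P) × (S/P); with the 448-pixel input and 14-pixel patches of ColPali’s PaliGemma backbone this gives 32 × 32 = 1024 patches. The sketch assumes a hypothetical image-token ID and toy embeddings; colpali-engine provides its own utilities for these steps, whose names and signatures depend on the package version.

```python
from typing import List, Sequence, Tuple

IMAGE_TOKEN_ID = 9999  # hypothetical ID of the image-patch placeholder token


def patch_grid(image_size: int, patch_size: int) -> Tuple[int, int]:
    """(rows, cols) of non-overlapping patches for a square image_size x image_size input."""
    if image_size % patch_size:
        raise ValueError("image_size must be a multiple of patch_size")
    side = image_size // patch_size
    return side, side


def image_mask(input_ids: Sequence[int]) -> List[bool]:
    """True where the sequence position holds an image-patch token."""
    return [tid == IMAGE_TOKEN_ID for tid in input_ids]


def select_patch_embeddings(embeddings: List[List[float]], mask: List[bool]) -> List[List[float]]:
    """Keep only embeddings at image-patch positions (drops text and special tokens)."""
    return [e for e, keep in zip(embeddings, mask) if keep]


rows, cols = patch_grid(448, 14)
print(rows, cols, rows * cols)              # 32 32 1024

ids = [IMAGE_TOKEN_ID] * 4 + [2, 17, 1]     # toy: 4 patch tokens then 3 text tokens
embs = [[float(i)] for i in range(len(ids))]
mask = image_mask(ids)
print(select_patch_embeddings(embs, mask))  # [[0.0], [1.0], [2.0], [3.0]]
```

Only the masked patch embeddings should enter the similarity maps; the remaining prompt tokens have no spatial position in the image.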

4. Results

From images of products such as sports shoes or t-shirts, certain features can be inferred or queried using computer vision and multimodal AI models. For sports shoes, an image may reveal the type of shoe (running, basketball, trail or casual) from its shape, sole pattern and materials. The model might detect whether the shoe is designed for performance or style and even distinguish features such as ankle height (low, mid or high), lacing system (standard laces, BOA or slip-on) or ventilation zones. Materials such as mesh, leather or knit can also be inferred visually to some extent. Logos or brand symbols help identify the manufacturer, and color schemes or distinctive design elements can link the shoe to a particular collection or collaboration. Wear and tear can even be detected if the item is used.

For t-shirts, the AI can detect sleeve length (short, long or sleeveless), neckline (crew, V-neck or scoop) and fit (slim, regular or oversized) from the silhouette and cut alone. It might also spot graphic prints, logos or text on the shirt and interpret the style (basic, athletic, streetwear, etc.). Texture analysis may sometimes help estimate the fabric type, such as cotton or synthetic blends. If a model or mannequin is wearing the shirt, the system might also estimate the size or how it fits different body types. Background context (e.g., studio shot vs. outdoor) may signal how the brand wants the item to be perceived: as technical sportswear or as lifestyle fashion.

Customers might ask about material, size availability, color options, brand, care instructions (e.g., “Is it machine washable?”) or similar-looking alternatives. With visual Q&A, a user might upload a shoe photo and ask, “Are these trail runners?”, or a shirt photo and ask, “What brand is this shirt?” The AI could extract visual cues and either answer directly or retrieve metadata from the catalog.
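One way to realize the choice between answering directly and pulling catalog metadata is a small router placed in front of the VQA model: questions about attributes an image cannot show, such as care instructions or sizes in stock, are answered from catalog fields when those fields are filled in, and everything else goes to the vision–language model. The sketch below is hypothetical throughout; the keyword table, the field names and the vqa_model callable are placeholders, not part of the pipelines evaluated in this study.

```python
from typing import Callable, Dict

# Hypothetical mapping from question keywords to catalog fields.
CATALOG_FIELDS: Dict[str, str] = {
    "washable": "care_instructions",
    "size": "sizes_in_stock",
    "material": "material",
}


def answer_question(
    question: str,
    catalog_record: Dict[str, str],
    vqa_model: Callable[[str], str],
) -> str:
    """Prefer catalog metadata for matching attributes, otherwise ask the VLM."""
    text = question.lower()
    for keyword, field in CATALOG_FIELDS.items():
        # Use the catalog only if the keyword matches and the field is non-empty.
        if keyword in text and catalog_record.get(field):
            return catalog_record[field]
    return vqa_model(question)


def fake_vqa(question: str) -> str:
    """Stand-in for an image-grounded model call (hypothetical)."""
    return "model answer"


record = {"care_instructions": "Machine washable at 30 degrees"}
print(answer_question("Is it machine washable?", record, fake_vqa))
print(answer_question("What brand is this shirt?", record, fake_vqa))
```

Falling back to the model when a field is missing keeps the assistant useful for sparsely annotated listings, while authoritative catalog data takes precedence whenever it exists.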

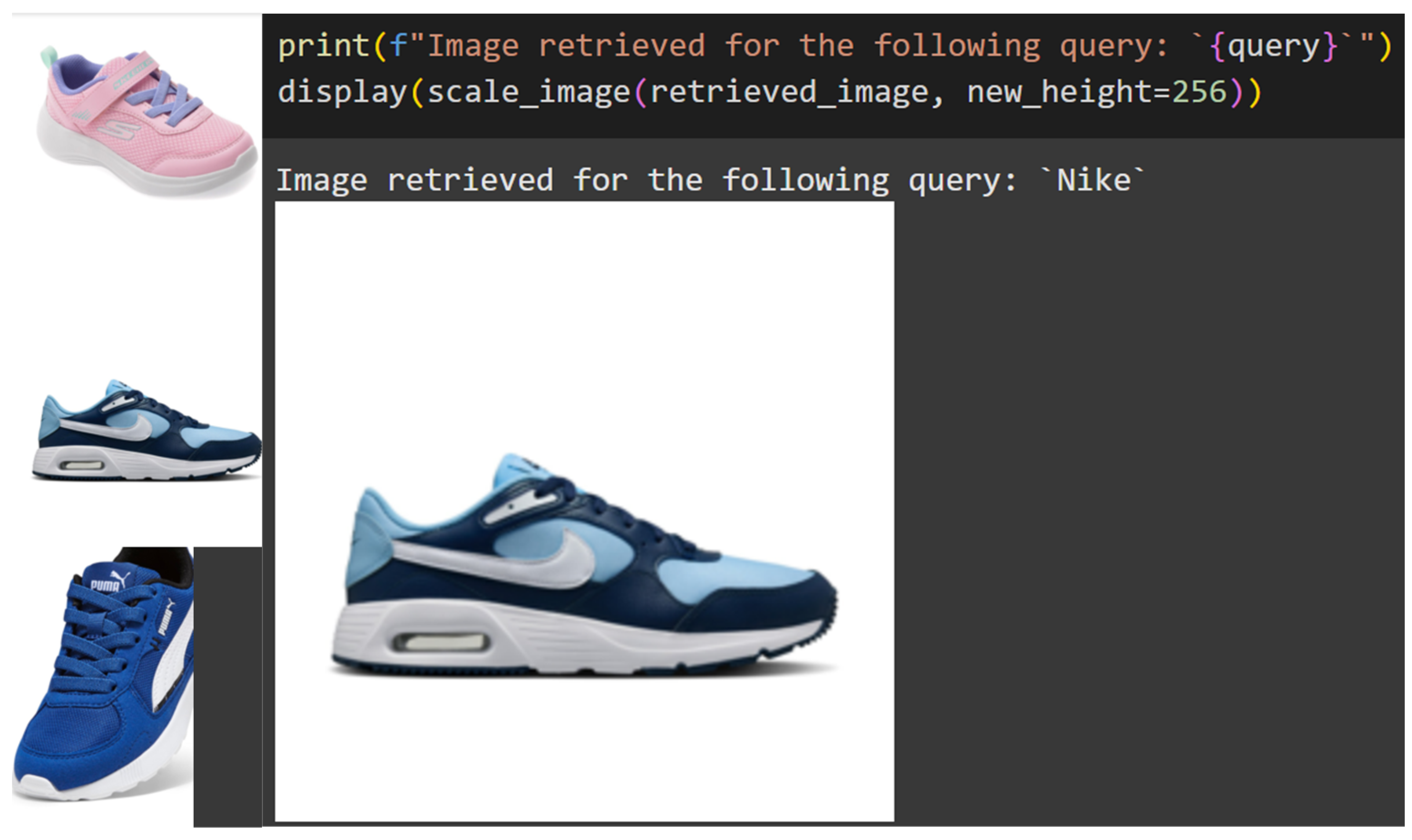

In the initial demonstration, we use the multimodal capabilities of the vidore/colqwen2-v1.0 model (Table 4, lines 1–5) to explore its performance in product-level image retrieval and visual question answering (VQA). The example follows a common real-world query scenario in e-commerce: identifying relevant footwear in a small collection of images and then extracting semantic information from the retrieved image. The process begins with a textual query, “Nike”, used to guide image selection. A set of three product images, sourced from commercial websites, serves as the input. These images depict different shoe models in various colors and orientations. Given the query, the model identifies and retrieves the image that best matches “Nike”: a pair of blue and white Nike sneakers, displayed prominently in the interface. This suggests that the model’s embedding space captures both visual and brand-level features well enough to match language to images without product metadata and with minimal supervision.

Table 4.

Demonstration with vidore/colqwen2-v1.0 model.

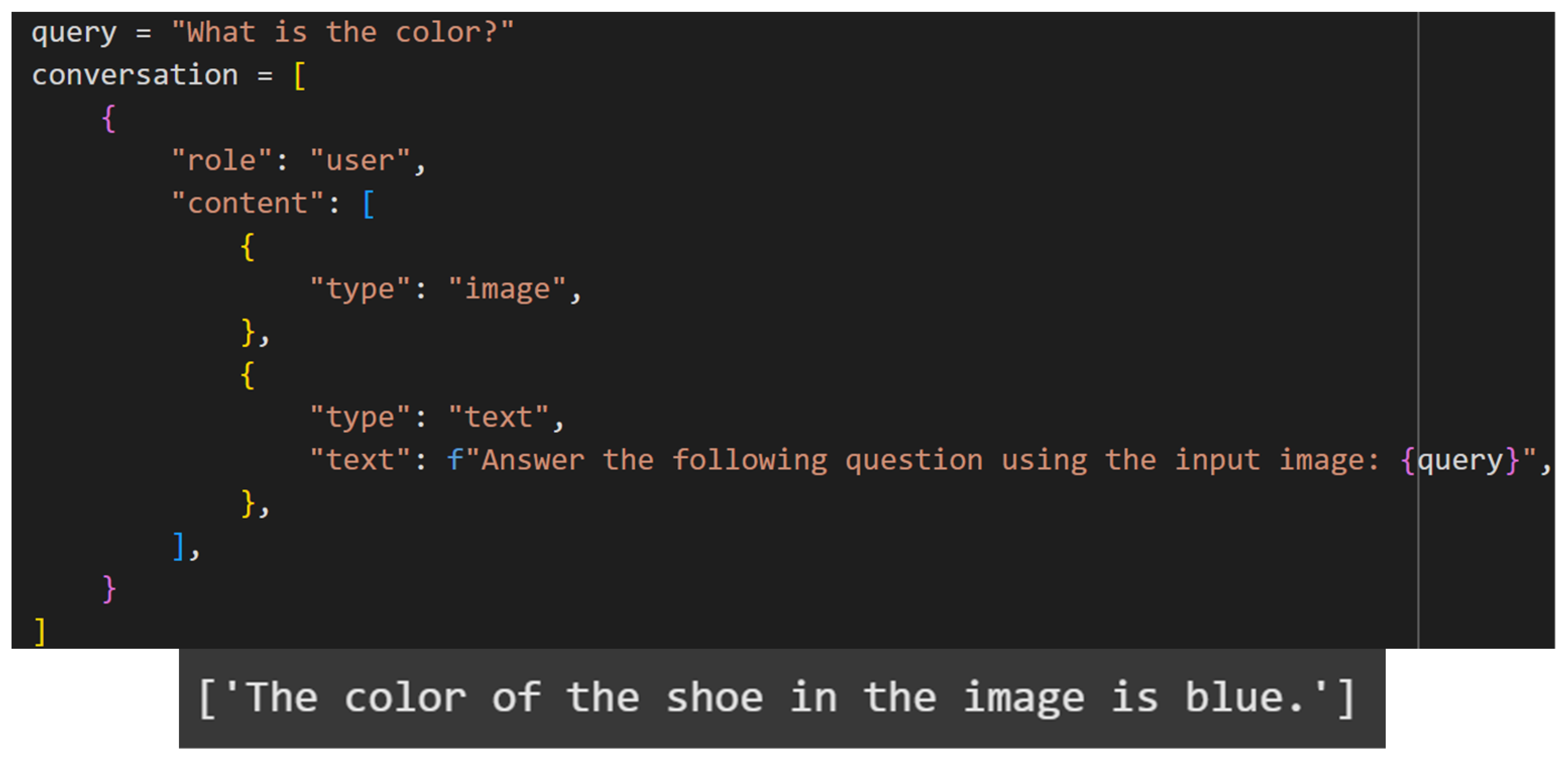

Following retrieval, the system performs image-grounded question answering. In the first instance, the user asks about a visual attribute, color: “What is the color?” The model correctly responds that the shoe in the image is blue.

The second interaction involves a higher-order inference task: determining the intended function of the product. When asked, “Are they for running?”, the model infers from the structural and design cues of the shoe that it is designed for running. The generated answer, “Yes, the shoes in the image are designed for running.”, reflects an inference about function rather than a description of a directly visible attribute.

These first results illustrate the model’s ability to perform dual roles: first, in grounding a text query into a visual domain to retrieve the most relevant image; and second, in reasoning over that image to generate meaningful, context-aware natural language responses. The system’s fluency in these tasks suggests that it is well-suited for deployment in digital retail, interactive shopping assistance or any scenario where users may wish to explore product features through intuitive, conversational interfaces.
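The answers above come from the generation branch of Algorithm 1 (steps 26 to 28). In Hugging Face transformers, generate() on a decoder-only model such as the Qwen2-VL backbone returns each prompt followed by its continuation, which is why step 27 keeps only the newly generated tokens before decoding. A minimal sketch on plain lists of token IDs, assuming each output row begins with its own (possibly padded) input row; the IDs are made up, and in a real pipeline the trimmed IDs would then be decoded, e.g. with the processor's batch_decode.

```python
from typing import List, Sequence


def trim_prompt_tokens(
    input_ids: Sequence[Sequence[int]],
    generated_ids: Sequence[Sequence[int]],
) -> List[List[int]]:
    """Drop the echoed prompt from each generated sequence, keeping new tokens."""
    trimmed: List[List[int]] = []
    for prompt, full in zip(input_ids, generated_ids):
        prompt, full = list(prompt), list(full)
        # Sanity check: a decoder-only model's output starts with its input.
        if full[: len(prompt)] != prompt:
            raise ValueError("generated sequence does not start with its prompt")
        trimmed.append(full[len(prompt):])
    return trimmed


# Made-up token IDs: two prompts of equal (padded) length, different answers.
inputs = [[0, 11, 12, 13], [21, 22, 23, 24]]
outputs = [[0, 11, 12, 13, 90, 91, 2], [21, 22, 23, 24, 95, 2]]
print(trim_prompt_tokens(inputs, outputs))  # [[90, 91, 2], [95, 2]]
```

Without this step, the decoded text would repeat the chat template and the user question before the model's answer.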

Continuing from these observations, the subsequent results further illustrate the multimodal capabilities of the vidore/colqwen2-v1.0 model in grounding natural language queries within a visual product retrieval and interpretation framework (Table 4, lines 6–9). The model is tested on increasingly diverse textual prompts, including brand names, a single-letter query and functional product categories.

In one of the examples (Table 4, line 6), the query is simply the string “Puma”. The system is able to correctly retrieve an image of a blue Puma sneaker, demonstrating its understanding of brand-specific semantics. Importantly, this result is achieved without any structured product metadata, relying purely on the alignment between visual features and the embedded linguistic concept of the brand.

An even more interesting case is the query “S” (Table 4, line 7), for which the system retrieves a pink Skechers sneaker. Despite the brevity and ambiguity of the query, the model likely associates the letter “S” with the brand name printed on the shoe. This suggests a degree of visual–textual alignment that allows the model to interpret even minimal linguistic cues in context, and the successful retrieval under such a minimal prompt points to robustness to underspecified queries.

This is followed by a query with a more functional intent: “What is the image of shoes for kids?” (Table 4, line 8). The same pink Skechers shoe is retrieved. This selection suggests that the model can infer age-related use cases from visual features such as size, color palette and design elements; the pastel tones, lightweight build and child-friendly appearance may have contributed to the decision. It also suggests that the model has internalized soft heuristics about what constitutes children’s footwear, beyond explicit training labels.

In a subsequent task, the model is asked to identify the brand of a given shoe image. The question “Which brand is the shoe?” (Table 4, line 9) is paired with an image URL depicting the same Puma sneaker retrieved earlier. The system correctly answers “Puma”, and the accompanying interpretability overlay (a class-activation-style map) indicates that attention is concentrated on the shoe’s tongue, where the brand logo is prominently displayed. This points to the model’s ability to localize semantically meaningful image regions in support of its textual outputs, a feature that is particularly valuable for explainable AI in commercial applications.

These use cases reflect the versatility and precision of vidore/colqwen2-v1.0 across a range of vision–language tasks. The model handles not only direct brand recognition but also abstract category mapping (e.g., children’s shoes) and weakly specified prompts. Such capabilities have direct implications for search refinement, conversational shopping agents and intelligent visual cataloging in retail and fashion domains.

In the second set of results, the focus shifts to a comparative evaluation with a different model, vidore/colpali-v1.2-hf, on brand recognition from product images. Two images (Table 5), a pink Skechers shoe and a blue Puma sneaker, are reused from the previous tests to keep the cross-model analysis consistent.

Table 5.

Demonstration with vidore/colpali-v1.2-hf model.

The same natural language query is posed for both images: “What is the brand of the shoe?” The prompt is used to evaluate whether the model can spatially localize brand-specific visual features, a critical component of multimodal interpretability. Each image is passed as input together with the query, and the output is a similarity heatmap superimposed on the original image. For the Puma shoe, the heatmap concentrates around the logo on the tongue and lateral side of the sneaker. These regions are semantically rich, and the model correctly identifies them as relevant to the query. The overlay indicates that colpali-v1.2-hf can link linguistic brand references to the appropriate visual areas. A similar pattern is observed for the Skechers shoe: the highest similarity lies around the strap and the lateral branding area where the Skechers logo is embedded. Despite the softer, lower-contrast styling of this shoe, the model still produces a distinct pattern concentrated in the correct region.

Two observations follow. First, in these examples colpali-v1.2-hf reliably links brand references in the query to the image regions that carry the brand, even though it does not itself generate a brand name. Second, the similarity maps offer meaningful insight into the model’s internal reasoning, serving as a tool for transparency and debugging in real-world applications. This is especially valuable in scenarios such as automated product cataloging, where explainability and auditability are often as critical as accuracy.
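Rendering heatmaps like those in Table 5 typically involves two post-processing steps: rescaling a similarity map to [0, 1] so it can drive the overlay colors, and mapping the strongest patch back to pixel coordinates to show where the evidence lies (for instance, the logo on the tongue). The sketch below illustrates both, assuming the patch grid spans the whole image evenly; the function names and toy values are hypothetical and not taken from colpali-engine.

```python
from typing import List, Tuple

Grid = List[List[float]]


def min_max_normalize(grid: Grid) -> Grid:
    """Rescale scores to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in grid)
    hi = max(max(row) for row in grid)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in grid]
    return [[(v - lo) / span for v in row] for row in grid]


def hottest_patch_box(grid: Grid, image_size: Tuple[int, int]) -> Tuple[int, int, int, int]:
    """Pixel box (left, top, right, bottom) of the highest-scoring patch."""
    width, height = image_size
    n_rows, n_cols = len(grid), len(grid[0])
    row, col = max(
        ((r, c) for r in range(n_rows) for c in range(n_cols)),
        key=lambda rc: grid[rc[0]][rc[1]],
    )
    # Assumes each patch covers an equal share of the (possibly resized) image.
    cell_w, cell_h = width / n_cols, height / n_rows
    return (round(col * cell_w), round(row * cell_h),
            round((col + 1) * cell_w), round((row + 1) * cell_h))


toy_map = [[0.1, 0.9], [0.2, 0.4]]  # hypothetical 2 x 2 similarity map
print(hottest_patch_box(toy_map, image_size=(200, 100)))  # (100, 0, 200, 50)
```

Normalizing per map makes heatmaps visually comparable across query tokens, but it also hides absolute score differences, so the raw maximum is worth reporting alongside each plot.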

ColQwen2 offers greater generative fluency, task flexibility and multimodal reasoning, whereas ColPali offers a distinct advantage in interpretability and visual traceability. In the same footwear examples, ColQwen2 retrieves relevant products and answers brand- or function-related questions in natural language. ColPali, in contrast, excels at visually grounding its retrievals by highlighting the precise image regions (e.g., logo areas) that informed its decision, a level of transparency and auditability that ColQwen2’s generative pipeline does not expose natively. That said, ColPali lacks generation capability and runs more slowly, with higher memory consumption, owing to its larger backbone and one-image-per-query processing. In practical terms, ColQwen2 is better suited to real-time, interactive applications, while ColPali better serves diagnostic, quality control or compliance workflows where explainability outweighs fluency.

5. Discussion

5.1. Contributions to Theory/Knowledge/Literature

We acknowledge that ColQwen2 (vidore/colqwen2-v1.0 on Hugging Face) and ColPali (vidore/colpali-v1.2-hf on Hugging Face) are publicly released models developed by third-party contributors. Our use of ColPali, PaliGemma and ColQwen2 is strictly for academic research purposes. For any commercial deployment, users should consult the license terms and contact the respective model providers.

Compared with prior models such as CLIP [35] and BLIP-2 [36], which rely heavily on contrastive learning for image–text alignment, ColQwen2 adds a functional enhancement through LoRA-based adapter hot-swapping. This mechanism allows seamless transitions between retrieval and generation modes within a unified architecture. While CLIP’s zero-shot classification capacity is impressive, it lacks generative abilities, and models such as BLIP-2, though capable of image captioning, do not integrate retrieval in a dynamic or modular way. By contrast, ColQwen2 operates in a two-step loop, first retrieving relevant visual evidence and then generating answers grounded in it, all within a single model. This integration simplifies multimodal pipelines and addresses inefficiencies observed in earlier RAG architectures that relied on separate retrieval and generation models [37].
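The hot-swapping idea can be illustrated at the level of a single linear layer. With LoRA, a frozen weight W is augmented by a low-rank update scaled by alpha / r, so the layer computes W x + (alpha / r) B A x; disabling the adapter recovers W x exactly. The sketch below assumes, for illustration, that retrieval mode runs with the adapter enabled and generation mode runs the frozen base weights; the class and method names are hypothetical, not the ColQwen2 or PEFT API.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d x k) plus one low-rank adapter B (d x r) @ A (r x k)."""

    def __init__(self, weight: Matrix, a: Matrix, b: Matrix, alpha: float):
        self.weight, self.a, self.b = weight, a, b
        self.scale = alpha / len(a)  # alpha / r, where r = number of rows of A
        self.adapter_enabled = True

    def set_mode(self, mode: str) -> None:
        """Assumed convention: retrieval uses the adapter, generation does not."""
        if mode not in ("retrieval", "generation"):
            raise ValueError(f"unknown mode: {mode}")
        self.adapter_enabled = mode == "retrieval"

    def __call__(self, x: Vector) -> Vector:
        out = matvec(self.weight, x)  # base path W x, never modified
        if not self.adapter_enabled:
            return out
        delta = matvec(self.b, matvec(self.a, x))  # low-rank path B (A x)
        return [o + self.scale * d for o, d in zip(out, delta)]


layer = LoRALinear(weight=[[1.0, 0.0], [0.0, 1.0]], a=[[1.0, 0.0]], b=[[0.0], [1.0]], alpha=1.0)
x = [2.0, 3.0]
layer.set_mode("retrieval")
print(layer(x))  # [2.0, 5.0]
layer.set_mode("generation")
print(layer(x))  # [2.0, 3.0]
```

Because switching modes only toggles which path is used, a single copy of the backbone weights can serve both tasks, which is the memory argument behind hot-swapping.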

ColPali builds upon Google’s PaliGemma architecture and distinguishes itself from standard retrieval frameworks by making interpretability a direct by-product of its output. Unlike post hoc explanation methods such as Grad-CAM [38], which are applied on top of a trained network, ColPali produces token-level similarity maps natively from the same query and image-patch embeddings it uses for retrieval. This feature aligns with emerging demands for explainable AI (XAI), particularly in commercial domains where transparency and accountability are essential [39]. While interpretability tools such as SHAP or LIME are commonly used for tabular or text data, they are less often applied to vision–language retrieval. ColPali helps fill this gap by offering a direct way to trace model reasoning visually, narrowing the transparency gap in VLMs.

Another contribution lies in the operational applicability of these models to e-commerce. Many earlier studies focused on general-purpose benchmarks (e.g., COCO, ImageNet), often overlooking the domain-specific needs of digital retail. In contrast, this study anchors its evaluation in realistic use cases such as brand recognition, catalog tagging and customer question answering. Prior work on fashion attribute detection and visual recommendation systems [40,41] typically relied on supervised training with curated labels. ColQwen2 and ColPali, which build on pre-trained vision–language understanding and need minimal fine-tuning, promise greater flexibility and scalability than such task-specific systems because they do not require a curated label set for each attribute.

In terms of visual product understanding, our work also complements research in retrieval-based models like ViLT [42], which rely on transformer-based fusion but lack modular design. The adapter-based framework proposed for ColQwen2 introduces a novel mechanism that decouples training for retrieval and generation, allowing task-specific optimization without retraining the base model. This design resonates with the trend toward efficient fine-tuning in large-scale models [43], where memory and computational constraints are major considerations.
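The efficiency argument can be made concrete with the standard LoRA parameter count. For a frozen weight matrix W_0 of size d × k, LoRA trains B (d × r) and A (r × k) with a small rank r; the dimensions in the worked example are illustrative only and are not taken from the ColQwen2 configuration.

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\text{trainable parameters} = r(d + k) \quad \text{versus} \quad dk \ \text{for full fine-tuning}
$$

$$
d = k = 4096,\; r = 8: \quad 8 \times (4096 + 4096) = 65{,}536 \quad \text{vs.} \quad 4096^2 = 16{,}777{,}216 \quad (\approx 0.39\%)
$$

Since only A and B are trained, separate adapters for different tasks can be stored and swapped at a small fraction of the cost of storing separate fine-tuned copies of the base model.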

Finally, the token–image alignment provided by ColPali adds a layer of semantic interpretability to VLM evaluation. Earlier benchmarks focused primarily on output quality metrics (e.g., accuracy or BLEU scores), whereas this work argues for treating interpretability as an equal dimension of model performance. This evaluation perspective could broaden how VLMs are assessed.

5.2. Implications for Practice

From a practical perspective, this research provides actionable insights for digital commerce platforms seeking to deploy intelligent, multimodal systems. ColQwen2’s unified RAG pipeline offers a cost-effective and compact solution for image-grounded Q&A and automated metadata generation. For instance, retailers can integrate ColQwen2 into their customer service interfaces to power interactive assistants that answer visual queries such as “What’s the material of this bag?” or “Are these running shoes?” using only product images. This reduces dependence on manual product tagging or static FAQs, improving responsiveness and user engagement.

Simultaneously, ColPali provides essential capabilities for trust-critical applications. Its visual-token alignment maps serve as a valuable tool in catalog verification workflows. For example, content moderators or quality control teams can use the model to check whether a claimed brand logo is visibly present and where it appears. This supports catalog accuracy and helps prioritize which images need manual inspection, instead of requiring every image to be checked by hand, although the presence of a logo alone does not establish authenticity. Similarly, ColPali can be embedded into seller onboarding tools to visually validate image claims, helping to screen out counterfeit or low-quality listings.
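A catalog-verification rule of the kind described above could compare the strongest similarity for the brand token against a threshold and route weak evidence to a human reviewer. The sketch below is hypothetical: the threshold must be calibrated on labeled listings, raw similarity scores are not probabilities, and a high score only indicates that something resembling the logo is visible, not that the item is genuine.

```python
from dataclasses import dataclass
from typing import List

Grid = List[List[float]]


@dataclass
class BrandCheck:
    listing_id: str
    evidence: float     # strongest patch similarity for the brand token
    needs_review: bool  # True when evidence falls below the threshold


def check_brand_claim(listing_id: str, brand_token_map: Grid, threshold: float) -> BrandCheck:
    """Flag a listing for manual review if the claimed logo is not clearly visible."""
    evidence = max(max(row) for row in brand_token_map)
    return BrandCheck(listing_id, evidence, evidence < threshold)


# Hypothetical similarity maps and threshold, for illustration only.
print(check_brand_claim("listing-001", [[0.1, 0.9], [0.2, 0.4]], threshold=0.5))
print(check_brand_claim("listing-002", [[0.1, 0.2], [0.0, 0.3]], threshold=0.5))
```

Keeping the evidence score in the result, rather than only a pass/fail flag, lets reviewers sort the queue by how weak the visual evidence is.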

Furthermore, the modular, LoRA-based architecture of ColQwen2 supports deployment in constrained environments (e.g., Google Colab or mid-tier GPU servers). The simulations and results in this research were obtained using the free version of Google Colab, indicating that limited computational resources were not a barrier in this setting. This democratizes access to advanced VLMs, allowing small and medium-sized enterprises (SMEs) to use generative multimodal AI without significant infrastructure investment. Moreover, the explainability of ColPali supports compliance with emerging AI regulations, which emphasize transparency and user rights in algorithmic decision-making.
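A back-of-the-envelope estimate shows why such backbones can fit on modest GPUs. Holding the weights alone takes parameters × bytes per parameter; the sketch assumes roughly 2 billion parameters for a Qwen2-VL-2B-class backbone and roughly 3 billion for a PaliGemma-3B-class backbone (both approximate), and it ignores activations, caches and framework overhead, which add to the real footprint.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(n_params: float, dtype: str) -> float:
    """Memory for the weights only, in GiB (1 GiB = 2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


# Approximate backbone sizes (assumptions, not exact parameter counts).
for name, n_params in [("~2B backbone", 2e9), ("~3B backbone", 3e9)]:
    for dtype in ("fp32", "bf16"):
        print(f"{name} @ {dtype}: {weight_memory_gib(n_params, dtype):.2f} GiB")
```

Under these assumptions the weights need about 3.7 GiB and 5.6 GiB in bf16, which is consistent with the larger ColPali backbone having the higher memory consumption noted in the results.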

Finally, the side-by-side model comparison framework proposed in this research can help developers and researchers choose a VLM based on the deployment context. For customer-facing interfaces requiring natural language generation, ColQwen2 is preferable; for backend verification and model auditing, ColPali is the better fit. This clear operational separation supports better system architecture and deployment planning.

Briefly, the implications for practice include the following:

- Enhanced automation in product cataloging and customer support;

- Visual QA tools that improve customer interaction and personalization;

- Increased catalog accuracy and fraud detection through interpretability;

- Democratized VLM deployment via modular adapter design;

- Regulatory readiness through transparent AI decision-making.

By contextualizing ColQwen2 and ColPali in real-world e-commerce pipelines, this research links theoretical model development and applied AI deployment in digital retail.

6. Conclusions

Our research has examined the capabilities of two recent vision–language models, ColQwen2 (vidore/colqwen2-v1.0) and ColPali (vidore/colpali-v1.2-hf), in the context of multimodal product understanding, a rapidly emerging area in e-commerce and retail automation. Through a series of controlled experiments using commercially available product images (e.g., athletic footwear), we have shown that the two models offer distinct, complementary functionalities that could change how products are discovered, described and recommended in online shopping environments.

The ColQwen2 model, leveraging a dual-mode architecture with LoRA-based adapter hot-swapping, performs strongly on tasks that require both visual retrieval and language generation. It maps natural language queries (e.g., brand names, functional categories or vague identifiers such as “S”) to the most semantically relevant product images and then answers detailed, context-aware questions grounded in those visuals. Notably, ColQwen2 consistently inferred complex product attributes in our examples, such as intended use (“Are they for running?”), brand identity or even target demographics (e.g., suitability for children), based solely on visual features such as shape, material and design cues. In practical terms, these capabilities suggest a powerful tool for automated product tagging, visual Q&A and customer support bots that require minimal manual input from sellers.

Further, ColPali was shown to prioritize interpretability over generation, offering patch-level similarity heatmaps that visualize the token-level alignment between user queries and specific image regions. This interpretability is especially valuable and practical in tasks such as brand verification, visual auditing or catalog curation, where trust, transparency and human-in-the-loop workflows are essential. For example, ColPali consistently highlighted logo areas when queried about brand identity, including on the lower-contrast Skechers image, reinforcing its role as a tool for explainable AI (XAI) in commercial applications. While it does not generate natural language outputs, its heatmaps provide visual diagnostics that support downstream decision-making in quality assurance, counterfeit detection and visual compliance checks.

The two models represent opposite ends of a functional spectrum in multimodal e-commerce intelligence. ColQwen2 is well-suited to frontend, consumer-facing systems where conversational interaction, intuitive discovery and contextual question answering are key to user experience and engagement. ColPali, on the other hand, aligns with backend operations where accountability, model interpretability and visual validation are crucial. Their performance on real-world product imagery, including successful retrieval from minimal or underspecified queries, suggests robustness and practical utility on retail platforms.

Our findings highlight the respective strengths of these models and their suitability for various deployment contexts in digital retail, from intelligent assistants to explainable AI (XAI) systems for catalog curation and customer interaction. Our research addresses a gap in the application of VLMs to e-commerce by proposing and demonstrating the implementation of two complementary architectures. ColQwen2 fills the need for unified RAG systems tailored to digital retail, enabling end-to-end visual product querying and response generation. In parallel, ColPali addresses the growing demand for XAI in commercial contexts by supporting interpretable and auditable tasks, such as brand verification and catalog curation.

The implications of these results for the e-commerce sector are potentially considerable. Integrating such models into product pipelines and platforms could reduce manual effort in product listing, increase metadata accuracy, improve search relevance and offer intelligent, image-grounded chat support. For retailers and marketplaces, this could translate into faster onboarding, greater catalog consistency and higher customer satisfaction. Additionally, the ability to trace model attention or explain retrieval outcomes opens pathways for ethical deployment, helping mitigate concerns about black-box decision-making in AI systems.

The case study, focused on adults’ and children’s footwear from different brands, offers a useful test case because of the category’s distinctive visual and functional features. We also conducted simulations using additional images, including t-shirts and bags. These tests were consistent with the results above: ColQwen2 generated accurate textual responses about visual attributes, and both models recognized brand information, for example by identifying the logo and its location within the image.

One limitation of the current implementations is their focus on single-turn queries and responses, whereas real-world customer interactions often involve multi-turn dialogs or contextual clarification. Neither ColQwen2 nor ColPali, as currently deployed, handles conversational memory or dialog context retention, which may limit their utility as full-scale virtual shopping assistants. Moreover, while the presented results demonstrate the effectiveness of the proposed multimodal models in controlled scenarios, the current research does not include validation through real-world user feedback or deployment data. This limits our ability to fully assess the models’ performance in live e-commerce environments, where factors such as user behavior, interface design and latency may influence outcomes. We acknowledge this as a current limitation and view it as a valuable direction for future research.

While our current evaluation focuses on a small set of showcase product images that highlight architectural differences and explainability features, we acknowledge the need for broader benchmarking. In future work, we plan to extend the analysis using a larger proprietary dataset and to benchmark on public datasets such as Fashion-IQ and DocVQA. We also intend to report quantitative metrics, such as top-1 brand classification accuracy, mean average precision (mAP) for attribute tagging and response latency, and to compare against baselines such as CLIP, BLIP-2 and LLaVA-Next to substantiate the performance claims.
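For reference, the planned accuracy metrics could be computed as sketched below. Top-1 accuracy is the fraction of images whose highest-ranked brand matches the gold label; average precision (AP) for one attribute or query averages precision@k over the ranks k at which relevant items appear, and mAP is the mean of AP over attributes or queries. This version assumes every relevant item appears somewhere in the ranked list (otherwise AP should be divided by the total number of relevant items); the labels in the example are made up.

```python
from typing import Sequence


def top1_accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of items whose top-ranked prediction equals the gold label."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("predicted and gold must be non-empty and equally long")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def average_precision(ranked_relevance: Sequence[bool]) -> float:
    """Mean of precision@k over the ranks k that hold a relevant item."""
    hits, precision_sum = 0, 0.0
    for k, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / k
    return precision_sum / hits if hits else 0.0


def mean_average_precision(rankings: Sequence[Sequence[bool]]) -> float:
    """mAP: average of AP over several attributes or queries."""
    if not rankings:
        raise ValueError("need at least one ranking")
    return sum(average_precision(r) for r in rankings) / len(rankings)


# Made-up labels and relevance judgments, for illustration only.
print(top1_accuracy(["Nike", "Puma", "Skechers"], ["Nike", "Puma", "Nike"]))
print(average_precision([True, False, True]))
print(mean_average_precision([[True, False, True], [False, True]]))
```

AP rewards placing relevant items early: in the second example ranking, the only relevant item at rank 2 yields an AP of 0.5 rather than 1.0.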

Future developments may also focus on hybrid architectures that combine the retrieval and generative flexibility of ColQwen2 with the interpretability mechanisms of ColPali, delivering both functional breadth and epistemic transparency. As retail continues to evolve toward hyper-personalization, visual intelligence and automation, such vision–language models are likely to become foundational components of next-generation e-commerce infrastructure.

Author Contributions

Conceptualization, Methodology, Formal Analysis, Investigation, Writing—Original Draft, Writing—Review and Editing, Visualization, and Project administration, S.-V.O.; Validation, Formal Analysis, Investigation, Resources, Data Curation, Writing—Original Draft, Writing—Review and Editing, Visualization, and Supervision, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant of the Ministry of Research, Innovation and Digitization, CNCS/CCCDI-UEFISCDI, project number COFUND-CETP-SMART-LEM-1, within PNCDI IV.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be available on request.

Acknowledgments

This work was supported by a grant of the Ministry of Research, Innovation and Digitization, CNCS/CCCDI-UEFISCDI, project number COFUND-CETP-SMART-LEM-1, within PNCDI IV.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

References

- Li, H.; Yuan, P.; Xu, S.; Wu, Y.; He, X.; Zhou, B. Aspect-Aware Multimodal Summarization for Chinese e-Commerce Products. In Proceedings of the AAAI 2020, 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Khandelwal, A.; Kulkarni, S.S.; Mittal, H.; Gupta, D. Large Scale Generative Multimodal Attribute Extraction for E-Commerce Attributes. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023.

- Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. CLIP-Adapter: Better Vision-Language Models with Feature Adapters. Int. J. Comput. Vis. 2024, 132, 581–595.

- Tashu, T.M.; Fattouh, S.; Kiss, P.; Horvath, T. Multimodal E-Commerce Product Classification Using Hierarchical Fusion. In Proceedings of the 2022 IEEE 2nd Conference on Information Technology and Data Science, CITDS 2022, Debrecen, Hungary, 16–18 May 2022.

- Hewawalpita, S.; Perera, I. Multimodal User Interaction Framework for E-Commerce. In Proceedings of the IEEE International Research Conference on Smart Computing and Systems Engineering, SCSE 2019, Colombo, Sri Lanka, 28 March 2019.

- Hendriksen, M. Multimodal Retrieval in E-Commerce: From Categories to Images, Text, and Back. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2022.

- Tang, T.; Wu, Y.; Wu, Y.; Yu, L.; Li, Y. VideoModerator: A Risk-Aware Framework for Multimodal Video Moderation in E-Commerce. IEEE Trans. Vis. Comput. Graph. 2022, 28, 846–856.

- Ezzameli, K.; Mahersia, H. Emotion Recognition from Unimodal to Multimodal Analysis: A Review. Inf. Fusion 2023, 99, 101847.

- Zhuansun, F.Q.; Chen, J.J.; Chen, W.; Sun, Y. Analysis of Precision Service of Agricultural Product E-Commerce Based on Multimodal Collaborative Filtering Algorithm. Math. Probl. Eng. 2022, 2022, 8323467.

- Xu, W.; Zhang, X.; Chen, R.; Yang, Z. How Do You Say It Matters? A Multimodal Analytics Framework for Product Return Prediction in Live Streaming e-Commerce. Decis. Support Syst. 2023, 172, 113984.

- Cai, W.; Song, Y.; Wei, Z. Multimodal Data Guided Spatial Feature Fusion and Grouping Strategy for E-Commerce Commodity Demand Forecasting. Mob. Inf. Syst. 2021, 2021, 5568208.

- Xu, W.; Cao, Y.; Chen, R. A Multimodal Analytics Framework for Product Sales Prediction with the Reputation of Anchors in Live Streaming E-Commerce. Decis. Support Syst. 2024, 177, 114104.

- Chen, J.; Zhong, Z.; Feng, Q.; Liu, L. The Multimodal Emotion Information Analysis of E-Commerce Online Pricing in Electronic Word of Mouth. J. Glob. Inf. Manag. 2022, 30, 1–17.

- Koh, J.Y.; Salakhutdinov, R.; Fried, D. Grounding Language Models to Images for Multimodal Generation. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

- Sun, Q.; Wang, Y.; Xu, C.; Zheng, K.; Yang, Y.; Hu, H.; Xu, F.; Zhang, J.; Geng, X.; Jiang, D. Multimodal Dialogue Response Generation. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022.

- Zhao, Y.; Cheng, B.; Huang, Y.; Wan, Z.; Hošovský, A. Beyond Words: An Intelligent Human-Machine Dialogue System with Multimodal Generation and Emotional Comprehension. Int. J. Intell. Syst. 2023, 2023, 9267487.

- Li, Y.; Du, Y.; Zhou, K.; Wang, J.; Zhao, W.X.; Wen, J.R. Evaluating Object Hallucination in Large Vision-Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP 2023), Singapore, 6–10 December 2023.

- Ma, H.; Fan, B.; Ng, B.K.; Lam, C.T. VL-Meta: Vision-Language Models for Multimodal Meta-Learning. Mathematics 2024, 12, 286.

- Wu, J.; Yu, T.; Li, S. Deconfounded and Explainable Interactive Vision-Language Retrieval of Complex Scenes. In Proceedings of the 29th ACM International Conference on Multimedia (MM 2021), Virtual, 20–24 October 2021.

- Sammani, F.; Mukherjee, T.; Deligiannis, N. NLX-GPT: A Model for Natural Language Explanations in Vision and Vision-Language Tasks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Zhang, J.; Huang, J.; Jin, S.; Lu, S. Vision-Language Models for Vision Tasks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5625–5644. [Google Scholar] [CrossRef]

- Wu, W.; Sun, Z.; Song, Y.; Wang, J.; Ouyang, W. Transferring Vision-Language Models for Visual Recognition: A Classifier Perspective. Int. J. Comput. Vis. 2024, 132, 392–409. [Google Scholar] [CrossRef]

- Wang, Y.; Yu, Z.; Wang, J.; Heng, Q.; Chen, H.; Ye, W.; Xie, R.; Xie, X.; Zhang, S. Exploring Vision-Language Models for Imbalanced Learning. Int. J. Comput. Vis. 2024, 132, 224–237. [Google Scholar] [CrossRef]

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to Prompt for Vision-Language Models. Int. J. Comput. Vis. 2022, 130, 2337–2348. [Google Scholar] [CrossRef]

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional Prompt Learning for Vision-Language Models. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Wang, H.; Huang, R.; Zhang, J. Research Progress on Vision–Language Multimodal Pretraining Model Technology. Electronics 2022, 11, 3556. [Google Scholar] [CrossRef]

- Xing, J.; Liu, J.; Wang, J.; Sun, L.; Chen, X.; Gu, X.; Wang, Y. A Survey of Efficient Fine-Tuning Methods for Vision-Language Models—Prompt and Adapter. Comput. Graph. 2024, 119, 103885. [Google Scholar] [CrossRef]

- Wang, L.; He, J.; Li, S.; Liu, N.; Lim, E.P. Mitigating Fine-Grained Hallucination by Fine-Tuning Large Vision-Language Models with Caption Rewrites. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2024. [Google Scholar]

- Du, Y.; Wei, F.; Zhang, Z.; Shi, M.; Gao, Y.; Li, G. Learning to Prompt for Open-Vocabulary Object Detection with Vision-Language Model. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Shin, W.; Park, J.; Woo, T.; Cho, Y.; Oh, K.; Song, H. E-CLIP: Large-Scale Vision-Language Representation Learning in E-Commerce. In Proceedings of the International Conference on Information and Knowledge Management, Atlanta, GA, USA, 17–21 October 2022. [Google Scholar]

- Jin, Y.; Li, Y.; Yuan, Z.; Mu, Y. Learning Instance-Level Representation for Large-Scale Multi-Modal Pretraining in E-Commerce. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Moratelli, N.; Barraco, M.; Morelli, D.; Cornia, M.; Baraldi, L.; Cucchiara, R. Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates. Sensors 2023, 23, 1286.

- Chen, B.; Jin, L.; Wang, X.; Gao, D.; Jiang, W.; Ning, W. Unified Vision-Language Representation Modeling for E-Commerce Same-Style Products Retrieval. In Companion Proceedings of the ACM Web Conference 2023 (WWW 2023), Austin, TX, USA, 30 April–4 May 2023.

- Năstasă, A.; Matei, M.M.M.; Mocanu, C. Artificial Intelligence: Friend or Foe? Experts’ Concerns on European AI Act. Econ. Comput. Econ. Cybern. Stud. Res. 2023, 57, 5–22.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of Machine Learning Research, Online, 18–24 July 2021.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-Training with Frozen Image Encoders and Large Language Models. In Proceedings of Machine Learning Research, Honolulu, HI, USA, 23–29 July 2023.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Doshi-Velez, F.; Kim, B. A Roadmap for a Rigorous Science of Interpretability. arXiv 2017, arXiv:1702.08608v1.

- Gao, D.; Jin, L.; Chen, B.; Qiu, M.; Li, P.; Wei, Y.; Hu, Y.; Wang, H. FashionBERT: Text and Image Matching with Adaptive Loss for Cross-Modal Retrieval. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2020), Virtual, 25–30 July 2020.

- Li, Y.; Chen, T.; Huang, Z. Attribute-Aware Explainable Complementary Clothing Recommendation. World Wide Web 2021, 24, 1885–1901.

- Kim, W.; Son, B.; Kim, I. ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision. In Proceedings of Machine Learning Research, Online, 18–24 July 2021.

- Hu, E.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the 10th International Conference on Learning Representations (ICLR 2022), Virtual, 25 April 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).