Abstract

Generative AI needs to collect user data to provide more accurate answers, which may raise users’ privacy concern and undermine their disclosure intention. The purpose of this research is to examine generative AI user disclosure intention from the perspective of technological–social affordance. We adopted a mixed-method approach combining PLS-SEM and fsQCA to analyze the data. The results reveal that perceived affordance of content generation (including information association, content quality, and interactivity), perceived affordance of privacy protection (including anonymity and privacy statement), and perceived affordance of anthropomorphic interaction (including empathy and social presence) affect privacy concern and reciprocity, except that the effect of content generation affordance on privacy concern is not significant. Privacy concern and reciprocity in turn affect disclosure intention. The fsQCA identified two configurations that trigger user disclosure intention. These results imply that generative AI platforms need to increase users’ perceived affordance in order to promote their disclosure intention and ensure the sustainable development of the platforms.

1. Introduction

The emergence of generative AI has opened a new phase in human–AI interaction. It can simulate creative thinking and has shown great productivity. Major technology companies have focused on AI and developed large language models (LLMs). This technology has rapidly spread into many fields, such as healthcare, news media, and education, and has provided efficient solutions for different industries. However, privacy risk in generative AI has become a problem that cannot be ignored. OpenAI, the developer of ChatGPT, was sued by anonymous plaintiffs seeking up to USD 3 billion over alleged violations of user privacy [1]. As LLMs become more powerful, data management also needs to be strengthened. According to a regulatory document released by the Cyberspace Administration of China [2], generative AI platforms must not infringe on individuals’ privacy and personal information rights, indicating that information privacy has become an important factor in the development of generative AI.

On the one hand, besides registration information and access records, users may input private information, such as personal details, interests, and preferences, to obtain accurate answers when interacting with generative AI. These data may be used for model training and optimization in ways that users are unaware of or find unacceptable [3]. Furthermore, platforms may be attacked and leak training data through algorithmic vulnerabilities. On the other hand, in traditional social media, content posted by users is publicly liked and forwarded, so the path of information dissemination is relatively visible, and users can set the scope of information sharing. In contrast, information dissemination in generative AI is more hidden, and users may lack control over their information. Therefore, the risk of privacy leakage and the uncertainty of information dissemination make it difficult for users to decide on information disclosure, which hinders content co-creation between users and platforms [4]. When users selectively withhold input, training data become sparse, which directly reduces the quality of generated content and increases false information and bias.

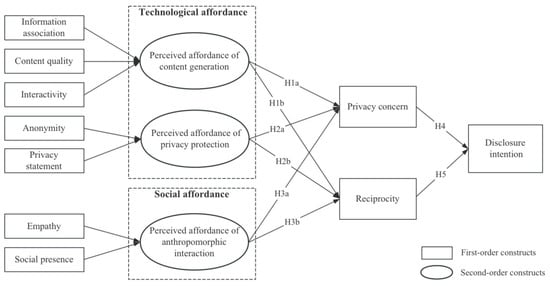

As noted earlier, user disclosure is crucial to the continuous development of generative AI. Previous studies focused on user disclosure intention in traditional contexts, such as online healthcare [5,6], social networking [7,8], and mobile applications [9,10], and found that various factors, such as trust and privacy concern, affect user disclosure [5,8,9]. However, these studies seldom examined user disclosure in generative AI, an emerging technology. In other words, the mechanism underlying generative AI user disclosure remains unclear. This research attempts to fill this gap by examining generative AI user disclosure intention from a perceived affordance perspective. Compared with traditional information technology, generative AI has more powerful features, such as emergent intelligence and anthropomorphic interaction, which may improve users’ perceived affordance and affect their behavioral decisions. From a technological–social perspective [11], we propose that perceived affordance includes perceived affordance of content generation, privacy protection, and anthropomorphic interaction. The first two are technological affordances, whereas the third is a social affordance. Perceived affordance of content generation contains information association, content quality, and interactivity. Perceived affordance of privacy protection contains anonymity and privacy statement. Perceived affordance of anthropomorphic interaction contains empathy and social presence. We include privacy concern and reciprocity as mediators between perceived affordance and disclosure intention. Partial least squares structural equation modeling (PLS-SEM) and fuzzy-set qualitative comparative analysis (fsQCA) were used to analyze the data. The results uncover the mechanism through which perceived affordance affects generative AI user disclosure. They may also provide insights for generative AI platforms to promote user disclosure intention and ensure the sustainable development of platforms.

2. Literature Review

2.1. Generative AI User Behavior

Generative AI has brought significant changes to fields such as medicine [12], education [13], and finance [14]. Generative AI user behavior, such as adoption and usage, has become an active research topic. In terms of adoption, Soni [15] revealed the drivers and barriers that influence the adoption of generative AI in digital marketing campaigns. Hazzan-Bishara et al. [16] found that usefulness, institutional support, and self-efficacy affect teachers’ intention to use AI. Zhou and Lu [17] identified the effect of trust on user adoption of AI-generated content. In terms of usage, Shahsavar and Choudhury [18] identified the factors influencing the use of ChatGPT for self-diagnosis in healthcare. Jo [4] examined the opinions of two major user groups, university students and office workers, on generative AI. Baek and Kim [3] explored the impact of user motivations on the continuous usage intention of generative AI. Liu and Du [19] argued that ethical aspects, such as security, privacy, non-deception, and fulfillment, affect continuance intention toward generative AI. Nan et al. [20] found that information quality, system quality, privacy concerns, and perceived innovativeness affect users’ intentions to continue using ChatGPT.

These studies show that research on generative AI user behavior is still in its infancy. It has mainly focused on the adoption and usage of generative AI and has seldom investigated user disclosure. This research attempts to fill this gap by examining the effect of perceived affordance on user disclosure intention.

2.2. User Disclosure Intention

User disclosure means that users disclose personal information, such as identity information and preferences, in order to obtain personalized information and services. When deciding whether to disclose personal information, users may weigh benefits (such as personalization) against costs (such as privacy risk), a trade-off known as the privacy calculus. Previous research examined user disclosure in the contexts of online healthcare, social networking, and mobile applications. In terms of online healthcare, Sun et al. [5] found that trust is a necessary condition for user disclosure of health information. Dogruel et al. [6] noted that privacy attitudes, habits, and social norms affect user disclosure intention in mobile health apps. In terms of social networking, Neves et al. [8] found that institutional and peer privacy concerns increase users’ intention to reduce social networking use. Chen et al. [7] reported that both privacy benefits and privacy concerns affect personal information disclosure. In terms of mobile applications, Le et al. [9] reported that perceived justice and privacy concern affect the privacy disclosure behavior of mobile app users. Lu [10] noted that perceived benefits and risks affect disclosure intention in mobile banking.

These studies examined user disclosure in traditional contexts such as online healthcare, social networking, and mobile apps. Extending this line of research, this research examines user disclosure intention in generative AI, an emerging technology, in order to uncover the mechanism underlying generative AI user disclosure intention.

2.3. Perceived Affordance Theory

The concept of affordance, which originates from ecological psychology, aims to explain the interrelationships between an organism and its environment. It was later introduced into the field of design to help designers improve usability and user experience by describing the relationship between object properties and user abilities. With the development of computer technology, the concept was extended to human–computer interaction. Norman [21] defined perceived affordance as the possibility of user actions enabled by information technology artifacts. Even for the same technology or feature, the affordance may vary with usage scenarios and user needs.

Prior research has studied the effect of perceived affordance on user behavior in multiple contexts. Li et al. [22] found that cross-border e-commerce affordances affect consumer loyalty through perceived value. Wang and Zhao [23] noted that the affordances of patient-accessible electronic health records enhance older adults’ attachment to platforms and doctors. Wang and Wang [24] found that gamification affordances affect users’ satisfaction and knowledge sharing in social question and answer (Q&A) communities. Nam et al. [25] noted that perceived live streaming affordance affects enjoyment and user purchase behavior. Lee and Li [26] found that perceived affordance, including ubiquitous connection, information association, visibility, and interactivity, drives users’ perceptions of chatbot ability and warmth.

These studies examined perceived affordance in the contexts of e-commerce, online health, live streaming commerce, and chatbots. Extending them, we examine the impact of perceived affordance on generative AI user disclosure intention. Socio-technical theory suggests that information technology does not exist in isolation but has social attributes. Thus, we divide perceived affordance into two dimensions: technological affordance and social affordance. For technological affordance, generative AI provides users with possibilities for action in two respects. First, it helps users solve problems by quickly generating content in conversations. Second, it helps users protect their privacy by handling their input appropriately. For social affordance, generative AI can act as a social actor that interacts with users in a human-like manner, giving users the possibility to meet emotional needs and establish social relationships.

3. Research Model and Hypotheses

3.1. Technological Affordance

3.1.1. Perceived Affordance of Content Generation

As a second-order factor, perceived affordance of content generation consists of three first-order factors: information association, content quality, and interactivity.

Information association reflects the extent to which users can acquire information that meets their needs from generative AI [27]. A main demand of users of content generation services is to obtain useful information, and generative AI offers great possibilities for satisfying this demand. It can not only analyze users’ personalized questions, quickly summarize information from multiple channels, and formulate organized answers, but also help users extend their questions and develop their thinking through prompts such as “you can continue to ask me”, producing more in-depth and broader information. Through these functions, generative AI acts as a surrogate information searcher: users can delegate the search and aggregation of information to AI and directly obtain simplified answers. Information association therefore bridges the gap between users and massive information. It helps reduce users’ information overload, increase their satisfaction [28], and ultimately mitigate their privacy concern [29]. Research has also found that perceived affordance provides users with the potential to solve problems [26], makes them feel helped, and creates a sense of responsibility toward the platform.

Content quality reflects the accuracy, relevance, and timeliness of the content provided by generative AI [30]. Users regard generative AI as an information intermediary and expect to obtain the required information from it within a short time. However, if the generated content is outdated, irrelevant, or full of bias and errors, users may doubt the capability of the platform [31], which in turn triggers concerns about their information privacy. Meanwhile, users need to spend time and effort screening and evaluating low-quality content, and they may need to rephrase their questions to obtain better answers, which undermines their experience [30]. Conversely, high-quality and reliable content generation leads to positive user perceptions: users come to believe that generative AI is capable of managing data privacy and will provide more benefits and value in the future.

Interactivity means that users can take active control and conduct two-way communication in a technical service [32]. Lu et al. [33] argued that product interactivity reduces users’ difficulty in processing information, engenders trust, and removes doubts by deepening the mutual understanding between users and products. Yuan et al. [34] noted that interactivity increases customer engagement on sharing economy platforms. Generative AI platforms are characterized by the ability to interact with users in real time and dynamically adjust their output based on users’ feedback. Users can initiate or end the interaction at their discretion. On the one hand, effective communication helps users learn more about the platform’s operating mechanism, which may alleviate their concerns about data collection and usage. On the other hand, interactivity enhances users’ participation in the platform and helps them use the information to complete their tasks, which in turn leads to their perception of reciprocity.

Thus, the perceived affordance of content generation of generative AI provides users with great possibilities for acquiring information in terms of scope, quality, and efficiency, which helps build users’ trust and alleviate their privacy concerns. Moreover, the content generation service itself is designed to enhance the collaborative and reciprocal relationship between platforms and users. Therefore, we propose the following:

H1a:

Perceived affordance of content generation negatively affects privacy concern.

H1b:

Perceived affordance of content generation positively affects reciprocity.

3.1.2. Perceived Affordance of Privacy Protection

Perceived affordance of privacy protection consists of two dimensions: anonymity and privacy statement.

Anonymity means that users perceive that their real identities are hidden in communication, regardless of whether the communication is public or not [35]. Rains [36] found that in health forums, anonymity can alleviate bloggers’ negative emotions, such as embarrassment, and facilitate their self-disclosure of health conditions. Woo [37] argued that anonymity is user-oriented and is an effective way to protect privacy. Thus, if users believe that their identity will not be easily recognized by the platform or other users, they will be more likely to input personal information despite privacy concerns. In addition, anonymity has been found to produce a disinhibition effect [35]: users may become bolder, more honest, and more open when using generative AI, asking questions they would not raise in a non-anonymous environment. Generative AI platforms allow users to freely set avatars, nicknames, and identities in conversations, which can stimulate users’ imagination and creativity to a certain extent. The platforms in turn output innovative and personalized responses. Moreover, users can freely provide feedback on their needs and opinions, which may lead to mutual benefits in interactions.

The privacy statement explains how the platform collects and uses information. After reading it, users can learn how their input information is used and protected in generative AI. Wu et al. [38] suggested that there is a significant correlation between privacy policy and privacy concern, which further affects consumers’ intention to provide personal information. An ambiguous privacy statement leads to user stress and frustration [39]. In contrast, a transparent privacy statement helps users understand the privacy practices of the platform, which may reduce uncertainty and alleviate their privacy concerns. Thus, a clear and transparent privacy statement can encourage users to disclose information to generative AI and facilitate a two-way flow and updating of information.

Therefore, anonymity provides a relatively secure interaction environment for users, while the privacy statement enhances the transparency of privacy protection and users’ perceived control. Both factors help alleviate users’ anxiety about the leakage of personal privacy and establish a sustainable reciprocal relationship between users and platforms. Thus, we suggest the following:

H2a:

Perceived affordance of privacy protection negatively affects privacy concern.

H2b:

Perceived affordance of privacy protection positively affects reciprocity.

3.2. Social Affordance

Social affordance is represented by the perceived affordance of anthropomorphic interaction, which consists of two factors: empathy and social presence.

Empathy reflects an individual’s ability to understand others’ feelings and thoughts and act accordingly [40]. Generative AI simulates patterns of human thinking and performs emotional analysis by training on large datasets. It can attend to user-specific needs and provide personalized services, such as casual chatting and psychological counseling. Lack of empathy may lead to the neglect of privacy concerns [41]. Conversely, when perceiving the empathy of generative AI, users may believe that it attends to their privacy concerns and adopts protective measures, which may increase their confidence in privacy protection. In addition, individuals tend to be more generous toward objects with empathic capabilities [42]. Generative AI that responds to users’ emotional needs is therefore more likely to be rewarded by users.

Social presence refers to a feeling of connecting with people when interacting with generative AI, such as the warmth and sociability of the interaction style [43]. The social cues conveyed by generative AI in its output (such as a human-like communication style and emotional expression) trigger positive social responses from users, such as persuasion [44] and trust [45]. The close psychological distance gives users a sense of security. Users may regard the interaction partner as a friend and establish a trusting relationship. They then feel comfortable exchanging information and obliged to respond to “a friend’s” requests or provide help.

Generative AI is increasingly endowed with human-like characteristics: empathy is an anthropomorphic expression that conveys emotional cues, and social presence stimulates social responses that engage users in their interactions with AI. Such anthropomorphic interaction may ensure a positive communication process and contribute to an engaging experience. Therefore, we propose the following:

H3a:

Perceived affordance of anthropomorphic interaction negatively affects privacy concern.

H3b:

Perceived affordance of anthropomorphic interaction positively affects reciprocity.

3.3. Privacy Concern

Privacy concern refers to users’ concern about possible threats to their personal information [46]. Users input various content during their interaction with generative AI, which may include private information. If they worry that this information may be improperly used, they may stop inputting information or provide false information after weighing the pros and cons. Such reluctance to disclose may in turn impair model training: the model may not be able to provide accurate and personalized content generation services, and users’ personalized needs may go unmet. Studies have shown a negative relationship between privacy concern and disclosure intention [7,9]. Privacy concern is an important factor influencing users’ information boundaries [9]. When the level of concern is low, users’ willingness to express themselves is high, and the scope of information sharing expands. Therefore, we propose the following:

H4:

Privacy concern negatively affects disclosure intention.

3.4. Reciprocity

Reciprocity is a social interaction norm that reflects the degree of mutual satisfaction derived from the exchange of goods or services [47]. The interaction between users and generative AI is essentially an exchange and use of information: users input information to convey their needs, while generative AI outputs answers that provide solutions. In this interaction process, information serves as the medium. According to social exchange theory [48], both sides realize reciprocity by exchanging resources, and when one side provides help, the recipient feels obliged to reciprocate. In the context of generative AI, when users are the recipients, from a rational perspective, they are willing to provide more information to encourage the platform to continue delivering benefits. From an emotional perspective, they are willing to maintain the relationship with the platform and contribute information to reciprocate the help they received. When the platform acts as the recipient, reciprocity also makes users believe that disclosing information to the platform is valuable and will be rewarded in the future. Reciprocity has been found to have a positive effect on disclosure intention [49]. Therefore, we suggest the following:

H5:

Reciprocity positively affects disclosure intention.

The model is shown in Figure 1.

Figure 1.

Research model.

4. Methodology

4.1. Instrument Development

All items were adapted from the extant literature and revised to fit the context of generative AI to ensure content validity. A pretest was conducted among twenty users who had extensive experience using generative AI. Based on their comments, we revised a few items to improve their clarity and understandability. The questionnaire includes three parts. The first part introduces generative AI, including its basic functions, privacy statements, and examples of dialogue content with anthropomorphic features. The aim is to give respondents a comprehensive understanding of generative AI and reduce the impact of cognitive bias. The second part covers demographic information and generative AI usage. The third part lists the items for the ten first-order constructs. Table 1 shows the measurement items. All items were measured on a seven-point Likert scale ranging from strongly disagree (1) to strongly agree (7).

Table 1.

The items and source.

4.2. Data Collection and Sample Demographics

The questionnaire was developed on an online survey platform. On a few popular social media platforms, such as WeChat, we invited users who had experience using generative AI to complete the questionnaire. To ease participants’ concerns, we ensured that responses were anonymous and that the data would be used only for research. We also offered to send the research results to participants upon request. Snowball sampling was adopted: respondents were encouraged to forward the questionnaire link to their friends to expedite data collection. Data collection lasted for two weeks. We scrutinized all responses and removed invalid ones, including those from respondents with no experience using generative AI and those giving the same answer to all questions. As a result, we obtained 512 valid responses.
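The two exclusion rules above (no generative AI experience; identical answers to every question) can be expressed as a simple filter. This is a minimal sketch, not the authors’ procedure: it assumes each response is stored as a record with a hypothetical `has_genai_experience` flag and a list of Likert answers, and the class and field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One questionnaire response (hypothetical record layout)."""
    respondent_id: int
    has_genai_experience: bool  # answer to an assumed screening question
    likert_items: list[int]     # answers on the 1-7 Likert scale


def is_valid(response: Response) -> bool:
    """Apply the two exclusion rules described in the text."""
    # Rule 1: respondent has never used generative AI.
    if not response.has_genai_experience:
        return False
    # Rule 2: straight-lining, i.e. the same answer to every item.
    if len(set(response.likert_items)) <= 1:
        return False
    return True


def screen(responses: list[Response]) -> list[Response]:
    """Keep only valid responses, preserving their original order."""
    return [r for r in responses if is_valid(r)]
```

Straight-lining is checked here as "at most one distinct value across all items"; a real screening step might also use attention-check items or completion time, which the text does not mention.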

Among the respondents, 39.8% were male and 60.2% were female. Most of them (81.3%) were between 20 and 39 years old, and 90.8% had received a college or higher education. A CNNIC report [58] indicated that 65.4% of generative AI users were between 20 and 39 years old and 47% had received a college or higher education, indicating that young, well-educated people are the main group of generative AI users. Our sample is roughly consistent with the report in this respect, although it contains higher shares of young and college-educated respondents. The most frequently used generative AI products include ChatGPT (78.5%), Baidu ERNIE Bot (75.8%), New Bing (53.5%), and Ali Qwen (32.8%), which are among the reputable generative AI products. Users often adopted generative AI for creative work (80.5%), study or work assistance (59.4%), translation (55.3%), and chatting (53.7%).

4.3. Data Analysis Methods

In this research, we adopted a mixed method of PLS-SEM and fsQCA to perform data analysis. Compared with traditional methods such as linear regression, PLS-SEM can more accurately estimate the relationships between latent variables [59]. In PLS-SEM, we first examined the measurement model to test reliability and validity. Then, we examined the structural model to test the research hypotheses and model fit. Because PLS-SEM focuses on the net effect of individual variables on the dependent variable, it may neglect multiple concurrent causality between variables [60]. Thus, we adopted fsQCA to identify the configurations leading to the outcome variable [61].

5. Results

5.1. SEM

First, we used SmartPLS 4 to test the measurement model. The results are listed in Table 2. All Cronbach’s alpha coefficients and composite reliabilities (CRs) were greater than 0.8, indicating good reliability. Factor loadings ranged between 0.830 and 0.926, and all average variance extracted (AVE) values were larger than 0.5, indicating good convergent validity. In addition, as Table 3 shows, the square root of each construct’s AVE is larger than its correlations with the other constructs (the Fornell-Larcker criterion), indicating good discriminant validity.
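The reliability and validity statistics named above follow standard formulas, sketched below with NumPy. This is an illustration under stated assumptions, not SmartPLS output: composite reliability and AVE assume standardized loadings, Cronbach’s alpha is computed on raw item scores, and the example loadings are hypothetical values chosen within the reported 0.830–0.926 range.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for one construct.

    items: n_respondents x k_items matrix of item scores.
    """
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - sum_item_var / total_var))


def composite_reliability(loadings) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    squared_sum = lam.sum() ** 2
    error_var = (1.0 - lam**2).sum()  # assumes standardized loadings
    return float(squared_sum / (squared_sum + error_var))


def average_variance_extracted(loadings) -> float:
    """AVE = mean of squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam**2))


def fornell_larcker_ok(aves, corr) -> bool:
    """True if sqrt(AVE_i) exceeds |corr(i, j)| for every pair i != j."""
    root_ave = np.sqrt(np.asarray(aves, dtype=float))
    corr = np.abs(np.asarray(corr, dtype=float))
    for i in range(len(root_ave)):
        off_diag = np.delete(corr[i], i)  # correlations with other constructs
        if np.any(off_diag >= root_ave[i]):
            return False
    return True


# Hypothetical loadings for one three-item construct
loadings = [0.83, 0.88, 0.926]
print(composite_reliability(loadings), average_variance_extracted(loadings))
```

The thresholds used in the text (alpha and CR above 0.8, AVE above 0.5) would then be checked against these values construct by construct.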

Table 2.

Reliability and validity.

Table 3.

Correlation matrix of variables.

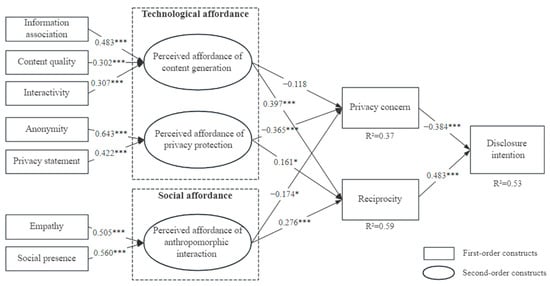

For the second-order factors, we adopted the two-stage method suggested by [62]. First, we obtained the latent variable score of each first-order factor. Then, these scores were used as formative indicators of the second-order factor. The results are shown in Table 4. The outer weights ranged between 0.302 and 0.643, all above the critical value of 0.2, and all were significant at p < 0.001, indicating that each first-order factor contributed significantly to its second-order factor. The variance inflation factors (VIFs) were all below the threshold of 3 [63], indicating that the measurement model was not affected by collinearity.
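The collinearity check on the formative second-order indicators can be sketched as follows. This minimal example assumes the first-stage latent variable scores are available as the columns of a matrix (the function name `vif` is illustrative); it computes VIF_j = 1 / (1 - R_j²) from an ordinary least squares regression of each indicator on the others. SmartPLS reports these values itself, so the code only shows what the statistic measures.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor for each column of X.

    X: n_respondents x p matrix, e.g. first-order latent variable
    scores used as formative indicators of one second-order factor.
    VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from an OLS regression
    of column j on all other columns (plus an intercept).
    """
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ beta
        r2 = 1.0 - np.sum(resid**2) / np.sum((y - y.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out
```

For example, `np.all(vif(scores) < 3)` would reproduce the threshold check described above, where `scores` is a hypothetical matrix holding, say, the information association, content quality, and interactivity scores.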

Table 4.

Second-order factor analysis.

Second, SmartPLS 4 was employed to test the structural model. Path coefficients and their significance are shown in Figure 2. All hypotheses except H1a were supported. The explained variances (R²) of privacy concern, reciprocity, and disclosure intention were 37%, 59%, and 53%, respectively, showing good explanatory power.

Figure 2.

Path coefficients. (Note: *, p < 0.05; ***, p < 0.001).

The mediation effects of privacy concern and reciprocity were tested using bootstrapping with 5000 resamples. An indirect effect is considered significant if its 95% confidence interval does not contain zero. The results are shown in Table 5. Except for the path from content generation to privacy concern to disclosure intention, all indirect paths were significant, indicating that privacy concern and reciprocity play mediating roles.
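The bootstrap logic behind this mediation test can be sketched as below. This is a deliberate simplification: it assumes composite scores for X (an affordance), M (privacy concern or reciprocity), and Y (disclosure intention) and estimates the paths with ordinary least squares, whereas SmartPLS re-estimates the full PLS model in every resample. The helper names are hypothetical. It resamples respondents with replacement, recomputes the indirect effect a*b each time, and takes the percentile interval.

```python
import numpy as np


def _slopes(y: np.ndarray, *xs: np.ndarray) -> np.ndarray:
    """OLS slope coefficients of y on the given predictors (intercept dropped)."""
    A = np.column_stack([np.ones(len(y)), *xs])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta[1:]


def indirect_effect(x, m, y) -> float:
    a = _slopes(m, x)[0]     # path X -> M
    b = _slopes(y, m, x)[0]  # path M -> Y, controlling for X
    return float(a * b)


def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect a*b."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    tail = (1.0 - level) / 2.0
    lo, hi = np.quantile(estimates, [tail, 1.0 - tail])
    return float(lo), float(hi)


def is_significant(ci) -> bool:
    """The indirect effect is significant if the interval excludes zero."""
    lo, hi = ci
    return lo > 0 or hi < 0
```

The default `n_boot=5000` mirrors the number of resamples reported in the text; the decision rule in `is_significant` is the same one used for Table 5.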

Table 5.

Mediation test.

5.2. FsQCA

PLS-SEM makes causal predictions based on unidirectional linear relationships and examines the “net effect” of independent variables on the dependent variable. However, variables may exhibit multiple concurrent causality, in which different combinations of conditions lead to the same outcome [60]. FsQCA combines the advantages of quantitative and qualitative approaches to gain a deep understanding of the interactions among antecedent variables. Therefore, fsQCA was used in this research to investigate the configurations of antecedent variables that trigger generative AI user disclosure intention. The results are listed in Table 6, where ● and • indicate the presence of a core and a peripheral condition, respectively, ⊗ indicates the absence of a peripheral condition, and a blank indicates that the condition is optional.
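Before configurations can be evaluated, fsQCA converts each construct score into a fuzzy-set membership. The text does not report its calibration anchors, so the sketch below assumes Ragin’s direct method with hypothetical anchors (for example, 6 for full membership, 4 for the crossover, and 2 for full non-membership on the seven-point scale). It then scores a case’s membership in a configuration with the fuzzy AND (minimum), entering conditions marked ⊗ as their negation, 1 - x. All function and condition names are illustrative.

```python
import math
from collections.abc import Sequence


def calibrate(value: float, full_out: float, crossover: float, full_in: float) -> float:
    """Direct calibration: map a raw score to fuzzy-set membership in [0, 1].

    The three anchors map to log-odds of -3, 0, and +3, i.e. memberships
    of about 0.05, 0.5, and 0.95.
    """
    if value >= crossover:
        log_odds = 3.0 * (value - crossover) / (full_in - crossover)
    else:
        log_odds = 3.0 * (value - crossover) / (crossover - full_out)
    return 1.0 / (1.0 + math.exp(-log_odds))


def configuration_membership(
    case: dict[str, float],
    present: Sequence[str],
    absent: Sequence[str] = (),
) -> float:
    """Membership of one case in a configuration (fuzzy AND = minimum).

    Conditions marked absent (the ⊗ symbol) enter as their negation, 1 - x.
    Optional (blank) conditions are simply not listed.
    """
    scores = [case[c] for c in present] + [1.0 - case[c] for c in absent]
    return min(scores)
```

For instance, a case’s membership in an S2-like configuration would combine the calibrated core and peripheral conditions listed in `present` with privacy concern listed in `absent`.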

Table 6.

Configurational analysis.

As listed in Table 6, two paths trigger user disclosure intention. Content quality, interactivity, anonymity, empathy, social presence, and reciprocity are core conditions common to both paths, making them the crucial factors leading to disclosure intention. Privacy statement is a peripheral condition common to both paths, suggesting that it plays a supporting role in every path leading to disclosure intention.

(1) S1: In addition to the conditions common to both paths, information association is a peripheral condition in this path, and privacy concern is optional. This suggests that users following this path are more concerned with service experience. When generative AI ensures high-quality content generation, privacy protection, and anthropomorphic interaction, users may perceive reciprocity and tend to disclose information regardless of their privacy concern. The coverage of S1 (0.526) is higher than that of S2 (0.504), suggesting that S1 has greater explanatory power.

(2) S2: Apart from the conditions common to both paths, privacy concern is absent as a peripheral condition, and information association is optional, showing that users following this path are more concerned with privacy risk. When generative AI ensures privacy protection and anthropomorphic interaction and users have low privacy concern, they may be willing to disclose information regardless of information association.
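The coverage figures compared above (0.526 for S1 and 0.504 for S2) come from the standard fsQCA set-theoretic measures, sketched below for illustration. The sketch assumes that each path’s membership scores and the outcome’s membership scores are already calibrated; the function names and the toy values in any usage are hypothetical. Consistency asks how far membership in a path is a subset of the outcome, raw coverage asks how much of the outcome the path accounts for, and solution coverage combines paths with the fuzzy OR (maximum).

```python
def _overlap(x, y) -> float:
    """Sum of case-wise minima, i.e. the size of the fuzzy intersection."""
    return sum(min(a, b) for a, b in zip(x, y))


def consistency(x, y) -> float:
    """Sufficiency consistency: sum(min(x_i, y_i)) / sum(x_i)."""
    return _overlap(x, y) / sum(x)


def raw_coverage(x, y) -> float:
    """Raw coverage: sum(min(x_i, y_i)) / sum(y_i)."""
    return _overlap(x, y) / sum(y)


def solution_coverage(paths, y) -> float:
    """Coverage of all paths together; membership in any path = fuzzy OR (maximum)."""
    union = [max(scores) for scores in zip(*paths)]
    return raw_coverage(union, y)
```

Under these definitions, the unique coverage of S1 would be `solution_coverage([s1, s2], y) - raw_coverage(s2, y)`, which separates what S1 explains on its own from what it shares with S2.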

6. Discussion

The PLS-SEM results (shown in Figure 2) indicate that all paths are significant except the path from perceived affordance of content generation to privacy concern. FsQCA identified two configurations. The results are discussed as follows.

For technological affordance, perceived affordance of content generation has no significant effect on privacy concern. This result is inconsistent with prior research, which reported effects of content quality [64] and interactivity [65] on AI user behavior. This may be due to two reasons. First, privacy concern may be mainly influenced by privacy protection affordance, such as anonymity and privacy statement, which may weaken the effect of content generation affordance on privacy concern. Second, the improvement of content generation relies on a huge dataset [3], which means that a large amount of data will be used for model training. With increased use, users may find that the platform retains their past conversation records, and they may even find sensitive information in the generated content. As a result, they may doubt the protection of the private information they input and feel ambivalent about the affordance of content generation, which may explain its insignificant effect on privacy concern. Although perceived affordance of content generation does not affect privacy concern, the fsQCA results show that content quality and interactivity are critical factors affecting disclosure intention, and information association exists as a peripheral condition in S1. Furthermore, among the three perceived affordances, perceived affordance of content generation has the largest effect on reciprocity. Therefore, generative AI platforms need to focus on the perceived affordance of content generation in order to continuously improve content quality and enhance users’ perceived utility.

The results indicate that perceived affordance of privacy protection has significant effects on both privacy concern and reciprocity. Jang [66] also noted that privacy-protective behavior affects AI-based service usage. Its negative effect on privacy concern is stronger than its positive effect on reciprocity. Perceived affordance of privacy protection includes anonymity and privacy statement, and anonymity contributes more to perceived affordance of privacy protection than privacy statement does. Consistently, fsQCA shows that anonymity is a core condition, while privacy statement is a peripheral condition. Users tend to ignore privacy statements because of their hidden location and length [67], whereas they can directly perceive anonymity in use, for example by noticing that nicknames keep their identity unrecognizable. Generative AI platforms should keep privacy statements concise and understandable in order to improve transparency. They can also develop functions such as personalized privacy settings to increase users' perceived control and mitigate their privacy concern.

For social affordance, perceived affordance of anthropomorphic interaction significantly affects privacy concern and reciprocity, and its positive effect on reciprocity is stronger than its negative effect on privacy concern. Perceived affordance of anthropomorphic interaction includes empathy and social presence, both of which are core conditions in fsQCA, suggesting that perceived affordance of anthropomorphic interaction is an important factor for user disclosure intention. Previous research identified significant effects of perceived anthropomorphism on AI user attitudes [68] and self-congruence [69], and our results are in line with these studies. According to the survey in this research, 53.7% of users use generative AI for daily chatting, showing that for many users generative AI is a companion as well as a utility tool. When users find that they can interact emotionally with generative AI, they may feel intimacy and warmth, which may mitigate their privacy concern and increase their confidence. Platforms should attend to users' emotional needs and improve anthropomorphism, for example by adding emojis to the generated content and strengthening the emotional analysis capability of AI, which may create an immersive experience for users.

Privacy concern and reciprocity have a negative and a positive effect on disclosure intention, respectively. This result is consistent with prior research. For example, Menard and Bott [70] found that privacy concerns about AI misuse increase perceived risk and personal privacy advocacy behavior. Li et al. [71] argued that privacy concern affects user trust in AI chatbots. Similarly, Kim et al. [72] noted that privacy loss concerns decrease user trust in generative AI. In addition, the positive effect of reciprocity on disclosure intention is stronger than the negative effect of privacy concern. Reciprocity is also a core condition in both fsQCA paths, which is consistent with the PLS-SEM result. This indicates that users are utilitarian and pay close attention to benefits such as seeking information, assisting creation, and relieving worries. When users perceive that the benefits of using a platform outweigh the risks of inputting personal information, they tend to disclose information in exchange for better services. Generative AI platforms should therefore attach importance to users' reciprocity needs in order to promote their disclosure intention.

7. Theoretical and Practical Implications

This research makes three theoretical contributions. First, existing studies have mainly focused on the adoption and usage of generative AI and have seldom examined user disclosure intention in this emerging application. This research found that perceived affordance affects disclosure intention through privacy concern and reciprocity, which enriches research on generative AI user behavior. Second, from a technological–social perspective, this research classifies perceived affordance of generative AI into three dimensions: perceived affordance of content generation, perceived affordance of privacy protection, and perceived affordance of anthropomorphic interaction. The results provide a comprehensive understanding of generative AI affordance. Third, the results show that the three perceived affordances of generative AI affect privacy concern and reciprocity, both of which further affect disclosure intention. Among them, perceived affordance of content generation is the main factor affecting reciprocity, whereas perceived affordance of privacy protection is the main factor affecting privacy concern. These results reveal the mechanism through which perceived affordances affect generative AI user disclosure intention.

The results have several implications for generative AI platforms. First, platforms need to improve their content generation capability. They should collect reliable training data, improve algorithms, and obtain user feedback in order to better meet users' information requirements. They also need to expand content generation modes (such as text, image, and audio) to diversify human–intelligence interaction. Second, platforms need to ensure privacy. In addition to improving the clarity and readability of privacy statements, platforms can develop other privacy protection functions, such as data anonymization and privacy preference settings. These privacy assurances may mitigate user privacy concern. Third, platforms need to attend to anthropomorphic interaction. They can create distinctive emotional expressions and response styles to narrow the distance between platforms and users and build a friendly interaction atmosphere. For policy makers, because generative AI can process vast amounts of data, which presents a significant challenge to information privacy, our results imply that they need to enforce laws and regulations tailored to the context of generative AI in order to protect user privacy. Developers of generative AI platforms should optimize their products to provide users with an engaging experience and increase their perceived affordance.

8. Conclusions

Drawing on perceived affordance theory, this research investigated the disclosure intention of generative AI users. By combining PLS-SEM and fsQCA, we found that perceived affordance of content generation (including information association, content quality, and interactivity), perceived affordance of privacy protection (including anonymity and privacy statement), and perceived affordance of anthropomorphic interaction (including empathy and social presence) affect privacy concern and reciprocity, both of which further determine disclosure intention. The fsQCA identified two paths that trigger user disclosure intention. The results highlight the important role of perceived affordance in facilitating information disclosure.

This research has several limitations, which may offer directions for future research. First, our sample is mainly composed of young, highly educated users. Although they are the main group of generative AI users, future research may test whether our results generalize to other groups such as elderly users. Second, this research was conducted in a single country. As different cultures may hold different views on privacy, future researchers may conduct cross-cultural studies to compare the effect of privacy concern on user disclosure. Third, generative AI is integrated with various industries such as education, health, and finance. Future research may investigate privacy concern and user disclosure in these industry-specific AI contexts. Fourth, we adopted a snowball sampling method to collect data. This may introduce bias, such as overrepresentation of close social networks or self-selection bias. Future research may use other methods, such as random sampling, to reduce this bias. Fifth, we conducted a cross-sectional study. Future research may collect longitudinal data to examine the dynamic development of user disclosure.

Author Contributions

Conceptualization, T.Z. and X.W.; methodology, T.Z. and X.W.; formal analysis, X.W.; investigation, X.W.; writing—original draft preparation, X.W.; writing—review and editing, T.Z.; funding acquisition, T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Social Science Foundation of China (24BGL310).

Institutional Review Board Statement

This study has been performed in accordance with the Declaration of Helsinki. Approval was granted by the Academic Committee of School of Management at Hangzhou Dianzi University (No. SM20240101, 3 January 2024).

Informed Consent Statement

Informed consent was obtained from all participants. Data were recorded as numeric codes (Arabic numerals) and did not include participants' names.

Data Availability Statement

The data analyzed in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tencent. ChatGPT Was Sued for $3 Billion! 16 People Sued OpenAI for Using the Information Without Permission. Available online: https://new.qq.com/rain/a/20230708A03R7T00 (accessed on 1 July 2024).

- Cyberspace Administration of China. The interim measures for the administration of generative artificial intelligence services. iChina 2023, 349, 18–19.

- Baek, T.H.; Kim, M. Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telemat. Inform. 2023, 83, 102030.

- Jo, H. Understanding AI tool engagement: A study of ChatGPT usage and word-of-mouth among university students and office workers. Telemat. Inform. 2023, 85, 102067.

- Sun, S.; Zhang, J.; Zhu, Y.; Jiang, M.; Chen, S. Exploring users' willingness to disclose personal information in online healthcare communities: The role of satisfaction. Technol. Forecast. Soc. Change 2022, 178, 121596.

- Dogruel, L.; Joeckel, S.; Henke, J. Disclosing personal information in mhealth apps. Testing the role of privacy attitudes, app habits, and social norm cues. Soc. Sci. Comput. Rev. 2023, 41, 1791–1810.

- Chen, X.; Wu, M.; Cheng, C.; Mou, J. Weighing user's privacy calculus on personal information disclosure: The moderating effect of social media identification. Online Inf. Rev. 2025, 49, 353–372.

- Neves, J.; Turel, O.; Oliveira, T. SNS use reduction: A two-facet privacy concern perspective. Internet Res. 2023, 33, 974–993.

- Le, C.; Zhang, Z.; Liu, Y. Research on Privacy Disclosure Behavior of Mobile App Users from Perspectives of Online Social Support and Gender Differences. Int. J. Hum. Comput. Interact. 2025, 41, 861–875.

- Lu, C.-H. The moderating role of e-lifestyle on disclosure intention in mobile banking: A privacy calculus perspective. Electron. Commer. Res. Appl. 2024, 64, 101374.

- Markus, M.L.; Silver, M.S. A foundation for the study of IT effects: A new look at DeSanctis and Poole's concepts of structural features and spirit. J. Assoc. Inf. Syst. 2008, 9, 5.

- Weidener, L.; Fischer, M. Artificial Intelligence in Medicine: Cross-Sectional Study Among Medical Students on Application, Education, and Ethical Aspects. JMIR Med. Educ. 2024, 10, e51247.

- Chen, H.; Anyanwu, C.C. AI in education: Evaluating the impact of moodle AI-powered chatbots and metacognitive teaching approaches on academic performance of higher Institution Business Education students. In Education and Information Technologies; Springer: Berlin/Heidelberg, Germany, 2025.

- Li, X.; Sigov, A.; Ratkin, L.; Ivanov, L.A.; Li, L. Artificial intelligence applications in finance: A survey. J. Manag. Anal. 2023, 10, 676–692.

- Soni, V. Adopting Generative AI in Digital Marketing Campaigns: An Empirical Study of Drivers and Barriers. Sage Sci. Rev. Appl. Mach. Learn. 2023, 6, 1–15.

- Hazzan-Bishara, A.; Kol, O.; Levy, S. The factors affecting teachers' adoption of AI technologies: A unified model of external and internal determinants. In Education and Information Technologies; Springer: Berlin/Heidelberg, Germany, 2025.

- Zhou, T.; Lu, H. The effect of trust on user adoption of AI-generated content. Electron. Libr. 2025, 43, 61–76.

- Shahsavar, Y.; Choudhury, A. User Intentions to Use ChatGPT for Self-Diagnosis and Health-Related Purposes: Cross-sectional Survey Study. JMIR Hum. Factors 2023, 17, e47564.

- Liu, Y.; Du, Y. The Effect of Generative AI Ethics on Users' Continuous Usage Intentions: A PLS-SEM and fsQCA Approach. Int. J. Hum. Comput. Interact. 2025, 41, 1–12.

- Nan, D.; Sun, S.; Zhang, S.; Zhao, X.; Kim, J.H. Analyzing behavioral intentions toward Generative Artificial Intelligence: The case of ChatGPT. Univers. Access Inf. Soc. 2025, 24, 885–895.

- Norman, D.A. Affordance, conventions, and design. Interactions 1999, 6, 38–43.

- Li, J.; Liu, S.; Gong, X.; Yang, S.-B.; Liu, Y. Technology affordance, national polycontextuality, and customer loyalty in the cross-border e-commerce platform: A comparative study between China and South Korea. Telemat. Inform. 2024, 88, 102099.

- Wang, X.; Zhao, Y.C. Understanding older adults' intention to use patient-accessible electronic health records: Based on the affordance lens. Front. Public Health 2023, 10, 1075204.

- Wang, X.-W.; Wang, Z. The influence of gamification affordance on knowledge sharing behaviour: An empirical study based on social Q&A community. Behav. Inf. Technol. 2024, 44, 1475–1491.

- Nam, J.; Choi, H.; Jung, Y. What Drives Purchase Intention in Live-streaming Commerce in South Korea? Perspectives of IT Affordance and Streamer Characteristics. Asia Pac. J. Inf. Syst. 2024, 34, 810–836.

- Lee, K.-W.; Li, C.-Y. It is not merely a chat: Transforming chatbot affordances into dual identification and loyalty. J. Retail. Consum. Serv. 2023, 74, 103447.

- Khan, F.; Si, X.; Khan, K.U. Social media affordances and information sharing: An evidence from Chinese public organizations. Data Inf. Manag. 2019, 3, 135–154.

- Bock, G.-W.; Mahmood, M.; Sharma, S.; Kang, Y.J. The impact of information overload and contribution overload on continued usage of electronic knowledge repositories. J. Organ. Comput. Electron. Commer. 2010, 20, 257–278.

- Martínez-Navalón, J.-G.; Gelashvili, V.; Gómez-Ortega, A. Evaluation of user satisfaction and trust of review platforms: Analysis of the impact of privacy and E-WOM in the case of TripAdvisor. Front. Psychol. 2021, 12, 750527.

- Gao, L.; Waechter, K.A.; Bai, X. Understanding consumers' continuance intention towards mobile purchase: A theoretical framework and empirical study-A case of China. Comput. Hum. Behav. 2015, 53, 249–262.

- Yang, S. Role of transfer-based and performance-based cues on initial trust in mobile shopping services: A cross-environment perspective. Inf. Syst. e-Bus. Manag. 2016, 14, 47–70.

- Ou, C.X.; Pavlou, P.A.; Davison, R.M. Swift guanxi in online marketplaces: The role of computer-mediated communication technologies. MIS Q. 2014, 38, 209–230.

- Lu, Y.; Kim, Y.; Dou, X.Y.; Kumar, S. Promote physical activity among college students: Using media richness and interactivity in web design. Comput. Hum. Behav. 2014, 41, 40–50.

- Yuan, R.; Chen, Y.; Mandler, T. It takes two to tango: The role of interactivity in enhancing customer engagement on sharing economy platforms. J. Bus. Res. 2024, 178, 114658.

- Lea, M.; Spears, R. Computer-mediated communication, de-individuation and group decision-making. Int. J. Man-Mach. Stud. 1991, 34, 283–301.

- Rains, S.A. The implications of stigma and anonymity for self-disclosure in health blogs. Health Commun. 2014, 29, 23–31.

- Woo, J. The right not to be identified: Privacy and anonymity in the interactive media environment. New Media Soc. 2006, 8, 949–967.

- Wu, K.-W.; Huang, S.Y.; Yen, D.C.; Popova, I. The effect of online privacy policy on consumer privacy concern and trust. Comput. Hum. Behav. 2012, 28, 889–897.

- Stevic, A.; Schmuck, D.; Koemets, A.; Hirsch, M.; Karsay, K.; Thomas, M.F.; Matthes, J. Privacy concerns can stress you out: Investigating the reciprocal relationship between mobile social media privacy concerns and perceived stress. Communications 2022, 47, 327–349.

- Weisz, E.; Cikara, M. Strategic regulation of empathy. Trends Cogn. Sci. 2021, 25, 213–227.

- Levy, M.; Hadar, I. The importance of empathy for analyzing privacy requirements. In Proceedings of the 2018 IEEE 5th International Workshop on Evolving Security & Privacy Requirements Engineering (ESPRE), Banff, AB, Canada, 20 August 2018; pp. 9–13.

- Von Bieberstein, F.; Essl, A.; Friedrich, K. Empathy: A clue for prosociality and driver of indirect reciprocity. PLoS ONE 2021, 16, e0255071.

- Brendel, A.B.; Greve, M.; Riquel, J.; Böhme, M.; Greulich, R.S. "Is It COVID or a cold?" An investigation of the role of social presence, trust, and persuasiveness for users' intention to comply with COVID-19 chatbots. In Proceedings of the 30th European Conference on Information Systems, Timisoara, Romania, 19–24 June 2022; pp. 1–19.

- Diederich, S.; Lichtenberg, S.; Brendel, A.B.; Trang, S. Promoting sustainable mobility beliefs with persuasive and anthropomorphic design: Insights from an experiment with a conversational agent. In Proceedings of the International Conference on Information Systems, Munich, Germany, 15–18 December 2019.

- Konya-Baumbach, E.; Biller, M.; von Janda, S. Someone out there? A study on the social presence of anthropomorphized chatbots. Comput. Hum. Behav. 2023, 139, 107513.

- Son, J.-Y.; Kim, S.S. Internet users' information privacy-protective responses: A taxonomy and a nomological model. MIS Q. 2008, 32, 503–529.

- Molm, L.D. The structure of reciprocity. Soc. Psychol. Q. 2010, 73, 119–131.

- Blau, P.M. Exchange and Power in Social Life; Wiley: New York, NY, USA, 1964.

- Adam, M.; Benlian, A. From web forms to chatbots: The roles of consistency and reciprocity for user information disclosure. Inf. Syst. J. 2024, 34, 1175–1216.

- Ashfaq, M.; Yun, J.; Yu, S.; Loureiro, S.M.C. I, Chatbot: Modeling the determinants of users' satisfaction and continuance intention of AI-powered service agents. Telemat. Inform. 2020, 54, 101473.

- Fox, J.; McEwan, B. Distinguishing technologies for social interaction: The perceived social affordances of communication channels scale. Commun. Monogr. 2017, 84, 298–318.

- Pelau, C.; Dabija, D.-C.; Ene, I. What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Comput. Hum. Behav. 2021, 122, 106855.

- Li, M.; Mao, J. Hedonic or utilitarian? Exploring the impact of communication style alignment on user's perception of virtual health advisory services. Int. J. Inf. Manag. 2015, 35, 229–243.

- Chang, Y.; Wong, S.F.; Libaque-Saenz, C.F.; Lee, H. The role of privacy policy on consumers' perceived privacy. Gov. Inf. Q. 2018, 35, 445–459.

- Shiau, W.-L.; Luo, M.M. Factors affecting online group buying intention and satisfaction: A social exchange theory perspective. Comput. Hum. Behav. 2012, 28, 2431–2444.

- Malhotra, N.K.; Kim, S.S.; Agarwal, J. Internet users' information privacy concerns (IUIPC): The construct, the scale, and a causal model. Inf. Syst. Res. 2004, 15, 336–355.

- Dang, Y.; Guo, S.; Guo, X.; Wang, M.; Xie, K. Privacy concerns about health information disclosure in mobile health: Questionnaire study investigating the moderation effect of social support. JMIR mHealth uHealth 2021, 9, e19594.

- China Internet Network Information Center. The 55th Statistical Report on China's Internet Development; China Internet Network Information Center: Beijing, China, 2025.

- Gefen, D.; Straub, D.W.; Boudreau, M.C. Structural equation modeling and regression: Guidelines for research practice. Commun. Assoc. Inf. Syst. 2000, 4, 1–70.

- Rasoolimanesh, S.M.; Ringle, C.M.; Sarstedt, M.; Olya, H. The combined use of symmetric and asymmetric approaches: Partial least squares-structural equation modeling and fuzzy-set qualitative comparative analysis. Int. J. Contemp. Hosp. Manag. 2021, 33, 1571–1592.

- Pappas, I.O.; Woodside, A.G. Fuzzy-set Qualitative Comparative Analysis (fsQCA): Guidelines for research practice in Information Systems and marketing. Int. J. Inf. Manag. 2021, 58, 102310.

- Sarstedt, M.; Hair, J.F., Jr.; Cheah, J.-H.; Becker, J.-M.; Ringle, C.M. How to specify, estimate, and validate higher-order constructs in PLS-SEM. Australas. Mark. J. 2019, 27, 197–211.

- Hair, J.F., Jr.; Howard, M.C.; Nitzl, C. Assessing measurement model quality in PLS-SEM using confirmatory composite analysis. J. Bus. Res. 2020, 109, 101–110.

- Li, C.X.; Lin, Y.X.; Chen, R.Q.; Chen, J. How do users adopt AI-generated content (AIGC)? An exploration of content cues and interactive cues. Technol. Soc. 2025, 81, 102830.

- Ko, M.; Lee, L.; Kim, Y.Y. The underlying mechanism of user response to AI assistants: From interactivity to loyalty. Inf. Technol. People 2024, 37, 1–12.

- Jang, C. Coping with vulnerability: The effect of trust in AI and privacy-protective behaviour on the use of AI-based services. Behav. Inf. Technol. 2024, 43, 2388–2400.

- Butori, R.; Lancelot Miltgen, C. A construal level theory approach to privacy protection: The conjoint impact of benefits and risks of information disclosure. J. Bus. Res. 2023, 168, 114205.

- Li, X.G.; Sung, Y.J. Anthropomorphism brings us closer: The mediating role of psychological distance in User-AI assistant interactions. Comput. Hum. Behav. 2021, 118, 106680.

- Alabed, A.; Javornik, A.; Gregory-Smith, D. AI anthropomorphism and its effect on users' self-congruence and self-AI integration: A theoretical framework and research agenda. Technol. Forecast. Soc. Change 2022, 182, 121786.

- Menard, P.; Bott, G.J. Artificial intelligence misuse and concern for information privacy: New construct validation and future directions. Inf. Syst. J. 2025, 35, 322–367.

- Li, J.J.; Wu, L.R.; Qi, J.Y.; Zhang, Y.X.; Wu, Z.Y.; Hu, S.B. Determinants Affecting Consumer Trust in Communication With AI Chatbots: The Moderating Effect of Privacy Concerns. J. Organ. End User Comput. 2023, 35, 24.

- Kim, D.; Kim, S.; Kim, S.; Lee, B.H. Generative AI Characteristics, User Motivations, and Usage Intention. J. Comput. Inf. Syst. 2024, 64, 1–16.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).