The Influence of Public Expectations on Simulated Emotional Perceptions of AI-Driven Government Chatbots: A Moderated Study

Abstract

1. Introduction

2. Literature Review

2.1. Theories of Technological Governance and Emotional Contagion

2.2. The Current Research Status of Government Chatbots

2.3. Relevant Literature Review on Emotion Perception in Government Chatbots

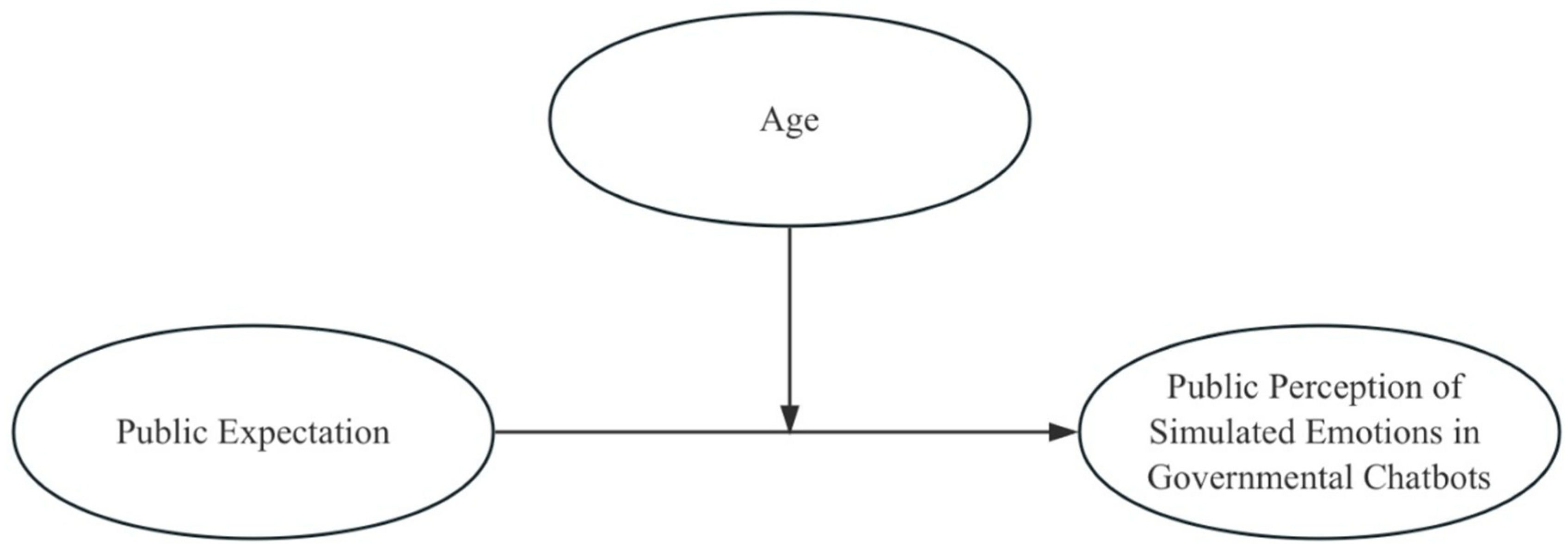

2.4. Research Framework and Hypotheses

3. Method

3.1. Data Source

3.2. Sample Information

3.3. Descriptive Statistics

3.4. Reliability and Validity Testing

4. Results and Discussion

5. Conclusions

5.1. Theoretical Insights

5.2. Practical Insights

5.3. Research Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Guo, Y.; Dong, P. Factors Influencing User Favorability of Government Chatbots on Digital Government Interaction Platforms across Different Scenarios. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 818–845.

2. Guo, Y.; Zhang, T.; Deng, Y. The past, present, and future of digital government: Insights from Chinese practices. Public Adm. Issues 2024, 5, 6–24. (In English)

3. Patil, V.; Hadawale, O.; Pawar, V.; Gijre, M. Emotion-linked AIoT-based cognitive home automation system with sensovisual method. In Proceedings of the IEEE Pune Section International Conference (PuneCon), Pune, India, 16–19 December 2021; pp. 1–7.

4. Hoffmann, C.; Linden, P.; Vidal, M. Creating and capturing artificial emotions in autonomous robots and software agents. J. Web Eng. 2021, 20, 993–1030.

5. Mowafey, S.; Gardner, S. A novel adaptive approach for home care ambient intelligent environments with an emotion-aware system. In Proceedings of the UKACC International Conference on Control, Cardiff, UK, 3–5 September 2012.

6. Hwang, T.; Lee, H.; Kim, D. The impact of chatbot emotional intelligence on user engagement and satisfaction. J. Serv. Res. 2023, 26, 23–37.

7. Guerrero-Vásquez, L.; Chasi-Pesantez, P.; Castro-Serrano, R.; Robles-Bykbaev, V.; Bravo-Torres, J.; López-Nores, M. AVATAR: Implementation of a human-computer interface based on an intelligent virtual agent. In Proceedings of the IEEE Colombian Conference on Communications and Computing (COLCOM), Barranquilla, Colombia, 5–7 June 2019; pp. 1–5.

8. Saxena, A.; Khanna, A.; Gupta, D. Emotion recognition and detection methods: A comprehensive survey. J. Artif. Intell. Syst. 2020, 2, 53–79.

9. Peng, B. Research on the impact of chatbot anthropomorphism on consumer information search. Ind. Sci. Technol. Innov. 2023, 42–44.

10. Bai, N. The influence of hotel service robot anthropomorphism and online review types on customer purchase intentions. Bus. Econ. Res. 2023, 12, 181–184.

11. Liu, Y. The Mechanism of How Anthropomorphized Appearance and Interaction of Virtual Chatbots Affect User Acceptance; Anhui Polytechnic University: Wuhu, China, 2024.

12. Zhu, M. The Impact of Cognitive Anthropomorphism in AI Service Robots on Consumer Subjective Well-Being; North China University of Water Resources and Electric Power: Zhengzhou, China, 2023.

13. He, S. Study on the Impact of Empathic Abilities of AI Service Robots on Consumer Well-Being; North China University of Water Resources and Electric Power: Zhengzhou, China, 2023.

14. Wang, X.; Su, C. Does anthropomorphism help alleviate customer dissatisfaction after service failure by robots? The mediating role of responsibility attribution. Financ. Trade Res. 2023, 34, 57–70.

15. Bannister, F.; Connolly, R. ICT, public values and transformative government: A framework and programme for research. Gov. Inf. Q. 2014, 31, 119–128.

16. Linders, D. From e-government to we-government: Defining a typology for citizen coproduction in the age of social media. Gov. Inf. Q. 2012, 29, 446–454.

17. Gil-Garcia, J.R.; Helbig, N. Exploring e-government benefits and success factors. Gov. Inf. Q. 2006, 23, 187–216.

18. Anthes, G. Chatbots in the enterprise. Commun. ACM 2017, 60, 13–15.

19. Hatfield, E.; Cacioppo, J.T.; Rapson, R.L. Emotional Contagion; Cambridge University Press: Cambridge, UK, 1994.

20. Deng, H.; Hu, P.; Li, Z. The Role of Emotional Mimicry in Emotional Contagion: Re-reading the Mechanism of Mimicry-feedback. Chin. J. Clin. Psychol. 2016, 24, 225–228.

21. Zhang, J.; Wang, X.; Lu, J.; Liu, L.; Feng, Y. The impact of emotional expression by artificial intelligence recommendation chatbots on perceived humanness and social interactivity. Decis. Support Syst. 2024, 187, 114347.

22. Bilquise, G.; Ibrahim, S.; Shaalan, K. Emotionally Intelligent Chatbots: A Systematic Literature Review. Hum. Behav. Emerg. Technol. 2022, 2022, 9601630.

23. Rodríguez-Martínez, A.; Amezcua-Aguilar, T.; Cortés-Moreno, J.; Jiménez-Delgado, J.J. Qualitative Analysis of Conversational Chatbots to Alleviate Loneliness in Older Adults as a Strategy for Emotional Health. Healthcare 2024, 12, 62.

24. Gnewuch, U.; Morana, S.; Maedche, A. Designing chatbot conversations for user engagement: Insights from emotional contagion theory. Inf. Syst. J. 2022, 32, 156–175.

25. Liu-Thompkins, Y.; Okazaki, S.; Li, H. Artificial empathy in marketing interactions: Bridging the human-AI gap in affective and social customer experience. J. Acad. Mark. Sci. 2022, 50, 1198–1218.

26. Beattie, G.; Ellis, A.W. The role of emotional contagion in AI-mediated communication: How do we trust emotionally responsive machines? Psychol. Mark. 2021, 38, 1461–1472.

27. Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 1997.

28. Zhao, X.; Liao, Y. The influence of empathy on AI-driven service interactions: A study of user satisfaction with emotionally responsive chatbots. J. Bus. Res. 2022, 137, 215–227.

29. Epley, N.; Waytz, A.; Cacioppo, J.T. On Seeing Human: A Three-factor Theory of Anthropomorphism. Psychol. Rev. 2007, 114, 864–886.

30. Troshani, I.; Hill, S.R.; Sherman, C. Do We Trust in AI: Role of Anthropomorphism and Intelligence. J. Comput. Inf. Syst. 2020, 61, 481–491.

31. Li, Y.; Wang, Q. The impact of robot appearance anthropomorphism on customer acceptance intentions—An empirical analysis based on different service scenarios. Jianghan Acad. 2024, 43, 16–28.

32. Yu, F.; Xu, L. Anthropomorphism in Artificial Intelligence. J. Northwest Norm. Univ. (Soc. Sci. Ed.) 2020, 57, 52–60.

33. Meng, Q.; Guo, Y.; Wu, J. Digital Social Governance: Conceptual Connotation, Key Areas and Innovative Direction. Soc. Gov. Rev. 2023, 22–31.

34. Senadheera, S.; Yigitcanlar, T.; Desouza, K.C.; Mossberger, K.; Corchado, J.; Mehmood, R.; Li, R.Y.M.; Cheong, P.H. Understanding chatbot adoption in local governments: A review and framework. J. Urban Technol. 2024, 1–35.

35. Følstad, A.; Larsen, A.G.; Bjerkreim-Hanssen, N. The human likeness of government chatbots—An empirical study from Norwegian municipalities. In Electronic Government: 22nd IFIP WG 8.5 International Conference, EGOV 2023, Proceedings (Lecture Notes in Computer Science, 14130); Lindgren, I., Csaki, C., Kalampokis, E., Janssen, M., Viale Pereira, G., Virkar, S., Tambouris, E., Zuiderwijk, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2023.

36. Jais, R.; Ngah, A.H. The moderating role of government support in chatbot adoption intentions among Malaysian government agencies. Transform. Gov. People Process Policy 2024, 18, 417–433.

37. Ju, J.; Meng, Q.; Sun, F.; Liu, L.; Singh, S. Citizen preferences and government chatbot social characteristics: Evidence from a discrete choice experiment. Gov. Inf. Q. 2023, 40, 101785.

38. Tsai, M.-H.; Yang, C.-H.; Chen, J.Y.; Kang, S.-C. Four-stage framework for implementing a chatbot system in disaster emergency operation data management: A flood disaster management case study. KSCE J. Civ. Eng. 2021, 25, 503–515.

39. Keyner, S.; Savenkov, V.; Vakulenko, S. Open data chatbot. In Semantic Web: ESWC 2019 Satellite Events; Hitzler, P., Kirrane, S., Hartig, O., de Boer, V., Vidal, M.E., Maleshkova, M., Schlobach, S., Hammar, K., Lasierra, N., Stadtmüller, S., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11762, pp. 111–115.

40. Aoki, N. An experimental study of public trust in AI chatbots in the public sector. Gov. Inf. Q. 2020, 37, 101490.

41. Abbas, N.; Følstad, A.; Bjørkli, C.A. Chatbots as part of digital government service provision—A user perspective. In Chatbot Research and Design: 6th International Workshop, CONVERSATIONS 2022, Revised Selected Papers (Lecture Notes in Computer Science, 13815); Følstad, A., Araujo, T., Papadopoulos, S., Law, E.L.-C., Luger, E., Goodwin, M., Brandtzaeg, P.B., Eds.; Springer: Berlin/Heidelberg, Germany, 2023.

42. Hasan, I.; Rizvi, S.; Jain, S.; Huria, S. The AI enabled chatbot framework for intelligent citizen-government interaction for delivery of services. In Proceedings of the 2021 8th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 17–19 March 2021.

43. Georgios, P.; Rafail, P.; Efthimios, T. Integration of chatbots with knowledge graphs in eGovernment: The case of getting a passport. In 25th Pan-Hellenic Conference on Informatics with International Participation (PCI2021); Vassilakopoulos, M.G., Karanikolas, N.N., Eds.; ACM: New York, NY, USA, 2021; pp. 425–429.

44. Wang, Y.; Zhang, N.; Zhao, X. Understanding the determinants in the different government AI adoption stages: Evidence of local government chatbots in China. Soc. Sci. Comput. Rev. 2022, 40, 534–554.

45. Oliveira da Silva Batista, G.; de Souza Monteiro, M.; Cardoso de Castro Salgado, L. How do ChatBots look like?: A comparative study on government chatbots profiles inside and outside Brazil. In IHC ’22: Proceedings of the 21st Brazilian Symposium on Human Factors in Computing Systems, Diamantina, Brazil, 17–21 October 2022; ACM: New York, NY, USA, 2022.

46. Phan, Q.N.; Tseng, C.-C.; Le, T.T.H.; Nguyen, T.B.N. The application of chatbot on Vietnamese migrant workers’ right protection in the implementation of new generation free trade agreements (FTAs). AI Soc. 2023, 38, 1771–1783.

47. Khadija, M.A.; Nurharjadmo, W. Deep learning generative Indonesian response model chatbot for JKN-KIS. In Proceedings of the 2022 1st International Conference on Smart Technology, Applied Informatics, and Engineering (APICS), Surakarta, Indonesia, 23–24 August 2022.

48. de Andrade, G.G.; Sousa Silva, G.R.; Molina Duarte Junior, F.C.; Santos, G.A.; Lopes de Mendonca, F.L.; de Sousa Junior, R.T. EvaTalk: A chatbot system for the Brazilian government virtual school. In Proceedings of the 22nd International Conference on Enterprise Information Systems (ICEIS), Prague, Czech Republic, 5–7 May 2020; Filipe, J., Smialek, M., Brodsky, A., Hammoudi, S., Eds.; SCITEPRESS: Setúbal, Portugal, 2020; Volume 1, pp. 556–562.

49. Boden, C.; Fischer, J.; Herbig, K.; Spierling, U. CitizenTalk: Application of chatbot infotainment to E-democracy. In Technologies for Interactive Digital Storytelling and Entertainment, Proceedings; Göbel, S., Malkewitz, R., Iurgel, I., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4326, pp. 370–381.

50. Guo, Y. Digital Government and Public Interaction: Platforms, Chatbots, and Public Satisfaction; IGI Global: Hershey, PA, USA, 2025.

51. Nass, C.; Moon, Y. Machines and mindlessness: Social responses to computers. J. Soc. Issues 2000, 56, 81–103.

52. Czaja, S.J.; Charness, N.; Fisk, A.D.; Hertzog, C.; Nair, S.N.; Rogers, W.A.; Sharit, J. Factors predicting the use of technology: Findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psychol. Aging 2006, 21, 333–352.

53. Carstensen, L.L.; Fung, H.H.; Charles, S.T. Socioemotional selectivity theory and the regulation of emotion in the second half of life. Motiv. Emot. 2003, 27, 103–123.

54. Harrison, P.; Smith, A.; Jones, R. Public Expectations and Emotional Engagement in Government Services. J. Public Adm. 2018, 25, 345–362.

55. Smith, J.; Jones, L. The Role of Age in Perceptions of Government Services. Int. J. Public Policy 2020, 14, 220–237.

| Authors | Year | Purpose | Methods | Key Findings |
|---|---|---|---|---|
| Guo & Dong [1] | 2024 | The study investigates the factors influencing user favorability towards government chatbots on digital government interaction platforms across various scenarios. | Questionnaire experiment; comparative research method. | In examining user favorability in government services and policy consultations, four distinct mediating pathways emerge. First, the Social Support → Behavioral Quality → User Favorability pathway indicates that social support enhances user favorability through improved behavioral quality, with slightly greater effects in policy consultations than in government services. Second, the Emotional Perception → Behavioral Quality → User Favorability pathway shows that positive emotional perceptions lead to higher favorability, with stronger effects in government services. Third, for the Perceived System → Behavioral Quality → User Favorability pathway, the influence of the perceived system diverges: in government services it appears to affect favorability directly, whereas in policy consultations it acts on user behavior and thus on favorability. Finally, the Public Expectation → Behavioral Quality → User Favorability pathway shows that behavioral quality fully mediates the effect of public expectation on favorability in government services, whereas the mediation is only partial in policy consultations, suggesting that additional factors influence favorability. |
| Ju et al. [37] | 2023 | This study investigates citizen preferences regarding the social characteristics of government chatbots through a discrete choice experiment conducted among Chinese users. | Discrete choice experiment. | The findings reveal how individual characteristics, including age, gender, and prior chatbot experience, modulate these preferences. |

| Variable | Category | Frequency | Percentage (%) | Cumulative Percentage (%) |
|---|---|---|---|---|
| Gender | Male | 84 | 43.30 | 43.30 |
| | Female | 110 | 56.70 | 100.00 |
| Age | Under 20 years old | 2 | 1.03 | 1.03 |
| | 21–30 years old | 101 | 52.06 | 53.09 |
| | 31–40 years old | 79 | 40.72 | 93.81 |
| | 41–50 years old | 8 | 4.12 | 97.94 |
| | 51–60 years old | 4 | 2.06 | 100.00 |
| Occupation | Student | 75 | 38.66 | 38.66 |
| | Teachers and researchers, including educational professionals | 43 | 22.16 | 60.82 |
| | Policy makers | 2 | 1.03 | 61.86 |
| | Computer industry professionals | 18 | 9.28 | 71.13 |
| | Civil servants | 15 | 7.73 | 78.87 |
| | Medical personnel | 8 | 4.12 | 82.99 |
| | Unemployed | 6 | 3.09 | 86.08 |
| | Other | 27 | 13.92 | 100.00 |
| Education | Primary school and below | 1 | 0.52 | 0.52 |
| | General high school/secondary vocational school/technical school/vocational high school | 2 | 1.03 | 1.55 |
| | Junior college | 4 | 2.06 | 3.61 |
| | Bachelor's | 60 | 30.93 | 34.54 |
| | Master's | 83 | 42.78 | 77.32 |
| | Ph.D. | 44 | 22.68 | 100.00 |
| Total | | 194 | 100.0 | 100.0 |
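Every percentage in the sample table follows from the category counts and the total of 194 respondents. As a minimal sketch, assuming only the counts reported in the table, the Python snippet below recomputes the percentage and cumulative-percentage columns for gender, age and occupation; the names `SAMPLE_COUNTS` and `frequency_table` are illustrative and not part of the study's materials.

```python
# Illustrative recomputation of the sample-information table (n = 194).
# SAMPLE_COUNTS and frequency_table are hypothetical names used only here.
SAMPLE_COUNTS = {
    "Gender": [("Male", 84), ("Female", 110)],
    "Age": [
        ("Under 20 years old", 2),
        ("21–30 years old", 101),
        ("31–40 years old", 79),
        ("41–50 years old", 8),
        ("51–60 years old", 4),
    ],
    "Occupation": [
        ("Student", 75),
        ("Teachers and researchers", 43),
        ("Policy makers", 2),
        ("Computer industry professionals", 18),
        ("Civil servants", 15),
        ("Medical personnel", 8),
        ("Unemployed", 6),
        ("Other", 27),
    ],
}


def frequency_table(rows):
    """Return (label, count, percent, cumulative percent) for each category.

    Cumulative percentages are computed from running counts rather than by
    adding already-rounded percentages, so rounding errors do not accumulate.
    """
    total = sum(count for _, count in rows)
    table = []
    running = 0
    for label, count in rows:
        running += count
        table.append((label, count,
                      round(100 * count / total, 2),
                      round(100 * running / total, 2)))
    return table


if __name__ == "__main__":
    for variable, rows in SAMPLE_COUNTS.items():
        for label, count, pct, cum in frequency_table(rows):
            print(f"{variable:<11} {label:<33} {count:>4} {pct:>7.2f} {cum:>7.2f}")
```

Computing the cumulative column from running counts explains why the 41–50 row reads 97.94 (190/194) rather than 93.81 + 4.12 = 97.93.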

| Item | Mean ± Standard Deviation | Variance | S.E. | Mean 95% CI (LL) | Mean 95% CI (UL) | IQR | Kurtosis | Skewness | Coefficient of Variation (CV) |
|---|---|---|---|---|---|---|---|---|---|
| Q1_1 | 4.046 ± 0.923 | 0.853 | 0.066 | 3.916 | 4.176 | 1.000 | 1.188 | −1.050 | 22.822% |
| Q1_2 | 4.021 ± 0.905 | 0.818 | 0.065 | 3.893 | 4.148 | 1.000 | 1.276 | −1.017 | 22.498% |
| Q1_3 | 4.031 ± 0.927 | 0.859 | 0.067 | 3.900 | 4.161 | 1.000 | 0.708 | −0.930 | 22.995% |
| Q3_1 | 3.747 ± 0.967 | 0.936 | 0.069 | 3.611 | 3.884 | 1.000 | 0.193 | −0.619 | 25.815% |
| Q3_2 | 3.402 ± 1.098 | 1.205 | 0.079 | 3.248 | 3.557 | 1.000 | −0.552 | −0.258 | 32.272% |
| Q3_3 | 3.356 ± 1.130 | 1.277 | 0.081 | 3.197 | 3.515 | 1.000 | −0.699 | −0.213 | 33.676% |
| Q3_4 | 3.474 ± 1.024 | 1.049 | 0.074 | 3.330 | 3.618 | 1.000 | −0.328 | −0.237 | 29.474% |
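Several columns of the descriptive-statistics table are functions of the mean, the standard deviation and the sample size alone. The sketch below derives the variance, standard error, 95% confidence interval and coefficient of variation, assuming n = 194 and a normal critical value of 1.96; that critical value is an assumption, although it reproduces the reported bounds for Q1_1 and Q3_3. The helper `describe` is hypothetical. IQR, kurtosis and skewness require the raw responses and are not reproduced, and the last digit of the variance and CV can differ slightly because the table's inputs are themselves rounded.

```python
import math

N = 194  # number of respondents in the sample table


def describe(mean, sd, n=N, crit=1.96):
    """Derive variance, standard error, a 95% CI for the mean, and CV (%).

    crit = 1.96 is a normal-approximation assumption; a t critical value
    could be substituted instead.
    """
    se = sd / math.sqrt(n)
    return {
        "variance": sd ** 2,
        "se": se,
        "ci": (mean - crit * se, mean + crit * se),
        "cv_percent": 100 * sd / mean,
    }


if __name__ == "__main__":
    # Means and SDs copied from the descriptive-statistics table.
    for item, (m, s) in {"Q1_1": (4.046, 0.923), "Q3_3": (3.356, 1.130)}.items():
        d = describe(m, s)
        print(item, round(d["variance"], 3), round(d["se"], 3),
              tuple(round(x, 3) for x in d["ci"]), round(d["cv_percent"], 2))
```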

| Construct | Item | CITC | Alpha If Item Deleted | Cronbach's α | Standardized Cronbach's α |
|---|---|---|---|---|---|
| The scenario of government service use | | | | | |
| Public expectations | Q1_1 | 0.532 | 0.728 | 0.754 | 0.754 |
| | Q1_2 | 0.551 | 0.71 | | |
| | Q1_3 | 0.675 | 0.56 | | |
| Simulated emotional information perception | Q2_1 | 0.551 | 0.759 | 0.786 | 0.795 |
| | Q2_2 | 0.666 | 0.698 | | |
| | Q2_3 | 0.548 | 0.76 | | |
| | Q2_4 | 0.657 | 0.708 | | |
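The reliability table reports corrected item-total correlations (CITC), Cronbach's α if an item is deleted, and raw and standardized α. These values cannot be recomputed from the table because item-level responses are not reported, so the sketch below applies the standard formulas to a small hypothetical data set; the scores and function names are illustrative only. Raw α is k/(k − 1) · (1 − Σ item variances / variance of the total score), while standardized α uses the mean inter-item correlation r̄, giving k·r̄ / (1 + (k − 1)·r̄). The two coincide when all items have equal variances.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Raw Cronbach's alpha; items is a list of k item columns of equal length."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total
    item_var_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def item_diagnostics(items):
    """Per item: (CITC, alpha if item deleted).

    CITC correlates an item with the total of the *remaining* items, so the
    item is not correlated with itself.
    """
    results = []
    for i, col in enumerate(items):
        rest = [c for j, c in enumerate(items) if j != i]
        rest_total = [sum(scores) for scores in zip(*rest)]
        alpha_deleted = cronbach_alpha(rest) if len(rest) > 1 else float("nan")
        results.append((pearson(col, rest_total), alpha_deleted))
    return results


def standardized_alpha(items):
    """Standardized alpha computed from the mean inter-item correlation."""
    k = len(items)
    r_bar = mean(pearson(items[i], items[j])
                 for i in range(k) for j in range(i + 1, k))
    return k * r_bar / (1 + (k - 1) * r_bar)


# HYPOTHETICAL scores: five respondents, three items on a five-point scale.
ITEMS = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 4, 2, 4, 3],
]

if __name__ == "__main__":
    print(f"alpha = {cronbach_alpha(ITEMS):.3f}, "
          f"standardized = {standardized_alpha(ITEMS):.3f}")
    for n, (citc, a_del) in enumerate(item_diagnostics(ITEMS), start=1):
        print(f"item {n}: CITC = {citc:.3f}, alpha if deleted = {a_del:.3f}")
```

In these made-up scores every item has the same variance (1.3), so raw and standardized α coincide at about 0.864.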

| Variable | Model 1 | | | | | Model 2 | | | | | Model 3 | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | B | S.E. | t | p | β | B | S.E. | t | p | β | B | S.E. | t | p | β |
| Constant | 3.901 | 0.418 | 9.335 | < 0.001 ** | - | 3.994 | 0.406 | 9.828 | < 0.001 ** | - | 4.105 | 0.391 | 10.502 | < 0.001 ** | - |
| Gender | 0.211 | 0.108 | 1.945 | 0.053 | 0.111 | 0.218 | 0.105 | 2.071 | 0.040 * | 0.115 | 0.202 | 0.101 | 1.998 | 0.047 * | 0.106 |
| Occupation | 0.044 | 0.022 | 2.040 | 0.043 * | 0.120 | 0.026 | 0.022 | 1.212 | 0.227 | 0.071 | 0.029 | 0.021 | 1.380 | 0.169 | 0.078 |
| Education background | −0.151 | 0.061 | −2.456 | 0.015 * | −0.144 | −0.159 | 0.060 | −2.664 | 0.008 ** | −0.152 | −0.176 | 0.057 | −3.067 | 0.002 ** | −0.168 |
| Public expectation | 0.612 | 0.065 | 9.411 | < 0.001 ** | 0.541 | 0.610 | 0.063 | 9.666 | < 0.001 ** | 0.539 | 0.660 | 0.062 | 10.689 | < 0.001 ** | 0.583 |
| Age | | | | | | 0.271 | 0.076 | 3.557 | < 0.001 ** | 0.198 | 0.236 | 0.073 | 3.219 | 0.002 ** | 0.173 |
| Public expectation × Age | | | | | | | | | | | 0.393 | 0.095 | 4.142 | < 0.001 ** | 0.222 |
| R² | 0.410 | | | | | 0.448 | | | | | 0.494 | | | | |
| Adjusted R² | 0.398 | | | | | 0.433 | | | | | 0.478 | | | | |
| F | F(4, 189) = 32.884, p < 0.001 | | | | | F(5, 188) = 30.461, p < 0.001 | | | | | F(6, 187) = 30.426, p < 0.001 | | | | |
| ΔR² | 0.410 | | | | | 0.037 | | | | | 0.046 | | | | |
| ΔF | F(4, 189) = 32.884, p < 0.001 | | | | | F(1, 188) = 12.655, p < 0.001 | | | | | F(1, 187) = 17.159, p < 0.001 | | | | |

Note: * p < 0.05, ** p < 0.01.
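The fit statistics of the hierarchical regression follow standard formulas with n = 194 and k = 4, 5 and 6 predictors in Models 1 to 3, matching the reported degrees of freedom. The sketch below recomputes adjusted R², the overall F and the F-change from the rounded R² values in the regression table; the function names are illustrative. Because the R² inputs are rounded to three decimals, results differ slightly from the table, most visibly for Model 2, where the reported ΔR² is 0.037 rather than 0.448 − 0.410 = 0.038. When a single term is added, the F-change equals the squared t statistic of that term, which gives a sharper check: 3.557² ≈ 12.65 for Age and 4.142² ≈ 17.16 for the interaction.

```python
N = 194  # sample size


def adjusted_r2(r2, k, n=N):
    """Adjusted R-squared for a model with k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, k, n=N):
    """Overall F statistic of a model and its degrees of freedom."""
    df1, df2 = k, n - k - 1
    return (r2 / df1) / ((1 - r2) / df2), (df1, df2)


def f_change(r2_reduced, r2_full, k_reduced, k_full, n=N):
    """F-change for adding (k_full - k_reduced) predictors to a nested model."""
    df1, df2 = k_full - k_reduced, n - k_full - 1
    return ((r2_full - r2_reduced) / df1) / ((1 - r2_full) / df2), (df1, df2)


# (R-squared, number of predictors) for Models 1-3, copied from the table.
MODELS = [(0.410, 4), (0.448, 5), (0.494, 6)]

if __name__ == "__main__":
    for i, (r2, k) in enumerate(MODELS, start=1):
        f, dfs = overall_f(r2, k)
        print(f"Model {i}: adjusted R2 = {adjusted_r2(r2, k):.3f}, F{dfs} = {f:.2f}")
    for (r2_a, k_a), (r2_b, k_b) in zip(MODELS, MODELS[1:]):
        f, dfs = f_change(r2_a, r2_b, k_a, k_b)
        print(f"F-change{dfs} = {f:.2f}")
    # For one added term, F-change equals t squared (t values from the table).
    print(f"{3.557 ** 2:.3f} {4.142 ** 2:.3f}")
```

With the rounded inputs, the Model 2 F-change comes out near 12.94 instead of the reported 12.655, while the t² check lands within rounding of both reported values.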

| Level of Moderating Variable (Age) | Regression Coefficient | Standard Error | t-Value | p-Value | 95% CI (LL) | 95% CI (UL) |
|---|---|---|---|---|---|---|
| Mean Level | 0.660 | 0.062 | 10.689 | < 0.001 | 0.539 | 0.781 |
| High Level (+1 SD) | 0.932 | 0.098 | 9.460 | < 0.001 | 0.739 | 1.125 |
| Low Level (−1 SD) | 0.388 | 0.081 | 4.806 | < 0.001 | 0.230 | 0.547 |
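In a moderated regression with a mean-centered moderator W, the slope of the focal predictor X at W = w is b_X + b_XW · w, with standard error √(Var(b_X) + w²·Var(b_XW) + 2w·Cov(b_X, b_XW)). At the mean (w = 0) this reduces to the Model 3 coefficient and standard error for public expectation (0.660 and 0.062), as in the mean-level row of the simple-slopes table. The sketch below makes one loudly labeled assumption: that age was coded 1 to 5 in the order of the age categories in the sample table. Under that coding the sample SD of age is about 0.691, and 0.660 ± 0.393 × 0.691 reproduces the reported slopes of 0.932 and 0.388. The coefficient covariance is not reported, so the standard-error helper is evaluated only at w = 0. All function and constant names are hypothetical.

```python
import math
from statistics import stdev


def simple_slope(b_x, b_xw, w):
    """Slope of focal predictor X at moderator value w (W mean-centered)."""
    return b_x + b_xw * w


def simple_slope_se(var_bx, var_bxw, cov_bx_bxw, w):
    """Standard error of the simple slope; requires the coefficient covariance."""
    return math.sqrt(var_bx + w ** 2 * var_bxw + 2 * w * cov_bx_bxw)


def confidence_interval(b, se, crit=1.96):
    """Symmetric interval b +/- crit * se (normal approximation by default)."""
    return b - crit * se, b + crit * se


# ASSUMPTION: age categories coded 1-5 in table order; counts from the sample table.
AGE_CODES = [1] * 2 + [2] * 101 + [3] * 79 + [4] * 8 + [5] * 4
B_PE, B_PE_X_AGE = 0.660, 0.393  # Model 3: public expectation and interaction

if __name__ == "__main__":
    sd_age = stdev(AGE_CODES)
    for label, w in [("Low (-1 SD)", -sd_age), ("Mean", 0.0), ("High (+1 SD)", sd_age)]:
        print(f"{label}: slope = {simple_slope(B_PE, B_PE_X_AGE, w):.3f}")
    # At w = 0 the covariance term drops out, so 0.0 is a placeholder here.
    se_mean = simple_slope_se(0.062 ** 2, 0.095 ** 2, 0.0, 0.0)
    print(round(se_mean, 3), confidence_interval(B_PE, se_mean))
```

The reported interval bounds may have been computed with a t critical value (df = 187, roughly 1.97) rather than 1.96, so the third decimal can differ from this approximation.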

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, Y.; Dong, P.; Lu, B. The Influence of Public Expectations on Simulated Emotional Perceptions of AI-Driven Government Chatbots: A Moderated Study. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 50. https://doi.org/10.3390/jtaer20010050