Analysis of Service Quality in Smart Running Applications Using Big Data Text Mining Techniques

Abstract

1. Introduction

2. Theoretical Background

2.1. Mobile Analytics in Sports and Health Applications

2.2. Sports Application User Experience and Text Mining Research

2.3. Sports Application Service Quality

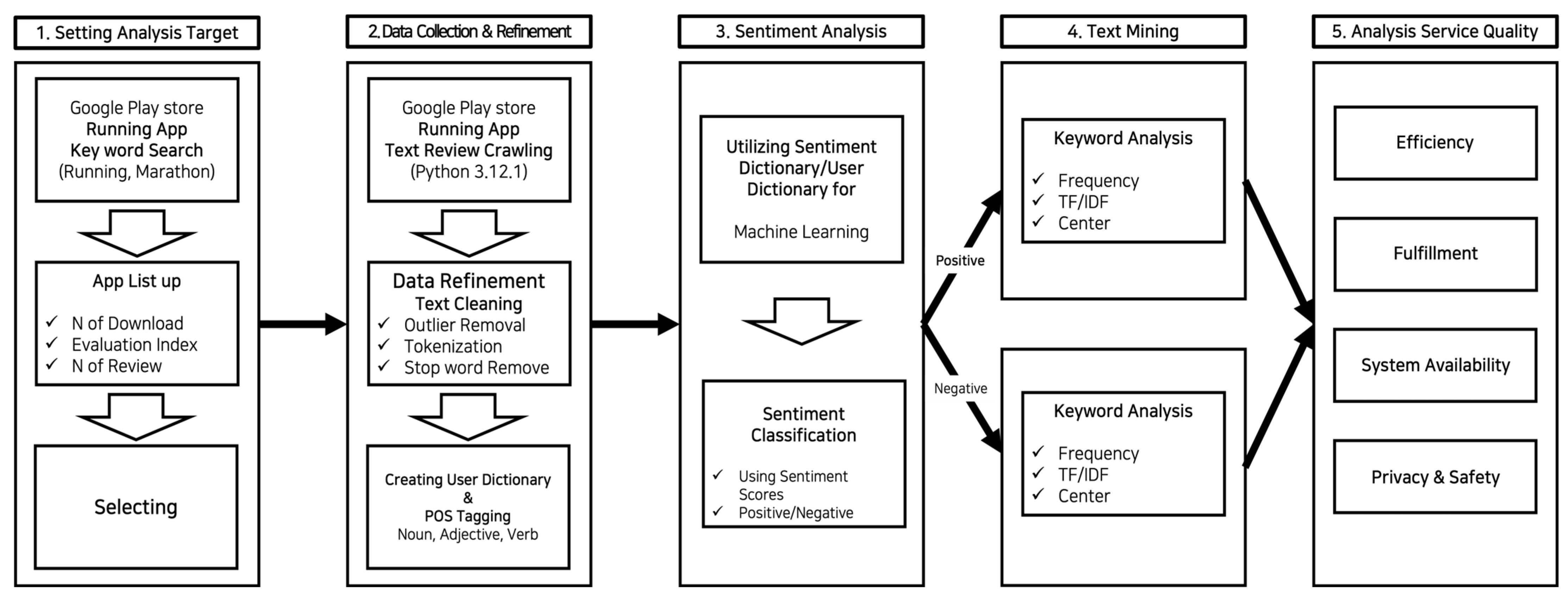

3. Research Method

3.1. Setting Analysis Target

3.2. Data Collection and Refinement

3.3. Sentiment Analysis

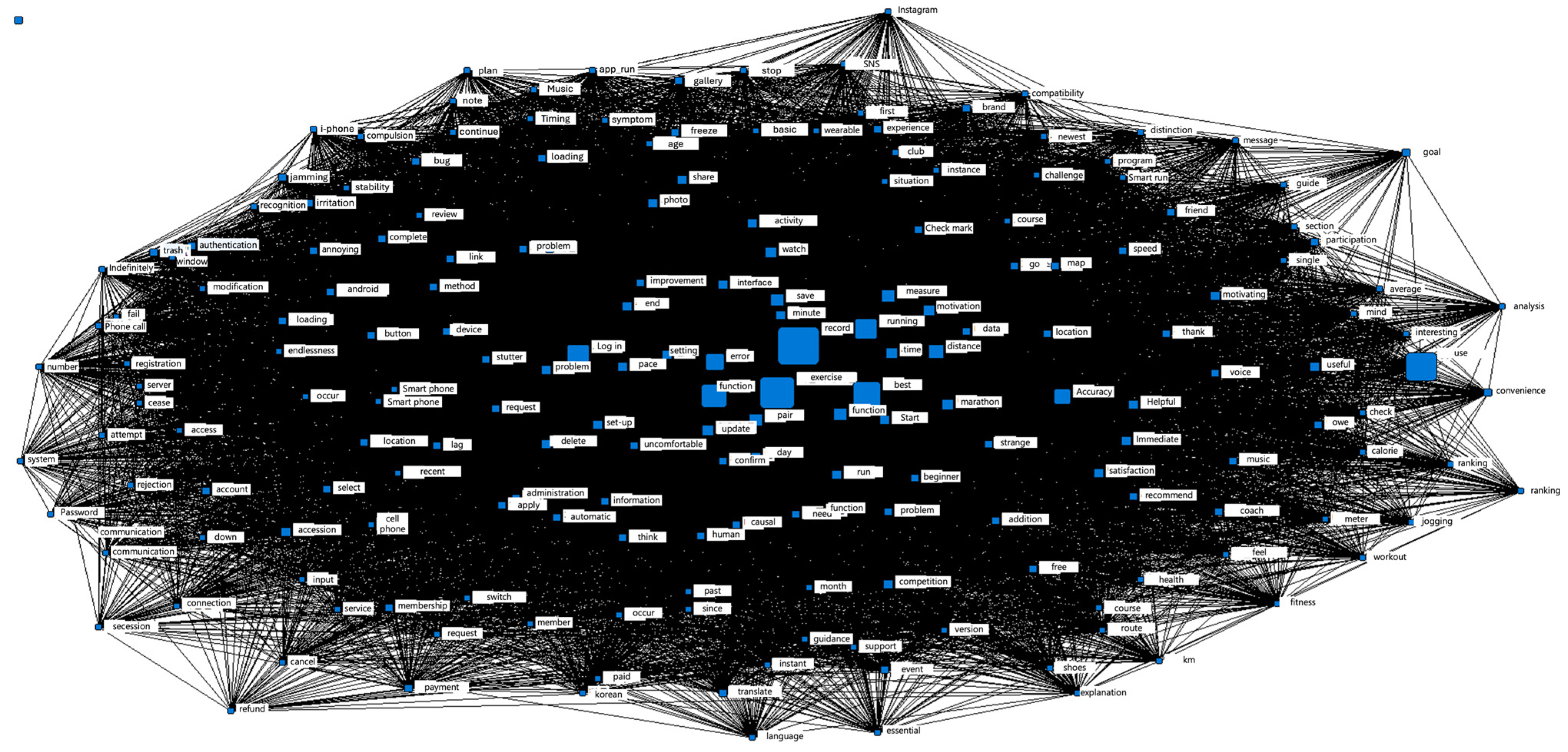

3.4. Text Mining—Service Quality Dimension

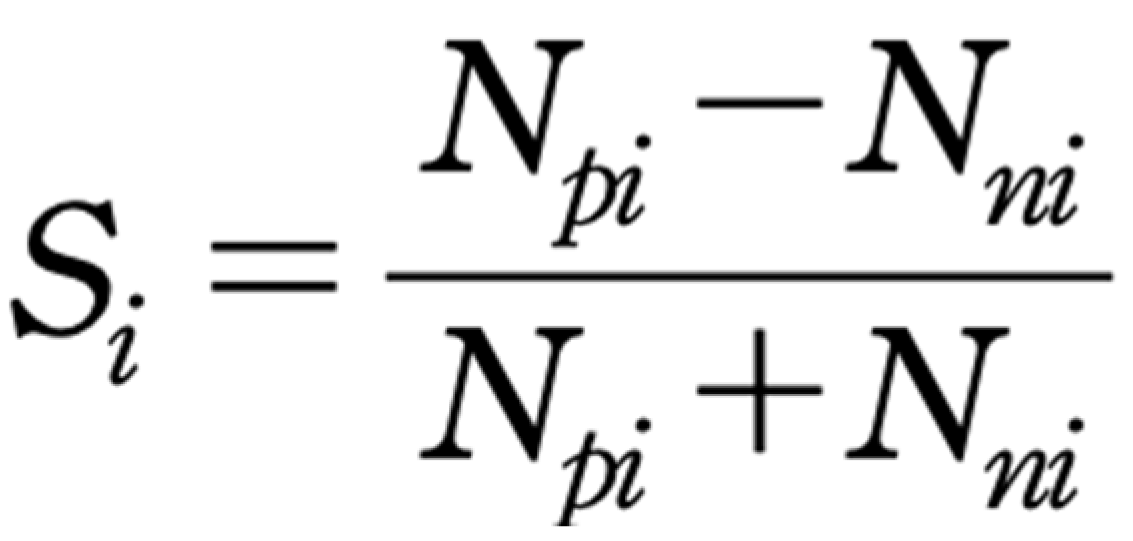

3.5. Service Quality Dimension Score

4. Results

4.1. Term Frequency and Network Analysis

4.2. Sentiment Analysis Result

4.3. Service Quality Review Text Mining Analysis

4.4. Service Quality Score Measurement

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Name | Number of Users (in 10,000s) | Rating | Number of Reviews (in 1000s) |
|---|---|---|---|---|
| 1 | Running–Jogging Tracker | 500 | 4.7 | 6408 |
| 2 | Start Running Running for Beginners | 100 | 4.9 | 1009 |
| 3 | RunDay | 100 | 4.7 | 1487 |
| 4 | Tranggle | 100 | 4.4 | 948 |
| 5 | ASICS Runkeeper | 1000 | 4.5 | 8475 |
| 6 | FITAPP | 100 | 4.3 | 1548 |
| 7 | GPS Running Cycling and Fitness | 500 | 4.8 | 6068 |
| 8 | Just Run: Zero to 5K | 10 | 5 | 125 |
| 9 | Leap Map Runner | 1000 | 4.8 | 12,475 |
| 10 | Nike Run Club | 1000 | 3.9 | 21,079 |
| 11 | Pacer Pedometer | 1000 | 4.8 | 5871 |
| 12 | PUMATRAC Run, Train, Fitness | 100 | 4.6 | 1040 |
| 13 | Strava | 5000 | 4.3 | 60,377 |
| 14 | Under Armour MapMyRun | 1000 | 4.8 | 7475 |
| 15 | Wahoo | 100 | 4.8 | 1456 |
| 16 | Polar Flow | 500 | 3.9 | 6238 |
| 17 | Garmin Connect | 1000 | 4.7 | 21,475 |

| Rank | Word | Frequency | Rank | Word | Frequency |
|---|---|---|---|---|---|
| 1 | Record | 11,491 | 51 | Display | 2006 |
| 2 | Exercise | 9431 | 52 | Gallery | 1824 |
| 3 | Use | 7992 | 53 | Thanks | 2260 |
| 4 | Running | 7218 | 54 | Data | 1881 |
| 5 | Login | 6154 | 55 | Resolve | 1941 |
| 6 | Error | 5961 | 56 | Pace | 1947 |
| 7 | Best | 5252 | 57 | Information | 2272 |
| 8 | Distance | 4823 | 58 | Participate | 2121 |
| 9 | Function | 3897 | 59 | Competition | 2246 |
| 10 | Save | 3823 | 60 | Automatic | 1779 |
| 11 | Sync | 3603 | 61 | Button | 2218 |
| 12 | Measure | 3416 | 62 | Speed | 1774 |
| 13 | Motivation | 3246 | 63 | Recommend | 2244 |
| 14 | Running | 3107 | 64 | Certification | 2259 |
| 15 | Time | 3418 | 65 | Friend | 1994 |
| 16 | Update | 3212 | 66 | Android | 1848 |
| 17 | Watch | 3420 | 67 | Middle | 2090 |
| 18 | Accuracy | 2693 | 68 | Phone | 1920 |
| 19 | Sign up | 2953 | 69 | Location | 1800 |
| 20 | End | 2572 | 70 | Map | 1786 |
| 21 | Photo | 2444 | 71 | One | 2178 |
| 22 | Delete | 2890 | 72 | Loading | 1843 |
| 23 | Screen | 2660 | 73 | Person | 1997 |
| 24 | Install | 2608 | 74 | Bug | 1684 |
| 25 | Marathon | 2796 | 75 | Run | 1770 |
| 26 | Running | 2660 | 76 | Because | 1745 |
| 27 | Settings | 2343 | 77 | Convenient | 1924 |
| 28 | Start | 2703 | 78 | Thanks | 1908 |
| 29 | Useful | 2750 | 79 | First | 1978 |
| 30 | Help | 2568 | 80 | Add | 2010 |
| 31 | Share | 2516 | 81 | Payment | 1748 |
| 32 | Satisfaction | 2197 | 82 | Frozen | 2025 |
| 33 | Grant | 2419 | 83 | Free | 2040 |
| 34 | Edit | 2398 | 84 | Thought | 1861 |
| 35 | Problem | 2573 | 85 | Selection | 2005 |
| 36 | Activity | 2139 | 86 | Music | 1816 |
| 37 | Logout | 2493 | 87 | Voice | 1707 |
| 38 | Request | 2372 | 88 | Occur | 1631 |
| 39 | Confirm | 2252 | 89 | Coach | 1645 |
| 40 | Improvement | 2486 | 90 | Member | 1864 |
| 41 | Need | 2083 | 91 | Account | 1820 |
| 42 | Infinite Loading | 2191 | 92 | Stop | 1699 |
| 43 | Use | 2179 | 93 | After | 1698 |
| 44 | Inconvenience | 2229 | 94 | Method | 1623 |
| 45 | Manage | 2296 | 95 | Complete | 1826 |
| 46 | Strange | 1985 | 96 | Annoying | 1828 |
| 47 | Lag | 2116 | 97 | Trash | 1661 |
| 48 | Possible | 2241 | 98 | Complete | 2013 |
| 49 | Connect | 2068 | 99 | Challenge | 1941 |
| 50 | Display | 2063 | 100 | Error | 1864 |
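
A ranked term list like the one above can be produced by counting tokens across all reviews. The following is a minimal sketch only: the function name `top_terms`, the regex tokenizer, the stopword set, and the sample reviews are all assumptions made for illustration, and the study's actual preprocessing pipeline is not described in this section.

```python
import re
from collections import Counter

# Hypothetical stopword set; a real pipeline would use a fuller, language-specific list.
STOPWORDS = frozenset({"the", "a", "is", "my", "and", "to", "it", "no"})

def top_terms(reviews, n=10, stopwords=STOPWORDS):
    """Return the n most frequent non-stopword tokens as (term, count) pairs."""
    counts = Counter()
    for review in reviews:
        # Naive tokenizer (assumption): lowercase runs of ASCII letters.
        tokens = re.findall(r"[a-z]+", review.lower())
        counts.update(t for t in tokens if t not in stopwords)
    return counts.most_common(n)

# Invented sample reviews, not taken from the study's data.
sample_reviews = [
    "Login error after the update",
    "Record is saved, no error",
    "Login error again",
]

print(top_terms(sample_reviews, n=2))
```

For reviews written in a language without whitespace-delimited words, the regex step would need to be replaced by a suitable morphological analyzer.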

| Dimension | Keyword | Example Comment |
|---|---|---|
| App System Efficiency | fast speed, quick updates, ease of use, intuitive UI, automatic, map, location, slow response time, complex interface, delayed updates, lack of features, integration issues, crash, error | [P] “The records and other summaries are well-organized, making it easy to view. The intuitive UI and quick updates make it convenient to check how far I’ve run, how many calories I’ve burned, and my location on the map.” [P] “I tried it for the first time today, and it’s really convenient and accurate, providing fast speed and automatic voice notifications for speed by section, distance covered, route, and calories burned.” [N] “After finishing a run, errors frequently occur when trying to save the record, leading to the app crashing, the data not being saved, or the map and previous activities being lost, highlighting integration issues and slow response time.” [N] “It’s extremely frustrating when, after running hard, the app’s delayed updates and slow response time result in errors while saving my running records. The app’s reliability is low, with crashes and a complex interface making it not worth using due to the lack of essential features.” |
| Function-Related Fulfillment | coaching, appropriate guidance, good functionality, integration, marathon preparation, exercise goals, exercise records, coaching, noise, voice guidance, GPS | [P] “It really helps me pace myself and improve my records! The coaching feature provides appropriate guidance, and the challenges keep me motivated to continue, making it feel like completing quests in a game.” [P] “After installing this app and starting to run without any plan, now, 8 months later, I can comfortably run 7–8 km regardless of my condition. The exercise records and route history are great for marathon preparation and tracking my progress.” [N] “I’m unable to set up my own exercise goals or coaching plan. When I input my current status and press complete, the integration fails, and an error occurs. Even after retrying, it just keeps repeating the process endlessly.” [N] “It would be nice to have the option to turn off voice guidance. I always use the app for running, even when I’m walking, but there are times when the voice becomes too noisy and distracting.” |
| System Availability | stable performance, high availability, no downtime, system operation, accuracy, distance, measurement, time, storage, technical issues, server instability, system downtime, instability, errors, data loss, interruption | [P] “It’s free, and the exercise records are detailed, which is really great. The app offers stable performance with high availability, making it enjoyable to track my progress day by day. I highly recommend everyone download this useful app and work out hard. Sincere thanks to the developers. Thank you.:)” [P] “It measures running data, including distance and time, in real-time with complete accuracy. Also, since the records are reliably saved, I can easily compare before and after to evaluate my performance and improve in my next run. Very good.” [N] “It frequently experiences system downtime, leading to lags and slowdowns. Tracking often stops when running other apps, which points to system instability. Despite these technical issues, I continue using it due to its clean interface.” [N] “I’ve saved 500 km of running records over the past two years, but when I logged in today after suddenly being logged out, everything was reset...!!! This data loss due to server instability is unacceptable!!!” |
| Data Privacy | protection, security, privacy, screen record, login, logout, personal data information, personal data, leaks, vulnerabilities, invasion, deletion, hacking | [P] “I love that I can save my running records with photos, creating great memories while ensuring my personal data is securely stored. My personal exercise journal is coming together, making this app even more precious to me.” [P] “I’m using it well. Once, the data didn’t load and the screen froze, so I deleted and reinstalled the app, and my records were still protected and intact. It’s good.” [N] “It’s frustrating that the app requires logging in every time I enter. I keep using it because I don’t want to lose my personal data, but the login process feels like an invasion of privacy. I’m also amazed at the incredibly slow response to security vulnerabilities, despite numerous complaints in the past.” [N] “I logged in with my friend’s account once, and all of my friend’s personal information remained on the app. It makes me uncomfortable to think that my data could be vulnerable to leaks or hacking. The app should ensure that personal information is fully deleted after logging out, especially when using a different device.” |
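
One simple way to map a review onto the dimensions in the table above is to check it against each dimension's keyword list. The sketch below is illustrative only: the function name `tag_dimensions`, the choice of case-insensitive substring matching, and the shortened keyword lists (a subset of the table's keywords) are assumptions, and the matching procedure actually used in the study may differ.

```python
from __future__ import annotations

# Subset of the keywords listed in the table above, lowercased for matching.
DIMENSION_KEYWORDS: dict[str, list[str]] = {
    "App System Efficiency": [
        "intuitive ui", "quick updates", "slow response time",
        "complex interface", "crash",
    ],
    "Function-Related Fulfillment": [
        "coaching", "voice guidance", "exercise goals",
        "marathon preparation", "gps",
    ],
    "System Availability": [
        "stable performance", "system downtime", "server instability",
        "data loss", "accuracy",
    ],
    "Data Privacy": [
        "privacy", "security", "personal data", "leaks", "hacking",
    ],
}

def tag_dimensions(review: str) -> list[str]:
    """Return every dimension with at least one keyword found in the review.

    Uses plain substring matching (an assumption), so short keywords such
    as "gps" can also match inside longer words.
    """
    text = review.lower()
    return [
        dimension
        for dimension, keywords in DIMENSION_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]

# Sentences taken from the example comments in the table above.
print(tag_dimensions("The coaching feature provides appropriate guidance."))
print(tag_dimensions("This data loss due to server instability is unacceptable!!!"))
```

A review can match several dimensions at once under this scheme; whether the study counted such reviews in each matching dimension is not stated here.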

| Dimension | Number of Positive Reviews | Number of Negative Reviews | Service Quality Score |
|---|---|---|---|
| App System Efficiency | 16,062 | 18,633 | −0.0741 |
| Function-Related Fulfillment | 27,058 | 14,302 | 0.3084 |
| System Availability | 9066 | 24,667 | −0.4625 |
| Data Privacy | 7854 | 13,200 | −0.2539 |
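
The scores in the table above are consistent with a net sentiment ratio, (P − N) / (P + N), where P and N are the positive and negative review counts for a dimension. The formula is inferred here from the tabulated values rather than quoted from the text, and the function name `service_quality_score` is hypothetical. A short sketch that reproduces the four scores:

```python
def service_quality_score(positive: int, negative: int) -> float:
    """Net sentiment ratio (P - N) / (P + N), ranging from -1 to 1."""
    total = positive + negative
    if total == 0:
        raise ValueError("at least one review is required")
    return (positive - negative) / total

# (positive, negative) review counts copied from the table above.
review_counts = {
    "App System Efficiency": (16062, 18633),
    "Function-Related Fulfillment": (27058, 14302),
    "System Availability": (9066, 24667),
    "Data Privacy": (7854, 13200),
}

scores = {
    dimension: round(service_quality_score(p, n), 4)
    for dimension, (p, n) in review_counts.items()
}

for dimension, score in scores.items():
    print(f"{dimension}: {score:+.4f}")
```

Under this reading, a score of 0 means positive and negative reviews are balanced, and only Function-Related Fulfillment has more positive than negative reviews.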


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.; Chung, J. Analysis of Service Quality in Smart Running Applications Using Big Data Text Mining Techniques. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 3352-3369. https://doi.org/10.3390/jtaer19040162
