Role of Algorithm Awareness in Privacy Decision-Making Process: A Dual Calculus Lens

Abstract

1. Introduction

2. Literature Review and Theoretical Background

2.1. Algorithm Awareness

2.2. Information Disclosure

2.3. Dual Calculus Model

2.4. Theory of Planned Behavior (TPB)

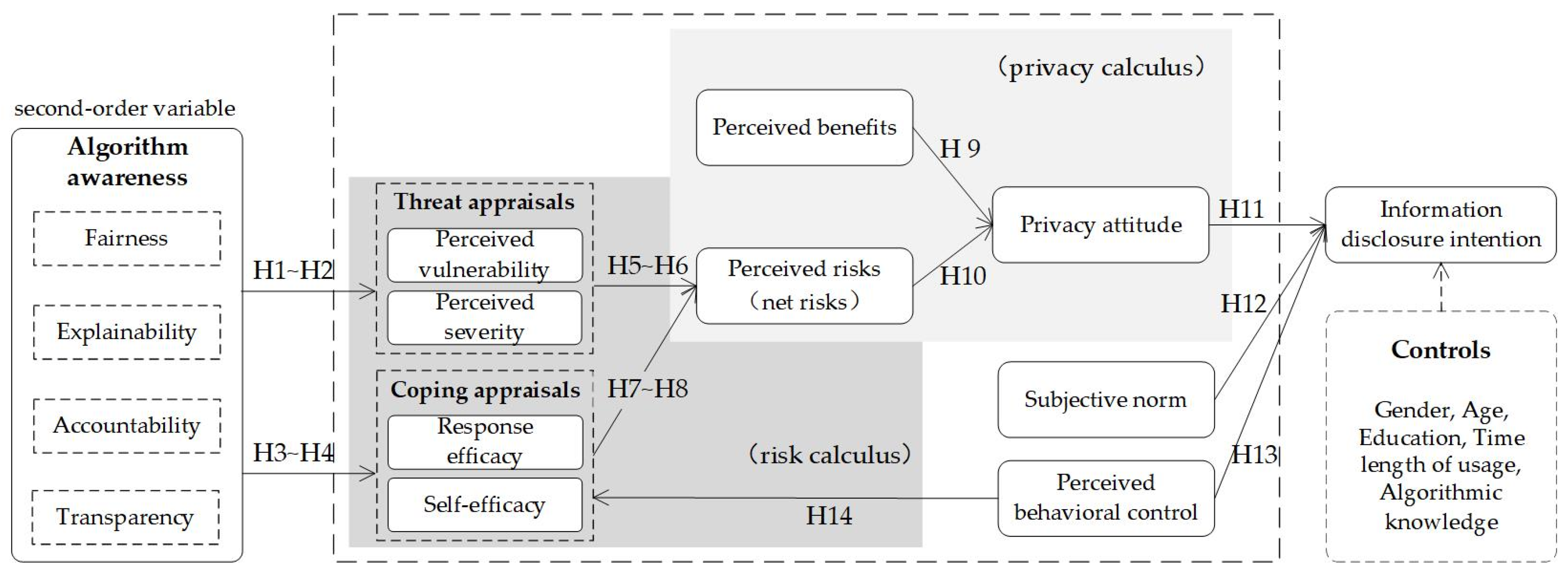

3. Hypotheses Development and Research Model

3.1. Understanding the Algorithm Awareness through Threat Appraisals and Coping Appraisals

3.2. Understanding the Outcomes of Risk Calculus

3.2.1. Threat Appraisals and Privacy Concerns

3.2.2. Coping Appraisals and Privacy Concerns

3.3. Understanding the Outcomes of Privacy Calculus

3.4. Understanding the Information-Disclosure Intention through the TPB

3.4.1. Privacy Attitude and Information-Disclosure Intention

3.4.2. Subjective Norm and Information-Disclosure Intention

3.4.3. Perceived Behavioral Control and Information-Disclosure Intention

4. Materials and Methods

4.1. Scale Development

4.2. Sample and Data Collection

4.3. Common Method Variance

5. Results

5.1. Validity and Reliability (Measurement Model)

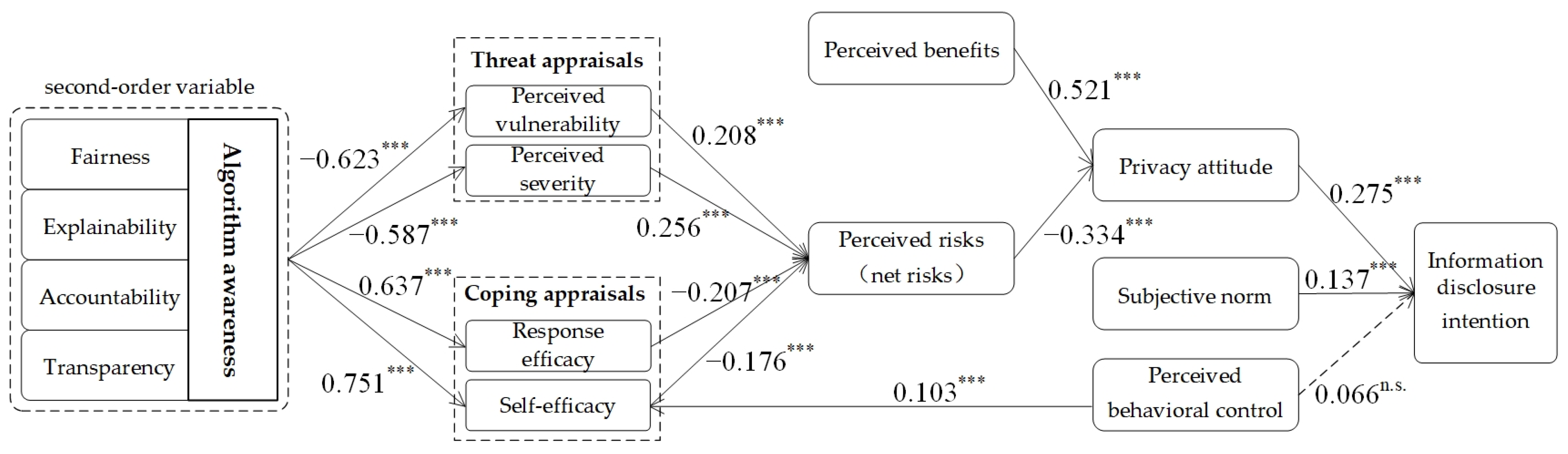

5.2. Evaluating the Structural Model

5.3. Testing the Mediating Effects

6. Conclusions and Discussion

6.1. Discussion of Findings

6.2. Theoretical Implications

6.3. Practical Implications

6.4. Limitations and Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Survey Instrument

| Variables | Measures |

| Fairness (Fai) | 1. An algorithmic platform does not discriminate against people and does not show favoritism (Nondiscrimination). 2. The source of data throughout an algorithmic process and its data analysis should be accurate and correct (Accuracy). 3. An algorithmic platform complies with the due process requirements of impartiality with no bias (Due process). |

| Explainability (Exp) | 1. I found algorithmic platforms to be comprehensible. 2. The AI algorithmic services are understandable. 3. I can understand and make sense of the internal workings of personalization. |

| Accountability (Acc) | 1. An algorithmic platform requires the person in charge to be accountable for its adverse individual or societal effects in a timely manner (Responsibility). 2. The platforms should be designed to enable third parties to audit and review the behavior of an algorithm (Auditability). 3. The platforms should have the autonomy to change the logic in their entire configuration using only simple manipulations (Controllability). |

| Transparency (Tra) | 1. The assessment and the criteria of algorithms used should be publicly open and understandable to users (Understandability). 2. Any results generated by an algorithmic system should be interpretable to the users affected by those outputs (Interpretability). 3. Algorithms should let people know how well internal states of algorithms can be understood from knowledge of their external outputs (Observability). |

| Perceived Vulnerability (PV) | 1. My information privacy is at risk of being invaded. 2. It is likely that my information privacy will be invaded. 3. It is possible that my information privacy will be invaded. |

| Perceived Severity (PS) | 1. If my information privacy is invaded, it would be severe. 2. If my information privacy is invaded, it would be serious. 3. If my information privacy is invaded, it would be significant. |

| Response Efficacy (RE) | 1. The privacy protection measures provided by this platform work for protecting my information. 2. The privacy protection measures provided by this platform can effectively protect my information. 3. When using privacy protection measures provided by this platform, my information is more likely to be protected. |

| Self-Efficacy (SE) | 1. Protecting my information privacy is easy for me. 2. I have the capability to protect my information privacy. 3. I am able to protect my information privacy without much effort. |

| Perceived risks (PR) | 1. Providing this platform with my personal information would involve many unexpected problems. 2. It would be risky to disclose my personal information to this platform. 3. There would be high potential for loss in disclosing my personal information to this platform. |

| Perceived benefits (PB) | 1. This platform can provide me with personalized services tailored to my activity context. 2. This platform can provide me with more relevant information tailored to my preferences or personal interests. 3. This platform can provide me with the kind of information or service that I might like. |

| Privacy attitude (PA) | 1. I think my benefits gained from the use of this platform can offset the risks of my information disclosure. 2. The value I gain from use of this platform is worth the information I give away. 3. Overall, I feel that providing this platform with my information is beneficial. |

| Subjective Norm (SN) | 1. People who are important to me would think that I should disclose my information if needed by this platform. 2. People who influence my behavior would think that I should disclose my information if needed by this platform. 3. People who are family to me would think that I should disclose my information if needed by this platform. |

| Perceived Behavioral Control (PBC) | 1. I believe I can control my personal information provided to this platform. 2. I believe I have control over how my personal information is used by this platform. 3. I believe I have control over who can get access to my personal information collected by this platform. |

| Information Disclosure Intention (INT) | 1. I am likely to provide my personal information on this platform. 2. I am willing to provide my personal information on this platform to access relevant services. 3. It is possible for me to provide personal information on this platform. |

Appendix B. Correlation Coefficients and Square Root of AVE for Each Variable

| Variables | Fai | Exp | Acc | Tra | PV | PS | RE | SE | PR | PB | PA | SN | PBC | Int |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fai | **0.851** | | | | | | | | | | | | | |
| Exp | 0.636 | **0.885** | | | | | | | | | | | | |
| Acc | 0.654 | 0.452 | **0.872** | | | | | | | | | | | |
| Tra | 0.685 | 0.661 | 0.591 | **0.851** | | | | | | | | | | |
| PV | −0.588 | −0.479 | −0.513 | −0.513 | **0.883** | | | | | | | | | |
| PS | −0.515 | −0.422 | −0.502 | −0.492 | 0.732 | **0.905** | | | | | | | | |
| RE | 0.489 | 0.465 | 0.579 | 0.542 | −0.680 | −0.710 | **0.907** | | | | | | | |
| SE | 0.632 | 0.638 | 0.675 | 0.621 | −0.654 | −0.614 | 0.699 | **0.913** | | | | | | |
| PR | −0.562 | −0.421 | −0.599 | −0.519 | 0.717 | 0.725 | −0.720 | −0.695 | **0.875** | | | | | |
| PB | 0.069 | 0.113 | 0.104 | 0.059 | 0.117 | 0.014 | 0.050 | 0.069 | −0.097 | **0.875** | | | | |
| PA | 0.201 | 0.207 | 0.313 | 0.157 | −0.107 | −0.228 | 0.252 | 0.257 | −0.384 | 0.553 | **0.853** | | | |
| SN | 0.097 | 0.156 | 0.151 | 0.138 | −0.040 | −0.125 | 0.170 | 0.148 | −0.219 | 0.461 | 0.517 | **0.933** | | |
| PBC | 0.149 | 0.182 | 0.205 | 0.166 | −0.198 | −0.194 | 0.234 | 0.262 | −0.260 | 0.074 | 0.265 | 0.294 | **0.829** | |
| Int | 0.171 | 0.179 | 0.255 | 0.181 | −0.098 | −0.147 | 0.230 | 0.233 | −0.329 | 0.484 | 0.599 | 0.503 | 0.269 | **0.883** |

Note: The bolded data on the diagonal are the square roots of the AVE values of each variable; the other data represent the correlation coefficients between each pair of variables.
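
A table of this kind is usually read against the Fornell-Larcker criterion: the square root of a construct's AVE should exceed its correlations with every other construct. The sketch below applies that check to the four algorithm-awareness dimensions only, using values copied from the table above. The function name and data layout are illustrative assumptions, not part of the study's analysis pipeline.

```python
# Square roots of AVE (diagonal) and correlations (off-diagonal) copied from
# Appendix B for the four algorithm-awareness dimensions.
sqrt_ave = {"Fai": 0.851, "Exp": 0.885, "Acc": 0.872, "Tra": 0.851}
correlations = {
    ("Exp", "Fai"): 0.636,
    ("Acc", "Fai"): 0.654,
    ("Acc", "Exp"): 0.452,
    ("Tra", "Fai"): 0.685,
    ("Tra", "Exp"): 0.661,
    ("Tra", "Acc"): 0.591,
}


def fornell_larcker_violations(sqrt_ave, correlations):
    """Return pairs whose |correlation| is not below both constructs' sqrt(AVE)."""
    violations = []
    for (a, b), r in correlations.items():
        # The criterion fails if the correlation reaches the smaller sqrt(AVE).
        if abs(r) >= min(sqrt_ave[a], sqrt_ave[b]):
            violations.append((a, b, r))
    return violations


violations = fornell_larcker_violations(sqrt_ave, correlations)
print(violations)
```

An empty list means no pair in this subset violates the criterion; the same function can be fed the remaining rows of the table.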

Appendix C. Test Results for Cross-Loadings

| Items | Fai | Exp | Acc | Tra | PV | PS | RE | SE | PR | PB | PA | SN | PBC | Int |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fai1 | **0.802** | 0.468 | 0.622 | 0.534 | −0.576 | −0.538 | 0.499 | 0.585 | −0.659 | 0.044 | 0.224 | 0.090 | 0.110 | 0.174 |
| Fai2 | **0.832** | 0.513 | 0.487 | 0.547 | −0.454 | −0.402 | 0.375 | 0.497 | −0.424 | 0.052 | 0.134 | 0.076 | 0.115 | 0.139 |
| Fai3 | **0.916** | 0.636 | 0.555 | 0.663 | −0.471 | −0.376 | 0.375 | 0.531 | −0.354 | 0.079 | 0.152 | 0.080 | 0.153 | 0.124 |
| Exp1 | 0.570 | **0.774** | 0.397 | 0.464 | −0.440 | −0.383 | 0.364 | 0.454 | −0.312 | 0.037 | 0.142 | 0.108 | 0.117 | 0.092 |
| Exp2 | 0.521 | **0.920** | 0.392 | 0.610 | −0.399 | −0.351 | 0.429 | 0.595 | −0.391 | 0.124 | 0.208 | 0.149 | 0.183 | 0.187 |
| Exp3 | 0.598 | **0.952** | 0.413 | 0.669 | −0.435 | −0.389 | 0.439 | 0.635 | −0.410 | 0.132 | 0.197 | 0.155 | 0.178 | 0.190 |
| Acc1 | 0.520 | 0.325 | **0.862** | 0.423 | −0.416 | −0.476 | 0.516 | 0.629 | −0.518 | 0.117 | 0.305 | 0.117 | 0.132 | 0.226 |
| Acc2 | 0.628 | 0.578 | **0.821** | 0.678 | −0.472 | −0.387 | 0.484 | 0.569 | −0.479 | 0.077 | 0.240 | 0.131 | 0.215 | 0.224 |
| Acc3 | 0.550 | 0.255 | **0.928** | 0.421 | −0.445 | −0.450 | 0.511 | 0.564 | −0.568 | 0.078 | 0.275 | 0.144 | 0.182 | 0.215 |
| Tra1 | 0.501 | 0.695 | 0.442 | **0.802** | −0.356 | −0.320 | 0.376 | 0.533 | −0.340 | 0.065 | 0.121 | 0.039 | 0.162 | 0.104 |
| Tra2 | 0.651 | 0.515 | 0.594 | **0.896** | −0.450 | −0.424 | 0.478 | 0.550 | −0.454 | 0.062 | 0.170 | 0.139 | 0.163 | 0.189 |
| Tra3 | 0.590 | 0.488 | 0.462 | **0.851** | −0.502 | −0.512 | 0.529 | 0.500 | −0.529 | 0.022 | 0.105 | 0.172 | 0.098 | 0.165 |
| PV1 | −0.525 | −0.425 | −0.515 | −0.459 | **0.890** | 0.643 | −0.607 | −0.620 | 0.686 | 0.129 | −0.114 | −0.013 | −0.201 | −0.099 |
| PV2 | −0.528 | −0.401 | −0.432 | −0.434 | **0.892** | 0.655 | −0.567 | −0.553 | 0.613 | 0.129 | −0.074 | 0.005 | −0.152 | −0.052 |
| PV3 | −0.504 | −0.442 | −0.403 | −0.465 | **0.866** | 0.639 | −0.627 | −0.554 | 0.593 | 0.048 | −0.094 | −0.103 | −0.168 | −0.107 |
| PS1 | −0.455 | −0.384 | −0.461 | −0.455 | 0.655 | **0.911** | −0.645 | −0.543 | 0.666 | 0.012 | −0.248 | −0.168 | −0.206 | −0.145 |
| PS2 | −0.461 | −0.350 | −0.458 | −0.416 | 0.671 | **0.909** | −0.623 | −0.583 | 0.675 | 0.022 | −0.207 | −0.063 | −0.155 | −0.120 |
| PS3 | −0.482 | −0.414 | −0.442 | −0.465 | 0.660 | **0.894** | −0.659 | −0.542 | 0.625 | 0.004 | −0.162 | −0.109 | −0.166 | −0.134 |
| RE1 | 0.476 | 0.455 | 0.525 | 0.504 | −0.587 | −0.636 | **0.903** | 0.604 | −0.601 | 0.069 | 0.248 | 0.168 | 0.182 | 0.230 |
| RE2 | 0.446 | 0.432 | 0.509 | 0.499 | −0.619 | −0.663 | **0.918** | 0.604 | −0.636 | 0.036 | 0.223 | 0.161 | 0.223 | 0.196 |
| RE3 | 0.411 | 0.380 | 0.539 | 0.473 | −0.642 | −0.631 | **0.897** | 0.689 | −0.715 | 0.033 | 0.215 | 0.135 | 0.230 | 0.202 |
| SE1 | 0.626 | 0.617 | 0.593 | 0.620 | −0.641 | −0.589 | 0.634 | **0.871** | −0.594 | 0.051 | 0.199 | 0.138 | 0.206 | 0.161 |
| SE2 | 0.615 | 0.635 | 0.635 | 0.577 | −0.592 | −0.536 | 0.587 | **0.971** | −0.605 | 0.068 | 0.233 | 0.121 | 0.217 | 0.210 |
| SE3 | 0.492 | 0.497 | 0.618 | 0.503 | −0.560 | −0.557 | 0.692 | **0.895** | −0.701 | 0.070 | 0.270 | 0.145 | 0.292 | 0.266 |
| PR1 | −0.523 | −0.361 | −0.568 | −0.427 | 0.599 | 0.611 | −0.616 | −0.625 | **0.881** | −0.168 | −0.401 | −0.185 | −0.209 | −0.326 |
| PR2 | −0.516 | −0.390 | −0.547 | −0.505 | 0.658 | 0.660 | −0.655 | −0.624 | **0.912** | −0.028 | −0.279 | −0.198 | −0.221 | −0.310 |
| PR3 | −0.432 | −0.355 | −0.452 | −0.429 | 0.626 | 0.633 | −0.620 | −0.574 | **0.831** | −0.054 | −0.326 | −0.191 | −0.256 | −0.223 |
| PB1 | 0.023 | 0.121 | 0.067 | 0.029 | 0.115 | 0.007 | 0.046 | 0.044 | −0.060 | **0.890** | 0.470 | 0.407 | 0.035 | 0.404 |
| PB2 | 0.068 | 0.107 | 0.102 | 0.071 | 0.081 | −0.007 | 0.056 | 0.069 | −0.113 | **0.867** | 0.490 | 0.417 | 0.105 | 0.412 |
| PB3 | 0.088 | 0.071 | 0.103 | 0.053 | 0.111 | 0.035 | 0.030 | 0.067 | −0.079 | **0.869** | 0.491 | 0.387 | 0.055 | 0.452 |
| PA1 | 0.131 | 0.159 | 0.254 | 0.116 | −0.078 | −0.157 | 0.189 | 0.205 | −0.273 | 0.443 | **0.842** | 0.436 | 0.216 | 0.481 |
| PA2 | 0.186 | 0.192 | 0.275 | 0.144 | −0.103 | −0.201 | 0.225 | 0.229 | −0.346 | 0.487 | **0.874** | 0.453 | 0.234 | 0.560 |
| PA3 | 0.193 | 0.178 | 0.272 | 0.141 | −0.092 | −0.222 | 0.229 | 0.223 | −0.358 | 0.484 | **0.843** | 0.434 | 0.228 | 0.486 |
| SN1 | 0.104 | 0.147 | 0.143 | 0.158 | −0.042 | −0.127 | 0.157 | 0.149 | −0.199 | 0.420 | 0.454 | **0.933** | 0.288 | 0.458 |
| SN2 | 0.081 | 0.143 | 0.149 | 0.121 | −0.045 | −0.117 | 0.143 | 0.132 | −0.207 | 0.423 | 0.500 | **0.940** | 0.252 | 0.495 |
| SN3 | 0.087 | 0.147 | 0.128 | 0.109 | −0.026 | −0.106 | 0.178 | 0.133 | −0.206 | 0.448 | 0.493 | **0.924** | 0.285 | 0.452 |
| PBC1 | 0.107 | 0.140 | 0.170 | 0.141 | −0.192 | −0.185 | 0.219 | 0.219 | −0.229 | 0.024 | 0.222 | 0.284 | **0.821** | 0.219 |
| PBC2 | 0.145 | 0.172 | 0.194 | 0.142 | −0.164 | −0.168 | 0.206 | 0.241 | −0.243 | 0.107 | 0.269 | 0.291 | **0.916** | 0.286 |
| PBC3 | 0.117 | 0.137 | 0.140 | 0.134 | −0.137 | −0.127 | 0.153 | 0.186 | −0.165 | 0.042 | 0.148 | 0.126 | **0.742** | 0.140 |
| Int1 | 0.185 | 0.164 | 0.259 | 0.193 | −0.098 | −0.120 | 0.189 | 0.230 | −0.318 | 0.433 | 0.574 | 0.450 | 0.249 | **0.888** |
| Int2 | 0.137 | 0.144 | 0.209 | 0.161 | −0.080 | −0.140 | 0.211 | 0.204 | −0.278 | 0.435 | 0.497 | 0.444 | 0.193 | **0.891** |
| Int3 | 0.129 | 0.166 | 0.206 | 0.125 | −0.079 | −0.131 | 0.211 | 0.183 | −0.273 | 0.413 | 0.512 | 0.437 | 0.270 | **0.870** |

Note: The bolded data represent the primary loadings of each item on its own variable; the remaining data represent the cross-loadings.
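
The usual reading of a cross-loading table is that each item should load most strongly on the construct it is meant to measure. As a rough sketch, the snippet below checks that rule for four items, restricted to the Fai, Exp, Acc, and Tra columns of the table above. The helper name and the choice of items are illustrative assumptions.

```python
# Loadings copied from Appendix C, restricted to the first four constructs.
columns = ["Fai", "Exp", "Acc", "Tra"]
loadings = {
    "Fai1": [0.802, 0.468, 0.622, 0.534],
    "Exp1": [0.570, 0.774, 0.397, 0.464],
    "Acc2": [0.628, 0.578, 0.821, 0.678],
    "Tra1": [0.501, 0.695, 0.442, 0.802],
}


def strongest_construct(item):
    """Return the construct on which the item has its largest absolute loading."""
    row = loadings[item]
    best_index = max(range(len(row)), key=lambda i: abs(row[i]))
    return columns[best_index]


# An item is consistent when its strongest construct matches its own prefix,
# e.g. "Fai1" should load highest on "Fai".
consistent = {item: strongest_construct(item) == item[:-1] for item in loadings}
print(consistent)
```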

Appendix D. Test Results for Heterotrait–Monotrait Ratio

| Variables | Fai | Exp | Acc | Tra | PV | PS | RE | SE | PR | PB | PA | SN | PBC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Exp | 0.765 | | | | | | | | | | | | |
| Acc | 0.787 | 0.524 | | | | | | | | | | | |
| Tra | 0.844 | 0.796 | 0.703 | | | | | | | | | | |
| PV | 0.706 | 0.561 | 0.597 | 0.616 | | | | | | | | | |
| PS | 0.609 | 0.486 | 0.581 | 0.581 | 0.838 | | | | | | | | |
| RE | 0.578 | 0.533 | 0.668 | 0.640 | 0.777 | 0.797 | | | | | | | |
| SE | 0.743 | 0.725 | 0.776 | 0.730 | 0.743 | 0.687 | 0.779 | | | | | | |
| PR | 0.680 | 0.494 | 0.709 | 0.627 | 0.839 | 0.836 | 0.827 | 0.796 | | | | | |
| PB | 0.085 | 0.131 | 0.123 | 0.070 | 0.136 | 0.026 | 0.059 | 0.079 | 0.112 | | | | |
| PA | 0.245 | 0.247 | 0.379 | 0.191 | 0.127 | 0.266 | 0.296 | 0.299 | 0.460 | 0.665 | | | |
| SN | 0.112 | 0.175 | 0.170 | 0.159 | 0.058 | 0.138 | 0.189 | 0.162 | 0.248 | 0.521 | 0.596 | | |
| PBC | 0.187 | 0.221 | 0.248 | 0.212 | 0.242 | 0.232 | 0.279 | 0.311 | 0.318 | 0.086 | 0.324 | 0.334 | |
| Int | 0.205 | 0.206 | 0.299 | 0.215 | 0.113 | 0.169 | 0.264 | 0.264 | 0.384 | 0.566 | 0.713 | 0.563 | 0.317 |
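
HTMT ratios are normally compared against a cutoff, with values below it taken as evidence of discriminant validity. This excerpt does not state which cutoff the study applied, so the sketch below assumes the two commonly cited ones (0.85 and 0.90) and screens a handful of the larger entries from the table above. The function and constant names are hypothetical.

```python
# A few of the larger HTMT entries copied from Appendix D (pair -> ratio).
htmt = {
    ("Tra", "Fai"): 0.844,
    ("PS", "PV"): 0.838,
    ("RE", "PS"): 0.797,
    ("Tra", "Exp"): 0.796,
    ("Acc", "Fai"): 0.787,
}

# Assumed cutoffs: neither value is taken from this document.
STRICT_CUTOFF = 0.85
LENIENT_CUTOFF = 0.90


def pairs_at_or_above(ratios, cutoff):
    """Return the construct pairs whose HTMT ratio reaches the cutoff."""
    return sorted(pair for pair, ratio in ratios.items() if ratio >= cutoff)


flagged_strict = pairs_at_or_above(htmt, STRICT_CUTOFF)
flagged_lenient = pairs_at_or_above(htmt, LENIENT_CUTOFF)
print(flagged_strict, flagged_lenient)
```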


| Variables | Cronbach’s α | CR | AVE |
|---|---|---|---|
| Fai | 0.808 | 0.887 | 0.725 |
| Exp | 0.858 | 0.915 | 0.784 |
| Acc | 0.841 | 0.904 | 0.760 |
| Tra | 0.808 | 0.887 | 0.724 |
| PV | 0.858 | 0.914 | 0.779 |
| PS | 0.889 | 0.931 | 0.819 |
| RE | 0.892 | 0.933 | 0.822 |
| SE | 0.899 | 0.938 | 0.834 |
| PB | 0.846 | 0.907 | 0.766 |
| PR | 0.847 | 0.908 | 0.766 |
| PA | 0.813 | 0.889 | 0.728 |
| SN | 0.925 | 0.952 | 0.870 |
| PBC | 0.773 | 0.868 | 0.688 |
| Int | 0.859 | 0.914 | 0.779 |
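
The CR and AVE columns can be related back to the item loadings in Appendix C. The sketch below assumes the standard formulas for standardized loadings, AVE as the mean squared loading and CR as (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and applies them to the three Fairness items. The function names are illustrative.

```python
import math


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: squared sum of loadings over itself plus the summed error variances."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Primary loadings of Fai1 to Fai3, copied from Appendix C.
fai_loadings = [0.802, 0.832, 0.916]

fai_ave = average_variance_extracted(fai_loadings)
fai_cr = composite_reliability(fai_loadings)
fai_sqrt_ave = math.sqrt(fai_ave)

print(round(fai_ave, 3), round(fai_cr, 3), round(fai_sqrt_ave, 3))
```

Rounded to three decimals, these come out at 0.725, 0.887, and 0.851, which agree with the Fai row above and with the Fai diagonal entry in Appendix B.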

| Second-Order Variable | First-Order Variable | Weight | t-Value | p-Value | VIF |
|---|---|---|---|---|---|
| Algorithm awareness | Fairness | 0.251 | 4.371 | 0.000 | 2.567 |
| | Explainability | 0.214 | 4.353 | 0.000 | 2.006 |
| | Accountability | 0.488 | 11.698 | 0.000 | 1.877 |
| | Transparency | 0.229 | 4.203 | 0.000 | 2.406 |
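
For a second-order construct assessed through weights, the VIF column is typically screened for collinearity among the first-order dimensions. The cutoff is not stated in this excerpt; the sketch below assumes the widely used value of 5 and is only an illustration of the check, with hypothetical names.

```python
# VIF values copied from the table above.
vif = {
    "Fairness": 2.567,
    "Explainability": 2.006,
    "Accountability": 1.877,
    "Transparency": 2.406,
}

# Assumed cutoff: a common rule of thumb, not a value taken from this document.
VIF_CUTOFF = 5.0

# Dimensions whose VIF reaches the cutoff would signal problematic collinearity.
collinear = [name for name, value in vif.items() if value >= VIF_CUTOFF]
highest = max(vif, key=vif.get)

print(collinear, highest)
```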

| Paths | Indirect Effect | Bias-Corrected 95% CI (LCL) | Bias-Corrected 95% CI (UCL) | Direct Effect | Bias-Corrected 95% CI (LCL) | Bias-Corrected 95% CI (UCL) |
|---|---|---|---|---|---|---|
| AA→PV→PR | −0.130 | −0.172 | −0.089 | −0.105 | −0.181 | −0.010 |
| AA→PS→PR | −0.150 | −0.194 | −0.103 | | | |
| AA→RE→PR | −0.132 | −0.187 | −0.084 | | | |
| AA→SE→PR | −0.132 | −0.205 | −0.059 | | | |
| PR→PA→INT | −0.092 | −0.125 | −0.063 | −0.123 | −0.198 | −0.052 |
| PB→PA→INT | 0.143 | 0.105 | 0.183 | 0.156 | 0.093 | 0.215 |
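
With bootstrapped confidence intervals, an indirect effect is conventionally treated as significant when its interval does not contain zero. A minimal sketch of that reading, using the indirect effects and interval bounds from the table above, could look as follows. The data structure and function name are assumptions made for illustration.

```python
# path -> (indirect effect, one CI bound, other CI bound), copied from the table.
indirect_effects = {
    "AA->PV->PR": (-0.130, -0.172, -0.089),
    "AA->PS->PR": (-0.150, -0.194, -0.103),
    "AA->RE->PR": (-0.132, -0.187, -0.084),
    "AA->SE->PR": (-0.132, -0.205, -0.059),
    "PR->PA->INT": (-0.092, -0.125, -0.063),
    "PB->PA->INT": (0.143, 0.105, 0.183),
}


def excludes_zero(bound_a, bound_b):
    """True when the interval spanned by the two bounds does not contain zero."""
    low, high = min(bound_a, bound_b), max(bound_a, bound_b)
    return low > 0 or high < 0


significant = {
    path: excludes_zero(bound_a, bound_b)
    for path, (_, bound_a, bound_b) in indirect_effects.items()
}
print(significant)
```

Sorting the two bounds inside `excludes_zero` keeps the check independent of the order in which the interval limits are listed.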

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tian, S.; Zhang, B.; He, H. Role of Algorithm Awareness in Privacy Decision-Making Process: A Dual Calculus Lens. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 899-920. https://doi.org/10.3390/jtaer19020047
