Abstract

Solvers’ wide and continuous participation is imperative for the sustainable development of online crowdsourcing platforms (OCPs). Prior studies have investigated in depth how solvers’ motives and demographics, task attributes, requester attributes, and platform designs influence solvers’ participation. However, to our knowledge, few studies have examined what OCPs actually do for solvers in practice, even though these practices matter to solvers and thus influence their decision to participate. To fill this gap, this study conducted a content analysis of 25 typical OCPs focusing on problem-solving contests to identify the service measures they present to solvers. As a result, 14 major service measures related to contest management, solver management, and requester management were identified. We then discuss the roles of these service measures in solvers’ participation: activating solvers to participate, providing them with opportunities to participate, and supporting their participation. Our analysis presents a comprehensive list of the service measures for solvers found across these OCPs. It can also help OCPs improve their solver services and serve as a reference for solvers comparing and evaluating OCPs.

1. Introduction

Crowdsourcing is a participative online activity in which a large and possibly undefined group of people contribute to tasks outsourced by requesters through a flexible open call [1]. Motivated by financial and non-financial benefits, firms and individuals increasingly use crowdsourcing to outsource a wide range of tasks, and solvers increasingly take part in it, especially for problem-solving tasks [2,3,4]. For problem-solving tasks, the contest is a very widely adopted crowdsourcing form [5,6]. Furthermore, a large number of OCPs supporting problem-solving contests, such as Hyve, Designcrowd, 99designs, and GoPillar, have emerged and grown rapidly. At the same time, millions of solvers around the world have been active on these OCPs, and millions of dollars have been transferred from requesters to solvers [5].

OCPs act as intermediaries that connect and serve requesters and solvers. Their sustainable development depends heavily on the wide and continuous participation of solvers [7,8,9]. To understand how to stimulate solver participation, researchers have made considerable efforts to identify and examine the factors that influence solvers’ participation in a specific task or on a specific OCP. These factors mainly include internal factors such as solver motivation [10,11], expertise [12,13], and cultural background [14,15], and external factors such as requester and platform fairness [16,17], feedback [18,19], and task attributes [20,21,22].

Meanwhile, researchers have offered many practical suggestions for OCPs to improve their designs and services. These suggestions consistently highlight the importance of serving solvers effectively and include some specific advice. For example, Kohler [23] examined more than 20 leading crowdsourcing ventures to identify strategies for scaling crowdsourcing platforms, two important ones being curating existing creators and attracting new creators. Blohm et al. [7] proposed 21 governance mechanisms for platforms and investigated their effectiveness in 19 platform case studies. Of these mechanisms, providing proper incentives, giving feedback on solvers’ contributions, promoting socialization, and modularizing tasks were effective for activating contributors to solve problems. After analyzing the crowdsourcing platforms of 12 New Zealand public cultural heritage institutions, Johnson and Liew Chern [24] presented four main design recommendations: promote ease of use, attract and sustain solver interest, foster a community of solvers, and show solvers’ contributions.

In addition, for problem-solving contests specifically, scholars have indicated that appropriate contest design helps sustain solvers’ participation. For instance, Ebner et al. [25] concentrated on IT-supported idea competitions and suggested widening the topic of the competition and offering an attractive incentive structure to sustain solvers’ participation. Ren et al. [26] proposed a top-down contest design process, in which the contest is designed in advance with appropriate IT artifacts in order to address workers’ motives. Acar [27] emphasized the importance of activities and technical features that enable participants to socialize with one another, support active participation, and create a participatory experience in idea contests.

From a theoretical perspective, prior studies provide solid knowledge of why (motives) solvers participate in a specific task or on an OCP and which factors influence that participation. From a practical perspective, they offer valuable suggestions for improving the design of OCPs or of specific problem-solving contests. Nevertheless, few studies focus on typical, widely used OCPs for problem-solving contests and identify what these OCPs actually do to serve their solvers. One reason for focusing on OCPs is that they are the only places where solvers engage in problem-solving contests. When deciding whether to participate, solvers search for and examine the OCP features they care about and may compare them with those of similar OCPs. In other words, what OCPs actually do, rather than what they should do, is what solvers see first, and it directly affects their participation decisions. Another reason is that, although existing studies have recommended separate measures for serving solvers effectively in specific contexts, a complete picture of what OCPs do for solvers in problem-solving contests is still lacking.

To fill this gap, this study conducted a content analysis of OCP websites for problem-solving contests to identify what these OCPs have done for solvers. These websites contain all the information OCPs provide to solvers and serve as the venues where solvers directly engage in contests and interact with others. On these websites, what an OCP does is conveyed explicitly through specific service items, standards and regulations, rules, and tools. In this study, we refer to these as service measures, which aim to serve, support, regulate, and orchestrate solvers’ participation. As a result, 14 major service measures related to contest management, solver management, and requester management were identified from 25 OCPs focusing on problem-solving contests. Furthermore, we propose a framework that depicts the relations among these service measures. Our analysis offers a comprehensive list of the measures OCPs use to serve solvers. Theoretically, it contributes to crowdsourcing research by providing a reference for identifying and analyzing the factors that affect solvers’ participation in problem-solving contests. Methodologically, it extends the application of content analysis to the crowdsourcing field. Practically, our findings may serve as a guideline for OCPs seeking to improve their solver services and for solvers deciding which OCPs to participate in.

2. Related Work

This section first describes crowdsourcing contests for problem-solving and then presents the factors that influence solvers’ participation in crowdsourcing. Together, these help establish the scope of the subsequent OCP search. In addition, the influential factors serve as a reference for identifying service measures and understanding their functions and roles in solvers’ participation.

2.1. Problem-Solving Contests in Crowdsourcing

Four major elements of a crowdsourcing system are generally highlighted: tasks that need to be performed; requesters who publish tasks; crowds who engage in the tasks; and platforms that link requesters and crowds [1,2,28]. Among these elements, the task plays a central role, connecting requesters, crowds, and platforms. This study concentrates on problem-solving tasks, which have been widely performed through crowdsourcing [2,3,4].

In general, problem-solving tasks are complex, are not easily decomposed, and do not have a predefined correct answer; more importantly, their completion relies heavily on the skills, knowledge, effort, and creativity of the engaged solvers [3,12]. Crowdsourcing such tasks aims to obtain feasible, implementable solutions that handle the specific problems well. Consequently, a solver attempting a problem-solving task in crowdsourcing must invest considerable effort and submit solutions that satisfy the requester’s requirements.

In terms of how final solutions are obtained, two crowdsourcing approaches are used for problem-solving tasks: integrative and selective [28,29,30]. The integrative approach obtains final results by aggregating all submitted solutions. In contrast, in the selective approach, solvers work independently and only one or a few of them are selected as winners. In practice, the contest is the main form of the selective approach [5,6].

On the basis of the above, we define a crowdsourcing contest for problem-solving as a contest-based crowdsourcing model aimed at handling problem-solving tasks. Its process can be briefly described as follows. A requester initiates a contest on an OCP to seek solutions to his/her problem-solving task with specific requirements. Solvers who choose to participate develop and submit their solutions independently. Finally, the submitted solutions are evaluated, and the solvers who provided solutions of superior quality (as determined by the requester) are awarded. Throughout the process, the OCP offers a variety of service measures to support and facilitate solvers’ actions.
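The contest process just described can be sketched as a small Python model. This is an illustrative sketch only: the `Contest` class, its fields, and the single-score ranking are our assumptions rather than any OCP’s actual implementation, and real platforms may add steps such as expert pre-review or extra winners.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Contest:
    """Hypothetical model of a problem-solving contest on an OCP.

    Field and method names are illustrative and not taken from any OCP's API.
    """
    requester: str
    requirements: str
    prize: float
    submissions: Dict[str, str] = field(default_factory=dict)  # solver -> solution
    status: str = "open"

    def submit(self, solver: str, solution: str) -> None:
        # Solvers develop and submit their solutions independently while the contest is open.
        if self.status != "open":
            raise RuntimeError("contest is no longer accepting submissions")
        self.submissions[solver] = solution

    def award(self, scores: Dict[str, float], n_winners: int = 1) -> List[str]:
        # The requester scores every submission; the top n_winners solvers are awarded.
        if self.status != "open":
            raise RuntimeError("contest has already been evaluated")
        ranked = sorted(self.submissions, key=lambda solver: scores[solver], reverse=True)
        self.status = "awarded"
        return ranked[:n_winners]


contest = Contest(requester="acme", requirements="Design a logo for a bakery", prize=500.0)
contest.submit("alice", "logo-a.png")
contest.submit("bob", "logo-b.png")
winners = contest.award({"alice": 7.5, "bob": 9.0})
print(winners)  # ['bob']
```

Keeping the scores outside the class mirrors the text: the quality judgment belongs to the requester, while the platform only manages the submission and award steps.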

2.2. Factors Influencing Solvers’ Participation in Crowdsourcing

The factors that influence solvers’ participation in crowdsourcing can be categorized into four groups corresponding to the components of a crowdsourcing system: solver attributes, task attributes, OCP attributes, and requester attributes.

(1) Solver attributes

Solvers’ extrinsic and intrinsic motives are the most frequently highlighted factors affecting their participation. In particular, the main motivational factors are earning monetary rewards, learning, improving career prospects, gaining reputation, having fun, efficacy, community membership, and altruism [11,31,32,33]. Furthermore, solvers’ demographics such as age, gender, and employment [34,35,36]; participation experience and past success [37,38]; domain knowledge [12,13,39]; and cultural background [14,15] have also been argued to be important factors affecting solvers’ participation.

(2) Task attributes

Task attributes describe a task’s characteristics, requirements, status, and presentation, which may affect solvers’ perception of the task and, in turn, their participation and contribution. Widely investigated attributes include task complexity and autonomy [40,41], task instructions [20,21], task prize [42], and in-process task status, including the number of participating solvers and submissions, and feedback and comments [42,43].

(3) OCP attributes

Three main OCP attributes have been examined for their influence on solvers’ participation. The first is OCP trustworthiness. A high level of perceived trust in an OCP can meet solvers’ psychological needs and is thus likely to alleviate their concerns about potential uncertainties and risks [9,40]. The second is the OCP’s feedback on solvers’ activities and performance, such as rankings and reputation scores, which helps increase solvers’ sense of being treated fairly and with respect [18,44]. The third is a range of governance mechanisms, such as offering knowledge integration instructions [45], sharing the value captured, providing recognition from multiple sources and preserving the love for crowds [23], providing features for profiling individuals [46], estimating appropriate service fees for each contest [47], and developing effective design toolkits and communication tools [48].

(4) Requester attributes

Requester fairness, identity, and feedback are the major attributes that may influence solvers’ participation. In particular, requester fairness, which comprises distributive, procedural, and interactional fairness, affects solvers’ trust in the requester [17,22]. Requester identity comprises a requester’s name, financial status, reputation, and experience on an OCP, and its disclosure plays a key role in encouraging solvers to engage in a task [22,49]. Finally, requester feedback can make solvers feel that their contributions are important and be perceived as a genuine sign of appreciation [32,50]. When giving feedback, requesters need to consider its form [19,51], content [52], timing [51], and direction and strength [53].

3. Methods

The research design involves a content analysis of the service measures presented by OCPs on their websites. Content analysis is a qualitative research method that can be used to analyze all kinds of media texts [54]. It is a common method in communication studies and has been widely used to investigate communication phenomena, largely on the basis of website texts [54,55,56]. Content analysis begins with actual observation and the collection of original documents, and then proceeds to analyze and code the material and refine concepts and categories before constructing a systematic theory [57,58]. Figure 1 presents the detailed process of performing content analysis in this study.

Figure 1.

The application procedure of content analysis.

3.1. Sample Identification

In May 2020, we searched Google using the terms “crowdsourcing platform”, “crowdsourcing website”, and “crowdsourcing contest”. We recorded and examined OCP websites for problem-solving contests found directly in the search results, as well as those mentioned in web articles such as “top 15 world’s best crowdsourcing platforms and websites|2019 best sites” and “the 4 best crowdsourcing platforms for graphic and product design”. At this stage, a total of 79 websites were retrieved. After duplicate and defunct websites were removed, 19 websites that met all inclusion criteria remained. The criteria were: (1) the platform links multiple requesters and solvers; (2) it focuses on problem-solving tasks (e.g., graphic design, interior design, software development, data mining and analysis), so platforms focusing on simple tasks, such as AMTurk, were excluded; (3) contests are the primary mode of problem-solving; (4) the crowdsourcing contest is the major value unit, so a few well-known OCPs such as Lego Ideas, Threadless, and Kaggle were excluded; and (5) money is the major extrinsic incentive, which excludes non-profit OCPs such as OpenIdeo and Greenchallenge.
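The screening step above amounts to removing duplicates and defunct sites and then keeping only websites that satisfy all five criteria at once. The sketch below encodes that logic; the `CandidateSite` record, its boolean fields, and the example sites are hypothetical, since in the study each criterion was judged manually by the authors.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateSite:
    """One retrieved website; every field is a hypothetical coding of a manual judgment."""
    name: str
    duplicate: bool
    defunct: bool
    links_requesters_and_solvers: bool  # criterion (1)
    problem_solving_tasks: bool         # criterion (2): not simple micro-tasks
    contest_is_primary_mode: bool       # criterion (3)
    contest_is_value_unit: bool         # criterion (4)
    money_is_main_incentive: bool       # criterion (5): excludes non-profit OCPs


def meets_inclusion_criteria(site: CandidateSite) -> bool:
    # A site is kept only if it satisfies all five criteria at once.
    return all((
        site.links_requesters_and_solvers,
        site.problem_solving_tasks,
        site.contest_is_primary_mode,
        site.contest_is_value_unit,
        site.money_is_main_incentive,
    ))


def screen(sites: List[CandidateSite]) -> List[CandidateSite]:
    # Drop duplicate and defunct sites first, then apply the inclusion criteria.
    return [s for s in sites
            if not s.duplicate and not s.defunct and meets_inclusion_criteria(s)]


# Hypothetical example sites, not the study's actual sample.
retrieved = [
    CandidateSite("site-a", False, False, True, True, True, True, True),
    CandidateSite("site-b", False, False, True, False, True, True, True),  # micro-tasks
    CandidateSite("site-c", False, True, True, True, True, True, True),    # defunct
    CandidateSite("site-d", False, False, True, True, True, True, False),  # non-profit
]
print([s.name for s in screen(retrieved)])  # ['site-a']
```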

To avoid missing representative OCPs, this study also collected OCPs investigated in prior studies, such as the 22 OCPs listed in [23], the 116 web-based open innovation platforms in [59], and the examples in [15,60]. Applying the same inclusion criteria added six OCP websites to the initial sample. The final sample therefore consists of the 25 OCP websites presented in Table 1. Information about each OCP was collected directly from its webpages on 26–27 July 2020. In Table 1, “/” indicates that the data could not be found.

Table 1.

The selected OCPs.

Of the selected OCPs, 11 focus on logo, web, and graphic design; 7 on creating innovative ideas, concepts, and solutions for various challenges; 3 on video production and idea creation; 3 on architecture and interior design; and only “Topcoder” on software design and development.

3.2. Unit of Analysis and Data Preservation

Selecting the unit of analysis is one of the most basic decisions in a content analysis [61]. This study took OCP websites as research objects and analyzed, one by one, what they do for solvers. Each website is coherent enough to be treated as a whole and small enough to be kept in mind as a context during analysis. Thus, the appropriate unit of analysis is each selected OCP website. For each website, we concentrated on manifest content, such as visible components and the regulations, policies, and rules stated in text. Because such content generally changes little over short periods, we observed, extracted, recorded, and coded it directly from each website’s webpages rather than downloading and archiving it separately. This work was conducted in June–August 2020.

3.3. The Pilot Study

For each unit of analysis, the authors first browsed the website to become acquainted with its major components and their relations. In general, the OCPs’ websites presented five kinds of content: contests and their management; solvers and their management; requesters and their management; basic and operational information about the OCP; and a community/forum. From this content, we identified and extracted the service measures the OCPs had developed for solvers. Furthermore, based on the components of a crowdsourcing system and the factors influencing solvers’ participation described in Section 2, we divided the extracted service measures into three top-level categories: those related to contest management, solver management, and requester management. Then, within each category, specific texts and descriptions of measures were observed, collected, coded, and classified into sub-categories.

3.4. Coding Guide Development and Coding Procedures

We first reviewed six representative websites (“Eÿeka”, “HYVE”, “99designs”, “DesignCrowd”, “Topcoder”, and “Gopillar”) and drafted a coding guide. Because the number of OCPs in this study is modest, our coders worked together to review, refine, and retest the guide, iteratively developing consistent definitions, examples, and codes. Codings subject to disagreement were revisited until agreement was reached. The procedure for coding the content of a single website was as follows.

Step 1: Observing and coding the relevant content on the selected websites one by one. We selected “Eÿeka” as the first website for content analysis. The manifest content on its webpages was observed, examined, and recorded in an Excel sheet. In accordance with the categories defined in the pilot study, we divided the content into contest, solver, and requester management. Content such as illustrative pictures of a specific contest and videos explaining how the platform works was reviewed by the authors but not recorded. In addition, when investigating contest management, we did not review every contest published on “Eÿeka” but selected five ongoing and five completed contests.

Step 2: We treated each recorded text as a meaning unit, read and condensed it, and wrote notes and headings reflecting its core content, that is, codes. Codes with similar meanings were then integrated. Finally, a category was formed to group the codes and named using words that characterize their content.

Step 3: The content to be analyzed is determined by the research aims, so not all text needs to be coded. We focused mainly on the content that OCPs presented to solvers. For example, the intellectual property rights (IPR) policy on “Eÿeka” runs to thousands of words. We did not code all of it because some parts may be of little concern to solvers; only the text specifying who owns solvers’ intellectual property and under what conditions was taken into account. Accordingly, the code is “requesters could own IPR of a solver’s submissions only if they have paid for them”, the sub-category is “Ownership transfer and use of solvers’ submissions”, and the category is “service measures related to contest management”. The meaning units, condensed meaning units, codes, sub-categories, and categories were recorded in the Excel sheet. The authors performed, discussed, and refined the coding process together.
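The coding record described in Steps 1 to 3 can be pictured as one row per meaning unit, carrying its code, sub-category, and category. The sketch below models such a row and writes it as CSV with Python’s standard `csv` module, standing in for the Excel sheet the authors used. The `CodingRecord` name is hypothetical, and the meaning-unit and condensed-text fields are placeholders because the source does not quote them; the code, sub-category, and category are the example given above.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass(frozen=True)
class CodingRecord:
    """One row of the coding sheet: meaning unit -> code -> sub-category -> category."""
    platform: str
    meaning_unit: str
    condensed_meaning_unit: str
    code: str
    sub_category: str
    category: str


def to_csv(records: List[CodingRecord]) -> str:
    # CSV stands in for the Excel sheet used in the study.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(CodingRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


example = CodingRecord(
    platform="Eÿeka",
    meaning_unit="<IPR policy passage on ownership of submissions>",  # placeholder
    condensed_meaning_unit="<condensed version of that passage>",      # placeholder
    code="requesters could own IPR of a solver’s submissions only if they have paid for them",
    sub_category="Ownership transfer and use of solvers’ submissions",
    category="service measures related to contest management",
)
sheet = to_csv([example])
print(sheet)
```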

After the content of one OCP’s webpages had been coded, the next OCP was analyzed following the same steps. The previous coding results could serve as a reference for the new coding work, and in turn, the new coding outcomes updated the previous results. Updates might include renaming some codes and sub-categories or reassigning them to different categories.

To obtain the final list of service measures, we merged each new coding schema into the existing global schema, adding new codes or sub-categories one at a time. The merge proceeded top-down. First, the new schema was added category by category; that is, the codes and sub-categories under one category were updated at a time. Second, for each category, we checked whether the new schema contained new codes; if so, we inserted them directly into the sub-categories they belonged to in the existing schema. Any new sub-categories in the new schema were likewise merged into the existing schema. The final coding outcomes were thus obtained iteratively.
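The top-down merge described above can be sketched as a nested-dictionary update, assuming a schema is represented as category → sub-category → set of codes. This representation and the `merge_schema` name are our assumptions, and the example codes are illustrative. The sketch covers only additions; renaming codes or moving them between categories required the authors’ judgment and is not modeled.

```python
from typing import Dict, Set

# Hypothetical representation of a coding schema: category -> sub-category -> codes.
Schema = Dict[str, Dict[str, Set[str]]]


def merge_schema(global_schema: Schema, new_schema: Schema) -> Schema:
    """Merge one website's coding schema into the global schema, top-down.

    Renaming codes or moving them between categories is not modeled here.
    """
    for category, new_subcategories in new_schema.items():
        # Work one category at a time; create the category if it is new.
        subcategories = global_schema.setdefault(category, {})
        for subcategory, new_codes in new_subcategories.items():
            # New sub-categories are added; new codes are inserted into the
            # sub-category they belong to. Existing codes are left unchanged.
            subcategories.setdefault(subcategory, set()).update(new_codes)
    return global_schema


global_schema: Schema = {
    "contest management": {"Give feedback": {"requester ratings"}},
}
next_site: Schema = {
    "contest management": {
        "Give feedback": {"requester ratings", "markup tools on submissions"},
        "Categorize and navigate contests": {"filter by prize"},
    },
    "solver management": {"Develop a solver show system": {"top solver list"}},
}
merge_schema(global_schema, next_site)
print(sorted(global_schema["contest management"]["Give feedback"]))
# ['markup tools on submissions', 'requester ratings']
```

Using sets makes the merge idempotent. Re-merging a schema that was already added leaves the global schema unchanged, which suits the repeated, one-website-at-a-time iteration.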

4. Results Analysis

The service measures related to contest management, solver management, and requester management are described below.

4.1. Service Measures Related to Contest Management

(1) Launch various contests

Contests are the space in which solvers act and gain benefits, so continually launching a variety of contests is critical to attracting and retaining solvers. Most OCPs offered a variety of contests, whereas several, notably “logomyway”, “Guerra creative”, and “48hourslogo”, specialized in logo design contests. In terms of the number of new contests, OCPs concentrating on complex/innovative problems launch fewer new contests on average, and thus update less frequently, than OCPs focusing on logo design, graphic design, and video production. For instance, “eÿeka” published one to four new contests per week, whereas “Designcrowd” listed 171 ongoing contests. Finally, all OCPs notified solvers when a new contest that might interest them was launched.

(2) Categorize and navigate contests

This service measure helps solvers search for and choose contests that interest them. The criteria for filtering contests commonly include ongoing/completed status, skills, industries, types, prizes, and contest levels such as base, gold, and platinum. Fifteen OCPs (60%) provide this service measure. The other 10 (40%), namely “Guerra creative”, “48hourslogo”, “Logomyway”, “Open innovability”, “Ideaconnection”, “Challenge.gov”, “Userfarm”, “Zooppa”, “GoPillar”, and “Arcbazar”, simply list all contests without categorization.
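The filtering criteria listed above can be sketched as a set of optional filters over contest listings, where an unset filter is ignored. The `ContestListing` fields, the `filter_contests` function, and the example listings are hypothetical illustrations, not any OCP’s actual data model or API.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ContestListing:
    """Hypothetical contest listing carrying the common filter attributes."""
    title: str
    ongoing: bool
    skills: FrozenSet[str]
    industry: str
    contest_type: str
    prize: float
    level: str  # e.g. "base", "gold", "platinum"


def filter_contests(
    listings: List[ContestListing],
    ongoing: Optional[bool] = None,
    skill: Optional[str] = None,
    industry: Optional[str] = None,
    contest_type: Optional[str] = None,
    min_prize: Optional[float] = None,
    level: Optional[str] = None,
) -> List[ContestListing]:
    # Each filter is optional; None means "do not filter on this attribute".
    result = []
    for c in listings:
        if ongoing is not None and c.ongoing != ongoing:
            continue
        if skill is not None and skill not in c.skills:
            continue
        if industry is not None and c.industry != industry:
            continue
        if contest_type is not None and c.contest_type != contest_type:
            continue
        if min_prize is not None and c.prize < min_prize:
            continue
        if level is not None and c.level != level:
            continue
        result.append(c)
    return result


listings = [
    ContestListing("Bakery logo", True, frozenset({"logo design"}), "food", "logo", 300.0, "base"),
    ContestListing("App landing page", True, frozenset({"web design"}), "software", "web", 800.0, "gold"),
    ContestListing("Café interior", False, frozenset({"interior design"}), "food", "interior", 1200.0, "platinum"),
]
print([c.title for c in filter_contests(listings, ongoing=True, min_prize=500)])
# ['App landing page']
```

Platforms that “just list all contests without categorization” correspond to calling this with no filters, which returns every listing.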

(3) Have a contest illustration framework

A structured framework for describing contests helps requesters define their contests accurately and helps solvers understand them. This service measure matters because the clarity and accuracy of a contest description affect solvers’ willingness to engage and even the quality of their submissions. All OCPs had developed a structured framework for contest descriptions. In general, the context, problem, timeline, and total prize were mandatory fields, whereas requester identity and preferences, exemplars, submission evaluation criteria and jury, and a discussion board were optional and varied across OCPs. Furthermore, most OCPs assigned community managers/experts to review and improve contest descriptions, and some OCPs offered requesters tips or success cases for designing contests.

(4) Manage submissions

This measure mainly covers the submission, evaluation, disclosure, and ownership transfer and use of submissions. Table 2 presents specific features of this service measure.

Table 2.

The features of submission lifecycle management.

As seen in Table 2, all OCPs provided a structured guideline for solvers to answer requesters’ questions and present their submissions clearly. Such guidelines always took the form of a customizable input format.

For a given contest, specifying the evaluation jury, process, and criteria helps increase solvers’ perceptions of contest justice, their trust in requesters, and their understanding of how to develop submissions. However, only “eÿeka”, “Open innovability”, “HYVE”, and “Challenge.gov” required requesters to publish a jury and its members, who are responsible for scoring submissions and determining the winners. Several OCPs, such as “eÿeka”, “Open innovability”, “Zooppa”, and “Topcoder”, had their community managers or technical experts review submissions first and advise requesters before the final decisions were made. In addition, seven OCPs asked requesters to disclose some evaluation criteria, or even a full scorecard, as on “Topcoder”.

After a contest was completed, all OCPs announced its winners. However, not all OCPs disclosed all of a contest’s submissions to participating solvers, even though doing so is an important way to demonstrate fairness and to let solvers learn and build skills. Specifically, four OCPs (“Open innovability”, “Herox”, “Challenge.gov”, and “Topcoder”) never displayed a contest’s submissions to participating solvers or visitors. The other OCPs disclosed submissions, but only under certain conditions. For example, “Crowdspring”, “Crowdsite”, and “Freelancer” disclosed a contest’s submissions only if the requester had not set the contest as private. On “ZBJ.COM” and “EPWK”, whether a submission is disclosed is up to the solver.

Finally, regarding ownership, all OCPs published an IPR policy that explicitly stipulates who owns solvers’ submissions and the conditions for using them. In general, solvers must transfer the IPR of their submissions to requesters who have paid for them; otherwise, solvers retain the IPR.

(5) Have a reward and charge system

Rewards and fees are closely tied to the benefits solvers can gain on an OCP and thus receive much of their attention. Monetary/physical prizes and non-monetary prizes are the two major kinds of awards. As shown in Table 3, all OCPs offered monetary/physical rewards. Thirteen OCPs also introduced non-financial incentives in the form of creativity points and/or activity points. These points were calculated from solvers’ performance and behaviors, including the contests they participated in, the number of submissions they made, their winning submissions, and review scores from others. In practice, different OCPs used different terms for them, such as reputation scores on “Crowdspring”, quality assessment scores on “Designhill”, and ideation and production points on “Tongal”.

Table 3.

Prizes and fees defined by the OCPs.

Another feature is the multiple opportunities solvers have to win monetary/physical prizes. Fifteen OCPs suggested that requesters set multiple winners in their contests; most of these OCPs concentrated on contests for generating and developing innovative ideas, concepts, and solutions. In contrast, OCPs focusing on logo, web, and graphic design suggested a single winner per contest, although requesters on these OCPs could add extra winners by paying additional prizes. Besides the prizes awarded by requesters, OCPs such as “Designcrowd”, “eÿeka”, and “HYVE” may reward several non-winners in a contest to recognize positive behaviors. For example, “HYVE” may select a few members as the most valuable participants (MVPs) and award them money for helpful comments, strong and diverse ideas, or other special contributions. Finally, affiliate prizes are another source of rewards on some OCPs, such as “99designs”, “Designcrowd”, “Crowdspring”, “Crowdsite”, “Logomyway”, and “Tongal”. They depend on the number of requesters a solver refers who then successfully launch a contest.

In addition, a few OCPs charge solvers fees, particularly when they win prizes in contests. These include membership fees, service fees, and possible taxes. Specifically, two OCPs, “Freelancer” and “GoPillar”, charged a fee for premium membership, which grants more privileges than regular membership. Service fees were charged to solvers who won a contest or took part in an invite-only contest. The amount varies by OCP: for example, 15% of the prize won on “Designcrowd”, 10% on “Logomyway” and “Freelancer”, 3% on “Arcbazar”, and 20% on “EPWK”. Finally, winners may bear taxes and other costs incurred during payment, which depend on their geographic location and chosen payment method.
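The service-fee percentages reported above translate directly into a winner’s take-home prize. The sketch below assumes a flat percentage of the won prize, rounded to cents. It uses `Decimal` to avoid floating-point rounding in money arithmetic. Premium membership fees, taxes, and payment costs are deliberately left out, since the text notes that the latter depend on each winner’s location and payment method. The `net_prize` function name is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Service-fee rates on a won prize, as reported above for each OCP (2020 snapshot).
SERVICE_FEE_RATES = {
    "Designcrowd": Decimal("0.15"),
    "Logomyway": Decimal("0.10"),
    "Freelancer": Decimal("0.10"),
    "Arcbazar": Decimal("0.03"),
    "EPWK": Decimal("0.20"),
}
CENT = Decimal("0.01")


def net_prize(prize: Decimal, platform: str) -> Decimal:
    """Prize remaining after the platform's service fee, rounded to cents.

    Assumes a flat percentage of the won prize. Taxes and payment costs are not
    modeled because they depend on the winner's location and payment method.
    """
    fee = (prize * SERVICE_FEE_RATES[platform]).quantize(CENT, rounding=ROUND_HALF_UP)
    return prize - fee


for platform in SERVICE_FEE_RATES:
    print(platform, net_prize(Decimal("500.00"), platform))
```

For a 500.00 prize, this leaves 425.00 on Designcrowd, 450.00 on Logomyway and Freelancer, 485.00 on Arcbazar, and 400.00 on EPWK.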

(6) Give feedback

Feedback from requesters, OCPs, and other solvers can strengthen solvers’ participation intentions and even improve the quality of their submissions. All sampled OCPs encouraged and enabled requesters to give feedback on solvers’ actions and submissions through various means: ratings, comments, discussion boards for posting news and communicating, declining unsuitable submissions, and even markup tools for drawing directions directly on a submission.

Whether to give feedback is up to requesters, and some solvers, especially non-winners, receive no feedback at all. According to our investigation, only “eÿeka” and “Open innovability” guaranteed that solvers, or at least winners, receive feedback from requesters.

Another kind of feedback comes from OCPs, typically in the form of community managers’ reviews, scores, or rankings of a solver’s submission. It gives requesters a reference when choosing the winners of their contests. In practice, however, solvers receive feedback from OCPs less often than from requesters.

The last kind of feedback comes from other solvers or visitors. OCPs including “Guerra creative”, “Freelancer”, “Innocentive”, “Herox”, “Tongal”, “Userfarm”, “Zooppa”, “GoPillar”, and “Arcbazar” allowed solvers to give feedback on each submission in the form of votes/likes, comments, or discussion-board posts.

4.2. Service Measures Related to Solver Management

(1) Offer various benefits to solvers

The OCPs offered solvers multiple benefits to meet their expectations and thereby attract and retain them. The main benefits were opportunities to earn money, practice skills, have fun, obtain new work, help others, demonstrate expertise, gain reputation, develop a career, and become part of a community. Of these, the first five were the most emphasized by the OCPs.

OCPs emphasize these benefits differently. For example, “HYVE” claims several kinds of benefits for solvers, whereas a few OCPs present only one or two. In addition, some OCPs, including “Guerra creative”, “48hourslogo”, “Crowdspring”, “Crowdsite”, “Freelancer”, “Tongal”, “Userfarm”, “Cad crowd”, “ZBJ.COM”, and “EPWK”, did not explicitly state benefits for solvers. Where benefits were stated, they were typically conveyed through clear slogans or descriptions on the OCP’s homepage or in a “for solvers/creators/designers” section.

(2) Have different participation channels

Two channels, self-selection and invite-only, were generally provided for solvers to participate in contests. The former is predominant: solvers freely select the contests they are allowed to enter. In the invite-only channel, by contrast, a solver can register for a contest only after being invited by the requester. A variant of this second channel is the one-to-one project. Requesters typically invite solvers according to their skills, ranks, creativity scores, or activity scores. In some cases, OCPs recommend a few suitable solvers to requesters based on their past performance, behavior, and even membership level.

(3) Develop a solver show system

The solver show system offers solvers a stage on which to present themselves on the OCPs. It helps requesters and others get to know a solver and motivates solvers to gain reputation or fame in the community. In this study, we divide this system into three sub-systems: the personal information system, the solver rank system, and the solver showcase system.

All the OCPs had a personal information system, although they differed in which facets of a solver they presented. In general, this sub-system presents two types of information. One is the solver’s basic profile, including username, location, education, skills, work experience, member-since date, following, and followers. The other is the solver’s activities and performance, indicated by contests entered, submissions made and won, earnings, placements, and creativity/activity scores. Furthermore, some OCPs allowed solvers to run their own shops offering services with specific price tags. These services demonstrate a solver’s preferences, skills, and the kinds of work they are capable of and good at.

Solvers who are active and perform well are added to the top solver list. On the one hand, top solvers have more opportunities to capture requesters’ attention when requesters invite solvers to contribute to their contests. On the other hand, they may receive additional benefits such as faster payout processing, prioritized support, and increased visibility. In addition, 15 OCPs, namely “Guerra creative”, “Designcrowd”, “Crowdspring”, “Designhill”, “Logomyway”, “Freelancer”, “eÿeka”, “Innocentive”, “HYVE”, “Tongal”, “Cad crowd”, “Arcbazar”, “Topcoder”, “ZBJ.COM”, and “EPWK”, allowed users to filter top solvers by industry, creativity and activity scores, and number of participations and wins, while the other OCPs simply listed these solvers without sorting.

Among these top solvers, OCPs may interview those who have performed very well recently or over the long run. Six OCPs published success stories of such solvers to praise them and motivate other solvers. For instance, “99designs” developed the 99awards, which showcase the best of the best created by its talented and diverse designers from all over the world. “eÿeka” published its creators of the month and shared their stories. “Tongal” held an annual celebration of the brilliant people in its community and the outstanding creative work they completed each year.

(4) Grade solvers and serve them differently

On a few OCPs, solvers were assigned different levels according to one of two principles: performance-based or membership-based. Under the performance-based principle, for example, solvers were graded into top, mid, and entry levels on “99designs”; supernova, mega star, super star, and rising star on “Guerra creative”; and level 1 to level 3 on “Crowdsite”. The major indicators used to determine solvers’ grades under this principle are the number of contests won, the number of submissions made, and rating scores. Under the membership-based principle, OCPs set solvers’ grades according to their membership, and solvers must pay a fee to become members on OCPs such as “Freelancer”, “Zooppa”, and “GoPillar”.

Solvers at different grades enjoy different privileges. For instance, “99designs” rewarded high-level solvers with additional benefits, including faster payout processing, finalist payments, beta-testing opportunities, prioritized support, and increased client visibility across the platform. “Designhill” allowed pro designers to participate in pro contests, display a badge on their portfolios, and be the first to test exciting new features.

(5) Develop a support system

The solver support system was developed to help solvers carry out their activities. It comprises community support, technique support, and participation support.

Community blogs, forums, and social media generally serve three functions: publishing the latest news, policies, new contests, and stories of successful contests and featured authors; facilitating communication among solvers and requesters; and presenting scientific articles and reports related to design ideas and skills. They may help solvers feel like members of a community, which is one of the motivational factors that stimulate solvers’ engagement and also an important benefit that some OCPs claim for solvers. All of the OCPs had social media accounts on Facebook, Twitter, Instagram, or WeChat. However, only 21 OCPs, that is, all except “Ideaconnection”, “HYVE”, “GoPillar”, and “Arcbazar”, developed a community blog or forum for solvers. Further, to date only “ZBJ.COM” and “EPWK” have developed an app through which solvers can participate and contribute.

Commonly, creative techniques, tips, and tricks were presented to solvers in the community blog or forum. These help solvers develop submissions easily and effectively and train and improve their skills. Such tutorials generally take the form of text, exemplars, or successful contests/submissions. For example, “Crowdspring” presented articles such as “the 9 biggest packaging design trends of 2020” and “5 packaging design mistakes and how to avoid them”, and “99designs” gave examples of logo and t-shirt ideas in its designer resource center.

The last kind of support, participation support, comprises IPR regulations, registration help, an FAQ knowledge base, illustrations of how to participate, a contest integrity policy, and a solvers’ code of conduct. In particular, registration was free on all OCPs, and all provided clear help and step-by-step instructions. However, some OCPs reviewed and qualified solvers. For instance, “99designs” reviewed all applications against a strict set of quality standards, and its acceptance rate is highly competitive; a designer had two chances to apply before their application was permanently declined. “48hourslogo” required solvers to complete a logo design test before becoming designers. “Logomyway” required registering solvers to provide a link to at least 10 logo designs. The FAQ knowledge base and the illustrations of how to participate tell solvers how to enter a contest and address their major concerns. The main goals of the contest integrity policy and code of conduct are to protect solvers, prevent ineligible participation and cheating, and help solvers handle conflicts during participation. Further, the contest integrity policy covers non-circumvention, privacy, confidentiality, and warranties in order to prevent violations of law and other misconduct. All OCPs published these regulations with similar functions, even though their presentations differ.

4.3. Service Measures Related to Requester Management

On their websites, the OCPs described extensively which services they offer requesters and how. However, some of these services were already covered under contest and solver management above, and others, such as different price packages for launching contests, are of little relevance to solver service and were therefore excluded from this study. We focus on the following three service measures, which aim to ensure the trustworthiness, transparency, and justice of requesters.

(1) Disclose requesters’ identity

Disclosure of a requester’s identity is a key factor that may influence solvers’ perception of the requester’s trustworthiness and fame. Among the selected OCPs, three, namely “Designcrowd”, “Logomyway”, and “Arcbazar”, did not disclose any information about requester identity in their contest illustrations. Most OCPs showed solvers only requesters’ nicknames or ID numbers, together with an overview of their recent activity, including how many contests they had held, review scores, when they were last seen, and when they became members. In contrast, a few OCPs, such as “eÿeka”, “Innocentive”, “HYVE”, and “Zooppa”, noted that disclosing requester identity helps promote solvers’ participation; accordingly, these OCPs consistently presented the identity of requesters and what they had done in relation to the contests. To some degree, OCPs that disclosed requester identity tended to serve large or famous companies, while those that did not tended to serve small and medium-sized enterprises or even individuals.

(2) Authenticate requesters

Requester authentication aims to verify that requesters are genuine. Some OCPs required requesters to verify their email address, telephone number, and even business license, and these verified items were marked on the contests they launched. In addition, solvers can judge a requester from the disclosure of his/her identity and from the OCP’s declaration about the kinds of requesters it serves. In general, OCPs such as “eÿeka”, “Innocentive”, and “Challenge.gov” served large companies, progressive and innovation-driven companies, government agencies, and nonprofit organizations, whereas OCPs focusing on interior design and graphic design, especially logo design, served small businesses, startups, and individuals. Participating in contests held by large, famous companies or government agencies not only gives solvers a feeling of security in the virtual environment but also motivates them to gain reputation and acquire certification for future development.

(3) Require guaranteed prizes

This service measure requires requesters who plan to launch a contest to pay the contest’s prizes to the OCP before the contest opens to solvers. Solvers then receive prizes from the OCP if they are selected as winners. Contests whose prizes have been paid in advance generally carry a “guaranteed” label. This measure prevents requesters from delaying or even withholding payment after a contest is completed, and lets solvers participate without hesitation. All of the OCPs encouraged requesters to make their contests guaranteed, and, according to our observations of their websites, most requesters did so.

5. Discussion and Implications

5.1. Discussion of the Results

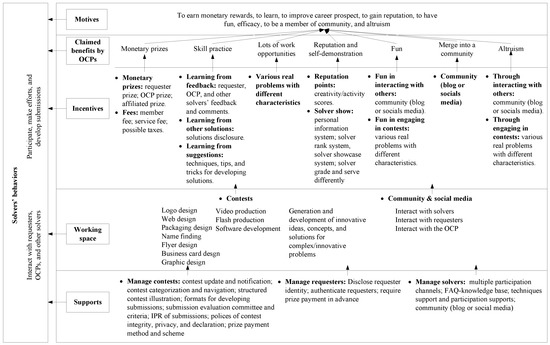

This study identified 14 measures developed by the OCPs to serve solvers. Together, these service measures form a whole with one major purpose: facilitating solvers’ participation in and contribution to the OCPs. Each service measure is indispensable to this whole, but they may play different roles. Drawing on the Motive-Incentive-Activation-Behavior (MIAB) model [33,62], we discuss the relations and roles of the service measures, as shown in Figure 2. The MIAB model posits that an individual performs a particular behavior when his or her motives are activated. To stimulate that behavior, the right mix of incentives must be provided, that is, a mix of incentives that matches the individual’s motives.

Figure 2.

The identified service attributes and their relations.

As shown in Figure 2, these service measures play three roles: incentives, working space, and supports.

(1) Incentives: match solvers’ motives and activate them to act

On an OCP, solvers may engage in various behaviors, from selecting a contest to enter [40,63], exerting effort [20,64], and submitting solutions [11,12] to interacting with others [25,65]. These behaviors are driven by solvers’ motives, which mainly include earning monetary rewards, learning, improving career prospects, gaining reputation, having fun, efficacy, being a member of a community, and altruism [11,31,33].

In accordance with the MIAB model, OCPs should develop and present a range of incentives that appeal to solvers’ motives, and a particular motive can be matched by one or more incentives. As indicated in Figure 2, some of the service measures identified in this study act as incentives. In particular, the main benefits claimed by the OCPs, including earning money, practicing skills, having fun, acquiring new work, helping others, and becoming part of a community, directly match solvers’ motives. Under each motive and claimed benefit, specific measures demonstrate how the OCPs fulfill it. For instance, to match the motive and benefit of earning monetary rewards, the OCPs set not only a variety of prizes (requester prizes, OCP prizes, and affiliated prizes) but also several kinds of fees (membership fees, service fees, and possible taxes). Regarding the motive and benefit of practicing skills, the OCPs offered solvers three ways to learn: feedback, disclosed solutions, and suggestions including techniques, tips, and tricks for developing solutions. As another example, virtual prizes (creativity and activity points) and the solver show system (personal information system, solver rank system, and solver showcase system) are the two kinds of service measures that match the motives of gaining reputation and self-demonstration.

(2) Working space: offer solvers opportunities to act

We found that the aforementioned incentives are closely tied to contests and communities, which act as the working space. In other words, solvers can act and gain benefits only by participating in published contests or acting in the community. For example, participating in and winning contests is the dominant way for solvers to practice skills, earn money, or increase their reputation. Solvers can also gain skills and have fun by interacting with requesters, OCPs, and other solvers in the community. On our selected OCPs, four major kinds of contests were launched for solvers: product design (graphic, logo, and web design), video production, software development, and the generation and development of innovative ideas, concepts, and solutions for complex/innovative problems. Most OCPs developed a community, sometimes in the form of a blog, forum, or social media-based community, through which solvers acquire the latest news, contests, notifications, and other information and, more importantly, interact with others. The community is very helpful for increasing solvers’ sense of belonging and enjoyment [66,67]. In short, the working space is the foundation that connects incentives and supports, and both are designed around it.

(3) Supports: support solvers to act

To facilitate solvers’ behavior in the working space, the OCPs developed service measures that act as supports; like the measures overall, these can be grouped into contest, solver, and requester management. They provide support throughout the whole process, covering solvers’ pre-participation, contest selection, submission development, evaluation and winner selection, payment, IPR transfer and use, and the handling of any conflicts and disputes. For example, as shown in Figure 2, the FAQ knowledge base, contest updates and notifications, contest categorization and navigation, and participation channels help solvers know how to participate. Submission formats, structured contest illustrations, and submission evaluation criteria help solvers develop their submissions effectively. Further, disclosure of requester identity, requester authentication, prepaid prizes, and policies on IPR, contest integrity, privacy, and declarations encourage solvers to participate without hesitation or worry.

5.2. Implications for Practice

Based on the results and analyses above, we derive some practical suggestions for OCPs and solvers. Requesters are the OCPs’ customers and largely determine the development and even the scale of the OCPs, so the OCPs designed many service measures for them. However, the service measures for solvers differ from those for requesters, and we believe the results of this study have few direct implications for requesters; they therefore receive little attention in this section.

5.2.1. For OCPs

Establishing the working space, especially the contests, is a foundational step that precedes decisions on incentives and supports. In terms of the skills required to complete a contest, seven of our selected OCPs are dedicated to specialized contests such as logo design, architecture and interior design, and software design. Eight OCPs focus on graphic design contests and also dabble in related fields such as web design, naming, and mobile app development. The other OCPs, such as “eÿeka”, “Innocentive”, and “HYVE”, publish highly diverse contests covering problems in science, technology, engineering, and medicine. Choosing between diversification and specialization of contests is an important decision for OCPs because it influences the number of contests open for participation, the average contest prize, and the requesters who launch contests. For instance, OCPs focusing on large complex/innovative problems mainly serve the biggest brands and launch a small number of new contests each week compared with OCPs focusing on logo and graphic design.

After determining which contest categories to focus on, the next critical step is to design incentives that appeal to solvers’ motives and are therefore weighed most heavily by solvers when deciding whether to engage [33,68]. The OCPs have to decide which incentives to provide and in what forms. This study mainly focuses on incentives including monetary rewards, fees, learning, reputation, and becoming part of a community because they are closely tied to solvers’ extrinsic motives [33]. For each incentive, the OCPs have developed some service measures. For instance, six major service measures affect the likelihood of participation and how much a solver can gain from engaging in an OCP: the requester prize, OCP prize, multiple winners per contest, affiliated prize, service fee, and membership fee. Some OCPs have adopted and implemented only some of these service measures. OCPs that want to improve their service to further attract and retain solvers should therefore make these decisions carefully; our suggestions are given in Table 4.

Table 4.

Design decisions of service measures.

Meanwhile, OCPs should also pay close attention to supports, which are equally important for solvers’ participation. For example, the contest illustration framework not only affects how conveniently requesters can publish contests but also determines how well solvers can understand and capture contest information. Contest illustrations have been shown to influence the quantity and quality of solvers’ submissions [20,21,40]. Other supports, including publishing the evaluation criteria and jury, disclosing requester identity, and prepaid prizes, can reflect requester fairness and trustworthiness [16]. They help increase solvers’ sense of being treated fairly and respectfully [32,69] and thereby promote their participation [18,44]. In addition, the FAQ knowledge base and policies on IPR, contest integrity, privacy, and declarations can improve solvers’ perception of the OCP’s service attitude and help solvers know how to participate and contribute and what to do if they encounter an issue.

5.2.2. For Solvers

The major service measures identified in this study can give solvers an overview of these OCPs and what they offer. They can also serve as criteria for solvers when selecting their preferred OCPs. In particular, our analysis suggests three aspects to consider: service measures related to the working space, incentives, and supports. For the working space, solvers can mainly look at the number of contests open for participation on an OCP: the more such contests there are, the more likely solvers are to find a suitable one. Service measures related to incentives also deserve close attention because they are directly associated with solvers’ expected benefits. As indicated in [31], different solvers have different motives and roles on an OCP and are thus concerned with different incentives. In this study, we mainly present service measures related to extrinsic incentives, including monetary rewards, fees, learning, and reputation, since these are explicitly presented on an OCP. However, solvers with intrinsic motivation should also check other, intrinsic aspects such as the attractiveness of the user interface, how enjoyable the website design is, and how fascinating the contests are. Finally, we suggest that solvers examine the service measures related to supports, especially IPR policies, the FAQ knowledge base, contest integrity, and the code of conduct, which specify what solvers can do and how to handle issues they encounter.

5.3. Limitations and Future Research

This study employed content analysis as its main empirical method, which has its own weaknesses. We can report only what we found on the OCPs’ websites, so we considered and coded only tangible content while ignoring intangibles such as the layout and navigation of the whole website and the friendliness of the user interface. These intangibles are associated with solvers’ perceived ease of use of an OCP and also influence their willingness to participate [24]. Moreover, we could only infer the OCPs’ intentions and considerations behind the service measures from the static content on their websites. Another limitation is that we identified only the major service measures relevant to contest, solver, and requester management; we did not cover all information presented on the OCPs, nor did we report the specific values of each measure. For example, we did not report the exact service fees charged by the OCPs that had this attribute. These omissions may limit the generalizability of our findings for comparing different OCPs and for helping OCPs design and improve their service measures as a technical handbook. Finally, this study concentrated on OCPs for problem-solving contests. Some OCPs, such as “Lego idea”, “kaggle”, and “Threadless”, which mix crowdsourcing contests with user-generated content, were not included. In addition, the crowdsourcing market and OCPs’ websites are changing rapidly. For instance, “Userfarm” became unreachable and was integrated into Filmmaster Productions several months after we initially coded it. These conditions indicate that our analysis may not generalize to current OCPs or to all OCPs built around crowdsourcing contests for problem-solving. However, we took care to propose a methodology that retrieves the important service measures likely to influence potential solvers’ participation.

The research findings and limitations suggest directions for future research on the service measures OCPs adopt for solvers. First, an integrated approach combining content analysis with interviews of OCP managers and solvers could be employed to develop a more comprehensive list of service measures. Second, future research could determine how important each identified service measure is in influencing solvers’ participation from the solvers’ perspective, especially for solvers with different roles and motives. This would help reveal the difference between what OCPs do and how solvers perceive it. Another area for future research is the relationship among solvers’ behaviors, solvers’ motives, and specific service measures.

6. Conclusions

This study concentrated on OCPs for problem-solving contests and identified their service measures for solvers. Solvers are likely to search and examine these service measures directly and repeatedly, so the measures may affect their decision to participate in an OCP. By conducting a content analysis of 25 selected OCPs focusing on problem-solving contests, we identified 14 major service measures. These service measures were categorized into three groups: contest management, solver management, and requester management. More specifically, the first category contains six service measures, namely launching various contests, categorizing and navigating contests, having a contest illustration framework, managing submissions, having a reward and charge system, and giving feedback. The second category includes five service measures: offering various benefits to solvers, having different participation channels, developing a solver show system, grading solvers and serving them differently, and developing a solver support system. The last category consists of disclosing requesters’ identity, authenticating requesters, and requiring guaranteed prizes. We further discussed the roles of these service measures in solvers’ participation. They play three kinds of roles: appealing to solvers’ motives and activating them to act, offering solvers opportunities to act, and supporting solvers in carrying out actions. Our study contributes to research on solvers’ participation in crowdsourcing from a practical perspective. Specifically, the results complement existing studies, which mainly investigated theoretically how solvers’ motives and external factors influence their behaviors. In addition, the results can serve as a reference for OCPs seeking to improve and develop their service measures, and as evaluation criteria for solvers comparing OCPs and choosing their preferred ones.

Author Contributions

X.Z. is responsible for this research including conceptualization, methodology, data collection and analysis, and writing. In addition, X.Z. is the recipient of the funding that supported the publishing of this study. L.D. is mainly responsible for data collection and analysis and improving writing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 71802002, and the Anhui Education Department, grant number KJ2019A0137. The APC was funded by grant 71802002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Estellés-Arolas, E.; González-Ladrón-De-Guevara, F. Towards an integrated crowdsourcing definition. J. Inf. Sci. 2012, 38, 189–200.

- Bassi, H.; Lee, C.J.; Misener, L.; Johnson, A.M. Exploring the characteristics of crowdsourcing: An online observational study. J. Inf. Sci. 2020, 46, 291–312.

- Terwiesch, C.; Xu, Y. Innovation Contests, Open Innovation, and Multiagent Problem Solving. Manag. Sci. 2008, 54, 1529–1543.

- Brabham, D.C. Crowdsourcing as a Model for Problem Solving. Converg. Int. J. Res. New Media Technol. 2008, 14, 75–90.

- Segev, E. Crowdsourcing contests. Eur. J. Oper. Res. 2020, 281, 241–255.

- Khasraghi, H.J.; Aghaie, A. Crowdsourcing contests: Understanding the effect of competitors’ participation history on their performance. Behav. Inf. Technol. 2014, 33, 1383–1395.

- Blohm, I.; Zogaj, S.; Bretschneider, U.; Leimeister, J.M. How to Manage Crowdsourcing Platforms Effectively? Calif. Manag. Rev. 2018, 60, 122–149.

- Sun, Y.; Fang, Y.; Lim, K.H. Understanding sustained participation in transactional virtual communities. Decis. Support Syst. 2012, 53, 12–22.

- Wang, X.; Khasraghi, H.J.; Schneider, H. Towards an Understanding of Participants’ Sustained Participation in Crowdsourcing Contests. Inf. Syst. Manag. 2020, 37, 213–226.

- Zhao, Y.C.; Zhu, Q. Effects of extrinsic and intrinsic motivation on participation in crowdsourcing contest: A perspective of self-determination theory. Online Inf. Rev. 2014, 38, 896–917.

- Acar, O.A. Motivations and solution appropriateness in crowdsourcing challenges for innovation. Res. Policy 2019, 48, 103716.

- Mack, T.; Landau, C. Submission quality in open innovation contests—An analysis of individual-level determinants of idea innovativeness. R&D Manag. 2020, 50, 47–62.

- Zhu, J.J.; Li, S.Y.; Andrews, M. Ideator Expertise and Cocreator Inputs in Crowdsourcing-Based New Product Development. J. Prod. Innov. Manag. 2017, 34, 598–616.

- Chua, R.Y.J.; Roth, Y.; Lemoine, J.-F. The Impact of Culture on Creativity: How Cultural Tightness and Cultural Distance Affect Global Innovation Crowdsourcing Work. Adm. Sci. Q. 2014, 60, 189–227.

- Bockstedt, J.; Druehl, C.; Mishra, A. Problem-solving effort and success in innovation contests: The role of national wealth and national culture. J. Oper. Manag. 2015, 36, 187–200.

- Franke, N.; Keinz, P.; Klausberger, K. “Does This Sound Like a Fair Deal?”: Antecedents and Consequences of Fairness Expectations in the Individual’s Decision to Participate in Firm Innovation. Organ. Sci. 2012, 24, 1495–1516.

- Zou, L.; Zhang, J.; Liu, W. Perceived justice and creativity in crowdsourcing communities: Empirical evidence from China. Soc. Sci. Inf. 2015, 54, 253–279.

- Heo, M.; Toomey, N. Motivating continued knowledge sharing in crowdsourcing: The impact of different types of visual feedback. Online Inf. Rev. 2015, 39, 795–811.

- Wooten, J.O.; Ulrich, K.T. Idea Generation and the Role of Feedback: Evidence from Field Experiments with Innovation Tournaments. Prod. Oper. Manag. 2017, 26, 80–99.

- Steils, N.; Hanine, S. Recruiting valuable participants in online IDEA generation: The role of brief instructions. J. Bus. Res. 2019, 14–25.

- Vrgović, P.; Jošanov-Vrgović, I. Crowdsourcing user solutions: Which questions should companies ask to elicit the most ideas from its users? Innovation 2017, 19, 452–462.

- Mazzola, E.; Acur, N.; Piazza, M.; Perrone, G. “To Own or Not to Own?” A Study on the Determinants and Consequences of Alternative Intellectual Property Rights Arrangements in Crowdsourcing for Innovation Contests. J. Prod. Innov. Manag. 2018, 35, 908–929.

- Kohler, T. How to Scale Crowdsourcing Platforms. Calif. Manag. Rev. 2017, 60, 98–121.

- Johnson, E.; Liew, C.L. Engagement-oriented design: A study of New Zealand public cultural heritage institutions crowdsourcing platforms. Online Inf. Rev. 2020, 44, 887–912.

- Ebner, W.; Leimeister, J.M.; Krcmar, H. Community engineering for innovations: The ideas competition as a method to nurture a virtual community for innovations. R&D Manag. 2009, 39, 342–356.

- Ren, J.; Ozturk, P.; Yeoh, W. Online Crowdsourcing Campaigns: Bottom-Up versus Top-Down Process Model. J. Comput. Inf. Syst. 2019, 59, 266–276.

- Acar, O.A. Harnessing the creative potential of consumers: Money, participation, and creativity in idea crowdsourcing. Mark. Lett. 2018, 29, 177–188.

- Malone, T.; Laubacher, R.; Dellarocas, C. The collective intelligence genome. IEEE Eng. Manag. Rev. 2010, 38, 38–52.

- Schenk, E.; Guittard, C. Towards a characterization of crowdsourcing practices. J. Innov. Econ. 2011, 7, 93–107.

- Malone, T.W.; Nickerson, J.V.; Laubacher, R.J.; Fisher, L.H.; Boer, P.; Han, Y.; Ben Towne, W. Putting the Pieces Back Together Again: Contest Webs for Large-Scale Problem Solving. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, Portland, OR, USA, 25 February–1 March 2017.

- Battistella, C.; Nonino, F. Exploring the impact of motivations on the attraction of innovation roles in open innovation web-based platforms. Prod. Plan. Control. 2013, 24, 226–245.

- Alam, S.L.; Campbell, J. Temporal Motivations of Volunteers to Participate in Cultural Crowdsourcing Work. Inf. Syst. Res. 2017, 28, 744–759.

- Leimeister, J.M.; Huber, M.; Bretschneider, U.; Krcmar, H. Leveraging Crowdsourcing: Activation-Supporting Components for IT-Based Ideas Competition. J. Manag. Inf. Syst. 2009, 26, 197–224.

- Bagheri, S.K.; Raoufi, P.; Eshtehardi, M.S.A.; Shaverdy, S.; Akbarabad, B.R.; Moghaddam, B.; Mardani, A. Using the crowd for business model innovation: The case of Digikala. R&D Manag. 2020, 50, 3–17.

- Zhang, X.; Gong, B.; Cao, Y.; Ding, Y.; Su, J. Investigating participants’ attributes for participant estimation in knowledge-intensive crowdsourcing: A fuzzy DEMATEL based approach. Electron. Commer. Res. 2020, 50, 3–17.

- Zhang, X.; Su, J. A combined fuzzy DEMATEL and TOPSIS approach for estimating participants in knowledge-intensive crowdsourcing. Comput. Ind. Eng. 2019, 137, 106085.

- Ye, H.; Kankanhalli, A. Solvers’ participation in crowdsourcing platforms: Examining the impacts of trust, and benefit and cost factors. J. Strat. Inf. Syst. 2017, 26, 101–117.

- Liu, Q.; Du, Q.; Hong, Y.; Fan, W.; Wu, S. User idea implementation in open innovation communities: Evidence from a new product development crowdsourcing community. Inf. Syst. J. 2020, 30, 899–927.

- Frey, K.; Lüthje, C.; Haag, S. Whom Should Firms Attract to Open Innovation Platforms? The Role of Knowledge Diversity and Motivation. Long Range Plan. 2011, 44, 397–420.

- Zheng, H.; Li, D.; Hou, W. Task Design, Motivation, and Participation in Crowdsourcing Contests. Int. J. Electron. Commer. 2011, 15, 57–88.

- Durward, D.; Blohm, I.; Leimeister, J.M. The Nature of Crowd Work and its Effects on Individuals’ Work Perception. J. Manag. Inf. Syst. 2020, 37, 66–95.

- Jian, L.; Yang, S.; Ba, S.L.; Lu, L.; Jiang, L.C. Managing the Crowds: The Effect of Prize Guarantees and In-Process Feedback on Participation in Crowdsourcing Contests. MIS Q. 2019, 43, 97–112.

- Zhu, H.; Kock, A.; Wentker, M.; Leker, J. How Does Online Interaction Affect Idea Quality? The Effect of Feedback in Firm-Internal Idea Competitions. J. Prod. Innov. Manag. 2019, 36, 24–40.

- Boons, M.; Stam, D.; Barkema, H.G. Feelings of Pride and Respect as Drivers of Ongoing Member Activity on Crowdsourcing Platforms. J. Manag. Stud. 2015, 52, 717–741.

- Malhotra, A.; Majchrzak, A. Managing Crowds in Innovation Challenges. Calif. Manag. Rev. 2014, 56, 103–123.

- Schörpf, P.; Flecker, J.; Schönauer, A.; Eichmann, H. Triangular love-hate: Management and control in creative crowdworking. New Technol. Work. Employ. 2017, 32, 43–58.

- Wen, Z.; Lin, L. Optimal Fee Structures of Crowdsourcing Platforms. Decis. Sci. 2016, 47, 820–850.

- Täuscher, K. Leveraging collective intelligence: How to design and manage crowd-based business models. Bus. Horiz. 2017, 60, 237–245.

- Pollok, P.; Lüttgens, D.; Piller, F.T. Attracting solutions in crowdsourcing contests: The role of knowledge distance, identity disclosure, and seeker status. Res. Policy 2019, 48, 98–114.

- Martinez, M.G. Solver engagement in knowledge sharing in crowdsourcing communities: Exploring the link to creativity. Res. Policy 2015, 44, 1419–1430.

- Camacho, N.; Nam, H.; Kannan, P.; Stremersch, S. Tournaments to Crowdsource Innovation: The Role of Moderator Feedback and Participation Intensity. J. Mark. 2019, 83, 138–157.

- Piezunka, H.; Dahlander, L. Idea Rejected, Tie Formed: Organizations’ Feedback on Crowdsourced Ideas. Acad. Manag. J. 2018, 62, 503–530.

- Chan, K.W.; Li, S.Y.; Zhu, J.J. Fostering Customer Ideation in Crowdsourcing Community: The Role of Peer-to-peer and Peer-to-firm Interactions. J. Interact. Mark. 2015, 31, 42–62.

- Karyotakis, M.-A.; Antonopoulos, N. Web Communication: A Content Analysis of Green Hosting Companies. Sustainability 2021, 13, 495.

- Colbert, S.; Thornton, L.; Richmond, R. Content analysis of websites selling alcohol online in Australia. Drug Alcohol Rev. 2020, 162–169.

- Gerodimos, R. Mobilising young citizens in the UK: A content analysis of youth and issue websites. Inf. Commun. Soc. 2008, 11, 964–988.

- Elo, S.; Kyngäs, H. The qualitative content analysis process. J. Adv. Nurs. 2008, 62, 107–115.

- Hsieh, H.-F.; Shannon, S.E. Three approaches to qualitative content analysis. Qual. Health Res. 2005, 15, 1277–1288.

- Battistella, C.; Nonino, F. Open innovation web-based platforms: The impact of different forms of motivation on collaboration. Innovation 2012, 14, 557–575.

- Dissanayake, I.; Zhang, J.; Gu, B. Task Division for Team Success in Crowdsourcing Contests: Resource Allocation and Alignment Effects. J. Manag. Inf. Syst. 2015, 32, 8–39.

- Graneheim, U.; Lundman, B. Qualitative content analysis in nursing research: Concepts, procedures and measures to achieve trustworthiness. Nurse Educ. Today 2004, 24, 105–112.

- Von Rosenstiel, L. Grundlagen der Organisationspsychologie: Basiswissen und Anwendungshinweise (Basics of Organizational Psychology); Schäffer-Poeschel: Stuttgart, Germany, 2007.

- Pee, L.; Koh, E.; Goh, M. Trait motivations of crowdsourcing and task choice: A distal-proximal perspective. Int. J. Inf. Manag. 2018, 40, 28–41.

- Liang, H.; Wang, M.-M.; Wang, J.-J.; Xue, Y. How intrinsic motivation and extrinsic incentives affect task effort in crowdsourcing contests: A mediated moderation model. Comput. Hum. Behav. 2018, 81, 168–176.

- Ogink, T.; Dong, J.Q. Stimulating innovation by user feedback on social media: The case of an online user innovation community. Technol. Forecast. Soc. Chang. 2019, 144, 295–302.

- Tinati, R.; Luczak-Roesch, M.; Simperl, E.; Hall, W. An investigation of player motivations in Eyewire, a gamified citizen science project. Comput. Hum. Behav. 2017, 73, 527–540.

- Brabham, D.C. Moving the Crowd at Threadless. Inf. Commun. Soc. 2010, 13, 1122–1145.

- Feng, Y.; Ye, H.J.; Yu, Y.; Yang, C.; Cui, T. Gamification artifacts and crowdsourcing participation: Examining the mediating role of intrinsic motivations. Comput. Hum. Behav. 2018, 81, 124–136.

- Sun, Y.; Wang, N.; Yin, C.; Zhang, J.X. Understanding the relationships between motivators and effort in crowdsourcing marketplaces: A nonlinear analysis. Int. J. Inf. Manag. 2015, 35, 267–276.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).