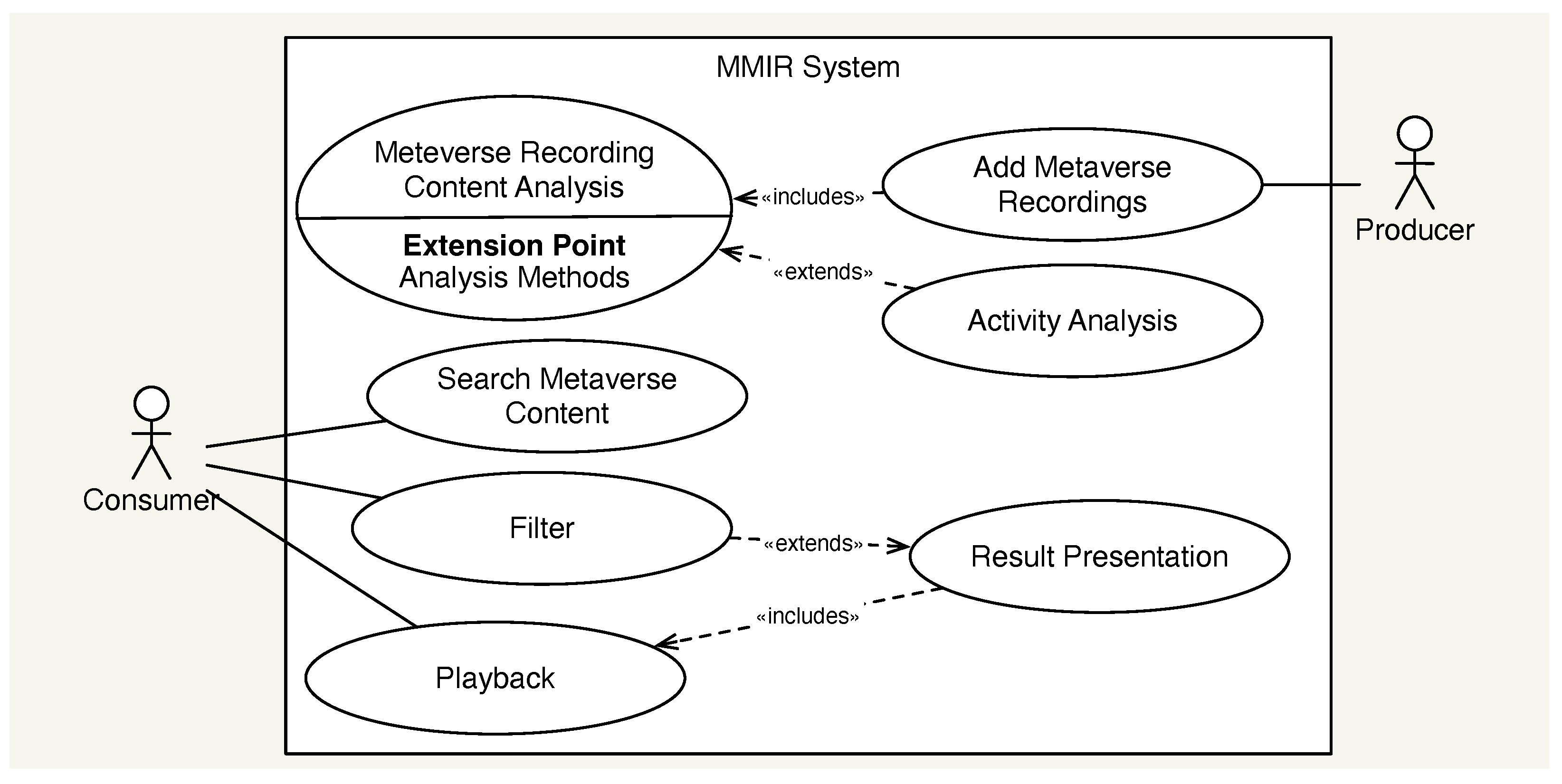

Our assumption is that SRD and PD can support MVR content analysis. To validate this, we first address the content analysis of MVRs and model a system to record MVRs. Based on the research in lifelog retrieval and video retrieval, it can be assumed that activities play a distinct role in retrieval. For example, the search for specific activities is relevant for VR training and cybersecurity. Hence, further use cases are focused on the identification of activities, i.e., activity analysis.

3.1. Content Analysis of Metaverse Recordings

With regard to the research question of this article, three hypotheses can be defined: First, Feature Extraction (FE) of SRD and/or PD can extract features that cannot be extracted by FE of MMCO. Second, because MMCO is limited to the modalities vision and audio, the results of FE for MMCO should be improved by the contribution of an FE of SRD and PD, or even be replaced by FE of SRD and PD if they deliver enough features to fulfill user queries. Third, FE of SRD and PD requires substantially less computing power. Each hypothesis is addressed in the following paragraphs.

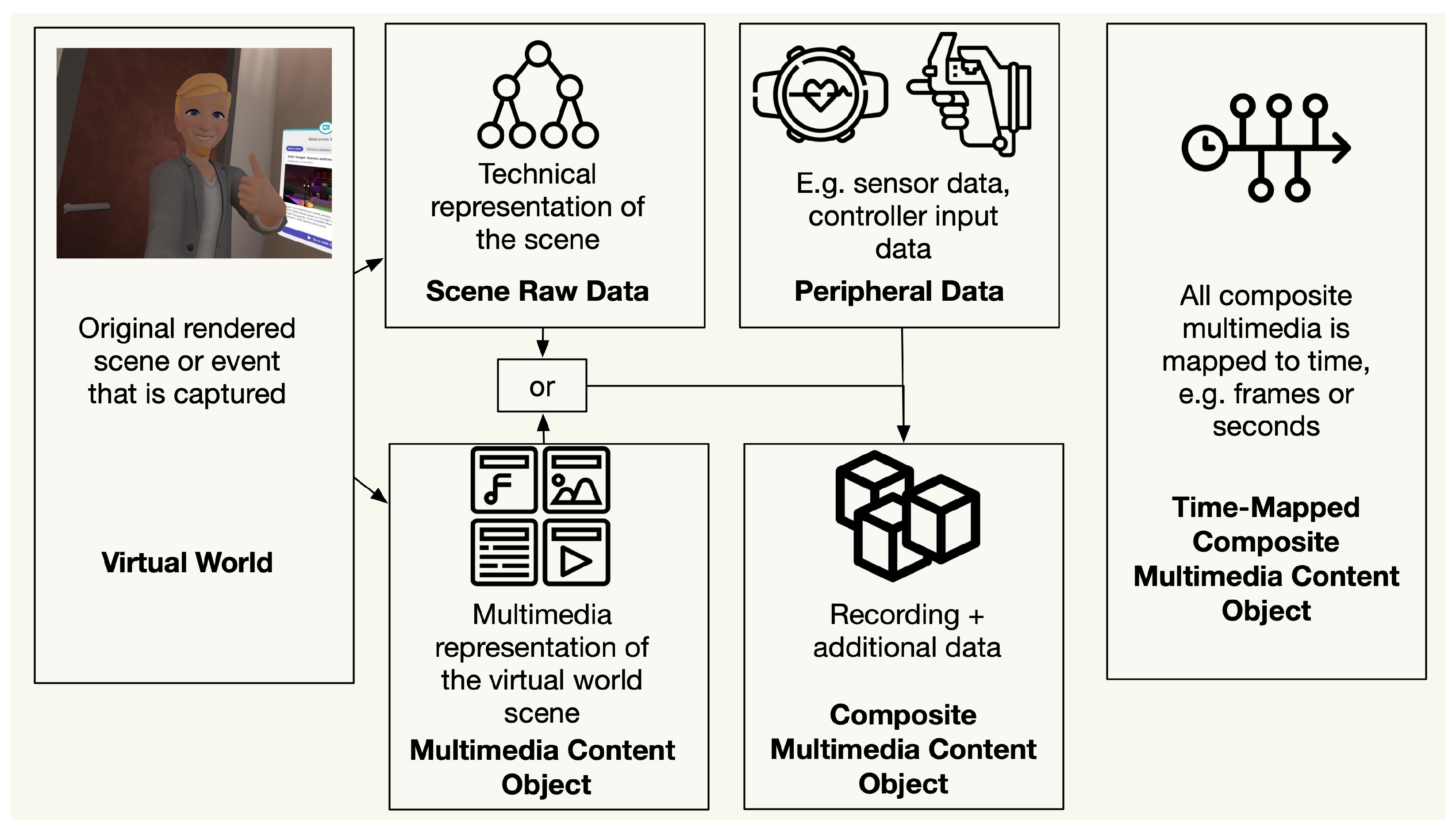

Content analysis is an important initial step in MMIR to build a semantic understanding to process user queries effectively [51]. It extracts the features of the content, in our case MVRs, and integrates them into an index against which user queries are matched. For MVR retrieval, content analysis can analyze all elements of an MVR. MVRs can consist of the elements MMCO, SRD, and PD.

An exemplary MVR can therefore contain an MMCO, which itself can consist of video and audio, while audio can contain multiple tracks, such as the game audio and the user's microphone. SRD can comprise many elements of different types, such as an RSG, 3D models, or network data transmitted between clients, called netcode [32]. PD can include actor data, such as controller inputs, and sensor data, e.g., heart rate (HR). All listed elements can contain relevant information, which can be extracted in the content analysis.

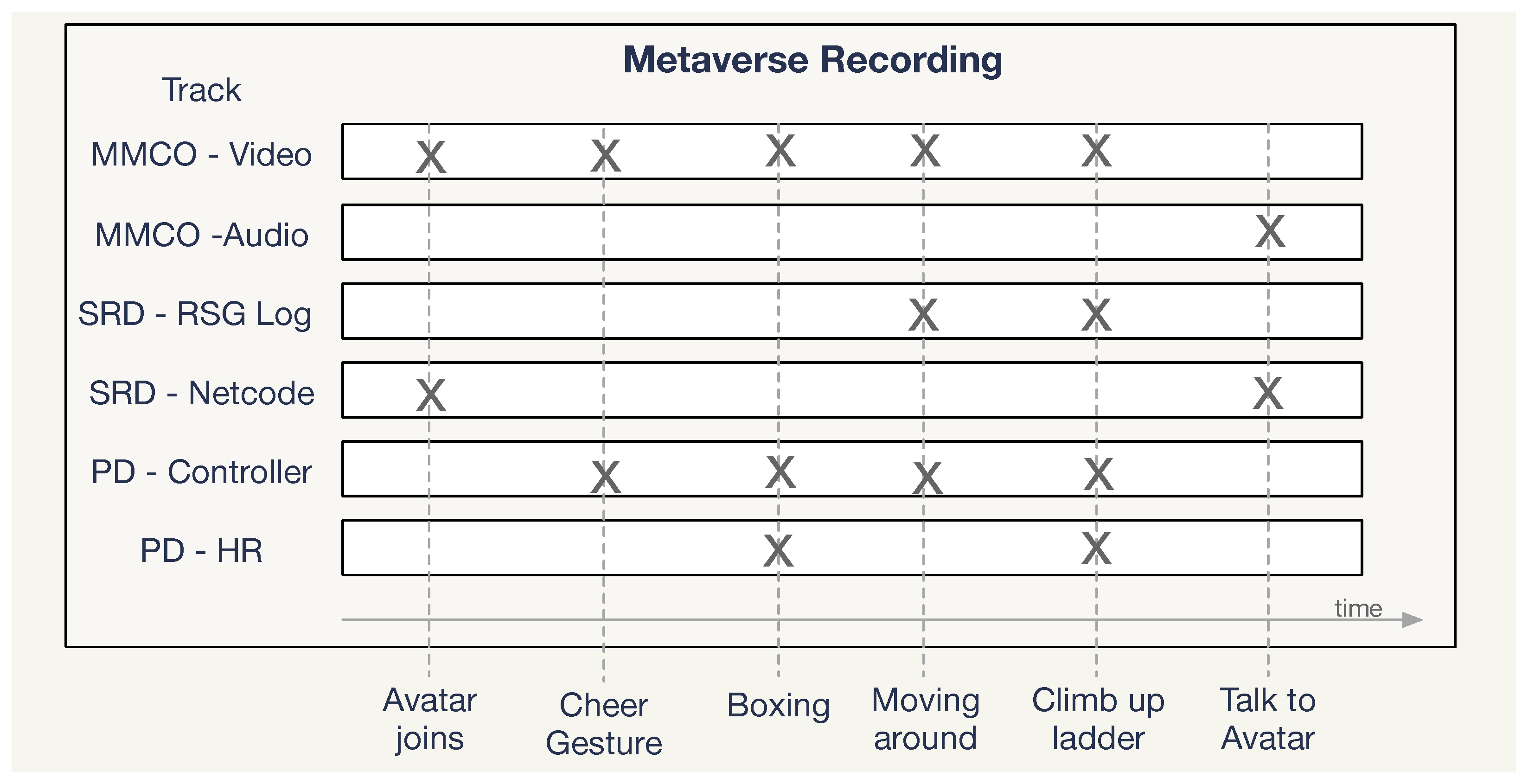

All elements of this example MVR can be modeled according to the well-established Strata model [52] as tracks in a recording, visualized in Figure 4. Different actions and events in the MVR are included in the tracks. For example, if another avatar joins the world, this is visualized in the video and is included in the exchanged data, the SRD netcode. Another example is a boxing activity of a user, which is visible as a hand movement in the video, but also as movements recorded from the controller and as a high HR on the HR sensor.
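As a minimal sketch of this track view, the strata could be represented as labeled time spans per track. The track names, labels, and time values below are hypothetical and are not taken from Figure 4.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stratum:
    """One annotated time span on a track (times in seconds)."""
    track: str   # e.g. "video", "netcode", "controller", "hr_sensor"
    label: str   # what the span describes
    start: float
    end: float


def strata_at(strata, t):
    """Return all strata that are active at time t, sorted by track name."""
    active = [s for s in strata if s.start <= t <= s.end]
    return sorted(active, key=lambda s: s.track)


# Hypothetical annotations for one recording (assumed values).
recording = [
    Stratum("video", "hand movement", 12.0, 20.0),
    Stratum("controller", "fast controller motion", 12.5, 19.5),
    Stratum("hr_sensor", "high heart rate", 14.0, 25.0),
    Stratum("video", "avatar enters view", 30.0, 31.0),
    Stratum("netcode", "avatar joined", 29.8, 29.9),
]

for s in strata_at(recording, 15.0):
    print(f"{s.track}: {s.label}")
```

Querying a point in time returns every track that carries information about the same activity, which is the property the later fusion of MMCO, SRD, and PD relies on.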

Let F be the universe of all features that a feature extraction pipeline can emit from any MVR. A standard reference set contains those features whose semantics are agreed upon during system design. Each element is a search factor and is represented in the index without additional learning.

The content analysis aims to extract all relevant information from the MVR. An MVR contains an ordered set of features. If every available feature can be detected using a feature extraction pipeline, we call this Optimal MMCO Feature Extraction (Optimal MMCO FE). Optimal MMCO FE would return exactly the features contained in the MVR, but achieving optimal FE is often difficult. Some difficulties are evident in the form of erroneous predictions, such as ghost predictions or mispredicted classes, objects that remain undetected because of errors, or undetected objects of unknown classes. Typically, only a subset of the contained features can be extracted, so the features that a method can realistically extract form a proper subset of the features contained in the MVR.

With regard to the hypothesis of this article, the modeling work explains that SRD and PD contain relevant additional data for the content analysis. Hence, FE of SRD and PD can support MVR content analysis.

Even if limited in robustness (see Section 2.5), FE of MMCO can deliver a significant set of relevant features. In theory, FE of SRD, or FE of SRD in combination with PD, is able to deliver relevant features if SRD contains the information. When a standardized format for SRD and PD is missing, the data can be captured as structured or unstructured data. Feature extraction for SRD, or for SRD in combination with PD, is unknown. In a simple example, SRD and PD are a structured data object, such as a JSON object, containing the recorded data. Another example could be a log file that contains SRD and PD. An FE of SRD could then be a simple filter algorithm that searches for relevant log entries and reads the data.
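The filter idea can be sketched as follows, assuming a log with one JSON object per line. The field names (`t`, `source`, `event`, `avatar`) and the log content are hypothetical, since no standardized SRD/PD format exists.

```python
import json

# Hypothetical log: one JSON object per line, mixing SRD and PD entries.
LOG = """\
{"t": 1.0, "source": "netcode", "event": "avatar_join", "avatar": "guest-1"}
{"t": 1.2, "source": "controller", "event": "button_press", "button": "trigger"}
{"t": 2.0, "source": "hr_sensor", "event": "sample", "bpm": 96}
not a json line
{"t": 3.5, "source": "netcode", "event": "avatar_join", "avatar": "guest-2"}
"""


def extract_features(log_text, wanted_events):
    """Filter-based FE: keep log entries whose event type is in wanted_events."""
    features = []
    for line in log_text.splitlines():
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # tolerate unstructured lines in the same log
        if isinstance(entry, dict) and entry.get("event") in wanted_events:
            features.append((entry["t"], entry["event"], entry.get("avatar")))
    return features


joins = extract_features(LOG, {"avatar_join"})
print(joins)
```

No learned model is involved here: the feature is read directly from the recorded data, which is why such an extraction is expected to be cheap.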

Another form of support is the enhancement of FE of MMCO by FE of PD. For example, detecting a boxing gesture based on images alone is difficult. However, if the visual data is combined with HR and hand controller movement data, the effectiveness of such a detection can be increased.
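One way to picture this enhancement is a simple rule-based fusion of the three cues. The sketch below is an illustration only: the weights, thresholds, and function names are assumptions and do not describe the method used in this article.

```python
def boxing_score(visual_conf, heart_rate_bpm, controller_speed_m_s,
                 hr_threshold=120.0, speed_threshold=1.5):
    """Combine three weak cues into one score in [0, 1].

    All thresholds and weights are illustrative assumptions.
    """
    hr_cue = 1.0 if heart_rate_bpm >= hr_threshold else 0.0
    motion_cue = 1.0 if controller_speed_m_s >= speed_threshold else 0.0
    # Weighted average: the visual classifier contributes half of the score,
    # the two PD cues a quarter each.
    return 0.5 * visual_conf + 0.25 * hr_cue + 0.25 * motion_cue


def is_boxing(visual_conf, heart_rate_bpm, controller_speed_m_s, threshold=0.6):
    """Decide on boxing once the fused score reaches the threshold."""
    score = boxing_score(visual_conf, heart_rate_bpm, controller_speed_m_s)
    return score >= threshold


# Same uncertain visual confidence, without and with supporting PD.
print(is_boxing(0.4, 95.0, 0.3))
print(is_boxing(0.4, 140.0, 2.2))
```

With an uncertain visual confidence of 0.4, the decision changes only when the heart rate and controller speed support it.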

Overall, the theoretical target quality Q of the FE of MVR, which extracts exactly all of the desired features, represents the fraction of desired features that are correctly detected, with 1 as the optimum. The difference between the optimum precision of 1 and the actual precision can be defined as the overall error, that is, 1 − Q. Since this calculation over any possible E with any thinkable class in an MVR is unknown, we remove the unknown classes from the calculation. Hence, Q and the overall error can be described with precision and recall, or combined as the F1 score. This provides strong metrics to compare different FE approaches.
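For illustration, precision, recall, and the F1 score can be computed from a set of desired features and a set of extracted features. The feature names and the example sets below are hypothetical.

```python
def precision_recall_f1(desired, extracted):
    """Compare a set of extracted features against the desired features."""
    desired, extracted = set(desired), set(extracted)
    true_positives = len(desired & extracted)
    precision = true_positives / len(extracted) if extracted else 0.0
    recall = true_positives / len(desired) if desired else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: two correct detections, one ghost prediction,
# and two desired features that were missed.
desired = {"boxing", "avatar_joins", "avatar_gesture", "avatar_talking"}
extracted = {"avatar_joins", "avatar_talking", "ghost_object"}

p, r, f1 = precision_recall_f1(desired, extracted)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

The ghost prediction lowers precision, while the two missed features lower recall, so both error types named above are visible in the metrics.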

Given the recorded features Boxing, Avatar joins, Avatar gesture, and Avatar Talking from Figure 4, the feature Avatar joins can be detected by FE of MMCO only if the join happened in the field of view of the recording. But it can be detected by FE of SRD with high confidence. Similarly, another of the listed features can be recognized by one of these FE methods. In the case of Boxing, if reduced to high heart rate activity, neither FE of MMCO nor FE of SRD can extract a high heart rate, but FE of PD can. However, a high heart rate alone does not indicate boxing, but a combination of the FE methods can extract features that can be analyzed or fused to produce higher-quality features. We assume that SRD contains more features than MMCO, since MMCO contains the same concepts, but only the ones in the recorded field of view. PD delivers only additional features and contributes to the other FE, but alone achieves a lower recall value. Hence, the ranking of information is assumed to be SRD first, followed by MMCO, and then PD.

After all, this theoretical model illustrates a simplified formalization of the FE process. In real scenarios, FE methods do not recognize features deterministically, but provide, for example, ambiguous results or results with low recognition reliability. Further processing, such as multimodal feature fusion [53] or fuzzy logic [54], could be applied to improve the overall result, which is left to future work.

During querying, a user statement q is mapped by the query interface to a subset of the search factors. Retrieval, therefore, becomes the problem of finding every recording whose extracted feature multiset contains this subset.
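The containment check can be sketched with Python's `collections.Counter` as a multiset. The index layout, recording ids, and factor names are assumptions for illustration.

```python
from collections import Counter


def matches(query_factors, extracted_features):
    """True if every query factor occurs at least as often as requested."""
    need = Counter(query_factors)
    have = Counter(extracted_features)
    return all(have[factor] >= count for factor, count in need.items())


def retrieve(query_factors, index):
    """Return the ids of all recordings whose features contain the query."""
    return [mvr_id for mvr_id, feats in index.items()
            if matches(query_factors, feats)]


# Hypothetical index: recording id -> extracted feature multiset (as a list).
index = {
    "mvr-001": ["avatar_joins", "avatar_joins", "boxing"],
    "mvr-002": ["avatar_talking"],
    "mvr-003": ["boxing", "avatar_joins"],
}

print(retrieve(["boxing", "avatar_joins"], index))
print(retrieve(["avatar_joins", "avatar_joins"], index))
```

Treating the features as a multiset rather than a set allows queries such as "at least two avatar joins" to be answered by the same containment test.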

Regarding the computational effort of the MVR content analysis, the hypothesis is that FE of SRD and PD is less computationally intensive than FE of MMCO. A simple comparison of the runtime provides an indication of whether they differ by an order of magnitude. Hence, with the performance P measured in runtime, FE of SRD and FE of PD are each expected to require less runtime than FE of MMCO. A further hypothesis is that SRD is much smaller in file size than MMCO and, hence, is more efficient to store. Because feature extraction and indexing are executed independently for each MVR, the pipeline can be parallelized across large collections and needs to run only when new recordings arrive. The small log-file footprint of SRD and PD and the CPU-bound extraction contribute to reducing input/output and compute costs, reinforcing system-wide scalability.

3.2. MVR Process

As described previously, no standard for the acquisition of rendering data to document MVRs is available. To explore the opportunities and challenges for MVRs, we describe an initial approach to recording SRD and PD for MVRs.

For the SRD, the RSG is particularly interesting, since it contains all objects in a scene with many attributes, such as size, position, and materials. Hence, the scene graph could provide equivalent data for object detection. A change in attribute values in the scene graph can be used to detect events, such as the movement of an object by a change of position data.
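A minimal sketch of such an event detection compares two scene graph snapshots and reports objects whose position changed. The flat snapshot layout (object id mapped to a position tuple) and the distance threshold are simplifying assumptions.

```python
def moved_objects(previous, current, min_distance=0.01):
    """Compare two scene-graph snapshots and report objects that moved.

    Each snapshot maps an object id to its (x, y, z) position.
    """
    events = []
    for obj_id, new_pos in current.items():
        old_pos = previous.get(obj_id)
        if old_pos is None:
            continue  # object is new in this snapshot, not a movement
        distance = sum((a - b) ** 2 for a, b in zip(new_pos, old_pos)) ** 0.5
        if distance >= min_distance:
            events.append((obj_id, round(distance, 3)))
    return events


# Hypothetical snapshots: the ball moves, the table stays, a lamp appears.
snapshot_t0 = {"ball": (0.0, 1.0, 0.0), "table": (2.0, 0.0, 2.0)}
snapshot_t1 = {"ball": (3.0, 1.0, 4.0), "table": (2.0, 0.0, 2.0),
               "lamp": (1.0, 2.0, 1.0)}

print(moved_objects(snapshot_t0, snapshot_t1))
```

The same comparison could be applied to other attributes, such as size or material, to derive further event types.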

For PD, the input controllers are a first point of interest, because, with these, a player interacts with a scene. Hence, capturing the main input devices, such as mouse and keyboard on a computer and VR hand controllers and head movements on a VR headset, is required. The taxonomy of interaction techniques for immersive worlds [55] provides a categorization of modalities, which we use as categories of input controller data to record. In the same way that input controllers provide a source of information about activity, other players in virtual worlds also influence the scene and interaction flow. Hence, the netcode is a relevant source of information. For VR worlds, the recorded hand controller data (PD) and netcode (SRD) data provide relevant interaction information, which can be easily extracted by FE. Hence, this supports the thesis that PD supports FE for MMCO and SRD.

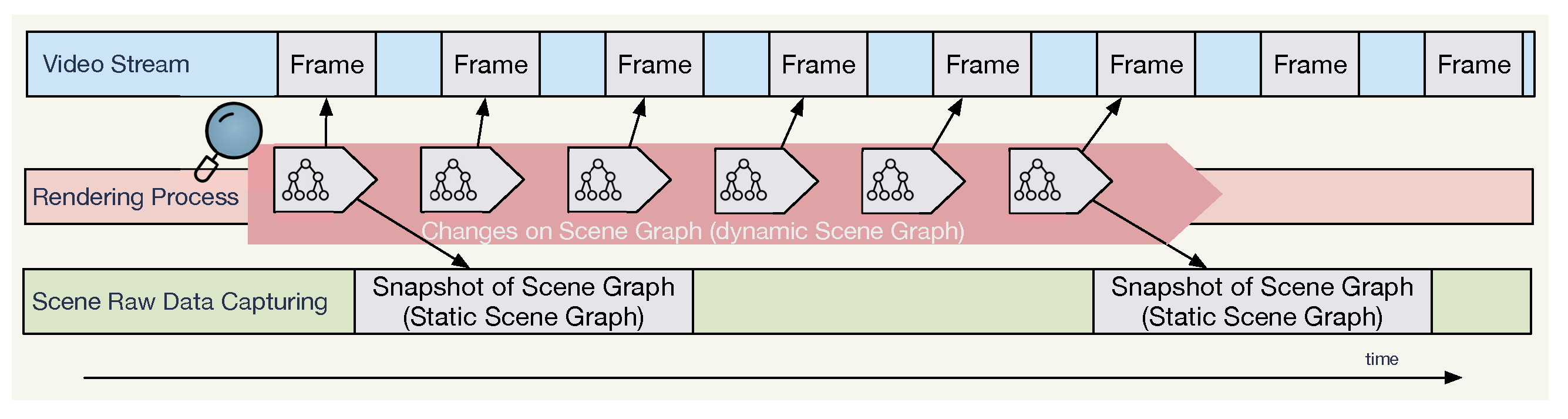

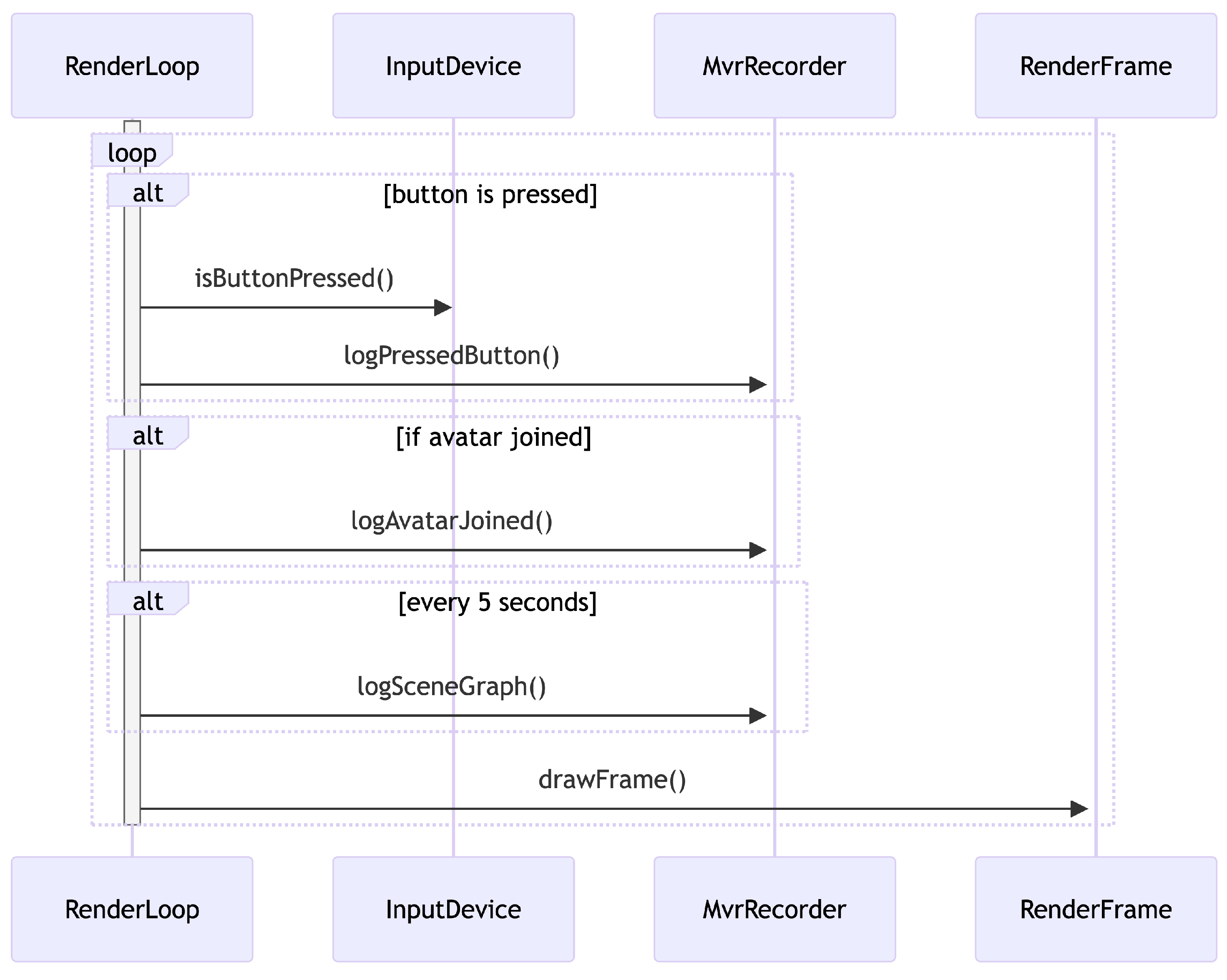

The rendering process typically incorporates a render loop, which renders every single frame based on the events and modifications of elements in the scene. In order to capture information in the rendering loop, a script can be inserted into the loop that retrieves and saves certain information.

Figure 5 shows the process, where the RendererLoop is modified to check whether a button is pressed on an InputDevice and, if so, the information is sent to the MvrRecorder. Likewise, if an avatar joins the scene (event), this is sent to the MvrRecorder and recorded. At regular intervals, for example, every 5 s, the scene graph is captured by the MvrRecorder.
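The recording process can be sketched with a simulated render loop. Only the name MvrRecorder is taken from the text. The method names, the record layout, and the frame tuples are hypothetical, and a real integration would hook into the engine's own loop instead.

```python
class MvrRecorder:
    """Collects timestamped records; a real recorder would persist them."""

    def __init__(self, snapshot_interval_s=5.0):
        self.snapshot_interval_s = snapshot_interval_s
        self.records = []
        self._last_snapshot = None

    def record(self, t, kind, payload):
        self.records.append({"t": t, "kind": kind, "payload": payload})

    def maybe_snapshot(self, t, scene_graph):
        """Capture the scene graph if the interval has elapsed."""
        due = (self._last_snapshot is None
               or t - self._last_snapshot >= self.snapshot_interval_s)
        if due:
            self.record(t, "scene_graph", dict(scene_graph))
            self._last_snapshot = t


def render_loop(frames, recorder):
    """Simulated loop: each frame is (time, pressed_buttons, events, scene_graph)."""
    for t, pressed_buttons, events, scene_graph in frames:
        for button in pressed_buttons:
            recorder.record(t, "input", button)    # PD: controller input
        for event in events:
            recorder.record(t, "event", event)     # SRD: e.g. avatar join
        recorder.maybe_snapshot(t, scene_graph)    # SRD: periodic scene graph
        # The actual rendering of the frame would happen here.


frames = [
    (0.0, [], [], {"ball": (0, 1, 0)}),
    (2.0, ["trigger"], [], {"ball": (0, 1, 0)}),
    (4.0, [], ["avatar_join:guest-1"], {"ball": (1, 1, 0)}),
    (6.0, [], [], {"ball": (2, 1, 0)}),
]
recorder = MvrRecorder()
render_loop(frames, recorder)
print([(r["t"], r["kind"]) for r in recorder.records])
```

Inputs and events are recorded as they occur, while the scene graph is only sampled, which keeps the recorded volume small at the cost of temporal resolution for scene changes.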

The PD from external devices can be captured by reading the sensor values during recording, as described, or by temporarily storing them on the device and consolidating them with the MVR later.

The persistence of the captured data as an MVR can be achieved by serialization and logging with standard methods, such as writing to the system standard log, writing to a separate log file, or transmitting to a log service over the network. A hierarchical graph representing the scene can be easily converted into a textual format by indenting each node according to its hierarchy level and listing the nodes sequentially.
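The indentation-based conversion can be sketched as a short recursive function. The node layout (a dictionary with `name`, optional `attrs`, and optional `children`) is an assumption for illustration.

```python
def serialize(node, level=0, indent="  "):
    """Return the lines of an indented text dump of a scene-graph node."""
    attrs = " ".join(f"{k}={v}" for k, v in node.get("attrs", {}).items())
    line = f"{indent * level}{node['name']}" + (f" {attrs}" if attrs else "")
    lines = [line]
    for child in node.get("children", []):
        lines.extend(serialize(child, level + 1, indent))
    return lines


# Hypothetical scene graph with two hierarchy levels below the root.
scene = {
    "name": "Scene",
    "children": [
        {"name": "Table", "attrs": {"position": (2, 0, 2)}, "children": [
            {"name": "Lamp", "attrs": {"position": (2, 1, 2)}},
        ]},
        {"name": "Avatar", "attrs": {"id": "guest-1"}},
    ],
}

print("\n".join(serialize(scene)))
```

Each line of the result can be written to a log as is, and the hierarchy can be recovered from the indentation depth.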

Based on the modeling work, the relevant points for a standard for MVR recording can be described. A crucial point is whether the app decides which data it provides or whether the recorder specifies the data it requests. The standard should define the interface for transferring the data to be recorded from the app to the recorder. The standard should also include a container format for persisting MVRs, a definition of data structures for scene graphs, and data structures for PD.

In summary, the recording of MVRs can be achieved using deep integration into the application.