Innovations in Multidimensional Force Sensors for Accurate Tactile Perception and Embodied Intelligence

Abstract

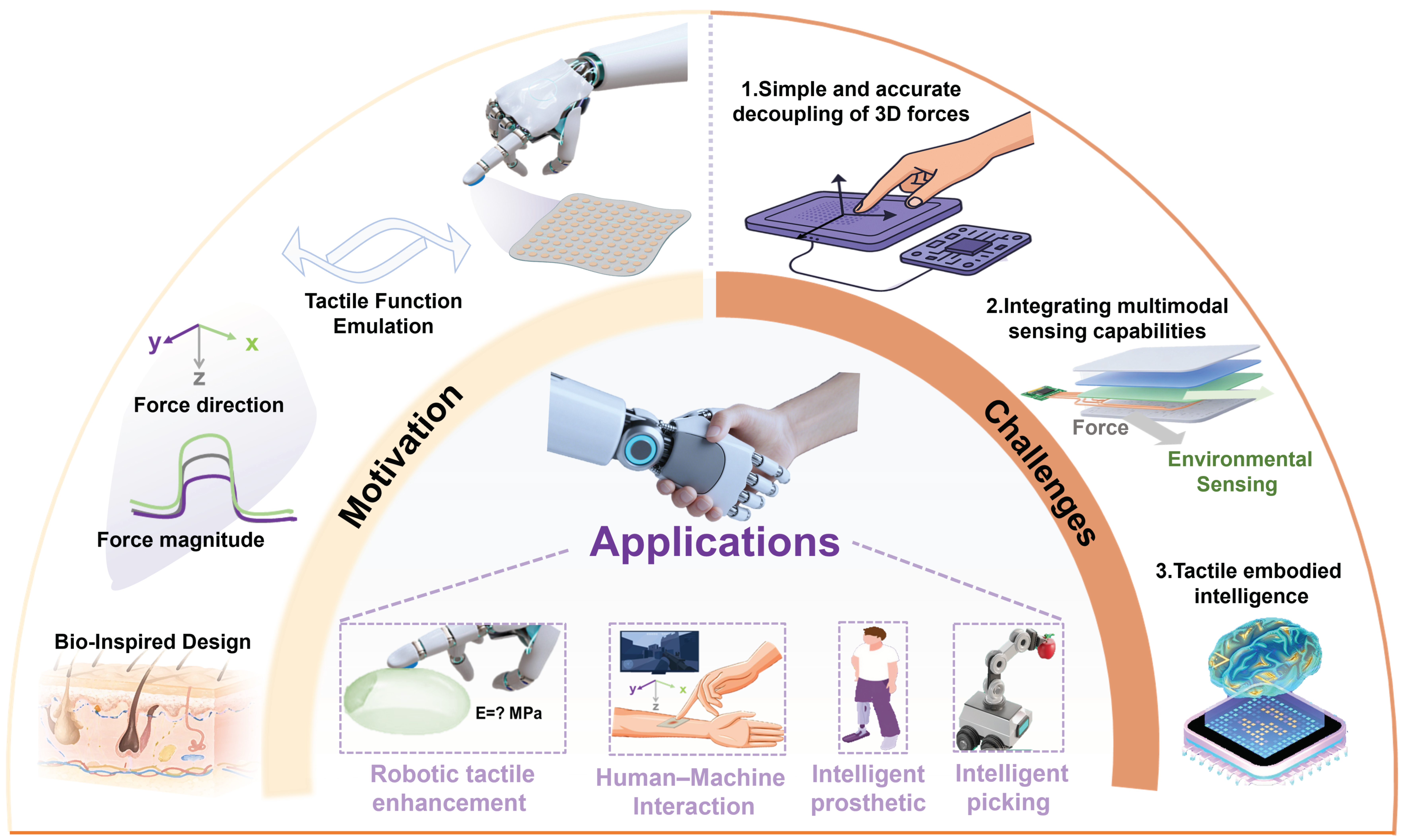

1. Introduction

2. Structural Design Strategies of Multidimensional Force Sensors

2.1. In-Plane Segment Design

2.2. Multilayer Stacking Design

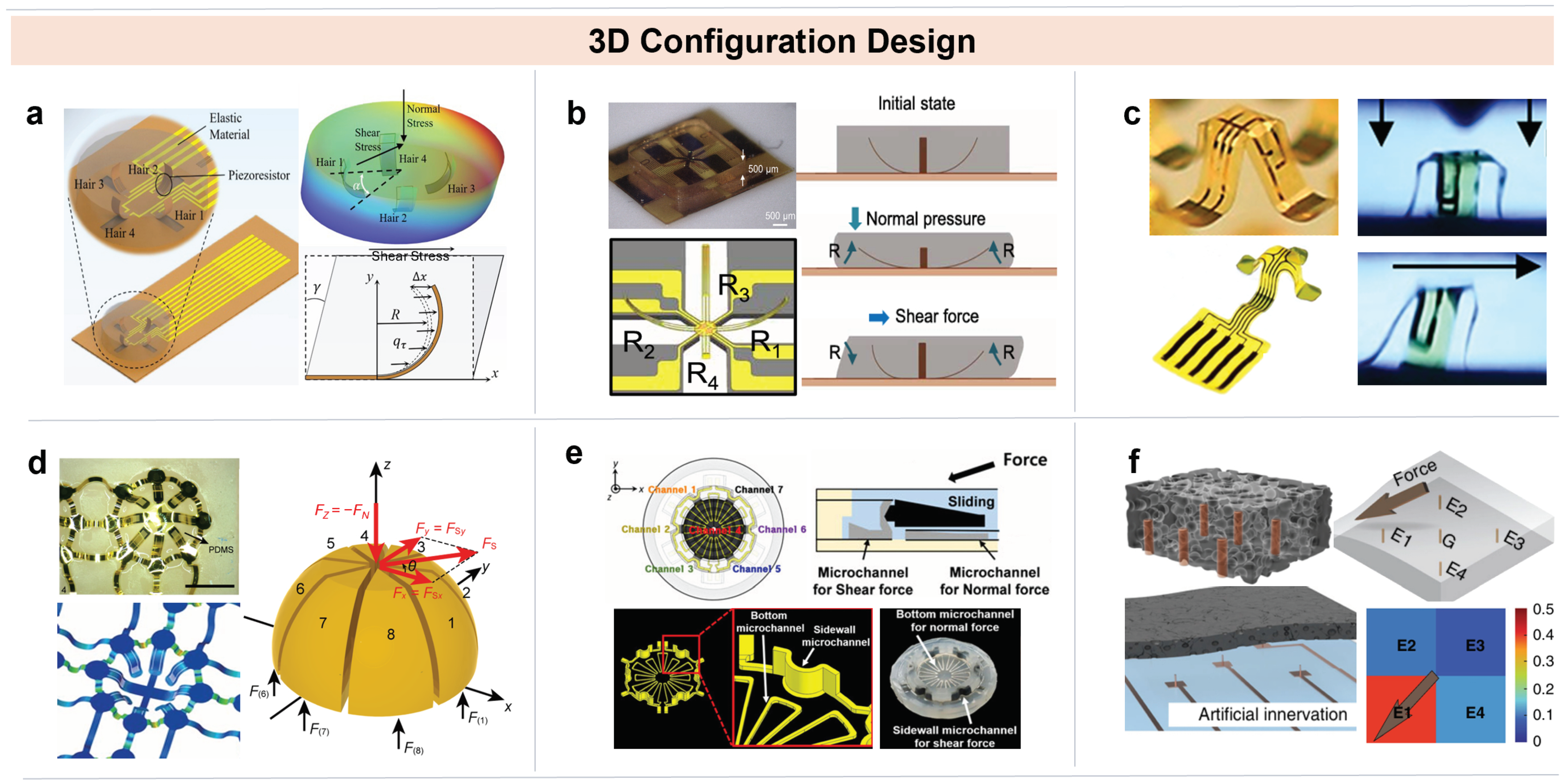

2.3. 3D Configuration Design

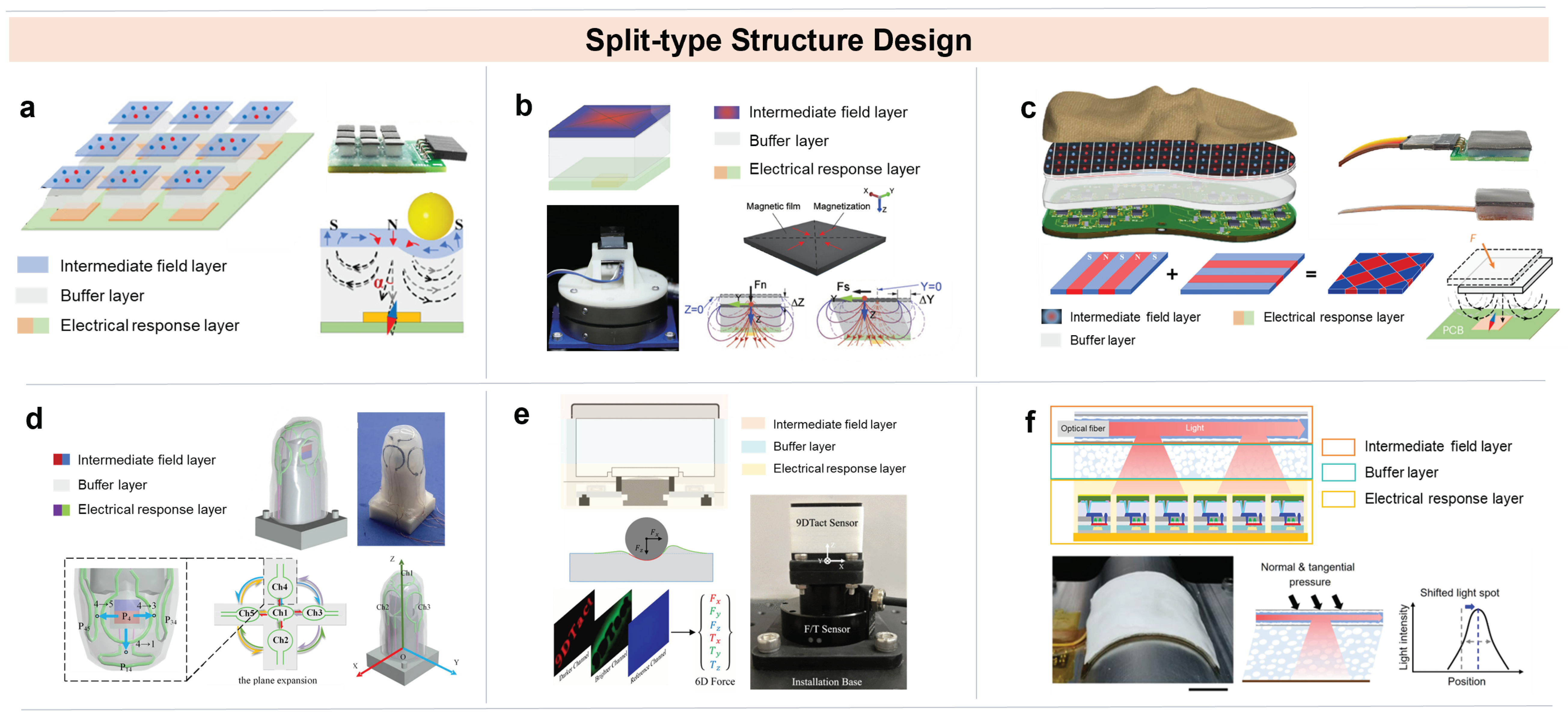

2.4. Split-Type Structure Design

2.5. Other Structure Design

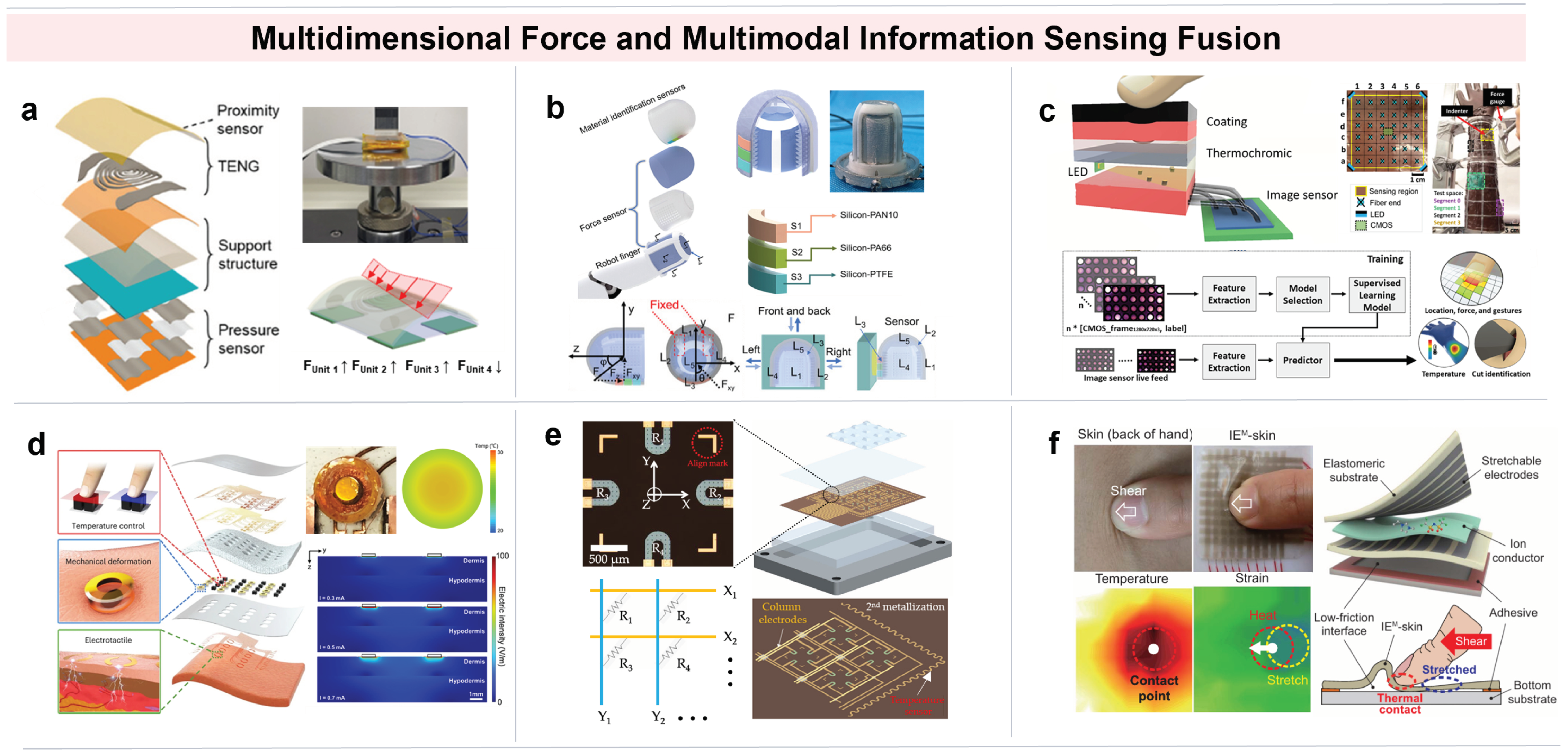

3. Sensing Fusion of Multidimensional Force and Other Tactile Modalities

4. System Integration with AI for Intelligent Applications

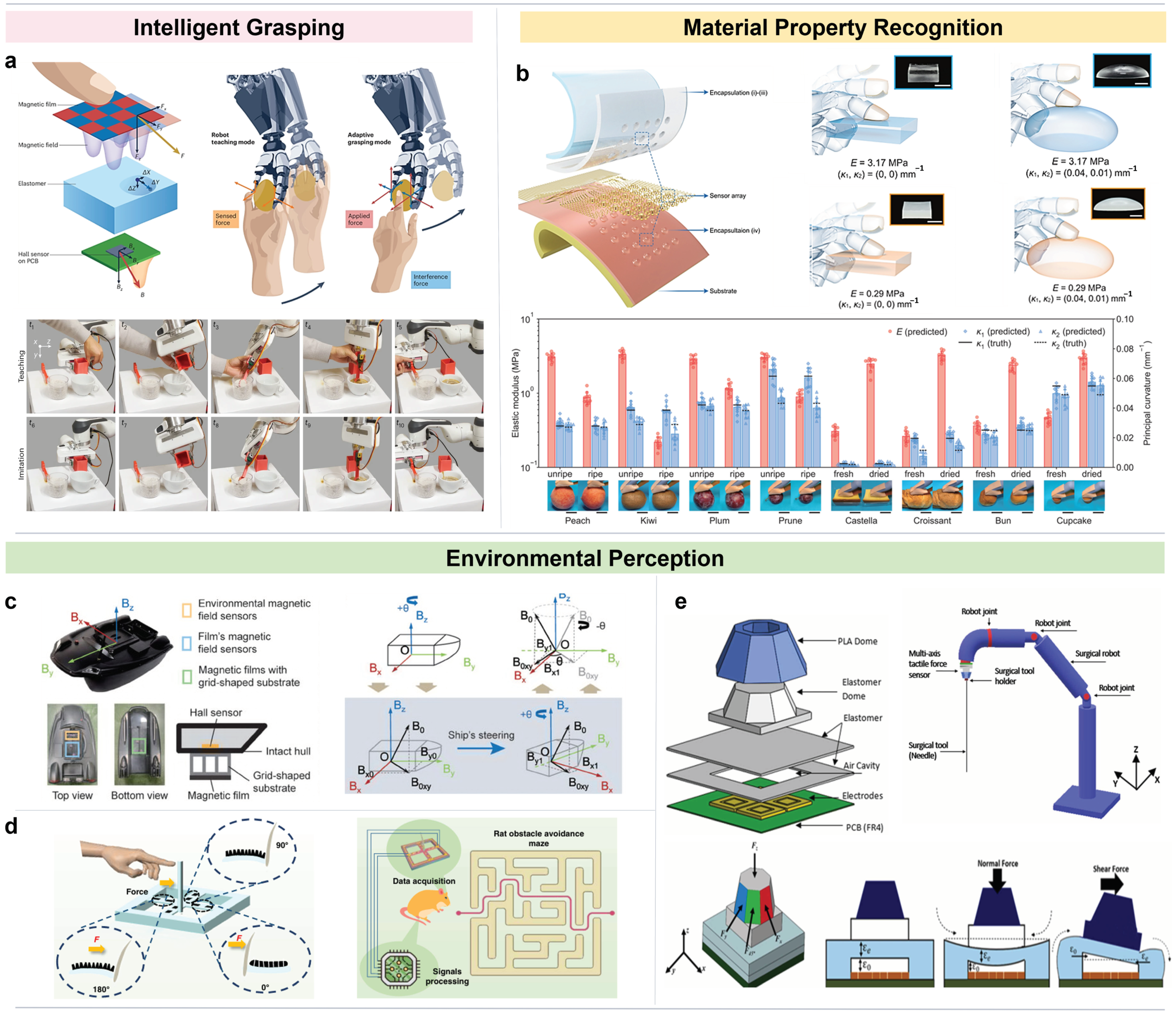

4.1. Intelligent Robotic Manipulation and Cognition

4.2. Human–Machine Interaction and Wearable Health Monitoring

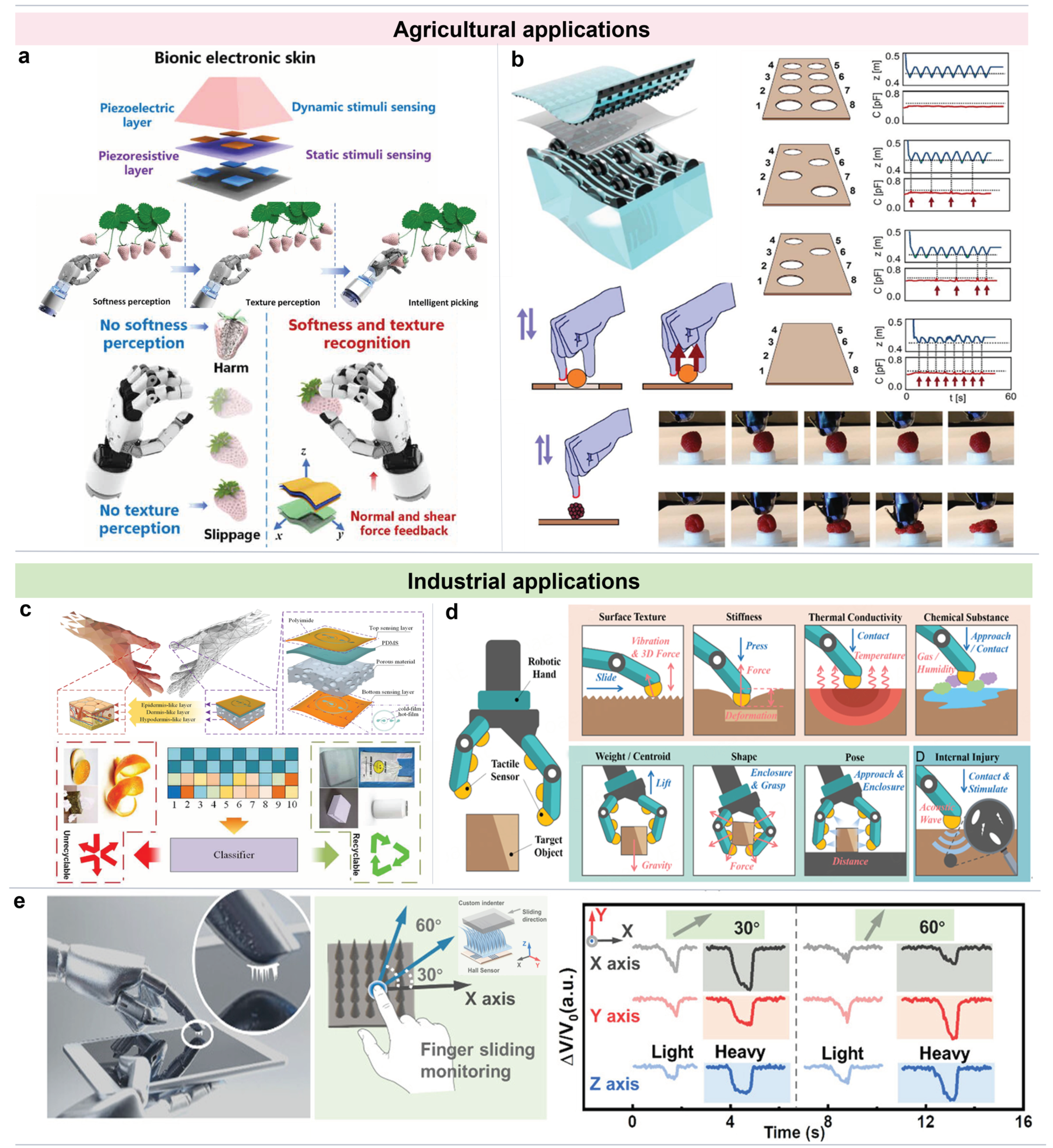

4.3. Agricultural and Industrial Inspection Automation

5. Summary and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| 3D | Three-Dimensional |
| CNTs/PDMS | Carbon Nanotube/Polydimethylsiloxane |
| RSHTS | Rigid-Flexible Hybrid Piezoelectric Tactile Sensor |
| PVDF | Polyvinylidene Fluoride |
| ZnO | Zinc Oxide |
| TFTs | Thin-Film Transistors |
| PET | Polyethylene Terephthalate |
| H-E | Hemispherical Ellipsoidal |
| SA | Slow-Adapting |
| FA | Fast-Adapting |
| TC-MWTS | Multidimensional Wireless Tactile Sensor |
| TNFSL | Triboelectric Normal Force Sensing Layer |
| CSFSL | Capacitive Shear Forces Sensing Layer |
| MSEL | Middle Soft Elastic Layer |
| CD-TENG | Contact-Discharge Triboelectric Nanogenerator |
| ZOGW | MXene-Embedded ZnO Nanowire Arrays |
| AMSPP | Aligned Segmental Polyimide/Polyurethane Conductive Film |
| MEMS | Micro-Electromechanical Systems |
| Si-NM | Silicon Nanomembrane |
| EGaIn | Eutectic Gallium-Indium |
| AiFoam | Artificial Foam |
| PCB | Printed Circuit Board |
| LED | Light-Emitting Diode |
| OPD | Organic Photodiode |
| RGB LEDs | Red Green Blue Light-Emitting Diodes |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| NIR | Near-Infrared |
| MIR | Mid-Infrared |
| MXene/LNF/PS | MXene/Lotus Nanofiber/Polystyrene Microsphere |
| TENG | Triboelectric Nanogenerator |
| FTS | Finger-shaped Tactile Sensor |
| PLA | Polylactide |
| IEm-skin | Ionic Electronic Skin |
| AI | Artificial Intelligence |
| SVM | Support Vector Machines |
| KNN | K-Nearest Neighbors |
| LSTM | Long Short-Term Memory |
| 3DAE-Skin | 3D-Architectured Electronic Skin |
| FBTS | Flexible Bionic Tactile Sensor |
| AR/VR | Augmented Reality/Virtual Reality |
| MNF | Micro-Nano Fiber |

References

- Hong, J.; Xiao, Y.; Chen, Y.; Duan, S.; Xiang, S.; Wei, X.; Zhang, H.; Liu, L.; Xia, J.; Lei, W.; et al. Body-Coupled-Driven Object-Oriented Natural Interactive Interface. Adv. Mater. 2025, 07067. [Google Scholar] [CrossRef]

- Ratschat, A.L.; van Rooij, B.M.; Luijten, J.; Marchal-Crespo, L. Evaluating tactile feedback in addition to kinesthetic feedback for haptic shape rendering: A pilot study. Front. Robot. AI 2024, 11, 1298537. [Google Scholar] [CrossRef]

- Wei, Y.; Marshall, A.G.; McGlone, F.P.; Makdani, A.; Zhu, Y.; Yan, L.; Ren, L.; Wei, G. Human tactile sensing and sensorimotor mechanism: From afferent tactile signals to efferent motor control. Nat. Commun. 2024, 15, 6857. [Google Scholar] [CrossRef] [PubMed]

- Zhang, N.; Ren, J.; Dong, Y.; Yang, X.; Bian, R.; Li, J.; Gu, G.; Zhu, X. Soft robotic hand with tactile palm-finger coordination. Nat. Commun. 2025, 16, 2395. [Google Scholar] [CrossRef] [PubMed]

- Iskandar, M.; Albu-Schäffer, A.; Dietrich, A. Intrinsic sense of touch for intuitive physical human-robot interaction. Sci. Robot. 2024, 9, 4008. [Google Scholar] [CrossRef] [PubMed]

- Xu, J.; Pan, J.; Cui, T.; Zhang, S.; Yang, Y.; Ren, T.L. Recent progress of tactile and force sensors for human–machine interaction. Sensors 2023, 23, 1868. [Google Scholar] [CrossRef]

- Flavin, M.T.; Ha, K.H.; Guo, Z.; Li, S.; Kim, J.T.; Saxena, T.; Simatos, D.; Al-Najjar, F.; Mao, Y.; Bandapalli, S.; et al. Bioelastic state recovery for haptic sensory substitution. Nature 2024, 635, 345–352. [Google Scholar] [CrossRef]

- Liu, Z.; Hu, X.; Bo, R.; Yang, Y.; Cheng, X.; Pang, W.; Liu, Q.; Wang, Y.; Wang, S.; Xu, S.; et al. A three-dimensionally architected electronic skin mimicking human mechanosensation. Science 2024, 384, 987–994. [Google Scholar] [CrossRef]

- Shi, J.; Dai, Y.; Cheng, Y.; Xie, S.; Li, G.; Liu, Y.; Wang, J.; Zhang, R.; Bai, N.; Cai, M.; et al. Embedment of sensing elements for robust, highly sensitive, and cross-talk–free iontronic skins for robotics applications. Sci. Adv. 2023, 9, 8831. [Google Scholar] [CrossRef]

- Yu, Y.; Li, J.; Solomon, S.A.; Min, J.; Tu, J.; Guo, W.; Xu, C.; Song, Y.; Gao, W. All-printed soft human-machine interface for robotic physicochemical sensing. Sci. Robot. 2022, 7, 0495. [Google Scholar] [CrossRef]

- Zhu, P.; Du, H.; Hou, X.; Lu, P.; Wang, L.; Huang, J.; Bai, N.; Wu, Z.; Fang, N.X.; Guo, C.F. Skin-electrode iontronic interface for mechanosensing. Nat. Commun. 2021, 12, 4731. [Google Scholar] [CrossRef]

- Luo, Y.; Abidian, M.R.; Ahn, J.H.; Akinwande, D.; Andrews, A.M.; Antonietti, M.; Bao, Z.; Berggren, M.; Berkey, C.A.; Bettinger, C.J.; et al. Technology roadmap for flexible sensors. ACS Nano 2023, 17, 5211–5295. [Google Scholar] [CrossRef]

- Wang, W.; Jiang, Y.; Zhong, D.; Zhang, Z.; Choudhury, S.; Lai, J.C.; Gong, H.; Niu, S.; Yan, X.; Zheng, Y.; et al. Neuromorphic sensorimotor loop embodied by monolithically integrated, low-voltage, soft e-skin. Science 2023, 380, 735–742. [Google Scholar] [CrossRef]

- Zhang, H.; Hong, J.; Zhu, J.; Duan, S.; Xia, M.; Chen, J.; Sun, B.; Xi, M.; Gao, F.; Xiao, Y.; et al. Humanoid electronic-skin technology for the era of Artificial Intelligence of Things. Matter 2025, 8, 45. [Google Scholar] [CrossRef]

- Wei, X.; Xiang, S.; Meng, C.; Chen, Z.; Cao, S.; Hong, J.; Duan, S.; Liu, L.; Zhang, H.; Shi, Q.; et al. Sensory Fiber-Based Electronic Device as Intelligent and Natural User Interface. Adv. Fiber Mater. 2025, 7, 827–840. [Google Scholar] [CrossRef]

- Libanori, A.; Chen, G.; Zhao, X.; Zhou, Y.; Chen, J. Smart textiles for personalized healthcare. Nat. Electron. 2022, 5, 142–156. [Google Scholar] [CrossRef]

- Islam, M.R.; Afroj, S.; Yin, J.; Novoselov, K.S.; Chen, J.; Karim, N. Advances in printed electronic textiles. Adv. Sci. 2024, 11, 2304140. [Google Scholar] [CrossRef]

- Liu, G.; Fan, B.; Qi, Y.; Han, K.; Cao, J.; Fu, X.; Wang, Z.; Bu, T.; Zeng, J.; Dong, S.; et al. Ultrahigh-current-density tribovoltaic nanogenerators based on hydrogen bond-activated flexible organic semiconductor textiles. ACS Nano 2025, 19, 6771–6783. [Google Scholar] [CrossRef] [PubMed]

- Jiao, H.; Lin, X.; Xiong, Y.; Han, J.; Liu, Y.; Yang, J.; Wu, S.; Jiang, T.; Wang, Z.L.; Sun, Q. Thermal insulating textile based triboelectric nanogenerator for outdoor wearable sensing and interaction. Nano Energy 2024, 120, 109134. [Google Scholar] [CrossRef]

- Ma, J.; Wen, B.; Zhang, Y.; Mao, R.; Wu, Q.; Diao, D.; Xu, K.; Zhang, X. Ultra-broad-range pressure sensing enabled by synchronous-compression mechanism based on microvilli-microstructures sensor. Adv. Funct. Mater. 2025, 35, 2425774. [Google Scholar] [CrossRef]

- Pu, J.; Zhang, Y.; Ning, H.; Tian, Y.; Xiang, C.; Zhao, H.; Liu, Y.; Lee, A.; Gong, X.; Hu, N.; et al. Dual-dielectric-layer-based iontronic pressure sensor coupling ultrahigh sensitivity and wide-Range detection for temperature/pressure dual-mode sensing. Adv. Mater. 2025, 03926. [Google Scholar] [CrossRef]

- Tian, L.; Gao, F.L.; Li, Y.X.; Yang, Z.Y.; Xu, X.; Yu, Z.Z.; Shang, J.; Li, R.W.; Li, X. High-performance bimodal temperature/pressure tactile sensor based on lamellar CNT/MXene/Cellulose nanofibers aerogel with enhanced multifunctionality. Adv. Funct. Mater. 2024, 35, 2418988. [Google Scholar] [CrossRef]

- Yang, D.; Zhao, K.; Yang, R.; Zhou, S.W.; Chen, M.; Tian, H.; Qu, D.H. A rational design of bio-derived disulfide CANs for wearable capacitive pressure sensor. Adv. Mater. 2024, 36, 2403880. [Google Scholar] [CrossRef] [PubMed]

- Niu, H.; Li, H.; Li, N.; Niu, H.; Gao, S.; Yue, W.; Li, Y. Morphological-engineering-based capacitive tactile sensors. Appl. Phys. Rev. 2025, 12, 011319. [Google Scholar] [CrossRef]

- Hu, Y.; Li, P.; Lai, G.; Lu, B.; Wang, H.; Cheng, H.; Wu, M.; Liu, F.; Dang, Z.M.; Qu, L. Separator with high ionic conductivity enables electrochemical capacitors to line-filter at high power. Nat. Commun. 2025, 16, 2772. [Google Scholar] [CrossRef]

- Berman, A.; Hsiao, K.; Root, S.E.; Choi, H.; Ilyn, D.; Xu, C.; Stein, E.; Cutkosky, M.; DeSimone, J.M.; Bao, Z. Additively manufactured micro-lattice dielectrics for multiaxial capacitive sensors. Sci. Adv. 2024, 10, 11. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, S.; Gu, H.; Li, Y.; Zhao, H.; Huang, S.; Feng, X.; Zhai, C.; Xu, M. Ultrasensitive piezoelectric-like film with designed cross-scale pores. Sci. Adv. 2025, 11, 9. [Google Scholar] [CrossRef]

- Yin, H.; Li, Y.; Tian, Z.; Li, Q.; Jiang, C. Ultra-high sensitivity anisotropic piezoelectric sensors for structural health monitoring and robotic perception. Nano-Micro Lett. 2024, 17, 42. [Google Scholar] [CrossRef] [PubMed]

- Wu, Y.; Tang, C.Y.; Wang, S.; Guo, J.; Jing, Q.; Liu, J.; Ke, K.; Wang, Y.; Yang, W. Biomimetic heteromodulus all-fluoropolymer piezoelectric nanofiber mats for highly sensitive acoustic detection. ACS Appl. Mater. Interfaces 2025, 17, 21808–21818. [Google Scholar] [CrossRef]

- Shi, Q.; Sun, Z.; Zhang, Z.; Lee, C. Triboelectric nanogenerators and hybridized systems for enabling next-generation IoT applications. Research 2021, 2021, 6849171. [Google Scholar] [CrossRef]

- Hong, J.; Wei, X.; Zhang, H.; Xiao, Y.; Meng, C.; Chen, Y.; Li, J.; Li, L.; Lee, S.; Shi, Q.; et al. Advances of triboelectric and piezoelectric nanogenerators toward continuous monitoring and multimodal applications in the new era. Int. J. Extrem. Manuf. 2025, 7, 012007. [Google Scholar] [CrossRef]

- Yao, C.; Liu, S.; Liu, Z.; Huang, S.; Sun, T.; He, M.; Xiao, G.; Ouyang, H.; Tao, Y.; Qiao, Y.; et al. Deep learning-enhanced anti-noise triboelectric acoustic sensor for human-machine collaboration in noisy environments. Nat. Commun. 2025, 16, 4276. [Google Scholar] [CrossRef]

- Lin, W.; Xu, Y.; Yu, S.; Wang, H.; Huang, Z.; Cao, Z.; Wei, C.; Chen, Z.; Zhang, Z.; Zhao, Z.; et al. Highly programmable haptic decoding and self-adaptive spatiotemporal feedback toward embodied intelligence. Adv. Funct. Mater. 2025, 35, 2500633. [Google Scholar] [CrossRef]

- Qiao, H.; Sun, S.; Wu, P. Non-equilibrium-Growing Aesthetic Ionic Skin for Fingertip-Like Strain-Undisturbed Tactile Sensation and Texture Recognition. Adv. Mater. 2023, 35, 2300593. [Google Scholar] [CrossRef] [PubMed]

- Rodriguez, A. The unstable queen: Uncertainty, mechanics, and tactile feedback. Sci. Robot. 2021, 6, 4667. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Fan, X.; Zhang, Z.; Su, Z.; Ding, Y.; Yang, H.; Zhang, X.; Wang, J.; Zhang, J.; Hu, P. A skin-inspired high-performance tactile sensor for accurate recognition of object softness. ACS Nano 2024, 18, 17175–17184. [Google Scholar] [CrossRef] [PubMed]

- Guo, C.; Chen, X.; Zeng, Z.; Guo, Z.; Li, Y. Grasp like humans: Learning generalizable multi-fingered grasping from human proprioceptive sensorimotor integration. IEEE Trans. Robot. 2025, 36, 1–12. [Google Scholar] [CrossRef]

- Zhao, Z.; Li, W.; Li, Y.; Liu, T.; Li, B.; Wang, M.; Du, K.; Liu, H.; Zhu, Y.; Wang, Q.; et al. Embedding high-resolution touch across robotic hands enables adaptive human-like grasping. Nat. Mach. Intell. 2025, 9, 889–900. [Google Scholar] [CrossRef]

- Duan, S.; Shi, Q.; Wu, J. Multimodal sensors and ML-based data fusion for advanced robots. Adv. Intell. Syst. 2022, 4, 2200213. [Google Scholar] [CrossRef]

- Shi, Q.; Sun, Z.; Le, X.; Xie, J.; Lee, C. Soft robotic perception system with ultrasonic auto-positioning and multimodal sensory intelligence. ACS Nano 2023, 17, 4985–4998. [Google Scholar] [CrossRef]

- Tang, Y.; Li, G.; Zhang, T.; Ren, H.; Yang, X.; Yang, L.; Guo, D.; Shen, Y. Digital channel–enabled distributed force decoding via small datasets for hand-centric interactions. Sci. Adv. 2025, 11, 2641. [Google Scholar] [CrossRef] [PubMed]

- Xu, Q.; Yang, Z.; Wang, Z.; Wang, R.; Zhang, B.; Cheung, Y.; Jiao, R.; Shi, F.; Hong, W.; Yu, H. Sandwich miura-ori enabled large area, super resolution tactile skin for human-machine interactions. Adv. Sci. 2025, 12, 2414580. [Google Scholar] [CrossRef] [PubMed]

- Xu, G.; Wang, H.; Zhao, G.; Fu, J.; Yao, K.; Jia, S.; Shi, R.; Huang, X.; Wu, P.; Li, J.; et al. Self- powered electrotactile textile haptic glove for enhanced human- machine interface. Sci. Adv. 2025, 11, 0318. [Google Scholar] [CrossRef]

- Luo, Y.; Li, Y.; Sharma, P.; Shou, W.; Wu, K.; Foshey, M.; Li, B.; Palacios, T.; Torralba, A.; Matusik, W. Learning human–environment interactions using conformal tactile textiles. Nat. Electron. 2021, 4, 193–201. [Google Scholar] [CrossRef]

- Jung, Y.H.; Yoo, J.Y.; Vázquez-Guardado, A.; Kim, J.H.; Kim, J.T.; Luan, H.; Park, M.; Lim, J.; Shin, H.S.; Su, C.J.; et al. A wireless haptic interface for programmable patterns of touch across large areas of the skin. Nat. Electron. 2022, 5, 374–385. [Google Scholar] [CrossRef]

- Lai, Q.T.; Zhao, X.H.; Sun, Q.J.; Tang, Z.; Tang, X.G.; Roy, V.A. Emerging MXene-based flexible tactile sensors for health monitoring and haptic perception. Small 2023, 19, 2300283. [Google Scholar] [CrossRef]

- Duan, S.; Zhang, H.; Liu, L.; Lin, Y.; Zhao, F.; Chen, P.; Cao, S.; Zhou, K.; Gao, C.; Liu, Z.; et al. A comprehensive review on triboelectric sensors and AI-integrated systems. Mater. Today 2024, 80, 450–480. [Google Scholar] [CrossRef]

- Pyo, S.; Lee, J.; Bae, K.; Sim, S.; Kim, J. Recent progress in flexible tactile sensors for human-interactive systems: From sensors to advanced applications. Adv. Mater. 2021, 33, 2005902. [Google Scholar] [CrossRef]

- Wu, G.; Li, X.; Bao, R.; Pan, C. Innovations in tactile sensing: Microstructural designs for superior flexible sensor performance. Adv. Funct. Mater. 2024, 34, 2405722. [Google Scholar] [CrossRef]

- Gerald, A.; Russo, S. Soft sensing and haptics for medical procedures. Nat. Rev. Mater. 2024, 9, 86–88. [Google Scholar] [CrossRef]

- Nagi, S.S.; Marshall, A.G.; Makdani, A.; Jarocka, E.; Liljencrantz, J.; Ridderström, M.; Shaikh, S.; O’Neill, F.; Saade, D.; Donkervoort, S.; et al. An ultrafast system for signaling mechanical pain in human skin. Sci. Adv. 2019, 5, 1297. [Google Scholar] [CrossRef] [PubMed]

- Peng, Y.; Yang, N.; Xu, Q.; Dai, Y.; Wang, Z. Recent advances in flexible tactile sensors for intelligent systems. Sensors 2021, 21, 5392. [Google Scholar] [CrossRef]

- Han, C.; Cao, Z.; Hu, Y.; Zhang, Z.; Li, C.; Wang, Z.L.; Wu, Z. Flexible tactile sensors for 3D force detection. Nano Lett. 2024, 24, 277–283. [Google Scholar] [CrossRef]

- Wei, C.; Yu, S.; Meng, Y.; Xu, Y.; Hu, Y.; Cao, Z.; Huang, Z.; Liu, L.; Luo, Y.; Chen, H.; et al. Octopus Tentacle-Inspired In-Sensor Adaptive Integral for Edge-Intelligent Touch Intention Recognition. Adv. Mater. 2025, 28, 2420501. [Google Scholar] [CrossRef]

- Sun, X.; Sun, J.; Li, T.; Zheng, S.; Wang, C.; Tan, W.; Zhang, J.; Liu, C.; Ma, T.; Qi, Z.; et al. Flexible tactile electronic skin sensor with 3D force detection based on porous CNTs/PDMS nanocomposites. Nano-Micro Lett. 2019, 11, 57. [Google Scholar] [CrossRef]

- Mao, Q.; Liao, Z.; Liu, S.; Yuan, J.; Zhu, R. An ultralight, tiny, flexible six-axis force/torque sensor enables dexterous fingertip manipulations. Nat. Commun. 2025, 16, 5693. [Google Scholar] [CrossRef]

- Huang, Z.; Yu, S.; Xu, Y.; Cao, Z.; Zhang, J.; Guo, Z.; Wu, T.; Liao, Q.; Zheng, Y.; Chen, Z.; et al. In-Sensor Tactile Fusion and Logic for Accurate Intention Recognition. Adv. Mater. 2024, 36, 2407329. [Google Scholar] [CrossRef]

- Yan, Y.; Zermane, A.; Pan, J.; Kheddar, A. A soft skin with self-decoupled three-axis force-sensing taxels. Nat. Mach. Intell. 2024, 6, 1284–1295. [Google Scholar] [CrossRef]

- Zhu, Y.; Hao, M.; Zhu, X.; Bateux, Q.; Wong, A.; Dollar, A.M. Forces for free: Vision-based contact force estimation with a compliant hand. Sci. Robot. 2025, 10, 11. [Google Scholar] [CrossRef] [PubMed]

- Shen, Z.; Ren, J.; Zhang, N.; Li, J.; Gu, G. Hierarchically-interlocked, three-axis soft iontronic sensor for omnidirectional shear and normal forces. Adv. Mater. Technol. 2025, 10, 2401626. [Google Scholar] [CrossRef]

- Yang, Y.; Zhou, X.; Yang, R.; Zhang, Q.; Sun, J.; Li, G.; Zhao, Y.; Liu, Z. Real-time 3-D force measurements using vision-based flexible force sensor. IEEE Trans. Instrum. Meas. 2023, 73, 1–10. [Google Scholar] [CrossRef]

- Lei, P.; Bao, Y.; Gao, L.; Zhang, W.; Zhu, X.; Liu, C.; Ma, J. Bioinspired integrated multidimensional sensor for adaptive grasping by robotic hands and physical movement guidance. Adv. Funct. Mater. 2024, 34, 2313787. [Google Scholar] [CrossRef]

- Kang, B.; Zavanelli, N.; Sue, G.N.; Patel, D.K.; Oh, S.; Oh, S.; Vinciguerra, M.R.; Wieland, J.; Wang, W.D.; Majidi, C. A flexible skin-mounted haptic interface for multimodal cutaneous feedback. Nat. Electron. 2025, 8, 818–830. [Google Scholar] [CrossRef]

- Zeng, X.; Liu, Y.; Liu, F.; Wang, W.; Liu, X.; Wei, X.; Hu, Y. A bioinspired three-dimensional integrated e-skin for multiple mechanical stimuli recognition. Nano Energy 2022, 92, 106777. [Google Scholar] [CrossRef]

- Kim, G.; Hwang, D. BaroTac: Barometric three-axis tactile sensor with slip detection capability. Sensors 2022, 23, 428. [Google Scholar] [CrossRef] [PubMed]

- Ham, J.; Huh, T.M.; Kim, J.; Kim, J.O.; Park, S.; Cutkosky, M.R.; Bao, Z. Porous dielectric elastomer based flexible multiaxial tactile sensor for dexterous robotic or prosthetic hands. Adv. Mater. Technol. 2023, 8, 2200903. [Google Scholar] [CrossRef]

- Wang, Y.; Duan, S.; Liu, J.; Zhao, F.; Chen, P.; Shi, Q.; Wu, J. Highly-sensitive expandable microsphere-based flexible pressure sensor for human–machine interaction. J. Micromech. Microeng. 2023, 33, 115009. [Google Scholar] [CrossRef]

- Rehan, M.; Saleem, M.M.; Tiwana, M.I.; Shakoor, R.I.; Cheung, R. A soft multi-axis high force range magnetic tactile sensor for force feedback in robotic surgical systems. Sensors 2022, 22, 3500. [Google Scholar] [CrossRef]

- Kebede, G.A.; Ahmad, A.R.; Lee, S.C.; Lin, C.Y. Decoupled six-axis force–moment sensor with a novel strain gauge arrangement and error reduction techniques. Sensors 2019, 19, 3012. [Google Scholar] [CrossRef]

- Liu, G.; Yu, P.; Tao, Y.; Liu, T.; Liu, H.; Zhao, J. Hybrid 3D printed three-axis force sensor aided by machine learning decoupling. Int. J. Smart Nano Mater. 2024, 15, 261–278. [Google Scholar] [CrossRef]

- Wang, S.; Liu, H. Research on decoupling model of six-component force sensor based on artificial neural network and polynomial regression. Sensors 2024, 24, 2698. [Google Scholar] [CrossRef]

- Dai, H.; Wu, Z.; Meng, C.; Zhang, C.; Zhao, P. A magnet splicing method for constructing a three-dimensional self-decoupled magnetic tactile sensor. Magnetochemistry 2024, 10, 6. [Google Scholar] [CrossRef]

- Chun, S.; Kim, J.-S.; Yoo, Y.; Choi, Y.; Jung, S.J.; Jang, D.; Lee, G.; Song, K.-I.; Nam, K.S.; Youn, I.; et al. An artificial neural tactile sensing system. Nat. Electron. 2021, 4, 429–438. [Google Scholar] [CrossRef]

- Bok, B.G.; Jang, J.S.; Kim, M.S. A highly sensitive multimodal tactile sensing module with planar structure for dexterous manipulation of robots. Adv. Intell. Syst. 2023, 5, 2200381. [Google Scholar] [CrossRef]

- Qu, X.; Liu, Z.; Tan, P.; Wang, C.; Liu, Y. Artificial tactile perception smart finger for material identification based on triboelectric sensing. Sci. Adv. 2022, 8, 11. [Google Scholar] [CrossRef]

- Peng, S.; Wu, S.; Yu, Y.; Xia, B.; Lovell, N.H.; Wang, C.H. Multimodal capacitive and piezoresistive sensor for simultaneous measurement of multiple forces. ACS Appl. Mater. Interfaces 2020, 12, 22179–22190. [Google Scholar] [CrossRef]

- Kong, H.; Li, W.; Song, Z.; Niu, L. Recent advances in multimodal sensing integration and decoupling strategies for tactile perception. Mater. Futures 2024, 3, 022501. [Google Scholar] [CrossRef]

- Zhang, J.; Yao, H.; Mo, J.; Chen, S.; Xie, Y.; Ma, S.; Chen, R.; Luo, T.; Ling, W.; Qin, L.; et al. Finger-inspired rigid-soft hybrid tactile sensor with superior sensitivity at high frequency. Nat. Commun. 2022, 13, 5076. [Google Scholar] [CrossRef] [PubMed]

- Oh, H.; Yi, G.C.; Yip, M.; Dayeh, S.A. Scalable tactile sensor arrays on flexible substrates with high spatiotemporal resolution enabling slip and grip for closed-loop robotics. Sci. Adv. 2020, 6, 14. [Google Scholar] [CrossRef] [PubMed]

- Qiu, Y.; Wang, F.; Zhang, Z.; Shi, K.; Song, Y.; Lu, J.; Xu, M.; Qian, M.; Zhang, W.; Wu, J.; et al. Quantitative softness and texture bimodal haptic sensors for robotic clinical feature identification and intelligent picking. Sci. Adv. 2024, 10, 14. [Google Scholar] [CrossRef]

- Gu, Y.; Zhang, T.; Li, J.; Zheng, C.; Yang, M.; Li, S. A new force-decoupling triaxial tactile sensor based on elastic microcones for accurately grasping feedback. Adv. Intell. Syst. 2023, 5, 2200321. [Google Scholar] [CrossRef]

- Boutry, C.M.; Negre, M.; Jorda, M.; Vardoulis, O.; Chortos, A.; Khatib, O.; Bao, Z. A hierarchically patterned, bioinspired e-skin able to detect the direction of applied pressure for robotics. Sci. Robot. 2018, 3, 9. [Google Scholar] [CrossRef]

- Gu, H.; Lu, B.; Gao, Z.; Wu, S.; Zhang, L.; Xie, L.; Yi, J.; Liu, Y.; Nie, B.; Wen, Z.; et al. A battery-free wireless tactile sensor for multimodal force perception. Adv. Funct. Mater. 2024, 34, 2410661. [Google Scholar] [CrossRef]

- Kim, T.; Park, Y.L. A soft three-axis load cell using liquid-filled three-dimensional microchannels in a highly deformable elastomer. IEEE Robot. Autom. Lett. 2018, 3, 881–887. [Google Scholar] [CrossRef]

- Guo, H.; Tan, Y.J.; Chen, G.; Wang, Z.; Susanto, G.J.; See, H.H.; Yang, Z.; Lim, Z.W.; Yang, L.; Tee, B.C. Artificially innervated self-healing foams as synthetic piezo-impedance sensor skins. Nat. Commun. 2020, 11, 5747. [Google Scholar] [CrossRef]

- Cao, Y.; Li, J.; Dong, Z.; Sheng, T.; Zhang, D.; Cai, J.; Jiang, Y. Flexible tactile sensor with an embedded-hair-in-elastomer structure for normal and shear stress sensing. Soft Sci. 2023, 3, 32. [Google Scholar] [CrossRef]

- Xu, C.; Wang, Y.; Zhang, J.; Wan, J.; Xiang, Z.; Nie, Z. Three-dimensional micro strain gauges as flexible, modular tactile sensors for versatile integration with micro- and macroelectronics. Sci. Adv. 2024, 10, 13. [Google Scholar] [CrossRef]

- Won, S.M.; Wang, H.; Kim, B.H.; Lee, K.; Jang, H.; Kwon, K.; Han, M.; Crawford, K.E.; Li, H.; Lee, Y.; et al. Multimodal sensing with a three-dimensional piezoresistive structure. ACS Nano 2019, 13, 10972–10979. [Google Scholar] [CrossRef]

- Wang, X.; Tan, B.; Long, H.; Huang, J.; Li, E.; Qin, Y. Material and structural innovations for high-performance flexible triaxial force sensors: A Review. IEEE Sens. J. 2025, 25, 30291–30312. [Google Scholar] [CrossRef]

- Xu, Y.; Zhang, S.; Li, S.; Wu, Z.; Li, Y.; Li, Z.; Chen, X.; Shi, C.; Chen, P.; Zhang, P.; et al. A soft magnetoelectric finger for robots’ multidirectional tactile perception in non-visual recognition environments. npj Flex. Electron. 2024, 8, 2. [Google Scholar] [CrossRef]

- Lin, C.; Zhang, H.; Xu, J.; Wu, L.; Xu, H. A compact vision-based tactile sensor for accurate 3d shape reconstruction and generalizable 6d force estimation. IEEE Robot. Autom. Lett. 2023, 9, 923–930. [Google Scholar] [CrossRef]

- Heo, M.; Kang, S.R.; Yu, M.; Kwon, T.K. The development of split-treadmill with a fall prevention training function. Technol. Health Care 2023, 31, 1189–1201. [Google Scholar] [CrossRef]

- Mittendorfer, P.; Cheng, G. Humanoid multimodal tactile-sensing modules. IEEE Trans. Robot. 2011, 27, 401–410. [Google Scholar] [CrossRef]

- De Oliveira, T.E.A.; Cretu, A.M.; Petriu, E.M. Multimodal bio-inspired tactile sensing module. IEEE Sens. J. 2017, 17, 3231–3243. [Google Scholar] [CrossRef]

- Pu, M.; Zhao, T.; Zhang, L.; Han, C.; Chai, Z.; Zhou, Y.; Ding, H.; Wu, Z. An AI-Enabled All-In-One Visual, Proximity, and Tactile Perception Multimodal Sensor. Adv. Robot. Res. 2025, 12, 202500062. [Google Scholar] [CrossRef]

- Khamis, H.; Xia, B.; Redmond, S.J. A novel optical 3D force and displacement sensor—Towards instrumenting the papillarray tactile sensor. Sens. Actuat. A-Phys. 2019, 291, 174–187. [Google Scholar] [CrossRef]

- Ma, X.; Wang, C.; Wei, R.; He, J.; Li, J.; Liu, X.; Huang, F.; Ge, S.; Tao, J.; Yuan, Z.; et al. Bimodal tactile sensor without signal fusion for user-interactive applications. ACS Nano 2022, 16, 2789–2797. [Google Scholar] [CrossRef] [PubMed]

- Liu, H.; Yu, Y.; Sun, F.; Gu, J. Visual–tactile fusion for object recognition. IEEE Trans. Autom. Sci. Eng. 2016, 14, 96–108. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, Q.; Ren, W.; Song, Y.; Luo, H.; Han, Y.; He, L.; Wu, X.; Wang, Z. Bioinspired tactile sensation based on synergistic microcrack-bristle structure design towards high mechanical sensitivity and direction-resolving capability. Research 2023, 6, 0172. [Google Scholar] [CrossRef]

- Bo, R.; Xu, S.; Yang, Y.; Zhang, Y. Mechanically-guided 3D assembly for architected flexible electronics. Chem. Rev. 2023, 123, 11137–11189. [Google Scholar] [CrossRef]

- Cheng, X.; Fan, Z.; Yao, S.; Jin, T.; Lv, Z.; Lan, Y.; Bo, R.; Chen, Y.; Zhang, F.; Shen, Z.; et al. Programming 3D curved mesosurfaces using microlattice designs. Science 2023, 379, 1225–1232. [Google Scholar] [CrossRef]

- Shuai, Y.; Zhao, J.; Bo, R.; Lan, Y.; Lv, Z.; Zhang, Y. A wrinkling-assisted strategy for controlled interface delamination in mechanically-guided 3D assembly. J. Mech. Phys. Solids. 2023, 173, 105–203. [Google Scholar] [CrossRef]

- Xu, S.; Yan, Z.; Jang, K.I.; Huang, W.; Fu, H.; Kim, J.; Wei, Z.; Flavin, M.; McCracken, J.; Wang, R.; et al. Assembly of micro/nanomaterials into complex, three-dimensional architectures by compressive buckling. Science 2015, 347, 154–159. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Zhang, F.; Yan, Z.; Ma, Q.; Li, X.; Huang, Y.; Rogers, J.A. Printing, folding and assembly methods for forming 3D mesostructures in advanced materials. Nat. Rev. Mater. 2017, 2, 17019. [Google Scholar] [CrossRef]

- Yan, Y.; Hu, Z.; Yang, Z.; Yuan, W.; Song, C.; Pan, J.; Shen, Y. Soft magnetic skin for super-resolution tactile sensing with force self-decoupling. Sci. Robot. 2021, 6, 8801. [Google Scholar] [CrossRef]

- Dai, H.; Zhang, C.; Pan, C.; Hu, H.; Ji, K.; Sun, H.; Lyu, C.; Tang, D.; Li, T.; Fu, J.; et al. Split-type magnetic soft tactile sensor with 3D force decoupling. Adv. Mater. 2024, 36, 2310145. [Google Scholar] [CrossRef] [PubMed]

- Dai, H.; Zhang, C.; Hu, H.; Hu, Z.; Sun, H.; Liu, K.; Li, T.; Fu, J.; Zhao, P.; Yang, H. Biomimetic hydrodynamic sensor with whisker array architecture and multidirectional perception ability. Adv. Sci. 2024, 11, 2405276. [Google Scholar] [CrossRef]

- Hu, H.; Zhang, C.; Pan, C.; Dai, H.; Sun, H.; Pan, Y.; Lai, X.; Lyu, C.; Tang, D.; Fu, J.; et al. Wireless flexible magnetic tactile sensor with super-resolution in large-areas. ACS Nano 2022, 16, 19271–19280. [Google Scholar] [CrossRef]

- Zhou, Y.; Zhao, X.; Xu, J.; Chen, G.; Tat, T.; Li, J.; Chen, J. A multimodal magnetoelastic artificial skin for underwater haptic sensing. Sci. Adv. 2024, 10, 8567. [Google Scholar] [CrossRef] [PubMed]

- Jones, D.; Wang, L.; Ghanbari, A.; Vardakastani, V.; Kedgley, A.E.; Gardiner, M.D.; Vincent, T.L.; Culmer, P.R.; Alazmani, A. Design and evaluation of magnetic hall effect tactile sensors for use in sensorized splints. Sensors 2020, 20, 1123. [Google Scholar] [CrossRef]

- Li, G.; Zhang, T.; Tang, J. Decoding chemo-mechanical failure mechanisms of solid-state lithium metal battery under low stack pressure via optical fiber sensors. Adv. Mater. 2025, 37, 12. [Google Scholar] [CrossRef]

- Takeshita, T.; Harisaki, K.; Ando, H.; Higurashi, E.; Nogami, H.; Sawada, R. Development and evaluation of a two-axial shearing force sensor consisting of an optical sensor chip and elastic gum frame. Precis. Eng. 2016, 45, 136–142. [Google Scholar] [CrossRef]

- Wang, W.; De Souza, M.M.; Ghannam, R.; Li, W.J.; Roy, V.A. A novel micro-scaled multi-layered optical stress sensor for force sensing. J. Comput. Electron. 2023, 22, 768–782. [Google Scholar] [CrossRef]

- Wang, W.; Yiu, H.H.; Li, W.; Roy, V.A. The principle and architectures of optical stress sensors and the progress on the development of microbend optical sensors. Adv. Opt. Mater. 2021, 9, 2001693. [Google Scholar] [CrossRef]

- Wang, H.; Wang, W.; Kim, J.J.; Wang, C.; Wang, Y.; Wang, B.; Lee, S.; Yokota, T.; Someya, T. An optical-based multipoint 3-axis pressure sensor with a flexible thin-film form. Sci. Adv. 2023, 9, 2445. [Google Scholar] [CrossRef] [PubMed]

- Yang, H.; Fu, J.; Cao, R.; Liu, J.; Wang, L. A liquid lens-based optical sensor for tactile sensing. Smart Mater. Struct. 2022, 31, 035011. [Google Scholar] [CrossRef]

- Guo, J.; Shang, C.; Gao, S.; Zhang, Y.; Fu, B.; Xu, L. Flexible plasmonic optical tactile sensor for health monitoring and artificial haptic perception. Adv. Mater. Technol. 2023, 8, 2201506. [Google Scholar] [CrossRef]

- Bai, H.; Li, S.; Barreiros, J.; Tu, Y.; Pollock, C.R.; Shepherd, R.F. Stretchable distributed fiber-optic sensors. Science 2020, 370, 848–852. [Google Scholar] [CrossRef]

- Xiong, P.; Huang, Y.; Yin, Y.; Zhang, Y.; Song, A. A novel tactile sensor with multimodal vision and tactile units for multifunctional robot interaction. Robotica 2024, 42, 1420–1435. [Google Scholar] [CrossRef]

- Zhang, Y.; Chen, X.; Wang, M.; Yu, H. Multidimensional tactile sensor with a thin compound eye-inspired imaging system. Soft Robot. 2022, 9, 861–870. [Google Scholar] [CrossRef]

- Li, S.; Yu, H.; Pan, G.; Tang, H.; Zhang, J.; Ye, L.; Zhang, X.P.; Ding, W. M3Tac: A multispectral multimodal visuotactile sensor with beyond-human sensory capabilities. IEEE Trans. Robot. 2024, 40, 4484–4503. [Google Scholar] [CrossRef]

- Leslie, O.; Bulens, D.C.; Ulloa, P.M.; Redmond, S.J. A tactile sensing concept for 3D displacement and 3D force measurement using light angle and intensity sensing. IEEE Sens. J. 2023, 23, 21172–21188. [Google Scholar] [CrossRef]

- Li, H.; Nam, S.; Lu, Z.; Yang, C.; Psomopoulou, E.; Lepora, N.F. Biotactip: A soft biomimetic optical tactile sensor for efficient 3d contact localization and 3d force estimation. IEEE Robot. Autom. Lett. 2024, 9, 5314–5321. [Google Scholar] [CrossRef]

- Li, Z.; Cheng, L.; Liu, Z.; Wei, J.; Wang, Y. An Ultrasensitive and Robust Soft Optical 3D Tactile Sensor. Soft Robot. 2025, 12, 445–454. [Google Scholar] [CrossRef]

- Chen, Y.; Hong, J.; Xiao, Y.; Zhang, H.; Wu, J.; Shi, Q. Multimodal intelligent flooring system for advanced smart-building monitoring and interactions. Adv. Sci. 2024, 11, 2406190. [Google Scholar] [CrossRef]

- Duan, S.; Chen, P.; Xiong, Y.A.; Zhao, F.; Jing, Z.; Du, G.; Wei, X.; Xiang, S.; Hong, J.; Shi, Q.; et al. Flexible mechano-optical dual-responsive perovskite molecular ferroelectric composites for advanced anticounterfeiting and encryption. Sci. Adv. 2024, 10, 11. [Google Scholar] [CrossRef]

- Yu, P.; Chen, F.; Long, J. A three-dimensional force/temperature composite flexible sensor. Sens. Actuators A Phys. 2024, 365, 114891. [Google Scholar] [CrossRef]

- Jin, K.; Li, Z.; Nan, P.; Xin, G.; Lim, K.S.; Ahmad, H.; Yang, H. Fiber Bragg grating-based fingertip tactile sensors for normal/shear forces and temperature detection. Sens. Actuators A Phys. 2023, 357, 114368. [Google Scholar] [CrossRef]

- Chen, C.; Wang, P.; Hong, W.; Zhu, X.; Hao, J.; He, C.; Hou, H.; Kong, D.; Liu, T.; Zhao, Y.; et al. Arch-inspired flexible dual-mode sensor with ultra-high linearity based on carbon nanomaterials/conducting polymer composites for bioelectronic monitoring and thermal perception. Compos. Sci. Technol. 2025, 267, 111182. [Google Scholar] [CrossRef]

- Ikejima, T.; Mizukoshi, K.; Nonomura, Y. Predicting sensory and affective tactile perception from physical parameters obtained by using a biomimetic multimodal tactile sensor. Sensors 2025, 25, 147. [Google Scholar] [CrossRef] [PubMed]

- Duan, S.; Wei, X.; Zhao, F.; Yang, H.; Wang, Y.; Chen, P.; Hong, J.; Xiang, S.; Luo, M.; Shi, Q.; et al. Bioinspired Young’s modulus-hierarchical e-skin with decoupling multimodality and neuromorphic encoding outputs to biosystems. Adv. Sci. 2023, 10, 2304121. [Google Scholar] [CrossRef]

- Jiang, Y.; Fan, L.; Sun, X.; Luo, Z.; Wang, H.; Lai, R.; Wang, J.; Gan, Q.; Li, N.; Tian, J. A multifunctional tactile sensory system for robotic intelligent identification and manipulation perception. Adv. Sci. 2024, 11, 2402705. [Google Scholar] [CrossRef]

- Han, C.; Cao, Z.; An, Z.; Zhang, Z.; Wang, Z.L.; Wu, Z. Multimodal finger-shaped tactile sensor for multi-directional force and material identification. Adv. Mater. 2025, 37, 2414096. [Google Scholar] [CrossRef]

- Barreiros, J.A.; Xu, A.; Pugach, S.; Iyengar, N.; Troxell, G.; Cornwell, A.; Hong, S.; Selman, B.; Shepherd, R.F. Haptic perception using optoelectronic robotic flesh for embodied artificially intelligent agents. Sci. Robot. 2022, 7, 6745. [Google Scholar] [CrossRef]

- Huang, Y.; Zhou, J.; Ke, P.; Guo, X.; Yiu, C.K.; Yao, K.; Cai, S.; Li, D.; Zhou, Y.; Li, J.; et al. A skin-integrated multimodal haptic interface for immersive tactile feedback. Nat. Electron. 2023, 6, 1020–1031. [Google Scholar] [CrossRef]

- You, I.; Mackanic, D.G.; Matsuhisa, N.; Kang, J.; Kwon, J.; Beker, L.; Mun, J.; Suh, W.; Kim, T.Y.; Tok, J.B.H.; et al. Artificial multimodal receptors based on ion relaxation dynamics. Science 2020, 370, 961. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Li, Y.; Li, Y.A.; Zheng, X.; Guo, J.; Sun, T.; Sung, H.K.; Chernogor, L.; Cao, M.; Xu, T.; et al. Bionic fingerprint tactile sensor with deep learning-decoupled multimodal perception for simultaneous pressure-friction mapping. Adv. Funct. Mater. 2025, e06158. [Google Scholar] [CrossRef]

- Xu, W.; Zhou, G.; Zhou, Y.; Zou, Z.; Wang, J.; Wu, W.; Li, X. A vision-based tactile sensing system for multimodal contact information perception via neural network. IEEE Trans. Instrum. Meas. 2024, 73, 5026411. [Google Scholar] [CrossRef]

- Li, F.; Dai, Z.; Jiang, L.; Song, C.; Zhong, C.; Chen, Y. Prediction of the remaining useful life of bearings through CNN-Bi-LSTM-based domain adaptation model. Sensors 2024, 24, 6906. [Google Scholar] [CrossRef] [PubMed]

- Piramoon, S.; Ayoubi, M. Neural-network-based active vibration control of rotary machines. IEEE Access 2024, 12, 107552–107569. [Google Scholar] [CrossRef]

- Kou, R.; Wang, C.; Liu, J.; Wan, R.; Jin, Z.; Zhao, L.; Liu, Y.; Guo, J.; Li, F.; Wang, H.; et al. Construction and interpretation of tobacco leaf position discrimination model based on interpretable machine learning. Front. Plant Sci. 2025, 16, 1619380. [Google Scholar] [CrossRef]

- Yu, L.; Xiao, W.; Wang, Q.; Liu, D. Soft microtubular sensors as artificial fingerprints for incipient slip detection. Measurement 2025, 253, 117729. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, Z.; Wu, J.; Shi, Q. Self-powered sensing and wireless communication synergic systems enabled by triboelectric nanogenerators. Nanoenergy Adv. 2024, 4, 367–398. [Google Scholar] [CrossRef]

- Huang, F.; Sun, X.; Shi, Y.; Pan, L. Flexible ionic-gel synapse devices and their applications in neuromorphic system. FlexMat 2025, 2, 30–54. [Google Scholar] [CrossRef]

- Zhang, C.; Zhang, R.; Ji, C.; Pei, Z.; Fu, Z.; Liu, Y.; Sang, S.; Hao, R.; Zhang, Q. Bioinspired crocodile skin-based flexible piezoelectric sensor for three-dimensional force detection. IEEE Sens. J. 2023, 23, 21050–21060. [Google Scholar] [CrossRef]

- Liu, J.; Zhao, W.; Ma, Z.; Zhao, H.; Ren, L. Self-powered flexible electronic skin tactile sensor with 3D force detection. Mater. Today 2024, 81, 84–94. [Google Scholar] [CrossRef]

- Sun, H.; Kuchenbecker, K.J.; Martius, G. A soft thumb-sized vision-based sensor with accurate all-round force perception. Nat. Mach. Intell. 2022, 4, 135–145. [Google Scholar] [CrossRef]

- Liu, F.; Deswal, S.; Christou, A.; Sandamirskaya, Y.; Kaboli, M.; Dahiya, R. Neuro-inspired electronic skin for robots. Sci. Robot. 2022, 7, l7344. [Google Scholar] [CrossRef]

- Zhang, W.; Xi, Y.; Wang, E.; Qu, X.; Yang, Y.; Fan, Y.; Shi, B.; Li, Z. Self-powered force sensors for multidimensional tactile sensing. ACS Appl. Mater. Interfaces 2022, 14, 20122–20131. [Google Scholar] [CrossRef]

- Yao, K.; Zhuang, Q. Self-decoupling three-axis forces in a simple sensor. Nat. Mach. Intell. 2024, 6, 1431–1432. [Google Scholar] [CrossRef]

- Nie, B.; Geng, J.; Yao, T.; Miao, Y.; Zhang, Y.; Chen, X.; Liu, J. Sensing arbitrary contact forces with a flexible porous dielectric elastomer. Mater. Horiz. 2021, 8, 962–971. [Google Scholar] [CrossRef] [PubMed]

- Jiang, C.; Li, Y.; Yin, H.; Li, Y.; Bao, Y.; Li, Q.; Guo, Y. Multiscale interconnected and anisotropic morphology genetic piezoceramic skeleton based flexible self-powered 3D force sensor. Adv. Funct. Mater. 2025, 15, 2503120. [Google Scholar] [CrossRef]

- Liu, X.; Li, K.; Qian, S.; Niu, L.; Chen, W.; Wu, H.; Song, X.; Zhang, J.; Bi, X.; Yu, J.; et al. A high-sensitivity flexible bionic tentacle sensor for multidimensional force sensing and autonomous obstacle avoidance applications. Microsyst. Nanoeng. 2024, 10, 149. [Google Scholar] [CrossRef] [PubMed]

- Arshad, A.; Saleem, M.M.; Tiwana, M.I.; ur Rahman, H.; Iqbal, S.; Cheung, R. A high sensitivity and multi-axis fringing electric field based capacitive tactile force sensor for robot assisted surgery. Sens. Actuators A Phys. 2023, 354, 114272. [Google Scholar] [CrossRef]

- Zhu, Y.; Li, Y.; Xie, D.; Yan, B.; Wu, Y.; Zhang, Y.; Wang, G.; Lai, L.; Sun, Y.; Yang, Z.; et al. High-performance flexible tactile sensor enabled by multi-contact mechanism for normal and shear force measurement. Nano Energy 2023, 117, 108862. [Google Scholar] [CrossRef]

- Lv, Z.; Song, Z.; Ruan, D.; Wu, H.; Liu, A. Flexible capacitive three-dimensional force sensor for hand motion capture and handwriting recognition. Funct. Mater. Lett. 2022, 15, 2250026. [Google Scholar] [CrossRef]

- Zhang, J.; Hou, X.; Qian, S.; Huo, J.; Yuan, M.; Duan, Z.; Song, X.; Wu, H.; Shi, S.; Geng, W.; et al. Flexible wide-range multidimensional force sensors inspired by bones embedded in muscle. Microsyst. Nanoeng. 2024, 10, 64. [Google Scholar] [CrossRef]

- Wang, Y.; Ruan, X.; Xing, C.; Zhao, H.; Luo, M.; Chen, Y. Highly sensitive and flexible three-dimensional force tactile sensor based on inverted pyramidal structure. Smart Mater. Struct. 2022, 31, 095013. [Google Scholar] [CrossRef]

- Ruan, D.; Chen, G.; Luo, X.; Cheng, L.; Wu, H.; Liu, A. Bionic octopus-like flexible three-dimensional force sensor for meticulous handwriting recognition in human-computer interactions. Nano Energy 2024, 123, 109357. [Google Scholar] [CrossRef]

- Xie, Y.; Pan, J.; Yu, L.; Fang, H.; Yu, S.; Zhou, N.; Tong, L.; Zhang, L. Optical micro/nanofiber enabled multiaxial force sensor for tactile visualization and human–machine interface. Adv. Sci. 2024, 11, 2404343. [Google Scholar] [CrossRef] [PubMed]

- Fei, Z.; Ryeznik, Y.; Sverdlov, O.; Tan, C.W.; Wong, W.K. An overview of healthcare data analytics with applications to the COVID-19 pandemic. IEEE Trans. Big Data 2021, 8, 1463–1480. [Google Scholar] [CrossRef]

- Tan, C.W.; Yu, P.D.; Chen, S.; Poor, H.V. DeepTrace: Learning to optimize contact tracing in epidemic networks with graph neural networks. IEEE Trans. Signal Inf. Process. Over Netw. 2025, 11, 97–113. [Google Scholar] [CrossRef]

- Alotaibi, A. Flexible 3D force sensor based on polymer nanocomposite for soft robotics and medical applications. Sensors 2024, 24, 1859. [Google Scholar] [CrossRef] [PubMed]

- Dong, T.; Wang, J.; Chen, Y.; Liu, L.; You, H.; Li, T. Research progress on flexible 3-D force sensors: A review. IEEE Sens. J. 2024, 24, 15706–15726. [Google Scholar] [CrossRef]

- Zhang, Q.; Yang, R.; Duan, Q.; Zhao, Y.; Qian, Z.; Luo, D.; Liu, Z.; Wang, R. A wearable three-axis force sensor based on deep learning technology for plantar measurement. Chem. Eng. J. 2024, 482, 148491. [Google Scholar] [CrossRef]

- Hu, J.; Qiu, Y.; Wang, X.; Jiang, L.; Lu, X.; Li, M.; Wang, Z.; Pang, K.; Tian, Y.; Zhang, W.; et al. Flexible six-dimensional force sensor inspired by the tenon-and-mortise structure of ancient Chinese architecture for orthodontics. Nano Energy 2022, 96, 107073. [Google Scholar] [CrossRef]

- Zhang, D.; Zhang, W.; Yang, H.; Yang, H. Application of soft grippers in the field of agricultural harvesting: A review. Machines 2025, 13, 55. [Google Scholar] [CrossRef]

- Visentin, F.; Castellini, F.; Muradore, R. A soft, sensorized gripper for delicate harvesting of small fruits. Comput. Electron. Agric. 2023, 213, 108202. [Google Scholar] [CrossRef]

- Navas, E.; Shamshiri, R.R.; Dworak, V.; Weltzien, C.; Fernández, R. Soft gripper for small fruits harvesting and pick and place operations. Front. Robot. AI 2024, 10, 1330496. [Google Scholar] [CrossRef]

- Javed, Y.; Mansoor, M.; Shah, I.A. A review of principles of MEMS pressure sensing with its aerospace applications. Sens. Rev. 2019, 39, 652–664. [Google Scholar] [CrossRef]

- Zhu, L.; Wang, Y.; Mei, D.; Jiang, C. Development of fully flexible tactile pressure sensor with bilayer interlaced bumps for robotic grasping applications. Micromachines 2020, 11, 770. [Google Scholar] [CrossRef]

- Takeda, Y.; Wang, Y.F.; Yoshida, A.; Sekine, T.; Kumaki, D.; Tokito, S. Advancing robotic gripper control with the integration of flexible printed pressure sensors. Adv. Eng. Mater. 2024, 26, 2302031. [Google Scholar] [CrossRef]

- Watanabe, Y.; Sekine, T.; Miura, R.; Abe, M.; Shouji, Y.; Ito, K.; Wang, Y.F.; Hong, J.; Takeda, Y.; Kumaki, D.; et al. Optimization of a soft pressure sensor in terms of the molecular weight of the ferroelectric-polymer sensing layer. Adv. Funct. Mater. 2022, 32, 2107434. [Google Scholar] [CrossRef]

- Hong, W.; Guo, X.; Zhang, T.; Liu, Y.; Yan, Z.; Zhang, A.; Qian, Z.; Wang, J.; Zhang, X.; Jin, C.; et al. Bioinspired engineering of fillable gradient structure into flexible capacitive pressure sensor toward ultra-high sensitivity and wide working range. Macromol. Rapid Commun. 2023, 44, 2300420. [Google Scholar] [CrossRef] [PubMed]

- Li, G.; Liu, S.; Wang, L.; Zhu, R. Skin-inspired quadruple tactile sensors integrated on a robot hand enable object recognition. Sci. Robot. 2020, 5, 8134. [Google Scholar] [CrossRef]

- Guo, D.; Liu, H.; Fang, B.; Sun, F.; Yang, W. Visual affordance guided tactile material recognition for waste recycling. IEEE Trans. Autom. Sci. Eng. 2022, 19, 2656–2664. [Google Scholar] [CrossRef]

- Jin, J.; Wang, S.; Zhang, Z.; Mei, D.; Wang, Y. Progress on flexible tactile sensors in robotic applications on objects properties recognition, manipulation and human-machine interactions. Soft Sci. 2023, 3, 8. [Google Scholar] [CrossRef]

- Zhang, Z.; Wang, Y.; Zhang, C.; Zhan, W.; Zhang, Q.; Xue, L.; Xu, Z.; Peng, N.; Jiang, Z.; Ye, Z.; et al. Cilia-inspired magnetic flexible shear force sensors for tactile and fluid monitoring. ACS Appl. Mater. Interfaces 2024, 16, 50524–50533. [Google Scholar] [CrossRef]

- Yun, G.; Hu, Z. Triaxial tactile sensing for next-gen robotics and wearable devices. Smart Mater. Devices 2025, 1, 202518. [Google Scholar] [CrossRef]

- Wang, D.; Zhao, N.; Yang, Z.; Yuan, Y.; Xu, H.; Wu, G.; Zheng, W.; Ji, X.; Bai, N.; Wang, W.; et al. Iontronic capacitance-enhanced flexible three-dimensional force sensor with ultrahigh sensitivity for machine-sensing interface. IEEE Electr. Device Lett. 2023, 44, 2023. [Google Scholar] [CrossRef]

- Yuan, X.; Zhou, J.; Huang, B.; Wang, Y.; Yang, C.; Gui, W. Hierarchical quality-relevant feature representation for soft sensor modeling: A novel deep learning strategy. IEEE Trans. Ind. Inform. 2019, 16, 3721–3730. [Google Scholar] [CrossRef]

- Hu, X.; Liu, Z.; Zhang, Y. Three-dimensionally architected tactile electronic skins. ACS Nano 2025, 19, 14523–14539. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Wang, C.; Lin, Q.; Zhang, Y.; Zhang, Y.; Liu, Z.; Luo, Y.; Xu, X.; Han, F.; Jiang, Z. Flexible three-dimensional force sensor of high sensing stability with bonding and supporting composite structure for smart devices. Smart Mater. Struct. 2021, 30, 105004. [Google Scholar] [CrossRef]

- Pang, Y.; Xu, X.; Chen, S.; Fang, Y.; Shi, X.; Deng, Y.; Wang, Z.L.; Cao, C. Skin-inspired textile-based tactile sensors enable multifunctional sensing of wearables and soft robots. Nano Energy 2022, 96, 107137. [Google Scholar] [CrossRef]

- Gao, S.; Dai, Y.; Nathan, A. Tactile and vision perception for intelligent humanoids. Adv. Intell. Syst. 2022, 4, 2100074. [Google Scholar] [CrossRef]

- Patel, S.; Rao, Z.; Yang, M.; Yu, C. Wearable haptic feedback interfaces for augmenting human touch. Adv. Funct. Mater. 2025, 23, 2417906. [Google Scholar] [CrossRef]

- Feng, K.; Lei, M.; Wang, X.; Zhou, B.; Xu, Q. A flexible bidirectional interface with integrated multimodal sensing and haptic feedback for closed-loop human–machine interaction. Adv. Intell. Syst. 2023, 5, 2300291. [Google Scholar] [CrossRef]

- Ge, R.; Yu, Q.; Zhou, F.; Liu, S.; Qin, Y. Dual-modal piezotronic transistor for highly sensitive vertical force sensing and lateral strain sensing. Nat. Commun. 2023, 14, 6315. [Google Scholar] [CrossRef]

- Alsadik, B.; Spreeuwers, L.; Dadrass Javan, F.; Manterola, N. Mathematical camera array optimization for face 3D modeling application. Sensors 2023, 23, 9776. [Google Scholar] [CrossRef]

- Jiang, Y.; Ji, S.; Sun, J.; Huang, J.; Li, Y.; Zou, G.; Salim, T.; Wang, C.; Li, W.; Jin, H.; et al. A universal interface for plug-and-play assembly of stretchable devices. Nature 2023, 614, 456–462. [Google Scholar] [CrossRef]

- Baruah, R.K.; Yoo, H.; Lee, E.K. Interconnection technologies for flexible electronics: Materials, fabrications, and applications. Micromachines 2023, 14, 1131. [Google Scholar] [CrossRef]

- Zhang, X.; Ericksen, O.; Lee, S.; Akl, M.; Song, M.K.; Lan, H.; Pal, P.; Suh, J.M.; Lindemann, S.; Ryu, J.E.; et al. Atomic lift-off of epitaxial membranes for cooling-free infrared detection. Nature 2025, 641, 98–105. [Google Scholar] [CrossRef]

- Zhang, L.; Mo, Y.; Ma, W.; Wang, R.; Wan, Y.; Bao, R.; Pan, C. High-resolution spatial mapping of pressure distribution by a flexible and piezotronics transistor array. ACS Appl. Electron. Mater. 2023, 5, 5823–5830. [Google Scholar] [CrossRef]

- Sun, Q.J.; Lai, Q.T.; Tang, Z.; Tang, X.G.; Zhao, X.H.; Roy, V.A. Advanced functional composite materials toward e-skin for health monitoring and artificial intelligence. Adv. Mater. Technol. 2022, 8, 2201088. [Google Scholar] [CrossRef]

- Xiao, Y.; Liu, Y.; Zhang, B.; Chen, P.; Zhu, H.; He, E.; Zhao, J.; Huo, W.; Jin, X.; Zhang, X.; et al. Bio-plausible reconfigurable spiking neuron for neuromorphic computing. Sci. Adv. 2025, 11, 8. [Google Scholar] [CrossRef] [PubMed]

- Yue, W.; Wu, K.; Li, Z.; Zhou, J.; Wang, Z.; Zhang, T.; Yang, Y.; Ye, L.; Wu, Y.; Bu, W.; et al. Physical unclonable in-memory computing for simultaneous protecting private data and deep learning models. Nat. Commun. 2025, 16, 1031. [Google Scholar] [CrossRef]

- Wu, B.; Li, K.; Wang, L.; Yin, K.; Nie, M.; Sun, L. Revolutionizing sensing technologies: A comprehensive review of flexible acceleration sensors. FlexMat 2025, 2, 55–81. [Google Scholar] [CrossRef]

- Zhao, B.; Xin, Z.; Wang, Y.; Wu, C.; Wang, W.; Shi, R.; Peng, R.; Wu, Y.; Xu, L.; Pan, T.; et al. Bioinspired gas-receptor synergistic interaction for high-performance two-dimensional neuromorphic devices. Matter 2025, 8, 13. [Google Scholar] [CrossRef]

- Shao, L.; Zhang, J.; Chen, X.; Xu, D.; Gu, H.; Mu, Q.; Yu, F.; Liu, S.; Shi, X.; Sun, J.; et al. Artificial intelligence-driven distributed acoustic sensing technology and engineering application. PhotoniX 2025, 6, 4. [Google Scholar] [CrossRef]

| Structural Design Strategies | Principle | Advantages | Disadvantages |
|---|---|---|---|
| In-Plane Segment | Piezoresistive; Piezoelectric; Mechanical isolation; Piezoelectric-piezoresistive synergy | Simplified structure without multilayer stacking | Lower spatial resolution due to multiple elements per pixel |
| Multilayer Stacking | Ion-electron supercapacitor mechanism; Capacitance variation of porous dielectric layers; Capacitance variation of micro-cone structures; Interlocking structure capacitive sensing; Friction electricity and capacitance effect; Orthogonal integration | Strong resistance to interlayer crosstalk | Complex fabrication and strict interlayer alignment |
| 3D Configuration | Bending piezoelectric cantilever beam; 3D Microstrain gauge; 3D Tabletop piezoelectric sensor; Bionic 3D architecture; 3D Microchannel; 3D Electrode embedding | High directional resolution and sensitivity | Complex manufacturing process |
| Split-Type Structure | Sine-magnetized thin film; Centripetal magnetization split-type; Orthogonal overlay magnetic film; Electromagnetic induction; Visual; Optics | Inherent self-decoupling of multidimensional forces | Susceptible to environmental interference |
| Other Structure | Visual-tactile synchronized perception; Bionic compound eye structure; Multispectral imaging; The principle of pinhole cameras; Bionic skin structure; Foldable optical path with rigid-flexible coupled structure | Excellent force-decoupling capability | High system complexity |

| Structural Design Strategies | Ref. (Figure) | Principle | Sensitivity | Error Rate | Sensing Range | Response Time | Applications |
|---|---|---|---|---|---|---|---|
| In-Plane Segment | [55] Figure 3a | Piezoresistive | Normal: 12.1 kPa⁻¹; Shear: 59.9 N⁻¹ | <12% | Normal: 0–5 kPa; Shear: 0–0.6 N | 3.1 ms | Robotic gripping control |
| In-Plane Segment | [99] Figure 3b | Piezoresistive | Normal: /; Shear: 25.76 N⁻¹ | / | Normal: /; Shear: 5.4–100 mN | 112 ms | Surface texture recognition |
| In-Plane Segment | [78] Figure 3c | Piezoelectric | Normal: 346.5 pC/N; Shear: / | / | Normal: 0.009–4.3 N; Shear: / | / | Robotic dynamic haptics |
| In-Plane Segment | [79] Figure 3d | Piezoelectric | Normal: 0.0635%/mN; Shear: / | / | Normal: 50–250 mN; Shear: / | 10 ms | Closed-loop robotic gripping |
| In-Plane Segment | [64] Figure 3e | Mechanical isolation | Normal: 3.78 kPa⁻¹; Shear: 0.1 N⁻¹ | / | Normal: 1–25 kPa; Shear: / | 150–200 ms | Multi-touch gesture recognition |
| In-Plane Segment | [80] Figure 3f | Piezoelectric-piezoresistive synergy | Normal: 70.6–35.8 mV/N; Shear: 179–261 mV/N | / | Normal: 0.01–7 N; Shear: 0.01–7 N | / | Clinical tissue identification |
| Multilayer Stacking | [60] Figure 4a | Ion-electron supercapacitor mechanism | Normal: /; Shear: / | <4.22° | Normal: 0–3 N; Shear: 0–1 N | 64 ms | Robot grasping |
| Multilayer Stacking | [66] Figure 4b | Capacitance variation of porous dielectric layers | Normal: 3800 count/N; Shear: Fx/Fy: 682/2818 count/N | / | Normal: 0–50 N; Shear: 0–3.3 N | 1.66 ms | Handling fragile food items |
| Multilayer Stacking | [81] Figure 4c | Capacitance variation of micro-cone structures | Normal: 3.5 kPa⁻¹; Shear: 0.134 N⁻¹ | / | Normal: 0–85 kPa; Shear: 0–0.5 N | 26 ms | Robot grasping |
| Multilayer Stacking | [82] Figure 4d | Interlocking structure capacitive sensing | Normal: 0.19 kPa⁻¹; Shear: 3.0 Pa⁻¹ | / | Normal: 0–100 kPa; Shear: / | / | Robotic arm obstacle avoidance |
| Multilayer Stacking | [83] Figure 4e | Friction electricity and capacitance effect | Normal: 2.47 V/kPa; Shear: 0.28 MHz/N | / | Normal: 2–30 kPa; Shear: 0.3–1.0 N | / | Human–computer interaction |
| Multilayer Stacking | [62] Figure 4f | Orthogonal integration | Normal: 187.71 kPa⁻¹; Shear: / | / | Normal: 0–220 kPa; Shear: / | 50 ms | Robot adaptive grasping |
| 3D Configuration | [86] Figure 5a | Bending piezoelectric cantilever beam | Normal: 3.74 × 10⁻⁷ Pa⁻¹; Shear: 7.91 × 10⁻⁷ Pa⁻¹ | ≤3% | Normal: 10–2000 Pa; Shear: −1300–2000 Pa | / | Robot object weight estimation |
| 3D Configuration | [78] Figure 5b | 3D microstrain gauge | Normal: 8.16 × 10⁻³ N⁻¹; Shear: 1.09 × 10⁻² N⁻¹ | <6% | Normal: 0–2 N; Shear: 0–0.4 N | 69 ms | Wireless monitoring of biomechanical signals |
| 3D Configuration | [88] Figure 5c | 3D Tabletop piezoelectric sensor | Normal: −0.1% kPa⁻¹; Shear: ±0.07% kPa⁻¹ | <0.1% | Normal: 0–200 kPa; Shear: 0–10 N | 10 ms | Wireless haptic system |
| 3D Configuration | [8] Figure 5d | Bionic 3D architecture | Normal: 5 × 10⁻⁵ Pa⁻¹; Shear: 6 × 10⁻⁴ N⁻¹ | <1.5° | Normal: 0–80 kPa; Shear: 0–0.5 N | / | Real-time measurement of elastic modulus |
| 3D Configuration | [84] Figure 5e | 3D microchannel | Normal: /; Shear: / | / | Normal: 0–35 N; Shear: 0–13 N | / | Human–machine interaction soft robotics |
| 3D Configuration | [85] Figure 5f | 3D Electrode embedding | Normal: 0.0982 kPa⁻¹; Shear: 0.378 kPa⁻¹ | / | Normal: 0–120 kPa; Shear: 0–12 cm | 19 ms | Self-healing proximity/pressure dual-mode sensing |
| Split-Type Structure | [105] Figure 6a | Sine-magnetized thin film | Normal: 0.01 kPa⁻¹; Shear: 0.1 kPa⁻¹ | / | Normal: 0–120 kPa; Shear: 0–16 kPa | 15 ms | Stable grasping of fragile objects |
| Split-Type Structure | [106] Figure 6b | Centripetal magnetization split-type | Normal: /; Shear: / | Normal: <2.33%; Shear: <1.33% | Normal: /; Shear: / | / | Underwater navigation for vessels |
| Split-Type Structure | [58] Figure 6c | Orthogonal overlay magnetic film | Normal: 0.002 kPa⁻¹; Shear: 0.006–0.039 kPa⁻¹ | / | Normal: 28–260 N; Shear: ±8–±27 N | / | Three-dimensional force distribution measurement of artificial knee joints |
| Split-Type Structure | [111] Figure 6d | Electromagnetic induction | Normal: 3.87 μV/N; Shear: / | / | Normal: 0.04–15 N; Shear: / | / | Black box exploration |
| Split-Type Structure | [91] Figure 6e | Visual | Normal: /; Shear: / | / | Normal: /; Shear: / | / | Robot teaching |
| Split-Type Structure | [115] Figure 6f | Optics | Normal: /; Shear: / | Normal: <6.7 kPa; Shear: <0.56 kPa | Normal: 0–360 kPa; Shear: 0–100 kPa | / | Multi-point 3D pressure distribution detection |
| Other Structure | [119] Figure 7a | Visual-tactile synchronized perception | Normal: /; Shear: / | / | Normal: 0.5–50 kg; Shear: / | 1000 Hz | Slip detection |
| Other Structure | [120] Figure 7b | Bionic compound eye structure | Normal: /; Shear: / | / | Normal: /; Shear: / | 30 Hz | Grab experiment |
| Other Structure | [121] Figure 7c | Multispectral imaging | Normal: ±0.023 N; Shear: ±0.023 N | / | Normal: /; Shear: / | / | Fragile object grasping |
| Other Structure | [122] Figure 7d | The principle of pinhole cameras | Normal: /; Shear: / | / | Normal: 0–11 N; Shear: 0–4 N | 1 ms | Sliding detection and friction measurement |
| Other Structure | [123] Figure 7e | Bionic skin structure | Normal: /; Shear: / | <0.2 N | Normal: /; Shear: / | 30 fps | Object shape recognition |
| Other Structure | [124] Figure 7f | Foldable optical path with rigid-flexible coupled structure | Normal: 1228.7 kPa⁻¹; Shear: 7339.5 kPa⁻¹ | / | Normal: 0–5 kPa; Shear: 0–1.5 kPa | 6 ms | Joystick human–computer interaction |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Xia, M.; Chen, P.; Cai, B.; Chen, H.; Xie, X.; Wu, J.; Shi, Q. Innovations in Multidimensional Force Sensors for Accurate Tactile Perception and Embodied Intelligence. AI Sens. 2025, 1, 7. https://doi.org/10.3390/aisens1020007
