Students’ Burnout Symptoms Detection Using Smartwatch Wearable Devices: A Systematic Literature Review

Abstract

1. Introduction

2. Objectives

- RQ1. What are the research purposes, subjects, and the behavioral patterns of the reviewed studies?

- RQ2. Which wearable devices, AI technology, and AI predictive models are adopted in the reviewed studies?

- RQ3. Which surveys have been used in the reviewed studies and which mental disorders have been verified?

- RQ4. What challenges and limitations are stated in the reviewed studies?

- RQ5. What are the ethical considerations that participants had to handle during the usage of wearable AI technology?

- RQ6. What are the accuracy and the performance of the surveyed systems and how are they calculated?

- RQ7. How are the results of each study exploited and what are the main findings of each of them?

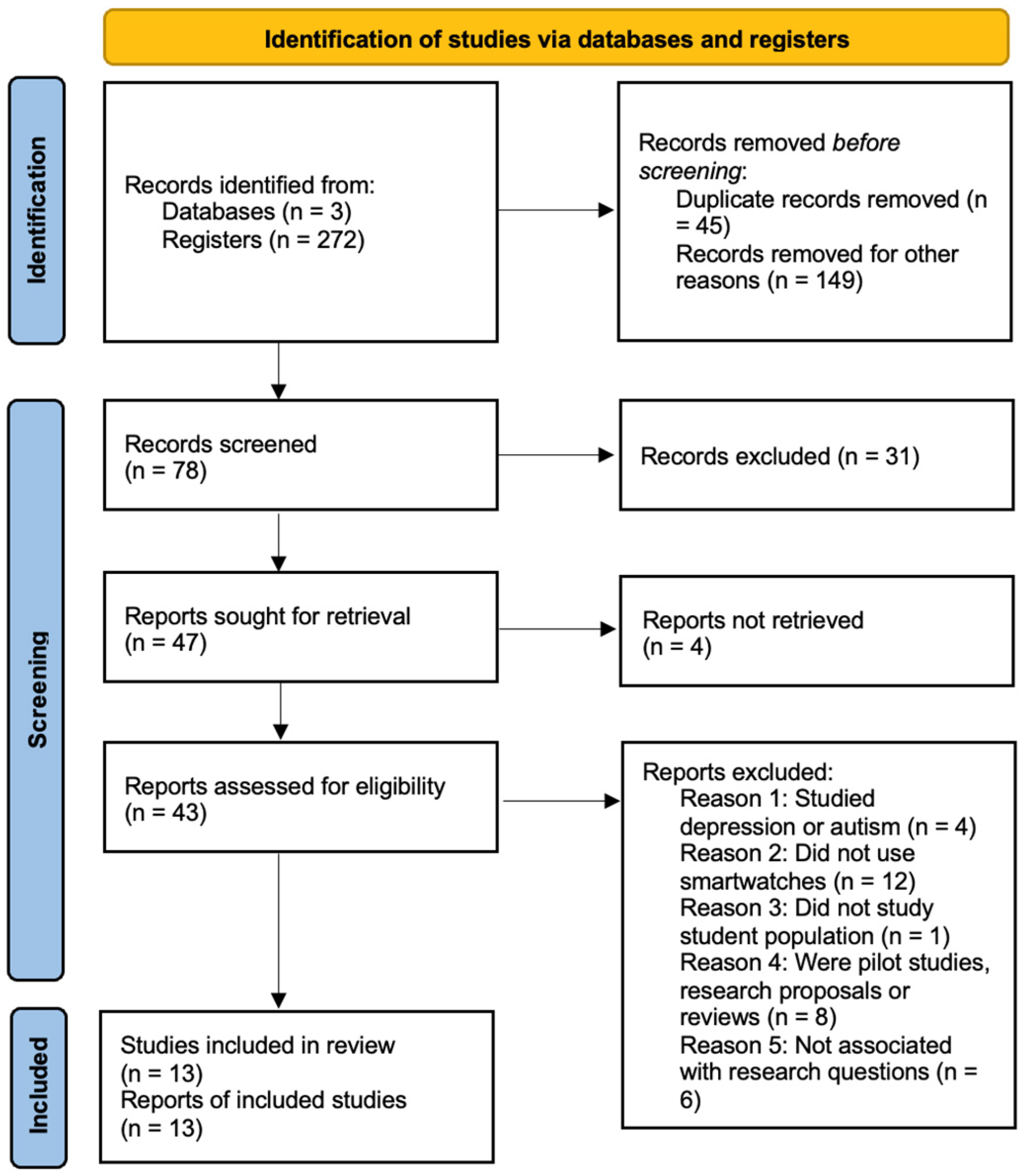

3. Materials and Methods

3.1. Inclusion and Exclusion Criteria

3.2. Searching Strategies

3.3. Open Data Repositories

3.4. Bias of the Selected Studies

4. Results

4.1. Purposes, Subjects, and Behavioral Patterns

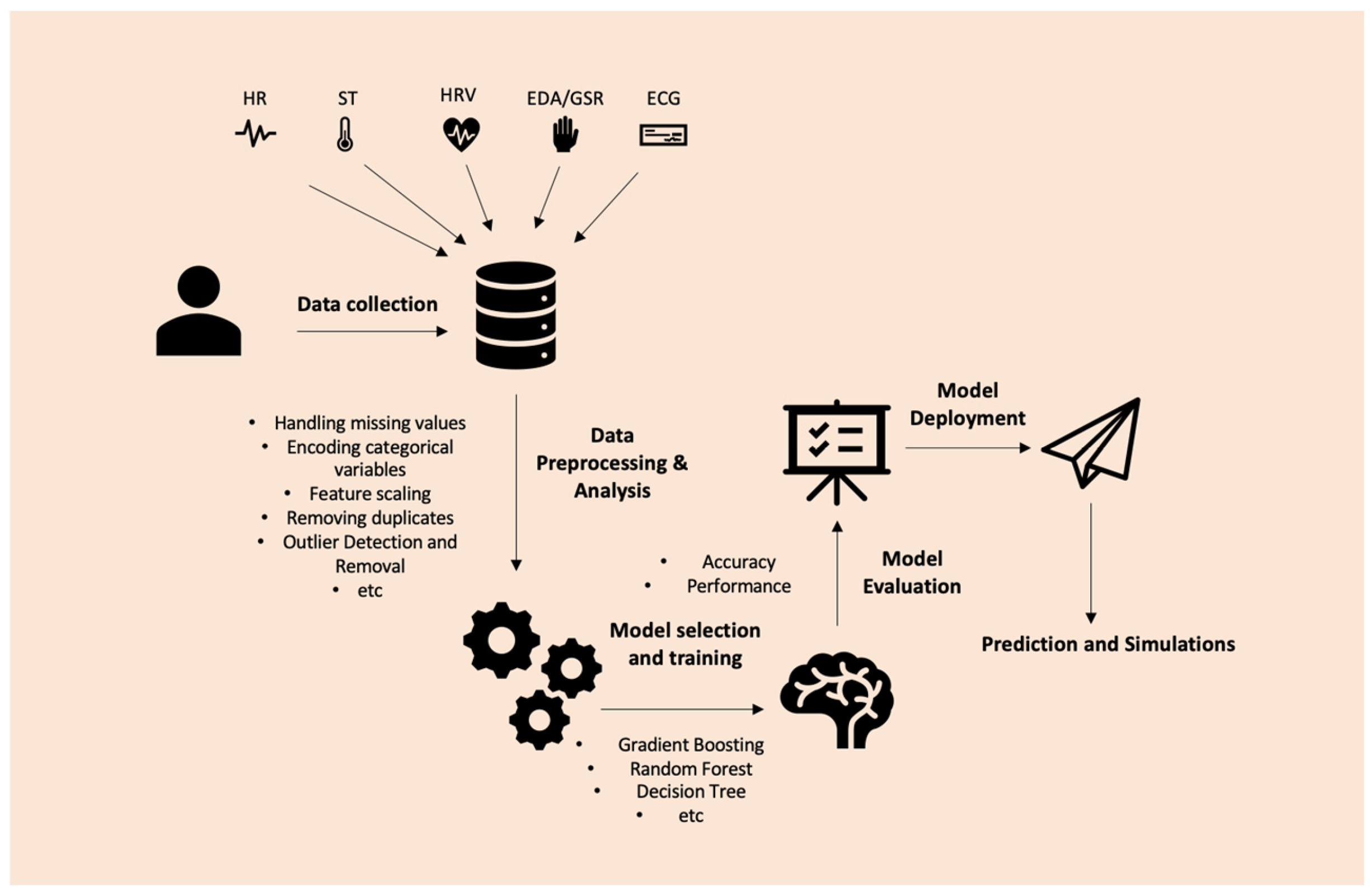

4.2. Wearable Devices, AI Technology, and AI Predictive Models

4.3. Measuring Mental Disorders Using Physiological Signals, Mental Scales, and AI Predictive Models

4.4. Challenges and Limitations in the Reviewed Studies

4.5. Ethical Considerations That Participants Had to Handle During the Usage of Wearable AI Technology

4.6. Performance and Accuracy of the Surveyed Systems

4.6.1. Statistical and Analytical Techniques

4.6.2. Machine Learning Approaches

4.7. Results and Main Findings of Each Survey

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| EDA | Electrodermal Activity |
| EMA | Ecological Momentary Assessment |
| EPA | Ecological Physiological Assessment |
| ECG | Electrocardiogram |
| EEG | Electroencephalogram |
| ST | Skin Temperature |
| CGBT | Cognitive Behavior Therapy |
| GST | Galvanic Skin Temperature |
| PSS | Perceived Stress Scale |
| SRI | Stress Response Inventory |
| STAI | State–Trait Anxiety Inventory |
| HAMD | Hamilton Depression Scale |
| BDI | Beck Depression Inventory Scale |
| DSM-IV | Diagnostic and Statistical Manual of Mental Disorders |
| LMMs | Linear Mixed Methods |
| LOBO | Leave-One-Beep-Out |
| LOSO | Leave-One-Subject-Out |
| LOTO | Leave-One-Trial-Out |
| RF | Random Forest |
| DCDR | Data Completion with Diurnal Regularizers |
| THAN | Temporally Hierarchical Attention |
| MTL | Multitask Learning |

References

- WHO|Basic Documents. Available online: https://apps.who.int/gb/bd/ (accessed on 29 August 2024).

- Di Mario, S.; Rollo, E.; Gabellini, S.; Filomeno, L. How Stress and Burnout Impact the Quality of Life Amongst Healthcare Students: An Integrative Review of the Literature. Teach. Learn. Nurs. 2024, 19, 315–323.

- Silva, E.; Aguiar, J.; Reis, L.P.; Sá, J.O.E.; Gonçalves, J.; Carvalho, V. Stress among Portuguese Medical Students: The EuStress Solution. J. Med. Syst. 2020, 44, 45.

- Gabola, P.; Meylan, N.; Hascoët, M.; De Stasio, S.; Fiorilli, C. Adolescents’ School Burnout: A Comparative Study between Italy and Switzerland. Eur. J. Investig. Health Psychol. Educ. 2021, 11, 849–859.

- Treluyer, L.; Tourneux, P. Burnout among Paediatric Residents during the COVID-19 Outbreak in France. Eur. J. Pediatr. 2021, 180, 627–633.

- Walburg, V. Burnout among high school students: A literature review. Child. Youth Serv. Rev. 2014, 42, 28–33.

- Beck, M.S.; Fjorback, L.O.; Juul, L. Associations between Mental Health and Sociodemographic Characteristics among Schoolchildren. A Cross-Sectional Survey in Denmark 2019. Scand. J. Public Health 2022, 50, 463–470.

- Govorova, E.; Benítez, I.; Muñiz, J. How Schools Affect Student Well-Being: A Cross-Cultural Approach in 35 OECD Countries. Front. Psychol. 2020, 11, 431.

- Fiorilli, C.; De Stasio, S.; Di Chiacchio, C.; Pepe, A.; Salmela-Aro, K. School Burnout, Depressive Symptoms and Engagement: Their Combined Effect on Student Achievement. Int. J. Educ. Res. 2017, 84, 1–12.

- Liu, W.; Zhang, R.; Wang, H.; Rule, A.; Wang, M.; Abbey, C.; Singh, M.K.; Rozelle, S.; She, X.; Tong, L. Association between Anxiety, Depression Symptoms, and Academic Burnout among Chinese Students: The Mediating Role of Resilience and Self-Efficacy. BMC Psychol. 2024, 12, 335.

- Sarfika, R.; Azzahra, W.; Ananda, Y.; Saifudin, I.M.; Moh., Y.; Abdullah, K.L. Academic Burnout among Nursing Students: The Role of Stress, Depression, and Anxiety within the Demand Control Model. Teach. Learn. Nurs. 2025, in press.

- Kong, L.-N.; Yao, Y.; Chen, S.-Z.; Zhu, J.-L. Prevalence and Associated Factors of Burnout among Nursing Students: A Systematic Review and Meta-Analysis. Nurse Educ. Today 2023, 121, 105706.

- Al-Awad, F.A. Academic Burnout, Stress, and the Role of Resilience in a Sample of Saudi Arabian Medical Students. Med. Arch. Sarajevo Bosnia Herzeg. 2024, 78, 39–43.

- Liu, X.; Zhang, L.; Wu, G.; Yang, R.; Liang, Y. The Longitudinal Relationship between Sleep Problems and School Burnout in Adolescents: A Cross-Lagged Panel Analysis. J. Adolesc. 2021, 88, 14–24.

- Chirkowska-Smolak, T.; Piorunek, M.; Górecki, T.; Garbacik, Ż.; Drabik-Podgórna, V.; Kławsiuć-Zduńczyk, A. Academic Burnout of Polish Students: A Latent Profile Analysis. Int. J. Environ. Res. Public Health 2023, 20, 4828.

- Koulouris, D.; Menychtas, A.; Maglogiannis, I. An IoT-Enabled Platform for the Assessment of Physical and Mental Activities Utilizing Augmented Reality Exergaming. Sensors 2022, 22, 3181.

- Abd-Alrazaq, A.; Alajlani, M.; Ahmad, R.; AlSaad, R.; Aziz, S.; Ahmed, A.; Alsahli, M.; Damseh, R.; Sheikh, J. The Performance of Wearable AI in Detecting Stress Among Students: Systematic Review and Meta-Analysis. J. Med. Internet Res. 2024, 26, e52622.

- Agarwal, A.K.; Gonzales, R.; Scott, K.; Merchant, R. Investigating the Feasibility of Using a Wearable Device to Measure Physiologic Health Data in Emergency Nurses and Residents: Observational Cohort Study. JMIR Form. Res. 2024, 8, e51569.

- Mason, R.; Godfrey, A.; Barry, G.; Stuart, S. Wearables for Running Gait Analysis: A Study Protocol. PLoS ONE 2023, 18, e0291289.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B.; PRISMA-S Group. PRISMA-S: An Extension to the PRISMA Statement for Reporting Literature Searches in Systematic Reviews. Syst. Rev. 2021, 10, 39.

- Amir-Behghadami, M.; Janati, A. Reporting Systematic Review in Accordance with the PRISMA Statement Guidelines: An Emphasis on Methodological Quality. Disaster Med. Public Health Prep. 2021, 15, 544–545.

- Zotero|Your Personal Research Assistant. Available online: https://www.zotero.org/ (accessed on 4 April 2025).

- Morgan, D.E. Zotero as a Teaching Tool for Independent Study Courses, Honors Contracts, and Undergraduate Research Mentoring. J. Microbiol. Biol. Educ. 2024, 25, e0013224.

- Islam, T.Z.; Wu Liang, P.; Sweeney, F.; Pragner, C.; Thiagarajan, J.J.; Sharmin, M.; Ahmed, S. College Life Is Hard!—Shedding Light on Stress Prediction for Autistic College Students Using Data-Driven Analysis. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021; pp. 428–437.

- Nguyen, J.; Cardy, R.E.; Anagnostou, E.; Brian, J.; Kushki, A. Examining the Effect of a Wearable, Anxiety Detection Technology on Improving the Awareness of Anxiety Signs in Autism Spectrum Disorder: A Pilot Randomized Controlled Trial. Mol. Autism 2021, 12, 72.

- Van Laarhoven, T.R.; Johnson, J.W.; Andzik, N.R.; Fernandes, L.; Ackerman, M.; Wheeler, M.; Melody, K.; Cornell, V.; Ward, G.; Kerfoot, H. Using Wearable Biosensor Technology in Behavioral Assessment for Individuals with Autism Spectrum Disorders and Intellectual Disabilities Who Experience Anxiety. Adv. Neurodev. Disord. 2021, 5, 156–169.

- Conderman, G.; Van Laarhoven, T.; Johnson, J.; Liberty, L. Wearable Technologies for Students with Disabilities. Support Learn. 2021, 36, 664–677.

- Thammasan, N.; Stuldreher, I.; Schreuders, E.; Giletta, M.; Brouwer, A.-M. A Usability Study of Physiological Measurement in School Using Wearable Sensors. Sensors 2020, 20, 5380.

- Lim, K.Y.T.; Nguyen Thien, M.T.; Nguyen Duc, M.A.; Posada-Quintero, H.F. Application of DIY Electrodermal Activity Wristband in Detecting Stress and Affective Responses of Students. Bioengineering 2024, 11, 291.

- Harvey, R.H.; Peper, E.; Mason, L.; Joy, M. Effect of Posture Feedback Training on Health. Appl. Psychophysiol. Biofeedback 2020, 45, 59–65.

- Chiovato, A.; Demarzo, M.; Notargiacomo, P. Evaluation of Mindfulness State for the Students Using a Wearable Measurement System. J. Med. Biol. Eng. 2021, 41, 690–703.

- Lin, B.; Prickett, C.; Woltering, S. Feasibility of Using a Biofeedback Device in Mindfulness Training-a Pilot Randomized Controlled Trial. Pilot Feasibility Stud. 2021, 7, 84.

- Jiao, Y.; Wang, X.; Liu, C.; Du, G.; Zhao, L.; Dong, H.; Zhao, S.; Liu, Y. Feasibility Study for Detection of Mental Stress and Depression Using Pulse Rate Variability Metrics via Various Durations. Biomed. Signal Process. Control 2023, 79, 104145.

- Radhakrishnan, S.; Duvvuru, A.; Kamarthi, S.V. Investigating Discrete Event Simulation Method to Assess the Effectiveness of Wearable Health Monitoring Devices. Procedia Econ. Financ. 2014, 11, 838–856.

- Nelson, B.W.; Harvie, H.M.K.; Jain, B.; Knight, E.L.; Roos, L.E.; Giuliano, R.J. Smartphone Photoplethysmography Pulse Rate Covaries with Stress and Anxiety During a Digital Acute Social Stressor. Psychosom. Med. 2023, 85, 577–584.

- Mocny-Pachońska, K.; Doniec, R.J.; Sieciński, S.; Piaseczna, N.J.; Pachoński, M.; Tkacz, E.J. The Relationship between Stress Levels Measured by a Questionnaire and the Data Obtained by Smart Glasses and Finger Pulse Oximeters among Polish Dental Students. Appl. Sci. 2021, 11, 8648.

- Ma, C.; Xu, H.; Yan, M.; Huang, J.; Yan, W.; Lan, K.; Wang, J.; Zhang, Z. Longitudinal Changes and Recovery in Heart Rate Variability of Young Healthy Subjects When Exposure to a Hypobaric Hypoxic Environment. Front. Physiol. 2022, 12, 688921.

- Wu, W.; Pirbhulal, S.; Zhang, H.; Mukhopadhyay, S.C. Quantitative Assessment for Self-Tracking of Acute Stress Based on Triangulation Principle in a Wearable Sensor System. IEEE J. Biomed. Health Inform. 2019, 23, 703–713.

- Abromavičius, V.; Serackis, A.; Katkevičius, A.; Kazlauskas, M.; Sledevic, T. Prediction of Exam Scores Using a Multi-Sensor Approach for Wearable Exam Stress Dataset with Uniform Preprocessing. Technol. Health Care 2023, 31, 2499–2511.

- Choi, A.; Ooi, A.; Lottridge, D. Digital Phenotyping for Stress, Anxiety, and Mild Depression: Systematic Literature Review. JMIR mHealth uHealth 2024, 12, e40689.

- Odaka, T.; Misaki, D. A proposal for the stress assessment of online education based on the use of a wearable device. J. Res. Appl. Mech. Eng. 2021, 9, 1–9.

- De Arriba Perez, F.; Santos-Gago, J.M.; Caeiro-Rodriguez, M.; Fernandez Iglesias, M.J. Evaluation of Commercial-Off-The-Shelf Wrist Wearables to Estimate Stress on Students. JOVE-J. Vis. Exp. 2018, 136, e57590.

- Johnson, J.; Conderman, G.; Van Laarhoven, T.; Liberty, L. Wearable Technologies: A New Way to Address Student Anxiety. Kappa Delta Pi Rec. 2022, 58, 124–129.

- Price, M.; Hidalgo, J.E.; Bird, Y.M.; Bloomfield, L.S.P.; Buck, C.; Cerutti, J.; Dodds, P.S.; Fudolig, M.I.; Gehman, R.; Hickok, M.; et al. A Large Clinical Trial to Improve Well-Being during the Transition to College Using Wearables: The Lived Experiences Measured Using Rings Study. Contemp. Clin. Trials 2023, 133, 107338.

- Takpor, T.O.; Atayero, A.A. Integrating Internet of Things and EHealth Solutions for Students’ Healthcare. In World Congress on Engineering, WCE 2015; Ao, S.I., Gelman, L., Hukins, D.W.L., Hunter, A., Korsunsky, A.M., Eds.; Lecture Notes in Engineering and Computer Science; International Association Engineers-IAENG: Hong Kong, China, 2015; Volume I, pp. 265–268.

- Bopp, T.; Vadeboncoueur, J.D. “It makes me want to take more steps”: Racially and economically marginalized youth experiences with and perceptions of Fitbit Zips® in a sport-based youth development program. J. Sport Dev. 2021, 9, 54–57. Available online: https://jsfd.org/2021/10/01/it-makes-me-want-to-take-more-steps-racially-and-economically-marginalized-youth-experiences-with-and-perceptions-of-fitbit-zips-in-a-sport-based-youth-development-program/ (accessed on 30 August 2024).

- Shui, X.; Chen, Y.; Hu, X.; Wang, F.; Zhang, D. Personality in Daily Life: Multi-Situational Physiological Signals Reflect Big-Five Personality Traits. IEEE J. Biomed. Health Inform. 2023, 27, 2853–2863.

- Yen, H.-Y. Smart Wearable Devices as a Psychological Intervention for Healthy Lifestyle and Quality of Life: A Randomized Controlled Trial. Qual. Life Res. 2021, 30, 791–802.

- Kim, S.Y.; Kim, D.H.; Kim, M.J.; Ko, H.J.; Jeong, O.R. XAI-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2024, 14, 6638.

- Wack, M.; Coulet, A.; Burgun, A.; Rance, B. Enhancing Clinical Data Warehouses with Provenance and Large File Management: The gitOmmix Approach for Clinical Omics Data. arXiv 2024, arXiv:2409.03288.

- Tang, X.; Upadyaya, K.; Salmela-Aro, K. School Burnout and Psychosocial Problems among Adolescents: Grit as a Resilience Factor. J. Adolesc. 2021, 86, 77–89.

- Kang, S.; Choi, W.; Park, C.Y.; Cha, N.; Kim, A.; Khandoker, A.H.; Hadjileontiadis, L.; Kim, H.; Jeong, Y.; Lee, U. K-EmoPhone: A Mobile and Wearable Dataset with In-Situ Emotion, Stress, and Attention Labels. Sci. Data 2023, 10, 351.

- Zenodo: Open-access repository. Available online: https://about.zenodo.org/projects/ (accessed on 12 April 2024).

- Figshare—Credit for All Your Research. Available online: https://figshare.com/ (accessed on 12 April 2024).

- Dryad|Publish and Preserve Your Data. Available online: https://datadryad.org/ (accessed on 12 April 2024).

- OSF. Available online: https://osf.io/ (accessed on 12 April 2024).

- PhysioNet Databases. Available online: https://physionet.org/about/database/ (accessed on 12 April 2024).

- Dataverse. Available online: https://www.dataverse.gr/ (accessed on 12 April 2024).

- OpenNeuro. Available online: https://openneuro.org/ (accessed on 12 April 2024).

- European Open Science Cloud (EOSC)—European Commission. Available online: https://research-and-innovation.ec.europa.eu/strategy/strategy-research-and-innovation/our-digital-future/open-science/european-open-science-cloud-eosc_en (accessed on 12 April 2024).

- Find Open Datasets and Machine Learning Projects|Kaggle. Available online: https://www.kaggle.com/datasets (accessed on 12 April 2024).

- Saylam, B.; Incel, O.D. Multitask Learning for Mental Health: Depression, Anxiety, Stress (DAS) Using Wearables. Diagnostics 2024, 14, 501.

- Ponzo, S.; Morelli, D.; Kawadler, J.M.; Hemmings, N.R.; Bird, G.; Plans, D. Efficacy of the Digital Therapeutic Mobile App BioBase to Reduce Stress and Improve Mental Well-Being Among University Students: Randomized Controlled Trial. JMIR mHealth uHealth 2020, 8, e17767.

- Ramírez-Moreno, M.A.; Carrillo-Tijerina, P.; Candela-Leal, M.O.; Alanis-Espinosa, M.; Tudón-Martínez, J.C.; Roman-Flores, A.; Ramírez-Mendoza, R.A.; Lozoya-Santos, J.J. Evaluation of a Fast Test Based on Biometric Signals to Assess Mental Fatigue at the Workplace—A Pilot Study. Int. J. Environ. Res. Public Health 2021, 18, 11891.

- Chandra, V.; Sethia, D. Machine Learning-Based Stress Classification System Using Wearable Sensor Devices. IAES Int. J. Artif. Intell. 2024, 13, 337–347.

- Chalmers, T.; Hickey, B.A.; Newton, P.; Lin, C.-T.; Sibbritt, D.; McLachlan, C.S.; Clifton-Bligh, R.; Morley, J.; Lal, S. Stress Watch: The Use of Heart Rate and Heart Rate Variability to Detect Stress: A Pilot Study Using Smart Watch Wearables. Sensors 2022, 22, 151.

- Tutunji, R.; Kogias, N.; Kapteijns, B.; Krentz, M.; Krause, F.; Vassena, E.; Hermans, E.J. Detecting Prolonged Stress in Real Life Using Wearable Biosensors and Ecological Momentary Assessments: Naturalistic Experimental Study. J. Med. Internet Res. 2023, 25, e39995.

- Jiang, J.-Y.; Chao, Z.; Bertozzi, A.L.; Wang, W.; Young, S.D.; Needell, D. Learning to Predict Human Stress Level with Incomplete Sensor Data from Wearable Devices. In Proceedings of the 28th ACM International Conference on Information & Knowledge Management (CIKM ’19), Beijing, China, 3–7 November 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2773–2781.

- Gagnon, J.; Khau, M.; Lavoie-Hudon, L.; Vachon, F.; Drapeau, V.; Tremblay, S. Comparing a Fitbit Wearable to an Electrocardiogram Gold Standard as a Measure of Heart Rate Under Psychological Stress: A Validation Study. JMIR Form. Res. 2022, 6, e37885.

- Pakhomov, S.V.S.; Thuras, P.D.; Finzel, R.; Eppel, J.; Kotlyar, M. Using Consumer-Wearable Technology for Remote Assessment of Physiological Response to Stress in the Naturalistic Environment. PLoS ONE 2020, 15, e0229942.

- Oweis, K.; Quteishat, H.; Zgoul, M.; Haddad, A. A Study on the Effect of Sports on Academic Stress Using Wearable Galvanic Skin Response. In Proceedings of the 2018 12th International Symposium on Medical Information and Communication Technology (ISMICT), Sydney, Australia, 26–28 March 2018; International Symposium on Medical Information and Communication Technology. IEEE: New York, NY, USA, 2018; pp. 99–104.

- Kang, J.; Park, D. Stress Management Design Guideline with Smart Devices during COVID-19. Arch. Des. Res. 2022, 35, 115–131.

- Huckins, J.F.; daSilva, A.W.; Wang, W.; Hedlund, E.; Rogers, C.; Nepal, S.K.; Wu, J.; Obuchi, M.; Murphy, E.I.; Meyer, M.L.; et al. Mental Health and Behavior of College Students During the Early Phases of the COVID-19 Pandemic: Longitudinal Smartphone and Ecological Momentary Assessment Study. J. Med. Internet Res. 2020, 22, e20185.

- Manchia, M.; Gathier, A.W.; Yapici-Eser, H.; Schmidt, M.V.; de Quervain, D.; van Amelsvoort, T.; Bisson, J.I.; Cryan, J.F.; Howes, O.D.; Pinto, L.; et al. The Impact of the Prolonged COVID-19 Pandemic on Stress Resilience and Mental Health: A Critical Review across Waves. Eur. Neuropsychopharmacol. 2022, 55, 22–83.

- Chung, E.; Jiann, B.-P.; Nagao, K.; Hakim, L.; Huang, W.; Lee, J.; Lin, H.; Mai, D.B.T.; Nguyen, Q.; Park, H.J.; et al. COVID Pandemic Impact on Healthcare Provision and Patient Psychosocial Distress: A Multi-National Cross-Sectional Survey among Asia-Pacific Countries. World J. Mens Health 2021, 39, 797–803.

- Al-Ajlouni, Y.A.; Park, S.H.; Alawa, J.; Shamaileh, G.; Bawab, A.; El-Sadr, W.M.; Duncan, D.T. Anxiety and Depressive Symptoms Are Associated with Poor Sleep Health during a Period of COVID-19-Induced Nationwide Lockdown: A Cross-Sectional Analysis of Adults in Jordan. BMJ Open 2020, 10, e041995.

- Maslach, C.; Schaufeli, W.B.; Leiter, M.P. Job Burnout. Annu. Rev. Psychol. 2001, 52, 397–422.

- Beckstrand, J.; Yanchus, N.; Osatuke, K. Only One Burnout Estimator Is Consistently Associated with Health Care Providers’ Perceptions of Job Demand and Resource Problems. Psychology 2017, 8, 1019–1041.

- Lin, S.-H.; Huang, Y.-C. Life Stress and Academic Burnout. Act. Learn. High. Educ. 2014, 15, 77–90.

- Balogun, J.A.; Hoeberlein-Miller, T.M.; Schneider, E.; Katz, J.S. Academic Performance Is Not a Viable Determinant of Physical Therapy Students’ Burnout. Percept. Mot. Skills 1996, 83, 21–22.

- El-Masry, R.; Ghreiz, S.; Helal, R.; Audeh, A.; Shams, T. Perceived Stress and Burnout among Medical Students during the Clinical Period of Their Education. Ibnosina J. Med. Biomed. Sci. 2022, 05, 179–188.

- Kodikara, C.; Wijekoon, S.; Meegahapola, L. FatigueSense: Multi-Device and Multimodal Wearable Sensing for Detecting Mental Fatigue. ACM Trans. Comput. Healthc. 2025, 6, 1–36.

- Saeedi, F.; Ansari, R.; Haghgoo, M. Recent Development in Wearable Sensors for Healthcare Applications. Nano-Struct. Nano-Objects 2025, 42, 101473.

- Mandal, A.; Paradkar, M.; Panindre, P.; Kumar, S. AI-Based Detection of Stress Using Heart Rate Data Obtained from Wearable Devices. Procedia Comput. Sci. 2023, 230, 749–759.

- Schroeder, N.L.; Romine, W.L.; Kemp, S.E. A Scoping Review of Wrist-Worn Wearables in Education. Comput. Educ. Open 2023, 5, 100154.

- Chen, F.; Zhao, L.; Pang, L.; Zhang, Y.; Lu, L.; Li, J.; Liu, C. Wearable Physiological Monitoring of Physical Exercise and Mental Health: A Systematic Review. Intell. Sports Health 2025, 1, 11–21.

- Maglogiannis, I.; Trastelis, F.; Kalogeropoulos, M.; Khan, A.; Gallos, P.; Menychtas, A.; Panagopoulos, C.; Papachristou, P.; Islam, N.; Wolff, A.; et al. AI4Work Project: Human-Centric Digital Twin Approaches to Trustworthy AI and Robotics for Improved Working Conditions in Healthcare and Education Sectors. Stud. Health Technol. Inform. 2024, 316, 1013–1017.

- Bieling, P.J.; Antony, M.M.; Swinson, R.P. The State–Trait Anxiety Inventory, Trait Version: Structure and Content Re-Examined. Behav. Res. Ther. 1998, 36, 777–788.

- Knowles, K.A.; Olatunji, B.O. Specificity of Trait Anxiety in Anxiety and Depression: Meta-Analysis of the State-Trait Anxiety Inventory. Clin. Psychol. Rev. 2020, 82, 101928.

- Ghazizadeh, E.; Deigner, H.-P.; Al-Bahrani, M.; Muzammil, K.; Daneshmand, N.; Naseri, Z. DNA Bioinspired by Polyvinyl Alcohol -MXene-Borax Hydrogel for Wearable Skin Sensors. Sens. Actuators Phys. 2025, 386, 116331.

- Ulrich, C.M.; Demiris, G.; Kennedy, R.; Rothwell, E. The Ethics of Sensor Technology Use in Clinical Research. Nurs. Outlook 2020, 68, 720–726.

- Hensel, B.K.; Demiris, G.; Courtney, K.L. Defining Obtrusiveness in Home Telehealth Technologies: A Conceptual Framework. J. Am. Med. Inform. Assoc. 2006, 13, 428–431.

- Vouzis, E.; Maglogiannis, I. Prediction of Early Dropouts in Patient Remote Monitoring Programs. SN Comput. Sci. 2023, 4, 467.

- Ahsan, M.M.; Luna, S.A.; Siddique, Z. Machine-Learning-Based Disease Diagnosis: A Comprehensive Review. Healthcare 2022, 10, 541.

- Bifarin, O.O.; Fernández, F.M. Automated Machine Learning and Explainable AI (AutoML-XAI) for Metabolomics: Improving Cancer Diagnostics. BioRxiv 2023.

- Madden, G.R.; Boone, R.H.; Lee, E.; Sifri, C.D.; Petri, W.A. Predicting Clostridioides Difficile Infection Outcomes with Explainable Machine Learning. EBioMedicine 2024, 106, 105244.

- Nagavelli, U.; Samanta, D.; Chakraborty, P. Machine Learning Technology-Based Heart Disease Detection Models. J. Healthc. Eng. 2022, 2022, 7351061.

- Gatos, I.; Tsantis, S.; Spiliopoulos, S.; Karnabatidis, D.; Theotokas, I.; Zoumpoulis, P.; Loupas, T.; Hazle, J.D.; Kagadis, G.C. A Machine-Learning Algorithm Toward Color Analysis for Chronic Liver Disease Classification, Employing Ultrasound Shear Wave Elastography. Ultrasound Med. Biol. 2017, 43, 1797–1810.

- Mollica, G.; Francesconi, D.; Costante, G.; Moretti, S.; Giannini, R.; Puxeddu, E.; Valigi, P. Classification of Thyroid Diseases Using Machine Learning and Bayesian Graph Algorithms. IFAC-Pap. 2022, 55, 67–72.

- Ghosal, S.; Jain, A. Depression and Suicide Risk Detection on Social Media Using fastText Embedding and XGBoost Classifier. Procedia Comput. Sci. 2023, 218, 1631–1639.

- Pavlopoulos, A.; Rachiotis, T.; Maglogiannis, I. An Overview of Tools and Technologies for Anxiety and Depression Management Using AI. Appl. Sci. 2024, 14, 9068.

- Chen, D.; Yao, Y.; Moser, E.D.; Wang, W.; Soliman, E.Z.; Mosley, T.; Pan, W. A Novel Electrocardiogram-Based Model for Prediction of Dementia—The Atherosclerosis Risk in Communities (ARIC) Study. J. Electrocardiol. 2025, 88, 153832.

- Hasan, M.N.; Hossain, M.A.; Rahman, M.A. An Ensemble Based Lightweight Deep Learning Model for the Prediction of Cardiovascular Diseases from Electrocardiogram Images. Eng. Appl. Artif. Intell. 2025, 141, 109782.

- Lu, S.C.; Chen, G.Y.; Liu, A.S.; Sun, J.T.; Gao, J.W.; Huang, C.H.; Tsai, C.L.; Fu, L.C. Deep Learning–Based Electrocardiogram Model (EIANet) to Predict Emergency Department Cardiac Arrest: Development and External Validation Study. J. Med. Internet Res. 2025, 27, e67576.

- Wang, R.; Zhuang, P. A Strategy for Network Multi-Layer Information Fusion Based on Multimodel in User Emotional Polarity Analysis. Int. J. Cogn. Comput. Eng. 2025, 6, 120–130.

- Zlatintsi, A.; Filntisis, P.P.; Garoufis, C.; Efthymiou, N.; Maragos, P.; Menychtas, A.; Maglogiannis, I.; Tsanakas, P.; Sounapoglou, T.; Kalisperakis, E.; et al. E-Prevention: Advanced Support System for Monitoring and Relapse Prevention in Patients with Psychotic Disorders Analyzing Long-Term Multimodal Data from Wearables and Video Captures. Sensors 2022, 22, 7544.

- Liu, Z.; Ren, Y.; Kong, X.; Liu, S. Learning Analytics Based on Wearable Devices: A Systematic Literature Review From 2011 to 2021. J. Educ. Comput. Res. 2022, 60, 1514–1557.

- Jeong, S.C.; Kim, S.-H.; Park, J.Y.; Choi, B. Domain-Specific Innovativeness and New Product Adoption: A Case of Wearable Devices. Telemat. Inform. 2017, 34, 399–412.

- Albareda-Tiana, S.; Vidal-Raméntol, S.; Fernández-Morilla, M. Implementing the Sustainable Development Goals at University Level. Int. J. Sustain. High. Educ. 2018, 19, 473–497.

- Chaleta, E.; Saraiva, M.; Leal, F.; Fialho, I.; Borralho, A. Higher Education and Sustainable Development Goals (SDG)—Potential Contribution of the Undergraduate Courses of the School of Social Sciences of the University of Évora. Sustainability 2021, 13, 1828.

- Chong, P.L.; Ismail, D.; Ng, P.K.; Kong, F.Y.; Basir Khan, M.R.; Thirugnanam, S. A TRIZ Approach for Designing a Smart Lighting and Control System for Classrooms Based on Counter Application with Dual PIR Sensors. Sensors 2024, 24, 1177.

- Vergara-Rodríguez, D.; Antón-Sancho, Á.; Fernández-Arias, P. Variables Influencing Professors’ Adaptation to Digital Learning Environments during the COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2022, 19, 3732.

| Exclusion Reasons | Retrieved Studies |
|---|---|
| R1. Studied a mental disorder (e.g., depression, autism spectrum) | [25,26,27,28] |
| R2. Did not use smartwatches | [29,30,31,32,33,34,35,36,37,38,39] |
| R3. Did not study a student population | [40] |
| R4. Were pilot studies, research proposals, or reviews | [41,42,43,44,45] |
| R5. Were not associated with the research questions | [40,46,47,48,49,50] |

| Public Databases | Overview | Reference |
|---|---|---|
| Zenodo | Open-access repository developed by CERN for all research disciplines, including health and biomedical sciences. It provides broad interdisciplinary coverage, DOI assignment, and integration with other repositories. | [54] |
| Figshare | Digital repository for sharing research outputs, datasets, figures, and presentations. It provides a user-friendly interface, high visibility, and metadata support. | [55] |
| Dryad | Open repository for life science and medical research, primarily for datasets underlying publications. It provides peer-reviewed datasets and integration with journal submissions. | [56] |
| Open Science Framework | Collaborative platform for sharing and managing research data, including mental health and epidemiology studies. It has strong version control and project management tools. | [57] |
| PhysioNet | Provides access to biomedical datasets, including physiological signals such as ECG or EEG. It offers high-quality curated datasets that are widely used in clinical and machine learning research. | [58] |
| Dataverse | Open-source repository developed by Harvard University, hosting various datasets, including public health data. It offers well-structured metadata and institutional support. | [59] |
| OpenNeuro | Public repository focused on neuroimaging datasets, including fMRI, EEG, and MEG. It provides a standardized format and integration with neuroimaging software. | [60] |
| European Open Science Cloud (EOSC) | European initiative for research data, including biomedical datasets. | [61] |
| Kaggle | Online platform that hosts datasets, notebooks, and machine learning competitions, including health-related datasets. It has a large community with strong support for data science and AI applications. | [62] |

| Bias | Reviewed Studies |
|---|---|
| Reporting | [53,63,64,65] |
| Cognitive | [53,66,67,68] |
| Selection | [53,66,69,70,71,72] |
| Measurement | [67,68,69,70] |
| Design | [63,64,65,67] |
| Content | [53,65,68,69,70,71,72] |
| Demographic | [53,64,72] |

| Purposes | Studies | N |
|---|---|---|
| Predict | Stress levels using deep learning machines [70], mental stress levels [71], mental well-being, depression, stress, and anxiety [72], the predictive utility of pretreatment HRV in effectiveness of GCBT in reducing depression and anxiety symptoms [73], prediction of stress when exposed to an acute stressor [68] | 5 |
| Assess the efficacy | Efficacy of BioBase for anxiety and stress [65] | 1 |
| Detect | Stress levels [71,73], ecological stress [71], fatigue detection [72], response to psychological stress in everyday life [66] | 5 |
| Management | Attention management [54], stress management with cognitive process with smart device interventions [74] | 2 |

| Device | Anxiety | Depression | Stress | Fatigue | Attention |
|---|---|---|---|---|---|
| Empatica E4 Wristband | 2 | 1 | | | |
| Microsoft Band 2 | 1 | | | | |
| Fitbit Versa 2/Fitbit | 1 | 1 | 4 | | |
| BioBeam | 1 | 1 | | | |
| Smart Wristband (Not Specified) | | | | | |
| Huawei Band 6 with Photoplethysmography Sensors | 1 | 1 | | | |
| Apple Watch | 1 | 1 | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lialiou, P.; Maglogiannis, I. Students’ Burnout Symptoms Detection Using Smartwatch Wearable Devices: A Systematic Literature Review. AI Sens. 2025, 1, 2. https://doi.org/10.3390/aisens1010002
