1. Introduction

Polarimetric weather radars provide enhanced insight into precipitation characteristics by enabling the estimation of parameters such as the mean particle size, the hydrometeor type, and the drop shape. Traditional Z–R relationships rely on empirical assumptions and often struggle to capture the spatial and temporal complexity of precipitation. Thus, the conversion of radar reflectivity to rain rate at the surface remains a long-standing challenge in radar meteorology. In response, machine learning offers a data-driven alternative that has shown potential to improve the accuracy and adaptability of rainfall retrieval.
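To illustrate the traditional approach, a power-law Z–R relationship of the form Z = aR^b can be inverted for rain rate. The sketch below assumes the widely used Marshall–Palmer coefficients (a = 200, b = 1.6), which are not taken from this study; the function name is hypothetical.

```python
def dbz_to_rain_rate(dbz, a=200.0, b=1.6):
    """Invert Z = a * R**b for the rain rate R (mm/h).

    dbz  : reflectivity in dBZ
    a, b : empirical coefficients (Marshall-Palmer defaults, an assumption here)
    """
    z_linear = 10.0 ** (dbz / 10.0)   # dBZ -> linear reflectivity (mm^6 m^-3)
    return (z_linear / a) ** (1.0 / b)


# With these coefficients, 40 dBZ maps to roughly 11.5 mm/h.
rate_40 = dbz_to_rain_rate(40.0)
```

Because a and b are fixed, the same reflectivity always yields the same rain rate regardless of location, season, or precipitation type, which is the rigidity that data-driven methods aim to relax.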

In recent years, neural networks have gained traction for improving rainfall estimation from radar data, owing to their advantages over traditional Z–R relationships. Xiao and Chandrasekar [1] developed a three-layer perceptron using both horizontal reflectivity (Zh) and differential reflectivity (ZDR), which yielded more accurate results than employing Zh alone. With regard to rainfall prediction, Teschl et al. [2] applied a back-propagation neural network (BPNN) using vertical reflectivity (Zv) profiles and precipitation height to predict rainfall 5 min ahead. Recent advancements introduced enhanced architectures such as convolutional neural networks (CNNs) [3,4,5]. Zhang et al. [3] applied a 1D CNN for real-time precipitation estimation using both radar and meteorological data.

This work proposes a dual neural network framework that converts ground-based radar reflectivity into rainfall rates by combining ground radar observations, aligned to the Global Precipitation Measurement (GPM) Dual-frequency Precipitation Radar (DPR), with satellite and in situ data.

2. Materials and Methods

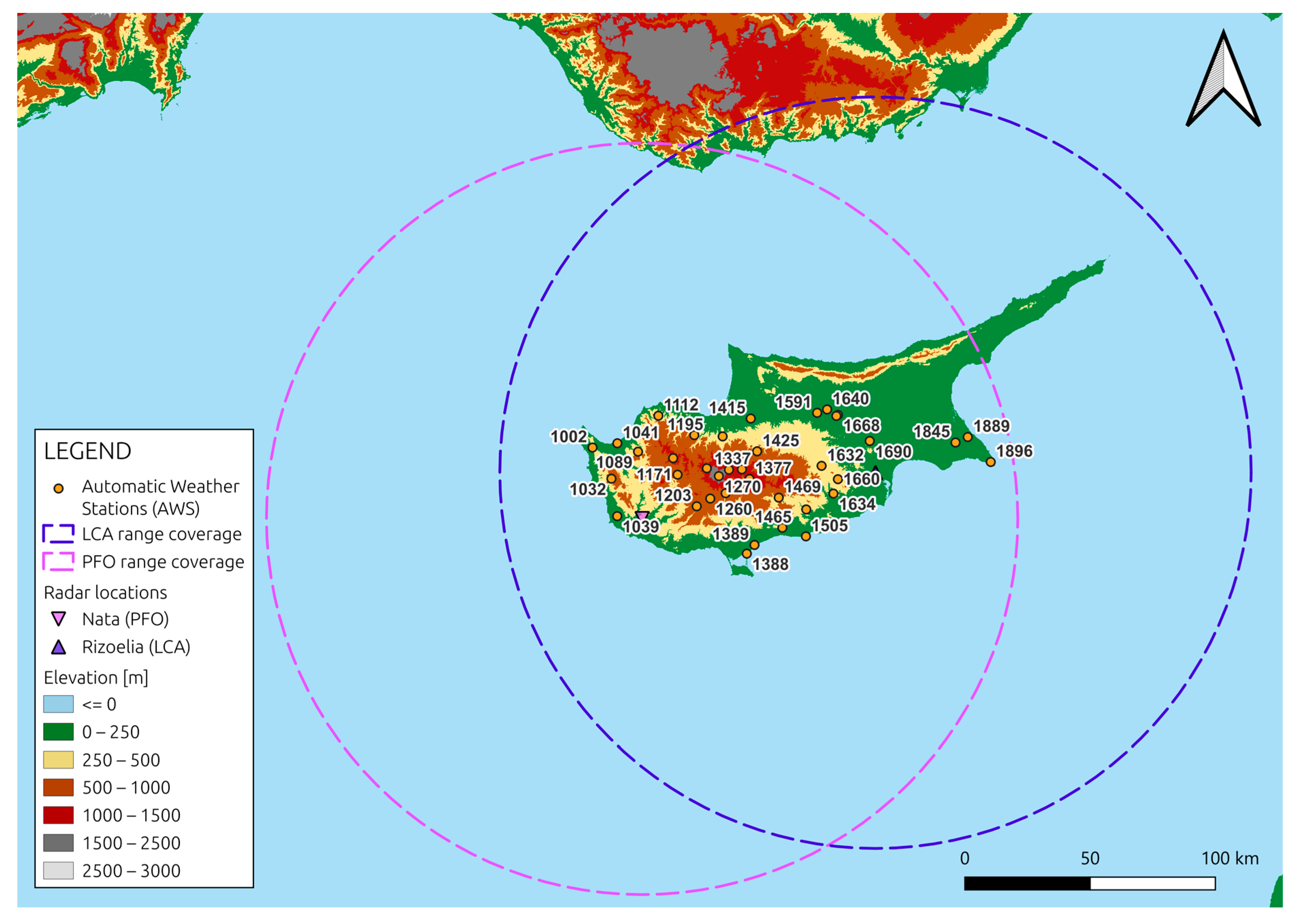

This study uses data from the Cyprus radar network, which comprises two ground-based X-band dual-polarization radars operated by the Cyprus Department of Meteorology. The stations are located in Rizoelia (LCA) and Nata (PFO). The radar reflectivity measurements are calibrated using data from the GPM DPR Ku-band (GPM Ku). Additionally, rainfall observations from 37 automatic weather stations (AWS) equipped with tipping bucket rain gauges (pulse data) were used (see Figure 1). The rainfall data were provided as timestamped events, each marking a fixed rainfall depth. These timestamps were converted from local time to UTC, and rainfall intensity (mm/h) was calculated by dividing the rainfall depth per tip by the time difference between consecutive events (in seconds) and multiplying by 3600. Events with implausibly long time gaps or unrealistically high intensities were excluded to ensure data quality.
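The pulse-to-intensity conversion described above can be sketched as follows. This is a minimal illustration, not the processing code used in the study: the tip depth (0.2 mm), the two exclusion thresholds, the fixed UTC+2 offset, and the function name are all assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def tips_to_intensity(tip_times_local, local_tz, tip_depth_mm=0.2,
                      max_gap_s=3600.0, max_intensity_mm_h=300.0):
    """Convert naive local tip timestamps to (UTC time, mm/h) pairs.

    tip_depth_mm, max_gap_s and max_intensity_mm_h are hypothetical values;
    the study does not state the ones it used.
    """
    utc_times = sorted(
        t.replace(tzinfo=local_tz).astimezone(timezone.utc)
        for t in tip_times_local
    )
    result = []
    for prev, curr in zip(utc_times, utc_times[1:]):
        dt_s = (curr - prev).total_seconds()
        if dt_s <= 0 or dt_s > max_gap_s:
            continue  # duplicate timestamp or implausibly long gap
        intensity = tip_depth_mm / dt_s * 3600.0  # mm per second -> mm/h
        if intensity > max_intensity_mm_h:
            continue  # unrealistically high intensity
        result.append((curr, intensity))
    return result


# Assumed fixed offset (UTC+2); real data would need daylight-saving handling.
local_tz = timezone(timedelta(hours=2))
tips = [
    datetime(2020, 1, 15, 10, 0, 0),
    datetime(2020, 1, 15, 10, 1, 0),   # 60 s after previous tip
    datetime(2020, 1, 15, 10, 3, 0),   # 120 s after previous tip
    datetime(2020, 1, 15, 12, 30, 0),  # gap longer than max_gap_s: dropped
    datetime(2020, 1, 15, 12, 30, 1),  # 1 s gap, above max intensity: dropped
]
intensities = tips_to_intensity(tips, local_tz)
```

Each intensity is assigned to the time of the later tip in the pair. In this example, only the first two intervals pass both filters, giving about 12 mm/h and 6 mm/h.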

The methodology of this study is based on a dual-stage framework designed to convert ground radar reflectivity measurements into near-surface rainfall rates. In the first stage, a feedforward neural network is used to correct the ground radar reflectivity using volume-matched reflectivity from the GPM Ku-band. In the second stage, a separate neural network model estimates near-surface rainfall rates based on the corrected reflectivity data and corresponding pulse rainfall measurements.

The first step in developing the neural network framework was to define the input and output vectors. For the first network (Stage 1), the input vector included the volume-matched raw ground reflectivity, the corresponding range to the radar, the Path-Integrated Reflectivity (PIR), and the GPM overpass time. The output vector consisted of the volume-matched GPM Ku reflectivity. The corrected ground reflectivity produced by this first network was then used as an input in the second network (Stage 2) to estimate near-surface rainfall rates using pulse data.
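The data flow between the two stages can be sketched with a small feedforward network written in NumPy. Everything below is illustrative: the network size, learning rate, the encoding of overpass time as hour of day, and the synthetic data are assumptions, and `TinyMLP` is a hypothetical stand-in rather than the architecture used in this study.

```python
import numpy as np


class TinyMLP:
    """One-hidden-layer feedforward regressor (illustrative stand-in)."""

    def __init__(self, n_in, n_hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, 1))
        self.b2 = np.zeros(1)

    def fit(self, X, y, lr=0.02, epochs=500):
        # Standardize inputs and target so one learning rate suits all features.
        self.mu, self.sigma = X.mean(axis=0), X.std(axis=0) + 1e-9
        self.y_mean, self.y_std = y.mean(), y.std() + 1e-9
        Xs = (X - self.mu) / self.sigma
        ys = ((y - self.y_mean) / self.y_std).reshape(-1, 1)
        losses = []
        for _ in range(epochs):
            H = np.tanh(Xs @ self.W1 + self.b1)
            err = H @ self.W2 + self.b2 - ys
            losses.append(float(np.mean(err ** 2)))
            # Backpropagate the mean-squared-error gradient (full batch).
            g_out = 2.0 * err / len(Xs)
            g_hid = (g_out @ self.W2.T) * (1.0 - H ** 2)
            self.W2 -= lr * (H.T @ g_out)
            self.b2 -= lr * g_out.sum(axis=0)
            self.W1 -= lr * (Xs.T @ g_hid)
            self.b1 -= lr * g_hid.sum(axis=0)
        return losses

    def predict(self, X):
        Xs = (X - self.mu) / self.sigma
        H = np.tanh(Xs @ self.W1 + self.b1)
        return (H @ self.W2 + self.b2).ravel() * self.y_std + self.y_mean


# Synthetic placeholder data; none of these values come from the study.
rng = np.random.default_rng(42)
n = 400
z_raw = rng.uniform(15.0, 45.0, n)      # raw ground reflectivity (dBZ)
range_km = rng.uniform(5.0, 100.0, n)   # range to the radar
pir = rng.uniform(0.0, 60.0, n)         # Path-Integrated Reflectivity
t_hour = rng.uniform(0.0, 24.0, n)      # overpass time as hour of day (assumed)
z_gpm = z_raw + 2.0 + 0.02 * range_km + rng.normal(0.0, 1.0, n)
rain_gauge = (10.0 ** (z_gpm / 10.0) / 200.0) ** (1.0 / 1.6)

# Stage 1: raw reflectivity and ancillary inputs -> GPM Ku reflectivity.
X1 = np.column_stack([z_raw, range_km, pir, t_hour])
stage1 = TinyMLP(n_in=4)
loss1 = stage1.fit(X1, z_gpm)
z_corrected = stage1.predict(X1)

# Stage 2: corrected reflectivity -> near-surface rain rate from pulse data.
stage2 = TinyMLP(n_in=1, seed=1)
loss2 = stage2.fit(z_corrected.reshape(-1, 1), rain_gauge)
rain_pred = stage2.predict(z_corrected.reshape(-1, 1))
```

Stage 2 is trained on the output of Stage 1 rather than on the raw reflectivity, so any residual bias in the corrected reflectivity propagates directly into the rain-rate estimates.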

3. Results

The analysis of the results focuses on the hydrological year 2019–2020, identified as a stable calibration period for both the LCA and PFO radars. In the first stage of the framework, the neural network model for the LCA radar demonstrated strong and consistent performance across the training, validation, and test sets, showing high agreement with the target values and minimal bias. In contrast, the model for the PFO radar showed moderately lower performance, with a slightly weaker fit and a consistent tendency to slightly underestimate reflectivity values. Despite these differences, both models delivered acceptable results for the purposes of reflectivity correction.

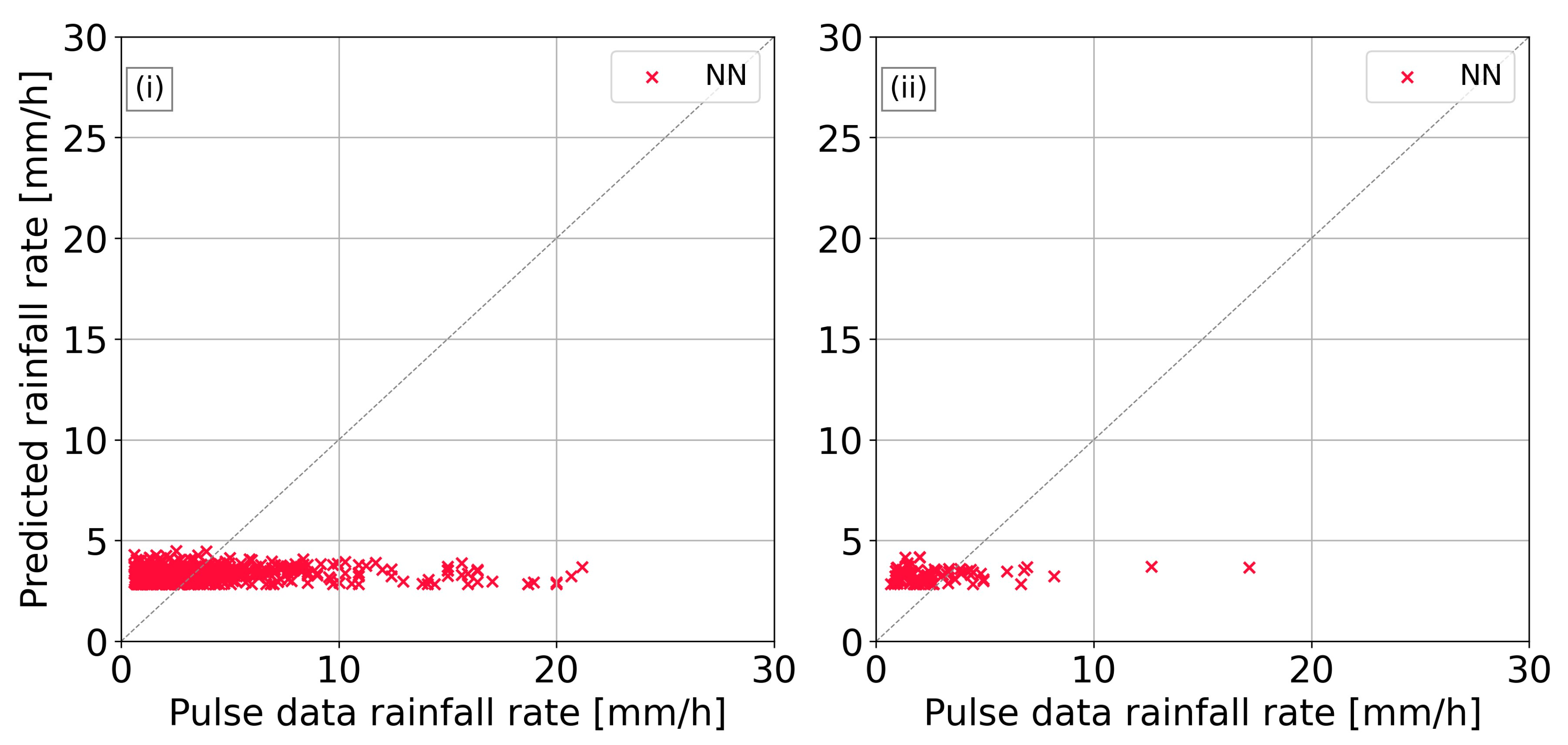

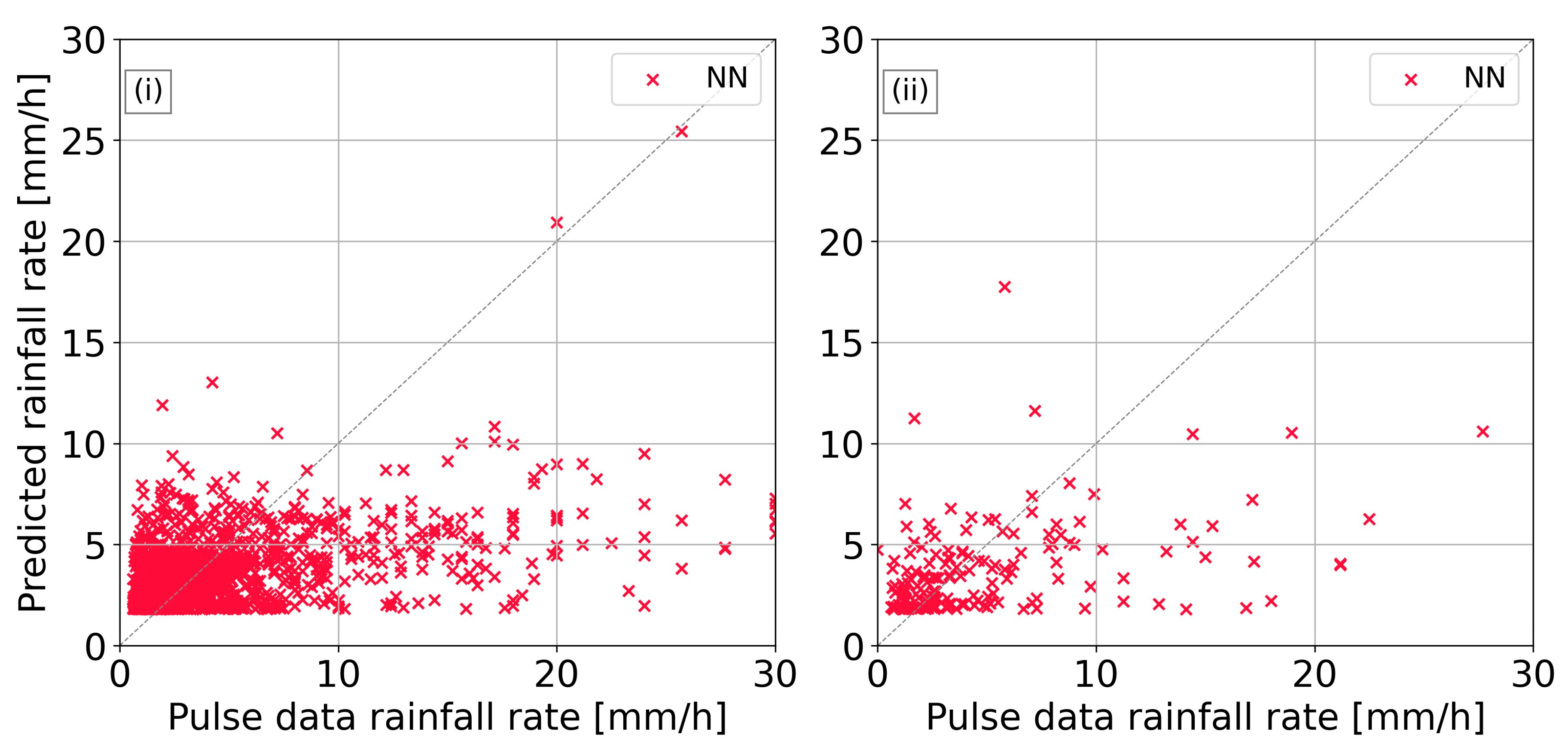

Figure 2 and Figure 3 illustrate the performance of the second-stage neural network models for the LCA and PFO radars, respectively. For the LCA radar, the neural network consistently underestimated rainfall rates in both the training and test sets and failed to predict values above approximately 5 mm/h, indicating a limitation in capturing higher rainfall intensities. Similarly, for the PFO radar, the neural network tended to underestimate and struggled in particular with low-intensity events, as it did not predict rainfall rates below about 1.5 mm/h.
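The kind of agreement and bias assessment described in this section can be sketched with a few standard scores. The choice of metrics here (mean bias, RMSE, Pearson correlation, and the range of predicted values) is an assumption for illustration, since the specific metrics used in the study are not listed in the text; the function name and sample values are hypothetical.

```python
import math


def regression_scores(predicted, observed):
    """Return mean bias, RMSE, Pearson correlation and prediction range."""
    n = len(observed)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    sq_err = sum((p - o) ** 2 for p, o in zip(predicted, observed))
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    return {
        "bias": mean_p - mean_o,          # negative means underestimation
        "rmse": math.sqrt(sq_err / n),
        "corr": cov / math.sqrt(var_p * var_o),
        "pred_min": min(predicted),       # exposes a floor in the predictions
        "pred_max": max(predicted),       # exposes a ceiling in the predictions
    }


# Hypothetical values: predictions sit exactly 1 unit below the observations.
observed = [20.0, 25.0, 30.0, 35.0]
predicted = [19.0, 24.0, 29.0, 34.0]
scores = regression_scores(predicted, observed)
```

Reporting the minimum and maximum predicted values alongside the error scores is a quick way to spot a model whose outputs are confined to a narrow band, such as the ceiling near 5 mm/h for LCA or the floor near 1.5 mm/h for PFO.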

4. Discussion and Conclusions

The first stage of the dual-stage framework, which corrected ground radar reflectivity using volume-matched GPM Ku data, proved effective and improved the quality of radar inputs, offering a potential alternative to traditional calibration methods. However, the second stage, which estimated rainfall rates from the corrected reflectivity using pulse data, faced significant limitations. One key challenge was the continued underestimation in the corrected reflectivity values, which likely contributed to the underprediction of rainfall rates by the neural network model. Additionally, the pulse-derived rainfall rates, being indirect estimates from tipping bucket gauges, introduced further uncertainty into model training and performance.

Despite the above-described limitations, the results highlight the potential of data-driven approaches for radar rainfall estimation. The effective performance of the first stage demonstrates that neural networks can play a valuable role in enhancing radar reflectivity quality. Building on this foundation, future research should explore more advanced or alternative AI models to improve radar rainfall rate estimations, particularly those capable of capturing extreme and low-intensity events more accurately. Incorporating additional meteorological variables and using more directly comparable training data (e.g., disdrometer measurements) could further enhance model reliability and performance, supporting the development of more accurate AI-based precipitation estimation frameworks.

Author Contributions

Conceptualization, E.L. and S.M.; methodology, E.L. and G.G.; software, E.L.; validation, G.G. and S.M.; formal analysis, E.L.; investigation, E.L.; data curation, E.L.; writing—original draft preparation, E.L. and G.G.; writing—review and editing, S.M. and D.G.H.; visualization, E.L.; supervision, D.G.H.; project administration, D.G.H.; funding acquisition, D.G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the AI-OBSERVER project (Grant Agreement No 101079468) and the EXCELSIOR Teaming project (Grant Agreement No. 857510).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The ground radar data and the AWS data are available upon request from the Cyprus Department of Meteorology, subject to their data-sharing policy. The GPM data are publicly accessible and were obtained from the NASA Earthdata portal (https://search.earthdata.nasa.gov/, accessed on 18 April 2023).

Acknowledgments

The present work was carried out in the framework of the AI-OBSERVER project (https://ai-observer.eu/, accessed on 2 July 2025) titled “Enhancing Earth Observation capabilities of the Eratosthenes Centre of Excellence on Disaster Risk Reduction through Artificial Intelligence”, which has received funding from the European Union’s Horizon Europe Framework Programme HORIZON-WIDERA-2021-ACCESS-03 (Twinning) under the Grant Agreement No 101079468. The authors acknowledge the ‘EXCELSIOR’: ERATOSTHENES: EΧcellence Research Centre for Earth Surveillance and Space-Based Monitoring of the Environment H2020 Widespread Teaming project (http://www.excelsior2020.eu, accessed on 2 July 2025). The ‘EXCELSIOR’ project has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No 857510, from the Government of the Republic of Cyprus through the Directorate General for the European Programmes, Coordination and Development and the Cyprus University of Technology. The authors also acknowledge the Department of Meteorology of the Republic of Cyprus for providing the X-band radar data, as well as the rain gauge data.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Xiao, R.; Chandrasekar, V. Development of a Neural Network Based Algorithm for Rainfall Estimation from Radar Observations. IEEE Trans. Geosci. Remote Sens. 1997, 35, 160–171.

2. Teschl, R.; Randeu, W.L.; Teschl, F. Improving Weather Radar Estimates of Rainfall Using Feed-forward Neural Networks. Neural Netw. 2007, 20, 519–527.

3. Zhang, Y.; Chen, S.; Tian, W.; Chen, S. Radar Reflectivity and Meteorological Factors Merging-Based Precipitation Estimation Neural Network. Earth Space Sci. 2021, 8, e2021EA001811.

4. Caseri, A.N.; Lima Santos, L.B.; Stephany, S. A Convolutional Recurrent Neural Network for Strong Convective Rainfall Nowcasting Using Weather Radar Data in Southeastern Brazil. Artif. Intell. Geosci. 2022, 3, 8–13.

5. Yang, H.; Wang, T.; Zhou, X.; Dong, J.; Gao, X.; Niu, S. Quantitative Estimation of Rainfall Rate Intensity Based on Deep Convolutional Neural Network and Radar Reflectivity Factor. In Proceedings of the 2nd International Conference on Big Data Technologies, Jinan, China, 28–30 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 244–247.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).