1. Introduction and Background

Accurate patient weight estimation is essential for the safe administration of weight-based medications, fluids, and therapies in emergency and critical care settings [1]. In cases such as acute ischemic stroke requiring thrombolytics or critically ill patients needing sedation, even small dosing errors can have life-threatening consequences [2,3]. However, obtaining a measured weight during emergency care is often infeasible: patients may be unconscious, uncooperative, immobilized, or attached to equipment that prevents the use of standing or stretcher scales.

When direct weight measurement is not possible, clinicians must rely on estimates. These may come from patients themselves, family members, or healthcare providers’ visual assessments. Alternatively, clinicians may use anthropometric formulas [4]. However, all of these approaches are prone to substantial error, particularly in underweight or obese patients [5]. Deviations greater than 10% from actual weight are common and can lead to under- or overdosing of weight-based therapies, increasing the risk of adverse outcomes. With more than 30% of adults in the U.S. classified as obese, a significant proportion of emergency department (ED) patients are at risk of weight-based dosing errors [6].

Three-dimensional (3D) imaging technologies provide a promising alternative for estimating total body weight (TBW). Depth cameras, such as the Intel RealSense D415, can generate point clouds of a patient’s body, which can then be processed using machine learning algorithms to estimate body volume and, subsequently, weight and body composition. Despite this promise, these systems have not yet been validated across diverse patient populations, including those who are underweight or obese, and they have not been rigorously tested in emergency settings [7]. Furthermore, no current solutions provide real-time, automated estimation of lean body weight (LBW) or ideal body weight (IBW), both of which are critical for safely dosing hydrophilic medications in obese patients [8].

This study introduces a 3D camera-based AI system designed to estimate TBW, LBW, and IBW from a single supine image. By addressing gaps in performance across body types, the absence of LBW/IBW estimation, and the limited applicability in emergency care, this work establishes a foundation for improving the safety of weight-based dosing in acute care.

The Importance of Accurate Weight Estimation in Emergencies

Many emergency drugs have narrow therapeutic windows, where dosing errors can cause treatment failure or serious side effects [9]. For example, incorrect dosing of thrombolytics may lead to either ineffective clot dissolution or hemorrhage [10]. Likewise, sedatives and neuromuscular blockers require precise dosing to prevent complications from overdoses. Obesity complicates dosing further: using TBW alone can result in overdosing of hydrophilic drugs, while using IBW or LBW alone can result in underdosing of lipophilic drugs [11]. A method that provides estimates of IBW and LBW in addition to TBW would allow the appropriate weight to be used for each drug class. This could reduce the risk of incorrect dosing and improve patient safety [12].

2. Objective

The objective of this study is to develop and validate a real-time, non-contact system for estimating Total Body Weight (TBW), Ideal Body Weight (IBW), and Lean Body Weight (LBW) using a 3D depth camera combined with a convolutional neural network (CNN). This approach is designed to overcome the limitations of existing weight estimation techniques in emergency care settings, particularly for patients who are unconscious, immobilized, or obese. Specifically, this research aims to:

Design a fully automated pipeline that processes a single supine image to generate volumetric features and predict body weight components.

Evaluate the feasibility and accuracy of the proposed system in a simulated emergency care environment.

Compare the system’s performance with conventional weight estimation methods, including visual assessment, anthropometric formulas, and tape-based tools.

Demonstrate the clinical utility of integrating 3D imaging and AI for rapid, calculation-free decision-making in time-critical scenarios.

3. Related Work

A wide range of weight estimation techniques have been developed, ranging from visual assessments and patient-reported values to anthropometric formulas and tape-based tools [4,8]. However, most of these methods lack sufficient accuracy, particularly in patients with obesity, underscoring the need for more reliable and scalable approaches such as three-dimensional imaging integrated with artificial intelligence [13].

Although three-dimensional morphometry paired with machine learning (ML) has reduced error rates in laboratory settings (e.g., Wells et al., MAE ≈ 2.2 kg), most studies have relied on standing postures, multi-frame scans, or reflective markers, which limits their applicability in emergency care. Moreover, none of the reported systems provide lean body weight (LBW) or ideal body weight (IBW) estimates, both of which are critical for accurate drug dosing.

3.1. Established Weight Estimation Methods

3.1.1. Self-Reported or Family-Reported Weight

Asking patients for self-estimates is convenient but often unreliable during emergencies. While self-reported weight can be accurate in stable or non-emergency contexts [8], in emergencies where patients may be confused, unconscious, or intubated, this option is frequently unavailable [7]. Family member estimates are also commonly inaccurate, with errors particularly frequent in overweight or underweight patients and in those who have recently experienced weight changes.

3.1.2. Visual Estimation by Clinicians

Visual estimation is frequently used in busy emergency departments (EDs), but studies report error margins exceeding ±10–15%, particularly in underweight or obese patients [2,4]. Given these error margins and the risks associated with inaccurate dosing, visual estimation is poorly suited to emergency use.

3.1.3. Anthropometric Formulas and Tape-Based Methods

Anthropometric formulas (e.g., the Lorenz method) and tools such as the PAWPER XL-MAC tape use body measurements to generate weight estimates [14]. Although generally more accurate than visual estimation, their reliability decreases in patients with atypical body shapes or extremes of habitus [1,11,15]. Furthermore, these methods often require multiple measurements and optimal patient positioning, which can be difficult to achieve in emergency settings.

3.1.4. 3D Camera-Based Approaches

Recent advances in three-dimensional imaging have enabled non-contact, rapid body scanning for weight estimation [7]. These systems generate point clouds and apply machine learning models to estimate weight based on derived volumetric data. Early studies suggest improved accuracy compared to traditional methods, even in patients at extremes of body habitus [7,8]. However, no systems currently provide real-time estimates of TBW, LBW, and IBW, and most remain untested in real-world emergency environments. Although advances in hardware and deep learning architectures are ongoing, broader validation is essential before routine clinical implementation.

3.2. Gaps in the Literature

Several gaps limit the current understanding of weight estimation techniques. Many studies underrepresent key demographics, such as underweight and obese individuals, thereby reducing generalizability. Few systems have been evaluated under the time constraints and cognitive demands typical of emergency care. Critically, no available tools provide calculation-free, real-time estimates of LBW and IBW, both of which are essential for safe drug dosing in patients with obesity. Addressing these limitations is vital to improving the safety and precision of emergency pharmacotherapy.

Studies such as [4,7] underscore the absence of real-time, automated estimation systems capable of accommodating diverse body types or the unique constraints of emergency care.

4. Methodology

An Intel RealSense™ D415 3D depth camera (Intel, Santa Clara, CA, USA) was suspended approximately two meters above each supine patient. The device captured RGB and depth images, which were fused to generate a 3D point cloud of the patient’s body. During preprocessing, extraneous elements such as the bed and background structures were removed to isolate body points. The point cloud was then divided into approximately 100 horizontal slices for volumetric feature extraction, enabling accurate calculation of segmental body volumes. A convolutional neural network (CNN)-based deep learning pipeline was employed to estimate TBW, IBW, and LBW, incorporating demographic variables such as age and gender into the prediction model. CNNs were selected for their superior performance in modeling spatial relationships in image-based volumetric data. Compared with traditional machine learning models such as random forests or linear regression, CNNs achieved lower mean absolute error (MAE) (1.8 kg vs. 3.1 kg) and demonstrated greater robustness in handling anatomical variability during initial model evaluations.

Our preliminary study demonstrated that a 3D camera-based system was both more accurate and more practical than traditional methods of weight estimation, particularly in emergency medical settings. Three key findings underscored its clinical utility.

First, the system maintained high accuracy in individuals with obesity or morbid obesity, unlike many conventional methods that became unreliable when BMI exceeded 30. The 3D imaging consistently achieved high P10 values and low mean percentage error (MPE), meeting the clinically important ±10% accuracy threshold.

Second, the system fully automated the estimation of LBW and IBW, eliminating the need for manual calculations. This automation was especially critical for obese patients, in whom using TBW alone could result in significant dosing errors.

Finally, the method proved both reliable and time-efficient in emergency scenarios. Simulation and observational studies indicated that weight predictions were typically completed in under one minute, comparable to or faster than obtaining a patient’s self-reported weight or calibrating an in-bed scale.

Error Metrics

The accuracy of the weight estimation methods evaluated in this study was assessed using Mean Absolute Percentage Error (MAPE) and Mean Percentage Error (MPE). MAPE measured the average magnitude of prediction errors regardless of their direction (overestimation or underestimation), whereas MPE reflected the directionality of errors. In the formulas below, $y_i$ is the true weight and $\hat{y}_i$ the predicted weight for subject $i$, and $n$ is the total number of patients.

Mean Absolute Percentage Error (MAPE): Always expressed as a positive value.

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{\hat{y}_i - y_i}{y_i} \right|$$

Mean Percentage Error (MPE): Can take either negative or positive values, reflecting whether predictions systematically under- or overestimated the true weight.

$$\mathrm{MPE} = \frac{100\%}{n} \sum_{i=1}^{n} \frac{\hat{y}_i - y_i}{y_i}$$

Positive values indicate systematic overestimation.

Negative values indicate systematic underestimation.

Percentage within ±10% (P10): P10 is the proportion of subjects whose relative error is within ±10% of the ground truth weight:

$$\mathrm{P10} = \frac{100\%}{n} \sum_{i=1}^{n} \mathbb{1}\left( \left| \frac{\hat{y}_i - y_i}{y_i} \right| \le 0.10 \right)$$

where $\mathbb{1}(\cdot)$ is the indicator function (1 if the condition is true, 0 otherwise).
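As a minimal sketch of how these definitions translate into code, the NumPy function below computes MAPE, MPE, and P10 from paired true and predicted weights, together with MAE in kilograms (which this paper also reports). The function name `weight_error_metrics` is hypothetical, MAE is assumed to follow its conventional definition (the mean of |ŷᵢ − yᵢ|), and the three-subject example uses made-up numbers rather than study data.

```python
import numpy as np


def weight_error_metrics(y_true, y_pred):
    """Return MAE (kg), MAPE (%), MPE (%), and P10 (%) for paired weights.

    Sign convention follows the text: positive MPE means systematic
    overestimation (predicted weight > true weight).
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rel_err = (y_pred - y_true) / y_true  # signed relative error per subject
    return {
        "MAE_kg": float(np.mean(np.abs(y_pred - y_true))),
        "MAPE_pct": float(100.0 * np.mean(np.abs(rel_err))),
        "MPE_pct": float(100.0 * np.mean(rel_err)),
        # fraction of subjects with |relative error| <= 10%, as a percentage
        "P10_pct": float(100.0 * np.mean(np.abs(rel_err) <= 0.10)),
    }


# Hypothetical example: three subjects (not study data).
metrics = weight_error_metrics([80.0, 100.0, 60.0], [84.0, 95.0, 60.0])
print(metrics)
```

Deriving all four metrics from the same signed relative error keeps the MPE sign convention consistent with the magnitude-only MAPE and P10; in this toy example the +5% and −5% errors cancel in MPE but not in MAPE.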

5. Data Acquisition

5.1. Acquisition and Preprocessing of Digital Image

5.1.1. Camera Setup and Positioning

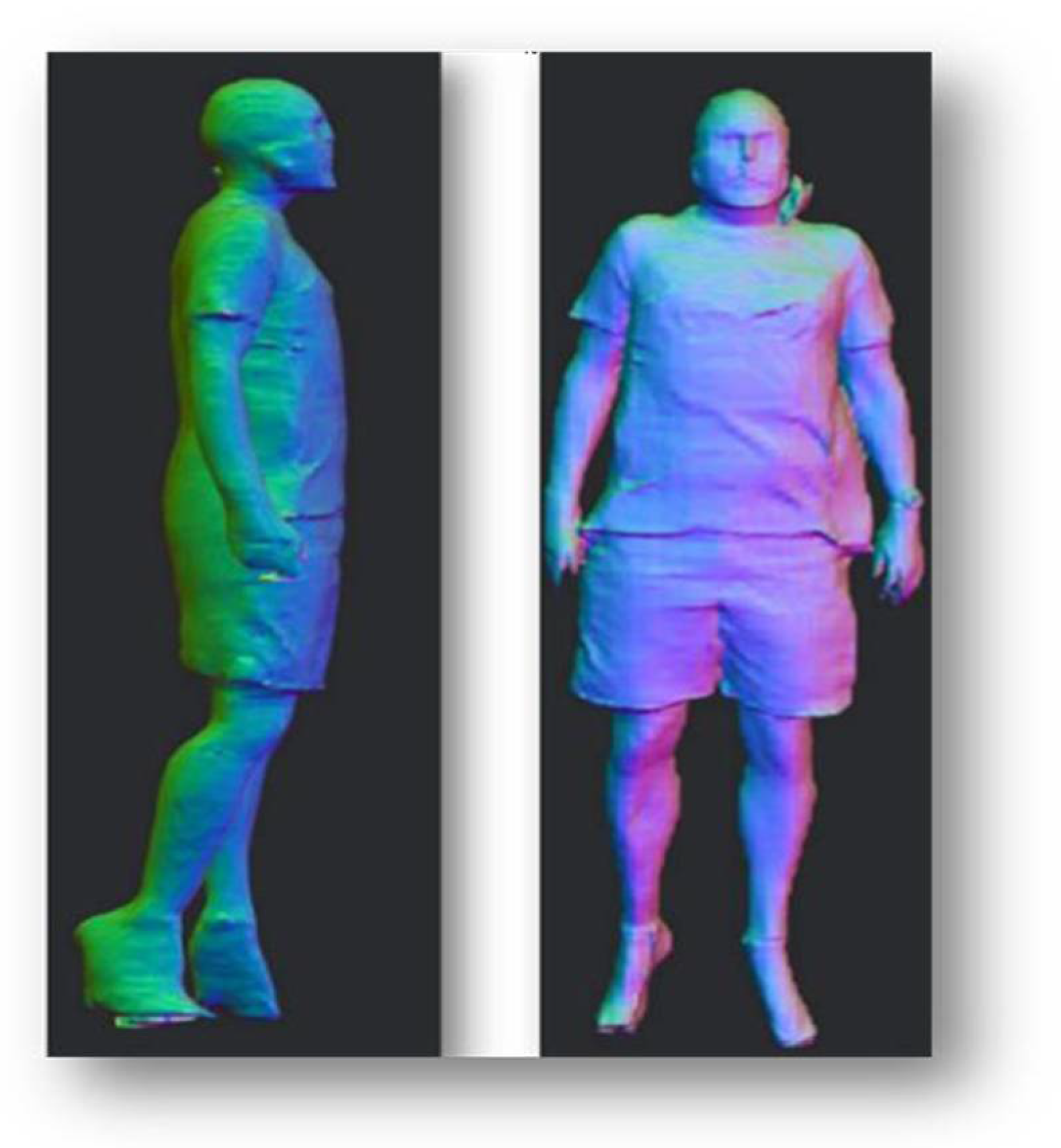

An Intel RealSense™ D415 camera was mounted directly above each subject lying on an examination couch (see Figure 1). This overhead placement provided an unobstructed, full-body view, capturing the entire body contour from head to toe. In our setup, the D415 streamed depth data at a resolution of 848 × 480 pixels at 30 frames per second (FPS), delivering high-resolution spatial measurements for accurate 3D modeling.

High-Resolution Depth Maps: These maps were essential for capturing subtle body contours (e.g., edges, curves). The ability of the D415 to produce accurate and reliable depth information provided a robust foundation for downstream segmentation and volume calculations.

Data Cleaning and Preprocessing: Immediately after capture, each frame was temporarily stored for further processing. This included initial alignment and fusion of RGB and depth data, as well as minor noise filtering to remove obvious artifacts (e.g., lens flare, sensor noise).

The study cohort included volunteers (age 20–75, BMI 18–42) recruited at the Florida Atlantic University Simulation Center. Ethical approval was obtained under IRB#FAU-2023-41.

5.1.2. Data Collection

Prior to data collection, the D415 camera was calibrated to correct for both intrinsic errors (such as lens distortion and focal length) and extrinsic errors (including position and orientation). This calibration ensured that real-world measurements derived from the depth maps were accurate and that the overhead view faithfully represented each subject’s physical dimensions.

A. Limitations

From a technical standpoint, correctly mounting the 3D camera approximately two meters above the patient might be difficult in some emergency department environments.

5.2. Image Segmentation

5.2.1. Background Removal

We began by using the Intel DepthQualityTool SDK to remove extraneous elements from the scene, such as the bed and background. This cleanup step was essential to ensure that subsequent processing algorithms focused exclusively on the subject’s body. Once these unwanted components were masked out, the subject remained as the sole region of interest.

5.2.2. Segmenting Body Regions

Following background subtraction, we applied deep learning-based segmentation models to divide the subject’s body into anatomically relevant regions such as the head, torso, arms, and legs. The segmentation pipeline leveraged MobileNet and ResNet architectures, both pre-trained on large-scale image datasets.

5.2.3. RGB Segmentation

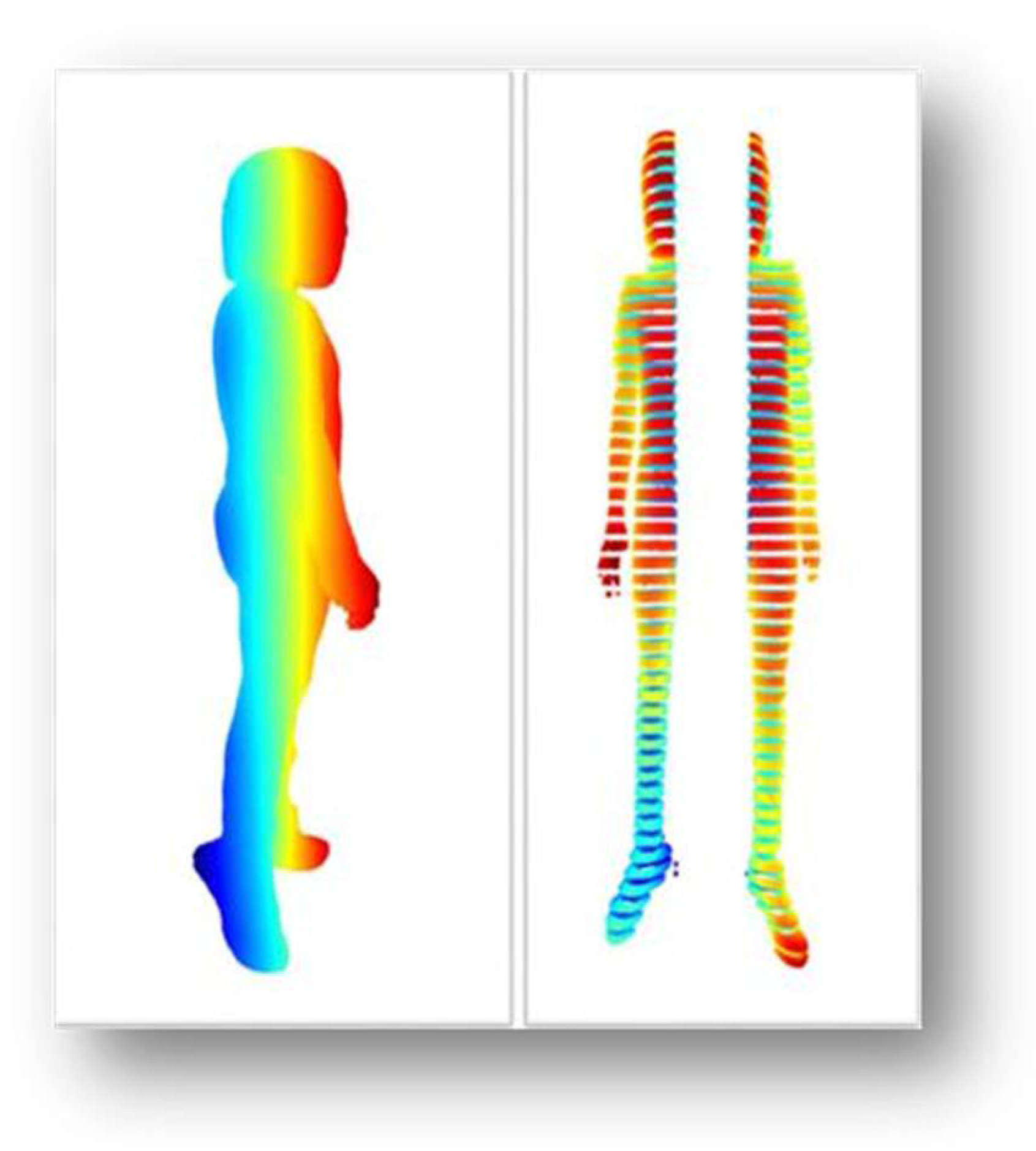

In addition to depth-based segmentation, we incorporated RGB (color-based) segmentation as a complementary technique (see Figure 2). By integrating depth edges, color transitions, and extracted features, we generated cleaner segmentation masks that accurately isolated each body part with minimal overlapping or missing regions.

5.3. Model Construction

5.3.1. 2D-to-3D Conversion

The CNN model was trained on a dataset comprising 1200 3D point clouds derived from supine-position depth images. These samples were collected from diverse adult subjects with a BMI range of 19–42 and an age range of 20–75. Each training sample was paired with corresponding ground truth labels: TBW from calibrated scales, IBW from the Devine formula, and LBW from DXA scans. Data augmentation techniques, including random body pose variations and partial occlusion simulations, were applied to improve model robustness.

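Once a segmented 2D depth image was obtained, the data were transformed into a 3D point cloud. Each pixel was converted using Equation (1), which mapped 2D pixel coordinates and depth values into 3D space:

$$X = \frac{(u - c_x)\, d(u, v)}{f_x}, \qquad Y = \frac{(v - c_y)\, d(u, v)}{f_y}, \qquad Z = d(u, v) \tag{1}$$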

Here, (u, v) represents the pixel location, (cx, cy) denotes the principal point (camera center), (fx, fy) are the camera’s focal lengths (intrinsic parameters), and d(u, v) is the depth value measured at pixel (u, v).
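The back-projection of Equation (1) can be vectorized over a whole depth frame. This is a sketch assuming an undistorted pinhole model and depth values already converted to metric units (raw D415 depth values must first be multiplied by the device's depth scale); the function name `depth_to_points` and the toy intrinsics are hypothetical, not the study's calibration.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy, mask=None):
    """Back-project a depth image into an (N, 3) array of camera-frame points.

    Pinhole relation of Equation (1):
    X = (u - cx) * d / fx,  Y = (v - cy) * d / fy,  Z = d.
    Pixels with zero depth (no sensor return) or outside `mask` are dropped.
    """
    depth = np.asarray(depth, dtype=float)
    v, u = np.indices(depth.shape)  # v = row index, u = column index
    valid = depth > 0
    if mask is not None:
        valid &= np.asarray(mask, dtype=bool)  # e.g. a body segmentation mask
    d = depth[valid]
    x = (u[valid] - cx) * d / fx
    y = (v[valid] - cy) * d / fy
    return np.column_stack((x, y, d))


# Toy 2x2 depth map in metres with made-up intrinsics (not D415 calibration values).
pts = depth_to_points([[2.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(pts)
```

Passing the segmentation mask directly keeps background pixels out of the point cloud, so later volume steps only see body points.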

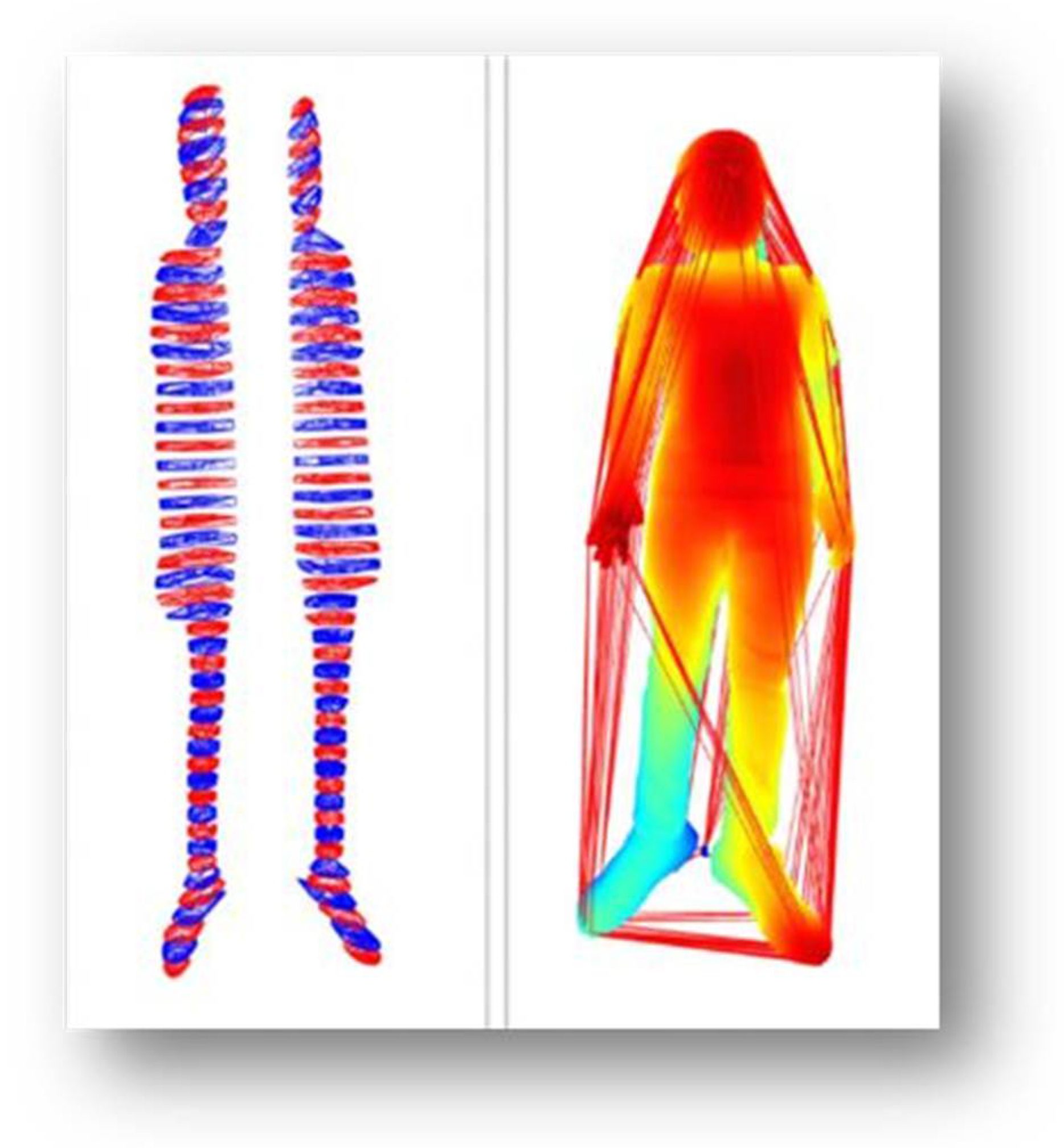

5.3.2. Polygon File and Noise Reduction

To ensure accurate 3D reconstruction, the captured 3D coordinates were first exported in Polygon File Format (PLY), which retained both spatial data (X, Y, Z) and color information (R, G, B) (see Figure 3). This format was particularly useful for downstream tasks such as segmentation and visualization.

Prior to analysis, robust noise filtering was performed as a critical preprocessing step. Depth sensors often produce artifacts such as floating points or sudden spikes that could distort the model. To address these, smoothing techniques such as bilateral and median filtering were applied to remove spurious readings. Additionally, extreme depth values, typically resulting from sensor errors, were systematically excluded.

Despite these initial corrections, minor sensor inaccuracies and scanning artifacts sometimes persisted, leading to surface warping or small holes in the point cloud. To resolve these residual issues, advanced filtering and surface reconstruction algorithms were employed. These techniques filled gaps, removed remaining outliers, and refined the mesh, ensuring that the final 3D representation closely approximated the subject’s anatomy and was suitable for further analysis (see Figure 3).
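The two basic cleanup steps named above (excluding extreme depth values, then median filtering) can be sketched on a single depth map as follows, assuming NumPy 1.20+ for `sliding_window_view`. The valid-range bounds and the 3 × 3 window are illustrative choices rather than values reported in the study, the function name is hypothetical, and bilateral filtering is omitted.

```python
import warnings

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def clean_depth(depth, z_min, z_max, k=3):
    """Exclude out-of-range depths, then apply a k x k median filter.

    Readings outside [z_min, z_max] (including zero-depth "no return" pixels
    when z_min > 0) are set to NaN and ignored by the median. A pixel whose
    whole window is invalid stays NaN.
    """
    depth = np.asarray(depth, dtype=float).copy()
    depth[(depth < z_min) | (depth > z_max)] = np.nan  # drop extreme readings
    pad = k // 2
    padded = np.pad(depth, pad, mode="edge")  # replicate border values
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", RuntimeWarning)  # all-NaN windows
        return np.nanmedian(windows, axis=(-2, -1))


# Hypothetical 3x3 depth patch in metres with a spurious 9 m spike.
patch = [[2.0, 2.0, 2.0],
         [2.0, 9.0, 2.0],
         [2.0, 2.0, 2.1]]
cleaned = clean_depth(patch, z_min=0.5, z_max=3.0)
print(cleaned)
```

Marking invalid readings as NaN before filtering, rather than zeroing them, prevents the spike from pulling down the median of its neighbours.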

5.4. Volume Estimation

5.4.1. Segmented Volume Computation

To prevent the body from being treated as a single undifferentiated volume, we subdivided the 3D point cloud (or reconstructed mesh) into anatomically distinct segments, namely, the head, torso, arms, and legs. This segmented approach enabled more precise volumetric calculations, which were particularly important given the variability in body shape across different anatomical regions.

5.4.2. Convex Hull Algorithm

We computed the convex hull surface of each body segment. Conceptually, the convex hull was the smallest three-dimensional “bounding surface” that enclosed all points in that segment (see Figure 4). For each segment $S_i$, the hull volume $V_i$ was computed as:

$$V_i = \mathrm{Vol}\big(\mathrm{Hull}(S_i)\big) \tag{2}$$

where

$V_i$ = Volume of the $i$th body segment (cm³).

$\mathrm{Hull}(S_i)$ = Convex hull of the $i$th body segment from the point cloud data.

Figure 4. Convex Hull Algorithm.
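Per-segment hull volumes can be sketched with SciPy's `scipy.spatial.ConvexHull`, whose `volume` attribute gives the enclosed volume for 3-D input. The function name, the label format, and the toy cubes below are hypothetical; the study's own implementation is not described.

```python
import numpy as np
from scipy.spatial import ConvexHull


def segment_hull_volumes(points, labels):
    """Convex-hull volume of each labelled body segment.

    points: (N, 3) array in cm; labels: length-N sequence of segment names.
    Returns {segment: volume in cm^3}. Each segment needs at least four
    non-coplanar points, otherwise Qhull cannot build a 3-D hull.
    """
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    return {
        str(seg): float(ConvexHull(points[labels == seg]).volume)
        for seg in np.unique(labels)
    }


# Toy check: a 10 cm cube labelled "torso" plus a 2 cm cube labelled "head".
cube = np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)
pts = np.vstack([10 * cube, 2 * cube + 20])
lab = ["torso"] * 8 + ["head"] * 8
vols = segment_hull_volumes(pts, lab)
print(vols)
```

Because a convex hull bridges concavities (for example, the gap between an arm and the torso if both carry the same label), it can overestimate the volume of non-convex shapes, which is one reason to segment the body first and to refine with slicing.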

5.4.3. Partial Slicing

Rather than relying on a single global hull, we applied horizontal slicing at regular intervals (e.g., every 1–1.5 cm along the z-axis). The cross-sectional area of each slice was calculated and integrated to determine partial volumes. Summing these partial volumes produced a total body volume that more accurately reflected anatomical variation [16]:

$$V = \sum_{i=1}^{N} A_i \, \Delta h \tag{3}$$

where $A_i$ represents the cross-sectional area of the $i$th slice, and $\Delta h$ is the height increment between consecutive slices. The summation aggregates the volumes of all $N$ slices to yield the overall volume.
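The slice summation above can be sketched as follows. The text does not state how each cross-sectional area A_i was measured, so this sketch assumes it is the area of the 2-D convex hull of the points in each slab (Andrew's monotone chain plus the shoelace formula), and assumes `axis=1` is the head-to-foot direction. Both function names are hypothetical.

```python
import numpy as np


def hull_area_2d(xy):
    """Area of the 2-D convex hull of points (Andrew's monotone chain + shoelace)."""
    pts = sorted(set(map(tuple, np.asarray(xy, dtype=float))))
    if len(pts) < 3:
        return 0.0

    def cross(o, a, b):  # z-component of (a - o) x (b - o)
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:  # build lower hull left to right
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):  # build upper hull right to left
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = np.array(lower[:-1] + upper[:-1])  # counter-clockwise vertices
    if len(hull) < 3:  # all points collinear
        return 0.0
    x, y = hull[:, 0], hull[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def sliced_volume(points, dh=1.0, axis=1):
    """V = sum_i A_i * dh over slabs of thickness dh along `axis`."""
    points = np.asarray(points, dtype=float)
    s = points[:, axis]
    other = [a for a in range(3) if a != axis]  # plane of each cross-section
    idx = np.floor((s - s.min()) / dh).astype(int)  # slice index per point
    areas = np.array([hull_area_2d(points[idx == i][:, other])
                      for i in range(idx.max() + 1)])
    return float(areas.sum() * dh), areas


# Toy check: a 4 x 10 x 2 cm box sampled at slice centres along y.
ys = np.arange(10) + 0.5
box = np.array([[x, y, z] for y in ys for x in (0.0, 4.0) for z in (0.0, 2.0)])
vol, areas = sliced_volume(box, dh=1.0, axis=1)
print(vol)
```

Per-slice hulls follow changes in girth along the body axis that a single global hull would smooth over, which matches the motivation given for slicing.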

5.4.4. Final Body Volume

After computing individual segment volumes or sliced volumes, we aggregated them to obtain the total body volume:

$$V_{\text{body}} = \sum_{i=1}^{k} V_i \tag{4}$$

Here, Equation (4) computes final body volume as the sum of all segment volumes, where $V_i$ is the volume of the $i$th anatomical region and $k$ is the total number of segments (or slices) (see Figure 5).

5.5. Weight and Height Estimation

5.5.1. Height Measurement

Depth-Based Length: From the overhead perspective, once the body was segmented, we identified the top of the head and the soles of the feet in 3D. The Euclidean distance between these points approximated the subject’s supine length:

$$H = \sqrt{(x_{\text{head}} - x_{\text{feet}})^2 + (y_{\text{head}} - y_{\text{feet}})^2 + (z_{\text{head}} - z_{\text{feet}})^2} \tag{5}$$

where

$x_{\text{head}}, y_{\text{head}}, z_{\text{head}}$ = Coordinates of the top of the patient’s head in the 3D point cloud (cm).

$x_{\text{feet}}, y_{\text{feet}}, z_{\text{feet}}$ = Coordinates of the soles of the patient’s feet in the 3D point cloud (cm).
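The head-to-sole Euclidean distance described above can be sketched as below. The landmark rule (head-top and sole taken as the extreme points along an assumed head-to-foot axis after pose alignment) is a hypothetical simplification; the study identifies these points after segmentation and Meta Sapien normalization, whose details are not given here.

```python
import numpy as np


def supine_length(points, axis=1):
    """Head-to-sole Euclidean distance of a supine body point cloud.

    Hypothetical landmark rule: after pose alignment, the head-top and sole
    points are the points with the smallest and largest coordinate along
    `axis` (assumed to be the head-to-foot direction).
    """
    points = np.asarray(points, dtype=float)
    head = points[np.argmin(points[:, axis])]
    feet = points[np.argmax(points[:, axis])]
    return float(np.linalg.norm(head - feet)), head, feet


# Hypothetical coordinates in cm (not study data).
cloud = [[0.0, 0.0, 200.0], [10.0, 80.0, 190.0], [3.0, 170.0, 196.0]]
length_cm, head, feet = supine_length(cloud)
print(round(length_cm, 2))
```

Using the full 3-D distance rather than the extent along one axis keeps small residual tilts from shortening the estimate.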

Normalization: If the subject’s posture was slightly bent or the head elevated, the Meta Sapien normalization model corrected the angles and aligned the body into a canonical pose before finalizing the height estimation calculations.

5.5.2. Weight Estimation

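Density Assumptions: Given the total volume $V_{\text{body}}$, an assumed average body density $\rho$ (e.g., ~1.01 g/cm³) yields an approximate estimated mass:

$$m = \rho \, V_{\text{body}} \tag{6}$$

Physical weight would be $V_{\text{body}} \times \rho \times g$, where $g$ is the gravitational acceleration; however, in medical contexts ‘weight’ refers to mass measured in kilograms, so the reported value is $m$ (converted from grams to kilograms) and $g$ is not applied.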
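As a worked example of the density assumption, the hypothetical helper below multiplies volume by density and converts grams to kilograms. The 70,000 cm³ volume is made up for illustration; 1.01 g/cm³ is the example density from the text.

```python
def mass_from_volume_kg(volume_cm3, density_g_per_cm3=1.01):
    """Approximate body mass (kg) as density x volume, converting g to kg.

    No gravitational factor is applied: clinical 'weight' is mass in kg.
    """
    return density_g_per_cm3 * volume_cm3 / 1000.0


m = mass_from_volume_kg(70_000)  # hypothetical 70,000 cm^3 body volume
m_denser = mass_from_volume_kg(70_000, 1.03)  # same volume, higher density
print(f"{m:.1f} kg at 1.01 g/cm^3; {m_denser:.1f} kg at 1.03 g/cm^3")
```

Because mass is proportional to ρ, a density difference of 0.02 g/cm³ (1.03 instead of 1.01) shifts the estimate by about 2%, which is one motivation for the model-based refinements described next.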

Model-Based Refinements: Alternatively, more advanced machine learning models incorporated demographic variables (e.g., age, BMI category) and morphological characteristics (e.g., limb thickness, torso shape) to enhance predictive accuracy beyond reliance on a single bulk density assumption.

5.6. CNN Architecture, Training Configuration, and Replicability

To ensure reproducibility and transparency, we describe the convolutional neural network (CNN) architecture implemented in our system for predicting total body weight (TBW), ideal body weight (IBW), and lean body weight (LBW) from 3D volumetric data. The model is closely integrated with the volumetric feature extraction pipeline and leverages structured data derived from the 3D point clouds (Figures 3, 4, and 5).

5.6.1. Input Features: Volume-Based Representation

The 3D body point cloud (stored in PLY format) is preprocessed to isolate the human figure and segmented into anatomically distinct regions: head, torso, arms, and legs (Figures 2 and 3).

The segmented body is then sliced horizontally into 100 uniform intervals (Figure 4).

For each slice, the cross-sectional area is computed, yielding a 100-dimensional feature vector that represents the distribution of body volume across slices (Equations (3) and (4)).

This feature vector serves as the input to the CNN model for weight prediction.
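A sketch of assembling the (100, 1) input from per-slice areas follows. Several details are assumptions, flagged in the docstring: areas are linearly interpolated onto 100 uniform intervals, each slice volume is area times slice thickness (as in Equation (3)), and normalization divides by a single dataset-wide constant. The function name is hypothetical. A per-sample normalization (for example, dividing by the vector's own sum) would discard absolute body size, which the weight regression needs.

```python
import numpy as np


def slice_feature_vector(areas_cm2, body_length_cm, n_slices=100, scale=1.0):
    """Build the (n_slices, 1) CNN input: normalized volume per slice.

    Assumptions (not specified in the text):
    - `areas_cm2` are cross-sectional areas sampled evenly from head to feet;
    - they are linearly interpolated onto `n_slices` uniform intervals;
    - each slice volume is A_j * (body_length_cm / n_slices), as in Equation (3);
    - `scale` is one dataset-wide constant (e.g. fitted on the training set),
      so absolute body size is preserved for the weight regression.
    """
    areas = np.asarray(areas_cm2, dtype=float)
    src = np.linspace(0.0, 1.0, len(areas))  # relative positions of the input areas
    dst = np.linspace(0.0, 1.0, n_slices)  # relative positions of the output slices
    slice_volumes = np.interp(dst, src, areas) * (body_length_cm / n_slices)
    return (slice_volumes / scale).reshape(n_slices, 1)


# Toy check: constant 8 cm^2 cross-section over a 10 cm length.
feat = slice_feature_vector([8.0] * 10, body_length_cm=10.0)
print(feat.shape, feat.sum())
```

With `scale=1.0` the vector sums back to the Riemann-sum body volume, which is a convenient consistency check against Equation (4).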

5.6.2. CNN Architecture Design

The architecture is designed to capture spatial patterns in the volume distribution and is optimized for regression output (TBW, IBW, LBW).

Input Layer:

- Input shape: (100, 1), representing the normalized volume per slice.

Convolutional Layers:

- Conv1D Layer 1: 64 filters, kernel size = 3, ReLU activation.
- Conv1D Layer 2: 128 filters, kernel size = 3, ReLU activation.
- Conv1D Layer 3: 256 filters, kernel size = 3, ReLU activation.
- Each Conv1D layer is followed by MaxPooling1D (pool size = 2) to reduce dimensionality and extract hierarchical volume features.

Flattening Layer:

- Transforms the final feature maps into a single 1D vector.

Fully Connected Layers:

- Dense Layer 1: 128 neurons, ReLU.
- Dense Layer 2: 64 neurons, ReLU.

Output Layer:

- Dense layer with 3 output neurons, representing TBW, IBW, and LBW.
- Linear activation is used for continuous output values.
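To make the architecture above easier to replicate, the pure-Python sketch below traces output shapes and trainable-parameter counts layer by layer. It assumes 'valid' padding for Conv1D and MaxPooling1D with stride equal to the pool size (the Keras defaults); the text does not state the padding mode, and 'same' padding would change the flattened width and the size of the first dense layer. The function name `trace_cnn` is hypothetical.

```python
def trace_cnn(input_len=100, in_ch=1, conv_filters=(64, 128, 256), kernel=3,
              pool=2, dense_units=(128, 64), n_out=3):
    """Return (rows, total_params) for the Conv1D -> Dense stack described above.

    Assumes 'valid' padding and pooling stride == pool size (Keras defaults).
    Each row is (layer_name, output_shape, trainable_params).
    """
    length, ch, total, rows = input_len, in_ch, 0, []
    for f in conv_filters:
        length = length - kernel + 1  # 'valid' convolution
        params = (kernel * ch + 1) * f  # kernel weights + one bias per filter
        ch, total = f, total + params
        rows.append((f"Conv1D({f})", (length, ch), params))
        length //= pool  # MaxPooling1D drops the remainder
        rows.append((f"MaxPooling1D({pool})", (length, ch), 0))
    width = length * ch
    rows.append(("Flatten", (width,), 0))
    for units in (*dense_units, n_out):  # two hidden layers + linear output
        params = (width + 1) * units
        width, total = units, total + params
        rows.append((f"Dense({units})", (width,), params))
    return rows, total


rows, total = trace_cnn()
for name, shape, params in rows:
    print(f"{name:<18}{str(shape):<12}{params:>9,}")
print(f"Total trainable parameters: {total:,}")
```

Under these assumptions the feature length shrinks 100 → 98 → 49 → 47 → 23 → 21 → 10, so the flattened vector has 10 × 256 = 2560 values, and the first dense layer holds most of the network's roughly 460,000 parameters.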

5.6.3. Training Configuration

A summary of the training parameters is shown in Table 1.

This training setup is intended to improve robustness to anatomical variance, which is especially relevant in emergency care scenarios where posture and shape may deviate slightly.

5.7. Technical Implementation

The proposed system generates a 3D point cloud from each captured depth frame, which is exported in PLY format. To improve accuracy, noise and outliers—such as floating points or residual surfaces from the bed or blanket—are removed using region-growing and threshold-based segmentation techniques. For volumetric analysis, the subject’s body is virtually sliced in both horizontal and vertical planes to compute cross-sectional areas, which are then integrated to derive the total body volume.
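One simple form of the threshold-based cleanup mentioned above is sketched here: estimate the bed depth as the most populated depth bin, then discard points at or near that depth. This assumes a camera looking straight down at a roughly flat, untilted bed; the helper names, bin width, and margin are hypothetical, and this is not the region-growing procedure used in the study.

```python
import numpy as np


def estimate_bed_depth(z, bin_width=1.0):
    """Heuristic: in an overhead view the bed is the most populated depth bin."""
    z = np.asarray(z, dtype=float)
    edges = np.arange(z.min(), z.max() + 2 * bin_width, bin_width)
    counts, edges = np.histogram(z, bins=edges)
    i = int(np.argmax(counts))
    return 0.5 * (edges[i] + edges[i + 1])  # centre of the densest bin


def remove_bed(points, bed_depth, margin=2.0):
    """Keep points closer to the camera than the bed surface minus a margin.

    With the camera looking straight down, depth Z increases toward the bed, so
    body points satisfy Z < bed_depth - margin. Sheets and blanket edges lying
    within `margin` of the bed are discarded along with the bed itself.
    """
    points = np.asarray(points, dtype=float)
    return points[points[:, 2] < bed_depth - margin]


# Hypothetical cloud in cm: 10 body points at 180-189 cm, 50 bed points at 200 cm.
body = np.column_stack([np.arange(10.0), np.zeros(10), 180.0 + np.arange(10.0)])
bed = np.column_stack([np.arange(50.0), np.ones(50), np.full(50, 200.0)])
cloud = np.vstack([body, bed])
bed_z = estimate_bed_depth(cloud[:, 2])
subject = remove_bed(cloud, bed_z)
print(bed_z, subject.shape)
```

A tilted or sagging mattress would break the single-plane assumption, which is where region-growing or plane fitting becomes necessary.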

Ground truth labels for model training were obtained from multiple validated sources: TBW was measured using a calibrated standing scale, LBW was derived from the Janmahasatian formula, and IBW was calculated using the Devine formula. These ground truth measures served as targets for supervised learning.
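The two formula-based labels named above can be written out as follows. The coefficients are the standard published Devine and Janmahasatian equations; the function names are hypothetical, and how the study obtained the sex and height inputs for labelling is not described.

```python
def devine_ibw_kg(height_cm, male):
    """Devine IBW: 50 kg (men) or 45.5 kg (women) + 2.3 kg per inch over 5 ft."""
    inches_over_60 = height_cm / 2.54 - 60.0
    return (50.0 if male else 45.5) + 2.3 * inches_over_60


def janmahasatian_lbw_kg(tbw_kg, height_cm, male):
    """Janmahasatian LBW = 9270 * TBW / (a + b * BMI), with
    (a, b) = (6680, 216) for men and (8780, 244) for women."""
    bmi = tbw_kg / (height_cm / 100.0) ** 2
    a, b = (6680.0, 216.0) if male else (8780.0, 244.0)
    return 9270.0 * tbw_kg / (a + b * bmi)


# Hypothetical inputs (not study data).
print(round(devine_ibw_kg(177.8, male=True), 1))               # 70 in tall man
print(round(janmahasatian_lbw_kg(100.0, 200.0, male=True), 1))  # BMI 25 man
```

The Devine formula extrapolates linearly below 5 ft (152.4 cm), where it can yield implausibly low values.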

A deep neural network was then trained to map the extracted volumetric features directly to TBW, LBW, and IBW. Once trained, the system generates all three weight estimates from a single capture without manual calculation, supporting real-time use in emergency care settings.

5.8. Limitations and Applicability

This pilot study was conducted on a limited sample size, which constrains the generalizability of our findings. As such, the current dataset and results may not fully capture the variability observed across the broader patient population typically encountered in emergency departments.

Furthermore, the proposed system depends on a fixed overhead camera setup positioned approximately two meters above the patient. While this configuration is optimal in controlled clinical or simulated environments, it may pose practical challenges in real-world emergency or prehospital settings, where equipment positioning is less predictable. Preliminary evaluations indicate a decline in accuracy when camera angles or positions deviate significantly from the controlled setup, underscoring a critical limitation in the system’s robustness under uncontrolled conditions.

Anticipated Challenges and Technical Strategies

To address these challenges, future research will prioritize three key directions. First, we will develop robust geometric normalization methods to correct distortions introduced by variable camera placements. Second, we will integrate multi-angle camera fusion techniques that leverage complementary viewpoints for more consistent 3D reconstructions. Third, we will design deep learning-based pose normalization algorithms specifically optimized for handheld or variably positioned cameras. Collectively, these advancements aim to maintain high estimation accuracy across diverse acquisition conditions, thereby extending the system’s applicability to real-world emergency and prehospital care settings.

5.9. Performance Evaluation

To evaluate the performance of our 3D CNN-based weight estimation system against conventional approaches (visual estimation, tape-based tools, and anthropometric formulas), we compared our performance data against that from other reported methods. This process was intended to identify differences in accuracy across methods and to assess the potential viability of our approach. The metrics that we considered to be the most appropriate for weight estimation studies were [17]:

The proportion of predictions within 10% of the ground truth weight.

Confidence intervals were calculated for MAE using the standard error of the mean and the t-distribution.
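A minimal sketch of the MAE confidence interval described above treats the per-subject absolute errors as a sample and forms MAE ± t·s/√n with n − 1 degrees of freedom. It assumes SciPy is available for the t quantile; the function name and example weights are hypothetical.

```python
import numpy as np
from scipy import stats


def mae_confidence_interval(y_true, y_pred, confidence=0.95):
    """Return (MAE, lower, upper) using the SEM of absolute errors and a t quantile."""
    abs_err = np.abs(np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float))
    n = abs_err.size
    mae = abs_err.mean()
    sem = abs_err.std(ddof=1) / np.sqrt(n)  # sample SD / sqrt(n)
    t_crit = stats.t.ppf((1.0 + confidence) / 2.0, df=n - 1)  # two-sided quantile
    return float(mae), float(mae - t_crit * sem), float(mae + t_crit * sem)


# Hypothetical weights in kg (not study data); absolute errors are 1, 2, 3, 4 kg.
mae, lo, hi = mae_confidence_interval([70, 80, 90, 100], [71, 82, 93, 104])
print(f"MAE = {mae:.2f} kg, 95% CI [{lo:.2f}, {hi:.2f}]")
```

With small pilot samples the t quantile is noticeably larger than the normal value of 1.96 (about 3.18 for n = 4), so the interval widens accordingly.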

5.9.1. Comparison Methods

We compared four TBW estimation methods (see Table 2).

5.9.2. Results of Error Analysis and Confidence Intervals (See Table 3)

5.9.3. Ideal Body Weight and Lean Body Weight Estimation

In addition to TBW estimates, our approach enabled us to generate estimates of IBW and LBW. Our IBW estimates had a P10 of 100%, a MAPE of 2.0%, and an MAE of 2.4 kg. Our LBW estimates had a P10 of 100%, a MAPE of 2.2%, and an MAE of 1.4 kg. There are no existing point-of-care methods with which to compare the performance of our IBW and LBW estimates.

6. Discussion

The proposed system provides several advantages that make it highly suitable for emergency and critical care environments. By generating weight estimates from a single depth snapshot, it markedly accelerates the estimation process, enabling rapid and reliable results in time-sensitive clinical scenarios. In addition, the system automatically estimates lean body weight (LBW) and ideal body weight (IBW), addressing a critical gap in drug dosing optimization, particularly in obese patients, where accurate LBW/IBW values are essential.

Preliminary pilot data demonstrate that the system delivers higher accuracy than traditional methods, including in individuals with BMI > 30, a group where conventional techniques often underperform. Its streamlined workflow design further reduces clinical disruption and cognitive load, ensuring seamless integration into emergency protocols. As summarized in Table 4, the system consistently improves both accuracy and real-time usability when compared to standard weight estimation tools, while uniquely supporting LBW and IBW calculation.

From a clinical safety perspective, both systematic overestimation (+error) and systematic underestimation (−error) carry important implications. Overestimation can result in excessive dosing and increased risk of adverse drug reactions, whereas underestimation may lead to sub-therapeutic dosing and insufficient treatment efficacy. While our primary metric, MAPE, effectively quantifies the overall magnitude of estimation error, future studies should also incorporate directional error analysis (MPE) to better characterize systematic bias and guide further optimization of the system.

7. Future Work

Our 3D camera-based body weight estimation system has shown promising accuracy in both pilot studies and early emergency department (ED) applications. Nonetheless, several avenues for future research and development remain critical to further enhance system performance and broaden clinical utility.

1. Pediatric and Neonatal Adaptation

A key priority is extending the system for use in pediatric and neonatal populations, where significant differences in body size, proportions, and posture demand specialized segmentation algorithms and growth-specific predictive models.

2. Clinical System Integration

Integration with real-time medical systems, including smart infusion pumps and electronic medical records (EMRs), could enable automated drug dosing and closed-loop decision support, thereby minimizing the risk of medication errors in acute care.

3. Large-Scale Validation

To improve generalizability and reliability, future work should involve large-scale, multi-center trials that include diverse patient populations and account for variability in ED workflows and environments.

4. Sensor and Algorithmic Enhancements

Hardware advances, such as leveraging LiDAR or time-of-flight depth sensors, may increase accuracy and robustness. Parallel improvements in occlusion handling and segmentation refinement will help mitigate challenges posed by clothing, medical equipment, or atypical postures.

5. Expanded Clinical Applications

Extending the system to measure segmental body composition (e.g., regional muscle and adipose tissue volumes) could support applications in telemedicine, nutritional monitoring, and longitudinal tracking of chronic diseases.

6. Portability and Accessibility

Developing lightweight, battery-powered versions of the system would facilitate deployment in prehospital settings, rural clinics, and resource-limited environments, expanding access to advanced diagnostic tools outside traditional hospital infrastructure.

7. Human Factors and Ethical Considerations

Finally, widespread adoption will require careful attention to user interface design, workflow integration, and human factors engineering, alongside addressing key privacy, security, and ethical concerns surrounding patient imaging and data use.

Based on our preliminary findings, we outline three planned studies to further validate, refine, and expand the clinical applicability of our system:

1. Advanced Model Development and Validation

Conduct a study integrating 3D imaging with DXA reference data across a large and demographically diverse adult cohort. This will allow more robust model training, improved accuracy, and evaluation across varying body types and clinical conditions.

2. High-Fidelity Simulation Trial

Perform a simulation-based trial comparing our system with traditional weight estimation methods (visual estimation, tape-based tools, and anthropometric formulas) under emergency conditions. The primary endpoints will be speed and accuracy, reflecting the system’s ability to enhance decision-making in time-critical scenarios.

3. Randomized Controlled Trial (RCT)

Implement a randomized controlled trial in a hospital ED setting to evaluate the system’s real-world performance, focusing on drug dosing accuracy, workflow integration, and patient safety outcomes.

The overarching aim of these studies is to refine the technology and demonstrate its clinical utility across a wide range of healthcare environments, ultimately positioning it as a reliable adjunct to emergency and critical care.

8. Conclusions

Our 3D depth camera-based system was successfully implemented and evaluated in a pilot study conducted in a simulated emergency care environment. The results demonstrate significant accuracy advantages over traditional weight estimation methods, supporting the feasibility and clinical value of integrating AI-driven 3D imaging into emergency workflows to improve medication dosing accuracy and patient safety. In our pilot study, the proposed system achieved a mean absolute error (MAE) of 3.1 kg for TBW, with 87% of estimates within ±10% of actual weight, highlighting its strong potential for clinical adoption.

Importantly, our evaluation metrics distinguished between both the magnitude of error (MAPE) and the directional bias (MPE), underscoring the clinical importance of precise and transparent error characterization in emergency dosing contexts.

Contributions

This study introduces an AI-driven approach for real-time weight estimation from a single supine image, with the following key contributions:

Development of an end-to-end CNN-based prediction pipeline for volumetric feature extraction and weight estimation.

Real-time estimation of total body weight (TBW), ideal body weight (IBW), and lean body weight (LBW).

Validation of performance in emergency simulation settings across a broad BMI range.

Demonstration of a practical, deployable system evaluated in prospective clinical simulations.