1. Introduction

Wireless Sensor Networks (WSNs) have emerged as a key enabling technology in modern digital infrastructures, supporting diverse applications such as precision agriculture, environmental monitoring, disaster management, military surveillance, healthcare systems, and smart city development [1,2,3]. A typical WSN consists of a large number of spatially distributed sensor nodes equipped with limited sensing, computation, and wireless communication capabilities. These nodes are generally powered by non-rechargeable batteries and are deployed to measure ambient parameters such as temperature, humidity, vibration, and pressure, with the sensed data transmitted to a base station (sink) for further processing [4,5].

Despite their versatility, WSNs face critical operational challenges, with energy consumption being the most significant. Since sensor nodes are usually deployed in inaccessible or hostile environments, battery replacement is impractical. Node energy depletion leads to sensing coverage gaps, routing failures, and eventually the collapse of network connectivity [6]. Consequently, the design of energy-efficient clustering and routing protocols remains central to prolonging network lifetime while ensuring reliable data transmission.

Several clustering-based protocols have been introduced to address these concerns. Early approaches such as Low-Energy Adaptive Clustering Hierarchy (LEACH) [7], Power-Efficient Gathering in Sensor Information Systems (PEGASIS) [8], and Hybrid Energy-Efficient Distributed Clustering (HEED) [9] significantly reduced redundant transmissions and minimized direct communication with the sink. However, their decision-making strategies relied on static or probabilistic criteria, offering limited adaptability under dynamic conditions such as node failures, heterogeneous energy states, and varying traffic demands. For instance, LEACH randomly selects cluster heads, often causing uneven energy distribution. PEGASIS organizes nodes into linear chains, but its rigid structure cannot efficiently adapt to dynamic topologies. HEED partially addresses these issues by considering residual energy in cluster head selection, yet its reliance on fixed parameters restricts scalability and flexibility.
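For concreteness, the randomized election that LEACH is usually described with can be sketched as follows. Each node that has not yet served as a cluster head in the current epoch draws a uniform random number and becomes a cluster head if the draw falls below the threshold T(n) = p / (1 - p * (r mod (1/p))), where p is the desired cluster-head fraction and r is the round number. This is a minimal sketch assuming 1/p is an integer; the function names are hypothetical, and tracking which nodes remain eligible in the epoch is left to the caller.

```python
import random


def leach_threshold(p, r):
    """LEACH election threshold T(n) for a node still eligible in this epoch.

    p: desired fraction of cluster heads per round (assumes 1/p is an integer)
    r: current round number, starting at 0
    """
    epoch = round(1 / p)  # number of rounds per epoch
    return p / (1 - p * (r % epoch))


def leach_elect(eligible, p, r, rng):
    """Each eligible node independently becomes a CH if its draw is below T(n)."""
    t = leach_threshold(p, r)
    return [node for node in eligible if rng.random() < t]
```

Because T(n) depends only on p and r, and not on residual energy, a node with little energy left is as likely to be elected as a fully charged one, which is the source of the uneven energy distribution noted above. The threshold rises to 1 in the last round of each epoch, so every node that has not yet served is elected then.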

Recent advancements in Artificial Intelligence (AI), particularly Reinforcement Learning (RL), provide a promising alternative for addressing the limitations of traditional protocols [10,11]. RL enables distributed decision-making by allowing nodes to learn from their interactions with the network environment and adapt their strategies to optimize long-term energy efficiency and reliability. Deep Reinforcement Learning (DRL), and specifically Deep Q-Networks (DQNs), further enhance this capability by approximating Q-values with deep neural networks, thereby enabling efficient learning in the large state-action spaces inherent to WSNs [12].
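For reference, the standard DQN formulation trains a network Q(s, a; θ) toward a bootstrapped target computed with a periodically updated copy of its parameters, θ⁻. In a routing setting, the action a would typically be the choice of next hop; the exact state, action, and reward definitions of the proposed protocol are not given in this section, so the equations below describe only the generic technique.

```latex
y_t = r_t + \gamma \max_{a'} Q\left(s_{t+1}, a'; \theta^{-}\right)

\mathcal{L}(\theta) = \mathbb{E}_{(s_t, a_t, r_t, s_{t+1})}\left[\left(y_t - Q\left(s_t, a_t; \theta\right)\right)^2\right]
```

Here γ ∈ [0, 1) is the discount factor, the expectation is taken over transitions sampled from a replay buffer, and for a terminal transition (for example, a packet reaching the sink) the target reduces to y_t = r_t.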

Motivated by these insights, this paper proposes an AI-driven, energy-efficient data aggregation and routing protocol for WSNs. The contributions of this work can be summarized as follows:

Adaptive Clustering Strategy: Residual energy, node proximity, and local traffic load are jointly considered in cluster-head selection, ensuring balanced energy consumption and preventing premature node failures.

Intelligent Routing Mechanism: A DQN-based routing scheme is employed to dynamically determine energy-efficient forwarding paths, considering both reliability and latency requirements.

Performance Validation: The proposed protocol is evaluated against conventional approaches such as LEACH, PEGASIS, and HEED using metrics including network lifetime, energy consumption, packet delivery ratio (PDR), latency, and load distribution. Simulation results demonstrate superior performance, scalability, and adaptability under dynamic network conditions.
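The adaptive clustering criterion can be illustrated with a weighted fitness score that combines residual energy, proximity, and local load. The sketch below is hypothetical: the source does not give its scoring function, so the weights (0.5, 0.3, 0.2), the use of queue length as a proxy for local traffic load, the inverse mean-distance proximity term, and the class and function names are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    node_id: int
    x: float
    y: float
    residual_energy: float  # joules remaining
    initial_energy: float   # joules at deployment
    queue_length: int       # pending packets; assumed proxy for local traffic load


def ch_score(node, neighbors, max_queue, w_energy=0.5, w_proximity=0.3, w_load=0.2):
    """Hypothetical weighted CH fitness in [0, 1]; higher means a better CH candidate."""
    energy_term = node.residual_energy / node.initial_energy
    if neighbors:
        mean_dist = sum(math.dist((node.x, node.y), (n.x, n.y)) for n in neighbors) / len(neighbors)
    else:
        mean_dist = 0.0
    proximity_term = 1.0 / (1.0 + mean_dist)  # closer neighbors give a larger term
    load_term = 1.0 - node.queue_length / max_queue if max_queue > 0 else 1.0
    return w_energy * energy_term + w_proximity * proximity_term + w_load * load_term


def select_cluster_heads(nodes, k, radius):
    """Return the ids of the k highest-scoring nodes; neighbors lie within `radius`."""
    max_queue = max(n.queue_length for n in nodes)
    scored = []
    for node in nodes:
        nbrs = [m for m in nodes
                if m is not node and math.dist((node.x, node.y), (m.x, m.y)) <= radius]
        scored.append((ch_score(node, nbrs, max_queue), node.node_id))
    scored.sort(reverse=True)
    return [node_id for _, node_id in scored[:k]]
```

Re-running the selection every round with updated residual energies and queue lengths is what rotates the CH role: a node that served as CH spends more energy, its energy term falls, and a neighbor overtakes it.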

The research introduces an AI-based energy-aware protocol for WSNs that combines adaptive clustering with a DQN-driven routing strategy. Experimental results show improved lifetime, efficiency, reliability, and scalability over traditional methods.

The integration of Artificial Intelligence (AI) into WSN protocols introduces significant distinctions compared to conventional approaches. Unlike traditional schemes, AI-enabled protocols exhibit adaptive behavior, allowing the network to respond dynamically to variations in environmental conditions. This adaptability is particularly critical in WSNs, where node failures, fluctuating traffic patterns, and progressive energy depletion are common. Through learning mechanisms, AI-driven protocols refine their decisions from accumulated experience, continuously improving their energy management strategies. This allows energy-intensive tasks, such as data transmission and aggregation, to be distributed in a way that accounts for the lifetime of the network as a whole. Furthermore, deep reinforcement learning methods such as Deep Q-Networks (DQNs) approximate the value function with a neural network instead of a lookup table, which makes them better suited to large-scale deployments involving hundreds or thousands of nodes.

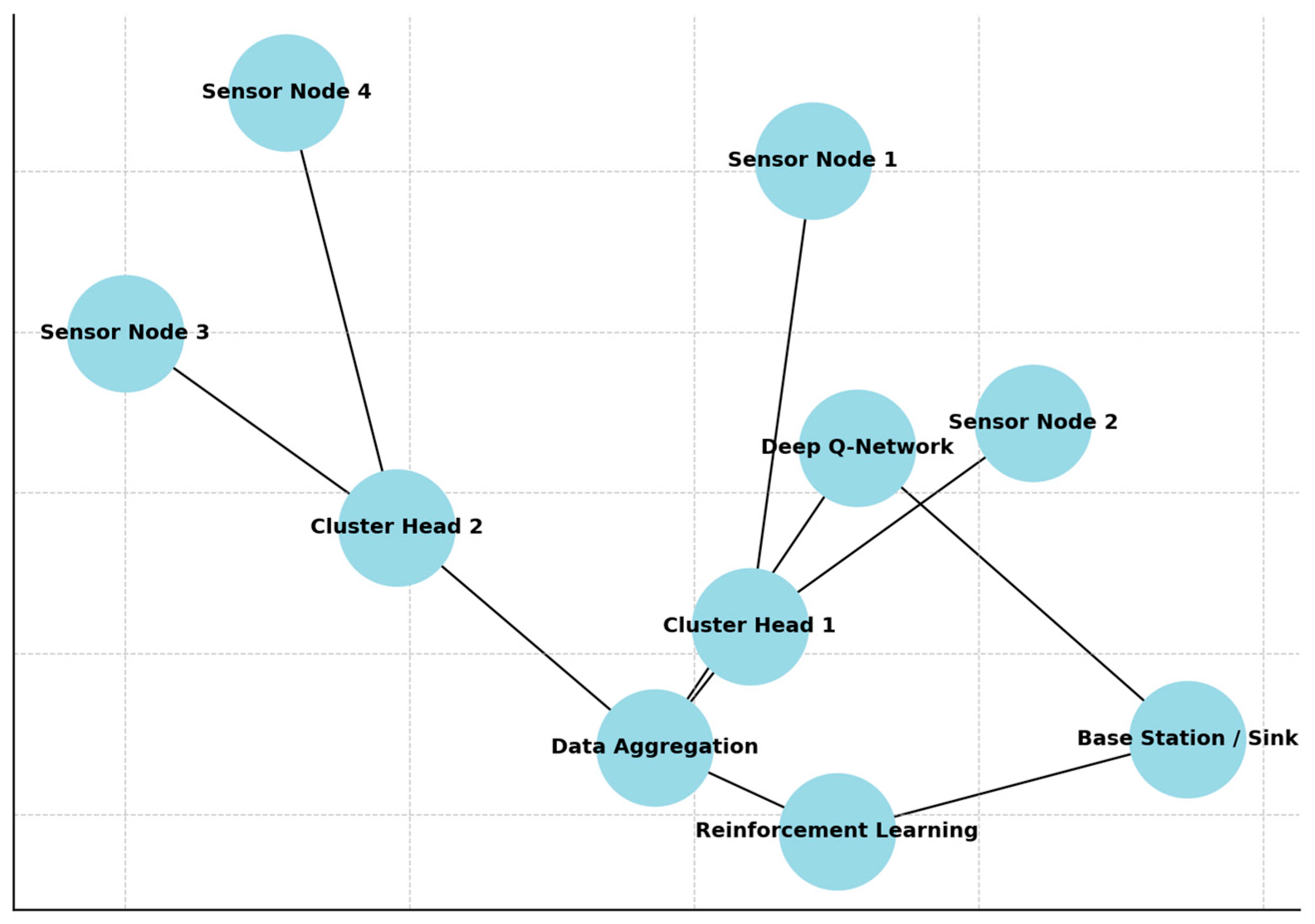

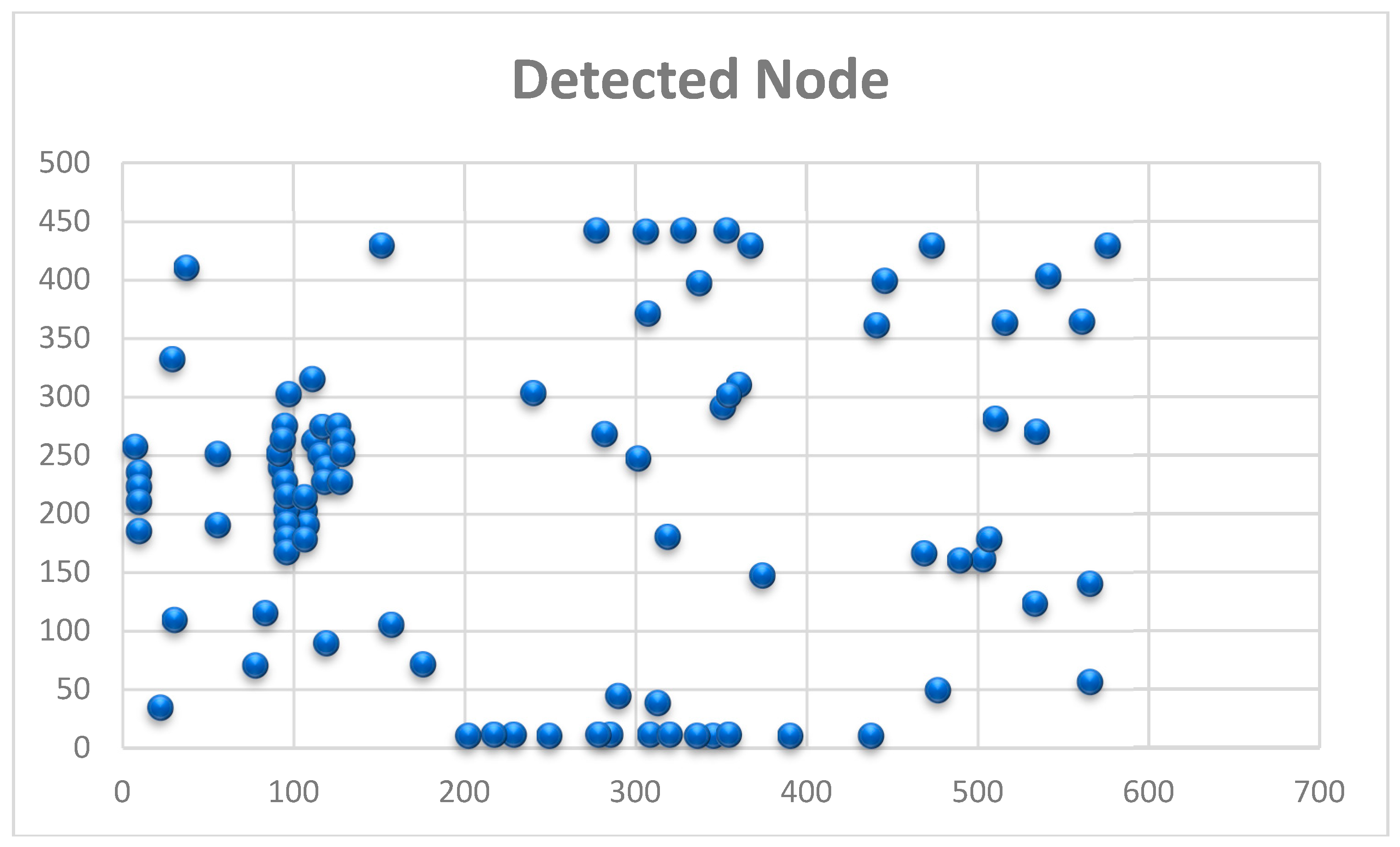

The effectiveness of the proposed AI-assisted data aggregation and routing protocol, shown in Figure 1, is evaluated through extensive simulations that replicate real-world WSN scenarios. These scenarios consider large-scale random node deployment for monitoring environmental conditions. Performance assessment is carried out through key metrics, including network lifetime, energy consumption, packet delivery ratio (PDR), latency, and load distribution. Comparative analysis with established protocols such as LEACH, PEGASIS, and HEED demonstrates substantial improvements in terms of energy efficiency, packet delivery reliability, and overall network sustainability. The results confirm that the AI-driven protocol ensures balanced energy utilization across nodes, reduces packet losses through intelligent routing, and significantly extends operational lifetime.

A key outcome of this study is the reinforcement learning-based cluster head (CH) selection strategy, which dynamically rotates the CH role among nodes based on residual energy levels and spatial distribution. This real-time adaptability prevents overburdening of individual nodes with data aggregation responsibilities, thereby mitigating premature failures and avoiding network partitioning. In addition, the DQN-based routing mechanism identifies energy-efficient forwarding paths that minimize communication overhead and enhance throughput. Collectively, these mechanisms yield a robust, scalable, and energy-aware solution for WSN communication challenges.
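The routing decision can be sketched as value-based next-hop selection. To keep the example self-contained, it substitutes tabular Q-learning for the DQN; a DQN replaces the table with a neural network trained toward the same kind of target. The state (current node), the action (next-hop neighbor), epsilon-greedy exploration, and the reward weights in hop_reward are hypothetical and are not the paper's definitions.

```python
import random
from collections import defaultdict


class QRouter:
    """Tabular Q-learning next-hop selector, used here as a stand-in for a DQN."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # (node, next_hop) -> estimated long-term value
        self.alpha = alpha           # learning rate
        self.gamma = gamma           # discount factor
        self.epsilon = epsilon       # exploration probability
        self.rng = random.Random(seed)

    def choose(self, node, neighbors):
        """Epsilon-greedy choice of next hop among the current node's neighbors."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(neighbors)
        return max(neighbors, key=lambda nh: self.q[(node, nh)])

    def update(self, node, next_hop, reward, next_neighbors):
        """One-step update: Q <- Q + alpha * (r + gamma * max Q(next_hop, .) - Q)."""
        best_next = max((self.q[(next_hop, n)] for n in next_neighbors), default=0.0)
        target = reward + self.gamma * best_next
        self.q[(node, next_hop)] += self.alpha * (target - self.q[(node, next_hop)])


def hop_reward(residual_frac, link_success, delay_ms, w=(1.0, 1.0, 0.01)):
    """Hypothetical reward favoring energy-rich, reliable, low-delay next hops."""
    return w[0] * residual_frac + w[1] * link_success - w[2] * delay_ms
```

When the chosen next hop is the sink, passing an empty next_neighbors list makes the target reduce to the immediate reward, which mirrors the terminal case of the DQN target.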

The proposed AI-driven energy-efficient data aggregation and routing protocol provides a comprehensive solution to the energy and reliability limitations of traditional WSN protocols. The integration of adaptive clustering with DQN-based routing enhances energy conservation, improves load balancing, and extends network longevity, while maintaining high-quality data delivery. These contributions establish a solid foundation for future advancements in AI-assisted WSN protocols, with potential applications in environmental monitoring, smart city infrastructure, healthcare systems, and other IoT-driven domains. As WSNs continue to play a critical role in next-generation IoT ecosystems, intelligent, energy-efficient communication strategies will become increasingly important.

2. Related Works

Recent advances in Internet of Things (IoT) and Wireless Sensor Networks (WSNs) research emphasize optimization techniques that enhance energy efficiency, security, and adaptability through the integration of Artificial Intelligence (AI). Approaches under investigation include fuzzy clustering models, hybrid machine-learning-based intrusion detection frameworks, blockchain-assisted communication protocols, and AI-driven routing mechanisms. Collectively, these studies converge on several key requirements: minimization of energy expenditure, robust data protection, scalability for large-scale deployments, and adaptability to dynamic operating conditions. By integrating state-of-the-art technologies such as blockchain, machine learning, and deep reinforcement learning, researchers have developed solutions aimed at improving the lifetime, reliability, and resilience of IoT-enabled WSNs, particularly in domains such as healthcare, industrial automation, and smart city infrastructures.

Javadpour et al. [1] introduced an optimization-based fuzzy clustering method to enhance energy management in IoT networks. Their work focused on refining cluster head selection using fuzzy logic combined with hybrid optimization algorithms, leading to extended network lifetime and more balanced power usage across sensor nodes. They further suggested that predictive AI-based models could be incorporated to adapt clustering strategies in real time. Gebremariam et al. [2] proposed a hybrid machine learning-based intrusion detection system (IDS) tailored for hierarchical WSNs. By combining decision trees and support vector machines within a multilayer IDS, they achieved improved accuracy while minimizing false positives. They also noted that deep learning extensions could further strengthen adaptability against evolving cyber threats.

Energy-efficient clustering solutions were also studied by Labib et al. [3], who developed an enhanced threshold-sensitive distributed protocol designed for IoT-based WSNs. By dynamically adjusting cluster head thresholds according to network conditions, their approach achieved balanced energy consumption and extended network longevity. Similarly, Priyadarshi et al. [4] surveyed a wide spectrum of energy-aware routing algorithms, including genetic algorithms, particle swarm optimization, and reinforcement learning. Their findings highlighted the potential of AI-based models for achieving real-time optimization under dynamic network conditions and recommended hybrid frameworks that combine meta-heuristics with AI for greater efficiency.

In the domain of secure routing, Haseeb et al. [5] proposed an AI-assisted sustainable model for Mobile WSNs (MWSNs). Their protocol predicts and mitigates security threats while optimizing transmission energy costs, with adaptability to time-varying conditions. They further suggested blockchain integration to guarantee data integrity. Kumari and Tyagi [6] reviewed the design and applications of WSNs, stressing challenges related to scalability, energy conservation, and secure communication, and emphasized the potential of digital twins and blockchain for future deployments in urban environments.

Application-specific AI frameworks have also been investigated. Basingab et al. [7] introduced an AI-based decision support system for optimizing WSN resource allocation in consumer electronics. By predicting traffic patterns with machine learning, their system reduced energy usage while improving quality of service in e-commerce applications. Hu et al. [8] proposed a deep reinforcement learning (DRL)-based security mechanism for WSNs, integrating DRL with traditional security measures to achieve both secure and energy-efficient communication. They recommended federated learning as a future enhancement to improve scalability. Bairagi et al. [9] presented a recursive geographic forwarding protocol that reduced forwarding costs by exploiting spatial information, with potential extensions through machine learning for predictive routing.

Additional works have focused on integrating blockchain with AI. Satheeskumar et al. [10] developed a hybrid framework combining neural networks and blockchain to improve routing and ensure secure communication in WSNs. Ntabeni et al. [11] analyzed device-level energy-saving strategies for machine-type communications (MTC), including power management and RF optimization, and suggested AI-based approaches for real-time adaptation in resource-constrained IoT devices. Chinnasamy et al. [12] designed a blockchain-6G-enabled framework that incorporates machine learning for security management in IoT applications, recommending further advancements in predictive AI algorithms for real-time optimization.

Research into domain-specific IoT applications has also gained momentum. Venkata Prasad et al. [13] reviewed lightweight secure routing techniques for the Internet of Medical Things (IoMT), emphasizing hybrid models that combine AI-driven optimization with classical routing protocols. Kaur et al. [14] proposed an NSGA-III-based fog-assisted WSN architecture for emergency evacuation in large buildings, demonstrating its ability to optimize multiple objectives such as latency and energy efficiency. Ibrahim et al. [15] presented a 6G-IoT resource allocation framework leveraging Bayesian game theory and packet scheduling, with AI integration for adaptive reconfiguration in real time.

Overall, these studies reveal a growing trend toward AI- and blockchain-assisted WSN optimization, in which clustering, routing, and security are designed to handle large-scale, heterogeneous, and dynamic environments. The collective findings show that energy efficiency, reliability, and scalability remain central challenges, and that AI-driven adaptive solutions are a promising direction for future IoT and WSN deployments.

4. Experimental Analysis

The evaluation of the proposed protocol is performed through simulations in a WSN environment composed of randomly deployed sensor nodes with limited energy resources across a defined geographical region. Performance is assessed using key metrics, including network lifetime, overall energy consumption, packet delivery ratio (PDR), and latency. Comparative analysis with conventional schemes such as LEACH, PEGASIS, and HEED illustrates that the proposed protocol achieves improved energy efficiency and enhanced network sustainability under varying operating conditions.

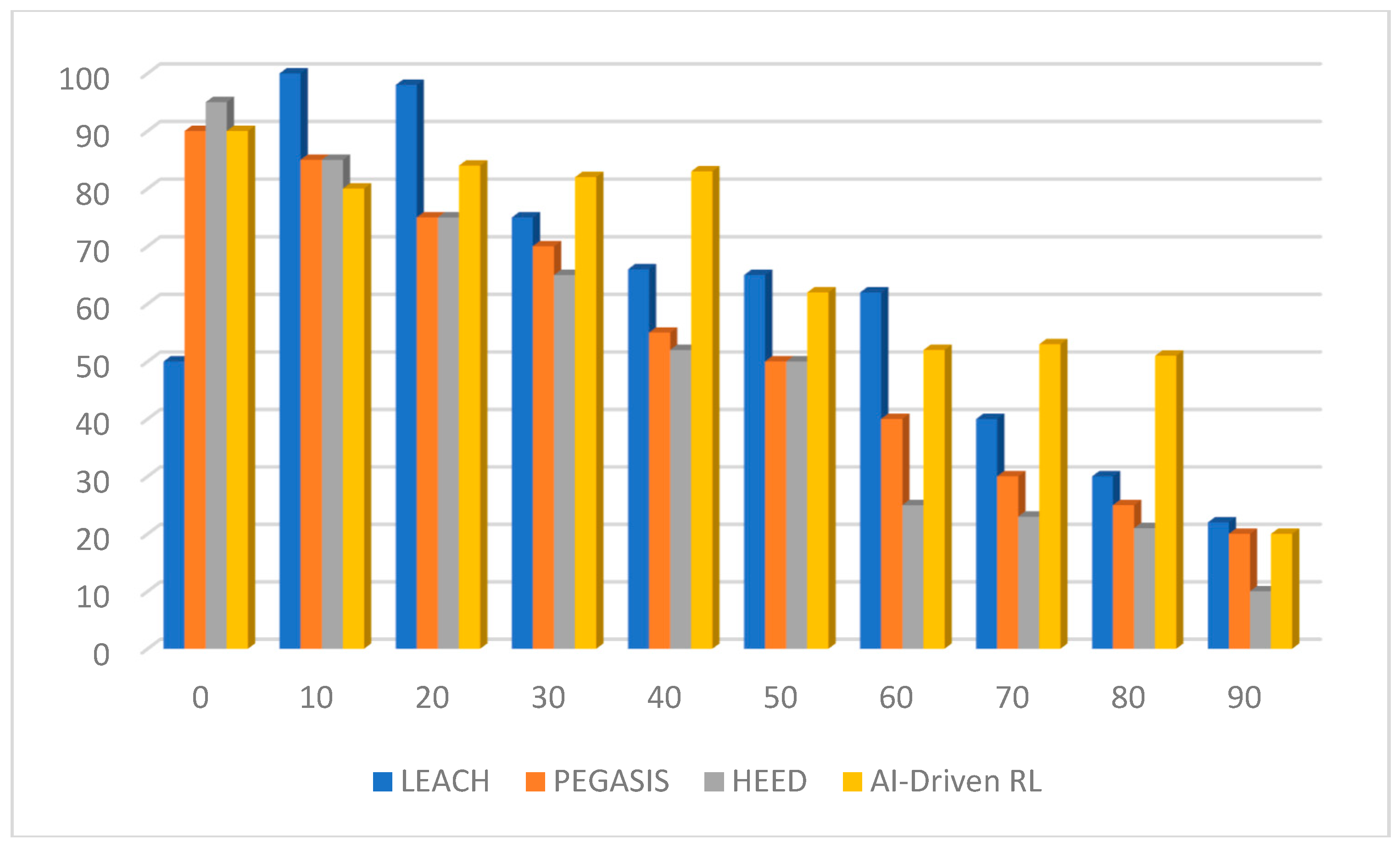

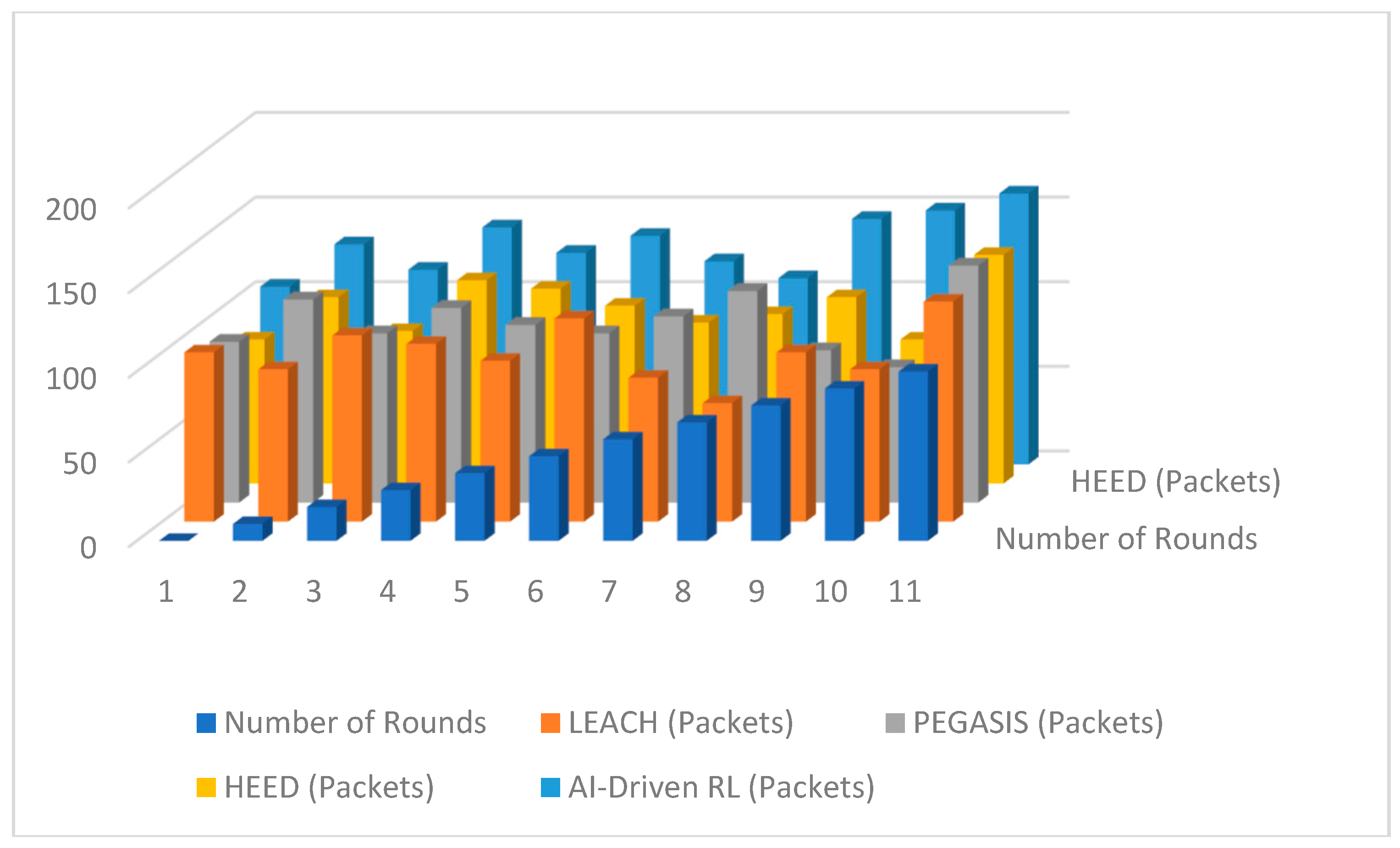

Figure 2 shows the number of alive nodes over successive rounds for the AI-driven RL protocol and the traditional protocols LEACH, PEGASIS, and HEED. As the number of rounds increases, the AI-driven RL protocol maintains more alive nodes than the other protocols, indicating that it distributes energy consumption more evenly and prevents premature node death. This improvement is attributed to adaptive decision-making in cluster head selection and routing, which aims to maximize network lifetime. In contrast, the number of alive nodes declines more steeply for LEACH, PEGASIS, and HEED, which rely on static or probabilistic decision rules.
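The section does not state the energy model behind Figure 2. Simulations of LEACH, PEGASIS, and HEED commonly use the first-order radio model, sketched below under that assumption with its frequently quoted constants (E_elec = 50 nJ/bit, eps_fs = 10 pJ/bit/m², eps_mp = 0.0013 pJ/bit/m⁴); a node is counted as alive while its residual energy is positive. These constants are assumptions, not the paper's reported parameters.

```python
import math

# First-order radio model constants as commonly used with LEACH
# (assumed; the simulation parameters of the proposed protocol are not given here).
E_ELEC = 50e-9        # J/bit, electronics energy per bit for TX or RX
EPS_FS = 10e-12       # J/bit/m^2, free-space amplifier energy
EPS_MP = 0.0013e-12   # J/bit/m^4, multipath amplifier energy
D0 = math.sqrt(EPS_FS / EPS_MP)  # crossover distance, roughly 87.7 m


def tx_energy(bits, distance):
    """Energy (J) to transmit `bits` over `distance` metres."""
    if distance < D0:
        return bits * (E_ELEC + EPS_FS * distance ** 2)
    return bits * (E_ELEC + EPS_MP * distance ** 4)


def rx_energy(bits):
    """Energy (J) to receive `bits`."""
    return bits * E_ELEC


def alive_count(energies):
    """Number of nodes with positive residual energy in the current round."""
    return sum(1 for e in energies if e > 0)
```

In a round-based simulation, each node's energy would be decremented by the transmit and receive costs of its role (member or CH), and alive_count over the updated energies gives one point of an alive-nodes curve.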

In Round 1, the network topology illustrates the initial cluster formation in a wireless sensor network (WSN), where several nodes are randomly distributed across the field. Cluster heads (CHs) are denoted by red crosses, while ordinary sensor nodes are represented with blue crosses. The black dotted lines indicate associations between sensor nodes and their corresponding CHs, thereby forming clusters. Furthermore, the green solid lines highlight the communication paths from CHs to the sink (base station). This clustering mechanism enables energy-efficient communication, as individual nodes transmit data to nearby CHs rather than directly to the sink, thereby reducing overall energy consumption.

In Round 2, the selection of CHs is influenced by both the residual energy of nodes and their relative proximity. The newly elected CHs, again marked with red crosses, differ from those in the previous round, while the blue crosses continue to represent ordinary nodes. The changes in node–CH connections reflect a dynamic restructuring of clusters, which balances energy dissipation and prolongs the operational lifetime of the WSN. Such reorganization prevents excessive energy burden on specific nodes, thus mitigating uneven energy distribution and avoiding premature node failures.

In Round 3, further re-clustering occurs as new CHs are elected, represented once again by red crosses, with updated associations to sensor nodes. This continuous adaptive process ensures that energy expenditure remains distributed across the network. The CH selection considers real-time parameters such as residual energy levels and inter-node distances, demonstrating the adaptive capability of AI-assisted clustering protocols. By optimizing the utilization of available resources, this approach enhances the network lifetime while preventing rapid energy depletion of critical nodes, as illustrated in Figure 3.
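Each of the three rounds repeats the same pattern: elect new CHs, then re-associate ordinary nodes (the dotted links in the figures). The association step is sketched below as nearest-CH assignment, a common choice but an assumption here, since the section states only that proximity is considered; form_clusters is a hypothetical name.

```python
import math


def form_clusters(positions, ch_ids):
    """Assign every non-CH node to its nearest cluster head.

    positions: dict mapping node_id -> (x, y)
    ch_ids: iterable of node ids elected as cluster heads this round
    Returns a dict mapping ch_id -> list of member node ids.
    """
    clusters = {ch: [] for ch in ch_ids}
    for node_id, pos in positions.items():
        if node_id in clusters:
            continue  # cluster heads do not join another cluster
        nearest = min(clusters, key=lambda ch: math.dist(pos, positions[ch]))
        clusters[nearest].append(node_id)
    return clusters
```

The function expects at least one CH; with none, min() raises ValueError, a case a caller might handle by letting nodes transmit directly to the sink.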

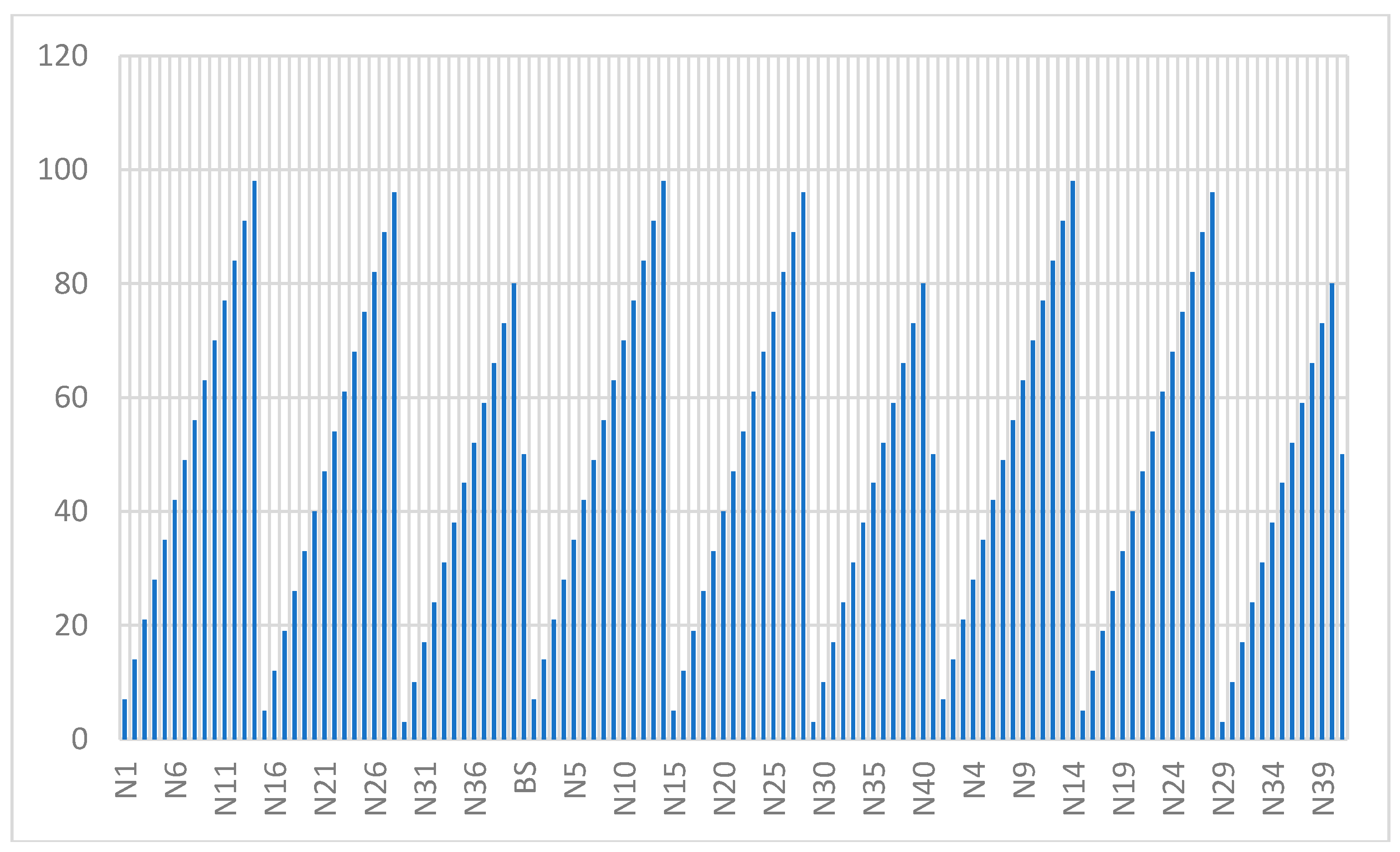

Figure 4 illustrates the residual energy distribution among sensor nodes during the 10th round of the energy consumption balancing simulation. A color gradient is employed for visualization, where nodes highlighted in green correspond to higher residual energy, while those shaded in red represent nodes with relatively low remaining energy. Cluster heads (CHs) are denoted by larger red crosses, distinguishing them from ordinary sensor nodes. The dashed lines indicate the established communication links between sensor nodes and their designated CHs.

Figure 4 demonstrates the adaptive nature of the applied clustering algorithm. By periodically rotating the CH role and reconfiguring routing paths, the protocol prevents excessive energy depletion of specific nodes. Such adaptive reorganization ensures that no single node is overburdened, thereby maintaining a balanced distribution of energy consumption across the network. Consequently, the network lifetime is extended, while the efficiency of data aggregation improves and redundant transmissions are reduced. Furthermore, this energy-aware mechanism supports reliable connectivity and equitable load sharing, which are critical factors in sustaining large-scale wireless sensor network (WSN) deployments.
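Figure 4 conveys energy balance visually through a color gradient. One way to summarize it as a single number per round is Jain's fairness index over residual energies, J = (sum of e_i)^2 / (n * sum of e_i^2), which equals 1 when all nodes hold the same energy and falls to 1/n when one node holds all of it. This metric is not used in the source; it is offered only as an illustration.

```python
def jain_index(values):
    """Jain's fairness index of non-negative values (e.g. residual energies).

    Returns 1.0 when all values are equal and 1/n when a single node holds everything.
    An all-zero input is treated as perfectly balanced (1.0).
    """
    n = len(values)
    total = sum(values)
    squares = sum(v * v for v in values)
    return (total * total) / (n * squares) if squares > 0 else 1.0
```

Tracking this index round by round would show whether CH rotation keeps residual energies close together (values near 1) or lets a few nodes drain early.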

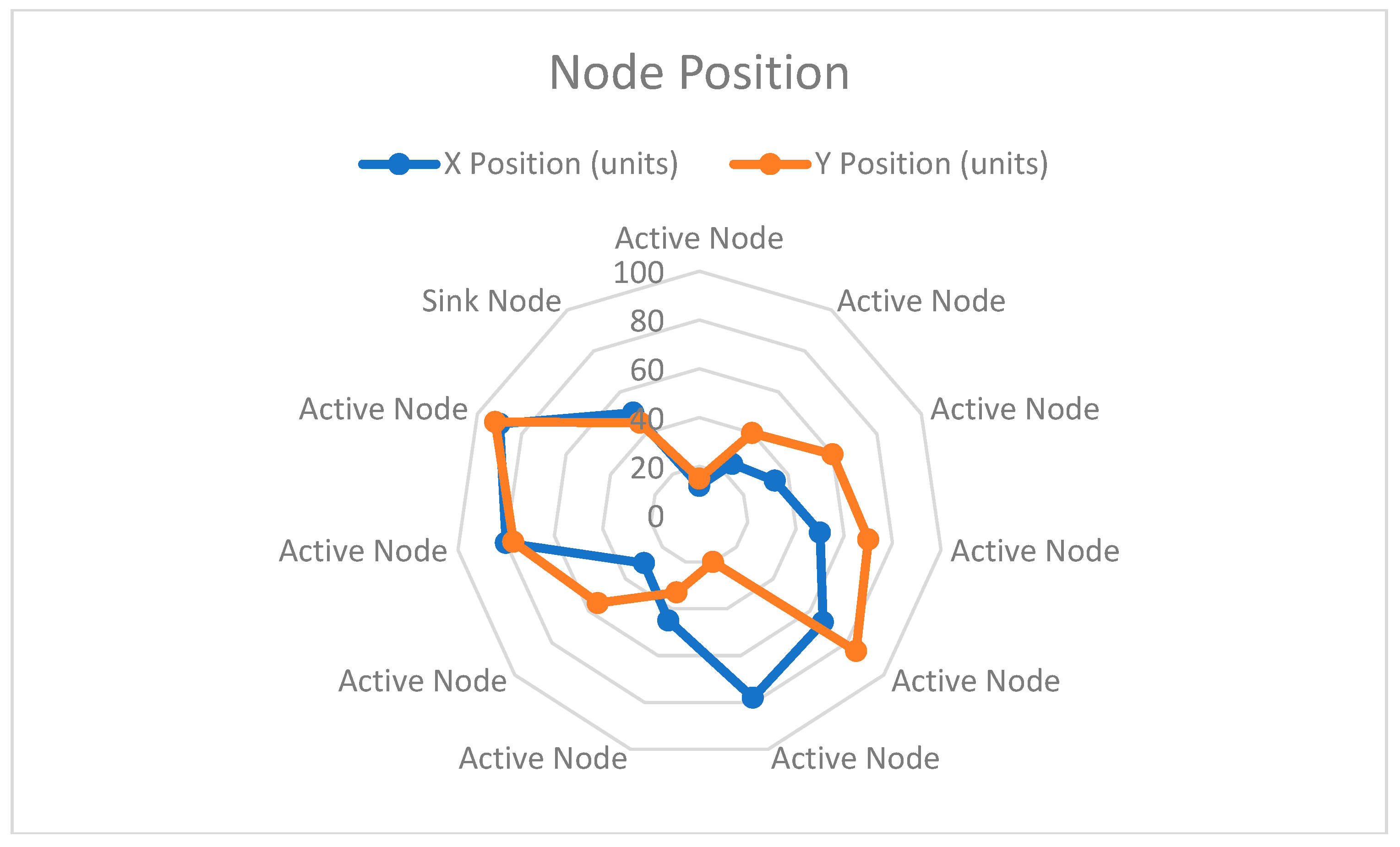

Figure 5 presents the network coverage obtained in the fifth simulation round over a field of 100 × 100 units. The active sensor nodes are represented by green crosses, while the sink node is indicated by a red diamond. Each sensor node has a predefined sensing radius.

From the figure, it can be observed that the sensor nodes are randomly scattered, providing significant coverage of the deployment area. Several regions exhibit overlapping coverage zones, which increases fault tolerance by enabling multiple nodes to monitor the same area. This redundancy improves the reliability of data acquisition even in the presence of node failures. The placement of the sink node near the center facilitates direct communication with nearby nodes, while distant nodes rely on multi-hop transmission.

Overall, the results indicate that the network maintains effective spatial coverage with limited uncovered regions. Such coverage characteristics are crucial in wireless sensor networks, as they directly influence network lifetime, connectivity, and quality of service.
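The coverage and overlap discussed for Figure 5 can be estimated numerically by sampling the 100 × 100 field on a grid and counting how many sensing disks contain each sample point. The sketch assumes a binary disk sensing model; the sensing radius is left as a parameter because the section does not state its value, and the function name is hypothetical.

```python
import math


def coverage(nodes, radius, field=100.0, step=1.0):
    """Estimate the fractions of a square field covered by at least 1 and at least 2 sensors.

    nodes: list of (x, y) sensor positions
    radius: sensing radius under a binary disk model (assumed)
    field: side length of the square field; step: grid cell size
    """
    n_steps = int(field / step)
    samples = covered_once = covered_twice = 0
    for i in range(n_steps):
        for j in range(n_steps):
            point = ((i + 0.5) * step, (j + 0.5) * step)  # cell centre
            k = sum(1 for s in nodes if math.dist(point, s) <= radius)
            samples += 1
            if k >= 1:
                covered_once += 1
            if k >= 2:
                covered_twice += 1  # overlapping coverage, i.e. redundancy
    return covered_once / samples, covered_twice / samples
```

The first value corresponds to the spatial coverage in Figure 5, and the second to the overlapping zones that provide fault tolerance when a node fails.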

Figure 6 illustrates cluster stability, showing the cluster structure formed in the fifth simulation round. The network is organized into multiple clusters, each governed by a cluster head, while the remaining nodes act as members associated with their respective heads. The figure highlights the placement of cluster heads and the grouping of nodes produced by the clustering algorithm.

The formation of clusters demonstrates that sensor nodes consistently associate with their respective cluster heads, thereby minimizing the need for frequent reconfiguration. Such stability reduces the overhead associated with cluster re-formation and supports efficient energy utilization. The distribution of cluster heads across the sensing area further enables load balancing by avoiding excessive communication demand in localized regions.

By ensuring relatively stable cluster membership, the algorithm effectively prolongs the operational lifetime of the network. Nodes communicate with nearby cluster heads at lower transmission costs, while the balanced placement of heads across the field prevents premature depletion of energy in critical zones. These characteristics indicate that the clustering scheme contributes to energy efficiency, scalability, and enhanced reliability of the wireless sensor network.
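Cluster stability can be quantified by comparing memberships in consecutive rounds. Because CH roles rotate, comparing CH identities would count a cluster whose head changed as unstable even if its members did not; the hypothetical sketch below instead uses the Rand index, the fraction of node pairs whose together-or-apart status is unchanged. This metric is an illustration, not the one used to produce Figure 6.

```python
from itertools import combinations


def pairwise_stability(prev, curr):
    """Rand index between two consecutive cluster assignments.

    prev, curr: dict mapping node_id -> cluster label for two rounds.
    Labels need not match across rounds, so rotating the CH role inside an
    otherwise unchanged cluster does not count as instability.
    """
    nodes = sorted(prev.keys() & curr.keys())  # compare only nodes present in both rounds
    pairs = list(combinations(nodes, 2))
    if not pairs:
        return 1.0  # trivially stable
    agree = sum((prev[a] == prev[b]) == (curr[a] == curr[b]) for a, b in pairs)
    return agree / len(pairs)
```

A value of 1.0 means every cluster kept exactly the same members between rounds; lower values indicate more re-formation overhead.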

A simulation was conducted to evaluate packet delivery performance in a wireless sensor network (WSN) with randomized node deployment. A total of 36 sensor nodes were distributed within a 100 × 100-unit area, each attempting to transmit a data packet to a centrally located sink node positioned at (55, 50). The results recorded 15 successful deliveries and 21 failed transmissions, corresponding to a packet delivery ratio of 15/36 ≈ 41.7%, as shown in Figure 7. The delivery outcomes were randomly assigned to reflect variable communication conditions such as signal attenuation, interference, or node energy levels. Analysis of the spatial distribution revealed that nodes located closer to the sink generally experienced higher success rates, whereas those positioned near the boundaries of the simulation field were more prone to delivery failures. This observation aligns with known limitations of WSNs, where longer transmission distances and limited relay options can reduce network reliability. The study underscores the importance of considering spatial placement and routing strategies in WSN design to improve data delivery and reduce transmission loss. These findings provide a foundation for future work on dynamic routing protocols and energy-aware communication models in sensor networks.
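The delivery counts reported for Figure 7 translate directly into a packet delivery ratio: 15 delivered out of 36 attempted gives 15/36 ≈ 0.417. The sketch below computes the PDR and splits outcomes into near and far groups around the sink at (55, 50), the comparison described qualitatively above; the distance threshold and the function names are assumptions.

```python
import math

SINK = (55.0, 50.0)  # sink position reported for the Figure 7 experiment


def packet_delivery_ratio(delivered, attempted):
    """PDR = delivered packets / attempted packets (0.0 if nothing was sent)."""
    return delivered / attempted if attempted else 0.0


def success_rate_by_distance(records, threshold):
    """Delivery success rates for nodes near to and far from the sink.

    records: list of ((x, y), delivered) tuples, where delivered is a bool
    threshold: distance cut-off separating "near" from "far" (assumed value)
    Returns (near_rate, far_rate); an empty group gets a rate of 0.0.
    """
    near = [ok for pos, ok in records if math.dist(pos, SINK) <= threshold]
    far = [ok for pos, ok in records if math.dist(pos, SINK) > threshold]

    def rate(group):
        return sum(group) / len(group) if group else 0.0

    return rate(near), rate(far)
```

Applied to the per-node outcomes behind Figure 7, a near rate clearly above the far rate would quantify the distance effect described in the text.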

Figure 8 compares the load distribution of four routing protocols (LEACH, PEGASIS, HEED, and the AI-driven reinforcement learning approach) over multiple simulation rounds. The horizontal bar chart indicates the number of packets handled by each protocol at different intervals.

In the initial rounds, all protocols exhibited comparable load distribution, with slight variations in packet handling. LEACH and PEGASIS showed moderate packet handling, whereas HEED maintained a relatively balanced load allocation. However, the AI-driven reinforcement learning protocol handled more packets, particularly in later rounds, demonstrating its adaptability and robustness.

At mid-level rounds (30–60), PEGASIS and HEED displayed improved performance compared to LEACH, suggesting better energy utilization and network balance. Despite these improvements, the AI-driven reinforcement learning scheme outperformed the traditional protocols by sustaining higher packet throughput.

In the final rounds (80–100), the advantage of the AI-driven reinforcement learning approach became more evident. While the traditional protocols exhibited performance fluctuations, the AI-based protocol maintained a steady and higher packet load, indicating better scalability and sustained network efficiency.

Overall, the results confirm that incorporating reinforcement learning mechanisms enhances load balancing, reduces network congestion, and ensures effective packet delivery when compared with conventional clustering and chain-based protocols.

The results in Table 1 demonstrate that the AI-driven method outperforms the baseline approaches across most metrics. In terms of network lifetime, the AI-driven protocol sustains operation for 90 rounds, compared to 58–80 rounds for the other schemes, indicating better energy management and node participation. Similarly, throughput is substantially improved, reaching 185 packets, while LEACH, PEGASIS, and HEED achieve values in the range of 95–115 packets.

The latency performance also shows that the AI-driven protocol delivers lower delay (150 ms) relative to PEGASIS (195 ms) and the other two methods (165 ms each), ensuring faster communication. Furthermore, residual energy reaches 300 Joules in the AI-driven scheme, substantially higher than the 210–235 Joules observed in conventional protocols, highlighting the effectiveness of energy utilization.

In terms of energy efficiency, the AI-driven approach attains 95%, exceeding the 73–84% range of the other methods. The stability period is also prolonged to 75 rounds, indicating resilient and balanced cluster formation. Finally, packet loss is reduced to 35%, lower than the 40–52% observed for the existing schemes.
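The gains reported for Table 1 can be expressed as relative improvements over the strongest baseline in each reported range (80 rounds, 115 packets, 165 ms, and 235 J). The short script below uses only the values stated in the text; the dictionary layout and function name are illustrative.

```python
# Values as stated in the text for Table 1: AI-driven protocol vs. the best
# baseline within each reported range.
METRICS = {
    # name: (ai_value, best_baseline_value, higher_is_better)
    "network lifetime (rounds)": (90, 80, True),
    "throughput (packets)": (185, 115, True),
    "latency (ms)": (150, 165, False),
    "residual energy (J)": (300, 235, True),
}


def relative_gain(ai, baseline, higher_is_better):
    """Percentage improvement of `ai` over `baseline` (positive means the AI protocol is better)."""
    diff = ai - baseline if higher_is_better else baseline - ai
    return 100.0 * diff / baseline


for name, (ai, base, hib) in METRICS.items():
    print(f"{name}: {relative_gain(ai, base, hib):+.1f}%")
```

This prints gains of +12.5% (lifetime), +60.9% (throughput), +9.1% (latency), and +27.7% (residual energy). Energy efficiency and packet loss are already percentages, so their differences are clearer in percentage points: 11 points for efficiency (95% vs. 84%) and 5 points for packet loss (35% vs. 40%).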

Overall, the comparative analysis indicates that the AI-driven protocol not only enhances energy utilization and packet delivery but also ensures higher stability and reduced communication overhead. These improvements collectively contribute to extending the network’s operational lifetime and reliability, making the AI-driven scheme more suitable for large-scale WSN deployments.

5. Conclusions

The proposed AI-driven energy-efficient data aggregation and routing protocol has demonstrated significant improvements in the performance of Wireless Sensor Networks (WSNs). By combining reinforcement learning (RL)-based cluster head selection with deep Q-network (DQN)-based routing, the protocol addresses the fundamental challenge of energy efficiency while maintaining network stability and scalability. Comparative analysis with classical approaches, such as LEACH, PEGASIS, and HEED, reveals that the AI-based method achieves longer network lifetime, higher throughput, reduced latency, and improved energy efficiency. Furthermore, packet loss is reduced, improving the reliability of data delivery across the network.

The simulation outcomes emphasize that the incorporation of adaptive cluster head selection and intelligent routing strategies allows for balanced energy consumption, thereby reducing reconfiguration overheads and prolonging the overall network operation. These advantages make the protocol highly suitable for large-scale applications, including environmental monitoring, smart city deployments, and Internet of Things (IoT) ecosystems.

In addition, this work highlights the potential of artificial intelligence, particularly RL and DQNs, to address both energy and scalability issues that are intrinsic to WSNs. The results establish a pathway for more robust, resilient, and sustainable sensor networks. Future research directions include the adoption of multi-agent reinforcement learning (MARL) techniques to enable collaborative learning among nodes, the consideration of node or sink mobility to adapt to dynamic real-world environments, and the incorporation of security mechanisms to safeguard against malicious activities such as jamming or data manipulation. Real-world large-scale deployment will further validate the scalability and practical viability of the proposed protocol.

In conclusion, the findings of this study underscore the role of AI-driven methods as a transformative approach in advancing WSN protocols, setting the foundation for next-generation IoT and smart sensing applications.