Framework for a Modular Emergency Departments Registry: A Case Study of the Tasmanian Emergency Care Outcomes Registry (TECOR)

Abstract

1. Introduction

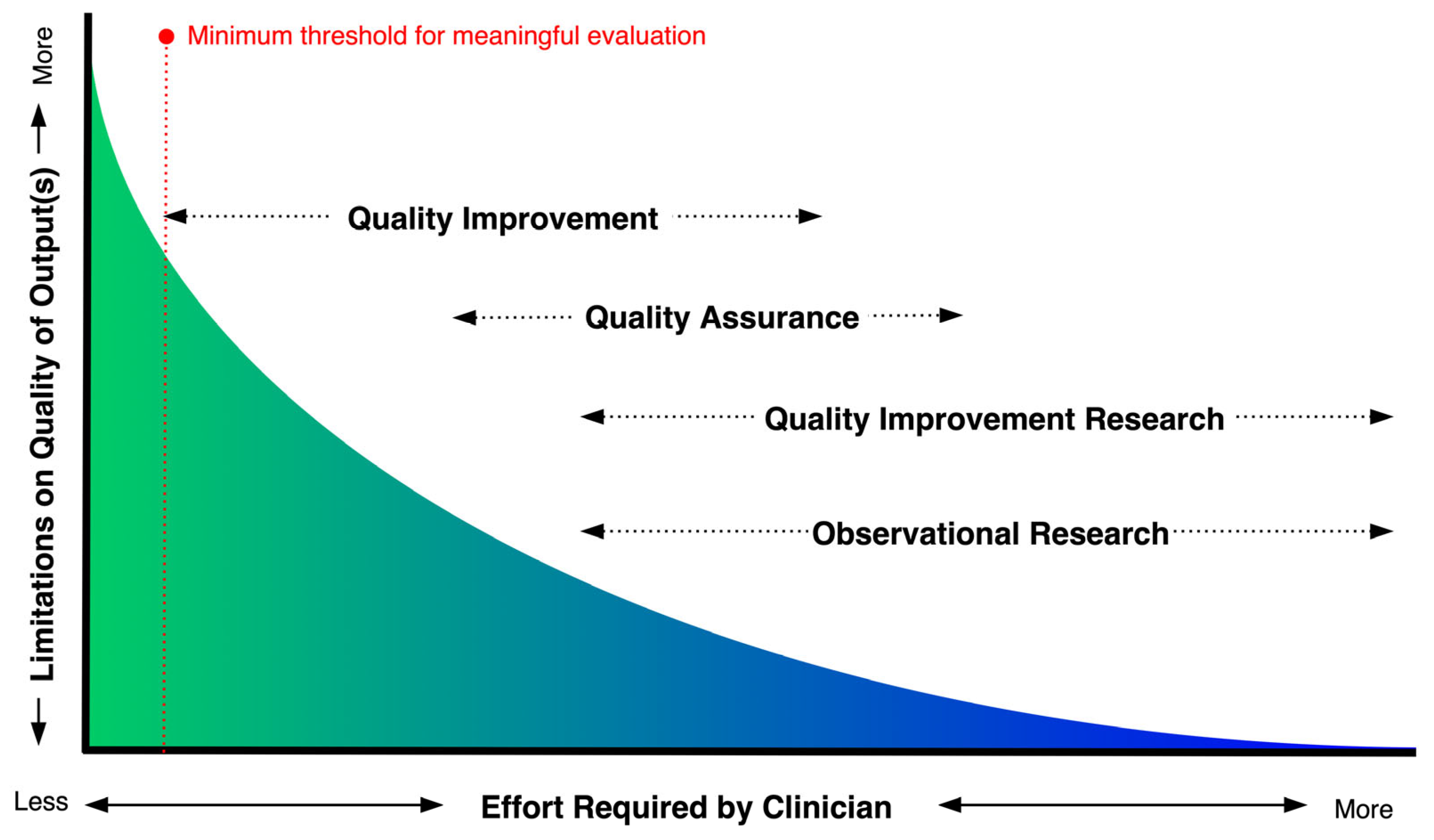

1.1. Methods for Evaluating Clinical Quality

1.2. ED CQRs

1.3. Objectives

2. Materials and Methods

2.1. Audit of Quality Assurance, Quality Improvement and Observational Research in Tasmanian EDs

2.2. Methods for Data Mapping

3. Results

3.1. Scoping Audit of Quality Initiatives

3.2. Data Mapping

4. Discussion

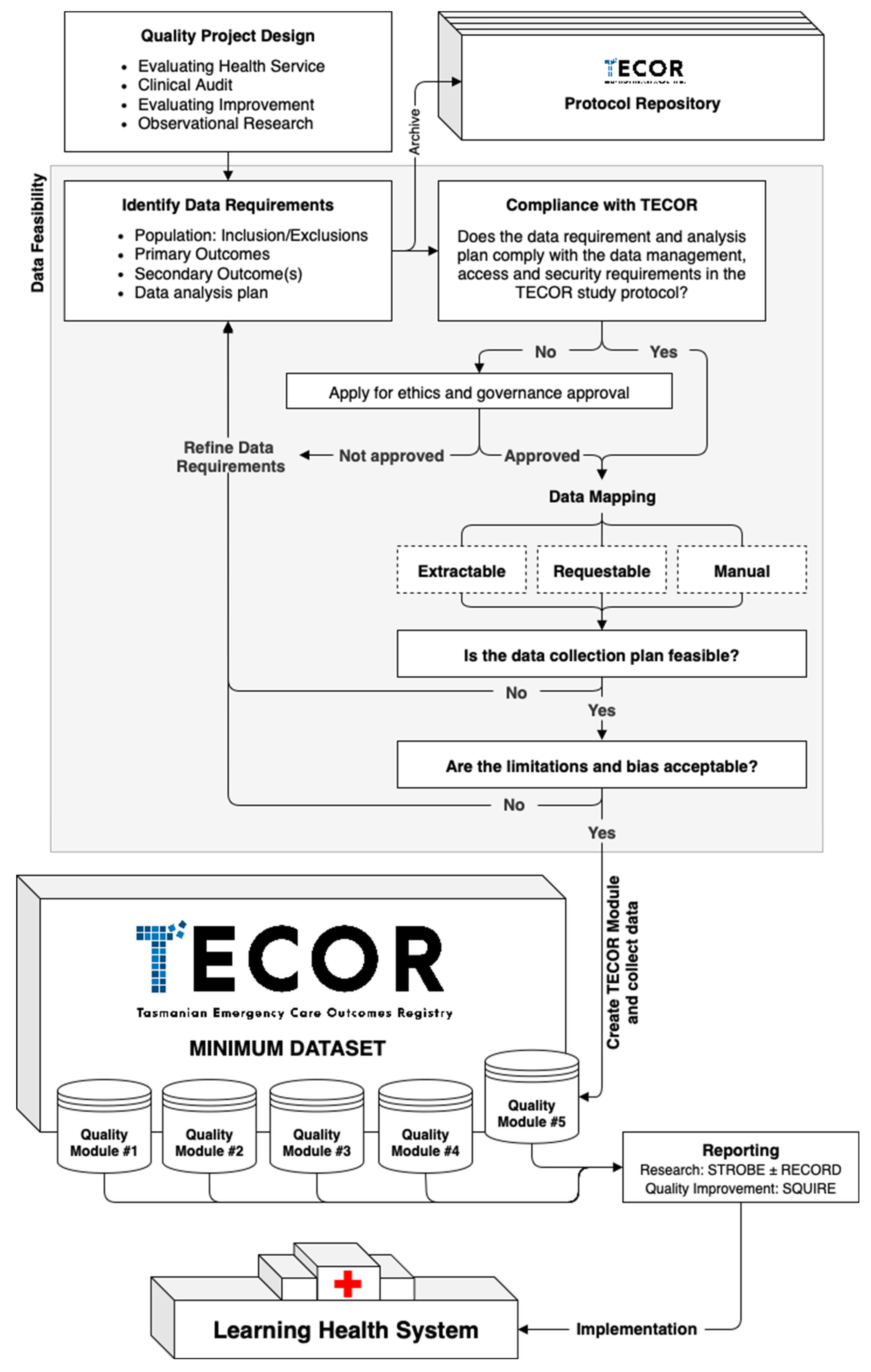

4.1. Project Setup and the TECOR Protocol Repository

4.2. Data Management and Security

4.3. Data Feasibility

4.4. Quality Module Setup

4.5. Reporting

4.6. Integrating TECOR in a Learning Health System

4.7. Future Directions

4.8. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ED | Emergency Department |
| QI | Quality Improvement |
| QA | Quality Assurance |
| CQR | Clinical Quality Registry |
| OR | Observational Research |
| TECOR | The Tasmanian Emergency Care Outcomes Registry |
| MM | Modified Monash |
| REDCap | Research Electronic Data Capture |
| STROBE | Strengthening the Reporting of Observational Studies in Epidemiology |
| RECORD | Reporting of studies Conducted using Observational Routinely collected Data |
| SQUIRE | Standards for Quality Improvement Reporting Excellence |


| Characteristic | Quality Improvement | Quality Assurance | Observational Research | Clinical Quality Registry |
|---|---|---|---|---|
| Goal | Improvement | Compliance | New knowledge | All |
| Instigator | Self-determinant | Regulated standards | Self-determinant | Co-designed |
| Action Approach | Proactive | Reactive | Proactive | Proactive |
| Timeline | Defined | Continuous | Defined | Continuous |
| Sample Size | Just enough | Just enough | Just in case | As much as possible |
| Bias | Accept | Minimize | Measure/Adjust | Measure/Adjust |
| Ethical Authority | Organization | Regulator | Ethics Committee | Ethics Committee |
| Governance | Organization | Regulator | Researcher(s) | Registry owner(s) |
| Effort | Low-Medium | Medium | Medium-High | High |

| Method | Project |
|---|---|
| QI | Adherence to the Chest Pain Pathway at the Royal Hobart Hospital ED |
| QI | Alcohol and Drug Presentations in Youth and Adults |
| CQR | Australia and New Zealand Emergency Department Airway Registry |
| CQR | Australia and New Zealand Hip Fracture Registry |
| CQR | Australia and New Zealand Trauma Registry |
| CQR | Australian Stroke Clinical Registry |
| OR | Does prescribing of immediate release oxycodone by emergency medicine physicians result in persistence of Schedule 8 opioids following discharge? |
| OR | Emergency department presentations with a mental health diagnosis in Australia, by jurisdiction and by sex, 2004–05 to 2016–17 |
| CQR | Emergency Drugs Network of Australia |
| OR | Epidemiology and clinical features of emergency department patients with suspected and confirmed COVID-19: A multisite report from the COVED Quality Improvement Project for July 2020 (COVED 3) |
| OR | Epidemiology and clinical features of emergency department patients with suspected COVID-19: Insights from Australia’s ‘second wave’ (COVED-4) |
| OR | Evaluating the use of computed tomography pulmonary angiography in Tasmanian emergency departments |
| QI | Geriatric trauma management |
| OR | Is there a ‘weekend effect’ in subacute and minor injuries at a mixed tertiary ED? |
| QI ¹ | Medical Emergency Team Call Database |
| OR | Medical management of blood pressure and heart rate in acute type B aortic dissections: A single quaternary Centre perspective |
| OR | Pain Management and Sedation in Paediatric Ileocolic Intussusception: A Global, Multicenter, Retrospective Study |
| QI | Pain relief for major burns patients in ED: Experience at a tertiary burns referral centre and literature review |
| QI | Pelvic Pain management in the ED |
| QI ¹ | Procedural Sedation in Emergency Departments |
| OR | Ruptured abdominal aortic aneurysms: a study of prevalence, associated comorbidities, intervention techniques and mortality |
| QI | Sepsis—time to antibiotics and pathway audit |
| QI | Syncope clinical quality audit |
| QI | Trauma primary surveys |
| OR | Trend of emergency department presentations with a mental health diagnosis in Australia by diagnostic group, 2004–05 to 2016–17 |
| OR | Trend of emergency department presentations with a mental health diagnosis in Australia by jurisdiction and by sex, 2004–05 to 2016–17 |
| OR | Trends of emergency department presentations with a mental health diagnosis by age, Australia, 2004–05 to 2016–17: A secondary data analysis |
| QI | Validation of Alvarado Score in Male Paediatric population |
| QI | Whole body CT scan ordering in Trauma |

| Data Source | TECOR Datapoint(s) | Data Extraction |
|---|---|---|
| Health Executive Analytics Reporting Tool (HEART): Emergency Department Dashboard by QlikTech Australia Pty Ltd. | Age at presentation ¹; Sex ¹; Australian Postcode ¹; Remoteness classification ¹; Country of birth ¹; Indigenous status ¹; Hospital ¹; Mode of arrival ¹; Date, time of presentation ¹; Date, time of triage ¹; ED end date, time ¹; Service episode length (total min) ¹; Type of visit ¹; Triage category ¹; ED wait time ¹; Disposition ¹; Date seen by medical officer ¹; Time seen by medical officer ¹; ED stay—urgency related group major diagnostic block ¹; ED stay—principal diagnosis ¹; ED stay—diagnosis classification type ¹; ED stay—physical departure date ¹; ED stay—physical departure time ¹; Patient—compensable status ¹; ED stay—additional diagnosis, code ¹; Universal Record Number ¹; Emergency Attendance ID ¹; ED triage/presenting complaint ¹; Admission time, date ¹; Episode of care—source of funding, patient funding source ¹; Emergency Department Capacity | Self-extractable |
| Health Executive Analytics Reporting Tool (HEART): Admitted Patient Dashboard by QlikTech Australia Pty Ltd. | Episode of admitted patient care: Admission date, time ¹; Separation date, time, mode; Admission urgency status; Days of hospital-in-the-home care; Hospital Capacity; Episode of admitted patient care: Length of stay in intensive care unit, total hours; Episode of care: Principal diagnosis, code; Secondary diagnosis and beyond, code | Self-extractable |
| i.Patient Manager (IPM) by Dedalus (Australia) Pty Ltd. | Bed request time, date; Bed available time, date; Admission/Discharge/Transfer time | Manual entry |
| TrakCare by InterSystems Australia Pty Ltd. | Pathology testing requested time, date and type; Imaging testing requested time, date and type | On Request |
| Communications Code Call Database ² | Code Black, Red, Yellow, Purple, Brown, Blue, Care Call date, time, location; Code Stroke, STEMI, Trauma Call date, time, location | Manual entry |
| MedTasker by Nimblic Pty Ltd. | Referred by, position, specialty, time, date; Referred to, position, specialty, time, date; Consultation, position, specialty, time, date | Self-extractable |
| Digital Medical Records by InfoMedix Pty Ltd. | Clinical observations; Blood products given time and date; Procedures performed; Medication(s) prescribed; Clinical progress notes; Goals of Care; Radiology, Endoscopy result(s); Echocardiogram, Cardiac angiogram result(s) | Manual entry |
| IntelliSpace Picture Archiving and Communication System by Philips Electronics Australia Ltd. | Radiological imaging modality, date, time | On Request |
| Pathology Clinical Information System by Kestral Pty Ltd. | Pathology test(s) and blood product(s) result(s) | On Request |
| eReferrals by HealthCare Software Pty Ltd. | Outpatient referral(s) | On Request |
| Hospital Clinical Suite by HealthCare Software Pty Ltd. | Discharge Prescription(s) | On Request |
| Safety and Reporting Learning System (SLRS) by RLDatix Australia Pty Ltd. | Incident and safety event reporting | On Request |

| Code | Definition |
|---|---|
| Self-extractable | Staff member(s) employed by the ED are ABLE to extract data to import to TECOR |
| On Request | Staff member(s) employed by the ED are UNABLE to extract data but able to request data to import to TECOR |
| Manual entry | Datapoints must be entered manually from the source of truth |
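As a rough illustration of how the three extraction codes above could be used when planning a quality module, the following Python sketch groups a subset of the mapped source systems by extraction code. The enum, the dictionary layout, and the function name `group_by_extraction` are assumptions made for this example only; they are not part of TECOR or of any of the listed vendor systems.

```python
from collections import defaultdict
from enum import Enum


class Extraction(Enum):
    """Extraction codes, worded as in the definitions table above."""
    SELF_EXTRACTABLE = "Self-extractable"  # ED staff can extract the data themselves
    ON_REQUEST = "On Request"              # ED staff must request the data
    MANUAL_ENTRY = "Manual entry"          # entered by hand from the source of truth


# Illustrative subset of the data-mapping table. The dictionary layout is a
# hypothetical structure chosen for this sketch, not the registry's schema.
SOURCE_EXTRACTION = {
    "HEART: Emergency Department Dashboard": Extraction.SELF_EXTRACTABLE,
    "HEART: Admitted Patient Dashboard": Extraction.SELF_EXTRACTABLE,
    "i.Patient Manager (IPM)": Extraction.MANUAL_ENTRY,
    "TrakCare": Extraction.ON_REQUEST,
    "MedTasker": Extraction.SELF_EXTRACTABLE,
    "Digital Medical Records": Extraction.MANUAL_ENTRY,
}


def group_by_extraction(mapping):
    """Return {Extraction: [source systems]}, keeping the input order."""
    grouped = defaultdict(list)
    for source, mode in mapping.items():
        grouped[mode].append(source)
    return dict(grouped)


if __name__ == "__main__":
    for mode, sources in group_by_extraction(SOURCE_EXTRACTION).items():
        print(f"{mode.value}: {', '.join(sources)}")
```

Grouping sources this way gives a quick view of which datapoints a module could draw on without manual entry or a data request.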

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tran, V.; Thurlow, L.; Page, S.; Barrington, G. Framework for a Modular Emergency Departments Registry: A Case Study of the Tasmanian Emergency Care Outcomes Registry (TECOR). Hospitals 2025, 2, 18. https://doi.org/10.3390/hospitals2030018
