Personalizing Multimedia Content Recommendations for Intelligent Vehicles Through Text–Image Embedding Approaches

Abstract

1. Introduction

2. Related Works

2.1. Multimedia Recommendation Using Text and Image Embedding

2.2. Text Embedding

2.3. Image Feature Extraction

3. Text–Image Embedding Using Pre-Trained Models

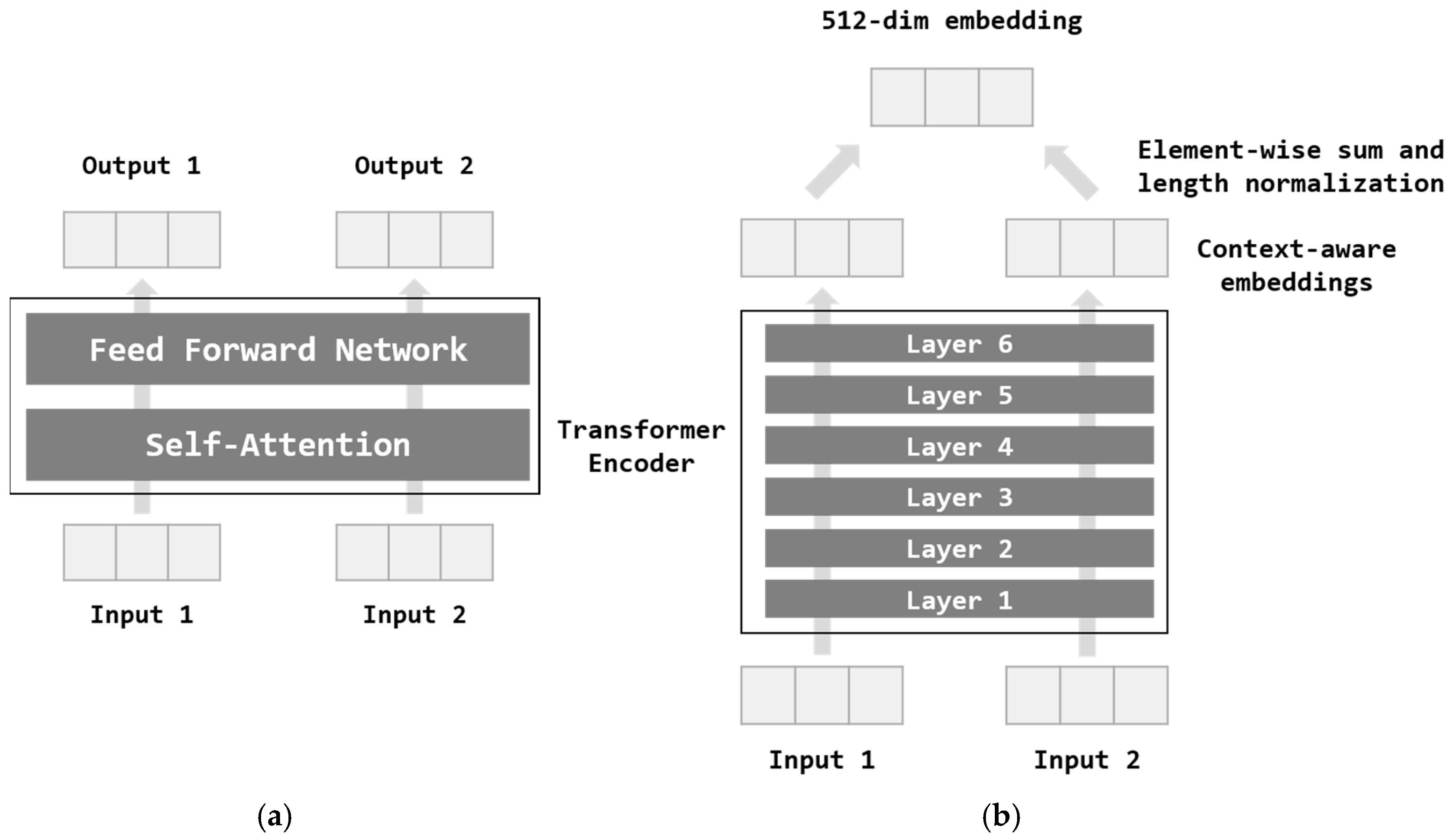

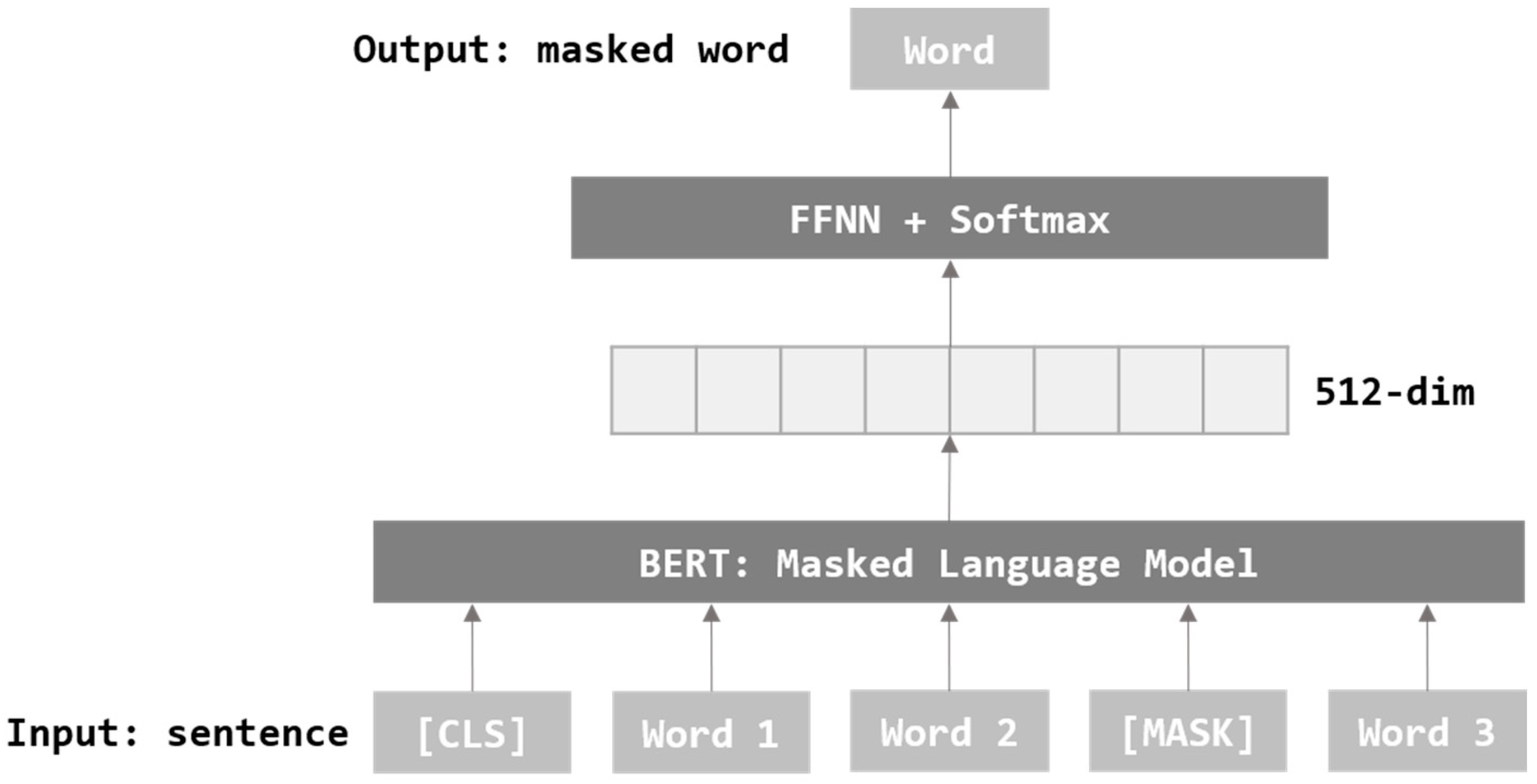

3.1. Text Embedding

3.2. Image Feature Extraction

3.3. Text–Image Embedding

4. Experiments

4.1. Text–Image Embedding

4.2. Performance Comparison of Text Embedding

4.3. Performance Comparison of Text–Image Embedding

4.4. Multimedia Recommendation Using Text–Image Embedding

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Score (rows) / Pre-Trained Text Model (columns) | Sent2Vec | Universal Sentence Encoder | BERT | RoBERTa |
|---|---|---|---|---|
| Cosine Similarity | 0.8729 | 0.7747 | 0.8934 | 0.886 |
| Euclidean Distance | 0.8544 | 0.4458 | 0.8533 | 0.8433 |
| Arccos Distance | 0.8174 | 0.429 | 0.8252 | 0.8249 |
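
The table above scores each text model with three measures. This section does not state how the Euclidean and arccos distances are converted into scores where higher means more similar, so the sketch below assumes two common conventions: Euclidean distance d mapped to 1 / (1 + d), and angular similarity 1 − arccos(cos θ) / π. The function names are hypothetical and the code is an illustration, not the authors' implementation.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def euclidean_score(a: Sequence[float], b: Sequence[float]) -> float:
    """Assumed convention: map Euclidean distance d to 1 / (1 + d)."""
    d = math.dist(a, b)  # raises ValueError if dimensions differ
    return 1.0 / (1.0 + d)


def arccos_score(a: Sequence[float], b: Sequence[float]) -> float:
    """Assumed convention: angular similarity 1 - arccos(cos) / pi, in [0, 1]."""
    # Clamp to [-1, 1] so floating-point rounding cannot push acos out of its domain.
    cos = max(-1.0, min(1.0, cosine_similarity(a, b)))
    return 1.0 - math.acos(cos) / math.pi
```

Unlike cosine similarity, the Euclidean score depends on vector length, so encoders whose outputs are not length-normalized can rank items differently under the two measures.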

| Pre-Trained Image Model (rows) / Pre-Trained Text Model (columns) | Sent2Vec | Universal Sentence Encoder | BERT | RoBERTa |
|---|---|---|---|---|
| EfficientNet | 0.6793 | 0.61 | 0.9152 | 0.7992 |
| Xception | 0.7986 | 0.5319 | 0.8654 | 0.5153 |
| NASNetLarge | 0.6587 | 0.5268 | 0.6744 | 0.5890 |
| DenseNet | 0.4203 | 0.7063 | 0.7788 | 0.7493 |

| Pre-Trained Image Model (rows) / Pre-Trained Text Model (columns) | Sent2Vec | Universal Sentence Encoder | BERT | RoBERTa |
|---|---|---|---|---|
| EfficientNet | 0.6748 | 0.5665 | 0.8867 | 0.7589 |
| Xception | 0.6564 | 0.4078 | 0.8369 | 0.4901 |
| NASNetLarge | 0.5177 | 0.4 | 0.6544 | 0.5123 |
| DenseNet | 0.4157 | 0.6567 | 0.7506 | 0.6541 |

| Pre-Trained Image Model (rows) / Pre-Trained Text Model (columns) | Sent2Vec | Universal Sentence Encoder | BERT | RoBERTa |
|---|---|---|---|---|
| EfficientNet | 0.6001 | 0.5811 | 0.8587 | 0.7356 |
| Xception | 0.6332 | 0.634 | 0.8201 | 0.4578 |
| NASNetLarge | 0.4612 | 0.6384 | 0.6214 | 0.4657 |
| DenseNet | 0.3849 | 0.5707 | 0.7016 | 0.6310 |
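
The tables above pair each pre-trained text model with each pre-trained image model. How the two vectors are combined is not described in this section, so the sketch below assumes one simple option purely for illustration: L2-normalize each modality and concatenate them, with a hypothetical weight `alpha` on the text part. Scaling by sqrt(alpha) and sqrt(1 − alpha) keeps the fused vector at unit length, so the cosine similarity of two fused vectors equals alpha times the text cosine plus (1 − alpha) times the image cosine.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


def fuse(text_vec: Sequence[float], image_vec: Sequence[float], alpha: float = 0.5) -> List[float]:
    """Hypothetical fusion: weighted concatenation of unit-length text and image vectors.

    The result has unit length, so the dot product of two fused vectors is
    alpha * cos(text_a, text_b) + (1 - alpha) * cos(image_a, image_b).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    w_text, w_image = math.sqrt(alpha), math.sqrt(1.0 - alpha)
    text_part = [w_text * x for x in l2_normalize(text_vec)]
    image_part = [w_image * x for x in l2_normalize(image_vec)]
    return text_part + image_part
```

Concatenation does not require the text and image encoders to produce vectors of the same length, which matters when mixing models with different output sizes.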

User’s Query: An Animated Popular Movie with a Prince and Princess. The Characters Have an Adventure in the Fantasy World.

| No. | Cosine Similarity | MovieID | Movie Title |
|---|---|---|---|
| 1 | 0.9221 | 1022 | Cinderella (1950) |
| 2 | 0.9208 | 595 | Beauty and the Beast (1991) |
| 3 | 0.911 | 313 | The Swan Princess (1994) |
| 4 | 0.8734 | 1032 | Alice in Wonderland (1951) |
| 5 | 0.8476 | 594 | Snow White and the Seven Dwarfs (1937) |
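
The table above ranks MovieLens titles by cosine similarity between the user’s query embedding and each movie’s embedding. A minimal sketch of that top-k ranking step follows, assuming the embeddings have already been computed by the chosen encoders; the `recommend` function and its dictionary input are hypothetical and not the authors’ code.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so a dot product becomes a cosine."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


def recommend(
    query_vec: Sequence[float],
    item_vecs: Dict[int, Sequence[float]],
    k: int = 5,
) -> List[Tuple[int, float]]:
    """Return up to k (item_id, cosine similarity) pairs, highest similarity first."""
    q = _normalize(query_vec)
    scored = []
    for item_id, vec in item_vecs.items():
        if len(vec) != len(q):
            raise ValueError(f"item {item_id} has the wrong dimension")
        v = _normalize(vec)
        # For unit vectors, the dot product equals the cosine similarity.
        scored.append((item_id, sum(a * b for a, b in zip(q, v))))
    # heapq.nlargest keeps only the top k instead of sorting every item.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

Normalizing item vectors once and storing them would avoid repeating that work for every query; it is done inline here only to keep the sketch short.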


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, J.-A.; Hong, T.; Lim, K. Personalizing Multimedia Content Recommendations for Intelligent Vehicles Through Text–Image Embedding Approaches. Analytics 2025, 4, 4. https://doi.org/10.3390/analytics4010004
