2.1. Importance of Websites Loading Time

Users commonly enter a website and, because it loads slowly, abandon it and return to the search engine results pages to find other websites with faster loading content. Slow loading times frustrate users, making them abandon webpages even if the content meets their informational needs [18]. In a general context, prior investigations showed that an increase in the loading time from one to three seconds could negatively impact users’ exploration, increasing the bounce rate by up to 32% [11]. Furthermore, the BBC lost 10% of users for every additional second the website took to load its content [12]. Another recent study in Operations Research indicated that website visitors are most sensitive to slowdowns on checkout webpages and least sensitive on homepages [14]. More specifically, the researchers indicated that a 10% increase in the load time is associated with a 1.7 percentage point increase in abandonment.

Speed matters for the users’ experience on websites. When websites load quickly, visitors become more engaged, and their interaction with the content increases in terms of the depth of exploration and visit duration [15]. Websites with high speed performance retain users, who explore products and services further. For example, Pinterest increased the speed performance of specific landing pages for mobile devices, boosting organic search traffic, webpages per visit, and sign-ups by up to 15% [16]. Another practical example comes from COOK, a growing company in the food industry. The research found that reducing the website loading time by 850 milliseconds increased the conversion rate by up to 7% and pages per session by up to 10%, and reduced the bounce rate by up to 7% [19]. Regarding paid search traffic performance optimization, Santander increased the speed performance of its landing pages by 23%, increased the click-through rate by 11%, and decreased the cost-per-click by 22%, while the cost-per-conversion fell by 15% [20].

Speed also matters for online revenues. For Mobify, with every 100-millisecond decrease in the loading time of the homepage and checkout webpage, session-based conversions increased from 1.11% to 1.53%. This decrease was associated with annual revenue of USD 380,000 up to USD 530,000 [21]. AutoAnything is another practical example: a faster loading time resulted in a 12–13% increase in online sales [22]. In addition, Furniture Village found that after decreasing the loading time of its website by 20%, there was a 10% increase in the conversion rate for mobile devices, a 9% decrease in the bounce rate, and a 12% growth in online revenues from mobile devices [23].

2.2. Prior Efforts to Assess and Improve Speed Performance

One of the first attempts to highlight speed performance and how it impacts users’ behavior within websites comes from Nah [24]. According to the findings of this study, the presence of feedback increases Web users’ tolerable waiting time. At the same time, the author indicated that the tolerable waiting time for information retrieval is approximately 2 s. In the same year, Galletta et al. [25] indicated that users’ information-seeking behavior within websites began to flatten after waiting over 4 s. Another critical finding comes from Munyaradzi and colleagues [26]. The authors suggested a rule of thumb regarding a website’s size and its loading time, which should not exceed 8 s. A recent study investigating webpages’ speed performance comes from Indonesia. The authors harvested data through the GTmetrix tool to produce initial speed performance estimations for 61 journal websites and their adopted content management systems (CMSs) [27]. Their results indicated that although the Open Journal System achieves a better speed performance than other CMSs, most of the examined cases showed a moderate or poor speed performance.

Kaur et al. [28] conducted another study that measured websites’ size and loading time using several web tools. The authors investigated up to twelve university websites from India. They used Pingdom to estimate the page size in bytes, Website Grader to estimate the overall performance, and GTmetrix to count server requests. They also used Google’s PageSpeed Insights (PSI) tool, indicating that the examined webpages had load times ranging from 0.14 to 10.81 s.

Several efforts have already adopted PageSpeed Insights to estimate websites’ performance. For instance, Verma and Jaiswal [29] used PSI to examine the websites of the top thirty medical universities in India. They produced an initial holistic performance estimation for the 30 examined webpages using the two primary metrics of PSI: the PageSpeed Insights scores for mobile and desktop. In almost the same manner, one year later, Patel and Vyas [30] examined the performance of the websites of the open universities in India. The researchers used several web tools to retrieve technical performance data about the examined websites. Among them, PSI was used to estimate the speed performance for mobile and desktop. In addition, a detailed comparison of performance across the examined websites was made. Nevertheless, it was out of the researchers’ scope to include suggestions for improving the speed performance.
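To illustrate what retrieving the two primary PSI metrics involves, the following is a minimal Python sketch that queries the public PageSpeed Insights v5 endpoint once per device strategy and reads the Lighthouse performance score from the reply. It is not the procedure of any study cited above. The helper names (`build_psi_url`, `extract_performance_score`, `fetch_scores`) are hypothetical, and the sketch assumes an unauthenticated request is acceptable (an API key is typically needed for larger volumes).

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public PSI v5 endpoint.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy):
    """Build a PSI request URL for one page and one device strategy."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    query = urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def extract_performance_score(response):
    """Return the performance score on a 0-100 scale from a parsed reply.

    The API reports the score as a fraction between 0 and 1.
    """
    score = response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_scores(page_url):
    """Query PSI for both strategies (hypothetical helper, needs network)."""
    scores = {}
    for strategy in ("mobile", "desktop"):
        with urlopen(build_psi_url(page_url, strategy)) as reply:
            scores[strategy] = extract_performance_score(json.load(reply))
    return scores
```

Calling `fetch_scores` with a webpage address would return a dictionary with one score per device type. Keeping URL construction and score extraction as separate functions makes it possible to check the parsing logic without network access.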

Another research contribution using PSI comes from Amjad et al. [31]. The authors measured the performance of ten e-commerce websites in Bangladesh using multiple parameters, such as the fully loaded time of requests, first CPU idle, speed index, start render, fully loaded time of the content, document complete time, last painted hero, first contentful paint, and the first byte. PSI was used to retrieve data for some of these parameters: the speed index, first CPU idle, and first contentful paint. Like Patel and Vyas [30], Amjad and colleagues [31] compared the results among the examined e-commerce websites. However, for all the authors above, it was out of their research scope to suggest practical implications for improving the speed performance.

Bocchi et al. [32] used three different web tools to examine the quality of experience of web users while also trying to find similarities in the metric results among the three tools. They used PSI, Yahoo’s YSlow, and Show Slow, developed by Chernyshev [33]. They retrieved data from the top 100 websites, as listed by Alexa, to discover possible correlations between the above speed performance tools. The results did not show statistically significant correlations between PSI and the other tools. However, the authors proposed other metrics that focused exclusively on simplifying the performance measurement systems of the website speed.
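As an illustration of how agreement between two speed tools could be checked, the sketch below computes a Pearson correlation coefficient between the scores that two tools assign to the same set of webpages. The choice of Pearson's coefficient is an assumption made for this example; the correlation method used in the cited study is not specified here, and the function name `pearson_r` is hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)
```

A coefficient close to zero over a sample of webpages would suggest that the two tools rank pages differently. Judging statistical significance additionally requires a test on the coefficient, which this sketch omits.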

In another study from the LAMs context, Krstić and Masliković [34] used PSI to examine the speed performance of the libraries, archives, and museum institutions of Serbia. The authors found a low to moderate speed performance for the examined LAMs. More specifically, national libraries scored 52/100, archives up to 55/100, and national museums up to 51/100. However, there was no explicit indication of whether these results correspond to the mobile or desktop speed performance scores. Moreover, Redkina [35] examined six domains of national libraries regarding their speed performance on mobile and desktop devices through PSI. Generally, the results indicated that the mobile performance was 8 to 16 units lower than the desktop estimations.

However, several gaps emerge from the current research efforts. Most of these studies do not investigate the PSI metrics in depth but consider only the two primary metrics: the total speed performance on mobile and desktop devices. In addition, except for the study by Bocchi et al. [32], the rest investigate only a limited number of cases. Therefore, further effort is needed to examine a larger number of webpages and the impact of each PSI metric on their loading time. This effort will also increase the generalizability of the results regarding the code elements that impact the webpage loading time. Moreover, apart from Krstić and Masliković [34] and Redkina [35], no prior research effort involves a set of metrics to examine the speed performance of LAMs webpages.

Another research gap relates to transforming the derived technical analytics into valuable and practical insights that administrators could utilize to improve webpages’ speed performance. More specifically, there is a need to propose a methodology capable of prioritizing which of the speed metrics needs rectification first, aiming to reduce the loading time while setting priorities within the context of the requirements of the engineering strategy [36]. In other words, we assume that some metrics might contribute more than others to reducing the webpages’ loading time on desktop and mobile devices. By extracting this kind of information, LAMs administrators could decide, in a well-informed way, what to prioritize for optimization in specific webpages and why, while combining both analytical thinking and prior knowledge and experience [37].
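To make the idea of prioritization concrete, the following sketch ranks speed metrics by a weighted shortfall: the weight of a metric multiplied by how far its score falls below the maximum. This is only one simple heuristic and not the methodology proposed in this paper. The function name `rank_metrics` is hypothetical, scores are assumed to lie between 0 and 1, and the weights are assumed to be supplied by the analyst.

```python
def rank_metrics(scores, weights):
    """Rank metric names by weighted score shortfall, largest first.

    scores  -- mapping of metric name to a score in [0, 1] (1 is best)
    weights -- mapping of metric name to its assumed relative importance
    """
    missing = set(scores) - set(weights)
    if missing:
        raise KeyError(f"no weight given for: {sorted(missing)}")
    # A low score on a heavily weighted metric yields a large shortfall.
    shortfall = {name: weights[name] * (1.0 - scores[name]) for name in scores}
    return sorted(shortfall, key=shortfall.get, reverse=True)
```

Under this heuristic, a poorly scoring metric with a small weight can rank below a moderately scoring metric with a large weight, which is the kind of trade-off an administrator would need to see before deciding what to optimize first.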

The impact of webpages’ loading time on the total performance of a landing page is another aspect that needs to be highlighted. More specifically, LAMs administrators often develop campaigns to promote services or organizational events, creating landing pages where potential visitors can get information about these services or events. However, visitors will get frustrated if the landing page does not load fast enough. For example, in the case of paid search advertising campaigns, if the usability of the landing page is poor in terms of the loading time, the cost-per-click will increase, as the quality of a landing page impacts the ads’ performance [13,38]. Therefore, it would be beneficial for LAMs administrators to get an in-depth overview of the metrics that most impact webpages’ speed performance, incorporating this knowledge into the landing pages’ design and development phase.

Lastly, another gap relates to the lack of a speed performance measurement system that estimates the loading time of a webpage with reliability, internal consistency, and validity in terms of its involved metrics. Covering this gap will help LAMs administrators rely on a speed performance measurement system that, if re-used, will return similar results and indications for improvement [39]. One step further, there is a need to deploy the proposed webpage speed performance measurement system on a large number of LAMs cases from all over the world. This will increase the generalizability of the results and the confidence that the proposed performance measurement system has sufficient internal consistency and validity. In other words, the more LAMs webpages are examined, the more reliably and validly the results will depict the overall picture of their speed performance.
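Internal consistency of a set of metrics is commonly quantified with Cronbach's alpha. The sketch below computes it under the assumption that each row holds the metric values measured for one webpage and each column corresponds to one metric. Whether this particular coefficient is the one used later in the paper is an assumption of this example, and the function name `cronbach_alpha` is hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a table of observations.

    rows -- list of equally long lists; one row per webpage,
            one column per metric.
    """
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least 2 rows and 2 metrics")
    k = len(rows[0])
    # Sample variance of each metric (column) across webpages.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of the per-webpage totals.
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all totals are equal")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

Values closer to 1 indicate that the metrics vary together across webpages. Metrics on very different scales would usually be standardized before applying the coefficient, a step this sketch leaves out.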

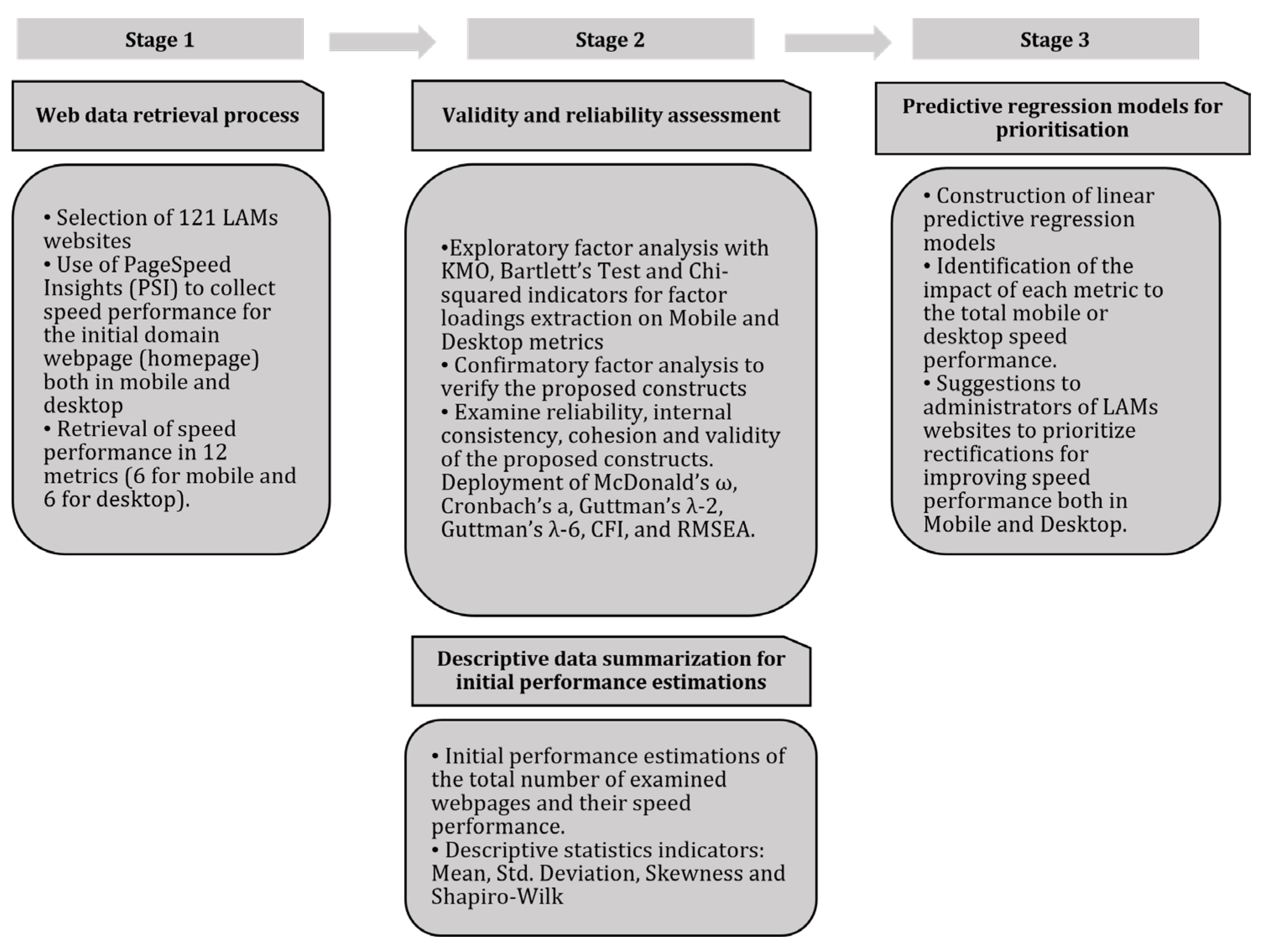

In Table 1, we summarize the research gaps, while in the next section, a methodology is proposed to cover these gaps.