Abstract

At the Michael G. DeGroote School of Medicine, a significant component of the MD curriculum involves written narrative reflections on topics related to professional identity in medicine, with written feedback provided to students by their in-person longitudinal facilitators (LFs). However, it remains to be understood how generative artificial intelligence chatbots, such as ChatGPT (GPT-4), might augment the feedback process and how MD students perceive feedback provided by ChatGPT versus the feedback provided by their LFs. In this study, 15 MD students provided their written narrative reflections along with the feedback they received from their LFs. Their reflections were input into ChatGPT (GPT-4) to generate instantaneous personalized feedback. MD students rated both modalities of feedback using a Likert-scale survey, in addition to providing open-ended textual responses. Quantitative analysis involved mean comparisons and t-tests, while qualitative responses were coded for themes and representational quotations. The results showed that while the LF-provided feedback was rated slightly higher in six out of eight survey items, these differences were not statistically significant. In contrast, ChatGPT scored significantly higher in helping to identify strengths and areas for improvement, as well as in providing actionable steps for improvement. Criticisms of ChatGPT included a discernible “AI tone” and paraphrasing or misuse of quotations from the reflections. In addition, MD students valued LF feedback for being more personal and reflective of the real, in-person relationships formed with LFs. Overall, findings suggest that although skepticism regarding ChatGPT’s feedback exists amongst MD students, it represents a viable avenue for deepening reflective practice and easing some of the burden on LFs.

1. Introduction

The emergence of generative artificial intelligence (GenAI) chatbots such as ChatGPT has been well-documented within medical education, with an emphasis on their ability to answer exam questions [1], design case scenarios [2], and serve as a virtual clinical or research assistant [3]. However, ChatGPT has been sparsely explored within the context of providing formative feedback on MD student assessments. Formative feedback is a helpful resource for identifying learning needs that learners themselves may be unaware of, which becomes especially pertinent as medical students begin to inhabit the professional identity of a physician [4]. This study examines MD student perspectives related to formative feedback provided by ChatGPT compared to a human preceptor, on written narrative reflections pertaining to topics on professional identity.

More recently, professional identity formation has become a key curricular element within undergraduate medical education (UGME) and emphasizes each student’s unique and transformative journey toward becoming a physician. This longitudinal process is nurtured through mentorship, self-reflection, and affirmatory experiences [5]. At the Michael G. DeGroote School of Medicine at McMaster University, a significant component of the UGME curriculum consists of assembling a portfolio of written reflections based on experiential learning aided by formative feedback from two longitudinal facilitators (LFs), typically one physician and one allied-health professional. After each curricular unit, approximately 11 weeks in duration, MD students are given a small selection of prompts to reflect on their accrued learning experiences within that unit in the form of a written narrative reflection. These prompts allude to themes such as communication, decision making, health equity, moral reasoning, ethical judgment, and the social and cultural determinants of health. In addition to having the opportunity to discuss these reflections in person within a small group setting of six to eight students, the LFs also provide written formative feedback to help bring to light any pertinent insights about professional identity as the students begin their medical journey. The reflections are not graded, and the application of the formative feedback is at the students’ discretion as they navigate future experiences similar to the ones they reflected on. However, there may be a considerable amount of variability in the quality of feedback provided by different LFs. As such, ChatGPT may provide an avenue for assisting LFs in providing feedback that is more consistent in quality.

ChatGPT’s potential to augment the processes of written reflection for MD students is highly valuable. Writing and reflecting on patient experiences promotes attentiveness to both the technical and emotional facets of medicine and enables a greater sense of meaning to be extracted from these experiences [6]. Additionally, the role of feedback in the reflective process has been demonstrated as being integral to helping students probe deeper in their reflection. Quality feedback should promote willingness and ability to critically reflect [6]. Sandars mentions that “an individual’s reaction to events may not be readily apparent to them, but it can often be more apparent to others” and that “an effective, reflective learner or practitioner will actively seek out sources of feedback [4].” With reflection often being an abstract and untidy process, feedback can aid in demystifying this complexity [7]. Ideal feedback should be student-specific, supportive, and offer an unassociated perspective that can stimulate deeper reflection [7]. Wald describes this role as the “critical other [5].”

However, several dilemmas have brought about barriers to authentic reflection and high-quality feedback. For example, due to the highly individualized nature of reflection and heterogeneity of what reflection is, it is difficult for educators to provide feedback, leading to superficial comments along the lines of “it was interesting how you said [8,9].” They may also avoid giving negative feedback out of fear of upsetting the learner [9]. Additionally, educators may feel that they do not have the time to give substantive feedback or may delay providing feedback [9]. However, students need to receive the feedback promptly to have the greatest impact on their learning outcomes [10]. Ultimately, without timely personalized feedback, students may be dissuaded from reflecting authentically and miss out on attaining these beneficial learning outcomes [11].

Moreover, the integration of GenAI into feedback processes may help promote the necessary flexibility in feedback, with its ability to respond to an array of infinitely customizable prompts to suit the given context. Recently, there have been explorations into how artificial intelligence augments the reflective writing process. For example, a study by Buckingham Shum et al. used a programmed natural language processor (NLP) to parse student reflections and identify good and bad reflections based on a rubric that applied Boud’s framework to a set of labeled linguistic conventions that the parser could pick out [12]. This work by Buckingham Shum et al. has been applied in health professions education (HPE) contexts as well, with one study at the Johns Hopkins School of Medicine having used their writing analytics tool to quantitatively assess the depth of reflection in medical students’ written professionalism reflections [13].

However, the text-generation capabilities of large language models (LLMs) represent a new paradigm in this regard. LLMs such as ChatGPT possess the ability to provide personalized and instantaneous feedback to written narrative reflections, and it remains to be understood whether undergraduate medical students prefer this feedback to the feedback they receive from their LF. Presently, there has been limited exploration into medical students’ perceptions of ChatGPT [14,15]. Therefore, the aim of this program evaluation project was to assess MD students’ perceptions of feedback provided by ChatGPT on professional identity reflections and compare it to the feedback provided by their LFs.

2. Materials and Methods

2.1. Study Design

This study of feedback modalities in UGME utilizes both quantitative and qualitative methods to understand the differences between how feedback provided by ChatGPT versus feedback provided by a longitudinal facilitator was assessed by first- and second-year MD students, as well as whether they preferred one modality over the other. Given that educators may be unaware of the students’ needs, it is necessary to demystify their perspectives in order to optimize the deployment of AI within their learning. As such, the primary measure of this study was the perceived usefulness of the feedback as rated by the MD students.

2.2. Study Participants

A total of 15 MD students participated in this study. Participants were first- or second-year MD students from the Michael G. DeGroote School of Medicine at McMaster University, who were recruited through a convenience sampling approach via responding to a cohort-wide email notifying them of the study. Given the exploratory nature of this study, a convenience sampling approach was chosen in order to pilot this potential implementation of ChatGPT with a more manageable sample size to identify initial insights and key themes for future exploration with more rigorous recruiting. All study participants signed a detailed participant information and consent form, and were able to follow up with the study team regarding any questions or concerns with their participation. Upon consenting to participate, participants were asked via email to provide previously submitted written narrative reflections on topics related to professional identity, along with the feedback they received from their LFs. All data were de-identified prior to analysis by a member of the study team who was not affiliated with the medical school, in order to preserve anonymity. This study was approved by the Hamilton Integrated Research Ethics Board (16899, 12 April 2023).

2.3. Data Collection

The reflections were input into the ChatGPT (GPT-4) web application using a prompt informed by extant literature on characteristics of effective feedback and models of reflection in medical education, such as Kolb’s Experiential Learning Cycle, Schon’s Reflection-on-Action, and Mezirow’s provision of the “disorienting dilemma [4].” It was also informed by the rubric created by Buckingham Shum et al. for NLPs to evaluate written reflection based on linguistic features and textual moves associated with reflective competence [12]. In creating the criteria of reflection for the prompt, we drew upon five categories that represented components of reflection as evidenced in the literature: reference to past perspectives, expressions of challenge or discomfort, expressions of pausing to reflect, expressions of learning something specific, and reference to future implementation. We also provided textual examples of each criterion, drawn from the Buckingham Shum et al. rubric [12]. Given that reflection is a necessary competency in becoming a physician [6], the prompt was focused on important aspects and criteria of reflection that collectively underscore its importance to professional identity formation.

We used elements of the Brown Educational Guide to the Analysis of Narrative (BEGAN) framework in the prompt to inform the desired feedback output, such as looking for evidence of the student’s emotions and lessons learned, as well as referencing specific examples from the reflection [13]. In addition, we included guiding questions to provide alternative perspectives and promote deeper reflection, as deemed to be beneficial in HPE [14].

In the process of creating the prompt to use for ChatGPT, we thought it best to standardize the prompt in order to maintain consistency in the feedback output. In the preamble for the prompt, we first described the role that we wanted ChatGPT to play. We then iteratively described how the feedback should be provided. Lastly, we concluded the prompt by emphasizing readability in the structure of the feedback output provided by ChatGPT, in addition to some words of encouragement and validation. While the LFs are similarly provided instruction for the assignments, the comparison of interest was between an instructed ChatGPT versus the LFs without any additional or supplementary instruction. Given the retrospective nature of this study, this comparison was also more feasible. The prompt broken down by section can be seen in Table 1. The full prompt has been included as a Supplementary File (ChatGPT Feedback Prompt).

Table 1.

ChatGPT Feedback Prompt.

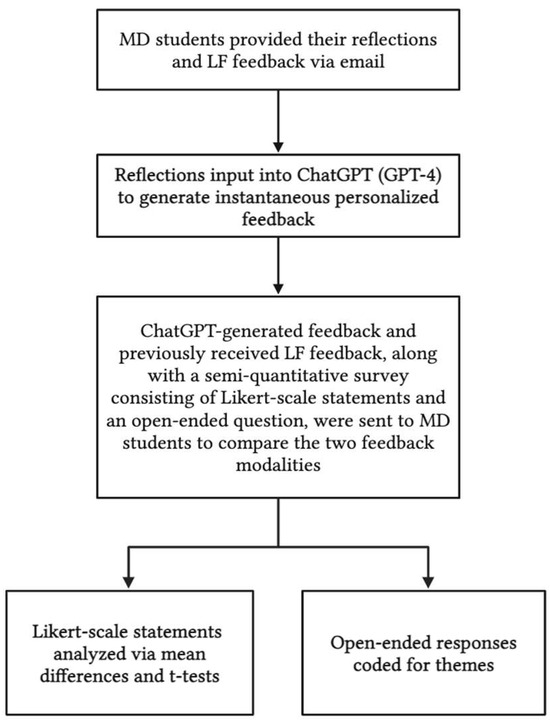

After inputting the prompt, ChatGPT would respond by accepting the task and asking for the student’s reflection to provide feedback on. We then input the student’s reflection as a second prompt, to which ChatGPT would respond by outputting the desired feedback. Due to the inherent functionality of ChatGPT, it may not respond to the same prompt in the same manner each time. As such, in order to ensure that each iteration of feedback was compositionally consistent with each other, the feedback outputs were occasionally regenerated. It is also worth mentioning that each iteration of a student’s reflection and the corresponding feedback was input as a separate conversation within ChatGPT. Upon receiving the feedback output, the feedback was sent back to the students via email, where they then answered an online-administered semi-quantitative survey asking them to rate and compare the feedback from ChatGPT versus the feedback they received from their LF. The methods of this study are depicted in Figure 1. To ensure privacy and confidentiality, only one member of the research team, who was not part of the MD program, had access to the MD student reflections and the ChatGPT conversations. At the conclusion of this study, all ChatGPT conversation history was deleted.
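The two-step exchange described above can be sketched in code. The study used the ChatGPT web application by hand, so this is only an illustration of the protocol, not the authors' tooling: the names `FeedbackSession` and `plan_sessions` are hypothetical, the prompt and reflection strings are placeholders, and no model is actually called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackSession:
    """One isolated conversation: the standardized prompt, then one reflection."""
    student_id: str
    user_turns: tuple  # (standardized prompt, reflection), sent in that order


def plan_sessions(feedback_prompt, reflections):
    """Create a separate session per reflection so no context is shared."""
    sessions = []
    for student_id, reflection_text in reflections.items():
        sessions.append(
            FeedbackSession(
                student_id=student_id,
                user_turns=(feedback_prompt, reflection_text),
            )
        )
    return sessions


# Placeholder strings only; the real prompt is in the Supplementary File.
PROMPT = "<standardized feedback prompt; see Supplementary File>"
reflections = {
    "S01": "<de-identified reflection 1>",
    "S02": "<de-identified reflection 2>",
}

sessions = plan_sessions(PROMPT, reflections)
for session in sessions:
    print(session.student_id, len(session.user_turns))
```

Keeping each reflection in its own session mirrors the separate-conversation design, which prevents one student's text from influencing the feedback generated for another.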

Figure 1.

Study Methodology Flow Chart.

The MD students were sent back their reflections along with both modalities of feedback in a single document to ensure that everything needed to complete the survey was in a single place. The survey questions consisted of eight 5-point Likert-scale statements (strongly disagree–strongly agree) and were based on characteristics of feedback literacy and effective feedback in health professions education as described in the literature, such as personalization [6,15,16,17,18], identifying strengths and areas for improvement [15,16,17,18], motivating openness to future feedback [15], highlighting specific observations [15,16,17,18], balanced tone [16,17,18], providing steps for improvement [15,16,17,18], considering the emotional implications of critical feedback [15,16,17,18], and promoting self-regulation [15,16,17,18]. Ultimately, feedback as a discursive process should be aware of the human, emotional, and personal implications, evident in statements 1, 5, and 7; while also promoting learner self-awareness of process and ability, as evidenced in statements 2–4, 6, and 8 [18]. The Likert-scale survey statements can be seen in Table 2. Additionally, the survey included the open-ended question, “What is your opinion of the feedback provided by ChatGPT versus the feedback provided by a longitudinal facilitator? Which did you prefer and what were some of the reasons you preferred one over the other? In your response, please highlight any specific aspects of the feedback that guided your thinking.” The open-ended question allows for the MD students to expand on their experience of the feedback, with the overarching research question for the qualitative portion being “what insights do MD students offer about the perceived strengths and limitations of AI-generated feedback compared to human facilitator feedback, and what factors or characteristics shape their preferences?” Exploring MD student perspectives offers critical insight into how AI tools should be implemented to meet educational needs, especially in novel areas where educators may be unaware of what students find most valuable or lacking. Their assessments of specific strengths and limitations, such as personalization, tone, and clarity, are necessary to optimize the implementation of AI in feedback processes and ensure its alignment with learner needs and priorities.

Table 2.

Likert-Scale Survey Statements.

2.4. Analysis

The data were analyzed using both quantitative and qualitative methods. For the survey answered by the MD students, the Likert-scale questions were analyzed by finding the means for each question. A paired-samples t-test was then used to assess whether there were significant differences between how the two kinds of feedback were rated. Cohen’s d was used to report effect size. Quantitative analysis was performed using IBM® SPSS® Statistics 29.0 software (IBM Corp., Armonk, NY, USA).
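As a minimal sketch of the paired comparison described above, the following Python computes the mean difference, the paired-samples t statistic, and Cohen's d for a single survey item. The study ran these analyses in SPSS; here the ratings are invented for illustration and are not study data, the function name `paired_summary` is hypothetical, and the effect size is assumed to be the mean difference divided by the standard deviation of the differences, which is one of several conventions for paired designs.

```python
import math
import statistics


def paired_summary(lf_ratings, gpt_ratings):
    """Return mean difference, paired t statistic, df, and Cohen's d.

    Differences are LF minus ChatGPT, so a negative value means ChatGPT
    was rated higher (an assumed sign convention, chosen to match the
    negative differences reported where ChatGPT scored higher).
    """
    if len(lf_ratings) != len(gpt_ratings):
        raise ValueError("ratings must be paired")
    diffs = [lf - gpt for lf, gpt in zip(lf_ratings, gpt_ratings)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    cohens_d = mean_diff / sd_diff  # assumed convention: d based on SD of differences
    return {"mean_diff": mean_diff, "t": t_stat, "df": n - 1, "d": cohens_d}


# Hypothetical 5-point Likert ratings from six students (not study data).
lf = [3, 2, 4, 3, 2, 3]
gpt = [4, 4, 4, 5, 3, 4]
result = paired_summary(lf, gpt)
print(result)
```

The p-value would then come from a t distribution with `df` degrees of freedom, which a statistics package provides; it is omitted here to keep the sketch dependency-free.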

Additionally, qualitative data were analyzed using directed content analysis techniques as described by Hsieh and Shannon [19]. This method was chosen due to its alignment with a deductive approach, allowing us to code participant responses based on pre-existing frameworks of effective feedback in health professions education. In this case, van de Ridder’s “What is feedback in clinical education? [17]” and Noble’s characterization of feedback-literate learners [20] served as initial frameworks to identify responses that were resonant with effective feedback characteristics. This approach involved an initial phase of in vivo coding, where exact words and phrases from participant responses were identified. Following this, axial coding was used to group similar codes under broader feedback dimensions based on their alignment with predefined categories of effective feedback from van de Ridder and Noble’s frameworks, such as personalization, tone, identifying strengths and areas of improvement, and highlighting specific observations. Thematic categories were then refined and consolidated through iterative discussion among the research team. Representational quotations were chosen to illustrate each theme and ensure that student perspectives were centered and preserved. The qualitative data comprised open-ended responses from all 15 participants, resulting in approximately 3000 words of textual data. Each participant provided responses ranging from 100 to 400 words, offering sufficient detail to identify meaningful preliminary insights and themes. Given the small sample size, there were not enough data to reach thematic saturation; nonetheless, the responses were ample enough to yield preliminary emergent insights.

3. Results

3.1. Quantitative Comparison of Feedback Modalities

A total of 15 first- and second-year MD students completed the survey. Table 3 depicts the means, mean differences, standard deviations, and the one-sided and two-sided t-test statistics across each of the comparisons between the MD students’ rating of their LF-provided feedback and the ChatGPT-provided feedback. In six of the eight Likert statements, the LFs were rated more favorably compared to ChatGPT. However, the average mean difference across these comparisons was less than 0.26, and in none of these comparisons were the differences statistically significant. Conversely, ChatGPT was rated more favorably by the MD students in two of the eight statements, corresponding to identifying strengths and areas of improvement and providing steps for improvement. The mean differences in these two comparisons were −0.93 and −1.60, respectively, with both comparisons being statistically significant (p < 0.05) and showing large effect sizes (−0.84 and −1.65, respectively). Additionally, the mean across all eight statements was 3.73 for ChatGPT, compared to 3.57 for the LF-provided feedback, indicating a slight overall preference for ChatGPT with a small overall effect size of 0.16. Normality of the data was assessed using the Shapiro–Wilk test (W = 0.82, p = 0.05), indicating slight deviation from normality. As such, results from the non-parametric Wilcoxon signed-rank test were also examined, which indicated no significant difference between the LF and ChatGPT feedback conditions (Z = 14.0, p = 1.0), aligning with the small effect size.
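To illustrate how a Wilcoxon signed-rank comparison is formed from paired ratings, the sketch below computes the positive and negative rank sums. The text does not state which units were paired or exactly which statistic is reported above, so this shows only the general procedure; the function name `signed_rank_sums` is hypothetical and the ratings are invented, not study data.

```python
def signed_rank_sums(x, y):
    """Return (W_plus, W_minus) for paired samples x and y.

    Zero differences are dropped, and tied absolute differences receive
    the average of the ranks they span.
    """
    if len(x) != len(y):
        raise ValueError("samples must be paired")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the run of tied absolute differences.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        avg_rank = ((i + 1) + (j + 1)) / 2  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical paired Likert ratings (not study data).
lf = [4, 3, 5, 2, 4, 3]
gpt = [3, 4, 5, 4, 1, 3]
w_plus, w_minus = signed_rank_sums(lf, gpt)
print(w_plus, w_minus)
```

The smaller of the two sums is commonly used as the test statistic, and with samples this small an exact p-value from a statistics package is generally preferable to a normal approximation.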

Table 3.

Quantitative Likert-Scale Survey Results.

3.2. Qualitative Comparison of Feedback Modalities

Responses to the open-ended question were probed for representational quotations to expand on the MD students’ perspective on the feedback they received. Subsequently, these representational quotations were categorized into four themes: promoting deeper reflection through highlighting areas of improvement and providing guiding questions, ChatGPT’s misuse and paraphrasing of quotations, easily identifiable AI tone or “voice,” and valuing LF’s unique perspective and life experiences.

3.3. Theme 1: Promoting Deeper Reflection Through Highlighting Areas of Improvement and Providing Guiding Questions

A prominent point of praise for the ChatGPT-provided feedback was its ability to provide probing questions to deepen future reflective practice. The MD students commended the detail and thoughtfulness of these questions and highlighted how the feedback demonstrated a strong recognition of both their moments of personal achievement as well as the areas they could have explored in greater depth.

“The feedback from ChatGPT was thorough and insightful, offering a detailed analysis of my reflection. It effectively highlighted key strengths… while also identifying areas for improvement. I appreciated the specific questions posed by ChatGPT, as they prompted deeper reflection and encouraged me to consider how my experiences have shaped my professional identity.”

“I was pleasantly surprised by how detailed the feedback provided by ChatGPT was, and ChatGPT’s feedback provided more information regarding areas and steps for improvement. I especially liked how ChatGPT’s feedback provided guiding questions to help me engage in deeper reflection.”

Furthermore, the MD students described ChatGPT’s benefit as extending beyond simply appraising their reflections; they were impressed by the extent to which ChatGPT engaged with their experiences. The MD students also described how, in a fashion akin to a human tutor, ChatGPT was able to demonstrate an understanding of their reflective process and piece together how these experiences were meaningful to them. The MD students were appreciative of the feedback and highlighted its usefulness in future reflective practice.

“The ChatGPT feedback also was superior in affirming the strengths in my feedback, and demonstrated a profound appreciation and understanding of the significance of my reflection process… The ChatGPT feedback was also excellent in providing clear, meaningful critiques on how I can further challenge myself. It posed specific questions I could ask myself, or areas in my reflection that I could dig deeper in. It is truly as if the AI had a mind of its own…”

3.4. Theme 2: ChatGPT’s Misuse and Paraphrasing of Quotations

Conversely, a common critique emphasized by the MD students was how some of the specific sentences that ChatGPT would reference directly from their reflections were often misused or lacked substantive commentary. They alluded to how ChatGPT would try to “fill space” by arbitrarily selecting quotations to draw upon and offering unsubstantial commentary. In turn, this led to the feedback feeling formulaic or digressing from the focus of their reflections.

“The ChatGPT feedback was generally more detailed, however it was very formulaic and had a lot of “fluff.” Many quotations from my reflections were utilized, but the comments on these quotes mainly just paraphrased.”

“I also really disliked the direct quotes that ChatGPT pulled from my reflection—some of them are completely meaningless in the grand scheme of what I wrote in my reflection.”

3.5. Theme 3: Easily Identifiable AI Tone or “Voice”

The expectation that ChatGPT’s writing would be robotic was referenced considerably in the MD students’ responses. Student responses were rather polarized as to whether they thought ChatGPT’s response was robotic or genuine. Some students highlighted that there was indeed a certain “AI-speak,” evident in the effusiveness of the feedback.

“One critique I’d give is that the feedback still reads like stereotypical AI writing… there was a tendency for the AI to be unnecessarily verbose while simultaneously not sounding any more professional or intelligent. Certain everyday conversational words like “emphasize” or “underline” were replaced by less commonly used synonyms like “underscore”, and almost every verb was accompanied by an adverb.”

Conversely, other responses spoke of a more positive perception of the ChatGPT-provided feedback, where the tone of the writing was on par with that of their human tutors.

“I did think it still felt surprisingly very personalized and didn’t feel cold or robotic…if I did not know it was AI that wrote it, I would have believed it was comments from a tutor.”

3.6. Theme 4: Valuing LF’s Unique Perspective and Life Experiences

An important factor in the MD students’ perception of the feedback was the presence or lack of the human component attached to the comments. In regard to the feedback from their LFs, the MD students attested to the importance of knowing that their feedback was coming from someone whom they had spent time with on a regular basis and for whom they had true respect and admiration. For some of the MD students, the absence of that relationship with ChatGPT precluded them from being able to truly appreciate the feedback.

“Even if the feedback it presented sounds good on paper, one cannot discount the rich life experiences that my LF has, and the profound and personal ways in which my LF understands me from our many sessions together. This is something that ChatGPT will never be able to have, and as a result, I cannot confidently say that I would certainly prefer ChatGPT’s feedback over my LF’s; rather, I am uncertain about how I feel.”

Additionally, the rich life experiences of the LFs resonated strongly with the MD students. As such, even in a few lines of feedback, the MD students were able to extract as much meaning from their LF feedback as they were able to from the lengthier ChatGPT feedback.

“The most valuable thing about the feedback from my LFs is that it was written by actual people… whom I have grown to respect in their wealth of experience and often very wise perspective on the world…. It was an important experience for me to have my personal reflections read by actual people… And their feedback… actually pointed out things I didn’t see at the time but personally value highly.”

“I have known my LF since Fall 2023 and respect him greatly. His feedback, even just the few sentences, meant more to me because I knew it was coming from him and was reflective of his expertise in the field and observation of me as a student.”

4. Discussion

The intentions of this study were to understand the differences between feedback provided by ChatGPT versus feedback provided by an LF, as well as whether first- and second-year MD students preferred one modality over the other. The results of the semi-quantitative survey suggest that the MD students preferred the LF feedback in the majority of the feedback criteria. However, the modest mean differences in the Likert-scale survey point to ChatGPT being highly comparable to the LF feedback. This suggests that ChatGPT alone may still be a worthwhile feedback modality. Interestingly, only the comparisons where ChatGPT was rated higher than the LFs were statistically significant. In addition, the responses from the open-ended survey suggest that an aspect of the ChatGPT feedback that the MD students found to be beneficial was its ability to highlight strengths and areas of improvement and provide actionable steps for improvement. In particular, the MD students appreciated how the guiding questions enabled them to engage in deeper reflective practice.

Importantly, the overall high quality of the ChatGPT feedback was attested to by the MD students. Paramount to the consideration of implementing GenAI in any capacity is ensuring that it meets the necessary quality for its desired implementation. In that regard, the MD students’ perceptions help indicate that ChatGPT can be useful for helping students identify the strengths and areas of improvement for their reflections, in addition to providing them with actionable steps for improvement. These two constructs are related in both being oriented around improvement, which emphasizes the “forward-facing” nature of effective feedback [20]. Notably, these categories were rated highest among the ChatGPT-provided feedback and lowest among the LF-provided feedback. Perhaps ChatGPT, not being privy to the milieu of the relationship with the MD student, is better able to address the elements of feedback that may be more sensitive, such as highlighting areas for improvement and suggesting ways to improve.

The MD students’ primary concern regarding the ChatGPT feedback was how it undermined the essentiality of the personal relationship with their feedback providers. The literature suggests that the formation of “longitudinal reflective relationships” is important for promoting receptiveness to feedback [11]. Despite this, the MD students felt that the ChatGPT feedback was still sufficiently personalized and able to thoughtfully engage with their reflections. As such, while ChatGPT is not intended to replace existing LF feedback, it could nonetheless be implemented in a use-case scenario for assisting LFs with providing high-quality feedback. In addition, the ChatGPT feedback could be used to help MD students probe deeper in their reflective practice and refine their reflections prior to submitting them to their LFs.

Moreover, ChatGPT is only able to interpret what it is presented with; it cannot see beyond the writing in the way the LFs can. For instance, while an MD student may not explicitly refer to a certain feeling, emotion, or experience in their reflection, their LF may be able to implicitly pick up on what the MD student is referring to and inform their feedback as such. Similarly, the quality of the feedback output from ChatGPT is largely dependent on the quality of the experience being reflected on and the student’s ability to articulate their experience and emotions in detail. Thus, it is possible that where ChatGPT had to “fill space” was simply a consequence of not having enough detail from the reflection to pull from. Future explorations of ChatGPT in this regard can characterize how the content and form of reflections inform the feedback output.

The perspectives of the MD students offer some valuable insight into how ChatGPT’s feedback output can be improved. The prompt can be modified to achieve a more human-esque and conversational tone to mitigate the concerns about its apparent AI dialect. Regarding the concern over its misuse of quotations, the prompt can be adjusted to have ChatGPT only draw upon quotations when especially pertinent, as well as define criteria for when and how ChatGPT should draw upon quotations from their reflections. Importantly, this study is exploratory and pragmatic in nature, navigating ethical concerns and outlining one of numerous potential implementations for AI in medical education. In order to capitalize on the paradigm-shifting value of ChatGPT, human feedback augmented with ChatGPT may provide MD students with the greatest benefit to their learning, by supplementing the essential human component of feedback with the detail of ChatGPT’s response.

This study has some limitations. Notably, the small sample is not fully representative of the participants’ respective cohorts. In addition, it precluded a more rigorous statistical analysis of the Likert-scale survey responses and did not provide ample saturation for a more robust qualitative analysis of the open-ended responses. Future research can address these shortcomings by utilizing a larger sample size and a more sophisticated qualitative analysis. Furthermore, the Likert-scale survey items, though derived from validated feedback frameworks, occasionally assessed multiple constructs in a single item. This may have reduced the precision of the ratings and can be refined in future studies. Moreover, the methodology of this study overlooks the interactivity of ChatGPT. It utilized a standardized prompt and sent the feedback output to the MD students without the opportunity to further converse and interact with ChatGPT. In reality, students would be able to construct prompts themselves to suit their own individualized needs. The standardization of the prompt also meant that results were largely prompt-dependent, and it remains to be seen how to optimally engineer prompts for this use case. In addition, ChatGPT allows students to further refine and extract meaning from their reflections through interactive conversations. However, this feature was not utilized in this study. It is also worth noting that students’ qualitative responses tended to emphasize ChatGPT’s feedback, rather than a true comparison between the two modalities. In the case of themes 2 and 3, perhaps the novelty of the AI feedback garnered greater attention from the MD students in their responses. As such, the responses from the MD students focused more on their experience with the AI feedback and highlighted a less direct and more implicit comparison between the feedback modalities. Future studies will be able to benefit from more targeted questioning.
Lastly, given the retrospective nature of this study, we were unable to ensure whether AI was used in the completion of the reflections. While reflections were submitted as part of curriculum requirements, the possibility of AI utilization cannot be ruled out. However, MD students are encouraged to write authentically and introspectively about their personal experiences. Given the deeply personal nature of the reflections, students would have less incentive to use AI for their reflections. Nonetheless, future studies can incorporate measures to mitigate this possibility.

Despite these limitations, this study outlines one of many possible uses of ChatGPT, and future research can expand on this methodology by exploring additional implementations and refining the present one. Student perceptions of potential use cases for AI are highly valuable and should be considered in optimizing its future deployment, as educators may be unaware of how students would like to see AI implemented in their learning. As such, our findings emphasize the need for continuous, long-term appraisal of AI implementations as needs and expectations evolve.

5. Conclusions

Overall, MD students expressed a range of positive and negative views of ChatGPT that will enrich medical educators’ understanding of GenAI use in their programs. The results of the semi-quantitative survey suggest that medical students rated the LF feedback slightly higher on the majority of the feedback criteria, although these differences were not statistically significant, and the feedback from ChatGPT was received positively overall. The most pertinent finding was that ChatGPT was rated significantly higher than the LF in identifying strengths and areas for improvement, as well as in providing steps for improvement. The implication of this finding for medical educators is that ChatGPT has the potential to be an effective feedback modality for reflective writing, especially with regard to identifying further learning needs. However, implementing ChatGPT as a supplement to human feedback may provide the most meaningful benefit, while mitigating some of the MD students’ misgivings about ChatGPT. The integration of ChatGPT may also assist in optimizing faculty resource allocation and reducing costs. Future explorations of ChatGPT in this regard should involve integrating student perspectives with educator perspectives, in addition to exploring possible implementations of ChatGPT feedback alongside human preceptor feedback.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ime4030027/s1, ChatGPT Feedback Prompt.

Author Contributions

Conceptualization, N.H., L.M., U.S., N.A.-J. and M.S.; data curation, N.H.; methodology, N.H., L.M., U.S., N.A.-J. and M.S.; supervision, L.M. and M.S.; writing—original draft, N.H., L.M., U.S., N.A.-J. and M.S.; writing—review and editing, N.H., L.M., U.S., N.A.-J. and M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was approved by the Hamilton Integrated Research Ethics Board (16899, 12 April 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors wish to thank the MD students who participated in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How does ChatGPT perform on the United States Medical Licensing Examination (USMLE)? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. 2023, 9, 45312.

- Scherr, R.; Halaseh, F.F.; Spina, A.; Andalib, S.; Rivera, R. ChatGPT interactive medical simulations for early clinical education: Case study. JMIR Med. Educ. 2023, 9, 49877.

- Liu, S.; Wright, A.P.; Patterson, B.L.; Wanderer, J.P.; Turer, R.W.; Nelson, S.D.; McCoy, A.B.; Sittig, D.F.; Wright, A. Using AI-generated suggestions from ChatGPT to optimize clinical decision support. JAMIA 2023, 30, 1237–1245.

- Sandars, J. The use of reflection in medical education: AMEE Guide No. 44. Med. Teach. 2009, 31, 685–695.

- Holden, M.D.; Buck, E.; Luk, J.; Ambriz, F.; Boisaubin, E.V.; Clark, M.A.; Mihalic, A.P.; Sadler, J.Z.; Sapire, K.J.; Spike, J.P.; et al. Professional identity formation: Creating a longitudinal framework through TIME (Transformation in Medical Education). Acad. Med. 2015, 90, 761–767.

- Wald, H.S.; Davis, S.W.; Reis, S.P.; Monroe, A.D.; Borkan, J.M. Reflecting on reflections: Enhancement of medical education curriculum with structured field notes and guided feedback. Acad. Med. 2009, 84, 830–837.

- Uygur, J.; Stuart, E.; De Paor, M.; Wallace, E.; Duffy, S.; O’Shea, M.; Smith, S.; Pawlikowska, T. A Best Evidence in Medical Education systematic review to determine the most effective teaching methods that develop reflection in medical students: BEME Guide No. 51. Med. Teach. 2019, 41, 3–16.

- De la Croix, A.; Veen, M. The reflective zombie: Problematizing the conceptual framework of reflection in medical education. Perspect. Med. Educ. 2018, 7, 394–400.

- Lee, G.B.; Chiu, A.M. Assessment and feedback methods in competency-based medical education. Ann. Allergy Asthma Immunol. 2022, 128, 256–262.

- Dyment, J.E.; O’Connell, T.S. Assessing the quality of reflection in student journals: A review of the research. Teach. High. Edu. 2011, 16, 81–97.

- Lim, J.Y.; Ong, S.Y.K.; Ng, C.Y.H.; Chan, K.L.E.; Wu, S.Y.E.A.; So, W.Z.; Tey, G.J.C.; Lam, Y.X.; Gao, N.L.X.; Lim, Y.X.; et al. A Systematic Scoping Review of Reflective Writing in Medical Education. BMC Med. Educ. 2023, 23, 12.

- Shum, S.B.; Sándor, Á.; Goldsmith, R.; Bass, R.; McWilliams, M. Towards Reflective Writing Analytics: Rationale, Methodology and Preliminary Results. J. Learn. Anal. 2017, 4, 58–84.

- Hanlon, C.D.; Frosch, E.M.; Shochet, R.B.; Buckingham Shum, S.J.; Gibson, A.; Goldberg, H.R. Recognizing Reflection: Computer-Assisted Analysis of First Year Medical Students’ Reflective Writing. Med. Sci. Educ. 2021, 31, 109–116.

- Park, J. Medical students’ patterns of using ChatGPT as a feedback tool and perceptions of ChatGPT in a Leadership and Communication course in Korea: A cross-sectional study. J. Educ. Eval. Health Prof. 2023, 20, 29.

- Tangadulrat, P.; Sono, S.; Tangtrakulwanich, B. Using ChatGPT for Clinical Practice and Medical Education: Cross-Sectional Survey of Medical Students’ and Physicians’ Perceptions. JMIR Med. Educ. 2023, 9, 50658.

- Spooner, M.; Larkin, J.; Liew, S.C.; Jaafar, M.H.; McConkey, S.; Pawlikowska, T. “Tell me what is ‘better’!” How medical students experience feedback, through the lens of self-regulatory learning. BMC Med. Educ. 2023, 23, 895.

- Eidt, L.B. Feedback in medical education: Beyond the traditional evaluation. Rev. Assoc. Medica Bras. 2023, 69, 9–12.

- Hardavella, G.; Aamli-Gaagnat, A.; Saad, N.; Rousalova, I.; Sreter, K.B. How to give and receive feedback effectively. Breathe Sheff. Engl. 2017, 13, 327–333.

- Hsieh, H.F.; Shannon, S.E. Three approaches to qualitative content analysis. Qual. Health Res. 2005, 15, 1277–1288.

- Noble, C.; Young, J.; Brazil, V.; Krogh, K.; Molloy, E. Developing residents’ feedback literacy in emergency medicine: Lessons from design-based research. AEM Educ. Train. 2023, 7, 10897.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Academic Society for International Medical Education. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).