Abstract

Forecasting and modeling time series is a crucial aspect of economic research for academics and business practitioners. The ability to predict the direction of stock prices is vital for building an investment plan or determining the optimal time to trade. However, market movements are complex, nonlinear, and chaotic, which makes their evolution difficult to forecast. In this paper, we investigate modeling and forecasting the daily prices of the new Morocco Stock Index 20 (MSI 20). To this end, we present a comparative study of the results obtained by applying several Machine Learning (ML) methods: Support Vector Regression (SVR), eXtreme Gradient Boosting (XGBoost), Multilayer Perceptron (MLP), and Long Short-Term Memory (LSTM) models. The results show that, with hyperparameters tuned by the Grid Search (GS) optimization algorithm, the SVR and MLP models outperform the other models and achieve high accuracy in forecasting daily prices.

1. Introduction

The 2008 recession, the stock market crash of 2015, the COVID-19 pandemic, and the Russian invasion of Ukraine are among the most recent crises that have had an immense impact on financial markets and caused the destruction of wealth worldwide. Modeling and forecasting the stock market is a challenge faced by many engineers and financial researchers. Reviews of studies on stock market prediction using Machine Learning (ML) models have concluded that Deep Learning (DL) is the most commonly used approach for forecasting stock price trends [1,2]. Traditional econometric methods may perform poorly on nonlinear time series and may not be appropriate for directly forecasting stock prices because of their volatility [3]. For complex nonlinear financial time series, by contrast, methods such as Support Vector Regression (SVR), eXtreme Gradient Boosting (XGBoost), Multilayer Perceptron (MLP), and Long Short-Term Memory (LSTM) can detect nonlinear relationships when forecasting stock prices [4] and achieve better fitting results by tuning multiple parameters [5]. Hyperparameter optimization, or tuning, in ML refers to selecting the most appropriate hyperparameters for a particular learning model [6]. Some studies use Grid Search (GS) optimization [7], while others use Bayesian [8,9] or pigeon-inspired optimization algorithms [10]. In this paper, the GS algorithm is used to optimize the hyperparameters of each of the SVR, XGBoost, MLP, and LSTM models. We then compare the models using seven measures: Mean Error (ME), Mean Percentage Error (MPE), Mean Square Error (MSE), Mean Absolute Error (MAE), Root Mean Square Error (RMSE), Mean Absolute Percentage Error (MAPE), and R.

In [11], LSTM combined with Moving Averages (MA) was found to yield better stock price predictions than SVR, as measured by performance criteria such as MAE, MSE, MAPE, RMSE, and R. Al-Nefaie et al. [12] proposed LSTM and MLP models to forecast fluctuations in the Saudi stock market; the correlation coefficient exceeded 0.995 for both models, and the LSTM model achieved the highest accuracy and best fit. In [13], the authors compared Auto-Regressive Integrated Moving Average (ARIMA), XGBoost, and LSTM models for forecasting stock market trends using MSE, MAE, RMSE, and MAPE, and found that XGBoost performed best. In a different context, ML methods such as artificial neural networks (ANN) and MLP were used to forecast solar irradiance [14]; the MLP model with exogenous variables performed better than the other models. Similarly, another study [15] found that, owing to its predictive performance and stability, an ensemble of eXtreme Gradient Boosting and Deep Neural Networks (XGBF-DNN) tuned with the GS algorithm was an optimal and reliable model for forecasting hourly global horizontal irradiance.

The main objective of this investigation is to improve the forecasting performance of ML models by optimizing their hyperparameters with the GS algorithm. Furthermore, this study compares two ML methods and two DL methods. To this end, the research includes an in-depth review of 20 studies on ML models for stock market prediction. Overall, the paper aims to contribute to the stock market prediction field by improving the performance of ML models through hyperparameter optimization. Table 1 summarizes this previous work and highlights our contribution, which offers more accurate predictions than those of the other studies.

Table 1.

Some previous work using ML models for stock market forecasting.

The rest of this paper is organized as follows: Section 2 outlines the suggested methodology for modeling and forecasting financial time series, specifically stock prices. Section 3 details the implementation of the study, results, and discussion. Lastly, Section 4 offers conclusions and suggests future research directions.

2. Materials and Methods

This section provides an overview of the methodology employed in this research.

2.1. Data Collection

This research focuses on modeling and forecasting the new Moroccan Stock Index 20 (MSI 20), which is composed of the most liquid companies listed on the Casablanca Stock Exchange (CSE) from various sectors, including Attijariwafa Bank, Itissalat Al-Maghrib, Banque Populaire, and LafargeHolcim Morocco, among others. The MSI 20 is calculated in real time during the CSE's business hours, Monday to Friday from 9:00 a.m. to 3:30 p.m. local time (it is not calculated on weekends and holidays). The research was conducted in Python using several libraries, including Matplotlib, Pandas, NumPy, scikit-learn (sklearn), TensorFlow, and Keras. We use daily MSI 20 data to train each model and predict closing prices. The data cover the period since the launch of the index, a series of length $N$, and consist of the daily Open, High, Low, and Closing prices (see Table 2).

Table 2.

Sample data (First five days) and descriptive statistics of MSI 20 index daily prices from 18 December 2020 to 9 February 2023.

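To train the model parameters, we use historical input data of length $N$,

$$X = (x_1, x_2, \dots, x_N), \qquad x_t = \left(x_t^{(1)}, x_t^{(2)}, x_t^{(3)}\right),$$

where $x_t^{(1)}$, $x_t^{(2)}$, and $x_t^{(3)}$ represent the Open, High, and Low prices on day $t$, respectively. For the output, a single observation sequence, we use only the closing price,

$$Y = (y_1, y_2, \dots, y_N),$$

where $y_t$ is the closing price on day $t$.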
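The input/output construction described above can be sketched with NumPy as follows. This is a minimal illustration that assumes the daily prices are already available as rows of (Open, High, Low, Close); the record values and the `build_xy` helper are hypothetical and are not taken from the MSI 20 data in Table 2.

```python
import numpy as np

# Hypothetical toy records (NOT the MSI 20 data): (open, high, low, close) per day.
records = [
    (1000.0, 1010.0, 995.0, 1005.0),
    (1005.0, 1012.0, 1001.0, 1008.0),
    (1008.0, 1015.0, 1003.0, 1004.0),
    (1004.0, 1006.0, 990.0, 992.0),
]

def build_xy(rows):
    """Split daily OHLC rows into inputs X (Open, High, Low) and output y (Close)."""
    arr = np.asarray(rows, dtype=float)  # shape (N, 4)
    X = arr[:, :3]                        # x_t = (open_t, high_t, low_t)
    y = arr[:, 3]                         # y_t = close_t
    return X, y

X, y = build_xy(records)
print(X.shape, y.shape)  # (4, 3) (4,)
```

Each row of `X` corresponds to one vector $x_t$ and each entry of `y` to one $y_t$, so both arrays have length $N$ along their first axis.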

2.2. Preprocessing

The dataset was divided into two parts: 90% of the observations were used for training and 10% for evaluating the models during testing. Data preprocessing is an essential step in ML that helps achieve competitive results and removes the effect of differing measurement units and scales across variables. Here, we applied min–max normalization, which rescales every variable to the range $[0, 1]$:

$$x'_t = \frac{x_t - x_{\min}}{x_{\max} - x_{\min}},$$

where $x_t$ represents the historical data for each feature variable in the time series (Open, High, and Low prices), and $x_{\max}$ and $x_{\min}$ are the sample's maximum and minimum values.
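A minimal sketch of the 90/10 split and the min–max formula is given below. Two details are assumptions not stated in the text: the split is taken in temporal order (no shuffling), and $x_{\min}$ and $x_{\max}$ are computed on the training portion only, so that no information from the test period leaks into the scaling. The helper names and the toy array are hypothetical.

```python
import numpy as np

def train_test_split_chrono(X, y, train_frac=0.9):
    """Split a time series in temporal order (no shuffling)."""
    n_train = int(len(X) * train_frac)
    return X[:n_train], X[n_train:], y[:n_train], y[n_train:]

def minmax_fit(X):
    """Column-wise minimum and maximum, computed on the training inputs only."""
    return X.min(axis=0), X.max(axis=0)

def minmax_transform(X, x_min, x_max):
    """Rescale each column with x' = (x - x_min) / (x_max - x_min)."""
    return (X - x_min) / (x_max - x_min)

# Hypothetical toy inputs: 10 days x 3 features (Open, High, Low).
X = np.arange(30, dtype=float).reshape(10, 3)
y = X[:, 0] + 1.0
X_tr, X_te, y_tr, y_te = train_test_split_chrono(X, y)
x_min, x_max = minmax_fit(X_tr)
X_tr_s = minmax_transform(X_tr, x_min, x_max)
X_te_s = minmax_transform(X_te, x_min, x_max)
print(X_tr_s.min(), X_tr_s.max())  # 0.0 1.0
```

With this design choice, test values that lie outside the training range map outside $[0, 1]$ (here the last toy day scales to 1.125), which is expected for a trending price series.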

2.3. SVR Model

SVR, which has been applied to financial time series prediction, is used here to address the challenges of nonlinear regression. We assume a linear relationship between the transformed input $\phi(x)$ and the output $y$, as in the first expression of the equation below. To perform nonlinear regression with SVR, the idea is to construct a nonlinear transformation $\phi$ that maps the original feature space $X$ (whose dimension equals the number of input features) onto a new, higher-dimensional feature space $\mathcal{F}$. Mathematically, this can be written as:

$$f(x) = w^{\top}\phi(x) + b = \sum_{i=1}^{N} \left(\alpha_i - \alpha_i^{*}\right) K(x_i, x) + b,$$

where $w$ is the weight vector, $b$ is a bias, $\alpha_i - \alpha_i^{*}$ is the coefficient formed from the Lagrange multipliers $\alpha_i$ and $\alpha_i^{*}$, and $K(x_i, x) = \phi(x_i)^{\top}\phi(x)$ is a kernel function. The most commonly used kernels include the linear, Gaussian, and polynomial functions [17].
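To make the kernel expansion concrete, the sketch below evaluates $f(x) = \sum_i (\alpha_i - \alpha_i^{*}) K(x_i, x) + b$ with a Gaussian (RBF) kernel. It only shows prediction once the support vectors and coefficients are known; it does not solve the SVR optimization problem. The support vectors, coefficients, bias, and `gamma` value are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel K(a, b) = exp(-gamma * ||a - b||^2)."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))

def svr_predict(x, support_vectors, dual_coefs, b, gamma=1.0):
    """f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b."""
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coefs)) + b

# Hypothetical support vectors x_i and coefficients (alpha_i - alpha_i^*),
# e.g. as produced by an SVR solver; the values are illustrative only.
svs = [np.array([0.0, 0.0, 0.0]), np.array([1.0, 1.0, 1.0])]
coefs = [0.5, -0.25]
pred = svr_predict(np.zeros(3), svs, coefs, b=0.1, gamma=0.5)
print(pred)
```

A fitted scikit-learn `SVR` with `kernel="rbf"` exposes the corresponding quantities as `support_vectors_`, `dual_coef_`, and `intercept_`, so the same sum can be reproduced from a trained model.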

2.4. XGBoost Model

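XGBoost is a tree-ensemble ML model that has been used for stock market time series forecasting [18]. Trees are added sequentially: guided by the gradient of the loss, each new tree is fitted to reduce the loss of the ensemble built so far [6]. At the $T$-th boosting iteration, the regularized objective is

$$\mathcal{L}^{(T)} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(T)}\right) + \sum_{j=1}^{T} \Omega(f_j), \qquad \Omega(f) = \gamma K + \frac{1}{2}\lambda \lVert w \rVert^{2},$$

where $l$ is a specified loss function that quantifies the deviation between the predicted and actual target values, $\hat{y}_i^{(T)} = \hat{y}_i^{(T-1)} + f_T(x_i)$ denotes the forecast for the $i$-th sample at the $T$-th boosting iteration, $f_j$ is the tree added at iteration $j$, and $K$ is the number of leaves. In the regularization term $\Omega$, which penalizes model complexity, $\gamma$ is the complexity parameter, $\lVert w \rVert^{2}$ is the squared L2 norm of the leaf weights, and $\lambda$ is a constant coefficient [8].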
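The role of $\gamma$ and $\lambda$ can be seen in how the weights of a new tree's leaves are chosen. The sketch below assumes squared-error loss $l = \tfrac{1}{2}(y - \hat{y})^2$ and a tree whose structure (which sample falls in which leaf) is already fixed; it applies the standard second-order result $w_j = -G_j/(H_j + \lambda)$, where $G_j$ and $H_j$ sum the gradients and Hessians in leaf $j$. Split finding and the learning-rate shrinkage used by the XGBoost library are omitted, and the helper name and toy data are hypothetical.

```python
import numpy as np

def leaf_weights_and_objective(y, y_prev, leaf_index, n_leaves, lam=1.0, gamma=0.0):
    """Optimal leaf weights and structure score for one new tree with a FIXED
    structure, assuming squared-error loss l = 0.5 * (y - y_hat)^2.

    Gradient g_i = y_prev_i - y_i and Hessian h_i = 1. Leaf j gets
    w_j = -G_j / (H_j + lam); the second-order objective (up to a constant)
    is -0.5 * sum_j G_j^2 / (H_j + lam) + gamma * K, with K leaves.
    """
    y = np.asarray(y, dtype=float)
    y_prev = np.asarray(y_prev, dtype=float)
    leaf_index = np.asarray(leaf_index)
    g = y_prev - y
    h = np.ones_like(g)
    G = np.bincount(leaf_index, weights=g, minlength=n_leaves)
    H = np.bincount(leaf_index, weights=h, minlength=n_leaves)
    w = -G / (H + lam)
    obj = -0.5 * np.sum(G ** 2 / (H + lam)) + gamma * n_leaves
    y_new = y_prev + w[leaf_index]  # y_hat^(T) = y_hat^(T-1) + f_T(x)
    return w, obj, y_new

# Toy example: four samples, previous forecast 0, a two-leaf tree.
w, obj, y_new = leaf_weights_and_objective(
    [1.0, 2.0, 3.0, 10.0], [0.0] * 4, [0, 0, 0, 1], n_leaves=2, lam=1.0)
print(w, obj, y_new)
```

A larger $\lambda$ shrinks every leaf weight toward zero, and a larger $\gamma$ makes each additional leaf more costly, which is how $\Omega$ penalizes complexity.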

2.5. MLP Model

The MLP is a frequently used ANN whose neurons are organized into three types of layers: an input layer, one or more hidden layers, and an output layer. In each neuron, the inputs ($x_i$) are multiplied by their weights ($w_i$) and the resulting products are summed. This sum, plus a bias term ($b$), is fed into an activation function to produce the neuron's output ($y$) [19]. Mathematically, this process can be expressed as:

$$y = f\left(\sum_{i=1}^{m} w_i x_i + b\right),$$

where $m$ is the number of inputs to the neuron and $f$, the activation function, is typically a continuous or discontinuous function that maps real numbers to a specific interval; the sigmoidal activation function, for example, can be used [12].
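The single-neuron equation and its stacking into layers can be sketched as follows. The sigmoid matches the activation mentioned above; the ReLU hidden layer and linear output neuron in `mlp_forward` are assumptions chosen for illustration (a common setup for regression), not necessarily the configuration selected in Table 3. All weights are hypothetical.

```python
import numpy as np

def sigmoid(z):
    """Sigmoidal activation, mapping real numbers to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, w, b, f=sigmoid):
    """Single neuron: y = f(sum_i w_i x_i + b)."""
    return f(np.dot(w, x) + b)

def mlp_forward(x, W1, b1, w2, b2):
    """One hidden layer with ReLU activation, then a linear output neuron."""
    hidden = np.maximum(0.0, W1 @ x + b1)
    return float(w2 @ hidden + b2)

x = np.array([1.0, 2.0, 3.0])  # e.g. scaled (Open, High, Low)
print(neuron(x, np.array([0.1, 0.2, 0.3]), b=-1.4))  # weighted sum is ~0, so ~0.5

W1 = np.array([[1.0, 0.0, 0.0], [0.0, -1.0, 0.0]])
b1 = np.zeros(2)
w2 = np.array([2.0, 3.0])
print(mlp_forward(x, W1, b1, w2, b2=0.5))  # 2.5
```

Each hidden unit applies the same weighted-sum-plus-bias rule as `neuron`; the layer simply evaluates many such neurons at once through the matrix product `W1 @ x`.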

2.6. LSTM Model

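LSTM models are recurrent neural networks (RNNs) that excel at learning and retaining long-term dependencies, which has made them successful in various applications such as financial time series forecasting. An LSTM cell is governed by the following equations [20]:

$$\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right),\\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right),\\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right),\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,\\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right),\\
h_t &= o_t \odot \tanh(c_t),
\end{aligned}$$

where $W_f$, $W_i$, $W_c$, $W_o$ and $b_f$, $b_i$, $b_c$, $b_o$ are the weights and biases of each layer, respectively, $\sigma$ is the sigmoid function, $\odot$ denotes element-wise multiplication, and $h_t$ represents the hidden layer output. The forget gate ($f_t$) determines whether information should be kept or discarded, while the input gate ($i_t$) integrates new data into the cell ($c_t$). Finally, the output gate ($o_t$) governs the selection of relevant data to transmit to the succeeding cell ($h_t$).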
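A single LSTM time step can be written directly from the gate equations of the standard LSTM cell. The sketch below is a NumPy illustration under the assumption that each weight matrix acts on the concatenation $[h_{t-1}, x_t]$; it is not the Keras implementation used in the study, and the random weights and input sequence are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step. W[k] has shape (hidden, hidden + inputs) and b[k]
    has shape (hidden,) for k in {"f", "i", "c", "o"}."""
    z = np.concatenate([h_prev, x_t])       # [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])      # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])      # input gate
    c_tilde = np.tanh(W["c"] @ z + b["c"])  # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde      # new cell state
    o_t = sigmoid(W["o"] @ z + b["o"])      # output gate
    h_t = o_t * np.tanh(c_t)                # hidden output
    return h_t, c_t

hidden, inputs = 2, 3
rng = np.random.default_rng(0)
W = {k: rng.normal(scale=0.1, size=(hidden, hidden + inputs)) for k in "fico"}
b = {k: np.zeros(hidden) for k in "fico"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in np.ones((4, inputs)):  # hypothetical sequence of 4 scaled inputs
    h, c = lstm_step(x_t, h, c, W, b)
print(h, c)
```

Because $h_t$ and $c_t$ are fed back at every step, the cell state can carry information across the whole input window, which is the property that lets LSTMs retain long-term dependencies.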

2.7. Grid Search

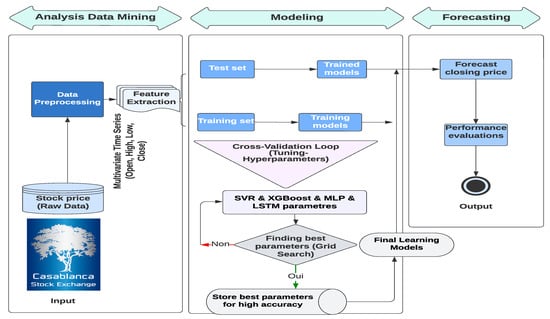

In ML, GS is commonly used to tune hyperparameters such as the regularization strength, the learning rate, the number of hidden layers, etc. GS exhaustively evaluates every combination of values in a predefined search space and retains the combination with the best validation performance; this lets us identify the optimal set of hyperparameters within that space, resulting in better predictions and improved performance. In our case, we first defined the hyperparameters and their search space, as shown in Table 3, where the optimal hyperparameters for each model are shown in bold blue. The design of the research study is illustrated in Figure 1.
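The exhaustive search described above can be sketched in a few lines of standard-library Python. The grid below reuses the names of scikit-learn's SVR hyperparameters (`C`, `epsilon`, `gamma`) only for illustration; its values are hypothetical and are not the search space of Table 3. The `toy_error` function is a stand-in, assumed for this sketch, for "fit the model on the training data and compute a validation error such as RMSE".

```python
import itertools

def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid and return the best one.

    param_grid: dict mapping hyperparameter name -> list of candidate values.
    score_fn: callable taking a dict of hyperparameters and returning a
              validation error (lower is better).
    """
    names = list(param_grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical grid (3 x 2 x 2 = 12 combinations) and toy validation error.
grid = {"C": [0.1, 1.0, 10.0], "epsilon": [0.01, 0.1], "gamma": [0.1, 1.0]}

def toy_error(p):
    return (p["C"] - 1.0) ** 2 + (p["epsilon"] - 0.01) ** 2 + abs(p["gamma"] - 0.1)

best, err = grid_search(grid, toy_error)
print(best, err)  # {'C': 1.0, 'epsilon': 0.01, 'gamma': 0.1} 0.0
```

The cost grows multiplicatively with each added hyperparameter, which is why the search space must be kept small. In scikit-learn, `GridSearchCV` implements the same idea with cross-validation, and passing `TimeSeriesSplit` as its `cv` argument keeps the validation folds in temporal order.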

Table 3.

The GS hyperparameter sets for the SVR, XGBoost, MLP, and LSTM models.

Figure 1.

The proposed research design architecture for analyzing, modeling, and predicting MSI 20.

3. Results and Discussion

In this section, we report and compare the performance of the SVR, XGBoost, MLP, and LSTM models for forecasting the MSI 20 index. Several evaluation measures (ME, MPE, MSE, MAE, RMSE, MAPE, and R) are computed to assess the models' accuracy. Let $\hat{y}_{i,t}$ be the forecast of model $i$ at time $t$, $y_t$ the actual value at time $t$, $\bar{y}$ the mean of the actual values, and $n$ the length of the evaluated time series (i.e., the training or test set). The error of model $i$ at time $t$ is defined as $e_{i,t} = y_t - \hat{y}_{i,t}$. The general formulas for the evaluation measures are presented in Table 4.

Table 4.

Formulas for model performance measures.

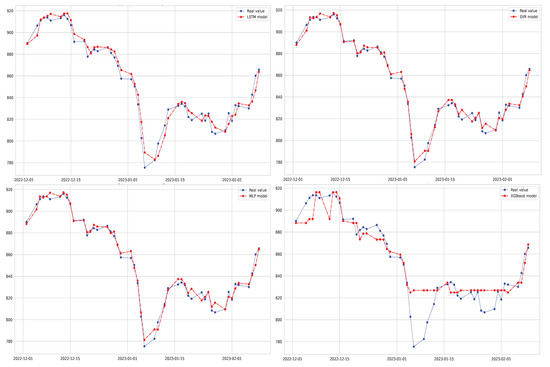
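The seven measures can be computed as sketched below, using their standard definitions, which we assume match the formulas in Table 4. Two readings are assumptions: the error is $e_t = y_t - \hat{y}_t$, and "R" is interpreted as the coefficient of determination $R^2$; if the Pearson correlation coefficient is intended instead, `np.corrcoef(y, y_hat)[0, 1]` would replace that entry. The toy series is hypothetical.

```python
import numpy as np

def evaluation_measures(y, y_hat):
    """Error measures with e_t = y_t - y_hat_t; MPE and MAPE are in percent."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    e = y - y_hat
    mse = np.mean(e ** 2)
    return {
        "ME": np.mean(e),
        "MPE": 100.0 * np.mean(e / y),
        "MSE": mse,
        "MAE": np.mean(np.abs(e)),
        "RMSE": np.sqrt(mse),
        "MAPE": 100.0 * np.mean(np.abs(e / y)),
        # Assumption: "R" read as the coefficient of determination R^2.
        "R2": 1.0 - np.sum(e ** 2) / np.sum((y - y.mean()) ** 2),
    }

# Hypothetical actual values and forecasts.
m = evaluation_measures([100.0, 200.0, 300.0, 400.0], [110.0, 190.0, 310.0, 390.0])
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Note that ME and MPE can be near zero even when individual errors are large, because positive and negative errors cancel; this is why they are reported alongside the absolute and squared measures.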

Table 5 shows the evaluation values of the forecasting models for the MSI 20 price. XGBoost had the highest errors of the four models, and LSTM had the second-worst performance. The SVR and MLP models had lower error values than the XGBoost and LSTM models. Overall, the SVR model had the best evaluation values for forecasting MSI 20 prices, with ME, MAE, RMSE, MPE, MAPE, and MSE values of 0.674, 3.092, 3.993, 0.082%, 0.368%, and 15.941, respectively. The MLP model also performed very well. However, the XGBoost predictions shown in the right panel of Figure 2 exhibit a noticeable error, particularly during the decline of 6–10 January 2023, which suggests that the model needs more data to improve its performance. Based on the results in Table 5 and Figure 2, we conclude that the SVR model, followed by the MLP model, performed best in forecasting MSI 20 compared with the other models.

Table 5.

Performance metrics of each model.

Figure 2.

MSI 20 stock price prediction: results of LSTM, SVR, MLP, and XGBoost models.

4. Conclusions and Future Work

This study applied four machine learning models (SVR, XGBoost, MLP, and LSTM) to model and forecast MSI 20 prices using multivariate time series data. The results showed that SVR outperformed the other models, with lower errors and a higher accuracy of 98.9%. Future research could focus on improving the performance of XGBoost and on exploring other models, such as CNN-LSTM, for stock market forecasting.

Author Contributions

H.O. and K.E.H. contributed equally to this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are available on the CSE website: https://www.casablanca-bourse.com (accessed on 10 February 2023).

Acknowledgments

We appreciate all the reviewers who helped us improve the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mintarya, L.N.; Halim, J.N.; Angie, C.; Achmad, S.; Kurniawan, A. Machine learning approaches in stock market prediction: A systematic literature review. Procedia Comput. Sci. 2023, 216, 96–102.

- Ozbayoglu, A.M.; Gudelek, M.U.; Sezer, O.B. Deep learning for financial applications: A survey. Appl. Soft Comput. 2020, 93, 106384.

- Li, R.; Han, T.; Song, X. Stock price index forecasting using a multiscale modeling strategy based on frequency components analysis and intelligent optimization. Appl. Soft Comput. 2022, 124, 109089.

- Kim, T.; Kim, H.Y. Forecasting stock prices with a feature fusion LSTM-CNN model using different representations of the same data. PLoS ONE 2019, 14, e0212320.

- Xu, S.; Zou, S.; Huang, J.; Yang, W.; Zeng, F. Comparison of Different Approaches of Machine Learning Methods with Conventional Approaches on Container Throughput Forecasting. Appl. Sci. 2022, 12, 9730.

- Yang, L.; Shami, A. On hyperparameter optimization of machine learning algorithms: Theory and practice. Neurocomputing 2020, 415, 295–316.

- Rubio, L.; Alba, K. Forecasting Selected Colombian Shares Using a Hybrid ARIMA-SVR Model. Mathematics 2022, 10, 2181.

- Xia, Y.; Liu, C.; Li, Y.; Liu, N. A boosted decision tree approach using Bayesian hyper-parameter optimization for credit scoring. Expert Syst. Appl. 2017, 78, 225–241.

- Liwei, T.; Li, F.; Yu, S.; Yuankai, G. Forecast of LSTM-XGBoost in Stock Price Based on Bayesian Optimization. Intell. Autom. Soft Comput. 2021, 29, 855–868.

- Al-Thanoon, N.A.; Algamal, Z.Y.; Qasim, O.S. Hyper parameters Optimization of Support Vector Regression based on a Chaotic Pigeon-Inspired Optimization Algorithm. Math. Stat. Eng. Appl. 2022, 71, 4997–5008.

- Lakshminarayanan, S.K.; McCrae, J.P. A Comparative Study of SVM and LSTM Deep Learning Algorithms for Stock Market Prediction. In Proceedings of the AICS, Wuhan, China, 12–13 July 2019; pp. 446–457.

- Al-Nefaie, A.H.; Aldhyani, T.H. Predicting Close Price in Emerging Saudi Stock Exchange: Time Series Models. Electronics 2022, 11, 3443.

- Goverdhan, G.; Khare, S.; Manoov, R. Time Series Prediction: Comparative Study of ML Models in the Stock Market. Res. Sq. 2022.

- Ettayyebi, H.; El Himdi, K. Artificial neural network for forecasting one day ahead of global solar irradiance. In Proceedings of the Smart Application and Data Analysis for Smart Cities (SADASC’18), Casablanca, Morocco, 27–28 February 2018.

- Kumari, P.; Toshniwal, D. Extreme gradient boosting and deep neural network based ensemble learning approach to forecasting hourly solar irradiance. J. Clean. Prod. 2021, 279, 123285.

- Lu, W.; Li, J.; Li, Y.; Sun, A.; Wang, J. A CNN-LSTM-based model to forecast stock prices. Complexity 2020, 2020, 6622927.

- Ranković, V.; Grujović, N.; Divac, D.; Milivojević, N. Development of support vector regression identification model for prediction of dam structural behavior. Struct. Saf. 2014, 48, 33–39.

- Dezhkam, A.; Manzuri, M.T. Forecasting stock market for an efficient portfolio by combining XGBoost and Hilbert–Huang transform. Eng. Appl. Artif. Intell. 2023, 118, 105626.

- Koukaras, P.; Nousi, C.; Tjortjis, C. Stock Market Prediction Using Microblogging Sentiment Analysis and Machine Learning. Telecom 2022, 3, 358–378.

- Wu, J.M.T.; Li, Z.; Herencsar, N.; Vo, B.; Lin, C.W. A graph-based CNN-LSTM stock price prediction algorithm with leading indicators. Multimed. Syst. 2021, 29, 1751–1770.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).