A Hybrid Deep Learning Approach for COVID-19 Diagnosis via CT and X-ray Medical Images

Abstract

1. Introduction

2. Related Work

3. Methods and Materials

3.1. Preprocessing

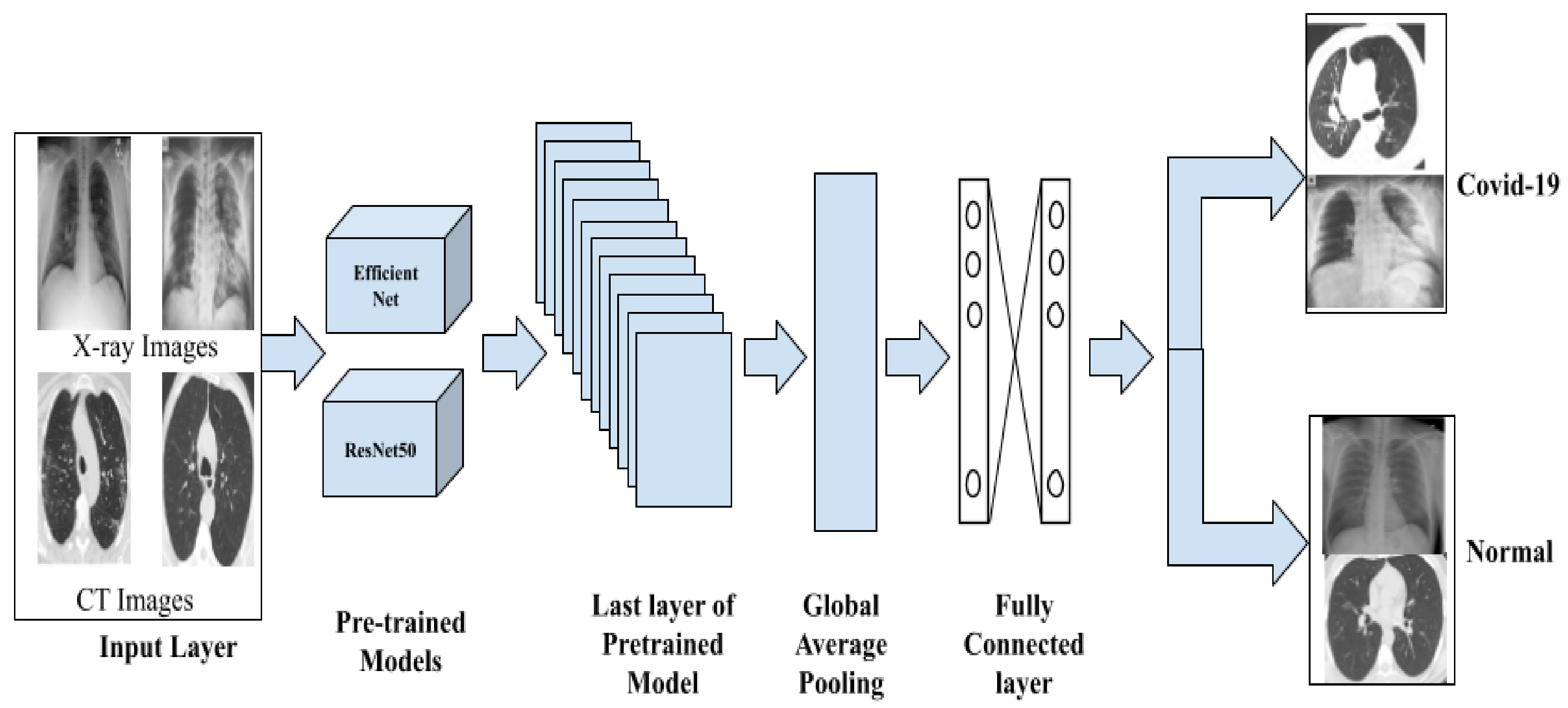

3.2. Feature Extraction

3.3. Classification

4. Experimental Analysis

4.1. Dataset

4.2. Implementation Environment

4.3. Evaluation Metrics
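As a minimal sketch of how metrics of this kind are typically computed, the following assumes that the column labels Sp, Re, and F-M in the result tables denote specificity, recall, and F-measure under their standard confusion-matrix definitions, with COVID-19 as the positive class. The surviving text does not define Az, so the sketch reports overall accuracy without claiming that the two coincide. The names `ConfusionCounts` and `evaluate` and the example counts are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts (assumption: COVID-19 is the positive class)."""
    tp: int  # COVID images predicted as COVID
    fp: int  # normal images predicted as COVID
    tn: int  # normal images predicted as normal
    fn: int  # COVID images predicted as normal


def evaluate(c: ConfusionCounts) -> Dict[str, float]:
    """Return standard classification metrics as percentages, rounded to 2 decimals."""
    total = c.tp + c.fp + c.tn + c.fn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    metrics = {
        "accuracy": (c.tp + c.tn) / total,
        "specificity": c.tn / (c.tn + c.fp),
        "recall": recall,
        # F-measure taken here as the harmonic mean of precision and recall (F1).
        "f_measure": 2 * precision * recall / (precision + recall),
    }
    return {name: round(100 * value, 2) for name, value in metrics.items()}


# Hypothetical counts, chosen only to exercise the formulas.
example = evaluate(ConfusionCounts(tp=95, fp=10, tn=90, fn=5))
print(example)
```

The sketch does not guard against empty classes; a zero denominator would need explicit handling before use on real predictions.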

5. Results and Discussion

5.1. Single Straightforward Scenario

5.1.1. COVID-19 Classification Based on Chest X-ray Images

5.1.2. COVID-19 Classification Based on Chest CT Images

5.2. Hybrid Scenario: COVID-19 Classification Using Chest CT and X-ray Images

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Type of Images | COVID | Normal |
|---|---|---|
| CT | 1323 | 1290 |
| X-ray | 3923 | 3960 |
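
The class balance implied by these counts can be checked with a short calculation. The sketch below assumes that the table lists the number of images per class and that a hybrid scenario simply pools both modalities; the pooling rule and the names `IMAGE_COUNTS`, `summarize`, and `pool` are illustrative assumptions, not details confirmed by the source.

```python
from typing import Dict, Tuple

# Image counts copied from the table; the dict layout is an illustrative choice.
IMAGE_COUNTS: Dict[str, Dict[str, int]] = {
    "CT": {"COVID": 1323, "Normal": 1290},
    "X-ray": {"COVID": 3923, "Normal": 3960},
}


def summarize(counts: Dict[str, int]) -> Tuple[int, float]:
    """Return (total images, fraction labelled COVID) for one set of class counts."""
    total = sum(counts.values())
    return total, counts["COVID"] / total


def pool(all_counts: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum the class counts over all modalities (assumed hybrid pooling)."""
    pooled: Dict[str, int] = {}
    for per_class in all_counts.values():
        for label, n in per_class.items():
            pooled[label] = pooled.get(label, 0) + n
    return pooled


for modality, per_class in IMAGE_COUNTS.items():
    total, covid_share = summarize(per_class)
    print(f"{modality}: {total} images, {covid_share:.1%} COVID")

hybrid_total, hybrid_share = summarize(pool(IMAGE_COUNTS))
print(f"Pooled: {hybrid_total} images, {hybrid_share:.1%} COVID")
```

Under these assumptions each modality, and the pooled set, is close to evenly split between the two classes, which is the setting in which plain accuracy is least likely to be distorted by class imbalance.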

| Model | Az | Sp | Re | F-M |
|---|---|---|---|---|
| ResNet50 | 98.47 | 99.0 | 100 | 99.0 |
| EfficientNetB0 | 99.36 | 98.0 | 99.0 | 99.0 |

| Model | Az | Sp | Re | F-M |
|---|---|---|---|---|
| ResNet50 | 98.85 | 99.0 | 98.0 | 99.0 |
| EfficientNetB0 | 99.23 | 99.0 | 99.0 | 99.0 |

| Model | Az | Sp | Re | F-M |
|---|---|---|---|---|
| ResNet50 | 98.01 | 99.0 | 99.0 | 99.0 |
| EfficientNetB0 | 99.58 | 99.0 | 99.0 | 99.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chola, C.; Mallikarjuna, P.; Muaad, A.Y.; Bibal Benifa, J.V.; Hanumanthappa, J.; Al-antari, M.A. A Hybrid Deep Learning Approach for COVID-19 Diagnosis via CT and X-ray Medical Images. Comput. Sci. Math. Forum 2022, 2, 13. https://doi.org/10.3390/IOCA2021-10909
