LSTM-Based News Article Category Classification †

Abstract

1. Introduction

- To develop a deep learning model that uses LSTM networks for classifying news articles into 14 categories;

- To achieve higher classification accuracy and better generalization compared to traditional models while handling a larger and more diverse set of categories.

2. Literature Review

3. System Description

3.1. Existing System

- Leveraging an advanced deep learning model (LSTM);

- Utilizing a richer, more comprehensive dataset that spans 14 distinct news categories;

- Not only improving classification accuracy and robustness, but also enhancing the model’s ability to handle the complex and nuanced text often encountered in real-world news content.

3.2. Proposed System

- The Data Loading Module is responsible for loading the dataset from the CSV file.

- The Data Preprocessing Module handles the preparation of raw data, as illustrated in Figure 2. This includes the following steps:

- Lowercase Conversion: All text data was transformed to lowercase letters to ensure consistency in the representation of words and to facilitate subsequent processing steps.

- Special Characters Removal: News articles often contain special symbols such as currency signs (e.g., £, €, $). These symbols carry no useful information for classification and complicate processing, so they are removed in this step.

- Punctuation Removal: Punctuation marks, including periods, commas, and quotation marks, were removed from the text data. This step was performed to eliminate irrelevant characters that do not convey meaningful information for text classification tasks.

- Label Encoding: The categorical target variable, “category,” needed to be converted into a numerical format for model consumption. This was achieved using Label Encoding, where each unique news category was assigned a unique integer identifier. Following this, these integer labels were one-hot encoded into a binary vector representation. One-hot encoding creates a binary vector for each label, where a ‘1’ in a specific position indicates the presence of that category and ‘0’ otherwise.

- Tokenization: A Tokenizer was initialized with a maximum vocabulary size of 20,000 unique words. The tokenizer builds a vocabulary based on the most frequent words in the training corpus. Words not found in this vocabulary are designated as “out-of-vocabulary” tokens. This process converts each word in the text into a corresponding integer ID from the vocabulary.

- Sequence Padding: After tokenization, the resulting sequences of integer IDs varied in length. Because the LSTM model requires fixed-size input, the sequences were padded or truncated to a fixed maximum length of 300: shorter sequences were padded with zeros and longer sequences were truncated. This standardization is critical for batch processing in neural networks, as it gives all input sequences in a batch the same dimension.
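The preprocessing steps above can be sketched in a few lines of plain Python. This is a minimal illustration and not the authors' implementation: the function names (`clean_text`, `fit_vocab`, `encode`, `one_hot_labels`), the reserved IDs for padding and out-of-vocabulary words, and the choice to pad and truncate at the end of each sequence are all assumptions made for the example. Only the vocabulary size (20,000) and the sequence length (300) come from the text.

```python
import re
import string
from collections import Counter

MAX_WORDS = 20_000      # vocabulary size stated in the text
MAX_LEN = 300           # fixed sequence length stated in the text
PAD_ID, OOV_ID = 0, 1   # assumed reserved IDs: 0 = padding, 1 = out-of-vocabulary

def clean_text(text):
    """Lowercase the text, then remove currency symbols and punctuation."""
    text = text.lower()
    text = re.sub(r"[£€$]", " ", text)                                # special characters
    text = text.translate(str.maketrans("", "", string.punctuation))  # punctuation
    return " ".join(text.split())                                     # collapse whitespace

def fit_vocab(texts, max_words=MAX_WORDS):
    """Map the most frequent words to integer IDs, starting at 2."""
    counts = Counter(word for t in texts for word in t.split())
    most_common = counts.most_common(max_words - 2)
    return {word: i + 2 for i, (word, _) in enumerate(most_common)}

def encode(text, vocab, max_len=MAX_LEN):
    """Convert words to IDs, then truncate or zero-pad to max_len."""
    ids = [vocab.get(word, OOV_ID) for word in text.split()]
    ids = ids[:max_len]                           # truncate long sequences
    return ids + [PAD_ID] * (max_len - len(ids))  # pad short sequences with zeros

def one_hot_labels(labels):
    """Label-encode categories as integers, then one-hot encode them."""
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    vectors = [[1 if index[label] == j else 0 for j in range(len(classes))]
               for label in labels]
    return classes, vectors

# Toy data (hypothetical; the real dataset has 14 categories).
docs = ["Stocks RISE 5%, says £10bn fund!", "Team wins the cup; fans celebrate."]
cats = ["business", "sports"]

cleaned = [clean_text(d) for d in docs]
vocab = fit_vocab(cleaned)
X = [encode(d, vocab) for d in cleaned]
classes, y = one_hot_labels(cats)
```

Here `cleaned[0]` is `"stocks rise 5 says 10bn fund"`, each row of `X` has exactly 300 entries, and `y` holds one binary vector per article. A library tokenizer may differ in details such as which side of the sequence is padded, so those defaults should be checked rather than assumed.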

- Model Building and Training Module:

- Input Layer: The initial stage of this architecture involves preparing raw news article text for machine processing. This begins with the “Input” of the raw text, which is then transformed into “Tokens”. This conversion is critical, as it makes the input text significantly easier for machine learning models to read and process.

- Embedding Layer: Following tokenization, the “Embedding Layer” plays a crucial role in transforming the discrete tokens into continuous, dense numerical vectors, explicitly labeled in Figure 3 as “Word Embeddings—128 D Vectors”. The embedding layer is responsible for mapping each token to a fixed-size vector with a dimension of 128. The embedding dimension was chosen as 128 to deliver a balance between the expressive power of the model and computational efficiency. This ensures that the semantic relationships of the words are identified while keeping the model as simple as possible. These vectors are dynamic in nature; they are learned by the model throughout the process of training.

- LSTM Layer: The “LSTM Layer” is the main sequential processing component of the architecture. It accepts the sequence of 128-dimensional word embeddings described above as its input. The embeddings are processed one at a time while the layer maintains an internal “cell state” (representing long-term memory) and a “hidden state” (representing short-term memory). The parameter “Unit = 64” indicates that the layer consists of 64 memory cells, or neurons, as shown in Figure 3. This size provides enough capacity to learn long-term dependencies while helping to avoid overfitting and unnecessary training time. Each of the 64 units actively contributes to preserving and processing information over time, which enables the LSTM to learn complex patterns and dependencies in the content of news articles.

- Max Pooling 1-D Layer: After the LSTM layer, the “Max Pooling 1D” layer performs a down-sampling operation on the sequential output of the previous layer. The LSTM produces a sequence of hidden states, where each state corresponds to an input token. The main aim of the pooling layer is to condense this long sequence into a compact, fixed-size representation. Max Pooling 1D works by sliding a window (whose size is defined by the pool_size parameter) across the input sequence and selecting the highest value within each window. This process efficiently selects the most prominent feature from each local region of the LSTM output.

- Dense Layer with ReLU Activation: The “Dense Layer,” also known as a fully connected layer, receives the flattened and aggregated features from the Max Pooling 1D layer. Each neuron in the dense layer is connected to every input feature from the preceding layer, enabling it to learn complex combinations and interactions among these features. The ReLU (Rectified Linear Unit) activation, shown as “Activation = Relu”, is applied to the output of this dense layer.

- Dropout Layer: A dropout rate of 0.5 was applied as a regularization strategy. This widely used setting mitigates overfitting by randomly deactivating half of the neurons during training. These design decisions were made to improve model generalization and stability across the dataset.

- Output Layer with Softmax Activation: The “Output Layer” is the last stage of the neural network and is responsible for producing the final predictions. It takes the processed and regularized features from the preceding layers and transforms them into a form suitable for categorizing the input news article. The Softmax activation function is applied in this layer. Softmax is designed for multi-class classification problems, where an input belongs to exactly one of several mutually exclusive categories. It converts the raw output scores of the network (often referred to as logits) into a probability distribution across all defined classes.
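To make the data flow through these layers concrete, the following NumPy sketch runs a single forward pass with randomly initialized, untrained weights. It is an illustration of the tensor shapes only, not the authors' model. The vocabulary size, sequence length, embedding dimension, LSTM units, dropout rate, and number of classes follow the text, while `POOL_SIZE = 2`, `DENSE_UNITS = 64`, the weight initialization, and the gate ordering inside `lstm_forward` are assumptions, since the text does not state them.

```python
import numpy as np

VOCAB, MAX_LEN, EMB_DIM, UNITS, N_CLASSES = 20_000, 300, 128, 64, 14
POOL_SIZE, DENSE_UNITS = 2, 64   # assumptions: not specified in the text

rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(x, W, U, b):
    """Run an LSTM over x of shape (T, EMB_DIM); return hidden states (T, UNITS)."""
    h = np.zeros(UNITS)   # hidden state (short-term memory)
    c = np.zeros(UNITS)   # cell state (long-term memory)
    outputs = []
    for x_t in x:                                # one token embedding at a time
        z = x_t @ W + h @ U + b                  # all four gates at once: (4 * UNITS,)
        i, f, g, o = np.split(z, 4)              # assumed gate order: input, forget, candidate, output
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)               # update cell state
        h = o * np.tanh(c)                       # update hidden state
        outputs.append(h)
    return np.stack(outputs)

def max_pool_1d(seq, pool_size):
    """Take the maximum over non-overlapping windows along the time axis."""
    steps, dim = seq.shape
    out_steps = steps // pool_size
    return seq[:out_steps * pool_size].reshape(out_steps, pool_size, dim).max(axis=1)

def softmax(logits):
    e = np.exp(logits - logits.max())            # subtract max for numerical stability
    return e / e.sum()

# Randomly initialized parameters (untrained, for shape illustration only).
E = rng.normal(0, 0.05, (VOCAB, EMB_DIM))        # embedding matrix
W = rng.normal(0, 0.05, (EMB_DIM, 4 * UNITS))    # input-to-gate weights
U = rng.normal(0, 0.05, (UNITS, 4 * UNITS))      # hidden-to-gate weights
b = np.zeros(4 * UNITS)
flat_dim = (MAX_LEN // POOL_SIZE) * UNITS
W1, b1 = rng.normal(0, 0.05, (flat_dim, DENSE_UNITS)), np.zeros(DENSE_UNITS)
W2, b2 = rng.normal(0, 0.05, (DENSE_UNITS, N_CLASSES)), np.zeros(N_CLASSES)

def forward(token_ids, train=False, drop_rate=0.5):
    x = E[token_ids]                             # (300, 128) word embeddings
    h_seq = lstm_forward(x, W, U, b)             # (300, 64) one hidden state per token
    pooled = max_pool_1d(h_seq, POOL_SIZE)       # (150, 64) after down-sampling
    flat = pooled.reshape(-1)                    # (9600,) flattened features
    dense = np.maximum(0.0, flat @ W1 + b1)      # dense layer with ReLU
    if train:                                    # dropout is active only during training
        mask = rng.random(dense.shape) >= drop_rate
        dense = dense * mask / (1.0 - drop_rate)
    return softmax(dense @ W2 + b2)              # (14,) class probabilities

token_ids = rng.integers(1, VOCAB, size=MAX_LEN)  # a random padded "article"
probs = forward(token_ids)
```

The result `probs` is a vector of 14 non-negative values that sum to 1, one per news category. In practice these layers would be built and trained with a deep learning framework; the sketch only shows how each stage reshapes the data.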

- Evaluation Module: This module evaluates the performance of the model using metrics such as accuracy and precision, verifying that the model categorizes news articles effectively.

- Custom Input Categorization Module: Users can enter the title and body of their own news article, which the system assigns to a category using the trained model.
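As a rough illustration of the last two modules, the sketch below computes accuracy and per-class precision from predicted labels and maps a softmax output vector back to a category name. The helper names (`accuracy`, `precision_per_class`, `macro_precision`, `decode`) and the category labels are hypothetical, and macro averaging is an assumption, because the text does not say how precision is aggregated across the 14 classes.

```python
def accuracy(y_true, y_pred):
    """Fraction of articles whose predicted category matches the true one."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision_per_class(y_true, y_pred):
    """precision[c] = TP_c / (TP_c + FP_c); 0.0 if class c was never predicted."""
    result = {}
    for c in sorted(set(y_true) | set(y_pred)):
        true_of_predicted = [t for t, p in zip(y_true, y_pred) if p == c]
        if true_of_predicted:
            result[c] = sum(t == c for t in true_of_predicted) / len(true_of_predicted)
        else:
            result[c] = 0.0
    return result

def macro_precision(y_true, y_pred):
    """Unweighted mean of the per-class precision values (assumed averaging)."""
    per_class = precision_per_class(y_true, y_pred)
    return sum(per_class.values()) / len(per_class)

def decode(probs, classes):
    """Return the category with the highest predicted probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best]

# Toy labels (hypothetical category names).
y_true = ["sports", "business", "sports", "politics"]
y_pred = ["sports", "sports", "sports", "politics"]

acc = accuracy(y_true, y_pred)                    # 3 of 4 correct
per_class = precision_per_class(y_true, y_pred)   # business 0.0, politics 1.0, sports 2/3
macro = macro_precision(y_true, y_pred)
label = decode([0.1, 0.7, 0.2], ["business", "politics", "sports"])
```

For a user-supplied article, the title and body would first pass through the same preprocessing as the training data, and `decode` would then be applied to the model's softmax output.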

Dataset Description

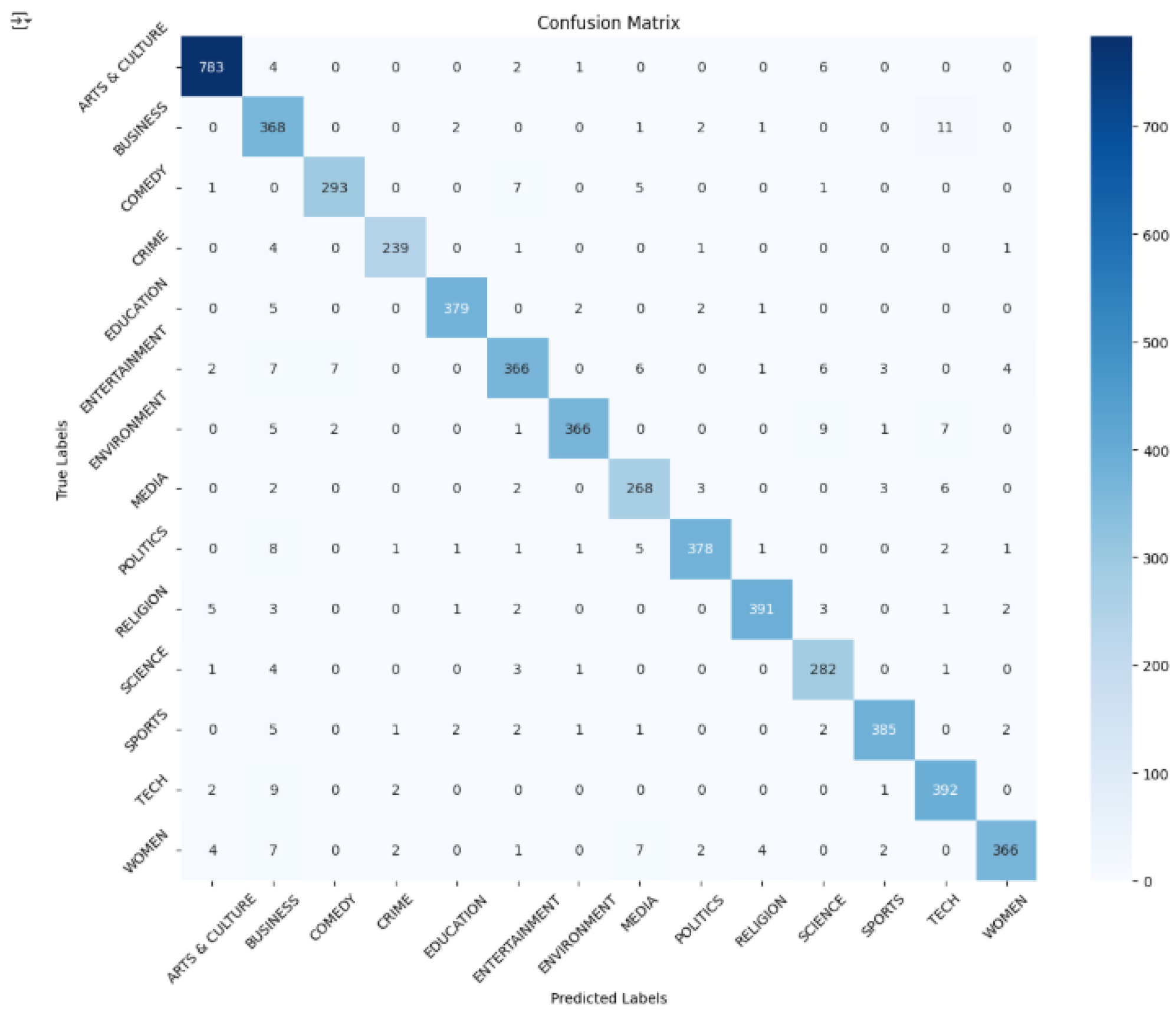

4. Result Analysis

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NLP | Natural Language Processing |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| CSE | Computer Science & Engineering |
| SVM | Support Vector Machines |
| NB | Naïve Bayes |
| KNN | K-Nearest Neighbors |
| TF-IDF | Term Frequency–Inverse Document Frequency |
| DL | Deep Learning |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rafat, Y.; Narayana, P.; Mohana, R.M.; Srilatha, K. LSTM-Based News Article Category Classification. Comput. Sci. Math. Forum 2025, 12, 8. https://doi.org/10.3390/cmsf2025012008
