Automatic Detection of Foraging Hens in a Cage-Free Environment with Computer Vision Technology

Abstract

1. Introduction

2. Materials and Methods

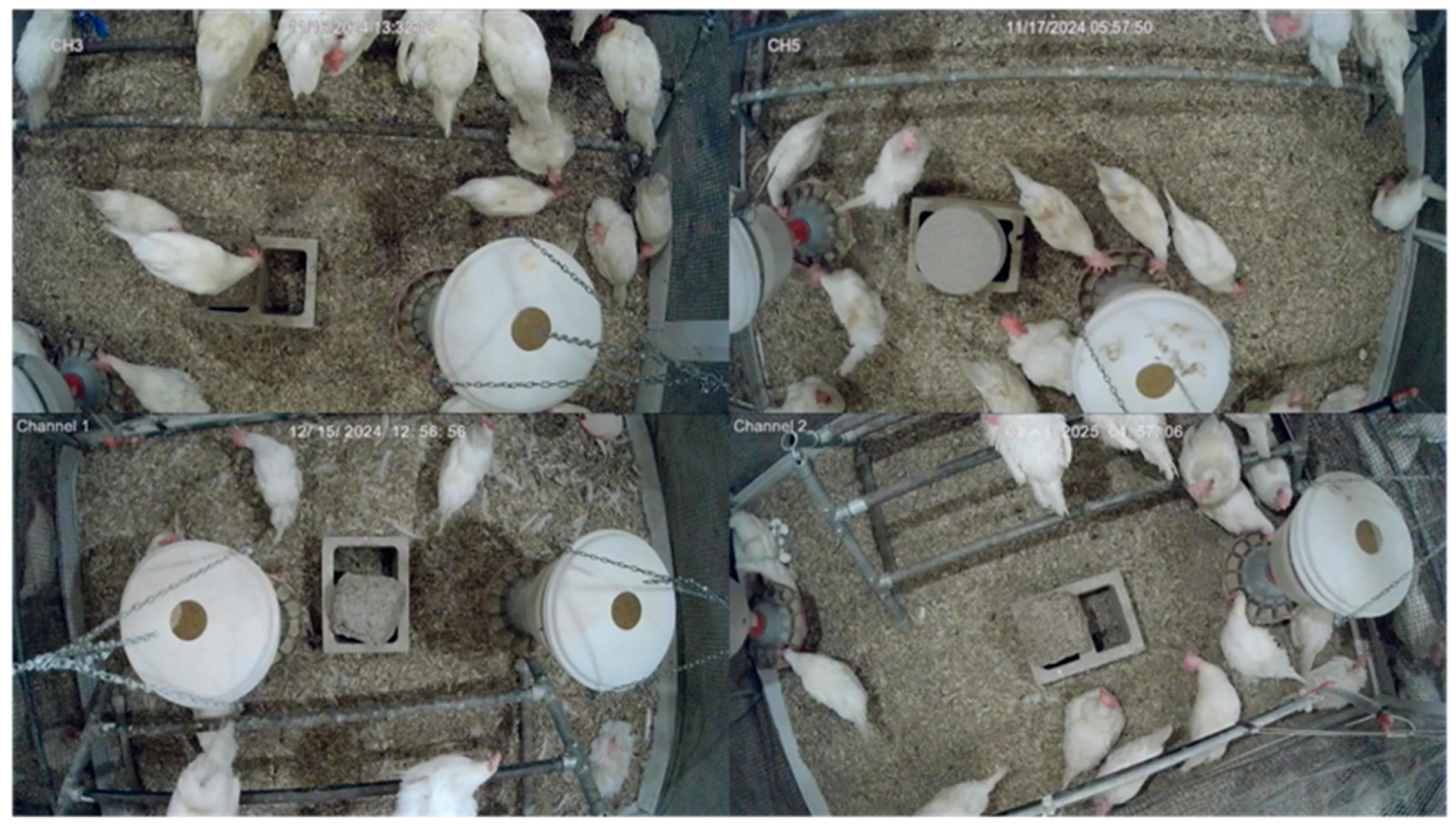

2.1. Experimental Setup

2.2. Image Acquisition

2.3. Image Pre-Processing and Annotation

2.4. Model Training

2.5. Testing

2.6. Performance Metrics

2.6.1. Precision

2.6.2. Recall
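As a minimal sketch of how precision and recall are commonly computed for an object detector, assuming the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN)). The function names and the counts below are hypothetical and are not taken from the study.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted detections that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts for illustration only (not results from the paper).
tp, fp, fn = 80, 20, 10
p = precision(tp, fp)  # 80 correct out of 100 predictions
r = recall(tp, fn)     # 80 found out of 90 annotated objects
```

Both helpers return 0.0 when the denominator is zero, which is one common convention; other tools may report the value as undefined instead.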

2.6.3. mAP@0.5
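The following sketch illustrates what mAP@0.5 measures, under stated assumptions: boxes are `(x1, y1, x2, y2)` tuples, a detection counts as a true positive when its IoU with a still-unmatched ground-truth box is at least 0.5, and average precision is the area under the interpolated precision-recall curve. The matching rule is simplified, and all names and boxes are hypothetical rather than the study's evaluation code. When only one class is evaluated, mAP@0.5 equals that class's AP.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_threshold=0.5):
    """AP for one class; detections is a list of (confidence, box)."""
    if not ground_truth:
        return 0.0
    matched, tp_flags = set(), []
    # Visit detections from most to least confident.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        is_tp = best_idx is not None and best_iou >= iou_threshold
        if is_tp:
            matched.add(best_idx)
        tp_flags.append(is_tp)

    # Running precision and recall after each detection.
    precisions, recalls, tp = [], [], 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truth))
    # Interpolate: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the interpolated curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


# Hypothetical boxes for illustration only.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [
    (0.9, (0, 0, 10, 10)),     # exact match
    (0.8, (50, 50, 60, 60)),   # no overlap, false positive
    (0.7, (20, 20, 30, 28)),   # IoU 0.8 with the second box
]
ap_50 = average_precision(detections, ground_truth)
```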

3. Results

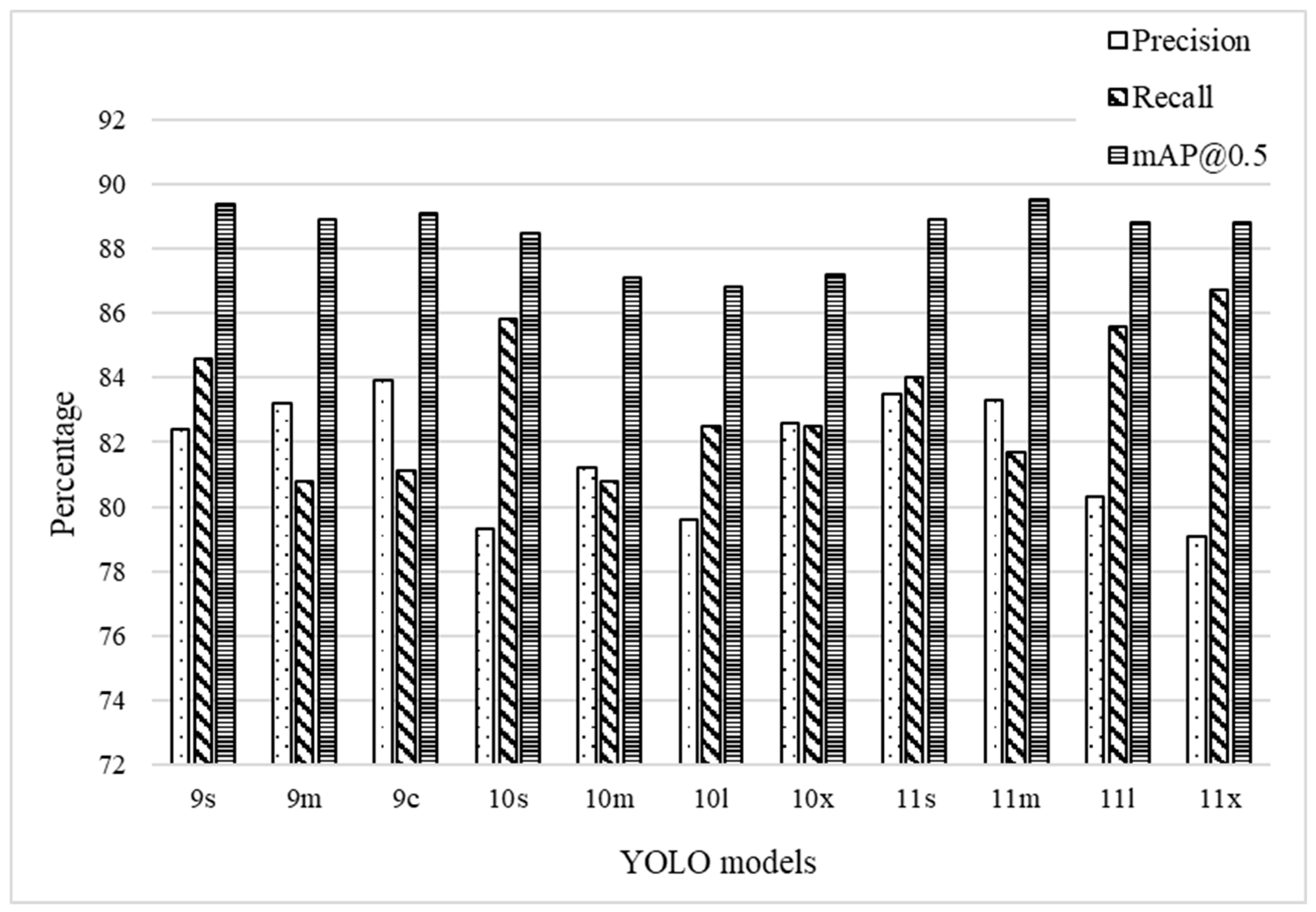

3.1. Performance Metrics

3.1.1. Precision

3.1.2. Recall

3.1.3. mAP@0.5

3.2. Confusion Matrix

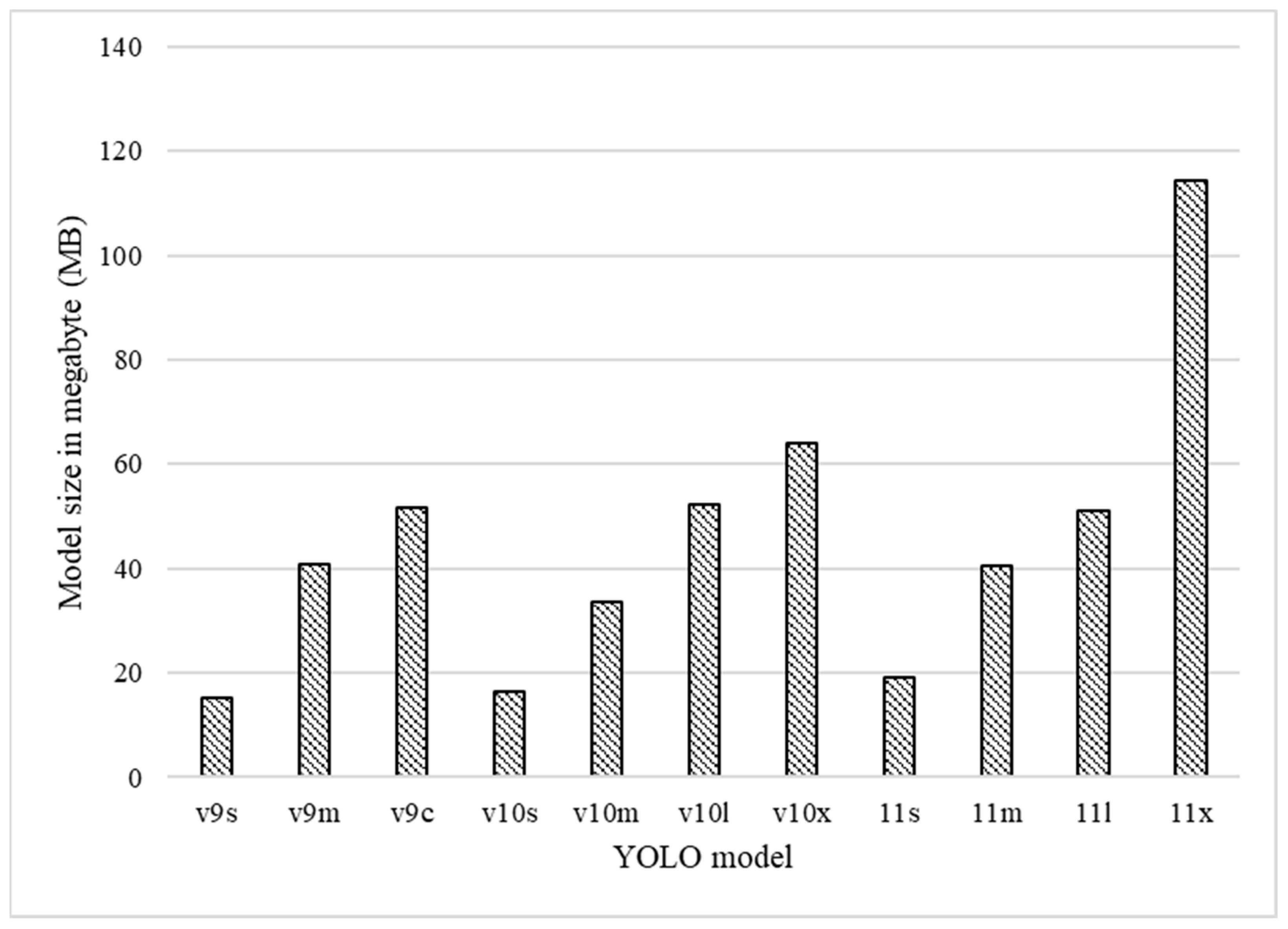

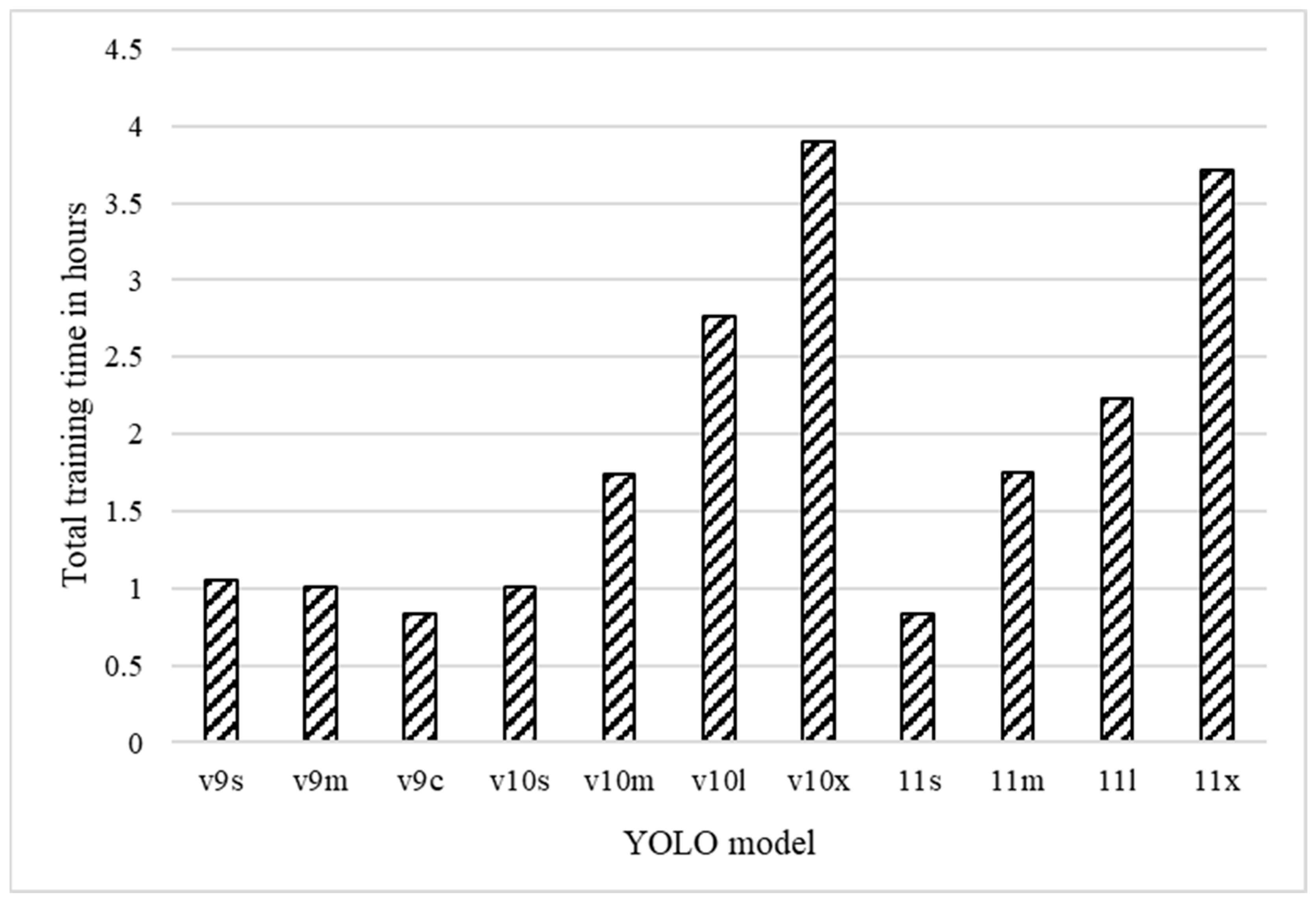

3.3. Computing Resource Use

3.3.1. Model Size

3.3.2. Training Time

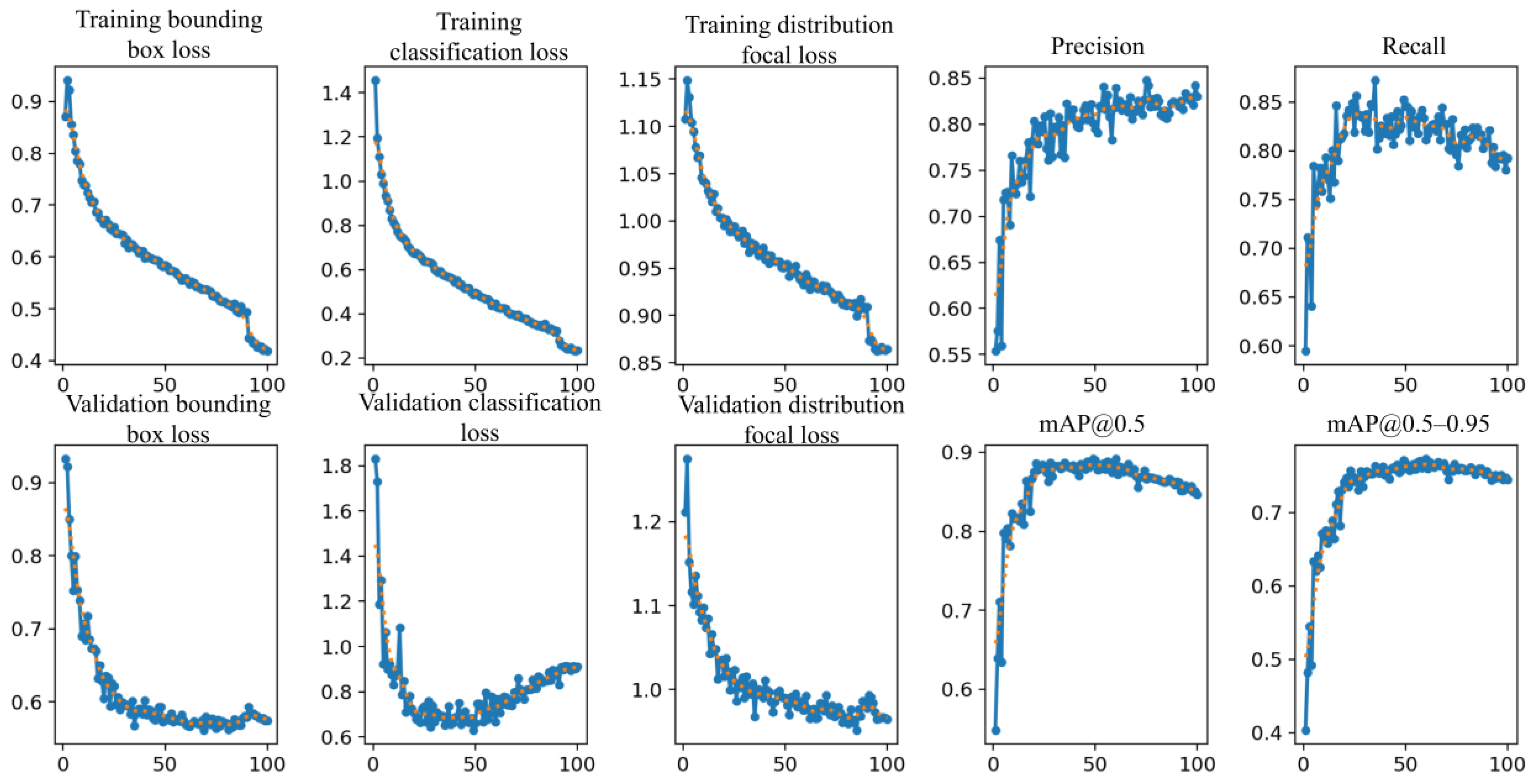

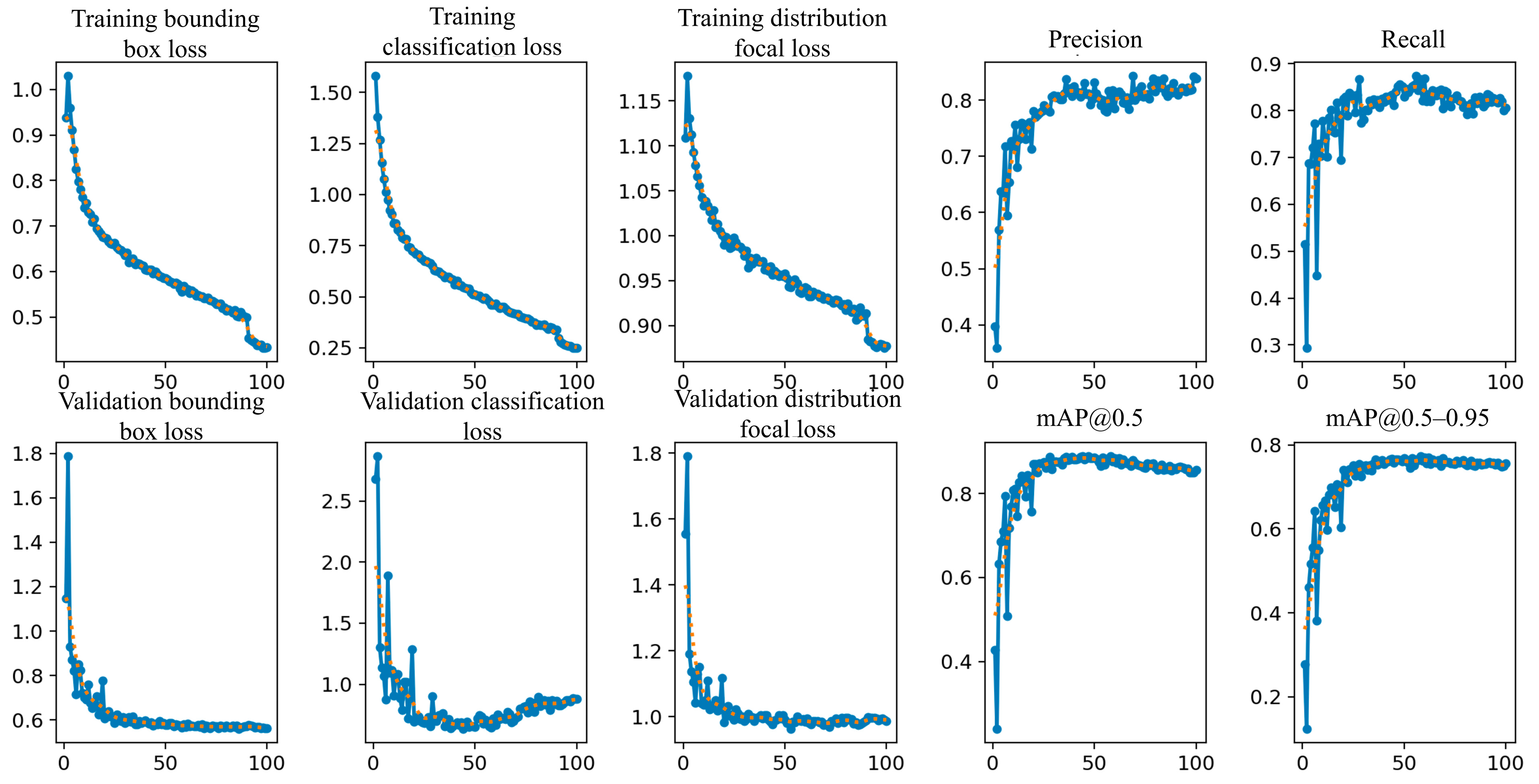

3.4. Model Training and Validation Evaluation

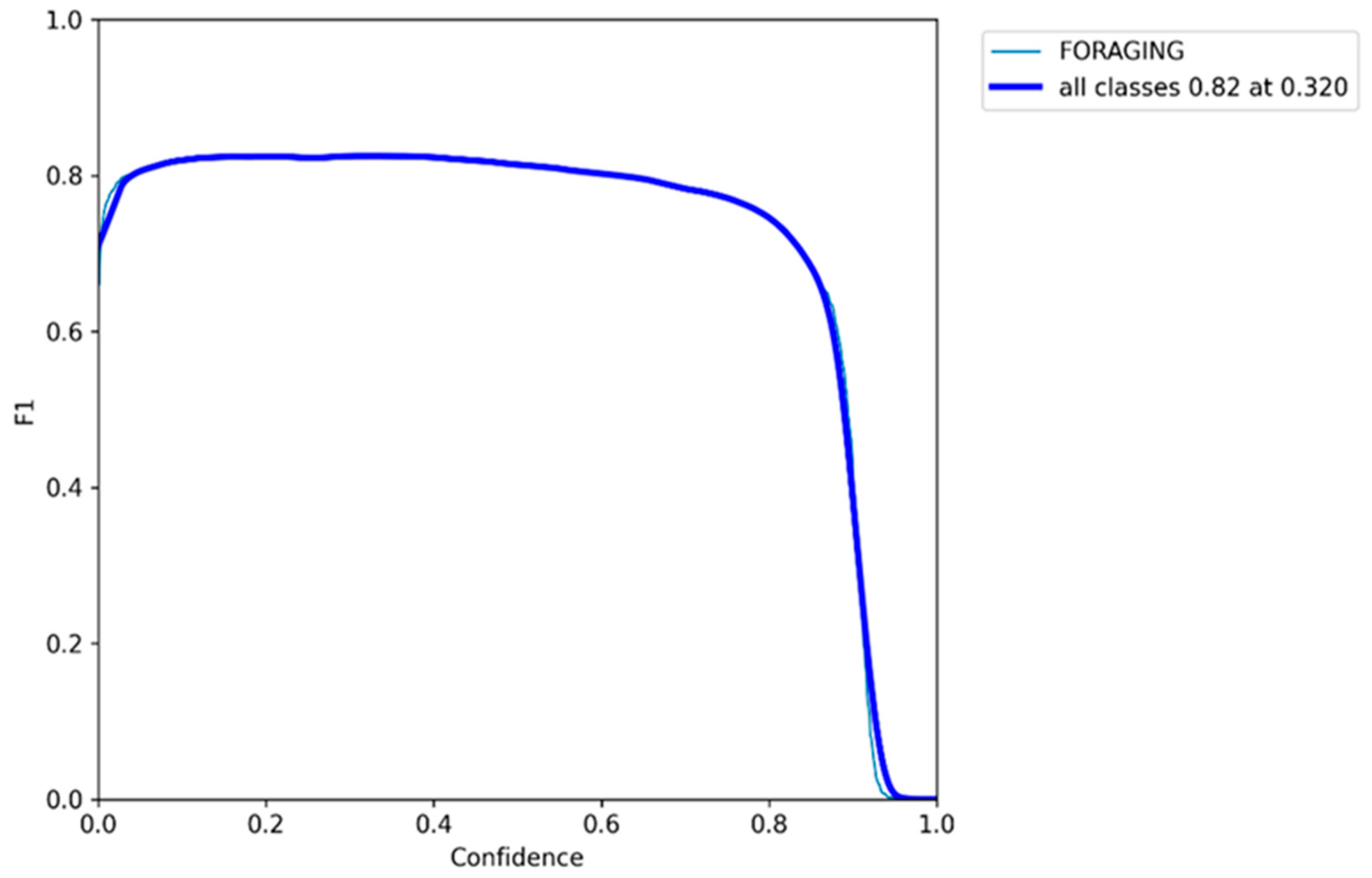

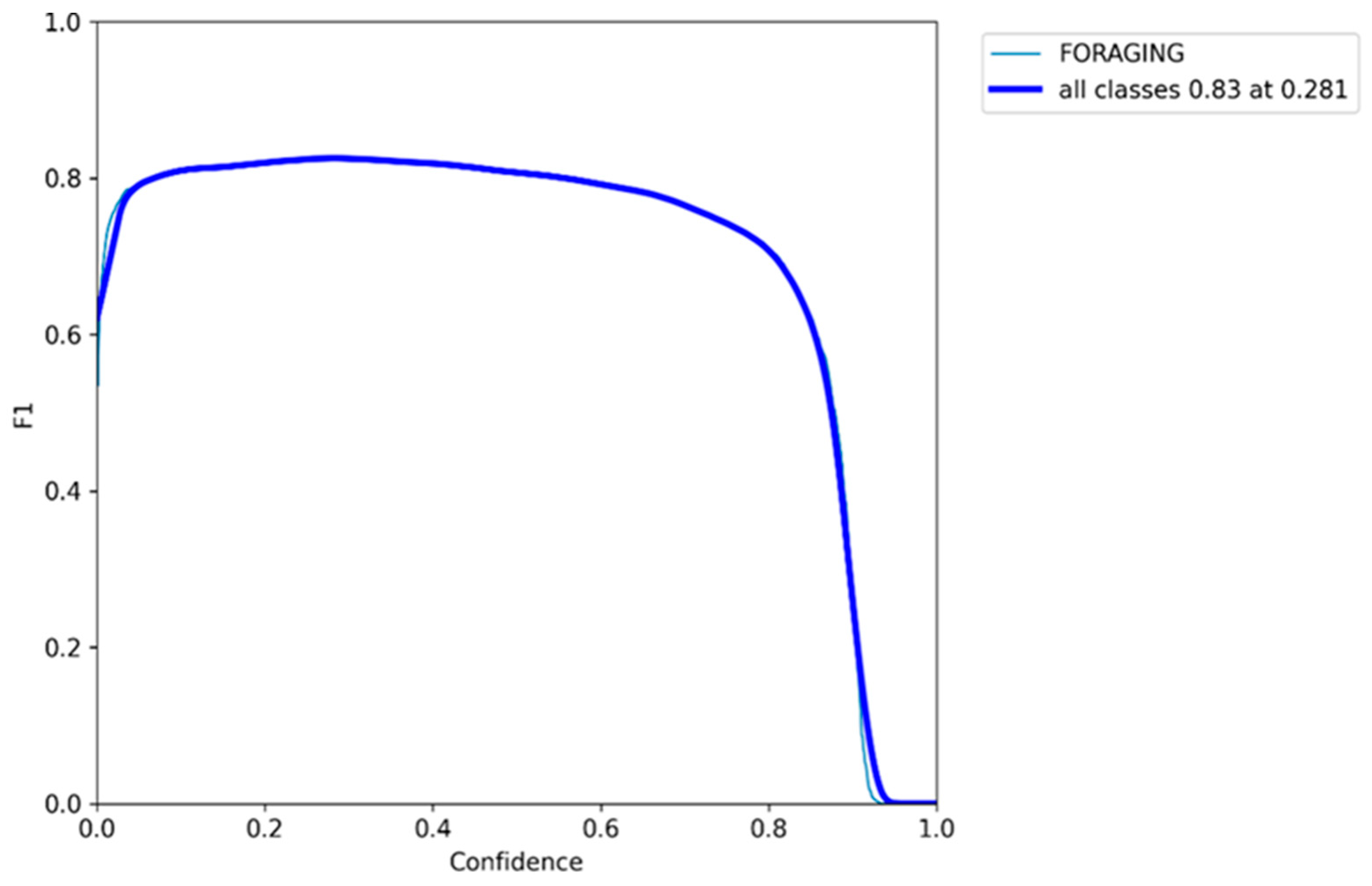

3.5. F1 Confidence Curve

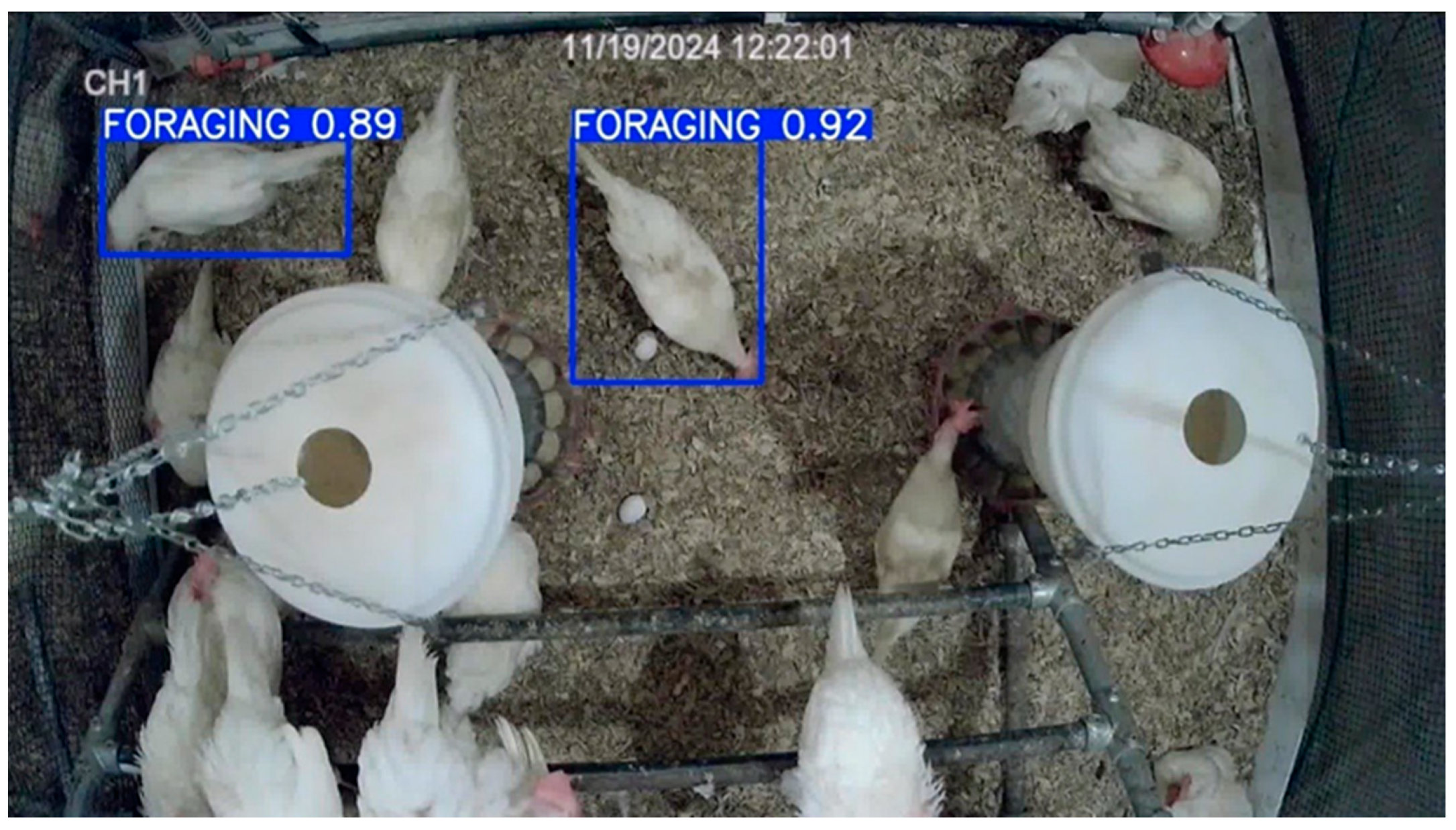

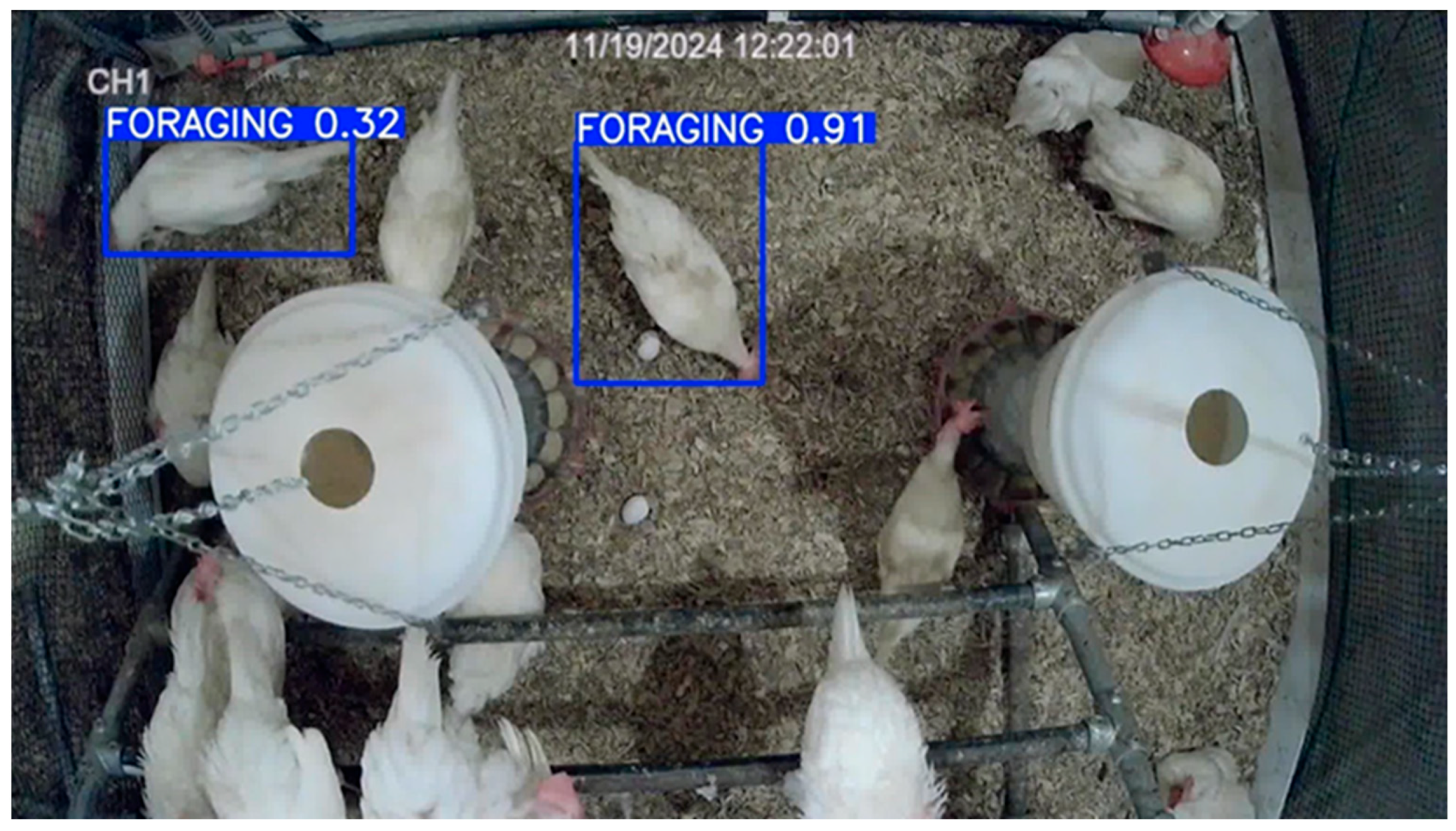
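An F1 confidence curve plots the F1 score (the harmonic mean of precision and recall) against the confidence threshold used to keep or discard detections. The sketch below shows one way such a curve can be computed, assuming each detection has already been labeled as a true or false positive. The function names, detections, and thresholds are hypothetical and do not reproduce the study's results.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def f1_confidence_curve(scored_detections, n_ground_truth, thresholds):
    """Return (threshold, F1) pairs.

    scored_detections: list of (confidence, is_true_positive) pairs.
    n_ground_truth: number of annotated objects in the evaluation set.
    """
    curve = []
    for t in thresholds:
        # Keep only detections at or above the confidence threshold.
        kept = [is_tp for conf, is_tp in scored_detections if conf >= t]
        tp = sum(kept)
        p = tp / len(kept) if kept else 0.0
        r = tp / n_ground_truth if n_ground_truth else 0.0
        curve.append((t, f1_score(p, r)))
    return curve


# Hypothetical detections for illustration only.
scored = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.2, False)]
curve = f1_confidence_curve(scored, n_ground_truth=4,
                            thresholds=[0.1, 0.3, 0.5, 0.7])
# The threshold with the highest F1 is a common choice of operating point.
best_threshold, best_f1 = max(curve, key=lambda point: point[1])
```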

3.6. Model Deployment in Test Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CF | Cage-free |
| CC | Conventional cages |
| CNN | Convolutional Neural Network |
| YOLO | You Only Look Once |
| UGA | University of Georgia |
| IACUC | Institutional Animal Care and Use Committee |
| mAP | Mean average precision |
| IoU | Intersection over union |
| mAP@0.5 | Mean average precision at 50% intersection over union |

| Component | Specification |
|---|---|
| GPU | NVIDIA RTX 4000 (Ada Generation) |
| Parallel computing platform and API | CUDA version: 12.5 |
| RAM | 64 GB |
| Operating system | Ubuntu 24.1 |
| Libraries | PyTorch 2.7.0, Ultralytics, OpenCV |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dahal, S.; Yang, X.; Paneru, B.; Dhungana, A.; Chai, L. Automatic Detection of Foraging Hens in a Cage-Free Environment with Computer Vision Technology. Poultry 2025, 4, 34. https://doi.org/10.3390/poultry4030034
